Abstract

Employees who perceive their supervisors to listen well enjoy multiple benefits, including enhanced well-being. However, concerns regarding the construct validity of perceived-listening measures raise doubts about such conclusions. The perception of listening quality may reflect two factors: constructive and destructive listening, which may converge with desired (e.g., humility) and undesired (e.g., rudeness) supervisor-subordinate relationship behaviors, respectively, and both may converge with relationship quality (e.g., trust). Therefore, we assessed the convergent validity of four perceived listening measures and their divergent validity with eight measures of supervisor-subordinate relationship behaviors, eight relationship-quality measures, and a criterion measure of well-being. Using data from 2,038 subordinates, we calculated the disattenuated correlations and profile similarities among these measures. The results supported convergent but not divergent validity: 58.7% (12.6%) of the correlations expected to diverge had confidence intervals with upper limits above 0.80 (0.90), and 20% of their profile-similarity indices were close to 1. To probe these correlations, we ran a factor analysis revealing good and poor relationship factors and an exploratory graph analysis identifying three clusters: positive and negative relationship behaviors and relationship quality. A post-hoc analysis indicated that relationship-quality mediates the effect of the positive and negative behaviors on well-being. The results demonstrate the challenge of differentiating the perception of listening from commonly used supervisor-subordinate relationship constructs, and cast doubts on the divergent validity of many constructs of interest in Organizational Behavior. However, using the “sibling” constructs framework may allow disentangling these highly correlated relationship constructs, conceptually and empirically.

How (if at All) do Perceptions of Supervisor’s Listening Differ from General Relationship Quality?: Psychometric Analysis

Employees who perceive that their supervisor listens well to them experience enhanced overall well-being and greater job satisfaction, commitment, psychological safety, and contentment in their relationship with their supervisor. Furthermore, they are more likely to attribute leadership qualities to their supervisor, resulting in a host of positive outcomes (for reviews and meta-analyses, see Kluger & Itzchakov, 2022; Kluger et al., 2023; Yip & Fisher, 2022). Moreover, experimental research suggests that high-quality listening elevates employees’ creativity (Castro et al., 2018) and affects speaker attitudes: it reduces extremity, increases clarity, and depolarizes the speaker’s attitude even when they know that the listener holds opposing views (Itzchakov et al., 2017, 2018; Itzchakov et al., 2023). Quasi-experiments generalized these findings to work settings (Itzchakov & Kluger, 2017a). Such findings justify calls for training employees in listening and adding listening to management education (Brink & Costigan, 2015; Hinz et al., 2022; Spataro & Bloch, 2018).

However, the conclusion that perceived listening positively affects employees' well-being and, thus, listening education is desirable can be challenged because perceived listening lacks construct validity (Kluger & Itzchakov, 2022; Yip & Fisher, 2022). That is, the construct of perceived listening has many definitions (Kluger & Mizrahi, 2023), and its operationalization is carried out with various measures (Kluger & Bouskila-Yam, 2018), for which there is evidence neither for convergent validity nor for divergent validity. Consequently, it is uncertain whether the benefits attributed to perceived listening are indeed its outcomes or due to other similar constructs. For example, is the perception of high-quality listening distinct from reports of a supervisor’s humility, responsiveness, support, or trust in them? The doubt about the construct validity is exacerbated by exploratory factor analyses of items measuring perceived listening, suggesting that two factors underlie perceptions of listening: high-quality and poor-quality. This two-factor structure suggests that poor-quality listening is a different construct. Hence, is the perception of poor-quality listening distinct from the supervisor’s insensitivity, rudeness, or incivility? If the construct of perceived listening, whether of high or poor quality, overlaps with other constructs, effects attributed to perceived listening could be attributed to other constructs. Conversely, effects attributed to the other constructs may need to be attributed to perceived listening.

To resolve this uncertainty, we discuss the construct of perceived listening and two construct validity concerns: convergent validity—that is, the possibility that the perceived listening measures may not all be assessing the same construct; and divergent validity—that is, the possibility that measures purportedly assessing perceived listening may be assessing equally well many other constructs, such as humility, trust, and rudeness.

We propose that the perceived listening construct is part of a network of constructs concerning various aspects of relationships (between the supervisor and the subordinate). The constructs in this network repeatedly influence each other. Due to their co-occurrence in nature, they can overlap substantially, although they are still distinguishable. For example, listening well to another person may increase the listener's humility. Moreover, a humble person may show a better ability to listen well (Lehmann et al., 2021). Thus, measures of listening quality and humility perceptions may be highly correlated, even though they assess theoretically different behaviors. Such constructs are considered “siblings” because they represent highly correlated phenomena that are still distinct (Lawson & Robins, 2021). The “sibling constructs” framework views closely related constructs as falling in the gray area between similarity and distinctness, making them challenging to disentangle. Lawson and Robins (2021), alongside others like Shaffer et al. (2016) and Rönkkö and Cho (2020), warn against a dichotomous “yes–no” view of divergent validity and offer conceptual and empirical criteria to evaluate the degree of similarity versus distinctness. Thus, these criteria enable a more comprehensive, in-depth understanding of the construct validity of perceived listening and other relationship constructs.

To address the construct validity concerns, we used the sibling constructs framework and criteria, along with additional recommendations made by other construct validity experts (Rönkkö & Cho, 2020; Shaffer et al., 2016). First, we identified measures of perceived listening developed in various contexts (management, marketing, communication). Second, we reviewed the perceived listening literature and identified constructs conceptually similar to perceived listening. Third, we reviewed the selected constructs’ definitions and measures and demonstrated their conceptual overlaps with perceived listening. Fourth, we empirically assessed and evaluated these overlaps; that is, we asked over 2,000 subordinates to report their perceptions of their supervisor’s listening on four different measures and to report on 16 other measures the relationship behaviors of their supervisor (e.g., humility) and the quality of their relationships (e.g., trust), totaling 26 scales and sub-scales. Following this, we assessed and analyzed several convergence and divergence indices: we inspected (a) their disattenuated correlations and (b) profile similarity and subjected them to (c) exploratory factor analysis (EFA) and (d) exploratory graph analysis (EGA; Golino et al., 2020), to detect clusters in the data.
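As an illustration of index (a), the sketch below applies the classical correction for attenuation, in which the observed correlation is divided by the square root of the product of the two measures' reliabilities. The function name and the numeric values are hypothetical and are not taken from the study; the specific reliability estimates the authors used are not assumed here.

```python
import math


def disattenuate(r_xy: float, rel_x: float, rel_y: float) -> float:
    """Correct an observed correlation for unreliability in both measures.

    Classical correction for attenuation: r_xy / sqrt(rel_x * rel_y).
    """
    if not (0 < rel_x <= 1 and 0 < rel_y <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(rel_x * rel_y)


# Hypothetical values for illustration only (not results from the study).
observed_r = 0.72
corrected_r = disattenuate(observed_r, rel_x=0.90, rel_y=0.80)
print(round(corrected_r, 3))
```

Because reliabilities are at most 1, the corrected value is never smaller in magnitude than the observed one, which is why already high observed correlations between listening and relationship measures can approach unity after correction.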

The correlations and profile similarity indices reflect convergence and divergence patterns between pairs of measures. The cluster detection results reflect convergence and divergence patterns between sets of measures. Lastly, we measured subjective well-being both as a yardstick to assess mono-method bias and as a criterion. As a yardstick, it gauges potential mono-method bias: because subjective well-being is not inherently linked to relationships with supervisors, we anticipated relatively low correlations between it and the measures of perceived listening and the other constructs. Additionally, we used it as a criterion because, since the onset of research on listening, subjective well-being has been considered one of its primary outcomes: “Good communication … between people is always therapeutic” (Rogers & Roethlisberger, 1991/1952, p. 105). As a criterion, it allows testing the relative predictive validity of the clusters found in the previous stage. Collectively, these actions promise to sharpen understanding of perceived listening and its placement in a network of constructs of relationships (between the supervisor and the subordinate).
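One way to picture a profile similarity index is sketched below. It assumes the index is the Pearson correlation between two measures' vectors of correlations with all remaining measures; this operationalization, the helper names, and the small correlation matrix are illustrative assumptions rather than the study's procedure or data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def profile_similarity(corr, i, j):
    """Correlate measure i's and measure j's correlations with all other measures."""
    others = [k for k in range(len(corr)) if k not in (i, j)]
    return pearson([corr[i][k] for k in others], [corr[j][k] for k in others])


# Hypothetical correlation matrix (illustration only).
# Order: listening A, listening B, humility, trust, rudeness.
corr = [
    [1.00, 0.85, 0.80, 0.70, -0.50],
    [0.85, 1.00, 0.80, 0.70, -0.50],
    [0.80, 0.80, 1.00, 0.65, -0.45],
    [0.70, 0.70, 0.65, 1.00, -0.40],
    [-0.50, -0.50, -0.45, -0.40, 1.00],
]

print(profile_similarity(corr, 0, 1))  # two listening measures
print(profile_similarity(corr, 0, 4))  # listening vs. rudeness
```

Under this assumption, two measures whose correlations with everything else rise and fall together obtain an index near 1 even if their direct correlation is lower, which makes the index a complementary check on nomological-network overlap.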

Construct Validity of Perceived Listening

Listening is a process of interpersonal communication in which a listener receives messages from a speaker (Rogers & Farson, 1987; Yip & Fisher, 2022). In organizational research, perceived listening is the dominant concept, focusing on the speaker’s perspective and their evaluation of the quality of listening they received (Kluger & Itzchakov, 2022). The focus is on the perception of listening because it is the immediate antecedent of organizational outcomes. However, perceived listening is a vague concept that overlaps with other relational constructs, raising validity concerns (Itzchakov & Kluger, 2017b; Yip & Fisher, 2022): (a) the lack of consensus on the conceptual definition of perceived listening and difficulties in defining the construct, (b) conceptual overlaps with similar relationship constructs, and (c) the possibility that perceived listening may consist of two separate unipolar constructs.

First, there is no consensus on the definition of perceived listening (Kluger & Mizrahi, 2023; Yip & Fisher, 2022). Many reported benefits of perceived listening were assessed using different measures based on very different conceptual definitions in different disciplines (marketing, management, communication). Moreover, some definitions of perceived listening may overlap with relational outcomes. For example, one definition suggests that perceived listening is the speaker’s holistic judgment of the listener’s behaviors, including attention, comprehension, and good intention toward the speaker and their impact on the speakers (Castro et al., 2016). That is, the construct of perceived listening includes perceptions of the listener’s covert behaviors (e.g., pays attention), overt behaviors (e.g., eye contact), and their effect (e.g., “I felt understood”). This definition is based on empirical work suggesting that speakers tend to form a holistic judgment composed of perceptions of communication behaviors and the evaluations of the inner feelings evoked by those behaviors. Although people can describe the complexities of overt and covert listening behaviors and their relational effects, they seem to perceive it as a holistic and unitary experience (Kluger & Itzchakov, 2022; Lipetz et al., 2020). Therefore, the construct of perceived listening combines the perception of listening behaviors with the effects of these behaviors on positive relational experience, which can also be described in terms of other concepts, such as trust or intimacy.

Second, perceived listening quality is sometimes included as a component of other concepts, such as respectful inquiry, leader humility, and respectful engagement. In these concepts, perceived listening is one manner of conveying understanding and respect to relational partners. Additionally, researchers in a variety of fields have noted that high-quality listening can signal love (Floyd, 2014), intimacy (Levine, 1991), and relationship quality (Bodie, 2012). Therefore, high-quality listening is either a component or a signal for other constructs that index relationships. However, scholars assume that the construct of perceived listening has a unique value as a distinct concept because people report on their perceived listening experiences in social interactions and have explicit evaluations of the degree of the listening quality they received (Lipetz et al., 2020; Yip & Fisher, 2022). For example, organizational leaders consistently report listening as a unique and vital activity that contributes to their relationship with their employees (Yip & Fisher, 2022). Even so, there is the question of whether people can differentiate between constructs when answering questionnaires reflecting them. Some empirical findings justify this concern. For example, one study reported a correlation of 0.86 between perceived salesperson listening and customer trust (Bergeron & Laroche, 2009).

Third, perceived listening measures reflect two unipolar constructs: constructive and destructive listening. That is, measures of perceived listening differentiate desired (e.g., “X understands me”) from undesired behaviors (e.g., “X does not pay attention to what I say”) (Kluger & Bouskila-Yam, 2018; Lipetz et al., 2020). The constructive and destructive facets predict different outcomes. For example, constructive but not destructive listening is a unique predictor of leaders’ people consideration (Kluger & Zaidel, 2013). In this distinction, constructive listening may conceptually overlap with positive constructs such as respect and humility, and destructive listening may overlap with negative constructs such as rudeness and insensitivity.

In sum, the construct of perceived listening needs further assessment and establishment of its (a) convergent validity and (b) divergent validity from other positive and negative constructs. Evidence for these construct validity concerns would mean that many positive and negative non-listening measures appear to reflect the construct of listening, and many perceived listening measures appear to reflect the construct of relationships, blurring the differentiating aspects of the various constructs (Hershcovis, 2011; Shaffer et al., 2016). We discuss both of these issues next.

Convergent Validity: Convergence (and Divergence) Among Perceived Listening Measures

Yip and Fisher (2022) reviewed 24 listening measures in management, and Fontana et al. (2015) noted 53 scales of perceived listening across disciplines. Both reviews found the measures surprisingly dissimilar, reflecting essential differences in how scholars conceptualize and measure high-quality listening across disciplines like management, communication, and psychology. For example, some highlight the listeners’ good intentions toward the speaker (Kluger & Bouskila-Yam, 2018; Lipetz et al., 2020), some the cognitive process involved in the listening process (Bodie, 2011; Drollinger et al., 2006), and some the listeners’ goals and preferences while listening (Bodie et al., 2013).

To choose measures to study the convergent validity of listening measures, we were guided by two principles: conceptual breadth and frequency of use. Consequently, we chose four different scales covering the conceptual space of the various listening measures. First, the Facilitating Listening Scale (FLS; Kluger & Bouskila-Yam, 2018) was developed empirically with 139 items gleaned from ten unique and mostly published listening scales and reduced to two ten-item subscales with factor analyses. Thus, the FLS appears to represent the commonality among a broad range of measures. Second, the Layperson-based Listening Scale (LBLS; Lipetz et al., 2020) was developed empirically based on laypeople’s definitions of listening attributes and the centrality of those attributes to the listening construct. Third, the Active-Empathic Listening Scale (AELS; Bodie, 2011) was based on a cognitive theory that includes cognitive and behavioral items assessing the listener’s ability to process and respond to the speaker’s message. Last, the Listening Styles Profile–Revised (LSP-R; Bodie et al., 2013) was designed to measure self-reported listening preferences. Therefore, it measures listening styles rather than listening quality.

As for the frequency of use, we chose the above scales based on our field knowledge. Our choice was later corroborated by a registered systematic review of 664 effect sizes (Kluger et al., 2023). It found that the most frequently used measure in research on listening and work outcomes is the FLS (16%), followed by the AELS (8.1%), which is an adapted version of a measure by Ramsey and Sohi (1997) (6.9%), and the Interpersonal Listening in Personal Selling (ILPS) (Castleberry et al., 1999) (1.7%). The most frequently used measures—FLS and the AELS—were included in our study, and the FLS used the items from all other frequently used scales as input to its factor analysis. Thus, all chosen scales offer comprehensive conceptual coverage, and the FLS and the AELS reflect the most popular measures in the work domain.

Based on past research showing that different listening measures and items reflect two factors, desired (i.e., constructive) and undesired (i.e., destructive) listening behaviors (Kluger & Bouskila-Yam, 2018), we hypothesized that the four listening measures would also diverge into constructive and destructive listening behaviors but converge within each. We expect the LBLS, the FLS’s constructive-listening subscale, the AELS, and the LSP-R subscales of relational and analytical listening, which describe desired listening behaviors, to converge. We also expect these scales to diverge from the FLS’s destructive-listening subscale and the LSP-R task-oriented and critical subscales, which describe undesired listening behaviors and which we expect to converge among themselves.

H1: Scales (subscales) that assess perceptions of constructive listening qualities converge; conversely, scales (subscales) that assess perceptions of destructive listening qualities converge.

Divergent Validity: Divergence (and Convergence) Among Perceived Listening Measures and Positive and Negative Relationship Constructs

Perceived listening is considered a vague construct because of the difficulty of differentiating the perception of listening from other relationship constructs. We propose that these constructs are closely related because they tend to co-occur in real-life relationships. Two types of relationship construct are likely to co-occur with listening: relationship behaviors (e.g., humility, rudeness) and relationship quality (e.g., trust, communal strength), which may be the outcomes of relationship behaviors. Measures of both relationship behaviors and relationship quality are likely to be strongly linked to perceptions of listening. Therefore, these closely-related constructs are difficult to disentangle. To address this difficulty, we adopted a theoretical and methodological approach for studying divergent validity, referred to as the “sibling constructs” framework (Lawson & Robins, 2021). We first briefly describe the framework’s principles and then discuss how we apply them to our conceptual and empirical investigation.

The “Sibling Constructs” Framework for Divergent Validity

Lawson and Robins (2021) suggest that some constructs share close familial relations because they tend to co-occur in nature and fall in the grey area of similarity versus distinctness. Such constructs can be suspected as twins (nearly identical and lacking differentiation) or siblings (similar but still distinct), both at the conceptual and empirical levels. Therefore, assessing the divergent validity of these sibling constructs requires strategies to understand the degree of similarity versus distinctness between them. The notion that divergent validity is a continuum rather than a yes or no decision is not new (Rönkkö & Cho, 2020; Shaffer et al., 2016). However, Lawson and Robins (2021) warn against concluding a lack of distinctness based on high observed correlations. Therefore, they suggest ten conceptual and empirical criteria for identifying sibling constructs and exploring their similarities and differences. The criteria are supposed to evaluate the extent to which two (or more) constructs: (1) are defined in a conceptually similar way; (2) have a high degree of overlap in their theorized nomological networks; (3) have a high degree of overlap in their observed nomological networks; (4) have measures that correlate strongly with each other; (5) have measures that together form a strong general factor; (6) have measures that show little incremental validity over each other; (7) have similar developmental trajectories; (8) share underlying causes (including environmental causes, genetic variance, and neural mechanisms); (9) are causally related to each other; and/or (10) are state/trait manifestations of the same underlying process. The first two criteria require conceptual assessments, and the other eight criteria require empirical assessments.
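Criterion 6 (little incremental validity) can be made concrete with a small worked example. The sketch below uses the standard two-predictor formula for R² computed from zero-order correlations and reports how much the second predictor adds beyond the first. The function names and all correlation values are hypothetical and serve only to show how incremental validity shrinks as two predictors become more strongly correlated.

```python
def r2_two_predictors(r_y1: float, r_y2: float, r_12: float) -> float:
    """R-squared of a criterion regressed on two predictors, from correlations."""
    if abs(r_12) >= 1:
        raise ValueError("predictors must not be perfectly correlated")
    return (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)


def incremental_r2(r_y1: float, r_y2: float, r_12: float) -> float:
    """Gain in R-squared when predictor 2 is added to a model with predictor 1."""
    return r2_two_predictors(r_y1, r_y2, r_12) - r_y1**2


# Hypothetical values: both predictors correlate 0.30 with the criterion.
for r_12 in (0.30, 0.60, 0.90):
    print(r_12, round(incremental_r2(0.30, 0.30, r_12), 4))
```

In this illustration the gain from the second predictor drops steadily as the correlation between the two predictors rises, which is the pattern expected of twin-like rather than sibling-like constructs.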

Therefore, we split our investigation into conceptual and empirical assessments. The conceptual assessments include theoretical considerations for (1) detecting the relevant constructs suspected to be similar to perceived listening and (2) assessing the conceptual overlaps between them by assessing the similarity in (a) definitions and (b) measuring items. The conceptual analysis encompasses criteria 1–2 and highlights possible overlaps to be tested empirically. The empirical assessments include the empirical tests and criteria recommended by Lawson and Robins (2021) and other scholars (Rönkkö & Cho, 2020; Shaffer et al., 2016) covered in criteria 3–6. Criteria 7–10 are based on longitudinal or experimental data and, therefore, beyond the scope of this study, although we suggest future steps to evaluate these criteria in the Discussion.

The Conceptual Similarity Between Perceived Listening and Relationship Constructs

In the first step of the conceptual investigation, listening-related constructs should be searched for across various relevant disciplines (Lawson & Robins, 2021; Shaffer et al., 2016). To do so, they recommended requesting suggestions from colleagues and subject-matter experts from broad, heterogeneous disciplines who can provide an informed assessment of the potential overlap between a given construct and other existing constructs. For example, Shaffer et al. (2016) quote White (1994), who refers to this as “consultation,” that is, searching citation databases that “are simply inside people’s heads,” and adds that “developing a personal and professional rapport with subject matter experts can make a meaningful difference in a scholar’s ability to identify constructs relevant to this type of study” (p. 84).

Given the potentially broad overlap between perceived listening and relationship-related constructs, our team was composed of researchers with a multidisciplinary background, including clinical, social, and applied psychology and management. In addition, we asked the researchers whose scales we initially considered to suggest additional constructs we should consider. Next, we created a list of relevant constructs related to the nomological network of perceived listening used in various disciplines (e.g., psychology and management). Then, we reviewed the theoretical literature on perceived listening and the list of relevant constructs suggested by the experts. We included only constructs previously suspected to converge with perceived listening (based on theoretical perspective or previous empirical findings). Subsequently, we looked for measures of these constructs in the supervisor-subordinate relationship context. We selected this context for our study due to the extensive research on perceived listening within the organizational context. Therefore, another inclusion criterion was that scales had been constructed to measure supervisor-employee relationships or previously validated to target supervisors or similar targets like instructors.

Table 1 presents the chosen 16 constructs and 26 scales (including subscales) representing the chosen constructs. The last column in Tables 1b and 1c cites scholars who identified conceptual similarities between perceived listening and the chosen relational construct or who reported empirical evidence for their possible convergence. For example, Itzchakov et al. (2022) discussed the theoretical similarities and differences between perceived listening and perceived partner responsiveness, Kluger et al. (2021) discussed the possible convergence between perceived listening and intimacy, and Clark et al. (2019) discussed the similarity of cognitive empathy with active-empathic listening.

Table 1 also presents definitions for the chosen 16 constructs. Note that all measures in Table 1 assess subordinates’ perceptions (rather than the supervisor’s actual behaviors). The measures cover perceived supervisor behaviors (e.g., “admits it when they do not know how to do something”), intentions (e.g., “tries to see where I was coming from”), and relationship quality (e.g., “I have a relationship of mutual understanding with him/her”).

Next, we investigated the constructs’ definitions and item overlaps. The constructs’ definitions in Table 1 point to substantial overlap. For example, Table 1 shows that for the constructive FLS subscale, perceived listening was defined as the perception of the degree to which a person is attentive, understanding [emphasis added], nonjudgmental, empathic [emphasis added], and respectful [emphasis added] when another person speaks to them” (Itzchakov & Kluger, 2017b, p. 4). This definition includes overlaps with empathy and respect. Moreover, this definition is also similar to definitions of other constructs. For example, the Other Dyadic Perspective-Taking (ODPT) scale (Long, 1990) is based on the definition, “the extent to which one’s partner is perceived to be understanding [emphasis added] of the point of view of the other person in the dyad” (p. 93). Also, underlying the Perceived Partner Responsiveness Scale (PPRS; Reis & Shaver, 1988) is the definition, the “degree to which individuals feel understood [emphasis added] and validated” (Reis et al., 2018, p. 272). In the same manner, the negative relationship constructs also show similar overlaps. For example, incivility and rudeness are defined as “a display of lack of regard for others.” This definition is very similar to the perceived-partner-insensitivity definition: “Responses thought to lack understanding [emphasis added], validation, and caring convey a detachment or insensitivity,” similar to the destructive FLS meaning. These definitional similarities point to conceptual overlaps at the construct level.

At the measure level, the conceptual overlap can also be identified by item overlap (Hershcovis, 2011; Shaffer et al., 2016). Table 1 shows representative items of each construct and examples of item overlapping measures of other constructs. Because listening is conceptually related to relationship quality, some listening measures contain items that measure relationship quality. For example, the LBLS has an item “creates good relationships.” Moreover, many relationship constructs contain either an explicit item about listening or items about attention and comprehension of the other person, which are critical aspects of listening. Examples include the PPRS (Reis et al., 2018) items: “Really listens to me” and “Understands me,” and the Satisfaction With My Supervisor Scale (SWMSS; Scarpello & Vandenberg, 1987) item: “My supervisor listens when I have something important to say.”

Based on our conceptual investigation, we hypothesized,

H2: Measures assessing constructive listening qualities are highly positively correlated with measures assessing positive relationship behaviors and relationship quality, hence lacking divergence.

H3: Measures assessing destructive listening qualities are highly positively correlated with measures assessing negative relationship behaviors, hence lacking divergence.

However, the degree of overlap between the measures of the various relationship constructs and the perceived listening measures may still vary, such that some overlaps can indicate twin versus sibling constructs. Lawson and Robins (2021) suggest that researchers should outline the nomological network of the focal construct, including possible concomitants, moderators, and outcomes, and assess the degree of overlap between the nomological networks of the focal construct and other (suspected to be sibling) constructs. The second conceptual criterion by Lawson and Robins (2021) allows us to assess which relationship constructs are more theoretically similar to perceived listening and which can be more theoretically distinct. Therefore, the third step of the conceptual investigation is theorizing the nomological network of perceived listening, including the relationship-related constructs reviewed.

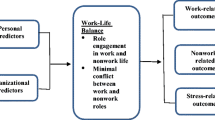

Perceived Listening Nomological Network: Concomitants and Outcomes

When considering relationship constructs that appear conceptually similar to perceived listening, we identified two groups: listening concomitants and listening outcomes. Concomitants of constructive listening are relationship behaviors that can be considered “side-effects” of high-quality listening or whose behavioral repertoire includes high-quality listening. These may include perspective-taking (Long, 1990), showing responsiveness or being responsive (Reis & Shaver, 1988), and giving autonomy-related support (Moreau & Mageau, 2011). In parallel, concomitants of destructive listening may include constructs such as being uncivil (Cortina et al., 2001), rude (Foulk et al., 2016), and insensitive (Crasta et al., 2021), for example, by responding in uncaring ways, being condescending, ignoring, and the like. Perceived listening may also substantially affect many relationship-quality constructs. For example, an employee perceiving their supervisor to listen well may also perceive supervisor support (Shanock & Eisenberger, 2006), empathy (Kellett et al., 2006), affective trust (McAllister, 1995), and even intimacy (Kluger et al., 2021).

Concomitants (relationship behaviors) and outcomes (relationship quality) can be theoretically differentiated because concomitants seem to refer to specific behaviors within specific boundaries. In contrast, outcomes refer to a broad and abstract “sense of accumulated interactions over time” (Itzchakov et al., 2022, p. 9). For example, listening is a behavior that entails in-person conversation. In contrast, support can be conveyed in numerous ways not bound to a conversation or specific interaction. Another example is perspective-taking, which seems like a concomitant of listening, a behavior that requires the specific cognitive ability to understand the other (Long, 1990), while empathy, which seems like a perception of relationship quality, refers mainly to the global experience of being emotionally aligned with another person, independent of actual cognitive understanding (Rankin, Kramer & Miller, 2005 in Spreng et al., 2009).

Moreover, concomitants of perceived listening are theorized to reinforce the perception of meaningful relationships over time by creating a positive feedback loop or “upward spirals” of influence (Canevello & Crocker, 2010; Itzchakov et al., 2022; Wieselquist et al., 1999). Therefore, listening concomitants (relationship behaviors) and outcomes (relationship quality) are theoretically distinct because the perception of relationship quality is a long-term consequence of these “in-the-moment” relationship behaviors (Itzchakov et al., 2022). For example, perceiving that the other person is habitually listening well may cause the speaker to trust them and perceive their relationship as intimate. However, a single interaction in which one experiences high-quality listening following multiple experiences of low-quality listening will probably not foster the perception of an intimate relationship. Therefore, the proposed theoretical differentiation can be validated by demonstrating that while relationship behaviors can foster higher relationship quality, they sometimes fail to reach that goal, perhaps due to moderating factors such as personal (e.g., attachment style, agreeableness), dyadic (e.g., familiarity), and contextual (e.g., organizational climate) variables.

Based on the conceptual similarities and differences reviewed, we hypothesized,

H4: Measures of perceived listening correlate more strongly with measures of perceived listening concomitants (relationship behaviors) than with measures of relationship outcomes (relationship quality).

We note that some construct names point to relationship quality, but their items indicate perceived behaviors. For example, the name of the Satisfaction With My Supervisor Scale (SWMSS; Scarpello & Vandenberg, 1987) suggests relationship quality, but the scale consists mainly of behavioral items, such as “showed concern for my career progress.”

The Empirical Similarity Between Perceived Listening and Relationship Constructs

The four steps of empirical assessment of sibling constructs (Step 3 through Step 6; Lawson & Robins, 2021) require investigating the correlations among measures of constructs identified in the previous steps. Specifically, we conducted four-step testing to gauge convergent and divergent validity, following Lawson and Robins’s (2021) empirical criteria, refined with other methods suggested for assessing divergent validity (Rönkkö & Cho, 2020; Shaffer et al., 2016). The four-step testing includes assessing (1) the strength of the associations between the constructs’ measures (with the disattenuated correlations and the upper limit of their confidence interval), (2) the degree of overlap between the constructs’ nomological networks (with profile similarities of all measures), (3) the underlying clusters in the constructs’ nomological networks (with exploratory factor analysis, multidimensional scaling, and exploratory graph analysis), and (4) the ability to demonstrate incremental validity.

Therefore, we estimated the convergence and divergence of the measures in Table 1 in the context of subordinates’ perceptions of their supervisors. We asked over 2,000 employees to fill out questionnaires in two languages containing 20 instruments encompassing 26 subscales and 269 items. We investigated the correlation matrix with several recommended tools, explained in the Method section below.

We also measured subjective well-being as a criterion. We chose subjective well-being because it is one of the primary outcomes of listening (Kluger & Itzchakov, 2022). Moreover, subjective well-being was found to mediate the relationship between supervisor support and turnover intentions and is strongly related to job satisfaction (Bowling et al., 2010). Following Lawson and Robins’s (2021) guidelines, we aimed to pick a criterion that is theoretically linked to perceived listening and the relationship quality with the supervisor but also sufficiently broad to “cover a wide scope of the constructs’ nomological networks” (Lawson & Robins, 2021, pp. 9–10). Therefore, we chose subjective well-being based on extensive literature showing that this construct strongly correlates with nearly every popular construct related to happiness, emotional well-being, and positive or negative affect (e.g., Diener, 1984; Diener et al., 1999). Moreover, because subjective well-being is an employee-centric characteristic rather than a workplace criterion (such as job satisfaction or turnover intentions), it provides the conceptual distance needed to reduce mono-method variance (Podsakoff et al., 2003) and to detect the possible effects of the different relationship constructs with fewer concerns about halo effects (Mathisen et al., 2011) or affective schemas overshadowing the employees' general evaluations (Martinko et al., 2018).

Method

Participants

We recruited English and Hebrew speakers from four sources: the Prolific online survey panel; volunteers recruited via https://www.researchmatch.org/ (English); an Israeli online panel, Panel4All; and Israeli working students participating for course credit (Hebrew). Prolific participants received 2.5 GBP (approximately 3.5 USD), and Panel4All participants received 15.5 NIS (approximately 5.5 USD). All participants had to be employed at least 20 h per week and primarily with the same supervisor for one year. We reviewed the data for false starts, incomplete data, and careless responses. We removed respondents who indicated they did not work at least 20 h a week, did not report mainly to one supervisor, had extensive missing data, or failed at least two consistency checks. The final sample had 2,038 complete or almost complete surveys, 54% females, Mage = 34.6, SD = 11.0. The tables in the Supplementary Materials report the following: Table S1, more details on sample sources; Table S2, information about the data dropped from the analyses; and Tables S3–S6, the demographic data by sample.

Because we combined the Hebrew and the English samples, we tested measurement invariance across the two language groups with confirmatory factor analysis (CFA) and tested metric invariance within the Exploratory Graph Analysis (EGA) framework using network loadings (for the difference between the methods and the utility of the EGA framework for measurement invariance, see Jamison et al., 2022). The results indicated configural and partial metric invariance: only 8 of the 26 scales had a significant difference in loadings between the two samples, and even in these eight scales, the absolute difference in network loadings did not exceed 0.05. That is, the relatively large sample in each language (about 1,000) allows even minor differences to be detected as significant. See S7 and S8 in the Supplementary Materials.

Procedure

We invited all participants to fill out a questionnaire online. All participants gave their informed consent at the beginning of the survey. The instructions read,

Please think about your current supervisor. You will be asked questions regarding your relations with her/him during the past month. If you have more than one supervisor, the questions below pertain to the supervisor with whom you spend most of your work time.

We then presented participants with the measures in Table 1. We randomized the order of these measures and the order of the items within each measure. Finally, we asked participants to report their subjective well-being, followed by demographic questions.

Measures

One author translated all items from English to Hebrew, and a research assistant back-translated them to English. The discrepancies were discussed with another author, creating a final Hebrew version (Brislin, 1970). We kept the original anchors of the Likert scale for each measure but expanded all to 11-point scales for consistency. For example, where the extreme scale points were “Strongly disagree” and “Strongly agree,” we presented a scale ranging from 0 = Strongly disagree to 10 = Strongly agree. All items were adapted to refer to “my supervisor.” For example, in items referring to “my partner,” the target was changed to “my supervisor.” We provide example adapted items for each scale in Table 1 and Cronbach’s α for all scales in Table 2. See the elaborated list of measures in Supplementary Materials (S15).

Analyses

We conducted four-step testing to gauge discriminant validity. The first three are relatively novel and are explained below.

Step 1: A Strong Association Between Measures of Sibling Constructs.

Based on Rönkkö and Cho’s (2020) recommendation for assessing the divergence of constructs based on one-time measurement, we used two empirical methods: (a) the disattenuated correlation and (b) the upper limit of the confidence interval (CIul) of the disattenuated correlation (see Footnote 3). The disattenuated correlation estimates the “true” correlation by dividing the observed correlation between two variables by the square root of the product of the variables’ reliabilities (often estimated with Cronbach’s α). The CIul of the disattenuated correlation has the advantage of estimating the possible “true” correlation in the “worst-case scenario.” Rönkkö and Cho (2020) proposed that if the CIul is equal to or greater than 1, there is evidence of a severe discriminant-validity problem. If 0.90 ≤ CIul < 1, the evidence is moderate; if 0.80 ≤ CIul < 0.90, the evidence is marginal.
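The computation described above can be illustrated with a minimal Python sketch (the analyses themselves were run in R). The input values are hypothetical, and the confidence interval is an assumption made for illustration: a Fisher-z interval for the observed correlation whose upper bound is then disattenuated, treating the reliabilities as known. The procedure the authors actually used is described in their Footnote 3 and may differ.

```python
import math

def disattenuate(r_xy, rel_x, rel_y):
    """Divide an observed correlation by the square root of the product of the reliabilities."""
    return r_xy / math.sqrt(rel_x * rel_y)

def fisher_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for an observed correlation via Fisher's z (illustrative assumption)."""
    z = math.atanh(r)
    se = 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)

def classify_ci_upper(ci_ul):
    """Label the discriminant-validity concern using the cutoffs quoted in the text."""
    if ci_ul >= 1.0:
        return "severe"
    if ci_ul >= 0.90:
        return "moderate"
    if ci_ul >= 0.80:
        return "marginal"
    return "none flagged"

# Hypothetical inputs: observed r, two Cronbach's alphas, and a sample size
r_obs, alpha_x, alpha_y, n = 0.80, 0.90, 0.85, 2038

rho = disattenuate(r_obs, alpha_x, alpha_y)
_, r_upper = fisher_ci(r_obs, n)
ci_ul = disattenuate(r_upper, alpha_x, alpha_y)

print(f"disattenuated r = {rho:.3f}, CIul = {ci_ul:.3f}, concern = {classify_ci_upper(ci_ul)}")
```

Note that an observed correlation of 0.80 already implies a disattenuated correlation above 0.90 once both reliabilities fall below 1, which is why the upper limit, rather than the point estimate alone, drives the classification.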

Step 2: Assessing the Degree of Overlap Between the Constructs’ Nomological Networks.

We assessed the nomological networks of all measures in Table 1 by testing the profile similarities of all measures. Measures of profile agreement “can quantify the extent to which two siblings share similar ‘profiles’ of correlations with the variables in their shared nomological networks” (Lawson & Robins, 2021, p. 7); that is, they index the correlation between the two constructs’ patterns of correlations with the other constructs. We used two indices for profile similarity: Pearson’s r and the double-entry intraclass correlation (ICCde; see Footnote 4).
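As a rough illustration of the two indices, the following Python sketch computes both for a pair of measures from a correlation matrix. The matrix is hypothetical, and the function name `profile_similarity` is introduced here for illustration only. The double-entry ICC is computed in the usual way, as the Pearson correlation after each pair of profile values is entered twice, once in each order; unlike Pearson’s r, it is therefore sensitive to differences in profile level as well as shape.

```python
import numpy as np

def profile_similarity(corr, i, j):
    """Return (Pearson r, double-entry ICC) between the correlation profiles of measures i and j.

    A profile is the vector of a measure's correlations with all remaining measures.
    """
    others = [k for k in range(corr.shape[0]) if k not in (i, j)]
    x = corr[i, others]
    y = corr[j, others]
    pearson = np.corrcoef(x, y)[0, 1]
    # Double entry: stack (x, y) and (y, x) so that each pair appears in both orders
    xx = np.concatenate([x, y])
    yy = np.concatenate([y, x])
    icc_de = np.corrcoef(xx, yy)[0, 1]
    return pearson, icc_de

# Hypothetical 5 x 5 correlation matrix; measures 0 and 1 are the focal pair
corr = np.eye(5)
corr[0, 1] = corr[1, 0] = 0.8
corr[0, 2:] = corr[2:, 0] = [0.2, 0.4, 0.6]
corr[1, 2:] = corr[2:, 1] = [0.3, 0.5, 0.7]

pearson, icc_de = profile_similarity(corr, 0, 1)
print(f"Pearson r = {pearson:.3f}, ICCde = {icc_de:.3f}")
```

In this toy example the two profiles have the same shape but differ in level by 0.1, so Pearson’s r is at its maximum while the ICCde is lower.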

Step 3: Assessing Clusters in the Constructs’ Nomological Networks.

To explore the nomological network of perceived listening, we used exploratory factor analysis (EFA), multidimensional scaling (MDS), and exploratory graph analysis (EGA). EGA is part of a new field called network psychometrics, which focuses on fitting undirected network models to psychological datasets (Golino & Epskamp, 2017). Comparisons of EGA with traditional methods revealed that EGA is less affected by sample size and inter-dimensional correlation (Avcu, 2021). A few simulation studies have shown that EGA outperforms the best-known EFA methods (Hayton et al., 2004), namely parallel analysis (PA) and the minimum average partial procedure (MAP), when correlations between factors are high and the number of items per factor is low (Christensen & Golino, 2021a; Golino & Demetriou, 2017; Golino & Epskamp, 2017; Golino et al., 2020). Moreover, EGA provides two desirable outputs: a network plot depicting the relations among the clusters and their components, and stability estimates for the number of clusters and the placement of each measure in its assigned cluster, which are based on a bootstrapping procedure (explained further below). Considering its attractive features, EGA has taken its place in the literature as a remarkable alternative to traditional methods (Christensen et al., 2019; Cosemans et al., 2022; Panayiotou et al., 2022; Turjeman-Levi & Kluger, 2022). However, we also used the traditional EFA and MDS to gauge the benefit of the new EGA approach.

The methods are based on different data-generating hypotheses (the latent causes of the observed variables), even though they are mathematically equivalent (Christensen et al., 2020; Golino & Epskamp, 2017). EFA assumes latent common causes (i.e., factors) with manifest variables (e.g., questionnaire items) expressed as a function of these latent factors. In contrast, EGA assumes that psychological constructs (such as relationship quality) arise not because of a latent common cause but rather from bidirectional causal relationships between observed variables (Cramer et al., 2012, as cited in Christensen et al., 2020). Therefore, EGA creates psychometric network models whereby factors or dimensions emerge from densely connected sets of nodes that form coherent subnetworks within the overall network rather than reflecting latent common causes.

Because of EGA’s newness, we explain its three essential steps. The first step is estimating a network model. Network models are depicted by nodes and edges, where variables (e.g., test items), represented as nodes, are connected by edges, which indicate the strength of the association between the variables. Edges are partial correlation coefficients representing the remaining association between two variables after controlling for all other variables.
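To make the notion of an edge concrete, the following Python sketch derives partial correlations from a correlation matrix by inverting it and rescaling the off-diagonal elements. This is a simplified illustration with a hypothetical three-variable matrix: EGA implementations commonly add regularization (e.g., the graphical lasso) when estimating the network, which this sketch omits.

```python
import numpy as np

def partial_correlations(corr):
    """Partial correlation of each pair of variables, controlling for all other variables."""
    precision = np.linalg.inv(corr)          # inverse of the correlation matrix
    d = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(d, d)       # rescale and flip the sign of the off-diagonals
    np.fill_diagonal(pcor, 0.0)              # a node has no edge with itself
    return pcor

# Hypothetical chain A - B - C: A and C correlate only through B (0.25 = 0.5 * 0.5)
corr = np.array([
    [1.00, 0.50, 0.25],
    [0.50, 1.00, 0.50],
    [0.25, 0.50, 1.00],
])

pcor = partial_correlations(corr)
print(np.round(pcor, 3))
```

Although A and C have a nonzero zero-order correlation, their edge vanishes once B is controlled for, which is the sense in which edges represent the remaining association between two variables.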

The second step applies a community detection algorithm to estimate the underlying dimensions in the estimated network model. EGA uses a clustering algorithm for weighted networks (walktrap; Pons & Latapy, 2006, as cited in Christensen & Golino, 2021a) that estimates the number and content of the network’s communities (Christensen & Golino, 2021a). As output, EGA produces a network loading matrix. Conceptually, network loadings represent the strength of the standardized node split between dimensions; mathematically, the network loading stands for the standardized sum of edge weights (i.e., partial correlations) of a node with all nodes in the same dimension (see Christensen & Golino, 2021b, for mathematical notation; see also Footnote 5). Christensen and Golino (2021b) used data simulations to identify effect size guidelines that correspond with traditional factor loading guidelines: small (0.15), moderate (0.25), and large (0.35) network loadings. The loadings are relatively small because network models do not extract the common covariance between variables (as in EFA) but instead map the unique associations between the variables with the estimated number of factors. That is, network loading magnitudes depend only on each node’s relative contribution to the overall sum of the weights in the factor (after removing much of the common covariance, and then partitioning across all factors detected). Therefore, relative to factor models, EGA would likely lead to fewer large network loadings but an abundance of small to moderate loadings (Christensen & Golino, 2021b).

The third step is conducting bootstrap EGA to estimate and evaluate the stability of the dimensional structure estimated using EGA (Christensen & Golino, 2021a). Two issues may affect the stability of network estimations. First, the number of dimensions identified may vary depending on random features of the sample or its size (damaging the network’s structural consistency). Second, even if the number of dimensions is consistent across samples, some items may be assigned to one dimension in one sample and to another dimension in a different sample (damaging the item’s stability, which is another measure of structural consistency; see Footnote 6). The bootstrap EGA generates the desired number of bootstrap samples and applies EGA to each replicate sample, forming a sampling distribution of EGA results. This procedure begins by estimating a network using EGA and generating new replicate data from a multivariate normal distribution (with the same number of cases as the original data). EGA is then applied to the replicate data iteratively until the desired number of samples is achieved (e.g., 500). The result is a sampling distribution of EGA networks (see Footnote 7).
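The resampling logic of this third step can be sketched in Python as follows. This is only an outline of the parametric bootstrap loop, not of EGA itself: `estimate_dimensions` is a hypothetical stand-in that counts eigenvalues above 1, used here solely so that the example is self-contained, and the data are simulated.

```python
import numpy as np

def estimate_dimensions(data):
    """Hypothetical stand-in for EGA: count eigenvalues of the correlation matrix above 1."""
    eigenvalues = np.linalg.eigvalsh(np.corrcoef(data, rowvar=False))
    return int(np.sum(eigenvalues > 1.0))

def parametric_bootstrap(data, n_boot=500, seed=0):
    """Re-estimate the number of dimensions on multivariate-normal replicates of the data."""
    rng = np.random.default_rng(seed)
    n_cases, n_vars = data.shape
    corr = np.corrcoef(data, rowvar=False)
    counts = []
    for _ in range(n_boot):
        # Replicate data with the same number of cases as the original sample
        replicate = rng.multivariate_normal(np.zeros(n_vars), corr, size=n_cases)
        counts.append(estimate_dimensions(replicate))
    return np.array(counts)

# Simulated data: two uncorrelated clusters of three variables each
rng = np.random.default_rng(1)
n = 500
factors = rng.standard_normal((n, 2))
data = 0.8 * np.repeat(factors, 3, axis=1) + 0.6 * rng.standard_normal((n, 6))

counts = parametric_bootstrap(data, n_boot=100)
stability = np.mean(counts == estimate_dimensions(data))
print(f"median dimensions = {np.median(counts):.0f}, replication rate = {stability:.2f}")
```

The replication rate plays the role of the structural-consistency estimate described above: it is the share of replicate samples that reproduce the number of dimensions found in the original data.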

We used R (version 4.0.4; R Core Team, 2021) for all analyses. For EGA, we applied the EGAnet package, including the EGA and bootEGA functions (version 1.2.3; Golino & Christensen, 2021) in R. For EFA, we used the n_factors function of the parameters package (Lüdecke et al., 2020). Then, we subjected the data to an EFA with oblimin rotation, using the fa function of the psych package (Revelle, 2020). We performed MDS with the isoMDS function of the MASS package (Venables et al., 2002) and searched for the lowest number of dimensions that would satisfy the criterion of stress < 0.15 (Dugard et al., 2010).

Transparency and openness

All data, analysis, code, and Supplementary materials are publicly available at the Open Science Framework (OSF) and can be accessed at https://osf.io/jp46y/?view_only=4840630d0b964df7a0ff5a61d749a0a7.

Results

In Step 1 of our empirical analysis, we inspect the disattenuated correlations among all measures (Table 2, above the diagonal). Disattenuated correlations suggesting a marginal problem with divergent validity (CIul ≥ 0.80) are in bold; those suggesting a moderate problem with divergent validity (CIul ≥ 0.90) are in bold and underlined. In Step 2, we inspect the degree of overlap between the constructs’ nomological networks (Table 3), with ICCde (below the diagonal) and Pearson’s r (above the diagonal). The first constructs in both tables are the listening measures, followed by the relationship ones. Therefore, the correlations and profile similarity indices of listening measures are found in the upper-left quadrant of the matrices, representing the convergence among listening measures. The correlations and profile similarity indices between listening and relationship constructs are located in the bottom-left and the upper-right quadrants and represent the degree of divergence of listening measures from measures of relationship constructs. Step 1 (Table 2) and Step 2 (Table 3) test H1 to H3 as follows:

H1: Perceived Listening Measures: Convergence to Two Unipolar Constructs

Table 2 shows that five of the eight listening subscales converge to different degrees: the Facilitating Listening (FLS) constructive subscale seems almost identical to the Layperson-Based Listening (LBLS), ρ = 0.96; both correlate highly with Active‐Empathic Listening (AELS), ρ = 0.89, and at least 0.80 with the relational and analytical subscales of the Listening Styles Profile (LSP-R). The FLS destructive subscale and the task-oriented and critical LSP-R subscales diverge from each other and from the other listening scales. The above conclusions are bolstered by the profile similarity indices in Table 3. For example, the profile similarity of the constructive FLS subscale and the LBLS was 0.99 with the ICCde and 1.00 with Pearson’s r. In contrast, the task-oriented LSP-R subscale has negative ICCde values with all other listening scales and low Pearson’s r values. Therefore, it appears unrelated to all other constructs except incivility, r = 0.60, and psychological control, r = 0.71. Overall, the constructive FLS, LBLS, AELS, and the relational and analytical subscales of the LSP-R seem to converge with one another and to diverge from the destructive FLS. The task-oriented and critical LSP-R subscales diverge from all other perceived listening measures.

H2: Constructive Listening and Positive Relationship Constructs: Convergence and Divergence

Except for the Listening Styles Profile (LSP-R) task-oriented and critical subscales, all listening measures have high disattenuated correlations with many measures of relationship constructs. Out of the 325 disattenuated correlations in Table 2, 191 (58.7%) had CIul ≥ 0.80 (boldface), and 41 (12.6%) had CIul ≥ 0.90 (boldface and underlined). This pattern suggests that most listening and related measures overlap to various degrees, indicating marginal or moderate discriminant validity concerns. For example, the measure of respect correlates at ρ = 0.90 or above with two listening subscales, perspective-taking, supervisory satisfaction (JDI), responsiveness (PRI), and insensitivity (negatively). Other scales, such as support, supervisory satisfaction (SWMSS), and responsiveness, correlate at ρ = 0.90 or above with listening subscales and other scales. Only three variables of the 27 included in Table 2 show clear divergence: two subscales of the LSP-R and our criterion measure of subjective well-being.

The profile similarities in Table 3 also support the above conclusions. Of all Pearson’s r values, 20% are 0.98 and above, and 19% of the ICCde values are 0.90 and above. For example, Pearson’s r of the LBLS, constructive FLS, and AELS with satisfaction with supervisor support (SWMSS) are all 0.99. Table 3 raises further concerns about discriminant validity. The Layperson-Based Listening (LBLS) has a profile correlation of 1.00 with the Perceived Responsiveness (PRI) measure of responsiveness, and the Facilitating Listening (FLS) constructive subscale has a profile correlation of 1.00 with humility. Moreover, 9.2% of the profile similarities between the relationship constructs (based on Pearson’s r) are 0.99. These include, for example, humility and respect, communal strength and intimacy, and satisfaction with supervisor (SWMSS) with autonomy support, both measures of responsiveness, the Layperson-Based Listening (LBLS), and the Facilitating Listening (FLS) constructive subscale. This pattern raises questions about the discriminant validity of the listening measures and most relationship constructs.

H3: Destructive Listening and Negative Relationship Constructs: Convergence and Divergence

The Facilitating Listening (FLS) destructive subscale also shows divergent validity problems. It correlates highly with the negative relationship constructs: Perceived Insensitivity (PRI), rudeness, and incivility. For rudeness, the disattenuated correlation with destructive FLS is 0.89, the CIul is above 0.90, and the profile similarity is 0.96 (Pearson’s r). For incivility, the disattenuated correlation with destructive FLS is 0.86, and the profile similarity is 0.99 (Pearson’s r).

H4: Perceived Listening Association with Relationship Behaviors versus Relationship Quality Constructs: Convergence and Divergence of Clusters in the Data

In Step 3, we assess the clusters in the constructs’ nomological networks with EFA, MDS, and EGA, enabling us to test H4.

EFA suggested that the number of underlying factors in the data is likely one or two (see Table S9 in Supplementary Materials). We then explored whether we could sensibly interpret the two-factor solution. The results, shown in Table 4, point to positive and negative relationship factors, which are correlated negatively and strongly, r = -0.64, but with a low enough r value to indicate that the two may not be isomorphic (Kenny, 2012; van Mierlo et al., 2009, as cited in Shaffer et al., 2016).

To test the stability of this result, we repeated the EFA for each language separately. Then, we correlated the English-speaking sample’s loadings with the Hebrew-speaking sample’s loadings—the loadings of the first and second factors correlated at 0.88 and 0.96, respectively. Regressing the loadings of the first factor in one language on the loadings in the other language revealed discrepancies only for loadings of less than 0.40 in both languages. These results suggest a similar ordering of the loadings in both languages. These loadings are reported in Tables S10-S11 in the Supplementary Materials. These results also raise questions about the discriminant validity of the listening measures and most relationship constructs. They seem to support H2 and H3 by showing that the different measures diverge into two factors: constructive and destructive measures.

Next, we subjected the 26 subscales to MDS and EGA. The EGA replicated the MDS results. Therefore, the MDS results are reported only in the Supplementary Materials (Figures S16-S17, S26). The EGA yielded a three-cluster solution (Fig. 1 and Table 5; see the elaborated analysis report in the Supplementary Materials, S27). The structural stability analysis showed that the three-cluster solution is highly stable (number of clusters CI [3, 3]) and is replicated in 100% of the bootstrap samples. All scales show good item stability, with replications of over 97% in their empirically derived cluster (see Figure S20 in Supplementary Materials). Similar results were obtained when we ran the EGA separately on the English-speaking and the Hebrew-speaking samples (see Figures S21-S22 in Supplementary Materials).

The EGA suggests three clusters: negative relationship behaviors, positive relationship behaviors, and relationship quality. The EGA (and MDS) reveal a clustering of measures not seen in the EFA. This solution separates the positive relationship factor into two clusters: the behaviors (e.g., humility, listening) and the quality (e.g., trust, intimacy).

Validation

In Step 4, we sought to determine whether the three clusters forming the nomological network of listening—negative relationship behaviors, positive relationship behaviors, and relationship quality—have incremental predictive power in explaining the variance of our chosen criterion, subjective well-being (SWBL; Diener et al., 1985). We also aimed to determine whether the relationship outcomes mediate between the negative and positive relationship behaviors and subjective well-being.

To proceed with validation, we created scales for the three relationship clusters by taking the scales with the highest EGA loadings for each cluster (> 0.25) that loaded on only one cluster and averaging their scores to create a cluster score. Cronbach’s αs of all three cluster-based scales were high (Table 6). Then, we regressed our criterion of subjective well-being on the three relationship clusters. Only relationship quality was a significant predictor, β = 0.33, CI [0.24, 0.42], p < 0.001, and the partial effects of positive behaviors, β = 0.00, CI [-0.06, 0.07], p = 0.97, and negative behaviors, β = -0.04, CI [-0.09, 0.02], p = 0.21, were almost zero. This regression shows that the cluster that includes listening does not predict well-being once relationship quality is considered. To better understand the plausible effect of relationship behaviors on well-being, we constructed, post hoc, a mediation model where relationship quality mediated the effects of positive and negative relationship behaviors (Fig. 2). The model fit the data well, χ²(2) = 2.16, p = 0.33, CFI = 1, RMSEA = 0.006 [0, 0.045], SRMR = 0.007. The model suggests that negative behaviors (e.g., rudeness, destructive listening) and positive behaviors (e.g., constructive listening, humility) of the supervisor affect relationship quality (e.g., intimacy, trust), which, in turn, affects the employees’ subjective well-being, β = 0.36, CI [0.32, 0.40], p < 0.001. Moreover, whereas the semi-partial effect of positive behaviors on relationship quality is very strong, β = 0.86, CI [0.83, 0.89], p < 0.001, the semi-partial effect of negative behaviors on relationship quality is small, β = -0.04, CI [-0.069, -0.006], p = 0.019. Nevertheless, this effect is significant, and its indirect effect on well-being is also significant, β = -0.01, CI [-0.025, -0.002], p = 0.020. We examined whether the path analysis results could be an artifact of non-normality, interactions, or nonlinearity. These are present in the data but negligible in their effects. See elaboration in the Supplementary Materials (S28).
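In a simple mediation model of this kind, an indirect effect is the product of the path from the predictor to the mediator and the path from the mediator to the outcome. The short Python sketch below applies this product-of-coefficients rule to the rounded standardized paths reported above, as a consistency check only. The variable names are introduced for illustration, and the implied indirect effect of positive behaviors is an arithmetic consequence of the reported paths, not a value reported in the text.

```python
# Standardized path coefficients as reported in the text (rounded)
a_positive = 0.86   # positive behaviors -> relationship quality
a_negative = -0.04  # negative behaviors -> relationship quality
b_quality = 0.36    # relationship quality -> subjective well-being

def indirect_effect(a, b):
    """Product-of-coefficients estimate of an indirect (mediated) effect."""
    return a * b

indirect_negative = indirect_effect(a_negative, b_quality)
indirect_positive = indirect_effect(a_positive, b_quality)

print(f"indirect effect of negative behaviors: {indirect_negative:.3f}")
print(f"indirect effect of positive behaviors: {indirect_positive:.3f}")
```

The product for negative behaviors rounds to the reported indirect effect of -0.01; small discrepancies from model-based estimates are expected because the inputs here are rounded to two decimals.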

Discussion

We raised questions about the convergent and divergent validity of perceived listening measures in the context of subordinate perceptions of their supervisor. Because we suspected that perceived listening and other relationship-related constructs might be “sibling constructs,” we used the criteria proposed by Lawson and Robins (2021) to assess the constructs’ relationships. First, we identified, in several fields, constructs suspected of converging with perceived listening. These constructs showed high conceptual overlap with perceived listening at the construct level (i.e., similarity in definitions) and the measure level (i.e., overlapping items) (Criteria 1–2). We also asked subordinates to rate their supervisor’s listening and relationship with them on the suspected constructs’ measures, comprising 26 scales and subscales, and subjective well-being. We inspected the disattenuated correlations among the measures and their confidence intervals (Criterion 3), two profile similarity indices (Criterion 4), and the underlying structure of the 26 scales with EFA and EGA (Criterion 5). Moreover, we modeled the relationship between the discovered clusters and subjective well-being (Criterion 6). Finally, we tested the stability of the results across two cultures.

Overall, we found that (a) listening measures form two unipolar constructs (constructive and destructive listening): all the perceived listening measures showed a high degree of convergence, except for the task-oriented and critical subscales of the Listening Styles Profile (LSP-R), which diverged from all measures, a finding consistent with evidence that these subscales may not represent destructive behaviors exclusively (Bodie et al., 2013); (b) the two unipolar perceived listening constructs cannot be easily differentiated from other relationship constructs, as many of the disattenuated correlations of listening with relationship-related measures exceed 0.80, and even 0.90, indicating a marginal or a moderate problem of divergence; however, (c) the EGA suggests some divergence of three clusters: negative relationship behaviors, positive relationship behaviors, and relationship quality.

Our findings suggest several implications. First, they advance understanding of construct validity and the nomological network of the construct of perceived listening. Second, they highlight methodological issues related to overlapping, closely related relationship constructs and demonstrate how the “sibling constructs” framework and criteria can be applied to resolve them. Finally, they demonstrate the methodological utility of EGA for psychometric research by evaluating the possible divergence between closely related constructs. In the following section, we discuss our findings’ theoretical and methodological implications.

Perceived Listening Nomological Network: Two Versus Three Factors (Clusters) Solutions

The EFA of the perceived listening and relationship scales yielded two factors: positive and negative relationship behaviors, consistent with the findings of other researchers. Shapiro et al. (2008) asked 217 managers from the U.S. and Korea to rate seven prosocial work behaviors (e.g., respect) and four antisocial work behaviors (e.g., incivility) concerning four work-related scenarios. Their EFA suggested positive and negative action factors. They concluded that the supposedly different measures capture the same underlying constructs. Moreover, Martinko et al. (2018) demonstrate that subordinate evaluations of leaders are primarily a function of the degree to which subordinates have positive and negative feelings about their supervisor. They show that positive versus negative “affective schemas” may be the common factors explaining the high intercorrelations between leadership measures.

However, when we applied EGA (or MDS) to our data, the scales appeared to reflect three clusters: negative- and positive-relationship behaviors and relationship quality. Despite the very high correlations among these clusters, they are distinguishable empirically, as shown by the path model linking these three constructs with subjective well-being. The three-cluster solution enables a better understanding of the nomological network of perceived listening, differentiating destructive relationship behaviors (e.g., destructive listening, rudeness, insensitivity), constructive relationship behaviors (e.g., constructive listening, humility, autonomy support), and relationship quality (e.g., trust, intimacy). In the next section, we explore some of the theoretical implications of these findings.

The Distinction Between Constructive and Destructive Supervisory Behaviors

Our finding that relationship behaviors fall into two categories is consistent with the finding of two factors underlying affect and personality (Gable et al., 2003), motivation (Carver & White, 1994), and the structure of attitudes (Cacioppo & Berntson, 1994), suggesting that aversive and appetitive responses are fundamental to human behavior.

In the context of supervisory behaviors, Einarsen et al. (2007) discussed the theoretical importance of this distinction, indicating the need to present a nuanced picture of supervisory behaviors comprising constructive and destructive elements to reflect their possible impact on their employees. Fors Brandebo et al. (2016) empirically validated this theoretical distinction, suggesting that constructive and destructive behaviors predict different outcomes. For example, constructive behaviors were more strongly related to positive (than negative) outcomes, such as trust in the leader and work atmosphere. In contrast, destructive behaviors were more strongly related to negative (than positive) outcomes, such as emotional exhaustion and propensity to leave.

Additionally, identifying constructive and destructive behaviors as separate dimensions enables the exploration of mixtures of different levels of destructive and constructive behaviors and their potential impact. For example, counterintuitively, the passive forms (e.g., “does not show an active interest”), compared to the active forms (e.g., “Uses threats to get his/her way”) of destructive leadership, had a stronger impact on the negative outcomes (Fors Brandebo et al., 2016). Moreover, Chénard-Poirier et al. (2022) found that a leader’s mixed constructive and destructive behaviors (referred to as an inconsistent leadership profile) were related to less desirable outcomes in terms of thriving at work and behavioral empowerment than exposure to a leadership profile dominated by destructive behaviors. These previous findings also show moderate to strong correlations between the destructive and constructive factors, suggesting that many leaders display constructive and destructive behaviors that reflect integrated parts of the behavioral repertoire of leaders (Aasland et al., 2010; Fors Brandebo et al., 2016). The relationship literature similarly shows that constructive and destructive interaction processes are distinct but correlated factors (Gable & Reis, 2001; Mattson et al., 2013). These results align with previous research on perceived constructive and destructive listening, indicating separate but highly correlated factors, validated by demonstrating incremental validity in predicting task-oriented leadership but not in predicting people-oriented leadership (Kluger & Zaidel, 2013).

These findings, including our research, highlight the importance of considering both constructive and destructive leadership behaviors when evaluating supervisory conduct. Simply focusing on the presence or absence of either type of behavior can limit understanding of the leader-subordinate relationship. Instead, one should aim to identify and explore a nuanced picture of supervisory behaviors that combines both constructive and destructive elements. This approach might help in improving leadership selection, development, and training.

The Distinction Between Relationship Behaviors and Relationship Quality

The theoretical literature distinguishes between perceptions of relationship behaviors and relationship quality. However, the empirical literature is mainly based on self-reports of perceptions, and typically, it is unclear whether perceptions of behaviors can be distinguished from perceptions of relationship outcomes (quality). For example, relationship researchers highlight the positive effects of pro-relationship behaviors on outcomes such as trust, commitment, and responsiveness, creating “upward spirals” of influence (Canevello & Crocker, 2010; Itzchakov et al., 2022; Wieselquist et al., 1999). Another theoretical example is the Social Exchange framework. It suggests that people reciprocate positive behaviors with relational outcomes like trust, commitment, and support (Cropanzano et al., 2017; Ladd & Henry, 2000). The need to distinguish relationship behaviors from relationship quality is the fundamental rationale behind behavioral observation studies of marital interaction—researchers want to assess more objectively the behaviors that lead people to feel a certain way (Gottman, 1998; Heyman et al., 2014). However, most of the empirical work on the subject was conducted using self-report measures, which are highly exposed to the problem of raters’ inability to sufficiently distinguish between relationship behaviors and their effects on relationship quality.

In theory, relationship behaviors differ from relationship quality because the former refers to specific behaviors within specific boundaries. In contrast, the latter refers to a broad and abstract “sense of accumulated interactions over time” (Itzchakov et al., 2022, p. 9). For example, listening is a behavior that entails in-person conversation. In contrast, support can be conveyed in numerous ways that are not bound to a conversation or even a specific interaction. Moreover, while relationship behaviors can foster better relationship quality, they sometimes fail to reach that goal. Thus, conceptually, behaviors are antecedents of outcomes, and a third variable could moderate the link between them.

For example, the effect of high-quality listening behavior on relationship quality (psychological safety) is moderated by attachment style (Castro et al., 2016). Also, the effect of perceived supervisor behavior on the employees’ job satisfaction and commitment is moderated by organizational culture (Huey Yiing & Zaman Bin Ahmad, 2009). These findings support the conclusion that distinguishing perceptions of behaviors and perceptions of their outcomes is important because it enables investigating under which circumstances the relationship behaviors by the supervisor will or will not produce a higher-quality relationship.
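To make the moderation logic concrete, the following minimal sketch computes the "simple slope" of relationship quality on a relationship behavior at different levels of a moderator, assuming a linear model with a behavior-by-moderator interaction term. The function name and all coefficients are hypothetical, chosen only for illustration; they are not estimates from the studies cited above or from the present data.

```python
def simple_slope(b_behavior: float, b_interaction: float, moderator: float) -> float:
    """Slope of relationship quality on a behavior at a given moderator value.

    Assumes the moderated linear model
        quality = b0 + b_behavior*behavior + b_mod*moderator
                  + b_interaction*behavior*moderator + error,
    in which the effect of the behavior equals
        b_behavior + b_interaction * moderator.
    """
    return b_behavior + b_interaction * moderator


# Hypothetical standardized coefficients (illustration only, not empirical estimates).
B_LISTENING = 0.40     # effect of perceived listening when the moderator is at its mean (0)
B_INTERACTION = -0.25  # change in that effect per 1 SD increase in the moderator

for label, level in [("low (-1 SD)", -1.0), ("mean", 0.0), ("high (+1 SD)", 1.0)]:
    slope = simple_slope(B_LISTENING, B_INTERACTION, level)
    print(f"moderator {label}: slope of quality on listening = {slope:.2f}")
```

Under these made-up values, the same listening behavior is strongly related to relationship quality when the moderator is low and only weakly related when it is high, which is the kind of pattern that separate measures of behaviors and outcomes would allow researchers to test.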

Our results show that contrary to Martinko’s view (2018), relationship-behaviors and relationship-quality measures are highly correlated but are identifiable as separate clusters. Therefore, this theoretical distinction can be empirically validated. Yet, despite the empirical distinction in our data, the correlation between them was high, calling for further empirical work to establish divergent validity for these broad constructs. As discussed next, this work could be accomplished by adopting the sibling construct framework, which can help understand the complex relations between highly correlated constructs.

The Utility of the “Sibling Construct” Framework for Construct Validity Analyses

In recent years, the replication crisis, on the one hand, and the accumulation of knowledge, on the other hand, seem to have created a need for a bold look at the definitions of psychological constructs, which are often considered part of complex systems. Psychological constructs are part of a network of variables with different definitions, terminologies, and operationalizations; however, they share considerable conceptual and empirical overlap because they tend to co-occur in nature.

Treating divergent validity as a yes–no question pushes researchers to view different constructs either as converging and, therefore, redundant or as diverging and, therefore, forming independent predictors of several outcomes. The “sibling constructs” framework suggests avoiding this dichotomous view and recognizing that establishing divergent validity requires multiple steps to distinguish closely related constructs. This view entails accepting that some psychological constructs are distinct despite showing high intercorrelations and may be causally intertwined, mutually influencing each other. Thus, a high correlation between constructs does not mean they are identical. For example, among 10- and 11-year-old children, physical height and weight correlate in the range of 0.64–0.69 (Johnson et al., 2020), but height and weight are still distinct. If physical measures that correlate at nearly 0.70 are still obviously distinct, then psychological constructs, such as those studied here, are likely to correlate much more highly (due to the difficulty of measuring them without biases) and yet remain theoretically, and possibly empirically, distinct.

To investigate constructs whose measures show such high correlations, Lawson and Robins (2021) offer ten criteria reflecting three approaches: conceptual (Criteria 1–2), empirical (Criteria 3–6), and developmental (Criteria 7–10). By considering these three approaches, we assess the contribution of our work and its limitations, which informs future directions. These considerations include the challenges of (a) distinguishing behaviors from their outcomes, (b) using multiple criteria for establishing incremental validity, and (c) advancing theoretical and empirical work to establish developmental evidence for divergent validity.

Distinguishing Behaviors from Their Outcomes

Our investigation revealed significant conceptual and empirical overlap between perceived listening and relationship quality (outcome) constructs because the definition of perceived listening combines listening behaviors with their relational effect on the speaker. This problematic conceptualization is familiar to the leadership literature, where many leadership scales also combine the perceptions of leadership behaviors with their emotional effect (Knippenberg & Sitkin, 2013; Yammarino et al., 2020). We have shown empirically, with EGA, that it is possible to separate behaviors from outcomes. Nevertheless, given that relationship behaviors overlap with outcomes in many measures, addressing this issue requires fine-tuning the conceptualization of the constructs. For example, how can one distinguish perceptions of listening behavior from perceptions of relationship quality outcomes?

Thus, one implication of using the sibling construct approach is the need to return to the drawing board and refine the construct of perceived listening. For example, existing definitions of listening emphasize attention to the listener (Castro et al., 2016; Itzchakov & Kluger, 2017b; Kluger & Itzchakov, 2022). Yet, attention may be too broad, and its perception may not be sufficiently differentiated from similar constructs. Therefore, it may be that a more specific element of listening, such as devotion, is distinct from other constructs. More specifically, defining listening as “the degree to which a person devotes themselves to co-explore the other with and for the other” (Kluger & Mizrahi, 2023) allows creating measures that will be distinct from outcomes, such as trust, and even from behaviors, such as humility. This conceptual refinement can lead to constructing matching measurement items (e.g., “When listening to me, my supervisor fully devotes themselves to help me explore my thoughts aloud”). Thus, one contribution of the sibling approach, exemplified here, is pointing to a direction for fine-tuning the construct definition and measurement and for planning additional studies to test whether there is better evidence for construct validity.

Using Multiple Criteria for Establishing Incremental Validity

One of the empirical criteria for identifying sibling constructs and exploring their similarities and differences is checking their incremental validity in predicting relevant outcomes because sibling constructs tend to show little to no incremental validity over each other (Lawson & Robins, 2021). For testing incremental validity, we used subjective well-being as a single criterion. We hypothesized that subjective well-being, as a broad and high-level construct empirically related to relationship behaviors and quality (Gordon et al., 2019), would be sufficient to tap nuanced differences between the different relationship constructs. However, we did not find incremental validity in predicting subjective well-being, perhaps because high subjective well-being is a positive phenomenon and can therefore be assumed to be more strongly related to constructive than destructive leadership behaviors (Fors Brandebo et al., 2016). Adding a negative criterion, such as burnout, might have revealed the incremental validity of the three clusters. For example, Roos et al. (2023) found incremental validity of feeling heard (i.e., similar to perceived listening) over conversational intimacy in predicting post-conversation avoidance intentions. Therefore, multiple outcome criteria should be tested because a single failure to find incremental validity is insufficient evidence for lack of divergence.
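As a rough illustration of why highly correlated predictors tend to add little incremental validity, the sketch below uses the standard two-predictor formula that expresses R² in terms of zero-order correlations. The function name and all correlation values are hypothetical; they are not estimates from the present study.

```python
def incremental_r2(r_y1: float, r_y2: float, r_12: float) -> float:
    """Gain in R² from adding predictor 2 to a model that already has predictor 1.

    r_y1, r_y2: correlations of each predictor with the criterion.
    r_12: correlation between the two predictors.
    Uses the two-predictor identity
        R² = (r_y1² + r_y2² - 2*r_y1*r_y2*r_12) / (1 - r_12²).
    """
    if not -1.0 < r_12 < 1.0:
        raise ValueError("r_12 must lie strictly between -1 and 1")
    r2_full = (r_y1**2 + r_y2**2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12**2)
    r2_reduced = r_y1**2  # R² with predictor 1 alone
    return r2_full - r2_reduced


# Hypothetical values: both predictors correlate .50 with the criterion.
for r_12 in (0.30, 0.90):
    gain = incremental_r2(0.50, 0.50, r_12)
    print(f"predictor intercorrelation {r_12:.2f}: incremental R² = {gain:.3f}")
```

With these made-up inputs, the second predictor adds about .135 to R² when the predictors correlate .30 but only about .013 when they correlate .90. A small gain on one criterion is therefore expected for sibling constructs and does not by itself show that the constructs are identical.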

Establishing Developmental Evidence for Divergent Validity

Lawson and Robins (2021) included four more criteria (i.e., Criteria 7–10) for testing how constructs emerge, develop, and change over time based on longitudinal or experimental data. These criteria can suggest future research to demonstrate specific relations between perceived listening and other relationship constructs. For example, it could be that constructive listening is a component of humility, where humility could be manipulated without affecting perceived listening quality. In contrast, manipulating perceived listening may elevate humility under most conditions. Indeed, manipulating listening quality increases humility (Lehmann et al., 2021). However, it remains to be tested whether humility can be manipulated with a non-listening manipulation, such as manipulating awe (Goldy et al., 2022; Stellar et al., 2018), without affecting perceived listening quality.

Another option is testing whether the sibling constructs co-develop similarly (i.e., have similar developmental trajectories) or differently. For instance, can the perception of high-quality listening emerge in a particular relationship or interaction before the perception of responsiveness or trust emerges? Testing the different constructs in different timeframes (e.g., single interaction versus a month of continuing relationship) or in dynamic studies of interaction processes can expose subtleties in the divergence and convergence patterns. Work of this sort can shed light on the perceived listening construct and enhance the understanding of perceived listening’s contribution (among other relationship constructs) to the relationship-building processes.

In summary, our study is the first step in assessing the construct validity of perceived listening using the “sibling construct” framework, demonstrating that achieving construct validity is a continuous process of back-and-forth “conversation” between theory and data. These processes require us to shed a dichotomous “yes–no” perspective and strive to sharpen the ability to delve into the complexity of closely related networks of constructs. To delve into this complexity, we next discuss the methodological benefits of the psychometric network perspective.

The Methodological Utility of the Psychometric Network Perspective and EGA for Sibling Constructs Research