Abstract

The quality of listening in interpersonal contexts has been hypothesized to improve a variety of work outcomes. However, research on this general hypothesis is dispersed across multiple disciplines and is mostly atheoretical. We propose that perceived listening improves job performance through its effects on affect, cognition, and relationship quality. To test our theory, we conducted a registered systematic review and multiple meta-analyses, using three-level meta-analysis models, based on 664 effect sizes and 400,020 observations. Our results suggest a strong positive correlation between perceived listening and work outcomes, \(\overline{r}\) = .39, 95%CI = [.36, .43], \(\overline{\rho}\) = .44, with the effect on relationship quality, \(\overline{r}\) = .51, being stronger than the effect on performance, \(\overline{r}\) = .36. These findings partially support our theory, indicating that perceived listening may enhance job performance by improving relationship quality. However, 75% of the literature relied on self-reports, raising concerns about discriminant validity. Despite this limitation, removing data based solely on self-reports still produced substantial estimates of the association between listening and work outcomes (e.g., listening and job performance, \(\overline{r}\) = .21, 95%CI = [.13, .29], \(\overline{\rho}\) = .23). Our meta-analyses point to the need for further research on (a) the relationship between listening and job knowledge, (b) measures assessing poor listening behaviors, (c) the incremental validity of listening in predicting listeners’ and speakers’ job performance, and (d) listening as a means to improve relationships at work.


Predicting job performance is central to business and psychology research and practice (Kozlowski et al., 2017). Owing to advances in job-performance research, it is now possible to predict as much as 50% of the variance in performance (Cascio & Aguinis, 2008). This success reflects a voluminous body of research that has identified no fewer than 19 families of predictors (Schmidt & Hunter, 1998). Yet none of these predictors concerns the quality of employees’ or managers’ listening.

The absence of listening in job performance research is curious because listening satisfies the three main criteria for considering a skill relevant to performance outcomes: frequency, difficulty, and contribution to effective functioning (Wolvin & Coakley, 1991). Concerning frequency, surveys have shown that employees and managers spend between 42 and 63% of their workday listening (Wolvin & Coakley, 1991). Concerning difficulty, managers consider poor listening one of the biggest challenges they face in training employees (Wolvin & Coakley, 1991). Finally, concerning contribution, many scholars have suggested that listening is the most critical communication skill affecting organizational behavior, including performance (e.g., Brink & Costigan, 2015; Pery et al., 2020; Wolvin & Coakley, 1991; Zenger & Folkman, 2016). Business and psychology research, both theoretical and empirical, seems to have somehow failed to ask two fundamental questions: Is listening an antecedent of job performance for speakers and listeners? If so, how theoretically and practically substantial is this effect? The present study seeks to address these questions.

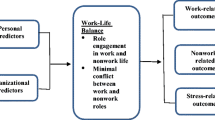

We aim to advance applied psychology and business by identifying a new, potentially impactful predictor of work outcomes that applies across various settings and contexts. Specifically, we explore the role of providing and receiving listening on job performance and its putative antecedents: relationship quality, affect, and cognitions. Additionally, we test a host of moderators of these effects, both general and specific. Our theory-based hypotheses and methodological research questions are presented in Fig. 1 and Table 1.

Theoretical Background

Listening: Conceptual Definition

Listening research typically focuses on listeners’ actions (Worthington & Bodie, 2018). To indicate listening and to create a give-and-take with the speaker, listeners emit multiple non-verbal and verbal cues. Non-verbal cues, known as backchannel communication (Yngve, 1970, as cited in Duncan, 1972; Pasupathi & Billitteri, 2015), include generic signals to indicate attention, such as nodding, directing the gaze, interjecting with fillers (e.g., “uh-huh”; Bavelas et al., 2002), or orienting the body toward the other (cf. Bodie et al., 2014); and specific signals, such as wincing, exclaiming, or laughing as appropriate (Bavelas et al., 2000). Verbal listening signals include paraphrasing (Nemec et al., 2017; Weger et al., 2010), reflecting the other’s feelings (Linehan, 1997; Nemec et al., 2017), asking open-ended questions (Nemec et al., 2017; Van Quaquebeke & Felps, 2018), and asking the speaker to repeat content (Lycan, 1977). Some non-verbal and verbal signals can indicate poor listening: a tone of voice, facial expressions, or gestures that convey inattention, impatience, or judgment of the speaker; changing the topic; asking irrelevant questions; offering advice where none was requested; dual-tasking (e.g., looking at one’s smartphone); or physically disengaging from the conversation.

The non-verbal and verbal cues emitted by the listener are perceived and integrated by speakers holistically (Kluger & Itzchakov, 2022; Lipetz et al., 2020). The speakers use this holistic perception to assess the degree to which the listener pays attention, understands, and acts benevolently (Castro et al., 2016; Itzchakov et al., 2017). Such holistic perceptions of the listener’s behavior may better predict work outcomes than objective or listener-reported measures (see Bodie et al., 2014). For example, a customer’s purchase decisions may be better predicted by the customer’s perceptions of the salesperson’s listening than by the latter’s objective or self-reported listening. Indeed, the primacy of perceptions in predicting behavior has been observed in research on similarity, social support, and understanding: perceived similarity predicts attraction better than actual similarity (Tidwell et al., 2013); perceived social support predicts mental health better than objective social support (Lakey & Orehek, 2011); and the feeling of being understood predicts relational satisfaction better than the partner’s actual understanding (Reis et al., 2017).

Therefore, we focus primarily on perceived listening, defined as the speaker’s evaluation of the listener’s interest in and reaction to their (i.e., the speaker’s) utterances, ranging from negative to positive (see Footnote 1). In the present study, we restrict perceived listening to perceptions of workplace conversations. These conversations may involve people either inside the organization (e.g., co-workers, subordinates) or outside it (e.g., customers, suppliers, clients) and may concern any topic (i.e., work-related and other).

To complete our conceptualization of perceived listening, we distinguish it from other related constructs (Podsakoff et al., 2016). The evaluation of listening is closely related to perceptions of empathy (Kellett et al., 2006), perspective-taking (Lui et al., 2020), absence of rudeness (Porath & Erez, 2007), responsiveness (Reis & Clark, 2013), respect (Frei & Shaver, 2002), and feeling understood (Reis et al., 2017). Theoretically, perceived listening differs from all of these constructs in that it is based on evaluating the other person’s behavior during or after a conversation (Kriz et al., 2021a, b). All the other perceptions may be formed without a conversation. For example, employees can judge from the actions of the CEO (e.g., announcing the opening of a day-care center on the company’s premises) that the CEO has looked at things from their perspective, even if the CEO and employees have never spoken directly. Similarly, a worker who hurt his back while lifting a load may perceive that coworkers’ glances convey empathy without conversing with them. Thus, perceptions of good listening are composed in part of perceived empathy, perspective-taking, absence of rudeness, and so on, but these perceptions may be present without listening.

Our definition diverges from early definitions of listening (for a review, see Goyer, 1954; Nichols, 1948) that tacitly equated listening with recalling the content or meaning of verbal messages (cf. Thomas & Levine, 1994). Equating listening with memory is problematic because it is unclear whether such memory differs from intelligence or from the comprehension of verbal stimuli (cf. Thomas & Levine, 1994; Worthington & Bodie, 2018). Moreover, the voluminous early research on listening focused mainly on the cognitive understanding of aural stimuli (Duker & Petrie Jr., 1964). Understanding aural stimuli, or listening comprehension, was found to share 90% of its latent variance with reading comprehension, with both determined by genetic influences (Christopher et al., 2016). In addition, the correlations between the recall of facts from a conversation and various behavioral indicators of listening (e.g., gaze) are modest at best (Thomas & Levine, 1994). Most relevant, in a sample of 1,148 lawyers, listening (conceived as a form of social influence) correlated only 0.02 with GPA and -0.05 with LSAT scores (Shultz & Zedeck, 2011). Thus, listening as defined here, that is, the perception of listening reported by the speaker, appears to be independent of general mental ability and can, therefore, predict work outcomes that general mental ability cannot explain.

Next, we propose that perceived listening affects job performance through relationship quality, affect, and cognition. Note that these outcomes are interrelated. As will be seen, some effects on affect and cognition derive from the relationship engendered or strengthened by perceived listening. Also, although we use causal language in postulating the theory, we rely primarily on observational data to assess the consistency between the theory and the data. In following this procedure, we respond to calls for nonexperimental researchers to openly discuss causal assumptions while recognizing the limitations of the inferences the data might afford (Grosz et al., 2020).

Effects on the Speaker–Listener Relationship

Listening builds relationships (Rogers & Roethlisberger, 1991/1952) by satisfying, for the speaker, at least three sets of interrelated needs: epistemic needs (Rossignac-Milon et al., 2020), self-determination needs such as relatedness and autonomy (Itzchakov & Weinstein, 2021; Itzchakov et al., 2022a, b, c; Van Quaquebeke & Felps, 2018; Weinstein et al., 2021), and belongingness needs (Dutton & Heaphy, 2003; Stephens et al., 2012). First, because the perception of reality is inherently changeable, and knowledge of reality is uncertain, people need others to help them make sense of their world (Rossignac-Milon et al., 2020). Therefore, a listener whom the speaker perceives as having grasped the speaker’s frame of reference (Rogers, 1951) satisfies the speaker’s epistemic needs by contributing to the speaker’s self-insight (Itzchakov et al., 2020), clarity (Itzchakov et al., 2018), self-knowledge (Harber et al., 2014; Pasupathi, 2001; Rossignac-Milon et al., 2020), and humility (Lehmann et al., 2021). These gains in clarity and knowledge are rewards that reinforce the speaker’s attachment to the listener, creating an “epistemic glue” between the listener and speaker (Rossignac-Milon et al., 2020).

Second, a good listener helps satisfy the three needs specified in self-determination theory: autonomy, competence, and relatedness (Van Quaquebeke & Felps, 2018; Weinstein et al., 2022; see Footnote 2). Perceived listening (or respectful inquiry, in the language of Van Quaquebeke and Felps) satisfies these basic needs because it carries three meta-messages: “(1) [Y]ou [the speaker] have control, (2) you are competent, and (3) you belong” (2018, p. 11). Others have experimentally tested and supported the effects of listening on autonomy and relatedness (Itzchakov, Weinstein, & Cheshin, 2022a, b, c; Itzchakov et al., 2022a, b, c; Weinstein et al., 2021). Again, the satisfaction of these basic needs is a reward. The reward reinforces the speaker’s attachment to the listener, thus creating or strengthening a relationship.

Finally, concerning belongingness, the positive effects of listening on workplace relationships can be partly explained by high-quality connection (HQC) theory (Dutton & Heaphy, 2003). An HQC is a short-term dyadic interaction involving mutual positive regard, trust, and engagement, strengthening a sense of openness to others, competence, and psychological safety (Stephens et al., 2012). An HQC is partly created by positive regard (Rogers, 1951). Speakers form a perception of the listener’s positive regard when the listener is non-judgmental toward their self-disclosures. This perception satisfies the speaker’s need for belongingness and fosters HQCs between the listener and speaker.

Thus far, we have considered how the speaker’s perceptions of the conversation partner’s listening affect how the speaker experiences the quality of their relationship. Yet because listening is a dyadic phenomenon (Kluger et al., 2021), the listener, too, is likely to benefit from the speaker’s perceptions of good listening. A speaker whose needs (for epistemic clarity, self-determination, and belongingness) are satisfied is likely to reciprocate with signals of gratitude that reinforce the listener’s positive regard and humility (Lehmann et al., 2021) and improve the listener’s perception of the relationship with the speaker. Therefore, we predict:

H1: Perceived listening is positively associated, for both parties, with the quality of the relationship between the listener and the speaker.

Effects on Affective Outcomes: Affect, Job Attitudes, and Motivation

Because listening addresses basic psychological needs, satisfying these needs will likely heighten positive emotions and reduce negative emotions for both the speaker and the listener. Moreover, because the sense of positive regard and acceptance that accompanies listening strengthens the dyadic relationship, both parties are likely to feel a greater sense of vitality, understood as “a feeling of positive arousal and a heightened sense of positive energy” that “stems from positive and meaningful relational connections with others” (Shefer et al., 2018). This process then triggers a chain of other positive outcomes. First, heightened vitality levels boost job-related attitudes, such as job satisfaction and commitment. In turn, these positive job-related attitudes will likely increase both individuals’ job-related motivation (Judge et al., 2017). For example, teachers who participated in a year-long listening training reported increased relational energy from their interactions with their colleagues (Itzchakov, Weinstein, Vinokur, et al., 2022a, b, c).

Although affect, job attitudes, and motivation are distinct constructs, we treat them all as affective outcomes of listening:

H2: Perceived listening is positively associated with positive affect (including job attitudes and work motivation) for both the speaker and listener.

Effects on Cognition

Listening confers cognitive benefits on both the listener and the speaker, albeit via different mechanisms. The benefits for listeners can be obvious or subtle. Most obviously, listeners gain new information. Physicians have observed, “If you listen, the patient will tell you the diagnosis” (Holmes, 2007, p. 161). Listening allows people to benefit from others’ experiences, gleaning useful information about risks or opportunities encountered by the speaker or relayed by the speaker about a third party (Harber & Cohen, 2016). This process is dynamic: good listening shapes the nature of the knowledge conveyed. For instance, Kraut et al. (1982) suggested that the more listeners transmit backchannel information (Bavelas et al., 2000; Duncan, 1972), the more speakers adapt their speech to ensure listeners understand the message. Thus, listening increases the amount and quality of information the speaker shares and boosts understanding of the speaker’s intent. At a deep level, listening can lead to “unlearning” processes by the listeners, in which they reassess their assumptions and prior knowledge (Arcavi & Isoda, 2007) and even become more humble (Lehmann et al., 2021).

Turning to the speaker, a perception of being listened to positively affects the speaker’s cognitions by improving memory, self-knowledge (Pasupathi & Hoyt, 2010; Pasupathi & Rich, 2005), attitudinal complexity (Itzchakov & Kluger, 2017; Itzchakov et al., 2017), attitude clarity, reflective self-awareness (Itzchakov et al., 2018), and self-insight (Itzchakov et al., 2020). Building on Bavelas et al. (2000) and others, Pasupathi (2001) theorized that as listeners and speakers co-construct a conversation, they shape the speaker’s memory of the narrated experience, which, in turn, shapes how speakers think about themselves (their identity). Put differently, a sense of being listened to induces in the speaker a richer memory of the narrated event, which eventually becomes part of the speaker’s self-knowledge. In contrast, poor listening constrains the speaker from freely constructing a story and so causes the speaker’s self-knowledge to become fragmented and disconnected from the experiences being related (Pasupathi & Hoyt, 2010; Pasupathi & Rich, 2005; see Footnote 3).

Finally, emotional broadcaster theory (Harber & Cohen, 2016; Harber & Wenberg, 2005) suggests that good listening helps listeners access a story’s emotional core. When a story violates the listener’s initial beliefs, the resulting emotional arousal leads people to seek relief by retelling the story to others. Thus, emotionally powerful stories trigger a cycle of listening and retelling, in which listeners process and consolidate valuable new knowledge by sharing it with a new set of listeners (Harber et al., 2014).

In light of the research described above, we hypothesize:

H3: Perceived listening correlates positively with (a) the listener’s cognition (e.g., knowledge) and (b) the speaker’s cognition (e.g., cognitive complexity).

Effects on Job Performance

Our first three hypotheses concern the effects of perceived listening on three variables known to predict job performance: relationship quality, affect, and cognitions. In each case, our discussion can be extended to show how perceived listening relates to job performance in terms of the employee’s focal job assignments; organizational citizenship behavior (OCB; i.e., performance above and beyond tasks included in the job description); reduced levels of counterproductive work behaviors (CWB); and adaptability.

H1 posits that listening improves the relationship between the listener and the speaker. A range of theoretical mechanisms links the quality of relationships at work with job performance. Some of these effects stem from the positive feelings produced by good relationships. For instance, the feeling of vitality, which results from good relationships (discussed above under H2), protects employees from stressful events at work and motivates them to direct their energies toward work tasks, including their focal job assignment and OCB (Shefer et al., 2018). The positive emotions derived from HQCs elevate cognitive flexibility and creativity (Dutton & Heaphy, 2003), both of which are associated with better job performance. Sguera et al. (2020) theorized that experiencing high-quality relationships at work meets employees’ psychological needs, leading them to identify with the organization and manifest more OCB and fewer CWB (for empirical evidence, see Kluger et al., 2021; Rave et al., 2022).

Another way that high-quality relationships at work improve performance is direct, through the relationship itself. For example, Gottfredson and Aguinis (2017) conducted 35 meta-analyses involving 3,327 primary-level studies and 930,349 observations and concluded that the supervisor–employee relationship, as perceived by the subordinate, is a better predictor of employee performance than several other constructs, such as consideration and initiating structure, contingent rewards, and transformational leadership. Rossignac-Milon et al. (2020) showed that relationships lead to shared cognitions and, eventually, a shared identity. Shared cognition, in turn, facilitates coordination and, consequently, contributes to focal task performance. Using similar reasoning, Gittell et al. (2010) proposed that shared relational coordination facilitates effective communication, contributing to the performance of interdependent tasks. Finally, Colquitt et al. (2007) suggested that trust enables the development of a more effective exchange relationship between the parties and allows employees to focus on the job without monitoring the actions of others or wondering where needed resources will come from. The outcome is improved task performance, higher OCB, lower levels of CWB, and greater willingness to take risks (Colquitt et al., 2007).

H2 suggests that perceived listening increases positive emotions for the speaker and listener. In a review of several meta-analyses, Diener et al. (2020) found that positive emotions are associated with higher job performance, including creativity and OCB. We assume that this association also holds for positive emotions above and beyond those induced by improved relationships at work. Moreover, job satisfaction and commitment, two job-related attitudes that result from positive emotional states at work, strongly predict (ρ = 0.59) a latent job-performance factor that underlies focal performance, contextual performance (OCB), and the absence of lateness, absenteeism, and turnover (Harrison et al., 2006). Positive job attitudes also promote motivation, one of the best predictors of job performance, even after controlling for cognitive ability (Van Iddekinge et al., 2018).

Finally, H3 posits that perceived listening correlates with cognitions, specifically, greater understanding, knowledge, and self-insight. As noted above, good listening increases the amount and quality of information divulged by the speaker. Knowledge gained from listening is likely to include job knowledge, which, in turn, predicts job performance across roles and occupations (Hunter, 1986). Perceived listening also positively affects the speaker’s memory and cognitive complexity, likely improving the speaker’s job performance.

Thus, listening creates a bundle of processes, all expected to affect job performance. Hence, we hypothesize:

H4a: Perceived listening correlates positively with job performance for both the listener and the speaker.

H4a posits a main effect of listening on job performance rather than a mediated effect. Theoretically, we propose that the effects of listening on job performance are mediated by relationship quality, affect, and cognition. Empirically, however, we intend to restrict our meta-analyses by excluding data on the association between the three mediators and job performance. We made this decision because we view testing H1 through H4 as a step to be accomplished before planning research to test the entire model.

Nevertheless, a corollary of our previous hypotheses is that the association between listening and job performance will be weaker than the associations between listening and each of its putative antecedents (relationship quality, affect, and cognition). This hypothesis is a logical extension of our full theoretical (i.e., mediated) model. Therefore, if we consider all four work outcomes of perceived listening simultaneously,

H4b: Perceived listening is associated more strongly with work outcomes, such as relationship quality, affect, and cognition, than with job performance.

Operationally, H4b suggests that the type of outcome moderates the association between perceived listening and work outcomes. The association is stronger for work outcomes classified as relationship quality, affect, and cognition than for job performance.
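The corollary behind H4b can be made concrete with a worked example. As a minimal sketch, assume (for illustration only) a fully mediated path model with standardized variables and a single mediator M (e.g., relationship quality) between perceived listening X and job performance Y. The model-implied correlation is then the product of the two paths, r_XY = r_XM × r_MY, and because |r_MY| ≤ 1, the listening–performance correlation cannot exceed the listening–mediator correlation. The function name and input values are hypothetical; with several correlated mediators or an added direct path, this bound need not hold exactly, so the sketch simplifies the full theoretical model.

```python
def implied_xy_correlation(r_xm: float, r_my: float) -> float:
    """Model-implied X-Y correlation under full mediation by one mediator.

    Assumes standardized variables and the path model X -> M -> Y with no
    direct X -> Y path, so the implied correlation is the product of paths.
    """
    for r in (r_xm, r_my):
        if not -1.0 <= r <= 1.0:
            raise ValueError("correlations must lie in [-1, 1]")
    return r_xm * r_my


# Hypothetical values: listening-mediator r = .50, mediator-performance r = .60.
r_xy = implied_xy_correlation(0.50, 0.60)
print(round(r_xy, 2))  # 0.3, smaller than the listening-mediator correlation
```

Because the second path is a correlation bounded by 1, any attenuation it introduces can only shrink the implied listening–performance association, which is the pattern H4b predicts.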

Job Performance: Behaviors versus Outcomes and Taxonomies

Thus far, we have treated job performance as a unitary concept. The benefit of this approach is that a general factor of performance (Viswesvaran & Ones, 2000) may yield higher validities than any single job-performance component (Harrison et al., 2006). Nevertheless, the effect of listening on job performance may vary depending on whether the job-performance measure reflects behaviors on the job or their results. According to one definition, job performance includes only the “things that people actually do, actions they take, that contribute to the organization’s goals” (Campbell & Wiernik, 2015, p. 48). Others define job performance to include both behaviors and outcomes, viewing them as related (Aguinis, 2019; Dalal et al., 2020) and as influencing each other (Aguinis, 2019). However, job-performance behaviors are more under the employee’s control than job-performance outcomes, which are also shaped by factors beyond the employee’s control. Therefore,

H5: Perceived listening is associated with job performance more strongly when job performance is operationalized as a behavior than as an outcome.

Moreover, job-performance behaviors and outcomes are multifaceted (Dalal et al., 2020). They include, at the very least, (a) focal task or technical performance; (b) OCB or contextual performance; (c) CWB; and (d) proactive or adaptive behavior. In addition, following Harrison et al. (2006), we conceptualize withdrawal behaviors, including tardiness, absenteeism, and turnover, as additional facets of a general performance factor. The magnitude of perceived listening’s effects on these facets is likely to differ because focal task performance is likely to be affected mainly by a single putative mediator (e.g., knowledge), whereas all the other facets are likely to be affected by both relationship quality and affect. Thus,

H6: Perceived listening is associated more strongly with OCB, CWB, adaptive behavior, and withdrawal behaviors than with focal-task performance.

In short, the way job performance is operationalized (as a behavior or an outcome, and as a unitary or multifaceted concept) moderates the effects of listening on job performance. Moreover, the effects of listening on job performance and its antecedents are likely to be moderated by methodological factors and culture, as discussed next.

Methodological and Culture Moderators

Several methodological considerations might affect the strength of the relationships we investigate. Two of these relate to the source of the data and the method of data collection. For example, according to a meta-analysis of 16 studies testing the association between listening and job performance in the sales domain, the average disattenuated correlation between listening and sales volume is ρ = 0.47 (Itani et al., 2019). This meta-analysis also revealed significant heterogeneity that remained unexplained. Yet some of the effect sizes in Itani et al. (2019) were based on salespersons’ self-reported listening and performance (e.g., Castleberry et al., 1999). These effects could be inflated by both mono-source and mono-method biases (Podsakoff et al., 2003). We expect that correlations based on a single source will be stronger than correlations based on different informants. We also expect correlations from different informants using the same method (e.g., a questionnaire eliciting listener-reported performance and another questionnaire eliciting speaker-reported listening) to be stronger than those from studies employing different informants and methods (e.g., customer-reported listening combined with company sales records).
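Disattenuated correlations such as the ρ reported by Itani et al. (2019) adjust observed correlations for unreliability in the measures. A minimal sketch of the classical correction for attenuation, ρ = r_xy / √(r_xx · r_yy), follows. The function name and the reliabilities in the example are hypothetical; they are not the reliability estimates or artifact distributions used by Itani et al. (2019) or in the present meta-analyses, which may also correct for other artifacts.

```python
import math


def disattenuate(r_xy: float, r_xx: float, r_yy: float) -> float:
    """Classical (Spearman) correction for attenuation due to unreliability.

    r_xy: observed correlation between the two measures.
    r_xx, r_yy: reliabilities of the predictor and criterion measures.
    """
    if not (0.0 < r_xx <= 1.0 and 0.0 < r_yy <= 1.0):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r_xy / math.sqrt(r_xx * r_yy)


# Hypothetical reliabilities of .85 (listening) and .90 (outcome measure).
print(round(disattenuate(0.39, 0.85, 0.90), 3))  # 0.446
```

Because reliabilities below 1 shrink observed correlations, the corrected value is always at least as large in magnitude as the observed one; with perfectly reliable measures, the two coincide.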

Another relevant methodological factor is the fit between the time frame for measures of listening and job performance. In attitude research, validities are higher when the predictor and the criterion have a similar construct breadth (Ajzen, 1991). Accordingly, a measure of listening that refers to a single episode will predict job performance better when the measure of performance refers to the same episode (e.g., a customer-rated salesperson’s listening and sales to that customer) than when the measure of performance is general (e.g., that salesperson’s quarterly performance). Similarly, listening measures referring to chronic behavior may predict performance over time better than performance during a single episode. The effects of mono-source bias, mono-method bias, and time frame on effect sizes are likely to be found not only for the job performance measures but also for any of its antecedents (e.g., relationship quality). Thus,

H7: The association of listening with work outcomes is moderated by (a) the use of a single source vs. multiple sources, (b) the use of a single method vs. multiple methods, and (c) the congruence of listening–outcome time frames. The correlation will be weaker when multiple sources and methods are used than in mono-source or mono-method designs, and when listening and work outcomes are reported for mismatched rather than matching time frames.

Relatedly, the type of study design used may influence the observed effects of listening on work outcomes. Although experimental research generally yields larger effects than observational studies owing to experimenter control and reduced noise (Bosco et al., 2015), in the particular domain of listening, experimental studies may yield smaller effects because they are inherently free from common-source biases. Also, studies using predictive validity designs (in which measures of listening in the present are compared against future performance outcomes) are likely to yield weaker results than studies using concurrent validity designs (in which measures of listening are compared against current performance outcomes). Thus,

H8: The correlation between perceived listening and work outcomes is moderated by the study design. The correlation will be lower (and probably less biased) in (a) experimental than correlational designs and (b) predictive than concurrent designs.

Moreover, we expect that even when the design is optimal—different sources, different methods, same time frame, experimental, and predictive—the correlation of perceived listening and job performance will still be substantial relative to other predictors of performance (e.g., conscientiousness).

Culture may also moderate the observed link between listening and performance outcomes. For example, the need for positive self-regard, which may be satisfied by a feeling that one is being listened to, tends to be higher in Western cultures (Heine et al., 1999). To operationalize this conjecture, we expect that the effects of listening will be stronger in countries characterized by secular–rational values and self-expression values, both of which are prominent in Western cultures according to the Inglehart–Welzel Cultural Map (for a review, see Fog, 2021; see also Footnote 4). Thus, for example, employees in Morocco, characterized by low secular–rational and self-expression values, may not expect their leaders to listen to them (Es-Sabahi, 2015), whereas in Western societies, poor listening, even by leaders, is construed as a violation of expected behavior, with effects spilling over into affective reactions and job performance. Hence,

H9: The correlation of perceived listening with all work outcomes will be stronger in cultures high (vs. low) in (a) secular-rational values and (b) self-expression values.
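Moderator hypotheses with a continuous moderator, such as H9, are commonly examined with meta-regression. As a minimal sketch of the idea, the function below regresses Fisher-z-transformed correlations on a single country-level score using fixed-effect inverse-variance weights (n − 3). This is a deliberately simplified stand-in: it ignores between-study heterogeneity and the nesting of effect sizes within studies that three-level models address, and the function name and all data values are hypothetical (they are not Inglehart–Welzel scores or effect sizes from the meta-analytic data).

```python
import math
from typing import List, Tuple


def meta_regression_slope(
    rs: List[float], ns: List[int], moderator: List[float]
) -> Tuple[float, float, float]:
    """Fixed-effect meta-regression of Fisher-z effect sizes on one moderator.

    Each study is weighted by n - 3, the inverse of the sampling variance of
    Fisher's z. Returns (intercept, slope, slope_se) on the z scale.
    """
    if not (len(rs) == len(ns) == len(moderator)) or len(rs) < 2:
        raise ValueError("need at least two studies with matching inputs")
    zs = [math.atanh(r) for r in rs]
    ws = [n - 3 for n in ns]
    w_sum = sum(ws)
    # Weighted means of the moderator and the transformed effect sizes.
    x_bar = sum(w * x for w, x in zip(ws, moderator)) / w_sum
    z_bar = sum(w * z for w, z in zip(ws, zs)) / w_sum
    sxx = sum(w * (x - x_bar) ** 2 for w, x in zip(ws, moderator))
    if sxx == 0:
        raise ValueError("moderator must vary across studies")
    sxz = sum(w * (x - x_bar) * (z - z_bar) for w, x, z in zip(ws, moderator, zs))
    slope = sxz / sxx
    intercept = z_bar - slope * x_bar
    slope_se = 1.0 / math.sqrt(sxx)  # valid when sampling variances are known
    return intercept, slope, slope_se


# Hypothetical studies: observed r, sample size, country-level value score.
rs = [0.25, 0.33, 0.38, 0.45]
ns = [120, 200, 150, 300]
scores = [-1.0, 0.0, 0.5, 1.2]
b0, b1, se = meta_regression_slope(rs, ns, scores)
print(f"slope = {b1:.3f} (SE = {se:.3f}); a positive slope is the pattern H9 predicts")
```

A positive slope on the z scale would mean that correlations grow with the country-level score; in a full analysis, the same logic is embedded in a random-effects (here, three-level) model rather than this fixed-effect version.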

Our nine hypotheses are derived from existing knowledge based on previous findings. We next propose five research questions addressing methodological issues that do not lend themselves to formulating hypotheses. The first three relate to the generalizability of findings, and the last two relate to how listening is measured.

Generalizability (Research Questions 1–3)

Putting aside the questions of time frame and predictive versus concurrent design, the existing studies best able to test how perceived listening correlates with job performance are those in which one person reports perceived listening and objective performance measures are obtained from the organization (i.e., where the correlation is free from common-source and common-method bias). Yet, before asking whether such studies produce consistent results, we must ensure that we are comparing apples with apples. One of the first questions to ask of any study is: whose performance is being measured? For example, Bergeron and Laroche (2009) found that customers’ perceptions of salespersons’ listening were strongly associated with a composite performance variable comprising the salesperson’s gross sales, unit sales, and contribution to profit, r = 0.50, 95%CI = [0.42, 0.57]. In contrast, Ivancevich and Smith (1981) examined salespersons’ ratings of their supervisors’ listening and found weak, non-significant correlations with two performance variables: the salesperson’s new account creations, r = 0.14, 95%CI = [-0.02, 0.29], and conversion ratio, r = 0.11, 95%CI = [-0.05, 0.27]. The apparent inconsistency between these findings need not worry us: although both studies used objective performance measures, they assessed the performance of different targets (the listener and the speaker, respectively).

Given this, it is worth asking whether listening has differential effects when outcomes (job performance or its antecedents) are measured for the speaker vs. the listener. Hence, our first research question:


RQ1: Does the role (listener vs. speaker) of the person whose job outcome is reported moderate the association between listening and that job outcome?

Our second research question concerns how the mediating constructs (relationship quality, affect, cognition) are operationalized. We conceptualized these mediators at a relatively abstract level, but in practice the literature uses various operationalizations of each. For example, relationship quality may be indexed by trust, liking, and relationship satisfaction. Similarly, affect and job attitudes may be indexed by the PANAS, job satisfaction, commitment, and vitality. Thus,


RQ2: Do different operationalizations of (a) relationship quality, (b) affect, and (c) cognition moderate their association with listening?

Next, we ask whether the effect of listening on work outcomes generalizes across sample and study characteristics, including demographic characteristics of the sample and the study’s publication status:


RQ3: Do demographic characteristics of the sample (occupation, gender composition, age) and the publication status of the study (unpublished vs. published, year of publication) moderate the effects of listening on work outcomes?

Measurement of Listening (Research Questions 4–5)

Although our theory focuses primarily on listening as perceived by the speaker, there are two good reasons to consider other measures, namely the listener’s self-reported listening and assessments of objective listening behaviors. First, the measurement of listening often spans the boundaries between enacted listening, behavioral indicators of listening, and the speaker’s perceptions of both. Second, to be as comprehensive as possible, we wanted to assess whether the type of listening measure moderates the perceived listening effects hypothesized in H1–H4.

Listening research relies on three types of informants: listeners, observers, and speakers. For example, the Active Empathetic Listening scale (AEL; Drollinger et al., 2006) allows listeners to self-report their listening quality; the Roter Interaction Analysis System (Roter & Larson, 2002) enables coding by third-party observers; and the Facilitating Listening Survey (Kluger & Bouskila-Yam, 2018) measures speakers’ perceptions of the other party’s listening. Many of these listening measures aim to improve reliability by assessing both more and less observable behaviors. For example, the AEL aims to capture both unobservable listener behaviors (e.g., “I am sensitive to what my customers are not saying”) and observable listener behaviors (e.g., “I summarize points of agreement and disagreement when appropriate”). Similarly, Castro et al. (2016), using speakers as informants, asked about both less quantifiable listener behaviors (“Focuses only on me”) and more quantifiable ones (“Asks for more details”). Thus, it is unclear whether the effects of listening on work outcomes are moderated by the operationalization of listening, including the informant (listener, observer, or speaker) and the type(s) of measure used.

Moreover, some listening measures seem to conflate listening with listening outcomes. For example, the survey used by Lloyd et al. (2017) includes the following: “Generally, when my supervisor listens to me, I feel my supervisor…makes me comfortable so I can speak openly/ makes it easy for me to open up.” It is unclear whether responses to such items reflect perceived listening or evaluations of the listener’s impact on the speaker.

The multitude of listening operationalizations also raises concerns that different studies are not sampling the same construct. On the other hand, various factor analytic studies of listening questionnaires suggest that their items converge to a single factor (Jones et al., 2016; Lipetz et al., 2020; Schroeder, 2016) or one second-order factor (Bodie et al., 2014; Kluger & Bouskila-Yam, 2018).Footnote 5 Many listening questionnaires also achieve high reliabilities, opening the possibility that “listening may even be measured sufficiently with a single item (‘He/She listened to me very well’), in a similar manner [to that] in which job satisfaction can be captured reliably with a single item (Wanous, Reichers, & Hudy, 1997)” (Lipetz et al., 2020, p. 89).

Given the multitude of listening measures, we first ask:


RQ4: What are all the approaches to operationalize listening in predicting work outcomes (e.g., the scale used, the number of items, and whether the scale is published, adapted, or developed ad hoc)? How do they map to the listener’s self-reported behavior, the listener’s observable behavior, the speaker’s perception of listening, and the speaker’s perception of how listening affects them?

RQ4 is descriptive. Answering it characterizes the literature (without requiring a meta-analysis) and may illuminate potential biases in it. However, to the degree that these variables are heterogeneous, it could be informative to test whether they moderate the effects of listening on work outcomes. For example, suppose two studies using different scales find different effects, where one scale is published (and has been shown to be reliable) and the other was developed for the study. In that case, we would test whether the scale’s publication status moderates the listening–outcome association. Similar questions are relevant for the outcome measures. Therefore,


RQ5: Is the relationship between listening and work outcomes moderated by differences in measurement approaches (e.g., the scale used, number of items, and whether the scale is published, adapted, or developed ad hoc)?

In tandem, the investigation of RQ4 and RQ5 may help shed light on the thorny issue of the construct validity of listening (Kluger & Itzchakov, 2022). If different listening scales moderate the effects, this would hint that the listening construct lacks convergent validity. However, as indicated in H7, the literature uses different informants to report listening: some studies ask listeners to self-report their listening, some rely on observers, and some on speakers. The source of the listening measure could also moderate listening-induced effects. Therefore, we tested RQ5 separately for each source; for example, we tested the moderating effects of the listening measures reported by speakers. If listening scales moderate listening effects within sources, the convergent validity of the listening construct would be questionable. In contrast, if different scales within each source converge (no moderation effects), this would raise questions about the discriminant validity of listening (Kluger & Itzchakov, 2022). For example, it may suggest that the findings in the literature are not uniquely about listening but reflect the effects of constructs such as perceived empathy, perspective-taking, or the absence of rudeness.

In sum, we theorize that listening affects job performance because it improves relationship quality, affect, and cognition—known predictors of job performance. Our goal is to assess the direction, strength, and variance of the association between listening at work and our four work outcomes (the three mediators just mentioned and job performance itself), with attention to a host of potential moderators of these effects. The hypotheses and research questions are summarized in Fig. 1 and Table 1.

Method

We followed the PRISMA guidelines for systematic reviews (Liberati et al., 2009; Page et al., 2020) and guidelines developed in psychology and management both for meta-analysis and for transparency more generally (Aguinis et al., 2020, 2011, 2018, 2010a, 2010b; Aytug et al., 2011; Bosco et al., 2016; Gonzalez-Mulé & Aguinis, 2018; Kepes et al., 2013; Polanin et al., 2020; Steel et al., 2021). All authors agreed on the search terms, eligibility, and moderator coding criteria. To test the feasibility of the proposed meta-analyses, we pretested all procedures, from developing a search string to writing R code for testing all our hypotheses and research questions. The workflow from developing search strings to reaching conclusions is depicted in Fig. 2.

Open Science

We shared all procedures, materials, datasets, and code on the Open Science Framework (https://osf.io/czg4u/?view_only=a2c0d53711ed4c4abef15a92633f4cf5; https://doi.org/10.17605/OSF.IO/CZG4U), including a Word version of our Qualtrics coding survey and the R code (with a README.docx explaining it). The Qualtrics survey used for the first batch of studies is also accessible.Footnote 6

Disclosures

Before we became aware of the opportunity to submit this work for consideration for publication as a registered study, we pre-registered it at https://aspredicted.org/mb2s4.pdf. As noted in this pre-registration, the first author conducted meta-analyses of listening effects (not only work-related) based on a search limited to the Web of Science, inspecting records up to February 2017. That search does not qualify as a systematic review because it was done by one person. Nevertheless, based on the effect sizes observed in that earlier work, the first author expected H1 through H4 to be supported. This earlier work is not published and will not be submitted for publication.

Eligibility Criteria

Empirical reports on listening and job performance are rare in management and organizational psychology. For example, searching the Journal of Applied Psychology from 1917 through August 2020 for “listen* AND perform* NOT music” via APA PsycNet yields only eight hits, and only one of them reports an association between listening and job performance (Pace, 1962). Therefore, we adopted the broadest possible search strategy. We searched for any report, published or not, in any language or field of study, regardless of occupation, design, time frame (single interaction or established relationship), or year of publication. Then, to limit our examination to studies of employees, we manually excluded effects involving listening in families, romantic relationships, non-work friendships, children, and therapy settings (except effects involving therapists’ job performance, in which therapists are treated as employees).

Information Sources

We used two main strategies to locate candidate sources. First, we conducted comprehensive searches using two platforms covering multiple databases: ProQuest and EBSCO. To ensure an effective search of these sources, we consulted a university librarian and experts from both ProQuest and EBSCO, as described next.

Concerning ProQuest, two authors reviewed its 59 databases and independently rated their relevance for the search. Both authors included 28 databases and excluded 27, and they disagreed on three. To avoid omission errors, these three were included in the final list, totaling 31 databases. The selected databases include, for example, ERIC and the ABI/INFORM Collection (1971–present) and exclude, for example, Early English Books Online. The complete list of included databases is in Appendix A. We further restricted the ProQuest search to scholarly journals, dissertations and theses, conference papers and proceedings, and working papers, excluding sources such as newspapers. The last ProQuest search was run on April 19, 2022. On the advice of a ProQuest representative, we used the ABTI (abstract and title) operator.

Concerning EBSCO, following consultation with an EBSCO representative, we restricted our search to academic journals and dissertations/theses, excluding publication types such as newspapers and videos. Three authors reviewed the 68 disciplines covered by EBSCO and independently rated their relevance for the search. All three authors excluded 19 disciplines (e.g., anatomy and physiology) and included 13 (e.g., education), and they disagreed on 37. This level of agreement is low, κ = 0.37. Therefore, to avoid omission errors, the three authors discussed each disagreement and ultimately included 40 disciplines. The last EBSCO search was run on April 19, 2022.

In our second strategy, we reviewed the introduction of every paper retrieved from ProQuest and EBSCO that passed the inclusion criteria to search for backward citations. We also checked the reference lists of systematic reviews on our topics (Itani et al., 2019). Because our search spanned multiple disciplines, we did not advertise our search on academic websites. Yet, when we communicated with authors studying listening, we asked about unpublished studies related to our search.

Search Strategy

To optimize the search terms, we balanced two conflicting objectives: including relevant terms reflecting our dependent variables (e.g., job performance) and excluding irrelevant occurrences of the word “listening” that do not reflect our definition. We followed recommendations for search optimization for meta-analyses (Salvador-Olivan et al., 2019). The recommendations we adopted include using Boolean searches with truncated search terms (e.g., listen* instead of listening) and including synonyms of constructs (e.g., contextual performance and organizational citizenship behavior). The first five authors discussed and agreed on the list of inclusion terms.

To avoid searches likely to be futile, we used a capsule approach to exclude irrelevant contexts and methods, and an iterative process to exclude specific terms. As can be seen in Table 2, we constructed a context capsule (e.g., “job*” OR “work*”) and a method capsule (e.g., “quantitative”). These capsules exclude papers reporting listening effects in non-work contexts and papers that are not quantitative. Although some relevant hits could be lost due to these capsules, they dramatically reduce the number of hits to a manageable set of candidate papers. For example, a search-string pretest in EBSCO produced approximately 25,000 hits without these two capsules and about 11,000 hits with them.
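The capsule logic can be illustrated with a small Python sketch that assembles a Boolean string: synonyms within a capsule are joined with OR, capsules are joined with AND, and exclusion terms are removed with NOT. The helper names are hypothetical, the terms are only the examples mentioned in the text (not the full strings in Table 2), and the output is generic Boolean syntax rather than the exact field syntax of ProQuest or EBSCO.

```python
def or_group(terms):
    """Join synonyms with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(core_terms, context_capsule, method_capsule, exclusions):
    """Assemble a generic Boolean search string from capsules (hypothetical helper).

    Each capsule is a list of synonyms combined with OR; the capsules are
    combined with AND, and exclusion terms are removed with NOT.
    """
    query = " AND ".join(
        or_group(group) for group in (core_terms, context_capsule, method_capsule)
    )
    if exclusions:
        query += " NOT " + or_group(exclusions)
    return query


# Example terms taken from the text; the real capsules are longer.
query = build_query(
    core_terms=["listen*"],
    context_capsule=["job*", "work*"],
    method_capsule=["quantitative"],
    exclusions=["music", "audio", "hear*", "WiFi", "acoustic*"],
)
print(query)
# (listen*) AND (job* OR work*) AND (quantitative) NOT (music OR audio OR hear* OR WiFi OR acoustic*)
```

Keeping each capsule as its own list makes it easy to pretest the string with and without a capsule, as in the EBSCO comparison above.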

For the iterative process, the first author identified ten terms likely to yield irrelevant records, such as music, audio, hear*, and WiFi. Next, ten research assistants (advanced undergraduate psychology students supervised by the first author) reviewed the ProQuest results using these exclusion terms. Each assistant reviewed a different range of years and searched for additional terms for exclusion. When they discovered a candidate term (e.g., acoustic*), they combined it with the search string using the AND operator, so that the results showed the records the term would exclude. They then reviewed the top 25 and the bottom 25 of these records, as ranked by ProQuest’s relevance algorithm. If none of these 50 records was relevant, the term became a candidate for exclusion. Next, another assistant tested each candidate term on at least one set of years different from the one used to generate it, again inspecting the top and bottom 25 records ranked by relevance. If the term survived both tests, it was used as an exclusion term.
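The two-stage screening rule for exclusion terms can be sketched as follows. This is a hypothetical Python helper, assuming each retrieved record has already been judged relevant or not and that the list is ordered by the database's relevance ranking; it is not software the authors used.

```python
def passes_screen(ranked_is_relevant, window=25):
    """True if none of the top and bottom `window` records is relevant.

    `ranked_is_relevant` is a list of booleans ordered by relevance rank
    (True = a reviewer judged the record relevant to the review).
    """
    top = ranked_is_relevant[:window]
    bottom = ranked_is_relevant[-window:]
    return not any(top) and not any(bottom)


def adopt_exclusion_term(generation_set, validation_set, window=25):
    """Adopt a term only if it passes the screen on the years used to find it
    and on an independent set of years checked by another assistant."""
    return passes_screen(generation_set, window) and passes_screen(validation_set, window)


# Hypothetical check: 300 records judged irrelevant in both year ranges.
print(adopt_exclusion_term([False] * 300, [False] * 300))  # True
```

Records in the middle of the ranking are never inspected under this rule, which keeps it fast; the second test on different years guards against terms that only look safe in one slice of the literature.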

To validate our search strategy, we tested whether our strategy successfully identified several relevant studies the authors were aware of before the search (e.g., Pace, 1962). All were found either in EBSCO or ProQuest, or both.

Selection Process

The records of the searches were uploaded to Covidence.org, a web-based software platform designed to streamline the production of systematic reviews. It randomly assigned references for inspection and checked the agreement between coders.

Four authors led the Covidence reviewing and coding process. To pretest the procedure, we trained ten undergraduate psychology students on the research goals and on how to review the Covidence records. Specifically, using about 50 records, we showed the students how to inspect a title and an abstract and decide whether to choose “include,” “exclude,” or “maybe.” We then configured Covidence so that agreement between two reviewers was sufficient to reach a decision, with one of the authors making the final decision in cases of disagreement. In addition, the full text of each paper selected for inclusion based on its title and abstract was inspected by two of the four authors involved in reviewing and coding papers. Disagreements were recorded by Covidence and resolved through discussion.

For papers published since 2010 that lacked information needed to determine eligibility, we emailed the corresponding author to obtain it. For papers written in a language that none of the authors understood (e.g., Persian), we translated them with Google Translate and, when needed, enlisted a speaker of that language to help us extract the relevant information.

Figure 3 presents a PRISMA chart of the selection process. We identified 10,431 non-duplicate candidate papers from EBSCO and ProQuest, and 46 papers from two prior meta-analyses (Henry et al., 2012; Itani et al., 2019), back-references, and authors who communicated with us. Of the 10,431 records, we excluded 9,616 based on the title and abstract. The inter-rater reliability across all pairs of the 12 judges who coded whether to include or exclude a paper based on its title and abstract was Cohen’s κ = 0.19, z = 15.6, \(p < 10^{-54}\). Although significant, this κ appears low, even though the judges agreed on 81.2% of the papers before discussion. The discrepancy reflects the sensitivity of κ to imbalance among categories (we excluded most records and accepted only a few).
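Why high raw agreement can coexist with a low κ is easiest to see with numbers. The Python sketch below computes Cohen's κ for two raters and two categories from a hypothetical 2 × 2 table of 1,000 records; the counts are invented to reproduce 81.2% agreement and are not the study's data (which involved 12 judges and an additional "maybe" option).

```python
def cohens_kappa_2x2(both_yes, a_yes_b_no, a_no_b_yes, both_no):
    """Return (observed agreement, Cohen's kappa) for two raters and two categories."""
    n = both_yes + a_yes_b_no + a_no_b_yes + both_no
    p_observed = (both_yes + both_no) / n
    a_yes = (both_yes + a_yes_b_no) / n  # rater A's marginal rate of "include"
    b_yes = (both_yes + a_no_b_yes) / n  # rater B's marginal rate of "include"
    # Agreement expected by chance from the two raters' marginal rates.
    p_chance = a_yes * b_yes + (1 - a_yes) * (1 - b_yes)
    return p_observed, (p_observed - p_chance) / (1 - p_chance)


# Hypothetical tables, both with 812 of 1,000 records agreed on.
imbalanced = cohens_kappa_2x2(40, 94, 94, 772)  # about 13% "include"
balanced = cohens_kappa_2x2(406, 94, 94, 406)   # 50% "include"
print(imbalanced)  # agreement 0.812, kappa about 0.19
print(balanced)    # agreement 0.812, kappa about 0.624
```

With identical raw agreement, κ is about 0.19 when roughly 13% of records are included but about 0.62 when the categories are balanced, because the chance-agreement term grows as one category dominates.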

Next, four authors reviewed the full text of the 769 papers deemed relevant on Covidence.org (but not the 46 papers identified manually). The inter-rater reliability across all pairs of the four judges who coded whether to include or exclude a paper based on its full text was Cohen’s κ = 0.56, z = 16.1, \(p < 10^{-57}\), with the judges agreeing on 82.2% of the papers. The imbalance among categories was lower for this decision because a higher proportion of papers survived this exclusion stage. The most common reasons for excluding studies at this stage were that the paper was theoretical, descriptive, or not about listening (see Fig. 3 for all reasons).

Finally, we coded the 175 papers included in the previous step and the 46 papers we identified manually (221 papers). During coding, we discovered additional obstacles to extracting effect sizes; these were discussed among at least two coders. Papers were excluded at this stage mainly because they did not study listening in a conversation (e.g., listening as comprehension), because listening was the outcome rather than the predictor, or because the authors of papers published after 2010 did not answer our request for needed information (see Fig. 3 for all reasons). This selection process culminated in 122 successfully coded papers reporting 144 studies (some papers report multiple independent samples).

Data Collection Process

To facilitate data collection, maintain transparency, and reduce coding errors, we constructed a Qualtrics survey (Footnote 7) and pretested it for clarity. In the pretest, three authors coded three papers and examined coding discrepancies, on the basis of which the Qualtrics instructions were modified. We performed three iterations of this process, until we were satisfied that the coding instructions were unambiguous.

The Qualtrics survey was divided into four sections: information about the sample, the listening measure(s), the dependent variable measure(s), and the effect size(s). After coding each section, coders were presented with a summary of their codings and asked to double-check the coded data before moving on to the next section.

Each report was coded by two authors independently, and discrepancies between coders on any coded variable were detected with R code. To avoid inflating estimates of agreement between coders (with κ), we separately considered discrepancies in facts, textual input, and subjective ratings. Discrepancies in facts (e.g., N = 132 or 123) were double-checked, and the correct value was retained. Discrepancies in textual input (e.g., PANAS vs. PA and NA) were manually inspected: if the two entries had identical meanings, one version was retained; otherwise, the wrong entry was corrected. Disagreements on subjective judgments were resolved afterward by discussion; if agreement was not reached, a third author arbitrated.

Data Items

The variables extracted from each record are presented in Table 3, along with the number of ratings for each item. For study variables (e.g., country), the maximum number of ratings is 144 (the number of independent samples); note that many studies did not report age or sex. For all other items, the number of ratings depends on the number of listening and dependent measures, because some papers report multiple measures of listening, outcomes, or both. We calculated three inter-coder reliability indices: the percentage of ratings on which the two coders fully agreed, Cohen’s κ, and, for continuous variables only, Pearson’s r. For objective items, the average percentage of complete agreement, weighted by the number of ratings, was 81.3%, and the weighted average κ was 0.64. For judgment items, the weighted average percentage of complete agreement was 74.9%, and the weighted average κ was 0.52. Finally, for continuous items, the weighted average Pearson’s r was 0.68 (weighted Cronbach’s alpha = 0.82).
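The weighting described above amounts to a weighted mean in which each item's statistic counts in proportion to its number of ratings. A minimal Python sketch with hypothetical item values follows; the same helper would apply to per-item κ values.

```python
def weighted_mean(values, weights):
    """Mean of per-item statistics, each weighted by its number of ratings."""
    total = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total


# Hypothetical items: percent complete agreement and number of ratings per item.
agreement = [90.0, 70.0]
n_ratings = [144, 48]
print(weighted_mean(agreement, n_ratings))  # (90 * 144 + 70 * 48) / 192 = 85.0
```

Weighting by ratings means that items coded many times (e.g., per-effect variables) dominate the summary, whereas an unweighted mean would give a rarely coded item the same influence.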

The leading cause of disagreements between coders on objective items was confusion about the order of coding variables in papers with multiple measures of listening, outcomes, or both. This confusion was often caused by inconsistent variable reporting in the coded papers; for example, the order of the variables described in the Method section differed from that in the Results section. We had a few discrepancies between coders in estimating effect sizes from frequency tables and very few caused by typos. This information helps interpret the reliability estimates in Table 3, because they primarily reflect confusion about the variables’ order rather than disagreement about the extracted values. Thus, the reliability of the judgment items should be compared not only to the ideal (e.g., 100% agreement) but also to the reliability attainable given the poor reporting standards of many coded papers. We therefore conclude that the reliability of most coding was acceptable.

Nevertheless, although the reliabilities appear acceptable, we found it difficult to resolve disagreements about five variables: whether the outcome measure pertained to the listener or the speaker, the source of the listening measure and of the outcome measure (published, adapted, or developed for the current study), the listener’s occupation, and the percentage of missing data. The difficulty in deciding whether the outcome measure pertained to the listener or the speaker appears inherent to the phenomena under study. For example, consider a study in which the listener is a physician, the speaker is a patient, and the outcome is whether the patient returns to the hospital’s emergency room within 30 days of discharge. It is unclear whether this outcome benefits the listener or the speaker; it appears to benefit both. Because many coded papers were ambiguous, we also struggled to code whether a listening or outcome measure was used as published or adapted for the coded study. We disagreed about the listener’s occupation when the listener was both a professional and a manager (e.g., a head nurse or sales manager); whenever we had a conflict about this item, we coded the occupation as management. Finally, we disagreed on the percentage of missing data because our a priori coding instructions were unclear: they did not specify whether missing data included data the authors intended to collect (e.g., the number of mailed surveys) or only data that were collected but became unusable for analysis (e.g., because respondents did not follow the study’s procedure). Therefore, the results for these variables, except occupation, should be viewed cautiously.

Risk of Study Bias Assessment

We presume that the study of listening is not associated with apparent gains for any party. Therefore, we do not suspect that any particular group of studies is more at risk of bias than any other (as may be the case, for example, in drug effectiveness studies, some of which may be funded by pharmaceutical companies). However, bias could arise if, for example, some papers are published by consultants who want to prove to potential clients that listening “works.” We are not aware of any means to detect such bias.

Effect-size Estimates

Given that most of the literature is correlational, the effect size meta-analyzed is the correlation coefficient, r. Other statistics (t tests, F tests with 1 df, and χ² tests) were converted to r with the R package compute.es (Del Re, 2013). Other effects (e.g., k × j contingency tables) were converted with the online calculator on the Campbell Collaboration site (https://campbellcollaboration.org/research-resources/effect-size-calculator.html).
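For reference, the standard textbook conversions for these statistics are sketched below in Python. They are assumptions about the general formulas, not a reproduction of compute.es, which may route conversions through a standardized mean difference and therefore give slightly different values; the function names are hypothetical.

```python
import math


def r_from_t(t, df):
    """Correlation from a t statistic with df degrees of freedom (sign follows t)."""
    return t / math.sqrt(t ** 2 + df)


def r_from_f(f, df_error, sign=1):
    """Correlation from an F statistic with 1 numerator df (F = t^2).

    F is unsigned, so the direction of the effect must be supplied via `sign`.
    """
    return sign * math.sqrt(f / (f + df_error))


def r_from_chi2(chi2, n, sign=1):
    """Phi coefficient from a 1-df chi-square on a 2 x 2 table with n observations."""
    return sign * math.sqrt(chi2 / n)


# Hypothetical statistics: df = 96 for t and F, N = 100 for the chi-square.
print(r_from_t(2.0, 96))      # 2 / sqrt(100), about 0.2
print(r_from_f(4.0, 96))      # the same effect expressed as F = t^2, about 0.2
print(r_from_chi2(4.0, 100))  # sqrt(4 / 100), about 0.2
```

Because F and χ² discard direction, the sign has to be recovered from the study's description, which matters here since the text reverses the sign of undesirable outcomes before pooling.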

All effects are listed in a file stored on the OSF site, and forest plots are included in Fig. 4. Many studies provided more than one effect size for the same research question. For example, the links between listening and work outcomes were reported for two or more listening measures, two or more outcome measures (e.g., PA and NA, or OCB and CWB), or both. Multiple effects extracted from a single study are likely to be more similar to each other than effects extracted from independent studies (i.e., the true effects within a study are likely to be correlated). We therefore employed a three-level meta-analysis to account for the dependencies among effects nested within studies (Van den Noortgate et al., 2013), using the rma.mv function of the R package metafor (Viechtbauer, 2010) to fit multivariate/multilevel meta-analysis models with random effects.
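The nesting of effects within studies can be written as a three-level random-effects model, sketched below in the general form described by Van den Noortgate et al. (2013). The symbols are our own; \(y_{ij}\) stands for the i-th effect size in study j, and whether it is the raw r or a transformed value is not specified here.

```latex
y_{ij} = \beta_0 + u_{(3)j} + u_{(2)ij} + e_{ij}, \qquad
u_{(3)j} \sim N\!\left(0, \sigma^2_{(3)}\right), \quad
u_{(2)ij} \sim N\!\left(0, \sigma^2_{(2)}\right), \quad
e_{ij} \sim N\!\left(0, v_{ij}\right)
```

Here \(\beta_0\) is the pooled effect, \(\sigma^2_{(3)}\) is the between-study variance, \(\sigma^2_{(2)}\) is the within-study variance, and \(v_{ij}\) is the known sampling variance of each effect. In metafor, a model of this form is commonly specified as rma.mv(yi, vi, random = ~ 1 | study/es_id, data = dat), where study and es_id are hypothetical column names identifying studies and effects within studies.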

To test the moderators, we used meta-regression with the rma.mv function, testing each moderator in a separate meta-regression. Meta-regressions with all moderators entered in one model are theoretically superior and generally more parsimonious. However, testing all our moderators within a single model (including k − 1 dummy codes for each categorical variable with k categories) would require fitting over 100 predictors simultaneously, producing nonsensical results.

For tests of hypothesized moderators, we report both pseudo R² and adjusted pseudo R². When relevant, we report pseudo ΔR² and adjusted pseudo ΔR² while controlling for the type of dependent variable (H6). Although adjusting pseudo R² is not common in three-level meta-analysis, a larger discrepancy between the two estimates signals a more serious concern about overfitting. We consider these values only for models with k > 40, because the estimates are unreliable below this threshold (Lopez-Lopez et al., 2014).
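These quantities can be sketched in Python as follows. Two assumptions are labeled here because the text does not spell them out: pseudo R² is taken as the proportional reduction in the summed variance components (levels 2 and 3) relative to a model without the moderator, and the adjustment uses the conventional adjusted-R² penalty. The variance components are hypothetical.

```python
def pseudo_r2(var_null, var_model):
    """Assumed definition: proportional reduction in summed variance components."""
    total_null = sum(var_null)
    return (total_null - sum(var_model)) / total_null


def adjusted_pseudo_r2(r2, k, p):
    """Assumed adjustment: conventional adjusted-R^2 penalty for k effects, p moderator terms."""
    return 1 - (1 - r2) * (k - 1) / (k - p - 1)


def report_r2(var_null, var_model, k, p, min_k=40):
    """Return (pseudo R^2, adjusted pseudo R^2), or None when k <= min_k."""
    if k <= min_k:
        return None
    r2 = pseudo_r2(var_null, var_model)
    return r2, adjusted_pseudo_r2(r2, k, p)


# Hypothetical components: (level 3 between studies, level 2 within studies).
null_components = (0.03, 0.01)
model_components = (0.02, 0.01)
print(report_r2(null_components, model_components, k=50, p=5))  # roughly (0.25, 0.165)
print(report_r2(null_components, model_components, k=30, p=5))  # None: k is too small
```

The gap between the two printed values (about 0.25 vs. 0.165 with five moderator terms) is the kind of discrepancy the text treats as a warning sign of overfitting.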

To balance power against the risk of inflating study-wise error, we set the threshold for declaring a moderator significant at p < 0.05 for our hypotheses and p < 0.01 for the research questions. For moderators for which we planned to use all work outcomes as dependent variables (Table 1), we controlled for the type of work outcome (e.g., relationship quality, job performance) by entering dummy codes representing outcome types.

For interpretation purposes only, we conducted a separate meta-analysis (subgroup analysis) for each category of each categorical moderator. The results of these subgroup analyses can differ slightly from the dummy-code coefficients of a meta-regression, because meta-regression assumes identical heterogeneity in every category, whereas subgroup analyses estimate heterogeneity for each category separately.Footnote 8 Therefore, we tested differences between categories with meta-regressions and reported subgroup results so that the separate heterogeneity estimates could be inspected. Finally, to facilitate the interpretation of categorical moderators, we set the category with the weakest listening effect as the reference (intercept) category, so that each meta-regression coefficient tests whether that category’s effect is significantly larger than the weakest effect.
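The reference-category choice can be sketched as a small dummy-coding helper. This hypothetical Python function takes subgroup estimates, picks the category with the smallest estimate as the reference, and builds k − 1 indicator columns; the category names and estimates are invented for illustration.

```python
def dummy_code(categories, subgroup_estimates):
    """Build k - 1 indicator columns, using the category with the smallest
    subgroup estimate as the reference (intercept) category."""
    reference = min(subgroup_estimates, key=subgroup_estimates.get)
    others = sorted(c for c in subgroup_estimates if c != reference)
    rows = [[1 if cat == other else 0 for other in others] for cat in categories]
    return reference, others, rows


# Hypothetical effects coded by listening informant, with invented subgroup estimates.
ref, cols, X = dummy_code(
    ["speaker", "listener", "observer", "speaker"],
    {"speaker": 0.45, "listener": 0.30, "observer": 0.38},
)
print(ref)   # listener
print(cols)  # ['observer', 'speaker']
print(X)     # [[0, 1], [0, 0], [1, 0], [0, 1]]
```

With the weakest category as reference, every dummy coefficient is a contrast against that category, so a significant positive coefficient reads directly as a stronger effect than the weakest one.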

We conducted sensitivity analyses for meta-analyses with k ≥ 10, following the threshold used by Field et al. (2021), and assessed the influence of outliers in three ways. First, for each meta-analysis, we identified the observation with the highest Cook’s distance (the effect most influential on the estimate of \(\overline{r}\)) and re-calculated \(\overline{r}\) with that observation removed. For every meta-analysis with k ≥ 10, we report \(\Delta\overline{r} = \overline{r}_{\text{original}} - \overline{r}_{\text{removed}}\), where \(\overline{r}_{\text{removed}}\) is the estimate with the most influential effect removed. A positive \(\Delta\overline{r}\) indicates that the outlier increased the original estimate, and a negative value indicates that it decreased it. When \(|\Delta\overline{r}| > 0.05\), or when removing the most influential effect changed the conclusion, we planned to probe the study to understand its uniqueness. Second, we explored whether more than one influential outlier could change the conclusion by visually inspecting a plot of Cook’s distances; if more than one observation appeared to have a relatively strong influence, we planned to repeat the above procedure after removing all extremely influential observations. Following recommended best practice for outlier management, we report results with and without the outliers (Aguinis et al., 2013). Finally, we inspected plots of Cook’s distances aggregated by publication (see https://wviechtb.github.io/metafor/reference/influence.rma.mv.html). We provide these plots in the Supplementary Materials and explore whether removing any influential publication alters the statistical conclusions.
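The first step of this procedure (find the most influential effect, drop it, and compute \(\Delta\overline{r}\)) can be sketched in Python. This is a simplification: it uses a fixed-effect inverse-variance mean instead of the three-level model fitted with metafor, and for a single pooled estimate Cook's distance reduces to the squared shift in the estimate divided by its variance. Function names and data are hypothetical, and the k ≥ 10 threshold is not enforced.

```python
def iv_mean(y, v):
    """Fixed-effect (inverse-variance weighted) mean of effects y with sampling variances v."""
    w = [1 / vi for vi in v]
    return sum(wi * yi for wi, yi in zip(w, y)) / sum(w)


def most_influential(y, v, threshold=0.05):
    """Leave-one-out search for the effect with the largest Cook's distance.

    With one pooled parameter, Cook's distance is
    (full - leave_one_out)^2 / Var(full), where Var(full) = 1 / sum(1 / v).
    Returns (index, delta, flagged), with delta = full - leave_one_out.
    """
    full = iv_mean(y, v)
    var_full = 1 / sum(1 / vi for vi in v)
    best_i, best_d, best_loo = None, -1.0, None
    for i in range(len(y)):
        loo = iv_mean(y[:i] + y[i + 1:], v[:i] + v[i + 1:])
        d = (full - loo) ** 2 / var_full
        if d > best_d:
            best_i, best_d, best_loo = i, d, loo
    delta = full - best_loo
    return best_i, delta, abs(delta) > threshold


# Hypothetical correlations with equal sampling variances; the last one is an outlier.
y = [0.30, 0.32, 0.35, 0.31, 0.90]
v = [0.01] * 5
idx, delta, flagged = most_influential(y, v)
print(idx, round(delta, 3), flagged)  # 4 0.116 True
```

A positive delta here means the outlier pulled the pooled estimate up, matching the sign convention for \(\Delta\overline{r}\) described above.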

Reporting Bias Assessment

Standard techniques for assessing publication bias (e.g., trim-and-fill) are not available for three-level meta-analyses because they do not account for dependencies in the data. To address this challenge, we followed the recommendation of the author of metafor, which can be found in recent discussions at https://stat.ethz.ch/mailman/listinfo/r-sig-meta-analysis. Specifically, for each meta-analysis with k ≥ 10 (Field et al., 2021), we tested the standard error (SE) of each r as a continuous moderator. A significant z value for the SE indicates that an effect’s precision, and hence its sample size, predicts its size. A significant positive z indicates plausible publication bias, suggesting that effects based on small samples (high SE) yield stronger r values. This rule also holds for undesirable outcomes (e.g., CWB) because we reversed the sign of such effects before including them in the meta-analyses. The advantage of this approach is that it is part of a multilevel model that accounts for the dependencies in the data.
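The logic of using the SE as a moderator resembles an Egger-type regression. The Python sketch below is a simplified fixed-effect analogue: a weighted least-squares regression of r on its SE with inverse-variance weights treated as known. It ignores the study-level nesting that the multilevel model handles, and the helper and data are hypothetical.

```python
from statistics import NormalDist


def se_moderator_test(r, se):
    """Weighted least-squares regression of effect sizes on their standard errors.

    Weights are inverse sampling variances (1 / SE^2), treated as known, so the
    slope's standard error is sqrt(1 / sum(w * (x - x_bar)^2)).
    Returns (intercept, slope, z, two-sided p) for the SE slope.
    """
    w = [1 / s ** 2 for s in se]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, se)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, r)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, se))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, se, r))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    z = slope / (1 / sxx) ** 0.5
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return intercept, slope, z, p


# Hypothetical effects in which smaller studies (larger SE) report larger r.
se = [0.05, 0.08, 0.10, 0.15, 0.20]
r = [0.20 + s for s in se]
intercept, slope, z, p = se_moderator_test(r, se)
print(round(slope, 6), round(intercept, 6))  # 1.0 0.2
```

A positive slope, as in this invented example, is the pattern the text reads as plausible publication bias; the multilevel version adds random effects for studies and effects within studies.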

Because tests of publication bias could reflect the effect of an outlier (Field et al., 2021), we planned to assess the plausibility of publication bias after removing the effect size with the highest Cook’s distance (see Field et al., 2021, for this approach to testing publication bias after removing outliers). When we detected a significant z, we used a bubble plot to examine the biasing effects of small sample sizes.

Certainty Assessment

Two factors could reduce the certainty we assign to the results in our research domains: missing data and selective reporting. Therefore, we coded for every study whether the author(s) reported missing data (attrition, coding errors, response refusals, etc.) and, if so, the percentage of data loss. We also coded whether a study included independent or dependent variables whose results were not reported, even if those variables were irrelevant to our research questions. Because most studies experience some data attrition, a failure to report data loss may indicate bias: the missing information could conceal an attempt to present only significant results. Among studies reporting data loss, the higher the proportion of missing data, the higher the risk of biased results. Likewise, dependent variables without reported results may indicate “cherry-picking.” We tested these indicators as potential moderators under our RQ5, which concerns methodological moderators.

Results

Summary of Main Characteristics of Listening Studies

Before presenting the results of our hypothesis tests, we provide an overview of the extracted effects in Table 4, which also addresses our RQ4. Table 4 indicates that the mean publication year was 2012 and that 67% of the effects were derived from studies that disclosed data loss, typically calculated as the discrepancy between the number of questionnaires distributed and the number of responses analyzed. On average, the reported data loss was 35%. In addition, 89% of the studies reported all effects, suggesting a low likelihood of reporting bias. The average age of the participants was approximately 41 years, and the average percentage of women in the sample was 35% for studies reporting the listener’s sex and 55% for studies reporting the speaker’s sex.

The most common speaker roles were customers (27%), subordinates (23%), and patients (19%). The listeners were predominantly managers (36%), salespeople (21%), and healthcare professionals (19%). The vast majority of the studies (98%) were conducted within a single country, most commonly the United States (46%), followed by Israel (15%), Germany (7%), and the United Kingdom (5%). The study samples were slightly below average on secular values but considerably above average on self-expression values (the average is zero).

The measurement of listening relied primarily on questionnaires, which typically comprised six items. Many measures (40%) were exclusive to a particular study, and 13% of the effects were tested using a single item. The most commonly employed scales across multiple studies were the FLS and the AELS. Most listening measures reflected the speaker's perception (63%), while others were based on the listener’s self-reports (23%). Furthermore, most of the listening measures reflected perceived listening. Lastly, most (80%) of the listening measures assessed listening as a chronic trait.

The measurement of work outcomes also relied mainly on questionnaires, which included an average of five items and employed published or adapted measures (76%) rather than ad-hoc measures. The majority of the measures of work outcomes pertained to chronic outcomes (e.g., job satisfaction) as opposed to single outcomes (e.g., returning to the emergency room after hospitalization).

Figure 4 includes forest plots illustrating the associations between listening and the dependent variables: relationship quality, affect, cognition, and performance. For each dependent variable, we placed all effects (correlations) nested in one study on the same line, because representing each effect on a separate line would render the forest plots incomprehensible. The forest plots indicate that most correlations between listening and all dependent variables are positive and that their magnitude varies considerably.

Main Meta-analyses

Table 5 presents the results of meta-analyses examining the correlations between listening and any job outcome (first row) and each dependent variable, as specified by hypotheses H1 through H4. The correlations with the dependent variables, excluding the first row, are arranged in ascending order of effect sizes. The estimates in Table 5 are derived from three-level meta-analyses that control for the non-independence among the effect sizes. Moreover, the table reports statistics following best practice recommendations outlined in Gonzalez-Mulé and Aguinis (2018).

The first row in Table 5 provides a meta-analysis of the relationship between listening and work outcomes, encompassing all outcome types. It indicates that we coded 122 papers, which reported 144 independent samples and 664 effect sizes. These effects were drawn from 400,020 observations nested in data gathered from 155,143 independent individuals. The meta-analysis suggests that the inverse-variance-weighted average correlation between listening and work outcomes is 0.39, with a 95% confidence interval ranging from 0.36 to 0.43. The correlation corrected for measurement unreliability in both the listening and the outcome measures is 0.44.
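The correction for measurement unreliability follows the classic Spearman formula, \(\rho = r / \sqrt{r_{xx} r_{yy}}\). The sketch applies it to a single correlation; the reliabilities in the example (.88 and .89) are hypothetical and are not the values underlying the reported \(\overline{\rho}\), which may have been obtained by correcting individual effects before pooling.

```python
import math

def correct_for_attenuation(r, rxx, ryy):
    """Spearman's correction for attenuation: rho = r / sqrt(rxx * ryy)."""
    if not (0 < rxx <= 1 and 0 < ryy <= 1):
        raise ValueError("reliabilities must lie in (0, 1]")
    return r / math.sqrt(rxx * ryy)

# Hypothetical reliabilities for the listening and outcome measures.
print(round(correct_for_attenuation(0.39, 0.88, 0.89), 2))  # → 0.44
```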

Results also revealed substantial heterogeneity in the effects. The Q statistic is 80,865.1 with 663 degrees of freedom, making the null hypothesis of no heterogeneity implausible. The estimate of the true variability, τ = 0.26, implies that approximately 95% of the true correlations between listening and work outcomes fall within ±1.96 × 0.26 ≈ ±0.51 of the mean. As a result, the prediction (credibility) interval is approximately 0.39 ± 0.51, or between -0.12 and 0.90. Notably, this interval implies that in rare circumstances listening may be negatively associated with desirable organizational outcomes: of the 664 effects extracted, 5.4% were negative. These negative associations could be partly attributed to sampling error in small samples: the median sample size was 76 participants for negative associations and 195 participants for positive associations, Mann–Whitney U = 7,444.5, p < 0.001.
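The prediction-interval arithmetic above can be reproduced in a few lines. This mirrors the text's approximation, \(\overline{r} \pm 1.96\,\tau\); a fuller version would also include the sampling variance of \(\overline{r}\), \(\pm 1.96\sqrt{\tau^2 + SE^2}\), which widens the interval slightly.

```python
def approx_prediction_interval(rbar, tau, z=1.96):
    """Approximate 95% prediction (credibility) interval, rbar +/- z * tau.

    Like the approximation in the text, this ignores the standard error of rbar.
    """
    half_width = z * tau
    return rbar - half_width, rbar + half_width

lower, upper = approx_prediction_interval(0.39, 0.26)
print(round(lower, 2), round(upper, 2))  # → -0.12 0.9
```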

Furthermore, some studies reported effect sizes so large that they may indicate a lack of discriminant validity: of the 664 effects, 4% exceeded 0.80. Finally, the \(I^2\) statistic shows that 98.9% of the variance is due to true variation in effect sizes rather than sampling error, suggesting the presence of moderators. Overall, the substantial variance in the effects calls for further exploration of potential moderating factors.
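The \(I^2\) above is reported for a three-level model, and the text does not spell out its formula. One common definition for multilevel models divides the sum of the level-2 and level-3 variance components by that sum plus a “typical” sampling variance computed from the inverse-variance weights. The sketch below assumes that definition; the function names and the variance components in the example are hypothetical.

```python
import numpy as np

def typical_sampling_variance(v):
    """'Typical' within-study sampling variance from inverse-variance weights:
    (k - 1) * sum(w) / (sum(w)^2 - sum(w^2)), with w = 1 / v."""
    w = 1.0 / np.asarray(v, dtype=float)
    k = len(w)
    return (k - 1) * w.sum() / (w.sum() ** 2 - (w ** 2).sum())

def multilevel_i2(sigma2_level2, sigma2_level3, v):
    """Share of total variance due to true heterogeneity (levels 2 and 3)
    rather than sampling error (level 1)."""
    v_typ = typical_sampling_variance(v)
    true_var = sigma2_level2 + sigma2_level3
    return true_var / (true_var + v_typ)

# Hypothetical variance components and sampling variances.
print(round(float(multilevel_i2(0.02, 0.01, [0.01, 0.01, 0.01])), 2))  # → 0.75
```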

Table 5 and the following tables also report potential biases in each effect with k ≥ 10, namely the influence of outliers and publication bias. The first row of Table 5 shows a Δ\(\overline{r}\) value close to zero, indicating that removing the most influential effect size did not materially alter the estimate: the estimate of 0.39 remained nearly the same with or without the most extreme outlier. Last, the large negative z value of -21.2 indicated that larger sample sizes were associated with larger effect size estimates, which is inconsistent with the hypothesis of publication bias (see also Fig. 5).

Tests of the Association of Listening with Work Outcomes

Table 5 presents the findings of the tests conducted for H1 to H4. On average, listening is significantly associated with affect (\(\overline{r}\) = 0.36), cognition (0.40), performance (0.36), and relationship quality (0.51), and each of these estimates is accompanied by significant variability, suggesting the presence of moderators. All effects are statistically significant based on the confidence intervals. As expected, the correlations adjusted for unreliability (\(\overline{\rho}\)) are larger. Two aspects of these findings should be highlighted. First, the number of studies examining the relationship between listening and cognition is approximately one-third of the number investigating each of the other outcomes, indicating that the impact of listening on cognition is under-researched. Second, the association between listening and relationship quality is notably stronger than the association between listening and the other outcomes.

Finally, regarding H4b, the results in Table 5 show that perceived listening is most strongly related to relationship quality. Inconsistent with H4b, however, the correlation between listening and job performance was not the weakest, with a mean estimate of \(\overline{r}\) = 0.36. Yet the meta-regression revealed that the job outcome category significantly moderated the association between listening and work outcomes: as shown in the note to Table 5, Q(3) = 25.8, p < 0.001, pseudo-\(R^2\) = 0.06, and adjusted pseudo-\(R^2\) = 0.05. Q is an omnibus test of the moderating effect of the job outcome category, and pseudo-\(R^2\) estimates the proportion of the heterogeneity in listening effects that this category explains.
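Pseudo-\(R^2\) is described above only informally. A common definition, assumed here, is the proportional reduction in between-effect variance (\(\tau^2\)) when the moderator is added; in a three-level model, \(\tau^2\) would be the sum of the level-2 and level-3 components. The adjusted pseudo-\(R^2\) is not sketched because its formula is not given here, and the \(\tau^2\) values in the example are hypothetical.

```python
def pseudo_r2(tau2_null, tau2_model):
    """Proportional reduction in between-effect variance from adding a moderator.

    Truncated at zero, because tau^2 can fail to shrink (or even grow) by chance.
    """
    if tau2_null <= 0:
        raise ValueError("tau2_null must be positive")
    return max(0.0, (tau2_null - tau2_model) / tau2_null)

# Hypothetical: tau = 0.26 without the moderator, tau^2 6% smaller with it.
print(round(pseudo_r2(0.26 ** 2, 0.26 ** 2 * 0.94), 2))  # → 0.06
```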

To identify the source of the omnibus result, we set affect, which had the weakest effect size (before rounding to two decimals), as the reference (intercept) category. The intercept estimate was 0.36 (Column B), nearly identical to the estimate of the effect of listening on performance, \(\overline{r}\) = 0.36. However, the values predicted by this regression differed slightly from the separately estimated values of \(\overline{r}\). For example, the meta-regression weight (B) for the relationship outcome was 0.10, implying a predicted effect of listening on the relationship outcome of 0.36 + 0.10 × relationship-dummy code = 0.46, whereas the separate meta-analysis yielded \(\overline{r}\) = 0.51. This discrepancy reflects the different estimates of τ underlying the two analyses, as described under Effect-size Estimates above. The estimate of \(\overline{r}\) for each job-outcome category (e.g., performance) was based on the heterogeneity estimated separately within that category. In contrast, the meta-regression estimated B using a single τ calculated across all effect sizes, assuming homogeneous heterogeneity across the categories. Nevertheless, the association between listening and the relationship outcome was significantly stronger than the association between listening and affect, the intercept, as indicated in the p column of Table 5 under Meta-regression, p < 0.001. To test whether the effect on relationships was also stronger than the effect on performance, we tested a contrast, which was significant, \(\chi^2\)(1) = 18.8, p < 0.001, providing partial support for H4b.
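The dummy-coding arithmetic and the relationship-versus-performance contrast can be sketched as follows. With affect as the reference category, each category's prediction is the intercept plus its coefficient, and the intercept cancels in a contrast between two non-reference categories, so the contrast tests the difference of their coefficients with a 1-df Wald \(\chi^2\). The coefficient ordering, the cognition and performance coefficients, and the (diagonal) covariance matrix are hypothetical; only the intercept (0.36) and the relationship weight (0.10) come from the text, and a real meta-regression would have nonzero covariances between coefficients.

```python
import math
import numpy as np

def predicted_r(intercept, coef):
    """Prediction for a dummy-coded category: reference estimate + its coefficient."""
    return intercept + coef

def wald_contrast(b, cov_b, c):
    """Wald chi-square (1 df) for H0: c'b = 0."""
    b = np.asarray(b, dtype=float)
    cov_b = np.asarray(cov_b, dtype=float)
    c = np.asarray(c, dtype=float)
    est = c @ b              # estimated contrast, e.g. B_relationship - B_performance
    var = c @ cov_b @ c      # sampling variance of the contrast
    return float(est ** 2 / var)

def chi2_1df_pvalue(chi2):
    """Upper-tail p-value of a 1-df chi-square, via P(|Z| > sqrt(chi2))."""
    return math.erfc(math.sqrt(chi2 / 2.0))

# Relationship prediction from the text: 0.36 + 0.10 x 1.
print(round(predicted_r(0.36, 0.10), 2))  # → 0.46

# Hypothetical coefficients: [intercept (affect), cognition, performance, relationship].
b = [0.36, 0.03, 0.01, 0.10]
cov_b = np.diag([1e-4, 4e-4, 2e-4, 2e-4])
chi2 = wald_contrast(b, cov_b, [0, 0, -1, 1])  # relationship minus performance
print(round(chi2, 2), chi2_1df_pvalue(chi2))
```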

Hypothesized Moderators

We tested our hypothesis that the effects of listening are stronger on job performance behaviors than on job performance outcomes (H5) by incorporating this binary moderator in a meta-regression (Table 6). In separate meta-analyses, the effect of listening on job performance behaviors, \(\overline{r}\) = 0.36, was stronger than its effect on job performance outcomes, \(\overline{r}\) = 0.28. In the meta-regression, the estimate for job performance outcomes, the intercept, was 0.30, and the coefficient for job performance behaviors was 0.06, implying an estimate of 0.30 + 0.06 = 0.36 for job performance behaviors. As previously explained under Effect-size Estimates, the difference between the separate meta-analyses (0.36 - 0.28 = 0.08) and the meta-regression coefficient (0.06) was due to different approaches to treating heterogeneity within categories. The results were thus in the predicted direction, but the effect on behaviors was not significantly stronger than the effect on performance outcomes, p = 0.104, failing to support H5.

H6 proposes that perceived listening is more strongly associated with organizational citizenship behaviors (OCB), counterproductive work behaviors (CWB), adaptive behavior, and withdrawal behaviors than with focal-task performance. To test H6, we conducted an omnibus test of whether the type of performance measure moderates the association between listening and performance (Table 6). Results revealed significant differences, p = 0.002, with the type of performance measure accounting for 14% of the variance. However, the pattern of results did not support H6. Instead, we observed the weakest effect sizes for the negative performance indicators: CWB, \(\overline{r}\) = -0.19, and withdrawal, \(\overline{r}\) = -0.12 (Footnote 9). The strongest effect size was found for adaptive performance, \(\overline{r}\) = 0.46. Because H6 did not predict this pattern, the results of this moderation test should be interpreted with caution.

H7 proposes that the relationship between listening and work outcomes is weaker when (a) multiple sources or (b) multiple methods are used, as opposed to a single source or method, and (c) when listening and work outcomes are reported for different time frames rather than the same time frame. The results support H7a and H7b (see Table 7). The correlation between listening and work outcomes is weaker when they are measured from different sources (\(\overline{r}\) = 0.26) than from the same source (\(\overline{r}\) = 0.43), and weaker when different methods are used (\(\overline{r}\) = 0.29) than when the same method is used (\(\overline{r}\) = 0.42). The correlation is also weaker when listening and work outcomes are measured at different times (\(\overline{r}\) = 0.29) than at the same time (\(\overline{r}\) = 0.41), but this difference is not statistically significant, so H7c is not supported.