Abstract

Few randomized controlled trials have evaluated social media study groups as educational aids in online and blended teaching programs. We present the Behavioral Education in Social Media (BE-Social) intervention package, which integrates key evidence-informed behavioral intervention strategies delivered through a closed social media study group. BE-Social combines instructor-mediated cooperative learning with self-management training delivered via multimedia posts and video modeling. Forty-six students were randomly assigned either to the default online program (control group) or to the default online program plus BE-Social (intervention group). Intervention outcomes included academic performance and social media engagement (reactions, comments). A mixed-effect ANOVA showed that individuals in the BE-Social group attained higher academic performance, F (1, 46) = 18.37, p < .001, η2 = .34. On average, the intervention produced a 20-point increase in academic performance on a 100-point scale and significant increases in social media engagement. A parallel single-subject analysis revealed that intervention gains were not consistent across all participants. Findings are consistent with the view that social media platforms provide a prosthetic social milieu that can enrich traditional education by maximizing social rewards through increased interaction opportunities and timely positive feedback. We propose the digital environment reward optimization hypothesis to denote these processes.


Online education has increased steadily over the last 30 years and experienced an exponential leap in 2020 due to the COVID-19 pandemic (UNESCO, 2020). Online and blended learning programs are now near-universal in tertiary education in many countries. A radical change in the teaching channel will inevitably modify the social dynamics that motivate academic behaviors. Some authors have suggested that peer-to-peer and instructor-mediated social media experiences may help replace elements of the social environment of traditional education that online programs may lack (Susanto et al., 2021).

As of 2023, there are 4.8 billion distinct social media users across platforms worldwide (Statista, 2023). Consistent with the widespread use of social media among college and university students, some higher education institutions have integrated these tools into their courses (Schworm & Gruber, 2012; Tang & Hew, 2017). Yet, the potential of social media to enhance academic participation and performance has seldom been evaluated (Aslan, 2021). It remains unclear whether the additional interaction opportunities that social media platforms offer instructors and learners have a quantifiable impact on academic performance.

Social Media and Education

The concept of social media refers to digital platforms with core functionalities for producing and sharing content, as well as facilitating user interaction with the material and with other users through a variety of engagement responses (Mao, 2014). Engagement responses include reacting, commenting, and reposting content, among others (see a behavior-based taxonomy by Tarifa-Rodriguez et al., 2023). Some studies have shown that over 90% of students use social media to discuss academic content with their peers (e.g., Madge et al., 2009). Junco (2012) stated that education professionals can take advantage of these widespread educational spaces to improve student experience and performance (see similar claims by Hicks & Graber, 2010; and Smith, 2012).

Among the numerous social media platforms available, several surveys have identified Facebook as highly popular among college and university students in various countries (Cheung et al., 2011; Michikyan et al., 2015; Wang et al., 2011). Facebook allows for the exchange of synchronous and asynchronous information and facilitates interaction through thematic closed groups, instant messaging, push notifications, and other key functionalities (Mazman & Usluel, 2010). Researchers have often discussed the possibility of taking advantage of Facebook's educational capabilities (see, for example, Garcia et al., 2015; Kalelioğlu, 2017; and Selwyn, 2009). Numerous survey studies suggest that the parallel use of Facebook as an interaction channel in tertiary education may promote collaboration, communication, peer-mediated feedback, and cognitive and social skills (Arteaga-Sánchez et al., 2019; Aydin, 2012; Doleck & Lajoie, 2018; Hurt et al., 2012; Mazman & Usluel, 2010). For example, Al-Rahmi et al. (2015) found a positive correlation between increased self-reported participation in social networks and curricular engagement. Despite the many assertions by survey studies in support of the role of social media in education, the existing evidence is mixed. Doleck and Lajoie (2018) summarized 23 peer-reviewed studies and failed to establish consistent gains in academic performance caused by adding social media platforms to formal university courses.

A potential caveat of the existing literature may be its frequent focus on the channel rather than on the educational practices. An obvious extension of research in this field would involve, first, identifying evidence-based behavioral education strategies that are compatible with the social media environment and, second, evaluating the quantitative effects of specific evidence-based practices when delivered through social media channels in tertiary education.

Evidence-Based Educational Practices and Social Media

Several evidence-based behavioral education strategies may be compatible with the social media channel. These include cooperative learning strategies (including immediate feedback through instant messaging and push notifications) and self-management training for effective study habits via multimedia posts and video modeling.

Cooperative Learning

Cooperative learning is a teaching–learning strategy and a learning environment whereby students actively work together in small groups to achieve particular learning objectives or complete specific tasks. The approach implies active participation, collaboration, and communication among students (Gillies, 2016). Cooperative learning has been identified as a predictor of increased academic performance, reportedly due to its effect on student participation and self-reported motivation (Ataie et al., 2015; van Ryzin et al., 2020). Critical aspects of cooperative learning include timely sharing of information and resources, small-group learning, and positive interdependent interactions mediated by an instructor (see a meta-analysis for school-age applications in Little et al., 2014). In addition, peer-mediated feedback has also been identified as a central component of a cooperative learning environment. For example, a study by Yu and Chen (2021) indicated that peer-mediated feedback in a university-level science course had a greater impact on academic performance than instructor-mediated feedback. Since the 2000s, authors have stated that higher education institutions can benefit from interaction opportunities and instant communication in social media platforms (see, for example, Pempek et al., 2009; and Yuan & Wu, 2020). For instance, Hsu (2018) has shown that students receiving immediate and relevant feedback via instant messaging showed greater course engagement and academic performance.

Self-management

Self-management strategies typically involve goal setting, performance self-monitoring, self-evaluation, and self-reinforcement components (Briesch & Chafouleas, 2009; Cooper et al., 2019). Self-management skills allow students to actively acquire adequate study habits, a crucial mediator of academic performance (e.g., Credé & Kuncel, 2008). While self-management strategies have been used most often in organizational and clinical settings, a variety of applications among university students have also been reported, including learning to play a musical instrument, studying a new language, and presenting academic material (Kitsantas et al., 2004; Malott, 2012).

Video Modeling

Social media platforms offer intriguing opportunities for training study behavior, including study self-management skills, via video modeling. Video modeling has been defined as presenting a video of a model performing a target skill to a learner (Bellini & Akullian, 2007). While there is no precedent for video modeling with neurotypical tertiary education students, this approach is readily compatible with the synchronous and asynchronous video functionalities of social media, for example, for modeling appropriate study habits.

Goals of the Study

After selecting critical evidence-based behavioral education strategies that are fully compatible with the social media channel (i.e., cooperative learning, self-management, video modeling), we present and evaluate these strategies as part of a behavioral intervention package for adult students. The Behavioral Education in Social Media (BE-Social) program presented here features the following intervention components: (a) timely instructor- and peer-mediated feedback (through instant messaging and push notifications), and (b) study habits self-management training via multimedia posts and video modeling (through synchronous and asynchronous video posts).

The goal of the current study is to evaluate the overall impact of the BE-Social program on social media engagement and academic performance in a cohort of college-level adult students. An ancillary goal is to evaluate the contribution of timely feedback to the BE-Social intervention package. We assessed timely feedback separately because it requires more instructor hours than the other program components. To our knowledge, this is the first randomized controlled trial (RCT) to evaluate the impact of social media aids on academic performance.

Methods

Participants and Setting

Participants were students in a 1-year online postgraduate course in applied psychology (applied behavior analysis with a focus on autism intervention) delivered in Spanish. The course started in October 2019 and ended in May 2020. Students accessed the curricular content in weekly lessons through a Moodle platform. In addition, all students had access to a closed Facebook group (general Facebook group). Participants in the intervention group also had access to an additional Facebook group through which the intervention was delivered (BE-Social Facebook group). Eighty students meeting the following inclusion criteria were invited to participate: (a) the student had been inactive in the general Facebook group during the first 3 months of the course, and (b) the student's average performance on weekly multiple-choice course content tests was below 80%. Participants were randomly assigned to the two arms of the study (i.e., BE-Social intervention group and control group). Forty-six students agreed to participate by providing informed consent; 28 were assigned to the BE-Social group and 18 to the control group. The age, sex, educational level, reading comprehension skills, and pre-entry course content knowledge of participants did not differ significantly between groups (see Table 1). All students who initially accepted participation remained in the study until its completion. To join the social media group, students had to accept the group rules, which included discussing only academic material and refraining from sharing group content with anyone outside the group. Participants were not aware of the study hypotheses and goals.

The study took place between February and May 2020. Throughout the study, students were experiencing various forms of lockdowns in their respective residential areas due to the COVID-19 pandemic. The study methods were approved by the ethics committee of the Universidad Autónoma de Madrid (Approval Number CEI 112-2204).

Dependent Variables

Course Content Tests

Participants completed a test for each of the course's weekly lessons. Each test comprised 15 multiple-choice items, each with one correct answer and three distractors. We computed test scores by dividing the number of correct responses by 15 and converting that ratio into a percentage. To minimize content-specific effects (e.g., lower performance in weeks with particularly challenging tests or course content), participants were allowed to complete the test for a given lesson at any time within a 3-week window starting in the week the lesson was delivered. In addition, participants were allowed to complete missing tests during the last 3 weeks of the study. As a result, the number, timing, and sequence of tests varied slightly across participants. Participants completed 12 to 14 content tests throughout the study. Participants took the tests through the Moodle platform, which scored them automatically upon completion.
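The scoring rule above is a single line of arithmetic. The sketch below illustrates it; the function names are hypothetical, and averaging a participant's test scores is only an illustrative assumption, since how scores were aggregated per phase is not specified here.

```python
def content_test_score(correct: int, n_items: int = 15) -> float:
    """Percentage of correct responses on one weekly content test."""
    if not 0 <= correct <= n_items:
        raise ValueError("correct must be between 0 and n_items")
    # Multiply before dividing so whole-number percentages stay exact.
    return 100 * correct / n_items


def mean_test_score(correct_counts: list[int], n_items: int = 15) -> float:
    """Mean percentage across the tests one participant completed.

    Assumption (illustrative only): a simple unweighted mean across tests.
    """
    scores = [content_test_score(c, n_items) for c in correct_counts]
    return sum(scores) / len(scores)
```

For example, 12 correct answers out of 15 give a score of 80.0, which sits exactly at the 80% inclusion threshold.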

Social Media Engagement Outcomes

Social media engagement outcomes may help document the teaching–learning process. For example, reacting to a course-related post (e.g., clicking the like icon) suggests a degree of exposure to the material, whereas commenting on a course-related post may indicate a degree of elaboration of the course content referred to in the post. An independent observer tallied Facebook reactions and comments weekly for every participant across the general and BE-Social Facebook groups over a 16-week period. For each participant, we computed two dependent variables: the weekly number of reactions (e.g., likes) and the weekly number of comments (e.g., responses to an instructor’s post). For participants in the control group, these counts came from the general Facebook group; for participants in the intervention group, they were summed across the general and the BE-Social Facebook groups.
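The weekly tallies described above can be sketched as a simple count over engagement events. The event format `(participant, facebook_group, week, kind)` and the function name are hypothetical; the sketch only shows how pooling across the two Facebook groups works when the group label is ignored.

```python
from collections import defaultdict


def weekly_engagement(events):
    """Count reactions and comments per (participant, week), pooling Facebook groups.

    Each event is a hypothetical (participant, facebook_group, week, kind) tuple,
    where kind is "reaction" or "comment". The group label is ignored, so counts
    are summed across the general and BE-Social groups; control participants
    simply have no BE-Social events.
    """
    counts = defaultdict(lambda: {"reactions": 0, "comments": 0})
    for participant, _group, week, kind in events:
        if kind == "reaction":
            counts[(participant, week)]["reactions"] += 1
        elif kind == "comment":
            counts[(participant, week)]["comments"] += 1
        else:
            raise ValueError(f"unknown engagement kind: {kind!r}")
    # Weeks without any activity are absent and should be read as zero.
    return dict(counts)
```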

Experimental Design

We used a two-arm RCT with simple randomization. Specifically, students meeting the inclusion criteria were randomly assigned to the intervention and control groups. This approach supports unbiased and independent participant allocation and valid statistical inference; it is also easy to implement and transparent, and it minimizes opportunities for manipulation. Participants in the control group underwent an extended time series without receiving the intervention.
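Simple randomization allocates each participant independently, like a fair coin flip, so arm sizes are not fixed in advance and can end up unequal. A minimal sketch, assuming a seeded pseudo-random generator and hypothetical arm labels:

```python
import random


def simple_randomization(participant_ids, seed=None):
    """Allocate each participant independently with probability 0.5 per arm.

    Because every draw is independent, the two arms can differ in size.
    """
    rng = random.Random(seed)  # seeded so the allocation can be reproduced and audited
    return {
        pid: ("BE-Social" if rng.random() < 0.5 else "control")
        for pid in participant_ids
    }
```

Recording the seed is one way to make the allocation transparent: anyone with the participant list and the seed can regenerate it.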

We studied single-subject effects with a concurrent multiple-baseline design (Bailey & Burch, 2018). As part of the single-subject analysis, participants in the intervention group were randomly assigned to four predetermined baseline lengths staggered in successive 3-week blocks (except for vacation breaks, lessons were delivered weekly). Twelve participants were assigned to a 6-week baseline, 7 to a 9-week baseline, and 9 to a 13-week baseline (this period included the Easter week break).
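Unlike the coin-flip allocation between arms, assignment to baseline lengths fills fixed quotas (12, 7, and 9 participants). One way to randomize under fixed quotas is to shuffle the participant list and fill the slots in order. The sketch below assumes exactly that procedure; the participant IDs and function name are hypothetical.

```python
import random

# Baseline length (weeks) -> number of intervention-group participants, as reported.
BASELINE_SLOTS = {6: 12, 9: 7, 13: 9}


def assign_baselines(participant_ids, slots=BASELINE_SLOTS, seed=None):
    """Randomly assign participants to predetermined baseline lengths with fixed counts."""
    ids = list(participant_ids)
    if len(ids) != sum(slots.values()):
        raise ValueError("number of participants must equal the number of baseline slots")
    rng = random.Random(seed)
    rng.shuffle(ids)  # random order; slots are then filled in sequence
    assignment, start = {}, 0
    for weeks, count in slots.items():
        for pid in ids[start:start + count]:
            assignment[pid] = weeks
        start += count
    return assignment
```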

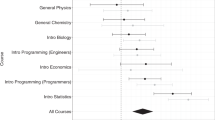

The RCT and the single-subject experimental design were compatible and were implemented concurrently (Virues-Ortega et al., 2023). The duration of study phases was determined by both the academic program duration and the need to ensure that the level of data aggregation was consistent across participants for the purposes of the group-based analysis. To compensate for relatively short baselines in the multiple-baseline design, we added control group participants who did not receive the intervention to the multiple-baseline triads. Because treatment inception could not be delayed until baseline stability was achieved, we expected that those undergoing “extended baselines” would help inform the baseline prediction function (Cooper et al., 2019, p. 164). Figure 1 presents a diagram with the temporal distribution of the baseline and intervention phases for all groups and baseline lengths across successive weeks.

Procedure

Before the start of the first baseline, a course instructor created (and administered from that point onward) the two closed Facebook groups required to implement the intervention (i.e., general and BE-Social Facebook groups). In the sections below, we describe the procedures for the control group and the intervention group (see again Fig. 1 for the timeline of the study phases).

Baseline

All participants had access to the general Facebook group for a period of at least 6 weeks before the start of the intervention (Fig. 1). Participants in the control group remained in the general Facebook group throughout the duration of the study. The general Facebook group was intended as an opportunity for students to gain additional exposure to the course. The instructor posted daily multiple-choice study questions. Students could not create novel posts but could respond to course content-related posts. The instructor provided general feedback once a week on each post immediately before turning off commenting for that post.

Intervention

In addition to their participation in the general Facebook group, individuals in the intervention group were invited to participate in the BE-Social Facebook group, which incorporated the evidence-informed intervention components described below. The full intervention was implemented for a 3-week period. Individuals in the shorter baselines received the intervention without timely feedback for a few additional weeks (Fig. 1).

Cooperative Learning

The BE-Social group allowed peer- and instructor-mediated feedback. The instructor posted daily open-ended questions to give participants additional exposure to the material and to create interaction opportunities. In addition, the instructor engaged in several actions intended to create a cooperative learning environment: (a) providing personalized, timely feedback on student comments within the same day, including corrective feedback and social praise (hereinafter referred to as timely feedback), (b) praising students who gave feedback to peers, and (c) creating posts and comments encouraging students to share materials they had prepared (i.e., summaries, glossaries, flashcards). Social praise was specific; praise statements referred to discrete desirable behaviors (e.g., “Thanks for lending a hand to Lizbeth on question x!”). The instructor was available for a continuous 4-hour period at designated times on weekdays during the first three weeks of the intervention period (Fig. 1).

Self-management Training via Multimedia Posts and Video Modeling

The instructor created daily textual and multimedia posts presenting selected study self-management strategies. Posts were intended to promote independent study habits and organizational skills and focused on study goal setting, self-monitoring, and self-reinforcement (see a selection of study self-management posts in the Supplementary Information, Appendix 1). Self-management posts remained accessible to participants for the duration of the intervention phase. In addition, the instructor streamed three 1-hour video modeling sessions intended to enhance study and self-management skills, using elements of the behavioral skills training protocol by Parsons et al. (2013). Each video modeling session started with a description of the target skill, followed by a demonstration of the skill with additional examples (i.e., problem-solving applied scenarios, engaging in study self-recording) and a 10-min Q&A session (see an example timeline of a video modeling post in the Supplementary Information, Appendix 2). Sessions closed with the instructor encouraging students to practice the target skill and thanking those who attended. Videos remained accessible through the BE-Social Facebook group for the duration of the intervention phase.

Intervention without Timely Feedback

Given that individualized, timely feedback was the most resource-intensive element of the multi-component BE-Social program, we limited timely instructor feedback to the first three weeks of intervention for each participant. Specifically, elements (a) and (b) of the cooperative learning component were only available for the first three weeks of the intervention. We conducted separate analyses to determine whether participants continued to engage with the group (by reacting to posts and posting comments) following the timely feedback period. Participants continued to be exposed to all other elements of the multi-component package during this phase (i.e., peer feedback, self-management, and video modeling posts), but they did not receive individualized feedback from the instructor.

Both the general and the BE-Social groups allowed students to react to posts and comments (e.g., "liking" a post or comment) and to comment on posts or on other comments. Students were not allowed to publish primary posts.

Interobserver Agreement and Procedural Integrity

Although reactions and comments were recorded automatically by the platform, we assessed the interobserver agreement (IOA) of the data extraction process. A secondary observer extracted occurrences of Facebook reactions and comments from 25% of the BE-Social Facebook group posts. An agreement was defined as both observers recording the same number of reactions (or comments) for a given post. We computed IOA as the number of posts with agreement divided by the total number of posts evaluated. IOA equaled 94% for both reactions and comments.
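The post-by-post agreement index described above counts a post as an agreement only when both observers recorded exactly the same count. A minimal sketch, with hypothetical function and argument names:

```python
def post_by_post_ioa(counts_a, counts_b):
    """Percentage of posts on which two observers recorded the same count.

    counts_a and counts_b hold each observer's count (e.g., reactions) per post,
    in the same post order.
    """
    if len(counts_a) != len(counts_b) or not counts_a:
        raise ValueError("observers must score the same, non-empty set of posts")
    agreements = sum(1 for a, b in zip(counts_a, counts_b) if a == b)
    return 100 * agreements / len(counts_a)
```

Because this is an exact-count criterion, a single-reaction discrepancy on a post counts as a full disagreement. This makes it stricter than agreement indices that give partial credit for near-equal counts.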

To evaluate whether key aspects of the intervention were delivered as intended, we documented the percentage of student posts in the BE-Social group that received feedback within 24 h and the percentage of students in the BE-Social group who viewed posts published by the instructor or by peers. We assessed procedural integrity in a random 25% sample of instructor and student posts in the BE-Social group. Two independent observers extracted the number of views per post, the timestamp of the first student input, and the timestamp of the first instructor feedback. The analysis indicated that the mean percentage of students viewing posts was 87% (range, 65–96%) and that 100% of students’ inputs received feedback within 24 h (feedback latency: Mdn = 14 min, M = 3.9 h, range, 0.0–21.1 h). The IOA of the procedural integrity data extraction process was 96%, 98%, and 94% for views, student input timestamps, and instructor feedback timestamps, respectively.
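The latency statistics above (share of inputs answered within 24 h, median, mean, and range) can be derived from pairs of timestamps. A minimal sketch, assuming each student input is paired with the timestamp of its first instructor feedback; the function name and demo timestamps are hypothetical.

```python
from datetime import datetime, timedelta
from statistics import mean, median


def feedback_latency_summary(pairs, window=timedelta(hours=24)):
    """Summarize feedback latency for (student_input_time, first_feedback_time) pairs."""
    if not pairs:
        raise ValueError("need at least one pair")
    latencies_h = [(fb - inp).total_seconds() / 3600 for inp, fb in pairs]
    within = sum(1 for inp, fb in pairs if fb - inp <= window)
    return {
        "pct_within_window": 100 * within / len(pairs),
        "median_h": median(latencies_h),
        "mean_h": mean(latencies_h),
        "range_h": (min(latencies_h), max(latencies_h)),
    }


if __name__ == "__main__":
    start = datetime(2020, 3, 2, 9, 0)  # hypothetical timestamp
    demo = [(start, start + timedelta(minutes=14)), (start, start + timedelta(hours=3))]
    print(feedback_latency_summary(demo))
```

A median far below the mean, as in the reported 14 min versus 3.9 h, indicates a right-skewed distribution: most inputs are answered quickly and a few wait much longer.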

Social Validity

We collected all unsolicited feedback that participants provided through Facebook comments, messages, and emails after the end of the intervention phase. We then classified comments as positive, negative, or neutral and computed the percentage of participants in the control and intervention groups who provided unsolicited positive feedback. In addition, we split feedback comments into individual sentences and conducted a theme analysis with the 3rd Eye theme analysis algorithm (Hunerberg, 2019). The algorithm groups distinct sentences that share common sets of words and displays these groups as topics labeled with the words shared by all of their elements. Topics are not mutually exclusive, so a given sentence may be grouped into multiple topics (e.g., “The videos helped me solve my many questions” could be assigned to four different topics: “video,” “helping,” “solving,” and “question”).
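The non-exclusive grouping idea can be illustrated with a much simpler procedure. This sketch is not the 3rd Eye algorithm, whose internals are not described here. It forms one topic per shared word, without stemming, so "helped" and "helping" stay separate. The stop-word list and function name are hypothetical.

```python
import re
from collections import defaultdict

# Hypothetical stop-word list; a real analysis would use a fuller, language-specific list.
STOPWORDS = {"a", "an", "and", "the", "me", "my", "i", "to", "of", "for", "with", "many"}


def group_by_shared_words(sentences, min_size=2):
    """Map each content word to the indices of the sentences containing it.

    A sentence can appear under several words, so topics are non-exclusive.
    Only words shared by at least `min_size` sentences are kept as topics.
    """
    topics = defaultdict(set)
    for index, sentence in enumerate(sentences):
        for word in set(re.findall(r"[a-záéíóúñü]+", sentence.lower())):
            if word not in STOPWORDS:
                topics[word].add(index)
    return {word: sorted(idx) for word, idx in topics.items() if len(idx) >= min_size}
```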

Analysis

We conducted a two-factor mixed-effect analysis of variance (ANOVA) with Time as a within-subjects factor (Time 1: baseline, Time 2: intervention, Time 3: intervention without timely feedback) and Group as a between-subjects factor (control group, BE-Social intervention group). The analysis was repeated for the three main outcomes: course content tests, reactions, and comments. The effect of the intervention without timely feedback was evaluated only among individuals in the intervention group with short (n = 12) and medium (n = 7) baselines. We computed η2 effect sizes for all main and interaction effects. All analyses were conducted with SPSS (IBM Corp., 2019). An alpha level of 0.05 was used throughout.

To determine whether the attained sample size yielded a sufficiently powered analysis, we conducted a series of post hoc power analyses with the G*Power software (Faul et al., 2009; a priori power analyses are available upon request). For course content tests, the achieved power was 1.00 for the Group effect (η2 = 0.34, effect size f = 0.72, r(baseline-to-treatment) = 0.12, critical F = 4.06, n = 46), 0.96 for the Time effect (η2 = 0.21, effect size f = 0.52, r(baseline-to-treatment) = 0.12, critical F = 3.01, n = 46), and 0.97 for the interaction effect (η2 = 0.30, effect size f = 0.65, r(baseline-to-treatment) = 0.12, critical F = 3.56, n = 46).
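The effect sizes f entered into the power analysis follow from the reported η2 values through the standard conversion f = √(η2 / (1 − η2)). The short sketch below (hypothetical function name) reproduces the three reported pairs: 0.34 → 0.72, 0.21 → 0.52, and 0.30 → 0.65.

```python
import math


def eta_squared_to_f(eta_sq: float) -> float:
    """Convert eta squared to Cohen's f: f = sqrt(eta^2 / (1 - eta^2))."""
    if not 0 <= eta_sq < 1:
        raise ValueError("eta squared must be in [0, 1)")
    return math.sqrt(eta_sq / (1 - eta_sq))


for eta_sq in (0.34, 0.21, 0.30):
    print(eta_sq, round(eta_squared_to_f(eta_sq), 2))
```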

We conducted visual and statistical analyses of the single-subject experimental design. To facilitate visual analysis of the single-subject dataset, the time series of all outcomes were organized in 3-week blocks, except for the block that included the Easter break week, which lasted 4 weeks (Week 10 through Week 13). Because the content test for a given lesson remained available on the Moodle platform for a 3-week window, participants could complete a varying number of tests in each 3-week period. Therefore, content test performance was graphed as successive rather than weekly events. Engagement responses (reactions and comments) were aggregated by week. Participants were ranked by mean baseline performance and assigned to multiple-baseline triads. Each triad included two participants with different baseline lengths (e.g., 6-week and 9-week baselines) and a participant with an extended baseline who did not receive the intervention. The range of baseline performance within each triad was below 10% (range, 0.1–7.8%).
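The block structure and the triad-matching criterion described above can be sketched as follows. The sketch assumes the study weeks are numbered 1 to 16 with 3-week blocks everywhere except Weeks 10 to 13; under that assumption, the 6-, 9-, and 13-week baselines all end on a block boundary. The function names are hypothetical.

```python
# Assumed block boundaries for a 16-week study period; the fourth block spans
# Weeks 10-13 because it contains the Easter break week.
BLOCKS = [(1, 3), (4, 6), (7, 9), (10, 13), (14, 16)]


def week_to_block(week: int) -> int:
    """Return the 1-based index of the visual-analysis block containing a study week."""
    for index, (first, last) in enumerate(BLOCKS, start=1):
        if first <= week <= last:
            return index
    raise ValueError(f"week {week} is outside the study period")


def triad_baseline_range(baseline_means):
    """Spread (max - min, in percentage points) of mean baseline scores within a triad."""
    return max(baseline_means) - min(baseline_means)
```

A triad would meet the reported matching criterion when `triad_baseline_range(...)` is below 10.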

In addition to the visual analysis, we analyzed the single-subject dataset by computing between-case standardized mean differences (BC-SMD) and 95% confidence intervals for all outcomes (tests, comments, reactions) and the relevant two-phase comparisons: baseline to intervention, baseline to intervention without timely feedback, and intervention to intervention without timely feedback (Hedges et al., 2012, 2013). We computed BC-SMD with the DHPS SPSS macro (Marso & Shadish, 2015). BC-SMD effect sizes may be interpreted according to the usual guidelines for Cohen's d effect sizes (Cohen, 1988; Sawilowsky, 2009).
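The interpretive benchmarks cited above can be written as a lookup. The sketch uses Cohen's thresholds as extended by Sawilowsky (very small 0.01, small 0.2, medium 0.5, large 0.8, very large 1.2, huge 2.0). The "negligible" label below 0.01 and the function name are our own assumptions. The sketch labels a computed effect size; it does not compute BC-SMD.

```python
from bisect import bisect_right

# Lower bounds of each label (Cohen, 1988, extended by Sawilowsky, 2009).
THRESHOLDS = [0.01, 0.2, 0.5, 0.8, 1.2, 2.0]
# "negligible" for |d| < 0.01 is an assumption added for completeness.
LABELS = ["negligible", "very small", "small", "medium", "large", "very large", "huge"]


def describe_effect_size(d: float) -> str:
    """Label a d-type effect size (sign ignored) by conventional benchmarks."""
    return LABELS[bisect_right(THRESHOLDS, abs(d))]
```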

Results

Content Tests

The mixed-effect ANOVA revealed main effects of Group, F (1, 46) = 18.37, p < 0.001, η2 = 0.34, and Time, F (2, 46) = 9.13, p < 0.001, η2 = 0.21, on content test performance (Table 2). The Mauchly test (p = 0.187) suggested that the sphericity assumption was met. The main effect of Time appears attributable to the intervention effect within the intervention group. Specifically, when the analysis was restricted to the control group, the main effect of Time was not statistically significant, F (2, 18) = 2.78, p = 0.81, η2 = 0.14. Moreover, there was a significant Time × Group interaction for content test performance, F (2, 37) = 14.65, p < 0.001, η2 = 0.30. A pairwise comparison restricted to the intervention group with a Bonferroni adjustment revealed statistically significant mean differences between baseline and treatment (p < 0.001) and between baseline and intervention without timely feedback (p = 0.002). The mean difference between the two sequential forms of intervention (BE-Social with and without timely feedback) was not significant (p = 1), suggesting that intervention gains were maintained after timely feedback was withdrawn.
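A Bonferroni-adjusted p value is the raw p value multiplied by the number of comparisons and capped at 1. This is why an adjusted value can be reported as exactly p = 1. With three Time levels there are three pairwise comparisons, so m = 3. A minimal sketch, with a hypothetical function name:

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p values: p * m, capped at 1, where m = number of tests."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For example, raw p values of 0.0004, 0.01, and 0.6 become 0.0012, 0.03, and 1.0.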

The full multiple-baseline time series is presented in Fig. 2 (participants 1 through 18), Fig. 3 (participants 19 through 36), and Fig. 4 (participants 37 through 46). Visual analysis revealed that the effect of the intervention was largely idiosyncratic. Specifically, 15 participants showed an immediate moderate-to-large effect at the start of the intervention, with moderate to minimal overlap (P1, P2, P4, P10, P13, P16, P22, P23, P25, P26, P31, P34, P35, P37, P40). By contrast, 12 participants showed no discernible effect on visual analysis, with considerable overlap between the baseline and intervention time series (P5, P7, P8, P11, P14, P17, P19, P20, P28, P29, P38, P42). Most participants who showed an intervention effect maintained their performance after timely feedback was withdrawn. However, for P13 and P37, the effect of the intervention quickly disappeared upon the withdrawal of timely feedback. Only one participant exposed to an extended baseline (control group; P43) showed a consistent change in performance coinciding with intervention transition dates. In addition, three participants undergoing the extended baseline (P3, P42, and P46) showed transient increases in performance. None of the participants receiving the intervention showed a detrimental effect on performance during the treatment and post-treatment phases. A summary of the visual analysis is available in Table 3. The single-subject analysis appeared critical for identifying inconsistencies in treatment effects among participants.

Fig. 2 Single-Subject Analysis of Participants 1 through 18. Notes: Percentage of correct content test responses and weekly frequency of engagement responses are scaled on the left and right y axes, respectively. Polygonal lines separate baseline and treatment phases. Broken vertical lines separate successive 3-week periods (vacation breaks not counted). Arrows indicate the withdrawal of timely feedback.

Fig. 3 Single-Subject Analysis of Participants 19 through 36. Notes: See graphical conventions in Fig. 2.

Fig. 4 Single-Subject Analysis of Participants 37 through 46. Notes: See graphical conventions in Fig. 2.

The single-subject effect size analysis generally confirmed the findings of the group analysis (Table 4). Specifically, large effect sizes were found for the baseline-to-intervention comparisons. Consistent with the mixed-effect ANOVA, the comparison between intervention and intervention without timely feedback revealed that intervention gains persisted largely unaffected after timely feedback had been withdrawn.

Facebook Group Engagement

Mauchly's test of sphericity for the repeated-measures ANOVAs of both Facebook group comments and reactions was significant (p < 0.001), indicating that the sphericity assumption was not met. Therefore, we applied the Greenhouse–Geisser correction to the degrees of freedom and p values. The mixed-effects ANOVA of the randomized controlled trial revealed a main effect of Group on Facebook engagement responses, including reactions, F (1, 46) = 68.07, p < 0.001, η² = 0.60, and comments, F (1, 46) = 9.62, p < 0.05, η² = 0.18 (Table 2). We also identified a main effect of Time for reactions, F (1.14, 46) = 12.57, p < 0.01, η² = 0.23, but only a statistical trend for comments, F (1.49, 46) = 3.26, p = 0.06, η² = 0.07. The main effect of Time on Facebook reactions may be attributed entirely to the intervention group, because the control group produced no reactions or comments in the general Facebook group throughout the study. Moreover, there was a significant Time × Group interaction for reactions, F (1.14, 46) = 12.57, p < 0.01, η² = 0.23.

Pairwise comparisons with a Bonferroni adjustment revealed a statistically significant difference in mean comments between baseline and intervention (p < 0.05), but not between baseline and intervention after timely feedback was withdrawn (p = 0.08). The difference in mean comments between the two intervention periods (with and without timely feedback) was not significant (p = 1). This pattern suggests that the increase in comments during treatment was generally maintained after the withdrawal of timely feedback, even though the group-based analysis lacked sufficient power to establish a significant Time × Group interaction for comments. For reactions, Bonferroni-adjusted pairwise comparisons revealed statistically significant differences between baseline and intervention (p < 0.001) and between baseline and intervention after the withdrawal of timely feedback (p < 0.001). The difference in reactions between the two intervention periods (with and without timely feedback) was not significant (p = 0.51), suggesting that the effect of the intervention remained unaltered after the withdrawal of timely feedback.
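The Bonferroni adjustment used for these pairwise comparisons can be illustrated with a short Python sketch. It assumes three pairwise comparisons among the three phases, and the raw p values are hypothetical, not the study's; the sketch only shows why an adjusted p value can equal exactly 1: each raw value is multiplied by the number of comparisons and then capped at 1.

```python
def bonferroni(p_values):
    """Bonferroni-adjust a family of p values.

    Each raw p value is multiplied by the number of comparisons in the
    family and capped at 1, which is why adjusted values of exactly 1
    can appear in pairwise comparison tables.
    """
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical raw p values for the three pairwise phase comparisons:
# baseline vs. intervention, baseline vs. intervention without timely
# feedback, and intervention vs. intervention without timely feedback.
raw = [0.012, 0.027, 0.45]
adjusted = bonferroni(raw)
print([round(p, 3) for p in adjusted])
```

The cap at 1 keeps adjusted values interpretable as probabilities; the correction is conservative, which is one reason a modest effect (such as the Time × Group interaction for comments) can fail to reach significance in a small sample.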

Figures 2, 3, and 4 present the complete multiple-baseline analysis for all participants. Visual analysis revealed that the effect of the intervention on engagement, as measured by reactions and comments, was not always consistent across participants. All participants except P19 showed a rapid increase in reactions upon the start of the intervention (P19 showed a delayed increase). By contrast, only 16 participants showed a rapid rise in comments (P2, P4, P10, P13, P20, P22, P23, P25, P26, P29, P31, P32, P34, P38, P40, P41), and one additional participant (P16) demonstrated a delayed increase. Overall, the increase in comments was more modest than that observed for reactions. None of the participants showed clear evidence that the rise in engagement was transient or dependent on timely feedback. Finally, several individuals showed an apparent correlation between content test performance and engagement (particularly reactions) during the intervention phase (P4, P7, P11, P22, P23, P25, P28, P31, P34, P37).

The single-subject effect size analysis was consistent with the findings of the group analysis; both suggested larger effect sizes for reactions than for comments (Table 4). In addition, for reactions, but not for comments, baseline-to-intervention effect sizes were larger before than after the withdrawal of timely feedback. Interestingly, the single-subject analysis produced identical baseline-to-intervention effect sizes for comments before and after the withdrawal of timely feedback, a slight departure from the results of the mixed-effects ANOVA. This difference may be attributable to the greater sensitivity of the Hedges' g effect size to within-phase trends.
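For readers less familiar with Hedges' g, the Python sketch below computes the conventional two-group version (a standardized mean difference with the small-sample correction J = 1 − 3/(4df − 1)) for two phases of one participant's data. This is an illustrative simplification: it treats sessions as independent and ignores autocorrelation, so it is not the design-comparable single-case estimator of Hedges et al. (2012, 2013) that was computed with dedicated software. The function name and the reaction counts are hypothetical.

```python
import math
import statistics


def hedges_g(phase_a, phase_b):
    """Standardized mean difference (phase_b minus phase_a) with Hedges'
    small-sample correction.

    Conventional two-group formula: it treats sessions as independent and
    ignores autocorrelation, unlike the single-case estimator of Hedges,
    Pustejovsky, and Shadish.
    """
    n_a, n_b = len(phase_a), len(phase_b)
    var_a = statistics.variance(phase_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(phase_b)
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    d = (statistics.mean(phase_b) - statistics.mean(phase_a)) / pooled_sd
    # J = 1 - 3 / (4 * df - 1) with df = n_a + n_b - 2
    correction = 1 - 3 / (4 * (n_a + n_b) - 9)
    return correction * d


# Hypothetical weekly reaction counts for one participant.
baseline = [0, 1, 0, 2, 1]
intervention = [5, 7, 6, 8, 9]
print(round(hedges_g(baseline, intervention), 2))
```

Because the correction factor shrinks d toward zero, it matters most when phases contain few observations, as is typical of single-subject data.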

Social Validity

Fourteen participants in the BE-Social group (50%) provided unsolicited positive feedback (P2, P10, P11, P13, P14, P19, P20, P25, P31, P32, P38, P40, P41). We received no neutral or negative feedback from the BE-Social group, and no unsolicited feedback from participants in the control group. According to a sentence-by-sentence theme analysis, the five most frequent thematic words or topics in the unsolicited feedback messages were instructor (9 entries, 28.12% of repeated entries), support (4, 12.5%), videos (3, 9.38%), knowledge (3, 9.38%), and time (3, 9.38%). In context, these themes expressed gratitude toward the instructor for sharing knowledge, devoting time to students, and supporting them. Comments also mentioned the usefulness of the video posts.
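The theme percentages can be reproduced with simple arithmetic. The Python sketch below assumes a denominator of 32 repeated entries; this total is not stated in the text but is inferred from the reported values (for example, 4 entries = 12.5%). Python's format specification rounds exact ties to the nearest even digit, which reproduces the reported 28.12% for 9/32 = 28.125%.

```python
# Theme counts reported in the social validity analysis. The denominator of
# 32 repeated entries is an inference (4 entries = 12.5%), not a reported value.
theme_counts = {"instructor": 9, "support": 4, "videos": 3, "knowledge": 3, "time": 3}
TOTAL_REPEATED_ENTRIES = 32


def theme_shares(counts, total):
    """Percentage of repeated entries per theme, formatted to two decimals."""
    return {theme: f"{100 * n / total:.2f}" for theme, n in counts.items()}


for theme, share in theme_shares(theme_counts, TOTAL_REPEATED_ENTRIES).items():
    print(f"{theme}: {theme_counts[theme]} entries, {share}%")
```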

Discussion

The current study provides evidence for the feasibility of an evidence-informed intervention package delivered through a closed Facebook group and for its potential impact on academic performance. The BE-Social program was designed as an adjunct to a one-year postgraduate course in applied psychology delivered through a Moodle platform. Overall, the results indicated considerable increases in engagement indices and academic performance. Specifically, academic performance, as evaluated by frequent multiple-choice objective tests, showed a 20-point increase on a 100-point scale in the intervention group. It is important to emphasize that students did not receive course credit for completing the tests; therefore, there was no apparent incentive for academic dishonesty. Intervention gains persisted after timely feedback had been withdrawn. A single-subject analysis indicated that intervention gains were driven primarily by a subgroup of 15 individuals who responded particularly favorably to the intervention (Table 3). Interestingly, about half of these responders showed an apparent covariation between social media engagement and gains in academic performance over the course of the intervention.

Theoretical Considerations

Digital social interaction through social media platforms has already become more prevalent than face-to-face and other analog forms of social interaction. Social media platforms are designed to enhance interaction. We suspect that the immediacy of communication and other response-inducing social media functionalities (e.g., push notifications, multi-device capability, labeling, engagement-dependent badges, and multimedia content) were critical to the effects of the intervention (Changsu et al., 2016; Mendez et al., 2014). The behavioral procedures composing the multi-component intervention were well suited to the social media channel, and their effects on academic behavior may have been enhanced by social media functionalities. For example, immediacy has been established as one of the key elements of effective feedback in online education (see, e.g., Jensen et al., 2021). Moreover, because social media feeds are ephemeral, delayed feedback may be less likely to have an impact on the audience (e.g., the relevant context may no longer be recent).

There is ample evidence to suggest that social media engagement is, to a significant extent, a function of the social rewards that users receive for their active online behavior. For example, in a series of quantitative analyses, Lindström et al. (2021) showed that the latency of posting behavior (a dimension of behavior strength) was a function of the number of likes received in preceding posts. In addition, users tend to provide more feedback to others after they themselves have received positive feedback (Eckles et al., 2016). It has also been shown that social comparison affects social media feedback similarly to non-social rewards (e.g., Rosenthal-von der Pütten et al., 2019). These findings and the apparent effect of feedback immediacy fit well with a reward learning model of online behavior.
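The general idea of a reward-learning account can be illustrated with a deliberately simplified Python sketch. It is not the computational model fitted by Lindström et al. (2021). It only shows a delta-rule (Rescorla–Wagner-style) update, in which the expected social reward for posting moves toward the likes actually received by a fraction of the prediction error. The function name, learning rate, like counts, and the mapping from expected reward to posting latency (base_latency / (1 + expected)) are all hypothetical choices made for illustration.

```python
def simulate_posting(likes_per_post, learning_rate=0.3, base_latency=10.0):
    """Illustrative (hypothetical) delta-rule model of social reward learning.

    After each post, the expected reward moves toward the likes actually
    received by learning_rate * prediction_error. The latency mapping
    base_latency / (1 + expected) is an arbitrary monotonic choice:
    higher expected reward -> shorter latency before the next post.
    """
    expected = 0.0
    latencies = []
    for likes in likes_per_post:
        latencies.append(base_latency / (1.0 + expected))
        prediction_error = likes - expected
        expected += learning_rate * prediction_error
    return latencies


# Hypothetical sequence: two unrewarded posts, then three posts with 5 likes each.
print([round(x, 2) for x in simulate_posting([0, 0, 5, 5, 5])])
```

In this toy example, latencies remain unchanged while posts go unrewarded and shorten once likes begin to arrive, which mirrors the qualitative pattern described above.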

Our results are consistent with the view that instructor- and peer-mediated feedback are forms of social reward that activate student engagement. Course content engagement within a Facebook group provides a social milieu that, in addition to replacing (totally or partially) the traditional social context of learning, may also enhance interaction opportunities (i.e., easy access to a community, regularly posted content) and timely social reinforcement (peer- and instructor-mediated feedback). We refer to this mechanism as the digital environment reward optimization hypothesis. Specifically, social media engagement may be a function of operant parameters, such as reinforcer delay, reinforcement rate, reinforcer magnitude, and schedule (e.g., Lindström et al., 2021). Social media may induce greater engagement than traditional learning contexts, in which students may have fewer opportunities to engage. This is because of the numerous actors involved in a social network (potentially large numbers of students and instructors), the low effort involved in responding, the salience of the input received from others (e.g., instant push notifications), and the virtually unlimited opportunities to respond. By reward optimization, we simply suggest that students may match their engagement to the potential rate of reinforcement available in the online environment (see an analogous application of the matching law to social dynamics in Borrero et al., 2007).
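The matching relation invoked here can be written as the generalized matching law, B1/B2 = b(R1/R2)^a, where B1 and B2 are response rates on two alternatives, R1 and R2 the corresponding reinforcement rates, a the sensitivity, and b the bias. The Python sketch below computes the proportion of responses that this law predicts for engagement. Treating "engagement" and "other activities" as two concurrent alternatives is an assumption made for illustration, the reinforcement rates are hypothetical, and no such model was estimated in this study.

```python
def predicted_allocation(r_engage, r_other, sensitivity=1.0, bias=1.0):
    """Proportion of responses allocated to engagement under the
    generalized matching law: B1/B2 = bias * (R1/R2) ** sensitivity.

    With sensitivity = bias = 1 this reduces to strict matching,
    B1 / (B1 + B2) = R1 / (R1 + R2).
    """
    if r_engage <= 0 or r_other <= 0:
        raise ValueError("reinforcement rates must be positive")
    ratio = bias * (r_engage / r_other) ** sensitivity
    return ratio / (1.0 + ratio)


# Hypothetical reinforcement rates (e.g., feedback events per week).
print(predicted_allocation(30, 10))                   # strict matching
print(predicted_allocation(30, 10, sensitivity=0.8))  # undermatching
```

Under strict matching, tripling the relative reinforcement available for engagement shifts three quarters of responding toward it. Sensitivity values below 1 (undermatching) temper that shift.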

In the current study, enhanced engagement may have resulted in improved content exposure, content repetition, and content elaboration, which are known mediators of information acquisition and, ultimately, academic performance (Zheng et al., 2015). Incidentally, the matching process that may have supported course engagement in the current study may also operate in problematic internet use, such as internet addiction, a highly prevalent condition among college students (e.g., Joseph et al., 2021).

The current evaluation of the BE-Social package suggests that increased opportunities for operant learning may maximize social reinforcement for engagement responses. These opportunities were brought about both by evidence-based teaching–learning strategies (e.g., video modeling, regular content posting) and by social media functionalities (e.g., timely feedback, low response effort, content sharing). Some of the reinforced engagement responses are important elements of effective study behavior (e.g., content elaboration, problem-solving). However, key elements of this conceptual framework were not directly assessed here. Future studies could evaluate the impact of discrete elements of the BE-Social program on engagement frequency and latency as a function of various social reward parameters (e.g., likes received, contingent commenting, and praising). The study by Lindström et al. (2021) already provides an experimental paradigm in this direction. Elaborating the proposed hypothesis further would require an extensive program of laboratory and translational research.

Methodological Considerations

The current study borrowed methods from the mainstream behavioral education literature that had not previously been evaluated in the context of social media. For example, video modeling has been used predominantly to teach social skills (Sherer et al., 2001), daily living skills (Kellems et al., 2016), vocational skills (Kellems & Morningstar, 2012), and academic skills (Cihak et al., 2009) to people with developmental disabilities (see a systematic review in Park et al., 2019). However, to our knowledge, video modeling has not been used to enhance self-management skills in tertiary education. Video modeling presents a significant research opportunity in this context because it has several unevaluated applications. These include content elaboration, public speaking delivery style, and study behaviors during long study sessions (e.g., “study with me” videos), to mention a few.

The study featured a novel channel for delivering feedback that met evidence-informed formal requirements (e.g., low-latency feedback, feedback specific to a target academic behavior). Social media platforms can facilitate timely communication, allowing instructors to address questions, clarify concepts, and, more importantly, encourage peer-to-peer communication outside the formal lecture environment (e.g., Tarifa-Rodriguez et al., 2023). They provide frequent opportunities for students to have positive and safe interactions (in part due to the instructor’s oversight and explicit group rules) and to engage with diverse perspectives. Also, the accessibility of these platforms enables low-latency, frequent communication, which may explain the apparent reinforcement effect observed across various engagement responses.

Randomized controlled trials (RCTs) often mask individual or subgroup effects (Frieden, 2017). The current study combined RCT and single-subject research methodologies, a key strategy for identifying idiosyncratic effects (Smith, 2012). While RCTs are ideal for outcome research, they may be less practical when novel multi-component interventions are being evaluated and fine-tuned. Single-subject experimental designs are increasingly used in education to identify delayed effects, transient effects, and specific groups of non-responders (Ledford & Gast, 2018). In the current analysis, only about 60% of participants in the intervention group demonstrated an apparent gain in academic performance. The intervention may therefore be further optimized in several ways: by adding new components, by adjusting the intensity of existing ones, or by evaluating the impact of the various elements of the BE-Social package separately and in combination (see a review of component analysis methods for behavioral interventions in Ward-Horner & Sturmey, 2010). Novel elements that could be added in future research include more systematic token and social reinforcement contingencies for peer-mediated feedback, instructor-trained large language models as a vehicle for cost-effective immediate feedback, and direct evaluation of the complexity of student textual inputs.

The “parallel” approach to group-based and single-subject analyses used here was not without challenges. For example, we could not extend baselines until stability was attained: the study had to fit within the tight schedule of the academic program, and the combination of a multiple-baseline design and an RCT meant that baseline durations were predetermined. The longer baselines showed that content test performance might be inherently variable (i.e., variability would not have been “fixed” by extending the baseline). However, they did not show consistent trends or changes in level, thereby supporting the predictive function of the baseline.
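Visual analysis remains the primary basis for judging baseline stability. A least-squares slope offers one simple, supplementary check for a consistent baseline trend. The Python sketch below assumes equally spaced sessions and uses hypothetical content test scores. It is not an analysis reported in this study.

```python
import statistics


def ols_slope(scores):
    """Least-squares slope of scores regressed on session index 0..n-1."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two observations")
    x_mean = (n - 1) / 2
    y_mean = sum(scores) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(scores))
    sxx = sum((x - x_mean) ** 2 for x in range(n))
    return sxy / sxx


# Hypothetical baseline content test scores (0-100) across six sessions:
# variable from session to session, but without a consistent trend.
baseline = [55, 70, 48, 66, 52, 68]
slope = ols_slope(baseline)
spread = statistics.stdev(baseline)
print(f"slope = {slope:.2f} points/session, SD = {spread:.1f} points")
```

A slope that is small relative to the session-to-session spread is compatible with the variable but trendless pattern described above. A formal test would need to account for autocorrelation.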

The current study also features an assortment of methodological strategies suited to using a social media platform for educational purposes in naturalistic settings. Specifically, the proposed direct measurement, interobserver agreement, and procedural integrity strategies provide a basis for evaluating educational interventions in this context. Future studies would benefit from purpose-built applications for real-time monitoring of key outcomes of the teaching–learning process as it unfolds in social media groups attached to online and blended learning programs. It would be a welcome addition to this literature to document more molecular outcomes, such as feedback latency, length of feedback exchanges, peer-to-instructor feedback ratio, and automated text analysis, among others. Because we could only compute feedback latency retrospectively, this potentially informative outcome was used only to monitor procedural integrity in the current study. The ability to extract these and other outcomes computationally may allow instructors to use them as part of the teaching–learning process.
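Computing feedback latency prospectively could be straightforward once comment timestamps are available. The Python sketch below assumes a hypothetical export format consisting of a post identifier, an author role, and an ISO 8601 timestamp. This is not an actual Facebook API. The sketch defines feedback latency as the time from the first student comment on a post to the first instructor reply that follows it. It also computes a simple peer-to-instructor ratio that, as a simplification, counts every student comment as peer input. The data and both operational definitions are illustrative assumptions.

```python
from datetime import datetime

# Hypothetical export of comment events: (post_id, author_role, ISO timestamp).
events = [
    ("p1", "student", "2021-03-01T10:00"),
    ("p1", "instructor", "2021-03-01T10:45"),
    ("p1", "student", "2021-03-01T11:10"),
    ("p2", "student", "2021-03-02T09:00"),
    ("p2", "student", "2021-03-02T09:20"),
    ("p2", "instructor", "2021-03-02T12:00"),
]


def feedback_latencies_minutes(events):
    """Minutes from the first student comment on each post to the first
    instructor reply that follows it (hypothetical operational definition)."""
    first_student = {}
    latencies = {}
    # Same-format ISO strings sort chronologically.
    for post_id, role, stamp in sorted(events, key=lambda e: e[2]):
        t = datetime.fromisoformat(stamp)
        if role == "student":
            first_student.setdefault(post_id, t)
        elif role == "instructor" and post_id in first_student and post_id not in latencies:
            latencies[post_id] = (t - first_student[post_id]).total_seconds() / 60
    return latencies


def peer_to_instructor_ratio(events):
    """Student comments per instructor comment (all student comments counted as peer input)."""
    peer = sum(1 for _, role, _ in events if role == "student")
    instructor = sum(1 for _, role, _ in events if role == "instructor")
    return peer / instructor if instructor else float("inf")


print(feedback_latencies_minutes(events), peer_to_instructor_ratio(events))
```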

Limitations and Future Directions

Several limitations of the current study should be noted. First, the amount of exposure to academic content was difficult to equate across the arms of the RCT. Specifically, the intervention procedures (e.g., additional opportunities for peer-to-peer interaction and video presentations of self-management strategies) necessarily involved adding course-related content. This resulted in additional exposure to course material for the intervention group. More research is needed to establish minimally disruptive strategies for equating the intensity of educational interventions delivered through social media (e.g., yoking the number of social media posts across groups).

Second, we did not experimentally explore the potential mediating role of social media engagement in academic achievement. Different engagement responses may convey different information. Reactions provide evidence of sentiment and content reception, whereas comments may reflect content elaboration and motivation and may be subject to more complex analyses (e.g., latency of responding, text analysis). Moreover, the different levels of effort involved in reacting and commenting may explain the disparity in the frequencies of these two indices (see Billington & DiTommaso, 2003, for a discussion of the impact of response effort on choice).

Third, more robust measures of academic performance could be obtained by adding proctored tests or proctored final examinations as intervention outcomes. Although unproctored tests may be positively biased (Steger et al., 2020), the assessment system used here was identical across groups. In addition, test items focused on applied scenarios that cannot readily be solved by copying course content, searching the Internet, or using AI engines.

Fourth, psychology courses typically enroll a high proportion of female students (American Psychological Association, 2017), and this was certainly the case here. Because of the unbalanced gender distribution in our sample, our findings should be replicated with more diverse populations.

Fifth, future studies could determine whether the proposed intervention could also be implemented “autonomously” by trained students using pyramidal or peer-proctor training structures (see, for example, Erath et al., 2020; Svenningsen & Pear, 2011), which would further improve the cost-effectiveness of the intervention. Cost analyses of this and other variations of the program would also be welcome.

Sixth, because we used simple randomization, the sizes of the control and intervention groups were likely to be unbalanced. Although simple randomization often leads to unbalanced groups, as was the case here, it remains a randomization strategy that is minimally prone to bias (Schulz & Grimes, 2002).
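The degree of imbalance that simple randomization can produce is easy to quantify. The Python sketch below assumes that each participant was allocated by an independent fair coin toss. It uses the group sizes implied by the text: 28 of 46 participants in the intervention group, hence 18 controls. Under these assumptions, it computes the exact binomial probability of a split at least that unbalanced in either direction.

```python
from math import comb


def prob_imbalance_at_least(n, larger_group):
    """Probability that simple 1:1 randomization of n participants yields a
    split at least as unbalanced as larger_group vs. n - larger_group."""
    gap = abs(2 * larger_group - n)  # difference between the two arm sizes
    favourable = sum(comb(n, k) for k in range(n + 1) if abs(2 * k - n) >= gap)
    return favourable / 2 ** n


print(round(prob_imbalance_at_least(46, 28), 3))  # about 0.18
```

Under these assumptions, a split of 28 versus 18 or worse occurs in roughly 18% of allocations, so the observed imbalance is unremarkable for simple randomization.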

Finally, as noted in the methodological section, the proposed program incorporated several intervention elements (cooperative learning, feedback, and self-management training via multimedia posts and video modeling). Future studies could optimize the intervention by evaluating the relative impact of these various components through component analyses, multi-arm RCTs, and other methods.

Practical Implications

The study showed that a time-efficient intervention could produce a mean 20-point gain in academic performance. Specifically, 60 instructor person-hours were required to deliver the intervention to 28 students (2.1 person-hours per student). Further gains in efficiency may be possible by evaluating the specific effects of the various intervention components, focusing the intervention on low-performance students, optimizing the ratio between the feedback provided by peers and that provided by the instructor, and evaluating the scalability of the BE-Social program with larger groups.
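The per-student figure is a simple ratio of total instructor time to group size. The minimal Python sketch below reproduces it under the assumption that delivery time is spread evenly across students. The function name is illustrative.

```python
def person_hours_per_student(total_person_hours, n_students):
    """Average instructor time per student, assuming the total delivery
    time is spread evenly across all students in the group."""
    if n_students <= 0:
        raise ValueError("n_students must be positive")
    return total_person_hours / n_students


per_student = person_hours_per_student(60, 28)
print(f"{per_student:.1f} person-hours per student")  # prints 2.1 person-hours per student
```

Some instructor time (e.g., preparing video models) is probably fixed rather than per-student. For that reason, this average should not be extrapolated linearly to larger groups without the scalability evaluation proposed above.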

Future studies may help to strengthen the promising effects of the BE-Social program. Even in its current form, the proposed intervention may have some potential for knowledge transfer. In addition, with adequate training, instructors may easily acquire the required teaching repertoire (e.g., self-management, video modeling, behavioral skills training, timely specific feedback). Our findings suggest that intervention gains may emerge within days of the start of the intervention, which is compatible with the duration of semester-long or shorter courses. In addition, positive student feedback (analyzed qualitatively in the Social Validity section) suggests that the intervention may also favor motivation, social climate, and positive peer-to-peer and peer-to-instructor interactions.

Conclusions

With the near-universal growth of online education and social media use, social media adjuncts to online and blended learning programs are expected to increase in the foreseeable future. Unfortunately, this area has not yet developed into an evidence-based practice. Educators' choice of social media functionalities and the design of the social dynamics delivered through this channel may have a large impact on student engagement and performance (Chen et al., 2020). The current study shows that a closed Facebook group may be a promising channel for delivering a multi-component behavioral intervention to adult students attending an online postgraduate course. This intervention included cooperative learning, self-management, and video modeling elements. Our findings are consistent with the view that social media platforms provide a prosthetic social milieu that can enrich traditional education by maximizing social rewards through increased interaction opportunities and timely positive feedback. The proposed digital environment reward optimization hypothesis captures these processes.

Data Availability Statement

The dataset of the current study will be made available through Figshare https://doi.org/10.6084/m9.figshare.20114798 upon the manuscript's acceptance.

References

Al-Rahmi, W. M., Othman, M. S., & Yusuf, L. M. (2015). Social media for collaborative learning and engagement: Adoption framework in higher education institutions in Malaysia. Mediterranean Journal of Social Sciences, 6(3S1), 246. https://doi.org/10.5901/mjss.2015.v6n3s1p246

American Psychological Association. (2017). The changing gender composition of psychology: Update and expansion of the 1995 task force report. http://www.apa.org/pi/women/programs/gender-composition/task-force-report.pdf

Arteaga-Sánchez, R., Cortijo, V., & Javed, U. (2019). Factors driving the adoption of Facebook in higher education. E-Learning and Digital Media, 16(6), 455–474. https://doi.org/10.1177/2042753019863832

Aslan, A. (2021). Problem-based learning in live online classes: Learning achievement, problem-solving skill, communication skill, and interaction. Computers & Education, 171, 104237. https://doi.org/10.1016/j.compedu.2021.104237

Ataie, F., Shah, A., Nasir, M., & Nazir, M. (2015). Collaborative learning, using Facebook’s page and groups. International Journal of Computer Systems, 02(02), 47–52.

Aydin, S. (2012). A review of research on Facebook as an educational environment. Educational Technology Research and Development, 60(6), 1093–1106. https://doi.org/10.1007/s11423-012-9260-7

Bailey, J. S., & Burch, M. R. (2018). Research methods in applied behavior analysis (2nd ed.). Routledge: New York.

Bellini, S., & Akullian, J. (2007). A meta-analysis of video modeling and video self-modeling interventions for children and adolescents with autism spectrum disorders. Exceptional Children, 73(3), 264–287. https://doi.org/10.1177/001440290707300301

Billington, E., & DiTommaso, N. M. (2003). Demonstrations and applications of the matching law in education. Journal of Behavioral Education, 12(2), 91–104. https://doi.org/10.1023/A:1023881502494

Borrero, J. C., Crisolo, S. S., Tu, Q., Rieland, W. A., Ross, N. A., Francisco, M. T., & Yamamoto, K. Y. (2007). An application of the matching law to social dynamics. Journal of Applied Behavior Analysis, 40(4), 589–601. https://doi.org/10.1901/jaba.2007.589-601

Briesch, A. M., & Chafouleas, S. M. (2009). Review and analysis of literature on self-management interventions to promote appropriate classroom behaviors (1988–2008). School Psychology Quarterly, 24(2), 106–118. https://doi.org/10.1037/a0016159

Changsu, K., Minghui, K., & Tao, W. (2016). Influence of knowledge transfer on SNS community cohesiveness. Online Information Review, 40(7), 959–978. https://doi.org/10.1108/OIR-08-2015-0258

Chen, T., Peng, L., Yin, X., Rong, J., Yang, J., & Cong, G. (2020). Analysis of user satisfaction with online education platforms in China during the COVID-19 pandemic. Healthcare (Basel, Switzerland), 8(3), 200. https://doi.org/10.3390/healthcare8030200

Cheung, C. M. K., Chiu, P. Y., & Lee, M. K. O. (2011). Online social networks: Why do students use Facebook? Computers in Human Behavior, 27(4), 1337–1343. https://doi.org/10.1016/j.chb.2010.07.028

Cihak, D., Fahrenkrog, C., Ayres, K. M., & Smith, C. (2009). The use of video modeling via a video iPod and a system of least prompts to improve transitional behaviors for students with autism spectrum disorders in the general education classroom. Journal of Positive Behavior Interventions, 12(2), 103–115. https://doi.org/10.1177/1098300709332346

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Lawrence Erlbaum Associates: New Jersey.

Cooper, J. O., Heron, T. E., & Heward, W. L. (2019). Applied behavior analysis (3rd ed.). Pearson: London.

Credé, M., & Kuncel, N. (2008). Study habits, skills, and attitudes: The third pillar supporting collegiate academic performance. Perspectives on Psychological Science, 3(6), 425–453. https://doi.org/10.1111/j.1745-6924.2008.00089.x

Doleck, T., & Lajoie, S. (2018). Social networking and academic performance: A review. Education and Information Technologies, 23(1), 435–465. https://doi.org/10.1007/s10639-017-9612-3

Eckles, D., Kizilcec, R. F., & Bakshy, E. (2016). Estimating peer effects in networks with peer encouragement designs. Proceedings of the National Academy of Sciences, 113, 7316–7322. https://doi.org/10.1073/pnas.1511201113

Erath, T. G., DiGennaro Reed, F. D., Sundermeyer, H. W., Brand, D., Novak, M. D., Harbison, M. J., & Shears, R. (2020). Enhancing the training integrity of human service staff using pyramidal behavioral skills training. Journal of Applied Behavior Analysis, 53(1), 449–464. https://doi.org/10.1002/jaba.608

Faul, F., Erdfelder, E., Lang, A.-G., & Buchner, A. (2009). G*Power (Version 3.1.9.4) [Computer Software]. https://www.psychologie.hhu.de/arbeitsgruppen/allgemeine-psychologie-und-arbeitspsychologie/gpower

Frieden, T. R. (2017). Evidence for health decision making—beyond randomized, controlled trials. The New England Journal of Medicine, 377(5), 465–475. https://doi.org/10.1056/NEJMra1614394

Garcia, E., Elbeltagi, I. M., Dungay, K., & Hardaker, G. (2015). Student use of Facebook for informal learning and peer support. International Journal of Information and Learning Technology, 32(5), 286–299. https://doi.org/10.1108/IJILT-09-2015-0024

Gillies, R. M. (2016). Cooperative learning: Review of research and practice. Australian Journal of Teacher Education. https://doi.org/10.14221/ajte.2016v41n3.3

Hedges, L. V., Pustejovsky, J. E., & Shadish, W. R. (2012). A standardized mean difference effect size for single case designs. Research Synthesis Methods, 3, 224–239. https://doi.org/10.1002/jrsm.1052

Hedges, L. V., Pustejovsky, J. E., & Shadish, W. R. (2013). A standardized mean difference effect size for multiple baseline designs across individuals. Research Synthesis Methods, 4, 324–341. https://doi.org/10.1002/jrsm.1086

Hicks, A., & Graber, A. (2010). Shifting paradigms: Teaching, learning and Web 2.0. Reference Services Review, 38(4), 621–633. https://doi.org/10.1108/00907321011090764

Hsu, T. C. (2018). Behavioral sequential analysis of using an instant response application to enhance peer interactions in a flipped classroom. Interactive Learning Environments, 26(1), 91–105. https://doi.org/10.1080/10494820.2017.1283332

Hunerberg, E. (2019). 3rd Eye theme analysis [Online Application]. https://3rdeyeinformation.com/home

Hurt, N. E., Moss, G., Bradley, C., Larson, L., Lovelace, M., Prevost, L., Riley, N., Domizi, D., & Camus, M. (2012). The ‘Facebook’ effect: College students’ perceptions of online discussions in the age of social networking. International Journal for the Scholarship of Teaching and Learning. https://doi.org/10.20429/ijsotl.2012.060210

IBM Corp. (2019). IBM SPSS Statistics for Mac (Version 26.0) [Computer Software]. https://www.ibm.com/products/spss-statistics

Jensen, L. X., Bearman, M., & Boud, D. (2021). Understanding feedback in online learning—A critical review and metaphor analysis. Computers & Education, 173, 104271. https://doi.org/10.1016/j.compedu.2021.104271

Joseph, J., Varghese, A., Vr, V., Dhandapani, M., Grover, S., Sharma, S., Khakha, D., Mann, S., & Varkey, B. P. (2021). Prevalence of internet addiction among college students in the Indian setting: A systematic review and meta-analysis. General Psychiatry, 34(4), e100496. https://doi.org/10.1136/gpsych-2021-100496

Junco, R. (2012). The relationship between frequency of Facebook use, participation in Facebook activities, and student engagement. Computers & Education, 58(1), 162–171. https://doi.org/10.1016/j.compedu.2011.08.004

Kalelioğlu, F. (2017). Using Facebook as a learning management system: Experiences of pre-service teachers. Informatics in Education, 16(1), 83–101. https://doi.org/10.15388/infedu.2017.05

Kellems, R. O., & Morningstar, M. E. (2012). Using video modeling delivered through iPods to teach vocational tasks to young adults with autism spectrum disorders. Career Development and Transition for Exceptional Individuals, 35(3), 155–167. https://doi.org/10.1177/0885728812443082

Kellems, R. O., Mourra, K., Morgan, R. L., Riesen, T., Glasgow, M., & Huddleston, R. (2016). Video modeling and prompting in practice. Career Development and Transition for Exceptional Individuals, 39(3), 185–190. https://doi.org/10.1177/2165143416651718

Kitsantas, A., Robert, A. R., & Doster, J. (2004). Developing self-regulated learners: Goal setting, self-evaluation, and organizational signals during acquisition of procedural skills. The Journal of Experimental Education, 72(4), 269–287. https://doi.org/10.3200/JEXE.72.4.269-287

Ledford, J. R., & Gast, D. L. (2018). Single case research methodology: Applications in special education and behavioral sciences (3rd ed.). Routledge: New York.

Lindström, B., Bellander, M., Schultner, D. T., Chang, A., Tobler, P. N., & Amodio, D. M. (2021). A computational reward learning account of social media engagement. Nature Communications, 12, 1311. https://doi.org/10.1038/s41467-020-19607-x

Little, S. G., Akin-Little, A., & O’Neill, K. (2014). Group contingency interventions with children—1980–2010. Behavior Modification, 39(2), 322–341. https://doi.org/10.1177/0145445514554393

Madge, C., Meek, J., Wellens, J., & Hooley, T. (2009). Facebook, social integration and informal learning at university: “It is more for socializing and talking to friends about work than for actually doing work.” Learning, Media and Technology, 34(2), 141–155. https://doi.org/10.1080/17439880902923606

Malott, R. W. (2012). I’ll stop procrastinating when I get around to it. Behaviordelia.

Mao, J. (2014). Social media for learning: A mixed methods study of high school students’ technology affordances and perspectives. Computers in Human Behavior, 33, 213–223. https://doi.org/10.1016/j.chb.2014.01.002

Marso, D., & Shadish, W. R. (2015). Software for Meta-analysis of Single-Case Design DHPS (Version March 7, 2015) [Computer Software]. https://faculty.ucmerced.edu/wshadish/software/software-meta-analysis-single-case-design/dhps-version-march-7-2015

Mazman, S. G., & Usluel, Y. K. (2010). Modeling educational usage of Facebook. Computers & Education, 55(2), 444–453. https://doi.org/10.1016/j.compedu.2010.02.008

Mendez, J. P., Le, K., & De la Cruz, J. (2014). Integrating Facebook in the classroom: Pedagogical dilemmas. Journal of Instructional Pedagogies, 13, 10.

Michikyan, M., Subrahmanyam, K., & Dennis, J. (2015). Facebook use and academic performance among college students: A mixed-methods study with a multi-ethnic sample. Computers in Human Behavior, 45, 265–272. https://doi.org/10.1016/j.chb.2014.12.033

Park, J., Bouck, E., & Duenas, A. (2019). The effect of video modeling and video prompting interventions on individuals with intellectual disability. Journal of Special Education Technology, 34(1), 3–16. https://doi.org/10.1177/0162643418780464

Parsons, M. B., Rollyson, J. H., & Reid, D. H. (2013). Teaching practitioners to conduct behavioral skills training: A pyramidal approach for training multiple human service staff. Behavior Analysis in Practice, 6, 4–16. https://doi.org/10.1007/BF03391798

Pempek, T. A., Yermolayeva, Y. A., & Calvert, S. L. (2009). College students’ social networking experiences on Facebook. Journal of Applied Developmental Psychology, 30(3), 227–238. https://doi.org/10.1016/j.appdev.2008.12.010

Rosenthal-von der Pütten, A. M., Hastall, M., Köcher, S., Meske, C., Heinrich, T., Labrenz, F., & Ocklenburg, S. (2019). “Likes” as social rewards: Their role in online social comparison and decisions to like other people’s selfies. Computers in Human Behavior, 92, 76–86.

Sawilowsky, S. (2009). New effect size rules of thumb. Journal of Modern Applied Statistical Methods, 8, 467–474. https://doi.org/10.22237/jmasm/1257035100

Schulz, K. F., & Grimes, D. A. (2002). Unequal group sizes in randomized trials: Guarding against guessing. Lancet, 359(9310), 966–970. https://doi.org/10.1016/S0140-6736(02)08029-7

Schworm, S., & Gruber, H. (2012). E-Learning in universities: Supporting help-seeking processes by instructional prompts. British Journal of Educational Technology, 43(2), 272–281. https://doi.org/10.1111/j.1467-8535.2011.01176.x

Selwyn, N. (2009). Faceworking: Exploring students’ education-related use of Facebook. Learning, Media and Technology, 34(2), 157–174. https://doi.org/10.1080/17439880902923622

Sherer, M., Pierce, K. L., Paredes, S., Kisacky, K. L., Ingersoll, B., & Schreibman, L. (2001). Enhancing conversation skills in children with autism via video technology: Which is better, “self” or “other” as a model? Behavior Modification, 25(1), 140–158. https://doi.org/10.1177/0145445501251008

Smith, J. D. (2012). Single-case experimental designs: A systematic review of published research and current standards. Psychological Methods, 17(4), 510–550. https://doi.org/10.1037/a0029312

Statista. (2023). Number of internet and social media users worldwide as of April 2023. https://www.statista.com/statistics/617136/digital-population-worldwide/

Steger, D., Schroeders, U., & Gnambs, T. (2020). A meta-analysis of test scores in proctored and unproctored ability assessments. European Journal of Psychological Assessment, 36(1), 174–184. https://doi.org/10.1027/1015-5759/a000494

Susanto, H., Yie, L. F., Mohiddin, F., Setiawan, A. A. R., Haghi, P. K., & Setiana, D. (2021). Revealing social media phenomenon in time of COVID-19 pandemic for boosting start-up businesses through digital ecosystem. Applied System Innovation, 4(1), 1–21. https://doi.org/10.3390/asi4010006

Svenningsen, L., & Pear, J. J. (2011). Effects of computer-aided personalized system of instruction in developing knowledge and critical thinking in blended learning courses. The Behavior Analyst Today, 12(1), 34–40. https://doi.org/10.1037/h0100709

Tang, Y., & Hew, K. F. (2017). Is mobile instant messaging (MIM) useful in education? Examining its technological, pedagogical, and social affordances. Educational Research Review, 21, 85–104. https://doi.org/10.1016/j.edurev.2017.05.001

Tarifa-Rodriguez, A., Virues-Ortega, J., Perez-Bustamante Pereira, A., Calero-Elvira, A., & Cowie, S. (2023). Quantitative indices of student social media engagement in tertiary education: A systematic review and a taxonomy. Journal of Behavioral Education. https://doi.org/10.1007/s10864-023-09516-6

UNESCO. (2020). Impact of COVID-19 on education. https://en.unesco.org/covid19/educationresponse

van Ryzin, M. J., Roseth, C. J., & Biglan, A. (2020). Mediators of effects of cooperative learning on prosocial behavior in middle school. International Journal of Applied Positive Psychology, 5(1–2), 37–52. https://doi.org/10.1007/s41042-020-00026-8

Virues-Ortega, J., Moeyaert, M., Sivaraman, M., Rodriguez, T., & Castilla, F. B. (2023). Quantifying outcomes in applied behavior analysis through visual and statistical analyses: A synthesis. In J. L. Matson (Ed.), Handbook of Applied Behavior Analysis (pp. 515–537). Berlin: Springer. https://doi.org/10.1007/978-3-031-19964-6_28

Wang, Q., Woo, H. L., Quek, C. L., Yang, Y., & Liu, M. (2011). Using the Facebook group as a learning management system: An exploratory study. British Journal of Educational Technology, 43(3), 428–438. https://doi.org/10.1111/j.1467-8535.2011.01195.x

Ward-Horner, J., & Sturmey, P. (2010). Component analyses using single-subject experimental designs: A review. Journal of Applied Behavior Analysis, 43(4), 685–704. https://doi.org/10.1901/jaba.2010.43-685

Yu, F. Y., & Chen, C. Y. (2021). Student- versus teacher-generated explanations for answers to online multiple-choice questions: What are the differences? Computers & Education, 173, 104273. https://doi.org/10.1016/j.compedu.2021.104273

Yuan, C. H., & Wu, Y. J. (2020). Mobile instant messaging or face-to-face? Group interactions in cooperative simulations. Computers in Human Behavior, 113, 106508. https://doi.org/10.1016/j.chb.2020.106508

Zheng, L., Huang, R., Hwang, G.-J., & Yang, K. (2015). Measuring knowledge elaboration based on a computer-assisted knowledge map analytical approach to collaborative learning. Educational Technology & Society, 18(1), 321–336.

Acknowledgements

This study was conducted in partial fulfillment of the requirements of the degree of Doctor in Psychology of Ms. Aida Tarifa-Rodriguez at the Universidad Autónoma de Madrid (Spain). We thank Agustín Perez-Bustamante and Evan Hunerberg for their assistance in data coding and analysis. The authors declare no conflict of interest.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This project received support from a research contract between ABA España (Madrid, Spain) and The University of Auckland (Auckland, New Zealand) (Project No. CON02739). The first author received a one-year research contract funded through a Ramón y Cajal Fellowship (Ministerio de Ciencia e Innovación, Spain) awarded to the second author (Reference No. RYC-2016-20706).

Ethics declarations

Conflict of Interest

The authors declare no conflict of interest.

Ethics Approval

The study methods were approved by the ethics committee of the Universidad Autónoma de Madrid (Approval Number CEI 112-2204).

Supplementary Information

Electronic supplementary material is available in the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tarifa-Rodriguez, A., Virues-Ortega, J. & Calero-Elvira, A. The Behavioral Education in Social Media (BE-Social) Program for Postgraduate Academic Achievement: A Randomized Controlled Trial. J Behav Educ (2024). https://doi.org/10.1007/s10864-024-09545-9

DOI: https://doi.org/10.1007/s10864-024-09545-9