Abstract

In this work, we propose a new primal–dual algorithm with adaptive step sizes. The stochastic primal–dual hybrid gradient (SPDHG) algorithm with constant step sizes has become widely applied in large-scale convex optimization across many scientific fields due to its scalability. While the product of the primal and dual step sizes is subject to an upper-bound in order to ensure convergence, the selection of the ratio of the step sizes is critical in applications. Up-to-now there is no systematic and successful way of selecting the primal and dual step sizes for SPDHG. In this work, we propose a general class of adaptive SPDHG (A-SPDHG) algorithms and prove their convergence under weak assumptions. We also propose concrete parameters-updating strategies which satisfy the assumptions of our theory and thereby lead to convergent algorithms. Numerical examples on computed tomography demonstrate the effectiveness of the proposed schemes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The stochastic primal–dual hybrid gradient (SPDHG) algorithm introduced in [8] is a stochastic version of the primal–dual hybrid gradient (PDHG) algorithm, also known as Chambolle–Pock algorithm [9]. SPDHG has proved more efficient than PDHG for a variety of problems in the framework of large-scale non-smooth convex inverse problems [13, 22, 24, 27]. Indeed, SPDHG only uses a subset of the data at each iteration, hence reducing the computational cost of evaluating the forward operator and its adjoint; as a result, for the same computational burden, SPDHG attains convergence faster than PDHG. This is especially relevant in the context of medical imaging, where there is a need for algorithms whose convergence speed is compatible with clinical standards, and at the same time able to deal with convex, non-smooth priors like total variation (TV), which are well-suited to ill-posed imaging inverse problems, but preclude the recourse to scalable gradient-based methods.

Like PDHG, SPDHG is provably convergent under the assumption that the product of its primal and dual step sizes is bounded by a constant depending on the problem to solve. On the other hand, the ratio between the primal and dual step sizes is a free parameter, whose value needs to be chosen by the user. The value of this parameter, which can be interpreted as a control on balance between primal and dual convergence, can have a severe impact on the convergence speed of PDHG, and the same also holds true for SPDHG [12]. This leads to an important challenge in practice, as there is no known theoretical or empirical rule to guide the choice of the parameter. Manual tuning is computationally expensive, as it would require running and comparing the algorithm on a range of values, and there is no guarantee that a value leading to fast convergence for one dataset would keep being a good choice for another dataset. For PDHG, [14] have proposed an online primal–dual balancing strategy to solve the issue, where the values of the step sizes evolve along the iterations. More generally, adaptive step sizes have been used for PDHG with backtracking in [14, 20], adapting to local smoothness in [25], and are widely used for a variety of other algorithms, namely gradient methods in [19], subgradient methods in [3] and splitting methods in [4,5,6,7, 18] to improve convergence speed and bypass the need for explicit model constants, like Lipschitz constants or operator norms. For SPDHG, an empirical adaptive scheme has been used for Magnetic Particle Imaging but without convergence proof [27].

On the theoretical side, a standard procedure to prove the convergence of proximal-based algorithms for convex optimization is to use the notion of Féjer monotonicity [2]. Constant step sizes lead to a fixed metric setting, while adaptive step sizes lead to a variable metric setting. Work [11] states the convergence of deterministic Féjer-monotone sequences in the variable metric setting, while work [10] is concerned by the convergence of random Féjer-monotone sequences in the fixed metric setting.

In this work, we introduce and study an adaptive version of SPDHG. More precisely:

-

We introduce a broad class of strategies to adaptively choose the step sizes of SPDHG. This class includes, but is not limited to, the adaptive primal–dual balancing strategy, where the ratio of the step sizes, which controls the balance between convergence of the primal and dual variable, is tuned online.

-

We prove the almost-sure convergence of SPDHG under the schemes of the class. In order to do that, we introduce the concept of C-stability, which generalizes the notion of Féjer monotonicity, and we prove the convergence of random C-stable sequences in a variable metric setting, hence generalizing results from [11] and [10]. We then show that our proposed algorithm falls within this novel theoretical framework by following similar strategies than in the almost-sure convergence proofs of [1, 16].

-

We compare the performance of SPDHG for various adaptive schemes and the known fixed step-size scheme on large-scale imaging inverse tasks (sparse-view CT, limited-angle CT, low-dose CT). We observe that the primal–dual balancing adaptive strategy is always as fast or faster than all the other strategies. In particular, it consistently leads to substantial gains in convergence speed over the fixed strategy if the fixed step sizes, while in the theoretical convergence range, are badly chosen. This is especially relevant as it is impossible to know whether the fixed step sizes are well or badly chosen without running expensive comparative tests. Even in the cases where the SPDHG’s fixed step sizes are well tuned, meaning that they are in the range to which the adaptive step sizes are observed to converge, we observe that our adaptive scheme still provides convergence acceleration over the standard SPDHG after a certain number of iterations. Finally, we pay special attention to the hyperparameters used in the adaptive schemes. These hyperparameters are essentially controlling the degree of adaptivity for the algorithm and each of them has a clear interpretation and is easy to choose in practice. We observe in our extensive numerical tests that the convergence speed of our adaptive scheme is robust to the choices of these parameters within the empirical range we provide, hence can be applied directly to the problem at hand without fine-tuning, and solves the step-size choice challenge encountered by the user.

The rest of the paper is organized as follows. In Sect. 2, we introduce SPDHG with adaptive step sizes, state the convergence theorem, and carry the proof. In Sect. 3, we propose concrete schemes to implement the adaptiveness, followed by numerical tests on CT data in Sect. 4. We conclude in Sect. 5. Finally, Sect. 6 collects some useful lemmas and proofs.

2 Theory

2.1 Convergence Theorem

The variational problem to solve takes the form:

where X and \((Y_i)_{i \in \left\{ 1,\dots , n\right\} }\) are Hilbert spaces, \(A_i:X\rightarrow Y_i\) are bounded linear operators, and \(f_i:Y_i\rightarrow {\mathbb {R}}\cup \left\{ +\infty \right\} \) and \(g:X\rightarrow {\mathbb {R}}\cup \left\{ +\infty \right\} \) are convex functions. We define \(Y=Y_1\times \dots \times Y_n\) with elements \(y=(y_1,\dots ,y_n)\) and \(A:X\rightarrow Y\) such that \(Ax = (A_1 x,\dots ,A_n x)\). The associated saddle-point problem reads as

where \(f_i^*\) stands for the Fenchel conjugate of \(f_i\). The set of solution to (2.1) is denoted by \({\mathcal {C}}\), and the set of nonnegative integers by \({\mathbb {N}}\) and \(\llbracket 1,n \rrbracket \) stands for \(\left\{ 1,\dots , n\right\} \). Elements \((x^*,y^*)\) of \({\mathcal {C}}\) are called saddle points and characterized by

In order to solve the saddle-point problem, we introduce the adaptive stochastic primal–dual hybrid gradient (A-SPDHG) algorithm in Algorithm 2.1. At each iteration \(k\in {\mathbb {N}}\), A-SPDHG involves the following five steps:

-

update the primal step size \(\tau ^k\) and the dual step sizes \((\sigma _i^{k})_{i \in \llbracket 1,n \rrbracket }\) (line 4);

-

update the primal variable \(x^k\) by a proximal step with step size \(\tau ^{k+1}\) (line 5);

-

randomly choose an index i with probability \(p_i\) (line 6);

-

update the dual variable \(y_i^k\) by a proximal step with step size \(\sigma _i^{k+1}\) (line 7);

-

compute the extrapolated dual variable (line 8).

A-SPDHG is adaptive in the sense that the step-size values are updated at each iteration according to an update rule which takes into account the value of the primal and dual iterates \(x^l\) and \(y^l\) up to the current iteration. As the iterates are stochastic, the step sizes are themselves stochastic, which must be carefully accounted for in the theory.

Before turning to the convergence of A-SPDHG, let us recall some facts about the state-of-the-art SPDHG. Each iteration of SPDHG involves the selection of a random subset of \(\llbracket 1,n \rrbracket \). In the serial sampling case where the random subset is a singleton, SPDHG algorithm [8] is a special case of Algorithm 2.1 with the update rule

Under the condition

SPDHG iterates converge almost surely to a solution of the saddle-point problem (2.1) [1, 16].

Let us now turn to the convergence of A-SPDHG. The main theorem, Theorem 2.1, gives conditions on the update rule under which A-SPDHG is provably convergent. Plainly speaking, these conditions are threefold:

-

(i)

the step sizes for step \(k+1\), \((\sigma _i^{k+1})_{i \in \llbracket 1,n \rrbracket }\) and \(\tau ^{k+1}\), depend only on the iterates up to step k,

-

(ii)

the step sizes satisfy a uniform version of condition (2.3),

-

(iii)

the step-size sequences \((\tau ^k)_{k \ge 0}\) and \((\sigma _i^k)_{k \ge 0}\) for \(i \in \llbracket 1,n \rrbracket \) do not decrease too fast. More precisely, they are uniformly almost surely quasi-increasing in the sense defined below.

In order to state the theorem rigorously, let us introduce some useful notation and definitions. For all \(k\in {\mathbb {N}}\), the \(\sigma \)-algebra generated by the iterates up to point k, \({\mathcal {F}}\left( (x^l,y^l), l \in \llbracket 0,k \rrbracket \right) \), is denoted by \({\mathcal {F}}^k\). We say that a sequence \((u^k)_{k\in {\mathbb {N}}}\) is \(\left( {\mathcal {F}}^k\right) _{k\in {\mathbb {N}}}\)-adapted if for all \(k\in {\mathbb {N}}\), \(u^k\) is measurable with respect to \({\mathcal {F}}^k\).

A positive real sequence \((u^k)_{k \in {\mathbb {N}}}\) is said to be quasi-increasing if there exists a sequence \((\eta ^k)_{k \in {\mathbb {N}}}\) with values in [0, 1), called the control on \((u^k)_{k \in {\mathbb {N}}}\), such that \(\sum _{k=1}^\infty \eta ^k < \infty \) and:

By extension, we call a random positive real sequence \((u^k)_{k \in {\mathbb {N}}}\) uniformly almost surely quasi-increasing if there exists a deterministic sequence \((\eta ^k)_{k \in {\mathbb {N}}}\) with values in [0, 1) such that \(\sum _{k=1}^\infty {\eta ^k} < \infty \) and equation (2.4) above holds almost surely (a.s.).

Theorem 2.1

(Convergence of A-SPDHG) Let X and Y be separable Hilbert spaces, \(A_i:X\rightarrow Y_i\) bounded linear operators, \(f_i:Y_i\rightarrow {\mathbb {R}}\cup \left\{ +\infty \right\} \) and \(g:X\rightarrow {\mathbb {R}}\cup \left\{ +\infty \right\} \) proper, convex and lower semi-continuous functions for all \(i \in \llbracket 1,n \rrbracket \). Assume that the set of saddle points \({\mathcal {C}}\) is non-empty and the sampling is proper, that is to say \(p_i>0\) for all \(i\in \llbracket 1,n \rrbracket \). If the following conditions are met:

-

(i)

the step-size sequences \((\tau ^{k+1})_{k \in {\mathbb {N}}}, (\sigma _i^{k+1})_{k \in {\mathbb {N}}},\,i \in \llbracket 1,n \rrbracket \) are \(\left( {\mathcal {F}}^k\right) _{k \in {\mathbb {N}}}\)-adapted,

-

(ii)

there exists \(\beta \in (0,1)\) such that for all indices \(i \in \llbracket 1,n \rrbracket \) and iterates \(k \in {\mathbb {N}}\),

$$\begin{aligned} \tau ^k \sigma _i^k \frac{\Vert A_i\Vert ^2}{p_i} \le \beta < 1, \end{aligned}$$(2.5) -

(iii)

the initial step sizes \(\tau ^0\) and \(\sigma _i^{0}\) for all indices \(i \in \llbracket 1,n \rrbracket \) are positive and the step-size sequences \((\tau ^{k})_{k \in {\mathbb {N}}}\) and \((\sigma _i^{k})_{k \in {\mathbb {N}}}\) for all indices \(i \in \llbracket 1,n \rrbracket \) are uniformly almost surely quasi-increasing,

then the sequence of iterates \((x^k,y^k)_{k \in {\mathbb {N}}}\) converges almost surely to an element of \({\mathcal {C}}\).

While the conditions (i)–(iii) are general enough to cover a large range of step-size update rules, we will focus in practice on the primal–dual balancing strategy, which consists in scaling the primal and the dual step sizes by an inverse factor at each iteration. In that case, the update rule depends on a random positive sequence \((\gamma ^k)_{k\in {\mathbb {N}}}\) and reads as:

Lemma 2.2

(Primal–dual balancing) Let the step-size sequences satisfy equation (2.6) and assume in addition that \((\gamma ^k)_{k\in {\mathbb {N}}}\) is \(\left( {\mathcal {F}}^k\right) _{k \in {\mathbb {N}}}\)-adapted that the initial step sizes satisfy

and are positive, that there exists a deterministic sequence \((\epsilon ^k)_{k\in {\mathbb {N}}}\) with values in [0, 1) such that \(\sum \epsilon ^k < \infty \) and for all \(k\in {\mathbb {N}}\) and \(i \in \llbracket 1,n \rrbracket \),

Then, the step-size sequences satisfy assumptions (i)–(iii) of Theorem 2.1.

Lemma 2.2 is proved in Sect. 6.

Connection with the literature:

-

The primal–dual balancing strategy has been introduced in [14] for PDHG and indeed for \(n=1\) we recover with Lemma 2.2 the non-backtracking algorithm presented in [14]. As a consequence, our theorem also implies the pointwise convergence of this algorithm, whose convergence was established in the sense of vanishing residuals in [14].

-

Still for PDHG, [20] proposes without proof an update rule where the ratio of the step sizes is either quasi-non-increasing or quasi-non-decreasing. This requirement is similar to but not directly connected with ours, where we ask the step sizes themselves to be quasi-non-increasing.

-

For SPDHG, the angular constraint step-size rule proposed without convergence proof in [27] satisfies assumptions (i)–(iii).

Outline of the proof: Theorem 2.1 is proved in the following subsections. We first define in Sect. 2.2 metrics related to the algorithm step sizes on the primal–dual product space. As the step sizes are adaptive, we obtain a sequence of metrics. The proof of Theorem 2.1 is then similar in strategy to those of [1] and [16] but requires novel elements to deal with the metrics variability. In Theorem 2.5, we state convergence conditions for an abstract random sequence in a Hilbert space equipped with random variable metrics. In Sects. 2.4 and 2.5, we show that A-SPDHG falls within the scope of Theorem 2.5. We collect all elements and conclude the proof in Sect. 2.6.

2.2 Variable Metrics

For a Hilbert space H, we call \({\mathbb {S}}(H)\) the set of bounded self-adjoint linear operators from H to H, and for all \(M \in {\mathbb {S}}(H)\) we introduce the notation:

By an abuse of notation, we write \(\Vert \cdot \Vert _\alpha ^2 =\Vert \cdot \Vert _{\alpha \text {Id}}^2\) for a scalar \(\alpha \in \mathbb R\). Notice that \(\Vert \cdot \Vert _M\) is a norm on H if M is positive definite. Furthermore, we introduce the partial order \(\preccurlyeq \) on \({\mathbb {S}}(H)\) such that for \(M,\,N \in {\mathbb {S}}(H)\),

We call \({\mathbb {S}}_\alpha (H)\) the subset of \({\mathbb {S}}(H)\) comprised of M such that \(\alpha \text {Id} \preccurlyeq M\). Furthermore, a random sequence \((M^k)_{k \in {\mathbb {N}}}\) in \({\mathbb {S}}(H)\) is said to be uniformly almost surely quasi-decreasing if there exists a deterministic nonnegative sequence \((\eta ^k)_{k \in {\mathbb {N}}}\) such that \(\sum _{k=1}^\infty {\eta ^k} < \infty \) and a.s.

Coming back to A-SPDHG, let us define for every iteration \(k \in {\mathbb {N}}\) and every index \(i \in \llbracket 1,n \rrbracket \) two block operators of \({\mathbb {S}}(X\times Y_i)\) as:

and a block operator of \({\mathbb {S}}(X\times Y)\) as:

The following lemma translates assumptions (i)–(iii) of Theorem 2.1 on properties on the variable metric sequences.

Lemma 2.3

(Variable metric properties)

-

(a)

Assumption (i) of Theorem 2.1 implies that \((M_i^{k+1})_{k \in {\mathbb {N}}}\), \((N_i^{k+1})_{k \in {\mathbb {N}}},\,i \in \llbracket 1,n \rrbracket \) and \((N^{k+1})_{k \in {\mathbb {N}}}\) are \(\left( {\mathcal {F}}^k\right) _{k \in {\mathbb {N}}}\)-adapted.

-

(b)

Assumption (ii) of Theorem 2.1 is equivalent to the existence of \(\beta \in (0,1)\) such that for all indices \(i \in \llbracket 1,n \rrbracket \) and iterates \(k \in {\mathbb {N}}\),

$$\begin{aligned} (1-{\sqrt{\beta }}) N_i^k \preccurlyeq M_i^k. \end{aligned}$$ -

(c)

Assumptions (ii) and (iii) of Theorem 2.1 imply that \((M_i^k)_{k \in {\mathbb {N}}}, (N_i^k)_{k \in {\mathbb {N}}},\,i \in \llbracket 1,n \rrbracket \) and \((N^k)_{k \in {\mathbb {N}}}\) are uniformly a.s. quasi-decreasing.

-

(d)

Assumption (ii) and (iii) of Theorem 2.1 imply that the sequences \((\tau ^{k})_{k \in {\mathbb {N}}}\) and \((\sigma _i^{k})_{k \in {\mathbb {N}}}\) for all \(i \in \llbracket 1,n \rrbracket \) are a.s. bounded from above and by below by positive constants. In particular, this implies that there exists \(\alpha >0\) such that \(N_i^k \in {\mathbb {S}}_\alpha (X\times Y_i)\) for all \(i \in \llbracket 1,n \rrbracket \) and \(k \in {\mathbb {N}}\), or equivalently that \(N^k \in {\mathbb {S}}_\alpha (X \times Y)\) for all \(k \in {\mathbb {N}}\).

Remark 2.4

(Step-size induced metrics on the primal–dual product space) The lemma implies that \(M_i^k\), \(N_i^k\) and \(N^k\) are positive definite and hence induce a metric on the corresponding spaces. If \(n=1\) and for constant step sizes, \(M_i^k\) corresponds to the metric used in [17], where PDHG is reformulated as a proximal-point algorithm for a non-trivial metric on the primal–dual product space.

Proof of Lemma 2.3

Assertion (a) of the lemma follows from the fact that for all iterate \(k \in {\mathbb {N}}\), the operators \(M_i^{k+1}\), \(N_i^{k+1}\) and \(N^{k+1}\) are in the \(\sigma \)-algebra generated by \(\left\{ \tau ^{k+1},\, \sigma _i^{k+1}, \, i \in \llbracket 1,n \rrbracket \right\} \). Assertion (b) follows from equation (6.2) of Lemma 6.1 to be found in the complementary material. The proof of assertion (c) is a bit more involved. Let us assume that assumption (iii) of Theorem 2.1 holds and let \((\eta _0^k)_{k \in {\mathbb {N}}}\) and \((\eta _i^k)_{k \in {\mathbb {N}}}\) be the controls of \((\tau ^k)_{k \in {\mathbb {N}}}\) and \((\sigma _i^k)_{k \in {\mathbb {N}}}\) for \(i \in \llbracket 1,n \rrbracket \), respectively. We define the sequence \((\eta ^k)_{k \in {\mathbb {N}}}\) by:

which is a common control on \((\tau ^k)_{k \in {\mathbb {N}}}\) and \((\sigma _i^k)_{k \in {\mathbb {N}}}\) for \(i \in \llbracket 1,n \rrbracket \) as the maximum of a finite number of controls. Let us fix \(k \in {\mathbb {N}}\) and \(i \in \llbracket 1,n \rrbracket \). Because the intersection of a finite number of measurable events of probability one is again a measurable event of probability one, it holds almost surely that for all \((x,y_i)\in X \times Y_i\),

Hence, the sequence \((N_i^k)_{k \in {\mathbb {N}}}\) is uniformly quasi-decreasing with control \(\left( \eta ^k(1-\eta ^k)^{-1}\right) _{k \in {\mathbb {N}}}\), which is indeed a positive sequence with bounded sum. (To see that \(\left( \eta ^k(1-\eta ^k)^{-1}\right) _{k \in {\mathbb {N}}}\) has a bounded sum, consider that \(\left( \eta ^k\right) _{k \in {\mathbb {N}}}\) is summable, hence converges to 0, hence is smaller than 1/2 for all integers k bigger than a certain K; in turn, for all integers k bigger than K, the term \(\eta ^k(1-\eta ^k)^{-1}\) is bounded from below by 0 and from above by \(2\eta ^k\), hence is summable.) One can see by a similar proof that \((N^k)_{k \in {\mathbb {N}}}\) is uniformly quasi-decreasing with the same control. To follow with the case of \((M_i^k)_{k \in {\mathbb {N}}}\), we have, as before:

thanks to (b).

Let us conclude with the proof of assertion (d). By assumption (iii), the sequences \((\tau ^k)_{k \in {\mathbb {N}}}\) and \((\sigma _i^k)_{k \in {\mathbb {N}}}\) are uniformly a.s. quasi-increasing. We define a common control \((\eta ^k)_{k \in {\mathbb {N}}}\) as in (2.9). Then, the sequences \((\tau ^k)_{k \in {\mathbb {N}}}\) and \((\sigma _i^k)_{k \in {\mathbb {N}}}\) are a.s. bounded from below by the same deterministic constant \(C=\min \left\{ \tau ^0, \,\sigma _i^0,\, i \in \llbracket 1,n \rrbracket \right\} \prod _{j={0}}^\infty (1-\eta ^j)\) which is positive as the initial step sizes are positive and \((\eta ^k)_{k \in {\mathbb {N}}}\) takes values in [0, 1) and has finite sum. Furthermore, by assumption (ii), the product of the sequences \((\tau ^k)_{k \in {\mathbb {N}}}\) and \((\sigma _i^k)_{k \in {\mathbb {N}}}\) is almost surely bounded from above. As a consequence, each sequence \((\tau ^k)_{k \in {\mathbb {N}}}\) and \((\sigma _i^k)_{k \in {\mathbb {N}}}\) is a.s. bounded from above. The equivalence with \(N_i^k \in {\mathbb {S}}_\alpha (X\times Y_i)\) for all \(i \in \llbracket 1,n \rrbracket \), and with \(N^k \in {\mathbb {S}}_\alpha (X\times Y)\), is straightforward. \(\square \)

2.3 Convergence of Random C-stable Sequences in Random Variable Metrics

Let H be a Hilbert space and \(C\subset H\) a subset of H. Let \(\left( \Omega , \sigma (\Omega ), {\mathbb {P}}\right) \) be a probability space. All random variables in the following are assumed to be defined on \(\Omega \) and measurable with respect to \(\sigma (\Omega )\) unless stated otherwise. Let \((Q^k)_{k \in {\mathbb {N}}}\) be a random sequence of \({\mathbb {S}}(H)\).

A random sequence \((u^k)_{k \in {\mathbb {N}}}\) with values in H is said to be stable with respect to the target C relative to \((Q^k)_{k \in {\mathbb {N}}}\) if for all \(u\in C\), the sequence \(\left( \Vert u^k - u\Vert _{Q^k}\right) _{k \in {\mathbb {N}}}\) converges almost surely. The following theorem then states sufficient conditions for the convergence of such sequences.

Theorem 2.5

(Convergence of C-stable sequences) Let H be a separable Hilbert space, C a closed non-empty subset of H, \((Q^k)_{k \in {\mathbb {N}}}\) a random sequence of \({\mathbb {S}}(H)\), and \((u^k)_{k \in {\mathbb {N}}}\) a random sequence of H. If the following conditions are met:

-

(i)

\((Q^k)_{k \in {\mathbb {N}}}\) takes values in \({\mathbb {S}}_\alpha (H)\) for a given \(\alpha >0\) and is uniformly a.s. quasi-decreasing,

-

(ii)

\((u^k)_{k \in {\mathbb {N}}}\) is stable with respect to the target C relative to \((Q^k)_{k \in {\mathbb {N}}}\),

-

(iii)

every weak sequential cluster point of \((u^k)_{k \in {\mathbb {N}}}\) is almost surely in C, meaning that there exists \(\Omega _{(iii)}\) a measurable subset of \(\Omega \) of probability one such that for all \(\omega \in \Omega \), every weak sequential cluster point of \((u^k(\omega ))_{k \in {\mathbb {N}}}\) is in C.

then \((u^k)_{k \in {\mathbb {N}}}\) converges almost surely weakly to a random variable in C.

Stability with respect to a target set C is implied by Féjer and quasi-Féjer monotonicity with respect to C, which have been studied either for random sequences [10] or in the framework of variable metrics [11], but to the best of our knowledge not both at the same time. The proof of Theorem 2.5 follows the same lines than [10, Proposition 2.3 (iii)] and uses two results from [11].

Proof

The set C is a subset of the separable Hilbert space H, hence is separable. As C is a closed and separable, there exists \(\left\{ c^n,\, n\in {\mathbb {N}}\right\} \) a countable subset of C whose closure is equal to C. Thanks to assumption (ii), there exists for all \(n\in {\mathbb {N}}\) a measurable subset \(\Omega _{(ii)}^n\) of \(\Omega \) with probability one such that the sequence \({(\Vert u^k(\omega ) - c^n\Vert _{Q^k(\omega )})_{k \in {\mathbb {N}}}}\) converges for all \(\omega \in \Omega _{(ii)}^n\). Furthermore, let \(\Omega _{(i)}\) be a measurable subset of \(\Omega \) of probability one corresponding to the almost-sure property for assumption (i). Let

As the intersection of a countable number of measurable subsets of probability one, \({\tilde{\Omega }}\) is itself a measurable set of \(\Omega \) with \({\mathbb {P}}({\tilde{\Omega }})=1\). Fix \(\omega \in {\tilde{\Omega }}\) for the rest of the proof.

The sequence \((Q^k(\omega ))_{k \in {\mathbb {N}}}\) takes values in \({\mathbb {S}}_\alpha (H)\) for \(\alpha >0\) and is quasi-decreasing with control \((\eta ^k(\omega ))_{k \in {\mathbb {N}}}\). Furthermore, for all \(k \in {\mathbb {N}}\),

where the product \(\prod _{j=0}^{\infty }\left( 1+\eta ^j\right) \) is finite because \((\eta ^k)_{k \in {\mathbb {N}}}\) is positive and summable. By [11, Lemma 2.3], \((Q^k(\omega ))_{k \in {\mathbb {N}}}\) converges pointwise strongly to some \(Q(\omega )\in {\mathbb {S}}_\alpha (H)\).

Furthermore, for all \(x\in C\), there exists a sequence \((x^n)_{n\in {\mathbb {N}}}\) with values in \(\left\{ c^n,\, n\in {\mathbb {N}}\right\} \) converging strongly to x. By assumption, for all \(n\in {\mathbb {N}}\), the sequence \((\Vert u^k(\omega ) - x^n\Vert _{Q^k(\omega )})_{k \in {\mathbb {N}}}\) converges to a limit which shall be called \(l^n(\omega )\). For all \(n\in {\mathbb {N}}\) and \(k \in {\mathbb {N}}\), we can write thanks to the triangular inequality:

By taking the limit \(k\rightarrow +\infty \), it follows that:

Taking now the limit \(n\rightarrow +\infty \) shows that the sequence \((\Vert u^k(\omega ) - x\Vert _{Q^k(\omega )})_{k \in {\mathbb {N}}}\) converges for all \(x\in C\). On the other hand, because \(\omega \in \Omega _{(iii)}\), the weak cluster points of \((u^k(\omega ))_{k \in {\mathbb {N}}}\) lie in C. Hence, by [11, Theorem 3.3], the sequence \((u^k(\omega ))_{k \in {\mathbb {N}}}\) converges almost surely to a point \(u(\omega )\in C\). \(\square \)

We are now equipped to prove Theorem 2.1. We show in Sects. 2.4 and 2.5 that A-SPDHG satisfies points (ii) and (iii) of Theorem 2.5, respectively, and conclude the proof in Sect. 2.6. Interestingly, the proofs of point (ii) and of point (iii) rely on two different ways of apprehending A-SPDHG. Point (ii) relies on a convex optimization argument: By taking advantage of the measurability of the primal variable at step \(k+1\) with respect to \({\mathcal {F}}^k\), one can write a contraction-type inequality relating the conditional expectation of the iterates’ norm at step \(k+1\) to the iterates’ norm at step k. Point (iii) relies on monotone operator theory: We use the fact that the update from the half-shifted iterations \((y^k,x^{k+1})\) to \((y^{k+1},x^{k+2})\) can be interpreted as a step of a proximal-point algorithm on \(X\times Y_i\) conditionally to i being the index randomly selected at step k.

2.4 A-SPDHG is Stable with Respect to the Set of Saddle Points

In this section, we show that \((x^k,y^k)_{k \in {\mathbb {N}}}\) is stable with respect to \({\mathcal {C}}\) relative to the variable metrics sequence \((N^k)_{k \in {\mathbb {N}}}\) defined in equation (2.8) above. We introduce the operators \(P\in {\mathbb {S}}(Y)\) and \(\Sigma ^k\in {\mathbb {S}}(Y)\) defined, respectively, by

and the functionals \((U^k)_{k \in {\mathbb {N}}},\, (V^k)_{k \in {\mathbb {N}}}\) defined for all \((x,y)\in X\times Y\) as:

We begin by recalling the cornerstone inequality satisfied by the iterates of SPDHG stated first in [8] and reformulated in [1].

Lemma 2.6

([1], Lemma 4.1) For every saddle-point \((x^*,y^*)\), it a.s. stands that for all \(k \in {\mathbb {N}}{\setminus }\left\{ 0\right\} \),

The second step is to relate the assumptions of Theorem 2.1 to properties of the functionals appearing in (2.10). Let us introduce \(Y_{\text {sparse}} \subset Y\) the set of elements \((y_1, \dots , y_n)\) having at most one non-vanishing component.

Lemma 2.7

(Properties of functionals of interest) Under the assumptions of Theorem 2.1, there exists a nonnegative, summable sequence \((\eta ^k)_{k \in {\mathbb {N}}}\) such that a.s. for every iterate \(k \in {\mathbb {N}}\) and \(x\in X,\,y\in Y,\, z\in Y_{\text {sparse}}\):

Proof

Let \((\eta _i^k)_{k \in {\mathbb {N}}}\) and \(({\tilde{\eta }}_i^k)_{k \in {\mathbb {N}}}\) be the controls of \((M_i^k)_{k \in {\mathbb {N}}}\) and \((N_i^k)_{k \in {\mathbb {N}}}\), respectively, for all \(i \in \llbracket 1,n \rrbracket \). We define the common control \((\eta ^k)_{k \in {\mathbb {N}}}\) by:

For all \(y\in Y\), we can write

which proves (2.11a). Let us now fix \(x\in X\), \(z\in Y_{\text {sparse}}\) and \(k \in {\mathbb {N}}\). By definition, there exists \(i \in \llbracket 1,n \rrbracket \) such that \(z_j=0\) for all \(j\ne i\). We obtain the inequalities (2.11b)–(2.11d) by writing:

Finally, we obtain inequality (2.11e) by writing:

where the last inequality is a consequence of (2.5). \(\square \)

Lemma 2.8

(A-SPDHG is \({\mathcal {C}}\)-stable) Under the assumptions of Theorem 2.1,

-

(i)

The sequence \((x^k,y^k)_{k \in {\mathbb {N}}}\) of Algorithm 2.1 is stable with respect to \({\mathcal {C}}\) relative to \((N^k)_{k \in {\mathbb {N}}}\),

-

(ii)

the following results hold:

$$\begin{aligned}&{\mathbb {E}}\left[ \sum _{k=1}^\infty \left\| (x^{k+1}-x^k,y^{k}-y^{k-1})\right\| ^2 \right] \\&\quad < \infty \quad \text { and a.s.}\quad \left\| x^{k+1}-x^k \right\| \rightarrow 0. \end{aligned}$$

Proof

Let us begin with the proof of point (i). By definition of A-SPDHG with serial sampling, the difference between two consecutive dual iterates is almost surely sparse:

Let us define the sequences

which are a.s. nonnegative thanks to (2.11c) and (2.11d). Notice that the primal iterates \(x^l\) from \(l=0\) up to \(l=k+1\) are measurable with respect to \({\mathcal {F}}^k\), whereas the dual iterates \(y^l\) from \(l=0\) up to \(l=k\) are measurable with respect to \({\mathcal {F}}^k\). Hence, \(a^k\) and \(b^k\) are measurable with respect to \({\mathcal {F}}^k\). Furthermore, inequalities (2.10), (2.11a) and (2.11b) imply that almost surely for all \(k \in {\mathbb {N}}{\setminus }\left\{ 0\right\} \),

By Robbins–Siegmund lemma [23], \((a^k)\) converges almost surely, \(\sup _k {\mathbb {E}}\left[ a^k \right] < \infty \) and \(\sum _{k=1}^\infty {\mathbb {E}}\left[ b^k \right] < \infty \). From the last point in particular, we can write thanks to (2.11d) and the monotone convergence theorem:

hence \( \sum _{k=1}^\infty \Vert y^{k}-y^{k-1}\Vert _{(P\Sigma ^{k+1})^{-1}}^2\) is almost surely finite, thus \(\left( \Vert y^{k}-y^{k-1}\Vert _{(P\Sigma ^{k+1})^{-1}}^2\right) {_{k\in {\mathbb {N}}\setminus \left\{ 0\right\} }}\), and in turn \(\big (\Vert y^{k}-y^{k-1}\Vert _{(P\Sigma ^{k+1})^{-1}}\big ){_{k\in {\mathbb {N}}\setminus \left\{ 0\right\} }}\), converge almost surely to 0. Furthermore, \(\sup _k {\mathbb {E}}\left[ a^k \right] < \infty \) hence \(\sup _k \Vert x^{k}-x^*\Vert _{(\tau ^{k})^{-1}}^2\), and in turn \(\sup _k \Vert x^{k}-x^*\Vert _{(\tau ^{k})^{-1}}\), are finite, and by (2.11e), one can write that for \(k\in {\mathbb {N}}{\setminus }\left\{ 0\right\} \),

We know that \((\eta ^k)^{k\in {\mathbb {N}}}\) is summable hence converges to 0. As a consequence,

To conclude with, thanks to the identity

the almost-sure convergence of \((a^k)_{k \in {\mathbb {N}}}\) implies in turn that of \((\Vert (x^{k}-x^*,y^{k}-y^*)\Vert _{N^k}^2)_{k \in {\mathbb {N}}}\).

Let us now turn to point (ii). The first assertion is a straightforward consequence of

and bounds (2.11c) and (2.11d). Furthermore, it implies that \(\sum _{k=1}^\infty \left\| (x^{k+1}-x^k,y^{k}-y^{k-1})\right\| ^2\) is a.s. finite, hence \(\left( \left\| (x^{k+1}-x^k,y^{k}-y^{k-1})\right\| \right) \) a.s. converges to 0, and so does \(\left( \left\| x^{k+1}-x^k\right\| \right) \). \(\square \)

2.5 Weak Cluster Points of A-SPDHG are Saddle Points

The goal of this section is to prove that A-SPDHG satisfies point (iii) of Theorem 2.5. On the event \(\left\{ I^k=i \right\} \), A-SPDHG update procedure can be rewritten as

We define \(T_i^{\sigma ,\tau }: (x,y)\mapsto ({\hat{x}},{\hat{y}}_i)\) by:

so that \((x^{k+2},y_i^{k+1}) = T_i^{\sigma _i^{k+1},\tau ^{k+2}}(x^{k+1},y^k)\) on the event \(\{I^{k}=i\}\) (and \(y^{k+1}_j=y^k_j\) for \(j\ne i\)).

Lemma 2.9

(Cluster points of A-SPDHG are saddle points) Let \(({\bar{x}},{\bar{y}})\) a.s. be a weak cluster point of \((x^k,y^k)_{k \in {\mathbb {N}}}\) (meaning that there exists a measurable subset \({\bar{\Omega }}\) of \(\Omega \) of probability one such that for all \(\omega \in {\bar{\Omega }}\), \(({\bar{x}}(\omega ),{\bar{y}}(\omega ))\) is a weak sequential cluster point of \((x^k(\omega ),y^k(\omega ))_{k \in {\mathbb {N}}}\)) and assume that the assumptions of Theorem 2.1 hold. Then, \(({\bar{x}},{\bar{y}})\) is a.s. in \({\mathcal {C}}\).

Proof

Thanks to Lemma 2.8-(ii) and the monotone convergence theorem,

Now,

Hence, we can deduce that

It follows that the series in the expectation is a.s. finite, and since \(p_i>0\) we deduce that almost surely,

for all \(i=1,\dots n\). We consider a sample \((x^k,y^k)\) which is bounded and such that (2.13) holds. We let for each i, \(({\hat{x}}^{i,k+1},{\hat{y}}_i^{i,k}) = T_i^{\sigma ^k_i,\tau ^{k+1}}(x^k,y^{k-1})\), so that \(\Vert ({\hat{x}}^{i,k+1},{\hat{y}}_i^{i,k})- (x^{k},y_i^{k-1})\Vert \rightarrow 0\) for \(i=1,\dots ,n\). Then, one has

where \(\delta _{x,y}^{i,k}\rightarrow 0\) as \(k\rightarrow \infty \). Given a test point (x, y), one may write for any k:

and summing all these inequalities, we obtain:

where \(\delta ^k\rightarrow 0\) as \(k\rightarrow \infty \). We deduce that if \((\bar{x},{{\bar{y}}})\) is the weak limit of a subsequence \((x^{k_l},y^{k_l-1})\) (as well as, of course, \((x^{k_l},y^{k_l})\)), then:

Since (x, y) is arbitrary, we find that (2.2) holds for \(({{\bar{x}}},{{\bar{y}}})\). \(\square \)

2.6 Proof of Theorem 2.1

Under the assumptions of Theorem 2.1, the set \({\mathcal {C}}\) of saddle points is closed and non-empty and \(X\times Y\) is a separable Hilbert space. By Lemma 2.3, the variable metrics sequence \((N^k)_{k \in {\mathbb {N}}}\) defined in (2.8) satisfies condition (i) of Theorem 2.5. Furthermore, the iterates of Algorithm 2.1 comply with condition (ii) and (iii) of Theorem 2.5 by Lemma 2.8 and Lemma 2.9, respectively, and hence converge almost surely to a point in \({\mathcal {C}}\).

3 Algorithmic Design and Practical Implementations

In this section, we present practical instances of our A-SPDHG algorithm, where we specify a step-size adjustment rule which satisfies our assumptions in convergence proof. We extend the adaptive step-size balancing rule for deterministic PDHG, which is proposed by [14], into our stochastic setting, with minibatch approximation to minimize the computational overhead.

3.1 A-SPDHG Rule (a)—Tracking and Balancing the Primal–Dual Progress

Let’s first briefly introduce the foundation of our first numerical scheme, which is built upon the deterministic adaptive PDHG algorithm proposed by Goldstein et al [14], with the iterates:

In this foundational work of Goldstein et al [14], they proposed to evaluate two sequences in order to track and balance the progresses of the primal and dual iterates of deterministic PDHG (denoted here as \(v_k^*\) and \(d_k^*\)):

These two sequences measure the lengths of the primal and dual subgradients for the objective \(\min _{x\in X} \max _{y \in Y} g(x)+\langle Ax, y \rangle - f^*(y)\), which can be demonstrated by the definition of proximal operators. The primal update of deterministic PDHG can be written as:

The optimality condition of the above objective declares:

By adding \(-A^*y^{k+1}\) on both sides and rearranging the terms, one can derive:

and similarly for the dual update one can also derive:

which indicates that the sequences \(v_k^*\) and \(d_k^*\) given by (3.1) should effectively track the primal progress and dual progress of deterministic PDHG, and hence, Goldstein et al [14] propose to utilize these as the basis of balancing the primal and dual step sizes for PDHG.

In light of this, we propose our first practical implementation of A-SPDHG in Algorithm 3.1 as our rule-(a), where we use a unique dual step-size \(\sigma ^k=\sigma _j^k\) for all iterates k and indices j and where we estimate the progress of achieving optimality on the primal and dual variables via the two sequences \(v^k\) and \(d^k\) defined at each iteration k with \(I^k=i\) as:

which are minibatch extension of (3.1) tailored for our stochastic setting. By making them balanced on the fly via adjusting the primal–dual step-size ratio when appropriate, we can enforce the algorithm to achieve similar progress in both primal and dual steps and hence improve the convergence. To be more specific, as shown in Algorithm 3.1, in each iteration the values of \(v_k\) and \(d_k\) are evaluated and compared. If the value of \(v_k\) (which tracks the primal subgradients) is significantly larger than \(d_k\) (which tracks the dual subgradients), then we know that the primal progress is slower than the dual progress, and hence, the algorithm would boost the primal step size while shrinking the dual step size. If \(v_k\) is noticeably smaller than \(d_k\), then the algorithm would do the opposite.

Note that here we adopt the choice of \(\ell _1\)-norm as the length measure for \(v^k\) and \(d^k\) as done by Goldstein et al [14, 15], since we also observe numerically the benefit over the more intuitive choice of \(\ell _2\)-norm.

For full-batch case (\(n=1\)), it reduces to the adaptive PDHG proposed by [14, 15]. We adjust the ratio between primal and dual step sizes according to the ratio between \(v^k\) and \(d^k\), and whenever the step-size change, we shrink \(\alpha \) (which controls the amplitude of the changes) by a factor \(\eta \in (0,1)\)—we typically choose \(\eta = 0.995\) in our experiments. For the choice of s, we choose \(s=\Vert A\Vert \) as our default.Footnote 1

3.1.1 Reducing the Overhead with Subsampling

Noting that unlike the deterministic case which does not have the need of extra matrix–vector multiplication since \(A^*y^k\) and \(Ax^k\) can be memorized, our stochastic extension will require the computation of \(A_i x^k\) since we will sample different subsets between back-to-back iterations with high probability. When using this strategy, we will only have a maximum \(50\%\) overhead in terms of FLOP counts, which is numerically negligible compared to the significant acceleration it will bring toward SPDHG especially when the primal–dual step-size ratio is suboptimal, as we will demonstrate later in the experiments. Moreover, we found numerically that we can significantly reduce this overhead by approximation tricks such as subsampling:

with \(S^k\) being a random subsampling operator such that \({\mathbb {E}} [(S^k)^TS^k] = \frac{1}{\rho }\text {Id}\). In our experiments, we choose \(10\%\) subsampling for this approximation and hence the overhead is reduced from \(50\%\) to only \(5\%\) which is negligible, without compromising the convergence rates in practice.

3.2 A-SPDHG Rule (b)—Exploiting Angle Alignments

More recently, Yokota and Hontani [26] propose a variant of adaptive step-size balancing scheme for PDHG, utilizing the angles between the subgradients \(\partial g(x^{k+1}) + A^*y^{k+1}\) and the difference of the updates \(x^k - x^{k+1}\).

If these two directions are highly aligned, then the primal step size can be increased for bigger step. If these two directions have a large angle, then the primal step size should be shrunken. By extending this scheme to stochastic setting, we obtain another choice of adaptive scheme for SPDHG.

We present this scheme in Algorithm 3.2 as our rule (b). At iteration k with \(I^k=i\), compute:

as an estimate of \(\partial g(x^{k+1}) + A^*y^{k+1}\), then measure the cosine of the angle between this and \(x^k - x^{k+1}\):

The threshold c for the cosine value (which triggers the increase of the primal step size) typically needs to be very close to 1 (we use \(c=0.999\)) due to the fact that we mostly apply these type of algorithms in high-dimensional problems, following the choice in [26] which was for deterministic PDHG.

Recently, Zdun et al [27] proposed a heuristic similar to our rule (b), but they choose \(q^{k+1}\) to be the approximation for an element of \(\partial g(x^{k+1})\) instead of \(\partial g(x^{k+1}) + A^*y^{k+1}\). Our choice follows more closely to the original scheme of Yokota and Hontani [26]. We numerically found that their scheme is not competitive in our settings.

Comparison between SPDHG and A-SPDHG on sparse-view CT (Example 1), with a variety of starting primal–dual step size ratios. Here, the forward operator is \(A\in {\mathbb {R}}^{m\times d}\) with dimensions \(m=368640\), \(d= 1048576\). We include the images reconstructed by the algorithms at termination (50th epoch). In the first plot of each subfigure, the black circle indicates the starting step-size ratio for all the algorithms, same for the following figures

Comparison between SPDHG and A-SPDHG on sparse-view CT (Example 2), with a variety of starting primal–dual step-size ratios. Here, the forward operator is \(A\in {\mathbb {R}}^{m\times d}\) with dimensions \(m=92160\), \(d= 262144\). We include the images reconstructed by the algorithms at termination (50th epoch)

Comparison between SPDHG and A-SPDHG on low-dose CT (where we use a large number of highly-noisy X-ray measurements), with a variety of starting primal–dual step-size ratios. Here, the forward operator is \(A\in {\mathbb {R}}^{m\times d}\) with dimensions \(m=184320\), \(d= 65536\). We resized the phantom image to 256 by 256. We include the images reconstructed by the algorithms at termination (50th epoch)

Comparison between SPDHG and A-SPDHG with the data being split to 40 minibatches on low-dose CT. Comparing to the results presented in Fig. 3 which used 10 minibatches, we obtain similar results and our A-SPDHG continues to perform more favorably comparing to SPDHG.

Comparison between SPDHG and A-SPDHG with warm-start using a FBP (filtered backprojection) on low-dose CT. Comparing to the results shown in Fig. 3 which are without warm-start, actually our methods seem to compare even more favorably with warm-start. Please also note that the early jump in terms of function value is within our expectation due to the stochasticity of the algorithms. We include the images reconstructed by the algorithms at termination (50th epoch)

Comparison between SPDHG and A-SPDHG on limited-angle CT (Example 2), with a variety of starting primal–dual step-size ratios. Here, the forward operator is \(A\in {\mathbb {R}}^{m\times d}\) with dimensions \(m=92160\), \(d= 262144\). We include the images reconstructed by the algorithms at termination (50th epoch)

4 Numerical Experiments

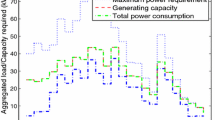

In this section, we present numerical studies of the proposed scheme in solving one of the most typical imaging inverse problems, the computed tomography (CT). We compare A-SPDHG algorithm with the original SPDHG, on different choices of starting ratio of the primal and dual step sizes.

In our CT imaging example, we seek to reconstruct the tomography images from fanbeam X-ray measurement data, by solving the following TV-regularized objective:

where D denotes the 2D differential operator, \(A\in {\mathbb {R}}^{m\times d}\) and \(x\in {\mathbb {R}}^{d}\). We consider three fanbeam CT imaging modalities: sparse-view CT, low-dose CT and limited-angle CT. We test the A-SPDHG and SPDHG on two images of different sizes (Example 1 on a phantom image sized \(1024 \times 1024\), while Example 2 being an image from the Mayo Clinic Dataset [21] sized \(512 \times 512\).), on 4 different starting ratios (\(10^{-3}\), \(10^{-5}\), \(10^{-7}\) and \(10^{-9}\)). We interleave partitioned the measurement data and operator into \(n=10\) minibatches for both algorithms. To be more specific, we first collect all the X-ray measurement data and list them consecutively from 0 degree to 360 degree to form the full A and b, and then interleavingly group every 10-th of the measurements into one minibatch, to form the partition \(\{A_i\}_{i=1}^{10}\) and \(\{b_i\}_{i=1}^{10}\).

For A-SPDHG, we choose to use the approximation step for \(d^k\) presented in (3.7) with \(10\%\) subsampling and hence the computational overhead is negligible in this experiment. We initialize all algorithms from a zero image.

We present our numerical results in Figs. 1, 2, 3 and 6. In these plots, we compare the convergence rates of the algorithms in terms of number of iterations (the execution time per iteration for the algorithms are almost the same, as the overhead of A-SPDHG is trivial numerically). Among these, Figs. 1 and 2 report the results for large-scale sparse-view CT experiments on a phantom image and a lung CT image from Mayo Clinic dataset [21], while Fig. 3 reports the results for low-dose CT experiments where we simulate a large number of measurements corrupted with a significant amount Poisson noise, and then, in Fig. 6 we report the results for limited-angle CT which only a range of 0-degree to 150-degree of measurement angles are present, while the measurements from the rest [150, 360] degrees of angles are all missing. In all these examples, we can consistently observe that no matter how we initialize the primal–dual step-size ratio, A-SPDHG can automatically and consistently adjust the step-size ratio to the optimal choice which is around either \(10^{-5}\) or \(10^{-7}\) for these four different CT problems and significantly outperform the vanilla SPDHG for the cases where the starting ratio is away from the optimal range. Meanwhile, even for the cases where the starting ratio of SPDHG algorithm is near-optimal, we can observe consistently from most of these examples that our scheme outperforms the vanilla SPDHG algorithm locally after a certain number of iterations (highlighted by the vertical dash lines in relevant subfigures), which further indicates the benefit of adaptivity for this class of algorithmsFootnote 2. Note that throughout all these different examples, we use only one fixed set of parameters for A-SPDHG suggested in the previous section, which again indicates the strong practicality of our scheme.

For the low-dose CT example, we run two extra sets of experiments, regarding a larger number of partitioning of minibatches (40) in Fig. 4, and warm-start from a better initialization image obtained via filter backprojection in Fig. 5. We found that in all these extra examples we consistently observe superior performances of A-SPDHG over the vanilla SPDHG especially when the primal–dual step-size ratios are suboptimal. Interestingly, we found that the warm-start’s effect does not have noticeable impact of the comparative performances between SPDHG and A-SPDHG. This is mainly due to the fact that the SPDHG with suboptimal primal–dual step-size ratio will converge very slowly in high accuracy regimes (see Fig. 5d for example) in practice hence the warm-start won’t help much here.

We should also note that conceptually all the hyperparameters in our adaptive schemes are basically the controllers of the adaptivity of the algorithm (while for extreme choices we recover the vanilla SPDHG). In Figs. 7 and 9, we present some numerical studies on the choices of hyperparameters of rule (a) and rule (b) of A-SPDHG algorithm. We choose the fixed starting ratio of \(10^{-7}\) for primal–dual step sizes in these experiments. For rule (a), we found that it is robust to the choice of the starting shrinking rate \(\alpha _0\), shrinking speed \(\eta \) and the gap \(\delta \). Overall, we found that these parameters have weak impact of the convergence performance of our rule (a) and easy to choose.

For rule (b), we found that the performance is more sensitive to the choice of parameter c and \(\eta \) comparing to rule (a), although the dependence is still weak. Our numerical studies suggest that rule (a) is a better-performing choice than rule (b), but each of them have certain mild weaknesses (the first rule has a slight computational overhead which can be partially addressed with subsampling scheme, while the second rule seems often being slower than the first rule), which require further studies and improvements. Nevertheless, we need to emphasis that all these parameters are essentially controlling the degree of adaptivity of the algorithms and fairly easy to choose, noting that for all these CT experiments with varying sizes/dimensions and modalities we only use one fixed set of the hyperparameters in A-SPDHG, and we are already able to consistently observe numerical improvements over vanilla SPDHG.

5 Conclusion

In this work, we propose a new framework (A-SPDHG) for adaptive step-size balancing in stochastic primal–dual hybrid gradient methods. We first derive theoretically sufficient conditions on the adaptive primal and dual step sizes for ensuring convergence in the stochastic setting. We then propose a number of practical schemes which satisfy the condition for convergence, and our numerical results on imaging inverse problems support the effectiveness of the proposed approach.

To our knowledge, this work constitutes the first theoretical analysis of adaptive step sizes for a stochastic primal–dual algorithm. Our ongoing work includes the theoretical analysis and algorithmic design of further accelerated stochastic primal–dual methods with line-search schemes for even faster convergence rates.

Test on the default choice \(s = \Vert A\Vert \) of A-SPDHG (rule-a) on X-ray low-dose fanbeam CT example. Left figure: starting ratio of primal–dual step sizes: \(10^{-7}\). Right figure: starting ratio of primal–dual step sizes: \(10^{-5}\). We can observe that our default choice of s is indeed a reasonable choice (at least near-optimal) in practice, and when deviating from it may lead to slower convergence

6 Complementary Material for Sect. 2

We begin by a useful lemma.

Lemma 6.1

Let \(a,\,b\) be positive scalars, \(\beta \in (0,1)\), and P a bounded linear operator from a Hilbert space X to a Hilbert space Y. Then,

Proof

Let us call

For all \((x,y)\in X\times Y\),

which proves the direct implication of (6.1). For the converse implication, consider \(x\in X {\setminus } \left\{ 0\right\} \) such that \(\Vert Px\Vert =\Vert P\Vert \Vert x\Vert \) and \(y=-\lambda Px\) for a scalar \(\lambda \). Then, the nonnegativity of the polynomial

for all \(\lambda \in {\mathbb {R}}\) implies that \(\Vert P\Vert ^4 - ab \Vert P\Vert ^2 \le 0\), which is equivalent to the desired conclusion \((ab)^{-1/2}\Vert P\Vert \le 1\).Equivalence (6.2) is straightforward by noticing that

\(\square \)

Let us now turn to the proof of Lemma 2.2.

Proof of Lemma 2.2

Let us assume that the step sizes satisfy the assumptions of the lemma. Then, Assumption (i) of Theorem 2.1 is straightforwardly satisfied. Moreover, for \(i\in \llbracket 1,n\rrbracket \), the product sequence \((\tau ^k \sigma _i^k)_{k \in {\mathbb {N}}}\) is constant along the iterations by equation (2.6) and satisfies equation (2.5) for iterate \(k=0\) and thus satisfies (2.5) for all \(k \in {\mathbb {N}}\) for \(\beta = \max _i\left\{ \tau ^0 \sigma _i^0\Vert A_i\Vert ^2 / p_i\right\} \), which proves Assumption (ii). Finally, equation (2.7) implies that Assumption (iii) is satisfied. \(\square \)

Availability of data and materials

The related implementation of the algorithms and the image data used in the experiment will be made available on the website https://junqitang.com. For the phantom image example, we use the one in the experimental section of [8], while for the lung CT image example we use an image from the Mayo Clinic Dataset [21] which is publicly available.

Notes

The choice of s is crucial for the convergence behavior of rule (a), and we found numerically that it is better to scale with the operator norm \(\Vert A\Vert \) instead of depending on the range of pixel values as suggested in [15].

The most typical example here would be Fig. 1b where the optimal step-size ratio selected by the adaptive scheme at convergence is almost exactly \(10^{-5}\), where we have set SPDHG to run with this ratio. We can still observe benefit of local convergence acceleration given by our adaptive scheme.

References

Alacaoglu, A., Fercoq, O., Cevher, V.: On the convergence of stochastic primal-dual hybrid gradient. SIAM J. Optim. 32(2), 1288–1318 (2022)

Bauschke, H.H., Combettes, P.L.: Convex Analysis and Monotone Operator Theory in Hilbert Spaces, vol. 408. Springer (2011)

Bonettini, S., Benfenati, A., Ruggiero, V.: Scaling techniques for epsilon-subgradient methods. SIAM J. Optim. 26(3), 1741–1772 (2016)

Bonettini, S., Porta, F., Ruggiero, V., Zanni, L.: Variable metric techniques for forward-backward methods in imaging. J. Comput. Appl. Math. 385, 113192 (2021)

Bonettini, S., Prato, M., Rebegoldi, S.: A block coordinate variable metric linesearch based proximal gradient method. Comput. Optim. Appl. 71(1), 5–52 (2018)

Bonettini, S., Rebegoldi, S., Ruggiero, V.: Inertial variable metric techniques for the inexact forward-backward algorithm. SIAM J. Sci. Comput. 40(5), A3180–A3210 (2018)

Bonettini, S., Ruggiero, V.: On the convergence of primal-dual hybrid gradient algorithms for total variation image restoration. J. Math. Imaging Vis. 44(3), 236–253 (2012)

Chambolle, A., Ehrhardt, M.J., Richtárik, P., Schönlieb, C.-B.: Stochastic primal-dual hybrid gradient algorithm with arbitrary sampling and imaging applications. SIAM J. Optim. 28(4), 2783–2808 (2018)

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011)

Combettes, P.L., Pesquet, J.-C.: Stochastic quasi-Fejér block-coordinate fixed point iterations with random sweeping. SIAM J. Optim. 25(2), 1221–1248 (2015)

Combettes, P.L., Vũ, B.C.: Variable metric quasi-Fejér monotonicity. Nonlinear Anal.: Theory Methods Appl. 78, 17–31 (2013)

Delplancke, C., Gurnell, M., Latz, J., Markiewicz, P.J., Schönlieb, C.-B., Ehrhardt, M.J.: Improving a stochastic algorithm for regularized PET image reconstruction. In: 2020 IEEE Nuclear Science Symposium and Medical Imaging Conference (NSS/MIC), pp. 1–3. IEEE (2020)

Ehrhardt, M.J., Markiewicz, P., Schönlieb, C.-B.: Faster PET reconstruction with non-smooth priors by randomization and preconditioning. Phys. Med. Biol. 64(22), 225019 (2019)

Goldstein, T., Li, M., Yuan, X.: Adaptive primal-dual splitting methods for statistical learning and image processing. Adv. Neural. Inf. Process. Syst. 28, 2089–2097 (2015)

Goldstein, T., Li, M., Yuan, X., Esser, E., Baraniuk, R.: Adaptive primal-dual hybrid gradient methods for saddle-point problems. arXiv preprint arXiv:1305.0546 (2013)

Gutiérrez, E.B., Delplancke, C., Ehrhardt, M.J.: On the convergence and sampling of randomized primal-dual algorithms and their application to parallel MRI reconstruction. arXiv preprint arXiv:2207.12291 (2022)

He, B., Yuan, X.: Convergence Analysis of Primal-dual Algorithms for Total Variation Image Restoration. Rapport technique, Citeseer (2010)

Malitsky, Y.: Golden ratio algorithms for variational inequalities. Math. Program. 184(1), 383–410 (2020)

Malitsky, Y., Mishchenko, K.: Adaptive gradient descent without descent. In: Daumé III, H. Singh, A., (eds) Proceedings of the 37th International Conference on Machine Learning, vol. 119 of Proceedings of Machine Learning Research, pp. 6702–6712 (2020)

Malitsky, Y., Pock, T.: A first-order primal-dual algorithm with linesearch. SIAM J. Optim. 28(1), 411–432 (2018)

McCollough, C.: TU-FG-207A-04: overview of the low dose CT grand challenge. Med. Phys. 43(6Part35), 3759–3760 (2016)

Papoutsellis, E., Ametova, E., Delplancke, C., Fardell, G., Jørgensen, J.S., Pasca, E., Turner, M., Warr, R., Lionheart, W.R.B., Withers, P.J.: Core imaging library-part II: multichannel reconstruction for dynamic and spectral tomography. Philos. Trans. R. Soc. A 379(2204), 20200193 (2021)

Robbins, H., Siegmund, D.: A convergence theorem for non negative almost supermartingales and some applications. In: Optimizing Methods in Statistics, pp. 233–257. Elsevier (1971)

Schramm, G., Holler, M.: Fast and memory-efficient reconstruction of sparse poisson data in listmode with non-smooth priors with application to time-of-flight PET. Phys. Med. Biol. (2022)

Vladarean, M.-L., Malitsky, Y., Cevher, V.: A first-order primal-dual method with adaptivity to local smoothness. Adv. Neural. Inf. Process. Syst. 34, 6171–6182 (2021)

Yokota, T., Hontani, H.: An efficient method for adapting step-size parameters of primal-dual hybrid gradient method in application to total variation regularization. In: 2017 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), pp. 973–979. IEEE (2017)

Zdun, L., Brandt, C.: Fast MPI reconstruction with non-smooth priors by stochastic optimization and data-driven splitting. Phys. Med. Biol. 66(17), 175004 (2021)

Funding

CD acknowledges support from the EPSRC (EP/S026045/1). MJE acknowledges support from the EPSRC (EP/S026045/1, EP/T026693/1, EP/V026259/1) and the Leverhulme Trust (ECF-2019-478). CBS acknowledges support from the Philip Leverhulme Prize, the Royal Society Wolfson Fellowship, the EPSRC advanced career fellowship EP/V029428/1, EPSRC grants EP/S026045/1 and EP/T003553/1, EP/N014588/1, EP/T017961/1, the Wellcome Innovator Awards 215733/Z/19/Z and 221633/Z/20/Z, the European Union Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement No. 777826 NoMADS, the Cantab Capital Institute for the Mathematics of Information and the Alan Turing Institute.

Author information

Authors and Affiliations

Contributions

CD, MJE and AC elaborated the proof strategy, and CD wrote parts 1, 2 and 6. JT worked on the algorithmic design, performed the numerical experiments and wrote parts 3-5. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Conflict of interest

There are no competing interests to declare.

Ethical approval

This declaration is not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

CD was at the Department of Mathematical Sciences, University of Bath, while the research presented in this article was undertaken.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chambolle, A., Delplancke, C., Ehrhardt, M.J. et al. Stochastic Primal–Dual Hybrid Gradient Algorithm with Adaptive Step Sizes. J Math Imaging Vis 66, 294–313 (2024). https://doi.org/10.1007/s10851-024-01174-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-024-01174-1