Abstract

Nonlinear filtering approaches allow to obtain decomposition of images with respect to a non-classical notion of scale, induced by the choice of a convex, absolutely one-homogeneous regularizer. The associated inverse scale space flow can be obtained using the classical Bregman iteration with quadratic data term. We apply the Bregman iteration to lifted, i.e., higher-dimensional and convex, functionals in order to extend the scope of these approaches to functionals with arbitrary data term. We provide conditions for the subgradients of the regularizer – in the continuous and discrete setting– under which this lifted iteration reduces to the standard Bregman iteration. We show experimental results for the convex and non-convex case.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Motivation and Introduction

Scale-space of solutions for non-convex depth estimation. We apply the sublabel-accurate lifting approach [31] to the non-convex problem of depth estimation in order to obtain a convex problem to which the Bregman iteration [34] can be applied. In addition to the final depth map (left), the Bregman iteration generates a scale space of solutions with increasing spatial detail, as can be seen from the two horizontal sections (center, right)

In modern image processing tasks, variational problems constitute an important tool [4, 42]. They are used in a variety of applications such as denoising [40], segmentation [18], and depth estimation [43, 45]. In this work, we consider variational image processing problems with energies of the form

where the integrand \(\eta : {\mathbb {R}}^d \mapsto {\mathbb {R}}\) of the regularizer is nonnegative and convex, and the integrand \(\rho : \Omega \times \Gamma \mapsto \overline{{\mathbb {R}}}\) of the data term H is proper, nonnegative and possibly non-convex with respect to u. We assume that the domain \(\Omega \subset {\mathbb {R}}^d\) is open and bounded and that the range \(\Gamma \subset {\mathbb {R}}\) is compact. After discretization, we refer to \(\Gamma \) as the label space in analogy to multi-label problems with discrete range [28].

The lifted Bregman iteration, proposed in this paper, combines two established frameworks. Throughout the paper, we are mainly concerned with three distinct problem classes, which are fundamental for either or both of these frameworks. They are well studied in the corresponding framework and allow us to build on some known results. Whenever we are working with the total variation regularizer [3]

as well as an arbitrary data term we use the abbreviation TV-(1). This problem class is of special interest to the functional lifting approach we are using, since it allows the use of a “sublabel-accurate” discretization [31].

Let f denote some input and \(\lambda > 0\). In case of the total variation regularizer and quadratic data term,

we use the abbreviation ROF-(1). This Rudin-Osher-Fatemi problem is the original use case of the Bregman iteration [34]. As a sanity check, we investigate whether the results of the lifted Bregman iteration agree with the ones of the original Bregman iteration in case of the ROF-(1) problem.

For the quadratic data term (3) and an arbitrary convex, absolutely one-homogeneous regularizer \(\eta \), we write OH-(1). This problem class has been extensively studied by the inverse scale space flow community.

The Bregman iteration introduced in [34] recovers a scale space of solutions of the ROF-(1) problem. These solutions can be used to define a nonlinear transformation and filters that result in a high-quality decomposition of the input image into different scales. In this work, we aim to achieve a scale space of solutions of the TV-(1) problem. For this purpose, we combine the Bregman iteration with a lifting approach [31, 32, 36], which allows to solve problems with a non-convex data term in a higher-dimensional space in a convex fashion. In the following, we briefly review both of these concepts.

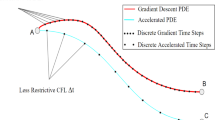

1.1 Inverse Scale Space Flow

Consider the so-called inverse scale space flow [11, 12, 34] equation

where J is assumed to be convex and absolutely one-homogeneous. The evolution \(u:[0,T]\times \Omega \rightarrow {\mathbb {R}}\) in (4) starts at \(u(0,\cdot )=\text {mean}(f)\) and p(s) is forced to lie in the subdifferential of J.

One can show [13, 22] that during the evolution nonlinear eigenfunctions of the regularizer with increasingly larger eigenvalues are added to the flow, where a nonlinear eigenfunction u of J for an eigenvalue \(\lambda \) is understood as a solution of the inclusion

Typically, and in particular for the total variation regularization \(J=\text {TV}\), the flow \(u(s,\cdot )\) incorporates details of the input image at progressively finer scales as s increases. Large-scale details can be understood as the structure of the image and fine-scale details as the texture. Stopping the flow at a suitable time returns a denoised image, whereas for \(s\rightarrow \infty \) the flow converges to the input image.

By considering the derivative \(u_s\), one can define a nonlinear decomposition of the input f [13, 23]: First a transformation to the spectral domain of the regularizer is defined. After the transformation, the input data are represented as a linear combination of generalized eigenfunctions of the regularizer. The use of filters in the spectral domain followed by a reconstruction to the spatial domain leads to a high-quality decomposition of the input image into different scales [23, 26]. Such nonlinear decompositions can be used for, e.g., image manipulation and fusion [26], image style transformation [11] or multiscale segmentation [46]. The latter work reformulates the Chan-Vese as a ROF problem with binary constraint.

Nonlinear decompositions have also been developed in the context of variational models of the form OH-(1) and gradient flow formulations [6, 11, 12, 20,21,22]. In [11], the equivalence of these two approaches and the inverse scale space flow is shown. The analysis of nonlinear spectral decompositions is extended to infinite-dimensional Hilbert spaces in [10]. In [25], a neural network is introduced that approximates the nonlinear spectral decomposition.

As we will see in the next section, the first part of the flow equation (4) directly relates to the derivative of the quadratic data term (3). To the best of our knowledge, the question of how to define scale space transformations and filters for the solutions of variational problems with arbitrary data terms has not yet been studied. The lifted Bregman iteration is a step in this direction.

Calibration based lifting. Function u in original solution space (left). The idea in calibration based lifting is to represent functions u by the higher- dimensional indicator function of their subgraph \(\varvec{1}_u\). The variational problem is then rewritten as a flux of vector fields \(\phi \) through the complete graph \(\Gamma _u\); the latter is the measure theoretic boundary of the subgraph. Here, \(\nu _{\Gamma _u}\) denotes the inner (downwards-pointing) unit normal (middle). By enlarging the feasible set to the convex hull of the subgraph indicator functions, which also allows diffuse solutions (right), one obtains an overall convex problem

1.2 Bregman Iteration

For problems in the class OH-(1), the inverse scale space flow can be understood [12] as a continuous limit of the Bregman iteration [34]. The Bregman iteration uses the Bregman divergence first introduced in [9]. For both the data term H and regularizer J being nonnegative and in particular convex, the Bregman iteration is defined as:

In case of the ROF-(1) problem, the subgradient \(p_k\) can be chosen explicitly as \(p_k = p_{k-1} - \lambda (u_k - f)\). Rearranging this equation as \((p_k - p_{k-1})/\lambda =-(u_k-f)\) shows that it is simply a time step for (4). Solving the ROF problem with the Bregman iteration improves the results insofar as to avoid the ROF typical loss of contrast. An extensive analysis of the iteration including well-definedness of the iterates and convergence results can be found in [34].

Further extensions include the split Bregman method for \(\ell _1\)-regularized problems [24] and the linearized Bregman iteration for compressive sensing and sparse denoising [14, 35]. However, applying the Bregman iteration to variational problems with non-convex data term H is not trivial since the well-definedness of the iterations, the use of subgradients, as well as the convergence results in [34] rely on the convexity of the data term. In [27], the Bregman iteration was used to solve a non-convex optical flow problem, however, the approach relies on an iterative reduction to a convex problem using first-order Taylor approximations. In [46], the Bregman iteration is applied to the relaxed and convexified segmentation problem suggested in [17]. In this case, the Bregman iteration did not improve the results. However, due to the connection to the inverse scale space flow, it still allowed the definition of transformation to the spectral domain of the regularizer and definition of filters in the latter representation.

In this work, we aim to apply the Bregman iteration to scalar problems with an arbitrary non-convex data term such as the non-convex stereo matching problem (Figs. 1, 6). In order to do so, we follow a lifting approach [31, 32, 36]: Instead of minimizing the non-convex problem

over some suitable (discrete or function) space U, we solve a lifted problem

over a larger space \({\mathcal {U}}\) but with convex energies \({\mathcal {H}}, {\mathcal {J}}\) in a way that allows to recover solutions u of the original problem (8) from solutions v of the lifted problem (9). The Bregman iteration can then be performed on the – now convex – lifted problem (9):

This allows to extend the Bregman iteration to non-convex data terms and prompts the following questions:

1. The (lifted) Bregman iteration crucially depends on the choice of subgradients of the (lifted) regularizer. How are the results of the original (Alg. 1) and lifted (Alg. 2) Bregman iteration related to each other in case of the ROF-(1) problem?

2. Does the lifted Bregman iteration yield a scale space of solutions for TV-(1) problems?

In this paper we analyze the equivalence for the ROF-(1) problem in the continuous and discrete setting in order to justify our approach. We show some promising experimental results of the lifted Bregman iteration for the non-convex TV-(1) problem.

1.3 Outline and Contribution

This work is an extension of the conference report [5] where we first introduced the lifted Bregman iteration. Compared to the report, we expand our theoretical analysis of the lifted Bregman iteration to the fully continuous setting and present analogous statements about the equivalence of the original and lifted Bregman iteration under certain assumptions. Additional numerical experiments demonstrate that eigenfunctions of the TV regularizer appear according to the size of their eigenvalues at different steps of the iteration – this also holds true for the non-convex and nonlinear stereo matching problem.

In section 2, we summarize the lifting approach for problems of the form TV-(1) both in continuous (function space) and the discretized (sublabel-accurate) formulations. We derive conditions under which the original and lifted Bregman iteration are equivalent in the continuous (section 3) and in the discretized (section 4–5) setting. The conditions in the discretized setting are in particular met by the anisotropic TV. In section 6, we validate our findings experimentally by comparing the original and lifted iteration on the convex ROF-(1) problem and present numerical results on the non-convex stereo matching problem.

1.4 Notation

We denote the extended real line by \(\overline{{\mathbb {R}}}:= {\mathbb {R}}\cup \{ \pm \infty \}\). Given a function \(f:{\mathbb {R}}^n\mapsto \overline{{\mathbb {R}}}\), the conjugate \(f^*:{\mathbb {R}}^n\mapsto \overline{{\mathbb {R}}}\) is defined as [39, Ch. 11]

If f has a proper convex hull, both the conjugate and biconjugate are proper, lower semi-continuous and convex [39, Ch. 11]. The indicator function of a set C is defined as

The support function of a set C is defined as

The Fenchel conjugate can similarly be defined on general normed spaces by taking \(u^*\) from the dual space [19, Def. I.4.1].

Whenever \(\varvec{u}\) denotes a vector, we use subscripts \(\varvec{u}_k\) to indicate an iteration or sequence, and superscripts \(\varvec{u}^k\) to indicate the k-th value of the vector. We use calligraphic letters to denote lifted energies in the continuous setting (e.g., \({\mathcal {F}}, {\mathcal {K}}, \mathcal{TV}\mathcal{}\)) and bold letters to denote lifted energies in the discrete setting (e.g., \(\varvec{F}, \varvec{K}, \varvec{TV}\)).

The total variation regularizer is defined as

By \(\text {BV}(\Omega ;\Gamma )\) we denote the set of functions u that are of bounded variation, i.e., for which \(\text {TV}(u)<\infty \).

2 Lifting Approach

In the next two subsections, we summarize the lifting approach in the fully continuous and the (half) discretized setting and collect important properties presented in the literature.

2.1 Continuous Setting

Our work is based on methods for scalar but continuous range \(\Gamma \) with first-order regularization in the spatially continuous setting [1, 16, 36, 37]: The feasible set of the scalar-valued functions \(u\in W^{1,1}(\Omega ;\Gamma )\) is embedded into the convex set of functions which are of bounded variation on every compact subset of \(\Omega \times {\mathbb {R}}\) (i.e., \(v\in \text {BV}_{\text {loc}}(\Omega \times {\mathbb {R}}; [0,1])\)) by associating each function u with the characteristic function of its subgraph, i.e.,

To extend the energy F in (1) for \(\Gamma = {\mathbb {R}}\) onto this larger space, a lifted convex functional \({\mathcal {F}}: \text {BV}_{\text {loc}}(\Omega \times {\mathbb {R}}; [0,1])\rightarrow {\mathbb {R}}\) based on the distributional derivative Dv is defined [1]:

where the admissible dual vector fields are given by

If v is indicator function of the subgraph of u, i.e., \(v=1_u\), one has [7, 36]

where \(G_u\) denotes the measure theoretic boundary of the subgraph (i.e., the complete graph of u or the singular set of \(1_u\)) and \(\nu _{G_u}\) the inner (downwards-pointing) unit normal to \(G_u\). For smooth u, the latter is

See Fig. 2 for a visualization.

In case of the \(\text {TV}\) regularizer (15), \(\eta \) is convex and one-homogeneous, and its Fenchel conjugate \(\eta ^*\) is the indicator function of a convex set. Therefore, the constraint in (18) can be separated [32]:

In [7, 36], the authors show that \(F(u) = {\mathcal {F}}(1_u)\) holds for any \(u\in W^{1,1}\). Moreover, if the non-convex set \( \{ 1_u : u\in W^{1,1} \} \) is relaxed to the convex set

any minimizer of the lifted problem \(\inf _{v\in C}{\mathcal {F}}(v)\) can be transformed into a global minimizer of the original nonconvex problem \(\inf _{u\in W^{1,1}}{\mathcal {F}}(1_u)\) by thresholding: The thresholding process does not change the energy, and produces a characteristic function of the form \(1_u\) for some u in the original function space, on which \({\mathcal {F}}(1_u)\) and F(u) agree [36, Thm. 3.1] [7, Thm. 4.1,Prop. 4.13].

The lifting approach has also been connected to dynamical optimal transport and the Benamou–Brenier formulation, which allows to incorporate higher-order regularization [44].

Lifted total variation. For \(H\equiv 0\) and \(J=\text {TV}\) it turns out that \(\phi _t \equiv 0\) is optimal in (21)-(23). This can either be derived from the fact that \((Dv)_t\) is non-positive (see [36]) and that \(\phi _t\) is nonnegative due to the constraint (23). If \(v = 1_u\) for sufficiently smooth u, one can also easily see \(\phi _t\equiv 0\) by applying (19)-(20) and again arguing that \(\phi _t\ge 0\) holds due to the constraint (23):

Subsequently, we can reduce the lifted total variation for any \(v=1_u\) (i.e., indicator functions of a subgraph of \(u\in W^{1,1}\)) to

Furthermore, the following equality holds [36, Thm. 3.2]:

2.2 Discrete Sublabel-Accurate Setting

Discrete setting. In a fully discrete setting with discretized domain and finite range \(\Gamma \), Ishikawa and Geiger proposed first lifting strategies for the labeling problem [28, 29]. Later the relaxation of the labeling problem was studied in a spatially continuous setting with binary [17, 18] and multiple labels [30, 45].

Sublabel-accurate discretization. In practice, a straightforward discretization of the label space \(\Gamma \) during the implementation process leads to artifacts and the quality of the solution strongly depends on the number and positioning of the chosen discrete labels. Therefore, it is advisable to employ a sublabel-accurate discretization [31], which allows to preserve information about the data term in between discretization points. The same accuracy can be achieved with fewer labels which results in smaller problems. In [32], the authors point out that this approach is closely linked to the approach in [36] when a combination of piecewise linear and piecewise constant basis functions is used for discretization. We also refer to [33] for an extension of the sublabel-accurate lifting approach to arbitrary convex regularizers.

For reference, we provide a short summary of the lifting approach with sublabel-accurate discretization for TV-(1) problems using the notation from [31]. The approach comprises three steps:

Lifting of the label space. First, we choose L labels \( \gamma _1< \gamma _2< ... < \gamma _L \) such that \( \Gamma = [ \gamma _1, \gamma _L ] \). These labels decompose the label space \(\Gamma \) into \(l := L-1\) sublabel spaces \(\Gamma _i := [\gamma _i, \gamma _{i+1}]\). Any value in \(\Gamma \) can be written as

for some \(i \in \{1, 2, ..., l\}\) and \(\alpha \in [0,1]\). The lifted representation of such a value in \({\mathbb {R}}^l\) is defined as

where \(\varvec{1}_i \in {\mathbb {R}}^l\) is the vector of i ones followed by \(l-i\) zeroes. The lifted label space – which is non-convex – is given as \(\varvec{\Gamma } := \{ \varvec{1}_i^{\alpha } \in {\mathbb {R}}^l | i \in \{ 1,2,...,l \},\, \alpha \in [0,1] \}\).

If \(\varvec{u}(x) = \varvec{1}_i^{\alpha } \in \varvec{\Gamma }\) for (almost) every x, it can be mapped uniquely to the equivalent value in the unlifted (original) label space by

We refer to such functions \(\varvec{u}\) as sublabel-integral.

Lifting of the data term. Next, a lifted formulation of the data term is derived that in effect approximates the energy locally convex between neighboring labels, justifying the “sublabel-accurate” term. For the possibly non-convex data term of (1), the lifted – yet still non-convex – representation is defined as \(\varvec{\rho }(x,\cdot ):{\mathbb {R}}^l \mapsto \overline{{\mathbb {R}}}\),

Note that the domain is \({\mathbb {R}}^l\) and not just \(\varvec{\Gamma }\). Outside of the lifted label space \(\varvec{\Gamma }\) the lifted representation \(\varvec{\rho }\) is set to \(\infty \). Applying the definition of Legendre–Fenchel conjugates twice with respect to the second variable results in a relaxed – and convex – data term:

For explicit expressions of \(\varvec{\rho }^{**}\) in the linear and nonlinear case we refer to [31, Prop. 1,Prop. 2].

Lifting of the total variation regularizer. Lastly, a lifted representation of the (isotropic) total variation regularizer is established, building on the theory developed in the context of multiclass labeling approaches [15, 30]. The method heavily builds on representing the total variation regularizer with the help of Radon measures \(D\varvec{u}\). For further details we refer the reader to [3]. The lifted – and non-convex – integrand \(\varvec{\phi }: {\mathbb {R}}^{l\times d} \mapsto \overline{{\mathbb {R}}}\) is defined as

Applying the definition of Legendre–Fenchel conjugates twice results in a relaxed – and convex – regularization term:

where \(D\varvec{u}\) is the distributional derivative in the form of a Radon measure. For isotropic TV, it can be shown that, for \(\varvec{g}\in {\mathbb {R}}^{l\times d}\),

For more details, we refer to [31, Prop. 4] and [15].

Unfortunately isotropic TV in general does not allow to prove global optimality for the discretized system, as there is no known coarea-type formula for the discretized isotropic case. Therefore, we also consider the lifted anisotropic (\(L^1\)) TV, i.e., \(\text {TV}_{\text {an}}(u):=\int _\Omega \Vert Du\Vert _1\). With the same strategy as in the isotropic case (35), one obtains

Derivation of \(\varvec{K}_{\text {an}}\) Here the maximum norm in (36) originates as the dual norm to \(\Vert \cdot \Vert _1\). To see the equality to (37), let \(\varvec{q} \in {\mathbb {R}}^{l\times d}\). Then

Since \(q_i^j\) is a scalar, this is equivalent to

This shows the equality (36)–(37). \(\square \)

Together, the previous three sections allow us to formulate a version of the problem of minimizing the lifted energy (17) over the relaxed set (24) that is discretized in the label space \(\Gamma \):

Once the non-convex set \(\varvec{\Gamma }\) is relaxed to its convex hull, we obtain a fully convex lifting of problem TV-(1) similar to (9), which can now be spatially discretized.

3 Equivalence of the Lifted Bregman Iteration in the Continuous Setting

The (lifted) Bregman iteration greatly depends on the choice of a subgradient of the (lifted) total variation. Therefore, a main contribution in this paper is to establish a connection between the subdifferential of the original and lifted total variation. As a plausibility check for the lifted Bregman iteration we investigate whether the algorithm reproduces results of the original Bregman iteration; i.e., the “equivalence” of the original and lifted Bregman iteration in case of the ROF-(1) problem.

The exact definition of equivalence is not trivial, since there is potential ambiguity in choosing the subgradient in the lifted setting. We say that Alg. 1 and Alg. 2 are equivalent if we can find a “suitable” subgradient \({\tilde{p}}_{k-1}\) in the lifted setting that “relates” to a subgradient \(p_{k-1}\) in the original setting such that some \(u_k\) solves the original Bregman iteration and the indicator of its subgraph \(1_{u_k}\) solves the lifted Bregman iteration.

In this section, we consider the problem in the function space with continuous range \(\Gamma \), before moving on to the discretized setting in the later sections.

3.1 Subdifferential of the Total Variation

The Bregman iteration crucially requires elements from the subdifferential \(\partial J\) of the regularizer. Unfortunately, for the choice \(J=\text {TV}\), this requires to study elements from \(\text {BV}^*\), i.e., the dual space of \(\text {BV}\), which is not yet fully understood.

In order to still allow a reasonably accurate discussion, we make two simplifying assumptions: Firstly, we restrict ourselves to the case \(u\in W^{1,1}\) and \(\Omega \subseteq {\mathbb {R}}^2\), which allows to embed \(W^{1,1} \hookrightarrow L^2\) by the Sobolev embedding theorem [2, Thm. 10.9] Secondly, we will later assume that the subgradients can be represented as \(L^2\) functions. This is a rather harsh condition, and there are many subgradients of \(\text {TV}\) outside of this restricted class.

The assumptions allow us to formulate the major arguments in an intuitive way without being encumbered by too many technicalities; Unfortunately, it also restricts the validity of our arguments to this smaller set of problems.

The total variation (15) can be viewed as a support function:

Using the relation \(\sigma _C^*= \delta _{\text {cl } C}\) for convex sets C, its Fenchel conjugate is [19][Def. I.4.1, Example I.4.3]

According to [19, Prop. I.5.1] it holds \(p\in \partial \text {TV}(u)\) iff

The closure of \(\Psi \) with respect to the \(L^2\) norm (note that we have restricted ourselves to \(L^2\)) is [8, proof of Prop. 7]

where

Consequently, in our setting with \(u\in W^{1,1}\subset L^2\), we know that \(u^*\in L^2\) is a subgradient iff \(u^*= -{{\,\mathrm{div}\,}}\psi \) for some \(\psi \in W_0^2({{\,\mathrm{div}\,}};\Omega )\) which satisfies \(\Vert \psi \Vert _\infty \le 1\). Furthermore, it holds \(TV(u)=\langle u, -{{\,\mathrm{div}\,}}\psi \rangle _{L^2}\) [8, Prop. 7].

3.2 Lifted Bregman Iteration (Continuous Setting)

The lifted Bregman iteration (Alg. 2) is conceptualized in the lifted setting, i.e., applying the Bregman iteration on a lifted problem and choosing a subgradient of the lifted regularizer. In order to motivate our findings in the next two sections, we here introduce a third variation of the Bregman iteration. We perform the lifting on the original Bregman iteration, i.e., lift (6) for a given subgradient \(p_{k-1}\in \partial \text {TV}(u_{k-1})\).

We assume that \(u_{k-1}\in W^{1,1}\) and \(p_{k-1}\in L^2(\Omega )\) are given, such that \(p_{k-1}\in \partial \text {TV}(u_{k-1})\) holds. We regard the linear Bregman term as part of the data term. Using the theory of calibration-based lifting (21)-(23), the lifted version of (6) is:

The term \(t p_{k-1}(x)\) comes from the integrand in the Bregman term,

Let \(v^*\) be a global solution of (51) and let \(s \in [0,1)\) be arbitrary. According to [36, Thm. 3.1] the function \(1_{\{v^* > s\}}\) is the characteristic of the subgraph of a minimizer of the original Bregman iteration; i.e., this third variation of the Bregman iteration and the original Bregman iteration are in our terminology “equivalent”.

In order to make the bridge to the equivalence between Alg. 1 and Alg 2., we have to analyze how the lifting step operates on the linear Bregman term dependent on the given subgradient \(p_{k-1}\in \partial \text {TV}(u_{k-1})\) (Sect. 3.3). If the lifted given subgradient is a valid subgradient in the lifted setting of Alg. 2 (Sect. 3.4), it means we can find a “suitable” subgradient in the lifted setting that “relates” to a subgradient in the original setting and that Alg. 1 and Alg. 2 are equivalent.

3.3 Sufficient Condition for Equivalence (Continuous Case)

The following proposition shows that the Bregman iteration (Alg. 1) and the fully continuous formulation of the lifted Bregman iteration (Alg. 2) are equivalent as long as the subgradients used in either setting fulfil a certain condition. We have to assume unique solvability of the original problem, as is the case for strictly convex functionals such as ROF.

Proposition 1

Assume that the minimization problems (6) in the original Bregman iteration have unique solutions \(u_k\). Moreover, assume that the solutions \(v_k\) in the lifted iteration (10) are integral, i.e., indicator functions of subgraphs \(v_k = 1_u\) of some \(u\in W^{1,1}\). If the chosen subgradients \(p_{k-1}\in \partial \text {TV}(u_{k-1})\) and \({\tilde{p}}_{k-1} \in \partial \mathcal{TV}\mathcal{} (v_{k-1})\) satisfy

then the iterates \(v_k\) of the lifted Bregman iteration are the indicator functions of the subgraphs of the iterates \(u_k\) of the original Bregman iteration, i.e., \(v_k = 1_{u_k}\).

Proof

We first show that the Bregman iteration for the lifted energy with this specific choice of \({\tilde{p}}_{k-1}\) is simply the lifted Bregman energy for the subgradient \(p_{k-1}\) (note that this is not necessarily the case for an arbitrary subgradient of the lifted energy).

In order to do so, we substitute \(\tilde{\phi _t}(x,t) := \phi _t(x,t) - t p_{k-1}(x)\) in (51)–(53) and rewrite the problem as

with \({\mathcal {K}}_x\) from (52). If \({\tilde{p}}_{k-1}(\cdot ,t) = p_{k-1}(\cdot )\) as in the assumption, we see that the second term in (56) is simply \(-\left\langle {\tilde{p}}_{k-1}, v \right\rangle \), i.e., \({\mathcal {F}}_{\text {Breg}}(v) = {\mathcal {F}}(v)-\left\langle {\tilde{p}}_{k-1}, v\right\rangle \): Adding the linear Bregman term with this specific \({\tilde{p}}_{k-1}\) results in the same energy as lifting the original Bregman energy including the \(\left\langle p_{k-1}, u \right\rangle \) term.

Therefore, for any integral solution \(v=\varvec{1}_{u}\), the function u must be a solution of the original Bregman energy. Due to the uniqueness, this means \(u=u_k\). \(\square \)

3.4 Existence of Subgradients Fulfilling the Sufficient Condition

One question that remains is whether subgradients \({\tilde{p}}_{k-1}\) as required in Prop. 1 actually exist. In this section, we show that under the assumptions made in section 3.1 this is the case for \(J=\text {TV}\).

Lemma 1

For a subgradient \(p\in \partial \text {TV}(u)\), there exists a subgradient \({\tilde{p}} \in \partial \mathcal{TV}\mathcal{} (\varvec{1}_u)\) satisfying

Proof

For fixed \(p\in \partial \text {TV}(u)\subseteq BV^*(\Omega )\) we define \({\tilde{p}}\in BV^*(\Omega \times \Gamma )\) by \(\langle {\tilde{p}}, v \rangle := \int _\Gamma \left\langle p, v(\cdot ,t) \right\rangle dt\). If p is a function, this corresponds to setting \({\tilde{p}}\) constant copies of p along the \(\Gamma \) axis, i.e., \({\tilde{p}}(x,t):=p(x) \, \forall t\in \Gamma \). Similar to the previous paragraphs, if u is a \(W^{1,1}\) and therefore (in our setting) \(L^2\) function, and under the assumption of section 3.1 that \(p\in L^2(\Omega )\), we know that \({\tilde{p}}\in L^2(\Omega \times \Gamma )\) as defined due to the boundedness of \(\Gamma \).

Therefore, similar to [8, Prop. 7] and [19, Example I.4.3, Prop. I.5.1], \({\tilde{p}}\) is a subgradient of \(\mathcal{TV}\mathcal{}\) at \(\varvec{1}_{u}\) iff

From section 2.1, we recall

and, therefore,

where the closure is taken with respect to the \(L^2(\Omega \times \Gamma )\) norm. Therefore, if we can show that \({\tilde{p}}\in {{\,\mathrm{cl}\,}}\Phi _x\) and \(\mathcal{TV}\mathcal{}(1_{u}) = \left\langle {\tilde{p}}, 1_{u} \right\rangle \), by (59) we know that \({\tilde{p}}\in \partial \mathcal{TV}\mathcal{}(\varvec{1}_{u})\).

The fact that \({\tilde{p}} \in {{\,\mathrm{cl}\,}}\Phi _x\) follows directly from \(p \in {{\,\mathrm{cl}\,}}\Psi \) with \(\Psi \) as in (44): For every sequence \(\Psi \supset \left( p_n\right) \rightarrow p\) we have a sequence \(\psi _n\) of \(C_c^\infty (\Omega )\) functions with \(\Vert \psi (x)\Vert _2\le 1\) and \(p_n=-{{\,\mathrm{div}\,}}\psi _n\). Thus we can set \((\phi _x)_n(x,t):=\psi _n(x)\), so that \(-{{\,\mathrm{div}\,}}_x (\phi _x)_n\in \Phi _x\) from (60) and \(-{{\,\mathrm{div}\,}}_x (\phi _x)_n (x,t) = -{{\,\mathrm{div}\,}}\psi _n(x)=p_n(x)\). Thus \(-{{\,\mathrm{div}\,}}_x (\phi _x)_n(\cdot ,t)\rightarrow p\) in \(L^2(\Omega )\) for all \(t\in \Gamma \). Due to the boundedness of \(\Gamma \), this implies \(-{{\,\mathrm{div}\,}}_x (\phi _x)_n \rightarrow {\tilde{p}}\) in \(L^2(\Omega \times \Gamma )\), which shows \({\tilde{p}}\in {{\,\mathrm{cl}\,}}\Phi _x\) as desired.

In order to show the final missing piece in (59), i.e., \(\mathcal{TV}\mathcal{}(1_{u}) = \left\langle {\tilde{p}}, 1_{u} \right\rangle \), note that

The crucial step is \((*)\), where we again used a coarea-type formula for linear terms. \(\square \)

Therefore, by defining \({\tilde{p}}\) based on p as above, we have recovered a subgradient of the lifted regularizer \(\mathcal{TV}\mathcal{}\) of the form required by Prop. 1.

4 Equivalence in the Half-Discretized Formulation

In the previous section, we argued in the function space, i.e., \(u\in W^{1,1}(\Omega \times \Gamma )\). While theoretically interesting, this leaves the question whether a similar equivalence between the original and lifted Bregman iterations can also be formulated after discretizing the range; i.e., in the sublabel-accurate lifted case.

For simplicity, the following considerations are formal due to the mostly pointwise arguments. However, they can equally be understood in the spatially discrete setting with finite \(\Omega \), where arguments are more straightforward. A full discussion of the general case would involve a large functional-analytic and measure-theoretic overhead. In particular, the objects in the subdifferential generally cannot be assumed to be functions, which makes discussion hard and non-intuitive. In this paper, we follow a formal line of argument as is also common in the related literature. For readability, we consider a fixed \(x \in \Omega \) and omit x in the arguments.

4.1 Lifted Bregman Iteration (Discretized Case)

Analogous to our argumentation in the continuous setting, we first perform the (sublabel-accurate) lifting on the equation from the original Bregman iteration (6), assuming that a subgradient \(p_{k-1}\in \partial \text {TV}(u_{k-1})\) is given. We show that the extended data term \(H_\text {Breg}(u) := H(u) - \left\langle p_{k-1}, u \right\rangle \) has a lifted representation of the form \(\varvec{H}_\text {Breg}(\varvec{u}) := \varvec{H}(\varvec{u}) - \left\langle p_{k-1}\varvec{\tilde{\gamma }}, \varvec{u} \right\rangle \), similar to (10). Again, it is not clear whether \(p_{k-1}\varvec{\tilde{\gamma }}\) is a subgradient of the lifted total variation. In the following, in a slight abuse of notation, we use pointwise arguments for fixed \(x\in \Omega \), e.g., \(u=u(x),\) \(p=p(x)\), etc.

Proposition 2

Assume \(\rho _1,\rho _2,h: \Gamma \mapsto \overline{{\mathbb {R}}}\) with

where \(\rho _1\) and \(\rho _2\) should be understood as two different data terms in (1). Define

Then, for the lifted representations \(\varvec{\rho }_1,\varvec{\rho }_2,\varvec{h} : {\mathbb {R}}^l \mapsto \overline{{\mathbb {R}}}\) in (30), it holds

Proof

We deduce the biconjugate of \(\varvec{\rho }_2\) step-by-step and show that the final expression implies the anticipated equality. According to (30) the lifted representation of \(\rho _2\) is

We use the definition of the Fenchel conjugate and note that the supremum is attained for some \({{\textbf {u}}}\in \varvec{\Gamma }\):

The definition of \(\varvec{\rho }_2\) and \(\rho _2\) lead to:

Using \(\tilde{\varvec{\gamma }}\) as in (67) we can furthermore express \(p\gamma _j^{\beta } \) in terms of \({{\textbf {1}}}_j^{\beta }\):

Next we compute the biconjugate of \(\varvec{\rho }\):

By substituting \({{\textbf {z}}} := {{\textbf {v}}} + p \tilde{\varvec{\gamma }} \) we get

In reference to [31, Prop. 2] we see that the expression \( \langle {{\textbf {w}}}, p \tilde{\varvec{\gamma }} \rangle \) is in fact \({{\textbf {h}}}^{**}({{\textbf {w}}})\). This concludes the proof of Thm. 2. \(\square \)

4.2 Sufficient Condition for Equivalence (Discretized Setting)

In the previous section, we performed the lifting on (6) of the original Bregman iteration for a fixed \(p_{k-1}\). In this section, we show that – under a sufficient condition on the chosen subgradients – we can equivalently perform the Bregman iteration on the lifted problem where a subgradient \(\varvec{p}_{k-1}\) is chosen in the lifted setting (Alg. 2). This is the semi-discretized version of Prop. 1:

Proposition 3

Assume that the minimization problems (6) in the original Bregman iteration have unique solutions. Moreover, assume that in the lifted iteration, the solutions \(\varvec{u}_k\) of (10) in each step satisfy \(\varvec{u}_k(x)\in \varvec{\Gamma }\), i.e., are sublabel-integral. If at every point x the chosen subgradients \(p_{k-1}\in \partial J(u_{k-1})\) and \(\tilde{\varvec{p}}_{k-1} \in \partial \varvec{J}(\varvec{u}_{k-1})\) satisfy

with \(\tilde{\varvec{\gamma }}\) as in (67), then the lifted iterates \(\varvec{u}_k\) correspond to the iterates \(u_k\) of the classical Bregman iteration (6) according to (29).

Proof of Proposition 3

We define the extended data term

which incorporates the linear term of the Bregman iteration. Using Prop. 2, we reach the following lifted representation:

Hence the lifted version of (6) is

Comparing this to (10) shows that the minimization problem in the lifted iteration is the lifted version of (6) if the subgradients \(p_{k-1}\in \partial J(u_{k-1})\) and \(\tilde{\varvec{p}}_{k-1} \in \partial \varvec{J}(\varvec{u}_{k-1})\) satisfy \( \tilde{\varvec{p}}_{k-1} = p_{k-1} \tilde{\varvec{\gamma }}\). In this case, since we have assumed that the solution of the lifted problem (10) is sublabel-integral, it can be associated via (29) with the solution of the original problem (6), which is unique by assumption.\(\square \)

Thus, under the condition of the proposition, the lifted and unlifted Bregman iterations are equivalent.

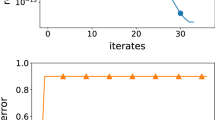

Equivalence of classical and lifted Bregman on a convex problem. On the convex ROF-(1) problem with anisotropic TV, a plain implementation of the classical Bregman iteration as in Alg. 1 (top row) and a naïve implementation of the lifted generalization as in Alg. 2 with lifted subgradients taken unmodified from the previous iteration (middle row) show clear differences. If the lifted subgradients are first transformed as in Prop. 3, the lifted iterates (bottom row) are visually indistinguishable from the classical iteration in this fully convex case. Note that the subgradients in the lifted setting were chosen without any knowledge of the subgradients in the original setting

5 Fully Discretized Setting

In this section, we consider the spatially discretized problem on a finite discretized domain \(\Omega ^h\) with grid spacing h. In particular, we will see that the subgradient condition in Prop. 3 can be met in case of anisotropic TV and how such subgradients can be obtained in practice.

5.1 Finding a Subgradient

The discretized, sublabel-accurate relaxed total variation is of the form

with \(\varvec{K}\) defined by (35) or (36)-(37) and \(\nabla \) denoting the discretized forward-difference operator. By standard convex analysis ([38, Thm. 23.9], [39, Cor. 10.9], [39, Prop. 11.3]) we can show that if \(\varvec{q}^h\) is a maximizer of (86), then \(\varvec{p}^h := \nabla ^{\top }\varvec{q}^h\) is a subgradient of \(J^h(\nabla \varvec{u}^h)\). Thus, the step of choosing a subgradient (11) boils down to \(\varvec{p}_k^h = \nabla ^\top \varvec{q}_k^h\) and for the dual maximizer \(\varvec{q}_{k-1}^h\) of the last iteration we implement (10) as:

5.2 Transformation of Subgradients

In Prop. 3 we formulated a constraint on the subgradients for which the original and lifted Bregman iteration are equivalent. While this property is not necessarily satisfied if the subgradient \(\varvec{p}^h_{k-1}\) is chosen according to the previous paragraph, we will now show that any such subgradient can be transformed into another valid subgradient that satisfies condition (81), in analogy to the construction of the subgradient \({\bar{p}}\) in Sect. 3.4.

Consider a pointwise sublabel-integral solution \(\varvec{u}^h_k\) with subgradient \(\varvec{p}^h_k := \nabla ^\top \varvec{q}^h_k \in \partial \varvec{J}^h(\varvec{u}^h_k)\) for \(\varvec{q}^h_k(\cdot ) \in \varvec{K}\) being a maximizer of (86). We define a pointwise transformation: For fixed \(x^m \in \Omega ^h\) and \(\varvec{u}^h_k(x^m) = \varvec{1_i^\alpha }\), let \((\varvec{q}^h_k(x^m))^i \in {\mathbb {R}}^d\) denote the i-th row of \(\varvec{q}^h_k(x^m)\) corresponding to the i-th label as prescribed by \(\varvec{u}^h_k(x^m) = \varvec{1}_i^\alpha \). Both in the isotropic and anisotropic case the transformation

returns an element of the set \(\varvec{K}\), i.e., \(\varvec{K}_{\text {iso}}\) or \(\varvec{K}_{\text {an}}\). In the anisotropic case we can furthermore show that \(\tilde{\varvec{q}}^h_k\) also maximizes (86) and therefore the transformation gives a subgradient \(\tilde{\varvec{p}}^h_k := \nabla ^\top \tilde{ \varvec{q}}^h_k \in \partial \varvec{J}^h(\varvec{u}^h_k)\) of the desired form (81). The restriction to the anisotropic case is unfortunate but necessary due to the fact that the coarea formula does not hold in the discretized case for the usual isotropic discretizations.

Proposition 4

Consider the anisotropic TV-regularized case (36)-(37). Assume that the iterate \(\varvec{u}^h_{k}\) is sublabel-integral. Moreover, assume that \(\varvec{p}^h_k := \nabla ^\top \varvec{q}^h_k\) is a subgradient in \(\partial \varvec{J}^h(\varvec{u}^h_{k})\) and define \(\tilde{\varvec{q}}^h_k\) pointwise as in (89). Then \(\tilde{\varvec{p}}^h_k := \nabla ^\top \tilde{ \varvec{q}}^h_k\) is also a subgradient and furthermore of the form

where \(p^h_{k}\) is a subgradient in the unlifted case, i.e., \(p^h_{k}\in \partial J^h(u^h_{k})\).

Proof of Proposition 4

The proof consists of two parts. First, we show that the transformation (89) of any subgradient \(\varvec{p}_k^h\) in the lifted setting leads to another valid subgradient \(\tilde{\varvec{p}}_k^h\) in the lifted setting of the form (90). Second, we show that the prefactor \(p^h_{k}\) in (90) is a valid subgradient in the unlifted setting.

In the anisotropic case the spatial dimensions are uncoupled, therefore w.l.o.g. assume \(d=1\). Consider two neighboring points \(x^m\) and \(x^{m+1}\) with \(\varvec{u}^h_k(x^m) = \varvec{1}_i^\alpha \) and \(\varvec{u}^h_k(x^{m+1}) = \varvec{1}_j^\beta \). Applying the forward difference operator, we have \(h \nabla \varvec{u}^h_k(x^m)=\)

Maximizers \(\varvec{q}^h_k(x^m) \in \varvec{K}_{\text {an}}\) of the dual problem (86) are exactly all vectors of the form \(\varvec{q}^h_k(x^m)=\)

The elements marked with \(*\) can be chosen arbitrarily as long as \(\varvec{q}^h_k(x^m)\in \varvec{K}_{\text {an}}\). Due to this special form, the transformation (89) leads to \( \tilde{\varvec{q}}^h_k(x^m) = \pm \tilde{\varvec{\gamma }}\) depending on the case. Crucially, this transformed vector is another equally valid choice in (92) and therefore (89) returns another valid subgradient \(\tilde{\varvec{p}}^h_k = \nabla ^\top \tilde{\varvec{q}}^h_k\).

In order to show that \(p_k^h= \nabla ^\top q_k^h\) for \(q_k^h(\cdot )= \pm 1\) is a subgradient in the unlifted setting we use the same arguments. To this end, we use the sublabel-accurate notation with \(L=2\). The “lifted” label space is \(\varvec{\Gamma } = [0,1]\), independently of the actual \(\Gamma \subset {\mathbb {R}}\); see [31, Prop. 3]. Then with \(u^h_k(x^m) = \gamma _i^\alpha \) and \(u^h_k(x^{m+1}) = \gamma _j^\beta \) (corresponding to \(\varvec{1}_i^\alpha \) and \(\varvec{1}_j^\beta \) from before), applying the forward difference operator \(\nabla u^h_k(x^m) = \frac{1}{h} ( \gamma _j^\beta - \gamma _i^\alpha )\) shows that dual maximizers are \(q^h_k(x^m) = {{\,\mathrm{sgn}\,}}(\gamma _j^\beta - \gamma _i^\alpha ) | \varvec{\Gamma } | = \pm 1\). It can be seen that the algebraic signs coincide pointwise in the lifted and unlifted setting. Thus \(p^h_{k}\) in (90) is of the form \(p^h_k = \nabla ^\top q_k^h\) and in particular a subgradient in the unlifted setting. \(\square \)

6 Numerical Results

In this section, we investigate the equivalence of the original and lifted Bregman iteration for the ROF-(1) problem numerically. Furthermore, we present a stereo-matching example which supports our conjecture that the lifted Bregman iteration for variational models with arbitrary data terms can be used to decompose solutions into eigenfunctions of the regularizer.

6.1 Convex Energy with Synthetic Data

We compare the results of the original and lifted Bregman iteration for the ROF-(1) problem with \(\lambda =20\), synthetic input data and anisotropic TV regularizer. In the lifted setting, we compare implementations with and without transforming the subgradients as in (89). The results shown in Fig. 3 clearly support the theory: Once subgradients are transformed as in Prop. 3, the iterates agree with the classical, unlifted iteration. We want to emphasize, that we chose the subgradients in the original and lifted setting arbitrarily and independently, i.e. without any knowledge of the other.

A subtle issue concerns points where the minimizer of the lifted energy is not sublabel-integral, i.e., cannot be easily identified with a solution of the original problem. This impedes the recovery of a suitable subgradient as in (29), which leads to diverging Bregman iterations. We found this issue to occur in particular with isotropic TV discretization, which does not satisfy a discrete version of the coarea formula – which is used to prove in the continuous setting that solutions of the original problem can be recovered by thresholding – but is also visible to a smaller extent around the boundaries of the objects in Fig. 3, especially when subgradients are not modified.

6.2 Non-Convex Stereo Matching with Artificial Data

Artificial data for stereo matching. The backgrounds of the input images \(I_1\) and \(I_2\) are chosen to be identical. Only within the three circles \(I_1\) and \(I_2\) differ. The information within the circles is shifted four pixels sideways; the circles themselves stay in place. The black square marks the area of the close-ups of \(I_1\) (top) and \(I_2\) (bottom)

Lifted Bregman on stereo matching problem with artificial input data. TV-(1) problem with data term (93). We use \(\lambda = 14 \) and isotropic TV (top), and \(\lambda = 7 \) and anisotropic TV (bottom), respectively. Figure 4 describes our input data in the isotropic setting (top). In the anisotropic setting we use square cutouts instead (bottom left). For this non-convex data term, the lifted Bregman iteration progressively adds components corresponding to eigenfunctions of the TV regularizer to the depth map that. Components associated with larger eigenvalues appear later

Lifted Bregman for non-convex nonlinear scale space. Shown are the Bregman iterations for the non-convex TV-(1) stereo matching problem with data term (95), evaluated on the Umbrella and Backpack data set from the Middlebury stereo datasets [41]. At \(k=1\) the solution is a coarse approximation of the depth field. As the iteration advances, details are progressively recovered according to scale. Although the problem is not of the convex and positively one-homogeneous form OH-(1) classically associated with the inverse scale space flow, the results show a qualitative similarity to a nonlinear scale space for this difficult non-convex problem

In the following two toy examples, we empirically investigate how properties of the Bregman iteration carry over to the lifted Bregman iteration for arbitrary (non-convex) data terms. We consider a relatively simple stereo-matching problem, namely TV-(1) with the non-convex data term

Here, \(I_1\) and \(I_2\) are two given input images and \(h_\tau (\alpha ) := \min \left\{ \tau , \alpha \right\} \) is a thresholding function. We assume that the input images are rectified, i.e., the epipolar lines in the images align, so that the unknown – but desired – displacement of points between the two images is restricted to the \(x_2\) axis and can be modeled as a scalar function u.

A typical observation when using nonlinear scale space method is that components in the solution corresponding to nonlinear eigenfunctions of the regularizer appear at certain points in time depending on their eigenvalue.

We thus construct \(I_1\) and \(I_2\) such that the solution \({\tilde{u}}\) of \(\arg \min _u \rho (x,u(x))\) is clearly the sum of eigenfunctions of the isotropic [anisotropic] \(\text {TV}\), i.e., multiples of indicator functions of circles [squares].

In the following we elaborate the isotropic setting. For some non-overlapping circles \(B_{r_i}(m_i)\) with centers \(m_i\) and radii \(r_i\) we would like the solution

Fig. 4 shows the corresponding data; note that there is no displacement except inside the circles, where it is non-zero but constant.

In analogy to the convex ROF example in Fig. 3 and the theory of inverse scale space flow, we would expect the following property to hold for the lifted Bregman iteration: The solutions – here the depth maps of the artificial scene – returned in each iteration of the lifted Bregman iteration progressively incorporate the discs (eigenfunctions of isotropic TV) according to their radius (associated eigenvalue); the biggest disc should appear first, the smallest disc last.

Encouragingly, these expectations are also observed in this non-convex case, see Fig. 5. This suggests that the lifted Bregman iteration could be useful to decompose the solution of a variational problem with arbitrary data term with respect to eigenfunctions of the regularizer.

6.3 Non-Convex Stereo Matching with Real-World Data

We also computed results for a stereo-matching problem with real life data. We used TV-(1) and the data term [31]

Here, W(x) denotes a patch around x, \(h_\tau \) is the truncation function with threshold \(\tau \) and \(d_j\) is the absolute gradient difference

This data term is non-convex and nonlinear in u. We applied the lifted Bregman iteration on three data sets [41] using \(L=5\) labels, the isotropic TV regularizer and untransformed subgradients. The results can be seen in Fig. 1 (Motorbike: \(\lambda =20\), \(k=30\)) and Fig. 6 (Umbrella: \(\lambda = 10\); Backpack: \(\lambda = 25\)). We also ran the experiment with an anisotropic TV regularizer as well as transformed subgradients. Overall, the behavior was similar, but transforming the subgradients led to more pronounced jumps compared to the isotropic case.

Again, the evolution of the depth map throughout the iteration is reminiscent of an inverse scale space flow. The first solution is a smooth approximation of the depth proportions and as the iteration continues, finer structures are added. This behavior is also visible in the progression of the horizontal profiles depicted in Fig. 1.

7 Conclusion

We have proposed a combination of the Bregman iteration and a lifting approach with sublabel-accurate discretization in order to extend the Bregman iteration to non-convex energies such as stereo matching. If a certain form of the subgradients can be ensured – which can be shown under some assumptions in the continuous case as well as the discretized case in particular for total variation regularization – the iterates agree in theory and in practice for the classical convex ROF-(1) problem. In the nonconvex case, the numerical experiments show behavior that is very similar to what one expects in classical inverse scale space. This opens up a number of interesting theoretical questions, such as the decomposition into nonlinear eigenfunctions, as well as practical applications such as non-convex scale space transformations and nonlinear filters for arbitrary nonconvex data terms.

References

Alberti, G., Bouchitté, G., Dal Maso, G.: The calibration method for the mumford-shah functional and free-discontinuity problems. Calc. Var. Partial. Differ. Equ. 16(3), 299–333 (2003)

Alt, H.W.: Linear functional analysis. An application oriented introduction (1992)

Ambrosio, L., Fusco, N., Pallara, D.: Functions of bounded variation and free discontinuity problems. Oxford mathematical monographs (2000)

Aubert, G., Kornprobst, P.: Mathematical problems in image processing: partial differential equations and the calculus of variations, vol. 147. Springer Science & Business Media (2006)

Bednarski, D., Lellmann, J.: Inverse scale space iterations for non-convex variational problems using functional lifting. In: Elmoataz, A., Fadili, J., Quéau, Y., Rabin, J., Simon, L. (eds.) Scale Space and Variational Methods in Computer Vision, pp. 229–241. Springer International Publishing, Cham (2021)

Benning, M., Burger, M.: Ground states and singular vectors of convex variational regularization methods. arXiv preprint arXiv:1211.2057 (2012)

Bouchitté, G., Fragalà, I.: A duality theory for non-convex problems in the calculus of variations. Arch. Ration. Mech. Anal. 229(1), 361–415 (2018)

Bredies, K., Holler, M.: A pointwise characterization of the subdifferential of the total variation functional. arXiv preprint arXiv:1609.08918 (2016)

Bregman, L.M.: The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. USSR Comput. Math. Math. Phys. 7(3), 200–217 (1967)

Bungert, L., Burger, M., Chambolle, A., Novaga, M.: Nonlinear spectral decompositions by gradient flows of one-homogeneous functionals. Anal. PDE 14(3), 823–860 (2021)

Burger, M., Gilboa, G., Moeller, M., Eckardt, L., Cremers, D.: Spectral decompositions using one-homogeneous functionals. SIAM J. Imag. Sci. 9(3), 1374–1408 (2016)

Burger, M., Gilboa, G., Osher, S., Xu, J., et al.: Nonlinear inverse scale space methods. Commun. Math. Sci. 4(1), 179–212 (2006)

Burger, M., Eckardt, L., Gilboa, G., Moeller, M.: Spectral representations of one-homogeneous functionals. In: International Conference on Scale Space and Variational Methods in Computer Vision. pp. 16–27. Springer (2015)

Cai, J., Osher, S., Shen, Z.: Linearized Bregman iterations for compressed sensing. Math. Comput. 78(267), 1515–1536 (2009)

Chambolle, A., Cremers, D., Pock, T.: A convex approach for computing minimal partitions (2008)

Chambolle, A.: Convex representation for lower semicontinuous envelopes of functionals in L1. J. Convex Anal. 8(1), 149–170 (2001)

Chan, T.F., Esedoglu, S., Nikolova, M.: Algorithms for finding global minimizers of image segmentation and denoising models. SIAM J. Appl. Math. 66(5), 1632–1648 (2006)

Chan, T.F., Vese, L.A.: Active contours without edges. IEEE Trans. Image Proc. 10(2), 266–277 (2001)

Ekeland, I., Temam, R.: Convex Analysis and Variational Problems, vol. 28. Siam (1999)

Gilboa, G.: Semi-inner-products for convex functionals and their use in image decomposition. J. Math. Imaging Vis. 57(1), 26–42 (2017)

Gilboa, G.: A spectral approach to total variation. In: International Conference on Scale Space and Variational Methods in Computer Vision. pp. 36–47. Springer (2013)

Gilboa, G.: A total variation spectral framework for scale and texture analysis. SIAM J. Imaging Sci. 7(4), 1937–1961 (2014)

Gilboa, G., Moeller, M., Burger, M.: Nonlinear spectral analysis via one-homogeneous functionals: overview and future prospects. J. Math. Imaging Vis. 56(2), 300–319 (2016)

Goldstein, T., Osher, S.: The split Bregman method for L1-regularized problems. SIAM J. Imag. Sci. 2(2), 323–343 (2009)

Grossmann, T.G., Korolev, Y., Gilboa, G., Schönlieb, C.B.: Deeply learned spectral total variation decomposition. Adv. Neural. Inf. Process. Syst. 33, 12115–12126 (2020)

Hait, E., Gilboa, G.: Spectral total-variation local scale signatures for image manipulation and fusion. IEEE Trans. Image Process. 28(2), 880–895 (2018)

Hoeltgen, L., Breuß, M.: Bregman iteration for correspondence problems: A study of optical flow. arXiv preprint arXiv:1510.01130 (2015)

Ishikawa, H.: Exact optimization for Markov random fields with convex priors. Patt. Anal. Mach. Intell. 25(10), 1333–1336 (2003)

Ishikawa, H., Geiger, D.: Segmentation by grouping junctions. In: CVPR. vol. 98, p. 125. Citeseer (1998)

Lellmann, J., Schnörr, C.: Continuous multiclass labeling approaches and algorithms. SIAM J. Imaging Sci. 4(4), 1049–1096 (2011)

Möllenhoff, T., Laude, E., Möller, M., Lellmann, J., Cremers, D.: Sublabel-accurate relaxation of nonconvex energies. CoRR abs/1512.01383 (2015)

Mollenhoff, T., Cremers, D.: Sublabel-accurate discretization of nonconvex free-discontinuity problems. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 1183–1191 (2017)

Mollenhoff, T., Cremers, D.: Lifting vectorial variational problems: a natural formulation based on geometric measure theory and discrete exterior calculus. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. pp. 11117–11126 (2019)

Osher, S., Burger, M., Goldfarb, D., Xu, J., Yin, W.: An iterative regularization method for total variation-based image restoration. Multiscale Model. Simul. 4(22), 460–489 (2005)

Osher, S., Mao, Y., Dong, B., Yin, W.: Fast linearized Bregman iteration for compressive sensing and sparse denoising. arXiv preprint arXiv:1104.0262 (2011)

Pock, T., Cremers, D., Bischof, H., Chambolle, A.: Global solutions of variational models with convex regularization. SIAM J. Imag. Sci. 3(4), 1122–1145 (2010)

Pock, T., Schoenemann, T., Graber, G., Bischof, H., Cremers, D.: A convex formulation of continuous multi-label problems pp. 792–805 (2008)

Rockafellar, R.T.: Convex analysis, vol. 28. Princeton university press (1970)

Rockafellar, R.T., Wets, R.J.: Variational analysis, vol. 317. Springer Science & Business Media (2009)

Rudin, L., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Physica D 60(1–4), 259–268 (1992)

Scharstein, D., Hirschmüller, H., Kitajima, Y., Krathwohl, G., Nešić, N., Wang, X., Westling, P.: High-resolution stereo datasets with subpixel-accurate ground truth. In: German conference on pattern recognition. pp. 31–42. Springer (2014)

Scherzer, O., Grasmair, M., Grossauer, H., Haltmeier, M., Lenzen, F.: Variational Methods in Imaging, Applied Mathematical Sciences, vol. 167. Springer (2009)

Seitz, S.M., Curless, B., Diebel, J., Scharstein, D., Szeliski, R.: A comparison and evaluation of multi-view stereo reconstruction algorithms. In: 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06). vol. 1, pp. 519–528. IEEE (2006)

Vogt, T., Haase, R., Bednarski, D., Lellmann, J.: On the connection between dynamical optimal transport and functional lifting. arXiv preprint arXiv:2007.02587 (2020)

Zach, C., Gallup, D., Frahm, J.M., Niethammer, M.: Fast global labeling for real-time stereo using multiple plane sweeps. In: Vis. Mod. Vis. pp. 243–252 (2008)

Zeune, L., van Dalum, G., Terstappen, L.W., van Gils, S.A., Brune, C.: Multiscale segmentation via bregman distances and nonlinear spectral analysis. SIAM J. Imag. Sci. 10(1), 111–146 (2017)

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The authors acknowledge support through DFG grant LE 4064/1-1 “Functional Lifting 2.0: Efficient Convexifications for Imaging and Vision”and NVIDIA Corporation.

Code and results of our experiments are also available on https://github.com/danbedmic/iss_non_convex

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bednarski, D., Lellmann, J. Inverse Scale Space Iterations for Non-Convex Variational Problems: The Continuous and Discrete Case. J Math Imaging Vis 65, 124–139 (2023). https://doi.org/10.1007/s10851-022-01125-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10851-022-01125-8