Abstract

This paper shows Korean speakers’ strong preference for incremental structure building based on the following core phenomena: (1) left–right asymmetry; (2) pre-verbal structure building and a strong preference for early association. This paper argues that these phenomena reflect the procedural aspects of linguistic competence, which are difficult to explain within non-procedural grammar formalisms. Based on these observations, I argue for the necessity of a grammar formalism that adopts left-to-right incrementality as a core property of the syntactic architecture. In particular, I aim to show the role of (1) constructive particles; (2) prosody; and (3) structural routines in incremental Korean structure building. Though the nature of this discussion is theory-neutral, in order to formalise this idea I will adopt Dynamic Syntax [DS: Kempson et al. (Dynamic syntax: the flow of language understanding, Blackwell, Oxford, 2001); Cann et al. (The dynamics of language. Elsevier, Oxford, 2005)] in this paper.

1 Core Data: Puzzles

This paper aims to explore two phenomena in Korean: (1) left–right asymmetry and (2) pre-verbal structure building and a strong preference for early association. In particular, I investigate the role of (1) constructive particles; (2) prosody; and (3) structural routines in yielding incremental structure building. In order to explain the incrementality presented in this paper, I shall adopt the Dynamic Syntax framework [DS: Kempson et al. (2001), Cann et al. (2005)]. The key questions I will address are as follows:

(1) Key questions

(a) Freedom at Left and Restriction at Right (1.1)

(i) Why are expressions at the left periphery interpreted more freely than those at the right periphery?

(b) Pre-verbal structure building and early syntactic association (1.2)

(i) How can Korean speakers build a structure without a verb?

(ii) Why do Korean speakers strongly prefer early syntactic association and put the arguments in the same local domain?

(iii) What happens when prosody intervenes?

In this section, I will present core data corresponding to each question.

1.1 Leftward Freedom and Rightward Restriction

In Korean, expressions at the left periphery can be interpreted more flexibly than those at the right. For instance, the sentence-initial dative NP kilin-hanthey ‘to a giraffe’ in (2) can be interpreted in any of three possible structures, hosted by the verbs malhayss-eyo ‘said’ (= 2a), yaksokhayssta ‘promised’ (= 2b) or mantwule-cwukeyssta ‘make and give’ (= 2c).

(2) | Dative NP can be interpreted within three different structures | ||||

kilin-hanthey | kangaci-nun | twayci-ka | mas-iss-nun | cookie-lul | |

giraffe-DAT | puppy-TOP | pig-NOM | taste-exist-ADN | cookie-ACC | |

mantwule-cwukeyssta-ko | yaksokhayssta-ko | malhayss-eyo. | |||

make-give-COMP | promised-COMP | said-DECL | |||

(a) ‘A puppy said to a giraffe that a pig promised that he will make him a delicious cookie.’ | |||||

(b) ‘A puppy said to somebody that a pig promised to a giraffe that he will make and give him a delicious cookie.’ | |||||

(c) ‘A puppy said to somebody that a pig promised that he will make and give a giraffe a delicious cookie.’ | |||||

However, the interpretation of expressions becomes gradually more restricted towards the right edge of the sentence. Immediately before or after the final matrix verb, the dative NP can only be interpreted within the matrix clause, as in (3) and (4).

(3) | Dative NP located before the matrix verb is preferred to be interpreted within the matrix structure | |||

kangaci-nun | twayci-ka mas-iss-nun | cookie-lul | mantwule-cwukeyssta-ko | |

puppy-TOP | pig-NOM taste-exist-ADN | cookie-ACC | make-will.give-COMP | |

yaksokhayssta-ko | kilin-hanthey | malhayss-eyo. | ||

promised-COMP | giraffe-DAT | said-DECL | ||

(a) ‘A puppy said to a giraffe that a pig promised that he will make and give him a delicious cookie.’ | ||||

(b) ‘A puppy said to somebody that a pig promised to a giraffe that he will make and give him a delicious cookie.’ (this reading is very hard to get.) | ||||

(c) ‘A puppy said to somebody that a pig promised that he will make and give a giraffe a delicious cookie.’ (this reading is very hard to get.) | ||||

(4) | Dative NP located after the matrix verb is preferred to be interpreted within the matrix structure | |||

kangaci-nun | twayci-ka | mas-iss-nun cookie-lul | mantwule-cwukeyssta-ko | |

puppy-TOP | pig-NOM taste-exist-ADN cookie-ACC | make-will.give-COMP | ||

yaksokhayssta-ko | malhayss-eyo | kilin-hanthey |

promised-COMP | said-DECL | giraffe-DAT |

(a) ‘A puppy said to a giraffe that a pig promised that he will make and give him a delicious cookie.’ | ||||

(b) ‘A puppy said to somebody that a pig promised to a giraffe that he will make and give him a delicious cookie.’ (this reading is very hard to get.) | ||||

(c) ‘A puppy said to somebody that a pig promised that he will make and give a giraffe a delicious cookie.’ (this reading is very hard to get.) | ||||

1.1.1 Grammaticality Judgements

I asked Korean native speakers (N = 33) to judge the naturalness of the pairs of examples given in (5) on a scale of 1–3 (1 refers to very natural, 2 refers to natural, 3 refers to very unnatural). The result shows that 70% of the participants gave the score 3 for ditransitive-transitive sequence examples as in (5b), whereas 30% of the participants gave the score 3 for transitive-ditransitive sequence examples as in (5a). To examine whether the difference between the two groups is statistically significant, an independent t-test was conducted. The homoscedasticity of the two groups was checked before conducting the independent t-test, and the result showed no violation of the homoscedasticity assumption. The result of the independent t-test shows that there was a significant difference between the means of the two groups.

(5) | (a) Transitive-Ditransitive verbs followed by a dative NP | |||||

Sena-ka | khiwi-lul | mekess-ta-ko | Mina-ka malhaysse | Miho-hanthey | |||

Sena-NOM kiwi-ACC ate-DECL-COMP M-NOM said | M-DAT | |||

Intended reading: ‘Mina said to Miho that Sena ate a kiwi.’ | ||||

(b) Ditransitive-Transitive verbs followed by a dative NP | ||||||

???Sena-ka khiwi-lul | cwuess-ta-ko | Mina-ka sayngkakhaysse Miho-hanthey | ||||

Sena-NOM kiwi-ACC gave-DECL-COMP M-NOM thought | M-DAT | |||||

Intended reading: ‘Mina thought Sena gave Miho a kiwi.’ | ||||||

This result confirms our earlier discussion on interpretational restriction at the right periphery or post-verbal position in Korean. That is, (5b)-like examples are unnatural because the dative NP Miho-hanthey is expected to be interpreted not within any arbitrary structure but within the closest structure, that is, the matrix clause. This phenomenon is not uncommon in Korean as we shall now see.
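The kind of comparison reported in Sect. 1.1.1 can be sketched as follows. This is only a minimal illustration of a pooled-variance (Student's) independent t statistic: the ratings are invented placeholders on the 1–3 scale, not the responses collected in the study, and the function name `pooled_t` is hypothetical. The homoscedasticity check and the p-value lookup are left out.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(a, b):
    """Student's independent two-sample t statistic, assuming equal variances."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / standard_error, df


# Placeholder ratings (1 = very natural, 3 = very unnatural); NOT the study's data.
ratings_5a = [1, 1, 2, 2, 3, 1]  # transitive-ditransitive items, as in (5a)
ratings_5b = [3, 3, 2, 3, 3, 2]  # ditransitive-transitive items, as in (5b)

t, df = pooled_t(ratings_5a, ratings_5b)
print(f"t = {t:.3f}, df = {df}")
```

A negative t here would simply mean that the (5a)-type items received lower (more natural) scores on average than the (5b)-type items in the placeholder data.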

1.1.2 Post-Verbal Expression and Closing-Off Prosody

Korean is considered to be a strictly verb-final language. Yet, it is very easy to find post-verbal expressions. In the SILIC Corpus, Kim (2010) extracted 731 examples where expressions occur after the verbal cluster. Kim found that 98% of the time, only one expression followed the verbal cluster at the end. Kim also found that 81.5% of the time the post-verbal expression consisted of two to four syllables. Kiaer (2007, 2014) showed how the post-verbal expression and the preceding verb tend to form one prosodic constituent. This shows that even if a verb plays a crucial role in confirming the end of a structure, prosody also plays a crucial role in confirming the completion of the structure building.

The interpretational asymmetry between the left and right edges of a structure has been problematic since it was first discussed by Ross (1967) as the Right Roof Constraint. However, this phenomenon is not a reflection of some exceptional property of natural language; instead, it is a natural consequence of incremental structure building (see also Wasow 2002 for a similar discussion). If we disregard the left-to-right growth property of syntactic structure, it is difficult to provide an explanatorily adequate account of the data that have resulted from the dynamic growth of a structure. However, if we assume that a structure is built left-to-right in a procedural manner, the asymmetry above is natural. Within left-to-right syntax, it is natural to predict that at an early stage, more freedom is granted, yet as the structure grows, less freedom will be granted. From a working memory perspective too, it makes sense that the last expression would be interpreted not in the earliest or any arbitrary structure, but in the closest, most local structure.

1.2 Pre-Verbal Structure Building and Early Syntactic Association

Consider (6). (6a) is grammatical and natural while (6b) is ungrammatical and unnatural. The two examples are the same except for the location of the Intonational Phrase (IP) boundary (%).

(6) | |||

a. Direct object and indirect object NP cannot form a cluster due to the IP boundary | |||

{Ku kilin-ul} % | Mina-hanthey emma-nun appa-ka | Christmas-senmwul-lo | |

The giraffe-ACC | Mina-DAT | mum-TOP dad-NOM Christmas-present-INST | |

Jena-hanthey cwusiessta-ko malhaysseyo. | |||

Jena-DAT | gave.HON-COMP | said | |

Intended reading: ‘Mother said to Mina that dad gave the teddy giraffe as a Christmas present to Jena.’ | |||

b. Direct object and indirect object NP forms a cluster | |||

??{Ku kilin-ul Mina-hanthey} % | emma-nun appa-ka | Christmas-senmwul-lo | |

The giraffe-ACC | Mina-DAT | mum-TOP dad-NOM Christmas-present-INST | |

Jena-hanthey cwusiessta-ko malhaysseyo. | |||

Jena-DAT | gave.HON-COMP | said | |

‘The interpretation is impossible due to the two conflicting dative NPs.’ | |||

Kiaer (2007) reports, on the basis of an online cross-modal self-paced reading comprehension task, that (6b) shows significant delay when the second dative NP Jena-hanthey ‘to Jena’ is read. The delay is caused because the first dative NP Mina-hanthey is built in the same local structure with the preceding accusative NP Ku kilin-ul ‘the giraffe’ in (6b), unlike in (6a). As I shall discuss in Sect. 3, this clustering is projected by case particles and IP boundary tones. Note that in this test, the first region of the self-paced reading was given only via auditory input. When the bracketed cluster is formed as in (6b), it is obvious that this cluster is to be interpreted within the embedded clause. Hence, when another dative NP Jena-hanthey ‘to Jena’ is read, the parser cannot but be surprised and the processing is delayed. This is clear-cut evidence of pre-verbal, incremental structure building in Korean. Pre-verbal structure building such as this is puzzling to explain in static grammar formalisms, to which I turn in Sect. 2.

Furthermore, Kiaer (2014) reports an experiment where children aged between 7 and 9 (N = 29) participated in a questionnaire study. They were asked to answer a question as in (7) after looking at Fig. 1. The result shows that they strongly prefer to provide an answer as in (7ii) which confirms that they have built a cluster from the dative NP with the preceding accusative NP.

(7) | {NP-ACC NP-DAT} %NP-TOP NP-NOM | |||

Ppalkan sakwa-lul | nwukwu-hanthey ellwukmal-un kilin-i | cwukoissta-ko malhay? | ||

red | apple-ACC who-DAT | zebra-TOP | giraffe-NOM gave-COMP is.saying | |

(i) ‘To whom is a zebra saying that a giraffe is giving a red apple?’ (matrix clause reading if the answer is a rabbit.) | ||||

(ii) ‘According to a zebra, to whom is a giraffe giving a red apple?’ (embedded clause reading if the answer is an elephant.) | ||||

Syntactic constituent formation with seemingly verb-less NP clusters has been a puzzle in syntactic theory (see Kempson and Kiaer 2010 for a review). Early structural association by the use of particles, prosody or structural routines is the main source of incremental structure building in languages like Korean, where a verb comes in the final position. The question arises as to why such a syntactic choice is preferred and how we can explain this preference adequately, given that such a preference is a reflection of the linguistic knowledge of a Korean speaker. I argue that incremental, left-to-right structure building maximizes the efficiency of such structure building and this knowledge is encoded in the grammar of Korean. For instance, in (7), forming a cluster between an accusative and a dative NP is preferred because real-time comprehensibility is maximised when a structure is built from left-to-right incrementally in the same local structure, unless there is need to do otherwise. However, such a choice can be overridden when the phonological weight of the preceding NP is so heavy that it is hard to phrase (i.e. pronounce) both NPs together (see Kiaer 2007).

In order to incorporate concepts such as early syntactic association, one needs to assume a left-to-right architecture in the grammar—which allows step-wise growth before the verb is reached. Otherwise, as we shall see later, we end up either adding an ad-hoc mechanism such as un-forced revision (Aoshima et al. 2004) in the grammar, or adopting grammar-independent parsing strategies as in Miyamoto (2002).

Expressions occurring at the left periphery have in principle more freedom than those at the right periphery. However, native speakers prefer to interpret or understand the sentence-initial, left-peripheral expression within the immediately following structure in the left-to-right, time-linear sequence, rather than in any arbitrary structure. Consider (2), repeated as (8).

(8) | The dative NP can be interpreted within three different structures | |||

Kilin-hanthey | kangaci-nun | twayci-ka mas-iss-nun | cookie-lul | |

giraffe-DAT | puppy-TOP | pig-NOM | taste-exist-ADN cookie-ACC | |

mantule-cwukeyssta-ko | yaksokhayssta-ko | malhayss-eyo. | ||

make-will.give-COMP | promised-COMP | said-DECL | ||

(a) ‘A puppy said to a giraffe that a pig promised that he would make and give him a delicious cookie.’ (preferred) | ||||

(b) ‘A puppy said to somebody that a pig promised to a giraffe that he would make and give him a delicious cookie.’ (possible yet not preferred) | ||||

(c) ‘A puppy said to somebody that a pig promised that he would make and give a giraffe a delicious cookie.’ (possible yet not preferred) | ||||

The left-most dative NP Kilin-hanthey ‘to a giraffe’ can be interpreted in all three structures hosted by (i) mantule-cwukeyssta ‘make-and-give’, (ii) yaksokhayssta ‘promised’, and (iii) malhayss-eyo ‘said’. Yet, most Korean speakers prefer the reading in (8a) (Kiaer 2014). A similar tendency has been observed in various comprehension and production studies, including a corpus investigation (see Kiaer 2014). Within the tenets of generative grammar, it is often assumed that an ideal speaker has the capacity to understand and produce all the logically possible forms with similar efficiency. Under this assumption, it is not easy to explain why certain forms are more efficient and frequent than other possible forms. Such efficiency/frequency asymmetry is often ignored or regarded as only coincidental.

At the sentence-initial position, where structural ambiguity could arise, Korean speakers tend to resolve the possibly ambiguous dative NP soon within the first available structure. The first-available structure is hard to define within top-down syntactic architecture but can be easily defined within left-to-right structural architectures. The syntactic competence that enables early association reveals that Korean parsers build structures incrementally even without the full knowledge of the upcoming structure or in the absence of a verb. I argue that this is made possible by the constructive use of particles and some structure-building routines together with prosody. Capturing and explaining syntactic competence demonstrated via early syntactic association such as this remains a puzzle in static grammar formalisms as I shall show in the next section. However, within the processing-oriented framework Dynamic Syntax, incremental structure building as found in early association is considered as the natural consequence of left-to-right structure building. In Sect. 2, I will discuss the challenges of explaining incrementality in existing grammar formalisms. In Sect. 3, I will present the basics of Dynamic Syntax and provide an analysis based on DS. In Sect. 4, I will present my conclusions.

2 Challenges of Explaining Incrementality in Grammar

In natural language, there is a clear asymmetry between preferred sequences and avoided sequences among logically well-formed strings both in comprehension and production. This tendency is observed in diachronic, synchronic and typological variations (Hawkins 2004, 2014; Kiaer 2014). Incremental, step-wise structure building is at the heart of efficient structure building. Yet, it is not straightforward to explain incrementality in most grammar formalisms–within either derivational or lexicalist approaches. This is mainly because most grammar formalisms assume that (1) only verbs carry combinatorial information and (2) structures are built in an “all-at-once” manner at the end when all fragments are presented. Both assumptions make it hard to understand and explain dynamic growth of a structure. Particularly, this becomes a serious problem in explaining verb-final languages: Are speakers of these languages not able to understand a linguistic sequence step-by-step?

In this paper, I provide evidence from Korean. Korean is known as a verb-final and (relatively) free word-order language. There are many languages which share structural properties with Korean, namely, agglutinative morphology and relatively flexible SOV word order. They include Japonic languages (Japanese, Ainu), Mongolian and Tungusic languages (Evenki), Turkic languages, Basque and many Uralic languages, to name a few. The discussions in this paper can be extended to most verb-final languages, which comprise about 50% of the world's languages according to the World Atlas of Language Structures (WALS) Online (Dryer 2013).

Most syntactic theories have been verb-oriented, with the implicit assumption that it is the verb alone which holds the blueprint for the forthcoming structure. This view predicts that native speakers of verb-final languages may not be able to understand a linguistic sequence in an incremental manner, as the verb containing all the combinatorial information only comes at the very end (see Pritchett 1992, among many others). Although such a line of thinking may appear unnatural and unintuitive, this was the dominant view held for some time. However, challenging evidence started to appear in the pioneering works of Inoue and Fodor (1995) and Mazuka and Itoh (1995). Inoue and Fodor (1995) showed that in a sentence such as (9) below, a Japanese speaker may experience a mild surprise effect (i.e. delay) when the first verb tabeta ‘ate’ is encountered, because the parser is expecting a verb that takes all three NPs as its arguments. The slowdown that would be experienced in a reading of (9) after facing the transitive verb shows that the sequence of NPs preceding the verb projects a structural template where all the NPs can be interpreted together. Put simply, native speakers of Japanese experience delay because Mary-ni yields a prediction for a ditransitive verb.

(9) | Bob-ga | Mary-ni | ringo-wo | tabeta inu-wo | ageta. [Japanese] |

B-NOM | M-DAT | apple-ACC ate | dog-ACC gave | ||

‘Bob gave Mary the dog that ate an apple.’ | |||||
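The surprise effect described for (9) can be sketched as a mismatch between the case-marked NPs seen so far and the case frame of the verb that arrives. This is a deliberately simplified illustration, not Inoue and Fodor's model: the `VERB_FRAMES` table and the function name `surprise` are hypothetical, and a verb's frame is reduced to a bare set of case labels.

```python
# Hypothetical, simplified case frames for the two verbs in (9).
VERB_FRAMES = {
    "tabeta": {"NOM", "ACC"},          # 'ate': transitive
    "ageta": {"NOM", "DAT", "ACC"},    # 'gave': ditransitive
}


def surprise(cases_so_far, verb):
    """Return True if the verb cannot host every case-marked NP seen so far."""
    return not set(cases_so_far) <= VERB_FRAMES[verb]


# Bob-ga Mary-ni ringo-wo ... : the three NPs jointly anticipate a ditransitive verb.
seen = ["NOM", "DAT", "ACC"]
print(surprise(seen, "tabeta"))  # the dative NP has no place in the frame of 'ate'
print(surprise(seen, "ageta"))   # 'gave' can host all three NPs
```

On this sketch the delay at tabeta arises only because the NP sequence has already projected an expectation before any verb is seen, which is the point of the example.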

Similarly, Mazuka and Itoh (1995) studied the eye movements of Japanese native speakers as they parsed sentences. They demonstrated that Japanese native speakers find double centre-embedded sentences starting with a sequence as in (10a) extremely difficult to read relative to sentences starting with other case-marked NP strings as in (10b). (‘???’ here refers to difficulties in comprehension and temporary slow-down in real-time processing.)

(10) | Three consecutive -ga-marked NPs are difficult to understand | |||

(a) | NP-NOM NP-NOM NP-NOM: extremely difficult to understand | |||

???Yoko-ga Hiromi-ga Asako-ga | ||||

Y-NOM | H-NOM | A-NOM | ||

(b) | NP-NOM NP-ACC NP-DAT: easy to understand | |||

Yoko-ga Hiromi-wo Asako-ni… | ||||

Y-NOM H-ACC | A-DAT | |||

Mazuka and Itoh (1995) argued that the comprehension asymmetry in (10) arises because each -ga marked nominative NP unfolds a new structure. The difficulty in understanding sequence (10a) is due to the number of ‘incomplete’ sentences projected by the -ga marked NPs. If a structure were not built incrementally, no significant difficulty should be encountered in (10a), compared to (10b), at such an early stage in structure building. In a similar vein, Ko (1997) and Kiaer (2007, 2014), among others, showed incremental structure building in Korean. Özge et al. (2015) show incremental structure building in Turkish.
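The contrast in (10) can be made concrete with a small count. The sketch below assumes, following the description above, that every nominative-marked NP opens a new and still incomplete clause; the function name `open_clauses` is hypothetical, and nothing else about the parser is modelled.

```python
def open_clauses(case_sequence):
    """Count clauses opened so far, assuming each NOM-marked NP opens a new one."""
    return sum(1 for case in case_sequence if case == "NOM")


seq_10a = ["NOM", "NOM", "NOM"]  # Yoko-ga Hiromi-ga Asako-ga
seq_10b = ["NOM", "ACC", "DAT"]  # Yoko-ga Hiromi-wo Asako-ni

print(open_clauses(seq_10a), open_clauses(seq_10b))
```

Under this assumption (10a) leaves three clauses open after only three words, whereas (10b) leaves one, which is in line with the reported difference in difficulty.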

The verb-centred view cannot offer a satisfying account of the nature of incremental structure-building in verb-final languages. What this view implies in practice is that native speakers of verb-final languages do not and cannot understand what is being said before the final verb is encountered. This is hard to believe, particularly given the frequency with which verb-less utterances are encountered in everyday language use. As Nordlinger (1998), among others, discusses, it seems that the existence of verb-less clauses is language-universal. Following Kiaer (2007, 2014), this paper argues that particles play constructive roles in structure building along with default word orders and prosody as well as verbs.

Originally, both dependency grammar and Lexical Functional Grammar (LFG) were designed to allow an incremental update of the ongoing underspecified structure without excessive derivational complexity, as summarized in Bresnan et al. (2016). Yet, as it stands, it is hard to use the LFG schemata in explaining incrementality for not-yet complete structures during the process of structure building. In Combinatory Categorial Grammar (CCG), which showcases incremental structure building in verb-final languages, the way incrementality works is not sensitive to real-time processing order; hence, the parser needs to know all the combinatory information at the start of understanding a sequence. McConville (2001) assumed a ruthless parser to reduce any structural uncertainty. This approach may be hard to adopt in explaining verb-final languages like Korean as some of the important structural cues are only available at the later stages of the sequence.

In principle, most grammars and linguistic theories do not tolerate any structural indeterminism when they provide a (tentative) structural representation. Put simply, any analysis for an ‘incomplete’ linguistic sequence is in principle illegitimate. There is no syntactic theory applicable to ‘not-yet-seen’ structures. This is one of the crucial reasons that left-to-right motivation has not been widely adopted in linguistic theories. It has been implicitly believed that the issues surrounding the incremental or partial growth of an incomplete sequence are what a grammar-independent parsing theory should seek to explain, rather than a competence-based linguistic theory.

The problem of explaining incrementality occurs not only in OV languages but also in English and other VO languages. Phillips (1996, 2003) showed that linear order and the notion of incrementality need to be taken into consideration in order to define even a basic syntactic unit such as a constituent in English. Further works by Phillips and his colleagues show similar challenges for verb-final languages. As a response to these challenges, Aoshima et al. (2004:42) argued for the notion of un-forced revision in order to explain incremental structure building in Japanese. Yet, the nature of un-forced revision is problematic. Within the minimalist tradition, morpho-syntactic features play an important role in explaining structural variation. Yet, as the label un-forced implies, it seems hard to spell out the morpho-syntactic feature responsible for the revision process which Aoshima et al. (2004) propose (see Kiaer 2007 for further discussion). In principle, feature-driven derivational grammar formalisms have limits in explaining the incrementality found in verb-final languages like Korean and Japanese, because the logic of the formalism is intolerant of non-deterministic, left-to-right, incremental structure building. In the following section, I shall introduce the Dynamic Syntax formalism and show how the processing-driven DS formalism can explain the core phenomena in Korean presented in Sect. 1.

3 Explaining Core Phenomena in Dynamic Syntax

3.1 Dynamic Syntax: A Bird’s-Eye View

Dynamic Syntax (DS) is a processing-based formalism which assumes a left-to-right directional derivation. Incrementality, therefore, is at the heart of the DS architecture. Based on Sperber and Wilson (1995), DS takes as the starting point in any structure-building the goal to establish some propositional formula. Kiaer (2014) further expanded this notion and formalised this goal as in (11). This paper also adopts (11) as the goal of syntactic structure building in natural language.

(11) | Goal of Linguistic Structure Building: |

(For an efficient communication), native speakers aim to optimise their syntactic structure building (both production and comprehension) by achieving the meaningful, communicative proposition as quickly as possible with minimal structure-building effort. |

Linguistic structure building is not only sensitive to what human parsers build, but also how they build. Human parsers aim to build a structure in the most optimal way and this optimisation process is encoded in the grammar. The key notions in DS are the paired concepts of underspecification and update: a structure is underspecified initially and gradually updated and specified as the structure grows. This observation is close to Phillips (1996), yet is different in that DS shows how a partial and incomplete structure is updated, whereas Phillips’ approach is based on a complete structure. Within DS, it is naturally expected that structural freedom decreases as the structure grows further following the linear order.

Within DS, there are three main operations used for structural update: (1) local/immediate update (via an operation of local *adjunction); (2) non-local/non-immediate update (via an operation of *adjunction); (3) general update (via an operation of generalised adjunction). Hence, a structure can in principle be enriched or extended within the on-going local structural template, within a non-local template, or within an arbitrary template. All three operations are in principle available at any point of structure building. Nevertheless, not all operations are equally chosen by human parsers. Kiaer (2007, 2014) argued for the locality principle where localised options are stored as the default structure building option in Korean. Kiaer showed evidence from processing efficiency and frequency in corpora (i.e., production) as well as in diachronic and synchronic variations of Korean. This is in line with Hawkins’ (2004) Performance-Grammar Correspondence Hypothesis shown in (12).

(12) | Performance-Grammar Correspondence Hypothesis |

Grammars have conventionalised syntactic structures in proportion to their degree of preference in performance, as evidenced by frequency of use and ease of processing. |

Based on this observation, I argue that Korean speakers routinise the structure-building options in the following order as in (13). That said, local/immediate structure building will be chosen as a default structure building option unless prosody or other factors intervene. The arbitrary structural growth option will be least preferred. A ≫ B means A is preferred to B.

(13) | Routinisation of Structure-building options: |

Immediate Growth ≫ Non-immediate Growth ≫ Arbitrary Growth |
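The ordering in (13) can be read as a default-selection procedure: all three update options are available, and the most preferred one is taken unless something rules it out. The sketch below is an illustration under that reading only. The names `Growth` and `choose_update` are hypothetical, and the `blocked` argument is a simplifying stand-in for prosody or other intervening factors, not part of the DS formalism.

```python
from enum import IntEnum


class Growth(IntEnum):
    """Update options ranked as in (13); a lower value means more preferred."""
    IMMEDIATE = 0      # local *adjunction
    NON_IMMEDIATE = 1  # *adjunction
    ARBITRARY = 2      # generalised adjunction


def choose_update(available, blocked=frozenset()):
    """Pick the most preferred option that is available and not blocked."""
    candidates = [option for option in available if option not in blocked]
    if not candidates:
        raise ValueError("no structure-building option is available")
    return min(candidates)


# Default case: nothing intervenes, so immediate growth is chosen.
print(choose_update(set(Growth)).name)

# Assumed case: an intervening factor (for example an IP boundary) rules out
# immediate growth, so the next option in the ranking is taken.
print(choose_update(set(Growth), blocked={Growth.IMMEDIATE}).name)
```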

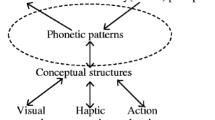

3.2 Basic Formalism

DS is based on LOFT [Logic Of Finite Trees; Blackburn and Meyer-Viol (1994)]. In LOFT, there are three key functors: (1) Tn (= TREE NODE) functor. This indicates the address of a node in the tree. (2) Fo (= FORMULA) functor. This points to the content of a node. (3) Ty (= TYPE) functor. This has all the combinatory information of a node. Consider Fig. 2. In Fig. 2, the pointer ◊ indicates a node under development. ?Ty(t) indicates the overall goal, that is, to build a proposition of Ty(t). The requirement ?X for any X is the core concept of the framework: all requirements, whether of content, structure, or type, have to be achieved at the end of parse actions. Consider Jina’s answer in (14) and the structure projected by the verb manna- ‘to meet’ in Fig. 2.

(14) | Hena: Semi-ka | Mina-lul | manna-ss-ni? |

Hena: Semi-NOM | Mina-ACC | meet-PAST-Q | |

‘Did Semi meet Mina?’ | |||

Jina: Manna-ss-e. | |||

Jina: meet-PAST-DECL | |||

‘(Yes, Semi) met (Mina).’ |

Consider the top node (1), labelled ?Ty(t), Tn(a), in Fig. 2. ?Ty(t) means that a proposition (Ty(t)) is required. This is the goal of the structure building. Tn(a) is an underspecified S node, whose structural relation over the entire structure is yet to be confirmed. Consider the node below (1) along the argument (0) relation, that is, node (2). In LOFT, the argument node is a node under the 0 relation, whereas the functor node is a node under the 1 relation. The argument node (2) is described as {?∃xFo(x), Fo(U), <↑0>Tn(a)}. This is a subject node. ?∃xFo(x) means a formula (or content) is needed. U in Fo(U) is a place-holding meta-variable, whose content needs to be found and updated in the context. <↑0>Tn(a) is a modal relation and means that if the parser goes up (↑) along the 0 (= argument) relation from the current node, there is a top node (= Tn(a)), which is a proposition/sentence. The top node ?Ty(t), Tn(a) has another daughter node, node (3), the predicate (VP) node. This is a functor node of the proposition. ?Ty(e → t) means that a predicate is required. The address of this node is <↑1>Tn(a). From this VP node in (3), two nodes can be anticipated further. If one moves along the argument (0) relation from node (3), we see node (4). This is an object node and can be described as {?∃xFo(x), Fo(V), <↑0><↑1>Tn(a)}. Fo(V) is also a place-holding meta-variable, projected by the transitive verb. Its content needs to be found and updated by the given dialogue context. The tree node address <↑0><↑1>Tn(a) states that if the parser goes up along the argument (= 0) relation and then the functor (= 1) relation, a top node (= Tn(a)) is to be found. There is another node below the verb phrase node (3), namely the functor node (5). This node (5) indicates the current state of parsing and its address can be written as <↑1><↑1>Tn(a). Figure 2 is projected by the verb stem manna- ‘to meet’ alone.
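The node addresses described for Fig. 2 can be reproduced with a small data structure. The following is a minimal sketch, not an implementation of LOFT or DS: the class `Node`, its fields and the dictionary `fig2` are hypothetical names, each node simply records the daughter relations leading down to it from the top node (0 = argument, 1 = functor), and the decorations of node (5) are left empty because the text does not spell them out.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    path: tuple                                  # steps down from the top node
    labels: list = field(default_factory=list)   # decorations on the node

    def address(self, root="Tn(a)"):
        """Read the path upward: the last step taken down is the first step up."""
        ups = "".join(f"<↑{step}>" for step in reversed(self.path))
        return ups + root

    def requirements(self):
        """Decorations prefixed with '?' are requirements still to be met."""
        return [label for label in self.labels if label.startswith("?")]


# Nodes (1)-(5) as described for Fig. 2.
fig2 = {
    1: Node((), ["?Ty(t)"]),                     # top node
    2: Node((0,), ["?∃xFo(x)", "Fo(U)"]),        # subject node
    3: Node((1,), ["?Ty(e→t)"]),                 # predicate (VP) node
    4: Node((1, 0), ["?∃xFo(x)", "Fo(V)"]),      # object node
    5: Node((1, 1)),                             # functor node under (3)
}

for number, node in fig2.items():
    print(number, node.address(), node.requirements())
```

Storing the downward path and deriving the upward address from it keeps each address consistent with the tree position by construction.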
Yet, inversely, structural projections such as this are made possible by the case marked NPs in Korean. Contrary to the above example (14), a partial structure can be projected by case morphology before a verb is seen. Case particles form an important source of incremental structure building in verb-final languages like Korean. Consider Table 1.

3.3 Incremental Syntax: A Sample Parse

In the following, I shall show how a single or a cluster of case particles project a partial structure and hence enable incremental structure building. Although a verb alone can project a partial structure as in Fig. 2, as shown in Table 1, it is not just a verb alone which projects a structural skeleton. Nordlinger (1998) discussed a similar constructive role of case particles in Wambaya based on LFG. Following Nordlinger’s insight, Kiaer (2007) explores the constructive role of case particles in Korean based on DS. The core idea of Kiaer (2007) is the following: When a sequence of case-marked NPs such as (NOM plus ACC or NOM plus DAT) is parsed, a parser will be able to build a partial, local structure as in Fig. 3. The bold line directly reflects the structural anticipation borne out by the particle cluster.
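The idea that a cluster of case-marked NPs projects a partial local structure before any verb is seen can be sketched as follows. This is a simplified illustration rather than the DS analysis itself: the particle-to-role table `PARTICLE_ROLE` and the function `project_cluster` are hypothetical, roles stand in for tree-node addresses, and each role is assumed to be filled at most once within one local structure.

```python
# Hypothetical, simplified mapping from Korean case particles to roles.
PARTICLE_ROLE = {
    "ka": "subject", "i": "subject",           # nominative
    "lul": "object", "ul": "object",           # accusative
    "hanthey": "indirect object",              # dative
}


def project_cluster(nps):
    """Build a partial local structure from 'stem-particle' strings, verb-free.

    Raises ValueError when two NPs compete for the same role in one structure.
    """
    partial = {}
    for np in nps:
        stem, _, particle = np.rpartition("-")
        role = PARTICLE_ROLE[particle]
        if role in partial:
            raise ValueError(f"{np} conflicts with {partial[role]} for {role}")
        partial[role] = stem
    return partial


# The sentence-initial cluster of (15): both NPs land in one local structure.
print(project_cluster(["Password-lul", "spy-hanthey"]))

# Two dative NPs forced into one local structure conflict, as in (6b).
try:
    project_cluster(["kilin-ul", "Mina-hanthey", "Jena-hanthey"])
except ValueError as error:
    print("conflict:", error)
```

The partial structure returned for the first cluster is what would later be matched against the template of a suitable predicate once the verb arrives.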

This approach allows us to explain apparently complex syntactic phenomena such as multiple long-distance scrambling, capturing their incremental nature (Kiaer 2007). Consider (15), which is rated natural and grammatical by native speakers of Korean.

(15) | Password-lul | spy-hanthey | Kim-kemsa-nun | Park-kica-ka | kaluchyecwuessta-ko | malhayssta. |

| Password-ACC | spy-DAT | Kim-prosecutor-TOP | Park-reporter-NOM | told-COMP | said |

| ‘Prosecutor Kim said that it is the reporter Park who told the spy the password.’ |

Figure 4 is abbreviated from Kiaer (2007: 264) and shows the step just before the final structure for (15) is completed. This step shows how the local structure built well before the end of the sentence (circled) is merged with the structural template projected by the ditransitive verb kaluchyecwue ‘to tell’. Crucially, the partial structure here is formed by the cluster of case particles -lul and -hanthey in the absence of a verb. The partial structure established in this way, circled, awaits a suitable predicate. Hence, the step drawn in Fig. 4 shows how the last-minute merge happens, confirming what has already been built (see Kempson and Kiaer 2010 for a similar discussion of Japanese).
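The last-minute merge can be sketched as slot filling. This is a rough illustration under stated assumptions: the argument frame (NOM, ACC, DAT) for kaluchyecwue is assumed for the example, partial structures are plain dictionaries, and `merge_with_verb` is a hypothetical helper. DS itself merges tree nodes rather than dictionaries.

```python
def merge_with_verb(verb, frame, *partials):
    """Merge particle-built partial structures into a verb's argument frame."""
    arguments = {}
    for partial in partials:
        for case, stem in partial.items():
            if case not in frame:
                raise ValueError(f"{verb} has no slot for {case}")
            if case in arguments:
                raise ValueError(f"slot {case} is already filled")
            arguments[case] = stem
    # Slots that the verb expects but no partial structure has supplied.
    open_slots = [case for case in frame if case not in arguments]
    return {"predicate": verb, "arguments": arguments}, open_slots


# Fronted cluster built early from -lul and -hanthey, before any verb is seen.
fronted = {"ACC": "Password", "DAT": "spy"}
# Embedded subject parsed later, next to the verb.
local = {"NOM": "Park-kica"}

clause, open_slots = merge_with_verb(
    "kaluchyecwue", ("NOM", "ACC", "DAT"), fronted, local
)
print(clause, open_slots)
```

The verb adds no new arguments here; it only confirms the slots that the case particles had already anticipated, which is the sense in which the merge is a confirmation step.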

However, although case particles play a crucial role in unfolding a sentence, they tend to remain unsaid when the structural anticipation for the upcoming structure is clear and predictable (Kiaer and Shin 2012). This confirms that a default order and local structure-building routines, just like case particles and prosody, play a crucial role in making structure building incremental.

4 Conclusion

This paper shows Korean speakers’ strong preference for incremental, left-to-right structure building based on the following core phenomena: (1) left–right asymmetry; and (2) pre-verbal structure building and a strong preference for early association. Static grammars struggle to explain these phenomena in an explanatorily adequate way, since they do not tolerate structural indeterminism and most grammar formalisms are verb-centered. In this paper, I show the necessity of adopting a grammar formalism such as Dynamic Syntax, which can capture the dynamics of structural growth without stipulation. I claim that the core data we observed show the dynamic, procedural nature of linguistic competence, which remains puzzling in static grammar formalisms.

Notes

Fifty-seven adult Korean native speakers were divided into groups of three, and asked to freely discuss any topic for one hour in the lab. 19 h of speech were recorded and transcribed by members of the Korea University Spoken Language Information Lab, both phonetically and orthographically. The recording contains 174,409 words and 140,657 accentual phrases.

References

Aoshima, S., Phillips, C., & Weinberg, A. (2004). Processing filler-gap dependencies in a head-final language. Journal of Memory and Language, 51, 23–54.

Blackburn, P., & Meyer-Viol, W. (1994). Linguistics, logic, and finite trees. Bulletin of Interest Group of Pure and Applied Logics, 2, 2–39.

Bresnan, J., Asudeh, A., Toivonen, I., & Wechsler, S. (2016). Lexical-functional syntax (2nd ed., Blackwell textbooks in linguistics 16). Chichester.

Cann, R., Kempson, R., & Marten, L. (2005). The dynamics of language. Oxford: Elsevier.

Dryer, M. S. (2013). Order of subject, object and verb. In M. S. Dryer & M. Haspelmath (Eds.), The world atlas of language structures online. Leipzig: Max Planck Institute for Evolutionary Anthropology. Retrieved 07 Oct, 2020, from http://wals.info/chapter/81.

Hawkins, J. (2004). Efficiency and complexity in grammars. Oxford: Oxford University Press.

Hawkins, J. (2014). Cross-linguistic variation and efficiency. Oxford: Oxford University Press.

Inoue, A., & Fodor, J. (1995). Information-based parsing of Japanese. In R. Mazuka & N. Nagai (Eds.), Japanese sentence processing (pp. 9–64). Hillsdale: Lawrence Erlbaum.

Kempson, R., & Kiaer, J. (2010). Multiple long-distance scrambling: Syntax as reflections of processing. Journal of Linguistics, 46(1), 127–192.

Kempson, R., Meyer-Viol, W., & Gabbay, D. (2001). Dynamic syntax: The flow of language understanding. Oxford: Blackwell.

Kiaer, J. (2007). Processing and interfaces in syntactic theory: The case of Korean. Unpublished doctoral dissertation, University of London.

Kiaer, J. (2014). Pragmatic syntax (Bloomsbury studies in theoretical linguistics). London.

Kiaer, J., & Shin, J. (2012). Prosodic interpretation of object particle omission in Korean (Mokjeokgyeokjosa saengryak hyeonsange daehan unyuljeok haeseok). Korean Linguistics (Hangugeohak), 57, 331–355.

Kim (2010). Hwupochwung kwumwunuy wunyulkwu hyengseng yangsang [A prosodic analysis on post-verbal expressions in Korean]. Master’s thesis, Korea University.

Ko, S. (1997). The resolution of the dative NP ambiguity in Korean. Journal of Psycholinguistic Research, 26(2), 265–273.

Mazuka, R., & Itoh, K. (1995). Can Japanese speakers be led down the garden path? In R. Mazuka & N. Nagai (Eds.), Japanese sentence processing (pp. 295–329). Hillsdale: Lawrence Erlbaum Associates.

McConville, M. (2001). Incremental natural language understanding with combinatory categorial grammar. Mst thesis, University of Ed.

Miyamoto, E. (2002). Case markers as clause boundary inducers in Japanese. Journal of Psycholinguistic Research, 31, 307–347.

Nordlinger, R. (1998). Constructive case. Stanford: CSLI.

Ozge, D., Marinis, T., & Zeyrek, D. (2015). Incremental processing in head-final child language: online comprehension of relative clauses in Turkish-speaking children and adults. Language and Cognitive Processes, 30(9), 1230–1243.

Phillips, C. (1996). Order and structure. Unpublished doctoral dissertation, MIT.

Phillips, C. (2003). Linear order and constituency. Linguistic Inquiry, 34, 37–90.

Pritchett, B. (1992). Grammatical competence and parsing performance. Chicago: University of Chicago Press.

Ross, J. R. (1967). Constraints on variables in syntax. Doctoral dissertation, MIT. Published as Ross, J. R. (1986). Infinite syntax! Norwood, NJ: ABLEX.

Sperber, D., & Wilson, D. (1995). Relevance: Communication and cognition (2nd ed.). Oxford: Blackwell.

Wasow, T. (2002). Postverbal behavior. Stanford: CSLI.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Kiaer, J. Left-to-Right Asymmetry and Early Association in Korean. J of Log Lang and Inf 30, 363–378 (2021). https://doi.org/10.1007/s10849-020-09318-3

DOI: https://doi.org/10.1007/s10849-020-09318-3