Abstract

A stable welding process is crucial to obtain high-quality parts in wire arc additive manufacturing. The complexity of the process makes it inherently unstable, which can cause various defects, resulting in poor geometric accuracy and material properties. This calls for in-process monitoring and control mechanisms to industrialize the technology. In this work, process monitoring algorithms based on welding camera image analysis are presented. A neural network for semantic segmentation of the welding wire is used to monitor the working distance as well as the horizontal position of the wire during welding, and classic image processing techniques are applied to capture spatter formation. Using these algorithms, the process stability is evaluated in real time and the analysis results enable the direction-independent closed-loop-control of the manufacturing process. This significantly improves geometric fidelity as well as mechanical properties of the fabricated part and allows the automated production of parts with complex deposition paths including weld bead crossings, curvatures and overhang structures.


Introduction

Wire arc additive manufacturing (WAAM) is a process for 3D printing of large, near-net-shape metal parts layer-by-layer, using arc welding technologies. It offers significant time and cost advantages for various applications compared to conventional subtractive methods (Martina & Williams, 2015; Williams et al., 2016) and has been widely used with steel, aluminium, titanium, and nickel-based alloys (Rodrigues et al., 2019). Currently most WAAM processes are based on Cold Metal Transfer (CMT), a modified Metal Inert Gas (MIG) welding process based on short-circuiting transfer (Selvi et al., 2018). Due to an oscillating wire, it allows a lower heat input in comparison to other welding technologies, and thus reduces residual stresses. Despite these advantages, WAAM is a multi-scale and multi-physics coupling process with complicated and unbalanced physical, chemical, thermal and metallurgical characteristics (Chen et al., 2021). The forming of a workpiece is closely associated with the dynamic fluid characteristics of the melt pool, which are significantly influenced by several process parameters such as welding current and voltage, welding speed, welding angle or wire feed rate. Improper parametrization leads to a series of defects such as fluctuation effects, oxidation, pores, lack of fusion or slag inclusion (Hauser et al., 2020, 2021a, b; Wu et al., 2018). Also, varying heat dissipation conditions may result in morphological changes of deposited beads (Li et al., 2021). Furthermore, for certain materials such as steel, the contact tube of the wire wears off over time, which could lead to material deposition defects or spattering. Heterogeneous error sources lead to various defects, negatively affecting both geometric accuracy and material properties. Morphological errors propagate to the next layer and accumulate over time. This may lead to a continuous deterioration of the overall geometric accuracy of the part, so that it eventually has to be rejected. In order to ensure high component quality and productivity, in-process monitoring and control strategies are indispensable.

Process deviations are associated with the change of various signals, which can be used for online defect detection. Several sensor data evaluation algorithms were proposed in recent years. Tang et al. (2017) used an industrial camera behind the welding torch to capture weld bead images and trained a deep convolutional neural network (DCNN), combining it with a support vector machine (SVM), to classify weld bead images into five patterns: normal, pore, hump, depression and undercut. Infrared thermography data was utilized by Chen et al. (2019) to identify weld bead deviation, hump and flow defects using a neural network. Reisch et al. (2020) developed a distance-based anomaly detection that evaluates current, voltage and welding camera images with an LSTM model, a convolutional neural network and an autoencoder, respectively. The proposed approach showed the capability of detecting anomalies due to oxidation, polluted surfaces and form deviations. Lee et al. (2021) used a high dynamic range camera to extract features of the metal transfer and the weld pool, which are used by an artificial intelligence model to classify normal and abnormal statuses of arc welding. Li (2021) developed two intelligent WAAM defect detection modules. The first module takes welding arc current and voltage signals during the deposition process as inputs and uses algorithms such as SVM and incremental SVM to identify disturbances and continuously learn new defects. The second module takes CCD images as inputs and uses object detection algorithms to predict the unfused defect during the WAAM manufacturing process.

Although these studies contribute to the understanding of the complex process dynamics and provide methods for detecting typical defects, it is crucial to automatically compensate for weld bead deviations during production to industrialize the technology. Especially, fluctuations in the weld bead height are critical when accumulating over time. Since the welding torch is raised by a predefined layer height after each applied bead, large deviations in the distance between the nozzle of the welding torch and the workpiece, the nozzle-to-work (NtW) distance \(d_{NtW}\), can occur after several applied layers, which destabilizes the welding process. A long distance may generate bad gas protective effects, resulting in porosities or bad formation of the layer. In contrast, a short distance can lead to a higher spatter rate and cause weld spatter to stick to the nozzle or even cause a collision between the welding nozzle and the workpiece (Xiong & Zhang, 2014). Thus, it is crucial to have a stable layer height resp. \(d_{NtW}\) throughout the whole build process.

In recent years, there has been increasing research into methods for measuring and controlling the layer height. In most cases, a laser scan is performed after each deposited layer to deduce height information, which increases the inter-layer waiting time. Han et al. (2018) used the extracted features to control the layer height by adjusting the voltage, based on experience rules for a specific steel. The developed height controller was tested for a multi-layer multi-bead cuboid structure. Mu et al. (2022) compared the measurements against the CAD model. Geometric errors are then compensated and a new set of welding parameters for the next layer is created. Li et al. (2021) introduced an interlayer closed-loop-control (ICLC) algorithm for multi-layer multi-bead deposition of cuboid components with straight, parallel paths. Height errors are determined by comparing the measurements to the bead geometry model. Tang et al. (2021) established a multi-sensor system to monitor process parameters and proposed a method for weld bead modelling by means of a deep neural network. The feedback of the weld bead shape was received by means of an offline weld bead scan.

Ščetinec et al. (2021) proposed a layer height controller based on the average arc current of the last deposited layer. Height deviations are compensated by replanning the tool path. Xiong et al. (2021) developed a closed-loop-control (CLC) of the deposition height by visual inspection of the previous and current layer using a passive vision system. Classic image processing techniques are used to track the height. Deviations are automatically compensated by controlling the wire feed speed. The solution was tested for a single-track unidirectional wall in one welding direction. Kissinger et al. (2019) and Hallam et al. (2022) used coherent range-resolved interferometry (CO-RRI) for layer height measurement when building straight walls made of steel. The sensor is mounted directly to the welding torch and measures the distance to the weld bead immediately behind the melt pool. There is no inherent sensitivity to the arc light, and it is a cost-effective solution that is simple to set up. Mahfudianto et al. (2019) developed an artificial neural network with one hidden layer using several characteristic process parameters such as travel and feeding speed, current, voltage, etc. as input sources to estimate the distance of the wire's contact tube to the work piece in real time. Hölscher et al. (2022) matched different process parameters to that distance. It was shown that the electrical resistance during short circuit tracked the distance best for WAAM with and without using a CMT source. Both solutions verified their measurement techniques on single-track experiments by continuously modifying the weld distance.

The above studies present different solutions for either measuring the weld bead height or determining the weld distance. Also, layer height control strategies are proposed, mostly validated by fabricating straight walls or cuboids. Mu et al. (2022) and Ščetinec et al. (2021) verified the adaptiveness of the designed control strategy by fabricating inclined and free-form structures. However, when printing complex geometries, material agglomerations, for example at weld bead crossings, could lead to sudden changes in the weld distance, severely influencing the forming quality when building up over time. If such short-term deviations are not properly accounted for in the control strategy, this can lead to inaccurate compensation. Furthermore, optical systems for direct measurement of the weld bead dimensions behind the molten pool have an eccentric guidance and thus a directional dependency on the welding path. Reisgen et al. (2019) utilized visual monitoring of the processing zone to determine height information in situ, independently of process parameters, the selected material and the welding direction, by measuring the length of the visible wire (stickout), without using CMT welding technology. Workpiece and welding torch height control strategies were implemented based on an elevation map and showed the capability to compensate for surface errors and to control the distance between welding torch and workpiece. Reisch et al. (2021) investigated and evaluated the usage of several sensors for in-process measurement of the nozzle-to-work distance \(d_{NtW}\), using a CMT welding source. It was shown that a welding camera is most suitable for process- and direction-independent measurements. A CLC with in-situ deposition control was developed, where layer height deviations are compensated by adjusting the welding speed according to the evaluations of welding camera frames with a DCNN, predicting the visible length of the oscillating wire (stickout). Validation parts showed significantly reduced weld bead deviations and thus a higher geometrical accuracy. Humping effects at weld bead crossings in particular could be compensated. Even a free-form object with an inclined structure was printed correctly on the first try without manual intervention, while the build-up without deposition control had to be stopped after 20 deposited layers due to severe oxidation, misalignment and unstable process behaviour.

This paper presents image-based WAAM process monitoring algorithms for the CMT welding technology. The goal is to obtain information about the process stability in real time. Monitoring of the \(d_{NtW}\) is realized by measuring the wire stickout. To this end, the latter procedure, which uses a DCNN, is extended to a wire segmentation. The additional semantic information makes the procedure more robust, and not only the wire length but also the horizontal position can be extracted, which is used to identify deflection of the wire. Deflection occurs if the wire contact tube is worn off or \(d_{NtW}\) is too large. This could lead to unstable arc behaviour, increased spattering or weld seam irregularities such as lack of fusion (Henckell et al., 2020), and the resulting offset affects the software-supported path planning with the risk of dimensional deviations between the CAD model and the work piece (Zhan et al., 2017). Spattering indicates instabilities in the welding process for various reasons, like improper feedstock, incorrect welding parameters, insufficient shielding gas or an incorrect \(d_{NtW}\). This may result in reduced surface quality, strength, durability and functionality (Serrati et al., 2023). To capture this phenomenon, a novel spatter monitoring algorithm, based on detecting changes between consecutive frames, is presented. The collected spatter statistics are a valuable data source for ex-situ tests of the fabricated part and optimization strategies concerning design and deposition paths.

The remainder of this paper is organized as follows: Section “Vision system” describes the experimental setup. WAAM and monitoring components as well as a semi-automatic system calibration method are introduced. Section “Wire monitoring” focuses on the deduction of the \(d_{NtW}\) from the wire stickout and discusses in detail the developed machine learning solution for wire monitoring. A semantic wire segmentation, a classification of the results and a subsequent feature extraction are proposed. Section “Spatter monitoring” presents a two-stage algorithm to robustly detect spatter formation in welding camera frames. Finally, the findings of this paper are summarized in section “Conclusion”.

Vision system

Experimental setup

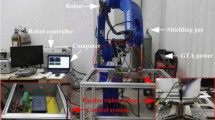

Experiments and validation tests were conducted on a robot-based WAAM setup with a Fronius TPS CMT 4000 advanced system as welding source, which is capable of using CMT wire feed technology. The welding torch was attached to the head of a calibrated six-axis robot (COMAU NJ130 2.0), see Fig. 1. An AlSi12 wire (diameter 1.2 mm) and argon as inert gas at a gas flow rate of 10 L\(\text {min}^{-1}\) were used in an air-conditioned room.


Camera

To visually monitor the processing zone, a welding camera (Cavitar C300) was mounted to the robot head, see Fig. 2. It enables the visualization of the build part and the welding process at the same time, using active laser illumination. It provides an 8-bit grayscale video stream and was operated at a sample rate of 20 Hz with a resolution of \(960 \times 740\) pixels.

Corner detection on a checkerboard pattern was used to calibrate the welding camera to the processing zone, see Fig. 3. This automatically compensates for the monitoring angle.

Wire monitoring

Welding stability for the CMT process with respect to uniform material deposition is monitored by observing the oscillating wire. The perspective on the processing zone is fixed throughout the build process and, owing to the process, the oscillating wire dips into the weld bead. The stickout \(l_{w}\) then corresponds to the \(d_{NtW}\) once a known hidden portion \(l_h\) is accounted for. This enables the measurement of the \(d_{NtW}\) by measuring the stickout \(l_{w}\), see Fig. 4.

From a classic image processing point of view, measuring the stickout is an ill-posed problem because of permanently changing specular reflections, see Fig. 5.

To overcome the problem of optical measurement in an environment with highly fluctuating lighting conditions, a data-driven approach was adopted. To robustly extract wire information in real time, a three stage architecture is proposed. First, a neural network for semantic segmentation localizes the wire, followed by a classification that checks the integrity of the prediction, validating it for the final feature extraction.

Wire segmentation

Semantic segmentation is used to locate objects in an image. The goal is to label each pixel of an image with a corresponding class of what is being represented. This can be achieved using the well-known U-net architecture, originally developed for biomedical image processing by Ronneberger et al. (2015). The architecture was modified to detect and locate the visible wire by utilizing a feature vector \({\textbf {v}}=\) \(\begin{pmatrix}4, 8 ,16, 32, 64\end{pmatrix}\), a single output class (wire), zero padding and additional dropout layers with 25% dropout, see the left side of Fig. 6. It consists of a downsampling part (left) and a symmetric upsampling part (right). A downsampling step consists of two back-to-back convolutions, a rectified linear unit (ReLU), max pooling for downsampling and dropout to prevent over-fitting and improve generalization. By repeating this, more compact and increasingly abstract representations (feature maps) of the input image are created, according to the feature vector. The network learns to detect a wire using these feature maps, but in the process the information about where the wire is located is lost. The spatial information is recovered by upsampling, i.e. by converting the low-resolution representations into a high-resolution image. Every upsampling step consists of a transposed convolution that halves the number and doubles the dimensions of the feature maps, dropout, a concatenation with the corresponding feature maps from the downsampling part and two convolutions, each followed by a ReLU. The corresponding feature maps from the downsampling step help to localize the features more precisely during upsampling. At the final layer, a \(1 \times 1\) convolution is used to map each 4-component feature vector to one class. The architecture returns a full-resolution prediction of a wire. That is, each pixel is assigned a probability, called precision score, of belonging to a wire.
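To make the shrinking and growing of the feature maps concrete, the following sketch traces the tensor shapes through such a network. It is only an illustration under stated assumptions, not the implementation used here: it assumes four \(2 \times 2\) pooling steps (one fewer than the entries of the feature vector), a single-channel input and size-preserving zero-padded convolutions. The function name `trace_unet_shapes` is hypothetical.

```python
def trace_unet_shapes(height, width, features=(4, 8, 16, 32, 64)):
    """Return (stage, height, width, channels) for each stage of a U-net-like network."""
    shapes = []
    h, w = height, width
    # Encoder: zero-padded convolutions keep h and w, 2x2 max pooling halves them
    # (assumption: one pooling step after every level except the deepest one).
    for n in features[:-1]:
        shapes.append(("down", h, w, n))
        h, w = h // 2, w // 2
    # Bottleneck: most compact representation with the largest number of feature maps.
    shapes.append(("bottleneck", h, w, features[-1]))
    # Decoder: each transposed convolution doubles h and w and halves the channel count.
    for n in reversed(features[:-1]):
        h, w = h * 2, w * 2
        shapes.append(("up", h, w, n))
    # Final 1x1 convolution maps the 4 remaining feature maps to one wire probability.
    shapes.append(("output", h, w, 1))
    return shapes


for stage in trace_unet_shapes(256, 512):
    print(stage)
```

For a cut-out of 256 by 512 pixels, the trace bottoms out at 16 by 32 pixels with 64 feature maps before the decoder restores the full resolution.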

The semantic segmentation model is trained using segmentation maps. Therefore, welding camera videos of various test-prints served as data source. Interesting subsets from different printing stages were annotated using a semi-automatic annotation tool. In total, 3000 frames were labelled, where 20% of them were set aside for validation. The network was trained on cut-outs of dimensions 256x512 pixels which contain the immediate processing zone, together with their corresponding segmentation maps with value 1 inside an enclosing quadrangle for the visible wire and 0 elsewhere, see Fig. 7.

The network was implemented with the TensorFlow framework using its Keras API and trained for 80 epochs with 32 samples per iteration (batch size), binary cross-entropy loss and the default RMSprop optimizer, achieving a training and validation accuracy of \(\sim 99.5\%\). Figure 8 shows the learning curve.

Classification

The quality of a specific wire prediction is determined by the accuracy of the network and the actual visual conditions present. The data situation can occasionally be poor, leading the network to provide wire predictions containing areas with low confidence, whereas in other regions, the confidence is high. To decide if the information content of a prediction is suitable for feature extraction, it is classified into one of three categories by measuring its certainty. Therefore, the probability distribution of the prediction is considered to construct a confidence marker. It is assumed that precision scores below 0.1 are irrelevant for the determination of a wire instance. To measure the certainty of the prediction, a dichotomy of the relevant precision scores is performed, using the standard decision threshold for semantic segmentation \(\tau =0.5\). A value above \(\tau \) is classified as high, otherwise as low. High certainty of a prediction is indicated by a high proportion of high precision scores. For a prediction P, consider the sets

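\[M = \{\, p \in P \mid p \ge 0.1 \,\}, \tag{1}\]

\[N = \{\, p \in M \mid p > \tau \,\}. \tag{2}\]

Then \(\sigma = \frac{\vert N \vert }{\vert M \vert }\) is used as a confidence marker and P is validated by setting a suitable threshold \(\rho \) for \(\sigma \). If

\[\sigma \ge \rho , \tag{3}\]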

then the corresponding prediction P is considered to be of high certainty and a binary wire representation is computed by thresholding P using \(\tau \). This results either in a fragmented or a full wire representation, as depicted within the yellow and green rectangle in Fig. 6. If Eq. 3 does not hold, this indicates that the corresponding prediction P is of low certainty and thresholding P results in an unsuitable wire representation for feature extraction, see the red rectangle in Fig. 6. Images corresponding to invalid classified wires served as data source for re-teaching of the model. In this way, the network became more robust with respect to the permanently changing specular reflections from the wire.
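The validation step can be sketched in a few lines of NumPy. This is a minimal sketch, assuming that M collects the precision scores of at least 0.1 and N the scores in M above \(\tau \), and that a prediction passes if \(\sigma \) reaches \(\rho \). The function names are hypothetical, and the default \(\rho = 0.75\) is the value shown in the monitoring plot of the stickouts.

```python
import numpy as np


def confidence_marker(pred, relevance=0.1, tau=0.5):
    """Share of relevant precision scores that exceed the decision threshold tau."""
    relevant = pred[pred >= relevance]      # set M: scores that matter for a wire instance
    if relevant.size == 0:
        return 0.0                          # no wire evidence at all
    high = relevant[relevant > tau]         # set N: confidently predicted wire pixels
    return high.size / relevant.size


def validate_prediction(pred, rho=0.75, tau=0.5):
    """Return (sigma, binary_wire); binary_wire is None for a low-certainty prediction."""
    sigma = confidence_marker(pred, tau=tau)
    if sigma < rho:
        return sigma, None                  # unsuitable for feature extraction
    return sigma, pred > tau                # binary wire representation


pred = np.array([[0.05, 0.90], [0.95, 0.30]])
sigma, wire = validate_prediction(pred)
print(round(sigma, 3), wire is None)
```

Whether a validated binary wire is fragmented or full is not decided here; that distinction needs a connected component analysis on the returned mask.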

Feature extraction

The stickout is determined by the lowest detected pixel in a binary wire returned by the classification. Figure 9 shows the monitoring result for the stickouts of a specific build job part with adverse visual conditions along with \(\sigma \), identifying phases during fabrication, where the feature extraction should be suspended.

Fig. 9: Confidence marker \(\sigma \) (red) and stickouts (blue), deduced with the proposed approach. The proportion of high confidence values drops when entering adverse visual conditions at the end of the observation, identified using a confidence threshold \(\rho =0.75\), indicated by a horizontal line (Color figure online)

The benefit of this approach is that, compared to the DCNN regression model used in Reisch et al. (2021), now the prediction results are explainable. This breaks up the black box characteristic of the regression model and only suitable predictions are used to deduce the stickout for process control. Figure 10 illustrates the problem of using the regression model to predict the stickout. Under adverse visual conditions, it may return plausible values, but they are not correct.

Now that a prediction is comprehensible, not only the stickout can be robustly determined, but also the center of gravity and thus the position of the wire. It is deduced from non-fragmented wire representations, which is ensured by an additional connected component analysis (CCA). Figure 11 shows three valid wire representations of the same build job and the derived features, superimposed on the corresponding welding camera frames.
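Both features can be read directly from a validated binary wire mask, as the following sketch illustrates. It makes several assumptions that are not taken from the setup above: the wire points downwards in the image, the stickout is counted in pixels from a hypothetical reference row `nozzle_row`, and the conversion to millimetres via the camera calibration is omitted.

```python
import numpy as np


def wire_features(mask, nozzle_row=0):
    """Return (stickout_px, (cx, cy)) for a binary wire mask, or None if it is empty."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    # Image rows grow downwards, so the lowest wire pixel has the largest row index.
    stickout_px = int(rows.max()) - nozzle_row
    # Center of gravity of all wire pixels; cx is the horizontal wire position.
    centroid = (float(cols.mean()), float(rows.mean()))
    return stickout_px, centroid


mask = np.zeros((6, 5), dtype=bool)
mask[1:5, 2] = True                      # a vertical wire, four pixels long
print(wire_features(mask, nozzle_row=1))
```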

The wire position is used to track the wear off of the contact tube by measuring deviations in its horizontal component. Wear off causes the wire to lose guidance over time. This leads to increased deposition defects, which becomes unacceptable at a certain point during fabrication. Then, the process must be stopped to exchange the wear part. The life cycle of that specific wear part can be determined adaptively in-process independent of a specific build job. Figure 12 shows two contact tube examples with the wire guide worn to different degrees.

To monitor the positional offset of the wire and consequently the wear state of the contact tube, the median of the positional deviations from the original is calculated for every 200 frames along the processing. Figure 13 shows the monitoring result of a 45 minute build job.
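The windowed median can be sketched as follows. The function name and the handling of the last, incomplete window (it is dropped) are assumptions made for illustration; `x_reference` stands for the horizontal wire position measured with an unworn contact tube.

```python
import numpy as np


def windowed_median_offset(x_positions, x_reference, window=200):
    """Median horizontal deviation from the reference position per window of frames."""
    deviations = np.asarray(x_positions, dtype=float) - x_reference
    n_full = deviations.size // window
    if n_full == 0:
        return np.empty(0)
    # Drop the incomplete trailing window and take one median per row.
    blocks = deviations[: n_full * window].reshape(n_full, window)
    return np.median(blocks, axis=1)


positions = [10] * 200 + [12] * 200 + [50] * 50
print(windowed_median_offset(positions, x_reference=10))
```

Using the median rather than the mean keeps single outlier frames, for example from a briefly mis-segmented wire, from shifting the wear estimate.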

This approach was tested with a single camera on industrial WAAM data as stated in section “Vision system”. In general, it is not clear a priori in which direction the wear will evolve. In the worst case, it evolves in the view direction of the monitoring system and thus cannot be detected. In most cases, a suitable positioning of the camera is sufficient. For an optimal solution, a second camera could be mounted horizontally and perpendicular to the first one to ensure that positional deviations are captured. However, this is accompanied by higher costs.

Computational aspect

The evaluation of a welding camera frame, using the proposed method, takes 40 ms on an Intel Core i7-10700K CPU and 25 ms on the Nvidia GeForce RTX 3070 GPU, with a setup as described in section “Vision system”. The real time monitoring of the welding process could be realized and the developed wire monitoring system enables the in-situ control of the weld bead height, when using the CLC for the \(d_{NtW}\) designed by Reisch et al. (2021).

Spatter monitoring

Spatter in welding might indicate process instabilities. In the following, an automated spatter detection and quantification is described. Monitoring spatter formation does not face the same image processing challenges as monitoring the wire. Spatter components have similar shapes and brightness and thus are, as objects, easier to detect. However, specular reflections can have the same visual characteristics and may be falsely detected as spatter. These are identified by determining changes between consecutive frames. The algorithm consists of two stages, spatter detection and a refinement stage to remove false positives.

Spatter detection

Spatter appears as small bright spots, is a temporary phenomenon and spreads beyond the immediate processing zone. Thus, the full frames are inspected. The video stream is a function of time with discrete arguments. Therefore, the first step is to compute the finite difference between consecutive frames to remove background and low-intensity areas. Next, clusters of specular reflections, mostly residing around the immediate processing zone, are identified using morphological closing. Finally, a CCA keeps only areas representing potential spatter. The image processing pipeline for a frame, together with the corresponding intermediate results depicted for a cut-out (blue) for visualization reasons, is shown in Fig. 14.

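Background subtraction: For a frame \(f_i\), \(i=0,1, ...\) consider its successor \(f_{i+1}\) and the pixel-wise finite difference

\[\Delta f_i = f_{i+1} - f_i .\]

Using a suitable threshold \(T>0\), this yields binary images \(f^{+}\) resp. \(f^{-}\) containing high intensity areas only visible in \(f_{i+1}\) resp. \(f_{i}\), defined by:

\[f^{+}(x,y) = \begin{cases} 1, & \Delta f_i(x,y) > T \\ 0, & \text{otherwise} \end{cases} \qquad f^{-}(x,y) = \begin{cases} 1, & \Delta f_i(x,y) < -T \\ 0, & \text{otherwise.} \end{cases}\]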

Spatter detection need not be performed twice for \(f_i\), since the information about potential spatter is already known from the previous iteration, except if \(i=0\). In the latter case, the rest of the spatter detection pipeline is performed for both \(f^+\) and \(f^-\); otherwise it is performed only for \(f^+\). The algorithm is insensitive to the choice of T. Any bright objects left after the background subtraction that do not represent spatter are removed by the following processing steps.
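A minimal NumPy sketch of the background subtraction is given below, assuming the difference is taken as successor minus current frame. The threshold value `T=50` and the function name are illustrative assumptions. The cast to a signed type matters, because subtracting 8-bit frames directly would wrap around instead of producing negative values.

```python
import numpy as np


def background_subtraction(f_i, f_next, T=50):
    """Return binary images (f_plus, f_minus) of areas that are bright in only one frame."""
    # Cast to a signed type first: uint8 arithmetic would wrap around on negative values.
    diff = f_next.astype(np.int16) - f_i.astype(np.int16)
    f_plus = diff > T        # bright only in the successor frame
    f_minus = diff < -T      # bright only in the current frame
    return f_plus, f_minus


f_i = np.zeros((3, 3), dtype=np.uint8)
f_next = np.zeros((3, 3), dtype=np.uint8)
f_i[0, 0] = 200              # bright spot that disappears
f_next[2, 2] = 255           # bright spot that appears
f_plus, f_minus = background_subtraction(f_i, f_next)
print(np.argwhere(f_plus).tolist(), np.argwhere(f_minus).tolist())
```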

Clustering: Assuming that individual spatter instances have a minimum distance \(d_{min}\) to each other, clusters of specular reflections, mostly residing on weld bead and melt pool, can be identified using morphological closing. This connects high intensity areas which are close together with respect to \(d_{min}\) and leaves the rest unchanged. This results in the morphological closure \(C(f^{+})\) of the binary image \(f^{+}\).

Spatter identification: Non-spatter-like areas are finally removed considering their morphology and size, using a CCA. This yields a binary image \(S(f^{+}) \) containing only spatter-like components visible in \(f_{i+1}\).
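The clustering and identification steps can be sketched without external image libraries as follows. This is an illustration under stated assumptions rather than the OpenCV-based implementation: the structuring element is a square whose half-width stands in for \(d_{min}\), components are filtered by area only (the shape criterion is omitted), and the names and default values are hypothetical. The function returns the barycenters of the remaining components instead of a binary image.

```python
import numpy as np


def _dilate(img, k):
    """Binary dilation with a (2k+1) x (2k+1) square structuring element."""
    h, w = img.shape
    padded = np.pad(img, k, constant_values=False)
    out = np.zeros_like(img)
    for dy in range(2 * k + 1):
        for dx in range(2 * k + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out


def _erode(img, k):
    """Binary erosion; pixels outside the image are treated as foreground."""
    h, w = img.shape
    padded = np.pad(img, k, constant_values=True)
    out = np.ones_like(img)
    for dy in range(2 * k + 1):
        for dx in range(2 * k + 1):
            out &= padded[dy:dy + h, dx:dx + w]
    return out


def closing(img, k):
    """Morphological closing: dilation followed by erosion."""
    return _erode(_dilate(img, k), k)


def connected_components(img):
    """Return the 8-connected foreground components as lists of (row, col) pixels."""
    h, w = img.shape
    seen = np.zeros((h, w), dtype=bool)
    components = []
    for y, x in zip(*np.nonzero(img)):
        if seen[y, x]:
            continue
        stack, pixels = [(y, x)], []
        seen[y, x] = True
        while stack:
            cy, cx = stack.pop()
            pixels.append((cy, cx))
            for ny in range(max(cy - 1, 0), min(cy + 2, h)):
                for nx in range(max(cx - 1, 0), min(cx + 2, w)):
                    if img[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        stack.append((ny, nx))
        components.append(pixels)
    return components


def spatter_candidates(f_plus, d_min=2, max_area=30):
    """Barycenters (x, y) of small components that remain after closing."""
    closed = closing(f_plus.astype(bool), d_min)
    barycenters = []
    for pixels in connected_components(closed):
        if len(pixels) <= max_area:          # large merged clusters are discarded
            ys, xs = zip(*pixels)
            barycenters.append((float(sum(xs)) / len(xs), float(sum(ys)) / len(ys)))
    return barycenters
```

Without the closing step, every bright speck inside a reflection cluster would pass the area filter on its own; merging them first is what allows the size criterion to reject the cluster as a whole.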

Spatter refinement

Not all of the potential spatter components are real. Inherent specular reflections from metal surfaces often have the same visual characteristics as spatter and mostly exist over several consecutive frames. They undergo a relatively slow translation within subsequent frames and can be filtered out by comparing the binary spatter detection results of the considered frame \(f_i\) with its corresponding predecessor and successor results using a predefined translation radius R. Let \(S(f_i)\) and \(S(f_{i+1}) \) be the spatter detection results of \(f_i\) and \(f_{i+1}\). For \( k=1,2,...,m\), where m is the number of potential spatter components in \(S(f_i)\), the distances \(d(s_k,s_j) =\Vert s_k - s_j\Vert ,\quad j=1,2,...,n\) between the barycenter \(s_k\) of the k-th component and the n barycenters \(s_j\) of the potential spatter components visible in \(S(f_{i+1})\) are computed. If \(d(s_k,s_j) < R\) for some j, both components are considered false positives. Thereby, components referring to specular reflections visible in both frames \(f_i\) and \(f_{i+1}\) are removed from \(S(f_{i})\) and \(S(f_{i+1})\). For \(S(f_{i})\), this procedure is also performed with the predecessor \(S(f_{i-1})\), so that every frame, except for the first one, is checked against its predecessor and successor for specular reflections. A visualization of the spatter monitoring result for a welding camera frame is shown in Fig. 15.
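The refinement for one frame can be sketched with pairwise barycenter distances. The function name and the value of R (in pixels) are assumptions; barycenters are passed as lists of (x, y) pairs, and an empty list is passed as predecessor for the first frame.

```python
import numpy as np


def refine_spatter(current, predecessor, successor, R=5.0):
    """Drop candidates of the current frame that reappear within radius R in a neighbouring frame."""
    cur = np.asarray(current, dtype=float).reshape(-1, 2)
    is_reflection = np.zeros(len(cur), dtype=bool)
    for other in (predecessor, successor):
        oth = np.asarray(other, dtype=float).reshape(-1, 2)
        if len(cur) and len(oth):
            # Pairwise Euclidean distances between all barycenters of the two frames.
            dist = np.linalg.norm(cur[:, None, :] - oth[None, :, :], axis=2)
            is_reflection |= (dist < R).any(axis=1)
    return cur[~is_reflection]


kept = refine_spatter(
    current=[(10, 10), (50, 50)],
    predecessor=[(11, 10)],      # nearly unchanged position: treated as a reflection
    successor=[(80, 80)],
)
print(kept.tolist())
```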

Accuracy evaluation

The spatter monitoring algorithm was evaluated on a sequence of consecutive camera frames showing continued spattering.

In total, 95.5% of the spatter was detected as such. 10% of all detected spatter were false positives. The false positives are caused by specular reflections from weld beads and wire. Such misclassifications were observed throughout the build process and can be considered background noise, since there were never more than five false positives per frame and the goal is to detect heavy spattering. To improve the accuracy even further, one can use either an algorithmic approach like pattern matching or a neural network.

Accuracy improvement

Figure 16 illustrates incorrectly and correctly recognized spatter. To improve the accuracy of the spatter detection algorithm, the results are analysed by a convolutional autoencoder. Only a small database of 1000 spatter images like the ones shown in Fig. 16 (bottom) was required to train the network with sufficient accuracy. The network learns by reducing the reconstruction error. Objects that are not similar to the ones the network was trained on result in a significantly higher reconstruction error when fed into the trained network. This principle can be used to detect false positive spatter. A standard architecture was chosen, consisting of three convolutional layers for the encoder as well as for the decoder part. Each convolutional layer is followed by a downsampling step in the encoder part and an upsampling step in the decoder part.

To see if the network has learned to distinguish real spatter from specular reflections, the mean square error between the input and the reconstructed image is computed for two sets of one hundred images containing real spatter and false positives, respectively. Figure 17 shows the result. Setting a suitable threshold of 0.002 for the mean square error, 87% of the false positives can be detected as such, which significantly improves the accuracy of the proposed spatter detection.
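The decision rule on the reconstruction error can be sketched as follows. The autoencoder itself is not shown; `reconstruction` stands for its output on a candidate patch, and the patch intensities are assumed to be scaled to the range 0 to 1, which the text does not state. The function name is hypothetical.

```python
import numpy as np


def is_real_spatter(patch, reconstruction, threshold=0.002):
    """Return (decision, mse); a high reconstruction error marks a false positive."""
    patch = np.asarray(patch, dtype=float)
    reconstruction = np.asarray(reconstruction, dtype=float)
    mse = float(np.mean((patch - reconstruction) ** 2))
    return mse <= threshold, mse


patch = np.full((8, 8), 0.5)
print(is_real_spatter(patch, patch + 0.01))   # well reconstructed, accepted as spatter
print(is_real_spatter(patch, patch + 0.10))   # poorly reconstructed, rejected
```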

Computational aspect

The spatter monitoring algorithm was implemented in Python using the computer vision library OpenCV. The evaluation of a welding camera frame takes an average of 15 ms on an Intel Core i7-10700K CPU. For the algorithm to work correctly, welding speed and capturing frequency must be coordinated. If the acquisition frequency is too low for a given welding speed, the temporal context for the refinement step is lost and it is no longer possible to distinguish between spatter and specular reflections. The experiments were conducted with a welding speed of 350 mm/min and a frame rate of 20 Hz. The temporal resolution is sufficient to capture the process dynamics and stability statements can be derived.
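As a rough plausibility check of the stated coordination, and assuming a constant travel speed, the distance \(\Delta s\) covered by the torch between two consecutive frames follows from the welding speed v and the frame rate f:

\[\Delta s = \frac{v}{f} = \frac{350\ \text{mm}\,\text{min}^{-1}}{60\ \text{s}\,\text{min}^{-1} \cdot 20\ \text{s}^{-1}} \approx 0.29\ \text{mm}.\]

Since the camera moves with the torch, a reflection that is stationary on the workpiece shifts by about this amount between frames. The translation radius R of the refinement step therefore has to cover at least this shift in image coordinates, and a lower frame rate or a higher welding speed increases it proportionally.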

Conclusion

This paper explored monitoring of the process stability in WAAM using a welding camera as the only sensing system. Two algorithms were proposed: a machine learning based wire segmentation, which allows the direct measurement of the nozzle-to-work distance \(d_{NtW}\) and the horizontal position of the wire, and a spatter detection based on classic image processing techniques to identify weld instabilities. The developed wire monitoring overcomes the problem of distance measurement in an environment with highly fluctuating lighting conditions and is independent of inner changes in the welding process. This enables a robust closed-loop-control of the \(d_{NtW}\), which stabilizes the welding process, improves geometric accuracy and material properties of the manufactured parts and allows the fabrication of parts with complex geometries that would be impossible to build without process control. Thereby, a further step towards first-time-right printing and process automation in WAAM is accomplished. The wire monitoring system is independent of the choice of material, thus eliminating the effort of empirical data determination for different materials.

In contrast to the wire segmentation, which was realized mainly with machine learning techniques, spatter could be detected and quantified in-process with conventional image processing techniques because it is sharply delineated from its surroundings. Nevertheless, misclassifications occur; these can be drastically reduced by using a convolutional autoencoder to further distinguish between spatter and specular reflections.

Overall, this study revealed the great potential of welding cameras for process monitoring in industrial WAAM cells.

Data availability

Data sets generated during the current study are available from the corresponding author on reasonable request.

References

Chen, X., Kong, F., Fu, Y., et al. (2021). A review on wire-arc additive manufacturing: Typical defects, detection approaches, and multisensor data fusion-based model. The International Journal of Advanced Manufacturing Technology, 117, 707–727. https://doi.org/10.1007/s00170-021-07807-8

Chen, X., Zhang, H., Hu, J., et al. (2019). A passive on-line defect detection method for wire and arc additive manufacturing based on infrared thermography. In Solid freeform fabrication 2019: Proceedings of the 30th annual international solid freeform fabrication symposium.

Hallam, J. M., Kissinger, T., Charrett, T. O. H., et al. (2022). In-process range-resolved interferometric (RRI) 3D layer height measurements for wire + arc additive manufacturing (WAAM). Measurement Science and Technology. https://doi.org/10.1088/1361-6501/ac440e

Han, P., Li, Y., & Zhang, G. (2018). Online control of deposited geometry of multi-layer multi-bead structure for wire and arc additive manufacturing. Transactions on Intelligent Welding Manufacturing. https://doi.org/10.1007/978-981-10-5355-9_7

Hauser, T., Da Silva, A., Reisch, R. T., et al. (2020). Fluctuation effects in wire arc additive manufacturing of aluminium analysed by high-speed imaging. Journal of Manufacturing Processes, 56, 1088–1098. https://doi.org/10.1016/j.jmapro.2020.05.030

Hauser, T., Reisch, R. T., Breese, P. P., et al. (2021). Porosity in wire arc additive manufacturing of aluminium alloys. Additive Manufacturing. https://doi.org/10.1016/j.addma.2021.101993

Hauser, T., Reisch, R. T., Breese, P. P., et al. (2021). Oxidation in wire arc additive manufacturing of aluminium alloys. Additive Manufacturing. https://doi.org/10.1016/j.addma.2021.101958

Henckell, P., Gierth, M., Ali, Y., et al. (2020). Reduction of energy input in wire arc additive manufacturing (WAAM) with gas metal arc welding (GMAW). Materials. https://doi.org/10.3390/ma13112491

Hölscher, L. V., Hassel, T., & Maier, H. J. (2022). Detection of the contact tube to working distance in wire and arc additive manufacturing. The International Journal of Advanced Manufacturing Technology, 120, 989–999. https://doi.org/10.1007/s00170-022-08805-0

Kissinger, T., Gomis, B., Ding, J., et al. (2019). Measurements of Wire + Arc additive manufacturing layer heights during arc operation using coherent range-resolved interferometry (CO-RRI). euspen. Retrieved from https://www.euspen.eu/knowledge-base/AM19102.pdf

Lee, C., Seo, G., Kim, D., et al. (2021). Development of defect detection AI model for wire + arc additive manufacturing using high dynamic range images. Applied Sciences, 11, 7541. https://doi.org/10.3390/app11167541

Li, Y. (2021). Machine learning based defect detection in robotic wire arc additive manufacturing (Doctor of Philosophy thesis). School of Mechanical, Materials, Mechatronic and Biomedical Engineering, University of Wollongong, Australia. Retrieved from https://ro.uow.edu.au/theses1/1408/

Li, Y., Li, X., Horváth, I., et al. (2021). Interlayer closed-loop control of forming geometries for wire and arc additive manufacturing based on fuzzy-logic inference. Journal of Manufacturing Processes, 63, 35–47. https://doi.org/10.1016/j.jmapro.2020.04.009

Mahfudianto, F., Warinsiriruk, E., & Juy-A-Ka, S. (2019). Estimation of Contact Tip to Work Distance (CTWD) using Artificial Neural Network (ANN) in GMAW. MATEC Web of Conferences, 269, 04004. https://doi.org/10.1051/matecconf/201926904004

Martina, F., & Williams, S. W. (2015). Wire+arc additive manufacturing vs. traditional machining from solid: A cost comparison.

Mu, H., Polden, J., Li, Y., et al. (2022). Layer by layer model-based adaptive control for wire arc additive manufacturing of thin-wall structures. Journal of Intelligent Manufacturing, 33, 1165–1180. https://doi.org/10.1007/s10845-022-01920-5

Reisch, R. T., Hauser, T., Franke, J., et al. (2021). Nozzle-to-work distance measurement and control in wire arc additive manufacturing. Paper presented at ESSE 2021: The 2nd European symposium on software engineering (pp. 163–172). https://doi.org/10.1145/3501774.3501798

Reisch, R. T., Hauser, T., Lutz, B., et al. (2020). Distance-based multivariate anomaly detection in wire arc additive manufacturing. Paper presented at the 19th IEEE international conference on machine learning and applications (ICMLA). https://doi.org/10.1109/ICMLA51294.2020.00109

Reisgen, U., Mann, S., Oster, P., et al. (2019). Study on workpiece and welding torch height control for polydirectional WAAM by means of image processing. In International conference on automation science and engineering (CASE) (pp. 6–11). https://doi.org/10.1109/COASE.2019.8843076

Rodrigues, T. A., Duarte, V., Miranda, R. M., et al. (2019). Current status and perspectives on wire and arc additive manufacturing. Materials, 12(7), 1121. https://doi.org/10.3390/ma12071121

Ronneberg, O., Fischer, P., & Brox, T. (2015). U-Net: Convolutional networks for biomedical image segmentation. Retrieved from https://arxiv.org/abs/1505.04597

Ščetinec, A., Klobčar, D., & Bračun, D. (2021). In-process path replanning and online layer height control through deposition arc current for gas metal arc based additive manufacturing. Journal of Manufacturing Processes, 64, 1169–1179.

Selvi, S., Vishvaksenan, A., & Rajasekar, E. (2018). Cold metal transfer (CMT) technology—An overview. Defence Technology, 14, 28–44. https://doi.org/10.1016/j.dt.2017.08.002

Serrati, D. S. M., Machado, M. A., Oliveira, J. P., et al. (2023). Non-destructive testing inspection for metal components produced using wire and arc additive manufacturing. Metals. https://doi.org/10.3390/met13040648

Tang, S., Wang, G., Song, H., et al. (2021). A novel method of bead modeling and control for wire and arc additive manufacturing. Rapid Prototyping Journal, 27(2), 311–320. https://doi.org/10.1108/rpj-05-2020-0097

Tang, S., Wang, G., Zhang, H., et al. (2017). An online surface defects detection system for AWAM based on deep learning. In Solid freeform fabrication 2017: Proceedings of the 28th annual international solid freeform fabrication symposium—An additive manufacturing conference. Retrieved from https://utw10945.utweb.utexas.edu/sites/default/files/2017/Manuscripts/AnOnlineSurfaceDefectsDetectionSystemforAWA.pdf

Williams, S. W., Martina, F., Addison, A. C., et al. (2016). Wire + arc additive manufacturing. Materials Science and Technology, 32(7), 641–647. https://doi.org/10.1179/1743284715Y.0000000073

Wu, B., Pan, Z., Ding, D., et al. (2018). A review of the wire arc additive manufacturing of metals: Properties, defects and quality improvement. Journal of Manufacturing Processes, 35, 127–139. https://doi.org/10.1016/j.jmapro.2018.08.001

Xiong, J., & Zhang, G. (2014). Adaptive control of deposited height in GMAW-based layer additive manufacturing. Journal of Materials Processing Technology, 214, 962–968. https://doi.org/10.1016/j.jmatprotec.2013.11.014

Xiong, J., Zhang, Y., & Pi, Y. (2021). Control of deposition height in WAAM using visual inspection of previous and current layers. Journal of Intelligent Manufacturing, 32, 2209–2217. https://doi.org/10.1007/s10845-020-01634-6

Zhan, Q., Liang, Y., Ding, J., et al. (2017). A wire deflection detection method based on image processing in wire+arc additive manufacturing. The International Journal of Advanced Manufacturing Technology, 89, 85–93. https://doi.org/10.1007/s00170-016-9106-2

Acknowledgements

The authors gratefully acknowledge funding from “BayVFP Förderlinie Digitalisierung/FuE-Programm” for the project VALIDAD (Validierung additiver Fertigungstechniken für die Anwendung in der Metallverarbeitung), Bavarian funding project number: IUK-1905-0013.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Ethics declarations

Conflict of interest

All authors certify that they have no affiliations with or involvement in any organization or entity with any financial interest or non-financial interest in the subject matter or materials discussed in this manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Franke, J., Heinrich, F. & Reisch, R.T. Vision based process monitoring in wire arc additive manufacturing (WAAM). J Intell Manuf (2024). https://doi.org/10.1007/s10845-023-02287-x

DOI: https://doi.org/10.1007/s10845-023-02287-x