Abstract

Increasing digitalization enables the use of machine learning (ML) methods for analyzing and optimizing manufacturing processes. A main application of ML is the construction of quality prediction models, which can be used, among other things, for documentation purposes, as assistance systems for process operators, or for adaptive process control. The quality of such ML models typically depends strongly on the amount and the quality of the data used for training. In manufacturing, the size of available datasets before the start of production (SOP) is often limited. In contrast to data, expert knowledge is commonly available in manufacturing. Therefore, this study introduces a general methodology for building quality prediction models with ML methods on small datasets by integrating shape expert knowledge, that is, prior knowledge about the shape of the input–output relationship to be learned. The proposed methodology is applied to a brushing process with 125 data points for predicting the surface roughness as a function of five process variables. As opposed to conventional ML methods for small datasets, the proposed methodology produces prediction models that strictly comply with all the expert knowledge specified by the involved process specialists. In particular, the direct involvement of process experts in the training of the models leads to a very clear interpretation and, by extension, to a high acceptance of the models. While working out the shape knowledge generally requires some iterations, another clear merit of the proposed methodology is that, in contrast to most conventional ML methods, it involves no time-consuming and often heuristic hyperparameter tuning or model selection step.

Introduction

The shift from mass production to mass customization and personalization (Hu, 2013) requires high standards on production processes. In spite of the high variance between different products and small batch sizes of the products to be manufactured, the product quality in mass customization has to be comparable to the quality of products from established mass production processes. It is therefore essential to keep process ramp-up times low and to achieve the required product quality as directly as possible. This requires a profound and solid understanding of the dependencies between process parameters and quality criteria of the final product, even before the start of production (SOP). Various ways exist to gain this kind of process knowledge: for example, by carrying out experiments, setting up simulations, or exploiting available expert knowledge. In production, expert knowledge in particular plays a central role. This is because complex cause–effect relationships operate between the input–output parameters during machining, and these parameters generally have to be set in a result-oriented manner in a short amount of time without recourse to real-time data sets. Indeed, process ramp-up is still commonly done by process experts purely based on their knowledge. Furthermore, many processes are controlled by experts during production to ensure that consistently high quality is produced.

In the course of digitalization, the acquisition of and the access to data in manufacturing have increased significantly in recent years. Sensors, extended data acquisition by the controllers themselves, and the continuous development of low-cost sensors allow for the acquisition of large amounts of data (Wuest et al., 2016). Accordingly, more and more data-driven approaches, most notably machine learning (ML) methods, are used in manufacturing to describe the dependencies between process parameters and quality parameters (Weichert et al., 2019). In principle, such data-driven methods are suitable for the rapid generation of quality prediction models in production, but the quality of ML models crucially depends on the amount and the information content of the available data. The data can be generated from experiments or from simulations. In general, experiments for process development or improvement are expensive and accordingly the number of experiments to be performed should be kept to a minimum. In this context, design of experiments can be used to obtain maximum information about the process behavior with as few experiments as possible (Montgomery, 2017; Fedorov & Leonov, 2014). Similarly, the generation of data using realistic simulation models can be expensive as well, because the models must be created and calibrated, and, depending on the process, high computing capacities are required to generate the data. In conclusion, the amount of data available in manufacturing before the SOP is typically rather small.

This paper introduces a novel and general methodology to leverage expert knowledge in order to compensate for such data sparsity and to arrive at prediction models with good predictive power in spite of small datasets. Specifically, the proposed methodology is dedicated to shape expert knowledge, that is, expert knowledge about the qualitative shape of the input–output relationship to be learned. Simple examples of such shape knowledge are prior monotonicity or prior convexity knowledge. Additionally, the proposed methodology directly involves process experts in capturing and in incorporating their shape knowledge into the resulting prediction model.

In more detail, the proposed methodology starts out from an initial, purely data-based prediction model and then proceeds in the following steps. In a first step, a process expert inspects selected, particularly informative graphs of this model. In a second step, the expert then specifies in what way these graphs confirm or contradict their shape expectations. And in a third and last step, the thus specified shape expert knowledge is incorporated into a new prediction model which strictly complies with all the imposed shape constraints. In order to compute this new model, the semi-infinite optimization approach to shape-constrained regression (SIASCOR) is taken, based on the algorithms from Schmid and Poursanidis (2021). In the following, this approach is referred to as the SIASCOR method for brevity. While a semi-infinite optimization approach has also been pursued in von Kurnatowski et al. (2021), the algorithm used here is superior to the reference-grid algorithm from von Kurnatowski et al. (2021), both from a theoretical and from a practical point of view. Additionally, von Kurnatowski et al. (2021) treat only a single kind of shape constraints, namely monotonicity constraints. Apart from this reference, there is, to the best of our knowledge, only one other reference that treats shape constraints by means of semi-infinite optimization, namely Cozad et al. (2015). Compared to the algorithm used here, however, the algorithm from Cozad et al. (2015) is less satisfactory. In particular, it does not guarantee the strict fulfilment of the imposed shape constraints.

The general methodology is applied to the exemplary process of grinding with brushes. In spite of the small set of available measurement data, the methodology proposed here leads to a high-quality prediction model for the surface roughness of the brushed workpiece. Aside from the brushing process, the SIASCOR method can also be successfully applied to the glass-bending and press-hardening processes described in von Kurnatowski et al. (2021).

The paper is organized as follows. The section “Related works” gives an overview of the related work. In the section “A methodology to capture and incorporate shape expert knowledge”, the general methodology to capture and incorporate shape expert knowledge is introduced, and its individual steps are explained in detail. The section “Application example” describes the application example, that is, the brushing process. The section “Results and discussion” discusses the resulting prediction models applied to the brushing process and compares them to more traditional ML models. The section “Conclusion and future work” concludes the paper with a summary and an outlook on future research.

Related works

Quality prediction is essential to optimize processes in manufacturing. It can help to quickly ramp up processes and to document product quality. Quality prediction models can be analytical or data-driven. Benardos and Vosniakos (2003) review both approaches in the context of machining processes to describe the surface roughness as a function of different process variables. Data-driven quality prediction models for process optimization have recently been applied to a machining process in Proteau et al. (2021), to textile draping processes in Pfrommer et al. (2018), and to a laser cutting process in Chaki et al. (2015), just to name a few.

Weichert et al. (2019) show that ML models used for optimization of production processes are often trained with relatively small datasets. In this context, attempts are often made to represent complex relationships with complex models and small datasets. Also in other domains, such as process engineering (Napoli & Xibilia, 2011) or medical applications (Shaikhina & Khovanova, 2017), small amounts of data play an important role in the use of ML methods—and will continue to do so (Kang et al., 2021). Accordingly, there already exist quite many methods to train complex models with small datasets in the literature. These known approaches to sparse-data learning can be categorized as purely data-based methods on the one hand and as expert-knowledge-based methods on the other hand.

In the following literature review, expert-knowledge-based approaches that typically require large—or, at least, non-sparse—datasets are not included. In particular, the projection- and rearrangement-based approaches to monotonic regression from Lin and Dunson (2014), Schmid (2021) and Dette and Scheder (2006), Chernozhukov et al. (2009) are not reviewed in detail here. Similarly, the kernel-based approach to shape-constrained regression from Aubin-Frankowski and Szabo (2020) is only mentioned here but not discussed in detail, because the way the shape-knowledge is integrated differs completely from our semi-infinite optimization approach.

Purely data-based methods for sparse-data learning in manufacturing

An important method for training ML models with small datasets is to generate additional, artificial data. Among these virtual-data methods the mega-trend-diffusion (MTD) technique is particularly common. It was developed by Li et al. (2007) using flexible manufacturing system scheduling as an example. In Li et al. (2013) virtual data is generated using a combination of MTD and a plausibility assessment mechanism. In the second step, the generated data is used to train an artificial neural network (ANN) and a support vector regression model with sample data from the manufacturing of liquid-crystal-display (LCD) panels. Using multi-layer ceramic capacitor manufacturing as an example, bootstrapping is used in Tsai and Li (2008) to generate additional virtual data and then train an ANN. Napoli and Xibilia (2011) also use bootstrapping and noise injection to generate virtual data and consequently improve the prediction of an ANN. The methodology is applied to estimate the freezing point of kerosene in a topping unit in chemical engineering. In Chen et al. (2017) virtual data is generated using particle swarm optimization to improve the prediction quality of an extreme learning machine model.

In addition to the methods for generating virtual data and the use of simple ML methods such as linear regression, lasso or ridge regression (Bishop, 2006), other ML methods from the literature can also be used in the context of small datasets. For example, the multi-model approaches in Li et al. (2012) and in Chang et al. (2015) can be mentioned here. The multi-model approaches are used in the field of LCD panel manufacturing to improve the prediction quality. Other concrete examples are the models described by Torre et al. (2019), which are based on polynomial chaos expansion. These models are also suitable for learning complex relationships in spite of few data points.

Expert-knowledge-based methods for sparse-data learning in manufacturing

An extensive general survey about integrating prior knowledge in learning systems is given in Rueden et al. (2021). The integration of knowledge depends on the source and the representation of the knowledge: for example, algebraic equations or simulation results represent scientific knowledge and can be integrated into the learning algorithm or the training data, respectively.

Apart from this general reference, recent years have brought about various papers on leveraging expert knowledge in specific manufacturing applications. Among other things, these papers are motivated by the fact that production planning becomes more and more difficult for companies due to mass customization. In order to improve the quality of production planning, Schuh et al. (2019) show that enriching production data with domain knowledge leads to an improvement in the calculation of the transition time with regression trees.

Another broad field of research is knowledge integration via Bayesian networks. In Zhang et al. (2020) domain knowledge is incorporated using a Bayesian network to predict the energy consumption during injection molding. Lokrantz et al. (2018) present an ML framework for root cause analysis of faults and quality deviations, in which knowledge is integrated via Bayesian networks. Based on synthetically generated manufacturing data, an improvement of the inferences could be shown compared to models without expert knowledge. He et al. (2019) show a way to use a Bayesian network to inject expert knowledge about the manufacturing process of a cylinder head to evaluate the functional state of manufacturing on the one hand, and to identify causes of functional defects of the final product on the other hand.

Another possibility of root cause analysis using domain-specific knowledge is described by Rahm et al. (2018). Here, knowledge is acquired within an assistance system and combined with ML methods to support the operator in the diagnosis and elimination of faults occurring at packaging machines. Xu et al. (2018) suggested an intelligent knowledge-driven system to solve quality problems in the automotive industry. In that approach, an intelligent module structures and analyzes the knowledge database. The intelligent module provides additional information to experts responsible for solving quality problems.

Lu et al. (2017) incorporate knowledge of the electrochemical micro-machining process into the structure of a neural network. It is demonstrated that integrating knowledge achieves better prediction accuracy compared to classical neural networks. Another way to integrate knowledge about the additive manufacturing process into neural networks is based on causal graphs and proposed by Nagarajan et al. (2019). This approach leads to a more robust model with better generalization capabilities. In Ning et al. (2019), a control system for a grinding process is presented in which, among other things, a fuzzy neural network is used to control the surface roughness of the workpiece. Incorporating knowledge into models using fuzzy logic is a well-known and proven method, especially in the field of grinding (Brinksmeier et al., 2006).

In contrast to the methodology proposed in the present paper, the references mentioned above are not devoted to shape expert knowledge but to other kinds of expert knowledge, which relate to the input–output function to be learned only indirectly. Indeed, He et al. (2019), Lokrantz et al. (2018), Nagarajan et al. (2019), and Zhang et al. (2020) are concerned with expert knowledge in the form of cause–effect relationships and they integrate this kind of knowledge into the model’s architecture. Also, Ning et al. (2019), Lu et al. (2017), and Schuh et al. (2019) are concerned with expert knowledge in the form of explicit physical equation relationships and they integrate these equations into the models, for instance, in the form of new features.

A paper that does consider shape constraints in a manufacturing context is Hao et al. (2020). In contrast to the present paper, the mentioned paper is confined to (piecewise) monotonicity constraints and incorporates these constraints in a completely different way. Indeed, it incorporates the monotonicity constraints into Gaussian process surrogate models (Riihimäki & Vehtari, 2010). In order to get better surrogate models, these models are trained on an iteratively increasing number of sample points determined by Bayesian optimization. In particular, as in Riihimäki and Vehtari (2010), the monotonicity constraints are understood only in a probabilistic sense and their fulfilment is enforced only at a finite number of sampling points, namely at the sampling points that are proposed by the acquisition function for the Bayesian optimization in Hao et al. (2020). Another important difference to the methodology proposed here is that Hao et al. (2020) do not discuss methods of capturing the shape constraints or, in other words, of supporting the expert in working out and specifying their piecewise monotonicity constraints.

A methodology to capture and incorporate shape expert knowledge

As has been pointed out in the previous section, there are expert-knowledge-free and expert-knowledge-based methods to cope with small datasets in the training of ML models in manufacturing. An obvious advantage of expert-knowledge-based approaches is that they typically yield models with superior predictive power, because they take into account more information than the pure data. Another clear advantage of expert-knowledge-based approaches is that their models tend to enjoy higher acceptance among process experts, because the experts are directly involved in the training of these models.

Therefore, this paper proposes a general methodology to capture and incorporate expert knowledge into the training of a powerful prediction model for certain process output quantities of interest. Specifically, the proposed methodology is dedicated to shape expert knowledge, that is, prior knowledge about the qualitative shape of the considered output quantity y as a function

\[ y = y(x_1, \ldots , x_d) \tag{1} \]

of relevant process input parameters \(x_1, \ldots , x_d\). Such shape expert knowledge can come in many forms. An expert might know, for instance, that the considered output quantity y is monotonically increasing with respect to (w.r.t.) \(x_1\), concave w.r.t. \(x_2\), and monotonically decreasing and convex w.r.t. \(x_3\).

In a nutshell, the proposed methodology to capture and incorporate shape expert knowledge starts out from an initial purely data-based prediction model and then proceeds in the following three steps.

(1) Inspection of the initial model by a process expert,

(2) Specification of shape expert knowledge by the expert,

(3) Integration of the specified shape expert knowledge into the training of a new prediction model which strictly complies with the imposed shape knowledge.

This new and shape-knowledge-compliant prediction model is computed with the help of the SIASCOR method (Schmid & Poursanidis, 2021) and it is therefore referred to as the SIASCOR model in the following. After a first run through the steps above, the shape of the SIASCOR model can still be insufficient in some respects, because the shape knowledge specified in the first run might not have been complete yet. In this case, steps one to three can be passed through again (with the initial model replaced by the current SIASCOR model), until the expert notices no more shape knowledge violations in the final SIASCOR model. This cyclic procedure of obtaining more and more refined shape-knowledge-compliant models from an initial purely data-based model is sketched schematically in Fig. 1.
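The cyclic procedure can be summarized as a short control loop. The following is only a minimal sketch: the callables `inspect`, `specify`, and `fit_constrained` are hypothetical placeholders for the expert interaction and for the SIASCOR training, and the `max_rounds` cap is an assumption added for safety, not part of the methodology.

```python
def refine_model(initial_model, inspect, specify, fit_constrained, max_rounds=10):
    """Repeat steps (1)-(3) until the expert adds no further shape knowledge.

    All three callables are hypothetical stand-ins:
      inspect(model)              -> graphs shown to the expert      (step 1)
      specify(graphs, known)      -> list of newly noticed constraints (step 2)
      fit_constrained(constraints)-> new shape-compliant model        (step 3)
    """
    model = initial_model
    constraints = []
    for _ in range(max_rounds):
        graphs = inspect(model)
        new_constraints = specify(graphs, constraints)
        if not new_constraints:
            # The expert notices no more violations: accept the current model.
            break
        constraints.extend(new_constraints)
        model = fit_constrained(constraints)
    return model, constraints
```

The loop keeps all previously specified constraints and only adds to them, which mirrors the idea of obtaining more and more refined models in each pass.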

In the remainder of this section, the individual steps of the proposed methodology are explained in detail. The input parameter range on which the models are supposed to make reasonable predictions is always denoted by the symbol X. It is further assumed that X is a rectangular set, that is,

\[ X = [a_1, b_1] \times \cdots \times [a_d, b_d] \subset {\mathbb {R}}^d \tag{2} \]

with lower and upper bounds \(a_i\) and \(b_i\) for the ith input parameter \(x_i\). Additionally, the typically small set of measurement data available for the relationship (1) is always denoted by the symbol

\[ {\mathcal {D}} = \{ (\varvec{x}^l, y^l): l \in \{1, \ldots , N\} \}. \tag{3} \]

Initial prediction model

As a starting and reference point of the proposed methodology, an initial purely data-based model \({\hat{y}}^0\) is trained for (1), using standard polynomial regression with ridge or lasso regularization (Bishop, 2006). Its sole purpose is to visually assist the process expert in specifying shape knowledge for the SIASCOR model. So, the initial model \({\hat{y}}^0\) is assumed to be a multivariate polynomial

\[ {\hat{y}}_{\varvec{w}}^0(\varvec{x}) = \varvec{w}^\top \varvec{\phi }^0(\varvec{x}) \qquad (\varvec{x} \in X) \tag{4} \]

of some degree \(m^0 \in {\mathbb {N}}\), where \(\varvec{\phi }^0(\varvec{x})\) is the vector consisting of all monomials \(x_1^{p_1} \cdots x_d^{p_d}\) of degree less than or equal to \(m^0\) and where \(\varvec{w}\) is the vector of the corresponding monomial coefficients. In training, these monomial coefficients \(\varvec{w}\) are tuned such that \({\hat{y}}_{\varvec{w}}^0\) optimally fits the data \({\mathcal {D}}\) and such that, at the same time, the ridge or lasso regularization term is not too large. In other words, one has to solve the simple unconstrained regression problem

\[ \min _{\varvec{w}} \sum _{l=1}^N \big ( {\hat{y}}_{\varvec{w}}^0(\varvec{x}^l) - y^l \big )^2 + \lambda \Vert \varvec{w} \Vert _q^q \tag{5} \]

where \(\lambda \in (0,\infty )\) and \(q \in \{1, 2\}\) are suitable regularization hyperparameters (\(q=1\) corresponding to lasso and \(q=2\) corresponding to ridge regression). As usual, these hyperparameters are chosen such that some cross-validation error becomes minimal.
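To make the structure of the polynomial model and of its regularized training objective concrete, the following minimal sketch enumerates the monomials and evaluates a loss of the usual ridge/lasso form. The exact scaling of the loss (for instance a factor 1/N) is an assumption, and all function names are illustrative; the actual fitting in this paper is done with standard library routines, not with this code.

```python
from itertools import product
from math import comb, prod


def monomial_exponents(d, m):
    """All exponent tuples (p_1, ..., p_d) with p_1 + ... + p_d <= m."""
    return [p for p in product(range(m + 1), repeat=d) if sum(p) <= m]


def features(x, exponents):
    """Feature vector phi(x): one monomial x_1^p_1 * ... * x_d^p_d per tuple."""
    return [prod(xi ** pi for xi, pi in zip(x, p)) for p in exponents]


def predict(w, x, exponents):
    """Polynomial model: inner product of coefficients w and monomials phi(x)."""
    return sum(wj * fj for wj, fj in zip(w, features(x, exponents)))


def regularized_loss(w, data, exponents, lam, q):
    """Squared data misfit plus lam * sum_j |w_j|^q (q=1: lasso, q=2: ridge).

    Assumed standard form; the scaling of the two terms may differ in practice.
    """
    misfit = sum((predict(w, x, exponents) - y) ** 2 for x, y in data)
    penalty = lam * sum(abs(wj) ** q for wj in w)
    return misfit + penalty


exponents = monomial_exponents(d=5, m=3)
print(len(exponents), comb(5 + 3, 3))  # both counts are 56
```

With five inputs and degree three, the model already has 56 coefficients, which illustrates why regularization matters for a dataset of this size.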

Inspection of the initial prediction model

In the first step of the proposed methodology, a process expert inspects the initial model in order to get an overview of its shape. To do so, the expert has to look at 1- or 2-dimensional graphs of the initial model. Such 1- and 2-dimensional graphs are obtained by fixing all input parameters except one (or two) at values of choice and then considering the model as a function of the remaining one (or two) parameters. As soon as the number d of inputs is larger than two, there are infinitely many of these graphs and it is notoriously difficult for humans to piece them together into a clear and coherent picture of the model’s shape (Oesterling, 2016). It is therefore crucial to provide the expert with a small selection of particularly informative graphs, namely graphs with particularly high model confidence and graphs with particularly low model confidence.

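A simple method of arriving at such high- and low-fidelity graphs is as follows. Choose those two points \(\hat{\varvec{x}}^{\mathrm {min}}\), \(\hat{\varvec{x}}^{\mathrm {max}}\) from a given grid

\[ {\mathcal {G}} = \{ \hat{\varvec{x}}^k: k \in \{1, \ldots , K\} \} \tag{6} \]

in X with minimal or maximal accumulated distances from the data points, respectively. In other words,

\[ \hat{\varvec{x}}^{\mathrm {min}} := \hat{\varvec{x}}^{k_{\mathrm {min}}} \quad \text {and} \quad \hat{\varvec{x}}^{\mathrm {max}} := \hat{\varvec{x}}^{k_{\mathrm {max}}}, \tag{7} \]

where the gridpoint indices \(k_{\mathrm {min}}\) and \(k_{\mathrm {max}}\) are defined by

\[ k_{\mathrm {min}} := \mathop {\mathrm {arg\,min}}_{k \in \{1, \ldots , K\}} \sum _{l=1}^N \big \Vert (\hat{\varvec{x}}^k, {\hat{y}}^k) - (\varvec{x}^l, y^l) \big \Vert , \tag{8} \]

\[ k_{\mathrm {max}} := \mathop {\mathrm {arg\,max}}_{k \in \{1, \ldots , K\}} \sum _{l=1}^N \big \Vert (\hat{\varvec{x}}^k, {\hat{y}}^k) - (\varvec{x}^l, y^l) \big \Vert \tag{9} \]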

with \({\hat{y}}^k := {\hat{y}}^0(\hat{\varvec{x}}^k)\) being the initial model’s prediction at the gridpoint \(\hat{\varvec{x}}^k\). Starting from the two points \(\hat{\varvec{x}}^{\mathrm {min}}\) and \(\hat{\varvec{x}}^{\mathrm {max}}\), one then traverses each input dimension range. In this manner, one obtains, for each input dimension i, a 1-dimensional graph of the initial model of particularly high fidelity (namely the function \(x_i \mapsto {\hat{y}}^0({\hat{x}}_1^{\mathrm {min}}, \ldots , x_i, \dots , {\hat{x}}_d^{\mathrm {min}})\)) and a 1-dimensional graph of particularly low fidelity (namely the function \(x_i \mapsto {\hat{y}}^0({\hat{x}}_1^{\mathrm {max}}, \ldots , x_i, \dots , {\hat{x}}_d^{\mathrm {max}})\)). See Fig. 2 for exemplary high- and low-fidelity graphs as defined above.
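The selection of the two anchor points and the resulting 1-dimensional graphs can be sketched as follows. This is a minimal illustration under stated assumptions: the grid is taken to be a regular tensor grid, distances are assumed to be Euclidean and measured in the joint input–output space, and the function names are hypothetical.

```python
from itertools import product
from math import dist


def linspace(a, b, n):
    """n equally spaced values from a to b (n >= 2)."""
    return [a + (b - a) * k / (n - 1) for k in range(n)]


def extreme_gridpoints(model, data, bounds, n_per_dim=5):
    """Gridpoints with minimal / maximal accumulated distance to the data.

    Assumption: Euclidean distance between (gridpoint, model prediction)
    and (data input, data output), summed over all data points.
    """
    grid = product(*(linspace(a, b, n_per_dim) for a, b in bounds))
    scored = []
    for x in grid:
        point = (*x, model(x))
        total = sum(dist(point, (*xl, yl)) for xl, yl in data)
        scored.append((total, x))
    x_min = min(scored)[1]  # close to the data: high-fidelity anchor
    x_max = max(scored)[1]  # far from the data: low-fidelity anchor
    return x_min, x_max


def slice_1d(model, anchor, i, bounds, n=50):
    """Graph of the model along input i, all other inputs fixed at `anchor`."""
    a, b = bounds[i]
    xs = linspace(a, b, n)
    ys = [model(anchor[:i] + (xi,) + anchor[i + 1:]) for xi in xs]
    return xs, ys


# Toy usage with a hypothetical two-input model and a single data point.
toy_model = lambda x: x[0] + x[1]
toy_data = [((0.0, 0.0), 0.0)]
toy_bounds = [(0.0, 1.0), (0.0, 1.0)]
x_hi, x_lo = extreme_gridpoints(toy_model, toy_data, toy_bounds, n_per_dim=3)
xs, ys = slice_1d(toy_model, x_hi, 0, toy_bounds, n=3)
```

Note that the size of a full tensor grid grows exponentially with the number of inputs, so a coarse grid is the practical choice for five inputs.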

An alternative method of obtaining low- and high-fidelity input parameters and graphs is to use design-of-experiments techniques (Fedorov & Leonov, 2014), but this alternative approach is not pursued here.

After inspecting particularly informative graphs as defined above, the expert can further explore the initial model’s shape by navigating through and investigating arbitrary graphs of the initial model with the help of commercial software or standard slider tools (from Python Dash or PyQt, for instance).

Specification of shape expert knowledge

In the second step of the proposed methodology, the process expert specifies their shape expert knowledge about the input–output relationship (1) of interest. In this process, the expert can greatly benefit from the initial model and especially from the high- and low-fidelity graphs generated in the first step. Indeed, with the help of these graphs, the expert can, on the one hand, easily detect shape behavior that contradicts their expectations and, on the other hand, identify shape behavior that already matches their expectations for the shape of (1). When inspecting the graphs from Fig. 2, for instance, the expert might notice that the initial model exceeds or falls below physically meaningful bounds. Similarly, the expert might notice that the initial model

- is convex w.r.t. \(x_1\) (as expected),

- is not monotonically decreasing w.r.t. \(x_1\) (contrary to expectation).

All the shape knowledge that is noticed and worked out in this manner can then be specified and expressed pictorially in the form of simple schematic graphs like the ones from Fig. 3.

Integration of shape expert knowledge into the training of a new prediction model

In the third step of the proposed methodology, the shape expert knowledge specified in the second step is integrated into the training of a new and shape-knowledge-compliant prediction model, using the SIASCOR method. Similarly to the initial model, the SIASCOR model \({\hat{y}}\) is assumed to be a multivariate polynomial

\[ {\hat{y}}_{\varvec{w}}(\varvec{x}) = \varvec{w}^\top \varvec{\phi }(\varvec{x}) \qquad (\varvec{x} \in X) \tag{10} \]

of some degree \(m \in {\mathbb {N}}\) (not necessarily equal to the degree of the initial model), where \(\varvec{\phi }(\varvec{x})\) and \(\varvec{w}\) denote the monomials and the corresponding monomial coefficients as in (4). In contrast to the initial model training, however, the monomial coefficients \(\varvec{w}\) are now tuned such that \({\hat{y}}_{\varvec{w}}\) not only optimally fits the data \({\mathcal {D}}\) but also strictly satisfies all the shape constraints specified in the second step. In other words, one has to solve the constrained regression problem

\[ \min _{\varvec{w}} \sum _{l=1}^N \big ( {\hat{y}}_{\varvec{w}}(\varvec{x}^l) - y^l \big )^2 \quad \text {subject to all specified shape constraints on } X. \tag{11} \]

In order to do so, the core semi-infinite optimization algorithm from Schmid and Poursanidis (2021) is used, which covers a large variety of allowable shape constraints.

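Some simple examples of shape constraints covered by the algorithm are boundedness constraints

\[ {\underline{b}} \le {\hat{y}}_{\varvec{w}}(\varvec{x}) \le {\overline{b}} \qquad (\varvec{x} \in X) \]

with given lower and upper bounds \({\underline{b}}, {\overline{b}}\), monotonic increasingness or decreasingness constraints

\[ \partial _{x_i} {\hat{y}}_{\varvec{w}}(\varvec{x}) \ge 0 \quad \text {or} \quad \partial _{x_i} {\hat{y}}_{\varvec{w}}(\varvec{x}) \le 0 \qquad (\varvec{x} \in X) \]

in a given input dimension i, as well as convexity or concavity constraints

\[ \partial _{x_i}^2 {\hat{y}}_{\varvec{w}}(\varvec{x}) \ge 0 \quad \text {or} \quad \partial _{x_i}^2 {\hat{y}}_{\varvec{w}}(\varvec{x}) \le 0 \qquad (\varvec{x} \in X) \]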

in a specified input dimension i. A more complex kind of shape constraint that is also covered by the employed algorithm is the so-called rebound constraint. It constrains the amount the model can rise after a descent to be no larger than a given rebound factor r. In mathematically precise terms, a rebound constraint in the ith input dimension takes the following form:

for all values \(x_j \in [a_j,b_j]\) of the input parameters in the remaining dimensions \(j \ne i\), where

and where \(r \in (0,1]\) is the prescribed rebound factor. Sample graphs of a model that satisfies this rebound constraint with \(r = 1/2\) can be seen in Fig. 3.

Fig. 3 Sample graphs satisfying the rebound constraint with \(r=1/2\). In these graphs, \({\Delta }_{\downarrow i}\) is the total descent and \({\Delta }_{\uparrow i}\) is the imposed upper bound on the rebound of the model in the ith input dimension. In other words, \({\Delta }_{\uparrow i} = r \cdot {\Delta }_{\downarrow i}\) is the right-hand side of (17).

An important asset of the approach to shape-constrained regression taken here is that the core algorithm can handle arbitrary combinations of the kinds of shape constraints mentioned above, in an efficient manner. Also, the core algorithm is entirely implemented in Python which makes it particularly easy to use and interface. Another asset of the proposed approach is that the considered shape-constrained regression problem (11) features no hyperparameter except for the polynomial degree m. Consequently, no tuning of hard-to-interpret hyperparameters is necessary. Concerning other, more theoretical, merits of the employed semi-infinite optimization algorithm, the reader is referred to Schmid and Poursanidis (2021).
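The kinds of shape constraints discussed in this section can be spot-checked on sampled 1-dimensional graphs of a model, for instance before showing them to the expert. The sketch below is not the semi-infinite optimization algorithm: it only tests finitely many sample points on an equally spaced grid and therefore gives no guarantee between them. The rebound check encodes one assumed reading of the constraint (rise after the minimum at most r times the descent before it); all names are illustrative.

```python
def is_bounded(ys, lower, upper):
    """All sampled values lie within [lower, upper]."""
    return all(lower <= y <= upper for y in ys)


def is_monotone(ys, increasing=True, tol=1e-12):
    """First differences have a consistent sign (up to a tolerance)."""
    steps = [b - a for a, b in zip(ys, ys[1:])]
    if increasing:
        return all(s >= -tol for s in steps)
    return all(s <= tol for s in steps)


def is_convex(ys, tol=1e-12):
    """Second differences on an equally spaced grid are non-negative."""
    second = [c - 2 * b + a for a, b, c in zip(ys, ys[1:], ys[2:])]
    return all(s >= -tol for s in second)


def is_concave(ys, tol=1e-12):
    """Concavity is convexity of the negated graph."""
    return is_convex([-y for y in ys], tol)


def satisfies_rebound(ys, r, tol=1e-12):
    """Assumed reading: rise after the minimum <= r * descent before it."""
    k = min(range(len(ys)), key=ys.__getitem__)  # index of the minimum
    descent = max(ys[: k + 1]) - ys[k]
    rebound = max(ys[k:]) - ys[k]
    return rebound <= r * descent + tol


# Sampled graph of (x - 0.75)^2 at x = 0, 0.25, 0.5, 0.75, 1.
ys = [0.5625, 0.25, 0.0625, 0.0, 0.0625]
print(is_convex(ys), is_monotone(ys, increasing=False), satisfies_rebound(ys, 0.5))
# True False True
```

In this toy graph the model is convex but not monotonically decreasing, and its small rise at the right end is compatible with a rebound factor of 1/2.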

Application example

The brushing process

The brushing process is a metal-cutting process used for the grinding of metallic surfaces with the help of brushes. Its main applications are the deburring of precision components (Gillespie, 1979), the structuring of decorative surfaces of glass (Novotný et al., 2017), and the functional surface preparation of metals for subsequent process steps of joining (Teicher et al., 2018). Common to all these applications is that the brushing process functions as a finishing process for components with a high inherent added value. Additionally, brushing processes have established themselves in certain highly automated mass production processes (Kim et al., 2012).

While the focus of Deutsches Institut für Normung (2003–2009) is still on steel wires as brushing filaments, in recent years filaments made of plastic with interstratified abrasive grits have become much more important. Such filaments act only as carrier elements of the machining substrate and, accordingly, the corresponding brushing process can be classified as a process with a geometrically undefined cutting edge. In view of their increased relevance, only brushing filaments with interstratified abrasive grits are considered here. See Fig. 4 for a schematic representation of the considered brushing processes.

Apart from the material parameters of the workpiece, the machining process is influenced, on the one hand, by technological parameters of the process and, on the other hand, by a multitude of material parameters of the brush. Important technological parameters are the numbers of revolutions \(n_b\) and \(n_w\) of the brush and of the workpiece, the cutting depth \(a_e\), and the cutting time \(t_c\). The brush parameters relate to the individual filaments (length \(l_f\), diameter \(d_f\), modulus of elasticity, and other technical properties), their arrangement (axial, radial), and their coupling to the base body (cast, plugged). The cutting substrate as an abrasive grain is characterized, among other things, by the grain material, the grain concentration and the grain diameter dia. In addition, the shape of the brush is determined by its width and its diameter \(d_b\).

In view of this large variety of technological and material parameters, it is a challenging task to choose the tool and the tool settings such that a prescribed target value for the roughness of the brushed workpiece is reached quickly but also robustly. It is therefore important to have good prediction models for the surface roughness of the brushed workpiece. In principle, such prediction models can be obtained from a comprehensive simulation of the brushing process (Wahab et al., 2007; Novotný et al., 2017). Such simulation-based models are expensive and complex because—in addition to the many process parameters mentioned above—the dynamic behavior of the tool has to be broken down to the filaments and microscopically to the individual grain in engagement. In particular, the dynamically changing tool diameter (Matuszak & Zaleski, 2015) has to be taken into account. Another paper that highlights the complexity of the underlying physics of the brushing process is Pandiyan et al. (2020). In addition to the challenging modeling procedure, the resulting models are typically expensive to evaluate. Currently, these factors still limit the applicability of simulation-based models in real-world process design and process control. And therefore it is important to build good alternative prediction models in brushing, for example, by using ML. A basic overview of ML approaches used for the modeling of grinding and abrasive finishing processes, which are comparable to brushing, is given in Brinksmeier et al. (1998) and in Pandiyan et al. (2020), for instance.

Input parameters, output parameter, and dataset

In this paper, such an alternative ML model is built. Specifically, the modeled output quantity is the arithmetic-mean surface roughness \(R_a\) of the brushed workpiece.

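It is modeled as a function of five particularly important process parameters \(\varvec{x} = (x_1, \ldots , x_5)\) of the brushing process, namely the numbers of revolutions \(n_b\) and \(n_w\) of the brush and of the workpiece, the cutting depth \(a_e\), the cutting time \(t_c\), and the grain diameter dia.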

The dataset used for the training of the prediction model consists of \(N = 125\) measurement points. Table 1 shows the ranges of the process and quality parameters covered by the measurement data.

Results and discussion

In this section, SIASCOR is applied to the brushing process example. In particular, shape expert knowledge is integrated according to the methodology described in the section “A methodology to capture and incorporate shape expert knowledge”. Aside from SIASCOR, a purely data-driven Gaussian process regression (GPR) was conducted for the brushing example. GPR was chosen because it is particularly suitable for small amounts of data. Therefore, it is expected to produce a model with a high predictive power for the comparison with the SIASCOR model. In the end, the two regression models are compared and their advantages and shortcomings are discussed.

Initial model

As a first step, an initial purely data-based model was trained as a reference model to visually assist the process expert in specifying shape knowledge for the SIASCOR model. A polynomial model (4) with the relatively small degree \(m^0=3\) was used to prevent an overfit to the small dataset. The parameters of the model were computed via lasso regression with a regularization parameter \(\lambda \) selected by means of cross-validation using scikit-learn (Pedregosa et al., 2011). Additionally, prior to training, the input variables were transformed with the standard transformation (Kuhn & Johnson, 2013) \(x_i' := \sqrt{x_i}\) for all \(i=1, \ldots , 5\) and then scaled to the unit hypercube. This square-root transformation led to a better generalization performance.
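The initial model described above can be sketched with scikit-learn roughly as follows. This is a minimal illustration under stated assumptions, not the authors' code: the data are synthetic stand-ins for the 125 measurements, the number of cross-validation folds is chosen arbitrarily, and scikit-learn's `alpha` plays the role of the lasso penalty weight \(\lambda \).

```python
import numpy as np
from sklearn.linear_model import LassoCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer, MinMaxScaler, PolynomialFeatures

# Synthetic stand-in data (assumption): 125 points, five positive process parameters.
rng = np.random.default_rng(0)
X = rng.uniform(0.5, 4.0, size=(125, 5))
y = 0.3 - 0.05 * np.sqrt(X[:, 3]) + 0.02 * X[:, 4] + rng.normal(0.0, 0.01, 125)

initial_model = make_pipeline(
    FunctionTransformer(np.sqrt),                      # x_i' = sqrt(x_i)
    MinMaxScaler(),                                    # scale to the unit hypercube
    PolynomialFeatures(degree=3, include_bias=False),  # polynomial of degree m^0 = 3
    LassoCV(cv=5, max_iter=50_000, random_state=0),    # penalty weight chosen by CV (5 folds assumed)
)
initial_model.fit(X, y)

lasso = initial_model[-1]
print("selected penalty weight:", lasso.alpha_)
print("non-zero coefficients:", int(np.sum(lasso.coef_ != 0)), "of", lasso.coef_.size)
```

The lasso penalty drives many of the 55 monomial coefficients to zero, which is one reason why a low-degree polynomial with lasso is a reasonable reference model on a small dataset.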

Capturing shape expert knowledge

As a second step, for the inspection of the initial model, two points \(\hat{\varvec{x}}^{\mathrm {min}}\), \(\hat{\varvec{x}} ^{\mathrm {max}}\) of particularly high fidelity and of particularly low fidelity were computed according to (6)–(89) (Table 2). The corresponding 1-dimensional graphs of the initial model (anchored in these two points) are visualized in Fig. 5. When inspecting and analyzing the shape of these graphs, the process expert detected several physical inconsistencies. For example, some of the initial model’s predictions for \(R_a\) are significantly lower than the surface roughness that is technologically achievable with the brushing process. Another example is the violation of convexity along the \(n_w\) direction.

With these observations in mind, the expert specified shape constraints for the SIASCOR model in the form of the schematic graphs from Fig. 6. Specifically, the expert imposed the boundedness constraint \(0.1 \le R_a \le 0.5\) upon the surface roughness. Along the \(t_c\) direction, the expert required monotonic decreasingness and convexity. In the direction of \(n_b\) and \(n_w\), the model was required to be convex and to satisfy the rebound constraint (17) with \(r = 1/2\). And finally, the model was constrained to be convex w.r.t. \(a_e\) and monotonically increasing w.r.t. dia.

The way the expert arrived at the convexity constraints w.r.t. \(n_b\) and \(n_w\) is through the following physical consideration. As the tool speed \(n_b\) and workpiece speed \(n_w\) increase, the roughness decreases because the equivalent chip thickness is reduced (Hänel et al., 2017). A further increase of \(n_b\) or \(n_w\), however, leads to increased process discontinuities due to centrifugal forces, for example, and can thus cause the roughness to increase again. The other shape constraints were obtained through similar physical considerations.
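Shape properties of this kind can be checked numerically on a sampled 1-dimensional graph. The following sketch is only an illustration: the helper names and the two example graphs are hypothetical, and the rebound constraint (17) is not covered. It uses first and second finite differences on an equidistant grid.

```python
import numpy as np

def is_monotonically_decreasing(values, tol=1e-9):
    """True if the sampled graph never increases (up to tol)."""
    return bool(np.all(np.diff(values) <= tol))

def is_convex(values, tol=1e-9):
    """True if all second differences on an equidistant grid are non-negative (up to tol)."""
    return bool(np.all(np.diff(values, n=2) >= -tol))

def within_bounds(values, lower, upper):
    """True if every sampled value lies in [lower, upper]."""
    return bool(np.all((values >= lower) & (values <= upper)))

# Hypothetical graphs along one scaled input direction (not taken from the paper).
t = np.linspace(0.0, 1.0, 101)
compliant = 0.12 + 0.35 * np.exp(-3.0 * t)   # decreasing, convex, inside [0.1, 0.5]
wiggly = 0.30 + 0.05 * np.sin(6.0 * t)       # neither monotonic nor convex

for name, graph in [("compliant", compliant), ("wiggly", wiggly)]:
    print(name,
          is_monotonically_decreasing(graph),
          is_convex(graph),
          within_bounds(graph, 0.1, 0.5))
```

Such a check only detects violations at the sampled points. It can help when inspecting a purely data-based model, but it does not enforce anything during training.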

Fig. 5 Comparison of the high- (solid green) and low-fidelity (dashed red) graphs of the initial model. The high- and low-fidelity graphs are anchored in different points, namely the point \(\hat{\varvec{x}}^{\mathrm {min}}\) or \(\hat{\varvec{x}}^{\mathrm {max}}\), respectively, from Table 2 (Color figure online)

SIASCOR model

With the aforementioned shape constraints and the data described in the section “Input parameters, output parameter, and dataset”, the SIASCOR model was trained as explained in the section “Integration of shape expert knowledge into the training of a new prediction model”. Among the tested polynomial degrees \(m \in \{3,4,5,6\}\), the degree \(m=4\) was found to produce the best fits. Moreover, the variables were transformed with the square-root function \(x_i' = \sqrt{x_i}\) for all \(i=1, \ldots , 5\) and then scaled to the unit hypercube. Table 3 lists various performance indices and Fig. 9 shows two plots of the final SIASCOR model.
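To convey the idea of shape-constrained polynomial regression, the following sketch fits a degree-4 polynomial in one variable under monotonicity and convexity constraints. It is explicitly not the SIASCOR algorithm: instead of solving the semi-infinite problem with the method of Schmid and Poursanidis (2021), it simply imposes the constraints on a fixed grid and uses SciPy's SLSQP solver. The data, the grid size, and the starting point are assumptions made for illustration.

```python
import numpy as np
from scipy.optimize import minimize

def poly_matrix(t, degree, deriv=0):
    """Columns: deriv-th derivative of t**k for k = 0..degree, evaluated at t."""
    t = np.asarray(t, dtype=float)
    cols = []
    for k in range(degree + 1):
        if k < deriv:
            cols.append(np.zeros_like(t))
        else:
            factor = float(np.prod(np.arange(k, k - deriv, -1)))  # k (k-1) ... (k-deriv+1)
            cols.append(factor * t ** (k - deriv))
    return np.column_stack(cols)

# Synthetic 1-D data (assumption): noisy samples of a decreasing, convex curve.
rng = np.random.default_rng(1)
t_data = np.sort(rng.uniform(0.0, 1.0, 15))
y_data = 0.12 + 0.35 * np.exp(-3.0 * t_data) + rng.normal(0.0, 0.02, 15)

degree = 4
Phi = poly_matrix(t_data, degree)
grid = np.linspace(0.0, 1.0, 51)           # fixed discretization of the constraint domain
D1 = poly_matrix(grid, degree, deriv=1)    # first derivatives on the grid
D2 = poly_matrix(grid, degree, deriv=2)    # second derivatives on the grid

def loss(w):
    r = Phi @ w - y_data
    return float(r @ r)

def loss_grad(w):
    return 2.0 * Phi.T @ (Phi @ w - y_data)

constraints = [
    {"type": "ineq", "fun": lambda w: -(D1 @ w), "jac": lambda w: -D1},  # decreasing
    {"type": "ineq", "fun": lambda w: D2 @ w, "jac": lambda w: D2},      # convex
]

w0 = np.array([0.4, -0.3, 0.1, 0.0, 0.0])  # strictly feasible starting polynomial
res = minimize(loss, w0, jac=loss_grad, constraints=constraints, method="SLSQP")
w_fit = res.x
print("coefficients:", np.round(w_fit, 4))
```

With a fixed grid, the constraints are only guaranteed at the grid points, whereas a semi-infinite formulation requires them on the whole input domain. This difference is the reason why the training problem (10) is harder to solve than an ordinary constrained least-squares fit.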

GPR model

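In addition to the SIASCOR model, a GPR model was trained for the sake of comparison since GPR with an appropriately chosen kernel is well-suited for small datasets. As a kernel, the sum of an anisotropic Matérn kernel with \(\nu = 3/2\) and a white-noise kernel was chosen:

\[ k(\varvec{x},\varvec{x}') = \left( 1 + \sqrt{3}\, \Vert \varvec{x}-\varvec{x}' \Vert _{2,\varvec{l}} \right) \exp \left( -\sqrt{3}\, \Vert \varvec{x}-\varvec{x}' \Vert _{2,\varvec{l}} \right) + n \, \delta _{\varvec{x},\varvec{x}'}, \]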

where \(\Vert \varvec{z} \Vert _{2,\varvec{l}} := \Vert (z_1/l_1, \ldots , z_d/l_d)\Vert _2\) denotes the anisotropic norm of the d-component vector \(\varvec{z}\) and where \(\delta _{\varvec{x},\varvec{x}'}\) is 1 if \(\varvec{x}=\varvec{x}'\) and 0 otherwise. As usual, to optimize the hyperparameters \( \varvec{l} \) and n, the marginal likelihood was maximized according to Williams and Rasmussen (2006), using the Python package scikit-learn (Pedregosa et al., 2011). Due to the anisotropy of the Matérn kernel, a separate hyperparameter \(l_i \) was fitted for each input dimension i. As for the SIASCOR model, the input variables were transformed with the square-root function \(x_i' = \sqrt{x_i}\) for all \(i=1, \ldots , 5\) and then scaled to the unit hypercube. Table 3 reports the pertinent performance indices and Fig. 10 shows two plots of the final GPR model.
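A GPR model of this kind can be set up in scikit-learn roughly as follows. This is a sketch under assumptions rather than the authors' configuration: the data are synthetic, and the initial hyperparameter values, the number of optimizer restarts, and the target normalization are illustrative choices. Passing a vector of length scales to `Matern` makes the kernel anisotropic, and `WhiteKernel` adds the white-noise term.

```python
import numpy as np
from sklearn.gaussian_process import GaussianProcessRegressor
from sklearn.gaussian_process.kernels import Matern, WhiteKernel
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import FunctionTransformer, MinMaxScaler

# Synthetic stand-in data (assumption): 125 points, five positive process parameters.
rng = np.random.default_rng(0)
X = rng.uniform(0.5, 4.0, size=(125, 5))
y = 0.3 - 0.05 * np.sqrt(X[:, 3]) + 0.02 * X[:, 4] + rng.normal(0.0, 0.01, 125)

# Anisotropic Matern kernel (nu = 3/2, one length scale per input) plus white noise.
kernel = Matern(length_scale=np.ones(5), nu=1.5) + WhiteKernel(noise_level=1e-2)

gpr = make_pipeline(
    FunctionTransformer(np.sqrt),   # x_i' = sqrt(x_i)
    MinMaxScaler(),                 # scale to the unit hypercube
    GaussianProcessRegressor(
        kernel=kernel,
        normalize_y=True,           # assumption: standardize the targets
        n_restarts_optimizer=2,     # assumption: a few restarts of the likelihood maximization
        random_state=0,
    ),
)
gpr.fit(X, y)   # hyperparameters are fitted by maximizing the log marginal likelihood

fitted_kernel = gpr[-1].kernel_
print(fitted_kernel)
```

After fitting, `fitted_kernel.k1.length_scale` holds the five length scales and `fitted_kernel.k2.noise_level` the noise level.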

Comparison of SIASCOR and GPR

Table 3 compares the predictive power of the initial lasso, the SIASCOR and the GPR model obtained by 10-fold cross-validation (10% of the data was taken for the test set in each fold). The predictive power is measured in terms of three averaged performance measures, namely the averaged root-mean-square error (RMSE), the averaged mean-absolute error (MAE), and the averaged coefficient of determination (\(\text {R}^2\)). In formulas, these averaged performance indices are defined as follows:

where \(({\mathcal {T}}_1, {\mathcal {D}}\setminus {\mathcal {T}}_1), \ldots , ({\mathcal {T}}_{10}, {\mathcal {D}}\setminus {\mathcal {T}}_{10})\) are the ten different test-training splits of the overall dataset \({\mathcal {D}}\) obtained by 10-fold cross-validation, and

In the last three equations, \(({\mathcal {T}}, {\mathcal {D}}\setminus {\mathcal {T}})\) is any of the test-training dataset pairs, \({\hat{y}}_{{\mathcal {D}}\setminus {\mathcal {T}}}\) denotes the lasso, SIASCOR, or GPR model trained on \({\mathcal {D}} \setminus {\mathcal {T}}\), and

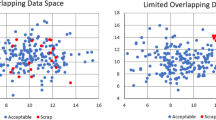
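The averaged performance indices can be computed along the following lines. The sketch assumes a scikit-learn-style model with `fit` and `predict`. The helper name `averaged_cv_scores`, the shuffling seed, and the noise-free synthetic data are illustrative and not taken from the paper.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, mean_squared_error, r2_score
from sklearn.model_selection import KFold

def averaged_cv_scores(model, X, y, n_splits=10, seed=0):
    """Average RMSE, MAE and R^2 over the test sets of a k-fold cross-validation."""
    rmse, mae, r2 = [], [], []
    folds = KFold(n_splits=n_splits, shuffle=True, random_state=seed)
    for train_idx, test_idx in folds.split(X):
        model.fit(X[train_idx], y[train_idx])      # train on D \ T
        pred = model.predict(X[test_idx])          # predict on the test set T
        rmse.append(np.sqrt(mean_squared_error(y[test_idx], pred)))
        mae.append(mean_absolute_error(y[test_idx], pred))
        r2.append(r2_score(y[test_idx], pred))
    return {"RMSE": float(np.mean(rmse)),
            "MAE": float(np.mean(mae)),
            "R2": float(np.mean(r2))}

# Noise-free linear toy data (assumption), so a linear model should fit almost exactly.
rng = np.random.default_rng(0)
X = rng.uniform(0.0, 1.0, size=(125, 5))
y = X @ np.array([0.1, -0.2, 0.05, -0.3, 0.15]) + 0.4

scores = averaged_cv_scores(LinearRegression(), X, y)
print(scores)
```

With 125 points and ten folds, each test set contains 12 or 13 points, which corresponds to the roughly 10% test share mentioned above.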

It can be seen from Table 3 that the lasso and the SIASCOR models have similar averaged prediction errors and a similar averaged coefficient of determination on the test data, while the purely data-based GPR model features slightly better averaged prediction errors (but a slightly worse averaged coefficient of determination). This can also be seen from Figs. 7 and 8.

Figures 9 and 10 juxtapose two plots of the SIASCOR and the GPR model, respectively. As can be seen, in contrast to the SIASCOR model, the GPR model is starkly non-convex w.r.t. \(n_w\). Consequently, the GPR model is at odds with physical shape expert knowledge, while the SIASCOR model is not. As has been explained in the section “A methodology to capture and incorporate shape expert knowledge”, the reason is that SIASCOR explicitly incorporates all the shape knowledge provided by the process expert, while the GPR model relies on the scarce and inherently noisy data alone. In other words, the discrepancies between the GPR model’s shape and the expected shape behavior can be traced back to the sparsity and the noisiness of the available measurement data.

Another downside of the GPR approach is that the resulting models are typically quite sensitive w.r.t. the selected kernel class and that the selection of this kernel class is typically not very systematic but rather based on heuristic rules of thumb. Accordingly, the model selection in GPR is typically quite time-consuming and cumbersome. In the SIASCOR method, by contrast, model selection is simple because the SIASCOR models have only one hyperparameter, namely the polynomial degree m. Also, the interpretation of the shape constraints needed for the SIASCOR method is straightforward and, in any case, much clearer than the interpretation and selection of different GPR kernel classes.

Admittedly, solving the SIASCOR training problem (10) with the algorithm from Schmid and Poursanidis (2021) takes more computational time than the hyperparameter optimization in GPR because semi-infinite optimization problems have a more complex (bi-level) structure than the (unconstrained) marginal likelihood maximization problems used in GPR. Indeed, in the 5-dimensional brushing example considered here, the training of the SIASCOR model typically took around 30 min on a standard office computer. Yet, this is negligible in view of the aforementioned clear advantages of SIASCOR over GPR in terms of shape-knowledge compliance, model selection, and interpretability.

Conclusion and future work

In order to achieve target product qualities quickly and consistently in manufacturing, reliable prediction models for the quality of process outcomes as a function of selected process parameters are essential. Since the datasets available in manufacturing—and especially before SOP—are typically small, the construction of data-driven prediction models is a challenging task. The present paper addresses this challenge by systematically leveraging expert knowledge. Specifically, this paper introduces a general methodology to capture and incorporate shape expert knowledge into ML models for quality prediction in manufacturing. It is based on the SIASCOR method.

The resulting SIASCOR model is mathematically guaranteed to satisfy all the shape constraints imposed by the expert. Conventional purely data-based models, by contrast, do not come with such a guarantee but, on the contrary, often exhibit an unphysical shape behavior in the sparse-data case considered here. Additionally, the direct involvement of process experts in the training of the SIASCOR model increases the acceptance of and the confidence in this model. Another asset of the SIASCOR method is that, in contrast to many conventional ML methods, it does not involve a time-consuming and unsystematic hyperparameter tuning or model selection step.

The proposed general methodology was applied to an exemplary brushing process in order to obtain a prediction model for the arithmetic-mean surface roughness of the brushed workpiece as a function of five process parameters. The dataset available in this application consisted of only 125 measurement points. After inspecting the initial lasso model based solely on these data, the expert defined shape constraints in all five input parameter dimensions. The SIASCOR model trained with these shape constraints was compared to a purely data-based GPR model. As opposed to the SIASCOR model, the GPR model contradicts the physical shape knowledge about the surface roughness in various ways. Also, the selection of an appropriate GPR kernel class is rather heuristic and time-consuming. In any case, the interpretation of the GPR kernel class is certainly less clear than the interpretation of the shape constraints used in the SIASCOR method.

A possible topic of future research is to develop a more sophisticated definition of high- and low-fidelity graphs, using techniques from the optimal design of experiments. Moreover, to further support the user in collecting and leveraging shape expert knowledge, supplementary information, e.g. from assistance systems similar to the ones from Rahm et al. (2018) or Xu et al. (2018), can be presented to the expert in a preliminary step. Another topic of future research is the further improvement of the SIASCOR algorithm’s runtimes. In addition, a methodology will be developed for assessing the model and for uncovering possible conflicts between the imposed shape constraints and the data. Such conflicts might arise especially once more data becomes available towards or after the SOP. The model can then be retrained, using the new and larger dataset and a more refined and consolidated set of shape constraints. And finally, a graphical user interface will be implemented allowing the domain experts to apply the proposed methodology completely independently of external support from data scientists or mathematicians. In particular, this user interface will no longer require a manual translation of the shape knowledge specified pictorially by the expert into mathematical constraints in the form expected by the SIASCOR algorithm.

References

Aubin-Frankowski, P.C., & Szabo, Z. (2020). Hard shape-constrained kernel machines. In: Larochelle H, Ranzato M, Hadsell R, Balcan MF, Lin H (eds) Advances in Neural Information Processing Systems, Curran Associates, Inc., vol 33, pp 384–395

Benardos, P., & Vosniakos, G. C. (2003). Predicting surface roughness in machining: a review. International Journal of Machine Tools and Manufacture, 43(8), 833–844. https://doi.org/10.1016/S0890-6955(03)00059-2

Bishop, C. M. (2006). Pattern Recognition and Machine Learning. Berlin, Heidelberg: Springer-Verlag.

Brinksmeier, E., Tönshoff, H. K., Czenkusch, C., & Heinzel, C. (1998). Modelling and optimization of grinding processes. Journal of Intelligent Manufacturing, 9(4), 303–314. https://doi.org/10.1023/a:1008908724050

Brinksmeier, E., Aurich, J. C., Govekar, E., Heinzel, C., Hoffmeister, H. W., Klocke, F., Peters, J., Rentsch, R., Stephenson, D. J., Uhlmann, E., Weinert, K., & Wittmann, M. (2006). Advances in modeling and simulation of grinding processes. CIRP Annals, 55(2), 667–696. https://doi.org/10.1016/j.cirp.2006.10.003

Chaki, S., Bathe, R. N., Ghosal, S., & Padmanabham, G. (2015). Multi-objective optimisation of pulsed Nd:YAG laser cutting process using integrated ANN–NSGAII model. Journal of Intelligent Manufacturing, 29(1), 175–190. https://doi.org/10.1007/s10845-015-1100-2

Chang, C. J., Dai, W. L., & Chen, C. C. (2015). A novel procedure for multimodel development using the grey silhouette coefficient for small-data-set forecasting. Journal of the Operational Research Society, 66(11), 1887–1894. https://doi.org/10.1057/jors.2015.17

Chen, Z. S., Zhu, B., He, Y. L., & Yu, L. A. (2017). A PSO based virtual sample generation method for small sample sets: Applications to regression datasets. Engineering Applications of Artificial Intelligence, 59, 236–243. https://doi.org/10.1016/j.engappai.2016.12.024

Chernozhukov, V., Fernandez-Val, I., & Galichon, A. (2009). Improving point and interval estimators of monotone functions by rearrangement. Biometrika, 96(3), 559–575. https://doi.org/10.1093/biomet/asp030

Cozad, A., Sahinidis, N. V., & Miller, D. C. (2015). A combined first-principles and data-driven approach to model building. Computers & Chemical Engineering, 73, 116–127. https://doi.org/10.1016/j.compchemeng.2014.11.010

Dette, H., & Scheder, R. (2006). Strictly monotone and smooth nonparametric regression for two or more variables. Canadian Journal of Statistics, 34(4), 535–561. https://doi.org/10.1002/cjs.5550340401

Deutsches Institut für Normung (2003-09) Fertigungsverfahren Spanen - Teil 8: Bürstspanen; Einordnung, Unterteilung, Begriffe: Manufacturing processes chip removal - part 8: Machining with brushlike tools; classifications, subdivision, terms and definitions. https://doi.org/10.31030/9500672

Fedorov, V. V., & Leonov, S. L. (2014). Optimal Design for Nonlinear Response Models. Chapman & Hall/CRC Biostatistics Series. Boca Raton: Taylor & Francis. https://doi.org/10.1201/b15054

Gillespie, L. K. (1979). Deburring precision miniature parts. Precision Engineering, 1(4), 189–198. https://doi.org/10.1016/0141-6359(79)90099-0

Hänel, A., Teicher, U., Pätzold, H., Nestler, A., & Brosius, A. (2017). Investigation of a carbon fibre-reinforced plastic grinding wheel for high-speed plunge-cut centreless grinding application. Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, 232(14), 2663–2669. https://doi.org/10.1177/0954405417690556

Hao, J., Zhou, M., Wang, G., Jia, L., & Yan, Y. (2020). Design optimization by integrating limited simulation data and shape engineering knowledge with Bayesian optimization (BO-DK4DO). Journal of Intelligent Manufacturing, 31(8), 2049–2067. https://doi.org/10.1007/s10845-020-01551-8

He, Z., He, Y., Chen, Z., Zhao, Y., & Lian, R. (2019). Functional failure diagnosis approach based on Bayesian network for manufacturing systems. In: 2019 Prognostics and System Health Management Conference (PHM-Qingdao), IEEE, pp 1–6, https://doi.org/10.1109/PHM-Qingdao46334.2019.8942813

Hu, S. J. (2013). Evolving paradigms of manufacturing: From mass production to mass customization and personalization. Procedia CIRP, 7, 3–8. https://doi.org/10.1016/j.procir.2013.05.002

Kang, S., Jin, R., Deng, X., & Kenett, R. S. (2021). Challenges of modeling and analysis in cybermanufacturing: a review from a machine learning and computation perspective. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-021-01817-9

Kim, S. H., Kim, H. W., Euh, K., Kang, J. H., & Cho, J. H. (2012). Effect of wire brushing on warm roll bonding of 6XXX/5XXX/6XXX aluminum alloy clad sheets. Materials & Design, 35, 290–295. https://doi.org/10.1016/j.matdes.2011.09.024

Kuhn, M., & Johnson, K. (2013). Applied predictive modeling. New York, NY: Springer.

Li, D. C., Wu, C. S., Tsai, T. I., & Lin, Y. S. (2007). Using mega-trend-diffusion and artificial samples in small data set learning for early flexible manufacturing system scheduling knowledge. Computers & Operations Research, 34(4), 966–982. https://doi.org/10.1016/j.cor.2005.05.019

Li, D. C., Liu, C. W., & Chen, W. C. (2012). A multi-model approach to determine early manufacturing parameters for small-data-set prediction. International Journal of Production Research, 50(23), 6679–6690. https://doi.org/10.1080/00207543.2011.613867

Li, D. C., Huang, W. T., Chen, C. C., & Chang, C. J. (2013). Employing virtual samples to build early high-dimensional manufacturing models. International Journal of Production Research, 51(11), 3206–3224. https://doi.org/10.1080/00207543.2012.746795

Lin, L., & Dunson, D. B. (2014). Bayesian monotone regression using Gaussian process projection. Biometrika, 101(2), 303–317. https://doi.org/10.1093/biomet/ast063

Lokrantz, A., Gustavsson, E., & Jirstrand, M. (2018). Root cause analysis of failures and quality deviations in manufacturing using machine learning. Procedia CIRP, 72(4–6), 1057–1062. https://doi.org/10.1016/j.procir.2018.03.229

Lu, Y., Rajora, M., Zou, P., & Liang, S. (2017). Physics-embedded machine learning: Case study with electrochemical micro-machining. Machines, 5(1), 4. https://doi.org/10.3390/machines5010004

Matuszak, J., & Zaleski, K. (2015). Dynamic diameter determination of circular brushes. Applied Mechanics and Materials, 791, 232–237. https://doi.org/10.4028/www.scientific.net/AMM.791.232

Montgomery, D. C. (2017). Design and Analysis of Experiments (9th ed.). New York: John Wiley & Sons.

Nagarajan, H. P. N., Mokhtarian, H., Jafarian, H., Dimassi, S., Bakrani-Balani, S., Hamedi, A., Coatanéa, E., Gary Wang, G., & Haapala, K. R. (2019). Knowledge-based design of artificial neural network topology for additive manufacturing process modeling: A new approach and case study for fused deposition modeling. Journal of Mechanical Design, 141(2), 442. https://doi.org/10.1115/1.4042084

Napoli, G., & Xibilia, M. G. (2011). Soft sensor design for a topping process in the case of small datasets. Computers & Chemical Engineering, 35(11), 2447–2456. https://doi.org/10.1016/j.compchemeng.2010.12.009

Ning, D., Jingsong, D., Chao, L., & Shuna, J. (2019). An intelligent control system for grinding. In: 2019 IEEE 3rd Information Technology, Networking, Electronic and Automation Control Conference (ITNEC), IEEE, pp 2562–2565, https://doi.org/10.1109/ITNEC.2019.8729268

Novotný, F., Horák, M., & Starý, M. (2017). Abrasive cylindrical brush behaviour in surface processing. International Journal of Machine Tools and Manufacture, 118–119(6), 61–72. https://doi.org/10.1016/j.ijmachtools.2017.03.006

Oesterling P (2016) Visual analysis of high-dimensional point clouds using topological abstraction. Dissertation, Universität Leipzig, Leipzig

Pandiyan, V., Shevchik, S., Wasmer, K., Castagne, S., & Tjahjowidodo, T. (2020). Modelling and monitoring of abrasive finishing processes using artificial intelligence techniques: A review. Journal of Manufacturing Processes, 57, 114–135. https://doi.org/10.1016/j.jmapro.2020.06.013

Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., & Duchesnay, E. (2011). Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12, 2825–2830.

Pfrommer, J., Zimmerling, C., Liu, J., Kärger, L., Henning, F., & Beyerer, J. (2018). Optimisation of manufacturing process parameters using deep neural networks as surrogate models. Procedia CIRP, 72, 426–431. https://doi.org/10.1016/j.procir.2018.03.046

Proteau, A., Tahan, A., Zemouri, R., & Thomas, M. (2021). Predicting the quality of a machined workpiece with a variational autoencoder approach. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-021-01822-y

Rahm, J., Urbas, L., Graube, M., Muller, R., Klaeger, T., Schegner, L., Schult, A., Bonsel, R., Carsch, S., & Oehm, L. (2018). Kommdia: Dialogue-driven assistance system for fault diagnosis and correction in cyber-physical production systems. In: 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA), IEEE, pp 999–1006, https://doi.org/10.1109/ETFA.2018.8502615

Riihimäki, J., & Vehtari, A. (2010). Gaussian processes with monotonicity information. In: Teh YW, Titterington M (eds) Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, PMLR, Chia Laguna Resort, Sardinia, Italy, Proceedings of Machine Learning Research, vol 9, pp 645–652

Rueden, L.v., Mayer, S., Beckh, K., Georgiev, B., Giesselbach, S., Heese, R., Kirsch, B., Walczak, M., Pfrommer, J., Pick, A., Ramamurthy, R., Garcke, J., Bauckhage, C., & Schuecker, J. (2021). Informed machine learning - a taxonomy and survey of integrating prior knowledge into learning systems. IEEE Transactions on Knowledge and Data Engineering pp 1–1, https://doi.org/10.1109/TKDE.2021.3079836

Schmid, J. (2021). Approximation, characterization, and continuity of multivariate monotonic regression functions. Analysis and Applications pp 1–39, https://doi.org/10.1142/s0219530521500299

Schmid, J., & Poursanidis, M. (2021). Approximate solutions of convex semi-infinite optimization problems in finitely many iterations. arXiv:2105.08417

Schuh, G., Prote, J.P., Hünnekes, P., Sauermann, F., & Stratmann, L. (2019). Using Prescriptive Analytics to Support the Continuous Improvement Process. In: Ameri F, Stecke KE, von Cieminski G, Kiritsis D (eds) Advances in Production Management Systems. Production Management for the Factory of the Future, IFIP Advances in Information and Communication Technology, vol 566, Springer International Publishing, Cham, pp 341–348, https://doi.org/10.1007/978-3-030-30000-5_43

Shaikhina, T., & Khovanova, N. A. (2017). Handling limited datasets with neural networks in medical applications: A small-data approach. Artificial intelligence in medicine, 75, 51–63. https://doi.org/10.1016/j.artmed.2016.12.003

Teicher, U., Schulze, R., Brosius, A., & Nestler, A. (2018). The influence of brushing on the surface quality of aluminium. MATEC Web of Conferences, 178, 01015. https://doi.org/10.1051/matecconf/201817801015

Torre, E., Marelli, S., Embrechts, P., & Sudret, B. (2019). Data-driven polynomial chaos expansion for machine learning regression. Journal of Computational Physics, 388(4), 601–623. https://doi.org/10.1016/j.jcp.2019.03.039

Tsai, T. I., & Li, D. C. (2008). Utilize bootstrap in small data set learning for pilot run modeling of manufacturing systems. Expert Systems with Applications, 35(3), 1293–1300. https://doi.org/10.1016/j.eswa.2007.08.043

von Kurnatowski, M., Schmid, J., Link, P., Zache, R., Morand, L., Kraft, T., Schmidt, I., Schwientek, J., & Stoll, A. (2021). Compensating data shortages in manufacturing with monotonicity knowledge. Algorithms 14(12), https://doi.org/10.3390/a14120345

Wahab, M. A., Parker, G., & Wang, C. (2007). Modelling rotary sweeping brushes and analyzing brush characteristic using finite element method. Finite Elements in Analysis and Design, 43(6–7), 521–532. https://doi.org/10.1016/j.finel.2006.12.003

Weichert, D., Link, P., Stoll, A., Rüping, S., Ihlenfeldt, S., & Wrobel, S. (2019). A review of machine learning for the optimization of production processes. The International Journal of Advanced Manufacturing Technology, 104(5–8), 1889–1902. https://doi.org/10.1007/s00170-019-03988-5

Williams, C. K., & Rasmussen, C. E. (2006). Gaussian processes for machine learning (Vol. 2). Cambridge, MA: MIT Press.

Wuest, T., Weimer, D., Irgens, C., & Thoben, K. D. (2016). Machine learning in manufacturing: advantages, challenges, and applications. Production & Manufacturing Research, 4(1), 23–45. https://doi.org/10.1080/21693277.2016.1192517

Xu, Z., Dang, Y., & Munro, P. (2018). Knowledge-driven intelligent quality problem-solving system in the automotive industry. Advanced Engineering Informatics, 38, 441–457. https://doi.org/10.1016/j.aei.2018.08.013

Zhang, H., Roy, U., & Tina Lee, Y. T. (2020). Enriching analytics models with domain knowledge for smart manufacturing data analysis. International Journal of Production Research, 58. https://doi.org/10.1080/00207543.2019.1680895

Acknowledgements

We gratefully acknowledge the funding provided by the Fraunhofer Society as part of the Lighthouse Project “Machine Learning for Production” (ML4P). In addition, we would like to thank Holger Pätzold (Schaeffler Technologies AG & Co KG, Herzogenaurach) for the valuable discussions regarding the brushing process, Markus Renner (Carl Hilzinger-Thum GmbH & Co. KG, Tuttlingen) for providing the brushing tools and Konstantin Kusch (Fraunhofer IWU, Chemnitz) for data acquisition and analysis. We would also like to thank Michael Bortz, Jan Schwientek, and Philipp Seufert (Fraunhofer ITWM, Kaiserslautern) for inspiring mathematical discussions.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Link, P., Poursanidis, M., Schmid, J. et al. Capturing and incorporating expert knowledge into machine learning models for quality prediction in manufacturing. J Intell Manuf 33, 2129–2142 (2022). https://doi.org/10.1007/s10845-022-01975-4

DOI: https://doi.org/10.1007/s10845-022-01975-4