Abstract

The stopping rule for a sequential experiment is the rule or procedure for determining when that experiment should end. Accordingly, the stopping rule principle (SRP) states that the evidential relationship between the final data from a sequential experiment and a hypothesis under consideration does not depend on the stopping rule: the same data should yield the same evidence, regardless of which stopping rule was used. I clarify and provide a novel defense of two interpretations of the main argument against the SRP, the foregone conclusion argument. According to the first, the SRP allows for highly confirmationally unreliable experiments, which concept I make precise, to confirm highly. According to the second, it entails the evidential equivalence of experiments differing significantly in their confirmational reliability. I rebut several attempts to deflate or deflect the foregone conclusion argument, drawing connections with replication in science and the likelihood principle.

Availability of data and materials

Not applicable.

Code availability

Not applicable.

Notes

Despite their name, sequential experiments need not involve any robust experimenter control or manipulation.

I leave open the possibility that there are degrees of confirmation incomparable with the neutral degree. These might be interpreted as degrees that are partially confirmatory in some respects and partially disconfirmatory in others, although this interpretation may demand a further structural assumption, e.g., that for any degree, it and the neutral degree have a lower bound and an upper bound.

If one did not wish to identify evidential equivalence with equality of incremental confirmation, one could instead postulate that the former, understood as a separate, primitive concept, entails the latter in all cases in which it is defined. Nothing else substantial in the following would then change.

See, e.g., Raiffa and Schlaifer (1961, 38–40) for examples of directly and indirectly informative stopping rules.

In this essay, aside from the stopping parameters \(\Phi \) as described in the foregoing, I am setting aside the possibility of nuisance parameters, parameters whose values are necessary to determine the probability distribution for the data but which are not the object of inference or confirmation. While I believe the essential ideas here extend to such cases, I leave demonstrating that to future work.

Some statements of the SRP require, effectively, that \(z=z'\) (e.g., Berger and Wolpert 1988, 76). But this needlessly excludes situations where there is a trivial relabeling of the values of the data, or when the order of the data recorded from the sequential experiment is different.

\(\delta _{n,N} = 1\) if \(n=N\) and vanishes otherwise.

This argument, which proceeds via the Likelihood Principle introduced in Sect. 6, had been stated much earlier (Edwards et al. 1963, 237), and its conclusion was well known (Savage 1962, 17), but Steel (2003) was, as far as I know, the first to point out the implicit assumption about the dependence of the confirmation measure.

This calculation shows that one should not be misled into thinking that l depends only on the likelihood \(P\,(Z|\theta )\) and not the prior \(P\,(\theta )\), despite its name.

It is typical in an experimental design to specify the stopping parameter completely, or else control it enough that the likelihood function for the experiment is well approximated by one so specified.

One could of course extend the definition of \(CU_c\) to the cases in which \(\tau =\theta \), but then the confirmational unreliability would just be equal to the disconfirmational reliability (Eq. 13), i.e., \(CU_c\,(Z,q,\theta ,\theta'\!,\theta ) = DR_c\,(Z,q,\theta ,\theta ')\).

For the former, one sets, for any random variable A, \(P_\theta \,(A)=P\,(A|\theta )\).

It would be interesting and worthwhile to determine more precisely how reliability and any particular Bayesian confirmation measure are interdependent, but that question is beyond the scope of the present work.

To avoid needless repetition, I will henceforth leave tacit parentheticals re-affirming that confirmation can be comparative or non-comparative, unless confusion might arise.

The example had already been known in the context of debates around the proper analysis of data from experiments with “optional” (i.e., probabilistic) stopping rules in classical statistics (Savage 1962, 18). (In retrospect, the division between deterministic and non-deterministic stopping rules is not in general invariant with respect to a redescription of the experiment, but this won’t matter for present purposes.)

I restrict my claim to finite \({\mathcal {E}}\), since it seems possible to have an infinite collection of experiments, for each element \(\alpha \) of which a confirmation measure satisfies \(\varepsilon \)A\(q_\pm \)R\({\mathcal {E}}\) with \(\varepsilon _\alpha (\theta ,\theta ') > 0\), but such that for some \(\theta ,\theta '\!,\) \(\inf _\alpha \varepsilon_\alpha (\theta ,\theta ') = 0\).

Here the confirmation should be taken to be qualitative, rather than quantitative (Mayo 1996, 179n3): confirmation is “passing” a highly severe test.

Here one might make an exception for \(q_0\), for that neutral case might intuitively accommodate a variety of experiments with various reliabilities. I do not see how anything that follows in this essay depends on taking a stand on this issue, however.

Here I have adapted the calculations of Steele (2013, 957) to the present terminology.

See also examples 20 and 21 in Berger and Wolpert (1988, 75–76, 80–81) for further instances in which similar conclusions apply.

Mayo (1996, Ch. 10.4) seems to acknowledge this in her discussion of Bayesian methods that also assess reliability.

I interpret the illuminating and important literature (mostly in mathematical statistics) on rates of convergence to the truth—both in the finite-dimensional (Le Cam 1973, 1986) and infinite-dimensional cases (Ghosal et al. 2000; Shen and Wasserman 2001)—to be offering a kind of palliative for this, extending these long-run guarantees to the medium-term.

In more detail: for one two-valued independent variable, collect 20 normally distributed observations per value; if sufficient disconfirmation does not occur, collect 10 more observations per value (Simmons et al. 2011, 1361).
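
The effect of this two-stage rule on the rate of false positives can be illustrated with a small simulation. The following is only a sketch under stated assumptions, not the procedure of Simmons et al.: it assumes unit-variance normal data with no true difference between the two groups, and it replaces their test with a two-sided z-test at the 0.05 level with known variance. The names `z_rejects` and `false_positive_rate` are hypothetical.

```python
import random
from statistics import NormalDist, fmean

def z_rejects(xs, ys, alpha=0.05):
    """Two-sided z-test for equal means, assuming known unit variance
    and equal group sizes (an assumption made for this sketch)."""
    n = len(xs)
    z = (fmean(xs) - fmean(ys)) / (2.0 / n) ** 0.5
    return abs(z) > NormalDist().inv_cdf(1 - alpha / 2)

def false_positive_rate(optional_stop, n_sims=20000, seed=0):
    """Fraction of simulated null experiments that reject the null."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        # Both groups are drawn from the same distribution: the null is true.
        xs = [rng.gauss(0, 1) for _ in range(30)]
        ys = [rng.gauss(0, 1) for _ in range(30)]
        if optional_stop:
            # Test at 20 per group; only if that fails, test again at 30.
            rejected = z_rejects(xs[:20], ys[:20]) or z_rejects(xs, ys)
        else:
            # Fixed design: a single test at 30 per group.
            rejected = z_rejects(xs, ys)
        hits += rejected
    return hits / n_sims

fixed = false_positive_rate(optional_stop=False)
optional = false_positive_rate(optional_stop=True)
print(f"fixed n=30: {fixed:.3f}; stop at 20 or continue to 30: {optional:.3f}")
```

Because both calls use the same seed, every data set that rejects under the fixed design also rejects under the two-stage rule, so the second rate can only be at least as large as the first.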

Bias in estimated effect sizes plays a role in confirmational unreliability because that latter concept relates two hypotheses by the probability of confirming one if the other is true. It also plays a role in the statistical theory of estimation, which is beyond the scope of the current argument, although issues concerning the SRP can well be extended to it. One can then have debates about the demerits of bias analogous to those about reliability that I describe in Sects. 4 and 5. See, e.g., Savage (1962, 18), Berger and Wolpert (1988, 80–82), and Howson and Urbach (2006, 164–166) for arguments dismissive of the importance of bias.

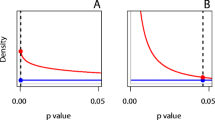

In particular, they examine the use of Bayes Factors, the comparative confirmation analog of l (Eq. 7). See also Sanborn and Hills (2014), who use simulation studies for a different variant, so-called Bayesian hypothesis testing. They emphasize that while there is no inconsistency in using Bayesian methods, those methods do not take into account something important for confidence in the conclusions of the research of psychological science. (See also Rouder (2014) and Sanborn et al. (2014) for a dialog on this topic.)

Their example is that of testing the mean of a normal distribution with known and fixed variance, but the substantive point at issue here is the same because the stopping process for the experiment does not depend on \(\theta \).

See also footnote 29.

Steele (2013) also considers two other “error theories” that she rejects: the first is that the intuition for reliability concerns arises from a conflation with considerations of experimental design, as Backe (1999) had urged. The second involves a conflation with experiments in which only a summary statistic of the experimental outcomes is available. Steele (2013, 949–950) rightly rejects these, in my view, as inaptly responding to the issue at hand. However, as I discuss in the sequel, this is also my view of her preferred error theory.

See also Gandenberger (2015, 13), who calls them “disingenuous experimenters”.

At least, what follows are the assumptions I have reconstructed from their exposition. Except for the Weak Conditionality Principle, I give my own names for these assumptions.

Berger and Wolpert (1988, 84) remark that “More general loss functions can be handled also” but it is difficult to see how their proof strategy could be substantively generalized without this assumption.

One decision rule \(\Delta \) is said to dominate another, \(\Delta '\), just when \(R\,(\theta ,\Delta ) \le R\,(\theta ,\Delta ')\) for all \(\theta \in \Theta \), and the inequality is strict for at least one value of \(\theta \in \Theta \). A decision rule is admissible if and only if it is not dominated by another decision rule.
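
For a finite parameter space and a finite set of candidate rules, these two definitions can be checked mechanically. The sketch below assumes that each rule's risk function is given as a table of values of \(R\,(\theta ,\Delta )\); the rule names and the risk numbers are hypothetical and chosen only for illustration, and admissibility is assessed only relative to the rules listed.

```python
def dominates(risk_a, risk_b):
    """True when risk_a <= risk_b at every theta and < at some theta."""
    thetas = risk_a.keys()
    return (all(risk_a[t] <= risk_b[t] for t in thetas)
            and any(risk_a[t] < risk_b[t] for t in thetas))

def admissible(name, risks):
    """True when no other listed rule dominates the named rule."""
    return not any(dominates(r, risks[name])
                   for other, r in risks.items() if other != name)

# Hypothetical risk tables: risks[rule][theta] plays the role of R(theta, rule).
risks = {
    "d1": {"theta0": 1.0, "theta1": 2.0},
    "d2": {"theta0": 1.0, "theta1": 3.0},  # dominated by d1
    "d3": {"theta0": 0.5, "theta1": 4.0},  # best at theta0, worst at theta1
}

admissible_rules = [name for name in risks if admissible(name, risks)]
print(admissible_rules)
```

Here `d1` dominates `d2`, since it is no worse at `theta0` and strictly better at `theta1`, while `d1` and `d3` each do better than the other at one parameter value, so neither dominates the other.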

A statistic T of the data X from an experiment for a parameter \(\theta \) is said to be sufficient for \(\theta \) when the probability distribution of X conditioned on T (X) does not depend on \(\theta \).
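
A standard textbook illustration, which is not drawn from the present article, may help: assume \(X=(X_1,\ldots ,X_n)\) consists of independent Bernoulli trials with success probability \(\theta \), and let \(T(X)=\sum _i X_i\). For any sequence \(x\) with \(t\) successes,

```latex
P_\theta\bigl(X = x \mid T(X) = t\bigr)
  = \frac{\theta^{t}(1-\theta)^{n-t}}{\binom{n}{t}\,\theta^{t}(1-\theta)^{n-t}}
  = \frac{1}{\binom{n}{t}},
```

which does not depend on \(\theta \), so the number of successes is sufficient for \(\theta \) under these assumptions.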

Actually, Birnbaum used slightly different principles; this version follows Berger and Wolpert (1988).

See footnote 37 for a definition of sufficiency.

References

Anscombe, F.J. 1954. Fixed-sample-size analysis of sequential observations. Biometrics 10: 89–100.

Backe, A. 1999. The likelihood principle and the reliability of experiments. Philosophy of Science 66 (Proceedings): 354–361.

Berger, J.O., and R.L. Wolpert. 1988. The likelihood principle: A review, generalizations, and statistical implications, 2nd ed. Hayward, CA: Institute of Mathematical Statistics.

Berger, R.L., and G. Casella. 2002. Statistical inference, 2nd ed. Pacific Grove, CA: Thomson Learning.

Birnbaum, A. 1962. On the foundations of statistical inference. Journal of the American Statistical Association 57 (298): 269–306.

Bovens, L., and S. Hartmann. 2002. Bayesian networks and the problem of unreliable instruments. Philosophy of Science 69 (1): 29–72.

Bovens, L., and S. Hartmann. 2003. Bayesian epistemology. Oxford: Oxford University Press.

Cornfield, J. 1970. The frequency theory of probability, Bayes’ theorem, and sequential clinical trials. In Bayesian statistics, ed. D.L. Meyer and R.O. Collier Jr., 1–28. Itasca, IL: Peacock Publishing.

Crupi, V. 2016. Confirmation. In The Stanford Encyclopedia of Philosophy (Winter 2016 Edition), ed. E.N. Zalta. Stanford: Metaphysics Research Lab, Stanford University.

Edwards, W., H. Lindman, and L.J. Savage. 1963. Bayesian statistical inference for psychological research. Psychological Review 70 (3): 193–242.

Feller, W. 1940. Statistical aspects of E.S.P. Journal of Parapsychology 4: 271–298.

Gandenberger, G. 2015. Differences among noninformative stopping rules are often relevant to Bayesian decisions. arXiv:1707.00214.

Ghosal, S., J.K. Ghosh, and A.W. van der Vaart. 2000. Convergence rates of posterior distributions. Annals of Statistics 28: 500–531.

Ghosh, M., N. Reid, and D.A.S. Fraser. 2010. Ancillary statistics: A review. Statistica Sinica 20 (4): 1309–1332.

Goldman, A., and B. Beddor. 2016. Reliabilist epistemology. In The Stanford Encyclopedia of Philosophy (Winter 2016 Edition), ed. E.N. Zalta. Stanford: Metaphysics Research Lab, Stanford University.

Howson, C., and P. Urbach. 2006. Scientific reasoning: The Bayesian approach, 3rd ed. Chicago, IL: Open Court.

Huber, F. 2007. Confirmation theory. In The Internet Encyclopedia of Philosophy. Accessed 30 Mar. 2018.

John, L.K., G. Loewenstein, and D. Prelec. 2012. Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science 23 (5): 524–532.

Kadane, J.B., M.J. Schervish, and T. Seidenfeld. 1996. Reasoning to a foregone conclusion. Journal of the American Statistical Association 91 (435): 1228–1235.

Kerridge, D. 1963. Bounds for the frequency of misleading Bayes inferences. The Annals of Mathematical Statistics 34 (3): 1109–1110.

Le Cam, L.M. 1973. Convergence of estimates under dimensionality restrictions. Annals of Statistics 1: 38–53.

Le Cam, L.M. 1986. Asymptotic methods in statistical decision theory. New York: Springer.

Mayo, D.G. 1996. Error and the growth of experimental knowledge. Chicago: University of Chicago Press.

Mayo, D.G. 2004. Rejoinder. In The nature of scientific evidence: Statistical, philosophical, and empirical considerations, ed. S.R. Lele and M.L. Taper, 101–118. Chicago: University of Chicago Press.

Mayo, D.G., and M. Kruse. 2001. Principles of inference and their consequences. In Foundations of Bayesianism, ed. D. Corfield and J. Williamson, 381–403. Dordrecht: Kluwer.

Raiffa, H., and R. Schlaifer. 1961. Applied statistical decision theory. Boston: Division of Research: Graduate School of Business Administration, Harvard University.

Robbins, H. 1952. Some aspects of sequential design of experiments. Bulletin of the American Mathematical Society 58: 527–535.

Rouder, J.N. 2014. Optional stopping: No problem for Bayesians. Psychonomic Bulletin and Review 21 (2): 301–308.

Royall, R. 2000. On the probability of observing misleading statistical evidence. Journal of the American Statistical Association 95 (451): 760–768.

Sanborn, A.N., and T.T. Hills. 2014. The frequentist implications of optional stopping on Bayesian hypothesis tests. Psychonomic Bulletin and Review 21 (2): 283–300.

Sanborn, A.N., T.T. Hills, M.R. Dougherty, R.P. Thomas, E.C. Yu, and A.M. Sprenger. 2014. Reply to Rouder (2014): Good frequentist properties raise confidence. Psychonomic Bulletin and Review 21 (2): 309–311.

Savage, L.J. 1962. The foundations of statistical inference: A discussion. London: Methuen.

Shen, X., and L. Wasserman. 2001. Rates of convergence of posterior distributions. Annals of Statistics 29: 687–714.

Simmons, J.P., L.D. Nelson, and U. Simonsohn. 2011. False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science 22 (11): 1359–1366.

Sprenger, J. 2009. Evidence and experimental design in sequential trials. Philosophy of Science 76 (5): 637–649.

Steel, D. 2003. A Bayesian way to make stopping rules matter. Erkenntnis 58: 213–227.

Steele, K. 2013. Persistent experimenters, stopping rules, and statistical inference. Erkenntnis 78: 937–961.

Steele, K., and H.O. Stefánsson. 2016. Decision theory. In The Stanford Encyclopedia of Philosophy (Winter 2016 Edition), ed. E.N. Zalta. Stanford: Metaphysics Research Lab, Stanford University.

Yu, E.C., A.M. Sprenger, R.P. Thomas, and M.R. Dougherty. 2014. When decision heuristics and science collide. Psychonomic Bulletin and Review 21 (2): 268–282.

Funding

This material is based in part upon work supported by the National Science Foundation under Grant No. 2042366.

Ethics declarations

Conflict of interest

Not applicable.

About this article

Cite this article

Fletcher, S.C. The Stopping Rule Principle and Confirmational Reliability. J Gen Philos Sci 55, 1–28 (2024). https://doi.org/10.1007/s10838-023-09645-6

DOI: https://doi.org/10.1007/s10838-023-09645-6