Abstract

Putnam dubbed what is now known as the no miracles argument “[t]he positive argument for realism”. In opposition to it, he placed an argument that by his own standards counts as negative. But are there no positive arguments against scientific realism? I believe that there is such an argument that has figured in the back of much of the realism-debate, but, to my knowledge, has nowhere been stated and defended explicitly. This is an argument from the success of quantum physics to the unlikely appropriateness of scientific realism as a philosophical stance towards science. I will here state this argument and offer a detailed defence of its premises. The purpose is both to exhibit in detail how far the intuition that quantum physics threatens realism can be driven, also in the light of more recent developments, and to exhibit possible vulnerabilities, i.e., to show where potential detractors might attack.

1 Motivation

Putnam (1975, 73) dubbed what is now known as the no miracles argument “[t]he positive argument for realism”. In opposition to it, he placed an argument that by his own standards should count as negative, i.e., as showing the failure of realism rather than the success of alternatives (cf. 1975, 73). This negative argument (Putnam 1978, 25), now known as the pessimistic (meta-)induction, has also spawned related negative arguments (Stanford 2006; Frost-Arnold 2019; Boge 2021a).

But are there no positive arguments against scientific realism, i.e., arguments showing the benefits associated with embracing alternatives to realism (and thus with rejecting realism)? I believe that there is such an argument that has figured in the back of the realism-debate, but, to my knowledge, has nowhere been stated and defended explicitly. This is an argument from the success of quantum physics (QP) to the unlikely appropriateness of scientific realism as a philosophical stance towards science. I will state this argument in the next section, but let me first outline why I believe it has ‘figured in the back’ of the realism-debate.

Certainly the most influential anti-realist position to date is constructive empiricism (van Fraassen 1980). As is well known, constructive empiricism relies on a crucial distinction between observables and unobservables. Being in part inherited from earlier empiricisms, this divide was critically examined already by Maxwell (1962), who objected that “we are left without criteria which would enable us to draw a non-arbitrary line between ‘observation’ and ‘theory’.” (186)

In light of this well-known problem, van Fraassen (1980) had always conceded observability to be vague, appealing in defense, however, to what he considered clear cases:

A look through a telescope at the moons of Jupiter seems to me a clear case of observation, since astronauts will no doubt be able to see them as well from close up. But the purported observation of micro-particles in a cloud chamber seems to me a clearly different case—if our theory about what happens there is right. (van Fraassen 1980, 16–17; emphasis added)

The example is supposed to capture the distinction between entities that could in principle be observed with the naked eye and those that could not. However, what force would it have if the theory in question was not quantum? Consider Maxwell again:

Suppose [...] that a drug is discovered which vastly alters the human perceptual apparatus [...] [and] that in our altered state we are able to perceive [...] by means of [...] new entities [...] which interact with electrons in such a mild manner that if an electron is [...] in an eigenstate of position, then [...] the interaction does not disturb it. [...] Then we might be able to ‘observe directly’ the position and possibly the approximate diameter and other properties of some electrons. It would follow, of course, that quantum theory would have to be altered [...], since the new entities do not conform to its principles. (Maxwell 1962, 189, emphasis added)

Quite likely, van Fraassen’s life-long interest in quantum theory has served as one major motivation for developing a new version of anti-realism (Dawid 2008; Musgrave 1985). This is most prominent in his Scientific Representation,Footnote 1 where he writes:

quantum theory exemplifies a clear rejection of the Criterion [that physics must explain how appearances are produced in reality]. [...] The rejection may not be unique in the history of science, but is brought home to us inescapably by the advent of the new quantum theory. Even if that theory is superseded [...] our view of science must be forever modified in the light of this historical episode. (van Fraassen 2010, 281, 291; emphasis omitted)

Yet even here, the points made by van Fraassen remain subtle, and he does not put forward a straightforward argument directly from the success of quantum physics to an objection to realism. To my knowledge, no such argument has ever been explicitly put forward.

I shall here advance just such an argument, hoping to further—in the light of more recent developments—an understanding of the much-debated contention that quantum physics threatens realism. At the same time, this will also give critics a more solid foundation for arguing against QP’s relevance for the realism-question, as it will exhibit the detailed steps necessary for ‘transmitting the realism problem upwards’.

An important clarification should be made at this point: I here follow Wallace (2020) in distinguishing ‘quantum theory’ as a formal framework from concrete theories formulated within that framework, such as the Standard Model of particle physics or Schrödinger’s theory of the hydrogen atom. ‘Quantum physics’ will be used to refer collectively to all physical applications of the quantum framework. Hence, the argument presented should not be mistaken for the kind of simplistic view that, say, atoms do not exist and since everything is made out of them, nothing does. The point is far more subtle, and maybe better roughly summarized as the claim that, because our most predictive calculus does not really have realistic presuppositions, but is involved, in subtle ways, in much of science, we do not have a good claim to those realistic presuppositions.

2 The Argument

In its intuitive formulation, the argument is that because quantum physics is tremendously successful but (for reasons expounded on below) quite probably says nothing true about the world, its existence and success provide a positive reason to embrace alternatives to realism. However, this does not make it quite clear why the rest of science, outside those domains that cannot be handled by means other than quantum calculations, should be affected by this.

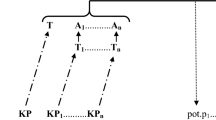

Hence, consider the following, more detailed argument:

1. Scientific realism is only appropriate as a philosophy of science if science delivers approximately true explanations.
2. If some scientific discipline \(\bar{D}\) inherits its success in no small part from another scientific discipline D, but not the other way around, \(\bar{D}\)’s ability to deliver true explanations depends on D’s.
3. The success of other scientific disciplines is in no small part inherited from physics. This is not true the other way around.
4. If some field \(\bar{F}\) in a scientific discipline D inherits its success in no small part from another field F in that discipline, but not the other way around, \(\bar{F}\)’s ability to deliver true explanations depends on F’s.
5. The success of other fields in physics is in no small part inherited from quantum physics. This is not true the other way around.
6. Quite probably, quantum physics does not deliver approximately true explanations.
7. Therefore, scientific realism is quite probably not appropriate as a philosophy of science.

Because the want of a solid basis for realist commitments is transmitted ‘upwards’ from fundamental science to the special sciences, one might equally call this the bottom-up argument against realism. One can ‘grasp’ its validity, but the proof is non-trivial. Hence, a valid formalization, using a mixed notation of probability and logic, is offered in the Appendix.

I believe that this argument nicely captures and explicates several intuitions underlying that part of the realism-debate that has focussed on QP. However, its premises are certainly far from uncontroversial, whence the remainder of the paper will be dedicated to their defence. As I said, this will at the same time highlight the argument’s potential vulnerabilities, and so give critics a chance to say exactly what they find wrong with claims to QP promoting anti-realism.

3 Scientific Realism is Only Appropriate If Science Delivers True Explanations

According to the standard analysis (Putnam 1975; Psillos 1999), realism involves (i) the metaphysical commitment that there is a world with definite, mind-independent nature and structure, (ii) the semantic commitment that scientific theories are capable of being true, and (iii) the epistemic commitment that mature, successful theories are approximately true of that world.

(i)–(iii) have been modified in several ways: For instance, structural realists either restrict (iii) to truth about the world’s structure (Worrall 1989) or strike ‘nature’ from (i) (Ladyman and Ross 2007). Other selective forms of realism endorse more general qualifications, such as (iii) being restricted to certain posits that are in some sense essential for a given theory’s success (Kitcher 1993; Psillos 1999). But none of these positions give up (i)–(iii) altogether.

Now if no statement that was both explanatory and true could be obtained from any given theory, obviously (i)–(iii) could not be upheld in any sensible form: Only purely descriptive or predictive scientific claims could be true, and this would exclude the bulk of scientific claims, thereby threatening condition (iii).Footnote 2

I should explain a little.Footnote 3 First note that scientific explanations “can serve to generate predictions. Explanations that do not have this generative power should be considered scientifically suspect.” (Douglas 2009, 446) Hence, successful scientific explanation goes hand in hand with prediction. But it requires something more: If I predict a future instance of some type of event from a sequence of past observations, that sequence does not count as an explanation. Similarly, if I have a model in hand that specifies observable relations between observable quantities, this too hardly explains why one of these quantities will take on a certain value, even if that value is formally being predicted. For instance, suppose I observe that pushing my chair a bit across the floor leads to a screeching noise. I may predict the occurrences of screeching noises quite accurately from the pushing activities, but that would hardly count as an explanation.

A more illuminating example that combines both of these aspects is Rydberg’s formula. Said formula parametrizes the distance between the spectral lines of several elements, and thus identifies certain observable patterns (of mutual distances) between observable entities (spectral lines). Furthermore, it is usually considered a prime example of a model that is ‘purely descriptive’ (e.g., Craver 2006; Cummins 2000). This is true despite the fact that both the Rydberg formula and its precursor, the Balmer formula, happened to generate novel predictions by positing the continuation of said pattern(s), and its transferability between certain elements (Banet 1966). However, according to various authors (e.g. Wilholt 2005; Bokulich 2011; Massimi 2005), it was not until the arrival of Bohr’s atom model that at least some form of explanation was available. Hence, explanation is more than (successful, novel) prediction; it is ‘prediction\(^+\)’. What is the ‘\(+\)’ here though?

I claim that it is the positing of additional entities, structures, variables, beyond the purely observable domain. Thus, it was Bohr’s positing of discrete electron orbits, discrete (instantaneous) transitions between these, and the emission of radiation with the corresponding energies in these transitions that performed the explanatory work.

Now, committing to the Rydberg formula’s truth over a certain restricted domain would be fully acceptable for constructive empiricists and anti-realists of like guises, whereas committing to the truth of any of Bohr’s posits would not. Hence, despite the fact that novel predictive success is typically advanced by realists as the basis for investing epistemic commitment in a theory, model, or posit, such predictive success alone cannot define the dividing line between realism and anti-realism: It must be predictive success on account of the posited additional entities, structures, variables—success which is ipso facto also at least minimally explanatory.
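The contrast between Rydberg’s descriptive formula and Bohr’s explanatory posits can be made concrete with a small numerical sketch. It is an illustration only: the function names are hypothetical, the constants are CODATA values assumed here, and the nuclear mass is treated as infinite for simplicity. In the first function the Rydberg constant is a free parameter that has to be fitted to observed lines; in the second it is computed from the mass and charge of the posited electron, as in Bohr’s model.

```python
# CODATA values in SI units (assumed here for illustration).
M_E = 9.1093837015e-31       # electron mass, kg
E_CHARGE = 1.602176634e-19   # elementary charge, C
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m
H_PLANCK = 6.62607015e-34    # Planck constant, J s
C_LIGHT = 299792458.0        # speed of light, m/s


def rydberg_wavelength(n_lower, n_upper, rydberg_constant):
    """Descriptive pattern: 1/lambda = R * (1/n_lower^2 - 1/n_upper^2).

    The constant R is simply supplied from outside, e.g. from a fit to
    measured line positions; nothing unobservable is posited.
    """
    inverse_wavelength = rydberg_constant * (1.0 / n_lower**2 - 1.0 / n_upper**2)
    return 1.0 / inverse_wavelength


def bohr_rydberg_constant():
    """Bohr's expression R = m_e e^4 / (8 eps0^2 h^3 c), infinite nuclear mass."""
    return M_E * E_CHARGE**4 / (8.0 * EPS0**2 * H_PLANCK**3 * C_LIGHT)


# Balmer series (n_lower = 2), first line, using the constant derived from the posits.
h_alpha = rydberg_wavelength(2, 3, bohr_rydberg_constant())
print(f"R = {bohr_rydberg_constant():.6e} 1/m, H-alpha = {h_alpha * 1e9:.1f} nm")
```

The first line of the Balmer series comes out at about 656 nm. That the second function needs the electron’s mass and charge, whereas the first needs only line positions, mirrors the role the additional posits play in the discussion above.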

This already brings a fair amount of clarity to what I am getting at here, but I believe even more headway can be made by building on some of the recent developments on scientific explanation. Crucially, I have relied on a qualifier ‘some form of’ in the above claim to an explanation being offered by Bohr’s atom model. Thus, following a mainstream trend in recent debates over scientific explanation (cf. Gijsbers 2016), I here embrace explanatory pluralism: explanations might “represent causal structure; [...] deploy asymptotic reasoning; [...] represent mechanisms; [...] represent non-causal, contrastive, probabilistic relations; [...] unify phenomena into a single framework;” and so on (Khalifa 2017, 8). However, the point is thus that on no such account does science deliver approximately true explanations, so long as explanation involves the positing of unobservables (as I have claimed).

To make sense of this, consider the minimal account of explanation advanced by Khalifa (2017, 7), which is compatible with all these different forms:

q (correctly) explains why p if and only if:

1. p is (approximately) true;
2. q makes a difference to p;
3. q satisfies your ontological requirements (so long as they are reasonable); and
4. q satisfies the appropriate local constraints.

“Difference-making” can be understood in various ways; for instance, in the sense of a counterfactual dependence between events (Khalifa 2017, 7), but also in the slightly more mundane sense of posits and properties of a given model being relevant for the model’s very purpose, while others may be idealized away without harm (Strevens 2011, 330).Footnote 4 The local constraints in (4) are exactly an acknowledgement of the fact that standards of explanation vary. Thus, in the life sciences, explanations may have to be causal and/or mechanistic, whereas in physics, the derivation from a presumed (though not necessarily causal) law might be considered sufficient. However, the ‘ontological requirements’ in (3) are what distinguishes the realist from the anti-realist:

realists will hold that the explanans q should be treated in the same manner as the explanandum—it should be (approximately) true. By contrast, many antirealists deny that our best explanations have true explanantia. [...] anything a theory says about unobservable entities—paradigmatic examples of which are subatomic particles, the curvature of spacetime, species, mental states, and social structures—may be false without forfeiting explanatory correctness. (Khalifa 2017, 7–8)

I claim that this level of detail is sufficient to see how ‘explanation’ can be used to sensibly distinguish realism from positions such as constructive empiricism, in which mature theories satisfy (ii) and those parts of them that have direct empirical consequences may also satisfy (iii), whereas this is unclear with respect to those parts delivering explanations for theories’ observable consequences in terms of unobservables. For, “to requests for explanation [...] realists typically attach an objective validity which anti-realists cannot grant.” (van Fraassen 1980, 13)

Thus, the question is not whether science is overall explanatory or not: Several authors on either side of the realism-debate acknowledge that a major aim of science is understanding (e.g. Rowbottom 2019; Khalifa 2017; Potochnik 2017; Strevens 2011; Elgin 2017; Stanford 2006; de Regt 2017), and all of them acknowledge that at least some form of understanding transpires from explanations. But then, even if the value of explanation is merely pragmatic, having explanations to foster scientific understanding may be considered valuable in order for science to proceed (see, especially, Rowbottom 2019; Stanford 2006). The crucial question instead is what status one attributes to purported explanations, or whether one thinks that all scientific posits that one could possibly claim (approximate) truth for are of an empirical, descriptive nature.

As a concrete example, consider how an entity realist may posit a certain type of entity to account for certain observations, such as cathode rays, or bumps on top of distributions of kinematic variables inferred from the electrical activity in a particle-detector. Said posit will thus immediately serve to explain the observations: The electrical activity in the detector is such that a bump can be exhibited in a certain plot (Khalifa’s condition 1); positing the particle does make a difference to the expected shape of the distribution (condition 2); the particle does exist, even if our theories about it may change (condition 3); and predicting a distribution from a set of laws describing the particle’s general properties, together with relevant models of the measurement-context, is appropriate practice in particle physics (condition 4).

The whole reason for positing the particle is, hence, to be able to understand the activity in the detector, using a certain reasoning chain that offers an explanation of it. But there is almost nothing here to dispute for the anti-realist, besides, first and foremost, condition 3: She will claim that the particle-posit is useful and not harmful, but also not something one needs to commit oneself to or take literally. A similar point can of course be made about, say, tracks in cloud chambers, and a similar game can be played with structure (pace Worrall 1989; Ladyman and Ross 2007), ‘phenomenological’ (though not directly observable) connections encoding causal patterns (pace Cartwright 1983; Potochnik 2017), and so forth. I thus maintain that embracing the existence of at least some (approximately) true scientific explanations (on as liberal a reading of ‘explanation’ as is compatible with their being predictive on account of posited ‘unobservables’) is a necessary condition for any sensible form of realism.

Admittedly, there is now only a fine line left between realism and anti-realism that some, such as Saatsi (2017; 2019b), might be flirting with (in a slight abuse of these words). Nevertheless, a line must be drawn somewhere (see also Vickers 2019; Stanford 2021), and the ability to deliver true explanations is a condition for realism which, on account of the foregoing arguments, can be claimed to capture the core intuitions on both sides fairly well. I acknowledge, however, that a more involved conception of explanation could be invoked and defended, in order to criticize this premise. I could then only retreat to a weaker (though maybe somewhat more iffy) term such as ‘posits that make significant reference to unobservables’, instead of ‘explanations’. This would change the wording of some of the subsidiary arguments to follow, but I believe it would not threaten the overall argument’s validity.

Furthermore, one might also go in the opposite direction and call all kinds of empirical predictions ‘explanations’; but besides the fact that this too would be covered by the direct reference to ‘unobservables’, I submit it would mean stretching the concept of explanation too far.

4 Scientific Success is in No Small Part Inherited from Quantum Physics

Premises (3) and (5) are certainly more controversial, but I believe they are very much defensible upon closer inspection. First, consider the claim that success in science stems in no small part from physics, but not vice versa. This should not be mistaken as an expression of outright physicalism, but rather as a more qualified statement in the vein of Ladyman and Ross’ (2007, 44) Primacy of Physics Constraint: “Special science hypotheses that conflict with fundamental physics [...] should be rejected for that reason alone.”

The claim that success in science overall is in no small part due to physics (but not vice versa) is actually even quite a bit weaker: It does not involve a normative component (even fundamental physics is fallible), nor direct appeal to fundamental physics. It is merely a descriptive claim to the primacy of physics in furthering scientific success; something I believe to be hardly deniable when appropriately construed.

There are two ways in which this primacy could be construed, only one of which is appropriate for this paper: (a) Obviously, progress in physics has made possible the manifold engineering achievements that were crucial to the advancement of other sciences. However, (b), it is also true that special sciences often rely directly on physics in building successful models and theories.

The relevant sense here is (b), because only there can a strong asymmetry in dependence be claimed: In sense (a), scientific instrumentation may usually not involve biological mechanisms, but chemical composition, say, often matters. In sense (b), however, successful physical theorizing scarcely needs to take into account results from chemistry, and even less so from biology. This is clearly not true the other way around.

As an example, consider the present understanding of neurobiological processing, which relies fundamentally on the existence and propagation of potentials. A seminal model that “heralded the start of the modern era of biological research in general” (Schwiening 2012, 2575) is that of Hodgkin and Huxley (1952). The approach by Hodgkin and Huxley was to model the membrane of the giant squid axon as a complex electrical circuit. The model then acknowledged four different currents, one corresponding to the capacitance of the membrane, one for sodium and potassium ions each, and a ‘leakage’ current corresponding to chloride and other ions. The result was a differential equation

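\[
I = C_M \frac{dV}{dt} + \bar{g}_{K}\, n^4\, (V - V_{K}) + \bar{g}_{Na}\, m^3 h\, (V - V_{Na}) + \bar{g}_{l}\, (V - V_{l}),
\]

with I the total membrane current density, V the displacement of the membrane potential from its resting value, the \(V_x\) characteristic displacements from the membrane’s rest potential, \(C_M\) the membrane capacitance per unit area, the \(\bar{g}_x\) conductances (and the bar indicating the peak of a time-dependent function), and n, m, and h dimensionless variables modelling the proportion of charged particles in certain domains (e.g. inside the membrane).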

Even though the powers of n, m, and h could be motivated by fundamental modelling assumptions, to obtain a good description of the current in a giant squid axon Hodgkin and Huxley first had to fit these variables in a cumbersome numerical procedure (cf. Schwiening 2012). Hence, the correspondence to measured potentials of the giant squid axon itself did not provide any confirmation, at least in the narrow sense of successful use-novel prediction (Worrall 1985). But the model was subsequently also used to bring home several independent confirmations and to spawn off further empirically successful developments (cf. Schwiening 2012, 2575).

The crucial point for us here is the intimate reliance on physical concepts, such as charges, currents, capacitances, and resistances, which were essential to the successes achieved with this model.
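How directly the model is assembled from circuit concepts can be seen in a minimal sketch of its current balance: a capacitive term plus three ohmic terms whose conductances are scaled by powers of the gating variables. The function name is hypothetical, and the parameter values, the resting values of n, m, and h, and the sign convention (depolarisation counted as positive) are the commonly quoted ones, assumed here for illustration rather than taken from the text.

```python
# Peak conductances (mS/cm^2), characteristic potentials (mV, as displacements
# from rest, depolarisation positive) and capacitance (uF/cm^2).
# Commonly quoted Hodgkin-Huxley values, assumed here for illustration.
G_K, G_NA, G_L = 36.0, 120.0, 0.3
V_K, V_NA, V_L = -12.0, 115.0, 10.613
C_M = 1.0


def membrane_current(v, dv_dt, n, m, h):
    """Total membrane current density (uA/cm^2) as the sum of four currents.

    v      displacement of the membrane potential from rest (mV)
    dv_dt  its rate of change (mV/ms)
    n,m,h  dimensionless gating variables between 0 and 1
    """
    capacitive = C_M * dv_dt                 # charging of the membrane capacitor
    potassium = G_K * n**4 * (v - V_K)       # ohmic current through K channels
    sodium = G_NA * m**3 * h * (v - V_NA)    # ohmic current through Na channels
    leakage = G_L * (v - V_L)                # chloride and other ions
    return capacitive + potassium + sodium + leakage


# Approximate resting values of the gating variables (assumed).
REST_GATES = {"n": 0.3177, "m": 0.0529, "h": 0.5961}
resting_current = membrane_current(0.0, 0.0, **REST_GATES)
print(f"net current at rest: {resting_current:.4f} uA/cm^2")
```

At rest the three ionic currents nearly cancel, which is how the leakage potential is fixed in this parametrisation. Every term in the function is an instance of a capacitor law or of Ohm’s law, which is the dependence on physical concepts at issue here.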

This is but one example, and further ones abound throughout chemistry and the life-sciences. For example, progress in cardiology and orthopaedics depended on the use of mechanical models specifying the heart’s and the feet’s dynamical and static properties in purely physical terms, respectively. Similarly, models from fluid dynamics and statics aided progress in understanding blood flow and pressure respectively (cf. Varmus 1999). And even bracketing physical chemistry for now, this is far from an exhaustive list (see, for instance, Newman 2010, 2).

The importance of physics certainly does not stop at the level of the life sciences. For instance, in order to understand the prospects and limitations of actual computers, or hardware-related sources of error in implemented code, computer science, too, has to take a fair bit of physics into account (e.g. Clements 2006, for an overview).

Emphatically, I am not claiming that all of science depends on physics. A counter-example would be, say, economics, with most of its models being agent-based, or based on other macro-variables that are in no clear sense physical.Footnote 5 The extent of the dependence of all science on physics is hence disputable, and this may be a suitable avenue for attack by detractors. Nevertheless, I believe that the examples given above (and the many further ones that can be revealed by a dedicated search) reasonably justify the claim that success in science in no small part depends on success in physics, and in the right sense.

Now, what about the dependence of physics on quantum physics? On the face of it, physics for the most part proceeds just fine without giving any consideration to the quantum. I believe this impression to be deceptive though, as large chunks of applied modern physics rely at some point on quantum calculations, and indispensably so.

A nice example from solid state physics is the Hall effect, a phenomenon whose elementary description seems to rely on rather benign ideas from classical physics: When electrons flow as a current \(j_x\) in a wire, driven by an electric field \(E_x\) applied in the x-direction, but are then also deflected according to the Lorentz force law

\[
\vec{F}_L = -\frac{e}{c}\, \vec{v} \times \vec{H}
\]

by a magnetic field \(\vec {H}\), it can be observed that the current nevertheless stabilizes along the x-direction, because the accumulation of charges in one part of the wire creates a net field \(E_y\), in turn giving rise to a Coulomb force \(\vec {F}_C\) that compensates \(\vec {F}_L\). This effect can be quantified in terms of the Hall coefficient

\[
R_H = \frac{E_y}{j_x H},
\]

which, for negative charge carriers, must be negative. However:

One of the remarkable aspects of the Hall effect [...] is that in some metals the Hall coefficient is positive, suggesting that the carriers have a charge opposite to that of the electron. This is another mystery whose solution had to await the full quantum theory of solids. (Ashcroft and Mermin 1976, 13)
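Why the classical picture fixes the sign of the Hall coefficient can be seen from a short force balance. The following is a sketch in Gaussian units under simplifying assumptions that are mine, not the text’s: a single species of carriers with charge q, number density n, and drift velocity \(v_x\), with the magnetic field of magnitude H along the z-direction. In the steady state the transverse force on a carrier vanishes:

\[
q\left(E_y - \frac{v_x H}{c}\right) = 0
\quad\Longrightarrow\quad
E_y = \frac{v_x H}{c} .
\]

With \(j_x = n q v_x\), the Hall coefficient, defined as \(E_y/(j_x H)\), becomes

\[
R_H = \frac{E_y}{j_x H} = \frac{1}{n q c},
\qquad\text{so that}\qquad
R_H = -\frac{1}{n e c} \quad \text{for electrons } (q = -e).
\]

On these assumptions the sign of \(R_H\) is simply the sign of the carriers’ charge, which is why a positive measured value cannot be accommodated as long as the only carriers are classical electrons.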

The ‘solution’ is the theory of holes, i.e., positively charged quasi-particles. But these are really just unfilled states in the energy bands of the solid, and the term ‘energy bands’ strictly speaking refers to the structure of eigenfunctions and eigenvalues of a Schrödinger equation with periodic potential. A little more precisely, an idealized representation of an ordered solid is as a Bravais lattice, i.e., an infinite arrangement of discrete points that can be generated by the periodicity-prescription

\[
\vec{R} = n_1 \vec{a}_1 + n_2 \vec{a}_2 + n_3 \vec{a}_3,
\]

where the \(n_i\) are integers and the \(\vec {a}_i\) are fixed vectors that do not all lie in one plane. If the potential \(U(\vec {r})\) generated by the nuclei ordered in such a fashion is represented in terms of the reciprocal lattice, i.e., the set of all plane waves satisfying the co-periodicity condition

\[
e^{i \vec{K} \cdot (\vec{r} + \vec{R})} = e^{i \vec{K} \cdot \vec{r}},
\]

and plugged into the corresponding Schrödinger equation, it is possible to show that the eigenfunctions of the resulting Hamiltonian must be of the form

\[
\psi(\vec{r}) = e^{i \vec{k} \cdot \vec{r}}\, u(\vec{r}),
\]

with u satisfying the lattice periodicity.Footnote 6 The eigenvalues of these functions often have a characteristic structure wherein several states are densely spaced (and so form a quasi-continuum, or ‘band’), and between these collections there are larger gaps. Unoccupied states in one band correspond to a ‘hole’.
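How gaps between bands arise can be illustrated with the standard textbook case of a weak periodic potential. This is a sketch under assumptions not made in the text: the potential is expanded in reciprocal-lattice plane waves with Fourier coefficients \(U_{\vec{K}}\), and only the two free-electron levels \(\varepsilon^0_{\vec{q}}\) and \(\varepsilon^0_{\vec{q}-\vec{K}}\) that are nearly degenerate are kept. Diagonalizing the resulting two-by-two problem gives

\[
E_\pm = \frac{\varepsilon^0_{\vec{q}} + \varepsilon^0_{\vec{q}-\vec{K}}}{2}
\pm \sqrt{\left(\frac{\varepsilon^0_{\vec{q}} - \varepsilon^0_{\vec{q}-\vec{K}}}{2}\right)^{2} + \left|U_{\vec{K}}\right|^{2}} .
\]

Where the two free levels coincide, this reduces to

\[
E_\pm = \varepsilon^0_{\vec{q}} \pm \left|U_{\vec{K}}\right| ,
\]

so that the periodic potential opens a gap of width \(2\left|U_{\vec{K}}\right|\) between two otherwise continuous branches. Nothing in a classical treatment of point-like carriers corresponds to this splitting, which depends on the wave-like form of the eigenfunctions.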

This is, again, but one example, and already the first chapter of the classic solid state physics textbook by Ashcroft and Mermin (1976) mentions three further puzzles that could only be quantitatively solved by using quantum calculations.Footnote 7 Furthermore, this is only solid state physics, and the relevance of the quantum obviously extends beyond this one field. I hardly even need to mention atomic, nuclear, and particle physics: As is well known, atomic physics was the very first success of the quantum formalism, and (as is equally well known) modern particle physics is thoroughly based on quantum field theory (QFT) (e.g. Schwartz 2014). Nuclear physics features some classical models, but these are incapable of reproducing observed nuclear spectra; so most successful models of the nucleus use operators and state vectors (Greiner and Maruhn 1996).

A less obvious example is astrophysics. However, “classical physics is unable to explain how a sufficient number of particles can overcome the Coulomb barrier to produce the Sun’s observed luminosity.” (Carroll and Ostlie 2013, 391) Hence, it is not possible to adequately predict the light received from stars (most notably our own) in terms of a classical description of their material constitution. Similarly, many important astrophysical observations, such as the apparent expansion of the universe, require an investigation of emission spectra.Footnote 8 However, shifts in these spectra can obviously only be recognized because they are discrete rather than continuous, something only predicted by quantum calculations.
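The size of the classical shortfall can be gauged with a rough order-of-magnitude estimate. The sketch below asks at what temperature the mean thermal energy \(\tfrac{3}{2}kT\) of a particle would equal the Coulomb barrier between two protons. The function name is hypothetical, and both the separation of one femtometre and the solar central temperature of about \(1.57 \times 10^{7}\) K are assumed, approximate input values rather than figures from the text.

```python
import math

K_B = 1.380649e-23           # Boltzmann constant, J/K
E_CHARGE = 1.602176634e-19   # elementary charge, C
EPS0 = 8.8541878128e-12      # vacuum permittivity, F/m


def classical_barrier_temperature(z1, z2, separation_m):
    """Temperature at which (3/2) k T equals the Coulomb barrier height.

    z1, z2        charge numbers of the two nuclei
    separation_m  distance at which the nuclei are taken to touch (metres)
    """
    barrier_joule = z1 * z2 * E_CHARGE**2 / (4.0 * math.pi * EPS0 * separation_m)
    return 2.0 * barrier_joule / (3.0 * K_B)


# Two protons, assumed to fuse at a separation of about 1 fm.
T_CLASSICAL = classical_barrier_temperature(1, 1, 1.0e-15)
# Approximate central temperature of the Sun (assumed input value).
T_SUN_CORE = 1.57e7

print(f"classically required: {T_CLASSICAL:.2e} K")
print(f"ratio to solar core:  {T_CLASSICAL / T_SUN_CORE:.0f}")
```

On these assumptions the classically required temperature is of the order of \(10^{10}\) K, several hundred times the assumed central temperature of the Sun. The estimate ignores the high-energy tail of the thermal distribution, so it indicates the scale of the problem rather than settling it.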

A similarly unobvious example is the stability of matter. Treating, say, a wrench as a solid object whose center-of-mass coordinate can follow a Newtonian trajectory requires that the wrench, when understood also as a collection of smaller objects, be stable. There are two kinds of properties that can be shown with quantum models (i.e., models that use a Hamiltonian operator) and are taken to ensure (or predict) this kind of stability. In contrast, there was only a single, highly ad hoc classical model, with perfectly rigid, mutually repulsive nuclei, which could reproduce only one of these properties.

I here have in mind (i) the boundedness of the energy spectrum from below, which ensures that atoms cannot collapse into ever more energy-saving states, and so that the system does not collapse in on itself, and (ii) the additivity property, i.e., that the energy of a stable system increases linearly with the number of constituents, so that the system does not just ‘blow up’ (cf. Lieb and Seiringer 2010). The latter property was present in Onsager’s aforementioned classical model, but could only be predicted from the mentioned ad hoc assumptions about nuclei. However, Dyson was later on able to predict it by appeal to the anti-symmetrization of fermionic state spaces in QP—something which, unlike the rigidity assumption for nuclei, was clearly not introduced ad hoc for the very purpose of demonstrating stability (cf. Lieb and Seiringer 2010). The first property follows in quantum models with boundary conditions, but, as readers familiar with the episode of Bohr’s discovery of his atom model in Rutherford’s laboratory will be well-aware, can become highly problematic in a classical context.

Relativity and gravitation may seem special on the face of it; but I believe it is not without reason that the physics community is struggling to quantize gravity, whereas few even attempt to ‘gravitationalize the quantum’. Furthermore, most physicists accept the existence of a so far unknown ‘dark’ matter and -energy, influencing the gravitational goings on in the cosmos. However, all models put forward to introduce candidate particles are formulated in terms of QFT.

Now, that this sort of dependence does not go the other way around, i.e., from the classical to the quantum domain, could again be understood in sense (a). But that would be arguably false: Engineering accomplishments relevant for testing quantum theories rely to a large extent on pre-quantum physics, and so there is a reciprocal relationship. Sense (b), on the other hand, is arguably correct: There are several theorems, such as decoherence results, the \(\hbar \approx 0\)-limit, or the Ehrenfest relations, that successfully recover the bulk of classical predictions. This is, strictly speaking, true only in a statistical sense, but that is really just a feature, not a flaw: Experimental and observational results always scatter around central values, as those of us familiar with error-correction and uncertainty-estimation will readily agree. Hence, what QP needs to reproduce are expectation values.
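The statistical sense in which classical predictions are recovered can be made explicit for the Ehrenfest relations. As a sketch, assume a single particle of mass m in one dimension with Hamiltonian \(\hat{H} = \hat{p}^2/2m + V(\hat{x})\) (an illustrative special case, not one discussed in the text). The expectation values then obey

\[
\frac{d}{dt}\langle \hat{x} \rangle = \frac{\langle \hat{p} \rangle}{m},
\qquad
\frac{d}{dt}\langle \hat{p} \rangle = -\langle V'(\hat{x}) \rangle .
\]

Expanding the force around the mean position, with \(\sigma_x^2\) the position variance, gives

\[
\langle V'(\hat{x}) \rangle = V'(\langle \hat{x} \rangle) + \tfrac{1}{2}\, V'''(\langle \hat{x} \rangle)\, \sigma_x^{2} + \dots
\]

The first term is Newton’s second law for the mean values. The corrections are small whenever the state is narrow compared to the scale on which the force varies, and they vanish identically for potentials that are at most quadratic.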

Furthermore, there are these various classical limits to quantum theories, but no quantum limits to classical theories at all: Taking \(\hbar\) to zero means ‘cognitively zooming out’, i.e., neglecting the effects that scale with \(\hbar\). But \(\hbar\) is an empirical constant; so there is otherwise no real sense in varying its size. Furthermore, if QP was unknown, there would be no systematic way of successively introducing the effects that scale with \(\hbar\) into a classical model. Similarly, in decoherence theorems, when they do yield a classical limit, one dynamically retrieves what looks like a statistical ensemble of quasi-classical systems, i.e., a density matrix that is sufficiently (in)definite in both position and momentum so as to mimic (via its Wigner transform) the properties of a classical statistical ensemble. However, I am not aware of any procedure that dynamically yields what looks like a pure-state density matrix in, say, the momentum basis, from a classical probability density.

All in all, there is sufficient reason to think of quantum theories as simply more encompassing, and therefore they do not in the same sense inherit their success from classical theories as is the case the other way around. I admit, however, that it is debatable exactly how many classical results can be reproduced in this way, as quite certainly not all of them can. Hence, all that can be defended in this way is, once again, the claim that physics in general depends in no small part on the quantum but not vice versa, which I believe to be justified on account of the above discussion.

I would finally like to draw attention to the direct involvement of quantum calculations in the special sciences; i.e., to the fact that these themselves, without mediation by classical limits, depend to some degree on the quantum. The first, obvious example is the field of quantum chemistry. Many chemical results simply stem directly from using operators and state vectors, such as in, say, the linear combination of atomic orbitals (LCAO) technique. Quantum computer science is another obvious candidate; but we are currently also witnessing the emergence of a (maybe even more fascinating) field of quantum biology.

A rather homely example of the involvement of QP in biology is DNA sequencing, which itself is sometimes claimed to have revolutionized biology. A standard methodology here relies on laser-spectroscopy for identifying nucleobases. This could be mistaken as the kind of merely technological dependency in sense (a); but measuring the spectrum of nucleobases obviously means that these bases are associated with molecular orbitals.

Even more interestingly, there are reasons to think that entanglement, i.e., “the characteristic trait of quantum mechanics, the one that enforces its entire departure from classical lines of thought” (Schrödinger 1935, 555; original emphasis), plays a crucial role in certain biological contexts. There are, for instance, theoretical reasons to think that a comprehensive treatment of photosynthesis requires an assessment of detectable quantum entanglement between light harvesting complexes (Sarovar et al. 2010). More impressively still, Marletto et al. (2018) have recently argued that certain experimental findings suggest that bacteria can become entangled with photons probing them.Footnote 9

An important caveat is that these results are also consistent with modeling both bacteria and light classically. This is, however, deeply implausible given the wealth of independent evidence that requires us to quantize the electromagnetic field (see also Marletto et al. 2018, 4). Caveats of this sort presently still abound, and so quantum biology retains a somewhat controversial or preliminary status (see also Cao et al. 2020). Nevertheless, such examples clearly add to the more comprehensively established, indirect route via premises (3) and (5) discussed above.

5 Inheritance of Success Implies Dependence Between Explanations

Premises (2) and (4) I take to be about as controversial as (3) and (5); for even if one buys into (6), it could be upheld that quantum physics itself is instrumental, but that this does not impair the explanations delivered by the special sciences.

A position of this flavor has recently been defended by Hoefer (2020), who acknowledges that maintaining such a view requires one to “quarantine the quantum quagmire while preserving the pristine certitude of [...] areas of science that seem to rely [...] on the correctness of quantum theories.” (2020, 30–31) This Hoefer believes to be possible on account of the fact that “it is absolutely crazy to not believe in viruses, DNA, atoms, molecules, tectonic plates, etc.; and in the correctness of at least many of the things we say about them.” (2020, 22; original emphasis) More precisely, Hoefer holds that “certain parts of our current scientific lore are such that we cannot conceive of any way that they could be seriously mistaken, other than by appealing to scenarios involving radical skepticism.” (2020, 22; original emphasis)

Hoefer’s position sounds reasonable on the face of it, but is difficult to maintain in detail. In fact, several steps in Hoefer’s argumentation are problematic. For example, what counts as a radically skeptical scenario is highly context-dependent. The weaker brain-in-a-vat scenario discussed by Putnam (1981), in which “a human being [...] has been subjected to an operation by an evil scientist” and her brain has been “connected to a super-scientific computer which causes the person [...] to have the illusion that everything is perfectly normal” (Putnam 1981, 5–6), is certainly much less of a pure science fiction fantasy in the age of advanced neuroscience and (early) applied quantum computation.

Independently of that, it is hard to see why whatever alternative to realism may be needed to understand scientific success should involve anything like a radical skeptical scenario: Neither logical positivism nor constructive empiricism ever invoked skeptical scenarios to analyze scientific success without resorting to standard realist commitments.

More importantly, however, Hoefer (2020, 21) acknowledges that “inference to the best explanation [...] will lead one astray if one happens to possess only a ‘bad lot’ of possible explanations”. Now what is the move from something seeming ‘crazy’ to it not being the case other than an inference to the best explanation? The conviction that everything besides realism about the bulk of scientific claims is crazy or radically skeptical just means judging realism to be far better than its alternatives—a move that verges on petitio. In particular, scientific realism, together with its presently available alternatives, could well be a bad lot, and the ‘right’ philosophy of science may still be on or beyond the horizon.

Indeed, Hoefer points to the vast experimental knowledge in chemistry to argue that most of our scientific claims about atoms and molecules should be considered true. Yet our present quantum theories have been confirmed to exceeding degrees of accuracy by massive amounts of empirical evidence. In the words of Saatsi (2019a, 142; original emphasis): “if there is anything we should want to be realists about, it is quantum physics.”

Hence, why should we not regard the majority of claims implied by quantum theory as worthy of realist commitment by the very same lights? Contrapositively, if, despite this tremendous empirical success, we have serious reasons to doubt the ability of quantum theories to deliver the truth, why would we allow a different connection in disciplines in which this success is arguably less, or at least less impressive?

A second layer can be added to this discussion by paying attention to the quality of explanations in chemistry. For Douglas (2009, 458) has famously argued that:

A scientific explanation will be expected to produce new, generally successful predictions. An explanation that is not in fact used to generate predictions, or whose predictions quickly and obviously fail, would be scientifically suspect. An example of an explanation that fails to meet these criteria is any “just-so” story.

Furthermore, notable realists have wielded against anti-realist claims to successful but quite certainly false past theories the fact that not all predictions, even successful ones, are on equal footing. For instance, Velikovsky (1950) predicted correctly, on account of a rather fantastic theory of near collisions between planets in the early solar system, that Venus’ surface would be hot. However:

Velikovsky says nothing more specific than that the surface temperature of Venus will be ‘hot’. This is, certainly, a falsifiable prediction, but it is not a very risky prediction precisely because it is so vague, and thus compatible with a very large number of possible observations. The lesson seems to be that the realist needs to include in her success-to-truth inference some clause concerning (e.g.) ‘sufficient degree of risk’ of a novel prediction. (Vickers 2019, 575; original emphasis)

Hence, in order for a prediction to be ‘risky’, it must be specific enough—something usually determined by numerical precision—so as to come out wrong under a relatively large number of empirical scenarios (i.e., to not be practically immune to falsification).

Now just how much of chemistry has really offered explanations which coincide with successful and sufficiently ‘risky’ predictions without giving any consideration to (Hermitian or unitary) operators and state vectors? Some, such as van Brakel (2000, 177), indeed argue that:

If quantum mechanics would turn out to be wrong, it would not affect all (or even any) chemical knowledge about molecules (bonding, structure, valence, and so on).

However, two things are noteworthy here:

(i) When van Brakel (2000, 177) writes that “it is not the case that the microdescription will, in principle, always give a more complete and true description”, it becomes clear that by ‘quantum mechanics’, he means something like atomic and (sub-)nuclear physics, and that his interest is in questions of micro-reductionism. Emphatically, I am not concerned with any of this here, and it is perfectly acceptable for my purposes if quantum chemistry is not reducible to atomic or particle physics.

(ii) It makes a big difference, especially for the purposes of this paper, whether not all or none of chemistry would be affected by the ‘falsity’ of quantum theory: If only a subset of empirical laws for chemical experimentation or the production of certain chemicals could be rendered independent of the quantum, then this would be perfectly consistent with, say, constructive empiricism or instrumentalism—up to the (commitment to an) interpretation of these laws in terms of ‘invisible little molecules’, of course. In sharper words, the vast experimental knowledge of chemistry cannot be at stake here, for that has nothing to do with realism.

While it is hard to quantify the need for quantum calculations in successful theoretical chemistry exactly, I believe that it is clearly doubt worthy that the quantum-free portion is as significant as Hoefer makes it look. For instance, the seemingly innocent formula

for the reaction rate k of a chemical as a function of temperature T, referred to as “[t]he best equation for predicting [chemical] reaction rates” by Daniels (1943, 509) in the 1940s, really contains Planck’s constant when the parameter a is theoretically resolved. In fact, Eyring’s (1935) seminal treatment of reaction rates, which delivers the more detailed, predictive formula of this form, begins by defining quantum degrees of freedom and then uses a semi-classical approximation, justified by the assumption of relatively high temperatures. For instance, for quantifying vibrational degrees of freedom, Planck’s famous radiation law is treated in the limit \(\hbar \omega _i\ll k_BT\), so that

Similarly, quantized eigenvalues of angular momentum for the quantification of rotational degrees of freedom are treated as continuous in virtue of the fact that the rotational energies are small relative to \(k_BT\), and thus integrated out. But of course the resulting formula would be different if these quantum magnitudes were not considered in the first place. Hence, this early predictive, precise success of chemistry does depend on the quantum.
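The kind of limit at issue can be sketched with textbook expressions. The symbols \(q_{\mathrm{vib},i}\), \(q_{\mathrm{rot}}\), and the moment of inertia \(I\) are assumptions introduced here and need not match Eyring’s own notation. For a harmonic mode of frequency \(\omega_i\) (energies measured from the zero-point level) and a linear rigid rotor, one has:

```latex
\[
q_{\mathrm{vib},i} = \frac{1}{1 - e^{-\hbar\omega_i/k_BT}}
\;\approx\; \frac{k_BT}{\hbar\omega_i}
\qquad (\hbar\omega_i \ll k_BT),
\]
\[
q_{\mathrm{rot}} = \sum_{J=0}^{\infty} (2J+1)\, e^{-J(J+1)\hbar^2/(2Ik_BT)}
\;\approx\; \int_0^{\infty} (2J+1)\, e^{-J(J+1)\hbar^2/(2Ik_BT)}\, dJ
= \frac{2Ik_BT}{\hbar^2}.
\]
```

In both cases \(\hbar\) survives the semi-classical approximation, which illustrates why a rate formula built from such factors still carries Planck’s constant.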

More general observations underscore the impression that quantum-free, numerically precise, predictively successful explanations are just not that typical of chemistry. In the 1960s, for instance, Coulson (1960) bemoaned that chemistry had essentially split into two camps: Those who tried to provide fairly accurate quantum calculations, and those who did not care for accuracy and focused on qualitative chemical concepts instead. According to Neese et al. (2019), that situation has changed, and insight and accuracy now often go together. However, wherein does the insight claimed here consist?

[T]oday, accurate wave function based first-principle calculations can be performed on large molecular systems, while tools are available to interpret the results of these calculations in chemical language. (Neese et al. 2019, 2814)

Hence, the fact that quantum calculations are generally needed for highly accurate predictions in chemistry remains. But whether the gain of insight by means of ‘translation into chemical language’ is legitimate stands and falls with the validity of the arguments given in Sect. 6 below.

As a final fly in the ointment, note that various no-go theorems severely limit our ability to keep the successful empirical content of quantum physics intact while significantly changing the theoretical basis. The bulk of these theorems tells us, in other words, that any future theory will look ‘remarkably quantum’ in important respects.Footnote 10 The bottom line is that present strategies for quarantining the quantum are doubtful,Footnote 11 and that no-go theorems tell us that there is no simple flight from it (if any). Hence, the direct or indirect relevance of quantum calculations to successful explanations in the special sciences should strike us as significant for the question of whether anything is truly explained by science.

I have thus only argued for the dependency of one field’s ability to offer true explanations on another field’s in case of a success-wise dependency between the two, and only with reference to phenomenological (not empirical) chemistry and quantum chemistry. But of course, the general style of argumentation can be carried over to similar relations between disciplines, and without invoking QT on either side. Suppose, for instance, that sociological models strongly depended on results from psychology (which is probably not true), but we had serious reasons to doubt our best current psychological theories (which might at least partly be true; cf. the reproducibility-crisis debate). Then of course we should also cast doubt on our sociological explanations, by virtue of the very fact that their success depends on the doubt worthy claims made in psychology.

For the sake of argument, assume, for instance, that sociological models relied heavily on the properties of Freudian super-egos. Given that it is difficult to test such models, we could be simply unclear about their success, and similarly, we could be unclear about super-egos’ ability to promote successful applications of (Freudian) psychology; but the dependence could be evident nevertheless. Then finding some very strong reasons for super-egos’ non-existence would clearly impair any explanatory truths we might purport to have found out about social groups on account of sociological models. The reader may compare this to the case made above about explanations in chemistry and their dependency on quantum calculations.

There is a bit of an asymmetry here, for it may be the case that quantum calculations are not even capable of being true; and furthermore, psychology would have to be fairly successful but likely untrue, for the two cases to be exactly alike. But that is beside the point: All that is needed is that dependency in success is accompanied by a dependency in explanatory truth; and that I take to be reasonably well argued by the exposition of these two rather different examples: One actual, on the field-level, involving QT, and with the success being arguably tremendous; one hypothetical, on the discipline-level, not involving QT, and with the success being at best somewhat shaky.

6 Quantum Physics Does Not Deliver True Explanations

Premise (6) is the heart of the argument and certainly requires the strongest justification. It is also the only premise that is formulated probabilistically, which should be seen as an acknowledgement of its subtle status.

For the sake of justification, I want to distinguish negative, positive, and neutral reasons for embracing (6). Quite in line with the distinction considered in Sect. 1, the negative ones will concern the failure to offer a coherent interpretation of the formalism in terms of some ontology. The positive reasons will be those reasons from within the formalism that suggest that it does not make reference to a mind-independent reality.Footnote 12 Finally, neutral reasons concern the fact that it is not necessary to embrace any particular ontology in order to apply the formalism successfully.

6.1 No Unproblematic Explanatory Interpretation Yet

An important observation w.r.t. the negative reasons is the widely acknowledged underdetermination of realist interpretations of the formalism such as, in particular, Bohmian, Everettian, and objective collapse ones (see Saatsi 2019a). If we took all these to be empirically adequate (and hence: equivalent), we would have no special reason to believe in any of them. Naïvely, this could already justify a low overall credence for true quantum explanations.

However, underdetermination of course does not exclude that one of these realist interpretations be true, even though at present we do not know which (it could be ‘transient’). There could also be strong, extra-empirical reasons for favoring one over the other, as is evidently held true in ‘foundations circles’ (see also Callender 2020). In other words: underdetermination is compatible with the claim that quantum physics does deliver true explanations, we just do not know for sure (yet) whether they must be stated in terms of Bohmian point particles, Everettian branching wave functions, or, say, stochastic matter-flashes out of nowhere.

More interesting is the observation that arguments for an empirical equivalence of these interpretations (or versions, if you will) of the quantum formalism to the way this formalism is normally used by physicists typically take on a very general form: They somehow recover the successful predictions retrieved from Born probabilities by appeal to assumptions apparently compatible with the chosen interpretation.Footnote 13 When one looks into the details, however, a much more nuanced picture emerges.

Wallace (2020, 97; emphasis added), for instance, observes that “none of the various extant suggestions for Bohmian quantum field theories [have delivered,] say, the cross-section for electron-electron scattering, calculated to loop order where renormalisation matters”. Yet our best predictions, including those which allowed the discovery of a Higgs-like particle with properties compatible with particle physics’ ‘Standard Model’, rely crucially on these very calculations.

Similarly, collapse interpretations single out position as the variable ‘collapsed to’; but this is hardly compatible with decoherence in the relativistic regime (Wallace 2012a, 4589). To recall, ‘decoherence’ refers to a set of (generally well-confirmed) theorems from quantum physics with the upshot that quantum interference dynamically vanishes under certain circumstances (Joos et al. 2003). But essentially all predictions relevant for the LHC rely on the fact that quarks inside protons scattering at high energies are sufficiently decohered in the momentum basis (Schwartz 2014, 674).
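What it means for interference to ‘dynamically vanish’ can be illustrated with a toy model. The sketch below assumes a single two-level system subject to pure dephasing with an exponential decay of coherences on a timescale `tau`. Both the model and the function names are hypothetical illustrations, far simpler than the field-theoretic setting relevant to the LHC:

```python
import math

def dephased_state(t, tau):
    """Density matrix of an equal superposition after dephasing for time t.

    Toy model (an assumption): off-diagonal terms decay as exp(-t / tau),
    while the populations on the diagonal stay fixed at 1/2.
    """
    coherence = 0.5 * math.exp(-t / tau)
    return [[0.5, coherence],
            [coherence, 0.5]]

def purity(rho):
    """Tr(rho^2) for a real symmetric 2x2 density matrix."""
    return sum(rho[i][j] * rho[j][i] for i in range(2) for j in range(2))

def prob_plus(rho):
    """Born probability of finding the state |+> = (|0> + |1>) / sqrt(2)."""
    return 0.5 * (rho[0][0] + rho[0][1] + rho[1][0] + rho[1][1])

if __name__ == "__main__":
    # Columns: time, purity, probability of the interference-sensitive outcome.
    for t in (0.0, 1.0, 5.0, 50.0):
        rho = dephased_state(t, tau=1.0)
        print(t, round(purity(rho), 4), round(prob_plus(rho), 4))
```

At early times the interference-sensitive outcome is certain. At late times its probability approaches 1/2 and the state becomes indistinguishable from a classical mixture in the preferred basis, which is the sense of ‘sufficiently decohered’ at issue.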

The Everett interpretation would be free of such problems were it not for the probability problem: that, apparently, an Everettian account cannot (sensibly) recover the Born rule. Among the still-debated approaches are the ones championed by Deutsch (1999) and Wallace (2012b) and that by Zurek (2005) and Carroll and Sebens (2014; 2018). The former has been argued to be threatened by circularity (Baker 2007), and to suffer from an endorsement of untenable decision-theoretic axioms (Dizadji-Bahmani 2013; Maudlin 2014a; Boge 2018). The latter either relies on branch-counting, something incompatible with decoherence and even with the classical probability calculus (Wallace 2012b), or otherwise has to invoke similarly dubious rationality principles (Dawid and Friederich 2019).

Overall, the difficulties associated with realist interpretations are far greater than ‘mere’ underdetermination:Footnote 14 it is not entirely clear that they can fully recover the empirical success of the standard formalism.

Pragmatist (Healey 2017) and subjectivist approaches—most prominently ‘QBism’ (Fuchs et al. 2014)—stick out as alternatives. These do not fall prey to the same problems, as they leave the formalism—including, and especially, the Born rule—largely intact, and refer interpretational subtleties to an informal, philosophical level. However, on this very level, both Healey and ‘arch QBist’ Fuchs declare subtle forms of realism, and this is where the trouble begins.

For QBism, the situation is relatively straightforward: Various commentators associate QBism strongly with phenomenalism (Earman 2019), instrumentalism (Bub 2016), operationalism (Wallace 2019), or even solipsism (Norsen 2016; Boge 2020). The ‘participatory’ realism of Fuchs (2017, 113; original emphasis), according to which “reality is more than any third-person perspective can capture” hardly impairs this point.Footnote 15 For this can only be understood as implying a (rather weak) metaphysical commitment (the world ‘is’)—but certainly nothing in the vein of the semantic and epistemic commitments of standard realisms. And of course, the strong subjective elements in the QBist program especially threaten the possibility of true explanations being delivered by quantum physics (see Timpson 2008).

As for Healey, it is unclear in what sense he can coherently endorse a realist attitude. Based on distinctive elements of Healey’s position, Dorato (2020, 240), for instance, concludes that “despite Healey’s claim to the contrary [...] [his] is a typical instrumentalist position”. Yet Dorato concedes “that Healey is a scientific realist [...] because entities (like quarks or muons) that agents apply quantum theory to exist independently of them.” (2020, 240; original emphasis) Hence, at the very least, Healey subscribes to a form of entity realism, which is in itself a form of selective realism (see Chakravartty 2007, Ch. 2).

However, recall the following feature of Healey’s interpretation: “when a model of quantum theory is applied it is the function of magnitude claims to represent elements of physical reality.” (Healey 2020, 133; emphasis added) Essentially, these are “statements about entities and magnitudes acknowledged by the rest of physics” (Healey 2017, 137), and “applying a quantum model of decoherence is a valuable way to gauge the significance of magnitude claims.” (Healey 2020, 226; emphasis added)

The problem is that it is dubious whether Healey thereby “succeeds in saying what the non-quantum physical magnitudes actually are.” (Wallace 2020, 386) In particular, magnitudes defining quarks or muons, entities Healey wants to be a realist about, are “not remotely classical; nor are they in any way picked out by decoherence” (2020, 386).

It is quite reasonable to maintain that what a quark is is not in any way defined if one does not refer to concepts such as the color SU(3) or, say, the (approximate) scaling invariance of the relevant cross section—elements of quantum chromodynamics. The evidence for entities like quarks is very indirect, and much in need of appeal to highly theoretical notions to even count as evidence for their existence. But there is just no coherent way of being a realist about entities not just postulated but in large part defined by a given theory, without ascribing to that theory a serious amount of content worthy of realist commitment and believing in that content’s approximate truth. Proper ‘quantum entities’, such as quarks and gluons, are just not the kinds of entities one can be a realist about in the same way as this was possible for Thomson’s electron.Footnote 16

With this also stands and falls the possibility of quantum physics delivering true explanations. For according to Healey, “quantum theory by itself explains nothing [...] the use of quantum theory to explain physical phenomena exhibits the physical dependence of those phenomena on conditions described in non-quantum claims.” (Healey 2015, 10, 19)

Now maybe the above reconstruction of Healey’s position is not entirely fair.Footnote 17 In his book The Quantum Revolution in Philosophy, for instance, Healey (2017, 233) writes:

By accepting relativistic quantum field theories of the Standard Model one accepts that neither particles nor fields are ontologically fundamental. One accepts that there are situations where the most basic truths about a physical system are truths about particles, while claims about (“classical”) fields lack significance. One accepts also that there are other situations in which the most basic truths about a physical system are truths about (“classical”) fields, but claims about particles lack significance.

Thus, Healey’s entity realism is in some sense contextual (see also Dorato 2020, 243). But if this is correct, it becomes hard to see how Healey’s position could possibly be compatible with realism: These entities are thus not “beables” but merely “assumables” (cf. Healey 2017, 233); and on the face of it, this seems like a notion “closely related to ‘useful posit’, or even fictional entity, as atoms were for Mach and Poincaré” (Dorato 2020, 243). However, this is not the reading of “assumable” Healey intends: they are rather “[t]hings whose physical existence is presupposed by an application of quantum theory” (Healey 2017, 127); and this makes it sound like they do exist non-contextually—even if applications of QFT cannot be used to devise a ‘gapless’ description of their behavior and properties (see also Healey 2020, 135).

But this now brings us back to step one: Neither quarks, nor gluons, nor Higgs bosons are ‘presupposed’ by Q(F)T. They were predicted by means of a specific application of it, and their defining features reside within that application. How could this be compatible with Healey’s vision of a mere contextual applicability of QFT, in order to sanction the meaningfulness of claims about either fields or particles? Ipso facto, it makes the entities’ existence a contextual matter.

In his more recent paper Pragmatist Quantum Realism, Healey has tried to make the sense of realism he endorses more precise. Furthermore, he there also offers an account of explanation that does not appeal to the entities whose mind-independent existence becomes doubt worthy on his own account of QT. However, both these aspects remain problematic. First off, the application of QT, Healey argues, advises us to endorse, exactly, a gappy story about events in a mind-independent reality. Furthermore, somewhat in contrast to earlier works (e.g. Healey 2012), Healey (2020, 137) now explicitly endorses at least a ‘thin’ reading of the correspondence theory of truth, which is to say that system “s has \(Q \in \Delta\) [...] is true if and only if s has \(Q \in \Delta\), and that will be so just in case ‘s’ refers to s and s satisfies ‘\(Q\in \Delta\)’.”

It seems that Healey is now entirely in realist territory and has left pragmatism fully behind; for pragmatism is counted as anti-realist by many realists exactly because its proponents like Peirce or James defined truth in terms of utility and practical success, i.e., as whatever comes out as accepted on account of such virtues in the ideal limit of inquiry (see Chakravartty 2007, Sect. 4.5, on this issue). That has nothing to do with a correspondence between language and reality. However, the culprit in Healey’s endorsement is the term ‘reference’:

It is plausible that perception sets up causal relations (or at least regular correlations) between cognitive states and corresponding structures in the world, even in animals and other organisms incapable of language use. [...] It is much less plausible that reference works that way in sophisticated uses of language or scientific theories. [...] In applications of quantum models we are dealing with highly sophisticated uses of scientific language and mathematics, typically to physical phenomena that are not accessible to our perceptual faculties. Doubtless ultimately piggybacking on perceptual representation, these uses permit convergent agreement on the truth of claims framed in sophisticated scientific language. In such discourse truth is not to be explained in terms of reference: if anything it is the other way around. (Healey 2017, 256)

Thus, even though the Tarskian biconditional mentioned above holds true, it is not that ‘s has \(Q \in \Delta\)’ is true because ‘s’ refers successfully to s and ‘\(Q\in \Delta\)’ to a property that s actually has; it is because we can agree on ‘s has \(Q \in \Delta\)’ in scientific discourse that these terms can claim successful reference. This is pragmatism alright, but it is clearly not realism in any sense close to (i)–(iii) from Sect. 3. How could this account be compatible with the possibility that

Einstein was right to hold out the hope that [...] the limits [...] acceptance of quantum theory [...] puts on our abilities to speak meaningfully about the physical world [...] may be transcended as quantum theory is succeeded by an even more successful theory that gives us an approximately true, literal story of what the physical world is like (Healey 2020, 144),

in any sense of these words sufficiently close to what Einstein could have possibly intended them to mean?

Now turn to explanation again. By predicting certain expected distributions of events, QT helps us explain “probabilistic phenomena”, where a “probabilistic phenomenon is a probabilistic data model of a statistical regularity” (Healey 2017, 135; original emphasis). Thus, we can explain violations of Bell-type inequalities, “by deriving the relevant probability distributions from the Born rule, legitimately applied to the appropriate polarization-entangled state of photon pairs whose detection manifests the phenomenon.” (2017, 136) This explanation would be of a broadly deductive-nomological type, specifically, something closely related to what Railton (1981) called deductive-nomological-probabilistic explanation; though without any appeal to individual events (see Healey 2020, 136). Furthermore, the ‘law’ in question is basically the Born rule, together with the conditions of its application as well as a preferred basis of states (or projectors) to apply it to, as typically given by a decoherence model, on Healey’s account.

This derivation of the expected regularities violating Bell-type inequalities is certainly a prediction of QT—and an impressive one at that—but can it seriously also count as an explanation? Recall that in Sect. 3, I had defined the ‘\(+\)’ of explanation to reside in the positing of additional structures, variables, entities. However, according to Healey, QT does not posit any such additional elements:

quantum models always have a prescriptive, not a descriptive, function. The function of [a given] model of decoherence is to advise an actual or hypothetical agent on the significance and credibility of magnitude claims, including some that may be reconstrued as rival claims about a determinate outcome of a measurement. (Healey 2017, 99)

Now, how does this make QT anything more than a highly successful prediction device? For the potential ‘laws’ in question thus do not posit any novel structures, entities, variables; they merely concern the ‘rules’ of successful behavior in an otherwise pretty much opaque reality.

In sum, I take it that Healey’s and the QBists’ accounts (arguably) successfully explicate how scientists should apportion their credences in the light of all scientific evidence. Yet it is difficult—if coherently possible at all—to equip either with a realist epistemology. And even if successful, the result would not be a version of scientific realism in any sense close to (i)–(iii).

6.2 QT Does Not Square Well with Explanationist Demands

Now turn to the positive reasons. These are rooted especially in quantum entanglement, and the various no-go theorems based on it. The most prominent of these theorems is, of course, Bell’s (1987a).

Recall the basic setup: In an experiment with two entangled spinful particles, if these are emitted by a common source and measured at a spacelike distance from each other, the outcomes of both measurements turn out to be so strongly correlated that it is natural to assume a common cause for this correlation. Yet the direction along which each spin is measured can be chosen during flight, so that causal influences would have to propagate at superluminal speeds, something excluded by relativity. On top of that, the causal relation thus found would also defy standard principles of probabilistic causation (Wood and Spekkens 2015; Näger 2016).

All this can be codified by an inequality, obtained by conditioning on a tentative deeper description of the situation, \(\lambda\), using classical causal modeling and relativistic constraints on causation. Such ‘Bell-type’ inequalities are violated both by the quantum predictions and in experiment.
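To make the violation concrete, here is a minimal numerical sketch. It assumes the CHSH form of a Bell-type inequality and the spin singlet as the entangled state, neither of which is singled out in the text; the function names and the particular measurement angles are illustrative choices only.

```python
import math

def singlet_correlation(angle_a, angle_b):
    # Quantum expectation value of the product of the two +/-1 outcomes
    # for the spin singlet (assumed state), with coplanar measurement
    # directions given as angles in radians.
    return -math.cos(angle_a - angle_b)

def chsh(correlation, a, a_alt, b, b_alt):
    # CHSH combination of four correlations (assumed form of the inequality).
    return (correlation(a, b) - correlation(a, b_alt)
            + correlation(a_alt, b) + correlation(a_alt, b_alt))

# Bound on |S| for any description lambda that satisfies local causality
# and independence of lambda from the settings.
LOCAL_BOUND = 2.0

# Illustrative settings: 0 and pi/2 in one wing, pi/4 and 3*pi/4 in the other.
S_QUANTUM = chsh(singlet_correlation, 0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)

print(abs(S_QUANTUM), abs(S_QUANTUM) > LOCAL_BOUND)
```

With these settings the quantum value has magnitude \(2\sqrt{2}\), roughly 2.83, which exceeds the bound of 2.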

As Lewis (2019, 35) points out, it is “something like the received view among physicists” that the theorem can be interpreted as telling us that, against the infamous ‘EPR incompleteness argument’ (Einstein et al. 1935), “descriptive completeness in a theory cannot be met at the micro-level.” I.e., denying that there is even a fact of the matter as to what spins the particles had during flight—or any other fact of the matter that could similarly explain the correlated outcomes in individual experimental runs—is considered a standard option among physicists.

This view has come under attack by philosophers and philosophically inclined physicists alike (Norsen 2007; 2016; Maudlin 2014b). In particular, Maudlin (2014b) claims that the criterion for ‘reality’ employed by Einstein et al. (1935, 777) cannot even be coherently denied. Hence, we must assume some form of non-local causation instead. This is a very contentious move, to say the least, and several commentators acknowledge ways in which the criterion could be coherently denied (Lewis 2019; Glick and Boge 2019; Gömöri and Hofer-Szabó 2021).

On the other hand, Norsen (2016, 206; original emphasis) more modestly claims that “the overall argument does not begin with realistic/deterministic hidden variables. Instead it begins with locality alone”. Hence, denying ‘realistic variables’ is just not an option for evading the theorem’s consequences.

One should not conflate issues of realism with issues of determinism here. As has been pointed out over and over in various places (Bell 1987b; Norsen 2009; Maudlin 2010), Bell-type inequalities, which are then violated experimentally, can be derived without any recourse to determinism. The failure to distinguish ‘determinate value’ from ‘deterministic variable’ is, indeed, a mistake on the side of large chunks of the physics community.

However, before even stating his locality condition, Bell (1987a, 15) introduces the aforementioned “more complete specification [...] effected by means of parameters \(\lambda\).” What else is \(\lambda\) than what Einstein et al. (1935) call “an element of physical reality”? [Footnote 18] Without the introduction of the parameters (or variables) \(\lambda\), the premises of Bell’s theorem, such as local causality and the independence of that variable from the measurement settings (‘no conspiracy’), could not even be formulated.

Realism, in the sense of the assumption that it is possible to find some mathematical description of the real situation of particles (or whatever there is) in the relevant sort of entangled states, is, in other words, explicitly addressed by a premise in Einstein et al.’s argument, in the form of the ‘reality criterion’. But it is clearly also a presupposition of Bell’s theorem.

In the parlance of linguists and philosophers of language, this is a presupposition ‘triggered by a factive’ (Geurts 2017): that it is meaningful to assign a probability \(p(\uparrow |\ldots \lambda )\), say, to ‘spin up’ being measured given that, among other things, some event represented by \(\lambda\) taking on a particular value was the case, presupposes that there is a relevant event that can be represented by means of \(\lambda\).

Note that it “is a matter of indifference [...] whether \(\lambda\) denotes a single variable or a set, or even a set of functions, and whether the variables are discrete or continuous.” (Bell 1987a, 15) Pretty much all that is required of \(\lambda\) in proofs of Bell-type inequalities is that it is meaningful to associate a probability distribution with it, and that marginalizing over \(\lambda\) also delivers a distribution. Hence, \(\lambda\) captures a fairly broad range of means for representing ‘elements of reality’.
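How little is required of \(\lambda\) can be illustrated with a toy computation. The sketch below assumes a discrete \(\lambda\) with five values, two settings per wing, and the CHSH combination of correlations; all names and numbers are hypothetical. Outcome probabilities given \(\lambda\) are drawn at random, so the models are in general indeterministic. They factorize across the two wings (local causality), and the distribution over \(\lambda\) does not depend on the settings (‘no conspiracy’).

```python
import random

def random_local_model(n_lambda, rng):
    # Distribution over lambda, independent of the settings ('no conspiracy').
    weights = [rng.random() for _ in range(n_lambda)]
    total = sum(weights)
    p_lambda = [w / total for w in weights]
    # Mean +/-1 outcome in [-1, 1] for each wing, each of its two settings,
    # and each value of lambda. Each wing's mean depends only on its own
    # setting and on lambda (local causality).
    mean_a = [[2 * rng.random() - 1 for _ in range(n_lambda)] for _ in range(2)]
    mean_b = [[2 * rng.random() - 1 for _ in range(n_lambda)] for _ in range(2)]
    return p_lambda, mean_a, mean_b

def correlation(model, i, j):
    # Marginalize over lambda to obtain the observable correlation.
    p_lambda, mean_a, mean_b = model
    return sum(p * mean_a[i][k] * mean_b[j][k] for k, p in enumerate(p_lambda))

def chsh_value(model):
    return (correlation(model, 0, 0) - correlation(model, 0, 1)
            + correlation(model, 1, 0) + correlation(model, 1, 1))

rng = random.Random(0)
LARGEST = max(abs(chsh_value(random_local_model(5, rng))) for _ in range(10_000))
print(LARGEST <= 2.0)
```

No model of this kind exceeds the bound of 2, however the probabilities are chosen. Determinism plays no role in the construction.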

How could this possibility of interpreting ‘realism’, similarly considered by Wüthrich (2014, 605), have possibly escaped the grasp of opponents of anti-realist solutions to the Bell-puzzle like Norsen (2007) or Maudlin (2014b)? This is the closest reading of the word to conditions (i)–(iii) of scientific realism. In particular, if we take the lesson of Bell’s theorem to be that we cannot find a representational means (which could just be some abstract formal structure) for referring to whatever goes on in a Bell-type experiment, then the semantic condition of all standard realisms is violated. Mind-independent reality indeed becomes ‘unspeakable’.

However, presumably, the physics community is to blame in this case as well. Physicists usually define realism as “a philosophical view, according to which external reality is assumed to exist and have definite properties, whether or not they are observed by someone” (Clauser 2017, 480), or “the concept of an objective world that exists independently of subjects (“observers”)” (Epping et al. 2017, 241). This failure to distinguish metaphysics from semantics and epistemology, moving straightforwardly to a position that sounds like Berkeleyan idealism without God, has certainly provoked philosophers’ reluctance to take seriously the notion that Bell-type inequalities have anything to do with realism.

Accordingly, denying realism is better understood here as denying the possibility of a theory which delivers some description \(\lambda\) that makes it possible to explain the outcomes in the arms of a Bell-type experiment. This means removing a presupposition of the theorem, as shown above. Consequently, denying realism does block the derivation of Bell’s theorem—as is true of essentially any other quantum ‘no-go’ theorem—and so this is a possible consequence to draw from violations of Bell-type inequalities.

But, you ask, could not the particles’ joint quantum state, \(\psi\), itself be the cause of the correlated values? Indeed, the way Bell’s theorem is formulated mathematically, the variable \(\lambda\) could just be \(\psi\) (Norsen 2007; Harrigan and Spekkens 2010).

The option to thus retrieve an explanation in which \(\psi\) acts as a causal factor has been explored by Wood and Spekkens (2015) and Näger (2016), the latter offering a positive account. This approach is ingenious, but requires a special sort of ‘fine-tuning’ (Wood and Spekkens 2015): an intransitivity in a chain of causal variables that Näger dubs ‘internally canceling paths’.

The idea is that the exact probabilistic dependencies between values taken on by causal variables are such that, despite the fact that there is a causal chain from some variable X to some variable Z via an intermediate variable Y, there is no statistical dependence of Z on X. In particular, a time-evolved quantum state, itself influenced by the settings chosen during flight, may cause two collapsed, individual states for the two entangled particles, and these states then cause the measured values in an ‘unfaithful’ way (they preserve the ‘no signaling’ property). Näger (2016, 1148) believes this to be “how the quantum mechanical formalism secures the unfaithful [i.e., statistical but not causal] independences”.
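The general phenomenon of an intransitive chain can be shown with a toy example. The numbers below are hypothetical and are not taken from Näger's model; the sketch only assumes a three-valued intermediate variable Y. Y depends on X, and Z depends on Y, yet the conditional probabilities are tuned so that Z carries no statistical trace of X. A slight change to one entry destroys the independence, which is the sense in which the arrangement is fine-tuned.

```python
from fractions import Fraction as F

# P(Y = y | X = x): Y statistically depends on X.
P_Y_GIVEN_X = {
    0: {0: F(1, 2), 1: F(1, 2), 2: F(0)},
    1: {0: F(1, 2), 1: F(0), 2: F(1, 2)},
}

# P(Z = 1 | Y = y): Z statistically depends on Y.
TUNED = {0: F(3, 10), 1: F(7, 10), 2: F(7, 10)}

# The same table with one entry slightly changed.
PERTURBED = {0: F(3, 10), 1: F(7, 10), 2: F(8, 10)}

def p_z1_given_x(x, p_z1_given_y):
    # Chain X -> Y -> Z: sum over the intermediate variable Y.
    return sum(P_Y_GIVEN_X[x][y] * p_z1_given_y[y] for y in (0, 1, 2))

print(p_z1_given_x(0, TUNED), p_z1_given_x(1, TUNED))          # equal
print(p_z1_given_x(0, PERTURBED), p_z1_given_x(1, PERTURBED))  # unequal
```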

Let us not dwell on the fact that Näger here invokes a collapse interpretation: Wood and Spekkens (2015) discuss the sort of fine-tuning present in various versions of a Bohmian account. Moreover, maybe Näger’s story can be adapted to a Bohmian or Everettian vocabulary, wherein wave-packets ‘effectively collapse’ due to decoherence. All that is really required is that there be negligible interference around the time of measurement, while the correlation between the values is fixed by the initial state.

More troubling is the fact that this is a highly conspiratorial story; not in the narrow sense that \(\lambda\) influences the setting choices, but in the more encompassing sense that events in nature conspire in such a way as to fabricate observed correlations while concealing any exploitable causal path between them (similarly Wood and Spekkens 2015, 3).

As mentioned above, there is a non-local causal connection in Näger’s account, as the quantum state is instantaneously influenced by the distant setting choices (and necessarily so; Näger 2018). Given that relativity does not permit a preferred ‘now’, such an instantaneous connection implies the in-principle possibility of signaling into the past and creating causal paradoxes.

However, in Näger’s story, the statistics are cleverly arranged in such a way that the paradox cannot be effected: Despite the fact that the quantum state prepared and the settings chosen by the experimenters act as common cause-variables for the correlated values, this causal influence is not ‘felt’ or ‘seen’ in the final state in such ways that the connection could be used to, say, send signals.
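The statistical side of this point, namely that the correlations depend on the distant setting while nothing usable for signaling does, can be checked directly on the quantum probabilities. The following sketch assumes the spin singlet and coplanar measurement directions; it reproduces only the Born-rule statistics and is not an implementation of Näger's causal model. The function names are illustrative.

```python
import math

def joint_probability(outcome_a, outcome_b, angle_a, angle_b):
    # Born-rule probability of outcomes +/-1 in the two wings for the
    # spin singlet (assumed state), with settings given as angles in radians.
    return (1 - outcome_a * outcome_b * math.cos(angle_a - angle_b)) / 4

def marginal_up_near(angle_a, angle_b):
    # Probability of 'spin up' in the near wing, summed over distant outcomes.
    return sum(joint_probability(+1, b, angle_a, angle_b) for b in (+1, -1))

def correlation(angle_a, angle_b):
    # Expectation value of the product of the two outcomes.
    return sum(a * b * joint_probability(a, b, angle_a, angle_b)
               for a in (+1, -1) for b in (+1, -1))

DISTANT_SETTINGS = [0.0, math.pi / 4, math.pi / 2, math.pi]
MARGINALS = [marginal_up_near(0.0, s) for s in DISTANT_SETTINGS]
CORRELATIONS = [correlation(0.0, s) for s in DISTANT_SETTINGS]

print(MARGINALS)     # the same value for every distant setting
print(CORRELATIONS)  # varies with the distant setting
```

The near-wing marginal stays at one half whatever the distant experimenter chooses, so the dependence shows up only once the records of both wings are compared.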

Consider what this means: This is a causal story in which causation proceeds under the covers in such ways that it cannot be exploited (or even detected in the usual ways) by intervening, conscious agents. Surprisingly, this happens in just that one instance where the threat of paradox is known to be imminent otherwise. Put more sharply: Under the threat of running into inconsistencies, the events in nature arrange themselves in such ways as to evade this threat. This seems like a messed-up story in which logic dictates reality.

Of course, there are other approaches to causation in this context, such as retrocausal (e.g. Evans et al. 2012) or superdeterministic (e.g. ’t Hooft 2014) ones. However, Wood and Spekkens (2015) show that

the fine-tuning criticism applies to all of the various attempts to provide a causal explanation of Bell inequality violations. Accounts in terms of superluminal causes, superdeterminism or acyclic retrocausation are found to fall under a common umbrella. (2015, 3; original emphasis)

Hence, while intuitive as an account of, for instance, delayed-choice experiments, retrocausal explanations have the same nasty flavor as hidden common-cause explanations. Moreover, having a retrocausal connection that cannot be exploited to change the past is in a similar sense obviously conspiratorial.

Two alternatives suggest themselves: Modify the probabilistic causal framework so as to include in the story non-causal (but ‘real’) relations that explain the observed correlations (Gebharter and Retzlaff 2020), or replace the entire classical framework with a ‘properly quantum’ one (Costa and Shrapnel 2016; Shrapnel 2019).

The first kind of proposal, which, in a slightly different version, was first explored by Salmon (1984), is intuitively appealing. For instance, when a stone breaks into two pieces in a way that is assumed to be non-deterministic with respect to the resulting pieces’ masses, the conservation of mass still enforces that both masses add up to the total mass of the original stone. So there is a non-causal dependency between the two pieces which fixes their masses in dependence on one another, and the event which causes the two pieces’ existence (the non-deterministic breaking) does not screen off the resulting mass-values.
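The stone example can be rendered as a small simulation. This is a sketch under simplifying assumptions: the breaking is modeled as a uniformly random split of a unit total mass, and the names are illustrative. Every sampled run already includes the common cause (the breaking event), yet the two masses remain perfectly anti-correlated, because the conservation law ties them together.

```python
import random

def break_stone(total_mass, rng):
    # Indeterministic breaking: the first piece's mass is random,
    # while conservation of mass fixes the second piece's mass.
    first = total_mass * rng.random()
    return first, total_mass - first

def pearson(xs, ys):
    # Plain Pearson correlation coefficient.
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

rng = random.Random(0)
TOTAL_MASS = 1.0
PIECES = [break_stone(TOTAL_MASS, rng) for _ in range(1000)]
FIRST = [p[0] for p in PIECES]
SECOND = [p[1] for p in PIECES]

# Conditional on the breaking having occurred, the masses are still
# perfectly anti-correlated: the cause does not screen them off.
print(pearson(FIRST, SECOND))
```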

Looking into the details of the picture drawn for a Bell-type scenario, however, we retrieve something that makes for a poor explanation: The quantum state, together with the settings and measurement interventions, causes the existence of two measured values. The relevant distribution condition, which, in the case of two entangled, spinful particles, is the conservation of angular momentum, then somehow fixes these values so that they are appropriately correlated. But of course this must occur during flight, and only upon the experimenters’ choices to measure along two respective axes. This now makes the law look like a murky, conspiratorial causal factor of the sort we were eager to leave behind. Oddities of exactly this kind are the very reason why virtually everyone in the debate has focused on the ‘non-local’ aspect of this sort of scenario.

An alternative option is to think of the two entangled particles as just ‘one system’. This would be a solution of the non-separability kind, and the correlated outcomes would essentially be like the two sides of a coin, or, pretty much exactly like the two resulting pieces of the stone.