Abstract

In this paper we propose a neurogeometrical model of the behaviour of cells of the arm area of the primary motor cortex (M1). We will mathematically express as a fiber bundle the hypercolumnar organization of this cortical area, first modelled by Georgopoulos (Georgopoulos et al., 1982; Georgopoulos, 2015). On this structure, we will consider the selective tuning of M1 neurons of kinematic variables of positions and directions of movement. We will then extend this model to encode the notion of fragments introduced by Hatsopoulos et al. (2007) which describes the selectivity of neurons to movement direction varying in time. This leads to consider a higher dimensional geometrical structure where fragments are represented as integral curves. A comparison with the curves obtained through numerical simulations and experimental data will be presented. Moreover, neural activity shows coherent behaviours represented in terms of movement trajectories pointing to a specific pattern of movement decomposition Kadmon Harpaz et al. (2019). Here, we will recover this pattern through a spectral clustering algorithm in the subriemannian structure we introduced, and compare our results with the neurophysiological one of Kadmon Harpaz et al. (2019).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A fundamental problem regarding the study of motor cortex deals with the information conveyed by the discharge pattern of motor cortical cells. This is a quite difficult topic if we compare it to sensory areas, indeed, for any specific sensory stimulus, there are many inputs captured by sensory receptors and one output signal processed in the cortex. Inputs the motor system come from basal ganglia, cerebellum and fronto-parietal cortex and there are as many output signals directed to interneurons and motorneurons of the spinal cord (Wise, 2001; Carpenter & Reddi, 2012; Economo et al., 2018). In the motor system the existence of a notion of receptive profiles, or maybe in this case, of “actuator profiles", is not established nor well understood. Nevertheless, it is recognized that the cortex, including the motor area itself, has a modular structure (see Hubel & Wiesel, 1962; Evarts, 1967; Mountcastle, 1997; Georgopoulos, 2015) and whose constituent modules, linked together simultaneously or in series in time, are considered to be responsible for the broad domain of voluntary movements (Flash & Hochner, 2005; Mussa-Ivaldi & Solla, 2004). With regard to the arm area of primates motor cortex, several studies reveal how neuronal activity involves the processing of the spatial-motor information (see for example Schwartz et al., 1988; Georgopoulos et al., 1988; Kettner et al., 1988; Caminiti et al., 1990). Starting from 1978, a pioneering work has been developed by A. Georgopoulos, whose experiments allow to recognize at least two main features of the arm area functional architecture. First, he discovered that cells of this area are sensible to the position and direction of the hand movement (see Georgopoulos et al., 1984; Kettner et al., 1988; Georgopoulos et al., 1982; Schwartz et al., 1988): cells response is maximal when hand position and direction coincide with a position and direction, characteristic of the cell. Secondly, the columnar structure, which organizes motor cortical cells in columns corresponding to movement directions (see Georgopoulos et al., 2007; Amirikian & Georgopoulos, 2003; Georgopoulos, 2015). After the work of Georgopoulos, other experiments proved that activity of neurons in the primary motor cortex correlates with a broader variety of movement-related variables, including endpoint position, velocity, acceleration (see for example Kettner et al., 1988; Moran & Schwartz, 1999; Schwartz, 2007), as well as joint angles (see Ajemian et al., 2001; Teka et al., 2017), endpoint force (Georgopoulos, 1992), muscle tensions (Evarts, 1967; Todorov, 2000; Holdefer & Miller, 2002). It is also proved that the tuning for movement parameters is not static, but varies with time (Ashe & Georgopoulos, 1994; Moran & Schwartz, 1999; Churchland & Shenoy, 2007; Paninski et al., 2004) and for this reason Hatsopoulos (Hatsopoulos et al., 2007; Reimer & Hatsopoulos, 2009) argues that individual motor cortical cells rather encode “movement fragments", i.e. movement trajectories. This feature can be considered in a more general perspective developed by M.S.A. Graziano who proposed that the motor cortex is organized into action maps (see Graziano et al., 2002; Graziano & Aflalo, 2007; Aflalo & Graziano 2006b). This means that the motor cortex organization reflects the complexity of a movement related to a specific task. His results allowed an extension of previous neural models; in fact, he proved that cellular tuning to a limited set of movement variables could stably account for neural activity with respect to a restricted set of movements (Aflalo & Graziano, 2006a). The reverse is also true, meaning that motor cortex neurons become tuned to movement parameters that are relevant to the task being performed. For example, in the case of center-out movements, directional tuning still plays a central role in the variation of neuronal activity, as it is strongly supported from Georgopoulos results (Georgopoulos et al., 1982, 1986).

The aim of this paper is to propose a mathematical model inspired by the functional architecture of the arm area of motor cortex for movements related to reaching tasks. We will model the selective behaviour of each neuron, which changes in time, through integral curves in a suitable space of kinematic variables. We will start with a static model expressing the A. Georgopoulos’ data of directional selectivity and columnar organization, and gradually we will add new aspects to get to describe the evidence of time dependence provided by Hatsopoulos et al. (2007) and Churchland and Shenoy (2007). As a starting point, we propose a first fiber bundle structure compatible with the hypercolumnar organization of directionally tuned cells. We assume that to every point on the cortical surface coding for hand’s position in the plane \(\left( x,y\right) \in \mathbb {R}^2\) is associated a full fiber of possible movement directions. More specifically, we suggest that a motor neuron can be represented by a point \(\left( x,y,\theta \right) \in \mathbb {R}^2\times S^1\), where \(\left( x,y\right)\) denotes the hand’s position in a two dimensional plane and \(\theta\) denotes a movement direction at position \(\left( x,y\right)\). We then present a comparison with models of the primary visual cortex V1 (Petitot & Tondut, 1999; Bressloff & Cowan, 2003; Citti & Sarti, 2006). Finally we combine the two previous model in a more complex one which takes into account the hand’s position \(\left( x,y\right)\) at time t, hand’s movement direction described by an angle \(\theta \in S^1\) and hand’s speed and acceleration along \(\theta\), denoted by \(\left( v, a\right)\). The resulting space is then \(\mathcal {M}= \mathbb {R}^{3}_{\left( x,y,t\right) } \times S^1_{\theta } \times \mathbb {R}^{2}_{\left( v,a\right) }\). In this model we describe the dependence on time of the preferred direction of cells as a curve of preferred direction, evolving in time. In Section 4, we show that it is possible to choose suitable parameters in such a way to recover the experimental data of Hatsopoulos et al. (2007) and Churchland and Shenoy (2007). A comparison with Cocci (2014) model for movement in the visual areas is presented.

We then validate the model as follows. We define a connectivity kernel

where \(\left( \eta _i,\eta _j\right) \in \mathcal {M}\) and \(d_{\mathcal {M}}\) is the sub-Riemannian metric of the space. Equation (1) is an estimate of the heat kernel, and we propose it as a model of the local connectivity between the cortical tuning points \(\eta _i\) and \(\eta _j\). We use this kernel, expressed in terms of kinematic variables, and a spectral clustering algorithm to detect a set of hand trajectories. These resulting paths are well in accordance with the ones obtained by Hatsopoulos et al. (2007) and by Kadmon Harpaz et al. (2019) with a clustering algorithm applied directly on cortical variables.

The structure of the paper is the following. In Section 2 we provide a short description of the neurophysiological structure of the motor cortex. Section 3 is devoted to the setting of our neurogeometrical model. In Section 4 we discuss the structure of the model by fitting its parameters with the experimental data of Churchland and Shenoy (2007) and we compare the present model with the one of Cocci (2014), describing visual areas devoted to movement coding. In Section 5 we validate the model by comparing the pattern of movement decomposition with the curves found with a grouping algorithm, driven by the proposed distance. Finally, Section 6 provides a conclusion and future development of this work. In Appendices A and B some essential definitions and properties of fiber bundles and sub-Riemannian distances are recalled.

2 Basic neurophysiology of the primate arm area of motor cortex

2.1 Role of the movement direction information

One of the key functions of the motor cortex consists on the control of the direction of movement trajectory (see Georgopoulos et al., 1982; Schwartz et al., 1988). Each cell is sensible to a specific direction of movement in the sense that its discharge rate is highest before and during the execution movements in a specific direction, called preferred direction of the cell (PD). This single cell behaviour is modelled in (Georgopoulos et al., 1982; Schwartz et al., 1988) through a sinusoidal function of the movement direction:

where \(\theta _{\text {PD}}\) represents the preferred direction of the cell, and the coefficient k denotes the increase in discharge over the overall mean b at the preferred direction \(\theta _{\text {PD}}\). Equation (2) is called directional tuning curve. PDs differ from different cells and a large proportion of arm-related cells are active during reaching movements, hence in (Georgopoulos, 1988; Georgopoulos et al., 1988) the authors proposed to estimate the direction of the movement via a weighted vectorial sum of cells PDs

where N is the number of cells in the population and \(w_i\) is a symmetric function with respect to the preferred direction \(\theta _{\text {PD}}^i\) of the i-th cell.

The sum (3) is called the neuronal population vector (Georgopoulos et al., 1986) and represents the direction of hand movement not only during the execution of the movement, but even before its starting. Best predictions for the upcoming direction of movement (see Table 2 of Georgopoulos et al., 1988) were made with weights of the form

Motor cortical cells activity is also related with the position at which the hand is actively maintained in space (see in particular Georgopoulos et al., 1984; Kettner et al., 1988). In Georgopoulos et al. (1984) a positional gradient tuning curve is presented as a function which locally acts as

where the value of \(g\left( x,y\right)\) denotes cell’s discharge rate at position \(\left( x,y\right)\) with respect to an origin located at the central button of the center-out reaching apparatus; the quantity b is the discharge rate in the origin and \(\alpha , \beta\) are expressions of the slopes of cell discharge per unit length along the x and y axes of the plane.

2.1.1 Columnar organization

A crucial problem is the cortical representation of the preferred direction over the cortical surface. Georgopoulos (1984) noted the location of cells with specific preferred movement direction along histologically identified penetrations and observed a change in PD in penetrations at an angle with anatomical cortical columns (see Fig. 1 as an illustrative explanation).

Columnar organization of the motor cortex. a Movement direction selectivity of four neurons recorded along the histologically identified penetration. The similarity of preferred directions for a penetration parallel to the cortical columns is shown. Source: Georgopoulos (2015). b Schematic illustration of the projection of recording sites onto the cortical surface along anatomical columns. Open and slashed circles denote recording and projected sites, respectively. Source: Georgopoulos et al. (2007)

In Amirikian and Georgopoulos (2003) and subsequently in Naselaris et al. (2006) and Georgopoulos et al. (2007), the spatial organization of PDs was examined and a columnar organization was discovered (see Fig. 2). A continuum of 500 \(\mu\)m in depth with cells of similar preferred directions and a repeating columnar pattern of similar PDs with a width of 50 to 100 \(\mu\)m together with a repetition distance of almost 200 \(\mu\)m were measured (see Fig. 2a for a schematic representation). A fundamental aspect of the above organization was that within each hypercolumn (i.e. assemblage of columns) of radius 120 \(\mu\)m, PDs were organized in such a way to represent any given direction of reach (Naselaris et al., 2006; Georgopoulos et al., 2007).

2.2 Complexity of motor coding and temporal behaviour

Subsequent studies revealed that the arm area of the motor cortex is related to a more complex and heterogeneous set of movement variables (see Kalaska, 2009; Scott, 2005 for a general review). A fundamental class is formed by “extrinsic" or “hand-centered" parameters which typically describe cortical activity with respect to hand’s movement. These variables mainly refer to endpoint position, velocity and acceleration of the hand both in two-dimensional and three-dimensional space (see Ashe & Georgopoulos, 1994; Georgopoulos et al., 1984; Kettner et al., 1988; Moran & Schwartz, 1999; Stark et al., 2009), in addition to the movement direction variable. This class of parameters has been broadly used for the characterization of the spatio-temporal form of movement (see for example the work of Flash & Hogan, 1985; Flash et al., 1992; Hogan, 1984). It is important to note that the sensibility of each neuron to these variables can depend on time (Ashe & Georgopoulos, 1994; Moran & Schwartz, 1999; Paninski et al., 2004; Churchland & Shenoy, 2007; Reimer & Hatsopoulos, 2009; Hatsopoulos et al., 2007). In particular, Hatsopoulos et al. (2007) (see also Reimer & Hatsopoulos, 2009) highlighted that tuning to movement parameters varies with time and proposed to describe the activity of neurons through a trajectory encoding model. In his model, the probability of spiking of a neuron is expressed as the exponential of the inner product between the “preferred velocity trajectory" \(\vec{k}\) and the normalized velocity trajectory of the hand \(\textbf{v}\,^{t_0}\) of duration 400 ms, as follows

The vector \(\vec{k}\) is named as “preferred velocity" since it maximizes the spike probability when it is aligned to \(\vec{v}\,^{t_0}\), whereas the parameter \(\gamma\) is an offset parameter of the model. Note how for fixed instant of time \(t_0\) Eq. (6) reduces to Eq. (2) of Georgopolous model. Indeed if \(\vec{k} = \left| k\right| \cos \theta _{\text {PD}}\), \(\vec{v} = \left| v\right| \cos \theta\), then

Consequently the argument of the exponential in Eq. (6) is exactly the function f in (2). The main difference is that now the same expression is considered at different instants of time \(t_0\). In addition, Eq. (6) evaluates the output of a single cell in response to a trajectory fragment as the probability of spiking a neuron. The preferred path of the neuron is then obtained by integrating \(\textbf{k}\) over a time window which precedes and follows the spike time \(t_0\). In the same work, it is also provided an extension to (6) by including the average speed \(\bar{v}^{t_{0}}\), and average position \(\left( \bar{x}^{t_{0}}, \bar{y}^{t_{0}}\right)\) of the hand trajectory:

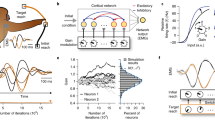

Overall, Hatsopoulos et al. (2007) argue that M1 neurons are selective to a preferred “movement fragment": a short trajectory describing a combination of parameters evolving in time (see also Reimer & Hatsopoulos, 2009; Omrani et al., 2017). Figure 3a (from Hatsopoulos et al., 2007) shows the temporal evolution of preferred directions for two neurons where each direction of movement (being an unit vector in \(\mathbb {R}^2\)) is represented by an angle in polar coordinates. Hence, in Hatsopoulos et al. (2007), the temporal behaviour of directionally tuned cells is represented by a function

At the bottom of Fig. 3a, vectors of preferred directions are added together giving rise to the movement fragment.

Churchland and Shenoy (2007) proposed an analogous model which describes the temporal properties of motor cortical responses. Figure 3b displays the temporal variation of the preferred directions of twelve M1 neurons during an instructed center-out reaching task. In this article, each direction of movement is expressed as a graph over a temporal interval, as follows

Through different representations of movement direction tuning, Hatsopoulos et al. (2007) and Churchland and Shenoy (2007) stressed the importance of time dependence on cell sensitivity. In addition, both groups exploit a principal component analysis on a space of trajectory templates allowing a finite dimensional basis over the heterogeneity of neuronal patterns. In other words, even though neurons in M1 encode elementary movements, thank to the intrinsic connectivity of the cortex, they can generate the rich variety of complex motor behaviours. We present here a model able to describe the temporal dependence of the selective tuning of motor cortical cells with a finite number of kinematic variables. The differential constraints which relate these variables will be fundamental to give the structure of the space.

Temporal evolution of cells PDs through different representations. a Red and blue arrows show the encoded PDs for movements before and after the measured neuron firing rate, respectively. Below, the vectors of preferred directions added together give rise to the preferred trajectories. The black directional paths show the similarity of the encoded trajectories computed during a different task. Image adapted from Hatsopoulos et al. (2007). b In the middle, change in PD expressed as continuous graphs for twelve M1 neurons. Below is shown the mean strength of direction tuning, where time 0 is assumed to be the strongest tuning instant. Above is represented the mean hand velocity profile whose peak is aligned at time \(t=0\). Image adapted from Churchland and Shenoy (2007)

2.3 Neural states and movement output

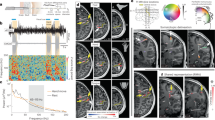

Another evidence of a trajectory encoding model comes from a recent study of the dynamics of neural population provided by the work of Kadmon Harpaz et al. (2019) who studied the cell population in M1 with a 100-electrode array, thus studying the area not at the level of a single neuron, but at the local population level. The authors processed neural activity by identifying sequences of coherent behaviours, called neural states, by means of a Hidden Markov model (HMM). A HMM is used to describe events (called hidden states) which are not directly observable, but are causal factors of other observed events. In their article, the recorded spike trains are interpreted as the observed states of the system, and are used to estimate global changes in cortical activity, considered as the hidden neural states of the model. The neural states (color-coded in Figs. 4 and 5) associated with motion trajectories and identified by the model, coincide with acceleration and deceleration phases with directional selectivity of the entire reaching movement (see Figs. 4 and 5). Transitions between neural states systematically coincide with minima and maxima points of the tangential velocity of the end-effector, decomposing the movement into accelerating and decelerating phases. Nevertheless, the neural states do not show selectivity to movement speed and amplitude (see Fig. 5). A consistent decomposition of the bell-shaped speed profiles at both minima and maxima of the tangential velocity is still present in straight reaching movements (see Fig. 4).

Examples of a center-out task, with position (left) and speed profile (right) colored according to the identified neural states. Black dot represents the starting position. Each color represents a single state (Kadmon Harpaz et al., 2019).

A Position data of a random target pursuit task segmented and colored according to the decoded neural states. Filled circles represent the target locations, first target is colored in red. B Corresponding speed profiles, colored as in A. Note the invariance of neural states to movement speed. Image taken from Kadmon Harpaz et al. (2019)

We stress that the observed segmentations in M1 were not directly predicted by previously proposed models of kinematic parameters, such as for example the one of Georgopoulos (see Eq. (2)). The data analysis was performed at the neural level, and the authors posed the problem to recover the same decomposition by using only kinematic variables.

In this paper, we will provide a kinematic features space endowed with a metric which is in agreement with both neural models incorporating kinematic variables and both the above movement decomposition.

3 A mathematical model

The goal is to develop a mathematical model inspired by the functional architecture of the arm area of the primary motor cortex, specifically taking into account the organization of motor cortical cells and temporal behaviour. We start by showing a fiber bundle structure emerging from Georgopoulos neural models in terms of hand’s position and movement direction in the plane (see Section 2.1). Then we extend the model in order to include kinematic variables to which motor cortical cells are selective. In Subsection 3.2, we integrate in a unified framework the preceding model by considering a 6D space which codes time, position, direction of movement, speed and acceleration of the hand in the plane.

3.1 Fiber bundle of positions and movement directions (static model)

As briefly exposed in Section 2.1, Georgopoulos neurophysiological studies (Georgopoulos et al., 1982, 1984) experimentally verify that the basic functional properties of cellular activity in the arm area of M1 involve directional and positional tuning. We therefore consider that a motor cortical neuron can be represented by a point \(\left( x,y,\theta \right) \in \mathbb {R}^2\times S^1\), where \(\left( x,y\right)\) denotes cell’s coding for hand’s position in a two dimensional space and \(\theta\) represents cell’s preferred direction at position \(\left( x,y\right)\). Hence, in this first model, we propose to describe directionally tuned cells organization as a fiber bundle \(\left( E, M, F, \pi \right)\) (see Definition 1), where

-

Due to the columnar representation, we identify the fiber F with the set \(S^{1}\) of the preferred directions of the cells in the plane. Moreover, as it is represented in Fig. 1, cells with similar preferred directions are organized in columns perpendicular to the cortical surface. Directional columns are in turn grouped into hypercolumns (see Fig. 2), each of them coding for the full range of reaching directions.

-

Here we choose as a basis of the fiber bundle the cortical tuning of the position of the plane. Hence \(M\subset \mathbb {R}^{2}\). There is wide neural literature supporting that M1 neurons encode hand positions (see e.g. Georgopoulos et al., 1984; Kettner et al., 1988; Schwartz, 2007, as well as Sections 2.1 and 2.2), but the way how these positions are mapped on the cortical plane is not well understood. Possibly the position of the hand will be indirectly coded through the command to the specific group of muscles which will implement the movement (Schwartz et al., 1988; Georgopoulos, 1988). In particular, according to Graziano and Aflalo (2007) (see also Graziano et al., 2002; Aflalo & Graziano, 2006b; Graziano, 2008) the topographic organization in motor cortex emerge from a competition among three mappings: somatotopic map of the body; a map of hand location in space; a map of movements organization. Since these maps preserve a principle of local similarity, and we are considering here very simple hand movements, a fiber bundle structure in the position-directions is not inconsistent with these data. On the other side, from a functional point of view, it is clear that at every point of the 2D space the hand can move in any direction, and this aspect is captured by a fiber bundle of direction on a 2D spatial bundle.

-

E is the total tuning space to which motor cortical cells are selective and it is locally described by the product \(\mathbb {R}^{2}\times S^1\);

-

\(\pi : E\rightarrow M\) is a projection on the \(\left( x,y\right)\) variables which acts as \(\pi \left( x,y,\theta \right) = \left( x,y\right)\).

A section \(\sigma : M\rightarrow E\) represents the selection of a point on a fiber of possible movement directions at position \(\left( x,y\right) \in M\), namely, it associates the point \(\left( x,y\right)\) to a point \(\left( x,y,\theta \right) = \sigma \left( x,y\right)\). A fiber \(E_{\left( x,y\right) }= \pi ^{-1}{\left( x,y\right) }\simeq S^{1}\) corresponds to an entire hypercolumn. A schematic representation of the fiber bundle structure is shown in the right side of Fig. 6.

We recall that formula (2), which is equivalent of (6) for every fixed instant of time, selects the maximum of the scalar product in the direction of the trajectory of movement. This is equivalent to say that the spike probability is maximized if the scalar product in the direction orthogonal to that of motion vanishes. For our model it will be essential to consider this and to do so we will make use of the following definition. We call 1-form a function \(\omega = a_1 dx + a_2 dy\) which acts on a vector v as a scalar product:

In our case, to be compatible with Eq. (6), we will choose \(a= v^\perp\), where \(v= \left( \cos \theta , \sin \theta \right)\) denotes cell’s preferred movement direction. As a result \(a=(-\sin (\theta ), \cos (\theta ))\) and

(Left) In the up, motor cortical map of preferred directions referred to movements on a two-dimensional space (adapted from Naselaris et al., 2006). Colors denote preferred directions within the interval \(\left[ 0,2\pi \right]\). A conventional zoom and a superimposition of the lattice model (see Fig. 2) have been made in order to visualize the directional map referred to the size of the hypercolumns. (Right) Arm area of M1 modelled as a set of hypercolumns. Here, the angle \(\theta\) lies in the interval \(\left[ 0, 2\pi \right]\) and it is represented as an arrow

3.1.1 Neuronal population vector and distance

As we clarified, we are interested in the set of vectors on which the 1-form (11) vanishes. This set identifies a two-dimensional subset of the tangent space at every point, called horizontal distribution (see Definition 2). It can be represented as

where the generators are

In terms of vector fields, they will be denoted respectively

According to Chow’s Theorem (see Montgomery (2006) and Agrachev et al. (2019) for a detailed analysis), vector fields (14) induce on the space \(\mathbb {R}^2\times S^1\) a distance d in terms of Definition 9.

We saw in Section 2.1 (see also Georgopoulos et al., 1986; Georgopoulos, 1988; Georgopoulos et al., 1988) that one estimate concerning the output of a population of M1 cells is given by the neuronal population vector (3). Its formula describes an expectation value weighted by the w-functions with respect to all possible cells preferred directions \(\theta '\in S^{1}\). Basically each cell assigns a contribution to the output given by its own preferred direction modulated by the distance between the actual direction of movement and cell’s preferred direction itself. As reported in Naselaris et al. (2006), within each hypercolumn the neuronal population vector ensures a good estimate of a reaching direction. These results suggest that within each hypercolumn of M1 there is a local and isotropic activity pattern characterized by the weight functions. We observe that the weight (4) can locally be approximated through Taylor expansion by

Since for small values of \(\theta\) the distance in the circumference is \(\left| \theta - \theta '\right|\), this suggests approximating the discrete formula (3) with the continuous correspective in which the weight (4) is replaced with the exponential

This formula can also be exploited in our case. Indeed, if we denote by d the distance induced by vector fields \(X_1\) and \(X_2\) (see Definition 9), we can provide an estimate of the collective behaviour of cells tuning with respect to a selective point \(\left( x,y,\theta \right)\) within a hypercolumn of positions and directions of movement:

where \(D\subset \mathbb {R}^2\) is a subset of a cortical module and the function \(\left( x',y', \theta '\right) \mapsto g_{x,y,\theta }\left( x',y', \theta '\right)\) represents the single cell’s spike probability density in response to \(\left( x,y,\theta \right)\):

The above equation is the analogue of Hatsopoulos model (7) only in relation to the variables of position and direction of movement. The new weighting function

measures the closeness between the cellular selectivity of the points \(\left( x,y,\theta \right)\) and \(\left( x',y',\theta '\right)\). Therefore, formula (18) is intended to express a core of connectivity (local, since it is evaluated in a small cortical module) that is functional. We will see that the analogous for this area of formula (18) is provided by Bressloff and Cowan (2003) and Sarti and Citti (2015) models in the visual cortex.

3.1.2 Comparison of the static model with primary visual cortex V1

An analogy on the selectivity behaviour of external features with neurons in the primary visual cortex area (V1) is evident. (Hubel & Wiesel, 1977; Hubel, 1995) discovered that to every retinal position is associated an hypercolumn of cells sensible to all possible orientations. A simplified representation is shown in the right side of Fig. 7. Since the early ‘70s, a large number of differential models were developed for visual cortex areas, starting with Hoffman (1970), Petitot and Tondut (1999), Bressloff and Cowan (2003), Citti and Sarti (2006), just to name a few of the main ones. Their models describe the functional architecture of V1 trough geometric frameworks such as contact bundles, jet bundles or Lie groups endowed with a sub-Riemannian metric.

(Left) Layout of orientation preferences in the visual cortex. At singular points (pinwheels), all orientations meet. Source: Bosking et al. (1997). (Right) V1 modelled as a set of hypercolumns

In particular, the model expressed through the one-form (11) matches the one proposed by Citti-Sarti in 2006 (Citti & Sarti, 2006) for the description of image edge selectivity by V1 cells. Both for V1 and the arm area of M1, the total space of the fiber bundle is three-dimensional, whereas the cortical layers are of dimension two, so that a dimensional constraint has to be taken into account. In Fig. 7, the orientation preferences of simple cells in V1 are color coded and every hypercolumn is represented by a pinwheel (see the work of Petitot (2003) neurogeometry}). For the motor cortex, a “directional map” is suggested from Fig. 2, for which PDs are repeatedly arranged on the motor cortical layer in such a way that, within a given locale (hypercolumn), the full range of movement directions are represented. Moreover, as distance increases away from the center of the hypercolumn (black filled circle), up to the radius of the hypercolumn (120 \(\mu\)m), PDs diverge from that at the center of the circle (see Figs. 7 and 6 for a direct comparison). We add that cells preferred directions in M1 are correlated across very small distances along the tangential dimension (see Amirikian & Georgopoulos, 2003) and this type of arrangement is consistent with the smooth variation of orientation preference observed in V1 (see Hubel & Wiesel, 1963). Moreover, the radii of the hypercolumns of arm area M1 and V1 are of the same order size and are respectively of 240 and 200\(\mu\)m.

One of the greatest difficulties and differences in modelling the functional architecture of M1 is the absence of an analogue of the simple cells receptive profiles, which we might call “actuator profiles". Simple cells of visual areas are indeed identified by their receptive field (RF) which is the domain, subset of the retinal plane, to which each cell is sensible in response to a visual stimulus. Activation of a cell’s RF evokes the impulse response, which is called the receptive profile (RP) of the cell. A widely used model (Jones, 1987; Daugman, 1985; Lee, 1996) for the RP representation of a simple cell located at the retinal position q and selective to the feature p, is in terms of Gabor filters \(\psi _{\left( q,p\right) }: \mathbb {R}^2\rightarrow \mathbb {C}\),

Although it is not well understood the presence or the definition of such functions for M1, we argue that the action of primary motor cortical cells occurs in a comparable way as in V1. This hypothesis is primarily supported by the tuning functions (2) and (5) expressed by Georgopoulos (see 2.1 and Georgopoulos et al. 1982; Schwartz et al. 1988) and through the trajectory encoding model (7) provided by Hatsopoulos (see 2.2 and Hatsopoulos et al., 2007). Their models share the selective tuning of neurons by evaluating the alignment between an external input variable and the individual cell’s preferred feature via a scalar product. Analogously for V1, the linear term in (19) evaluates the aligning between cell’s selective feature p with respect to the input x. Bressloff and Cowan (2003) (see also Bressloff et al., 2002; Wilson & Cowan, 1973) proposed to represent the stationary state of a population of simple cells through equation

where \(\omega _{\text {LOC}}\) and \(\omega _{\text {LAT}}\) correspond to the strength of connections from the iso-orientation patch within and between the hypercolumns of V1, respectively. The function \(a\left( \phi ,r\right)\) is the activity in an iso-orientation patch at the point r with orientation preference \(\phi\), whereas \(\sigma \left( a\right)\) is a smooth sigmoidal function of the activity a and \(\alpha\), \(\mu\) are time and coupling constants. The weight \(\omega _{\text {LOC}}\) represents the isotropic pattern of activity within any one hypercolumn, and in Bressloff and Cowan (2003), in the simplified case where the spatial frequencies are not taken into account, it is modelled by

Equation (21) is the analogous of the weighting function (4) assumed by Georgopoulos. In the work of Sarti and Citti (2015) both contributions of \(\omega _{LOC}\) and \(\omega _{LAT}\) are modelled by means of a single connectivity kernel given by

where \(d_c\) is a Carnot Carathéodory distance associated to the cortical feature space identified with the special Euclidean group \(SE\left( 2\right) \simeq \mathbb {R}^2\times S^1\). We will see in a higher dimensional model that a connectivity kernel of this type can be used to interpret the segmentation of neural activity in neural states (Section 5).

3.2 A 2D kinematic tuning model of movement directions

Now we aim at realizing a unified neurogeometrical framework that generalizes the preceding model of movement fiber bundle structures.

We will describe a sub-Riemannian model such that motor cortical cells selective behaviour can be represented through integral curves of the cortical features space of time, position, direction of movement, speed and acceleration. The resulting model will present time dependent variables, which seems particularly natural for a model of movement.

3.2.1 Integral curves and time dependent PD

We represent motor cortical cell tuning variables by the triple \(\left( t, x, y\right) \in \mathbb {R}^3\), which accounts for a specific hand’s position in time. We also consider the variable \(\theta \in S^1\) which encodes hand’s movement direction, and the variables v and a which represent hand’s speed and acceleration along \(\theta\). The triple \(\left( t, x, y\right) \in \mathbb {R}^3\) is assumed to belong to the base space of the new fiber bundle structure, whereas the variables \(\left( \theta , v, a\right) \in S^1 \times \mathbb {R}^{2}\) form the selected features on the fiber over the point \(\left( t, x, y\right)\). We therefore consider the 6D features set

where this time the couple \(\left( x,y\right) \in \mathbb {R}^2\) represents the cortical tuning for hand’s position in a two dimensional space.

We refer to Eq. (7) to recall that the spike probability of a neuron is maximized in the direction of the movement fragment. Therefore, as in Section 3.1, the choice of the variables with their differential constraints induce the vanishing of the following 1-forms

The one-form \(\omega _{1}\) encodes the direction of velocity over time: the unitary vector \(\left( \cos \theta , \sin \theta \right)\) is the vector in the direction of velocity, and its product with \(\left( \dot{x}, \dot{y}\right)\) yields the speed. As we already noted, conditions above are equivalent to find vector fields orthogonal to \(\omega _i\). Consequently, the associated horizontal distribution \(D^{\mathcal {M}}\) turns out to be spanned by the vector fields

Note that, if we prescribe time, movement direction and acceleration, we can deduce by integration first speed and then location. This is the reason why we prescribe only these 3 vector fields: \(X_1\) prescribes the change in time, \(X_2\) in the direction of movement and \(X_3\) in the acceleration. In addition, not all curves are physically meaningful in this space: it is not possible for a curve to change its velocity \(v=v(t)\) while the position (x, y) remains constant. Hence we have to restrict the set of admissible curves and define horizontal ones. Horizontal curves of the space are integral curves of the vector fields \(X_1, X_2\) and \(X_3\) and are of the form

where the coefficients \(\alpha _i\) are not necessarily constants.

We recalled in Section 2.2 that M1 cells are not selective to a single movement direction, but the preferred movement direction varies in time (Churchland & Shenoy, 2007; Hatsopoulos et al., 2007). In particular, in Fig. 3b we reproduced data from Churchland and Shenoy (2007), where the PD of a single M1 neuron was represented as a curve dependent on time.

We propose the curves expressed in (26) as a model of the integrated selective behaviour of M1 neurons. Note in particular that the t component of the horizontal curve \(\gamma\) satisfies \(t' = \alpha _1\). This means that the coefficient \(\alpha _1\) is a modulation of the time, and can account for the difference between the external time and the perceived one. By simplicity, we will assume that the two times coincide, therefore we will assume \(\alpha _1=1\) from now on. In the sequel we will see that it is possible to choose coefficients in Eq. (26) which allow to recover the full fan of curves reproduced in Fig. 3. The expression of \(\dot{\theta }\) described in (40) is the coefficient of the vector field \(X_2\) and the expression of \(\dot{a}\) described in (41) is the coefficient of the vector field \(X_3\):

The functions \(t\mapsto \dot{\theta }\left( t\right)\) and \(t\mapsto \dot{a}\left( t\right)\) represent, respectively, the rate of change of the selective tuning to movement direction and acceleration variables.

3.2.2 Time-dependent neuronal population vector

By analyzing the following commutation relations

we observe that \(\left( X_i\right) _{i=1}^{6}\) are linearly independent. Therefore, all \(\left( X_i\right) _{i=1}^{3}\) belonging to \(D^{\mathcal {M}}\) together with their commutators span the whole tangent space at every point, meaning that Hörmander condition is fulfilled (see Appendix A for a brief review). Thanks to Hörmander condition, it is possible to define a metric \(d_{\mathcal {M}}\) in the cortical feature space \(\mathcal {M}\). This allow to formally consider the analogous of the population vector (16) defined in Section 3.1.1 (see as well Definition 9). Indeed, we will call as time dependent neural population vector an estimate of the collective behaviour of cells tuning around a cortical module centered at point \(\eta _0\in \mathcal {M}\). We define it by means of the following

where \(E\subset \mathcal {M}\) is a neighbourhood of \(\eta _0\) and the weighting function

encodes an estimate of the local connectivity between the cortical tuning points \(\eta _0\) and \(\eta\). The function \(\eta \mapsto h_{\eta _0}\left( \eta \right)\) corresponds to the contribution provided by the variable \(\eta\) in the population coding. As in Hatsopoulos model (7), it is the spike probability of a neuron in response to the input variable \(\eta _0\):

The definition of (30) embodies the same meaning as the weighting function (18) showed in the “static" model and in the models for visual areas (see Eqs. (21) and (22) in Sect. 3.1.2 defined in Bressloff & Cowan, 2003; Sarti & Citti, 2015). It represents the local interactions between cells within a cortical module by means of a distance of the cortical feature space.

Due to the works of Nagel et al. (1985) and Montgomery (2006), it is possible to provide a local approximation of distance \(d_{\mathcal {M}}\) in terms of a homogeneous distance (see Definition 10), as follows

where \(\left( \eta _0,\eta \right) = \left( \left( t_0,x_0,y_0,\theta _0, v_0, a_0, \right) ,\left( t, x,y,\theta , v, a, \right) \right) \in \mathcal {M}^2\), \(c_i\) are non negative constant coefficients, the number 6 is the dimension of the space \(\mathcal {M}\) and the increments \(e_i\) are given by

and \(e_4, e_6\) are solutions of system

which in the linear case, for constant values of \(\theta\), can be solved as

We report a proof of distance (32) and of individual increments \(e_i\) in Appendix B (see in particular Remark 2).

Finally we are led to the estimate of the increment \(e_3\). We first note that

In addition, the neural states do not show a consistent selectivity to the acceleration amplitude, but only at its sign. For this reason we choose the increment \(e_3\) as follows

4 Parameters fitting and numerical results

4.1 Time dependent direction selectivity as local integral curves

In this section, we discuss the structure of our model, identifying the coefficients of the integral curves proposed in (27) as suitable polynomials. This allows to describe the proposed set of curves as a space of finite dimension. Precisely it has the same dimension as the space of curves identified by Churchland and Shenoy (2007), and generate a fan, which is qualitatively comparable with the experimentally discovered. After that, we perform a quantitative fitting between the modelled and measured curves, representing time dependent PDs. The main analysis here is not to show that polynomial models can fit the average curves shown in Churchland and Shenoy (2007). Rather, the issue is to identify a set of parameters of the same dimension as the one found with a neural analysis, which accurately captures the same patterns of acceleration and movement direction in the data.

4.1.1 The dimension of the space of parameters

Our model is expressed as a family of curves defined in (27). They only depend on the initial data, the interval where they are defined and the derivatives of the direction and acceleration.

In (Hatsopoulos et al., 2007; Churchland & Shenoy, 2007), given a temporal interval \(\Delta T\), m observations have been made at instants of time \(t_1, \cdots , t_m\) and the measured preferred directions can be denoted by

We can represent (37) through a continuous graph by representing the variable \(\theta\) as a function of time (as it is shown in Churchland & Shenoy, 2007, see Fig. 3b):

This is equivalent of assuming that at every instant of time the preferred movement directions are respectively described by the unitary vectors

as it is visualized in Fig. 3a, from Hatsopoulos et al. (2007).

As for the temporal behaviour of the selective tuning of a single cell’s PD, we observe that it can be linear, as it is shown for example by the black curve of Fig. 3b. We also see the presence of even curves, symmetric with respect to the interval where they are defined (as for example the dark green one). These will be described as polynomials of order two (or four). In Fig. 3b we also see the presence of odd curves changing concavity, which will be represented as polynomials of order 3 or 5. In this way, polynomials of degree, up to order five, provide good models of the PD curves experimentally measured.

As a consequence, \(\dot{\theta }\left( t\right)\) has to be a polynomial of degree up to 4 and there exist parameters \(k_i\) such that

and that all curves in the variable \(\theta\) satisfying (27) will be characterized by these five parameters. The other coefficient which defines the model is the derivative of the acceleration. In the top of Fig. 3b the mean of the measured hand’s speed profile is shown. It presents the typical bell-shaped trend already observed by (Morasso, 1981; Abend et al., 1982) and (Flash & Hogan, 1985). Hence, we posit to characterize the function \(t\mapsto v(t)\) by an even polynomial of fourth order. As a consequence, we will characterize a as a polynomial of degree 3 and its speed \(\dot{a}\) as a polynomial of degree 2. It will be identified by 3 parameters \(j_i\) which identify the map \(t\mapsto \dot{a}\left( t\right)\) in this way

In this model, only curves with polynomial coefficients are considered, so that each of these trajectories can also be identified as a point in a higher dimensional space. Since the initial instant of time \(t_0=0\) and the initial position \((x_0, y_0)\) are fixed in all the experiment, the initial variable \(\eta _0\in \mathcal {M}\) has only 3 free parameters. Then the problem depends on the time interval T, the 5 coefficients which define the trajectory of the preferred direction \(\theta\), and the 3 coefficients for \(\dot{a}\). In this way they form a space of dimension twelve. In particular, the dimension of the structure is of the same order as the one outlined by the principal components analysis performed in Churchland and Shenoy (2007) and in Hatsopoulos et al. (2007).

4.1.2 A polynomial fitting

Let us remark that this is not the only possible choice of parameters which allows to build a space of curves of dimension 12. We will here verify that the parameters we have chosen provide a good approximation for recovering both the profile of acceleration and of preferred direction depicted in Fig. 3b. Precisely, we will determine the optimal polynomial approximation of the coefficient of the curve proposed by our model through the least squares method (Björck, 1990).

Each time dependent PDs of neurons has been visualized in Churchland and Shenoy (2007) Fig. 13 (here reproduced in Fig. 3b) as a curve surrounded by two curves of the same color. These lateral curves plot \(95\%\) confidence interval and lines are suppressed when that interval was grater than 90 degrees. Moreover, gray vertical lines mark an interval within which data are most reliable, defined between 70 ms before and after the time of strongest tuning. For this reason, we will focus on approximating the time-dependent PDs in this range.

We propose in (40) to estimate the expression of \(\theta\) by polynomials up to order five. Table 1 summarizes the results obtained by applying the least-squares algorithm through polynomials of order two, three, four and five. In each case we compute the coefficient of determination (R\(^2\)) and the normalized root mean squared error (Nrmse) which provide an estimate of the standard deviation of the random component in the data. These coefficients are displayed for each single curves and on average.

With polynomials of order two we get an average R\(^2\) of 0.9515 and a good approximation over 0.99 only for the curves (a) Black, (d) Olive green and (k) Yellow. We can also observe from Fig. 8 that these curves are convex (up or down).

Approximation of third orders are shown in the second column of Table 1. The fitting of all curves is also visualized in Fig. 8. The colored-trace curves are the curves extracted from the experimental data of Fig. 3b (Fig. 13, from Churchland & Shenoy, 2007), whereas the cyan ones denote the polynomial approximation. In Table 1, the coefficient of determination (R\(^2\)) on average is 0.9831, and always greater than 0.99, except for the (e) Rose and the (i) Dark green curves. In particular, the dark green curve presents a very high error (Nrme \(= 0.1272\)): R\(^2\) is 0.8630 and, as can be seen from the representation in Fig. 8, the fitting is not accurate. Nonetheless, the approximated curve belongs to the confidence strip outlined by the thinner dark green curves (see also Fig. 9, left).

Better approximation is obtained for polynomials of order four, whose coefficient R\(^2\) indeed reaches 0.9936 (see Fig. 9, right as an example). Indeed, with polynomials of order four we obtain an optimal approximation with R\(^2\) always over 0.99. Clearly, the approximation is even better for approximation of order five.

Comparison of third (left) and fourth-order polynomial fitting (right) for time-dependent PD, colored in dark green. Fourth-order polynomial fit for the velocity profile extracted from the top panel of the Fig. 3b

Fourth-order polynomial fitting for the velocity profile extracted from the top panel of Fig. 3b. R\(^2= 0.9968\), while nrmse\(= 0.01786\)

Finally, we show in Fig. 10 the approximation obtained with a fourth-order polynomial for the velocity profile represented in the top panel of Fig. 3b. The factor R\(^2\) in this case is 0.9968 and normalized root mean-squared error 0.01786. Further, we note that Eq. (41) allows to recover the bell shape velocity profile proposed by Flash and Hogan model (1985), whose expression is

This polynomial fits the experimental velocity for \(v_0= 140\) (cm/s) and \(T= 0.07\)s. This can be considered an ideal perfectly symmetric approximation of the velocity. We find a similar polynomial in Fig. 10 with a small coefficient in the first order term, which takes into account the lack of symmetry in the experimental data.

4.1.3 A qualitative comparison

To recover the fan in Churchland and Shenoy (2007) (see Fig. 3b), we note that the curves have been re-ordered in such a way to have the same direction and velocity at the point \(t=0\). The whole set of curves, with the prescribed initial condition \(\eta _0\in \mathcal {M}\) at time \(t=0\), represents more precisely the cortical cells selectivity with respect to position, direction of movement, speed and acceleration:

Figures 11 and 12 shows a family of integral curves where the functions \(t\mapsto p\left( t\right)\) and \(t\mapsto q\left( t\right)\) are polynomials up to fourth and second order (see Eqs. (40) and (41)). All curves are characterized by a speed component having a bell-shaped profile, according to Eq. (42), whose maximum value occurs at time \(t=0\). This time is conceived to be the instant of a cell’s strongest tuning with respect to the speed and movement direction variables, and, in correspondence of it, all curves meet at point \(\left( x,y,\theta \right) = \left( 0,0,0\right)\) (see red and black dots of the plots in Fig. 12).

Model example of M1 cells temporal selective tuning as a family of 30 integral curves solutions of system (43). Movement direction components solutions over the temporal window \(\left[ -0.07, 0.07\right]\)s. Different curves correspond to different choices of second and third order polynomials in Eq. (43)

Other model examples of M1 cells tuning patterns. Fan of curves projections over the variables \(\left( x,y,\theta \right)\), \(\left( x,y,v\right)\) and \(\left( t,y,\theta \right)\). Different curves correspond to different choices of third (left column) and second order (right column) polynomials in Eq. (43)

Kinematic bundle model for time-dependent directionally tuned M1 cells. We only represent the \(\left( x,y,\theta \right)\) components of system (43) applied for movement direction tuning which linearly changes over time. Left, fixed a PD at the central point, a solutions pattern which spreads over a temporal window is represented. Right, over each cell’s selective tuning point \(\left( x,y\right)\) of the base bundle at time \(t=0\), there is a fiber of different patterns having strongest tuning for a preferred movement direction which spans the interval \(\left[ 0,2\pi \right]\)

We underline that in the “static" simplified model presented in Section 3.1, a point of the space is assumed to be a neuron characterized by its positions and directions of movement Fig. 6. In this context, a point of the previous structure corresponds to an “instantaneous" cell selective movement parameter. Here, in the temporal bundle, the selective behaviour of a single neuron is represented by a whole trajectory expressed as a solution of (43). We show a simplified representation of the new fiber bundle in Fig. 13, where we have depicted only the \(\left( x,y,\theta \right)\) components to facilitate a comparison with Fig. 6 and to directly observe the extension of the temporal model with respect to the static one. The central arrow in the left graph of Fig. 13 has the same meaning as those depicted in the previous “static” fiber bundle, but now, as seen in the right part of the image, the fiber has a higher dimension representing the spread of time-selective behaviour.

4.2 Comparison with the time dependent receptive profiles in V1

We will now compare the model of movement in the arm area of cortex M1 with models of movement coded in the visual cortex V1. We stress that the analysis on the comparison between visual and motor cortical cells is not based on their functionality. Visual cells are indeed characterized by their receptive profiles which detect features of the visual stimulus; on the other hand, cells in M1 are characterized by “actuator profiles" and whose properties have been synthesized in Sections 2.1 and 2.2. The analogy is based on the coding of their related features, and on the structure of the functional geometry of the two areas.

We briefly recalled in the Introduction and in Section 3.1.2 that simple cells in V1 detect positions, local orientations, however complex cells also encode parameters of movement via their receptive profiles (DeAngelis et al., 1993, 1995). For a given fixed position and orientation, cells receptive profiles sensible to movement are represented as a family of RPs varying in time (see Jones, 1987; DeAngelis et al., 1995):

This complex receptive profile can be modelled as a curve in the space of 2D profiles. The graph of a continuous curve of receptive profiles

has been represented by Cocci (2014) as a 3D volume defining a higher-dimensional profile selective of time frequency and velocity parameters (see Figs. 14 and 15).

The variation of the RP from one frame to the next encodes the velocity of movement. Hence, we argue that the model of M1 () is analogous, but more general to the model of movement in V1, since we coded the variation of a cell’s preferred movement direction not only via the first derivative, but via higher order derivatives.

Accordingly, the movement-receptive profile family was represented in Cocci’s model as a fiber bundle, with a base formed by position and time selective behaviour \(\left( q_1,q_2, t\right)\) and with engrafted variables \(\left( \theta , v\right)\), accounting for the orientation and velocity tuning over the point \(\left( q_1,q_2, t\right)\). For \(\theta\) fixed, there is therefore a one-parameter family of RPs depending on the velocity variable (as depicted in Fig. 16 left). This is the analogous of the fan of curves with varying PDs we represented in Fig. 13 left, for a given fixed central PD. Then, in the model of movement for V1, the entire fan is obtained by varying orientation and position variables (see Fig. 16 right), from which a total space of dimension five arises.

(Left) Representation of a cell’s RP over time. (Right) Schematization of the spatio-temporal fiber bundle of V1. For each spatio-temporal point \(\left( x,y,t\right)\) there is a two dimensional fiber of possible local detected orientations \(\theta\) and local velocity v. See Fig. 13 for a direct comparison with M1. Images from Cocci (2014)

5 Spatio-temporal grouping model for M1

In this section, we will proceed with the sub-Riemannian model for M1 cells by focusing on the coding of directional trajectories. As we recalled in section 2.3, neural activity shows coherent behaviours represented in terms of movement trajectories pointing to a specific pattern of movement decomposition (for further details see Kadmon Harpaz et al., 2019 and Figs. 4 and 5 as references). The authors of Kadmon Harpaz et al. (2019) underlined that they tried to obtain the neural decomposition in fragments by using various distances proposed in literature (see the list below). Precisely, they tested six models that included tuning for: movement direction; movement direction gain modulated by speed; direction of the acceleration vector; direction of the acceleration vector gain modulated by the magnitude of the acceleration vector; both movement direction and direction of the acceleration vector; or both movement direction and direction of the acceleration vector, each gain modulated by the magnitude of the corresponding vector:

-

1.

\(fr_i\;\left( t- \tau \right) = B_{0_{i}}+ B_{1_{i}}\cos \;\left( \theta \left( t\right) - \theta _{\text {PD}_{i}}\right)\);

-

2.

\(fr_i\;\left( t- \tau \right) = B_{0_{i}}+ B_{1_{i}}\Vert {\mathop v \limits^{\rightarrow}}\left( t\right) \Vert \cos \;\left( \theta \left( t\right) - \theta _{\text {PD}_{i}}\right)\);

-

3.

\(fr_i\;\left( t- \tau \right) = B_{0_{i}}+ B_{1_{i}}\cos\; \left( \theta _a\left( t\right) - \theta _{a_{\text {PD}_{i}}}\right)\);

-

4.

\(fr_i\;\left( t- \tau \right) = B_{0_{i}}+ B_{1_{i}}\Vert {\mathop a \limits^{\rightarrow}}\left( t\right) \Vert \cos \;\left( \theta _a\left( t\right) - \theta _{a_{\text {PD}_{i}}}\right)\);

-

5.

\(fr_i\;\left( t- \tau \right) = B_{0_{i}}+ B_{1_{i}}\cos\; \left( \theta \left( t\right) - \theta _{\text {PD}_{i}}\right) + B_{2_{i}}\cos \;\left( \theta _a\left( t\right) - \theta _{a_{\text {PD}_{i}}}\right)\);

-

6.

\(fr_i\;\left( t- \tau \right) = B_{0_{i}}+ B_{1_{i}}\Vert {\mathop v \limits^{\rightarrow}}\left( t\right) \Vert \cos\; \left( \theta \left( t\right) - \theta _{\text {PD}_{i}}\right) + B_{2_{i}}\Vert {\mathop a \limits^{\rightarrow}}\left( t\right) \Vert \cos \;\left( \theta _a\left( t\right) - \theta _{a_{\text {PD}_{i}}}\right) .\)

In the numbered list above (taken from Kadmon Harpaz et al., 2019), \(fr_i\) denotes the instantaneous firing rate of neuron i, \(\tau\) the time lag between neural activity and kinematic output (taken to be 100ms), \(B_{0_{i}}\) the baseline firing rate, \(B_{1_{i}}\) and \(B_{1_{i}}\) modulation depths, whereas \(\Vert \textbf{v}\left( t\right) \Vert\) and \(\Vert \textbf{a}\left( t\right) \Vert\) represent the magnitude of the velocity and acceleration vectors, respectively, \(\theta\) and \(\theta _a\) are the directions of the velocity and acceleration vectors, and \(\theta _{\text {PD}}\) and \(\theta _{a_{\text {PD}}}\) are the preferred velocity and acceleration angles of neuron i. However, none of these distances were successful in yielding the desired neural decomposition. This failure can have two explanations: either the considered kinematic parameters are not sufficient to recover the decomposition, and more parameters are coded in the brain, or a more complex distance is needed.

Here, we will show that with our distance \(d_{\mathcal {M}}\) defined in Section 3.2.2 (see Eq. (32)) which takes into account the differential relations between the variables exactly provides the same decomposition. The algorithm we will apply is a variant of k-means which considers the presence of this distance: first we perform a change of variables induced by distance \(d_{\mathcal {M}}\) and then we apply the k-means in the new variables. This provides an answer to the problem we posed above, and clarifies that the set of kinematic variables considered up to now is sufficient to recover the cortical decomposition, and it is most probably the set of parameters responsible for the considered task in this area.

Specifically, we will test the pattern of movement decomposition through a local estimate of distance \(d_{\mathcal {M}}\) (32) and of the associated kernel

where the cortical feature space \(\mathcal {M}= \mathbb {R}^{3}_{\left( t,x,y\right) } \times S^1_{\theta } \times \mathbb {R}^{2}_{\left( v,a\right) }\) is expressed in terms of kinematic variables. We also show that the same decomposition can not be recovered with a simpler algorithm based on the Euclidean distance, or a weighted Euclidean distance.

5.1 Spectral analysis

The most classical model describing the cortical activity is the mean field equation of Ermentrout and Cowan (1980) and of Bressloff and Cowan (2002, 2003). This equation describes the evolution of the cortical activity depending on a connectivity kernel. Sarti and Citti (2015) proved a relation between the stable states of Bressloff and Cowan equation and perceptual units of the visual input in V1. We also refer to the works of Faugeras et al. (2009), Cocci et al. (2015), Favali et al. (2017). In perfect analogy, we assume that the neural states corresponding to movement trajectories can be interpreted as a form of clustering. A vast literature is available on the theoretical and practical aspects of several clustering algorithms (see e.g. Von Luxburg, 2007 as a summary of the most known techniques). A classical method is the k-means algorithm, which clusters the data according to the Euclidean distance. As a result, clouds of points are correctly grouped. However, when dealing with aligned data, accurate clustering can not be achieved without first performing a change of variable which strongly correlates aligned points. This step is called spectral cluster algorithm, since the change of variable is induced by eigenvectors of a distance function.

To this end we define an affinity matrix A, whose elements \(\left( a_{ij}\right)\) have values proportional to the similarity of point \(\eta _i\) to point \(\eta _j\), for which A can be defined by

where d is a suitable distance over the considered space. Principally, there exist two classes of spectral clustering techniques (Lafon & Lee, 2006): methods for locality-preserving embeddings of large data sets, that project the data points onto the eigenspaces of the affinity matrices (Coifman & Lafon, 2006; Belkin & Niyogi, 2003; Roweis & Saul, 2000), and methods for data segregation and partitioning, that basically perform an additional clustering step taking as input the projected data set (Perona & Freeman, 1998; Weiss, 1999; Shi & Malik, 2000; Meilă & Shi, 2001; Ng et al., 2001). It has been originally shown by Perona and Freeman (1998) that the first eigenvector of A can represent a background/foreground contour separation. To reduce error due to noise, the affinity matrix can be suitably normalized. Many normalizations have been proposed (e.g. Butler et al., 2006; Ng et al., 2001; Shi & Malik, 2000): one of the most widely applied is the one presented by Meilă and Shi (2001) since it reveals properties of the underlying weighted graph by ways of the Markov chain, providing a probabilistic foundation of the clustering algorithm. Indeed, the authors defined a Markov-type matrix P as follows

In general the matrix P will not be symmetric, but its eigenvalues are real, positive and smaller than one, while the eigenvectors have real components (Lafon & Lee, 2006; Coifman & Lafon, 2006). The clustering properties of the eigenvectors of P can be clearly understood in the ideal case, when P is a block diagonal matrix. In this case a natural grouping is obtained via the projection on the eigenvectors. If the affinity matrix A is already a block matrix, the multiplication by D can be avoided.

In the general case, if the matrix P is not diagonal, a k-means algorithm in the coordinates induced by eigenvectors will provide the classification for our problem.

-

1.

Calculate the normalized affinity matrix \(P = D^{-1}A\) (skip this step if A is a block diagonal matrix).

-

2.

Call \(\lbrace \left( \lambda _i, u_i\right) \rbrace _{i=1}^k\) the first k eigenvalues and the corresponding eigenvectors of the matrix P.

-

3.

If P is a block matrix, projection on the eigenvectors forms the clusters for the decomposition. If P is not diagonal, perform a k-means algorithm in the coordinates induced by the eigenvectors

5.2 The spectral clustering method in the feature space \(\mathcal {M}\)

In this section we will apply the connectivity kernel (46) for the definition of an affinity matrix. We will show that the salient groups obtained due to spectral clustering are in agreement with the neural results present in Kadmon Harpaz et al. (2019).

More precisely, given a set of reaching paths, we discretize (46) by means of the real symmetric affinity matrix \(A_{ij}\):

that contains the connectivity information between all the kinematic variables. We then apply to (49) the algorithm provided in section 5.1. The eigenvectors associated with the largest eigenvalues of the affinity matrix will be short movement trajectories compatible with the movement fragments of (Hatsopoulos et al., 2007; Reimer & Hatsopoulos, 2009) and with the trajectories found in Kadmon Harpaz et al. (2019) for the pattern of movement decomposition (see Figs. 4 and 5 as references).

5.2.1 Results

We will provide two test cases in which the connectivity kernel (46) is applied to a set of movement trajectories.

Test 1: Simulation of a center-out task

As a first example, we will analyze a trajectory of movement performing a center-out task, as it is represented in Fig. 4 (from Kadmon Harpaz et al., 2019). Below we show an approximation of the image.

Reaching path and speed profile of a center-out task: approximation of Fig. 4. (Left) Reaching path over the \(\left( x,y\right)\) plane. (Right) Speed profile over the \(\left( t,v\right)\) plane. The red dot represents the movement starting position

The motion trajectory, as in the paper Kadmon Harpaz et al. (2019), is characterized by two graphs, one on the \(\left( x,y\right)\) plane, the reaching path, and one on the \(\left( t,v\right)\) plane corresponding to the velocity profile. The red dot in Fig. 17 identifies the starting point of the movement.

Spectral clustering. a The Affinity matrix (49). Yellow-colored areas represent points of higher affinity. b Eigenvectors projections over the reaching trajectory. c Results of movement decomposition. Eigenvectors identify acceleration and deceleration trajectories according with the experimental results of Kadmon Harpaz et al. (2019). See Fig. 4 for a direct comparison

In this very simple case, movement direction is almost constant with only one target point to be reached and just one maximum point of the speed profile.

As we clarified (32), distance \(d_{\mathcal {M}}\) depends on suitable coefficients \(c_1, \cdots , c_6\). In particular, we normalized the temporal component \(e_1\) with respect to the time window for which a neuron is found to be selective, which averages out to be equal to 0.4 sec Hatsopoulos et al. (2007). This choice is also consistent with the typical duration of increasing and decreasing velocity profiles present in Kadmon Harpaz et al. (2019) (see, for example, Fig. 5). Moreover, in all the tests we made, we obtained the best results with a weight \(c_1= 10\) and all the other constants identically 1, so as to give more importance to the temporal aspect of the trajectory.

The resulting affinity matrix is clearly divided into blocks (see Fig. 18). These blocks are the eigenvectors associated to the two major eigenvalues of the affinity matrix and represent the clusters of the pattern of movement decomposition. By projecting the eigenvectors over the reaching trajectory, it turns out that these correspond precisely to the acceleration and deceleration phases of the movement task. Acceleration-phase is orange-colored and deceleration-phase is blue-colored as the neural states represented in Fig. 4.

In this simple case, the same results could have been obtained using a weighted Euclidean k-means clustering. Precisely if \(\left( \left( t_0, x_0,y_0,\theta _0, v_0, a_0, \right) ,\left( t_1,x_1,y_1,\theta _1, v_1, a_1\right) \right)\), and \(c_1, \cdots , c_6\) are positive weights, a general distance can be defined as

If all coefficients are identically 1, we recover the standard Euclidean distance, but a better clustering result is obtained with a \(c_1= 10\), while all the other coefficients are chosen to be 1. Note that the coefficient \(c_1=10\) is exactly the same used in the sub-Riemannian case.

Below we show a representation of three kernels: the Euclidean one, the Euclidean one with the considered weight, and the sub-Riemannian one. The representation shown in Fig. 19 is the application of each of the three kernels to a bell-shaped velocity profile in the (t, v) plane (the corresponding path in the (x, y) plane is a segment).

As can be observed from Fig. 19, the subriemannian kernel is tangent to the curve at the violet-colored point \((t_0, v_0)\), since the coefficient \(e_5\) defined in (33), which expresses the increment in the velocity variable v, also depends on the acceleration a, which clearly is the derivative of v. On the contrary, the Euclidean one, is always aligned with the axes, and does not use any information regarding the derivative of the curve. For this reason only the sub-Riemannian one leads to a principle of good continuation in velocity.

The advantage of the subriemannian kernel is that it can capture more complex relationships between points, as it takes into account the intrinsic geometry of the data space. Significant differences between the kernels can be observed when applied to movements that are not smooth, with sudden changes in velocity and curvature, as we will see in the next test on a more complicated path.

Test 2: Simulation of a random target pursuit task

In this experiment, we consider the random target pursuit task depicted in Fig. 20 (adapted from Kadmon Harpaz et al., 2019), and compare the grouping performed with a standard Euclidean \(k-\)means algorithm with the spectral clustering with the sub-Riemannian distance \(d_{\mathcal {M}}\) introduced in the previous section. We consider a longer trajectory in order to have many different slopes of the trajectory in different points, and compare the algorithms in different situations.

Reaching path and speed profile of a random target pursuit task: approximation of Fig. 5. The red dot represents the movement starting position, while the blue one represents the first target to be reached

We first apply k-means clustering to the trajectory data with a generalized Euclidean distance, defined in (50) with the same coefficient \(c_1=10\).

The result is displayed in Fig. 21. However, not even with this coefficient, the segmentation is totally coherent with the expected results. Some sub-trajectories have abrupt changes in direction and speed, while others appear to be overly fragmented (Clusters 3, 4, 6, 10, 13, 14, 16). In addition, clusters 1, 2 and 9 fail to capture the phases of acceleration and deceleration despite the changes in acceleration and direction of movement. This is because Euclidean k-means relates each point with its neighbors in the direction of the axes, without considering the slope of the trajectory. This becomes clear while comparing Figs. 19 and 22. The Euclidean kernel is the same in the two cases, and clusters correctly when the change of slope is slow (Fig. 19), while covers points in ascending and descending phase in presence of an abrupt change of the speed.

Kernel representations on a non-smooth segment on the (t, v) plane of Fig. 20

Then we apply the sub-Riemannian clustering algorithm, always with the coefficient \(c_1= 10\). As we see in in Fig. 23, this algorithm allows us to correctly identify movement fragments as the acceleration and deceleration phases of the trajectory. The main reason is that sub-Riemannian distance correctly codes the differential constraints between the variables, strongly relating time, speed and acceleration in such a way that we can not change the velocity of a point, without changing its position. In Fig. 22, we zoomed on a segment of the trajectory in the (t, v) plane identified by Euclidean cluster 9. For this segment of the trajectory, we applied the three kernels to a point on the curve to highlight the role of the tangency of the sub-Riemannian kernel. Due to the sudden change of speed, the Euclidean kernels tend to group points belonging to different acceleration/deceleration phases, while the sub-Riemannian one tends to aligne with the shape of the curve, and performs the desired grouping.

Hence, we claim that the distance \(d_{\mathcal {M}}\) (32) is adequate, not only because of the properties of the kinematic space, but also because of the classification given by the clustering algorithm. We emphasize how instead the distances tested in the paper (Kadmon Harpaz et al., 2019) (see the list recalled in Section 5.1) or a Euclidean \(k-\)means algorithm did not justify the classification results into movement trajectories. We therefore introduced a distance that allows to perform a kernel component analysis which is the phenomenological counterpart of the neural PCAs provided by Kadmon Harpaz et al. (2019).

6 Conclusions

Our model proposes a geometric setting to explain the neural behaviour of the motor arm area M1. By getting inspiration from Georgopoulos neural models (Georgopoulos et al., 1982; Georgopoulos, 2015), we provided a fiber bundle structure which is able to describe the hypercolumnar organization of the cortical area. On this structure, we considered the selective tuning of M1 neurons of kinematic variables by especially focusing on their temporal behaviour (Hatsopoulos et al., 2007; Churchland & Shenoy, 2007). We then extended the previous structure by considering that the cortex can code the time dependent direction of movement expressed as movement fragments as attested by experimental data of Hatsopoulos et al. (2007) and Churchland and Shenoy (2007). This led to consider a higher dimensional fiber bundle which codes movement fragments in the fiber: these were described as integral curves of the geometric structure with subriemannian metrics. Finally, in this space we defined a distance which models the weighting functions measured in the cortex and allows to recover an estimate of the neuronal population vector. The problem of identifying cortical activity patterns and their associated phenomenological primitives has been extensively studied in the visual cortex, specifically to identify perceptual units. Here we have applied the approach of Sarti and Citti (2015) who related the emergent states of cortical activity studied in Koffka (2013) with visual perceptual units obtained with grouping algorithms. Similarly, we linked the neural states found in Kadmon Harpaz et al. (2019) to elementary trajectories obtained through a clustering algorithm based on a Sub-Riemannian distance. We decompose movement trajectories into curves of acceleration or deceleration with a specific plane direction. These trajectories are well in accordance with the motor patterns measured in Kadmon Harpaz et al. (2019) and Hatsopoulos et al. (2007). We emphasize that by working only on kinematic variables we recovered the same neural classification acquired by electrode array. This also proves that the kinematic parameters we identified are sufficient to completely explain the process of movement decomposition into trajectory fragments observed in Kadmon Harpaz et al. (2019). In the future, we will expand the model to a space of movement trajectories, where admissible distances and variations can be defined. This could be of neural support, as it could better explain the data presented in Hatsopoulos et al. (2007), Churchland and Shenoy (2007), and Kadmon Harpaz et al. (2019), which strongly support the role of movement trajectories in motor encoding.

Data availibility

Graphical representations of the model are available upon request.

References

Abend, W., Bizzi, E., & Morasso, P. (1982). Human arm trajectory formation. Brain: a Journal of Neurology, 105(Pt 2), 331–348.

Aflalo, T. N., & Graziano, M. S. A. (2006a). Partial tuning of motor cortex neurons to final posture in a free-moving paradigm. Proceedings of the National Academy of Sciences, 103(8), 2909–2914.

Aflalo, T. N., & Graziano, M. S. A. (2006b). Possible origins of the complex topographic organization of motor cortex: Reduction of a multidimensional space onto a two-dimensional array. Journal of Neuroscience, 26(23), 6288–6297.

Agrachev, A., Barilari, D., & Boscain, U. (2019). A comprehensive introduction to sub-Riemannian geometry (Vol. 181). Cambridge University Press.

Ajemian, R., Bullock, D., & Grossberg, S. (2001). A model of movement coordinates in the motor cortex: posture-dependent changes in the gain and direction of single cell tuning curves. Cerebral Cortex, 11(12), 1124–1135.

Amirikian, B., & Georgopoulos, A. P. (2003). Modular organization of directionally tuned cells in the motor cortex: is there a short-range order? Proceedings of the National Academy of Sciences, 100(21), 12474–12479.

Ashe, J., & Georgopoulos, A. P. (1994). Movement parameters and neural activity in motor cortex and area 5. Cerebral Cortex, 4(6), 590–600.

Belkin, M., & Niyogi, P. (2003). Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation, 15(6), 1373–1396.

Björck, Å. (1990). Least squares methods. Handbook of Numerical Analysis, 1, 465–652.

Bosking, W. H., Zhang, Y., Schofield, B., & Fitzpatrick, D. (1997). Orientation selectivity and the arrangement of horizontal connections in tree shrew striate cortex. Journal of Neuroscience, 17(6), 2112–2127.

Bressloff, P. C., & Cowan, J. D. (2002). The visual cortex as a crystal. Physica D: Nonlinear Phenomena, 173(3-4), 226–258.