Abstract

We present a formal framework for process composition based on actions that are specified by their input and output resources. The correctness of these compositions is verified by translating them into deductions in intuitionistic linear logic. As part of the verification we derive simple conditions on the compositions which ensure well-formedness of the corresponding deduction when satisfied. We mechanise the whole framework, including a deep embedding of ILL, in the proof assistant Isabelle/HOL. Beyond the increased confidence in our proofs, this allows us to automatically generate executable code for our verified definitions. We demonstrate our approach by formalising part of the simulation game Factorio and modelling a manufacturing process in it. Our framework guarantees that this model is free of bottlenecks.


1 Introduction

We present a formal framework for process composition based on actions that are specified by their input and output resources. Our composition actions take a declarative approach. We take inspiration from the proofs-as-processes paradigm [1], which relates process correctness to linear logic [14], and prove that process compositions deemed valid in our framework yield well-formed linear deductions.

We develop our framework in the proof assistant Isabelle/HOL [35]. This ensures that its logical underpinnings and the proofs of its properties are fully rigorous. As part of this we mechanise a deep embedding of intuitionistic linear logic (ILL) and then define how resources translate to its propositions and process compositions translate into its deductions. Figure 1 provides a visualisation of this connection. This mechanisation enables automated generation of verified executable code for our definitions, meaning we can use the verified concepts outside of the proof assistant.

Resources in our framework specify inputs and outputs of individual actions and whole processes. They can represent physical as well as digital objects, meaning they must be manipulated in a linear manner: preventing their free duplication or discarding. This linearity is ensured by our process compositions and we demonstrate this by producing well-formed linear logic deductions (see Sect. 5). Our resources form an algebraic structure [9] (see Sect. 3), allowing for combinations that can consistently represent, for instance, multiple simultaneous objects and non-deterministic outcomes. The atoms of this algebra are not constrained in any way beyond having a notion of equality, which makes them (and the resources induced by them) able to carry extra information such as location or internal state depending on the needs of the domain being modelled.

Processes are collections of actions that transform resources, such as manufacturing processes where physical objects are transformed with the use of tools and machines. We focus our view on the actions’ inputs and outputs, as described by resources, with compositions describing how resources move between individual actions to form a larger process (see Sect. 4). This view is reminiscent of algorithmic planning [40], but instead of preconditions and postconditions we focus on the objects and data that actions pass to each other. We formulate a simple condition (see Sect. 4.4) on how these compositions of processes are formed that ensures they handle the resources in the correct way, grounded in linear logic (see Sect. 5.6). Moreover, in the presence of complex information in resource atoms, this condition can have implications such as ensuring the process is free of bottlenecks or that it obeys a given graph of locations.

As a case study, we also formally model manufacturing in the logistics simulation game Factorio (see Sect. 7) and generate code for a tool that can be used by players to implement processes as factories in the game.

This view is focused on processes as collections of actions connected through their inputs and outputs, rather than focusing on the agents executing those actions. This can be contrasted with many process calculi, whose presentations often focus on agents performing sequences of actions while communicating with each other or sometimes cooperating on the actions. Our approach is more specific, making correctness conditions simpler to check. Once validated, our process compositions could be cast into other formalisms (such as the \(\pi \)-calculus [27] or Petri nets [29]) with their properties carrying over.

The main contributions of our work are:

- A formal language for describing the structure of processes composed from actions.
- Translations of all valid processes into well-formed deductions of linear logic.
- A model of manufacturing in the logistics simulation game Factorio as a case study using code generated from our mechanisation.

Running Example.

To illustrate some of our concepts we will be using a simple vending machine as a running example. This machine can accept cash, dispense one kind of drink at a fixed cost and return change.

A more involved case study is given in Sect. 7, where we model manufacturing while including notions such as rate of flow and locations. While we do not discuss these in the present paper, we have also used our framework to mechanise models of, for instance, manufacturing in a metalworking shop, website login form, cooking breakfast and chemical synthesis.

Outline.

This paper is structured as follows. In Sect. 2 we note the main threads of background work: ILL, the proofs-as-processes paradigm and Isabelle/HOL. Then in Sects. 3 and 4 we discuss resources and process compositions respectively, in each case introducing the formal notion and then discussing how it was mechanised in Isabelle/HOL. In Sect. 5 we describe our shallow and deep embeddings of ILL and the translation of valid process compositions into well-formed deductions, verifying that those compositions manipulate resources correctly. Then in Sect. 6 we discuss the generation of verified code from our mechanisation. In Sect. 7 we discuss an example formalisation in a particular domain, the logistics simulation game Factorio. There we illustrate how our resources can express notions such as rates of flow and locations, and the kind of conclusions we can draw for process compositions. In Sect. 8 we draw connections from our framework to prior work. Concluding remarks and a note on future work are given in Sect. 9.

2 Background

2.1 Intuitionistic Linear Logic

Linear logic is a formal system introduced by Girard [14] that disallows unconstrained weakening and contraction. Weakening states that whenever we can derive the proposition B from some group of propositions \(\Gamma \), we can also derive B from \(\Gamma \) with the addition of any proposition A. Contraction, for its part, states that whenever we can derive the proposition B from some group of propositions \(\Gamma \) with the addition of two instances of some proposition A, only a single instance of A in addition to \(\Gamma \) would have been sufficient to derive B.
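Written as inference rules, the two structural principles described above take the following shape. This is a sketch in standard sequent notation using the same symbols as the prose (\(\Gamma \), A and B); it shows the unrestricted forms that linear logic disallows, not rules of ILL itself.

\[
\frac{\Gamma \vdash B}{\Gamma, A \vdash B}\ \text{(weakening)}
\qquad
\frac{\Gamma, A, A \vdash B}{\Gamma, A \vdash B}\ \text{(contraction)}
\]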

As a result this logic accounts for the number of propositions and not just their presence, and deductions within it can be thought of as essentially consuming and producing propositions—this makes it well suited for representing resources and processes.

We use intuitionistic linear logic (ILL). Given a set of propositional variables A, the propositions of ILL are generated as follows:

where \(\otimes \) is read times, \(\oplus \) is read plus, & is read with, \(\multimap \) is linear implication and ! is exponential.

Sequents are of the form \(\Gamma \vdash C\) where \(\Gamma \) is a list of propositions, the antecedents, and C is a single proposition, the consequent. Valid sequents are generated by the sequent calculus rules shown in Fig. 2, which we take from Bierman’s work [4]. \(!\Gamma \) denotes the result of exponentiating (i.e. applying ! to) each proposition in the list \(\Gamma \).

ILL only allows sequents with exactly one consequent proposition. This constraint does not limit us because we represent the input and output of a process with one resource each. Its two-sided sequent calculus allows us to naturally represent the input on the left-hand side and the output on the right-hand side, with the sequent corresponding to processing the input into the output. We make this connection formal in Sect. 5.6.

In the rest of this section we introduce the operators of ILL in more detail, with reference to the rules in Fig. 2. We pay particular attention to the ! operator.

The operators \(\otimes \) and & are the two linear forms of conjunction, with units \({\textbf{1}}\) and \(\top \) respectively. Following Girard’s terminology, \(\otimes \) is called multiplicative while & is called additive. This is because, in the premises of their rules \(\otimes _R\) and \(\& _R\), \(\otimes \) requires distinct formula lists \(\Gamma \) and \(\Delta \) as antecedents while & requires the same list \(\Gamma \). Intuitively, \(\otimes \) represents simultaneous availability of two formulas while & represents the availability of a choice of one of them. Thus \(\otimes _R\) combines into the antecedents those of both premises, while \(\& _R\) only propagates one list of antecedents into its conclusion. In our work we only make use of \(\otimes \), which forms the counterpart to parallel resources.

The operator \({\oplus }\) is the only linear form of disjunction in ILL and has \(\bot \) as its unit. Note that in the premises of its rule \(\oplus _L\) it requires the same formula list \(\Gamma \) in the antecedents, making it additive. Intuitively, \(\oplus \) represents the non-deterministic availability of one of the two formulas. Thus, just as with \(\& _R\), the rule \(\oplus _L\) only propagates one list of antecedents into its conclusion. In our work this operator forms the counterpart to non-deterministic resources.

The operator \(\multimap \) is the linear form of implication. Note that in the premises of its rule \(\multimap _L\), it requires distinct formula lists \(\Gamma \) and \(\Delta \) in the antecedents, making it multiplicative. Intuitively, \(\multimap \) represents the availability of a transformation from its left-hand formula to its right-hand formula. This can be seen in how it is derived using \(\multimap _R\). In our work this operator forms the counterpart to executable resources.

Finally, the ! operator controls the use of weakening and contraction. This is a distinguishing feature of linear logic, limiting the use of weakening and contraction but not outright rejecting them. Notably, reasoning in linear logic with exponentiated formulas recovers ordinary intuitionistic logic. In our work this operator forms the counterpart to copyable resources, restricting copying (contraction) and erasing (weakening) to them.

There are four rules in ILL concerning the ! operator. The first two, Weakening and Contraction, reintroduce these two concepts into the logic but restrict them to exponentiated formulas. Thus, once we have an exponentiated formula we can get any number of copies of it or fully discard it. The third rule, Dereliction, says that if something can be derived from a list of formulas then it can be derived with any of them exponentiated. Intuitively this is because having one copy is a special case of being able to get any number of copies. The fourth rule, Promotion, says that if something can be derived from a list of only exponentiated formulas then the result can be exponentiated. Intuitively this is because to get any number of the consequent we need only copy all of the antecedents that many times.
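The four rules just described can be sketched in standard sequent notation as follows. This is the usual textbook presentation; the exact form in Fig. 2 may differ in detail, for instance in where the exponentiated formula sits within the antecedent list.

\[
\frac{\Gamma \vdash B}{\Gamma, !A \vdash B}\ \text{Weakening}
\qquad
\frac{\Gamma, !A, !A \vdash B}{\Gamma, !A \vdash B}\ \text{Contraction}
\]

\[
\frac{\Gamma, A \vdash B}{\Gamma, !A \vdash B}\ \text{Dereliction}
\qquad
\frac{!\Gamma \vdash B}{!\Gamma \vdash {!B}}\ \text{Promotion}
\]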

2.2 Proofs-as-Processes

The proofs-as-processes paradigm was introduced by Abramsky [1] and examined in more depth by Bellin and Scott [3]. It concerns the connection of linear logic to processes akin to the famous propositions-as-types [45] paradigm. In particular, Bellin and Scott examine how a deduction in classical linear logic can be used to synthesise a \(\pi \)-calculus [27] agent. That agent’s behaviour mirrors the manipulation of propositional variables described by the deduction, with a correspondence between execution of the agent and cut elimination of the logic.

As such, we take adherence to the rules of linear logic as a proper formalisation of process correctness. However, instead of synthesising processes from deductions, we compose processes independently in a way that all valid compositions reflect well-formed deductions in ILL. We verify this in Sect. 5 by mechanising deductions of ILL in Isabelle/HOL and then defining exactly how compositions map to them. Moreover, we do not formalise a notion of execution for process compositions and thus, unlike Bellin and Scott, we do not relate execution to cut elimination. Nevertheless, our processes take some inspiration from deductions of ILL as well as from the proofs-as-processes literature.

One application of the proofs-as-processes paradigm, and a significant source of inspiration for our framework, is WorkflowFM [33]. It takes a similar perspective on processes, but uses classical linear logic and adheres more strictly to the proofs-as-processes paradigm. We provide more detail on our work’s relation to WorkflowFM in Sect. 8.

2.3 Isabelle/HOL

We mechanise our work in the proof assistant Isabelle [35] using its higher order logic (HOL). This combination is known as Isabelle/HOL. Its proof language Isar [46] is close to that of mathematical proofs, which aids readability. The tool Sledgehammer [5] allows the user to invoke a number of automated theorem provers on a specified goal with the aim of finding and automatically constructing its proof. It is useful for finding proofs of some (sub)goals, allowing us to concentrate on the higher-level reasoning.

In this paper we use Isabelle/HOL code blocks to introduce definitions and theorem statements, with terms rendered in italics. These definitions include inductive datatypes (datatype), recursive functions (fun and function) including primitively recursive ones (primrec), and general definitions (definition). Type variables are preceded by an apostrophe, as for instance in 'a. We also use Isabelle/HOL syntax inline when talking about formal entities, such as the empty list constructor [].

In our formal statements we use the following Isabelle/HOL notation:

- Meta-level implication, used for instance to express the conjunction introduction rule from its two assumptions;
- List construction;
- List append;
- String literal.

We take advantage of the code generator [15] included in Isabelle/HOL. This allows us to export verified executable code for our definitions in SML [28], OCaml [23], Haskell [36] and Scala [30]. Definitions for which we want to generate code must be computable, e.g. recursive functions with proven termination. For many of the constructs we use, such as inductive datatypes and primitively recursive functions, Isabelle/HOL automatically proves the relevant code equations for the generator. Only in one part of resource mechanisation, the resource term equivalence which we define as an inductive relation, do we have to manually prove a code equation and add it to the code generator (see Sect. 3.3.6).

3 Resources

In this section we describe the mechanised theory of resources. These represent the objects that form inputs and outputs of actions. The basis for resources are atoms drawn from an unconstrained type parameter. They combine in several ways, enabling us to express compositions of processes (see Sect. 4).

We start with a datatype of resource terms expressing how the atoms can be combined. These combinations, however, give rise to multiple terms representing what should be one resource. We thus introduce an equivalence relation such that resources are obtained via quotienting (see Sect. 3.2).

The term equivalence is defined as an inductive predicate, which means it is not directly decidable. To fix this we define a normalisation procedure based on a rewriting relation which we prove to be terminating and confluent. Thus equality of normal forms serves as a computable procedure for deciding the equivalence of resource terms.

3.1 Resource Terms

When describing interesting processes we rarely talk about singular objects. An action may for example require or produce multiple resources, or its result may be non-deterministic (e.g. it can fail). Thus our resources combine in various ways to formally express such situations, building from the individual objects of the domain and two special objects.

We express resource combinations in Isabelle/HOL via a datatype of resource terms, parameterised by the type of resource atoms, with three leaf resources and four resource constructions.

The first kind of leaf essentially injects the resource atoms into the type. This means that every resource atom is itself a resource. These we then combine into more complicated resources.

The second leaf represents the absence of any object as a resource. It is useful when we want to describe an action with no input or no output. It also acts as a unit for the parallel combination of resources discussed below.

The third and final leaf represents a resource about which we have no information. Any resource, and thus also their combinations, can be turned into this one by “forgetting” all information about it (see Sect. 4.3). In certain cases this allows us to more concisely express a composition of processes, but at the cost of no longer being able to act on the forgotten resources.

Next are the four resource constructions, which express the following situations:

- Copyable resource, where we assert that the resource can be copied and erased freely like data.
- Parallel resources, representing a list of resources as one.
- Non-deterministic resource, representing exactly one of two resources; such as a successful connection or an error, or the result of a coin flip.
- Executable resource, representing a single potential execution of a process. It is specified by the process input and output resources, and allows us to talk about higher-order processes. An example is the ability to cut a steel sheet into tiles while producing waste.
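To make the shape of this datatype concrete, the following is a minimal Python sketch of the three leaves and four constructions described above. It is an illustration only and not the Isabelle/HOL definition: all class and field names are placeholders assumed for this sketch, and atoms are plain strings.

```python
from dataclasses import dataclass
from typing import Any, Tuple

# All class and field names are placeholders assumed for this sketch.

@dataclass(frozen=True)
class Res:
    """Leaf: injects a resource atom into the type of terms."""
    atom: Any

@dataclass(frozen=True)
class Empty:
    """Leaf: the absence of any object."""

@dataclass(frozen=True)
class Anything:
    """Leaf: a resource about which we have no information."""

@dataclass(frozen=True)
class Copyable:
    """A resource that may be copied and erased freely, like data."""
    child: Any

@dataclass(frozen=True)
class Parallel:
    """A list of resources treated as one."""
    children: Tuple[Any, ...]

@dataclass(frozen=True)
class NonD:
    """Exactly one of two resources."""
    left: Any
    right: Any

@dataclass(frozen=True)
class Executable:
    """A single potential execution of a process from input to output."""
    input: Any
    output: Any

# The ability to cut a steel sheet into tiles while producing waste.
cutting = Executable(Res("steel sheet"),
                     Parallel((Res("tiles"), Res("waste"))))

# A connection attempt that yields either a connection or an error.
attempt = NonD(Res("connection"), Res("error"))
```

Frozen dataclasses are used so that terms compare structurally, which mirrors the requirement that atoms (and hence terms) have a notion of equality.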

Note that the type parameter from which resource atoms are drawn is not constrained in any way. This means it can be any type we can define in Isabelle/HOL, only requiring the elements to have a notion of equality. As a result we can build resources from atoms such as the following:

- Objects with internal state, for example carrying the contained volume;
- Objects at graph-like locations, carrying vertices of some graph;
- Stacks of objects, carrying the count.

This allows us to easily add information to the resources. As compositions of processes use resources, requiring their equality wherever a connection is made, this information has an effect on what compositions are valid (see Sect. 4.4). For instance, with resource atoms located in a graph we can ensure that any movement of resources is done between adjacent locations.

Example

In the case of our running example, the vending machine, there are three kinds of objects we care about: cash, drinks and the machine itself. So, in that domain, these form our type of resource atoms, with one constructor for each.

To represent a single drink, the drink constructor is sufficient and needs no parameter. But to represent some amount of cash we parameterise the cash constructor with a natural number giving the amount in some currency. Similarly, for the vending machine itself, we parameterise its constructor with a natural number, this time representing the amount the machine currently holds in credit.

Note that for simplicity we assume any amount of currency is one object rather than deal with denominations used in the real world. However, this model could be extended in a straightforward way to account for this detail.

The resource construction most relevant in this domain is parallel combination. For instance, if we have a vending machine with some amount already in credit and want to buy a drink at a given cost, then we may start in a situation described by a single parallel resource combining the machine with the cash we hold.
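The atoms of the vending machine domain can be sketched in Python as follows. The names Cash, Drink and Machine, the helper parallel, and the concrete amounts are assumptions made for this illustration; the parallel combination is stood in for by a plain tuple.

```python
from dataclasses import dataclass
from typing import Tuple, Union

@dataclass(frozen=True)
class Cash:
    amount: int   # amount of some currency, treated as one object

@dataclass(frozen=True)
class Drink:
    pass          # a single drink needs no parameter

@dataclass(frozen=True)
class Machine:
    credit: int   # amount the machine currently holds in credit

Atom = Union[Cash, Drink, Machine]

def parallel(*atoms: Atom) -> Tuple[Atom, ...]:
    """Stand-in for the parallel combination: a tuple of atoms."""
    return tuple(atoms)

# Illustrative numbers only: a machine holding 10 in credit, and 40 in hand.
start = parallel(Machine(10), Cash(40))
```

Because the amount is part of the atom, a machine holding 10 in credit and one holding 0 are different resources, so a composition can only connect actions that agree on the credit.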

3.2 Parallel Resources as a Quotient

Resource terms give many ways of expressing the same resource. For instance, Fig. 3 shows syntax trees for five resource terms which express the same resource: the single atom A.

In our current formalisation we pay special attention to the variety of terms that can be produced by the parallel combination of resources. This is because parallel combinations arise frequently in our process compositions, and when they do it is often with more than two resources. As such, rendering the alternatives equal (see below) significantly simplifies building compositions at the price of a manageable increase in the complexity of their mechanisation.

Other resource combinations can also produce a variety of terms that could be considered the same. However, in our experiments, such equivalences lead to a significant increase in mechanisation complexity with little gain in usability. For instance, the resulting equivalence relation can become more dependent on the contents of resource terms in addition to their structure. This in turn means we lose useful properties of resources, especially when it comes to systematically translating processes between domains.

For practical reasons, we consider the full integration of additional resource equalities into our framework as a future extension (see Sect. 9.1). However, although they are not resource term equivalences in the current formalisation, further resource transformations can be achieved through composition operators and resource actions (see Sects. 4.2 and 4.3) instead.

In our formalisation of resources we collect all of the possible forms of a resource into one object. We first define an equivalence relation on terms and then form their quotient by that relation.

We inductively define an equivalence relation on resource terms, relating terms that represent the same resource, as shown in Fig. 4. The first three introduction rules express the core of the equivalence, handling parallel combinations with no children, a single child and nested parallel combinations respectively. Then the next seven introduction rules close the relation on the resource term structure, meaning the result will be a congruence. The final two rules make the relation symmetric and transitive. The fact that it is reflexive, the last condition for it to be an equivalence, can be proven from this definition.

Now we can define resources as the quotient of their corresponding terms, treating each equivalence class as one object. This is easily done in Isabelle/HOL:

Because the relation is a congruence, meaning equivalent arguments to the same constructor yield equivalent results, it is also very simple to lift the constructors to the quotient. The lifting of definitions is automated by the Lifting package, introduced by Huffman and Kunčar [19]. Beyond the easier definition, this also automatically proves a number of useful facts relating it to the quotient and sets up code generation. We lift each resource term constructor in this way.

What is considerably more difficult is i) characterising the equivalence in a computable way and ii) recovering useful structure from resource terms for resources. The computable characterisation is particularly important, because without it we would not be able to generate code for anything involving resources. We discuss our solution to these two issues in the next two sections.

Before addressing those issues, we define an infix notation for parallel resources. This simplifies statements that involve a few resources in parallel. The resource product simply puts two resources in parallel.

Thanks to resources being a quotient this operation is associative and has the empty resource as its left and right unit. In other words, resources with this operation form a monoid.

Note that, once the quotient is made, it does not matter if we initially defined the parallel resource term combination as having arbitrary arity or as strictly binary. It only affects the phrasing of the equivalence rules, the normalisation procedure we use to decide that equivalence and the representation of resources in generated code. We choose to use lists because they more concisely reflect the monoidal nature of parallel resources and make for a simpler normalisation procedure.
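The monoid structure can be illustrated with a deliberately simplified Python sketch. It assumes a resource is represented by a flat tuple of component names (standing in for a normalised parallel combination), with the empty tuple as the empty resource; the names Resource, EMPTY and product are hypothetical.

```python
from typing import Tuple

# Simplifying assumption: a resource is a flat tuple of component names,
# and the empty tuple plays the role of the empty resource.
Resource = Tuple[str, ...]

EMPTY: Resource = ()

def product(a: Resource, b: Resource) -> Resource:
    """Put two resources in parallel by concatenating their components."""
    return a + b

x: Resource = ("cash",)
y: Resource = ("machine",)
z: Resource = ("drink", "change")

# Monoid laws: associativity, and EMPTY as left and right unit.
associative = product(product(x, y), z) == product(x, product(y, z))
left_unit = product(EMPTY, x) == x
right_unit = product(x, EMPTY) == x
```

With lists (here tuples) as the representation, the monoid laws follow directly from those of concatenation, which is one way to read the remark that lists reflect the monoidal nature of parallel resources.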

3.3 Resource Term Normalisation by Rewriting

We give a computable characterisation of the resource term equivalence through a normalisation procedure based on four rewrite rules, numbered (2)–(5).

The rules (2)–(4) are obtained directly from the introduction rules of the equivalence by picking a specific direction (see the first three rules in Fig. 4). The rule (5) is obtained from a theorem about the equivalence, allowing us to drop any empty resource within a parallel one in a single step.
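Going by the description of the first three introduction rules of the equivalence (no children, a single child, nested parallel combinations) and of rule (5), the four rewrite rules can be sketched as below. The constructor names \(\mathsf{Parallel}\) and \(\mathsf{Empty}\), the arrow, and the variable names are placeholders assumed for this sketch, with @ denoting list append.

\[
\begin{aligned}
\mathsf{Parallel}\ [\,] &\rightsquigarrow \mathsf{Empty} && (2)\\
\mathsf{Parallel}\ [a] &\rightsquigarrow a && (3)\\
\mathsf{Parallel}\ (xs \mathbin{@} [\mathsf{Parallel}\ ys] \mathbin{@} zs) &\rightsquigarrow \mathsf{Parallel}\ (xs \mathbin{@} ys \mathbin{@} zs) && (4)\\
\mathsf{Parallel}\ (xs \mathbin{@} [\mathsf{Empty}] \mathbin{@} zs) &\rightsquigarrow \mathsf{Parallel}\ (xs \mathbin{@} zs) && (5)
\end{aligned}
\]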

An alternative procedure, which normalises a term in a single pass, is described in Sect. 6.1. While that variant is more direct, and should thus be more efficient for computation, it is more difficult to use in proofs.

3.3.1 Normalised Terms

A resource term is normalised if:

- It is a leaf node (i.e. a resource atom, the empty resource or the resource carrying no information), or
- It is a non-parallel internal node (i.e. a copyable, non-deterministic or executable resource term) and all of its children are normalised, or
- It is a parallel internal node and all of the following hold:
  - All of its children are normalised, and
  - None of its children are empty or parallel resource terms, and
  - It has at least two children.

We formalise this in Isabelle as a predicate defined through structural recursion on the type of resource terms.
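The conditions above translate into a short recursive check. The following Python sketch is an illustration under assumptions, not the Isabelle predicate: the term constructors and the helper names children and normalised are placeholders introduced here.

```python
from dataclasses import make_dataclass

# Placeholder term constructors (names are assumptions of this sketch).
Res = make_dataclass("Res", ["atom"], frozen=True)
Empty = make_dataclass("Empty", [], frozen=True)
Anything = make_dataclass("Anything", [], frozen=True)
Copyable = make_dataclass("Copyable", ["child"], frozen=True)
Parallel = make_dataclass("Parallel", ["children"], frozen=True)  # tuple of terms
NonD = make_dataclass("NonD", ["left", "right"], frozen=True)
Executable = make_dataclass("Executable", ["input", "output"], frozen=True)

def children(t):
    """Return the immediate subterms of a term (none for leaves)."""
    if isinstance(t, Copyable):
        return (t.child,)
    if isinstance(t, Parallel):
        return tuple(t.children)
    if isinstance(t, NonD):
        return (t.left, t.right)
    if isinstance(t, Executable):
        return (t.input, t.output)
    return ()

def normalised(t) -> bool:
    """Check the three cases: leaf, non-parallel node, parallel node."""
    kids = children(t)
    if not all(normalised(k) for k in kids):
        return False
    if isinstance(t, Parallel):
        # No empty or parallel children, and at least two children.
        return (len(kids) >= 2
                and not any(isinstance(k, (Empty, Parallel)) for k in kids))
    return True
```

For example, a parallel term with two atoms is normalised, while one with a single child, with no children, or with an empty child is not.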

3.3.2 Rewriting Relation

We define the rewriting relation, the congruence closure of the rules (2)–(5), as an inductive relation.

Note that this relation is reflexive rather than partial, so its normal forms are fixpoints rather than terminal elements. The rewriting step function (see Sect. 3.3.4) will have to be total, like all functions defined in Isabelle. By making the rewriting relation reflexive we can have the graph of the rewriting step function be a subset of this relation, which is useful for reusing proofs.

As a form of verification, we show that a resource term satisfies the normalisation predicate if and only if it is a fixpoint of the rewriting relation. So these two definitions agree on what terms are normalised.

3.3.3 Rewriting Bound

The rewriting bound expresses the upper limit on how many rewriting steps may be applied to a particular resource term. For this bound we disregard many details of the resource term at hand in order to arrive at a simple definition, which means that even terms in normal form can have a positive rewriting bound—this is not the least upper bound. But there being a finite bound is sufficient to show that normalisation by this rewriting terminates.

We define the bound through structural recursion on the type of resource terms:

- If the term is a leaf (i.e. a resource atom, the empty resource or the resource carrying no information), then its bound is 0.
- If the term is a non-parallel internal node (i.e. a copyable, non-deterministic or executable resource term), then its bound is the sum of bounds for its children.
- If the term is a parallel internal node, then its bound is the sum of bounds for its children plus its length (for possibly dealing with unwanted children) plus 1 (for possibly ending up with too few children).
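The three cases of the bound can be written as one recursive function. The sketch below is a Python illustration under assumptions: the term constructors and the names children and bound are placeholders introduced here, not the Isabelle definitions.

```python
from dataclasses import make_dataclass

# Placeholder term constructors (names are assumptions of this sketch).
Res = make_dataclass("Res", ["atom"], frozen=True)
Empty = make_dataclass("Empty", [], frozen=True)
Anything = make_dataclass("Anything", [], frozen=True)
Copyable = make_dataclass("Copyable", ["child"], frozen=True)
Parallel = make_dataclass("Parallel", ["children"], frozen=True)  # tuple of terms
NonD = make_dataclass("NonD", ["left", "right"], frozen=True)
Executable = make_dataclass("Executable", ["input", "output"], frozen=True)

def children(t):
    """Return the immediate subterms of a term (none for leaves)."""
    if isinstance(t, Copyable):
        return (t.child,)
    if isinstance(t, Parallel):
        return tuple(t.children)
    if isinstance(t, NonD):
        return (t.left, t.right)
    if isinstance(t, Executable):
        return (t.input, t.output)
    return ()

def bound(t) -> int:
    """Upper limit on rewriting steps, by structural recursion."""
    total = sum(bound(k) for k in children(t))  # 0 for leaves
    if isinstance(t, Parallel):
        # One step per possibly unwanted child, plus one in case
        # too few children remain afterwards.
        return total + len(t.children) + 1
    return total

normal = Parallel((Res("a"), Res("b")))
messy = Parallel((Parallel((Res("a"), Empty())), Parallel(())))
```

Here normal is already in normal form yet has bound 3, which illustrates that the bound is not the least upper bound; messy has bound 7.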

The most crucial property we show is that for every term not in normal form this bound is positive. We also show that the rewriting relation does not increase this bound.

3.3.4 Rewriting Step

The rewriting relation given in Sect. 3.3.2 specifies all possible rewriting paths. When implemented, a specific algorithm must be chosen, which yields a rewriting function.

There are two choices when rewriting: the order in which we rewrite children of internal nodes, and the order in which we apply the rewriting rules. For an example of the latter, a single term may be rewritable directly by rule (3), or first by rule (5) and only then by rule (2).

Our rewriting algorithm is as follows:

- For the binary internal nodes, always rewrite the first child until it reaches its normal form and only then start rewriting the second child if that one is not also already normalised.
- For the parallel internal node we proceed in phases:
  - i. If any child is not normalised, then rewrite all the children (note that rewriting is the identity on already normalised terms); otherwise
  - ii. If there is some nested parallel node in the children, then merge one up; otherwise
  - iii. If there is some  node in the children, then remove one; otherwise
  - iv. If there are no children, then return the term  ; otherwise
  - v. If there is exactly one child, then return that term; otherwise
  - vi. Do nothing and return the same resource.

We mechanise this algorithm in Isabelle/HOL as the rewriting step function.

We show that the graph of this function is a sub-relation of the rewriting relation. Thus normalised resource terms are exactly those for which this function acts as identity and the input resource term is always equivalent to the result.

Most crucial is that with this more specific formulation we can prove that, for any term not already normalised, the rewriting step function strictly decreases its rewriting bound.
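As an illustration of the phases listed above, here is a minimal Python sketch of one rewriting step. It is not the Isabelle definition: all class names are hypothetical, and in particular `Empty` is an assumed name for the leaf that phase iii removes and phase iv returns. A term counts as normalised here exactly when the step leaves it unchanged.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Empty:
    """Assumed leaf: removed in phase iii, returned in phase iv."""


@dataclass(frozen=True)
class Atom:
    name: str


@dataclass(frozen=True)
class Binary:
    """Hypothetical stand-in for a binary internal node."""
    kind: str
    left: "Term"
    right: "Term"


@dataclass(frozen=True)
class Parallel:
    children: Tuple["Term", ...]


Term = Union[Empty, Atom, Binary, Parallel]


def step(t: Term) -> Term:
    """Apply one rewriting step; the identity on normalised terms."""
    if isinstance(t, (Empty, Atom)):
        return t
    if isinstance(t, Binary):
        new_left = step(t.left)
        if new_left != t.left:  # first child not yet normalised
            return Binary(t.kind, new_left, t.right)
        return Binary(t.kind, t.left, step(t.right))
    kids = t.children
    if any(step(c) != c for c in kids):          # phase i
        return Parallel(tuple(step(c) for c in kids))
    for i, c in enumerate(kids):                 # phase ii
        if isinstance(c, Parallel):
            return Parallel(kids[:i] + c.children + kids[i + 1:])
    for i, c in enumerate(kids):                 # phase iii
        if isinstance(c, Empty):
            return Parallel(kids[:i] + kids[i + 1:])
    if len(kids) == 0:                           # phase iv
        return Empty()
    if len(kids) == 1:                           # phase v
        return kids[0]
    return t                                     # phase vi


example = Parallel((Parallel(()), Atom("a")))
```

Applying `step` to `example` three times walks through phases i, iii and v in turn.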

3.3.5 Normalisation

With this rewriting function the normalisation procedure is quite simple: keep applying the rewriting step as long as the resource term is not normalised. We mechanise this as a function and prove that it terminates by using the rewriting bound as a termination measure.
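The role of the bound as a termination measure can be seen in a small self-contained Python sketch. This is a toy encoding chosen for brevity, not the mechanised one: strings are atoms, `None` is an assumed empty leaf, and tuples are parallel nodes. The loop asserts that every step taken strictly decreases the bound.

```python
def bound(t):
    """Rewriting bound for the toy encoding (tuples are parallel nodes)."""
    if isinstance(t, tuple):
        return sum(bound(c) for c in t) + len(t) + 1
    return 0


def step(t):
    """One rewriting step on the toy encoding; identity on normal forms."""
    if not isinstance(t, tuple):
        return t
    if any(step(c) != c for c in t):      # rewrite all children
        return tuple(step(c) for c in t)
    for i, c in enumerate(t):             # merge one nested parallel node
        if isinstance(c, tuple):
            return t[:i] + c + t[i + 1:]
    for i, c in enumerate(t):             # remove one empty child
        if c is None:
            return t[:i] + t[i + 1:]
    if len(t) == 0:
        return None
    if len(t) == 1:
        return t[0]
    return t


def normalise(t):
    """Keep stepping until a fixpoint; the bound is the termination measure."""
    while step(t) != t:
        nxt = step(t)
        assert bound(nxt) < bound(t)  # each step strictly decreases the bound
        t = nxt
    return t
```

For instance, `normalise(((), "a"))` takes three steps, well within the initial bound of 4.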

3.3.6 Characterising the Equivalence

In order to characterise the resource term equivalence we prove the following statement:
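The displayed statement is not reproduced in this text. Judging from the two directions argued next and from the later reference to equation (6), it has the following shape, where \(\sim\) and \(\mathrm{normalise}\) are assumed symbols for the resource term equivalence and the normalisation function:

\[ a \sim b \;\Longleftrightarrow\; \mathrm{normalise}(a) = \mathrm{normalise}(b) \tag{6} \]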

First, the \(\Longleftarrow \) direction is simpler. We have already shown that every term is equivalent to the result of applying the rewriting step to it. Because the normalisation is repeated application of the step, by transitivity of the equivalence every term is equivalent to its normalisation. So two terms with equal normal forms can be shown equivalent using transitivity and symmetry of the resource term equivalence.

Second, the \(\Longrightarrow \) direction is more complex. It relies on showing that equivalent resources are joinable by the rewriting function, meaning there is a sequence of rewrite steps from each term to some common (possibly intermediate) form. We formalise this statement by casting the rewriting step in the language of the Abstract Rewriting [41] theory already mechanised for the IsaFoR/CeTA project [42] and available in the Archive of Formal Proofs (see footnote 2). We prove it by induction on the resource term equivalence, where for each of its introduction rules we prove that such joining rewrite paths exist.

Now, we already know that every term is equivalent to its normal form. So, by transitivity and symmetry, normal forms of equivalent terms are themselves equivalent. Then, by the joinability rule we just showed, we have that these normal forms are joinable. But, because they are normalised terms, we know that each only rewrites to itself. Therefore the form that joins them can only be those normalised terms, and so they must be equal.

As a result we get a computable characterisation of the resource term equivalence. We add equation (6) to the code generator, meaning that Isabelle/HOL is no longer blocked from generating code for anything involving resources. This characterisation is also important to how we translate resources into linear logic in Sect. 5.2.

3.3.7 Representative Term

Now that the resource term normalisation is verified, we can use it to define a representative term for every resource. While every resource is an equivalence class of terms, there is exactly one normalised term among them. Having such a representative is useful, for instance, for visualising the resource.

The representative can be constructed by applying the rewriting normalisation procedure to any term in the class. As with the resource constructors (see Sect. 3.2), this definition is facilitated by the Lifting package [19].

Thus, we define the representative to be the normalisation procedure but with resources as its domain. This requires that equivalent terms have equal normal forms, which they do (see Sect. 3.3.6).

We can check this by proving the following, stated in terms of the equivalence class represented by a term and a choice operator that picks an arbitrary value satisfying a given predicate:

3.4 Resource Type is a Bounded Natural Functor

When modelling processes in a certain context, the resources are built from available resource atoms. It is then useful to have a method for systematically changing the content of a resource (the resource atoms it contains) without changing its shape, translating the resource from one context to another. In general, such a method is called a mapper [13].

Suppose, for instance, that we were using a specific currency in our vending machine example, say British pounds. Then we may want to map a process from that domain to one using US dollars instead. We would do that by taking every resource in the process and systematically applying the currency conversion to all atoms the resource contains. The mapper generalises this operation for any function on resource atoms. For another example, we use the process mapper (and by extension the resource mapper) in Sect. 7.5 when combining two domains into one.

For resource terms, Isabelle/HOL automatically defines the mapper and proves its properties (e.g. that it respects the identity function and commutes with function composition). This is because every inductive datatype in Isabelle/HOL is a bounded natural functor (BNF), a structure for compositional construction of datatypes in HOL presented by Traytel et al. [43] and integrated in Isabelle/HOL by Blanchette et al. [6]. It therefore remains for us to lift this term-level mapper to the quotient and transfer its properties.

Fortunately, lifting the BNF structure from a concrete type to a quotient has been automated by Fürer et al. [13] who reduce this task to two proof obligations concerning the equivalence relation being used and parts of the BNF structure of the concrete type. Once these obligations are proven, the constants required for the BNF structure of the quotient and their properties are automatically derived.

The first obligation concerns the relator, an extension of the mapper to relations on the content instead of functions. It ensures that the generated relator will commute with relation composition. While its proof would usually be quite difficult, Fürer et al. describe how this condition can be proven using a confluent rewriting relation whose equivalence closure contains the equivalence relation we used to define the quotient type. In our case, the resource term normalisation procedure described in Sect. 3.3 gives us exactly such a relation.

The second obligation concerns the setter, which gathers the content into a set while discarding the shape. It ensures that the generated setter will be a natural transformation, that is, applying the setter after mapping a function is the same as using the setter first and then applying that function to every element of its result. In our case this condition can be proven using structural induction on resource terms and Isabelle’s automated methods.

As a result we get the BNF constants (mapper, relator and setter) and properties, all already integrated with the automated tools within Isabelle.
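To make the mapper and setter concrete, here is a hedged Python sketch at the level of terms only (it does not model the quotient). The names `map_atoms`, `atoms_of` and `to_usd` are hypothetical, and the conversion rate of 2 is made up for the currency example.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Atom:
    value: object  # any hashable content


@dataclass(frozen=True)
class Parallel:
    children: Tuple["Term", ...]


Term = Union[Atom, Parallel]


def map_atoms(f, t):
    """Mapper: change the content of a term without changing its shape."""
    if isinstance(t, Atom):
        return Atom(f(t.value))
    return Parallel(tuple(map_atoms(f, c) for c in t.children))


def atoms_of(t):
    """Setter: gather the content into a set, discarding the shape."""
    if isinstance(t, Atom):
        return frozenset({t.value})
    return frozenset().union(*(atoms_of(c) for c in t.children))


def to_usd(atom):
    """Illustrative currency conversion with a made-up rate of 2."""
    kind, amount = atom
    return ("USD", amount * 2) if kind == "GBP" else atom


r = Parallel((Atom(("GBP", 10)),
              Parallel((Atom(("GBP", 5)), Atom(("Drink", 1))))))

# Naturality of the setter: collect-after-map equals map-after-collect.
lhs = atoms_of(map_atoms(to_usd, r))
rhs = frozenset(to_usd(a) for a in atoms_of(r))
```

The comparison of `lhs` and `rhs` is the term-level instance of the naturality condition described above.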

4 Process Compositions

With resources formalised we now move to compositions of processes over them. Process compositions are about describing individual actions in terms of what resources they consume and produce, and how those can be put together to form larger processes.

Since resources may be physical objects we must be careful to handle them correctly, so that none are used twice or discarded without justification. We call this linearity. In Sect. 5, we describe how we formally verify that these compositions obey the rules of linear logic and so handle resources correctly.

We mechanise process compositions as a datatype with three type variables: one for resource atoms, one for labels and one for other metadata.

This datatype captures a complex process as a tree of composition actions applied to simple processes. In the rest of this section we describe the nodes of the process composition trees in more detail, including their input and output resources (also shown in Fig. 5), and discuss the correctness conditions on compositions.

Note that in text we use a shorthand notation that states a process together with its input resource and output resource. Recall also the infix syntax for resources defined in Sect. 3.2, which we use when stating inputs and outputs, for instance those of the resource actions (see Sect. 4.3).

4.1 Primitive Actions

We start with primitive actions which depend on the domain we are modelling: they represent things we can do in the domain. For instance, if the domain has a notion of resources with locations, then there will be some kind of movement action which may move an object freely between locations or be constrained to edges of some graph. We treat these as assumptions of the model.

In Isabelle/HOL, we represent a primitive action by a node with four parameters: its input and output resources, its label and any other associated metadata.

The label serves to distinguish actions that may have equal inputs and outputs but different meaning, such as two modes of moving an object between locations with different costs and speeds (e.g. walking vs. running). The term “label” is used because it is often set to a printable type, such as a string, which we then use as a label in visualisations (particulars of process visualisation are not discussed in the present paper). The metadata can carry any further information we may wish to associate with the primitive actions, such as cost of execution or parameters for implementation (see for instance its use in Sects. 7.3 and 7.5).

Both the label and metadata are taken from arbitrary types (the unconstrained type variables of the process composition datatype) so they can carry any kind of complex information.

Recall that these primitive actions correspond to the assumptions about the domain on which the composition relies. We make it convenient to collect the assumptions by defining a function that gathers the parameters of every primitive action node, returns the empty list for every other leaf of the composition and gathers children’s results for every internal node using list append.
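A minimal sketch of such a gathering function, in Python rather than Isabelle/HOL, might look as follows. The node names (`Primitive`, `Identity`, `Seq`, `Par`) and the function name `primitives` are hypothetical, resources are simplified to strings, and only a few node kinds are modelled.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Primitive:
    ins: str
    outs: str
    label: str
    meta: object = None


@dataclass(frozen=True)
class Identity:
    res: str


@dataclass(frozen=True)
class Seq:
    first: "Process"
    second: "Process"


@dataclass(frozen=True)
class Par:
    left: "Process"
    right: "Process"


Process = Union[Primitive, Identity, Seq, Par]


def primitives(p):
    """List the parameters of every primitive action in the composition."""
    if isinstance(p, Primitive):
        return [(p.ins, p.outs, p.label, p.meta)]
    if isinstance(p, Identity):
        return []  # any other leaf contributes nothing
    if isinstance(p, Seq):
        return primitives(p.first) + primitives(p.second)
    return primitives(p.left) + primitives(p.right)


comp = Seq(Par(Primitive("a", "b", "first"), Identity("c")),
           Primitive("b c", "d", "second"))
```

Here `primitives(comp)` lists the two primitive actions in left-to-right order and skips the identity leaf.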

Example

In our running example, the vending machine, we have three primitive actions: paying into the machine, getting a drink and getting change. We use string literals as labels and assign no metadata to these actions.

Paying money into a vending machine requires the machine, which can hold an amount of existing credit, and the cash being paid in. It produces a machine with the combined amount in credit. We define the action as a function of the two variables:

Returning funds from a vending machine only requires a machine and it produces a machine with zero credit left and the relevant amount of cash dispensed:

Getting a drink requires a machine with credit of at least the price of the drink and produces a machine with decreased credit and the drink. We define this action as a partial function of the price and credit:

Note that we separate the actions of getting a drink and returning change, while often vending machines return change automatically along with the purchase. Our formulation separates these two concerns, which allows returning change and cancelling to both be handled by the refund action.
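The three primitive actions can be sketched in Python as follows. This is an illustration under assumptions, not the Isabelle definitions: resources are encoded as tuples of tagged atoms, the labels `"Pay"`, `"Refund"` and `"Get drink"` and the function names are hypothetical, and the partiality of getting a drink is modelled by returning `None`.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Primitive:
    ins: Tuple[tuple, ...]   # parallel input resources
    outs: Tuple[tuple, ...]  # parallel output resources
    label: str


def pay(credit: int, amount: int) -> Primitive:
    """Machine with some credit plus cash gives a machine with the sum."""
    return Primitive(ins=(("Machine", credit), ("Cash", amount)),
                     outs=(("Machine", credit + amount),),
                     label="Pay")


def refund(credit: int) -> Primitive:
    """Machine gives back all its credit as cash."""
    return Primitive(ins=(("Machine", credit),),
                     outs=(("Machine", 0), ("Cash", credit)),
                     label="Refund")


def get_drink(price: int, credit: int) -> Optional[Primitive]:
    """Partial: only defined when the credit covers the price."""
    if price <= credit:
        return Primitive(ins=(("Machine", credit),),
                         outs=(("Machine", credit - price), ("Drink",)),
                         label="Get drink")
    return None
```

With this encoding the output of `pay(0, 10)` matches the input of `get_drink(5, 10)`, while `get_drink(5, 3)` yields no action at all.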

4.2 Composition Operators

Next we have four ways of creating processes from one or two simpler ones, allowing us to inductively describe how to orchestrate the primitive actions:

- Sequential composition: first execute one process and then use its outputs to execute the other.
- Parallel composition: execute two processes concurrently, but combine their inputs into one parallel resource and the same for their outputs.
- Optional composition: take as input the non-deterministic combination of the inputs of two processes, executing exactly one of them based on the branch of the input that is supplied at runtime. This serves as the only way to eliminate the non-determinism, so it requires that the two processes have the same output resource.
- Representation: introduce the executable resource representing a process, which could be a composition in its own right. This requires no input, analogously to a constant being viewed as a nullary function. Note that the output could have been defined as not copyable, but based on experience with modelling processes we decided to make the output explicitly copyable. This means we do not have to know ahead of time how many times we intend to use the representation.

The simplicity of these composition operations may seem restrictive. For instance, what if we wish to use only part of the output of one process as input to another, essentially using the second to further process some objects produced by the first while keeping others untouched? In such a case our composition operations require us to state this explicitly so there is no ambiguity: do the first process and then do the second concurrently with “doing nothing” for an appropriate subset of the first process’s outputs. This way process compositions (motivated by linearity) approach the frame problem [25]: the lack of change must be stated, but it is easy to do so automatically. The ways in which we express such lack of change are discussed in the next section.

Example

Perhaps the simplest process composition in our running example is paying money into a vending machine and then getting a drink. For example we can pay 10 into an empty machine and then get a drink costing 5 from the machine which then has 5 left as credit:

4.3 Resource Actions

Expressing concepts such as “doing nothing” is where resource actions come in. These are similar to primitive actions but, instead of expressing an assumption about some particular domain, they express things we can always do with resources.

As an inspiration for this range of actions we use theorems of linear logic: if an action can be argued in linear logic without making any assumption about the domain, then we can consider it as always doable with resources. Note that to argue for some of these actions one needs more than one inference rule, such as with OptDistrIn and OptDistrOut. We aim for convenience of expressing process compositions more than for atomicity with respect to the logic.

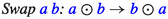

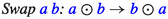

The resource actions are as follows:

- Take any resource and produce it unchanged. For instance, to keep a resource aside while doing something with other resources.
- Swap the order of any two parallel resources. This allows us to compose processes when their interface has the same resources but in different order, while retaining information about how they were reordered.
- Take one resource to its non-deterministic combination with another (there is one such injection for each side). For instance, if a process takes a non-deterministic resource as input and we can deterministically obtain one of its branches from another process, then an injection is the bridge that connects the latter to the former. Furthermore, injections in combination with Opt can also be used to optionally compose (see footnote 3) any two processes.
- Distribute a parallel resource into both branches of a non-deterministic one. This is useful when some resource is deterministically available but needed to resolve the branches of a non-deterministic resource. For instance, if always available tools are needed to repair a machine that may break during its use.
- Distribute a parallel resource out of both branches of a non-deterministic one. This is useful when processing some non-deterministic resource produces a partially deterministic result. For instance, if using a machine may result in a successful or failed product but always also outputs the machine itself. (Note that this action could be defined as a composition of others (see footnote 4), but we keep it as a primitive for convenience and symmetry.)
- Discard the copyable marker from a resource to use it in a process that does not assume its copyability. This is particularly useful to evaluate a copy of the executable resource produced by representing a composition.
- Duplicate a copyable resource into two copies. For instance, to use a single password input for multiple actions requiring it.
- Discard a copyable resource, producing nothing. For instance, when an action produces a digital alert to which we do not wish to react.
- Take any resource and an executable resource with matching input, evaluate the process represented by the latter and produce its output. If we use a process to prepare an executable resource, then this allows us to execute it.
- Forget all information about some resource. For instance, if we have a drawer with socks of two colours, then this lets us specify the output of a process that finds two matching socks more concisely (for more on this sock example see Dixon et al. [12]). Note that what is forgotten are details about the resource and not its presence; only copyable resources can be erased.

Because these actions are defined regardless of the resource atoms we choose to use, and because those atoms can be of any type, none of the resource actions could possibly interact with the internal state of atoms. They do not change the objects themselves, only how they are arranged. We refer the reader once more to Fig. 5 for the formal definitions of inputs and outputs of all the resource actions.

Example

With resource actions we can follow paying money in and getting a drink with a refund of the remaining credit as change. We do this by following the previous composition with refunding in parallel with identity on the drink, defined formally as follows and visualised in Fig. 6:

4.4 Valid Compositions

While building compositions of processes, we wish to ensure that we are doing so sensibly, in particular that we are manipulating resources correctly. We call such process compositions valid.

One approach would be to perform each step as a deduction in linear logic. However, this would limit the concepts we can express in compositions and their validity to only what we can express within linear logic.

Instead, we set up rules purely in the language of resources about what compositions “make sense”. We then prove (Sect. 5.6) that the mechanical translation of any composition into a linear logic deduction will be well-formed if the composition satisfies these rules. This approach yields conditions simpler than the full rules of linear logic. The decoupling allows us to potentially extend validity with conditions beyond what linear logic can express, allowing our framework to extend beyond linearity.

There are only two non-trivial rules:

- In sequential composition, the output of the first process must be the input of the second process.
- In optional composition, the outputs of both processes must be equal, so that no matter the branch actually taken we know what the output will be.

We call a process composition valid when it satisfies these rules everywhere within it, and we define this predicate in Isabelle/HOL as shown in Fig. 7.

As an example consider two processes where the input of the second consists of the output of the first together with two further resources. The sequential composition of the first then the second is not valid, because the output of the first is not the input of the second. However, by composing the first in parallel with identity processes on the two further resources we can “fill out” its output to make the sequential composition valid. This situation is visualised in Fig. 8.
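The two rules and the "fill out" pattern can be sketched in Python. This is a simplified illustration and not the predicate of Fig. 7: all names are hypothetical, resources are flat tuples of atom names (so parallel combination is tuple concatenation), and only five node kinds are modelled.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Primitive:
    ins: tuple
    outs: tuple
    label: str


@dataclass(frozen=True)
class Identity:
    res: tuple


@dataclass(frozen=True)
class Seq:
    first: "Process"
    second: "Process"


@dataclass(frozen=True)
class Par:
    left: "Process"
    right: "Process"


@dataclass(frozen=True)
class Opt:
    left: "Process"
    right: "Process"


Process = Union[Primitive, Identity, Seq, Par, Opt]


def inputs(p):
    if isinstance(p, Primitive):
        return p.ins
    if isinstance(p, Identity):
        return p.res
    if isinstance(p, Seq):
        return inputs(p.first)
    if isinstance(p, Par):
        return inputs(p.left) + inputs(p.right)
    # Opt: one non-deterministic resource built from both inputs.
    return (("nondet", inputs(p.left), inputs(p.right)),)


def outputs(p):
    if isinstance(p, Primitive):
        return p.outs
    if isinstance(p, Identity):
        return p.res
    if isinstance(p, Seq):
        return outputs(p.second)
    if isinstance(p, Par):
        return outputs(p.left) + outputs(p.right)
    return outputs(p.left)  # Opt: both outputs agree when valid


def valid(p):
    if isinstance(p, (Primitive, Identity)):
        return True
    ok = all(valid(c) for c in vars(p).values())  # children must be valid
    if isinstance(p, Seq):
        return ok and outputs(p.first) == inputs(p.second)
    if isinstance(p, Opt):
        return ok and outputs(p.left) == outputs(p.right)
    return ok  # Par imposes no extra condition


first = Primitive(("W",), ("A",), "first")
second = Primitive(("A", "B", "C"), ("D",), "second")
filled = Par(first, Par(Identity(("B",)), Identity(("C",))))
```

In this sketch `Seq(first, second)` is rejected, whereas `Seq(filled, second)` is accepted and has input `("W", "B", "C")`.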

Validity ensures that resources are only changed as explicitly stated by actions. Furthermore, composition operations and resource actions at most manipulate the structure of resource combinations and not their contents, to which they do not have access. The only way to change information in resource atoms is via primitive actions, which are all assumptions about the domain. As a result, the information within resource atoms can interact with this notion of composition validity in interesting ways.

Example

On top of the basic connections making sense, validity in our running example ensures that the amount of cash and the amount paid into a machine do not change between actions. By inspecting the primitive actions we use, we can also see that money either moves between the machine and cash, through paying in and refunding, or it is turned into drinks.

Another implication of validity in this domain is that every instance of getting a drink is possible. This is because the action definition is partial, only giving a process for machines that have enough paid in. Thus if we can prove a composition is valid, then every instance of getting a drink within it must have been defined and must have had a machine with enough paid in supplied to it. This rests on the fact that it is impossible to prove that the value returned where the action is not defined is a valid composition.

5 Translating into Linear Logic

In this section we describe our argument for the linearity of resources in process compositions, connecting the compositions to deductions in ILL (see Sect. 2.1).

The argument is, in short, that every composition corresponds to a deduction in ILL with the following properties:

- Well-formedness: For every valid composition the corresponding deduction is well-formed: it follows the rules of ILL.
- Input-Output Correspondence: The conclusion of the deduction is a sequent \(I \vdash O\) where \(I\) and \(O\) are propositions corresponding to the composition’s input and output respectively.
- Primitive Correspondence: Primitive actions of the composition correspond exactly to the premises of the corresponding deduction.
- Structural Correspondence: The structure of the composition matches that of the corresponding deduction.

To express the above, we must embed ILL in Isabelle/HOL. We first mechanise a shallow embedding, allowing us to formally talk about what sequents are valid in the logic. But this is not enough to demonstrate the above properties, so we also mechanise a deep embedding which allows us to formally talk about the deductions themselves and their structure. We prove that the deep embedding is sound and complete with respect to the shallow embedding. We refer the reader to the work of Dawson and Goré [11], for instance, for a further discussion of shallow and deep embeddings in Isabelle/HOL.

When connecting resources to ILL propositions we encounter a difficulty in the many concrete terms that may represent one resource. To resolve this we use the normal form and mirror the rewriting normalisation procedure (see Sect. 3.3) with ILL deductions to show that any equivalent resource terms correspond to logically equivalent propositions.

Note that the connection is from process compositions to linear logic deductions, but not necessarily the other way. There exist well-formed ILL deductions which do not reflect any of our process compositions, for instance because there is an ILL operator to which we do not make any connection. Similarly, the resources and process compositions may be extended to carry information that cannot be expressed in ILL.

5.1 Shallow Embedding of ILL

A shallow embedding of ILL is simpler to define than the deep one and it allows us to reuse much of the automation available in Isabelle. However, it is limited to formalising what sequents are valid within the logic rather than directly talking about the structure of its deductions.

There is already a shallow embedding of ILL by Kalvala and de Paiva [21] distributed with Isabelle, but this is part of the Isabelle/Sequents system and is not compatible with our HOL development. Nevertheless, their approach provides inspiration for some aspects of our mechanisation.

First we mechanise the propositions of ILL as a datatype, mirroring the specification (1), given in Sect. 2.1, along with relevant notation. Its type variable represents the type from which we draw the propositional atoms.

Then we represent the valid sequents of ILL as an inductive relation between a list of propositions (antecedents) and a single proposition (consequent). We give it infix notation and the full definition is shown in Fig. 9.

Every rule in this definition represents one of the inference rules of ILL shown in Fig. 2. However, we adjust their precise statement following the work of Kalvala and de Paiva [21] to remove implicit assumptions which make them less useful for pattern matching. For instance we adjust the \(\otimes _L\) rule by adding \(\Delta \) so that we no longer assume that A and B are the last antecedents:
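The adjusted rule is not reproduced in this text. Based on the description above, it plausibly has the following shape, where the symbol names \(\Gamma\) and \(C\) are assumptions and \(\Delta\) is the added context following \(A\) and \(B\):

\[ \frac{\Gamma, A, B, \Delta \vdash C}{\Gamma, A \otimes B, \Delta \vdash C}\ \otimes_L \]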

Note that, compared to our statement in Sect. 2.1, ILL is often stated with multisets for antecedents instead of lists as in our formalisation. In such contexts the order of antecedents does not matter and the structural inference rule Exchange is made implicit.

With our shallow embedding of ILL (using explicit Exchange, see Fig. 9) we can prove that this move is admissible in the logic. We do so by proving that any two sequents whose antecedents form equal multisets are equally valid, stated more generally in Isabelle as follows:

This fact relies on the theories of multisets and of combinatorics already formalised in Isabelle/HOL. We first note that any two lists forming the same multiset are related by a permutation, which is a sequence of element transpositions. By induction on this sequence of transpositions we show that we can derive each sequent from the other.

5.2 Resources as Linear Propositions

Before relating process compositions to ILL deductions we first need to relate the resources within the former to ILL propositions. Then we can translate the input and output of processes into ILL.

Because resources are defined as a quotient, we first define the translation for resource terms:

Note how all but the parallel case map a resource term constructor directly to a constructor of ILL propositions. The parallel case instead uses a helper function, the proposition compacting operation, to combine the list of translations of the children using the binary \(\otimes\) operator of ILL.

Then we extend this to resources by translating the normal form representative term (see Sect. 3.3):

The crucial property of this translation is that for equivalent resource terms the translation of one can be derived from the translation of the other within ILL. So the logic agrees with the relation we defined. In our shallow embedding this is stated as follows:

with the reverse derivation following by symmetry of resource term equivalence.

In the translation of process compositions to deductions we may construct translations of non-normal terms, so this property is vital to the resulting deductions being well-formed. Consider a situation that may arise from parallel composition of a process with two inputs, say A and B, with a process with one input, say C:

- The combined input is the parallel resource of A, B and C, which translates to A ⊗ (B ⊗ C).
- But the first input is the parallel resource of A and B, which translates to A ⊗ B, and the second input is C, which translates to C.
- The product of those translations is (A ⊗ B) ⊗ C, which is not the same proposition.
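The mismatch can be reproduced with a small model. The Python sketch below is an illustration only: the classes `Atom`, `Parallel`, `Times` and `One`, and the functions `compact`, `translate` and `flatten`, are hypothetical stand-ins for the mechanised definitions. It assumes that compacting nests products to the right, and it reduces normalisation to flattening nested parallel terms.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Atom:          # resource atom, reused as a propositional variable
    name: str


@dataclass(frozen=True)
class Parallel:      # parallel resource term with a tuple of children
    children: tuple


@dataclass(frozen=True)
class Times:         # binary product of ILL propositions
    left: object
    right: object


@dataclass(frozen=True)
class One:           # unit of the product, used for an empty list
    pass


def compact(props):
    """Combine a list of propositions into one, nesting to the right (assumed)."""
    if not props:
        return One()
    if len(props) == 1:
        return props[0]
    return Times(props[0], compact(props[1:]))


def translate(term):
    """Translate a resource term; only the parallel case needs `compact`."""
    if isinstance(term, Parallel):
        return compact([translate(child) for child in term.children])
    return term


def flatten(term):
    """Simplified normalisation: merge nested parallel terms into one."""
    if not isinstance(term, Parallel):
        return term
    merged = []
    for child in map(flatten, term.children):
        merged.extend(child.children if isinstance(child, Parallel) else [child])
    return Parallel(tuple(merged))


a, b, c = Atom("A"), Atom("B"), Atom("C")
combined = translate(flatten(Parallel((Parallel((a, b)), c))))
separate = Times(translate(Parallel((a, b))), translate(c))
```

In this model `combined` and `separate` are different propositions that differ only in bracketing, which is the kind of gap the equivalence property has to bridge inside ILL.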

We prove the property by induction on the resource term equivalence relation. In each case we use Isabelle’s automated methods to find the deduction pattern needed to transform one translation into the other. These methods make use of facts about ILL as well as facts about the proposition compacting operation, such as the following:

5.3 Shallow Embedding is Not Enough

With the shallow embedding of ILL we can start formalising our argument for linearity of process compositions. In this section we describe how this embedding allows us to demonstrate the Well-formedness and Input-Output Correspondence properties and how it is insufficient for the Primitive Correspondence and Structural Correspondence properties. This insufficiency motivates our use of a deep embedding in the following sections.

For every process composition, we have the following ILL sequent formed from translating its input and output (call it the input-output sequent):

We can show that, for every valid process composition, its input-output sequent is valid in ILL given the validity of input-output sequents of primitive actions occurring in the composition (call this the shallow linearity theorem):

We prove this statement by structural induction on the process. In each case we make use of Isabelle’s automated methods to find an ILL sequent derivation from the translation of the input to the translation of the output.

The Well-formedness property is demonstrated by the proof being checked by Isabelle, while Input-Output Correspondence is demonstrated by the conclusion being the input-output sequent of the composition.

Primitive Correspondence is not sufficiently demonstrated by this theorem. Its assumption only says that input-output sequents of the primitive actions are sufficient for the proof. This does not necessarily mean that they are necessary nor that they are used as many times as the primitive actions occur in the composition. Thus we cannot conclude that the primitive actions correspond exactly to the premises of this deduction.

Structural Correspondence is also not demonstrated by the theorem. The structure of its proof does not necessarily follow the structure of the composition. We know that there exists some proof of the input-output sequent, but that proof may not have any further relation to the composition itself and so this is not a satisfying argument for its linearity.

Consider for example process compositions whose input is equal to their output. We can prove the input-output sequent for any such composition to be valid in ILL directly by the Identity inference rule:

In this way, the sequential composition of two primitive actions whose overall output equals its overall input can have its input-output sequent shown to be valid in ILL without using any premise at all.

Moreover, consider the sequential composition of identities on resources A, then on B and then again on A (where A and B are distinct). Its input-output sequent can again be shown to be valid in ILL, despite this composition being invalid because it creates and discards the resource B.

In the following sections we develop a deep embedding of ILL deductions which allows us to demonstrate the Primitive Correspondence and Structural Correspondence properties. With the deep embedding we can say that ILL accepts not just the input and output of a process composition but every step within it.

5.4 Deep Embedding of ILL

A deep embedding of ILL deductions produces “objects” we can directly construct. While this gives up much of the automation that Isabelle would offer during proof, it allows us to build deductions whose structure matches the process composition.

For this, we mechanise the deductions as a datatype. Elements of this datatype are trees whose nodes exactly mirror the introduction rules of the sequent relation (see Fig. 9), with an additional node for explicitly representing premises (the meta-level assumption of a particular deduction). This additional node lets us express contingent deductions.

The type of deductions is parameterised by two type variables. The first represents the type from which we draw the propositional variables, just as it does in the type of propositions. The second represents the type of labels we attach to the premise nodes. We use these labels to distinguish premises that may assume the same sequent but have different intended meaning.

In total this datatype has 22 constructors, each with up to eight parameters. The deduction tree’s semantics are defined via two functions: one expresses the deduction’s conclusion sequent, while the other is a predicate that checks whether the deduction is well-formed. Full definitions are shown in Appendix A, but as an example we consider the Cut rule which is stated in the shallow embedding as follows:

Its deep embedding represents the deduction tree shown in Fig. 10. Note that the two child deductions of the cut node correspond to deep embeddings of the two assumptions in the shallow rule.

The semantic functions take the following values for this rule (see footnote 5):

Additionally, a further function recursively gathers the list of all the premise leaves in a deduction. Continuing the above example, the premises of a cut node are those of its two child deductions:

We verify this deep embedding by proving it is sound and complete with respect to the shallow one. Soundness requires that the conclusions of well-formed deductions are valid sequents given the validity of the premises, stated in Isabelle as:

Completeness requires that for every valid sequent there exist a well-formed deduction with it as conclusion and with no premises:

Because the deduction tree nodes mirror the sequent relation introduction rules, we can prove these statements rather simply by induction either on the deduction structure or on the sequent relation. Note that for completeness we require the deduction to have no premises because otherwise it would be trivial: we could just assume the sequent.
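To make the shape of the deep embedding concrete, here is a minimal Python sketch covering only three node kinds: premise, identity and cut. It is not the mechanised datatype. The class and function names are hypothetical, propositions are plain strings, and the cut rule is assumed to combine a deduction of Γ ⊢ b with one of Δ, b, Ε ⊢ c into Δ, Γ, Ε ⊢ c.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Premise:       # labelled leaf assuming a sequent
    antecedents: tuple
    consequent: str
    label: str


@dataclass(frozen=True)
class Identity:      # leaf for the identity rule: a |- a
    prop: str


@dataclass(frozen=True)
class Cut:           # node carrying the rule's parameters and two children
    gamma: tuple
    cut_prop: str
    delta: tuple
    epsilon: tuple
    goal: str
    left: object
    right: object


def conclusion(d):
    """Conclusion sequent of a deduction as (antecedents, consequent)."""
    if isinstance(d, Premise):
        return (d.antecedents, d.consequent)
    if isinstance(d, Identity):
        return ((d.prop,), d.prop)
    return (d.delta + d.gamma + d.epsilon, d.goal)


def well_formed(d):
    """Check that every cut node's children conclude what the rule expects."""
    if isinstance(d, (Premise, Identity)):
        return True
    return (conclusion(d.left) == (d.gamma, d.cut_prop)
            and well_formed(d.left)
            and conclusion(d.right) == (d.delta + (d.cut_prop,) + d.epsilon, d.goal)
            and well_formed(d.right))


def premises(d):
    """Gather the premise leaves, left to right."""
    if isinstance(d, Premise):
        return [(d.antecedents, d.consequent, d.label)]
    if isinstance(d, Identity):
        return []
    return premises(d.left) + premises(d.right)


make_b = Premise(("A",), "B", "make_b")
make_c = Premise(("B",), "C", "make_c")
chain = Cut(("A",), "B", (), (), "C", make_b, make_c)
```

Because the deduction is a value, its premises can be inspected directly: `premises(chain)` lists both assumed sequents in order, information that a bare validity statement does not expose.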

5.5 Deeply Embedded Equivalence of Resource Translations

In Sect. 5.2 we proved that translations of equivalent resource terms can be derived from one another:

We use this fact to fill gaps between linear logic translations of different but equivalent resource terms, which will be vital when we construct deductions from process compositions in the next section. To make use of this fact in the deep embedding, we first need to describe how a witness deduction is constructed for every case. This mirrors the earlier problem of deciding the resource term equivalence, so our solution is similar to the normalisation procedure described in Sect. 3.3.

We build a deduction from one resource term to its normal form and another deduction from that normal form into the other term (note the opposite direction). These two deductions can then be connected because the two terms have equal normal forms. Thus the core of our solution is a pair of functions which construct, for any resource term, the deductions with the respective conclusions:

In the definitions of these functions we again mirror the normalisation procedure. However, instead of a rewriting step that transforms the resource term, we build a deduction (in the desired direction) proving the transformation is allowed in ILL. These are then chained with the Cut rule until the resource term is normalised.

We prove that these functions in all cases produce well-formed deductions with the above conclusions. Furthermore, we prove that they have no premises and are thus theorems of ILL.

The two functions thus produce ILL deductions that connect a resource term to its normal form and its normal form back to the term. They are used in the next section when constructing deductions from process compositions to fill gaps between linear logic translations of different but equivalent resource terms.

5.6 Process Compositions as Linear Deductions

With the deep embedding of ILL deductions we can prove the shallow linearity theorem from Sect. 5.3, which states that the input-output sequents of process compositions are valid in ILL. But now we can do so in a way that satisfies the Primitive Correspondence and Structural Correspondence properties which we identified in Sect. 5, namely that the premises of the deduction correspond exactly to the primitive actions of the composition and that the deduction matches the process composition in structure.

We do this by recursively constructing an ILL deduction for every process composition, mechanised as the following function:

By associating every constructor of process compositions with a pattern of ILL inferences, this function ensures that the resulting deduction reflects every step of the composition.

We then prove that the resulting deductions demonstrate the Well-formedness, Input-Output Correspondence and Primitive Correspondence properties. That is, we show that for any valid process composition the deduction is well-formed:

and the conclusion is always the input-output sequent:

and that the premises correspond to the primitive actions that occur in the composition (including in number and order):

By the soundness of the deep embedding (see Sect. 5.4) each thus constructed deduction is then a proof for an instance of the shallow linearity theorem from Sect. 5.3 but with added guarantees about the proof’s structure.

We next outline precisely how this function constructs the deductions. In some cases the translation is direct, for instance primitive actions and identity processes are translated into premises and identity rules respectively:

In other cases the deduction being constructed may be more complex, especially where different forms of one resource are involved. For instance with parallel composition we need to:

1. Separate the proposition translation of a parallel resource into translations of the two inputs.

2. Use the children’s deductions to connect translations of their inputs with translations of their outputs.

3. Merge the translations of the two outputs back into one proposition for the combined resource.