Abstract

This study evaluated the fidelity and effectiveness of a parent coach training program for toddlers at risk for autism spectrum disorder and identified factors required for successful training implementation under real-world conditions. Training addressed four tiers of clinical competence and was delivered to early intervention providers across 23 partner agencies in a large Canadian province. Results indicated that mean trainee fidelity scores were within the range reported in previous community-based training studies but there was considerable variability across trainees. Implementation facilitators included agency learning climate, leadership support, and trainee readiness for change. Implementation barriers included time/caseload demands and challenges related to technology learning and infrastructure. Results have implications for parent coach training in community settings.

Introduction

Evidence suggests that participation in evidence-based early intervention (EI) can result in positive and meaningful improvement in the social communication skills of young children with autism spectrum disorder (ASD; Fuller & Kaiser, 2020; Sandbank et al., 2020). In recent years, support has accumulated for a wide array of naturalistic developmental behavioural interventions (NDBIs; Schreibman et al., 2015). The various NDBIs share core components related to the nature of the learning targets, contexts, and teaching strategies that are emphasized. Learning targets that support the development of social communication and related skills establish a foundation for the acquisition of new and more complex behaviours across developmental domains. Learning targets are taught in the context of a child’s ongoing, daily activities (eating, dressing, bathing, playing, and so forth) that are facilitated by a trained intervention agent (i.e., service provider or parent) who provides opportunities for both social engagement and cause-and-effect learning. During face-to-face interactions that incorporate child choice, the adult uses positive affect; responds to the child’s communicative attempts; models appropriate language; creates situations to elicit social interaction and/or communication; and employs teaching strategies that include prompting, shaping, and naturally occurring reinforcement.

Sandbank et al. (2020) conducted a comprehensive systematic review and meta-analysis of experimental group design studies representing 6,240 children with ASD (M age = 54.2 months). They analyzed data for six types of EI: behavioral (27 studies), developmental (14 studies), NDBI (26 studies), sensory-based (7 studies), technology-based (10 studies), and TEACCH (6 studies). Results indicated significant positive effects for developmental and NDBI types only, particularly in the areas of social communication, language, and play skills, with aggregated effect sizes that ranged from Hedges’ g = 0.31 to 0.47. Similarly, Fuller and Kaiser (2020) conducted a systematic review and meta-analysis of social communication outcomes from group experimental studies representing 1,422 children with ASD (M age = 42.6 months). Studies examining behavioral, developmental, and NDBI EI types were included together in the review, and results indicated a significant aggregated effect size (g = 0.36).

In their 2020 study, Fuller and Kaiser examined social communication outcomes in terms of three types of interventionists: clinicians (i.e., researchers or highly trained therapists), parents, and pre/school staff. Outcomes varied by intervention agent, with the highest effect size (g = 0.59) found for clinicians, followed by parents (g = 0.33), and school staff (g = 0.22). In reflecting on this discrepancy, the authors noted that future studies “should include strategies for improving the delivery of effective intervention strategies by parents, school staff, and other community providers” (p. 1697). A similar recommendation was made by Nahmias et al. (2019) on the basis of their meta-analysis of community-based EI programs. They examined communication, social, and adaptive functioning outcomes of 33 studies representing 1,722 children with ASD (M age = 37.4 months) who received educational or behavioral services that were routinely available in school, clinic, university/hospital, or home-based settings. They explicitly did not include studies in which community providers were trained by members of a university-affiliated research team to implement the intervention. They found small but statistically significant gains in cognitive, social, communication and adaptive functioning domains (g = 0.21–0.34). However, they noted that these results contrasted “starkly” (p. 1206) with those reported in meta-analyses of university-based clinical trials and, like Fuller and Kaiser, noted the need to bridge the science-to-service gap in publicly funded health care through “strategies that increase the successful implementation of demonstrated-effective interventions for young children with autism in community settings” (p. 1207).

Training Community-Based Providers

One of the challenges related to NDBI provision in the community is how to provide training that accommodates the constraints experienced in such settings. NDBI implementation—which, by definition, takes place during naturally-occurring routines—requires a high level of clinical judgment and in-the-moment decision-making on the part of an intervention agent. As a result, some research suggests that NDBI strategies may be more difficult to learn than more highly structured, adult-led approaches such as discrete trial teaching and may thus require more practice time and coaching support (Stahmer et al., 2015). Reflecting this, some NDBI training models require interventionists to complete a rigorous training program and demonstrate a high level of implementation fidelity (typically, ≥ 80% of component skills) in order to become certified as therapists or trainers. This requires a significant investment of resources for initial training, practice time, and ongoing supervision until the desired benchmark is achieved. Most community agencies have access to neither qualified training personnel nor the additional funding or revenue that is needed to meet these requirements (Glasgow & Emmons, 2007).

To address some of these concerns, NDBI researchers have examined the effectiveness of various streamlined approaches for training community-based providers via online instruction (e.g., Rogers et al., 2020; Rooks-Ellis et al., 2020; Vismara et al., 2009), in vivo instruction (e.g., Stahmer et al., 2020), or a combination of the two (e.g., Chang et al., 2016; Shire et al., 2017). Results have been mixed with regard to post-training fidelity. A mean fidelity criterion of ≥ 80% across all participants was reported in some studies (e.g., Shire et al., 2017; Wainer et al., 2017) but not in others (e.g., Chang et al., 2016; Shire et al., 2020; Vismara et al., 2009). Vismara et al. (2013) questioned whether a fidelity criterion of 80% is an appropriate standard to expect community professionals to achieve, given the constraints that they typically experience compared to university-lab settings that might have the resources, caseloads, and protected time to help clinicians reach higher fidelity standards. Others have echoed this concern, noting that the exact relationship between intervention fidelity and intervention outcome is still in question and that it is unclear how much fidelity is required to produce meaningful outcomes (Durlak & DuPre, 2008; Schoenwald et al., 2011; Wainer & Ingersoll, 2013).

Factors That Affect Training Fidelity

In line with the current movement to examine implementation outcomes in a purposeful, deliberate manner (Proctor et al., 2011), attempts have been made to explain the variability of training fidelity outcomes by examining contextual factors that act as either facilitators or barriers. For example, Wainer et al. (2017) included a measure of both potential and actual barriers in their Project ImPACT study. They attributed the positive fidelity outcomes they achieved to factors that included administrative support for the training, the interactive and collaborative nature of the training program, and compatibility of the Project ImPACT model with trainees’ current practices. In contrast, Wilson and Landa (2019) explored both training-specific and systemic barriers related to Early Achievements, another NDBI model. Barriers specific to training included the novelty of the model (e.g., the requirement that trainees identify specific developmental learning goals), its lack of alignment with current practices, and a lack of understanding of the model by administrators. Because this information was collected during the study as well as afterward, researchers were able to address the barriers and implement solutions to resolve at least some of them. Vismara et al. (2013) suggested that attitudes about the impact of training in addition to workplace barriers such as time, caseload constraints, and lack of administrative support affected providers’ ability to submit required videotapes for post-training support and fidelity assessment. Despite such challenges, a recent study suggested that EI providers in general endorse the need for systematic NDBI training for children both with and without an ASD diagnosis (Pickard et al., 2021). By understanding the impact of training on provider attitudes, coupled with feasibility of using the intervention, realistic training recommendations can follow for community implementation.

A Multi-Method Approach to Assess Training Outcomes

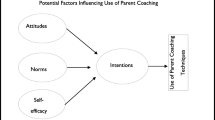

Researchers in the field of implementation science have suggested that formative assessment data related to contextual factors such as barriers and facilitators can and should be routinely collected alongside more traditional outcome data from the outset of any intervention (Zamboni et al., 2019). They argue that such efforts are essential in order to make ongoing adjustments to the initial implementation plan and to support later intervention “scale-up,” that is, the expansion of an intervention that has been shown to be effective under controlled conditions to real-world conditions, while retaining effectiveness. In this regard, Glasgow and Emmons (2007) advocated the use of “practical trials” (p. 421) that combine both quantitative and qualitative methods to assess multiple outcomes that are relevant to community translation. In line with this recommendation, Miller’s Pyramid of Clinical Competence (Miller, 1990) offers a framework that can be used to examine training outcomes using multiple methods of assessment at multiple time points (Fig. 1). Miller noted that “no single assessment method can provide all the data required for judgement of anything so complex as the delivery of professional services….” (p. S63). The first two levels of the framework (Knows and Knows How) focus on learner cognition and the next two levels (Shows How and Does) focus on learner behavior. The Knows level recognizes the importance of mastering the foundational terminology and principles of a practice. A learner then applies this knowledge to demonstrate specific skills in circumscribed practice situations (i.e., Knows How). At the third level (Shows How), the learner is able to integrate knowledge and skills in complex performance situations, and at the top level (Does) the learner functions independently (or nearly so) in community practice. With regard to community-based NDBI training, use of Miller’s framework may help to elucidate factors that contribute to the variability of training outcomes, as described previously.

Fig. 1 Miller’s (1990) pyramid of clinical competence and phases of PACE coaching training

Study Goals and Research Questions

This paper describes the results of a practical trial using a multi-method approach to examine NDBI parent coach training as one component of the Parent and Child Early (PACE) Coaching Project, a community-based research project conducted in the province of British Columbia, Canada. One goal of the PACE Coaching project was to evaluate the effectiveness of a training package based on the Early Start Denver Model (ESDM), a manualized NDBI (Rogers et al., 2012, 2021). A second goal was to identify processes required for successful implementation under real-world conditions (see Smith et al., 2021), in line with the National Implementation Research Network (NIRN) Stages of Implementation (Fixsen et al., 2005) and the Consolidated Framework for Implementation Research (CFIR; Damschroder et al., 2009). These goals were intended to address gaps in the current literature related to potential sources of variability in training fidelity across participants and identification of facilitators and barriers to training and implementation. We applied Miller’s (1990) framework to structure the levels of training and related outcome assessments. Both quantitative and qualitative data were collected in order to answer the following questions: (a) what facilitators and barriers to training were anticipated and what mitigation strategies were instituted to address them?, (b) what were the outcomes immediately post-training and what factors appeared to affect variability in training fidelity at this stage?, and (c) what were the outcomes following parent coaching implementation and what factors appeared to affect variability in training fidelity at this stage?

Method

Setting

British Columbia (BC) is located in Western Canada and spans 944,735 square kilometers (i.e., 364,764 square miles, between Alaska and Texas in size), with an estimated population of 5.1 million (Statistics Canada, 2020), approximately 40% of whom live outside of urban population centres (Statistics Canada, 2016). Unlike in the United States, where children with developmental concerns under age 3 receive EI services through the publicly subsidized Individuals with Disabilities Education Act (IDEA) Part C system, children in many BC communities receive such services from provincial government-funded Child Development Centres (CDCs) and health units. While not a requirement, many CDCs belong to a provincial agency, the BC Association for Child Development and Intervention (BCACDI), whose mission is to advocate for high quality services and act as a coordinating body for member agencies. In 2017, the research team contacted the Executive Directors (EDs) of 20 of the 32 BCACDI member agencies across small, medium, and large population centres to introduce the PACE Coaching project and to inquire about their willingness to meet and discuss participation. The research team also invited participation of 12 agencies that provided support to Indigenous children and families (First Nations, Metis, Inuit) but were not members of the BCACDI. None of the invited agencies had been involved in previous parent coaching research studies. In total, EDs of 26 out of 32 agencies expressed interest and met with members of the research team to learn more about the project (e.g., purpose, potential benefits, timeline, personnel commitment). Subsequently, the EDs of 23 agencies (16 CDCs, 7 Indigenous) agreed to participate; those who declined cited caseload and personnel constraints as the primary barrier.

Participants

The ED of each partner agency was asked to nominate between 1 and 4 individuals to be trained as parent coaches. Criteria for selection included: (a) experience with young children with ASD and/or other developmental disabilities; (b) no previous training in the ESDM or another NDBI; (c) willingness to participate in the project for a 3-year period (i.e., no known plans to move out of province, change employment, go on leave, etc.); and (d) interest in developing skills as a parent coach and participating in a research project. EDs were free to nominate individuals with a range of educational backgrounds or job descriptions, including early educators (EEs) with college diplomas or undergraduate university degrees (e.g., infant development consultants, early childhood interventionists) and allied health professionals (AHPs), most of whom had graduate degrees (e.g., speech-language pathologists, occupational therapists). The project team emphasized the importance of selecting trainees whose involvement would have the most positive/least negative impact on service delivery in the agency as a whole. At the outset, 39 individuals from 23 different agencies were nominated as trainees. None had experience with parent coaching as defined in this study, although most had completed coursework related to the provision of other types of parent training and had provided such support for > 5 years. Subsequently, eight trainees representing seven different agencies withdrew during the formal training period; seven withdrew because of caseload demands that constrained their ability to complete the training and one withdrew for a maternity leave. Demographic data for trainees who completed all formal training (n = 31) are displayed in Table 1.

Coach Training Procedures

Coach training, which was financed by the PACE Coaching research grant, took place in four phases that were roughly aligned with the four levels described by Miller (1990): Training Preparation (Knows; Fig. 1), PACE 1 and PACE 2 (Knows How and Shows How), and Implementation (Does). With the exception of the Training Preparation phase, trainees were supported by three graduate-level trainers who were certified as ESDM therapists and parent coaches. All three trainers lived in the province and had previously worked together for several years to provide ESDM training.

Training Preparation

In order to familiarize trainees with the foundational knowledge of both the ESDM and coaching practices (i.e., Knows; Fig. 1), they completed an online introductory module that was developed for the project and watched four online modules, Help is in Your Hands (https://helpisinyourhands.org), that were made available to the project by one of the co-investigators. In addition, the research team and trainers conducted 15 regional, face-to-face meetings with staff of 23 potential agencies to introduce the project and gather information about agency motivations, strengths, anticipated barriers and challenges, and needs. Meeting attendees (N = 94) included Executive Directors, potential trainees, qualified clinical specialists in ASD, and other interested staff.

PACE 1

The first formal training phase, PACE 1, was designed to teach trainees to provide a modified version of ESDM to toddlers with suspected ASD (see Mirenda et al., 2021). Training was delivered in two parts: an intensive 3-day workshop (i.e., Knows How; Fig. 1) followed by a 12-week practice period (i.e., Shows How; Fig. 1). Prior to the workshop, trainees were provided with an ESDM book written for parents (Rogers et al., 2012) and encouraged to read at least the first few chapters on interactive strategies to help children connect, communicate, and learn. Face-to-face regional workshops with 3–5 trainees each were then conducted at a partner agency by a trainer who travelled to the region. Each workshop consisted of brief didactic lectures by the trainer on specific topics, interspersed with brief (~ 10 min) hands-on practice sessions with a toddler or early preschooler with ASD from the local community. Agency representatives at each workshop location invited one or more parents of children with ASD to participate with their child. Parents provided informed consent and attended the workshop as observers for all 3 days. During the workshop, trainees took turns working with the child while the trainer observed and coached them to use strategies for: (a) increasing child attention and motivation; (b) increasing communication; (c) creating and implementing joint activity routines; and (d) using the ABCs of learning (antecedent-behavior-consequence) to shape alternatives to unwanted behavior. Parents were reimbursed for mileage to and from the CDC and were also provided with an honorarium of $50 per day for participating, which was funded by the research grant supporting the PACE project.

Following the workshop, trainees practiced administering an assessment tool that was introduced during the workshop, the Community-ESDM (C-ESDM) Curriculum Checklist (Rogers et al., 2013), and implementing PACE 1 strategies with a young child with ASD in their agency. In some agencies, this was the same child who participated in the workshop; in other cases, this was a different child whose parent provided informed consent to participate. Parents were reimbursed for mileage and were also provided with an honorarium of $10 per practice session for participating, which was funded by the project. For most trainees, the practice period occurred over a 12-week period, with the expectation that they would complete one 1-h practice session per week. During the practice period, trainees remained in remote contact with one another and with their trainer via regional peer “pod” meetings that were conducted via a secure online platform. Pod members met weekly or biweekly and were also able to meet privately with their trainer, on request. During pod meetings, trainees and the trainer engaged in a wide range of activities, including: (a) troubleshooting administration of the C-ESDM Checklist; (b) identifying priority intervention targets based on the Checklist; (c) writing step-by-step objectives to reflect the targets; and (d) reviewing and sharing resources. Trainees were also asked to use an iPad to record videos of practice sessions at least twice during the 12-week period, upload the videos to the secure online platform, and view them with their trainer and pod members during pod meetings to reflect on progress and receive feedback.

PACE 2

The second formal training phase, PACE 2, was designed to teach trainees how to coach parents to use the simplified version of ESDM with their child at risk for ASD. All trainees who completed PACE 1 advanced to PACE 2, regardless of their fidelity scores at completion of PACE 1. PACE 2 training was again delivered in two parts: an intensive 3-day workshop (i.e., Knows How; Fig. 1) followed by an extended practice period (i.e., Shows How; Fig. 1). Face-to-face regional workshops were conducted at a partner agency by the same trainer as in PACE 1, and, in most cases, with the same small group of trainees. As in PACE 1, an agency representative at each workshop location invited parents of toddlers with ASD to participate in the workshop with their child. Parents provided informed consent and attended the workshop with their child for all 3 days. During the workshop, brief didactic lectures were interspersed with 60-min, hands-on practice sessions with the parent and child; thus, unlike in PACE 1, parents were actively involved in PACE 2. Coaching followed the steps and qualities recognized by Hanft et al. (2004) that empower parents to (a) recognize what they are doing to promote learning for the child (i.e., reflection); (b) practice and evaluate new strategies to promote learning (i.e., action and evaluation); and (c) create ongoing learning opportunities for the child when the coach is not present (i.e., initiation). The model emphasizes the importance of collaborative, balanced parent-coach partnerships through listening and planning to cultivate decisions and ideas together; the coaches’ ability to observe and reflect on parent–child interactions; and the coaches’ ability to coach with respect, non-judgment, and sensitivity (see Rogers et al., 2021). Parents received the same reimbursement and honorarium as in PACE 1.

Following the PACE 2 workshop, trainees worked with the parent of a young child with ASD in their local community to practice the C-ESDM skills taught in PACE 1 and the parent coaching strategies taught in PACE 2. In some agencies, this was the same parent and child who participated in the workshop practice; in other cases, this was a different parent who provided informed consent to participate. Parents received the same reimbursement and honorarium as in PACE 1. For most trainees, the practice period occurred over a 16–20-week period, with the expectation that they would complete one 60-min practice session per week. This practice period was longer than the one that followed PACE 1, primarily because the coaches were required to combine the C-ESDM skills they learned in PACE 1 with the coaching skills they learned in PACE 2. During the practice period, most trainees continued to stay in contact with one another and/or with their trainer via bi-weekly online pod meetings. Most trainees also continued to upload videos of themselves in coaching sessions with the practice parent and child in order to get feedback from their trainer and from the other members of their pod. The hours devoted to attending and travelling to workshops by trainers and coaches as well as limited travel expenses were documented and reimbursed from the PACE Coaching study grant.

Implementation

All trainees who completed PACE 2 participated in a randomized controlled trial (RCT) that was part of the overall PACE Coaching project and was aimed at parents of toddlers at risk for ASD (see Mirenda et al., 2021). Based on input from both trainees and trainers following PACE 2, trainees continued to receive intermittent trainer support while implementing coaching in the RCT (i.e., Does; Fig. 1). The frequency and focus of trainer support during implementation varied across trainees and were largely dependent on factors such as trainees’ skill and confidence in the delivery of parent coaching as well as the number and complexity of the family(ies) they were assigned to coach. Most trainees coached one family during this phase but some coached two or three.

Measures and Analyses

Both quantitative and qualitative measures were used to examine participant motivations, anticipated contextual barriers, trainee outcomes, and implementation influences. Measures are organized by training phase in this section (see the Supplemental Table for a summary).

Training Preparation Measures

Trainees documented the time required to complete the introductory training module. They also completed a 25-item online multiple-choice quiz that covered material in both the introductory module and Help is in Your Hands and were required to achieve at least 80% correct within three opportunities. The time required, number of attempts required to meet criterion, and mean scores were calculated.
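The quiz criterion described above can be illustrated with a minimal sketch. It assumes each attempt is recorded as a count of correct answers; the function name and its parameters are hypothetical and are not taken from the project's materials.

```python
def attempts_to_criterion(correct_counts, n_items=25, criterion=0.80, max_attempts=3):
    """Return the attempt number (1-based) on which the criterion was first met.

    correct_counts: number of items answered correctly on each successive attempt.
    Returns None if the criterion was not met within max_attempts.
    """
    for attempt, correct in enumerate(correct_counts[:max_attempts], start=1):
        # Proportion correct on this attempt must reach the criterion (80% by default).
        if correct / n_items >= criterion:
            return attempt
    return None


# Hypothetical trainee who scored 18/25 and then 21/25.
print(attempts_to_criterion([18, 21]))
```

With a 25-item quiz, the 80% criterion corresponds to at least 20 correct answers.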

The pre-training conversations with agency staff about motivations, strengths, anticipated barriers and challenges, and agency support needs were audio-recorded, transcribed verbatim, and coded inductively using interpretive description (Thorne, 2008). Coding was conducted using NVivo version 12 software (QSR International, 2018) by one of the co-investigators (the primary coder) and two graduate research assistants (RAs). Initial coding was followed by a series of consensus meetings to resolve disagreement and a written summary of the coded data was provided to the Executive Director of each agency to check for accuracy. At the pre-training meetings, attendees also completed a demographic form and online Context Tool survey that was modified from the Alberta Context Tool (Estabrooks et al., 2009), a multidimensional measure of organizational context for healthcare settings. Seven subscales with items pertaining to organizational structure, connections, leadership, feedback, staffing, space, and time were scored from 1 (strongly disagree) to 5 (strongly agree). The context tool also explored the frequency with which respondents used various structural and technology resources; the latter category was particularly important because we planned to utilize a secure, online platform throughout the training period for pod meetings, individual consultations, and video sharing between trainers and trainees. Cronbach’s alphas, total scores, means, and standard deviations were calculated for each Context Tool subscale.
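For readers unfamiliar with Cronbach’s alpha, the following is a minimal sketch of the standard formula, alpha = (k / (k − 1)) × (1 − sum of item variances / variance of total scores), where k is the number of items in a subscale. The function name and the example ratings are hypothetical; the study itself does not report how alpha was computed or which software was used for this step.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one subscale.

    responses: one list per respondent, each holding that respondent's
    ratings on the k items of the subscale (all lists the same length).
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item across respondents.
    item_variances = [variance([r[i] for r in responses]) for i in range(k)]
    # Sample variance of each respondent's total subscale score.
    total_variance = variance([sum(r) for r in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings from four respondents on a three-item subscale (1 to 5).
ratings = [[4, 5, 4], [3, 3, 2], [5, 4, 5], [2, 2, 3]]
print(round(cronbach_alpha(ratings), 3))
```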

PACE 1 Measures

Following the PACE 1 workshop, trainees completed a workshop evaluation form with four items, rated on a scale of 1 (strongly disagree) to 5 (strongly agree). During each trainee’s final hands-on workshop session with a child with ASD, trainers completed a 24-item Therapist Practice Checklist (TPC) that served as a baseline assessment of trainee skills for working with the child (see Parent Progress Rating System, https://helpisinyourhands.org/provider/resourcecenter). Example items included “positions self inside the child’s spotlight (face to face and close enough to touch),” “follows child’s interests into an activity or action that the child prefers,” and “provides learning opportunities about every 30 s.” All trainers had previously established reliability on the TPC as part of the ESDM certification process. During the practice period, trainees submitted regular Activity Logs to document the time they spent in (a) direct practice with a child; (b) pod meetings; (c) individual consultations with the trainer; and (d) other activities (e.g., C-ESDM assessments, session preparation, and documentation). This information was submitted as project data and was also used to reimburse partner agencies for trainees’ project time. At completion of the 12-week practice period, trainees used a secure online platform to upload a 10-min video-recorded session with their practice child, and trainers used the TPC again to score the video. Trainees also completed a Self-Assessment Survey (SAS) consisting of seven positive statements that reflected the core strategies taught during PACE 1 (e.g., “I am able to write appropriate objectives and steps based on the results of the C-ESDM Checklist”), rated on a scale of 1 (strongly disagree) to 5 (strongly agree).

Activity Log hours were summed and the percent of items scored as + (present and meets the item definition) on the TPC was calculated. Total scores, means, and standard deviations were calculated for the workshop evaluations, SASs, and TPC scores. Independent samples t-tests were used to compare both total hours and TPC scores for EE and AHP trainees. TPC score change over Time by trainee Group was examined using a GLM repeated measures analysis of variance (ANOVA). Pearson correlations were used to examine associations between TPC scores and both PACE 1 practice hours and pod + trainer support hours.
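As an illustration of two of these calculations, the sketch below computes a checklist fidelity percentage and an independent samples t statistic. It assumes item codes are stored as the strings "+" and "-" and that the t-test pools variances (Student's form); the source does not state which form of the test was used, and the function names and example data are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def percent_present(item_codes):
    """Percent of checklist items coded '+' (present and meets the item definition)."""
    if not item_codes:
        raise ValueError("checklist has no scored items")
    return 100.0 * sum(1 for code in item_codes if code == "+") / len(item_codes)


def independent_t(group_a, group_b):
    """Student's t statistic and degrees of freedom, assuming equal variances."""
    na, nb = len(group_a), len(group_b)
    df = na + nb - 2
    # Pooled variance weights each group's sample variance by its degrees of freedom.
    pooled = ((na - 1) * variance(group_a) + (nb - 1) * variance(group_b)) / df
    t = (mean(group_a) - mean(group_b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, df


# Hypothetical 24-item checklist with 18 items scored '+'.
print(percent_present(["+"] * 18 + ["-"] * 6))
```

A p value would additionally require the t distribution, which a statistics package would normally supply.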

PACE 2 Measures

As for PACE 1, trainees submitted a post-workshop evaluation form, Activity Logs during the practice period, and a SAS at the end of the practice period consisting of 10 positive statements that reflected the core coaching strategies taught in this phase (e.g., “When coaching a parent, I consistently implement the five ‘Warm-up Joint Activity’ strategies”). Items in both the evaluation form and the SAS were rated on a scale of 1 (strongly disagree) to 5 (strongly agree). At completion of this phase, trainers also assessed trainee skills during a 60-min coaching session using a 40-item manualized Coaching Skills Checklist (CSC) that was adapted by the three trainers and one of the co-investigators from Rogers et al. (2021). The CSC items address coaching session management and coaching relationship skills with the parent, and each was scored as + (present and meets the item definition) or − (absent or does not meet the item definition). Trainees also submitted a 21-item Coach Engagement Survey that was adapted from the Tripod Engagement Questionnaire (Ferguson, 2012) to document their overall training experience along six dimensions: (a) trust (e.g., “If a person wanted to support toddlers at risk for autism, participating in PACE Coaching would be a great idea”); (b) cooperation (e.g., “So far, I have performed my best quality parent coaching as a result of this project”); (c) diligence (e.g., “I have pushed myself hard to completely understand the practices discussed in this project”); (d) satisfaction (e.g., “Participating in this project makes me a better early interventionist”); (e) relevance (e.g., “I often feel like this project has nothing to do with real circumstances of my job”; this item was reverse scored); and (f) stress (e.g., “The PACE Coaching Project feels like a safe experience”). Items were scored on a scale of 1 (totally untrue) to 5 (totally true), and reverse-scored items were recoded prior to analysis.
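Reverse scoring on a 1 to 5 scale is conventionally done by subtracting the rating from the sum of the scale endpoints, so that 1 becomes 5 and 5 becomes 1. The sketch below shows this convention; it is an assumption about how the recoding was carried out, and the function name is hypothetical.

```python
def reverse_score(rating, low=1, high=5):
    """Recode a negatively worded item so that higher values are more favorable."""
    if not low <= rating <= high:
        raise ValueError("rating outside the scale range")
    # Mirror the rating around the scale midpoint.
    return low + high - rating


# A rating of 4 ("mostly true") on a negatively worded item becomes 2.
print(reverse_score(4))
```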

Total scores, means, and standard deviations were calculated for the workshop evaluation, SAS, and Coach Engagement Survey. Cronbach’s alphas were calculated to examine internal consistency of the Coach Engagement Survey subscales. Activity Log hours were summed and an independent samples t-test was used to compare hours for EE and AHP trainees. The percent of items scored as + (present and meets the item definition) on the CSC was calculated for each trainee, and mean scores and standard deviations were calculated across trainees. Pearson correlations were used to examine associations between CSC scores and both PACE 2 practice hours and pod + trainer support hours.
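For readers unfamiliar with the internal consistency statistic, the sketch below shows one common way to reverse score a 1 to 5 item and compute Cronbach’s alpha for a subscale. It is an illustration only and assumes the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores); the function names and the example responses are hypothetical and are not project data.

```python
from statistics import variance
from typing import List, Sequence


def reverse_score(rating: int, low: int = 1, high: int = 5) -> int:
    """Flip a rating on a low..high scale (e.g., 5 becomes 1 on a 1-5 scale)."""
    return low + high - rating


def cronbach_alpha(responses: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one subscale.

    responses: one row per respondent, one column per item.
    Assumes complete data (no missing ratings).
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    columns: List[tuple] = list(zip(*responses))
    item_variance_sum = sum(variance(col) for col in columns)
    total_variance = variance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (n_items / (n_items - 1)) * (1 - item_variance_sum / total_variance)


# Hypothetical 3-item subscale rated by 4 respondents; the third item is
# negatively worded, so it is reverse scored before alpha is computed.
raw = [[5, 4, 1], [4, 4, 2], [3, 3, 3], [5, 5, 1]]
scored = [[a, b, reverse_score(c)] for a, b, c in raw]
print(round(cronbach_alpha(scored), 2))
```

Reverse scoring before computing alpha matters because a negatively worded item left on its original scale would correlate negatively with the other items and depress the estimate.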

In addition, at PACE 2 completion, trainees and EDs engaged in semi-structured interviews with a member of the research team to explore their experiences during and responses to the training as well as facilitators and barriers that influenced training outcomes. The interviews were digitally recorded, transcribed verbatim, and analyzed by one of the research team co-investigators and three graduate RAs, working in pairs. The CFIR (Damschroder et al., 2009) was used as the deductive coding framework and data were entered in NVivo 12. The CFIR provides a menu of 36 constructs across five domains: Intervention Characteristics, Inner Setting Influences, Outer Setting Influences, Characteristics of Individuals, and Process of Engaging Participation and Conducting the Implementation. Coders met regularly to review codes, discuss differences, and refine definitions of CFIR constructs to fit the PACE Coaching implementation context. This process was followed by case summaries and coding of each CFIR construct as positive, negative, neutral, mixed, or missing.

Implementation Measures

As noted previously, trainees continued to receive intermittent support from their trainer while they implemented parent coaching during the RCT that followed PACE 2. During this phase, trainees continued to submit Activity Logs documenting the time spent on a wide range of parent coaching activities used in a subsequent cost analysis. Near the end of parent coaching with each family in the RCT, trainees uploaded a 60-min video-recorded coaching session that was scored by a trainer other than one with whom they worked during the project, using the same CSC that was used post-PACE 2. At project completion, they also completed a 56-item Coaching Endpoint Survey developed for the project that sought feedback about their level of confidence with regard to specific skills acquired during the project. Skills were grouped into four clusters: (a) PACE 1 skills for working directly with a child, 33 items; (b) skills for assessment and goal setting during coaching, 6 items; (c) session management skills for coaching, 6 items; and (d) coaching relationship skills, 11 items. Each item was scored on a sliding scale of 1 (cannot do at all) to 100 (can do independently), with 50 as the midpoint (can do with support). Cronbach’s alphas, means, and standard deviations were calculated for the Coaching Endpoint Survey subscales. Activity Log hours were summed and an independent samples t-test was used to compare hours for EE and AHP trainees. The percent of items scored as + (present and meets the item definition) on the CSC was calculated for each trainee, and mean scores and standard deviations were calculated across trainees. Pearson correlations were used to examine associations between final CSC scores and both implementation hours and trainer support hours.

Trainees also participated in a face-to-face focus group conducted at their agency by a member of the research team, together with other agency personnel who were involved in the project (e.g., the Executive Director). Specific to training, participants were asked to reflect on the sufficiency of various components of PACE 1 and PACE 2 training (e.g., workshops, practice periods), the importance of ongoing trainer support during implementation, unexpected challenges that were encountered during training and/or implementation, and the extent to which they planned to continue to use parent coaching in the agency. As with the PACE 2 interviews, the focus groups were digitally recorded, transcribed verbatim, and coded deductively using the CFIR framework (Damschroder et al., 2009).

Results

Training Preparation (Knows; Fig. 1)

This section provides results related to the first research question that sought to identify the facilitators and barriers to training that were anticipated and the mitigation strategies that were instituted to address them. As noted previously, training preparation consisted of three activities: (a) completion of online modules by trainees; (b) face-to-face meetings between members of the research team, trainers, and staff from participating agencies; and (c) completion of a demographic survey and Context Tool by agency staff who attended the face-to-face meetings.

On average, completion of the introductory module (not including Help is in Your Hands) required 4.2 h of trainee time. The target criterion for the 25-item multiple-choice quiz was 80% correct within three attempts. The mean across trainees was 88% correct, with 54% completing the quiz successfully after one attempt, 36% requiring two attempts, and 10% requiring three attempts.

As summarized in Table 2, analysis of the transcripts from the training preparation meetings revealed three primary motivations for project participation. Staff from most agencies expressed interest in expanding their personal skill set as well as their agency’s toolkit of approaches to support families. The potential for building parent capacity and increasing service value was also seen as important. Finally, many staff expressed explicit interest in research participation as a way to improve service delivery across the province.

Pre-training meeting transcripts also revealed three potential agency and three potential family barriers prior to training (Table 2). Agency barriers included high caseload demands, limited staff access to and familiarity with technology, and the need to accommodate families across a wide range of socio-economic, language, and cultural groups. Potential barriers for families included a variety of family stressors that might make parent coaching implementation more difficult (e.g., poverty, unemployment, history of trauma), stigma related to a potential autism diagnosis, and limited access to technology.

Results of the Context Tool that was completed at the pre-training meetings revealed several strengths across agencies and echoed many of the barrier themes that emerged from the conversations. Cronbach’s alpha, an indicator of subscale reliability, ranged from 0.65 to 0.90 for the seven primary subscales, with a mean of 0.84 (generally, scores of 0.65 and above reflect adequate reliability; Taber, 2017). In general, participants viewed their agency’s organizational culture, leadership, and available space as relative strengths (i.e., mean scores between 4 and 5 on the measure, corresponding to agree-strongly agree). Formal and informal connections among people, the availability of regular feedback, adequacy of time to deliver services, and the availability of structural supports (e.g., in-service workshops) were generally rated as adequate but not high (i.e., mean scores of 3.0–3.99), with only a few low scores (i.e., < 3.0). Staff adequacy was rated low in eight agencies, adequate in 11, and was seen as a strength in only four, echoing the concerns about caseload demands that emerged in the conversations. Finally, specific questions about technology revealed that desktop/laptop computers and WIFI/Internet were available in all agencies, but fewer than 50% endorsed frequent use of either mobile/cellular data or text messaging. Similarly, iPad/Android devices and computer programs to plan and track data were either never/rarely used or used only occasionally in > 90% of agencies. Live 2-way video meetings with colleagues or families (Skype, Zoom, etc.) were never/rarely used (NOTE: this was pre-COVID-19).

Mitigation Strategies

Following the training preparation meetings and prior to the start of PACE 1, the research team made changes to the original training plan in order to build on strengths and mitigate the impact of anticipated barriers. In response to the anticipated technology access barrier, we provided iPads to all trainees and to other agency staff who were directly involved in the project. We also dedicated a research assistant and a member of the research team to provide support for technology learning and troubleshooting throughout the project. We attempted to mitigate the project’s impact on high caseloads by reducing the amount of data trainees were expected to submit to the project and reimbursing agencies for all coach training activity time (i.e., workshop time, as well as all practice time activities), so that EDs had the option of backfilling the time coaches dedicated to the project. We also provided additional research funds to cover agency administrative costs incurred during training. Awareness of anticipated family barriers resulted in a decision to reimburse parents who participated in training for mileage and dependent child care and to provide a per diem honorarium as well. Finally, awareness of the need to facilitate formal and informal connections both within and across agencies led us to distribute quarterly newsletters with progress updates about coach training and other project activities.

PACE 1 Outcomes (Knows How and Shows How for Skills with Child; Fig. 1)

This section and the one that follows (i.e., PACE 2 Outcomes) provide results related to the second research question that aimed to identify immediate post-training outcomes and the trainee and contextual factors that appeared to affect variability in training fidelity. All 39 trainees completed the PACE 1 workshop. Workshop satisfaction scores were high, with means that ranged from 4.4 to 4.6 (SD = 0.49) and little variability across trainees or sites. Subsequent to the workshop, two trainees withdrew from the project because of caseload demands at their agency that prevented continued participation. Twenty-five of the 37 trainees who completed PACE 1 were early educators (EEs), most of whom had college or bachelor’s degrees, and 12 were allied health professionals (AHPs), most of whom had graduate degrees. On average, they recorded 11.9 h of practice time with a child; however, 19 (51%) recorded fewer than 12 h (range 4.0–11.0 h), primarily because of delays in organizing practice sessions after the workshop. All trainees participated in pod meetings, 1:1 sessions with their trainer, or (in most cases) both. Independent samples t-tests indicated no significant difference (p > 0.05) in time spent by trainees in the two groups for total PACE 1 practice time or for any individual practice activities in this phase.

Both SAS and TPC scores (Table 3) were used to evaluate PACE 1 outcomes. The mean post-PACE 1 SAS score across all 10 items was 4.1 across the EE and AHP groups. Results for TPC scores indicated a main effect for both Time [F(1,34) = 14.91, p < 0.001] and Group [F(1,34) = 5.51, p = 0.023], with no Time × Group interaction. TPC scores improved significantly in both groups but mean EE scores post-training (80.1%) were significantly lower than mean AHP scores (89.8%). Fidelity scores ≥ 80% were achieved by 54.2% of EEs and 91.7% of AHPs. Pearson correlations between TPC scores and both PACE 1 practice hours and pod + trainer support hours were not significant (r = − 0.32, p = 0.07 and r = − 0.19, p = 0.32, respectively).

PACE 2 Outcomes (Knows How and Shows How for Parent Coaching Skills; Fig. 1)

Thirty-seven trainees completed the PACE 2 workshop but six withdrew prior to completion of the practice period, primarily because of caseload demands at the partner agency. The remaining EE trainees spent a mean of 16.8 h (SD = 6.76) in practice coaching activities and AHP trainees spent a mean of 12.8 h (SD = 3.77) in practice coaching, with no significant difference between the two groups (p > 0.05). Both SAS and CSC scores were used to examine PACE 2 outcomes. The mean PACE 2 SAS score was 4.2 across groups, indicating trainees’ confidence in using the coaching tools taught during PACE 2.

Because the trainers were focused on providing trainee support during parent coaching sessions at the PACE 2 workshop, it was not possible for them to record baseline CSC data simultaneously. Furthermore, because of technical difficulties uploading CSC videos for scoring at completion of the practice period, post-PACE 2 CSC scores were only available for 13 of the 31 trainees (41.9%) who completed this training phase. The 13 trainees (eight EE and five AHP) were from eight different partner agencies located across small, medium, and large population centres. To assess inter-observer agreement (IOA), 10 of the 13 videos (76.9%) were scored independently by two trainers. When IOA was less than 80%, consensus coding was undertaken to establish a final score; this was required on four occasions. The mean IOA was 81.8% (range 72.5–95%) prior to consensus coding and 94.5% after consensus coding. The mean post-PACE 2 CSC score for the 13 trainees was 75.0% (SD = 14.86), with no significant difference between EE and AHP trainees (t = 0.552, p = 0.59; see Table 3). Although data were missing for more than half of all trainees, fidelity scores ≥ 80% were achieved by 75.0% of EEs and 20.0% of AHPs who submitted videos. Pearson correlations between CSC scores and both PACE 2 practice hours and pod + trainer support hours were not significant (r = − 0.19, p = 0.53 and r = 0.36, p = 0.24, respectively).
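The IOA procedure described above can be illustrated with a short Python sketch. The source does not state how IOA was computed, so point-by-point percent agreement across checklist items is an assumption here; the function names and the example scores are hypothetical, and only the 80% consensus threshold and the 40-item CSC length are taken from the text.

```python
from typing import Sequence


def percent_agreement(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Point-by-point agreement: share of items on which two raters match.

    Assumption: the study does not specify its IOA formula; this is one
    common definition, used here for illustration only.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must score the same non-empty set of items")
    matches = sum(1 for a, b in zip(rater_a, rater_b) if a == b)
    return 100.0 * matches / len(rater_a)


def needs_consensus_coding(ioa: float, threshold: float = 80.0) -> bool:
    """Flag a video for consensus coding when IOA falls below the threshold."""
    return ioa < threshold


# Hypothetical 40-item checklist on which two raters disagree on 11 items.
trainer_1 = ["+"] * 40
trainer_2 = ["+"] * 29 + ["-"] * 11
ioa = percent_agreement(trainer_1, trainer_2)
print(ioa, needs_consensus_coding(ioa))  # 72.5 True
```

On a 40-item checklist each disagreement changes agreement by 2.5 percentage points, so nine or more disagreements place a video below the 80% threshold.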

The post-PACE 2 Coaching Engagement Survey was completed by 20 of the 31 trainees who completed PACE 2. Cronbach’s alpha ranged from 0.59 (trust) to 0.85 (diligence) for the six subscales, with a mean of 0.71 across all six subscales. Mean subscale scores for trust, cooperation, diligence, satisfaction, and relevance were all ≥ 4.1, indicating overall endorsement of the training experience. The mean score for stress was 3.6, suggesting that trainees experienced training as “somewhat” stressful.

Table 4 summarizes the results of the semi-structured interviews that were conducted at PACE 2 completion with all three trainers, eight EDs, 16 trainees, and eight support staff from small, medium, and large agencies. Positive influences on training outcomes were identified across all five CFIR domains, including the design of the training and the materials used; compatibility with agency needs; leadership engagement and support; coaches’ knowledge and beliefs about training; and engagement with the trainers and research team. Trainees commented positively about the well-thought-out sequence of learning opportunities, starting with the online modules (i.e., Knows; Fig. 1) and followed by didactic instruction and hands-on skill-building during the PACE 1 and PACE 2 workshops (i.e., Knows How). This was followed by extended practice, in combination with pod support and guided reflection on the use of coaching strategies with practice children and parents in local communities (i.e., Shows How). Finally, training culminated with community application of coaching skills in the RCT (i.e., Does). Despite general endorsement of this tiered approach to training, many trainees indicated a need for ongoing trainer support during the RCT, in order to further hone their skills and build confidence. Nonetheless, they described the training as “literally a game changer for me of how I practice,” “the most powerful thing I’ve ever seen in my work,” and “hands-down…the best in-service training I’ve ever had.” One coach described the difference between parent coaching and her previous approach to working with children and parents as “building that parent capacity [so parents can] build those strategies into…daily routines that are already happening. So you’re not…doing a pull out therapy session and ‘here’s your hour of therapy.’”

Conversely, a number of factors were coded as influences that were either neutral or mixed (i.e., responses varied across partner agencies), including training adaptability, complexity, and cost; and execution of the technology-related aspects of the project (e.g., uploading videos for feedback and scoring). In particular, comments from the EDs about the time commitment and cost of training came up repeatedly. One ED commented that, “we just weren’t quite anticipating how large of a time commitment it was;” another suggested that the training could have been "a little bit more flexible so that the coaches could fit it all in with their schedule and their caseloads;" and a third noted that the cost of future parent coach training at her agency might not be viable because “[a big] consideration for non-profits like ourselves is what is our need and what can we afford to use, that's always the concern."

Implementation Outcomes (Does; Fig. 1)

Results in this section address the third research question related to trainee outcomes and the factors that appeared to affect variability in training fidelity following parent coach implementation in the RCT. Because this was a community-based training project, all trainees who completed PACE 2 were included in RCT implementation, regardless of their fidelity scores at completion of PACE 2. However, six of the 31 trainees who completed PACE 2 withdrew from the project either prior to initiation of or during RCT implementation. Reasons for withdrawal included maternity leaves, employment changes, and caseload demands that interfered with project continuation. Of the 25 remaining trainees, 23 were assigned to coach at least one parent during implementation; two trainees from the same agency were not assigned because no local families were recruited for the RCT.

Final implementation coaching session videos were submitted by 17 trainees (nine EE and eight AHP) across a total of 25 parents, as seven trainees coached more than one family each. Videos were missing for six trainees because of technology challenges (i.e., difficulty recording and/or uploading the videos) and/or onset of the COVID-19 pandemic. Missing videos were distributed approximately equally across agencies located in small, medium, and large population centres; across EE and AHP trainees; and across families of various sizes, compositions, and language backgrounds. Thus, it is likely that the videos that were submitted were representative of participating trainees and families overall. IOA was calculated for 12 (48%) of the 25 videos and, if IOA was less than 80%, consensus coding was undertaken to establish a final score; this was required on four occasions. The mean IOA was 80.3% prior to consensus coding (range = 60–92.5%) and 90.8% after consensus coding. Mean CSC scores across families were calculated for trainees who coached more than one family (Table 3). The mean score for EE trainees (66.9%) was 17 percentage points lower than for AHP trainees (83.9%). Scores ≥ 80% were achieved by 44.4% of EEs and 62.5% of AHPs; however, there was no significant difference between the two groups (t = − 1.83, p = 0.09), largely because of large standard deviations for both (Table 3). Pearson correlations between final CSC scores and both implementation hours and trainer support hours were not significant (r = 0.14, p = 0.54 and r = − 0.01, p = 0.96, respectively).

Coaching Endpoint Surveys were available for 21 trainees. Cronbach’s alphas for the four subscales were uniformly high, ranging from 0.93 to 0.97 with a mean of 0.95. Mean scores were 80 or above for all skill clusters except assessment and goal setting for EE coaches (78.5). Congruent with CSC score outcomes, independent samples t-tests indicated significant differences between EE and AHP trainees on all subscales in favour of the latter [skills with child, t(19) = 3.41, p = 0.003; assessment and goal setting, t(19) = 3.45, p = 0.001; session management, t(19) = 2.10, p = 0.049; and coaching relationship skills, t(19) = 2.42, p = 0.026].

Results of the analysis of post-implementation focus groups revealed five main themes (see Smith et al., 2021), two of which were primarily relevant to coach training. The first training-related theme was that the training created an opportunity for “working differently” in most partner agencies, including reflective practice, data-driven decision making, and family empowerment. Comments related to this theme included, for example, “I think a lot of people think they coach … like, ‘Well, we do parent coaching.’ No!… I think this program has completely changed my mindset around that” (trainee); and “… I think that…allowing [myself] that time to reflect, that was a sort of a high place in my career… it was required to change how I typically do things” (trainee). The second training-related theme was acknowledgement of the need to move beyond learning how to use parent coaching with children at risk for ASD toward learning how to implement parent coaching more generally to help families support their child’s development. Example comments included, “I've really shifted my practice to more parent coaching so I feel like…I was able to create opportunities to practice, practice, practice, practice on a more low-key level with families who were not part of PACE…” (trainee); and “I'd want more people trained even if it was in a gradual approach… I think if it rolled out in that everyone could get the training and then it became part of our practice…everyone would have this as a tool… if they want to use [it] in early intervention” (ED).

Discussion

This study was designed to examine the training and implementation outcomes of a parent coach training package based on the ESDM that was delivered across multiple sites in a large Canadian geographic area. Trainee satisfaction, training time, coach engagement, self-assessment, and fidelity of implementation were examined after both training phases. Workshop satisfaction was uniformly high across trainees and sites. Results of the post-PACE 2 Coach Engagement Survey suggested that trainees rated their trust, cooperation, diligence, and satisfaction with training as high, and that they endorsed the training as relevant to their current work. They experienced a moderate degree of stress, which is not surprising given the novelty and intensity of training.

Results of the post-PACE 2 interviews (Table 4) indicate that successful implementation of any program involves more than participant satisfaction; rather, because implementation efforts are complex, success is influenced by many concurrent factors. On the one hand, EDs and trainees were committed to the project overall, endorsed the training as useful, and believed that the availability of parent coaching offered through the research project would enhance the options available to their constituents. Agency and community factors that facilitated trainee outcomes included, for example, a perception that parent coaching would help to meet the needs of agency clients/families (Outer Setting Influence); support for the project by agency leaders who created a positive climate for implementation (Inner Setting Influence); and readiness for change on the part of most trainees (Characteristics of Individuals). Training factors that acted as facilitators included the strength, quality, design, and materials used (Intervention Characteristics); a perception that the training program was compatible with agency values and goals (Inner Setting Influence); and the engagement between trainees, trainers, and the research team for mentoring and support (Process of Engaging Participation). These facilitators are congruent with those reported by Wainer et al. (2017) in their NDBI training study and are the “flip side” of barriers reported in others (e.g., Vismara et al., 2013).

The tiered training model in PACE Coaching was aligned with Miller’s Pyramid of Clinical Competence, which is also useful as a framework for examining training outcomes. Data from the introductory module (i.e., multiple choice questions) suggest that the project was successful at the Knows level of competence (Fig. 1). Outcomes following PACE 1 and PACE 2 (i.e., Knows How and Shows How) were mixed; PACE 1 fidelity scores for AHP trainees were significantly higher than for EE trainees, and partial PACE 2 fidelity data also suggested considerable variability both within and across trainee groups. However, participants strongly endorsed the training overall and self-efficacy scores were generally high. Variability was also reflected in assessments following RCT implementation (i.e., Does; Fig. 1); partial fidelity scores suggested that some trainees were not yet fluent in their ability to coach parents, and participants identified both positive and challenging aspects of the training. In general, this multi-dimensional approach to training corroborates the importance of including activities at all four levels of competence and evaluating their outcomes, as knowledge outcomes (i.e., Knows and Knows How) do not necessarily translate to outcomes that require application (i.e., Shows How), especially at the community level (i.e., Does).

As noted previously, trainees in this project were not required to meet a specified fidelity criterion in order to advance to the next phase of formal training nor to be included in RCT implementation. Mean coach fidelity was 83.3% at completion of PACE 1, when coaches were trained to deliver therapy directly to young children at risk for ASD; 75% at completion of PACE 2, when they were trained to coach parents; and 74.9% post-implementation, when they were supported to coach parents in the RCT. The latter two mean scores were based on partial trainee samples because of attrition, technical difficulty uploading the required videos, and/or the onset of COVID-19. Nonetheless, fidelity scores are generally comparable to those reported in some community-based NDBI training studies (e.g., Chang et al., 2016; Shire et al., 2020; Vismara et al., 2009). However, as argued by Speelman and McGann (2013), the “unguarded use of the mean to summarize outcomes…suppresses the perception of both variability and continuity between results” (p. 8). In response to this caution, we examined fidelity score ranges and standard deviations in addition to means. We found that the ranges of scores post-PACE 1 (42–100%), post-PACE 2 (38–93%), and post-implementation (31–98%) were all quite wide. The mean post-PACE 1 score was significantly lower for EE trainees (80.1%) than for AHP trainees (89.8%). The mean implementation score (66.9% vs. 83.9%) was also lower for the EE group, although large standard deviations prevented detection of a significant difference. One possible explanation for these discrepancies is that some trainees—perhaps especially AHPs, who had higher scores in two training phases—logged more hours of direct support to parents and/or received more pod + trainer support, but we found no correlations between either of these training variables and fidelity scores at any training phase.

What, then, can account for the wide fidelity variability in general and for the apparent differences between EE and AHP trainee outcomes? Trainees’ job roles and previous experiences appeared to account for at least some variability, as both PACE 1 and implementation fidelity scores for AHP trainees were generally higher than those for EE trainees. Many of the EE trainees were used to operating within a primarily consultative model, wherein they met monthly or biweekly with a parent and child, engaged in an informal conversation about the child’s progress, modeled teaching strategies with the child, and encouraged the parent to practice the strategies during the interim period before the next meeting. In contrast, most AHP trainees had strong clinical skills in their roles as speech-language or occupational therapists and were more adept at identifying specific goals, developing longer-term intervention plans, and monitoring progress over time. Trainers commented on these discrepancies during the post-PACE 2 interviews, noting that EE and AHP trainees “came from different backgrounds and skill sets,” and that many EEs “weren't used to having to be accountable, having goals that were measurable, or actually going in and having a particular plan.” They acknowledged that, for many EEs, there was a “steep learning curve” but also commented that many “still improved…but perhaps not in the way someone who really had a strong, strong handle on the [clinical] skills might have been able to.”

Another likely source of variability is the parents and children who were involved in practice or implementation. Because of the community-based nature of this project, we set no criteria for practice families, aside from having a child who was no older than 36 months and had an ASD diagnosis. The only criterion for practice parents was the ability to understand and communicate in English. Thus, parents and children in both PACE 1 and PACE 2 varied widely with regard to marital status, household income, education, employment, number of other children in the family (either with or without ASD), and psychosocial factors (e.g., mental health, history of trauma). Similarly, we had few additional exclusion criteria for the RCT, so some trainees coached parents with less family burden while others coached families with very high burden. Coaching highly burdened parents to support their child’s social-communication development likely required trainees to adapt their coaching interactions to accommodate parent and child circumstances, which may have, in turn, contributed to fidelity score variability.

A third factor related to training outcomes was reflected in the number of trainees—14 in all—who withdrew from the project during or following formal training. The majority of trainees who withdrew, either during or following formal training, did so because of large caseloads and were the sole coaches nominated in their agencies, most of which were located in small or medium-sized population centres. As seen in Table 4, the time commitment required of trainees came up as a barrier related to the complexity of training (Intervention Characteristic), the limited resources available to partner agencies (Inner Setting Influence), and the expectations of the funding agent, the BC government (Process of Engaging Participation). One ED described the situation as follows: “…there have been growing pressures on the clinicians involved to split their time between this project and the work required by our ongoing funder and demands of our growing population—time that we are unable to backfill or address.” Given this, even the trainees who completed all training struggled to accommodate training expectations while supporting the (typically) 60–80 families on their caseloads. As one ED said, “There have been times when [I’ve thought] ‘why did we think this [participating in the project] was worth it’? But, you know, we keep powering through; we're committed and then it gets good again, so it's just a lot of extra.” This is congruent with Glasgow and Emmons’s (2007) observation that settings that serve the most vulnerable and highest risk populations (like the agencies in this project) often find themselves so strapped with competing demands that they cannot find “even one minute” (p. 416) for an additional task. 
Although the research team emphasized the need for a 3-year commitment and endeavored to be transparent about the potential time involved during the pre-training meeting, the enthusiasm of EDs and potential trainees for project involvement likely overshadowed their ability to acknowledge the practical realities they would face. Despite the funding provided by the project to cover the cost of all trainee hours, most agencies were simply unable to backfill this time by “bumping up” the hours worked by part-time staff or by hiring additional staff for coverage for the duration of the project. These pressures were compounded in small communities where the available part-time workforce with the necessary qualifications was quite small.

A challenge that inadvertently added to the training time required of trainees was identified at the training preparation meetings: lack of technology infrastructure (Table 4, Inner Setting Influence). As noted previously, two important training components (peer mentoring via pod meetings and individual supervision by trainers) were reliant on technology. In the province of BC, CDCs and most other service providers that support children and families use community-based internet/Wi-Fi for all digital communication. Thus, by contracting with a secure, online digital platform and providing iPads to all coaches, we expected that trainees would be able to upload videos easily and that trainers would be able to interact virtually and securely with trainees in pod meetings and on a one-to-one basis. In some communities, this worked all the time; in others, it worked some of the time; and in yet others, it did not work most of the time. The size of the community was not the defining factor: in one small, rather remote community, trainees were readily able to engage online in various ways, while in one large urban community, coaches were rarely able to do so without considerable difficulty. Some of these problems were related to trainees’ unfamiliarity and discomfort with iPad and technology use in general; many were related to inadequate bandwidth in many communities and/or lack of proximity to an internet signal tower; and some were related to the platform itself and/or to agency privacy policies that restricted trainee access to the platform altogether (this was pre-COVID). Regardless, reliable technology access, which was essential because of the large geographic reach of the training, was compromised in many sites. This resulted in intermittent trainee and trainer frustration, practical barriers, and research-related challenges, all of which likely affected training outcomes.

Contextual factors also contributed to training outcome variability. Trainees in some communities had difficulty arranging for practice sessions with their practice family because of scheduling barriers that were secondary to their large caseloads, or because of barriers related to illness or transportation (especially in the snowy winter months, when roads in small communities were sometimes blocked). Often, such factors constrained the number of practice hours that were possible within the project timeline. In addition, even at the end of training, there continued to be variability in trainees’ readiness for change. Prochaska et al. (1992) described five Stages of Change that are useful in this regard: (a) precontemplation, (b) contemplation, (c) preparation, (d) action, and (e) maintenance. At the time of the post-training interviews, all of the trainees appeared to be in the latter four of these stages. Some seemed to be in the contemplation or preparation stage; they were struggling to make changes in their usual practice, burdened by the time required, and experiencing stress because of personal or work-related demands. For example, one trainee commented, “I didn't realize that we would be writing the goals for the kids and that was a huge source of stress for me” and another said, “I was really, really stressed out with my own situation…so I really wasn't that motivated to learn…” Others appeared to be in the preparation or action stage of change; although they may have struggled initially to make a shift from the expert model of parent training with which they were familiar to a collaborative parent coaching model, they were looking for ways to make the new model fit within their current practice. One trainee described this shift as follows: “We've never done, really, that whole coaching piece before in our services; it was all about modeling and showing the parents and playing directly with the child. You elicit certain things out of the child and then provide recommendations at the end of your session. So yeah, the whole model [is] very different.” Another described her successful struggle in making this shift: “…it was not easy…it was a bit stressful in terms of just like actual time and then mental energy I guess, [but] it was worth every minute…in the childbirth kind of way; you don't like it at the time but it was worth it.” Finally, a small group of trainees seemed to be in Prochaska et al.’s maintenance stage of change, where they felt confident in their coaching skills and could envision how to integrate them into practice. As one coach in this stage noted, “the coaching is applicable to so much of our work here in terms of really getting at what the parent wants, family focused.” As reflected in the Stages of Change model, it is likely that the variability of trainees’ previous experiences, expectations, and receptivity to learning new skills played a role in the variability of their fidelity scores, beyond the number of practice and implementation hours they accumulated.

Limitations

The study limitations are primarily side effects of its scope and reach, coupled with the aforementioned barriers. From the outset, the research team was aware of the need to be conservative about time demands in order to minimize the impact of the project on caseload demands and ongoing agency operations. Among other things, this meant that we needed to be quite selective about the amount of data trainees were expected to collect and transmit. Originally, we planned that trainees would upload videos at 4-week intervals for fidelity scoring by trainers. However, it became apparent shortly after the PACE 1 workshops that this would not be possible in several sites because of time and/or technology barriers. In response, trainees were asked to upload videos at least intermittently and, at sites where this was not possible, trainers viewed practice sessions “live” via the online platform so that they could provide feedback. Similarly, trainees let us know that the amount of paperwork we had originally asked them to submit regularly was more effortful and time-consuming than they could manage, so we modified our expectations of this as well. A result of the need to make these accommodations “on the fly” was that we were unable to formally assess some components of trainee fidelity on an ongoing basis and, as noted previously, we were unable to assess fidelity at all for more than half of trainees post-PACE 2 and for one-quarter of trainees during implementation.

Another limitation was related to the 3-year timeline of the project, which consisted of multiple components in addition to coach training. These included screening of children with ASD and parent recruitment, conducting an RCT, and data analysis. Because of the tight timeline, it was essential that trainees completed the entire training program no more than 8 months after the PACE 1 workshop. While the pressure to complete training did not appear to affect trainee satisfaction or engagement scores, it might have affected their fidelity or their sense of self-efficacy in ways that were not measured and are therefore unknown. Similarly, as the research project was fully funded externally, the financial and budget implications to the public payer and to agencies for scale-up and for sustaining long-term implementation were not investigated, although the costs of training and coaching delivery were measured separately and were reported elsewhere (Tsiplova et al., 2021).

Summary and Conclusions

This practical trial used a multi-method approach to evaluate the outcomes of NDBI parent coach training that initially involved 23 community agencies in a large Canadian province and to conduct a formative evaluation of training outcomes. This is one of the first attempts at a large-scale community adaptation of an NDBI training model (see also Rogers et al., 2020). Results provided support for the four-level model of training and assessment proposed by Miller (1990) and the importance of including both quantitative and qualitative assessments of knowledge, application, and factors that affect implementation. Results also indicated that mean trainee fidelity scores were within the range reported in previous community-based NDBI training studies (i.e., ≥ 80%) but there was considerable variability across trainees. Implementation data provided insights about potential sources of this variability that have implications for future training projects or scale-up programs. We also identified contextual barriers, related to time/caseload demands and technology infrastructure in particular, that should be taken into account in future community-based training studies. Rather than simply accepting an arbitrary benchmark of 80% as an indicator of implementation fidelity (Vismara et al., 2013), we suggest that future training studies conduct micro-analyses similar to ours and provide details about fidelity outcomes, which may have important implications for the scale-up of community-based training programs aimed at improving professional clinical practice. It is clear that training in community settings requires a level of adaptability, ongoing flexibility, and collaboration to maximize the “fit” between the training form and content, partner strengths and constraints, and research rigour. It will be critical to continue to explore strategies for disseminating evidence-based training in community settings, to meet the needs of children and families across the range of age and disability.

References

Chang, Y.-C., Shire, S., Shih, W., Gelfand, C., & Kasari, C. (2016). Preschool deployment of evidence-based social communication intervention: JASPER in the classroom. Journal of Autism and Developmental Disorders, 46, 2211–2223. https://doi.org/10.1007/s10803-016-2752-2

Damschroder, L., Aron, D., Keith, R., Kirsh, S., Alexander, J., & Lowery, J. (2009). Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implementation Science, 4, 50.

Durlak, J., & DuPre, E. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41, 327–350. https://doi.org/10.1007/s10464-008-9165-0

Estabrooks, C., Squires, J., Cummings, G., Birdsell, J., & Norton, P. (2009). Development and assessment of the Alberta Context Tool. BMC Health Services Research, 9, 234–245.

Ferguson, R. F. (2012). Can student surveys measure teaching quality? Phi Delta Kappan, 94(3), 24–28.

Fixsen, D. L., Naoom, S. F., Blase, K. A., Friedman, R. M., & Wallace, F. (2005). Implementation research: A synthesis of the literature. University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (FMHI Publication #231).

Fuller, E., & Kaiser, A. (2020). The effects of early intervention on social communication outcomes for children with autism spectrum disorder: A meta-analysis. Journal of Autism and Developmental Disorders, 50, 1683–1700. https://doi.org/10.1007/s10803-019-03927-z

Glasgow, R., & Emmons, K. (2007). How can we increase translation of research into practice? Types of evidence needed. Annual Review of Public Health, 28, 413–433. https://doi.org/10.1146/annurev.publhealth.28.021406.144145

Hanft, B., Rush, D. D., & Shelden, M. L. (2004). Coaching families and colleagues in early childhood. Paul H. Brookes Publishing Co.

Miller, G. E. (1990). The assessment of clinical skills/competence/performance. Academic Medicine, 65(9), S63–S67.

Mirenda, P., Colozzo, P., Smith, V., Kroc, E., Kalynchuk, K., Rogers, S. R., & Ungar, W. J. (2021). A randomized, community-based feasibility trial of modified ESDM for toddlers with suspected autism. Manuscript submitted for publication.

Nahmias, A., Pellecchia, M., Stahmer, A., & Mandell, D. (2019). Effectiveness of community-based early intervention for children with autism spectrum disorder: A meta-analysis. Journal of Child Psychology and Psychiatry, 60, 1200–1209. https://doi.org/10.1111/jcpp.13073

Pickard, K., Mellman, H., Frost, K., Reaven, J., & Ingersoll, B. (2021). Balancing fidelity and flexibility: Usual care for young children with an increased likelihood of having autism spectrum disorder within an early intervention system. Journal of Autism and Developmental Disorders. https://doi.org/10.1007/s10803-021-04882-4

Prochaska, J., DiClemente, C., & Norcross, J. (1992). In search of how people change: Applications to addictive behaviors. American Psychologist, 47, 1102–1114.

Proctor, E., Silmere, H., Raghavan, R., Hovmand, P., Aarons, G., Bunger, A., Griffey, R., & Hensley, M. (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health, 38, 65–76. https://doi.org/10.1007/s10488-010-0319-7

QSR International (2018). NVivo, version 13. Author.

Rogers, S. J., Dawson, G., & Vismara, L. (2012). An early start for your child with autism: Using everyday activities to help kids connect, communicate, and learn. Guilford Press.

Rogers, S. J., Dawson, G., Zierhut, C., Winter, J., McCormick, C., & Holly, E. (2013). Community ESDM (C-ESDM) Curriculum Checklist for Young Children. University of California-Davis MIND Institute.

Rogers, S. J., Stahmer, A., Talbott, M., Young, G., Fuller, E., Pellecchia, M., Barber, A., & Griffith, E. (2020). Feasibility of delivering parent-implemented NDBI interventions in low resource regions: A pilot randomized controlled study. Journal of Neurodevelopmental Disorders. https://doi.org/10.21203/rs.3.rs-105121/v1

Rogers, S. J., Vismara, L., & Dawson, G. (2021). Coaching parents of young children with autism. Guilford.

Rooks-Ellis, D., Howorth, S., Boulette, S., Kunze, M., & Sulinski, E. (2020). Effects of a parent training using telehealth: Equity and access to early intervention for rural families. Journal of Childhood, Education, & Society, 1, 141–166. https://doi.org/10.37291/2717638X.20201242

Sandbank, M., Bottema-Beutel, K., Crowley, S., Cassidy, M., Dunham, K., Feldman, J. I., Crank, J., Albarran, S. A., Raj, S., Mahbub, P., & Woynaroski, T. G. (2020). Project AIM: Autism intervention meta-analysis for studies of young children. Psychological Bulletin, 146, 1–29. https://doi.org/10.1037/bul0000215