Abstract

Given a closed, convex cone \(K\subseteq \mathbb {R}^n\), a multivariate polynomial \(f\in \mathbb {C}[\textbf{z}]\) is called K-stable if the imaginary parts of its roots are not contained in the relative interior of K. If K is the nonnegative orthant, K-stability specializes to the usual notion of stability of polynomials. We develop generalizations of preservation operations and of combinatorial criteria from usual stability toward conic stability. A particular focus is on the cone of positive semidefinite matrices (psd-stability). In particular, we prove the preservation of psd-stability under a natural generalization of the inversion operator. Moreover, we give conditions on the support of psd-stable polynomials and characterize the support of special families of psd-stable polynomials.



1 Introduction

Multivariate stable polynomials can be seen as a generalization of real-rooted polynomials, and they enjoy many connections to other branches in mathematics, including differential equations [3], optimization [23], probability theory [4], matroid theory [6, 9], applied algebraic geometry [24], theoretical computer science [18, 19] and statistical physics [2]. See also the surveys of Pemantle [20] and Wagner [25].

Classical related notions include hyperbolic polynomials [11] or stability with respect to an arbitrary domain (see, e.g., [12] and the references therein). Recently, further variants and generalizations have been developed, including conic stability introduced by Jörgens and the third author [14], Lorentzian polynomials introduced by Brändén and Huh [7] and positively hyperbolic varieties introduced by Rincón, Vinzant and Yu [22].

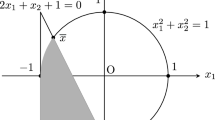

In this work we focus on the notion of conic stability. Given a closed, convex cone \(K\subseteq {\mathbb R}^n\), a polynomial \(f\in {\mathbb C}[{\textbf{z}}]={\mathbb C}[z_1,\ldots ,z_n]\) is called K-stable if \(\textsf{Im}({\textbf{z}})\not \in {{\,\textrm{relint}\,}}K\) for every root \({\textbf{z}}\) of f, where \(\textsf{Im}({\textbf{z}})\) denotes the vector of the imaginary parts of the components of \({\textbf{z}}\) and \({{\,\textrm{relint}\,}}K\) denotes the relative interior of K. Note that \(({\mathbb R}_{\ge 0})^n\)-stability coincides with the usual stability. For a homogeneous polynomial f, K-stability is equivalent to the containment of \({{\,\textrm{relint}\,}}K\) in a hyperbolicity cone of f. The notion of K-Lorentzian polynomials recently introduced by Brändén and Leake [8] is, up to scaling, a generalization of homogeneous K-stable polynomials. Stability with respect to the cone of positive semidefinite matrices in the space of symmetric matrices is called psd-stability. In the homogeneous case such polynomials are also known as Dirichlet–Gårding polynomials [13]. Prominent subclasses of psd-stable polynomials arise from determinantal representations [10]. Blekherman, Kummer, Sanyal et al. [1] have constructed a family of psd-stable lpm-polynomials (linear principal minor polynomials) from multiaffine stable polynomials.

The purpose of the current paper is to initiate the study of generalizations of two prominent research directions in stable polynomials toward conically stable polynomials: preservation operators and combinatorial criteria. In particular, a focus is to understand the transition from the classical stability situation to the conic stability with respect to non-polyhedral cones such as the positive semidefinite cone.

With regard to preservation, stable polynomials have been recognized to remain stable under a number of operations, see the survey [25]. Prominent examples include the inversion operation (see [2]), the preservation under taking partial derivatives (as a consequence of the univariate Gauß–Lucas Theorem), the Lieb-Sokal Lemma ( [17, Lemma 2.3], see also [2, Lemma 2.1]) and the celebrated characterization of Borcea and Brändén of linear operators preserving stability [2, Theorem 1.3]. Many of the mentioned applications of stability rely on the preservation properties.

With regard to combinatorial criteria, several important combinatorial results have been achieved, which provide effective criteria for the recognition of stable polynomials. A groundbreaking result of Choe, Oxley, Sokal and Wagner states that the support of a multi-affine, homogeneous and stable polynomial \(f \in {\mathbb R}[\textbf{z}] = {\mathbb R}[z_1, \ldots , z_n]\) is the set of bases of a matroid [9, Theorem 7.1]. Brändén [6, Theorem 3.2] proved a generalization of this result for the support of any stable polynomial \(f \in {\mathbb R}[\textbf{z}]\), showing that it forms a jump system, i.e., it satisfies the so-called Two-Step Axiom. See Sect. 2 for formal definitions. Recently, Rincón, Vinzant and Yu gave an alternative proof of the matroid result, based on a tropical proof of the auxiliary statement that positive hyperbolicity of a variety is preserved under passing over to the initial form [22, Corollary 4.9].

The proofs of these combinatorial properties strongly rely on the preservation properties of stable polynomials. These preservation properties establish the connection between the combinatorial and the algebraic viewpoint. For example, taking the partial derivative of a polynomial f shifts the support vectors of f by a unit vector in a negative coordinate direction (and some support vectors may disappear). Since stability of a polynomial is preserved under taking partial derivatives, one can use this preserver to argue about the combinatorics of the support. In the univariate case, these considerations are classical for deriving log-concavity of sequences with real-rooted generating functions.

Our contributions. 1. We generalize several preserving operators for usual stability to conic stability. In particular, we derive a conic version of the Lieb-Sokal Lemma (see Theorem 3.4 and Corollary 3.6).

2. For the case of psd-stability, we can prove the preservation under a natural generalization of the inversion operator. See Theorem 4.3. This generalized inversion operator is specific to the case of psd-stability and highlights the prominent role of this class. Furthermore, we show that psd-stable polynomials are preserved under taking initial forms with respect to positive definite matrices. See Theorem 4.10.

3. Combinatorics of psd-stable polynomials. We prove a necessary criterion on the support of any psd-stable polynomial in Theorem 5.1 and characterize the support of special families of psd-stable polynomials. In particular, we characterize psd-stability of binomials (Theorem 5.5), give a necessary criterion for psd-stability of a larger class containing binomials (Theorem 5.4), and introduce a class of polynomials of determinants, which satisfies a generalized jump system criterion with regard to psd-stability. Theorem 5.11 characterizes the restrictive structure of psd-stable polynomials of determinants. These results are complemented by an additional conjecture on the support of general psd-stable polynomials. We provide evidence for this conjecture by verifying it for the classes of polynomials treated previously.

The paper is structured as follows. Section 2 collects relevant background on preservers of the usual stability notion as well as an introduction to the notion of K-stability.

In Sect. 3, we study preservers of conic stability for general and polyhedral cones, including the generalized version of the Lieb-Sokal Lemma. Section 4 treats the case of psd-stability, in particular, the preservation of psd-stability under an inversion operation and under passing over to certain initial forms. Section 5 deals with combinatorial conditions of psd-stable polynomials. Therein, Subsections 5.1 and 5.2 discuss the support of special families of psd-stable polynomials. Subsection 5.3 considers the support of general psd-stable polynomials and also raises a conjecture.

2 Preliminaries

Let \({\mathbb R}_{\ge 0}\) and \({\mathbb R}_{>0}\) denote the sets of non-negative and of positive real numbers. Further, let \(\mathcal {H} {:=} \{z \in {\mathbb C}: \, \textsf{Im}(z) > 0\}\) be the open upper half-plane of \({\mathbb C}\). Throughout the text, bold letters will denote n-dimensional vectors unless noted otherwise.

In this section, we collect known properties of stable polynomials and then introduce the generalization of stability, namely conic stability, with which the paper is concerned.

2.1 Stable polynomials

A polynomial \(f\in {\mathbb C}[{\textbf{z}}]\) is called stable if for every root \({\textbf{z}}\) of f, there exists some \(j \in [n]\) with \(\textsf{Im}(z_j)\le 0\). Hence, a univariate real polynomial f is stable if and only if it is real-rooted, because the non-real roots of univariate real polynomials occur in conjugate pairs. The following collection from [25, Lemma 2.4] recalls some elementary operations that preserve stability, where (f) follows from the Gauß-Lucas Theorem. Denote by \(\deg _i\) the degree in the variable \(z_i\).

Proposition 2.1

Let \(f \in {\mathbb C}[\textbf{z}]\) be stable.

(a) Permutation: \(f(z_{\sigma (1)},\ldots ,z_{\sigma (n)})\) is stable for every permutation \(\sigma :[n]\rightarrow [n]\).

(b) Scaling: \(c\cdot f(a_1z_1,\ldots ,a_nz_n)\) is stable or zero for every \(c\in {\mathbb C}\) and \({\textbf{a}}\in {\mathbb R}^n_{>0}\).

(c) Diagonalization: \(f({\textbf{z}})\big |_{z_j=z_i}\in {\mathbb C}[z_1,\ldots ,z_{j-1},z_{j+1}, \ldots ,z_n]\) is stable or zero for every \(i \ne j\in [n]\).

(d) Specialization: \(f(b,z_2,\ldots ,z_n)\in {\mathbb C}[z_2,\ldots ,z_n]\) is stable or zero for every \(b\in {\mathbb C}\) with \(\textsf{Im}(b)\ge 0\).

(e) Inversion: \(z_1^{\deg _1(f)}\cdot f(-z_1^{-1},z_2,\ldots ,z_n)\) is stable.

(f) Differentiation: \(\partial _j f({\textbf{z}})\) is stable or zero for every \(j\in [n]\).
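For a univariate polynomial, the inversion operation (e) reverses the coefficient list with alternating signs and maps each nonzero root \(r\) to \(-1/r\), so real roots stay real. The following minimal Python sketch illustrates this on a real-rooted, hence stable, quadratic. The helper names `invert` and `evaluate` are our own, and the sketch assumes that the coefficient list ends with a nonzero leading coefficient.

```python
def invert(coeffs):
    """Coefficients of z^d * f(-1/z), where f = sum_k coeffs[k] * z^k and d = len(coeffs) - 1.

    Assumes coeffs[-1] != 0, so that d is the degree of f.
    """
    d = len(coeffs) - 1
    inverted = [0] * (d + 1)
    for k, a in enumerate(coeffs):
        # The term a_k z^k becomes a_k (-1)^k z^(d-k).
        inverted[d - k] = a * (-1) ** k
    return inverted


def evaluate(coeffs, z):
    """Horner evaluation of sum_k coeffs[k] * z^k."""
    value = 0
    for a in reversed(coeffs):
        value = value * z + a
    return value


# f(z) = (z - 1)(z + 2) = z^2 + z - 2 is real-rooted, hence stable.
f = [-2, 1, 1]
g = invert(f)
print(g)  # [1, -1, -2], i.e. 1 - z - 2 z^2
# Each root r of f yields the root -1/r of the inverted polynomial.
for r in (1, -2):
    print(r, -1 / r, evaluate(g, -1 / r))
```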

A prominent linear stability preserver is the Lieb-Sokal Lemma ( [17, Lemma 2.3], see also [2, Lemma 2.1] or [25, Lemma 3.2]). It is an essential ingredient in Borcea and Brändén’s full characterization of linear operations preserving stability [2, Theorem 1.1], see also [3, Section 3.2].

Proposition 2.2

(Lieb-Sokal Lemma) Let \(g({\textbf{z}})+yf({\textbf{z}})\in {\mathbb C}[{\textbf{z}},y]\) be stable and assume \(\deg _i(f)\le 1\). Then \(g({\textbf{z}})-\partial _if({\textbf{z}})\in {\mathbb C}[{\textbf{z}}]\) is stable or identically zero.

The following statement due to Hurwitz allows us to obtain (conic) stability statements as limits of statements on compact subsets under a uniform convergence condition.

Proposition 2.3

[15, Par. 5.3.4] Let \(\{f_k\}\) be a sequence of polynomials not vanishing in a connected open set \(U \subseteq {\mathbb C}^n\), and assume it converges to a function f uniformly on compact subsets of U. Then f is either non-vanishing on U or it is identically zero.

As a consequence of [9, Theorem 6.1], the following necessary condition for homogeneous stable polynomials based on their coefficients applies.

Theorem 2.4

All nonzero coefficients of a homogeneous stable polynomial \(f\in {\mathbb C}[{\textbf{z}}]\) have the same phase.

2.2 Stability and initial forms

The initial form \({{\,\textrm{in}\,}}_{\textbf{w}}(f)\) of a polynomial \(f({\textbf{z}})=\sum _{\alpha \in S} c_\alpha {\textbf{z}}^\alpha \) with respect to a functional \({\textbf{w}}\) in the dual space \(({\mathbb R}^n)^*\) is defined as

\[{{\,\textrm{in}\,}}_{\textbf{w}}(f)({\textbf{z}}) = \sum _{\alpha \in S_{\textbf{w}}} c_\alpha {\textbf{z}}^\alpha ,\]

where \(S_{\textbf{w}}{:=}\{\alpha \in S \,: \, \langle {\textbf{w}}, \alpha \rangle = \max _{\beta \in S} \langle {\textbf{w}}, \beta \rangle \}\) and \(\langle \cdot , \cdot \rangle \) is the natural dual pairing. That is, we restrict the polynomial f to those monomials whose exponents lie on the face of the Newton polytope of f where the functional \({\textbf{w}}\) is maximized.
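As a small illustration of this definition, the sketch below stores a polynomial as a Python dict mapping exponent tuples to coefficients (a representation chosen only for illustration) and keeps exactly the terms on the face of the Newton polytope where \({\textbf{w}}\) is maximized. It assumes integer (or rational) weights so that the equality test is exact; the example polynomial is arbitrary and not assumed to be stable.

```python
def initial_form(f, w):
    """Keep the terms c_alpha z^alpha of f whose exponent maximizes <w, alpha>."""
    def pairing(alpha):
        return sum(wi * ai for wi, ai in zip(w, alpha))

    support = [alpha for alpha, c in f.items() if c != 0]
    top = max(pairing(alpha) for alpha in support)
    return {alpha: f[alpha] for alpha in support if pairing(alpha) == top}


# f = z1^2 + z1*z2 + z2 + 1 in C[z1, z2]
f = {(2, 0): 1, (1, 1): 1, (0, 1): 1, (0, 0): 1}
print(initial_form(f, (1, 0)))    # {(2, 0): 1}
print(initial_form(f, (1, 1)))    # {(2, 0): 1, (1, 1): 1}
print(initial_form(f, (-1, -1)))  # {(0, 0): 1}
```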

In the context of their work on positively hyperbolic varieties, Rincón, Vinzant and Yu [22, Proposition 4.1] showed that for polynomials with real coefficients, stability is preserved under taking initial forms. Their proof is based on tropical geometry. For the convenience of the reader, we give here a simplified proof and at the same time slightly generalize the statement to also cover polynomials with complex coefficients. The observation that the statement is also valid for complex coefficients has independently been derived by Kummer and Sert [16, Proposition 2.6].

Theorem 2.5

If \(f\in {\mathbb C}[{\textbf{z}}]\) is stable and \({\textbf{w}}\in ({\mathbb R}^n)^* {\setminus } \{ \textbf{0} \}\), then \({{\,\textrm{in}\,}}_{\textbf{w}}(f)({\textbf{z}})\) is also stable.

Proof

Let \(\varphi {:=}\max \left\{ \langle \alpha ,{\textbf{w}}\rangle : \alpha \in \text {supp}(f)\right\} \), and for \(\lambda > 0\), define the polynomial \(f_\lambda ({\textbf{z}}){:=}\frac{1}{\lambda ^\varphi }\cdot f(\lambda ^{w_1}z_1, \ldots , \lambda ^{w_n}z_n)\), which is stable by Proposition 2.1 (b).

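To apply Hurwitz’ Theorem (Proposition 2.3) and conclude that the initial form is stable, we need to ensure that \(f_\lambda \) converges to \({{\,\textrm{in}\,}}_{\textbf{w}}(f)\) as \(\lambda \rightarrow \infty \), uniformly on every compact subset \(C\subseteq {\mathbb C}^n\). If \(\textrm{supp}(f)=S_{\textbf{w}}\), then \(f_\lambda = {{\,\textrm{in}\,}}_{\textbf{w}}(f)\) and there is nothing to prove. Otherwise, let \(\mu =\max \{\langle \alpha ,{\textbf{w}}\rangle :\langle \alpha ,{\textbf{w}}\rangle < \varphi , \alpha \in \textrm{supp}(f)\}\) and \(\delta =\varphi -\mu >0\). Then, for \(\lambda \ge 1\),

\[\sup _{{\textbf{z}}\in C}\left| f_\lambda ({\textbf{z}})-{{\,\textrm{in}\,}}_{\textbf{w}}(f)({\textbf{z}})\right| = \sup _{{\textbf{z}}\in C}\Big | \sum _{\alpha \in \textrm{supp}(f){\setminus } S_{\textbf{w}}} c_\alpha \lambda ^{\langle \alpha ,{\textbf{w}}\rangle -\varphi }{\textbf{z}}^\alpha \Big | \le \lambda ^{-\delta } \sup _{{\textbf{z}}\in C}\sum _{\alpha \in \textrm{supp}(f){\setminus } S_{\textbf{w}}} |c_\alpha {\textbf{z}}^\alpha | \xrightarrow {\lambda \rightarrow \infty } 0,\]

since the supremum on the right-hand side is finite, C being compact. As \({{\,\textrm{in}\,}}_{\textbf{w}}(f)\not \equiv 0\), Hurwitz’ Theorem applied on \(\mathcal {H}^n\) shows that \({{\,\textrm{in}\,}}_{\textbf{w}}(f)\) is stable. \(\square \)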
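The uniform estimate in this proof can be checked numerically. The sketch below, again storing polynomials as dicts of exponent tuples (our own representation), computes the coefficients of \(f_\lambda \), samples points in the compact set \(C=\{|z_1|,|z_2|\le 1\}\), and compares the largest observed deviation from \({{\,\textrm{in}\,}}_{\textbf{w}}(f)\) with \(\lambda ^{-\delta }\sum _{\alpha \notin S_{\textbf{w}}}|c_\alpha |\). On this C and for \(\lambda \ge 1\), each omitted term carries a factor \(\lambda ^{\langle \alpha ,{\textbf{w}}\rangle -\varphi }\le \lambda ^{-\delta }\) and satisfies \(|{\textbf{z}}^\alpha |\le 1\), so this is the bound the argument yields. The polynomial, weight and sample size are arbitrary choices; stability of f plays no role in the estimate itself.

```python
import random


def pairing(w, alpha):
    return sum(wi * ai for wi, ai in zip(w, alpha))


def evaluate(f, z):
    """Evaluate f = sum_alpha c_alpha z^alpha at the point z."""
    total = 0
    for alpha, c in f.items():
        term = c
        for zi, ai in zip(z, alpha):
            term *= zi ** ai
        total += term
    return total


f = {(2, 0): 1, (1, 1): 1, (0, 1): 1, (0, 0): 1}   # z1^2 + z1 z2 + z2 + 1
w = (1, 1)

phi = max(pairing(w, a) for a in f)
in_w = {a: c for a, c in f.items() if pairing(w, a) == phi}
mu = max(pairing(w, a) for a in f if pairing(w, a) < phi)
delta = phi - mu
tail = sum(abs(c) for a, c in f.items() if a not in in_w)

random.seed(0)
# Sample points with |z_i| <= 0.7 * sqrt(2) < 1, i.e. inside the unit polydisc C.
points = [tuple(complex(random.uniform(-0.7, 0.7), random.uniform(-0.7, 0.7)) for _ in range(2))
          for _ in range(500)]

results = []
for lam in (10.0, 100.0, 1000.0):
    # Coefficients of f_lambda(z) = lam^(-phi) * f(lam^{w_1} z_1, lam^{w_2} z_2).
    f_lam = {a: c * lam ** (pairing(w, a) - phi) for a, c in f.items()}
    gap = max(abs(evaluate(f_lam, z) - evaluate(in_w, z)) for z in points)
    bound = tail * lam ** (-delta)
    results.append((lam, gap, bound))
    print(f"lambda={lam:g}  max deviation={gap:.3e}  bound={bound:.3e}")
```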

The discussion of the preservation of conically stable polynomials when passing over to initial forms is continued at the end of Sect. 4.

2.3 Combinatorics of stable polynomials

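For \(\alpha ,\beta \in {\mathbb Z}^n\), the steps between \(\alpha \) and \(\beta \) are defined as the set

\[\text {St}(\alpha ,\beta ) {:=} \{\sigma \in {\mathbb Z}^n : |\sigma | = 1, \ |\alpha +\sigma -\beta | = |\alpha -\beta | - 1\},\]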

where \(|\sigma | {:=} \sum _{i=1}^n |\sigma _i|\). A collection of points \(\mathcal {F}\subseteq {\mathbb Z}^n\) is called a jump system if for every \(\alpha ,\beta \in \mathcal {F}\) and \(\sigma \in \text {St}(\alpha ,\beta )\) with \(\alpha +\sigma \notin \mathcal {F}\) there is some \(\tau \in \text {St}(\alpha +\sigma ,\beta )\) such that \(\alpha +\sigma +\tau \in \mathcal {F}\). In words, if after one step from \(\alpha \) toward \(\beta \) we have left the set \(\mathcal {F}\), then there must be a second step that takes us back into \(\mathcal {F}\). This property is also known as the Two-Step Axiom. The support of a complex polynomial \(f({\textbf{z}})=\sum _{\alpha }c_\alpha {\textbf{z}}^\alpha \) is defined as \(\text {supp}(f)=\{\alpha \in {\mathbb Z}^n_{\ge 0}:c_\alpha \ne 0\}\), that is, it is the set of all exponent vectors \(\alpha \) such that the corresponding coefficient \(c_\alpha \) is non-zero in f. The following theorem reveals the connection between stable polynomials and jump systems.

Theorem 2.6

(Brändén [6]) If \(f\in {\mathbb C}[{\textbf{z}}]\) is stable, then its support is a jump system.
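By Theorem 2.6, a finite support that violates the Two-Step Axiom certifies that the polynomial is not stable. The brute-force Python sketch below (function names are our own) enumerates \(\text {St}(\alpha ,\beta )\) as the unit vectors \(\pm {\textbf{e}}_i\) that move \(\alpha \) one unit closer to \(\beta \) in the \(\ell _1\)-norm and tests the axiom on all pairs. For instance, \(\text {supp}(z^3+1)=\{0,3\}\) fails, consistent with \(z^3+1\) not being real-rooted. The converse does not hold: \(z^2+1\) has the jump system \(\{0,2\}\) as support but is not stable.

```python
from itertools import product


def l1_norm(v):
    return sum(abs(x) for x in v)


def add(u, v):
    return tuple(a + b for a, b in zip(u, v))


def sub(u, v):
    return tuple(a - b for a, b in zip(u, v))


def steps(alpha, beta):
    """St(alpha, beta): unit vectors +-e_i that decrease the l1-distance to beta by one."""
    n = len(alpha)
    dist = l1_norm(sub(alpha, beta))
    result = []
    for i in range(n):
        for sign in (1, -1):
            sigma = tuple(sign if j == i else 0 for j in range(n))
            if l1_norm(sub(add(alpha, sigma), beta)) == dist - 1:
                result.append(sigma)
    return result


def is_jump_system(points):
    """Brute-force check of the Two-Step Axiom for a finite set of integer points."""
    F = set(points)
    for alpha, beta in product(F, repeat=2):
        for sigma in steps(alpha, beta):
            first = add(alpha, sigma)
            if first in F:
                continue
            # After leaving F, some second step towards beta must lead back into F.
            if not any(add(first, tau) in F for tau in steps(first, beta)):
                return False
    return True


print(is_jump_system({(0,), (2,)}))      # supp(z^2 - 1): True
print(is_jump_system({(0, 0), (1, 1)}))  # supp(z1 z2 - 1): True
print(is_jump_system({(0,), (3,)}))      # supp(z^3 + 1): False
```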

In [22, Proposition 4.1], the support of stable binomials is explicitly classified as follows. Here, \({\textbf{e}}_i\) denotes the i-th unit vector in \({\mathbb R}^n\).

Theorem 2.7

Let \(f=c_\alpha {\textbf{z}}^\alpha +c_\beta {\textbf{z}}^\beta \) with \(c_\alpha ,c_\beta \ne 0\) and \(\alpha ,\beta \in {\mathbb Z}_{\ge 0}^n\) be stable, and assume that \({\textbf{z}}^{\alpha }\) and \({\textbf{z}}^{\beta }\) have no common factor. Then one of the following holds:

a) \(\{\alpha ,\beta \}=\{0,{\textbf{e}}_i\}\) for some \(i\in [n]\),

b) \(\{\alpha ,\beta \}=\{{\textbf{e}}_i,{\textbf{e}}_j\}\) for some \(i,j\in [n]\) and \(\frac{c_\alpha }{c_\beta }\in {\mathbb R}_{\ge 0}\), or

c) \(\{\alpha ,\beta \}=\{0,{\textbf{e}}_i+{\textbf{e}}_j\}\) for some \(i,j\in [n]\) and \(\frac{c_\alpha }{c_\beta }\in {\mathbb R}_{<0}\).
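The case distinction of Theorem 2.7 is easy to mechanize. The sketch below is a hypothetical helper, not taken from the source. It first divides out the largest common monomial factor, which does not affect stability: monomials are stable, a product of stable polynomials is stable, and every root of a factor is a root of the product. It then tests whether the reduced support and the ratio \(c_\alpha /c_\beta \) fit one of the cases a), b), c). Since the theorem gives necessary conditions only, a `True` answer does not certify stability. The tolerance `tol` for deciding that a complex ratio is real is our own choice.

```python
def fits_binomial_classification(alpha, beta, ratio, tol=1e-12):
    """Check whether c_alpha z^alpha + c_beta z^beta with c_alpha / c_beta = ratio
    fits one of the cases a), b), c) of Theorem 2.7 (necessary conditions only)."""
    ratio = complex(ratio)
    # Divide out the largest common monomial factor z^gamma.
    gamma = tuple(min(a, b) for a, b in zip(alpha, beta))
    a = tuple(x - g for x, g in zip(alpha, gamma))
    b = tuple(x - g for x, g in zip(beta, gamma))
    zero = tuple(0 for _ in a)

    def is_real_with_sign(x, sign):
        return abs(x.imag) <= tol and sign * x.real > 0

    if zero in (a, b):
        other = b if a == zero else a
        if sum(other) == 1:              # case a): {0, e_i}
            return True
        if sum(other) == 2:              # case c): {0, e_i + e_j}, negative ratio
            return is_real_with_sign(ratio, -1)
        return False
    if sum(a) == 1 and sum(b) == 1:      # case b): {e_i, e_j}, positive ratio
        return is_real_with_sign(ratio, +1)
    return False


print(fits_binomial_classification((1, 0), (0, 1), 1))    # z1 + z2: True
print(fits_binomial_classification((1, 0), (0, 1), -1))   # z1 - z2: False
print(fits_binomial_classification((1, 1), (0, 0), -1))   # z1 z2 - 1: True
print(fits_binomial_classification((1, 1), (0, 0), 1))    # z1 z2 + 1: False
print(fits_binomial_classification((3,), (0,), 1))        # z^3 + 1: False
```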

2.4 Conic stability

The following notion of conic stability as introduced in [14] generalizes stability to more general cones. Let K be a closed, convex cone in \({\mathbb R}^n\) and denote by \({{\,\textrm{relint}\,}}K\) its relative interior.

Definition 2.8

A polynomial \(f \in {\mathbb C}[\textbf{z}]\) is called K-stable, if \(f(\textbf{z})\ne 0\) whenever \(\textsf{Im}(\textbf{z})\in {{\,\textrm{relint}\,}}K\).

Observe that by choosing the cone \(K = {\mathbb R}^n_{\ge 0}\), we recover the usual notion of stability. For any closed, convex cone K, conic stability can be characterized through stability of univariate polynomials (see [14, Lemma 3.4]; the proof given there also works verbatim without the assumption of full-dimensionality made there).

Proposition 2.9

([14], Lemma 3.4) A polynomial \(f \in {\mathbb C}[\textbf{z}] {\setminus } \{0\}\) is K-stable if and only if for all \(\textbf{x}, \textbf{y} \in {\mathbb R}^n\) with \(\textbf{y} \in {{\,\textrm{relint}\,}}K\), the univariate polynomial \(t \mapsto f(\textbf{x} + t\textbf{y})\) is stable or identically zero.

Remark 2.10

A homogeneous polynomial \(f\in {\mathbb C}[{\textbf{z}}]\) is called hyperbolic w.r.t. \({\textbf{e}}\in {\mathbb R}^n\) if \(f({\textbf{e}})\ne 0\) and, for every \({\textbf{x}}\in {\mathbb R}^n\), the univariate polynomial \(t\mapsto f({\textbf{x}}+t{\textbf{e}})\) is real-rooted. For a full-dimensional cone \(K \subseteq {\mathbb R}^n\), every homogeneous K-stable polynomial is hyperbolic w.r.t. every \({\textbf{e}}\in {{\,\textrm{relint}\,}}K = {{\,\textrm{int}\,}}K\) by [14, Theorem 3.5], and hence, up to a multiplicative constant, every homogeneous K-stable polynomial has real coefficients [11].

2.5 Positive semidefinite stability

We introduce the notion of psd-stability, an important special case of conic stability where the cone is chosen to be the positive semidefinite cone.

Denote by \(\mathcal {S}^{\mathbb C}_n\) the vector space of complex symmetric matrices (rather than Hermitian matrices) and by \(\mathcal {S}_n\) the space of real ones. The cones of real positive semidefinite and positive definite matrices are denoted by \(\mathcal {S}^{+}_n\) and \(\mathcal {S}^{++}_n\). Let \({\mathbb C}[Z]\) denote the ring of polynomials in the symmetric matrix variables \(Z = (z_{ij})\). More precisely, \({\mathbb C}[Z]\) is the vector space generated by monomials of the form \(Z^{\alpha } = \prod _{1 \le i,j \le n} z_{ij}^{\alpha _{ij}}\) with some entrywise nonnegative symmetric matrix \(\alpha \) whose diagonal entries are integers and whose off-diagonal entries are half-integers. Polynomials in \({\mathbb C}[Z]\) can also be interpreted as polynomials in the polynomial ring \({\mathbb C}[\{z_{ij}| 1\le i\le j \le n\}]\) by identifying \(z_{ij}\) and \(z_{ji}\) for \(i \ne j\). For example, consider the monomial

in the polynomial ring \({\mathbb C}[Z]\) over the vector space \(\mathcal {S}_2^{{\mathbb C}}\).

Definition 2.11

Psd-stability is defined as \(\mathcal {S}_n^{+}\)-stability for polynomials over the vector space \(\mathcal {S}^{\mathbb C}_n\) of complex symmetric matrices. That is, a polynomial \(f \in {\mathbb C}[Z]\) is psd-stable if it has no root \(M \in \mathcal {S}^{\mathbb C}_n\) such that \(\textsf{Im}(M) \in \mathcal {S}^{++}_n\).

The notion of psd-stability generalizes usual stability in the sense that for every stable polynomial \(f=f(z_1, \ldots , z_n)\), the polynomial \(\bar{f}: Z \mapsto f(z_{11}, \ldots , z_{nn})\) is a psd-stable polynomial.

The support \(\textrm{supp}(f)\) of a polynomial \(f \in {\mathbb C}[Z]\) is the set of all symmetric exponent matrices of the monomials occurring with nonzero coefficients in the polynomial. The variables \(z_{ii}\) are called diagonal variables, while the variables \(z_{ij}\) with \(i \ne j\) are the off-diagonal variables. We say that a monomial with exponent matrix \(\alpha \) is a diagonal monomial if \(\alpha _{ij}=0\) for all \(i\ne j \in [n] \), and we say that it is an off-diagonal monomial if \(\alpha _{ii}=0\) for all \(i \in [n]\). By convention, we say that a constant is a diagonal monomial, but not an off-diagonal one.

Example 2.12

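Let \(f(Z)=\det (Z)\) in the polynomial ring \({\mathbb C}[Z]\) over the vector space \(\mathcal {S}_2^{{\mathbb C}}\). Then

\[f(Z) = z_{11}z_{22} - z_{12}z_{21},\]

which reads \(z_{11}z_{22}-z_{12}^2\) after identifying \(z_{21}\) with \(z_{12}\).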

The monomial \(z_{11}z_{22}\) is a diagonal monomial while the other one is an off-diagonal monomial.

Determinants are a prime example of psd-stable polynomials. The proof is included for completeness.

Lemma 2.13

\(f(Z)=\det (Z)\) is psd-stable.

Proof

Suppose that f is not psd-stable, that is, there exist real symmetric matrices A and B with B positive definite such that \(f(A+i B)=0\). Since B is positive definite, it is invertible and has a positive definite square root \(B^{\frac{1}{2}}\), so \(0=f(A +i B) = \det (A+iB) = \det (B) \det (B^{-\frac{1}{2}}AB^{-\frac{1}{2}}+ i I_n)\), where \(I_n\) denotes the identity matrix of size n. As \(\det (B)\ne 0\), \(-i\) is a root of the characteristic polynomial of \(B^{-\frac{1}{2}}AB^{-\frac{1}{2}}\) and thus an eigenvalue of it: a contradiction, since a real symmetric matrix has only real eigenvalues. \(\square \)
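Combining Lemma 2.13 with Proposition 2.9, for every real symmetric X and positive definite Y the real univariate polynomial \(t\mapsto \det (X+tY)\) must be stable, that is, real-rooted. For \(2\times 2\) matrices this is a quadratic \(at^2+bt+c\) with \(a=\det (Y)>0\), so real-rootedness amounts to a nonnegative discriminant. The following Python sketch samples random matrices and checks this. The sampling scheme (\(Y=MM^T+0.1\cdot I\)) and the number of samples are our own choices, and such a test is only a sanity check, not a proof.

```python
import random


def pencil_coefficients(X, Y):
    """Return (a, b, c) with det(X + t*Y) = a t^2 + b t + c for symmetric 2x2 X, Y."""
    (x11, x12), (_, x22) = X
    (y11, y12), (_, y22) = Y
    a = y11 * y22 - y12 ** 2                   # det(Y)
    b = x11 * y22 + x22 * y11 - 2 * x12 * y12
    c = x11 * x22 - x12 ** 2                   # det(X)
    return a, b, c


def random_symmetric():
    p, q, r = (random.uniform(-1, 1) for _ in range(3))
    return [[p, q], [q, r]]


def random_positive_definite():
    m1, m2, m3, m4 = (random.uniform(-1, 1) for _ in range(4))
    # M M^T + 0.1 I is positive definite.
    return [[m1 * m1 + m2 * m2 + 0.1, m1 * m3 + m2 * m4],
            [m1 * m3 + m2 * m4, m3 * m3 + m4 * m4 + 0.1]]


random.seed(1)
smallest_discriminant = float("inf")
for _ in range(10000):
    a, b, c = pencil_coefficients(random_symmetric(), random_positive_definite())
    smallest_discriminant = min(smallest_discriminant, b * b - 4 * a * c)
print(smallest_discriminant)  # expected to be >= 0 up to rounding
```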

Contrary to the usual stability notion, monomials are not necessarily psd-stable. In fact, no monomial containing an off-diagonal variable is psd-stable, since such a monomial vanishes at \(Z=i\cdot I_n\), whose imaginary part \(I_n\) is positive definite.

Psd-stability can be viewed as stability with respect to the Siegel upper half-space \(\mathcal {H}_\mathcal {S} \ = \{ A \in {\mathbb C}^{n \times n} \text { symmetric } \,: \, \textsf{Im}(A) \text { is positive definite}\}\). The Siegel upper half-space occurs in algebraic geometry and number theory as the domain of modular forms.

3 Preservers for conic stability

We provide generalizations of the stability preservers from Sect. 2 to conic stability with respect to some closed, convex cone K. Our focus is on general cones and on the subclass of polyhedral cones. A main result in this section is Theorem 3.4, a conic version of the Lieb-Sokal Lemma. In Sect. 4, the specific case of preservers for psd-stability will be studied.

A conical analogue of property b) from Proposition 2.1, scaling, holds trivially since K is a cone: for any \(c\in {\mathbb C}\) and \(a\in {\mathbb R}_{\ge 0}\), the polynomial \(c\cdot f(az_1,\ldots ,az_n)\) is K-stable or identically zero. We now study the preservation of conical stability under directional derivatives. For a vector \({\textbf{v}}\in {\mathbb R}^n {\setminus } \{\textbf{0}\}\), denote by \(\partial _{{\textbf{v}}}\) the directional derivative in direction \({\textbf{v}}\), i.e., \(\partial _{\textbf{v}}f({\textbf{z}}) = \frac{d}{dt}f({\textbf{z}}+ t{\textbf{v}}) \big |_{t=0}\).

Lemma 3.1

Let \(f\in {\mathbb C}[{\textbf{z}}]\) be K-stable. For \({\textbf{v}}\in K\), the polynomial \(\partial _{\textbf{v}}f\) is K-stable or identically zero.

In the homogeneous case, this statement follows from the concept of a Renegar derivative [21] for hyperbolic polynomials.

Proof

Let f be K-stable and \({\textbf{v}}\in K\). Assume that \(\partial _{\textbf{v}}f\) is neither 0 nor K-stable. Then there is some \({\textbf{z}}\in {\mathbb C}^n\) such that \(\textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K\) and \(\partial _{\textbf{v}}f({\textbf{z}})=0\).

To aim at a contradiction to the univariate Gauß-Lucas Theorem, we construct through a substitution in f a univariate polynomial \(g \not \equiv 0\), which has a non-real zero. Since \(\textsf{Im}({\textbf{z}}) \in {{\,\textrm{relint}\,}}(K)\), there exists some \(\varepsilon > 0\) such that \(\textsf{Im}({\textbf{z}}) - \varepsilon {\textbf{v}}\in {{\,\textrm{relint}\,}}K\). Define the univariate polynomial \(g: t\mapsto f({\textbf{z}}-i \varepsilon {\textbf{v}}+t{\textbf{v}})\). If \(g \equiv 0\), then \(f({\textbf{z}}) = g(i \varepsilon ) = 0\) in contradiction to the K-stability of f. Hence, \(g \not \equiv 0\). Since \(\textsf{Im}({\textbf{z}}) - \varepsilon {\textbf{v}}\in {{\,\textrm{relint}\,}}(K)\) and \({\textbf{v}}\in K\), the univariate polynomial g is stable: if it had any root t with \(\textsf{Im}(t)>0\), \({\textbf{z}}-i\varepsilon {\textbf{v}}+t{\textbf{v}}\) would be a root of f, but its imaginary part \(\textsf{Im}({\textbf{z}})-\varepsilon {\textbf{v}}+\textsf{Im}(t) {\textbf{v}}\) is in the relative interior of the cone K, a contradiction to the conical stability of f. Moreover, g is not constant, because \(\partial _{\textbf{v}}f \not \equiv 0\). Hence, by the Gauß-Lucas Theorem, the derivative \(g'\) is stable. Since

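\[g'(i\varepsilon ) = \partial _{\textbf{v}}f({\textbf{z}}-i\varepsilon {\textbf{v}}+i\varepsilon {\textbf{v}}) = \partial _{\textbf{v}}f({\textbf{z}}) = 0 \quad \text {and} \quad \textsf{Im}(i\varepsilon )=\varepsilon >0,\]

we obtain a contradiction to the stability of \(g'\). \(\square \)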

There is a natural generalization of property (d) in Proposition 2.1 to conic stability.

Lemma 3.2

Let \(f \in {\mathbb C}[\textbf{z}]\) be K-stable, \({\textbf{a}}\in {\mathbb C}^n\) and \(\textbf{v}^{(1)}, \ldots , \textbf{v}^{(k)} \in {\mathbb R}^n\). Further set \(K' = \textrm{pos}\{\textbf{v}^{(1)}, \ldots , \textbf{v}^{(k)} \}\) and assume that \(\textsf{Im}(\textbf{a}) + K' \subseteq K\). Then the polynomial \(g \in {\mathbb C}[\textbf{z}]\) defined by

\[g(z_1,\ldots ,z_k) {:=} f\Big ({\textbf{a}}+\sum _{j=1}^{k} z_j {\textbf{v}}^{(j)}\Big )\]

is stable or the zero polynomial.

Setting \(K = {\mathbb R}^n_{\ge 0}\), \(k=n-1\), \({\textbf{v}}^{(j)} = \textbf{e}^{(j+1)}\) with the \((j+1)\)-th unit vector \(\textbf{e}^{(j+1)}\), \(1 \le j \le n-1\), \(a_1 = b\) and \(a_2 = \cdots = a_n = 0\) yields Proposition 2.1 (d).

Proof

First consider the special case where \(\textsf{Im}(\textbf{a}) + {{\,\textrm{relint}\,}}K' \subseteq {{\,\textrm{relint}\,}}K\), and assume that the polynomial \(g\) is neither zero nor stable. Then there exists \(\textbf{w} \in {\mathbb C}^k\) with \(\textsf{Im}(\textbf{w}) \in {\mathbb R}_{>0}^k\) and \(g(\textbf{w}) = 0\), and thus, \(f(\textbf{a}+\sum _{j=1}^{k} w_j \textbf{v}^{(j)}) = 0\). Since \(\textsf{Im}(\textbf{a}+\sum _{j=1}^{k} w_j \textbf{v}^{(j)}) = \textsf{Im}(\textbf{a}) + \sum _{j=1}^{k} \textsf{Im}(w_j) \textbf{v}^{(j)}\) and every strictly positive combination of \(\textbf{v}^{(1)}, \ldots , \textbf{v}^{(k)}\) lies in \({{\,\textrm{relint}\,}}K'\), this imaginary part lies in \(\textsf{Im}(\textbf{a}) + {{\,\textrm{relint}\,}}K' \subseteq {{\,\textrm{relint}\,}}K\). Hence f is not K-stable, a contradiction.

The general case (\(\textsf{Im}(\textbf{a}) + K' \subseteq K\)) follows from Hurwitz’ Theorem. \(\square \)

In the rest of this section, we present and prove a generalization of the Lieb-Sokal Lemma (Proposition 2.2) to conic stability. In the usual Lieb-Sokal Lemma, we take a partial derivative of a polynomial which has degree at most 1 in the corresponding variable. To formulate a similar result for arbitrary cones, we take a directional derivative in a direction lying in the cone, since these directional derivatives preserve conic stability by Lemma 3.1. To this end, we need a generalized notion of degree with respect to an arbitrary direction.

Definition 3.3

For \({\textbf{v}}\in {\mathbb R}^n\), we call \(\rho _{\textbf{v}}(f)\) the degree of f in direction \(\textbf{v}\), defined as the degree of the univariate polynomial \(f({\textbf{w}}+t{\textbf{v}})\in {\mathbb C}[t]\) for generic \({\textbf{w}}\in {\mathbb C}^n\).

In particular, after taking the directional derivative in direction \({\textbf{v}}\) exactly \(\rho _{{\textbf{v}}}(f)+1\) times, we obtain the identically zero polynomial. The degree in the direction of a unit vector \({\textbf{e}}^{(j)}\) coincides with the degree \(\deg _j\) in the variable \(z_j\). We can now state the conical version of Lieb-Sokal stability preservation.

Theorem 3.4

(Conic Lieb-Sokal stability preservation) Let \(K'\) be given by \(K'=K\times {\mathbb R}_{\ge 0}\) and \(g({\textbf{z}})+yf({\textbf{z}})\in {\mathbb C}[{\textbf{z}},y]\) be \(K'\)-stable and such that \(\rho _{\textbf{v}}(f)\le 1\) for some \({\textbf{v}}\in K\). Then \(g-\partial _{\textbf{v}}f\) is K-stable or \(g-\partial _{\textbf{v}}f\equiv 0\).
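Since \(f({\textbf{w}}+t{\textbf{v}})=\sum _k \frac{t^k}{k!}\,\partial _{\textbf{v}}^k f({\textbf{w}})\), the degree \(\rho _{\textbf{v}}(f)\) from Definition 3.3, which enters the hypothesis \(\rho _{\textbf{v}}(f)\le 1\) of Theorem 3.4, equals the largest k with \(\partial _{\textbf{v}}^k f\not \equiv 0\). The Python sketch below uses this to compute \(\rho _{\textbf{v}}(f)\) exactly, without sampling a generic point. It assumes integer coefficients and directions (so cancellations are exact) and stores polynomials as dicts of exponent tuples; the representation and helper names are our own.

```python
def directional_derivative(f, v):
    """partial_v f = sum_i v_i * (d f / d z_i); f is a dict {exponent tuple: coefficient}."""
    result = {}
    for alpha, c in f.items():
        for i, vi in enumerate(v):
            if vi == 0 or alpha[i] == 0:
                continue
            beta = alpha[:i] + (alpha[i] - 1,) + alpha[i + 1:]
            result[beta] = result.get(beta, 0) + c * vi * alpha[i]
    # Drop terms that cancelled, so that the zero polynomial is the empty dict.
    return {beta: c for beta, c in result.items() if c != 0}


def directional_degree(f, v):
    """rho_v(f): the largest k such that the k-th derivative in direction v is nonzero."""
    f = {alpha: c for alpha, c in f.items() if c != 0}
    if not f:
        raise ValueError("f must be a nonzero polynomial")
    k = 0
    while True:
        f = directional_derivative(f, v)
        if not f:
            return k
        k += 1


f = {(1, 1): 1, (1, 0): 1}                                  # z1 z2 + z1
print(directional_degree(f, (1, 0)))                        # 1, equal to deg_1(f)
print(directional_degree(f, (1, 1)))                        # 2
print(directional_degree({(1, 0): 1, (0, 1): 1}, (1, -1)))  # 0: z1 + z2 is constant along (1, -1)
```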

We first establish a connection between a cone K and its lift \(K'\) into a higher-dimensional space, which we will use to prove Theorem 3.4.

Lemma 3.5

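Let \(f,g\in {\mathbb C}[{\textbf{z}}]\), where \(f\not \equiv 0\) is K-stable, and let \(K'=K\times {\mathbb R}_{\ge 0}\). Then \(g+yf\in {\mathbb C}[{\textbf{z}},y]\) is \(K'\)-stable if and only if

\[\textsf{Im}\left( \frac{g({\textbf{z}})}{f({\textbf{z}})}\right) \ge 0 \quad \text {for all } {\textbf{z}}\in {\mathbb C}^n \text { with } \textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K.\]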

Proof

Let \(g+yf\) be \(K'\)-stable. Fix some \({\textbf{z}}\) with \(\textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K\). By K-stability, we have \(f(\textbf{z}) \ne 0\), and thus, we may consider \(g({\textbf{z}})+yf({\textbf{z}})\) as a univariate stable polynomial.

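Setting \(w = -g({\textbf{z}})/f({\textbf{z}})\), the stability of the univariate polynomial \(y \mapsto g(\textbf{z})+yf(\textbf{z})\), whose only root is w, implies \(\textsf{Im}(w) \le 0\). It follows that

\[\textsf{Im}\left( \frac{g({\textbf{z}})}{f({\textbf{z}})}\right) = -\textsf{Im}(w) \ge 0.\]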

Conversely, suppose \(\textsf{Im}\left( \frac{g({\textbf{z}})}{f({\textbf{z}})}\right) \ge 0\) for all \({\textbf{z}}\in {\mathbb C}^n\) with \(\textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K\). Assume \(g\not \equiv 0\), since otherwise \(yf({\textbf{z}})\) is clearly \(K'\)-stable. Let \({\textbf{z}}\in {\mathbb C}^n\) with \(\textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K\) and \(w\in {\mathbb C}\) with \(\textsf{Im}(w)>0\). Since \(\textsf{Im}(-w)<0\le \textsf{Im}\left( \frac{g({\textbf{z}})}{f({\textbf{z}})}\right) \), we have \(\frac{g({\textbf{z}})}{f({\textbf{z}})}\ne -w\). So \(g({\textbf{z}})+wf({\textbf{z}})\ne 0\), and \(K'\)-stability follows. \(\square \)

We can now complete the proof of Theorem 3.4.

Proof of Theorem 3.4

We begin by observing that g is K-stable or \(g\equiv 0\). Let \({\textbf{v}}\in K\) with \(\rho _{\textbf{v}}(f)\le 1\). If \(\partial _{\textbf{v}}f\equiv 0\), there is nothing to prove. So assume \(\partial _{\textbf{v}}f\not \equiv 0\), which implies \(f\not \equiv 0\). For a fixed \({\textbf{z}}\in {\mathbb C}^n\) with \(\textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K\), we may consider \(g({\textbf{z}})+yf({\textbf{z}})\) as a univariate polynomial in y. By Lemma 3.1, the polynomial \(f({\textbf{z}})=\partial _y(g({\textbf{z}})+yf({\textbf{z}}))\) is K-stable. For \({\textbf{z}}\in {\mathbb C}^n\) with \(\textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K\), \({\textbf{v}}\in K\) and \(y\in {\mathbb C}\) with \(\textsf{Im}(y)>0\), we have \(\textsf{Im}({\textbf{z}}-\frac{1}{y}{\textbf{v}})\in {{\,\textrm{relint}\,}}K\), because

\[\textsf{Im}\Big ({\textbf{z}}-\frac{1}{y}{\textbf{v}}\Big ) = \textsf{Im}({\textbf{z}}) + \frac{\textsf{Im}(y)}{|y|^2}\,{\textbf{v}}\in {{\,\textrm{relint}\,}}K + K \subseteq {{\,\textrm{relint}\,}}K.\]

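It follows that \(yf({\textbf{z}}-\frac{1}{y}{\textbf{v}})\) is \(K'\)-stable. Since \(\rho _{\textbf{v}}(f)\le 1\), there exist polynomials \(f_0\) and \(f_1\) with \(\rho _{\textbf{v}}(f_0)=\rho _{\textbf{v}}(f_1)=0\) and \(f({\textbf{z}})=f_0({\textbf{z}})+\langle {\textbf{v}},{\textbf{z}}\rangle \cdot f_1({\textbf{z}})\). Thus, the identity

\[yf\Big ({\textbf{z}}-\frac{1}{y}{\textbf{v}}\Big ) = yf_0({\textbf{z}}) + \big (y\langle {\textbf{v}},{\textbf{z}}\rangle - \langle {\textbf{v}},{\textbf{v}}\rangle \big ) f_1({\textbf{z}}) = yf({\textbf{z}})-\partial _{\textbf{v}}f({\textbf{z}})\]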

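implies the \(K'\)-stability of \(yf({\textbf{z}})-\partial _{\textbf{v}}f({\textbf{z}})\). Applying Lemma 3.5 to both \(g+yf\) and \(-\partial _{\textbf{v}}f+yf\) and adding the resulting inequalities gives

\[\textsf{Im}\left( \frac{g({\textbf{z}})-\partial _{\textbf{v}}f({\textbf{z}})}{f({\textbf{z}})}\right) = \textsf{Im}\left( \frac{g({\textbf{z}})}{f({\textbf{z}})}\right) + \textsf{Im}\left( \frac{-\partial _{\textbf{v}}f({\textbf{z}})}{f({\textbf{z}})}\right) \ge 0 \quad \text {for all } {\textbf{z}} \text { with } \textsf{Im}({\textbf{z}})\in {{\,\textrm{relint}\,}}K.\]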

Using Lemma 3.5 again, the \(K'\)-stability of \(g({\textbf{z}})-\partial _{\textbf{v}}f({\textbf{z}})+yf({\textbf{z}})\) follows. By specializing to \(y=0\) and using Proposition 2.1, we obtain that \(g({\textbf{z}})-\partial _{\textbf{v}}f({\textbf{z}})\) is K-stable or \(g({\textbf{z}})-\partial _{\textbf{v}}f({\textbf{z}})\equiv 0\). \(\square \)

Theorem 3.4 not only generalizes the usual Lieb-Sokal Lemma to the case of arbitrary cones, but also extends it to directional derivatives with respect to every direction in the nonnegative orthant. We can formulate this explicitly as the following refined version for the usual stability notion.

Corollary 3.6

(Refined Lieb-Sokal Lemma) Let \(g({\textbf{z}})+yf({\textbf{z}})\in {\mathbb C}[{\textbf{z}},y]\) be stable and assume \(\rho _{\textbf{v}}(f)\le 1\) for some \({\textbf{v}}\in {\mathbb R}^n_{\ge 0}\). Then \(g({\textbf{z}})-\partial _{{\textbf{v}}}f({\textbf{z}})\in {\mathbb C}[{\textbf{z}}]\) is stable or identically 0.

4 Preservers for psd-stability

In this section, we restrict to psd-stability. For a complex symmetric matrix \(Z\in \mathcal {S}^{{\mathbb C}}_n\), we write \(Z=X+iY\) with \(X,Y\in \mathcal {S}_n\). After collecting some elementary preservers, our main results of this section are the preservation of psd-stability under an inversion operation (see Theorem 4.3 and Corollary 4.7) and the preservation of psd-stability under taking initial forms with respect to positive definite matrices (see Theorem 4.10).

For a polynomial \(f \in {\mathbb C}[Z]\), let \(f_\textrm{Diag}\in {\mathbb C}[Z]\) denote the polynomial obtained from f by substituting all off-diagonal variables by 0. For \(i \in [n]\), let \(B_{ii}\) be the matrix which is 1 in entry (i, i) and zero otherwise, and for \(1 \le i \ne j \le n\), let \(B_{ij}\) be the matrix which is 1/2 in the entries (i, j) and (j, i) and zero otherwise. Writing \(\frac{\partial f}{\partial B}\) for the directional derivative \(\partial _B f\), for a polynomial \(f = \sum _{\alpha } c_{\alpha } Z^{\alpha } \in {\mathbb C}[Z]\) and its equivalent version \(\tilde{f} = \sum _{\alpha } c_{\alpha } \prod _{k=1}^n z_{kk}^{\alpha _{kk}} \prod _{k < l} z_{kl}^{2 \alpha _{kl}}\) in \({\mathbb C}[\{z_{kl}| 1\le k\le l \le n\}]\), we have the identities \(\frac{\partial f}{\partial B_{ii}} \big |_{z_{lk} {:=} z_{kl}} = \frac{\partial \tilde{f}}{\partial z_{ii}}\) and \(\frac{\partial f}{\partial B_{ij}} \big |_{z_{lk} {:=} z_{kl}} = \frac{1}{2} \frac{\partial \tilde{f}}{\partial z_{ij}}\) as symbolic expressions. To see this, it suffices to observe that for \(i < j\) and a monomial \(f(Z) = z_{ij}^{\alpha _{ij}} z_{ji}^{\alpha _{ji}} \in {\mathbb C}[Z]\), we have \(\tilde{f} = z_{ij}^{2 \alpha _{ij}}\) and

Substituting \(z_{ji}\) by \(z_{ij}\) gives \( \frac{\partial }{\partial B_{ij}} f(Z) \big |_{z_{ji} {:=} z_{ij}} = \alpha _{ij} z_{ij}^{2 \alpha _{ij}-1} \ = \ \frac{1}{2} \frac{\partial }{\partial z_{ij}} \tilde{f}. \)
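The monomial computation above can be checked mechanically. The following sketch (our own illustration, not code from the paper) treats \(z_{ij}\) and \(z_{ji}\) as independent variables x and y, applies \(\partial /\partial B_{ij} = \frac{1}{2}(\partial _x + \partial _y)\), substitutes y := x, and compares the result with \(\frac{1}{2}\partial _x \tilde{f}\) for \(\tilde{f} = x^{2a}\). The dictionary encoding of polynomials and all helper names are hypothetical choices made for this example.

```python
from fractions import Fraction

# A polynomial in the commuting variables x = z_ij and y = z_ji is a dict
# {(p, q): coefficient} standing for the sum of coefficient * x**p * y**q.


def partial_x(poly):
    return {(p - 1, q): c * p for (p, q), c in poly.items() if p > 0}


def partial_y(poly):
    return {(p, q - 1): c * q for (p, q), c in poly.items() if q > 0}


def partial_B(poly):
    """Directional derivative along B_ij (entries 1/2 at (i, j) and (j, i))."""
    out = {}
    for part in (partial_x(poly), partial_y(poly)):
        for key, c in part.items():
            out[key] = out.get(key, 0) + Fraction(1, 2) * c
    return {k: c for k, c in out.items() if c != 0}


def identify(poly):
    """Substitute y := x and return a univariate dict {degree: coefficient}."""
    out = {}
    for (p, q), c in poly.items():
        out[p + q] = out.get(p + q, 0) + c
    return {d: c for d, c in out.items() if c != 0}


def half_tilde_derivative(a):
    """(1/2) * d/dx of tilde f = x**(2a), as a univariate dict."""
    return {2 * a - 1: Fraction(1, 2) * 2 * a}


for a in range(1, 6):
    f = {(a, a): 1}                       # the monomial z_ij^a z_ji^a
    assert identify(partial_B(f)) == half_tilde_derivative(a)
```

Exact rational arithmetic via `Fraction` avoids any floating-point tolerance in the comparison.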

Lemma 4.1

(Elementary preservers for psd-stability) Let \(f\in {\mathbb C}[Z]\) be psd-stable.

-

(a)

Diagonalization: The polynomial \(Z \mapsto f_\textrm{Diag}(Z)\) is psd-stable.

-

(b)

Transformation: Let \(S\in {{\,\textrm{GL}\,}}_n({\mathbb R})\), then \(f(SZS^{-1})\) and \(f(SZS^T)\) are psd-stable.

-

(c)

Minorization: For \(J\subseteq [n]\), let \(Z_J\) be the symmetric \(|J| \times |J|\) submatrix of Z with index set J. Then \(f(Z_J)\), the polynomial on \(\mathcal {S}^{{\mathbb C}}_{|J|}\) obtained from f by setting to zero all variables with at least one index outside of J, is psd-stable or zero.

-

(d)

Specialization: For a fixed index \(i \in [n]\), let \(\hat{Z}_i\) be any matrix obtained from Z by assigning real values to \(z_{ij},z_{ji}\) for all indices \(j \ne i\) and a value from \(\mathcal {H}\) to \(z_{ii}\). Then \(f(\hat{Z}_i)\), viewed as polynomial on \(\mathcal {S}^{{\mathbb C}}_{n-1}\), is psd-stable or zero.

-

(e)

Reduction: For \(i,j \in [n]\), let \(\bar{Z}_{ij}\) be any matrix obtained from Z by choosing real values for \(z_{ik}=z_{ki}\) for \(k \ne i\) and setting \(z_{ii}{:=}z_{jj}\). Then \(f(\bar{Z}_{ij})\), viewed as polynomial on \(\mathcal {S}^{{\mathbb C}}_{n-1}\), is psd-stable or zero.

-

(f)

Permutation: Let \(\pi :[n]\rightarrow [n]\) be a permutation. Then \(f((Z_{\pi (j),\pi (k)})_{1\le j,k\le n})\) is a psd-stable polynomial on \(\mathcal {S}^{{\mathbb C}}_n\).

-

(g)

Differentiation: \(\partial _V f(Z)\) is psd-stable or zero for \(V \in \mathcal {S}_n^+\).

Proof

(a) Assume \(f_\textrm{Diag}\) is not psd-stable. Then there are real symmetric matrices A, B with \(B \succ 0\) and \(f_\textrm{Diag}(A+iB) = 0\). Let \(A'\) and \(B'\) be the matrices obtained from A and B by setting all off-diagonal entries to zero. Since the diagonal entries of the positive definite matrix B are positive, \(B'\) is positive definite. Since the only variables occurring in \(f_\textrm{Diag}\) are the diagonal ones and all off-diagonal entries of \(A'+iB'\) vanish, we have \(f(A'+iB') = f_\textrm{Diag}(A'+iB') = f_\textrm{Diag}(A+iB) = 0\). Hence, f is not psd-stable.

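(b) Since S is real, we have \(\textsf{Im}(SZS^T) = S\,\textsf{Im}(Z)S^T\) and \(\textsf{Im}(SZS^{-1}) = S\,\textsf{Im}(Z)S^{-1}\). Hence both transformations \(Z \mapsto SZS^T\) and \(Z \mapsto SZS^{-1}\) preserve the inertia of \(\textsf{Im}(Z)\) (by Sylvester's law of inertia and by the similarity invariance of eigenvalues, respectively) and thus also psd-stability.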

(c) Set \(k{:=}|J|\) and assume without loss of generality \(J = \{1, \ldots , k\}\). For \(\varepsilon > 0\), let \(g_{\varepsilon }\) be the polynomial on the space \(\mathcal {S}^{{\mathbb C}}_k\) defined by \(g_{\varepsilon }(Z) \ {:=} \ f \left( \textrm{Diag}(Z, i \varepsilon I_{n-k}) \right) \), where \(\textrm{Diag}(Z, i \varepsilon I_{n-k})\) is the block diagonal matrix with blocks Z and \(i \varepsilon I_{n-k}\). If \(\textsf{Im}(Z) \succ 0\), then this block diagonal matrix has a positive definite imaginary part, so the psd-stability of f implies the psd-stability of \(g_{\varepsilon }\) for all \(\varepsilon > 0\). Since \(g_{\varepsilon } \to g_0\) uniformly on compact sets as \(\varepsilon \to 0\), Hurwitz’ Theorem 2.3 gives the desired result, because \(f(Z_J) = g_0(Z)\).

(d) is obvious, (e) and (f) are similar to (c), and (g) is the special case of Lemma 3.1 when K is the psd-cone. \(\square \)
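The linear algebra behind parts (a) and (b) can be illustrated numerically. The sketch below (an illustration on randomly chosen matrices, assuming NumPy is available; it is not a proof) builds a complex symmetric Z with positive definite imaginary part and checks that a real congruence \(Z \mapsto SZS^T\) and the passage to the diagonal part keep the imaginary part positive definite. The helper `is_pos_def` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 4


def is_pos_def(M, tol=1e-10):
    """Positive definiteness of a real symmetric matrix via its eigenvalues."""
    return bool(np.all(np.linalg.eigvalsh(M) > tol))


# A complex symmetric Z = X + iY with Y positive definite.
G = rng.standard_normal((n, n))
X = G + G.T
H = rng.standard_normal((n, n))
Y = H @ H.T + n * np.eye(n)
Z = X + 1j * Y

# (b) A real congruence keeps Z symmetric and Im(Z) positive definite,
# because Im(S Z S^T) = S Y S^T for real S (Sylvester's law of inertia).
S = rng.standard_normal((n, n)) + n * np.eye(n)   # random, invertible with probability one
W = S @ Z @ S.T
assert np.allclose(W, W.T)
assert is_pos_def(W.imag)

# (a) Zeroing all off-diagonal entries keeps the imaginary part positive
# definite, since the diagonal entries of Y are positive.
Z_diag = np.diag(np.diag(Z))
assert is_pos_def(Z_diag.imag)
```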

The diagonalization property from Lemma 4.1 plays a central role in the theory of psd-stable polynomials, since it establishes connections to the usual stability notion and also gives further insights into the monomial structure of psd-stable polynomials.

Corollary 4.2

Let \(f \in {\mathbb C}[Z]\) be psd-stable. Then:

-

(a)

The polynomial \((z_{11}, z_{22}, \ldots , z_{nn})\mapsto f_\textrm{Diag}(Z)\) is stable in \({\mathbb C}[z_{11},z_{22}, \ldots , z_{nn}]\).

-

(b)

If \(f(0)=0\), i.e., if f does not have a constant term, then there is a monomial in f consisting only of diagonal variables of Z.

-

(c)

If f is homogeneous, then

- (c1):

-

the sum of the coefficients of all diagonal monomials of f is nonzero.

- (c2):

-

all nonzero coefficients of diagonal monomials of f have the same phase.

Proof

(a) By Lemma 4.1 (a), \(f_\textrm{Diag}(Z)\) is psd-stable; in particular, \(f_\textrm{Diag}(Z)\not \equiv 0\). Now it suffices to observe that \(f_\textrm{Diag}(Z)\ne 0\) whenever Z is a diagonal matrix whose diagonal entries all have positive imaginary part, since then \(\textsf{Im}(Z)\) is positive definite.

(b) Let \(f(0) = 0\). If each monomial in f contains an off-diagonal variable of Z, then \(f_\textrm{Diag}(Z) \equiv 0\), in contradiction to the psd-stability of \(f_\textrm{Diag}(Z)\).

(c1) The claim follows since the sum of the coefficients of all diagonal monomials is given by \(f(I_n)\), which cannot be zero because \(i^{\deg (f)}f(I_n) = f(i\cdot I_n)\ne 0\) by homogeneity and psd-stability.

(c2) The claim follows by combining (a) with Theorem 2.4. \(\square \)

When investigating the combinatorics of psd-stable polynomials in Sect. 5, we will refer to the following observation, which could also be considered as a special case of specialization: if f(Z) is psd-stable, then for a symmetric matrix X of real variables and any fixed real symmetric matrix \(B\succ 0\), the polynomial \(f(X+iB)\) does not have any real roots.

As the first main result in this section, we show the following preservation statement under inversion for psd-stability.

Theorem 4.3

(Psd-stability preservation under inversion) If \(f(Z)\in {\mathbb C}[Z]\) is psd-stable, then the polynomial \(\det (Z)^{\deg (f)}\cdot f(-Z^{-1})\) is psd-stable.

Here, the factor \(\det (Z)^{\deg (f)}\) serves to ensure that the product is a polynomial again. For the proof of Theorem 4.3, we begin with a technical lemma.

Lemma 4.4

Let \(A,B \in \mathcal {S}_n\) with \(B \succ 0\). Then the symmetric matrix \(C {:=} A+iB\) is invertible and the imaginary part matrix of the symmetric matrix \(C^{-1}\) is negative definite.

We will use the following elementary computation rules, which can be verified immediately.

Lemma 4.5

Assume that \(C=A+iB\) is invertible, and denote its inverse by \(W = U + iV\).

-

(a)

If A is invertible, then \(U =(A + B A^{-1} B)^{-1}\).

-

(b)

If B is invertible, then \(V = (-B-A B^{-1} A)^{-1}\).

We also use the following basic statement on eigenvalues in the proof of Lemma 4.4.

Lemma 4.6

Let \(A,B \in \mathcal {S}_n\) and set \(C = A+iB\). If \(B \succ 0\) then \(\lambda \in \mathcal {H}\) for all eigenvalues \(\lambda \) of C.

Proof

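Let \(B \succ 0\), and let \(\lambda \) be an eigenvalue of C with some corresponding eigenvector \(\textbf{v}\). Then

\[ \lambda \, {\textbf{v}}^H {\textbf{v}} = {\textbf{v}}^H C {\textbf{v}} = {\textbf{v}}^H A {\textbf{v}} + i\, {\textbf{v}}^H B {\textbf{v}}, \]

and since A and B are real symmetric, the numbers \({\textbf{v}}^H A {\textbf{v}}\) and \({\textbf{v}}^H B {\textbf{v}}\) are real. Hence \(\textsf{Im}(\lambda ) = \frac{{\textbf{v}}^H B {\textbf{v}}}{{\textbf{v}}^H {\textbf{v}}}\).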

Since \(B\succ 0\), we have \(\frac{{\textbf{v}}^H B {\textbf{v}}}{{\textbf{v}}^H {\textbf{v}}}>0\) and thus \(\lambda \in \mathcal {H}\). \(\square \)

Proof of Lemma 4.4

Let \(C = A + iB\) with \(A,B \in \mathcal {S}_n\) and \(B \succ 0\). By Lemma 4.6, every eigenvalue of C lies in \(\mathcal {H}\); in particular, 0 is not an eigenvalue, so C is invertible. The symmetry of \(C^{-1}\) is an immediate consequence of the invertibility. Indeed, \(C^{-1} C = I\) implies \(I = I^T = (C^{-1} C)^T = C^T (C^{-1})^T\). Since C is symmetric, the matrix \((C^{-1})^T\) is the inverse of C, that is, \((C^{-1})^T = C^{-1}\).

By Lemma 4.5 (b), the imaginary part of \(W=C^{-1}\) is given by \((-B - AB^{-1} A)^{-1}\). We observe that \(B^{-1}\) is positive definite and thus, since A is symmetric, \(A B^{-1} A = A^T B^{-1} A\) is positive semidefinite. Hence, \(-B - A B^{-1} A\) is negative definite. Since the inverse of that matrix is negative definite as well, the claim follows. \(\square \)
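Lemmas 4.4 to 4.6 are easy to sanity-check numerically. The following sketch, assuming NumPy and a single random instance, verifies that the eigenvalues of \(C = A + iB\) lie in the upper half-plane, that \(C^{-1}\) is symmetric with negative definite imaginary part, and that the closed forms of Lemma 4.5 agree with a direct inversion. The tolerances are NumPy's defaults, not anything prescribed by the text.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 5

G = rng.standard_normal((n, n))
A = G + G.T                                  # real symmetric
H = rng.standard_normal((n, n))
B = H @ H.T + np.eye(n)                      # real symmetric, positive definite
C = A + 1j * B

# Lemma 4.6: all eigenvalues of C lie in the open upper half-plane.
assert np.all(np.linalg.eigvals(C).imag > 0)

# Lemma 4.4: C is invertible, C^{-1} is symmetric, Im(C^{-1}) is negative definite.
W = np.linalg.inv(C)
U, V = W.real, W.imag
assert np.allclose(W, W.T)
assert np.all(np.linalg.eigvalsh(V) < 0)

# Lemma 4.5: closed forms for U = Re(C^{-1}) and V = Im(C^{-1}).
U_formula = np.linalg.inv(A + B @ np.linalg.inv(A) @ B)   # requires A invertible
V_formula = np.linalg.inv(-B - A @ np.linalg.inv(B) @ A)  # requires B invertible
assert np.allclose(U, U_formula)
assert np.allclose(V, V_formula)
```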

We can complete the proof of Theorem 4.3.

Proof of Theorem 4.3

The inverse of a symmetric matrix \(C=A+iB\) with positive definite imaginary part B has a negative definite imaginary part, as shown in Lemma 4.4. Hence, \(-C^{-1}\) has a positive definite imaginary part, and the psd-stability of f gives \(f(-C^{-1})\ne 0\) whenever \(B\succ 0\). Moreover, \(\det (C)\ne 0\) because C is invertible by Lemma 4.4, i.e., \(\det (Z)\) is psd-stable as well. Therefore, \(\det (Z)^{\deg (f)}f\left( - Z^{-1}\right) \) does not vanish whenever \(\textsf{Im}(Z)\succ 0\), that is, it is psd-stable. Note that the factor \(\det (Z)^{\deg (f)}\) ensures that \(\det (Z)^{\deg (f)}f\left( - Z^{-1}\right) \) is a polynomial. This directly follows from Cramer’s rule, saying \(Z^{-1} = \frac{1}{\det (Z)} \cdot {{\,\textrm{adj}\,}}(Z)\), where \({{\,\textrm{adj}\,}}(Z)\) denotes the adjugate matrix of Z, since every monomial of f has degree at most \(\deg (f)\). \(\square \)
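For a concrete instance of Theorem 4.3, take \(f = \det \) on \(\mathcal {S}_n\), so that \(\deg (f) = n\) and \(\det (Z)^n \det (-Z^{-1}) = (-1)^n \det (Z)^{n-1}\), again a polynomial; for \(f = \textrm{tr}\) on \(2\times 2\) matrices, Cramer's rule gives \(\det (Z)\,\textrm{tr}(-Z^{-1}) = -(z_{11}+z_{22})\). The NumPy sketch below (a numerical illustration on random inputs, with a hypothetical helper `inverted`) evaluates \(\det (Z)^{\deg (f)} f(-Z^{-1})\) at points with positive definite imaginary part and compares it with these closed forms.

```python
import numpy as np

rng = np.random.default_rng(2)


def random_upper_half(n):
    """Random complex symmetric matrix with positive definite imaginary part."""
    G = rng.standard_normal((n, n))
    H = rng.standard_normal((n, n))
    return (G + G.T) + 1j * (H @ H.T + np.eye(n))


def inverted(f, deg, Z):
    """Evaluate det(Z)**deg * f(-Z^{-1}) for a polynomial function f of degree deg."""
    return np.linalg.det(Z) ** deg * f(-np.linalg.inv(Z))


n = 3
Z = random_upper_half(n)

# f = det (degree n): the inverted polynomial equals (-1)^n det(Z)^(n-1).
val = inverted(np.linalg.det, n, Z)
assert np.isclose(val, (-1) ** n * np.linalg.det(Z) ** (n - 1))
assert abs(val) > 0

# f = trace (degree 1) on 2x2 matrices: det(Z) * tr(-Z^{-1}) = -(z11 + z22).
Z2 = random_upper_half(2)
assert np.isclose(inverted(np.trace, 1, Z2), -(Z2[0, 0] + Z2[1, 1]))
```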

The following is a slight generalization which resembles the existing formulation of the scalar version in Lemma 2.1.

Corollary 4.7

If Z is a symmetric block diagonal matrix with blocks \(Z_1, \ldots , Z_k\) and \(f(Z) = f(Z_1, \ldots , Z_k)\) is psd-stable, then \(\det (Z_1)^{\deg _{Z_1} f} \cdot f(-Z_1^{-1}, Z_2, \ldots , Z_k)\) is a psd-stable polynomial. Here, \(\deg _{Z_1} f\) denotes the total degree of f with respect to the variables from the block \(Z_1\).

We close the section with a brief discussion and our second main result of this section on the preservation of the psd-stability of a polynomial \(f\in {\mathbb C}[Z]\) when passing to an initial form. For \(f=\sum _{\alpha \in S}c_\alpha Z^\alpha \in {\mathbb C}[Z]\), the initial form of f with respect to a functional W in the dual space \(\mathcal {S}_n^*\) is defined as

\[ {{\,\textrm{in}\,}}_W(f) {:=} \sum _{\alpha \in S_W} c_\alpha Z^\alpha , \]

where \(S_W{:=}\{\alpha \in S \,: \, \langle W, \alpha \rangle _F = \max _{\beta \in S} \langle W, \beta \rangle _F \}\) and \(\langle \cdot ,\cdot \rangle _F\) is the Frobenius product. The following example shows that Theorem 2.5 on stability preservation under taking the initial form for any non-zero functional \({\textbf{w}}\) does not generalize to the case of psd-stability.
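The initial form can be computed directly from the definition. In this sketch we assume a particular encoding: a monomial is stored as a tuple of pairs ((k, l), d) giving the degree d of the variable \(z_{kl}\), k ≤ l, as in \(\tilde{f}\). For a symmetric exponent matrix \(\alpha \), the degree of \(z_{kl}\) in \(\tilde{f}\) is \(2\alpha _{kl}\) for k < l, so \(\langle W,\alpha \rangle _F = \sum _{k\le l} w_{kl}\cdot \deg (z_{kl})\). The example is our own and not Example 4.8: for the \(2\times 2\) determinant \(z_{11}z_{22}-z_{12}^2\) (indices shifted to start at 0 in the code), W = I gives the initial form \(z_{11}z_{22}\), while the indefinite W with zero diagonal and ones off the diagonal gives \(-z_{12}^2\), which vanishes at \(iI_2\).

```python
def weight(W, monomial):
    """<W, alpha>_F for a monomial encoded as ((k, l), d) pairs, k <= l, where
    d is the degree of z_kl; under the assumed encoding this is sum w_kl * d."""
    return sum(W[k][l] * d for (k, l), d in monomial)


def initial_form(f, W):
    """Keep exactly the terms of f whose monomials maximize <W, alpha>_F."""
    weights = {mono: weight(W, mono) for mono in f}
    top = max(weights.values())
    return {mono: c for mono, c in f.items() if weights[mono] == top}


def evaluate(f, Z):
    """Evaluate an encoded polynomial at a symmetric matrix Z (nested lists)."""
    total = 0
    for mono, c in f.items():
        term = c
        for (k, l), d in mono:
            term *= Z[k][l] ** d
        total += term
    return total


# The 2x2 determinant z_00 z_11 - z_01^2 (0-based indices).
det2 = {(((0, 0), 1), ((1, 1), 1)): 1, (((0, 1), 2),): -1}

in_I = initial_form(det2, [[1, 0], [0, 1]])   # W = I (positive definite)
in_W = initial_form(det2, [[0, 1], [1, 0]])   # indefinite W
value = evaluate(in_W, [[1j, 0], [0, 1j]])    # evaluate at Z = i * I_2
```

With the positive definite W = I only \(z_{11}z_{22}\) survives, which is consistent with Theorem 4.10; the indefinite choice keeps only \(-z_{12}^2\), whose zero at \(iI_2\) shows that it is not psd-stable.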

Example 4.8

The polynomial \(f\in {\mathbb C}[Z]\) given by

is a psd-stable polynomial. However, taking the initial form \({{\,\textrm{in}\,}}_W(f)\) for

yields \({{\,\textrm{in}\,}}_W(f)= - z_{11}z_{23}^2 - z_{22}z_{13}^2+ 2 z_{12}z_{13}z_{23}\), which vanishes at \(Z= i I_3\). Since the imaginary part of \(i I_3\) is a positive definite matrix, \({{\,\textrm{in}\,}}_W(f)\) is not psd-stable.

To answer the natural question of whether psd-stability is preserved by passing over to the initial form with respect to certain symmetric matrices, we show that it is enough for W to be positive definite.

For \(\lambda >0\) and a matrix \(W\in \mathcal {S}_n\), let \(\lambda ^W\) denote the matrix given by \((\lambda ^W)_{ij}{:=}\lambda ^{w_{ij}}\). Furthermore, for two matrices \(A,B\in \mathcal {S}_n\) let \(A\circ B\) denote the Hadamard product of A and B with \((A\circ B)_{ij}=a_{ij}\cdot b_{ij}\). Generalizing the notation \(|\cdot |\) for vectors, we write \(|\alpha |=\sum _{1\le i,j\le n} |\alpha _{ij}|\) for an exponent matrix \(\alpha \).

Lemma 4.9

Let \(f\in {\mathbb C}[Z]\) be psd-stable and let \(W\in \mathcal {S}_n\) be such that there exists some \(\lambda _0 >0\) such that for every \(\lambda >\lambda _0\), \(\lambda ^W\) is positive definite. Then \({{\,\textrm{in}\,}}_W(f)\) is psd-stable.

Proof

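The Schur product theorem states that the Hadamard product of two positive definite matrices is positive definite. Thus, we have \(\lambda ^W\circ A\succ 0\) for all \(A\succ 0\) and \(\lambda >\lambda _0\). Let \(\varphi =\max \left\{ \langle \alpha ,W \rangle _F:\alpha \in \text {supp}(f) \right\} \) and define the polynomial \(f_\lambda (Z){:=}\frac{1}{\lambda ^\varphi }f(\lambda ^W\circ Z)\). This is psd-stable for any \(\lambda > \lambda _0\), since \(\textsf{Im}(\lambda ^W\circ Z) = \lambda ^W\circ \textsf{Im}(Z)\) is positive definite whenever \(\textsf{Im}(Z)\) is, by the previous observation. Let \(\mu {:=}\max \{\langle \alpha , W\rangle _F:\alpha \in \text {supp}(f), \langle \alpha ,W\rangle _F<\varphi \}\) and \(\delta {:=}\varphi -\mu >0\). Since \((\lambda ^W\circ Z)^\alpha = \lambda ^{\langle \alpha ,W\rangle _F} Z^\alpha \), we have, for any compact subset \(C\subseteq \mathcal {S}^{{\mathbb C}}_n\) and \(\lambda \ge 1\),

\[ \sup _{Z\in C} \left| f_\lambda (Z) - {{\,\textrm{in}\,}}_W(f)(Z) \right| = \lambda ^{-\delta } \sup _{Z\in C} \Big| \sum _{\alpha :\, \langle \alpha ,W\rangle _F<\varphi } c_\alpha \lambda ^{\langle \alpha ,W\rangle _F-\mu } Z^\alpha \Big| \xrightarrow{\lambda \to \infty } 0, \]

since the supremum on the right-hand side is bounded (all exponents \(\langle \alpha ,W\rangle _F-\mu \) are nonpositive). By Hurwitz’ Theorem 2.3, \({{\,\textrm{in}\,}}_W(f)\) is psd-stable. \(\square \)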

Theorem 4.10

Let \(f\in {\mathbb C}[Z]\) be psd-stable and \(W \in \mathcal {S}_n\) be positive definite, then \({{\,\textrm{in}\,}}_W(f)\) is psd-stable.

Proof

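Let \(W \in \mathcal {S}_n\) be positive definite. By the Schur product theorem, \(W^{\circ k}\), the k-fold Hadamard product of W, is positive definite for all \(k \ge 1\), and hence so is \(\exp [W]{:=}\sum _{k=0}^\infty \frac{W^{\circ k}}{k!}\), with the convention that \(W^{\circ 0}\) is the all-ones matrix (which is positive semidefinite). For \(\lambda >1\), we have \(\ln (\lambda )\cdot W\succ 0\). Therefore,

\[ \lambda ^W = \exp [\ln (\lambda )\cdot W] = \sum _{k=0}^\infty \frac{(\ln (\lambda )\cdot W)^{\circ k}}{k!}, \]

whose entries are \(\lambda ^{w_{ij}} = e^{\ln (\lambda )\, w_{ij}}\), is positive definite. The claim now follows from Lemma 4.9 with \(\lambda _0=1\). \(\square \)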
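The key step in the proof of Theorem 4.10 is that \(\lambda ^W\) is positive definite for positive definite W and \(\lambda > 1\). The NumPy sketch below (a numerical check on one hand-picked W, not a proof) confirms that \(\lambda ^W\) coincides with the entrywise exponential of \(\ln (\lambda )W\), that it is positive definite, and that its Hadamard product with another positive definite matrix stays positive definite (Schur product theorem). It also shows that the indefinite W with zero diagonal and ones off the diagonal gives a \(\lambda ^W\) with determinant \(1-\lambda ^2 < 0\), so the positivity hypothesis matters.

```python
import numpy as np


def entrywise_power(lam, W):
    """The matrix lambda^W with entries lambda ** w_ij."""
    return lam ** W


def is_pos_def(M, tol=1e-12):
    return bool(np.all(np.linalg.eigvalsh(M) > tol))


W = np.array([[2.0, 1.0, 0.5],
              [1.0, 2.0, 1.0],
              [0.5, 1.0, 2.0]])            # positive definite
A = np.array([[2.0, 1.0, 0.0],
              [1.0, 2.0, 1.0],
              [0.0, 1.0, 2.0]])            # positive definite
assert is_pos_def(W) and is_pos_def(A)

for lam in (1.5, 2.0, 10.0):
    P = entrywise_power(lam, W)
    # lambda^W is the Hadamard exponential exp[ln(lambda) * W], taken entrywise.
    assert np.allclose(P, np.exp(np.log(lam) * W))
    assert is_pos_def(P)
    assert is_pos_def(P * A)              # Schur product theorem

# Without positive definiteness the conclusion fails: det(lambda^W) = 1 - lambda^2.
W_bad = np.array([[0.0, 1.0], [1.0, 0.0]])
assert np.linalg.det(entrywise_power(2.0, W_bad)) < 0
```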

5 Combinatorics of psd-stable polynomials

This section is about combinatorial properties of the support of psd-stable polynomials, inspired by the results in [6, 9, 22] on the support of stable polynomials listed in Sects. 1 and 2. Theorem 5.1 gives a necessary condition on the support of any psd-stable polynomial. In Sects. 5.1 and 5.2, we characterize psd-stability of binomials and non-mixed polynomials and the class of polynomials of determinants. Finally, Sect. 5.3 discusses some aspects on the support of general psd-stable polynomials, provides a conjecture and verifies this conjecture for some special families of polynomials. We sometimes write both \(z_{ij}\) and \(z_{ji}\) with some \(i \ne j\), but both denote the same variable \(z_{ij}\) with \(i \le j\), as explained at the beginning of Sect. 4.

Theorem 5.1

If an off-diagonal variable \(z_{ij}\) (where \(i<j\)) occurs in a psd-stable polynomial \(f\in {\mathbb C}[Z]\), then the corresponding diagonal variables \(z_{ii}\) and \(z_{jj}\) must also occur in f.

This mimics the basic fact about positive semidefinite matrices that if an off-diagonal entry is nonzero, the corresponding diagonal entries must also be nonzero.

Proof

We prove the contrapositive. Suppose without loss of generality that \(z_{1n}\) is a variable appearing in f but \(z_{nn}\) is not. We can choose an \((n-1)\times (n-1)\) complex symmetric matrix A and \(a_{2n}, \ldots , a_{n-1,n} \in {\mathbb C}\) such that \(\textsf{Im}(A)\) is positive definite and such that substituting these values into f gives a non-constant univariate polynomial g in the variable \(z_{1n}\). The second condition can be met together with the first: since \(z_{1n}\) occurs in f, the coefficient of some positive power of \(z_{1n}\) is a nonzero polynomial in the remaining variables, and such a polynomial cannot vanish identically on the nonempty open set \(\mathcal {S}_{n-1}^{++} \times {\mathbb C}^{n-2}\) of admissible choices. Indeed, we can choose all real parts to be zero.

The univariate non-constant polynomial g has a complex root \(a_{1n}\). The assignment \(z_{ij}=a_{ij}\) for all \((i,j) \ne (n,n)\) gives therefore a root of f no matter what value we choose for \(z_{nn}\). We now claim that if we assign a value \(a_{nn}\) with \(\textsf{Im}(a_{nn})\) positive and large enough, the matrix \(A'\) which results from assigning these values to Z has a positive definite imaginary part.

Observe that by Sylvester’s criterion of leading principal minors, it is enough to check that the determinant of \(\textsf{Im}(A')\) is strictly positive; the remaining leading principal minors will necessarily be positive because they are minors of \(\textsf{Im}(A)\), which we chose positive definite. Now, by expanding the determinant along the last row, we obtain

\[ \det \textsf{Im}(A') = \textsf{Im}(a_{nn}) \cdot \det \textsf{Im}(A) + c, \]

where c is a constant that does not depend on \(a_{nn}\). Since \(\det \textsf{Im}(A)\) is positive, we can choose \(\textsf{Im}(a_{nn})\) positive and sufficiently large so that \(\det \textsf{Im}(A')\) is positive. Thus, \(f(A')=0\) with \(\textsf{Im}(A')\) positive definite, which proves that f is not psd-stable. \(\square \)
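The determinant expansion in this proof is a Schur complement computation: writing \(Y = \textsf{Im}(A)\) and y for the vector of imaginary parts of \(a_{1n},\ldots ,a_{n-1,n}\), one has \(\det \textsf{Im}(A') = \det (Y)\,(\textsf{Im}(a_{nn}) - y^T Y^{-1} y)\), so \(\textsf{Im}(A')\) is positive definite exactly when \(\textsf{Im}(a_{nn}) > y^T Y^{-1} y\). The NumPy sketch below checks this on random data; the variable names are our own.

```python
import numpy as np

rng = np.random.default_rng(3)
m = 3                                    # m = n - 1
G = rng.standard_normal((m, m))
Y = G @ G.T + np.eye(m)                  # Im(A), positive definite
y = 5 * rng.standard_normal(m)           # imaginary parts of a_1n, ..., a_{n-1,n}

threshold = y @ np.linalg.solve(Y, y)    # Schur complement bound y^T Y^{-1} y


def bordered(t):
    """Im(A') = [[Y, y], [y^T, t]] with t = Im(a_nn)."""
    return np.block([[Y, y[:, None]], [y[None, :], np.array([[t]])]])


for t in (threshold + 0.1, threshold + 10.0):
    M = bordered(t)
    assert np.all(np.linalg.eigvalsh(M) > 0)
    # det Im(A') is affine in Im(a_nn) with slope det Im(A) > 0.
    assert np.isclose(np.linalg.det(M), np.linalg.det(Y) * (t - threshold))

# Below the bound, Im(A') has a negative eigenvalue.
assert np.linalg.eigvalsh(bordered(threshold - 0.1)).min() < 0
```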

The argument used in the proof is connected to the ’positive (semi-)definite matrix completion problem’, see for example [5, Section 3.5]. In the special case of binomials Theorem 5.1 can be extended as follows.

Lemma 5.2

Let \(f(Z)=c_\alpha Z^\alpha +c_\beta Z^\beta \) be a psd-stable binomial. If the two monomials \(Z^\alpha \) and \(Z^\beta \) do not have a common factor, then either both consist only of diagonal variables, or one only of diagonal and the other only of off-diagonal variables.

Proof

\(Z^\alpha \) and \(Z^\beta \) cannot both be off-diagonal monomials, since this contradicts Theorem 5.1. It remains to be shown that neither monomial can contain both diagonal and off-diagonal variables. Suppose toward a contradiction that one of the two monomials did contain both, w.l.o.g. \(Z^\beta \), and choose j such that \(\beta _{jj}>0\). Since the monomials of f do not share any variable, \(\frac{\partial Z^\alpha }{\partial z_{jj}}\equiv 0\), where we use the derivative notation \(\frac{\partial }{\partial z_{ij}}\) on the symmetric matrix space as introduced at the beginning of Sect. 4.

Hence, \(g(Z){:=}\frac{\partial f}{\partial z_{jj}}=c_\beta \beta _{jj}Z^{\beta '}\) is a non-zero monomial with \(\beta '_{kl}=\beta _{kl}\) for \((k,l)\ne (j,j)\) and \(\beta '_{jj}=\beta _{jj}-1\), that is, g is a monomial containing an off-diagonal variable. Thus, \(g(i\cdot I_n)=0\), which is a contradiction since g is psd-stable by Lemma 3.1. \(\square \)

5.1 Binomials and non-mixed polynomials

We give characterizations of the support of psd-stable binomials. Some of the results will be stated for the family of non-mixed polynomials, which includes irreducible psd-stable binomials thanks to Lemma 5.2.

Definition 5.3

We call a polynomial \(f\in {\mathbb C}[Z]\) non-mixed if every monomial that occurs in f either consists only of diagonal variables or only of off-diagonal variables. We always write such a non-mixed polynomial as \(f= \sum _{\alpha \in A} c_\alpha Z^\alpha + \sum _{\beta \in B} c_\beta Z^\beta \), where A refers to the exponent matrices of diagonal monomials and B refers to the exponent matrices of off-diagonal monomials.

It is useful to consider this larger family because it is closed under directional derivatives while the family of binomials is not. The following two theorems are the main results in this subsection.

Theorem 5.4

Let \(f(Z)=\sum _{\alpha \in A} c_\alpha Z^\alpha + \sum _{\beta \in B} c_\beta Z^\beta \) be a homogeneous non-mixed polynomial of degree \(d\ge 3\) and assume \(c_\beta \ne 0\) for some \(\beta \in B\). Then f is not psd-stable.

The following theorem is a complete characterization of the support of psd-stable binomials, analogous to the classification of stable binomials from Theorem 2.7.

Theorem 5.5

Every psd-stable binomial is of one of the following forms:

-

(a)

Only diagonal variables appear in f and f satisfies the conditions of Theorem 2.7: \(f(Z)=Z^\gamma (c_1 Z^{\alpha _1}+c_2 Z^{\alpha _2})\) with \(|\alpha _1-\alpha _2|\le 2\) and at least one of \(\alpha _1,\alpha _2\) is non-zero,

-

(b)

\(f(Z)=Z^\gamma (c_1 z_{ii}z_{jj}+c_2 z_{ij}^2)\) with \(i < j\) and \(\frac{c_1}{c_2}\in {\mathbb R}\),

where \(c_1, c_2 \ne 0\) and \(Z^\gamma \) is a diagonal monomial.

This theorem shows that the only psd-stable binomials with off-diagonal variables are those described in (b): in particular, at most one off-diagonal variable occurs in a psd-stable binomial, and it has degree exactly 2.

The following lemma is a first step toward a proof of the main theorems and shows that the exponents of psd-stable binomials cannot be far apart. The proof relies on taking derivatives in direction \(V^{(ij)}\), with \(i \ne j\), which denotes the \(n\times n\) matrix with \(v_{ii} = v_{jj} = v_{ij} = v_{ji} = 1\) and 0 elsewhere. In terms of the basis matrices \(B_{ij}\) introduced at the beginning of Sect. 4, we have \(V^{(ij)} = B_{ii} + B_{jj} + 2 B_{ij}\).
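By the identities at the beginning of Sect. 4, the derivative in direction \(V^{(ij)}\) acts on \(\tilde{f}\) as \(\partial _{z_{ii}} + \partial _{z_{jj}} + \partial _{z_{ij}}\). For the \(2\times 2\) determinant \(\tilde{f} = z_{11}z_{22} - z_{12}^2\) this gives \(z_{11}+z_{22}-2z_{12}\). The short Python sketch below (our own check, with hypothetical function names) compares this formula with a central difference of \(t \mapsto \det (Z + tV^{(12)})\).

```python
def det2(z11, z12, z22):
    """tilde f for the 2x2 determinant: z11*z22 - z12**2."""
    return z11 * z22 - z12 ** 2


def derivative_V12(z11, z12, z22):
    """(d/dz11 + d/dz22 + d/dz12) applied to det2, i.e. the derivative of
    det in direction V^(12) = [[1, 1], [1, 1]]."""
    return z22 + z11 - 2 * z12


# Compare with a central difference of t -> det(Z + t V^(12)).
z11, z12, z22 = 1 + 2j, 0.5 - 1j, -3 + 1j
h = 1e-6
numeric = (det2(z11 + h, z12 + h, z22 + h) - det2(z11 - h, z12 - h, z22 - h)) / (2 * h)
assert abs(numeric - derivative_V12(z11, z12, z22)) < 1e-6
```

Applying the same operator once more to \(z_{11}+z_{22}-2z_{12}\) yields \(1+1-2 = 0\), reflecting that \(V^{(12)}\) has rank one.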

Lemma 5.6

Let \(f(Z)=c_\alpha Z^\alpha +c_\beta Z^\beta \) be a psd-stable binomial (thus \(c_{\alpha }, c_{\beta } \ne 0\)). Then \(\left| \left| \alpha \right| - \left| \beta \right| \right| \le 2\).

Proof

We may assume that the monomials of f do not have a common factor, since removing it affects neither \(|\alpha -\beta |\) nor \(||\alpha |-|\beta ||\). By Lemma 5.2, either both monomials are diagonal monomials or w.l.o.g. only \(Z^\beta \) is an off-diagonal monomial. If both monomials are diagonal, the claim follows directly from Theorem 2.6, because psd-stable polynomials involving only diagonal variables are stable polynomials.

Now assume that \(Z^\beta \) is an off-diagonal monomial. Then \(|\beta |\le |\alpha |\) follows from Theorem 5.1 after possibly taking derivatives in direction \(V^{(ij)}\) for some \(z_{ij}\) appearing in \(Z^\beta \). It remains to show \(|\alpha |\le |\beta |+2\). Assume to the contrary that \(|\alpha |-|\beta | \ge 3\). Choose i and j with \(i < j\) such that \(z_{ij}\) occurs in \(Z^\beta \). Since \(\frac{\partial f}{\partial V^{(ij)}}(Z)=\left( \frac{\partial }{\partial z_{ii}}+\frac{\partial }{\partial z_{jj}}\right) (c_\alpha Z^\alpha )+\frac{\partial }{\partial z_{ij}}( c_\beta Z^\beta )\) by the computation rules at the beginning of Sect. 4, we see that \(\frac{\partial f}{\partial V^{(ij)}}(Z)\) has at most two diagonal monomials, each of degree \(|\alpha |-1\), and exactly one off-diagonal monomial of degree \(|\beta |-1\). By applying this procedure consecutively \(|\beta |\) times, we obtain a polynomial \(g(Z)=\sum _{\alpha '}c_{\alpha '}Z^{\alpha '}+c_{\beta '}\), where \(\sum _{\alpha '}c_{\alpha '}Z^{\alpha '}\) is a homogeneous polynomial in diagonal variables of degree \(|\alpha |-|\beta |\ge 3\) and \(c_{\beta '}\) is a constant. Further g(Z) is psd-stable by Lemma 3.1. Since g does not involve any off-diagonal variables, it is a stable polynomial. This is a contradiction to Theorem 2.6, since the support of g does not satisfy the Two-Step Axiom. \(\square \)

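In the following, we show that most binomials are not psd-stable by explicitly constructing a root S (of the binomial or a directional derivative of it) whose imaginary part lies in the interior of the psd-cone. This root S will be a symmetric \(n\times n\) matrix of the form

\[ S = \begin{pmatrix} s+i & t & \cdots & t \\ t & s+i & \ddots & \vdots \\ \vdots & \ddots & \ddots & t \\ t & \cdots & t & s+i \end{pmatrix}, \qquad s,t\in {\mathbb R}. \tag{2} \]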

Since \(\textsf{Im}(S)=I_n \succ 0\), any polynomial with root S is not psd-stable.

Lemma 5.7

Let \(f(Z)=c_\alpha Z^\alpha +c_\beta Z^\beta \) be a binomial (thus, \(c_{\alpha }, c_{\beta } \ne 0\)) with \(|\alpha |>|\beta |\ge 1\) and such that \(Z^\alpha \) and \(Z^\beta \) do not have a common factor. Then f is not psd-stable.

Proof

Assume toward a contradiction that f is psd-stable. Since \(|\alpha | > |\beta | \ge 1\) and \(Z^\alpha \) and \(Z^\beta \) do not share a factor, we have that \(|\alpha -\beta | \ge 3\). If both monomials were diagonal monomials, psd-stability would imply stability, and \(|\alpha -\beta | \ge 3\) would yield a contradiction to Theorem 2.6.

Now assume that \(Z^\beta \) is an off-diagonal monomial. We will show that there are \(s,t\in {\mathbb R}\) such that S is a root of f, thus contradicting that f is psd-stable. By Lemma 5.6, the only possibly psd-stable cases are \(|\alpha |=|\beta |+1\) and \(|\alpha |=|\beta |+2\).

First consider the case \(|\beta | = 1\). Then \(|\alpha |\in \{2,3\}\). After substituting S and dividing by \(c_\alpha \), the equation \(f=0\) is of the form

\[ (s+i)^a + b\, t = 0, \tag{3} \]

where \(a{:=}|\alpha |\in \{2,3\}\) and \(b{:=}c_\beta /c_\alpha \in {\mathbb C}\setminus \{0\}\).

One may split the real and imaginary part of equation (3) to obtain two real equations, denoted by (Re) and (Im). First let \(a=2\), so that (Re) reads \(s^2-1+\textsf{Re}(b)\,t=0\) and (Im) reads \(2s+\textsf{Im}(b)\,t=0\). If \(\textsf{Im}(b)\ne 0\), there is a real solution \(s=\frac{\textsf{Re}(b)}{\textsf{Im}(b)}+\sqrt{\left( \frac{\textsf{Re}(b)}{\textsf{Im}(b)}\right) ^2+1}\) and \(t=-\frac{2s}{\textsf{Im}(b)}\). If \(\textsf{Im}(b)=0\), the solution \(t=\frac{1}{\textsf{Re}(b)}\) and \(s=0\) may be found. Now let \(a=3\). If \(\textsf{Im}(b)\ne 0\), (Im) implies \(t=\frac{1-3s^2}{\textsf{Im}(b)}\), which, substituted into (Re), gives the real cubic equation \(s^3-3s+\frac{\textsf{Re}(b)}{\textsf{Im}(b)}(1-3s^2)=0\), which has a real solution. If \(\textsf{Im}(b)=0\), (Im) becomes \(3s^2=1\), which has the real solutions \(s=\pm \frac{1}{\sqrt{3}}\). Substituting these into (Re) gives a linear function in t, which has a real solution as well.

Now consider the case \(|\beta |>1\). Choose i and j with \(i < j\) such that the variable \(z_{ij}\) occurs in f. Since f is psd-stable, its partial derivative in direction \(V^{(ij)}\) is psd-stable by Lemma 3.1. Further \(\frac{\partial f}{\partial V^{(ij)}}(Z)=\left( \frac{\partial }{\partial z_{ii}}+\frac{\partial }{\partial z_{jj}}\right) ( c_\alpha Z^\alpha )+\frac{\partial }{\partial z_{ij}}( c_\beta Z^\beta )\) is a non-mixed polynomial with the degree of each monomial reduced by 1 and exactly one off-diagonal monomial. Taking \(|\beta |-1\) consecutive derivatives in a similar way, we obtain a non-mixed polynomial of the form \(g(Z)=\sum _{\alpha '} c_{\alpha '} Z^{\alpha '} + c_{\beta '} Z^{\beta '}\) with \(|\beta '|=1\) and \(|\alpha '|\in \{2,3\}\). Substituting S into g gives equation (3). Thus, neither g nor f can be psd-stable. \(\square \)
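The case analysis for equation (3) is constructive, so it can be turned into a small solver. The following Python sketch (our own, with a hypothetical function name `forbidden_root`; the cubic case uses plain bisection rather than a closed formula) returns real s, t with \((s+i)^a + bt = 0\) for \(a\in \{2,3\}\) and \(b \ne 0\). With \(|\beta | = 1\), plugging these values into S gives a zero of f with \(\textsf{Im}(S)=I_n\).

```python
import math


def forbidden_root(a, b):
    """Real (s, t) with (s + i)**a + b*t = 0 for a in {2, 3} and complex b != 0,
    following the case analysis of equation (3)."""
    b = complex(b)
    if a == 2:
        if b.imag != 0:
            r = b.real / b.imag
            s = r + math.sqrt(r * r + 1)       # root of s^2 - 2rs - 1 = 0
            t = -2 * s / b.imag                # from (Im): 2s + Im(b) t = 0
        else:
            s, t = 0.0, 1 / b.real             # -1 + Re(b) t = 0
        return s, t
    if a == 3:
        if b.imag != 0:
            r = b.real / b.imag

            def p(x):                          # s^3 - 3s + r(1 - 3s^2)
                return x ** 3 - 3 * x + r * (1 - 3 * x * x)

            lo, hi = -1.0, 1.0                 # bracket a real root of the cubic
            while p(lo) > 0:
                lo *= 2
            while p(hi) < 0:
                hi *= 2
            for _ in range(200):               # bisection keeps p(lo) <= 0 <= p(hi)
                mid = (lo + hi) / 2
                if p(mid) < 0:
                    lo = mid
                else:
                    hi = mid
            s = (lo + hi) / 2
            t = (1 - 3 * s * s) / b.imag       # from (Im): 3s^2 - 1 + Im(b) t = 0
        else:
            s = 1 / math.sqrt(3)               # (Im): 3s^2 = 1
            t = -(s ** 3 - 3 * s) / b.real     # (Re) is linear in t
        return s, t
    raise ValueError("a must be 2 or 3")
```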

Now we prove Theorem 5.4, which shows that homogeneous non-mixed polynomials of high degree cannot be psd-stable.

Proof of Theorem 5.4

Assume to the contrary that f is a homogeneous non-mixed psd-stable polynomial of degree at least 3. By Remark 2.10, we can assume without loss of generality that all coefficients of f are real.

First assume the degree of f is \(d=3\). We will show a contradiction to psd-stability by explicitly finding a forbidden root of f.

Let \(a{:=}\sum _{\alpha \in A} c_\alpha \) and \(b{:=}\sum _{\beta \in B} c_\beta \). Note that a and b are real and \(a=f(I_n)\ne 0\) by Corollary 4.2 (c1). If \(b\ne 0\), w.l.o.g. we normalize so that \(a=1\). To obtain the desired forbidden root we look for a solution of the form S introduced above, that is, real solutions s, t for the equation \(f(S)=(s+i)^3+bt^3=0\). By splitting the equation into real and imaginary part, we obtain the system

\[ \begin{aligned} s^3 - 3s + b\, t^3&= 0, \qquad \text {(Re)} \\ 3s^2 - 1&= 0. \qquad \text {(Im)} \end{aligned} \]

Consider the positive real solution \(s^*=\frac{1}{\sqrt{3}}\) of (Im). Plugging this solution into (Re) gives a real cubic in t, which has a real solution \(t^*\).

If instead \(b=0\), we tweak matrix S to \(S'\) as follows: let \(\beta _0 \in B\) such that \(c_{\beta _0} \ne 0\), and let \(z_{ij}\) be a variable occurring in the monomial \(Z^{\beta _0}\). Then we let \(S'_{ij}=S_{ji}'=(1+\varepsilon )t\) for a small \(\varepsilon >0\). The remaining entries of \(S'\) are the same as those in S. Since \(b=\sum _\beta c_\beta =0\), we have that \(f(S')= (s+i)^3+ \varepsilon c_{\beta _0} t^3\), and \(\varepsilon c_{\beta _0} \ne 0\), which means we fall into the case above with the coefficient of \(t^3\) non-zero. We have thus constructed solutions violating psd-stability for any such degree 3 polynomial f.

Now let \(d>3\) and assume d is the smallest degree such that there is a polynomial f of the specified form which is psd-stable of degree d. Its partial derivative in any direction \(V^{(ij)}\) is psd-stable by Lemma 3.1. If we choose \((i,j), \ i\ne j\) such that the variable \(z_{ij}\) occurs in f, \(\frac{\partial f}{\partial V^{(ij)}}\) is a polynomial of the same form of degree \(d-1\): since \(\frac{\partial f}{\partial V^{(ij)}}(Z)=\left( \frac{\partial }{\partial z_{ii}}+\frac{\partial }{\partial z_{jj}}\right) (\sum _\alpha c_\alpha Z^\alpha )+\frac{\partial }{\partial z_{ij}}(\sum _\beta c_\beta Z^\beta )\), the coefficients of off-diagonal monomials are positive multiples of those of f and therefore there must be a non-zero one. This is a contradiction, since we assumed that d was the smallest degree which a psd-stable polynomial of this form could have. \(\square \)
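In the degree-3 case the forbidden root is explicit: \(s^* = 1/\sqrt{3}\) gives \(s^3-3s = -8/(3\sqrt{3})\), so (Re) reduces to \(t^3 = 8/(3\sqrt{3}\,b)\). The Python sketch below (our own illustration; the polynomial `f_example` is a hypothetical homogeneous non-mixed cubic with a = 1, not taken from the paper) computes \((s^*, t^*)\), builds the matrix S for n = 3, and checks that the cubic vanishes there although \(\textsf{Im}(S) = I_3\).

```python
import math


def degree3_root(b):
    """Real (s, t) with (s + i)**3 + b * t**3 = 0 for real b != 0."""
    s = 1 / math.sqrt(3)                           # positive root of (Im): 3s^2 - 1 = 0
    rhs = -(s ** 3 - 3 * s) / b                    # (Re) becomes t^3 = rhs
    t = math.copysign(abs(rhs) ** (1 / 3), rhs)    # real cube root
    return s, t


def S_matrix(n, s, t):
    """Diagonal entries s + i, all off-diagonal entries t, so Im(S) = I_n."""
    return [[complex(s, 1) if k == l else t for l in range(n)] for k in range(n)]


def f_example(Z, b):
    """Hypothetical non-mixed cubic with a = 1: z11 z22 z33 + b z12 z13 z23."""
    return Z[0][0] * Z[1][1] * Z[2][2] + b * Z[0][1] * Z[0][2] * Z[1][2]


b = -0.7
s, t = degree3_root(b)
value = f_example(S_matrix(3, s, t), b)
```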

We finally have all the tools needed to prove Theorem 5.5, which provides a complete classification of the support of psd-stable binomials.

Proof of Theorem 5.5

Let f be a psd-stable binomial. Then f can be written in the form \(f(Z)=Z^\gamma \tilde{f}(Z)\), where \(\tilde{f}(Z)=c_{\alpha } Z^\alpha +c_{\beta } Z^\beta \) is an irreducible psd-stable binomial and therefore also a non-mixed polynomial.

If all variables appearing in f are diagonal variables, then f is stable, and by Theorem 2.6 its support has to satisfy the Two-Step Axiom, which leads to \(|\alpha -\beta |\le 2\) in the case of binomials. Thus, now we can assume the occurrence of an off-diagonal monomial, say \(Z^{\beta }\). By the structure Theorem 5.1 and after possibly taking derivatives in direction \(V^{(ij)}\) for some \(z_{ij}\) appearing in \(Z^\beta \), we see \(|\beta | \le |\alpha |\).

In the homogeneous case, by Theorem 5.4, we have \(\deg (\tilde{f})\le 2\). The only possibility is given by \(\tilde{f}(Z)= c_1 z_{ii}z_{jj}+c_2 z_{ij}^2\) with \(c_1, c_2 \ne 0\) and \(i \ne j\), since otherwise we would get a contradiction to the structure Theorem 5.1. Clearly \(|\alpha -\beta |\le 2\) holds. Further we have \(\frac{c_1}{c_2}\in {\mathbb R}\) by Remark 2.10. In the non-homogeneous case, i.e., \(|\alpha | \ne |\beta |\), Lemma 5.7 implies \(\beta =0\) or \(|\beta |> |\alpha |\). If \(\beta =0\), then \(Z^\beta = 1\) involves no off-diagonal variable, contrary to our assumption. The case \(|\beta |>|\alpha |\) contradicts the earlier observation that \(|\beta | \le |\alpha |\). Therefore, there is no non-homogeneous psd-stable binomial involving off-diagonal variables. \(\square \)

From Theorem 5.5, we observe that psd-stable binomials cannot contain a monomial which is the product of different off-diagonal variables. This also holds for psd-stable homogeneous non-mixed polynomials.

Theorem 5.8

Let f be a psd-stable homogeneous non-mixed polynomial of degree 2. Then f is of the form \(f(Z)=\sum _{\alpha \in A}c_\alpha Z^\alpha +\sum _{i<j}c_{ij}z_{ij}^2\).

Proof

Let f(Z) be a psd-stable homogeneous non-mixed polynomial of degree 2 and assume to the contrary that there is a monomial \(z_{ij}z_{kl}\) in f involving distinct variables, that is, \(\{i,j\} \ne \{k,l\}\). Note that the index sets \(\{i,j\}\) and \(\{k,l\}\) can intersect. The order of the variable matrix must therefore be at least 3.

Consider \(S'\) as a modified version of S from (2) with \(S'_{ij}{:=}S'_{ji}{:=}t_1, \ S'_{kl}{:=}S'_{lk} {:=} t_2\) for real \(t_1\) and \(t_2\) and set all other off-diagonal entries of \(S'\) to 0, while the diagonal of \(S'\) is set to some complex value s. Thus, up to a factor, \(f(S')=0\) is of the form

\[ s^2 + c_1 t_1^2 + c_2 t_2^2 + c\, t_1 t_2 = 0 \tag{4} \]

with some constants \(c_1,c_2, c\) and \(c \ne 0\). Since f is hyperbolic due to its homogeneity and psd-stability, we may assume \(c_1,c_2,c\) to be real.

Since f is hyperbolic, the quadratic polynomial g in \(s,t_1,t_2\) on the left-hand side of (4) is hyperbolic as well. Hyperbolic quadratic polynomials have signature \((n-1,1)\) or \((1,n-1)\) ([11]; see also, e.g., [20]). Since the term \(s^2\) in g comes from a substitution into the terms \(z_{11}^2, \ldots , z_{nn}^2\), the representation matrix of g must have signature (2, 1). Hence, the lower right \(2 \times 2\)-matrix of the representation matrix

\[ \begin{pmatrix} 1 & 0 & 0 \\ 0 & c_1 & c/2 \\ 0 & c/2 & c_2 \end{pmatrix} \]

has signature (1, 1). If at least one of the \(c_i\) is positive, then we can choose real values for \(t_1\) and \(t_2\) such that \(s^2 + \gamma = 0\) with some \(\gamma > 0\), which gives among the two solutions for s one with positive imaginary part. If one of the \(c_i\), say, \(c_2\), is zero and \(c_1 \le 0\), then setting \(t_1 = 1\) and \(t_2 = \frac{1-c_1}{c}\) gives the solution \(s=i\) with positive imaginary part. It remains to consider the case \(c_1 < 0\), \(c_2 < 0\), in which the signature condition implies \((c/2)^2 > c_1 c_2\). By choosing \(t_1, t_2\) to satisfy \(t_1^2 = - \frac{1}{c_1}\), \(t_2^2 = - \frac{1}{c_2}\), we obtain

\[ c_1 t_1^2 + c_2 t_2^2 = -2 = -2\sqrt{(c_1 t_1^2)(c_2 t_2^2)}, \]

which can formally be viewed as the equality case of the arithmetic–geometric mean inequality. We can pick the signs of \(t_1, t_2\) such that \(c t_1 t_2 > 0\). Then the inequality \((c/2)^2 > c_1 c_2\) implies

\[ c\, t_1 t_2 = \frac{|c|}{\sqrt{c_1 c_2}} > 2 = \left| c_1 t_1^2 + c_2 t_2^2 \right| \]

(and the expression in the argument of the absolute value on the right-hand side is negative). Hence, we obtain \(s^2 + \gamma = 0\) with \(\gamma {:=} c_1 t_1^2 + c_2 t_2^2 + c\, t_1 t_2 > 0\), which gives among the two solutions for s one with positive imaginary part.

Altogether, we have constructed a zero \(S'\) of f with \(\textsf{Im}(S') \succ 0\), which contradicts the psd-stability of f. \(\square \)
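The case distinction in the proof of Theorem 5.8 is again constructive. The Python sketch below (our own, with a hypothetical function name) returns real \(t_1, t_2\) and s with \(\textsf{Im}(s) > 0\) solving (4), given real \(c_1, c_2\) and \(c \ne 0\); substituting into \(S'\) yields \(\textsf{Im}(S') = \textsf{Im}(s)\,I \succ 0\). It raises an error when \(c_1, c_2 < 0\) and \((c/2)^2 \le c_1c_2\), the situation excluded in the proof by the signature condition.

```python
import math


def degree2_root(c1, c2, c):
    """Real t1, t2 and s with Im(s) > 0 such that
    s^2 + c1*t1^2 + c2*t2^2 + c*t1*t2 = 0, for real c1, c2 and c != 0."""
    if c1 > 0:
        t1, t2 = 1.0, 0.0
    elif c2 > 0:
        t1, t2 = 0.0, 1.0
    elif c2 == 0:                                    # here c1 <= 0
        t1, t2 = 1.0, (1 - c1) / c
    elif c1 == 0:                                    # here c2 < 0
        t1, t2 = (1 - c2) / c, 1.0
    else:                                            # c1 < 0 and c2 < 0
        t1 = math.sqrt(-1 / c1)
        t2 = math.copysign(math.sqrt(-1 / c2), c)    # makes c*t1*t2 > 0
    gamma = c1 * t1 ** 2 + c2 * t2 ** 2 + c * t1 * t2
    if gamma <= 0:
        raise ValueError("requires (c/2)**2 > c1*c2 when c1, c2 < 0")
    return 1j * math.sqrt(gamma), t1, t2
```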

5.2 Polynomials of determinants

We show that the following class of polynomials of determinants satisfies a generalized jump system criterion with regard to psd-stability. Suppose that the symmetric matrix of variables Z is a diagonal block matrix with blocks \(Z_1, \dots , Z_k\). A polynomial of determinants is a polynomial in Z of the form \(f(Z_1, \ldots , Z_k)=\sum _{\alpha } c_\alpha \det (Z)^\alpha \), where we define \(\det (Z)^\alpha =\det (Z_1)^{\alpha _1}\cdots \det (Z_k)^{\alpha _k}\).

We say a polynomial of determinants \(f(Z_1, \ldots , Z_k)=\sum _{\alpha } c_\alpha \det (Z)^\alpha \) is written in standard form if the largest possible determinantal monomial is factored out, i.e., \(f(Z_1, \ldots , Z_k)= \det (Z)^\gamma \sum _\beta c_{\beta } \det (Z)^\beta =\det (Z)^\gamma \tilde{f}(Z)\) with all \(c_\beta \ne 0\). We investigate the following notion of support for polynomials of determinants.

Definition 5.9

Let \(f(Z_1, \ldots , Z_k)=\sum _{\alpha }c_\alpha \det (Z)^\alpha \) be a polynomial of determinants. Then the determinantal support is defined as \(\textrm{supp}_{\det } (f)=\{\alpha \in {\mathbb Z}_{\ge 0}^k:c_\alpha \ne 0 \}\).
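To make the standard form and Definition 5.9 concrete, here is a minimal Python sketch that stores a polynomial of determinants by its coefficients \(c_\alpha \), indexed by exponent tuples \(\alpha \); this representation and the name `standard_form` are illustrative choices, not taken from the text. Working at the level of determinantal monomials, \(\det (Z)^\gamma \) divides every \(\det (Z)^\alpha \) exactly when \(\gamma \le \alpha \) componentwise, so the largest common factor is given by the componentwise minimum over the determinantal support.

```python
def standard_form(coeffs):
    """Split f = sum_alpha c_alpha det(Z)^alpha as det(Z)^gamma * f_tilde.

    `coeffs` maps exponent tuples alpha (one entry per block Z_i) to c_alpha.
    Returns gamma and the coefficient dict of f_tilde.
    """
    supp = [alpha for alpha, c in coeffs.items() if c != 0]  # supp_det(f), Definition 5.9
    if not supp:
        raise ValueError("the zero polynomial has no standard form")
    # Largest determinantal monomial dividing every term: componentwise minimum.
    gamma = tuple(min(col) for col in zip(*supp))
    f_tilde = {tuple(a - g for a, g in zip(alpha, gamma)): coeffs[alpha] for alpha in supp}
    return gamma, f_tilde

# f = det(Z_1)^2 det(Z_2) + 3 det(Z_1) det(Z_2)^2 = det(Z_1) det(Z_2) (det(Z_1) + 3 det(Z_2))
print(standard_form({(2, 1): 1, (1, 2): 3}))  # ((1, 1), {(1, 0): 1, (0, 1): 3})
```

Terms with zero coefficient are dropped before \(\gamma \) is computed, matching the condition \(c_\alpha \ne 0\) in Definition 5.9.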

Note that the determinantal support specializes to the usual support when Z is a diagonal matrix, that is, when all \(Z_i\) are \(1\times 1\) blocks consisting of a single variable. Since diagonalization, i.e., restriction to the diagonal variables, preserves psd-stability by Lemma 4.1 a), we obtain the following corollary of Theorem 2.6 for the determinantal support of psd-stable polynomials of determinants.

Corollary 5.10

Let \(f(Z_1, \ldots , Z_k)=\sum _{\alpha }c_\alpha \det (Z)^\alpha \) be psd-stable. Then the determinantal support of f forms a jump system.

The next theorem shows that psd-stable polynomials of determinants have a very special structure.

Theorem 5.11

Let \(f(Z_1, \ldots , Z_k) = \det (Z)^\gamma \sum _{\beta \in B} c_\beta \det (Z)^\beta = \det (Z)^\gamma \tilde{f}(Z)\) be a psd-stable polynomial of determinants in standard form. Then any block \(Z_i\) appearing in \(\tilde{f}\) (that is, any \(Z_i\) such that there is \(\beta \in B\) with \(\beta _i >0\)) has size \(d_i \le 2\).

Further, for any block \(Z_i\) of size exactly 2, let \(C_i=\max _{\beta \in B} \beta _i\). Then for every \(\beta \in B\), also \(\beta + c {\textbf{e}}_i \in B\) for all integers c with \(- \beta _i \le c \le C_i -\beta _i\).

Proof

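Observe that, by construction, a variable of the block \(Z_i\) does not appear in any other block \(Z_j\). Since \(\det (Z_i)\) restricts to the product of the diagonal entries of \(Z_i\), this ensures that all vectors in the support of the polynomial \(\tilde{f}_\textrm{Diag}(Z)\), obtained by restricting \(\tilde{f}\) to the diagonal variables, are of the form

\[ \big (\underbrace{\beta _1, \dots , \beta _1}_{d_1 \text { times}}, \underbrace{\beta _2, \dots , \beta _2}_{d_2 \text { times}}, \dots , \underbrace{\beta _k, \dots , \beta _k}_{d_k \text { times}}\big ), \qquad (5) \]
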
where \(\beta =(\beta _1, \dots , \beta _k) \in B\) is an exponent vector of \(\det (Z)\) in \(\tilde{f}\) and \(d_i\) is the size of the matrix \(Z_i\) for each i.

Further, \(\tilde{f}_\textrm{Diag}\) is stable, and its support is therefore a jump system by Theorem 2.6. Suppose now that some block \(Z_i\), say \(Z_1\), has size \(d_1\ge 3\). Since f is in standard form and \(Z_1\) appears in \(\tilde{f}\), there are \(\beta \in B\) with \(\beta _1 >0\) and \(\beta ' \in B\) with \(\beta '_1=0\). The corresponding vectors \(\alpha = (\underbrace{\beta _1, \dots , \beta _1}_{d_1 \text { times}}, \beta _2, \dots )\) and \(\alpha ' = (\underbrace{0, \dots , 0}_{d_1 \text { times}}, \beta _2', \dots )\) lie in the support of \(\tilde{f}_\textrm{Diag}\), which is a jump system. Now \({\textbf{e}}_1 \) is a valid step from \(\alpha '\) toward \(\alpha \), but \(\alpha '+{\textbf{e}}_1=(1, 0, \dots , 0, \beta _2', \dots )\) is not of the form (5), so it cannot belong to the support of \(\tilde{f}_\textrm{Diag}\). By the Two-Step Axiom, there must then be a further step from \(\alpha ' + {\textbf{e}}_1\) toward \(\alpha \) that leads into the support. However, since \(d_1\ge 3\), every such step leads to a vector whose first \(d_1\) entries are not all equal, so none of these vectors can lie in the support of \(\tilde{f}_\textrm{Diag}\), contradicting the fact that it is a jump system. Thus, all blocks \(Z_i\) appearing in \(\tilde{f}\) have size \(d_i \le 2\).

Now suppose that \(d_i=2\) for some block \(Z_i\), without loss of generality \(Z_1\). As before, there are \(\beta \in B\) with \(\beta _1 >0\) and \(\beta ' \in B\) with \(\beta '_1=0\); further, if \(C_1=\max _{\beta \in B} \beta _1\), then there is also a vector \(\beta '' \in B\) with \(\beta ''_1=C_1\). Hence the support of \(\tilde{f}_\textrm{Diag}\) contains the vectors \(\alpha = (\beta _1, \beta _1, \dots )\), \(\alpha ' = (0, 0, \dots )\) and \(\alpha '' = (C_1, C_1, \dots )\). Now \(-{\textbf{e}}_1\) is a valid step from \(\alpha \) toward \(\alpha '\), leading to \(\alpha - {\textbf{e}}_1= (\beta _1 - 1, \beta _1, \dots )\). As before, \(\alpha - {\textbf{e}}_1\) does not belong to the support of \(\tilde{f}_\textrm{Diag}\) because it is not of the form (5). Thus, there must be a further step from \(\alpha - {\textbf{e}}_1\) toward \(\alpha '\) that leads into the support. The only such step that restores the form (5) is \(-{\textbf{e}}_2\), and thus \(\alpha -{\textbf{e}}_1 -{\textbf{e}}_2 \in \textrm{supp}(\tilde{f}_\textrm{Diag})\). Repeating this argument yields the statement of the theorem. \(\square \)
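Both parts of Theorem 5.11 can be illustrated on small examples by testing the Two-Step Axiom directly on diagonal supports of the form (5). The Python sketch below is a brute-force check under the reading of the axiom used in the proof: for \(\alpha , \beta \) in F and a unit step from \(\alpha \) toward \(\beta \) that leaves F, some second unit step toward \(\beta \) must re-enter F. The helper names are hypothetical, and the examples use a single block.

```python
def steps_toward(a, b):
    """Vectors a + s*e_i (s = +-1) that are strictly closer to b in the l1 norm."""
    result = []
    for i, (ai, bi) in enumerate(zip(a, b)):
        if ai != bi:
            s = 1 if bi > ai else -1
            result.append(a[:i] + (ai + s,) + a[i + 1:])
    return result

def is_jump_system(F):
    """Check the Two-Step Axiom for a finite set F of integer tuples."""
    F = set(F)
    for a in F:
        for b in F:
            for x in steps_toward(a, b):
                # If the first step leaves F, a second step toward b must re-enter F.
                if x not in F and not any(y in F for y in steps_toward(x, b)):
                    return False
    return True

def diagonal_support(B, sizes):
    """Form (5): repeat each block exponent beta_i exactly d_i times."""
    return {tuple(v for beta_i, d in zip(beta, sizes) for v in [beta_i] * d) for beta in B}

# One block of size 3 with B = {0, 1}: the Two-Step Axiom fails (first part of the theorem).
print(is_jump_system(diagonal_support({(0,), (1,)}, (3,))))        # False
# One block of size 2: B = {0, 1} and B = {0, 1, 2} pass, the gap in B = {0, 2} does not.
print(is_jump_system(diagonal_support({(0,), (1,)}, (2,))))        # True
print(is_jump_system(diagonal_support({(0,), (2,)}, (2,))))        # False
print(is_jump_system(diagonal_support({(0,), (1,), (2,)}, (2,))))  # True
```

The check is only a necessary condition for psd-stability; passing it does not certify that a polynomial of determinants is psd-stable.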

5.3 Considerations on the support of general psd-stable polynomials

By Theorem 2.6, the support of a stable polynomial forms a jump system. Hence, there cannot be large gaps in the support: if two vectors in the support are far apart, there is some other vector of the support between them. The families studied in Subsections 5.1 and 5.2 suggest that a similar phenomenon occurs for psd-stability: if the support has gaps that are too large, the polynomial cannot be psd-stable.

In order to quantify what a large gap should be, we make two observations. First, since restricting a psd-stable polynomial in the symmetric matrix variables Z to its diagonal yields a stable polynomial, the Two-Step Axiom holds between any two monomials involving only diagonal variables. A weaker statement is that any two such monomials are connected by a sequence of linear and double steps that does not leave the support of the polynomial, where a linear step multiplies a monomial by \(z_{ij}^{\pm 1}\) and a double step multiplies it by \(z_{ij}^{\pm 1}z_{kl}^{\pm 1}\).

Recall from Lemma 2.13 that a prominent example of psd-stable polynomials is the symmetric determinant \(\det (Z)\). In the symmetric matrix variables \((z_{ij})_{i \le j}\), its support has a special structure: it contains all monomials that can be obtained from \(z_{11} \cdots z_{nn}\) by transpositions of indices, that is, by successively multiplying the monomial by \(z_{ij}z_{kl}z_{ik}^{-1}z_{jl}^{-1}\) for some indices \(i, j, k, l \in [n]\). We call such a move on monomials a transposition step.
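For small n, the support of the symmetric determinant can be computed by brute force. The Python sketch below (helper names are illustrative, and indices are 0-based) expands \(\det (Z)\) over all permutations, merges permutations that give the same monomial in the symmetric variables, and confirms that no coefficient cancels and that every index occurs exactly twice in each monomial, with \(z_{kk}\) counting twice for k.

```python
from collections import Counter
from itertools import permutations

def perm_sign(perm):
    """Sign of a permutation of 0..n-1, computed from its number of inversions."""
    n = len(perm)
    inversions = sum(1 for a in range(n) for b in range(a + 1, n) if perm[a] > perm[b])
    return -1 if inversions % 2 else 1

def sym_var(i, j):
    """Symmetric variable z_ij as a normalized index pair (i <= j)."""
    return (min(i, j), max(i, j))

def symmetric_det(n):
    """Coefficients of det(Z) for symmetric n x n Z, as {monomial: coefficient}.

    A monomial is a sorted tuple of ((i, j), exponent) pairs with i <= j.
    """
    coeffs = Counter()
    for perm in permutations(range(n)):
        mono = Counter(sym_var(i, perm[i]) for i in range(n))
        coeffs[tuple(sorted(mono.items()))] += perm_sign(perm)
    return dict(coeffs)

def index_counts(mono):
    """How often each index occurs in a monomial (z_kk counts index k twice)."""
    counts = Counter()
    for (i, j), e in mono:
        counts[i] += e
        counts[j] += e
    return counts

for n in range(1, 6):
    det = symmetric_det(n)
    assert all(c != 0 for c in det.values())  # no cancellation
    assert all(index_counts(m) == Counter({k: 2 for k in range(n)}) for m in det)
```

For n = 3 this recovers \(z_{11}z_{22}z_{33} + 2z_{12}z_{13}z_{23} - z_{11}z_{23}^2 - z_{22}z_{13}^2 - z_{33}z_{12}^2\) (in 1-based notation): the coefficient 2 comes from a 3-cycle and its inverse, which give the same monomial.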

Lemma 5.12

Any two monomials in the support of the symmetric determinant \(\det (Z)\) are linked by a sequence of transposition steps that never leaves the support and in which every step decreases the distance to the target monomial.

Proof

Monomials of \(\det (Z)\) are precisely those products of symmetric variables \(z_{ij}\) (where \(i \le j\)) in which each index \(k \in [n]\) appears exactly twice, a diagonal variable \(z_{kk}\) contributing both occurrences of k. Indeed, when the determinant is considered as a polynomial in \(n^2\) (i.e., non-symmetric) variables, each monomial corresponds to a permutation in the symmetric group \(S_n\), and thus each element of [n] appears precisely once as a row index and once as a column index in the monomial. When the determinant is considered as a polynomial in the symmetric variables, certain distinct permutations define the same monomial. Observe that the variable \(z_{ij}\) appears in the monomial defined by a permutation \(\pi \) if either \(i= \pi (j)\) or \(j=\pi (i)\). Thus, both a cycle \(\sigma =(i_1 i_2 \dots i_k) \in S_n\) and its inverse \((i_1 i_k i_{k-1} \dots i_2 ) \) yield the monomial \(\prod _{j} z_{i_j i_{j+1}}\) (with \(i_{k+1} = i_1\)), and in general, two permutations correspond to the same monomial if and only if their cycle decompositions agree up to replacing individual cycles by their inverses. Since two such permutations have the same sign, there is no cancellation of monomials in the symmetric determinant \(\det (Z)\).

Thus, applying a transposition step to a monomial of \(\det (Z)\), whenever the resulting exponents are nonnegative, yields another monomial of \(\det (Z)\): exchanging \(z_{ij}z_{kl}\) with \(z_{ik}z_{jl}\) or \(z_{il}z_{kj}\) preserves the property that each index appears exactly twice. It therefore remains to show that, given any two distinct monomials \(Z^\alpha \) and \(Z^\beta \) of \(\det (Z)\), there exists a transposition step from \(\alpha \) toward \(\beta \). Choose a variable \(z_{ij}\) such that \(z_{ij} \mid Z^\alpha \) but \(z_{ij} \not \mid Z^\beta \). There must be an index \(k \ne j\) such that \(z_{ik} \mid Z^\beta \) and an index \(l \ne i\) such that \(z_{jl} \mid Z^\beta \). Then multiplying \(Z^\alpha \) by \(z_{ij}^{-1}z_{kl}^{-1}z_{ik}z_{jl}\) is a transposition step, and it decreases the distance to \(\beta \) in the norm \(| \cdot |\). \(\square \)
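Lemma 5.12 can be verified exhaustively for small n. The Python sketch below assumes that \(| \cdot |\) is the \(\ell _1\) norm on exponent vectors (an assumption made for illustration), generates the support from the characterization in the proof (degree n, every index occurring exactly twice), and searches for a chain of transposition steps that stays in the support and strictly decreases the distance at every step. All helper names are hypothetical and indices are 0-based.

```python
from collections import Counter
from functools import lru_cache
from itertools import combinations_with_replacement, product

def sym_var(i, j):
    """Symmetric variable z_ij as a normalized index pair (i <= j)."""
    return (min(i, j), max(i, j))

def normalize(exps):
    """Hashable monomial: sorted ((i, j), exponent) pairs with positive exponent."""
    return tuple(sorted((v, e) for v, e in exps.items() if e > 0))

def det_support(n):
    """Degree-n monomials in z_ij (i <= j) in which every index occurs exactly twice."""
    pairs = [(i, j) for i in range(n) for j in range(i, n)]
    supp = set()
    for combo in combinations_with_replacement(pairs, n):
        deg = Counter()
        for i, j in combo:
            deg[i] += 1
            deg[j] += 1
        if all(deg[k] == 2 for k in range(n)):
            supp.add(normalize(Counter(combo)))
    return supp

def transposition_steps(mono, n):
    """Monomials reachable from `mono` by one multiplication with z_ij z_kl z_ik^-1 z_jl^-1."""
    base = Counter(dict(mono))
    result = set()
    for i, j, k, l in product(range(n), repeat=4):
        new = base.copy()
        new[sym_var(i, j)] += 1
        new[sym_var(k, l)] += 1
        new[sym_var(i, k)] -= 1
        new[sym_var(j, l)] -= 1
        if all(e >= 0 for e in new.values()):  # the step must be applicable
            cand = normalize(new)
            if cand != mono:
                result.add(cand)
    return result

def l1_distance(m1, m2):
    """Assumed distance |alpha - beta|: the l1 norm of the exponent difference."""
    a, b = dict(m1), dict(m2)
    return sum(abs(a.get(v, 0) - b.get(v, 0)) for v in set(a) | set(b))

def lemma_5_12_holds(n):
    supp = det_support(n)

    @lru_cache(maxsize=None)
    def linked(a, b):
        # a reaches b via steps that stay in supp and strictly decrease the distance to b
        if a == b:
            return True
        d = l1_distance(a, b)
        return any(linked(c, b) for c in transposition_steps(a, n)
                   if c in supp and l1_distance(c, b) < d)

    return all(linked(a, b) for a in supp for b in supp)

print(lemma_5_12_holds(4))  # expected: True
```

Because the distance strictly decreases along every chain, the recursion in `linked` always terminates.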

We conjecture that a property inspired by the structure of the determinant and that of stable polynomials holds for all psd-stable polynomials.

Conjecture 5.13

For any monomial \(Z^\beta \) appearing in a psd-stable polynomial f, there is a diagonal monomial \(Z^\alpha \) appearing in f which can be reached from \(Z^\beta \) by a sequence of linear, double and transposition steps that never leaves the support of f and in which each step decreases the distance to \(\alpha \).

Example 5.14

The polynomial

is psd-stable because it is the product of two psd-stable polynomials: the first factor is the derivative in direction \(V^{(12)}\) of the \(2\times 2\) determinant, and the second is a \(2\times 2\) determinant sharing one variable with the first.

This polynomial satisfies Conjecture 5.13. For example, starting from the monomial \(z_{12}z_{13}^2\), a double step leads to \(z_{11}z_{13}^2\), which is also in the support of f, and a transposition step then leads to \(z_{11}^2z_{33}\), a diagonal monomial in the support. Notice that the double step produces a monomial whose exponent vector is closer to that of the final diagonal monomial (with respect to \(| \cdot |\)). Such a sequence of valid steps can be found for every monomial of f.
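The step types in this example can be checked mechanically. The Python sketch below does not reproduce the polynomial of Example 5.14; it only classifies the two moves between the three monomials named above and computes their distances, assuming that \(| \cdot |\) is the \(\ell _1\) norm on exponent vectors. Indices are 1-based as in the text, and all helper names are hypothetical.

```python
from collections import Counter
from itertools import product

def var(i, j):
    """Symmetric variable z_ij as a normalized index pair (i <= j)."""
    return (min(i, j), max(i, j))

def mono(*pairs):
    """Monomial as a Counter of variables; mono((1, 2), (1, 3), (1, 3)) is z12*z13^2."""
    return Counter(var(i, j) for i, j in pairs)

def diff(m_from, m_to):
    """Nonzero entries of the exponent change m_to - m_from."""
    return {v: m_to[v] - m_from[v] for v in set(m_from) | set(m_to) if m_to[v] != m_from[v]}

def l1(m1, m2):
    return sum(abs(e) for e in diff(m1, m2).values())

def is_double_step(m_from, m_to):
    """Multiplication by z_ij^(+-1) z_kl^(+-1): total exponent change 2."""
    return l1(m_from, m_to) == 2

def transposition_delta(i, j, k, l):
    """Exponent change of multiplying by z_ij z_kl z_ik^-1 z_jl^-1."""
    d = Counter()
    d[var(i, j)] += 1
    d[var(k, l)] += 1
    d[var(i, k)] -= 1
    d[var(j, l)] -= 1
    return {v: e for v, e in d.items() if e != 0}

def is_transposition_step(m_from, m_to, n=3):
    target = diff(m_from, m_to)
    return bool(target) and any(
        transposition_delta(*idx) == target for idx in product(range(1, n + 1), repeat=4))

start = mono((1, 2), (1, 3), (1, 3))   # z12 z13^2
mid = mono((1, 1), (1, 3), (1, 3))     # z11 z13^2
end = mono((1, 1), (1, 1), (3, 3))     # z11^2 z33
print(is_double_step(start, mid), is_transposition_step(mid, end))  # True True
print(l1(start, end), l1(mid, end))                                 # 6 4
```

The second move corresponds to the indices \((i, j, k, l) = (1, 1, 3, 3)\), i.e., multiplication by \(z_{11}z_{33}z_{13}^{-2}\).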

As evidence for the conjecture, we observe that it holds for the classes of polynomials we have studied.

Lemma 5.15

Psd-stable binomials satisfy Conjecture 5.13.

Proof

By Theorem 5.5, \(c_\alpha z_{ii}z_{jj}+ c_\beta z_{ij}^2\) is the only irreducible psd-stable binomial involving off-diagonal variables. Clearly, its two monomials are exactly one transposition step apart. \(\square \)

Lemma 5.16

Psd-stable homogeneous non-mixed polynomials satisfy Conjecture 5.13.

Proof

Let f be a psd-stable homogeneous non-mixed polynomial. If f does not involve off-diagonal variables, the claim follows from the jump system property of usual stable polynomials. Thus, assume that f involves off-diagonal variables. By Theorem 5.4, we have \(d{:=}\deg (f)\le 2\). In the case \(d=1\), any two monomials of f are connected by a double step; thus, assume \(d=2\) and let \(c_\beta Z^\beta \) be an off-diagonal monomial of f. By Theorem 5.8, \(c_\beta Z^\beta \) is of the form \(c_{jk}z_{jk}^2\) for some \(j\ne k\). Let \(J=\{j,k\}\); then \(f(Z_J)\) is psd-stable by Lemma 4.1 c). By the structure Theorem 5.1, \(f(Z_J)\) is of the form

with \(c_k\in {\mathbb C}\) such that \(z_{jj}\) and \(z_{kk}\) both appear. We claim that \(c_2 \ne 0\). Assuming \(c_2=0\) gives \(c_1,c_3\ne 0\), and restricting \(f(Z_J)\) to the diagonal then yields a stable polynomial whose support violates the jump system property; hence \(c_2\ne 0\). Therefore, the monomial \(c_2z_{jj}z_{kk}\) appears in \(f(Z_J)\) and hence also in f(Z). Thus, a single transposition step leads from \(c_{jk}z_{jk}^2\) to the corresponding diagonal monomial \(c_2z_{jj}z_{kk}\). \(\square \)
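Spelled out, the contradiction for \(c_2 = 0\) runs as follows, assuming (as the proof indicates) that \(c_1\) and \(c_3\) denote the coefficients of \(z_{jj}^2\) and \(z_{kk}^2\) in \(f(Z_J)\). Since the only off-diagonal monomial is a multiple of \(z_{jk}^2\), setting \(z_{jk}=0\) leaves two diagonal monomials, and in the exponent coordinates of \((z_{jj}, z_{kk})\) one checks the Two-Step Axiom for \(\alpha = (2,0)\) and \(\beta = (0,2)\):

```latex
\begin{aligned}
f(Z_J)\big|_{z_{jk}=0} &= c_1 z_{jj}^2 + c_3 z_{kk}^2,
  \qquad \operatorname{supp} = \{(2,0),\,(0,2)\},\\
(2,0) - \mathbf{e}_1 &= (1,0) \notin \operatorname{supp},\\
(1,0) - \mathbf{e}_1 &= (0,0) \notin \operatorname{supp},
  \qquad (1,0) + \mathbf{e}_2 = (1,1) \notin \operatorname{supp}.
\end{aligned}
```

Both possible second steps toward \(\beta \) leave the support, so the Two-Step Axiom fails, contradicting Theorem 2.6.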

Lemma 5.17

Psd-stable polynomials of determinants satisfy Conjecture 5.13.

Proof

Every monomial \(Z^\beta \) of a polynomial of determinants f belongs to a determinantal monomial \(\det (Z)^\gamma \), and thus it is a product of monomials \(Z^{\beta _j}\) (with multiplicities \(\gamma _j\)) of the determinantal blocks \(\det (Z_j)\), \(1\le j \le k\). Let \(Z^{\alpha _j}\) be the diagonal monomial of the block \(\det (Z_j)\). By Lemma 5.12, for every \(1\le j \le k\) there is a sequence of transposition steps from \(Z^{\beta _j}\) to \(Z^{\alpha _j}\) which never leaves the support of \(\det (Z_j)\). Concatenating these sequences (with multiplicities \(\gamma _j\)) gives a sequence of transposition steps from \(Z^\beta \) to the diagonal monomial \(Z^\alpha \) of \(\det (Z)^\gamma \) which never leaves the support of f. \(\square \)

Another class of psd-stable polynomials satisfying Conjecture 5.13 consists of the psd-stable lpm polynomials introduced in [1], which are polynomials of the form \(f(Z)=\sum _{J\subseteq [n]} c_J\det (Z_J)\), where \(Z_J\) is the principal submatrix of Z with row and column index set J. Indeed, every monomial of f comes from a principal minor \(\det (Z_J)\), and since the monomials of \(\det (Z_J)\) involve exactly the indices in J, monomials of different minors cannot cancel. Thus, Lemma 5.12 applies to each summand, and hence the conjecture holds for the whole polynomial.
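The no-cancellation argument for lpm polynomials can be checked for small n by listing, for every nonempty index set J, the monomials that the permutations of J produce in \(\det (Z_J)\) and confirming that the index set of each monomial is exactly J. The following Python sketch does this with 0-based indices and hypothetical helper names; it checks only disjointness across minors, while the absence of cancellation inside a single minor is the content of the proof of Lemma 5.12.

```python
from itertools import combinations, permutations

def sym_var(i, j):
    """Symmetric variable z_ij as a normalized index pair (i <= j)."""
    return (min(i, j), max(i, j))

def minor_monomials(J):
    """Monomials of det(Z_J), one per permutation of J, as sorted tuples of variables."""
    return {tuple(sorted(sym_var(a, b) for a, b in zip(J, perm))) for perm in permutations(J)}

def lpm_supports_disjoint(n):
    """True if monomials of distinct principal minors det(Z_J), J nonempty, never coincide."""
    owner = {}
    for size in range(1, n + 1):
        for J in combinations(range(n), size):
            for m in minor_monomials(J):
                # The indices occurring in m must be exactly those in J.
                if {x for v in m for x in v} != set(J) or m in owner:
                    return False
                owner[m] = J
    return True

print(lpm_supports_disjoint(4))  # expected: True
```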

Data Availability

The manuscript has no associated data.

References

Blekherman, G., Kummer, M., Sanyal, R., Shu, K., Sun, S.: Linear principal minor polynomials: hyperbolic determinantal inequalities and spectral containment. Int. Math. Res. Not., to appear (2023)

Borcea, J., Brändén, P.: The Lee-Yang and Pólya-Schur programs. I: Linear operators preserving stability. Invent. Math. 177(3), 541 (2009)

Borcea, J., Brändén, P.: Multivariate Pólya-Schur classification in the Weyl algebra. Proc. Lond. Math. Soc. 101, 73–104 (2010)

Borcea, J., Brändén, P., Liggett, T.: Negative dependence and the geometry of polynomials. J. Am. Math. Soc. 22(2), 521–567 (2009)

Boyd, S., El Ghaoui, L., Feron, E., Balakrishnan, V.: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

Brändén, P.: Polynomials with the half-plane property and matroid theory. Adv. Math. 216, 302–320 (2007)