Abstract

Unforeseen events (e.g., COVID-19, the Russia-Ukraine conflict) create significant challenges for accurately predicting CO2 emissions in the airline industry. These events severely disrupt air travel by grounding planes and creating unpredictable, ad hoc flight schedules. This leads to many missing data points and data quality issues in the emission datasets, hampering accurate prediction. To address this issue, we develop a predictive analytics method to forecast CO2 emissions using a unique dataset of monthly emissions from 29,707 aircraft. Our approach outperforms prominent machine learning techniques in both accuracy and computational time. This paper contributes to theoretical knowledge in three ways: 1) advancing predictive analytics theory, 2) illustrating the organisational benefits of using analytics for decision-making, and 3) contributing to the growing focus on aviation in information systems literature. From a practical standpoint, our industry partner adopted our forecasting approach under an evaluation licence into their client-facing CO2 emissions platform.

1 Introduction

As one of the largest industries associated with carbon dioxide (CO2) emissions, the aviation industry is under pressure to lower emissions to help combat climate change (Sharma et al., 2021). As aviation represents the primary mode of international transport, the industry is urgently trying to demonstrate lower emissions, often leveraging market-based mechanisms based on carbon trading (Ritchie et al., 2020; Happonen et al., 2022; Higham et al., 2021; Gunter & Wöber, 2021).

Different emissions regimes apply to the industry in different jurisdictions. In Europe, aviation emissions are governed by the EU Emissions Trading System (EU ETS). All airlines operating in Europe must monitor, report, and verify their emissions (Bolat et al., 2023). Under the EU ETS cap and trade scheme, the number of free allowances allocated to aircraft operators will be progressively reduced to reach full competitive auctioning by 2027 (Bolat et al., 2023). Airlines exceeding their free allowance must purchase carbon credits to offset their emissions, potentially a very significant additional operating cost for airlines.

Outside the EU, the main emissions trading scheme for aviation is the Carbon Offsetting and Reduction Scheme for International Aviation (CORSIA), whose aim is to promote carbon-neutral growth in the aviation industry (Liao et al., 2023). Similar in focus to the EU ETS, the offsetting requirements for CORSIA are calculated in different phases, gradually transitioning from a sectoral growth factor to an individual operator growth factor, thereby placing the onus on individual operators to fully understand and manage their airline’s future exposure. Therefore, accurate forecasting based on actual flight data is crucial for individual airlines to achieve carbon targets and reduce financial costs related to purchasing carbon credits.

Aircraft CO2 emissions depend on a range of factors, such as the plane model and the distance travelled (Sekartadji et al., 2023). The exact CO2 emissions per aircraft are inherently complex due to the interactions between emissions, passenger loads, fuel costs, and carbon credits. For example, Lo et al. (2020) find that annual emissions per available seat kilometre decrease with longer routes, despite larger aircraft and longer distances contributing to higher total CO2 emissions. For aircraft financiers and operators, CO2 emissions must fall within specific bandwidths to avoid penalties (Bolat et al., 2023). To abide by regulations and improve decision-making, stakeholders must generate accurate CO2 emission forecasts in real time for each aircraft. This is a challenge faced by many firms, including FCOE (Forecasting CO2 Emissions company; a pseudonym), who entered into a partnership (Footnote 1) with the researchers to work on this problem. FCOE aimed to develop an emissions platform that would serve as a benchmark for analysing actual and predicted CO2 emissions across the aviation sector according to Country, Portfolio (e.g. lessor, bank, credit export agency), Airline Operator, Aircraft type, Airport, Route City Pair, ETS Scheme, Aircraft Category, and Manufacturer. This would enable running operational and growth scenarios based on actual flight data rather than the typical approach of focusing on fuel measurements before and after flights.

A critical feature of the FCOE emissions platform is a forecasting engine to predict future aircraft and fleet utilisation relating to CO2 emissions to enable aircraft and fleet performance to align with international, regional, and national reduction schemes and targets. “Without accurate forecasting, it simply isn’t possible for stakeholders to make better decisions, be it in relation to which aircraft to fly on a particular route or the number of carbon credits needed to be purchased to offset future liabilities” (FCOE, Head of Product). Forecasting is crucial in addressing the urgent challenge of reducing CO2 emissions, allowing organisations to make informed decisions and help identify targeted interventions.

FCOE identified three main challenges when it came to forecasting. Firstly, airlines and financiers require forecasts for a large number of flights, which adds complexity. Secondly, the need for real-time updates becomes more significant when considering the volume of airlines and the multiple flights per day. Therefore, the forecasting solution must be scalable and fast. Lastly, the time-series data used for CO2 emissions have data quality issues, such as missing values. Accordingly, our objective is to develop a novel efficient forecasting method for real-time predictions of aircraft emissions with limited data.

The remainder of the paper is organised as follows. Section 2 provides an overview of the relevant information systems literature, focused on AI and prediction models as well as CO2 emission schemes and instruments. Section 3 presents and discusses the CO2 emissions dataset utilised in this study. Section 4 is focused on methodology and the development of our novel Adaptive Auto-Regression method for CO2 forecasting. We present our numerical and comparative results in Section 5, showcasing the proposed scheme’s applicability, accuracy, and efficiency for the entire dataset. Section 6 discusses our findings in the context of existing research and outlines our contribution to theory and practice, while also presenting the limitations and opportunities for future research. We conclude in Section 7.

2 Background Literature

In an environmental context, the greenhouse effect is causing sea level rise, climate warming, and natural disasters, and carbon dioxide (CO2) is the most crucial component of greenhouse gases. It is important to reduce CO2 emissions to decrease environmental pollution and achieve a harmonious coexistence between humans and nature (Cang et al., 2024). While greenhouse gases (GHG) consist of CO2, methane gas, nitrous oxide, and fluorinated gases, CO2 is the primary pollutant, accounting for about 80% of emissions (World Bank, 2007; Akande et al., 2019).

One of the primary challenges for transportation planners and policymakers is to develop a sustainable system that can meet the growing demands of existing and future travel while also mitigating harmful carbon emissions from transportation sources for environmental sustainability (Ismagilova et al., 2019; Zhang et al., 2023). In the IS literature, research by Cheng et al. (2020) highlights the significant impact that information systems (IS) can have in reducing vehicle CO2 emissions by alleviating vehicle-related traffic congestion.

Research has illustrated that machine learning techniques hold significant promise in relation to aircraft CO2 emissions. An analysis of the machine learning literature reveals two main areas of research (see Table 1) that are central to predictive analytics and its potential application to add value in the battle to reduce CO2 emissions. The first is the organisational benefits that stem from the application of ML. Our research contributes to this area, since the enhanced predictions from our approach enable better decision support (e.g., through advanced scenario planning). The second area is advancing predictive analytics techniques. Our research also contributes to this area, since our approach is scalable and less resource intensive compared to other state-of-the-art machine learning approaches for forecasting with limited data.

2.1 Organisational Benefits and Management of Predictive Analytics

There has been a strong focus on prediction in the IS field (Bauer et al., 2023; Lotfi et al., 2023; Tutun et al., 2023). In the ever-evolving landscape of IS research, the combination of prediction models with artificial intelligence (AI) holds immense potential (Lotfi et al., 2023; Revilla et al., 2023). This focus is often on the use of machine learning in prediction tasks. Defined by Revilla et al. (2023) as AIML (artificial intelligence machine learning), this is an area of significant growth, with AIML detecting systematic patterns in big data to generate predictions that surpass the capabilities of human experts (Revilla et al., 2023; Tutun et al., 2023). This area provides insights into how to best deploy machine learning and how to manage human-AIML collaboration (e.g., Fügener et al., 2021; 2022; Revilla et al., 2023).

In terms of organisational benefits, predictive analytics offers great potential for enabling decision support and innovation (Benbya et al., 2021). Research has illustrated that machine learning can be used to augment managerial decision-making with decision-makers’ utilisation of machine learning advice and recommendations leading to improved decision-making performance (Sturm et al., 2023). For example, Fang et al. (2021) demonstrate that machine learning-based prescriptive analytics can improve the cost-effectiveness in a clinical decision-making setting.

2.2 Predictive Analytics Techniques and Applications

Predictive analytic techniques have been widely applied in the air transport domain by airlines and airports to deal with multiple issues, including flight delays and customer complaints, issues which have caused multi-billion-dollar losses to airlines (Li et al., 2023; Mamdouh et al., 2024; Zhang et al., 2024) as well as customer up selling (Thirumuruganathan et al., 2023) and aircraft maintenance (Deng et al., 2021). For example, a recent study by Shayganmehr and Bose (2024) focused on the change in passenger emotions in the pre- and post-COVID-19 period to address how luxury airlines can bring passengers back following the pandemic.

Similar to many industries, researchers are increasingly applying machine learning and predictive analytics to improve decision-making within the aviation industry. For example, Zhang and Mahadevan (2019) blend support vector machines with deep neural networks to predict the risk severity of abnormal aviation events. In earlier work, Zhang and Mahadevan (2017) use support vector regression to predict abnormal aviation events (e.g., an aircraft running out of fuel). More recently, Karakurt and Aydin (2023) proposed a regression model specifically for predicting fossil fuel-related CO2 emissions, while Zhang and Mahadevan (2020) use Bayesian neural networks to characterise the uncertainty of abnormal aviation events. Using two years of flight data from a Hong Kong-based global airline, Sun et al. (2020) identified flight characteristics (e.g., the expected arrival delay) affecting departure delays and flight times and incorporated their model of consecutive interdependent departure-arrival times to improve crew pairing decision-making. Guo et al. (2021) use machine learning to forecast real-time distributions of passenger flow at Heathrow airport. Li et al. (2021) leveraged big data from Air France-KLM to examine the impact of changes in supply and demand during COVID-19 between passenger segments.

Focusing specifically on the techniques used to forecast CO2 emissions, multiple machine learning approaches have been used. These approaches include Grey Machines for forecasting the yearly CO2 emissions of countries (Xu et al., 2001). Combinations of Grey Machines with Support Vector Machines (Wood, 2023) and Extreme Learning have also been utilised for forecasting yearly CO2 emissions of a large region in China (Li et al., 2018). Fully Modified Ordinary Least Squares and Dynamic Ordinary Least Squares for forecasting yearly panel data augmented with oil prices and energy consumption (Nguyen et al., 2021) have also been proposed. Approaches using Ridge Regression (Qian et al., 2020) for yearly forecasts of CO2 emissions have been considered. These forecasts use panel data and focus on CO2 emissions of countries/specific industries, with the purpose of identifying the potential factors affecting CO2 emissions. Beyond machine learning, CO2 emission forecasting in the aviation sector has also used traditional time-series approaches, such as Auto-Regressive Integrated Moving Average (ARIMA) models (Yang & O’Connell, 2020; Chen et al., 2022), scenario modelling based on passenger growth, fuel efficiency improvement, demands and exogenous factors (Grewe et al., 2021), log-mean Divisia index models (Yu et al., 2020), and dynamic panel-data econometrics (Chèze et al., 2011). Recurrent Neural Networks (RNN) (Singh & Dubey, 2021), Deep Forest Regression (dos Santos Coelho et al., 2024), Deep Learning (DL), and Long Short-term Memory (LSTM) (Wu et al., 2023) have also been used to study carbon emissions. These models typically require a large number of samples and involve adjusting multiple control parameters (Cang et al., 2024). However, insufficient data samples may result in overfitting problems (Cang et al., 2024).

Research forecasting CO2 emissions for the airline industry in the literature is primarily concerned with forecasting aggregates, e.g., annual emissions of the sector or specific regions. In addition, research often focuses on macro forecasts to examine the evolution of CO2 emissions based on factors such as fuel efficiency and demand. Since the aforementioned research and associated techniques consider specific time series based on yearly data, the underlying data tends to be smooth due to aggregation. As a result, the data is easier to model, analyse and forecast. However, in the case of sparse datasets and large time-series data (e.g., 30,000 aircraft) with varying characteristics, these techniques (particularly the non-machine learning methods) cannot be utilised, as the restrictive assumptions underpinning the models are likely to be violated by the data. Furthermore, forecasting CO2 emissions for airlines has become even more complex due to the impact of the COVID-19 crisis, with many aircraft not flying regularly during this period. For forecasting, this adds much greater complexity due to the absence of data/irregular flights.

3 Empirical Context

FCOE’s CO2 emissions dataset is composed of 29,707 time-series. Each time-series corresponds to a unique aircraft. The time-series span from December 2018 to July 2021. Thus, the time-series are composed of 32 monthly samples corresponding to the total monthly CO2 emissions (in tonnes). FCOE provided this data, which we obtained “as-is” through a proprietary environment and aggregated on a monthly basis. The data is produced from estimation of the Great Circle Distance (GCD) between airports (Sinnott, 1984), following the ICAO approach (ICAO Carbon Emissions Calculator Methodology) and with respect to the aircraft type, fuel type, fuel consumption and seat configuration. Thus, each time series was uniquely identified by the ICAO number of each aircraft, and each day, the CO2 emissions for each aircraft were reported for a period of 32 months. We note that the data was not pre-processed and was analysed directly by the proposed scheme since the quantity of interest was the CO2 emissions at various intervals ahead to help inform business decisions regarding offsetting.
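As an illustration of the distance step only, the following minimal sketch computes a great circle distance with the haversine formula. The function name and the mean Earth radius of 6371 km are assumptions made for this example; the remaining steps of the ICAO methodology (fuel burn, seat configuration, and so on) are not reproduced here.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean Earth radius, not taken from the paper


def great_circle_distance_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    h = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    # Clamp to 1.0 to guard against rounding just above the valid asin domain.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, h)))


# Two points on the equator a quarter of the way around the globe.
quarter = great_circle_distance_km(0.0, 0.0, 0.0, 90.0)
```

In a pipeline of this kind the airport coordinates would come from a reference table keyed by airport code, which is outside the scope of this sketch.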

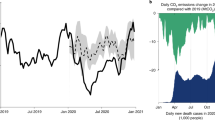

Apart from the standard routes flown by an aircraft, there are unexpected situations where other routes are also included or flights are cancelled due to unexpected reasons. These phenomena have been intensified by events such as the COVID-19 outbreak and the Russia-Ukraine crisis, which render the process of estimating future CO2 emissions, in order to offset them, a challenging problem. Due to the aforementioned reasons, the time-series have diverse characteristics and tremendous heterogeneity regarding the number of zero samples. Figure 1 presents a histogram of the number of time-series with nonzero samples.

The number of zero samples is affected by several factors, including: 1) an aircraft is registered at some point during the considered time horizon; thus, nonzero samples start from that particular moment; 2) an aircraft has been decommissioned at some point during the considered time horizon; thus, zero samples start from the month following the point of decommission; and 3) an aircraft has temporarily stopped for reasons related to maintenance, upgrades, or other services. In that case, zero samples are sparsely distributed within the time-series. The previous points represent ongoing complications for modelling the time-series. However, the number of nonzero elements in the time-series is also severely affected by the COVID-19 pandemic, which led to a large number of flight cancellations due to national and international travel restrictions.

Another major characteristic impacting the choice of a model is stationarity of the time-series, which is affected by changes in schedule and route planning, especially during the COVID-19 outbreak. We utilised a combination of tests to examine stationarity. Each time-series was tested with the Augmented Dickey-Fuller (Harris, 1992) and the Kwiatkowski-Phillips-Schmidt-Shin (Kwiatkowski et al., 1992) tests and categorised as follows: stationary - 8614 time-series, non-stationary - 2618 time-series, trend stationary - 16464 time-series, and difference stationary - 2011 time-series.
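One common way to combine the two tests into these four categories is sketched below. The decision rule and the 5% significance level are assumptions for illustration, since the exact rule used is not stated here; the p-values would come from any ADF and KPSS implementation (for example the `adfuller` and `kpss` functions in statsmodels).

```python
def classify_stationarity(adf_pvalue, kpss_pvalue, alpha=0.05):
    """Combine ADF and KPSS outcomes into one of four categories.

    ADF null hypothesis: the series has a unit root.
    KPSS null hypothesis: the series is stationary.
    The mapping below is an assumed, commonly used convention.
    """
    adf_rejects_unit_root = adf_pvalue < alpha
    kpss_rejects_stationarity = kpss_pvalue < alpha
    if adf_rejects_unit_root and not kpss_rejects_stationarity:
        return "stationary"
    if not adf_rejects_unit_root and kpss_rejects_stationarity:
        return "non-stationary"
    if not adf_rejects_unit_root and not kpss_rejects_stationarity:
        return "trend stationary"
    return "difference stationary"


example = classify_stationarity(adf_pvalue=0.01, kpss_pvalue=0.10)
```

Keeping the rule separate from the test computation makes it straightforward to apply the same categorisation across all time-series once the p-values are available.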

Moreover, some of the above time-series contain seasonal components. In light of this diverse data landscape and the large number of zero observations across the data, traditional methods cannot be relied upon to model and forecast the time-series. An important indicator is persistence and long memory, which can be assessed using the Generalised Hurst exponent computed from the scaling of the re-normalised q-moments of the distribution (Matteo, 2007). Specifically, \(\frac{\langle |y(t+r)-y(t)|^q\rangle }{\langle y(t)^q \rangle } \sim r^{qH(q)}\), where q denotes the order of the moment, y the time-series, \(\langle X \rangle \) denotes the expected value of X and \(H_q=H(q)\) is the Hurst exponent. We consider the case of \(q=1\) to assess persistence.
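The scaling relation can be turned into an estimate by regressing the logarithm of the normalised moment on the logarithm of the lag r and dividing the slope by q. The sketch below is one such estimator under assumed choices (lags 1 to 8, an ordinary least squares fit, and returning `None` when a moment is zero); it is not necessarily the procedure used to produce the reported results.

```python
import math


def generalised_hurst(y, q=1.0, max_lag=8):
    """Estimate H(q) from the log-log scaling of normalised q-th moments.

    Returns None when the estimate is undefined, e.g. for an all-zero series
    or when some increment moment vanishes (the logarithm cannot be taken).
    """
    n = len(y)
    norm = sum(abs(v) ** q for v in y) / n  # <|y(t)|^q>; equals <y^q> for y >= 0
    if norm == 0.0:
        return None
    log_r, log_k = [], []
    for r in range(1, min(max_lag, n - 1) + 1):
        moment = sum(abs(y[t + r] - y[t]) ** q for t in range(n - r)) / (n - r)
        if moment <= 0.0:
            return None
        log_r.append(math.log(r))
        log_k.append(math.log(moment / norm))
    if len(log_r) < 2:
        return None
    mean_r = sum(log_r) / len(log_r)
    mean_k = sum(log_k) / len(log_k)
    sxx = sum((lr - mean_r) ** 2 for lr in log_r)
    sxy = sum((lr - mean_r) * (lk - mean_k) for lr, lk in zip(log_r, log_k))
    return sxy / sxx / q  # slope equals q * H(q)


# A pure linear trend has increments proportional to r, so H(1) = 1.
h_trend = generalised_hurst([float(t) for t in range(1, 33)], q=1.0)
```

With only 32 monthly samples per aircraft the usable lag range is short, so any such estimate should be read as indicative rather than precise.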

The majority of time-series have an anti-persistent behaviour \(H_1<0.5\); thus, using fractional auto-regressive approaches, such as ARFIMA (Auto-Regressive Fractionally Integrated Moving Average) models (Granger & Joyeux, 1980) would probably not lead to substantial improvement in modelling error. Moreover, the Hurst exponent denotes that the majority of the assessed time-series present long-term switching between high and low values in succession and a tendency to return to the long term mean. For the majority of the time-series, i.e. 22,628 time-series, the Hurst exponent could not be computed.

Forecasting in light of such a challenging dataset requires resilient forecasting methods; thus, we present a more generalised approach that is less sensitive to abrupt changes and compare it to other well-established approaches for time-series forecasting. We note that machine learning techniques can be used to model and forecast general time-series, such as Recurrent Neural Networks (Tealab, 2018), Long Short-Term Memory networks (LSTMs) (Hochreiter & Schmidhuber, 1997), and Support Vector Regression (Awad & Khanna, 2015). However, these techniques require substantial amounts of data for training and optimisation as well as computational resources. To mitigate these issues, we present an adaptive Auto-Regression technique based on Recursive Pseudoinverse Matrices in the following Section.

4 Methodology

4.1 Adaptive Auto-Regression based on Recursive Pseudoinverse Matrices

Consider a time-series \(y=y(t)=[y(1),\ldots ,y(N)]^T\) and a matrix X retaining n basis functions of the form \( X=[x_1(t),x_2(t),\ldots ,x_n(t)],\) where \(t \in [1,N] \subset \mathbb {N}\). Expanding the time-series y with the given set of basis functions provides the expression \(y(t)=a_1 x_1(t) + a_2 x_2(t) + \ldots + a_n x_n(t),\) which is equivalent to \(y=Xa\) in compact form. The vector of coefficients a can be determined for a prescribed set of basis functions in terms of the Moore-Penrose pseudoinverse matrix via \(a=X^+ y = (X^T X)^{-1} X^T y\) under the assumption that the basis functions are linearly independent (Penrose, 1955).

The estimation of the coefficients requires “a priori” knowledge of the basis functions. However, this is difficult in practice, as the basis functions might be built by monitoring the residual or other characteristics of the time-series. To avoid prescribing the basis functions explicitly, Filelis-Papadopoulos et al. (2021) proposed applying a recursive Schur complement pseudoinverse matrix based approach. This method is based on a factored recursive inversion approach inspired by the Incomplete Inverse Matrix preconditioning. The pseudoinverse matrix, required to compute the coefficients of the model, is formed recursively, after assessing the effectiveness of each candidate basis function to be included in the final model. This enables selection of the most appropriate set of basis functions to model a time-series based on criteria such as stability and error reduction, which can be summarised in the following two-step process: 1) Select the most appropriate basis function, based on error reduction and constraints, from a candidate set of basis functions. 2) Incorporate the selected basis function if it leads to substantial error reduction and does not disrupt the Positive Definiteness of the dot product matrix. This process is similar to adaptive feature extraction, where the features (basis functions) that lead to maximum error reduction with respect to Eq. 6 (Appendix A) are included iteratively in the final model.

In practice, the selected set of basis functions constitutes an orthogonal basis onto which the vector representing the time-series is projected and which can be easily progressed into the future to compute forecasts. A schematic representation of the process of projecting a time-series y onto an orthogonal system composed of basis functions is given in Fig. 2. Note that the basis functions should not be orthogonalised before being included in the set of potential basis functions or in the model, since this process is implicitly performed by the proposed scheme.

The approach avoids stability issues related to orthogonalisation procedures and the inherently sequential nature of techniques like Fast Orthogonal Search (Korenberg & Paarmann, 1991; Osman et al., 2020). The general nature of this method allows for incorporation of any linear or non-linear function (e.g., harmonics, exponentials) as basis functions, including machine learning techniques such as support vector machines (Drucker et al., 1997). Incorporating a basis function involves the recursive update of the upper triangular and diagonal factors of the recursive pseudoinverse matrix. Using these updated factors, the new coefficients can be computed. The process of adding a basis function to a model can be schematically described as given in Fig. 3, where the matrix \(G_{i}\) is the upper triangular factor of the inverse of the dot product matrix of selected basis functions \(X_i^T X_i\) and \(D_i\) is the diagonal factor, such that \((X_i^T X_i)^{-1}=G_i D_i^{-1} G_i^T\) and \(a_i=G_i D_i^{-1} G_i^T X_i^T y\). In practice, recomputation of the coefficients can be avoided, with the new coefficients derived from updating the already computed ones.
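To make the factor update concrete, the sketch below adds one basis column at a time using the standard block-inverse (Schur complement) identity, which is consistent with the factorisation \((X_i^T X_i)^{-1}=G_i D_i^{-1} G_i^T\). It is an assumed reconstruction for illustration, not the algorithm of Appendix A; the function names, the list-based storage and the rank tolerance are hypothetical.

```python
def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def add_basis(cols, G, D, a, y, x):
    """Append basis column x and update the factors and coefficients.

    cols: selected columns, G: rows of the unit upper triangular factor,
    D: diagonal entries, a: current coefficients, y: the time-series.
    """
    i = len(cols)
    c = [dot(col, x) for col in cols]                                # X_i^T x
    t = [sum(G[k][j] * c[k] for k in range(i)) for j in range(i)]    # G^T c
    u = [t[j] / D[j] for j in range(i)]                              # D^{-1} G^T c
    g = [-sum(G[k][j] * u[j] for j in range(i)) for k in range(i)]   # -(X^T X)^{-1} c
    d = dot(x, x) + dot(c, g)                                        # Schur complement
    if d <= 1e-12 * dot(x, x):
        raise ValueError("candidate is (numerically) linearly dependent")
    b = (dot(x, y) - dot(c, a)) / d            # coefficient of the new basis
    a_new = [a[j] + g[j] * b for j in range(i)] + [b]   # update, no recomputation
    G_new = [G[k] + [g[k]] for k in range(i)] + [[0.0] * i + [1.0]]
    return cols + [x], G_new, D + [d], a_new


x1 = [1.0, 1.0, 1.0, 1.0]
x2 = [1.0, 2.0, 3.0, 4.0]
y = [5.0, 8.0, 11.0, 14.0]          # y = 2*x1 + 3*x2 by construction
cols, G, D, a = [], [], [], []
for x in (x1, x2):
    cols, G, D, a = add_basis(cols, G, D, a, y, x)
```

After the first column the single coefficient is the mean of y (9.5); adding the second column updates it to 2 and introduces the new coefficient 3, without re-solving the full least squares problem.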

The current dataset presents strong local phenomena, i.e., value depends more on recent samples and less on historical data, primarily due to the pandemic. Thus, the underlying structure of the time-series is constantly changing based on environmental dynamics affecting the accuracy of the model and its formulation. To address these issues we depart from Filelis-Papadopoulos et al. (2021) and introduce an auto-regressive variant coupled with a “forgetting factor” (\(\lambda \)), which we describe in detail in Appendix B. The effect of the forgetting factor on linear regression, for a sample time-series, is presented in Fig. 4. The adopted approach for the “forgetting factor” is similar to the Recursive Least Squares method (Diniz, 2020). Another approach for filtering old data, in terms of importance, is adopted by Long Short-Term Memory Neural Networks (LSTMs), where forget gates in conjunction with input and output gates in an LSTM cell select which parts of past information are relevant and influential. The weights of these gates are trained from past data leading to a dynamic forgetting mechanism, which generates substantial improvements, especially for cases with sequential data (Hochreiter & Schmidhuber, 1997).
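The effect of a forgetting factor on a linear fit can be sketched with weighted least squares. The exponential weighting \(\lambda^{N-1-t}\) used below is an assumption in the spirit of Recursive Least Squares; the exact form of the weights is defined in Appendix B and may differ (for instance, by a square root).

```python
def weighted_linear_fit(y, lam=1.0):
    """Fit y(t) ~ c0 + c1 * t for t = 0..N-1 with weights lam**(N-1-t).

    lam = 1 gives ordinary least squares; 0 < lam < 1 discounts older samples.
    The weighting scheme is an assumed illustration.
    """
    n = len(y)
    ts = range(n)
    w = [lam ** (n - 1 - t) for t in ts]
    sw = sum(w)
    t_bar = sum(wi * t for t, wi in zip(ts, w)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (t - t_bar) ** 2 for t, wi in zip(ts, w))
    sxy = sum(wi * (t - t_bar) * (yi - y_bar) for t, wi, yi in zip(ts, w, y))
    slope = sxy / sxx
    return y_bar - slope * t_bar, slope


# A level shift: four zero months followed by four months at 10 tonnes.
series = [0.0] * 4 + [10.0] * 4
c0, c1 = weighted_linear_fit(series, lam=1.0)
f0, f1 = weighted_linear_fit(series, lam=0.5)
fit_all = c0 + c1 * 7        # fitted value at the latest month, equal weights
fit_recent = f0 + f1 * 7     # fitted value at the latest month, with forgetting
```

With equal weights the fit is pulled by the early zeros and overshoots the latest observation, whereas the discounted fit stays closer to the recent level.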

The technique presented in the Technical Appendix (i.e., Appendix A) constitutes a generic framework for modelling time-series. Any type of function, either linear or nonlinear, can be used as a basis function to form a model. Filelis-Papadopoulos et al. (2021) studied the case of linear, exponential and sinusoidal functions for forming models and detecting frequencies. Machine learning techniques can be used and assessed with respect to the presented framework to form time-series models. The framework can also be used to combine functions and machine learning techniques to form mixed models. In the next section, the case of Auto-Regressive functions is studied, where time-series corresponding to aircraft CO2 emissions are modelled adaptively and forecasted. The Auto-Regressive basis functions are chosen to capture local phenomena caused by the COVID-19 outbreak, which resulted in time-series with a large number of zero samples, and to allow for easier incorporation of multiple constraints regarding the estimation of model coefficients.

4.2 Non-Negative Least Squares and Model Parsimony for Forecasting CO2 Emissions

We use the lagged versions of the time-series as our potential basis functions. Using the method described in §4.1, the lagged time-series produces an Auto-Regressive model with coefficients a. We consider the maximum order of an Auto-Regressive model p and a matrix of candidate basis functions \(C = [y_{-1},y_{-2},\ldots ,y_{-p}]\), where a vector \(y_{-j}\) denotes the time-series lagged by j steps. Thus, for a time-series composed of N samples, the number of rows of matrix C is \(N-p\), while the number of columns is equal to p. An example of the formation of the potential basis functions is given in Fig. 5.
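The construction of the candidate matrix can be sketched in a few lines. The function name and the row-wise list layout are choices made for this example only.

```python
def lag_matrix(y, p):
    """Build the candidate matrix C = [y_{-1}, ..., y_{-p}] and its target.

    Row k of C holds the p values preceding sample p + k, most recent first,
    so C has N - p rows and p columns.
    """
    n = len(y)
    if not 0 < p < n:
        raise ValueError("p must satisfy 0 < p < len(y)")
    C = [[y[t - j] for j in range(1, p + 1)] for t in range(p, n)]
    target = [y[t] for t in range(p, n)]
    return C, target


C, target = lag_matrix([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], p=2)
```

For the six-sample series above with p = 2, C has four rows and two columns, and the first row pairs the target value 3 with its two predecessors 2 and 1.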

To compute the auto-regression coefficients, with respect to the forgetting factor, we solve the following least squares linear system \(C^T \Lambda ^T \Lambda C a = C^T \Lambda ^T \Lambda y\). From a candidate set of basis functions, we select the ones that lead to maximum error reduction. Initially, the lag that leads to maximum error reduction is determined and the corresponding coefficient is computed. This is followed by an iterative accumulation of lagged basis functions, in the range [1, p], until the coefficient corresponding to a lagged basis function is sufficiently small compared to the first coefficient (\(<\delta |a_1|\)). The parameter \(\delta \in [0,1]\subset \mathbb {R}\) is the filtration tolerance. When the magnitude of a coefficient is less than the filtration tolerance multiplied by the magnitude of the first coefficient, the process terminates. In cases where no maximum is found under the constraints, the process also terminates, returning the set of coefficients and lags. This process is algorithmically described in Alg. 1 of Appendix B. (Note that the notation \(\Lambda ^T\) is omitted in the algorithm, since \(\Lambda \) is a diagonal matrix.) The proposed adaptive approach leads to sparser models as opposed to a traditional Auto-Regressive model with p lags (AR(p)), where all coefficients attain nonzero values regardless of their contribution to modelling error reduction. Moreover, the proposed approach allows for easy incorporation of constraints, e.g. non-negativity of the coefficients, in model formation.

Sparse Auto-Regressive models can be computed using Ridge Regression (Hoerl & Kennard, 1970) or the Least Absolute Shrinkage and Selection Operator (LASSO) (Tibshirani, 1996); however, they require “a priori” knowledge of additional parameters (penalty, number of lags, etc.) and “a posteriori” filtering of basis functions after model formation, increasing computational effort for a large number of lags.

The coefficients of the model, namely a, are usually a combination of positive and negative values. Due to the presence of zero samples, this might lead to negative predictions or forecasts. To demonstrate this issue, consider the CO2 emissions over a span of 32 months of an aircraft given in Fig. 6 along with computed values using the proposed model with \(\lambda =1.0\), \(p=5\) and \(\delta =0.001\). The negative predictions and forecasts arise when the lagged values are multiplied with negative weights while the lagged values corresponding to positive weights are zero. To ensure non-negativity of the prediction or forecast, the computed coefficients should be non-negative. This can be achieved by solving the Non-Negative Least Squares (NNLS) problem \(\text {arg min}_{a} \Vert Xa-y \Vert _2^2,\;\;\text {under the constraint}\;\;a \ge 0 \) (e.g., Chen & Plemmons, 2009).

There are multiple solution methods for the NNLS problem, such as the active set type Lawson-Hanson algorithm (Lawson & Hanson, 1995), the Fast Non-Negative Least Squares algorithm (Bro & De Jong, 1997), or Landweber’s gradient descent method (Johansson et al., 2006). However, these algorithms require an extensive number of iterations before converging or repetitive computation of a pseudoinverse matrix, which is a computationally intensive operation.

We impose additional constraints during the selection process in lines 6 and 13 of Alg. 1 (Appendix B) to ensure non-negativity of the coefficients. In line 6, we include the constraint \(b\ge 0\) to ensure the positiveness of the first coefficient \(a_1\). This constraint must also be added to line 16. However, this does not ensure positiveness of the coefficients, since the update step of line 23 could render some previously computed coefficients negative. Thus, an additional condition must be imposed in the basis selection process of line 13 of the following form: \(a_j+g_j b \ge 0, \forall j \in [1,i]\subset \mathbb {N}\), where \(g_j\) is the j-th element of column vector g. Using the parameters \(\lambda =1.0\), \(p=5\), \(\delta =0.001\) and the constraints presented above, the original time-series and resulting model are given in Fig. 7.
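The two conditions can be expressed as a single admissibility check applied to each candidate basis function before it is accepted. The sketch below assumes that the existing coefficients a, the update vector g and the candidate coefficient b are already available; the function name and the optional tolerance are hypothetical.

```python
def is_admissible(a, g, b, tol=0.0):
    """Check the non-negativity constraints for a candidate basis function.

    a: current coefficients, g: update vector for those coefficients,
    b: coefficient of the candidate. The candidate is accepted only if
    b >= 0 and every updated coefficient a_j + g_j * b stays non-negative.
    """
    if b < -tol:
        return False
    return all(aj + gj * b >= -tol for aj, gj in zip(a, g))


accepted = is_admissible(a=[2.0], g=[-0.5], b=3.0)   # updated coefficient 0.5
rejected = is_admissible(a=[1.0], g=[-0.5], b=3.0)   # updated coefficient -0.5
```

Because the check only inspects quantities already produced by the recursive update, it adds negligible cost compared with running a separate NNLS solver for each time-series.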

The coefficients computed using the proposed Adaptive Auto-Regression technique and the Non-Negative variant are given in Table 2. We note that the coefficients computed with the non-negative variant of the Adaptive Auto-Regression technique are similar to coefficients computed with the Lawson-Hanson algorithm (Lawson & Hanson, 1995).

The use of non-negative coefficients leads to non-negative predictions or forecasts, reducing prediction or forecasting error. Alternatively, non-negativity can be enforced by the introduction of constraints. However, this would increase the number of rows of matrix X by a factor of 2, since each input sequence requires a corresponding constraint, which increases computational effort and is effective only for one-step-ahead forecasts. Although transformations such as Box-Cox or the log transform can also be utilised, they introduce additional parameters or require specific data distributions (e.g., log-normal). Determining the additional parameters substantially increases the computational work, particularly for a large number of time-series. Moreover, combining such transformations with techniques such as thresholding of coefficients, adopted by our method, creates additional intricacies, since data transformations hinder interpretability of the model coefficients.

4.3 Thresholding the Magnitude of Coefficients

The diverse nature of the time-series included in the aircraft emissions dataset can lead to erroneous parameter estimation due to the limited length of each time-series and the bias of large groups of samples characterised by the sudden increases or decreases in the emissions. COVID-19 created a substantial number of zero emission observations in the dataset. Moreover, these cases can have sizeable increases of emissions, persisting for a few months, followed by low or zero emissions. Figure 8 provides an example of this case, along with forecasts from the model. The resulting model \(y_0 = 2.1253 y_{-1} + 0.6589 y_{-3} + 0.2763 y_{-4}\) was computed using the parameters \(\lambda =1.0\), \(p=5\) and \(\delta =0.001\) for the proposed scheme.

The coefficients’ magnitude leads to a highly non-stationary model, creating an exponential increase in the values corresponding to the forecasts. This substantially increases the forecasting errors, especially for long forecasting horizons. To avoid such phenomena, we apply a threshold strategy (in a similar manner to the non-negativity constraint for the coefficients described in §4.2) during the coefficient selection process. The threshold bounds the sum of the (non-negative) coefficients from above, specifically \(\Vert a_{1:j}\Vert _1\le \epsilon \), with \(\epsilon \ge 0\) denoting the threshold. The additional constraint that should be added in line 6 of Alg. 1 (Appendix B) is of the following form:

while in line 13 the constraint is as follows:

The last constraint also accounts for the updated coefficients, as sufficiently large coefficient b may increase the magnitude of the computed coefficients, violating the threshold.
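To illustrate why the magnitude of the coefficients matters, the following sketch iterates the model reported for Fig. 8 as a recursive multi-step forecast and compares it with a model whose coefficients sum to at most the threshold. The starting history, the bounded coefficients and the helper names are hypothetical and chosen only for illustration; the value 1.001 is the threshold used later in the paper.

```python
def recursive_forecast(history, coeffs, steps):
    """Multi-step forecast in which each forecast is fed back as an input.

    coeffs maps a lag k to its weight a_k, i.e. y_0 = sum_k a_k * y_{-k}.
    """
    series = list(history)
    for _ in range(steps):
        nxt = sum(w * series[-lag] for lag, w in coeffs.items())
        series.append(nxt)
    return series[len(history):]


def within_threshold(coeffs, eps=1.001):
    """Check that the 1-norm of the coefficients does not exceed eps."""
    return sum(abs(w) for w in coeffs.values()) <= eps


fig8_model = {1: 2.1253, 3: 0.6589, 4: 0.2763}  # coefficients reported for Fig. 8
bounded_model = {1: 0.7, 3: 0.2, 4: 0.1}        # hypothetical, 1-norm about 1.0
history = [1.0, 1.0, 1.0, 1.0]                  # hypothetical starting values

unstable = recursive_forecast(history, fig8_model, 8)
stable = recursive_forecast(history, bounded_model, 8)
print(within_threshold(fig8_model), unstable)    # False, values grow at every step
print(within_threshold(bounded_model), stable)   # True, values stay near 1
```

With non-negative coefficients, a 1-norm above 1 means every forecast step amplifies the previous values, which is the exponential growth the threshold is designed to prevent.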

The constraints given in Eqs. 1 and 2 ensure that the 1-norm of the coefficients does not exceed the threshold. However, Eq. 1 might lead to computational issues, since, for a given choice of the parameter p, no lagged basis might be adequate for selection because of the coefficient b’s magnitude or the non-negativity constraint. This results in failure to compute a model for the respective time-series. To avoid this issue, we employ an alternative model. Specifically, we replace the proposed scheme with Naive Forecasting when the model cannot be computed due to the constraints imposed in line 6 of Alg. 1 (Appendix B). Using this approach, the model for the time-series from Fig. 8 with threshold \(\epsilon =1.001\) is presented in Fig. 9. The Non-Negative Adaptive Auto-Regression method with Thresholding (NNAART) is algorithmically described in Alg. 2 (Appendix B). Other models can be incorporated or used in case of algorithm failure; however, we leave this for future research.

Another possible way to tackle the problem of sudden increase in the coefficients is by imputing zero samples using data imputation techniques (Osman et al., 2018). However, such techniques cannot be applied automatically, since leading zeros in the time-series (the most recent samples) would also be imputed, leading to nonzero forecasts in cases where the forecast should be zero. For example, an aircraft which has not flown for the past 10 months is more likely not to fly next month. Thus, application of data imputation techniques is not straightforward and might lead to an increase in the forecasting error despite the stabilisation of the models.

5 Numerical Results

In this section, we assess the applicability and efficiency of the proposed scheme using the CO2 emissions dataset. We denote our method as NNAART (Non-Negative Adaptive Auto-Regression with Thresholding). The parameters used to generate the forecasts were \(\delta =0.01\) and \(\epsilon =1.001\) unless stated otherwise. For the initial tests and comparisons, the value of \(\lambda \) was set to 1.0, so that all errors contribute equally. The filtration tolerance \(\delta \) was chosen to allow coefficients to be included in the models if they are larger than \(1\%\) of the first coefficient of the model.

The \(\delta \) parameter was chosen to avoid including coefficients, corresponding to lags, that do not affect the computed forecast by more than \(1\%\) of the magnitude of the first coefficient. The maximum lag parameter p can be estimated from the training data, for a fixed value of the filtration tolerance. Table 3 presents the number of time-series with respect to the model’s number of coefficients (noc) computed for various values of the maximum lag p. We observe that the maximum lag parameter does not significantly affect the model’s length above \(p=5\). The small variations of the length of the model in cases of \(noc=1\) or \(noc=2\) are caused by an increase in the maximum lag p, which affects the number of rows of matrix X and, consequently, the inputs. This value is also selected because the maximum lag affects the computational work and performance of the model.

The 29,707 time-series were separated into training (\(75\%\)) and test data (\(25\%\)), with the training set including 24 samples and the test set 8 samples, unless explicitly stated otherwise. We compare our method against Naive Forecasting and a variety of machine learning forecasting methods. Specifically, we consider the methods in Table 4.
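As a minimal sketch of this evaluation setup, the code below splits one 32-month series chronologically into 24 training and 8 test samples and produces the Naive Forecasting baseline. The series values and function names are hypothetical, and the multi-step naive forecast is assumed here to repeat the last training observation over the whole horizon.

```python
def split_series(series, train_fraction=0.75):
    """Chronological split: the first part is used for training, the rest for testing."""
    n_train = int(round(len(series) * train_fraction))
    return series[:n_train], series[n_train:]


def naive_forecast(train, horizon):
    """Naive Forecasting (assumed form): repeat the last observed training value."""
    return [train[-1]] * horizon


# Hypothetical monthly emissions for one aircraft (32 months, with zero samples).
series = [5.0, 6.0, 5.5, 6.2, 0.0, 0.0, 0.0, 1.0] * 4
train, test = split_series(series)
nf = naive_forecast(train, len(test))
print(len(train), len(test))  # 24 8
```

Because the split is chronological, the test samples are always the most recent months of each series.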

Note that, due to the dataset’s size and the number of models to be built, we did not perform hyperparameter optimisation for SVR, GRNN, LSTM and EL, and used the default suggested hyperparameter values instead. For LSTM, we utilised a grid search approach.

We evaluate the performance of our proposed scheme using predefined error measures. We did not consider Root Mean Squared Error (RMSE) or relative error metrics due to the existence of zero samples in the time-series. Instead, we use the Mean Absolute Error (MAE) and the Mean Absolute Scaled Error (MASE) to measure errors (Hyndman & Koehler, 2006). The MAE metric is defined as \(MAE=\frac{1}{J} \sum _{t=1}^{J} |Y_t-F_t|\), where \(Y_t\) is the actual value, \(F_t\) is the forecasted value and J is the number of forecasts. MASE is defined as \(MASE=\frac{\frac{1}{J}\sum _{t=1}^{J} |Y_t-F_t|}{\frac{1}{N-1}\sum _{t=2}^N |Y_t-Y_{t-1}|}\), where J is the number of forecasts and N is the number of points in the training set. Thus, MASE scales the forecast’s mean error with respect to the mean error of the Naive Forecasting method (applied to the training data). For time-series composed entirely of zero samples, the MAE and MASE were set to zero. Table 5 presents the median and mean MAE and MASE and the computational performance across all time-series.
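The two error measures can be computed as in the sketch below, which follows the definitions above. The handling of a zero scaling term is an assumption made here: the text only specifies that all-zero time-series receive an error of zero, so the sketch returns infinity for the remaining degenerate case of a constant, imperfectly forecast series. The example values are hypothetical.

```python
def mae(actual, forecast):
    """Mean Absolute Error over the J forecasts."""
    return sum(abs(y - f) for y, f in zip(actual, forecast)) / len(actual)


def mase(train, actual, forecast):
    """MAE of the forecasts scaled by the in-sample one-step naive MAE."""
    scale = sum(abs(train[t] - train[t - 1]) for t in range(1, len(train))) / (len(train) - 1)
    error = mae(actual, forecast)
    if scale == 0.0:
        # Assumption: 0 when the forecasts are exact (e.g. an all-zero series),
        # infinity otherwise; only the all-zero case is specified in the text.
        return 0.0 if error == 0.0 else float("inf")
    return error / scale


# Hypothetical example: training values, held-out values and forecasts.
train_values = [2.0, 4.0, 4.0, 6.0]
actual = [5.0, 7.0]
forecast = [6.0, 6.0]
print(mae(actual, forecast))                 # 1.0
print(mase(train_values, actual, forecast))  # about 0.75, i.e. 1.0 / (4 / 3)
```

A MASE below 1 means that the forecasts are, on average, more accurate than one-step naive forecasts were on the training data.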

In terms of computational performance, the proposed scheme is second only to Naive Forecasting, which requires minimal processing. The other techniques require substantially more computational time. The fastest and most accurate alternatives to NNAART are EL-Bagging and Holt’s linear method with additive errors (HLM). Despite the fast computation time of HLM, Table 5 shows that NNAART is, on average, 76 times faster since HLM requires constrained optimisation to determine optimal parameters for each time-series. NNAART is also more than 16 times faster than SVR, 140 times faster than GRNN, over 900 times faster than both EL methods and almost 25,000 times faster than LSTM.

Beyond superior computation time, Table 5 also shows that NNAART outperforms the other methods with respect to MAE and MASE. NF is better in approximately \(35.2\%\) of the time-series, while NNAART performs better in \(32.2\%\) and identically in \(32.6\%\) of the time-series. When NF outperforms NNAART, the performance difference is mostly insubstantial. When NNAART is better, NF leads to increased forecasting errors (on average \(26\%\) for those time-series), leading to increased mean (median) MAE and MASE. We note that NNAART was replaced with NF in 3,606 cases, of which 1,319 corresponded to all-zero training samples, while the remaining cases are caused by unsuitable coefficients with respect to the thresholding constraints. Figure 10 provides a histogram for the distribution of MASE for NNAART. The primary source of errors is time-series whose training parts have a large number of leading zeros (the most recent samples). Figure 11 provides an example of such a time-series. Unfortunately, such cases are difficult to model to reduce the overall forecasting error, as they are primarily driven by random events.

To further assess the effectiveness of NNAART, we apply the method over several splits of the dataset. Table 6 presents the median and mean MAE and MASE errors for the NF and NNAART methods for 5 additional data splits. The Naive Forecasting (NF) method presents better results in terms of MAE and MASE for the 50/50 and 60/40 splits. With 40% or more test data, NNAART has insufficient training data to estimate the coefficients of the lagged basis. In contrast, splits of 70/30 and above lead to improved accuracy in terms of MASE and MAE for NNAART, since the training set and the length of the lagged basis increase. We note that, to produce effective models for an increased value of the parameter p, and consequently an increased number of potential lagged bases, the training data should be of adequate length.

5.1 Effect of the Forgetting Factor \(\lambda \)

Recall from §3 that the dataset spans from December 2018 to July 2021. Thus, the data covers the period before the COVID-19 outbreak, the lockdown period, and the gradual reopening of tourism and travelling globally. Accordingly, we investigate the effect of the forgetting factor on the forecasts. The forgetting factor used in the Recursive Least Squares method is restricted to values between 0 and 1, with typical values taken from the interval [0.98, 1], favouring recent over past errors (Diniz, 2020).

In the following numerical experiments, we also focus on values of \(\lambda \) that are greater than 1. Values \(\lambda >1\) place greater weight on past samples compared to more recent observations. The data split was the same as in the initial comparative tests, i.e. 75/25. The parameters for the NNAART method were set to \(\delta =0.01\) and \(p=5\). When \(\lambda \) is greater than 1, the structure of the \(\Lambda \) matrix is as follows:

Using this form of the \(\Lambda \) matrix in Alg. 2 (Appendix B), we can put more weight on old errors. Moreover, the maximum value of \(\lambda \) is restricted to \(<2\).
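The direction of the effect can be illustrated with the textbook exponential weighting, in which the error of sample t in a series of N samples receives weight \(\lambda ^{N-1-t}\). This form is an assumption used purely for illustration: it is not the \(\Lambda \) structure employed in Alg. 2 for \(\lambda >1\), and the function name `error_weights` is hypothetical.

```python
def error_weights(n_samples, lam):
    """Exponential error weights lam**(n_samples - 1 - t); t = 0 is the oldest sample."""
    return [lam ** (n_samples - 1 - t) for t in range(n_samples)]


recent_heavy = error_weights(24, 0.98)  # lam < 1: the newest sample has the largest weight
old_heavy = error_weights(24, 1.14)     # lam > 1: the oldest sample has the largest weight
uniform = error_weights(24, 1.0)        # lam = 1: all errors contribute equally

print(recent_heavy[0] < recent_heavy[-1])  # True
print(old_heavy[0] > old_heavy[-1])        # True
```

Under this assumed weighting, \(\lambda =1.14\) over 24 training samples makes the oldest error roughly twenty times more influential than the newest one, which conveys how strongly values above 1 shift the emphasis towards the early part of the training set.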

Figure 12 compares the accuracy of NNAART with respect to MASE across \(\lambda \in [0.7,1.4]\) for all time-series. Figure 13 presents the corresponding mean MASE for various values of the parameter \(\lambda \). From Fig. 12 we observe that the proposed scheme leads to improved results for more time-series, with respect to MASE, in the interval [1.14, 1.23], while the NF method leads to improved results for most time-series in the intervals [0.70, 1.14) and (1.23, 1.40]. We can also observe that the curve corresponding to the number of time-series for which the two methods perform similarly decreases monotonically until \(\lambda =1.06\), while for larger values it starts increasing. With respect to mean MASE, the NNAART method, cf. Fig. 13, is better in the interval [0.84, 1.40]. However, NNAART is only better in the majority of the time-series over the interval [1.14, 1.23] according to Fig. 12. This is because there are cases where the proposed method under-performs compared to NF but the difference is not significant, whereas when the NF method under-performs it leads to significant errors that affect the mean MASE.

The value of \(\lambda \) that leads to the best overall performance is approximately 1.14, which results in a mean MASE of 1.3371, with the NNAART method being better in 10,388 of the time-series. Thus, we weight older errors more than more recent ones. This can be interpreted as follows: the test part of the time-series belongs to the period where air travel had slowly begun to return to normal, while the most recent part of the training set corresponds to the period where air travel was limited due to the COVID-19 outbreak, and the starting part of the training set lies in the pre-COVID-19 era. Thus, values of \(\lambda >1\) render the errors corresponding to these early samples more important in the computation of the weights of the model, leading to improved results.

The proposed scheme offers several advantages over other methods. Firstly, it adaptively selects the appropriate basis functions (features) based on potential error reduction, resulting in sparser models that avoid overfitting while improving performance and interpretability. This is particularly effective for time-series with few samples, unlike machine learning techniques such as LSTM that require substantial amounts of data. Secondly, the scheme reduces the number of hyperparameters, enabling the training of multiple models with minimal computational resources in “what-if” scenarios, unlike machine learning methods like SVM, EL, or LSTM that require extensive tuning. Additionally, the recursive approach ensures fast computation and model stability, and allows for parallelisation on modern multicore and manycore architectures. Constraints such as non-negativity and coefficient thresholding are easily incorporated, reducing the occurrence of highly non-stationary models and making the models resilient against a large number of zero samples, which significantly reduces forecasting errors. The inclusion of a “forgetting” factor allows for selective weighting of past errors, rendering them less influential during model formation. Also, the scheme’s flexibility and extensibility enable the incorporation of combinations of basis functions or even other models as a foundation.

Finally, we note that we have recently updated the underlying method for generating forecasts by considering clustering. Clustering offers the advantage of reducing the effect of shocks during modelling and forecasting. Specifically, the time-series corresponding to the aircraft are clustered into several groups, and the model is computed for the centre of each cluster and then used to forecast all the members of the group. We present the performance of clustering in Appendix C.

6 Discussion

In this section, we first discuss the theoretical implications, highlighting the contributions of our approach for forecasting carbon emissions. Subsequently, we note the practical implications, examining the tangible and actionable outcomes of our research. Finally, we discuss our research limitations and future research opportunities.

6.1 Theoretical Implications

We outline how this paper contributes to theory in three ways: (1) advancing theory in predictive analytics, (2) illustrating the benefits to organisations of using predictive analytics techniques for decision-making, and (3) contributing to the growing focus of aviation in information systems literature.

This paper contributes to advancing predictive analytics theory through the development and validation of a novel Adaptive Auto-Regression method based on Recursive Pseudoinverse Matrices. We demonstrate the technique’s effectiveness in the context of forecasting aircraft CO2 emissions. Specifically, we extend the literature on predictive analytics techniques (Cang et al., 2024; Grewe et al., 2021; Guo et al., 2021; Li et al., 2023; Qian et al., 2020; Mamdouh et al., 2024; Wood, 2023; Singh & Dubey, 2021) by developing a novel technique with a high percentage accuracy which can be utilised to forecast with sparse data. Thus, we contribute to the literature by providing techniques for analysing sparse data (e.g., Benítez-Peña et al., 2021), which contributes to the substantial body of work on predictive analytics (Duan & Da Xu, 2021). Specifically, we place a particular emphasis on the role and application of predictive analytics techniques in data analytics by focusing on improving forecasting accuracy and operational efficiency. The NNAART method represents a further development and extension of the machine learning techniques, and by providing validation of the operational efficiency and effectiveness of the autoregression method, it overcomes criticisms that have been levelled at the use of regression in machine learning-enabled analytics (e.g. de Waal et al., 2024). Therefore, this research adds to the existing literature (de Waal et al., 2024) focused on the exploration of business analytics and machine learning in business.

The ascendance of data-driven business models in the modern business landscape necessitates a more profound understanding of how to strategise around the utilisation of big data and analytics (Wirén & Mäntymäki, 2018; Mäntymäki et al., 2020). This need is underlined by the rapidly increasing ability to extract actionable insights from vast and complex data sets (Duan & Da Xu, 2021). We contribute to the information systems literature by illustrating the benefits for organisations of using predictive analytics-based techniques in terms of enabling more informed managerial decision-making in real-time, adding depth to recent research on improved decision-making (Sturm et al., 2023) and enabling better financial risk management for organisations, adding a new context to the findings of Fang et al. (2021) who have illustrated how predictive analytics can lead to improved financial effectiveness in clinical decision making. In particular, the role of analytics in business decision-making processes is a point of focus, as it has been demonstrated to significantly mitigate the risk inherent in business operations (Mäntymäki et al., 2020). Furthermore, the research takes an important step towards increasing transparency in the application of predictive analytics. Our methodology, which is fully disclosed, moves beyond the ’black box’ approach that is often associated with using machine learning for forecasting. In this way, the research makes a significant contribution to the growing focus on transparency and replicability within the field of business analytics (Wirén & Mäntymäki, 2018).

We contribute to the emerging literature in information systems focused on aviation. Most aviation forecasting literature typically focuses on predicting consumer demand (e.g., Li et al., 2021) and consumer emotions (e.g., Shayganmehr and Bose, 2024). By analysing CO2 emissions, we add to the growing literature base in information systems focused on ESG and travel.

6.2 Implications for Practice

Regulatory compliance for schemes covering different jurisdictions (e.g., EU ETS, the newly established UK ETS, and similar ETSs in Korea, California, etc.) creates the potential for significant present or future financial risks for aviation industry participants (Sharma et al., 2021; Guan et al., 2022). Given the increasing ESG responsibilities, FCOE’s platform helps stakeholders to identify and manage the emissions performance of individual aircraft and fleets and link these to associated financial risks. The developed platform can act as a benchmark for analysing actual and predicted CO2 emissions across the aviation sector according to country, portfolio (e.g. lessor, bank, export credit agency), airline operator, aircraft type, airport, route city pair, aircraft category, and manufacturer. Beyond facilitating real-time decisions, the platform enables running operational and growth scenarios based on actual flight data rather than on estimates. The platform automatically compiles aircraft utilisation data for financiers, lessors, investors and insurers and leverages a blend of industry standard and proprietary methods to provide consistent, comparable, and reliable analytics on the emissions performance of aircraft and fleets, linking these to associated financial risks.

This article highlights the value of the forecasting engine, which uses analytics to predict future aircraft and fleet utilisation and performance, together with accompanying future financial exposures relating to CO2 emissions. Incorporating the forecasting engine into the FCOE platform has multiple potential benefits. The forecasting engine enables aircraft owners and operators to make decisions that align with future requirements under international, regional, and national reduction schemes as well as their own voluntary ESG targets.

The forecasting component is a key differentiator against competitors (Nayak et al., 2021; Rampersad-Jagmohan & Wang, 2023). This is especially important for achieving ESG goals: aircraft lessors face investor pressure to show ESG credentials, with many international banks incorporating ESG performance into their credit terms (Watts, 2021). The forecasting function becomes particularly insightful here: it captures the financial cost of exposure to various regulation schemes. It predicts an operator’s and/or lessor’s future financial exposure based on different variables like the number and type of aircraft and changes in an airline’s portfolio makeup (Jackson et al., 2023). This is tremendously valuable as banks now include ESG performance in credit terms (Henisz & McGlinch, 2019; Cheng & Hasan, 2023).

Moreover, operators can use the forecasting engine to anticipate performance and ensure they meet optimal credit terms based on ESG performance. This could have significant financial implications over a long-term credit agreement. The forecasting engine also helps airlines allocate aircraft to routes to minimise CO2 emissions per passenger for each journey (Ma et al., 2018). For instance, it could model whether an A350 aircraft is more efficient for a specific route compared to an A330, considering distance, passenger numbers, and fuel type. This contributes to making air travel more sustainable.

At the portfolio level, forecasting helps determine fleet management (e.g., which planes to retire) by modelling different scenarios (Khoo & Teoh, 2014). An airline’s portfolio could change dramatically each year, and forecasting aids operators in deciding which planes to phase out. It allows for modelling different scenarios, such as understanding how adding winglets to an aircraft affects CO2 emissions or comparing the predicted CO2 emissions performance of a fleet to that of competitors (Yin et al., 2016). According to an FCOE manager, the forecasting methodology provides users with the information needed to answer such questions, thus improving decision-making aimed at reducing emissions and increasing travel sustainability. In summary, FCOE noted that the developed forecasting methodology adds another dimension to ESG metrics and can be a key differentiator from competitors.

6.3 Limitations

While our research has led to some promising results, it is important to recognise that, like all scientific inquiries, it has limitations. The method we developed and employed in this study was tested in a single environment using one large dataset. The boundaries of this dataset are defined by several factors, including the time frame under consideration, the geographical area of focus, the specific types of aircraft utilised in the data, and the particular variables captured for analysis.

One significant restriction that needs to be highlighted pertains to the dataset itself. The data used in our study was focused on the European Union and was provided by a single organisation. As a result, the conclusions drawn from our analysis may not be universally applicable across different settings or regions, which raises concerns about the generalisability of our findings. In addition to the geographical and source limitations of our data, the set of predetermined variables used in the dataset could potentially limit the utility of our analysis. While necessary for our study, the selection of these specific variables may not encompass all possible factors that could impact our area of study, bounding the scope of our findings.

One other significant limitation that should be considered is that the method was exclusively tested in the specific context of aircraft CO2 emissions. This means the results and findings are tailored to this particular scenario and may not apply or be as effective in other contexts or environments. Our discussions regarding the method’s applicability for decision-making purposes have been confined to a small group of individuals. This limitation in participant diversity could influence the breadth and depth of perspectives gathered, thereby restricting our understanding of the method’s utility in various decision-making scenarios.

6.4 Future Research Opportunities

Future research in different settings should investigate the utility and generalisability of the developed method and work towards addressing the limitations outlined earlier. Regarding the dataset, other areas with significant CO2 emissions, like shipping, vehicle routing, and emission compliance strategies, could be explored in terms of the utility of the developed method. More broadly, future research could apply our method to forecast the national impacts of travel on CO2 emissions across countries, aiding policymakers and regulatory bodies. For example, our method could be used to study differences regarding emissions between developed and developing nations. Beyond emissions, there is also the possibility of exploring the utility and value of the method in multiple settings, thereby addressing the issue of generalisability.

Future research should further explore the utility of the generated forecasts in terms of their influence on business decision-making. Our approach provides opportunities to address other issues within airline management. For example, there is the potential to investigate the utility of the predictive model to facilitate scenario planning and prescriptive analytics to optimise aircraft route allocations and fleet management, including aircraft maintenance and aircraft replacement.

7 Conclusion

Addressing CO2 emissions in the aviation industry is crucial due to their significant contribution to global climate change (Ritchie et al., 2020; Brewer, 2021). Despite the challenges of decarbonisation in air transport, advanced analytics offer promising solutions for emission reduction (Prussi et al., 2021). By partnering with FCOE, the research team developed a novel forecasting method, NNAART, designed to predict CO2 emissions effectively. This method, characterised by its flexibility and efficiency, allows for the adaptive selection of parameters and basis functions, enabling time series predictions for datasets with many “missing” or “zero” samples. Piloted under an IP evaluation licence, this method has demonstrated its capability to provide precise carbon emissions forecasts for aircraft financiers and owners. These forecasts are vital for understanding potential CO2 credits, offsets, and financial exposures, thereby supporting climate-related financial disclosures and enhancing the financial operations of organisations. The successful implementation of this forecasting method highlights the importance of leveraging advanced analytics to achieve both environmental and financial benefits in the aviation sector.

Availability of Data and Material

Data supporting this study cannot be made available due to commercial restrictions.

Notes

The research team commenced engagement with FCOE in June 2019 as part of a four-year collaborative, government and industry-funded research project focused on technological innovation.

References

Akande, A., Cabral, P., & Casteleyn, S. (2019). Assessing the gap between technology and the environmental sustainability of European cities. Information Systems Frontiers, 21, 581–604.

Ando, T. (2005). Schur complements and matrix inequalities: Operator-theoretic approach. The Schur Complement and its Applications, 137–162 (Springer)

Awad, M., & Khanna, R. (2015). Support Vector Regression, 67–80 (Berkeley, CA: Apress), ISBN 978-1-4302-5990-9

Bauer, K., von Zahn, M., & Hinz, O. (2023). Expl(AI)ned: The impact of explainable artificial intelligence on users’ information processing. Information Systems Research, 34(4), 1582–1602.

Benbya, H., Pachidi, S., & Jarvenpaa, S. (2021). Special issue editorial: Artificial intelligence in organizations: Implications for information systems research. Journal of the Association for Information Systems, 22(2), 10.

Benítez-Peña, S., Carrizosa, E., Guerrero, V., Jiménez-Gamero, M. D., Martín-Barragán, B., Molero-Río, C., Ramírez-Cobo, P., Morales, D. R., & Sillero-Denamiel, M. R. (2021). On sparse ensemble methods: An application to short-term predictions of the evolution of COVID-19. European Journal of Operational Research.

Bolat, C. K., Soytas, U., Akinoglu, B., & Nazlioglu, S. (2023). Is there a macroeconomic carbon rebound effect in EU ETS? Energy Economics, 125, 106879.

Breiman, L. (2001). Bagging predictors. Machine Learning, 24, 123–140.

Brewer, T. (2021). Transportation Emissions on the Evolving European Agenda, 71–85 (Cham: Springer International Publishing), ISBN 978-3-030-59691-0

Bro, R., & De Jong, S. (1997). A fast non-negativity-constrained least squares algorithm. Journal of Chemometrics, 11(5), 393–401.

Cang, H., Zeng, X., & Yan, S. (2024). A novel grey multivariate convolution model based on the improved marine predators algorithm for predicting fossil CO2 emissions in China. Expert Systems with Applications, 243, 122865.

Chen, D., & Plemmons, R. J. (2009). Nonnegativity constraints in numerical analysis. In A. Bultheel and R. Cools (Eds.), Symposium on the birth of numerical analysis, 109–139 (World Scientific)

Chen, J., Chen, Y., Mao, B., Wang, X., & Peng, L. (2022). Key mitigation regions and strategies for CO2 emission reduction in China based on STIRPAT and ARIMA models. Environmental Science and Pollution Research, 29(34), 51537–51553.

Cheng, M., & Hasan, I. (2023). Firm ESG practices and the terms of bank lending. Sustainable finance and ESG: Risk, management, regulations, and implications for financial institutions, 91–124 (Springer)

Cheng, Z., Pang, M. S., & Pavlou, P. A. (2020). Mitigating traffic congestion: The role of intelligent transportation systems. Information Systems Research, 31(3), 653–674.

Chèze, B., Gastineau, P., & Chevallier, J. (2011). Forecasting world and regional aviation jet fuel demands to the mid-term (2025). Energy Policy, 39(9), 5147–5158.

de Waal, H., Nyawa, S., & Wamba, S. F. (2024). Consumers’ financial distress: Prediction and prescription using interpretable machine learning. Information Systems Frontiers, 1–22.

Deng, Q., Santos, B. F., & Verhagen, W. J. (2021). A novel decision support system for optimizing aircraft maintenance check schedule and task allocation. Decision Support Systems, 146, 113545.

Diniz, P. S. R. (2020) Adaptive Lattice-Based RLS Algorithms, 231–261 (Cham: Springer International Publishing), ISBN 978-3-030-29057-3

dos Santos Coelho, L., Ayala, H. V. H., & Mariani, V. C. (2024). CO and NOx emissions prediction in gas turbine using a novel modeling pipeline based on the combination of deep forest regressor and feature engineering. Fuel, 355, 129366.

Drucker, H., Burges, C. J. C., Kaufman, L., Smola, A., & Vapnik, V. (1997). Support vector regression machines. Mozer, M. C., Jordan, M., Petsche, T., eds., Advances in Neural Information Processing Systems, volume 9 (MIT Press)

Duan, L., & Da Xu, L. (2021). Data analytics in industry 4.0: A survey. Information Systems Frontiers, 1–17.

Fang, X., Gao, Y., & Hu, P. J. (2021). A prescriptive analytics method for cost reduction in clinical decision making. Management Information Systems Quarterly, 45(1), 83–115.

Filelis-Papadopoulos, C., Kyziropoulos, P., Morrison, J., & O’Reilly, P. (2021). Modelling and forecasting based on recurrent pseudoinverse matrices. Paszynski, M., Kranzlmüller, D., Krzhizhanovskaya, V., Dongarra, J., Sloot, P., eds., Computational Science - ICCS 2021. ICCS 2021. Lecture Notes in Computer Science, volume 12745 (Springer, Cham)

Fügener, A., Grahl, J., Gupta, A., & Ketter, W. (2021). Will humans-in-the-loop become Borgs? Merits and pitfalls of working with AI. Management Information Systems Quarterly, 45

Fügener, A., Grahl, J., Gupta, A., & Ketter, W. (2022). Cognitive challenges in human-artificial intelligence collaboration: Investigating the path toward productive delegation. Information Systems Research, 33(2), 678–696.

Granger, C. W. J., & Joyeux, R. (1980). An introduction to long-memory time series models and fractional differencing. Journal of Time Series Analysis, 1(1), 15–29.

Grewe, V., Rao, A. G., Grönstedt, T., Xisto, C., Linke, F., Melkert, J., Middel, J., Ohlenforst, B., Blakey, S., Christie, S., et al. (2021). Evaluating the climate impact of aviation emission scenarios towards the Paris Agreement including COVID-19 effects. Nature Communications, 12(1), 1–10.

Guan, H., Liu, H., & Saadé, R. G. (2022). Analysis of carbon emission reduction in international civil aviation through the lens of shared triple bottom line value creation. Sustainability, 14(14), 8513.

Gunter, U., & Wöber, K. (2021). Estimating transportation-related CO2 emissions of European city tourism. Journal of Sustainable Tourism, 30(1), 145–168.

Guo, X., Grushka-Cockayne, Y., & De Reyck, B. (2021). Forecasting airport transfer passenger flow using real-time data and machine learning. Manufacturing & Service Operations Management

Hajek, P., Abedin, M. Z., & Sivarajah, U. (2023). Fraud detection in mobile payment systems using an XGBoost-based framework. Information Systems Frontiers, 25(5), 1985–2003.

Happonen, M., Rasmusson, L., Elofsson, A., & Kamb, A. (2022). Aviation’s climate impact allocated to inbound tourism: Decision-making insights for “climate-ambitious” destinations. Journal of Sustainable Tourism, 1–17.

Harris, R. (1992). Testing for unit roots using the augmented Dickey-Fuller test: Some issues relating to the size, power and the lag structure of the test. Economics Letters, 38(4), 381–386.

Henisz, W. J., & McGlinch, J. (2019). ESG, material credit events, and credit risk. Journal of Applied Corporate Finance, 31(2), 105–117.

Higham, J., Font, X., & Wu, J. (2021). Code red for sustainable tourism. Journal of Sustainable Tourism, 30(1), 1–13.

Hochreiter, S., & Schmidhuber, J. (1997). Long short-term memory. Neural Computation, 9(8), 1735–1780.

Hoerl, A. E., & Kennard, R. W. (1970). Ridge regression: Biased estimation for nonorthogonal problems. Technometrics, 12(1), 55–67.

Horn, R. A., & Zhang, F. (2005). Basic properties of the Schur complement. The Schur Complement and Its Applications, 17–46 (Springer)

Hyndman, R. J., & Koehler, A. B. (2006). Another look at measures of forecast accuracy. International Journal of Forecasting, 22(4), 679–688.

Ismagilova, E., Hughes, L., Dwivedi, Y. K., & Raman, K. R. (2019). Smart cities: Advances in research–an information systems perspective. International Journal of Information Management, 47, 88–100.

Jackson, C., Pascual, R., & Kristjanpoller, F. (2023). Performance-based contracting in the airline industry from the standpoint of risk-averse maintenance providers. Proceedings of the Institution of Mechanical Engineers, Part O: Journal of Risk and Reliability, 1748006X231195398.

Johansson, B., Elfving, T., Kozlov, V., Censor, Y., Forssén, P. E., & Granlund, G. (2006). The application of an oblique-projected landweber method to a model of supervised learning. Mathematical and Computer Modelling, 43(7), 892–909.

Karakurt, I., & Aydin, G. (2023). Development of regression models to forecast the CO2 emissions from fossil fuels in the BRICS and MINT countries. Energy, 263, 125650.

Khoo, H. L., & Teoh, L. E. (2014). An optimal aircraft fleet management decision model under uncertainty. Journal of Advanced Transportation, 48(7), 798–820.

Kingma, D. P., & Ba, J. (2015). Adam: A method for stochastic optimization. Bengio Y, LeCun Y, eds., 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings

Korenberg, M. J., & Paarmann, L. D. (1991). Orthogonal approaches to time-series analysis and system identification. IEEE Signal Processing Magazine, 8(3), 29–43.

Kwiatkowski, D., Phillips, P. C., Schmidt, P., & Shin, Y. (1992). Testing the null hypothesis of stationarity against the alternative of a unit root: How sure are we that economic time series have a unit root? Journal of Econometrics, 54(1), 159–178.

Lawson, C. L., & Hanson, R. J. (1995). Solving least squares problems, volume 15 of Classics in Applied Mathematics (Philadelphia, PA: Society for Industrial and Applied Mathematics (SIAM))

Li, M., Wang, W., De, G., Ji, X., & Tan, Z. (2018). Forecasting carbon emissions related to energy consumption in Beijing-Tianjin-Hebei region based on grey prediction theory and extreme learning machine optimized by support vector machine algorithm. Energies, 11(9).

Li, X., de Groot, M., & Bäck, T. (2021). Using forecasting to evaluate the impact of COVID-19 on passenger air transport demand. Decision Sciences

Li, X., de Groot, M., & Bäck, T. (2023). Using forecasting to evaluate the impact of COVID-19 on passenger air transport demand. Decision Sciences, 54(4), 394–409.

Liao, W., Fan, Y., & Wang, C. (2023). Exploring the equity in allocating carbon offsetting responsibility for international aviation. Transportation Research Part D: Transport and Environment, 114, 103566.

Lo, P. L., Martini, G., Porta, F., & Scotti, D. (2020). The determinants of CO2 emissions of air transport passenger traffic: An analysis of Lombardy (Italy). Transport Policy, 91, 108–119.

Lotfi, A., Jiang, Z., Lotfi, A., & Jain, D. C. (2023). Estimating life cycle sales of technology products with frequent repeat purchases: A fractional calculus-based approach. Information Systems Research, 34(2), 409–422.

Ma, Q., Song, H., & Zhu, W. (2018). Low-carbon airline fleet assignment: A compromise approach. Journal of Air Transport Management, 68, 86–102.

Ma, R., Boubrahimi, S. F., Angryk, R. A., & Ma, Z. (2020). Evaluation of hierarchical structures for time series data. 2020 5th IEEE International Conference on Big Data Analytics (ICBDA), 94–99

Mamdouh, M., Ezzat, M., & Hefny, H. (2024). Improving flight delays prediction by developing attention-based bidirectional LSTM network. Expert Systems with Applications, 238, 121747.

Mäntymäki, M., Hyrynsalmi, S., & Koskenvoima, A. (2020). How do small and medium-sized game companies use analytics? An attention-based view of game analytics. Information Systems Frontiers, 22(5), 1163–1178.

Marin, J.-M., & Robert, C. P. (2007). Bayesian Core: A Practical Approach to Computational Bayesian Statistics (Springer)

Matteo, T. D. (2007). Multi-scaling in finance. Quantitative Finance, 7(1), 21–36.

Nayak, B., Bhattacharyya, S. S., & Krishnamoorthy, B. (2021). Explicating the role of emerging technologies and firm capabilities towards attainment of competitive advantage in health insurance service firms. Technological Forecasting and Social Change, 170, 120892.

Nguyen, D. K., Huynh, T. L. D., & Nasir, M. A. (2021). Carbon emissions determinants and forecasting: Evidence from G6 countries. Journal of Environmental Management, 285, 111988.

Osman, A., Afan, H. A., Allawi, M. F., Jaafar, O., Noureldin, A., Hamzah, F. M., Ahmed, A. N., & El-shafie, A. (2020). Adaptive fast orthogonal search (FOS) algorithm for forecasting streamflow. Journal of Hydrology, 586, 124896.

Osman, M. S., Abu-Mahfouz, A. M., & Page, P. R. (2018). A survey on data imputation techniques: Water distribution system as a use case. IEEE Access, 6, 63279–63291.

Penrose, R. (1955). A generalized inverse for matrices. Mathematical Proceedings of the Cambridge Philosophical Society, 51(3), 406–413.

Prussi, M., Lee, U., Wang, M., Malina, R., Valin, H., Taheripour, F., Velarde, C., Staples, M. D., Lonza, L., & Hileman, J. I. (2021). CORSIA: The first internationally adopted approach to calculate life-cycle GHG emissions for aviation fuels. Renewable and Sustainable Energy Reviews, 150, 111398.

Qian, Y., Sun, L., Qiu, Q., Tang, L., Shang, X., & Lu, C. (2020). Analysis of CO2 drivers and emissions forecast in a typical industry-oriented county: Changxing county, China. Energies, 13(5).

Rampersad-Jagmohan, M., & Wang, Y. (2023). Predictive analytics in aviation management. International Workshop of Advanced Manufacturing and Automation, 401–406 (Springer)

Revilla, E., Saenz, M. J., Seifert, M., & Ma, Y. (2023). Human-artificial intelligence collaboration in prediction: A field experiment in the retail industry. Journal of Management Information Systems, 40(4), 1071–1098.

Ritchie, B. W., Sie, L., Gössling, S., & Dwyer, L. (2020). Effects of climate change policies on aviation carbon offsetting: A three-year panel study. Journal of Sustainable Tourism, 28(2), 337–360.

Sekartadji, R., Musyafa, A., Jaelani, L. M., Ahyudanari, E., et al. (2023). CO2 emission of aircraft at different flight-level (route: Jakarta-Surabaya). Chemical Engineering Transactions, 98, 39–44.

Sharma, A., Jakhar, S. K., & Choi, T. M. (2021). Would CORSIA implementation bring carbon neutral growth in aviation? A case of US full service carriers. Transportation Research Part D: Transport and Environment, 97, 102839.

Shayganmehr, M., & Bose, I. (2024). Have a nice flight! Understanding the interplay between topics and emotions in reviews of luxury airlines in the pre- and post-COVID-19 periods. Information Systems Frontiers, 1–22.

Singh, M., & Dubey, R. K. (2021). Deep learning model based CO2 emissions prediction using vehicle telematics sensors data. IEEE Transactions on Intelligent Vehicles, 8(1), 768–777.

Sinnott, R. W. (1984). Virtues of the haversine. Sky and Telescope, 68(2), 158–159.

Sokal, R. R., & Michener, C. D. (1958). A statistical method for evaluating systematic relationships. University of Kansas Science Bulletin, 38, 1409–1438.

Sturm, T., Pumplun, L., Gerlach, J. P., Kowalczyk, M., & Buxmann, P. (2023). Machine learning advice in managerial decision-making: The overlooked role of decision makers’ advice utilization. The Journal of Strategic Information Systems, 32(4), 101790.

Sun, X., Chung, S. H., & Ma, H. L. (2020). Operational risk in airline crew scheduling: Do features of flight delays matter? Decision Sciences, 51(6), 1455–1489.

Tealab, A. (2018). Time series forecasting using artificial neural networks methodologies: A systematic review. Future Computing and Informatics Journal, 3(2), 334–340.

Thirumuruganathan, S., Al Emadi, N., Jung, S. G., Salminen, J., Robillos, D. R., & Jansen, B. J. (2023). Will they take this offer? A machine learning price elasticity model for predicting upselling acceptance of premium airline seating. Information & Management, 60(3), 103759.

Tibshirani, R. (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society. Series B (Methodological), 58(1), 267–288.

Tutun, S., Johnson, M. E., Ahmed, A., Albizri, A., Irgil, S., Yesilkaya, I., Ucar, E. N., Sengun, T., & Harfouche, A. (2023). An AI-based decision support system for predicting mental health disorders. Information Systems Frontiers, 25(3), 1261–1276.

Ward, J. H., Jr. (1963). Hierarchical grouping to optimize an objective function. Journal of the American Statistical Association, 58(301), 236–244.

Wasserman, P. (1993). Advanced Methods in Neural Computing. New York: Van Nostrand Reinhold.

Watts, R. (2021). ESG investing & why it’s important in aviation deal-making. https://www.accaviation.com/esg-investing-why-its-important-in-aviation-deal-making/, last visited June 17, 2024

Wirén, M., & Mäntymäki, M. (2018). Strategic positioning in big data utilization: Towards a conceptual framework. Challenges and Opportunities in the Digital Era: 17th IFIP WG 6.11 Conference on e-Business, e-Services, and e-Society, I3E 2018, Kuwait City, Kuwait, October 30–November 1, 2018, Proceedings 17, 117–128 (Springer)

Wood, D. A. (2023). Machine learning for hours-ahead forecasts of urban air concentrations of oxides of nitrogen from univariate data exploiting trend attributes. Environmental Science: Advances, 2(11), 1505–1526.

World Bank. (2007). State and Trends of the Carbon Market 2007 (Washington DC), in cooperation with the International Emissions Trading Association

Wright, M. (2005). The interior-point revolution in optimization: History, recent developments, and lasting consequences. Bulletin of the American Mathematical Society, 42(1), 39–56.

Wu, J., Wang, Z., Hu, Y., Tao, S., & Dong, J. (2023). Runoff forecasting using convolutional neural networks and optimized bi-directional long short-term memory. Water Resources Management, 37(2), 937–953.

Xu, Z., Liu, L., & Wu, L. (2021). Forecasting the carbon dioxide emissions in 53 countries and regions using a non-equigap grey model. Environmental Science and Pollution Research, 28, 15659–15672.

Yang, H., & O’Connell, J. F. (2020). Short-term carbon emissions forecast for aviation industry in Shanghai. Journal of Cleaner Production, 275, 122734.

Yin, K. S., Dargusch, P., & Halog, A. (2016). Study of the abatement options available to reduce carbon emissions from Australian international flights. International Journal of Sustainable Transportation, 10(10), 935–946.

Yu, J., Shao, C., Xue, C., & Hu, H. (2020). China’s aircraft-related CO2 emissions: Decomposition analysis, decoupling status, and future trends. Energy Policy, 138, 111215.

Zhang, F. (2005). Block matrix techniques. The Schur complement and its applications, 83–110 (Springer)

Zhang, J., Li, S., & Wang, Y. (2023). Shaping a smart transportation system for sustainable value co-creation. Information Systems Frontiers, 25(1), 365–380.

Zhang, T., Wang, G. A., He, Z., & Mukherjee, A. (2024). Service failure monitoring via multivariate multiple linear regression profile schemes with dimensionality reduction. Decision Support Systems, 178, 114122.