Abstract

Artificial Intelligence (AI) technology is transforming the healthcare sector, yet its ethical implications remain open to debate. This research investigates how signals of AI responsibility affect healthcare practitioners’ attitudes toward AI, satisfaction with AI, and AI usage intentions, as well as the mechanisms underlying these effects. We identify autonomy, beneficence, explainability, justice, and non-maleficence as the five key signals of AI responsibility for healthcare practitioners. The findings reveal that these five signals significantly increase healthcare practitioners’ engagement, which in turn leads to more favourable attitudes, greater satisfaction, and higher usage intentions toward AI technology. Moreover, ‘techno-overload’, a primary ‘techno-stressor’, moderates the mediating effect of engagement on the relationship between AI justice and behavioural and attitudinal outcomes. When healthcare practitioners perceive AI technology as adding to their workload, techno-overload undermines the importance of the justice signal and, consequently, their attitudes, satisfaction, and usage intentions toward AI technology.

1 Introduction

Artificial Intelligence (AI) is a wide-ranging branch of computer science concerned with building smart machines capable of performing tasks that typically require human intelligence (Russell & Norvig, 2016). The global healthcare AI market is expected to reach USD 190.6 billion in 2025 (Singh, 2020). Many countries, including China, are experiencing dramatic digitisation of the healthcare sector, and China leads in AI-based diagnostic imaging equipment (Nikkei Asia, 2020). AI technology is transforming the healthcare industry and offering substantial support to healthcare practitioners. It is being implemented in medical imaging, disease diagnostics, drug discovery, and sensors and devices that track patients’ health status in real time.

While the benefits of AI technology in the healthcare sector are widely recognized, many obstacles remain to motivating healthcare practitioners to engage with it. For instance, job automation and the substantial workforce displacement induced by AI have triggered considerable stress among healthcare practitioners (Davenport & Kalakota, 2019). Vakkuri et al. (2020) point out that the public is becoming aware of the ethical implications of AI technology, relating, for example, to weak data infrastructure (Panch et al., 2019), ethical concerns over its unintended impacts (Peters et al., 2020), and human acceptance of machines (Anderson & Anderson, 2007). Hospitals face the challenge not only of understanding how AI can be deployed responsibly, but also of determining whether their engagement with AI can generate positive outcomes such as favourable attitudes, greater satisfaction, and higher usage intentions. Although there is broad agreement on the importance of AI ethics principles (Morley et al., 2020), there is limited understanding of how responsible AI principles are implemented in the healthcare sector. Furthermore, prior research examines the adoption of AI technology mainly from patients’ perspectives (Nadarzynski et al., 2019; Nadarzynski et al., 2020), while the drivers of healthcare practitioners’ engagement with AI technology remain unexplored.

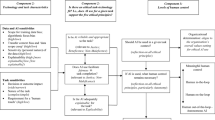

Our research addresses this urgent need by elucidating how AI technology can be developed and deployed responsibly so as to enhance healthcare professionals’ engagement with it and generate positive responses toward it. The signal-mechanism-consequence (SMC) theory (Li & Wu, 2018; Pavlou & Dimoka, 2006) serves as the theoretical foundation of the proposed framework. SMC theory integrates multiple theoretical views (including diffusion of innovation theory and signaling theory) to help information systems (IS) scholars understand how the signals triggered by implementing an innovation in an organisation may lead to intended or unintended consequences via certain mechanisms. Our framework also builds on the responsible AI principles of Floridi et al. (2018), namely beneficence, non-maleficence, autonomy, and justice, to which we add an enabling principle, explainability. We seek to understand whether these five key responsible AI principles influence healthcare practitioners’ engagement with AI technology. The importance of engagement has been examined for technology in general, such as mobile applications and virtual reality; however, to the best of our knowledge, this is the first study to explore how responsible AI principles improve healthcare practitioners’ attitudes, satisfaction, and usage intentions. More importantly, we investigate the underlying mechanisms through which responsible AI principles may achieve this.

We also explore whether ‘techno-overload’ moderates the effects of responsible AI principles on employee engagement, which in turn affects practitioners’ attitudes, satisfaction, and usage intentions with AI technology. Tarafdar et al. (2007) describe techno-overload as technology-related work overload that can reduce employee productivity. Healthcare practitioners may feel stressed when they perceive that difficulties arising from using AI technology extend their daily tasks and push their workload beyond their capacity. Such pressures, termed ‘techno-stressors’, can undermine the benefits of responsible AI principles for engagement.

In view of these gaps in the current literature, this research addresses the following questions:

- RQ1: How do responsible AI technology principles affect healthcare practitioners’ attitudes, satisfaction, and usage intentions?
- RQ2: Does healthcare practitioner engagement mediate the effects of responsible AI technology principles on their attitudes, satisfaction, and usage intentions?
- RQ3: How does techno-overload, as a techno-stressor, moderate the engagement-mediated effects of responsible AI principles on attitudes, satisfaction, and usage intentions with AI technology?

2 Theoretical Background and Research Model

2.1 Current Research on AI in Healthcare

The healthcare sector has been among the most promising areas of AI adoption in recent years (Sun & Medaglia, 2019; Yang et al., 2012). AI’s transformative potential in the sector stems from the design and training of powerful computational models that perform a wide range of functions on aggregated data (Keane & Topol, 2018). Examples include case classification, risk estimation (Topol, 2019), patient administration (Reddy et al., 2019), automated diagnosis support (Abràmoff et al., 2018), disease prediction, and chronic condition management (Zhou et al., 2016). AI initiatives are increasingly evident in clinical applications; for instance, hospitals in Hampshire, England have adopted algorithm-based disease surveillance systems to reduce virus outbreaks (Mitchell et al., 2016). The use of AI technology has greatly improved accuracy, productivity, and workflow in healthcare systems, and these benefits support more efficient and targeted use of healthcare resources, enhancing healthcare services overall (Taddeo & Floridi, 2018; Topol, 2019).

Despite the striking usefulness and value of AI technology in the healthcare sector (e.g., Haleem et al., 2019; Hamet & Tremblay, 2017), many researchers still examine its use mainly from patients’ perspectives (e.g., Fadhil & Gabrielli, 2017; Nadarzynski et al., 2019, 2020; Tran et al., 2019; Yang et al., 2015) and disregard the perspective of healthcare practitioners. To illustrate, Nadarzynski et al. (2020) examine patients’ acceptance of medical chatbots, and Fadhil and Gabrielli (2017) investigate how such chatbots can deliver behaviour change interventions to patients. Studies of AI wearable devices (Tran et al., 2019) and of satisfaction with online physician services (Yang et al., 2015) are further examples.

Despite the promise of AI in healthcare, many obstacles remain to motivating practitioners to engage with the technology (Topol, 2019). Research has found that practitioners may become distracted and refuse to use AI, even while feeling that it is incumbent upon them to ensure the appropriate use of AI-enabled systems in healthcare delivery (Fan et al., 2018; Shinners et al., 2020). Performance expectations of AI implementation can also influence practitioners’ behavioural outcomes toward AI (Fan et al., 2018). Thus, to realise the growing potential of AI across healthcare organisations, it is imperative to understand the factors that affect healthcare practitioners’ engagement with AI technology.

2.2 Ethical Considerations of AI in Healthcare

AI implementation in healthcare has raised public awareness and caused considerable controversy, particularly concerning its ethical implications (e.g., Vakkuri et al., 2020). Discussion of ethical concerns arising from the unintended impacts of AI in healthcare has been substantial (Morley et al., 2020; Peters et al., 2020) and has recently extended to the theoretical constructs and principles of AI (Jobin et al., 2019). In response to these concerns, organisations in different industries around the globe have documented explicit ethical and rights-based frameworks to guide and govern AI implementations (Floridi et al., 2018; Jobin et al., 2019).

In practice, however, most healthcare organisations struggle to collect the data needed to design appropriate algorithms for local patients or to generate consistent practice patterns because of weak data infrastructure. Such weakness can lead to inconsistent algorithmic performance and inaccurate diagnoses (Panch et al., 2019; Wang et al., 2019). Given that health practitioners often work in sensitive and high-risk contexts (Peters et al., 2020), caution in implementation is crucial for practitioners to mitigate potential ethical breaches and ensure accuracy in AI use.

While AI principles exert normative constraints on the responsible use of the technology, existing research points out that such principles remain highly theoretical: they are conceptual frameworks that still lack empirical evidence on whether they affect ethical decision-making (Greene et al., 2019; Vakkuri et al., 2020). Moreover, Rothenberger et al. (2019) highlight the importance of identifying who is responsible for the consequences of AI. These questions have steered enquiry towards moving AI principles incrementally closer to ensuring that the technology is ethical and socially beneficial, and towards promoting responsible AI development in practice (Arrieta et al., 2020).

Responsible AI is a newly coined term that has already generated considerable attention within and beyond academia (Dignum, 2019; Peters et al., 2020). Dignum (2019) provides an overview of AI as a concept and emphasizes the need to use it responsibly to meet the expectations of key stakeholders and society while harnessing its power to augment humanity and business effectively. Arrieta et al. (2020) consider responsible AI a paradigmatic imposition on the AI principles and a viable prospect for addressing the growing ethical and societal concerns raised by large-scale AI implementation processes. Although researchers such as Benjamins et al. (2019) propose an integrated approach, including defined principles, design training, a guidance checklist, and a governance process, for organisations to apply AI responsibly at scale, more attention is needed to collective practical experience. This highlights the need for further empirical studies that support or encourage the progression of responsible AI from design to practice (Morley et al., 2020), particularly in the healthcare sector.

Given the ongoing theoretical discussion of AI ethics and the paucity of practical evidence, this research aims to offer deeper insight into how responsible AI principles in healthcare may trigger practitioners’ behavioural intentions and shape their attitudes. A review by Floridi et al. (2018) suggests that the key principles incorporated by many AI initiatives are consistent with four classic bioethical principles: beneficence, non-maleficence, autonomy, and justice. This framework, established in medicine, has great potential to address the ethical challenges of AI implementation in healthcare. With new subcategories of patients, external agencies, and complex technical and clinical environments, it could easily be applied to digital environments (Floridi, 2013). Hence, building on the AI principles of Floridi et al. (2018) and adding ‘explainability’ as a further enabling principle, this research aims to understand the effects of five key responsible AI principles (autonomy, beneficence, explainability, justice, and non-maleficence) on healthcare practitioners’ engagement with AI technology.

2.3 Research Model

The theoretical basis for the proposed research model is the signal-mechanism-consequence (SMC) theory (Li & Wu, 2018; Pavlou & Dimoka, 2006). SMC theory is rooted in two theoretical views. First, the diffusion of innovation theory developed by Rogers (1962) posits that the adoption of any innovation can lead to consequences that are either beneficial or harmful. It has been frequently employed in the technology adoption literature, where scholars examine specific influences on information technology (IT) uptake and its impacts on behavioural intentions (e.g., Weerakkody et al., 2017). Second, signaling theory explains how two parties, such as a focal company and its stakeholders, or customers and suppliers, communicate through information processing (Connelly et al., 2011). Signaling theory has four core components: sender, receiver, signal, and signaling environment. A ‘signal’ is information deliberately communicated from one party to another (Connelly et al., 2011). Senders decide how to communicate the information they want receivers to know, while receivers interpret the information sent. Building on signaling theory, considerable research has examined how senders can convey signals effectively to target parties. For example, in the e-commerce environment, Pavlou and Dimoka (2006) show that positive signals such as credibility and benevolence conveyed by sellers can increase price premiums. A firm’s market value also increases when positive signals circulate among stakeholders, for instance through voluntary disclosures concerning information security (Gordon et al., 2010).

Drawing on SMC theory, our research explores the signals triggered by the adoption of responsible AI principles, which may enable healthcare practitioners to engage more with AI and enhance their positive attitudes and intentions toward it. Building on Peters et al. (2020), we define responsible AI as principles that are designed to (1) navigate AI agents responsibly to create economic value from healthcare service provision, (2) orchestrate both AI and healthcare professionals’ competencies ethically, to achieve positive clinical outcomes for various stakeholder groups, and (3) leverage AI to achieve fairness, social inclusion, and sustainability in healthcare.

Signals in the healthcare context are actions that convey information to healthcare professionals about planned actions for the responsible implementation of AI-enabled healthcare systems. Leslie (2019) argues that mechanisms can be explanatory by linking signals with the pathways leading to various consequences. Such a mechanism may be found in the application of responsible AI principles, which healthcare practitioners can reasonably be expected to interpret as signals of what a responsible healthcare system looks like. This may generate more positive intentions, attitudes, and behaviours toward AI among practitioners.

Implementing responsible AI in line with these principles can generate positive outcomes. Peters et al. (2020) demonstrate, for example, that AI-enabled healthcare systems developed with the principle of autonomy increase patients’ intention to engage with the systems as well as practitioners’ ability to make decisions. However, prior literature lacks discussion of the underlying mechanism that explains how responsible AI signals may produce the desired consequences, namely improved attitudes toward AI, satisfaction with AI, and AI usage intentions, from the practitioner’s perspective.

We propose that employee engagement is a linking mechanism between responsible AI signals and outcomes. Employee engagement has been extensively studied in IS contexts because it is considered an important factor influencing IT adoption. In particular, implementing AI requires organisations to engage their staff as a means of overcoming internal resistance (Brock & von Wangenheim, 2019). Therefore, we explore how different responsible AI signals affect attitudes, intentions, and satisfaction toward AI through the mechanism of employee engagement. We further posit that healthcare practitioners who are highly engaged with AI will tend to interpret responsible AI as a positive signal and will therefore feel more satisfied with it and embrace it more fully. Figure 1 presents an overview of our research framework, and Appendix A provides an overview of theoretical constructs.

3 Hypothesis Development

3.1 Autonomy

According to self-determination theory (Ryan & Deci, 2017), autonomy is universally essential to humans’ experience of willingness and volition (Chen et al., 2015; Martela & Ryan, 2016). With AI, the principle of autonomy does not imply the autonomy of AI per se. Rather, it refers to the autonomy of human beings, which should be promoted rather than impaired by autonomous systems (Floridi et al., 2018). Autonomous AI systems have the potential to augment labour in productive processes and transform jobs to increase capabilities, but they may also reduce human autonomy in the workplace (Calvo et al., 2020). The ethical concerns around such advances have already raised awareness of the responsible and beneficial use of AI technology (Dignum, 2017), so it is essential to strike a balance between AI autonomy and human-retained decision-making power in order to preserve human autonomy and avoid ceding excessive control from humans to AI algorithms (Floridi et al., 2018). Studies in a similar vein, including Gebauer et al. (2008) and Weinstein and Ryan (2010), suggest that the satisfaction derived from human autonomy and the intrinsic value of human choice in important healthcare decisions can contribute to healthcare practitioners’ psychological well-being. Calvo et al. (2020) assert that, in designing responsible AI for human autonomy, it is critical to understand individuals’ motivations to use AI. Practitioners who experience positive psychological states while using AI-enabled systems are likely to have positive attitudes toward, and satisfaction with, the technology. Thus, the following hypothesis is proposed:

Hypothesis 1 (H1)

Autonomy of AI is positively related to healthcare practitioners’ (a) attitudes toward AI, (b) satisfaction with AI, and (c) AI usage intentions.

3.2 Beneficence

Beneficence refers to the subjective sense of being able to exert positive pro-social impacts on others voluntarily, with resulting wellness-relevant outcomes for oneself (Martela & Ryan, 2016). Regarding the use of AI in healthcare, beneficence, as a classic ethical principle, highlights the importance of ensuring, at the very least, that patients and practitioners are protected by and benefit from actions powered by AI technology (Reddy et al., 2020). As such, AI should be designed and deployed in ways that respect and preserve the dignity of patients and practitioners, promote benefits to humanity and the common good, and ensure sustainability (Floridi et al., 2018). Healthcare practitioners in particular are obliged to operate by a moral imperative of doing good for patients and society. Indeed, pro-social behaviours are associated with psychological well-being, and the enhanced satisfaction derived from beneficence creates a ‘virtuous cycle’ wherein past benevolent intentions cultivate future ones (Martela & Ryan, 2016). We can reasonably expect that healthcare practitioners will behave positively if they are satisfied with the beneficence of AI systems, and thus propose the following hypothesis:

Hypothesis 2 (H2)

Beneficence of AI is positively related to healthcare practitioners’ (a) attitudes toward AI, (b) satisfaction with AI, and (c) AI usage intentions.

3.3 Explainability

AI explainability, also known as technological transparency (Haesevoets et al., 2019), emphasizes the need to translate system actions, processes, and outputs into intelligible information and to communicate this regularly and accessibly, allowing individuals to interpret complex automated decisions (Jobin et al., 2019). When AI systems are implemented in clinical settings, the use of patients’ information, the impact on their care or treatment, and the reasoning behind an AI diagnosis should all be clearly discussed with patients in advance (Currie et al., 2020). Access to sufficient detail or algorithmic reasoning may also increase the diagnostic accuracy of healthcare practitioners using such systems (Miller, 2019; Rai, 2020). However, in some studies exploring how explanations affect users’ perceptions of and interactions with AI systems, judgments of what counts as a ‘good’ explanation of algorithmic decisions were based mostly on researchers’ intuition (Miller, 2019). Further, explanations are sometimes required by end users who, in certain contexts, lack the technical knowledge to interpret them (Liao et al., 2020). For instance, an initial machine diagnosis often presents the most likely symptoms of a certain disease as a descriptive list without further analysis, which may confuse some healthcare practitioners and result in poor decisions (Ribeiro et al., 2016). Whether healthcare practitioners are satisfied with the performance of AI systems, especially in terms of explainability, therefore remains uncertain and warrants further investigation. Thus, the following hypothesis is proposed:

Hypothesis 3 (H3)

Explainability of AI is positively related to healthcare practitioners’ (a) attitudes toward AI, (b) satisfaction with AI, and (c) AI usage intentions.

3.4 Justice

The principle of justice refers to the obligation to distribute benefits equitably among individuals (Newman et al., 2020). According to justice theory, employees’ perceptions of fairness in personnel procedures are positively associated with the overall fairness of organisational decision-making (Lind, 2001). Organisations have long associated the decision process with justice, particularly from the perspective of employees (Colquitt et al., 2001), who tend to agree with decisions if they are consistent, are based on accurate data, comply with rules, and are less influenced by individual bias (Colquitt & Zipay, 2015). Similarly, when AI systems make decisions in the healthcare sector, algorithms are expected to treat all patients fairly, equitably, and proportionately, and to distribute medical goods and services without bias, discrimination, or harm (Schönberger, 2019). Although Newman et al. (2020) postulate that algorithm-driven personnel decisions are considered less fair than identical human-made decisions in certain contexts, responsible AI may still overcome human bias, increasing distributive and procedural justice and bringing substantial opportunities for organisations through more accurate information (Aral et al., 2012). The principle of justice should therefore positively influence practitioners’ behavioural outcomes, and we propose the following hypothesis:

Hypothesis 4 (H4)

Justice of AI is positively related to healthcare practitioners’ (a) attitudes toward AI, (b) satisfaction with AI, and (c) AI usage intentions.

3.5 Non-maleficence

Non-maleficence refers to the obligation not to inflict harm intentionally on others (Floridi et al., 2018). Consideration of non-maleficence in AI technology focuses on avoiding any potential harm to individuals or intentional misuse of personal information (Jobin et al., 2019), along with assuring robust and secure algorithmic decisions (Morley et al., 2020). In the healthcare sector, AI solutions should be geared towards averting any patient harm or privacy breach and towards assuring positive outcomes for treatment and care (Currie et al., 2020). According to Roca et al. (2009), individuals’ perceptions of technological security influence their trust in and behavioural intentions towards such technology. As patient information is highly sensitive, non-maleficence of AI is specifically concerned with individual privacy and security, personal safety, and consistency in how AI systems perform ethically based on pre-defined principles (Floridi et al., 2018). We therefore posit that satisfaction with perceived technological security related to the non-maleficence of AI in the healthcare sector will generate positive behavioural intentions among healthcare practitioners, and we propose the following:

Hypothesis 5 (H5)

Non-maleficence of AI is positively related to healthcare practitioners’ (a) attitudes toward AI, (b) satisfaction with AI, and (c) AI usage intentions.

3.6 The Role of Employee Engagement

Employee engagement is viewed as personal engagement. In particular, Kahn (1990, p. 694) defines it as the “harnessing of organisation members’ selves to their work roles; in engagement, people employ and express themselves physically, cognitively, or emotionally during role performances”. When an organisation undergoes technical changes, such as adopting an innovation or new technology, employees’ acceptance of or resistance to these changes depends on their level of engagement (Braganza et al., 2020; Brock & von Wangenheim, 2019). Brock and von Wangenheim (2019) contend that involving highly engaged employees is key to successful AI implementation. In the healthcare context, we define employee engagement with AI as the degree to which practitioners are passionate about AI implementation within their healthcare systems. Employees’ level of engagement depends on the benefits they enjoy from organisational resources (Saks, 2006), which include information (or signals) pertaining to their tasks so that they know what is expected of them and how to succeed (Harter et al., 2002). Through effective internal communication and training, responsible AI principles can provide guidance on how to operate and exploit AI systems fully, responsibly, and ethically. The knowledge gained through this engagement process should give employees greater clarity about their AI usage.

The significant effects of responsible AI signals on employee engagement can be explained in terms of social exchange within the organisation: interactions between employees and the organisation are established and maintained as a balance between giving and receiving (Cropanzano & Mitchell, 2005). In particular, employees may reciprocate with better job performance, such as higher engagement, when the use of responsible AI systems is guaranteed (Masterson et al., 2000). For example, Alder Hey Children’s Hospital, one of the largest children’s hospitals in Europe, has developed an AI-enabled app called Alder Play (Alder Hey Children’s Charity, 2017), which enables healthcare practitioners to access the medical records of patients eligible for British NHS treatment. This could considerably improve autonomy in clinical processes, thereby enhancing the quality of health services and strengthening patient engagement. Allowing autonomy in data usage and providing meaningful, personalized explanations of AI benefits are expected to reduce uncertainty, thereby improving healthcare practitioners’ satisfaction with AI and encouraging its incorporation into their roles (Rai, 2020; Ramaswamy et al., 2018).

Moreover, Karatepe (2013) posits that employee engagement is a motivational factor that explains the relationship between work practices and performance. He suggests that employees are most likely to be in an engaged state of mind when they recognize organisational efforts to improve their welfare. In the healthcare context, for example, the principle of AI system non-maleficence may assure employees that any potential negative outcomes from information management will be avoided (Jobin et al., 2019). As such, healthcare practitioners may be more willing to use AI-enabled systems when their organisations offer a secure AI solution committed to non-maleficence. When an organisation makes considerable efforts to improve practitioners’ engagement through the responsible implementation of AI-enabled healthcare systems, practitioners are likely to be more engaged in their work, leading to better behavioural outcomes toward AI usage. Therefore, we propose the following hypotheses:

Hypothesis 6 (H6)

Responsible AI signals (autonomy, beneficence, explainability, justice, and non-maleficence) are positively related to employee engagement.

Hypothesis 7 (H7)

Employee engagement mediates the relationship between responsible AI signals (autonomy, beneficence, explainability, justice, and non-maleficence) and behavioural consequences (attitudes toward AI, satisfaction with AI, and AI usage intentions).
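H7 is a mediation hypothesis. One common way to quantify such an indirect effect is the product-of-coefficients approach: regress engagement (M) on a responsible AI signal (X) to obtain path a, regress an outcome (Y) on both X and M to obtain path b and the direct effect c′, and take a × b as the indirect effect. The Python sketch below assumes linear models estimated by ordinary least squares and uses synthetic, noise-free data; the function names are hypothetical, and it is not the estimation procedure used in this study, which this section does not describe.

```python
"""Product-of-coefficients sketch of simple mediation (X -> M -> Y).

Hypothetical illustration only: variable names and data are synthetic,
not the study's measures or estimates.
"""


def ols(columns, y):
    """Ordinary least squares via the normal equations (X'X) beta = X'y.

    `columns` is a list of predictor columns; an intercept is prepended.
    Returns [intercept, coef_1, ..., coef_k].
    """
    n = len(y)
    design = [[1.0] + [float(col[i]) for col in columns] for i in range(n)]
    k = len(design[0])
    # Augmented matrix [X'X | X'y].
    aug = [
        [sum(design[r][i] * design[r][j] for r in range(n)) for j in range(k)]
        + [sum(design[r][i] * y[r] for r in range(n))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(k):
            if r != col:
                factor = aug[r][col]
                aug[r] = [u - factor * v for u, v in zip(aug[r], aug[col])]
    return [row[-1] for row in aug]


def indirect_effect(x, m, y):
    """Return (a, b, c_prime, a*b) for M = a0 + a*X and Y = b0 + c'*X + b*M."""
    _, a = ols([x], m)
    _, c_prime, b = ols([x, m], y)
    return a, b, c_prime, a * b


# Synthetic data: M = 1 + 2X + e, where e is orthogonal to [1, X],
# and Y = 0.5 + 0.3X + 1.5M exactly (no noise).
x = [0, 1, 2, 3, 4, 5]
e = [1, -1, -1, 1, 0, 0]
m = [1 + 2 * xi + ei for xi, ei in zip(x, e)]
y = [0.5 + 0.3 * xi + 1.5 * mi for xi, mi in zip(x, m)]

a, b, c_prime, ab = indirect_effect(x, m, y)
# Recovers a = 2, b = 1.5, c' = 0.3, so ab = 3 (up to floating-point error).
print(round(a, 6), round(b, 6), round(c_prime, 6), round(ab, 6))
```

Because the synthetic data contain no noise, the sketch recovers the generating values exactly. With real survey data, the indirect effect a × b is usually tested with bootstrapped confidence intervals rather than read off directly; that step is omitted here.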

3.7 The Moderating Role of Techno-overload

Not everyone experiences technology in the same way. Yin et al. (2018) report that some employees experience higher levels of stress than others when overwhelmed by technology, despite its aim to boost work-stream effectiveness. ‘Technostress’ is a term coined to describe negative psychological states such as anxiety, strain, or a sense of ineffectiveness when using new technologies (Salanova et al., 2013) and this is often related to a sense of overload (Cao & Sun, 2018; Wang & Li, 2019). AI technology may force employees such as healthcare practitioners to work longer and faster (Krishnan, 2017), which contributes to job stress (Folkman et al., 1986), predicts job burnout, impedes performance (Wu et al., 2019), and reduces productivity (Tarafdar et al., 2007).

We propose that unpleasant affective states associated with techno-overload impair the subjective quality of the responsible AI signal of justice. ‘AI justice’ is defined as healthcare practitioners’ subjective assessment of AI’s ability to eliminate discrimination, promote equitably shared benefits, and prevent new threats (Floridi et al., 2018; Floridi & Cowls, 2019). Justice (and, by extension, injustice) is an emotionally laden subjective experience (Barsky & Kaplan, 2007). Negative emotions are believed to change how people perceive and respond to their surroundings; Folkman and Lazarus (1986) contend that individuals who are depressed or who experience emotions such as sustained frustration or disappointment often employ a hostile ‘confrontative coping strategy’. This is consistent with Alloy and Abramson (1979), whose findings suggest that depressed individuals tend to perceive their environment as more threatening than their non-depressed counterparts do. In a similar vein, the affect-as-information model suggests that people rely on affect as a heuristic, substituting it for objective criteria when making justice judgments (van den Bos, 2003); accordingly, negative affective states are often associated with subjective judgments of injustice or unfairness (Barsky & Kaplan, 2007; Lang et al., 2011; van den Bos, 2003). Given this negative impact of unpleasant emotional and physiological states on subjective justice assessments, negative emotions triggered by techno-overload should impede healthcare practitioners’ ability to make rational judgments about their use of AI technology. It is therefore relevant to explore whether techno-overload undermines the effect of AI justice on engagement. Hence, the following hypothesis is proposed:

Hypothesis 8 (H8)

Techno-overload moderates the relationship between AI justice and employee engagement, such that high techno-overload weakens the effect of AI justice on employee engagement.

The above analysis outlines a framework in which employee engagement mediates the relationship between AI justice and behavioural and attitudinal outcomes, and techno-overload moderates the relationship between AI justice and engagement. Given that techno-overload may weaken the effect of AI justice on engagement, and that engagement may be positively associated with behavioural and attitudinal outcomes, it is logical to suggest a moderated mediation effect (Edwards & Lambert, 2007), whereby techno-overload also moderates the strength of the mediating mechanism of engagement in the relationship between AI justice and these outcomes. As argued above, lower techno-overload should allow a stronger relationship between AI justice and engagement. Consequently, the indirect effect of AI justice on the AI consequences (attitudes toward AI, satisfaction with AI, and AI usage intentions) may also be stronger when techno-overload is low. Our final hypothesis is the following:

Hypothesis 9 (H9)

Techno-overload moderates the mediating effect of engagement on the relationship between AI justice and behavioural and attitudinal outcomes, such that techno-overload weakens the indirect effect of AI justice on healthcare practitioners’ (a) attitudes towards AI, (b) satisfaction with AI, and (c) AI usage intentions, via engagement.
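H8 and H9 can be written compactly as a first-stage moderated mediation model in the sense of Edwards and Lambert (2007). The block below is a sketch under our own notation and a linearity assumption, not necessarily the exact specification estimated in the study: J denotes AI justice, T techno-overload (assumed mean-centred), E engagement, and Y any one of the three outcomes.

```latex
\begin{aligned}
E &= a_0 + a_1 J + a_2 T + a_3 \,(J \times T) + \varepsilon_E \\
Y &= b_0 + c' J + b_1 E + \varepsilon_Y \\
\omega(T) &= (a_1 + a_3 T)\, b_1 \qquad \text{(conditional indirect effect of } J \text{ on } Y\text{)} \\
\frac{\partial \omega(T)}{\partial T} &= a_3 b_1 \qquad \text{(index of moderated mediation)}
\end{aligned}
```

In this notation, H8 corresponds to a_3 < 0, and H9 to a negative index a_3 b_1 when engagement is positively related to the outcome (b_1 > 0), so that the indirect effect ω(T) shrinks as techno-overload increases.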

4 Method

4.1 Measures

Measurement items (Table 1) were adapted from previous studies: beneficence (Martela & Ryan, 2016), autonomy (Chen et al., 2015), justice (Newman et al., 2020), attitude (Lau-Gesk, 2003), satisfaction with AI (McLean & Osei-Frimpong, 2019), and usage intentions (Moons & De Pelsmacker, 2012). The technological security scale was adapted from Carlos Roca et al. (2009) as a proxy to measure non-maleficence, and technological transparency was used as a proxy for explainability (Haesevoets et al., 2019). The measurement items for techno-overload were adopted from Tarafdar et al. (2007) and Krishnan (2017). All items were presented in random order and measured on a seven-point Likert-type scale ranging from ‘strongly disagree’ to ‘strongly agree’.

In addition to adapting all the measurement items from the existing literature, we validated them by consulting AI experts and healthcare practitioners (Vogt et al., 2004). The original questionnaire was written in English and translated into Chinese by an English-to-Chinese translator and a bilingual doctoral student. The Chinese version was then back-translated into English, a process that identifies and minimizes any loss of meaning (Anderson & Brislin, 1976; Gong et al., 2020). Once the translations were satisfactory, we invited a panel of two AI experts and two healthcare practitioners from a Chinese hospital who had used AI in their workplace to review all the measurement items. Table 1 lists the items used in the research.

4.2 Data Collection

All participants were healthcare practitioners working in the West China Hospital or other Grade A tertiary hospitals in China. Because AI technology has not yet been widely adopted in the healthcare industry, AI-related provisions are primarily available in tertiary hospitals classified as Grade A (see Appendix B for hospital classification) (Chinese Innovative Alliance of Industry, Education, Research and Application of Artificial Intelligence for Medical, 2019). The West China Hospital belongs to this category; with 4,300 ward beds, it is China’s largest and the world’s second-largest hospital, and it is among the top research hospitals in China. Its AI implementation has achieved both domestic and international recognition (West China Hospital, 2020; Zhou et al., 2020; Cancer Research UK, 2020; Novuseeds Medtech, 2018). For these reasons, we only selected healthcare practitioners working in this and other Grade A tertiary hospitals.

To recruit these participants, we created an advertisement targeting those with previous experience of AI in healthcare. We then posted our survey invitation, asking for voluntary participation, in three active WeChat (a Chinese social media platform) workplace chat groups of the West China Hospital (1,252 healthcare practitioners in total). We recruited healthcare practitioners this way because WeChat is the largest mobile instant text and voice messaging service in China, with about 1,206 million monthly active users (Tencent, 2020), and many Chinese organisations, including the West China Hospital, use WeChat’s group chat function as an important communication channel (Deng, 2020). We also approached healthcare practitioners working in other Grade A tertiary hospitals via Sojump, a paid online sampling service for survey research in China that can reach up to 500 healthcare practitioners working in Grade A tertiary hospitals.

As an introduction, all participants were given a short summary of AI applications used in the medical field (see Appendix C) and exemplar AI applications (Chinese Innovative Alliance of Industry, Education, Research and Application of Artificial Intelligence for Medical, 2019) prior to their participation. To ensure data quality, we only retained responses from healthcare practitioners who had used AI technology in their workplace. In addition to the survey invitation and consent form, two screening questions were used to filter out ineligible respondents with no experience of AI: “Have you used AI technology in your workplace before?” and “How much time (hours) on average do you use AI technology per week?” We also added two open questions asking for the name and region of the respondent’s hospital to ensure that it matched the classification. Finally, we checked the IP addresses of all responses to detect any duplicate submissions.

As a result, we collected 413 responses: 213 from the West China Hospital and 200 from Sojump. Nine responses failed the screening questions and were removed from further analysis, leaving 404 valid responses. The mean age of the participants was 31 years (SD = 5.23); 220 were female (54.46 %) and 184 were male (45.54 %). The demographic breakdown is shown in Table 2.

5 Results

5.1 Assessment of Measurement Model

The data were analyzed using partial least squares structural equation modeling (PLS-SEM) (Hair et al., 2016). We used SmartPLS 3 to test the hypothesized research model with a bootstrap resampling procedure in which 5000 subsamples were randomly generated (Hair et al., 2016), and we followed the bootstrapping method of Hayes (2017) to test the mediating effects. We first assessed the reliability, convergent validity, and discriminant validity of all research constructs. Reliability was assessed using internal consistency and indicator loadings (Hair et al., 2011). Composite reliability (CR) was used to measure internal consistency, with CR values above 0.7 considered satisfactory (Hair et al., 2016). Factor loadings higher than 0.7 are satisfactory (Hair et al., 2011), and loadings higher than 0.6 are still considered acceptable (Bagozzi & Yi, 1988).
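The reliability checks above rest on two simple quantities. The sketch below, which assumes standardized outer loadings and uses invented loadings rather than the study's estimates, computes composite reliability with the usual formula and labels each loading with the 0.7 and 0.6 cut-offs cited in the text. The function names are hypothetical.

```python
from typing import Sequence


def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability from standardized outer loadings.

    CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    where the error variance of a standardized indicator is 1 - loading^2.
    """
    total = sum(loadings)
    error_variance = sum(1.0 - lam * lam for lam in loadings)
    return total ** 2 / (total ** 2 + error_variance)


def screen_loading(loading: float) -> str:
    """Label a loading with the cut-offs cited in the text."""
    if loading > 0.7:
        return "satisfactory"  # Hair et al. (2011)
    if loading > 0.6:
        return "acceptable"  # Bagozzi & Yi (1988)
    return "below cited cut-offs"


if __name__ == "__main__":
    # Hypothetical loadings for one construct (not the study's estimates).
    loadings = [0.82, 0.78, 0.74, 0.66]
    cr = composite_reliability(loadings)
    verdict = "meets" if cr > 0.7 else "fails"
    print(f"CR = {cr:.3f} ({verdict} the 0.7 threshold)")
    for i, lam in enumerate(loadings, start=1):
        print(f"item {i}: loading {lam:.2f} -> {screen_loading(lam)}")
```

With these invented loadings the construct reaches CR ≈ 0.838, while the fourth item is only "acceptable", which illustrates how a scale can be reliable overall even when one indicator falls between the two cut-offs.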

Validity is assessed through convergent validity and discriminant validity. The average variance extracted (AVE) of each construct exceeds 0.5, which indicates satisfactory convergent validity (Fornell & Larcker, 1981). Under the Fornell-Larcker criterion, discriminant validity is established when the square root of each construct’s AVE exceeds its correlation with any other construct (Hair et al., 2016). Tables 3 and 4 demonstrate that both convergent validity and discriminant validity were established.
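As a minimal sketch of the two validity checks, assuming standardized loadings and a table of latent-variable correlations, the code below computes each construct's AVE and applies the Fornell-Larcker comparison of the square root of AVE against that construct's correlations. All construct loadings and correlations here are hypothetical, not the values in Tables 3 and 4.

```python
import math
from typing import Dict, Sequence, Tuple


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE = mean of the squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def fornell_larcker(
    aves: Dict[str, float], correlations: Dict[Tuple[str, str], float]
) -> Dict[str, bool]:
    """True for a construct when sqrt(AVE) exceeds its absolute correlation
    with every other construct (Fornell & Larcker, 1981)."""
    result = {}
    for construct, ave_value in aves.items():
        root = math.sqrt(ave_value)
        others = [abs(r) for pair, r in correlations.items() if construct in pair]
        result[construct] = all(root > r for r in others)
    return result


if __name__ == "__main__":
    # Hypothetical loadings and latent correlations, for illustration only.
    loadings = {
        "justice": [0.80, 0.75, 0.85],
        "engagement": [0.82, 0.78, 0.74, 0.66],
        "satisfaction": [0.88, 0.84, 0.79],
    }
    aves = {name: average_variance_extracted(ls) for name, ls in loadings.items()}
    correlations = {
        ("justice", "engagement"): 0.55,
        ("justice", "satisfaction"): 0.48,
        ("engagement", "satisfaction"): 0.62,
    }
    for name, value in aves.items():
        print(f"{name}: AVE = {value:.3f} (convergent validity: {value > 0.5})")
    print(fornell_larcker(aves, correlations))
```

Comparing the square root of AVE with the raw correlation is equivalent to comparing AVE with the squared correlation; mixing the two (square root of AVE against a squared correlation) would make the test far too lenient.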

5.2 Structural Model and Hypotheses Testing

The structural model was assessed using standardized path coefficients (β) and their significance levels, including t-statistics and p-values, as well as the explained variance (R²) of the endogenous constructs (Becker et al., 2013). We also evaluated effect sizes by means of Cohen’s f² (Cohen, 2013), predictive relevance by means of Q² values (Chin, 1998), and the global fit of the model by means of the standardized root mean square residual (SRMR) (Henseler et al., 2014).
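Cohen's f² expresses how much explained variance an endogenous construct loses when one predictor is dropped. The sketch below implements the standard formula and Cohen's conventional benchmarks (0.02 small, 0.15 medium, 0.35 large); the R² values are invented for illustration and are not taken from the study's tables.

```python
def cohens_f2(r2_included: float, r2_excluded: float) -> float:
    """Effect size of one predictor: the drop in R^2 when it is removed,
    scaled by the variance the full model leaves unexplained."""
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def label_f2(f2: float) -> str:
    """Cohen's conventional benchmarks: 0.02 small, 0.15 medium, 0.35 large."""
    if f2 >= 0.35:
        return "large"
    if f2 >= 0.15:
        return "medium"
    if f2 >= 0.02:
        return "small"
    return "negligible"


if __name__ == "__main__":
    # Hypothetical R^2 for engagement with and without one responsible AI signal.
    f2 = cohens_f2(r2_included=0.40, r2_excluded=0.30)
    print(f"f2 = {f2:.3f} ({label_f2(f2)})")
```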

First, according to Chin (1998), R² values of 0.67, 0.33, and 0.19 are described as substantial, moderate, and weak, respectively. Table 5 shows that our model accounted for 56 % of the variance in employee engagement, a level between the moderate and substantial benchmarks. The model also explained 47.3 % of the variance in AI usage intentions, 46.3 % of the variance in satisfaction with AI, and 19.0 % of the variance in attitudes towards AI. Second, we used the blindfolding technique in PLS to assess the predictive relevance of the path model. The Q² values for all endogenous constructs were above zero (see Table 6); hence, the model showed good predictive relevance (Chin, 1998). Third, we evaluated the global fit of the model using the SRMR (Henseler et al., 2014), which captures the discrepancy between the empirical indicator variance–covariance matrix and its model-implied counterpart. The resulting SRMR value of 0.062 (see Table 7) is below the threshold of 0.08 (Benitez et al., 2020), indicating that our model provides a sufficient fit with the empirical data. Therefore, our proposed research model is well suited to confirming and explaining the effects of responsible AI signals on healthcare practitioners’ attitudes towards, satisfaction with, and usage intentions with AI technology.
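The R² benchmarks and the SRMR can both be expressed in a few lines. The sketch below labels the R² values reported above using Chin's (1998) cut-offs and computes an SRMR as the root mean square of the lower-triangle residuals between an observed and a model-implied correlation matrix. The 3 × 3 matrices are hypothetical, and SmartPLS's own SRMR computation may differ in detail from this simplified version.

```python
import math
from typing import List

Matrix = List[List[float]]


def label_r2(r2: float) -> str:
    """Benchmarks from Chin (1998): 0.67 substantial, 0.33 moderate, 0.19 weak."""
    if r2 >= 0.67:
        return "substantial"
    if r2 >= 0.33:
        return "moderate"
    if r2 >= 0.19:
        return "weak"
    return "below weak"


def srmr(observed: Matrix, implied: Matrix) -> float:
    """Root mean square of the residuals between two correlation matrices,
    taken over the p(p + 1) / 2 non-redundant (lower-triangle) elements."""
    p = len(observed)
    residuals = [
        observed[i][j] - implied[i][j] for i in range(p) for j in range(i + 1)
    ]
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


if __name__ == "__main__":
    for name, r2 in [("engagement", 0.56), ("usage intentions", 0.473),
                     ("satisfaction", 0.463), ("attitudes", 0.190)]:
        print(f"{name}: R2 = {r2:.3f} -> {label_r2(r2)}")
    # Tiny hypothetical 3-indicator example, not the study's matrices.
    observed = [[1.0, 0.50, 0.40], [0.50, 1.0, 0.30], [0.40, 0.30, 1.0]]
    implied = [[1.0, 0.45, 0.40], [0.45, 1.0, 0.36], [0.40, 0.36, 1.0]]
    value = srmr(observed, implied)
    print(f"SRMR = {value:.3f}; below 0.08: {value < 0.08}")
```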

5.3 Mediating Effects of Engagement

Mediation analysis assesses whether the effects of the independent variables on the dependent variables are direct or indirect via the mediator. We hypothesised that employee engagement mediates the effects of responsible AI signals (namely autonomy, beneficence, non-maleficence, justice, and explainability) on satisfaction with AI, attitudes toward AI, and AI usage intentions in healthcare practice. We used bootstrap resampling (5000 samples) with bias-corrected 95 % confidence intervals to calculate the significance of the hypothesized paths in the structural model. For the mediation analysis, we first examined the significance of the direct effect without the mediator and then tested the significance of the indirect effect via the mediator. If the direct effect without the mediator is insignificant but the indirect effect is significant, full mediation is supported; if both the direct effect without the mediator and the indirect effect are significant, partial mediation is supported (Zhao et al., 2010). Table 5 shows the effects of responsible AI signals on engagement, and Table 6 reports the direct effects of AI signals on attitude, satisfaction, and usage intentions without the mediator and with engagement as the mediator, as well as the indirect effects via the mediator.

These results support H1(c), H2(a), H2(c), H3(b), H4(b), H5(b), H6, and H7. Responsible AI signals are positively related to employee engagement, and engagement mediates the relationships between responsible AI signals and healthcare practitioners’ attitudes, satisfaction, and usage intentions with AI technology.
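The study ran its bootstrap inside SmartPLS. To illustrate the general logic outside that tool, the sketch below assumes a single predictor X, a single mediator M, and a single outcome Y estimated by ordinary least squares rather than a PLS path model. It resamples respondents with replacement, recomputes the indirect effect a × b each time, and forms Efron's bias-corrected percentile interval. The simulated data, function names, and coefficients are all hypothetical, and `mediation_type` simply encodes the decision rule as stated in the text.

```python
import random
from statistics import NormalDist, fmean
from typing import List, Sequence, Tuple


def ols_slope(x: Sequence[float], y: Sequence[float]) -> float:
    """Slope of y regressed on x (path a when y is the mediator)."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def mediator_coefficient(x, m, y) -> float:
    """Coefficient of m in y ~ x + m (path b), via the centred normal equations."""
    mx, mm, my = fmean(x), fmean(m), fmean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    smm = sum((b - mm) ** 2 for b in m)
    sxm = sum((a - mx) * (b - mm) for a, b in zip(x, m))
    sxy = sum((a - mx) * (c - my) for a, c in zip(x, y))
    smy = sum((b - mm) * (c - my) for b, c in zip(m, y))
    return (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)


def indirect_effect(x, m, y) -> float:
    return ols_slope(x, m) * mediator_coefficient(x, m, y)


def bc_bootstrap_ci(x, m, y, n_boot=5000, alpha=0.05, seed=1) -> Tuple[float, float, float]:
    """Indirect effect a*b with a bias-corrected percentile bootstrap interval."""
    rng = random.Random(seed)
    n = len(x)
    estimate = indirect_effect(x, m, y)
    boots: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample respondents
        boots.append(indirect_effect([x[i] for i in idx], [m[i] for i in idx],
                                     [y[i] for i in idx]))
    boots.sort()
    nd = NormalDist()
    # Bias correction z0: where the original estimate sits in the bootstrap distribution.
    share_below = sum(b < estimate for b in boots) / n_boot
    share_below = min(max(share_below, 1 / n_boot), 1 - 1 / n_boot)  # keep inv_cdf finite
    z0 = nd.inv_cdf(share_below)
    p_lo = nd.cdf(2 * z0 + nd.inv_cdf(alpha / 2))
    p_hi = nd.cdf(2 * z0 + nd.inv_cdf(1 - alpha / 2))
    return estimate, boots[int(p_lo * (n_boot - 1))], boots[int(p_hi * (n_boot - 1))]


def mediation_type(direct_significant: bool, indirect_significant: bool) -> str:
    """Decision rule as described in the text (after Zhao et al., 2010)."""
    if not indirect_significant:
        return "no mediation"
    return "partial" if direct_significant else "full"


def simulate(n: int, seed: int):
    """Hypothetical data: x -> m (a = 0.5), m -> y (b = 0.4), direct x -> y = 0.1."""
    rng = random.Random(seed)
    x = [rng.gauss(0, 1) for _ in range(n)]
    m = [0.5 * xi + rng.gauss(0, 1) for xi in x]
    y = [0.4 * mi + 0.1 * xi + rng.gauss(0, 1) for xi, mi in zip(x, m)]
    return x, m, y


if __name__ == "__main__":
    x, m, y = simulate(n=300, seed=42)
    est, lo, hi = bc_bootstrap_ci(x, m, y, n_boot=2000)  # the study used 5000
    significant = lo > 0 or hi < 0  # interval excludes zero
    print(f"indirect = {est:.3f}, 95% BC CI [{lo:.3f}, {hi:.3f}], significant: {significant}")
```

The bias correction shifts the percentile cut-offs when the bootstrap distribution of a × b is skewed, which is typical for products of coefficients; with z0 = 0 it reduces to the plain percentile interval.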

We further conducted a moderated mediation analysis of our research model. As depicted in Fig. 1, we examined whether techno-overload moderates the mediating effect of employee engagement on the relationship between AI justice and behavioural and attitudinal outcomes. As shown in Table 8, techno-overload moderates the effect of AI justice on engagement (β = -0.174, p < 0.05); thus, H8 is supported. Table 9 shows significant moderated mediation effects of AI justice, with engagement as the mediator and techno-overload as the moderator, on satisfaction (β = -0.062, p < 0.05) and usage intentions (β = -0.091, p < 0.05), and a marginally significant effect on attitudes toward AI (β = -0.050, p < 0.10). Therefore, H9 is supported. The results of hypothesis testing are summarised in Table 10.
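The text does not detail how the moderated mediation effects in Table 9 were computed. As a hedged illustration of the standard pick-a-point logic for a first-stage model, the sketch below evaluates the indirect effect of AI justice at low (-1 SD), mean, and high (+1 SD) techno-overload, assuming a mean-centred moderator, together with the index of moderated mediation a3 × b1. The coefficient values are invented and are not the estimates reported in Tables 8 and 9.

```python
from typing import Dict


def conditional_indirect(a1: float, a3: float, b1: float, w: float) -> float:
    """First-stage moderated mediation: the X -> M path equals a1 + a3 * w."""
    return (a1 + a3 * w) * b1


def index_of_moderated_mediation(a3: float, b1: float) -> float:
    """Change in the indirect effect per one-unit increase in the moderator."""
    return a3 * b1


def pick_a_point(a1: float, a3: float, b1: float, sd_w: float = 1.0) -> Dict[str, float]:
    """Indirect effect at low (-1 SD), mean (0) and high (+1 SD) moderator values,
    assuming the moderator is mean-centred."""
    return {label: conditional_indirect(a1, a3, b1, k * sd_w)
            for label, k in (("low", -1.0), ("mean", 0.0), ("high", 1.0))}


if __name__ == "__main__":
    # Hypothetical coefficients: justice -> engagement (a1), justice x overload (a3),
    # engagement -> satisfaction (b1). Not the study's estimates.
    a1, a3, b1 = 0.30, -0.15, 0.50
    for label, effect in pick_a_point(a1, a3, b1).items():
        print(f"techno-overload {label}: indirect effect = {effect:.3f}")
    print(f"index of moderated mediation = {index_of_moderated_mediation(a3, b1):.3f}")
```

With these invented values the indirect effect falls from 0.225 at low techno-overload to 0.075 at high techno-overload, and the difference between the two equals twice the index, which is the pattern H9 predicts.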

Finally, assessing multigroup (or between-group) differences is important for a more nuanced understanding of technology usage behaviours (Qureshi & Compeau, 2009). To explore such differences, we split the sample into groups based on respondents’ educational background, gender, healthcare work experience, frequency of AI usage, average AI usage time per week, and job title. The multigroup analysis was run to learn whether healthcare professionals’ characteristics and AI usage experience moderate the effects of responsible AI signals on employees’ responses toward AI. The results in Appendix D show that the difference between the explainability-to-satisfaction coefficients of the two job title groups was significant at the 0.05 level. We also found that the differences in the autonomy-to-satisfaction and beneficence-to-satisfaction coefficients between the high and low AI usage time groups were significant.
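The multigroup procedure itself is not described here. To illustrate the underlying idea, the sketch below swaps in a simple permutation test on one standardized bivariate path (for example, explainability to satisfaction) between two groups: respondents are repeatedly reassigned to groups at random, and the observed gap in the path is compared with the gaps produced under this null of no group difference. A full PLS multigroup analysis would re-estimate the whole path model in each permutation, which this simplified version does not do; the groups, slopes, and data are hypothetical.

```python
import math
import random
from statistics import fmean
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]  # (predictor score, outcome score) for one respondent


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Standardized slope of a bivariate regression, which equals Pearson's r."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def path_gap(group_a: Sequence[Pair], group_b: Sequence[Pair]) -> float:
    """Absolute difference in the standardized path between two groups."""
    xa, ya = zip(*group_a)
    xb, yb = zip(*group_b)
    return abs(pearson_r(xa, ya) - pearson_r(xb, yb))


def permutation_test(group_a: Sequence[Pair], group_b: Sequence[Pair],
                     n_perm: int = 2000, seed: int = 7) -> Tuple[float, float]:
    """Observed gap and two-sided permutation p-value for H0: equal paths."""
    rng = random.Random(seed)
    observed = path_gap(group_a, group_b)
    pooled: List[Pair] = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # randomly reassign respondents to the two groups
        if path_gap(pooled[:n_a], pooled[n_a:]) >= observed:
            extreme += 1
    return observed, (extreme + 1) / (n_perm + 1)


def make_group(rng: random.Random, n: int, slope: float) -> List[Pair]:
    """Hypothetical data: outcome = slope * predictor + standard normal noise."""
    pairs = []
    for _ in range(n):
        x = rng.gauss(0, 1)
        pairs.append((x, slope * x + rng.gauss(0, 1)))
    return pairs


if __name__ == "__main__":
    rng = random.Random(3)
    group_a = make_group(rng, 60, slope=1.0)  # e.g. one job-title group
    group_b = make_group(rng, 60, slope=0.0)  # e.g. the other job-title group
    gap, p = permutation_test(group_a, group_b)
    print(f"|r_a - r_b| = {gap:.3f}, permutation p = {p:.4f}")
```

Adding one to both the count of extreme permutations and the number of permutations keeps the p-value strictly positive, a common convention for Monte Carlo permutation tests.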

6 Discussion

Responsible AI signals help us understand healthcare practitioners’ responses toward AI technology. However, despite recognition of these signals, there has been scant research empirically examining their effects on attitudes toward, satisfaction with, and usage intentions with AI technology. Our research model draws on signal-mechanism-consequence (SMC) theory (Li & Wu, 2018; Pavlou & Dimoka, 2006) and examines how autonomy, beneficence, explainability, justice, and non-maleficence serve as signals that steer healthcare practitioners’ decisions to engage with workplace AI technology and achieve desirable outcomes, namely favourable attitudes, high satisfaction, and clear usage intentions with AI technology.

The results demonstrate that autonomy has a significant positive effect on healthcare practitioners’ usage intentions with AI technology; beneficence is positively related to healthcare practitioners’ usage intentions with AI technology and their attitudes toward AI; and explainability, justice, and non-maleficence contribute to satisfaction with AI technology in the workplace. Most importantly, the significant effects of these five responsible AI signals (autonomy, beneficence, explainability, justice, and non-maleficence) on attitudes, satisfaction, and usage intentions are transmitted through healthcare practitioner engagement. Furthermore, our findings reveal that techno-overload significantly moderates the mediating effect of employee engagement for AI justice. When healthcare practitioners perceive AI technology as adding extra workload, the importance of AI justice is undermined, which in turn affects practitioners’ attitudes, satisfaction, and usage intentions.

6.1 Theoretical Contributions

Our research makes several important theoretical contributions. Principally, we contribute to the responsible AI literature by empirically documenting the effects of the five key responsible AI principles. Consistent with Pavlou and Dimoka (2006) and Gordon et al. (2010), our results indicate that positive signals from the technology can generate desirable outcomes in the healthcare industry. The findings confirm the benefits of the five principles and suggest that healthcare practitioners take autonomy, beneficence, explainability, justice, and non-maleficence in relation to AI technology as positive signals that induce their engagement.

Interestingly, among all five signals, beneficence has the strongest positive effect on engagement with AI. Practitioners may perceive the use of AI as a means to demonstrate their ability to deliver positive, pro-social impacts to patients. Most importantly, AI technology should inherently improve well-being, to the betterment of human beings and society in general (Martela & Ryan, 2016). Beyond the functional benefits of AI technology, healthcare practitioners tend to engage with it when they perceive that it can preserve the dignity of both patients and practitioners (Floridi et al., 2018). Further, extending Newman et al. (2020) and Rai (2020), our findings support the important roles of justice and explainability, which exert the strongest effects on healthcare practitioner satisfaction with AI technology. When healthcare practitioners perceive that the technology can help them avoid human bias and increase the accuracy of the information provided to patients, they are more likely to be satisfied. Similarly, healthcare practitioners tend to value technological transparency: their access to sufficient detail and to the algorithm’s reasoning also seems to determine their satisfaction levels.

Our research also contributes to signal-mechanism-consequence (SMC) theory (Li & Wu, 2018; Pavlou & Dimoka, 2006). Our results affirm that the five principles serve as responsible AI signals on which healthcare practitioners rely to decide whether to engage with AI technology. Engagement, in turn, is one of the key drivers of usage intentions, favourable attitudes, and satisfaction with AI. This is consistent with Brock and Von Wangenheim (2019), who note that employee engagement may determine the success of hospital AI implementations. Consistent with Harter et al. (2002), autonomy, beneficence, explainability, justice, and non-maleficence tend to reflect the nature of healthcare practitioners’ workplace activity and align with their view of what they need to do to perform well in the workplace. The five responsible AI signals can provide sufficient guidance on how to operate AI systems responsibly and ethically, and this guidance can be disseminated through effective internal communication and training. Commitment to such programs and an understanding of the technology will result in greater engagement and higher levels of satisfaction and usage.

Finally, techno-overload is an important techno-stressor that undermines the relationships between AI justice and satisfaction, attitudes, and usage intentions. In particular, it weakens the positive relationship between justice and healthcare practitioner engagement with AI technology. Extending Tarafdar et al. (2007), when healthcare practitioners perceive the demands of their role to exceed their capacity in terms of work quantity or difficulty, their engagement tends to decrease and the importance of the AI justice signal is undermined. Despite the benefits of using AI technology, techno-overload is an affective event that may result in negative feelings such as stress, exhaustion, anxiety, or upset (Cao & Sun, 2018).

6.2 Implications for Practice

AI technology has the potential to transform the healthcare industry, with powerful computational models that assist healthcare practitioners in job tasks involving complex data aggregation (Keane & Topol, 2018). However, its adoption in this industry is still in its infancy. This research has been driven by a long-overdue need to understand AI technology adoption from the perspective of healthcare practitioners rather than patients (Yang et al., 2015; Tran et al., 2019; Nadarzynski et al., 2019, 2020). Understanding the five key responsible AI signals may bring societal benefits and generate hope among practitioners. Hospitals should build responsible AI systems by emphasizing autonomy, beneficence, and non-maleficence and, perhaps more importantly, draw healthcare practitioners’ attention to AI explainability and justice. This might be done through internal communications or appropriate training aimed at eliminating practitioners’ reticence about, or fear of, AI technology.

Accordingly, hospital managers and AI developers should ensure that algorithms are free from the potential for discrimination and enable practitioners to eliminate treatment disparities, which will improve their engagement. Practitioners may need to be instructed on how AI can protect patient identities, and their inductions may include demonstrations of how it assists rather than impairs their decision-making ability and power. At the technical level, AI developers should make all mechanisms as transparent as possible, to hospital management as well as to healthcare practitioners. At the administrative level, hospital management should encourage honest and open information sharing among users and patients. Only when practitioners fully understand the ethical landscape behind the development of AI technology will they be able to communicate clearly with patients and properly exploit AI technology to improve the patient experience. A useful starting point is simply to understand the five key responsible AI principles as a foundation on which all stakeholders can build consensus around the benefits of this technology in the healthcare sector.

7 Conclusion, Limitations, and Future Research Directions

Our research shows that healthcare practitioners’ attitudes toward AI, satisfaction with AI, and usage intentions with AI technology are affected by the five key responsible AI signals: autonomy, beneficence, explainability, justice, and non-maleficence. These effects are mediated by healthcare practitioners’ engagement with the technology. Moreover, this research reveals the pivotal role of techno-overload in undermining the effect of the AI justice signal on engagement.

This research has some limitations that might offer useful future research directions. First, this study examines the perspectives of healthcare practitioners who have used AI technology in Grade A tertiary hospitals in China. As this technology gradually transforms the healthcare industry, hospitals in rural areas may also be planning to implement it. Hence, future research could explore whether healthcare practitioners in rural areas hold a different perspective in defining responsible AI attributes. Second, the data in this study are cross-sectional; future research may therefore adopt longitudinal approaches to examine whether healthcare practitioner engagement varies over time as practitioners experience, for example, technology fatigue or staged assimilation and familiarization. Finally, this research has only explored techno-overload as a moderator of the relationships between AI justice and attitudes, satisfaction, and usage intentions. Future research may explore other potential moderators that either undermine or enhance the effects of responsible AI attributes on healthcare practitioners’ attitudes, satisfaction levels, and usage intentions.

References

Abràmoff, M. D., Lavin, P. T., Birch, M., Shah, N., & Folk, J. C. (2018). Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digital Medicine, 1(1), 1–8.

Alder Hey Children’s Charity. (2017). Download our brilliant new app now. Alder Hey Charity. Retrieved November 24, 2020, from https://www.alderheycharity.org/news/latest-news/the-alder-play-app-has-launched/.

Alloy, L. B., & Abramson, L. Y. (1979). Judgment of contingency in depressed and nondepressed students: sadder but wiser? Journal of Experimental Psychology: General, 108(4), 441–485. https://doi.org/10.1037/0096-3445.108.4.441.

Anderson, M., & Anderson, S. L. (2007). Machine ethics: Creating an ethical intelligent agent. AI Magazine, 28(4), 15.

Anderson, R. B. W., & Brislin, R. W. (1976). Translation: applications and research. Gardner Press.

Aral, S., Brynjolfsson, E., & Wu, L. (2012). Three-way complementarities: Performance pay, human resource analytics, and information technology. Management Science, 58(5), 913–931.

Arrieta, A. B., Díaz-Rodríguez, N., Del Ser, J., Bennetot, A., Tabik, S., Barbado, A., et al. (2020). Explainable artificial intelligence (XAI): Concepts, taxonomies, opportunities and challenges toward responsible AI. Information Fusion, 58, 82–115.

Bagozzi, R. P., & Yi, Y. (1988). On the evaluation of structural equation models. Journal of the Academy of Marketing Science, 16(1), 74–94. https://doi.org/10.1007/BF02723327.

Barsky, A., & Kaplan, S. A. (2007). If you feel bad, it’s unfair: a quantitative synthesis of affect and organizational justice perceptions. Journal of Applied Psychology, 92(1), 286–295. https://doi.org/10.1037/0021-9010.92.1.286.

Becker, J.-M., Rai, A., & Rigdon, E. (2013). Predictive validity and formative measurement in structural equation modeling: Embracing practical relevance. In International Conference on Information Systems (ICIS 2013): Reshaping Society Through Information Systems Design, 4, 3088–3106.

Benitez, J., Henseler, J., Castillo, A., & Schuberth, F. (2020). How to perform and report an impactful analysis using partial least squares: Guidelines for confirmatory and explanatory IS research. Information & Management, 57(2), 103168. https://doi.org/10.1016/j.im.2019.05.003.

Benjamins, R., Barbado, A., & Sierra, D. (2019). Responsible AI by design. In Proceedings of the Human-Centered AI: Trustworthiness of AI Models and Data (HAI) track at AAAI Fall Symposium, Washington DC.

Braganza, A., Chen, W., Canhoto, A., & Sap, S. (2020). Productive employment and decent work: The impact of AI adoption on psychological contracts, job engagement and employee trust. Journal of Business Research. Advance online publication. https://doi.org/10.1016/j.jbusres.2020.08.018.

Brock, J. K. U., & Von Wangenheim, F. (2019). Demystifying AI: What digital transformation leaders can teach you about realistic artificial intelligence. California Management Review, 61(4), 110–134.

Calvo, R. A., Peters, D., Vold, K., Ryan, R. M., Burr, C., & Floridi, L. (2020). Supporting human autonomy in AI systems: A framework for ethical enquiry. In C. Burr & L. Floridi (Eds.), Ethics of Digital Well-Being: A Multidisciplinary Approach (pp. 31–54). Cham: Springer. https://doi.org/10.1007/978-3-030-50585-1_2.

Cancer Research UK. (2020). Sichuan University (West China Hospital)-Oxford University Gastrointestinal Cancer Centre. Retrieved January 15, 2021, from https://www.cancercentre.ox.ac.uk/research/consortia/sichuan-university-west-china-hospital-oxford-university-gastrointestinal-cancer-centre/.

Cao, X., & Sun, J. (2018). Exploring the effect of overload on the discontinuous intention of social media users: An S-O-R perspective. Computers in Human Behavior, 81, 10–18. https://doi.org/10.1016/j.chb.2017.11.035.

Carlos Roca, J., José García, J., & José de la Vega, J. (2009). The importance of perceived trust, security and privacy in online trading systems. Information Management & Computer Security, 17(2), 96–113. https://doi.org/10.1108/09685220910963983.

Chen, B., Vansteenkiste, M., Beyers, W., Boone, L., Deci, E. L., Van der Kaap-Deeder, J., et al. (2015). Basic psychological need satisfaction, need frustration, and need strength across four cultures. Motivation and Emotion, 39(2), 216–236.

Chin, W. W. (1998). The partial least squares approach to structural equation modeling. In G. A. Marcoulides (Ed.), Modern Methods for Business Research (pp. 295–336). Lawrence Erlbaum Associates.

Chinese Innovative Alliance of Industry, Education, Research and Application of Artificial Intelligence for Medical. (2019). Releasing of the white paper on medical imaging artificial intelligence in China. Chinese Medical Sciences Journal, 34(2), 89–89. https://doi.org/10.24920/003620.

Cohen, J. (2013). Statistical Power Analysis for the Behavioral Sciences. Academic Press.

Colquitt, J. A., Conlon, D. E., Wesson, M. J., Porter, C. O., & Ng, K. Y. (2001). Justice at the millennium: A meta-analytic review of 25 years of organizational justice research. Journal of Applied Psychology, 86(3), 425–445.

Colquitt, J. A., & Zipay, K. P. (2015). Justice, fairness, and employee reactions. Annual Review of Organizational Psychology and Organizational Behavior, 2(1), 75–99.

Conlon, D. E., Porter, C. O., & Parks, J. M. (2004). The fairness of decision rules. Journal of Management, 30(3), 329–349.

Connelly, B. L., Certo, S. T., Ireland, R. D., & Reutzel, C. R. (2011). Signaling theory: A review and assessment. Journal of Management, 37(1), 39–67.

Cropanzano, R., & Mitchell, M. S. (2005). Social exchange theory: An interdisciplinary review. Journal of Management, 31(6), 874–900.

Currie, G., Hawk, K. E., & Rohren, E. M. (2020). Ethical principles for the application of artificial intelligence (AI) in nuclear medicine. European Journal of Nuclear Medicine and Molecular Imaging, 47, 748–752.

Davenport, T., & Kalakota, R. (2019). The potential for artificial intelligence in healthcare. Future Healthcare Journal, 6(2), 94.

Deng, M. P. (2020). Be aware of the risks when using WeChat work group chat in healthcare sector. Retrieved February 2, 2021, from http://med.china.com.cn/content/pid/201946/tid/1026.

Dignum, V. (2017). Responsible autonomy. In Proceedings of the 26th International Joint Conference on Artificial Intelligence (pp. 4698–4704): ACM Digital Library. https://doi.org/10.5555/3171837.3171945.

Dignum, V. (2019). Responsible artificial intelligence: How to develop and use AI in a responsible Way. In B. O’Sullivan & M. Wooldridge (Eds.), Artificial Intelligence: Foundations, Theory, and Algorithms. Springer Nature.

Edwards, J. R., & Lambert, L. S. (2007). Methods for integrating moderation and mediation: a general analytical framework using moderated path analysis. Psychological Methods, 12(1), 1–22. https://doi.org/10.1037/1082-989X.12.1.1.

Fadhil, A., & Gabrielli, S. (2017). Addressing challenges in promoting healthy lifestyles: the AI-chatbot approach. In Proceedings of the 11th EAI international conference on pervasive computing technologies for healthcare (pp. 261–265).

Fan, W., Liu, J., Zhu, S., & Pardalos, P. M. (2018). Investigating the impacting factors for the healthcare professionals to adopt artificial intelligence-based medical diagnosis support system (AIMDSS). Annals of Operations Research, 1–26.

Floridi, L. (2013). The ethics of information. Oxford University Press.

Floridi, L., & Cowls, J. (2019). A unified framework of five principles for AI in society. Harvard Data Science Review. https://doi.org/10.1162/99608f92.8cd550d1.

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., et al. (2018). AI4People—an ethical framework for a good AI society: opportunities, risks, principles, and recommendations. Minds and Machines, 28(4), 689–707.

Folkman, S., & Lazarus, R. S. (1986). Stress-processes and depressive symptomatology. Journal of Abnormal Psychology, 95(2), 107–113.

Folkman, S., Lazarus, R. S., Dunkel-Schetter, C., DeLongis, A., & Gruen, R. J. (1986). Dynamics of a stressful encounter: cognitive appraisal, coping, and encounter outcomes. Journal of Personality and Social Psychology, 50(5), 992–1003. https://doi.org/10.1037/0022-3514.50.5.992.

Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50. https://doi.org/10.2307/3151312.

Gebauer, J. E., Riketta, M., Broemer, P., & Maio, G. R. (2008). Pleasure and pressure based prosocial motivation: Divergent relations to subjective well-being. Journal of Research in Personality, 42(2), 399–420.

Gong, X., Lee, M. K. O., Liu, Z., & Zheng, X. (2020). Examining the role of tie strength in users’ continuance intention of second-generation mobile instant messaging services. Information Systems Frontiers, 22(1), 149–170. https://doi.org/10.1007/s10796-018-9852-9.

Gordon, L. A., Loeb, M. P., & Sohail, T. (2010). Market value of voluntary disclosures concerning information security. MIS Quarterly, 567–594.

Greene, D., Hoffmann, A. L., & Stark, L. (2019, January). Better, nicer, clearer, fairer: A critical assessment of the movement for ethical artificial intelligence and machine learning. In Proceedings of the 52nd Hawaii International Conference on System Sciences.

Haesevoets, T., De Cremer, D., De Schutter, L., McGuire, J., Yang, Y., Jian, X., & Van Hiel, A. (2019). Transparency and control in email communication: The more the supervisor is put in cc the less trust is felt. Journal of Business Ethics, 1–21.

Hair, J. F., Hult, G. T. M., Ringle, C., & Sarstedt, M. (2016). A primer on partial least squares structural equation modeling (PLS-SEM). SAGE Publications.

Hair, J. F., Ringle, C. M., & Sarstedt, M. (2011). PLS-SEM: Indeed a silver bullet. Journal of Marketing Theory and Practice, 19(2), 139–152. https://doi.org/10.2753/MTP1069-6679190202.

Haleem, A., Javaid, M., & Khan, I. H. (2019). Current status and applications of artificial intelligence (AI) in medical field: An overview. Current Medicine Research and Practice, 9(6), 231–237.

Hamet, P., & Tremblay, J. (2017). Artificial intelligence in medicine. Metabolism, 69, S36–S40.

Harter, J. K., Schmidt, F. L., & Hayes, T. L. (2002). Business-unit-level relationship between employee satisfaction, employee engagement, and business outcomes: a meta-analysis. Journal of Applied Psychology, 87(2), 268–279.

Hayes, A. F. (2017). Introduction to mediation, moderation, and conditional process analysis, second edition: A regression based approach. Guilford Publications.

Henseler, J., Dijkstra, T. K., Sarstedt, M., Ringle, C. M., Diamantopoulos, A., Straub, D. W., et al. (2014). Common beliefs and reality about PLS: Comments on Rönkkö and Evermann (2013). Organizational Research Methods, 17(2), 182–209. https://doi.org/10.1177/1094428114526928.

Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389–399.

Kahn, W. A. (1990). Psychological conditions of personal engagement and disengagement at work. Academy of Management Journal, 33(4), 692–724.

Kang, H., Hahn, M., Fortin, D. R., Hyun, Y. J., & Eom, Y. (2006). Effects of perceived behavioral control on the consumer usage intention of e-coupons. Psychology & Marketing, 23(10), 841–864.

Karatepe, O. M. (2013). High-performance work practices and hotel employee performance: The mediation of work engagement. International Journal of Hospitality Management, 32, 132–140.

Keane, P. A., & Topol, E. J. (2018). With an eye to AI and autonomous diagnosis. NPJ Digital Medicine, 1(40). https://doi.org/10.1038/s41746-018-0048-y.

Krishnan, S. (2017). Personality and espoused cultural differences in technostress creators. Computers in Human Behavior, 66, 154–167. https://doi.org/10.1016/j.chb.2016.09.039.

Lang, J., Bliese, P. D., Lang, J. W. B., & Adler, A. B. (2011). Work gets unfair for the depressed: Cross-lagged relations between organizational justice perceptions and depressive symptoms. Journal of Applied Psychology, 96(3), 602–618. https://doi.org/10.1037/a0022463.

Lau-Gesk, L. G. (2003). Activating culture through persuasion appeals: An examination of the bicultural consumer. Journal of Consumer Psychology, 13(3), 301–315.

Leslie, L. M. (2019). Diversity initiative effectiveness: A typological theory of unintended consequences. Academy of Management Review, 44(3), 538–563.

Li, X., & Wu, L. (2018). Herding and social media word-of-mouth: evidence from groupon. MIS Quarterly, 42(4), 1331–1351.

Liao, Q. V., Gruen, D., & Miller, S. (2020). Questioning the AI: Informing Design Practices for Explainable AI User Experiences. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–15).

Lind, E. A. (2001). Fairness heuristic theory: Justice judgments as pivotal cognitions in organizational relations. In J. Greenberg & R. Cropanzano (Eds.), Advances in Organizational Justice (pp. 56–88). Stanford University Press.

Martela, F., & Ryan, R. M. (2016). The benefits of benevolence: Basic psychological needs, beneficence, and the enhancement of well-being. Journal of Personality, 84(6), 750–764.

Masterson, S. S., Lewis, K., Goldman, B. M., & Taylor, M. S. (2000). Integrating justice and social exchange: The differing effects of fair procedures and treatment on work relationships. Academy of Management Journal, 43(4), 738–748.

McLean, G., & Osei-Frimpong, K. (2019). Chat now… Examining the variables influencing the use of online live chat. Technological Forecasting and Social Change, 146, 55–67. https://doi.org/10.1016/j.techfore.2019.05.017.

Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1–38.

Mitchell, C., Meredith, P., Richardson, M., Greengross, P., & Smith, G. B. (2016). Reducing the number and impact of outbreaks of nosocomial viral gastroenteritis: time-series analysis of a multidimensional quality improvement initiative. BMJ Quality & Safety, 25(6), 466–474.

Moons, I., & De Pelsmacker, P. (2012). Emotions as determinants of electric car usage intention. Journal of Marketing Management, 28(3–4), 195–237. https://doi.org/10.1080/0267257X.2012.659007.

Morley, J., Floridi, L., Kinsey, L., & Elhalal, A. (2020). From what to how: an initial review of publicly available AI ethics tools, methods and research to translate principles into practices. Science and Engineering Ethics, 26(4), 2141–2168.

Nadarzynski, T., Bayley, J., Llewellyn, C., Kidsley, S., & Graham, C. A. (2020). Acceptability of artificial intelligence (AI)-enabled chatbots, video consultations and live webchats as online platforms for sexual health advice. BMJ Sexual & Reproductive Health. https://doi.org/10.1136/bmjsrh-2018-200271. Advance online publication.

Nadarzynski, T., Miles, O., Cowie, A., & Ridge, D. (2019). Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digital Health, 5, 1–12.

Newman, D. T., Fast, N. J., & Harmon, D. J. (2020). When eliminating bias isn’t fair: Algorithmic reductionism and procedural justice in human resource decisions. Organizational Behavior and Human Decision Processes, 160, 149–167.

Nikkei Asia (2020). China takes lead in AI-based diagnostic imaging equipment. Retrieved March 20, 2021, from https://asia.nikkei.com/Business/Startups/China-takes-lead-in-AI-based-diagnostic-imaging-equipment.

Novuseeds, M. (2018, May 24). West China Hospital established a medical artificial intelligence research and development center. Novuseeds Medtech. Retrieved September 20, 2020, from http://www.seedsmed.com/news/3.html.

Panch, T., Mattie, H., & Celi, L. A. (2019). The “inconvenient truth” about AI in healthcare. NPJ Digital Medicine, 2(1), 1–3.

Pavlou, P. A., & Dimoka, A. (2006). The nature and role of feedback text comments in online marketplaces: Implications for trust building, price premiums, and seller differentiation. Information Systems Research, 17(4), 392–414.

Peters, D., Vold, K., Robinson, D., & Calvo, R. A. (2020). Responsible AI—two frameworks for ethical design practice. IEEE Transactions on Technology and Society, 1(1), 34–47.

Qureshi, I., & Compeau, D. (2009). Assessing between-group differences in information systems research: A comparison of covariance-and component-based SEM. MIS Quarterly, 33(1), 197–214.

Rai, A. (2020). Explainable AI: From black box to glass box. Journal of the Academy of Marketing Science, 48(1), 137–141.

Ramaswamy, P., Jeude, J., & Smith, J. A. (2018, September). Making AI responsible and effective. Retrieved November 24, 2020, from https://www.cognizant.com/whitepapers/making-ai-responsible-and-effective-codex3916.pdf.

Reddy, S., Allan, S., Coghlan, S., & Cooper, P. (2020). A governance model for the application of AI in health care. Journal of the American Medical Informatics Association, 27(3), 491–497.

Reddy, S., Fox, J., & Purohit, M. P. (2019). Artificial intelligence-enabled healthcare delivery. Journal of the Royal Society of Medicine, 112(1), 22–28.

Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “Why should I trust you?” Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining (pp. 1135–1144).

Roca, J. C., García, J. J., & De La Vega, J. J. (2009). The importance of perceived trust, security and privacy in online trading systems. Information Management & Computer Security, 17(2), 96–113.

Rogers, E. M. (1962). Diffusion of innovations. Free Press of Glencoe.

Rothenberger, L., Fabian, B., & Arunov, E. (2019). Relevance of ethical guidelines for artificial intelligence - a survey and evaluation. In Proceedings of the 27th European Conference on Information Systems (ECIS).

Russell, S. J., & Norvig, P. (2016). Artificial intelligence: a modern approach. Pearson Education Limited.

Ryan, R. M., & Deci, E. L. (2017). Self-determination theory: Basic psychological needs in motivation, development, and wellness. Guilford Publications.

Saks, A. M. (2006). Antecedents and consequences of employee engagement. Journal of Managerial Psychology, 21(7), 600–619.

Salanova, M., Llorens, S., & Cifre, E. (2013). The dark side of technologies: Technostress among users of information and communication technologies. International Journal of Psychology, 48(3), 422–436.

Schönberger, D. (2019). Artificial intelligence in healthcare: a critical analysis of the legal and ethical implications. International Journal of Law and Information Technology, 27(2), 171–203.

Scott, J. E., & Walczak, S. (2009). Cognitive engagement with a multimedia ERP training tool: Assessing computer self-efficacy and technology acceptance. Information & Management, 46(4), 221–232.

Shinners, L., Aggar, C., Grace, S., & Smith, S. (2020). Exploring healthcare professionals’ understanding and experiences of artificial intelligence technology use in the delivery of healthcare: An integrative review. Health Informatics Journal, 26(2), 1225–1236.

Singh, S. (2020, February 21). Retrieved March 18, 2021, from https://www.marketsandmarkets.com/PressReleases/artificial-intelligence.asp.

Sun, T. Q., & Medaglia, R. (2019). Mapping the challenges of artificial intelligence in the public sector: Evidence from public healthcare. Government Information Quarterly, 36(2), 368–383.

Taddeo, M., & Floridi, L. (2018). How AI can be a force for good. Science, 361(6404), 751–752.

Tarafdar, M., Tu, Q., Ragu-Nathan, B. S., & Ragu-Nathan, T. S. (2007). The impact of technostress on role stress and productivity. Journal of Management Information Systems, 24(1), 301–328. https://doi.org/10.2753/MIS0742-1222240109.

Tencent (2020). Tencent 2020 Interim Report. Retrieved January 30, 2021, from https://cdc-tencent.com1258344706.image.myqcloud.com/uploads/2020/08/26/c798476aba9e18d44d9179e103a2e07f.pdf.

Topol, E. J. (2019). High-performance medicine: the convergence of human and artificial intelligence. Nature Medicine, 25(1), 44–56.

Tran, V. T., Riveros, C., & Ravaud, P. (2019). Patients’ views of wearable devices and AI in healthcare: Findings from the ComPaRe e-cohort. NPJ Digital Medicine, 2(1), 1–8.

Vakkuri, V., Kemell, K. K., Kultanen, J., & Abrahamsson, P. (2020). The current state of industrial practice in artificial intelligence ethics. IEEE Software, 50–57.

van den Bos, K. (2003). On the subjective quality of social justice: The role of affect as information in the psychology of justice judgments. Journal of Personality and Social Psychology, 85(3), 482–498. https://doi.org/10.1037/0022-3514.85.3.482.

Vogt, D. S., King, D. W., & King, L. A. (2004). Focus groups in psychological assessment: enhancing content validity by consulting members of the target population. Psychological Assessment, 16(3), 231–243. https://doi.org/10.1037/1040-3590.16.3.231.

Wang, H., & Li, Y. (2019). Role overload and Chinese nurses’ satisfaction with work-family balance: The role of negative emotions and core self-evaluations. Current Psychology, 1–11.

Wang, Y., Kung, L., Gupta, S., & Ozdemir, S. (2019). Leveraging big data analytics to improve quality of care in healthcare organizations: A configurational perspective. British Journal of Management, 30(2), 362–388.

Weerakkody, V., Irani, Z., Kapoor, K., Sivarajah, U., & Dwivedi, Y. K. (2017). Open data and its usability: an empirical view from the citizen’s perspective. Information Systems Frontiers, 19(2), 285–300.

Weinstein, N., & Ryan, R. M. (2010). When helping helps: autonomous motivation for prosocial behavior and its influence on well-being for the helper and recipient. Journal of Personality and Social Psychology, 98(2), 222–244.

West China Hospital (2020). West China School of Medicine: Overview. West China School of Medicine. Retrieved January 05, 2021, from http://www.wchscu.cn/details/50453.html.

Wu, G., Hu, Z., & Zheng, J. (2019). Role stress, job burnout, and job performance in construction project managers: the moderating role of career calling. International Journal of Environmental Research and Public Health, 16(13), 2394. https://doi.org/10.3390/ijerph16132394.

Yang, H., Guo, X., & Wu, T. (2015). Exploring the influence of the online physician service delivery process on patient satisfaction. Decision Support Systems, 78, 113–121.

Yang, Z., Ng, B.-Y., Kankanhalli, A., & Luen Yip, J. W. (2012). Workarounds in the use of IS in healthcare: A case study of an electronic medication administration system. International Journal of Human-Computer Studies, 70(1), 43–65.

Yin, P., Ou, C. X., Davison, R. M., & Wu, J. (2018). Coping with mobile technology overload in the workplace. Internet Research, 28(5), 1189–1212.

Zhao, X., Lynch, J. G., Jr., & Chen, Q. (2010). Reconsidering Baron and Kenny: Myths and truths about mediation analysis. Journal of Consumer Research, 37(2), 197–206. https://doi.org/10.1086/651257.

Zhou, S. M., Fernandez-Gutierrez, F., Kennedy, J., Cooksey, R., Atkinson, M., Denaxas, S., et al. (2016). Defining disease phenotypes in primary care electronic health records by a machine learning approach: a case study in identifying rheumatoid arthritis. PLoS One, 11(5), 1–14.

Zhou, Y., Xu, X., Song, L., Wang, C., Guo, J., Yi, Z., et al. (2020). The application of artificial intelligence and radiomics in lung cancer. Precision Clinical Medicine, 3(3), 214–227. https://doi.org/10.1093/pcmedi/pbaa028.