Abstract

Purpose

To determine whether Twitter improves the dissemination of ophthalmology scientific publications.

Methods

Data were collected on articles indexed in PubMed between 2016 and 2021 (inclusive) and identified with the word “ophthalmology”. Twitter performance metrics, including the number of tweets, number of likes, and number of retweets, were collected from Twitter using the publicly available scientific API. Machine learning and descriptive statistics were used to outline Twitter performance metrics.

Results

The number of included articles was 433,710. Of the articles with ≥1 tweet, 34.4% (437/1270) were in the top quartile for citation count. Conversely, of the articles with 0 tweets, 27.8% (12,023/43,244) were in the top quartile for citation count. When machine learning was used to predict Twitter performance metrics, the model achieved an AUROC of 0.78 and an accuracy of 0.97.

Conclusion

This study provides preliminary evidence that Twitter may improve the dissemination of scientific ophthalmology publications.


Dear Editor,

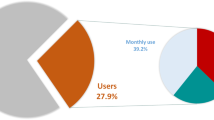

Ophthalmic practitioners, researchers and journals (e.g., @JAMAOphth, @AAOJournal) use Twitter to share and discuss peer-reviewed scientific ophthalmology publications [1,2,3,4]. Users can react to posts containing ophthalmic research with likes, comments, and retweets; a retweet “pushes” the original post to a new set of users. There is, however, limited evidence of the efficacy of Twitter for improving the citation count of scientific ophthalmology publications. In the present study, we utilise machine learning techniques to (1) characterise the performance metrics associated with Twitter usage in relation to scientific ophthalmology publications, and (2) examine the effectiveness of machine learning in the prediction of these Twitter performance metrics.

Data were collected on articles indexed in PubMed between 2016 and 2021 (inclusive) and identified with the word “ophthalmology”. Twitter performance metrics, including the number of tweets, number of likes, and number of retweets, were collected from Twitter using the publicly available scientific API. Tweets were included if they contained the term "pubmed.ncbi.nlm.nih.gov", and each tweet was linked to an individual article by its PubMed ID (PMID).
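The inclusion and linkage step described above can be sketched in Python. This is a minimal illustration, not the study's pipeline: it assumes each tweet is available as a dictionary with hypothetical keys `text`, `likes`, and `retweets`, and that PubMed links take the form `pubmed.ncbi.nlm.nih.gov/<PMID>`, so the PMID can be read from the digits after the domain. The function names and example PMIDs are hypothetical.

```python
import re

# Assumed link format: pubmed.ncbi.nlm.nih.gov/<PMID>, where the PMID is numeric.
PUBMED_LINK = re.compile(r"pubmed\.ncbi\.nlm\.nih\.gov/(\d+)")


def extract_pmids(text):
    """Return the set of PMIDs referenced by PubMed links in a tweet's text.

    Using a set means a tweet that links the same article twice counts once.
    """
    return set(PUBMED_LINK.findall(text))


def aggregate_metrics(tweets, article_pmids):
    """Count tweets and sum likes and retweets for each included article.

    tweets: iterable of dicts with hypothetical keys "text", "likes", "retweets".
    article_pmids: PMIDs (as strings) of the included ophthalmology articles.
    Articles with no matching tweet keep zero counts.
    """
    metrics = {pmid: {"tweets": 0, "likes": 0, "retweets": 0} for pmid in article_pmids}
    for tweet in tweets:
        for pmid in extract_pmids(tweet["text"]):
            if pmid in metrics:  # skip links to articles outside the cohort
                metrics[pmid]["tweets"] += 1
                metrics[pmid]["likes"] += tweet["likes"]
                metrics[pmid]["retweets"] += tweet["retweets"]
    return metrics


# Hypothetical example tweets and PMIDs.
tweets = [
    {"text": "New paper https://pubmed.ncbi.nlm.nih.gov/12345678/", "likes": 4, "retweets": 1},
    {"text": "Worth a read: pubmed.ncbi.nlm.nih.gov/12345678", "likes": 1, "retweets": 0},
]
metrics = aggregate_metrics(tweets, ["12345678", "87654321"])
print(metrics["12345678"])  # {'tweets': 2, 'likes': 5, 'retweets': 1}
```

Because articles without any matching tweet keep zero counts, tweeted and untweeted articles remain in one table and can be compared directly.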

Descriptive statistics were used to outline Twitter performance metrics. Wilcoxon signed rank tests (chosen because Shapiro–Wilk tests indicated that the data were not normally distributed) and chi-squared tests were used to compare article characteristics with respect to title length, abstract length, and number of authors. The data then underwent a pilot machine learning analysis to gauge whether a signal was present in the prediction of Twitter performance metrics. Namely, the text of each article (title, abstract, author list, journal ISSN, and MeSH terms) was pre-processed (capitalisation removal, negation detection, word stemming, stop-word removal, and conversion to a term frequency–inverse document frequency table), and the articles were then randomly split into training (75%) and testing (25%) datasets. An XGBoost model was then developed on the training dataset, prior to evaluation on the holdout test dataset, aiming to predict whether any given article would receive ≥ 1 tweet or no tweets. This model was chosen due to the imbalanced nature of the dataset. The primary outcome was the area under the receiver operating characteristic curve (AUROC). This study did not require institutional ethical approval due to the use of publicly available data.
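The text pre-processing steps listed above can be illustrated with a small, dependency-free Python sketch. The stop-word and negation lists, the `neg_` prefix convention, the order of the steps, and the crude suffix-stripping function (a stand-in for a proper stemmer such as Porter's) are all assumptions for illustration; a production pipeline would more likely rely on an established text-processing library.

```python
import math
import re
from collections import Counter

# Small illustrative lists (assumptions, not the study's actual lists).
NEGATORS = {"no", "not", "never", "without"}
STOP_WORDS = {"a", "an", "the", "of", "in", "and", "or", "to", "for",
              "with", "was", "were", "is", "on"}
PUNCTUATION = set(".,;:!?")
TOKEN = re.compile(r"[a-z0-9]+|[.,;:!?]")


def crude_stem(word):
    """Very rough suffix stripping; a stand-in for a real stemmer such as Porter's."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Lower-case, mark negated words, drop stop words, and stem."""
    tokens = []
    negating = False
    for tok in TOKEN.findall(text.lower()):
        if tok in PUNCTUATION:
            negating = False  # negation scope ends at punctuation
        elif tok in NEGATORS:
            negating = True   # following words are marked as negated
        elif tok not in STOP_WORDS:
            stem = crude_stem(tok)
            tokens.append("neg_" + stem if negating else stem)
    return tokens


def tfidf(documents):
    """Return one {term: weight} dict per document, weight = tf * log(N / df)."""
    token_lists = [preprocess(doc) for doc in documents]
    n_docs = len(token_lists)
    doc_freq = Counter(term for tokens in token_lists for term in set(tokens))
    table = []
    for tokens in token_lists:
        counts = Counter(tokens)
        total = len(tokens) or 1  # avoid division by zero for empty documents
        table.append({term: (count / total) * math.log(n_docs / doc_freq[term])
                      for term, count in counts.items()})
    return table


docs = [
    "Glaucoma was not improved with drops.",
    "Cataract surgery improved vision.",
]
print(preprocess(docs[0]))  # ['glaucoma', 'neg_improv', 'neg_drop']
table = tfidf(docs)
```

Because negated words receive a distinct prefix, "improved" in a negated clause and "improved" in an affirmative clause become different features. Per-document dictionaries like these are the kind of sparse feature table a gradient-boosted classifier such as XGBoost could be trained on.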

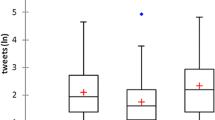

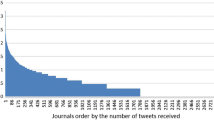

The number of included articles was 433,710. In total, 1270 articles (0.29%) had at least one identifiable tweet (see Table 1). Overall, the median citation count was 2 (IQR 0 to 6). For articles that had tweets, the median tweet count was 1 (IQR 1 to 2), the median cumulative like count was 1 (IQR 0 to 5), and the median cumulative retweet count was 0 (IQR 0 to 2). Of the articles with ≥ 1 tweet, 34.4% (437/1270) were in the top quartile for citation count. Conversely, of the articles with 0 tweets, 27.8% (12,023/43,244) were in the top quartile for citation count.
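As an illustration of the chi-squared testing mentioned in the methods, the sketch below computes a Pearson chi-squared statistic for a 2 × 2 table and applies it to the top-quartile counts reported above (437 of 1270 tweeted articles and 12,023 of 43,244 untweeted articles). It is a hedged sketch and is not presented as the authors' analysis; the function names and the optional Yates correction are assumptions, and the p-value uses the identity that a chi-squared variable with one degree of freedom is the square of a standard normal variable.

```python
import math


def chi_squared_2x2(a, b, c, d, yates=False):
    """Pearson chi-squared statistic for the 2x2 table [[a, b], [c, d]].

    Rows are groups (tweeted / not tweeted); columns are outcome
    present / absent (top citation quartile / not top quartile).
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed[i][j] - expected)
            if yates:  # optional Yates continuity correction
                diff = max(diff - 0.5, 0.0)
            stat += diff ** 2 / expected
    return stat


def p_value_1df(stat):
    """Upper-tail p-value for a chi-squared statistic with 1 degree of freedom."""
    # If Z ~ N(0, 1) then Z**2 ~ chi2(1), so P(chi2 > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(stat / 2))


# Counts reported in the results: tweeted vs untweeted, top quartile vs not.
tweeted_top, tweeted_total = 437, 1270
untweeted_top, untweeted_total = 12023, 43244
stat = chi_squared_2x2(
    tweeted_top, tweeted_total - tweeted_top,
    untweeted_top, untweeted_total - untweeted_top,
)
print(f"chi2 = {stat:.2f}, p = {p_value_1df(stat):.2g}")
```

Setting `yates=True` gives a slightly smaller statistic. Either way, such a test addresses association only; it cannot show that tweeting causes higher citation counts.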

When machine learning was used to predict whether an article would receive ≥ 1 tweet, the model achieved an AUROC of 0.78 and an accuracy of 0.97 (10 true positives, 345 false negatives, 10,764 true negatives, and 10 false positives). These performance characteristics need to be viewed in the context of an imbalanced test dataset: the model correctly identified only 10 of the 355 tweeted articles in the test set, so the high accuracy largely reflects the predominance of articles with no tweets.
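The caveat about class imbalance can be made concrete from the reported confusion-matrix counts. The Python sketch below computes threshold-based metrics from those counts and, separately, a rank-based AUROC. AUROC depends on the model's continuous scores, which are not reported, so the scores in the final usage line are hypothetical toy values; the function names are likewise illustrative.

```python
def confusion_metrics(tp, fn, tn, fp):
    """Threshold-based metrics from confusion-matrix counts."""
    total = tp + fn + tn + fp
    return {
        "accuracy": (tp + tn) / total,
        "sensitivity": tp / (tp + fn),  # tweeted articles correctly flagged
        "specificity": tn / (tn + fp),  # untweeted articles correctly rejected
        "precision": tp / (tp + fp),
        # Accuracy of a trivial model that always predicts "no tweets".
        "majority_baseline": (tn + fp) / total,
    }


def auroc(scores, labels):
    """AUROC as the probability that a random positive outscores a random negative.

    Equivalent to the normalised Mann-Whitney U statistic; ties count as 0.5.
    The pairwise loop is O(P * N) and is meant for illustration only.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


metrics = confusion_metrics(tp=10, fn=345, tn=10764, fp=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
# Prints one line per metric: accuracy 0.968, sensitivity 0.028,
# specificity 0.999, precision 0.500, majority_baseline 0.968

print(auroc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0]))  # 0.75 (hypothetical scores)
```

With the reported counts, accuracy and the majority-class baseline are identical (both 10,774/11,129), because the model's 10 true positives are exactly offset by its 10 false positives, while sensitivity is under 3%. A threshold-independent ranking measure such as AUROC is therefore a more informative summary than accuracy for this imbalanced task.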

This study provides preliminary evidence that Twitter may improve the dissemination of scientific ophthalmology publications. We report that scientific ophthalmology publications with higher performance on Twitter metrics, namely having at least one tweet, may be associated with higher performance on conventional academic metrics such as citations. It is important to note the observational and retrospective nature of this analysis. We recognise that the “black box” nature of machine learning lessens interpretability, and that reverse causality (for example, more impactful articles attracting more tweets) may account for the study findings and bias the model. Further studies may seek to trial engagement with social media in a randomised manner to gauge whether such activity influences the scientific impact of articles. Continued work in this field may also explore the effect of the dissemination of false or misleading ophthalmic research findings through Twitter, a topic of growing concern [5].

References

Tsui E, Rao R, Carey A, Feng M, Provencher L (2020) Using social media to disseminate ophthalmic information during the #COVID19 pandemic. Ophthalmology 127(9):75–78. https://doi.org/10.1016/j.ophtha.2020.05.048

Huang A, Abdullah A, Chen K, Zhu D (2022) Ophthalmology and social media: an in-depth investigation of ophthalmologic content on Instagram. Clin Ophthalmol 16:685–694. https://doi.org/10.2147/OPTH.S353417

Choo E, Ranney M, Chan T, Trueger N, Walsh A, Tegtmeyer K et al (2015) Twitter as a tool for communication and knowledge exchange in academic medicine: a guide for skeptics and novices. Med Teach 37(5):411–416. https://doi.org/10.3109/0142159X.2014.993371

Micieli R, Micieli J (2012) Twitter as a tool for ophthalmologists. Can J Ophthalmol 47(5):410–413. https://doi.org/10.1016/j.jcjo.2012.05.005

Men M, Fung S, Tsui E (2021) What’s trending: a review of social media in ophthalmology. Curr Opin Ophthalmol 32(4):324–330. https://doi.org/10.1097/ICU.0000000000000772

Acknowledgements

The authors recognise the contribution of Mr. Sheng Chieh Teoh for the analysis of Twitter metadata, and Dr. Carmelo Macri for assistance throughout all aspects of the study.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Author information


Contributions

TM and SB wrote the main manuscript text. SB, RC and WC supervised the project. All authors reviewed the manuscript.


Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Muecke, T., Bacchi, S., Casson, R.J. et al. Does Twitter improve the dissemination of ophthalmology scientific publications? Int Ophthalmol 43, 4487–4489 (2023). https://doi.org/10.1007/s10792-023-02849-1
