Abstract

One of the most significant problems related to Big Data is its analysis using methods from descriptive statistics, machine learning and deep learning. This analysis is of interest for both static datasets, which combine various data sources that do not change over time, and dynamic datasets collected by ambient data sources, which measure a number of attribute values over long periods. Since access to actual dynamic data systems is difficult to obtain, this work focuses on the design and implementation of a framework for simulating data streams, their processing and their subsequent dynamic predictive and visual analysis. The proposed system is experimentally verified in a case study conducted on an environmental variable dataset measured by a real-life sensor network.

1 Introduction

Data is commonly referred to as Big Data when it exhibits properties known as the Vs; the number of these Vs has grown over time from the basic 3V to 7V and more [1]. The most basic of these properties are the founding three, which can be described as follows [2, 3]:

- Volume of data: this property represents the amount of data collected from a number of sources, which are divided into two main subgroups:

  - Static data sources composed of datasets, databases and other data units collected from various online or offline repositories which do not change over time.

  - Dynamic or ambient data sources which collect data continuously and, therefore, change with time. A typical example of such a data source is a set of Internet of Things sensors which measure the progression of the same set of values over an established period.

- Velocity of data: this property pertains specifically to dynamic data, which changes in every system that implements ambient data sources. When such a system measures data through ambient data sources (such as sensor networks) on a sufficiently high number of sensors and at sufficiently short time intervals, the measurements form data streams which need to be processed and analysed in the system. The processing and analysis of such data streams are of high interest to this study.

- Variety of data: since the set of data considered in Big Data problems is not homogeneous, the system needs to be able to work with a large number of file types and formats, e.g. simple text documents, audio files, video files, coordinates or computer models concerning more than two dimensions.

Beyond the volume, variety and velocity of the data, the most common complementary properties of Big Data are the veracity and value of datasets, which represent the 4th and 5th Vs, while the variability and visualization of data constitute the last two Big Data properties [4, 5]. The combination of all of these properties leads to one of the most significant problems related to Big Data sets: their analysis with the use of statistical analysis, data visualization and other methods of exploratory data analysis, or predictive and estimative data analysis with the use of machine learning, fuzzy inference systems or neural network approaches [6, 7, 8].

1.1 Objectives of the work

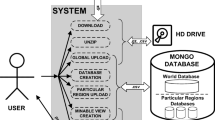

The natural objective in the area of Big Data is to create a system which works comfortably with data of the qualities described above. The basic schema of the workflow used in such Big Data systems is presented in Fig. 1 and is composed of three separate blocks: the data source block, the data processing block and the data analysis block. However, since such real-world systems are not commonly accessible and can include several complex parts which are demanding in terms of both time and cost, the use of simulated environments for algorithm development, algorithm tuning and education is essential. The research presented in this study focuses on designing and implementing such a software framework for the simulation of data streams, their processing and subsequent analysis, with the objective of providing dynamic prediction and visualization capabilities.

The main contributions of the work presented in this article are as follows:

- Design of a framework for the simulation of dynamic data collection and its analysis based on methods from the area of descriptive statistics and visual data analysis.

- Implementation of this framework in the form of a Python simulator for (ideally) multicore computing systems.

- Scaled case study of the simulation of environmental variable measurements with the use of a real dataset created within a year of data collection by a network of sensors in the city of Craiova, Romania.

In this study, we focus on the key methods and principles used in the simulation of dynamic data collection and analysis, the computational advantages and disadvantages of simulated data streaming, the simulation of real-world dynamic data collection with the use of a scaled sensor network, and potential applications of the proposed framework.

The rest of the introductory section of the work contains a brief review of works related to the simulation of systems, big data and their analysis. In Sect. 2, we describe the proposed system and its individual components from the point of view of design and software implementation itself. Section 3 presents a case study of the proposed framework with the use of a real-life environmental variable dataset, an evaluation of this system and an identification of its strong and weak points.

1.2 Related work

As can be seen in this brief overview of the literature, the simulation of various environments is an important part of many areas of expertise, from computer science itself to the simulation of real-life environments in the area of tourism.

The work presented in [9] addresses the difference between mathematical models and simulation models of a particular simulation environment for Dynamic Hybrid Systems. The authors focus on the various mathematical and simulation environments available for introducing models of these systems, and describe the restrictions of the available simulation and modelling solutions. The study also includes an analysis of case studies and a benchmarking proposal related to the possibilities and restrictions identified in the work.

In [10], the authors propose a simulation framework called Cosearcher Information with the objective of user simulation based on controlling key behavioural factors (e.g. cooperativeness and patience of users). To evaluate the approach, the authors investigate the impact of a variety of conditions on search refinement and clarification effectiveness over a wide range of user behaviours, semantic policies, and dynamic facet generation.

The authors of [11] propose a scheme for utilizing the intrinsic gate errors of noisy intermediate-scale quantum devices to enable the simulation of open quantum system dynamics without ancillary qubits or explicit bath engineering. The work is focused on the simulation of the energy transfer process in a photosynthetic dimer system on the IBM-Q cloud with a large dataset presented in the work [12].

The objective of the work [13] is to create a simulation model for the sustainability of a dynamic system in order to assess and forecast the sustainability of the system under alternative development scenarios. After the design and implementation of the simulation model, the authors use the Cesis Palace complex as an example of a real-life dynamic system. The model created in the study provides a way to assess the sustainability of a real-life system and its dimensions. Other than the model itself, the study identifies a number of key factors for the improvement of similar models: mainly high-quality evaluation of the model, data security and integrity, and the use of machine learning in the context of the simulation.

As with simulation models, real-time analysis, together with its models, limitations and benefits, is the focus of a number of modern research works.

Specifically, in [14], the authors analyze the different approaches to providing real-time analytics, their advantages and disadvantages, and the tradeoffs that both designers and users of the systems inevitably have to make. The work considers streaming processing, cloud data warehousing and hybrid transactional/analytical processing; for each approach, the authors provide its architectural principles, analyze the software products used and describe the main benefits, limitations, and tradeoffs.

The work presented in [15] discusses the complex event processing technology that has influenced many recent data streaming solutions in the data analysis area, with a focus on the integration of machine learning and artificial intelligence into such systems. The authors present real-life application scenarios from economics and the health industry in order to demonstrate the utilization of the techniques and technologies discussed in that paper. The part most relevant to the research presented in this study is the overview of data stream analytics platforms, which specifies the components of typical platforms and two types of behaviour models (formation and processing) for them.

In [16], the authors present a comprehensive review of the impact of edge computing on real-time data processing and analytics. The review sets a number of objectives: analysis of the fundamental concepts of edge computing and its architectural framework, highlighting the advantages of such a system over traditional cloud-centric approaches; exploration of the seamless integration of analytical capabilities into edge devices, focusing on advanced data analysis algorithms such as machine learning and artificial intelligence; and examination of the transformative implications of edge computing for various sectors, including healthcare, manufacturing, transportation, and smart cities.

2 Simulation of predictive analysis in data streams

The main objective of this work is to present the design and implementation of a software framework for the simulation of data streams with a focus on dynamic predictive analysis and visualization of incoming data. The basic functionalities of the proposed system include the simulation of a sensor network based on parallel computing, processing of incoming data into the internal data storage necessitated by RAM, analysis of the processed data with the use of correlation and regression analysis, and visualization of the results of this analysis through the so-called predictive analysis visualization component.

The simulation framework considers the following behaviour models used for recomputing the predictive analysis visualization component:

- Time-based recomputing: one of the basic models for recomputing the output of a dynamically changing data analysis system is periodic recomputation based on a set time interval (e.g. recomputing the analysis model every 5 seconds) [17].

- Event-based recomputing: in contrast to time-based recomputing, event-based recomputing recomputes the analysis model when significant changes in the data (events) occur [18]. The basic events considered in this work are related to the selected analysis models, mainly correlation analysis. The correlation of two attributes is measured by the correlation coefficient, the value of which lies in the range \([-1, 1]\); therefore, we consider the following events:

  - Correlation value leap event: when the correlation coefficient between a pair of attributes changes by more than a set threshold, the model is recomputed.

  - Correlation level leap event: correlation coefficient values can be categorized into basic correlation levels (see Table 1). The model is recomputed when the coefficient crosses from one correlation level to another.
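The two correlation events can be illustrated with a minimal Python sketch. The level boundaries in `LEVEL_BOUNDS`, the default threshold of 0.1 and all function names are assumptions made for this illustration only; the actual levels are those defined in Table 1, and the sketch is not taken from the framework's code.

```python
from bisect import bisect_right

# Hypothetical level boundaries on |r|. Table 1 of the paper defines the
# actual correlation levels, which are not reproduced here.
LEVEL_BOUNDS = (0.2, 0.4, 0.6, 0.8)


def correlation_level(r: float) -> int:
    """Map a correlation coefficient to a level index (0 = weakest)."""
    return bisect_right(LEVEL_BOUNDS, abs(r))


def value_leap(previous: float, current: float, threshold: float = 0.1) -> bool:
    """Event: the coefficient changed by more than the set threshold."""
    return abs(current - previous) > threshold


def level_leap(previous: float, current: float) -> bool:
    """Event: the coefficient moved from one correlation level to another."""
    return correlation_level(previous) != correlation_level(current)
```

Under these assumed boundaries, a change from 0.58 to 0.62 triggers a level leap but not a value leap, which shows why the two events can lead to different recomputation patterns. Note that this sketch assigns levels by absolute value, so a pure sign change would not count as a level leap.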

The following subsections of the work describe the main points and functionalities of two parts of the proposed model—simulation of the process of ambient data collection and correlation and regression analysis of the data streams.

2.1 Simulation of ambient data collection

Real-world ambient systems collect data with the use of several concurrently available sources at regular intervals. Since the collection part of such a system is commonly implemented in the form of a sensor set which is connected to the data processing and analysis modules of the system via a network, the data flows into the system with varying latency [20].

The first objective of the proposed simulation framework is to model the flow of data collected with the use of a sensor network into a data processing and analysis system. In order to simulate real-world data collection effectively, the simulator considers two main properties of such sensor-based data collection:

- Concurrent collection of data from a set of sensors, which is implemented as a set of parallel threads reading data from the input data file or files. This type of simulation requires a multi-core processor (very common nowadays) in order to run properly, but it also brings the simulation closer to the desired data streaming in the system.

- Varying latency of data transfer from the sensor to the data processing and analysis module of the system, which is implemented with the use of a standard waiting function. The wait can be set to a specific value in seconds or to a random value from a given interval, either for individual sensors or for all sensors at once.

Figure 2 presents a schema of the main components of the proposed system, focused on concurrent sensor simulation based on a parallel thread model which reads data from the input data file and decomposes it into several data parts. The standard model of such decomposition is called data decomposition, in which the data parts are approximately equal in size so that all the parts are computed in a similar time [21]:

$$T(dp_1) \approx T(dp_2) \approx \dots \approx T(dp_n)$$ (1)

where \(T(dp_i)\) is the computing time of the i-th data part and n is the number of data parts. These data parts are aggregated in the Predictive Analysis Visualization Component, which is then recomputed based on the selected behaviour model.

The pseudocode presented in Algorithm 1 focuses on the concurrent simulation of data collection and data streams. The inputs of the system are the dataset for the simulation (data), the number of simulated sensors (n), the type of behaviour for recomputation (b), the time for time-based behaviour (t), the correlation type (ct) and the regression type (rt).
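The concurrent collection described in this subsection can be sketched in Python roughly as follows. This is an illustrative sketch and not the authors' Algorithm 1: the function names, the shared queue and the default latency interval are assumptions, and the sketch collects the streamed records only after all sensor threads finish, whereas a streaming analysis would consume them while the sensors are still running.

```python
import queue
import random
import threading
import time


def split_evenly(records, n):
    """Data decomposition: n contiguous parts whose sizes differ by at most 1."""
    base, extra = divmod(len(records), n)
    parts, start = [], 0
    for i in range(n):
        end = start + base + (1 if i < extra else 0)
        parts.append(records[start:end])
        start = end
    return parts


def sensor(sensor_id, part, out, latency=(0.0, 0.002)):
    """One simulated sensor: emit its records with a random transfer latency."""
    for record in part:
        time.sleep(random.uniform(*latency))  # the waiting function
        out.put((sensor_id, record))


def simulate(records, n):
    """Run n sensor threads and return everything they streamed."""
    out = queue.Queue()
    threads = [
        threading.Thread(target=sensor, args=(i, part, out))
        for i, part in enumerate(split_evenly(records, n))
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    received = []
    while not out.empty():
        received.append(out.get())
    return received
```

Calling `simulate(list(range(20)), 4)` returns 20 `(sensor_id, record)` tuples whose interleaving depends on the random latencies, which mimics records from several sensors arriving out of order.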

2.2 Predictive analysis visualization component

The point of data collection is to use the data further in the processes of decision-making, analysis or knowledge discovery [22]. These processes can all be grouped under the label of predictive data analysis, which uses trends and patterns present in data in order to create logical estimations or predictions [23].

The proposed simulation model, as currently implemented, uses three basic data analysis techniques for pattern recognition and predictive data analysis:

- Correlation analysis: a statistical method for measuring a linear or monotone non-linear relationship between the values of attribute pairs in data, based on one of three possible coefficients [19]:

  - Pearson correlation coefficient for the measurement of linear relationships between two attributes A and B:

    $$r(A,B) = \frac{\sum\limits_{i=1}^{n} \left(A_i - \mu(A)\right)\left(B_i - \mu(B)\right)}{\sqrt{\sum\limits_{i=1}^{n} \left(A_i - \mu(A)\right)^2}\,\sqrt{\sum\limits_{i=1}^{n} \left(B_i - \mu(B)\right)^2}}$$ (2)

    where \(A_i\) and \(B_i\) are the i-th measurements of attributes A and B, \(\mu(A)\) and \(\mu(B)\) denote the mean values of these attributes and n is the number of entities in the dataset.

  - Spearman rank correlation coefficient and Kendall rank correlation coefficient for the measurement of monotone non-linear relationships between attributes A and B. The Spearman correlation coefficient is computed as:

    $$\rho(A,B) = 1 - \frac{6\sum\limits_{i=1}^{n} \left(rank(A_i) - rank(B_i)\right)^2}{n(n^2 - 1)}$$ (3)

    where \(rank(A_i)\) and \(rank(B_i)\) are the rankings of the i-th value of the considered attributes and n is the number of measurements in the studied dataset. The Kendall correlation coefficient is based on the equation:

    $$\tau(A,B) = \frac{n_c - n_d}{\frac{n(n-1)}{2}}$$ (4)

    where \(n_c\) is the number of concordant pairs of rankings for attributes A and B, \(n_d\) is the number of discordant pairs of such rankings and n is the number of data instances in the dataset.

- Regression analysis: a statistical method for numeric value prediction based on fitting a linear or monotone non-linear function to input data [24]. In this work, we consider two possibilities for regression models used in the Predictive Analysis Visualization Component:

  - Linear regression: since this type of regression focuses on linear relationships, the proposed model uses Pearson correlation coefficient values to evaluate which attributes can be described via linear regression. This model for the attributes A and B is based on [24]:

    $$B = mA + b$$ (5)

    where B is the attribute to be predicted based on the values of the attribute A, m is the slope of the regression line and b is the B-intercept.

  - Polynomial regression: in contrast to linear regression, polynomial regression uses polynomial functions (and therefore curves) for the description of the studied dataset as follows [24]:

    $$B = \beta_0 + \beta_1 A + \beta_2 A^2 + \dots + \beta_n A^n + \epsilon$$ (6)

    where B is the attribute to be predicted based on the values of the attribute A, \(\beta_i\) for \(i \in \{0, 1, 2, \dots, n\}\) are the coefficients being estimated, n is the degree of the polynomial and \(\epsilon\) is the error term.

- Visual data analysis: in order to present data and findings effectively, the proposed model uses visual models for the selected analytical methods [25].
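To make Eqs. (2) to (6) concrete, the following Python sketch writes the three correlation coefficients out term by term and fits both regression models with NumPy. The toy arrays and function names are invented for illustration, the rank helper assumes there are no tied values, and the framework itself may rely on library routines rather than hand-written formulas.

```python
from itertools import combinations

import numpy as np


def pearson(a, b):
    """Eq. (2): sum of co-deviations scaled by both spreads."""
    da, db = a - a.mean(), b - b.mean()
    return (da * db).sum() / np.sqrt((da ** 2).sum() * (db ** 2).sum())


def ranks(x):
    """Ranks 1..n of the values in x (assumes no ties)."""
    return np.argsort(np.argsort(x)) + 1


def spearman(a, b):
    """Eq. (3): based on squared rank differences."""
    n = len(a)
    d = ranks(a) - ranks(b)
    return 1 - 6 * (d ** 2).sum() / (n * (n ** 2 - 1))


def kendall(a, b):
    """Eq. (4): (concordant - discordant) / number of pairs."""
    n = len(a)
    # Each pair contributes +1 if concordant and -1 if discordant.
    score = sum(
        np.sign(a[i] - a[j]) * np.sign(b[i] - b[j])
        for i, j in combinations(range(n), 2)
    )
    return score / (n * (n - 1) / 2)


# Toy measurements, invented for illustration.
A = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
B = np.array([2.0, 1.0, 4.0, 3.0, 5.0])

m, b = np.polyfit(A, B, deg=1)   # Eq. (5): slope m and intercept b
betas = np.polyfit(A, B, deg=2)  # Eq. (6) with degree 2, highest power first
```

For these toy arrays, two of the ten value pairs are discordant, so the Kendall coefficient is (8 - 2) / 10 = 0.6, while the Pearson and Spearman coefficients both equal 0.8.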

Figure 3 contains a schema of the visual output of the simulation framework called predictive analysis visualization component. This output is composed of two main sections—one for each analysis type used in the basic proposal of the system. The left side of the visualization sheet uses a correlation heatmap model [19] in order to present the strength of the relationship between individual pairs of attributes in the incoming data stream. The right side of the visualization contains regression curves for:

- either the pairs of attributes with the strongest correlation coefficient values discovered in the correlation analysis of the data (this model is used in time-based recomputing behaviour),

- or the pairs of attributes with the strongest correlation coefficient values discovered in the correlation analysis of the data together with the pair of attributes which initiated the recomputing (in event-based behaviour).

3 Case study and evaluation of the proposed framework

The proposed simulation framework was implemented in the Python programming language with the use of the numpy, pandas, matplotlib and seaborn packages. This section presents a case study of the presented simulation model using a sizeable Environmental Variable Dataset. In this way, we simulate the collection of environmental data measurements (such as temperature, humidity or air pollutants) with a set of sensors, which create data streams analysed in the proposed system.

The simulations presented as a part of this case study were conducted on a 64-bit computer system with 32 processing cores, a CPU frequency of 3400 MHz and 16 GB of RAM.

The subsections of this part of the work focus on the presentation of the Environmental Variable Dataset itself; the process of simulation, its scaling and its outputs related to various recomputing behaviour models; and evaluation of the simulation model.

3.1 Environmental variable dataset

For the purposes of this case study, the environmental variable dataset was used. This dataset is composed of sensor measurements of a number of environmental variables, taken every minute from December 5th 2021 to December 6th 2022 in the city of Craiova, Romania. The data collection process, which ran for one year, resulted in a raw dataset of 458,635 records. Each record contains a value measured for the following properties:

- date and time of measurement

- temperature measured in \(^{\circ}\)C

- barometric air pressure measured in hPa

- humidity in air measured in g/kg

- CO\(_2\): carbon dioxide measured in parts per million (ppm)

- O\(_3\): ozone measured in \(g/m^3\)

- VOC: volatile organic compounds measured in \(\mu g/m^3\)

- noise: measured in hertz (Hz)

- CH\(_2\)O: formaldehyde measured in \(\mu g/m^3\)

- PM1, PM2.5 and PM10: particulate matter measured in \(\mu g/m^3\)

The statistical description of the pruned dataset is presented in Table 2 and consists of the minimum (min) and maximum (max) values of each attribute, its mean, and the 1\(^{st}\) and 3\(^{rd}\) quartiles of its value interval.
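A summary of this kind can be produced with pandas along the following lines. This is a sketch under assumptions: the column names and values are placeholders invented for illustration, not the dataset's actual schema or measurements.

```python
import pandas as pd

# Made-up readings; the column names are placeholders.
df = pd.DataFrame(
    {
        "temperature": [1.0, 2.0, 3.0, 4.0, 5.0],
        "pressure": [1000.0, 1005.0, 1010.0, 1015.0, 1020.0],
    }
)

# One row per attribute with min, max and mean ...
summary = df.agg(["min", "max", "mean"]).T
# ... plus the first and third quartiles.
summary["q1"] = df.quantile(0.25)
summary["q3"] = df.quantile(0.75)
```

The resulting `summary` frame has one row per attribute and the columns `min`, `max`, `mean`, `q1` and `q3`.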

3.2 Simulation of predictive analysis in ambient environmental variable measurement

To demonstrate the functionality of the proposed simulation tool, we conducted a number of experiments on the environmental variable dataset. The experiments differ in the number of simulated sensors in the network and in the behaviour of output recomputing. We considered both behaviour models for 1, 8, 16 and 32 sensors in the simulated sensor network. Since the predictive analysis visualization component is created and stored for each recomputation of the model, we only present four selected visualizations for three of the experiments:

- Figures 4 to 9 show the Predictive Analysis Visualization Component for the first step of the simulation, a step from approximately one third of the simulation, a step from approximately two thirds of the simulation and the last step of the simulation.

- Figures 4 and 5 present time-based behaviour for the network of 16 concurrent sensors. For the purposes of the simulation, the Predictive Analysis Visualization Component was recomputed every 3 seconds.

- Figures 6 and 7 present event-based behaviour for the network of 8 sensors collecting data concurrently. In the presented simulation run, the event considered for recomputing was a change in correlation level.

- Figures 8 and 9 present event-based behaviour for the network of 32 concurrent sensors. Similarly to the previous case, the event considered for recomputation of the visualization was a change in correlation level.

The two main parts of the predictive analysis visualization component for the simulation were configured as the lower triangle of the Spearman rank correlation matrix on the left side of the visualization model and four regression curves on the right side of the model.

For time-based behaviour, the four regression curves visualized in the output represent the four strongest values of \(|corr(attribute_i, attribute_j)|\). In the case of event-based recomputing, the four regression curves consist of the three strongest correlation coefficient values (top row of the plots and bottom left plot) and the regression plot for the pair of attributes which initiated the recomputation (bottom right).
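The selection of the four plotted pairs can be sketched as follows. The function names `strongest_pairs` and `panels` are hypothetical, and the sketch only assumes that the correlation matrix is available as a square pandas DataFrame indexed by attribute names; it is not the framework's own code.

```python
import numpy as np
import pandas as pd


def strongest_pairs(corr: pd.DataFrame, k: int):
    """Return the k attribute pairs with the largest |corr|, strongest first."""
    names = list(corr.columns)
    values = corr.to_numpy()
    # Strict lower triangle: each pair once, diagonal (always 1) skipped.
    rows, cols = np.tril_indices(len(names), k=-1)
    order = np.argsort(-np.abs(values[rows, cols]), kind="stable")[:k]
    return [(names[rows[i]], names[cols[i]]) for i in order]


def panels(corr: pd.DataFrame, trigger=None):
    """Pairs for the four regression plots.

    Time-based behaviour (no trigger): the four strongest pairs.
    Event-based behaviour: the three strongest pairs plus the pair
    that initiated the recomputation.
    """
    if trigger is None:
        return strongest_pairs(corr, 4)
    return strongest_pairs(corr, 3) + [trigger]
```

Ranking by absolute value means that a strong negative correlation competes on equal terms with a strong positive one.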

Figure 4 shows the initial phases of the simulated data stream of environmental measurements. Since the strong correlation between the individual particulate matter fractions (PM1, PM2.5 and PM10) is well known, this correlation is present in the predictive analysis visualization component from the start. The fourth regression plot is of interest with regard to recomputation: in the top part of Fig. 4, the fourth strongest correlation coefficient value is measured between the time and pressure attributes, while in the bottom part of the figure, we see a strong correlation between the values of temperature and CO\(_2\).

Figure 5 presents selected parts of the second half of the first simulation. Other than changes in the regression plots based on the strength of attribute value correlation, there are significant changes in the correlation heatmap itself. One can note that the value of the correlation coefficient measured between the time and pressure attributes changes drastically over the course of the simulation (with a growing number of records being analyzed): from a strong correlation, where \(corr(time,\ pressure) = -0.87\), to no correlation at \(corr(time,\ pressure) = -0.04\). Such changes can be labelled as interesting events, which influence the results of the analysis significantly.

Figures 6 and 7 show four interesting points from the event-based simulation using 8 sensors collecting data concurrently. Similarly to the previous simulation case, the three strongest correlation coefficient values, and therefore regression plots, are those measured between particulate matter of various sizes. The fourth regression plot (bottom row, right) represents the regression curve between the attributes which initiated recomputing of the visualization model; these pairs of attributes are time and humidity, temperature and CO\(_2\), PM1 and VOC, and time and temperature. The event-based behaviour model also supplements the recomputation of the model with commentary placed at the bottom of the Predictive Analysis Visualization Component, which describes the event.

The largest sensor network for the experimental system used in this study consisted of 32 concurrently simulated sensors. Figures 8 and 9 present four points of simulation similar to the previous examples. In this case, the event-based simulation with 32 concurrent simulated sensors collects the data much faster—as can be seen in both figures. This causes the changes in visualization to be less apparent, yet when focusing on the most significant events visualized in the bottom right regression plot, we can see trends similar to the previous simulations.

3.3 Evaluation of concurrent sensor simulation component

As seen in the case study presented in Sect. 3.2, the proposed simulation framework can be used to analyze simulated data streams. This opens up the possible use of the framework in the design of algorithms for dynamically flowing data or in education involving big and flowing data.

Naturally, the simulation of data streams needs to take an appropriate amount of time in order for the analyzed and visualized changes to be perceivable. For the environmental variable dataset consisting of 458,635 records, the whole simulation takes a significant amount of time (see Table 3).

The simulation times shown in Table 3 were measured with the time-based behaviour model, yet the times of simulation are not influenced significantly by the selection of the behaviour model itself. For the simulation using one sensor, the process takes approximately 14 and a half hours. On the other hand, the simulation using 32 sensors, the maximum number of concurrent sensors for the system used in the testing, took less than 27 min.
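As a rough worked check of these two figures (both are approximate, so the result is only indicative), the speedup of the 32-sensor run over the single-sensor run is

$$S = \frac{T_1}{T_{32}} \approx \frac{14.5 \times 60\ \text{min}}{27\ \text{min}} = \frac{870}{27} \approx 32.2$$

that is, the simulation time shrinks roughly in proportion to the number of concurrent sensors. Here \(T_1\) and \(T_{32}\) are shorthand introduced only for this calculation and denote the simulation times with 1 and 32 sensors.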

In comparison, other simulation environments built for similar purposes, such as PureEdgeSim [26] or the Parallel Data Generation Framework [27], work with their own generated data, which are used in specific problems. In the PureEdgeSim tool, synthetic data are generated and distributed over a set of simulated devices. Unlike in the proposed simulation environment, this process in PureEdgeSim is instantaneous, and therefore a user cannot observe the data streams or the recomputing of the models themselves. The advantage of such an approach (from the user's point of view) is that there is no need for a parallel computing system to run the simulator; this can also be perceived as a disadvantage from the point of view of simulating concurrent data streams. In the Parallel Data Generation Framework, parallel computing for concurrent processes is used similarly to the proposed model, yet the Parallel Data Generation Framework generates its own data, which is not desirable in the process of meaningful data analysis.

3.4 Evaluation of predictive analysis visualization component

The output of the proposed framework is the predictive analysis visualization component, which can be presented in the form of:

- a png file stored once for each recomputation,

- an interactive, recomputed Python window,

- a combined model of both.

As presented in Figs. 4 to 9, different behaviour models lead to variations in the visualization output of the framework. In the case of time-based behaviour, the regression plots contain the pairs of attributes with the highest values of the correlation coefficient between them, which naturally leads to the lowest values of the root mean squared error (RMSE) of the predictive function. When using event-based behaviour, the framework uses three pairs of attributes with the highest values of the correlation coefficient and (potentially) one pair of attributes with a comparatively lower value of this correlation. This fourth pair of attributes is present in the model since it initiated the event of significance (e.g. a transition between two correlation levels). The natural consequence of this is that the value of RMSE for the regression function grows.
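The RMSE referred to here can be computed for a fitted regression curve as in the following sketch. The helper names `rmse` and `fit_rmse` are hypothetical, and the sketch assumes a polynomial fit via NumPy; it is an illustration and not the framework's own evaluation code.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean squared error between observed and predicted values."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def fit_rmse(a, b, degree=1):
    """Fit B as a polynomial in A and return the RMSE of that fit."""
    coeffs = np.polyfit(a, b, deg=degree)
    return rmse(b, np.polyval(coeffs, a))
```

For a strongly correlated attribute pair the fitted line passes close to the points and `fit_rmse` is small, whereas a weakly correlated pair (such as one that merely triggered an event) leaves larger residuals and therefore a larger RMSE.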

Even though the presented case study consisted of the visualization of correlation and regression analysis models, the parts of the Predictive Analysis Visualization Component of the system can easily be switched to any other data analysis model. An example of such switching would be using the visualization of a decision tree for a classification problem instead of the regression curves shown in the study.

Since neither PureEdgeSim nor the Parallel Data Generation Framework is focused on the visual analysis of data, there is no native visual output from these tools. Both tools output sets of data which can be visualized afterwards, as opposed to the proposed tool, which uses the Predictive Analysis Visualization Component as the output of a simulation.

4 Conclusion

Since one of the main objectives in the area of Big Data processing and analysis is to create a system which comfortably works with dynamically changing data, research presented in this study focuses on the design and implementation of a software framework for the simulation of data streams, their processing and subsequent analysis with the focus on dynamic prediction and visualization capabilities.

The presented work describes the design of a framework for the simulation of dynamic data collection and its analysis on the basis of correlation and regression analysis; the implementation of this framework in the form of a Python simulation model intended primarily for multicore computing systems; and a case study in which the simulation model is applied to environmental variable measurements collected by a network of sensors in the city of Craiova, Romania.

Future work in the area of simulated data streams consists of three main directions:

- Implementation of several forms of the predictive analysis visualization component using various predictive analysis models (e.g. the abovementioned decision trees or Bayesian networks).

- Implementation of a large language model for diagnostic analysis of events presented in the visualization of the simulation.

- Implementation of the created simulation framework in the form of a Python package placed on PyPI.

Data availability

Python code for the created software and the data used in the case study are freely available at the following link: https://github.com/daniel-demian/predictive-methods. In the case of any questions, please contact the authors via e-mail: adam.dudas@umb.sk.

References

Kvet M, Papán J, Durneková Hrinová M. Treating temporal function references in relational database management system. IEEE Access. 2024. https://doi.org/10.1109/ACCESS.2024.3387046.

Janech J, Tavač M, Kvet M. Versioned database storage using unitemporal relational database. 2019 IEEE 15th International Scientific Conference on Informatics. 2019. https://doi.org/10.1109/Informatics47936.2019.9119269.

de Espona Pernas L, et al. Automatic indexing for MongoDB. Commun Comput Inf Sci. 2023. https://doi.org/10.1007/978-3-031-42941-5_46.

Tichý T, et al. Information modelling and smart approaches at the interface of road and rail transport. 2023 Smart Cities Symposium Prague. 2023. https://doi.org/10.1109/SCSP58044.2023.10146231.

Bansal N, Sachdeva S, Awasthi LK. Are NoSQL databases affected by schema? IETE J Res. 2023. https://doi.org/10.1080/03772063.2023.2237478.

Michalíková A, et al. Can wood-decaying urban macrofungi be identified by using fuzzy interference system? An example in Central European Ganoderma species. Sci Rep. 2021. https://doi.org/10.1038/s41598-021-92237-5.

Purkrabková Z, et al. Traffic accident risk classification using neural networks. Neural Netw World. 2021. https://doi.org/10.14311/NNW.2021.31.019.

Bělinová Z, Votruba Z. Reflection on systemic aspects of consciousness. Neural Netw World. 2023. https://doi.org/10.14311/NNW.2023.33.022.

Korner A, Winkler S, Breitenecker F. Benchmarking simulation models for dynamic hybrid systems. 19th UKSIM-AMSS International Conference on Mathematical Modelling & Computer Simulation (UKSIM). 2017. https://doi.org/10.1109/UKSim.2017.23.

Salle A, et al. COSEARCHER: studying the effectiveness of conversational search refinement and clarification through user simulation. Inf Retriev J. 2022. https://doi.org/10.1007/s10791-022-09404-z.

Sun S, Shih LC, Cheng YC. Efficient quantum simulation of open quantum system dynamics on noisy quantum computers. Phys Scripta. 2024. https://doi.org/10.1088/1402-4896/ad1c27.

Aleksandrowicz G, et al. Qiskit: An open-source framework for quantum computing. Zenodo. 2019. https://doi.org/10.5281/ZENODO.2562110.

Mihailovs N, Cakula S. Dynamic system sustainability simulation modelling. Baltic J Modern Comput. 2020. https://doi.org/10.22364/bjmc.2020.8.1.12.

Kuznetsov SD, Velikhov PE, Fu Q. Real-time analytics: benefits, limitations, and tradeoffs. Program Comput Softw. 2023. https://doi.org/10.1134/S036176882301005X.

Chen W, Milosevic Z, Rabhi FA, Berry A. Real-time analytics: concepts, architectures, and ML/AI considerations. IEEE Access. 2023. https://doi.org/10.1109/ACCESS.2023.3295694.

Modupe OT, et al. Reviewing the transformational impact of edge computing on real-time data processing and analytics. Comput Sci IT Res J. 2024. https://doi.org/10.51594/csitrj.v5i3.929.

Stepney S. Nonclassical computation—a dynamical systems perspective. Handbook of natural computing. 1st ed. Springer. https://doi.org/10.1007/978-3-540-92910-9_59.

Agarwal A, Pandey A, Pileggi L. Robust event-driven dynamic simulation using power flow. Electric Power Syst Res. 2020. https://doi.org/10.1016/j.epsr.2020.106752.

Dudáš A. Graphical representation of data prediction potential: correlation graphs and correlation chains. Vis Comput. 2024. https://doi.org/10.1007/s00371-023-03240-y.

Beneš V, Svítek M. Knowledge graphs for transport emissions concerning meteorological conditions. 2023 Smart Cities Symposium Prague. 2023. https://doi.org/10.1109/SCSP58044.2023.10146219.

Škrinárová J, Dudáš A. Optimization of the functional decomposition of parallel and distributed computations in graph coloring with the use of high-performance computing. IEEE Access. 2022. https://doi.org/10.1109/ACCESS.2022.3162215.

Sumathi K, Vinod V. Classification of fruits ripeness using CNN with multivariate analysis by SGD. Neural Netw World. 2022. https://doi.org/10.14311/NNW.2022.32.019.

Faggioli G, et al. sMARE: a new paradigm to evaluate and understand query performance prediction methods. Inf Retriev J. 2022. https://doi.org/10.1007/s10791-022-09407-w.

Arampatzis A, Peikos G, Symeonidis S. Pseudo relevance feedback optimization. Inf Retriev J. 2021. https://doi.org/10.1007/s10791-021-09393-5.

Steingartner W, Zsiga R, Radakovic D. Natural semantics visualization for domain-specific language. 2022 IEEE 16th International Scientific Conference on Informatics. 2022. https://doi.org/10.1109/Informatics57926.2022.10083439.

Mechalikh Ch, Taktak H, Moussa F. PureEdgeSim: a simulation framework for performance evaluation of cloud, edge and mist computing environments. Comput Sci Inf Syst. 2020. https://doi.org/10.2298/CSIS200301042M.

Parallel Data Generation Framework. https://www.bankmark.de/products-and-services/pdgf. Accessed 14 May 2024.

Funding

Open access funding provided by The Ministry of Education, Science, Research and Sport of the Slovak Republic in cooperation with Centre for Scientific and Technical Information of the Slovak Republic.

Author information

Contributions

A.D. contributed to the conceptualization of the work, the analysis, and the writing and revision of the presented manuscript. D.D. contributed to the programming of the proposed tool, the experiments, and the writing of the presented manuscript.

Ethics declarations

Competing interests

The authors of the presented work declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Dudáš, A., Demian, D. Predictive analysis visualization component in simulated data streams. Discov Computing 27, 12 (2024). https://doi.org/10.1007/s10791-024-09447-4

DOI: https://doi.org/10.1007/s10791-024-09447-4