Abstract

Automatic background classification from speech signals is used in many application areas, such as background identification, predictive maintenance in industrial applications, smart home applications, assisting deaf people in their daily activities, and content-based multimedia indexing and retrieval. Accurately predicting the background environment from speech signal information is challenging. Therefore, this paper proposes a novel synchrosqueezed wavelet transform (SWT)-based deep learning (DL) approach for automatically classifying the background information embedded in speech signals. Here, the SWT is used to obtain time-frequency plots of the speech signals. These time-frequency plots are then fed to a deep convolutional neural network (DCNN) that classifies the background information embedded in the speech signals. The proposed DCNN model consists of three convolution layers, one batch-normalization layer, three max-pooling layers, one dropout layer, and one fully connected layer. The proposed method is tested on speech signals with various embedded background sounds, namely airport, airplane, drone, street, babble, car, helicopter, exhibition, station, restaurant, and train sounds. The results show that the proposed SWT-based DCNN approach achieves an overall classification accuracy of 97.96 (± 0.53)% for classifying the background information embedded in speech signals. Finally, the performance of the proposed approach is compared with that of existing methods.


1 Introduction

Humans can recognize background sounds. However, classification devices become unreliable when sounds of the same loudness are mixed (Salomons et al., 2016). With advances in artificial intelligence and signal processing, it has become feasible to identify background sounds with devices, as shown in Fig. 1. Nevertheless, efficient machine learning algorithms are still needed to recognize background sounds as reliably as humans do (Yang & Krishnan, 2017).

A hidden Markov model (HMM)-based acoustic environment classifier has been described in Ma et al. (2006) to check the feasibility of acoustic environment classification on mobile devices. This model incorporates a hierarchical classification model and an adaptive learning mechanism (Ma et al., 2006). An environmental sound classification (ESC) method using a power-aware wearable sensor has been proposed in Zhan and Kuroda (2014). This method combines a low-computation one-dimensional (1-D) Haar-like sound feature with an HMM and achieves an average accuracy of 96.9% (Zhan & Kuroda, 2014). A heterogeneous system of deep mixtures of experts (DMoEs) based on convolutional neural networks (CNNs) has been proposed in Yang and Krishnan (2017) for acoustic scene classification. Here, each DMoE is a mixture of different convolutional layers weighted by a gating network (Yang & Krishnan, 2017). In Martín-Morató et al. (2018), the authors have proposed a nonlinear time normalization-based event representation, applied prior to mid-term statistics extraction, for audio event classification. These short-term features are then represented by constant, uniform-distance sampling over a defined space in order to reduce errors in the presence of noise (Martín-Morató et al., 2018). A novel CS-LBlock-Pat model has been proposed in Okaba and Tuncer (2021) for efficiently tracking the floor on which a speaker is located in a multi-storey building. The model has been evaluated on an ESC dataset collected from a ten-floor hospital, and a support vector machine (SVM) classifier applied to this dataset achieves an accuracy of 95.38% (Okaba & Tuncer, 2021).

A summary of machine learning and deep learning methods for enhancing speech affected by environmental noise has been presented in Das et al. (2021). A survey of methods for acoustic signal classification is presented in Chaki (2021). An implicit Wiener filter-based algorithm has been proposed in Jaiswal et al. (2022) for enhancing speech corrupted by stationary and non-stationary environmental noise. In Huang and Pun (2020), the authors have proposed a model based on an attention-enhanced DenseNet-BiLSTM network and segment-based linear filter bank (LFB) features for detecting replay spoofing attacks in speaker verification. First, the silent segments of each speech signal are obtained using the short-term zero-crossing rate and energy. Then, LFB features are extracted from these segments. Finally, an attention-enhanced DenseNet-BiLSTM architecture is built to mitigate overfitting (Huang & Pun, 2020). A hybrid approach has been proposed in Roy et al. (2016) to recognize complex daily activities using body-worn sensors as well as ambient sensors. In Griffiths and Langdon (1968), the acoustic environment at 14 sites in London has been studied by conducting interviews at these sites, in order to assess the acceptability of traffic noise in residential areas. In Seker and Inik (2020), the authors have designed 150 different CNN-based models for ESC and tested their accuracy on the UrbanSound8K ESC dataset. They concluded that their CNN model achieves an accuracy of 82.5%, which is higher than that of classical approaches. Classical methods for integrating Mel-frequency cepstral coefficients (MFCCs) with the temporal-domain information of the audio signal may lose essential information (Yang & Krishnan, 2017).
Thus, in Yang and Krishnan (2017), the authors have characterized the latent information in the temporal dynamics by adopting the local binary pattern (LBP). This LBP is then used to encode the evolution process by treating the frame-level MFCC features as 2-D images. Finally, the obtained features are fed to the d3C classifier for ESC (Yang & Krishnan, 2017). Very short time ESC based on spectrogram pattern matching has been proposed in Khunarsal et al. (2013). A survey on the sonic environment has been presented in Minoura and Hiramatsu (1997).

In Sameh and Lachiri (2013), the authors have proposed a robust ESC approach using log-Gabor filter-based spectrogram features. The approach includes three methods. In the first two methods, the outputs of log-Gabor filters applied to spectrograms are averaged and then subjected to an optimal feature selection procedure based on the mutual information criterion. The third method processes only three patches extracted from each spectrogram (Sameh & Lachiri, 2013). A CNN method has been proposed in Permana et al. (2022) for classifying bird sounds as an early warning of forest fires. A lightweight method for automatic heart sound classification has been proposed in Li et al. (2021). This method requires only simple preprocessing of the heart sound data, after which time-frequency features are extracted. Finally, the heart sounds are classified based on the fused features (Li et al., 2021).

However, none of the works in the literature has applied the synchrosqueezed wavelet transform to preprocess the data, and none has considered a lightweight deep CNN (DCNN) model, which works well with image data. Motivated by this, this paper proposes a lightweight DCNN model for automatic background classification using speech signals. The major contributions of this work are as follows:

- For the time-frequency analysis of speech signals, the synchrosqueezed wavelet transform (SWT) is introduced.
- A lightweight DCNN architecture is proposed for automatic background classification using the SWT of speech signals.
- The approach is assessed on various backgrounds embedded in speech signals, namely airport, airplane, drone, street, babble, car, helicopter, exhibition, station, restaurant, and train sounds.
- Through extensive simulations, we compare the background classification accuracy of the proposed approach with that of existing approaches.
- The proposed model is also deployed on edge computing devices such as NVIDIA GeForce, Raspberry Pi, and NVIDIA Jetson to demonstrate its real-time usability.
- Finally, all considered models are deployed on edge computing devices to compare their inference times.

The remainder of the paper is organized as follows. Section 2 discusses the dataset, preprocessing using the SWT, the convolutional neural network architecture, and the benchmark models considered in this work. Section 3 compares the proposed model with the benchmark models in terms of 5-fold cross-validation accuracy, model size, number of parameters, and inference time. Finally, Sect. 4 concludes the paper with an outline of possible future work.

2 Proposed method

The proposed method for background sound classification consists of three stages: generation of speech signals with background noise, generation of time-frequency plots using the SWT, and classification of the background embedded in the speech signals using a DCNN. In the signal-generation stage, we generated speech signals with embedded noise from sources such as airport, airplane, drone, street, babble, car, helicopter, exhibition, station, restaurant, and train sounds. The SWT is then used to generate time-frequency plots of these signals. In the classification stage, the DCNN uses these SWT plots to classify the background embedded in the speech signals.

2.1 Speech signal dataset

For all methods considered in this work, the task is to classify the background noise present in human speech. We use non-stationary noises such as airport, helicopter, airplane, drone, street, restaurant, babble, car, and train noise at SNRs of 10, 7.5, 5, 2.5, 0, −2.5, −5, −7.5, and −10 dB. Noisy samples from the Aurora dataset (Hirsch & Pearce, 2000), as included in the noisy speech corpus NOIZEUS (Hu & Loizou, 2006), are used for the station and exhibition noises. The NOIZEUS corpus was created by asking three female and three male speakers to utter phonetically balanced IEEE English sentences. The drone audio dataset (Al-Emadi et al., 2019) is used for drone noise, and the helicopter and airplane noises are taken from the ESC dataset (Piczak, 2015). The speech samples are narrow-band, sampled at 8 kHz, and span 3 s. All samples are saved in WAV format (16-bit PCM, mono) for processing.
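The text does not spell out how a noise recording is added to a speech clip at a given SNR. A common approach, assumed here, scales the noise so that the ratio of mean speech power to mean noise power equals the target SNR over the whole clip. The helper name `mix_at_snr` is hypothetical, and looping the noise when it is shorter than the speech is an illustrative choice, not a detail from the paper.

```python
import numpy as np

# SNR grid used in the paper (dB).
SNRS_DB = [10, 7.5, 5, 2.5, 0, -2.5, -5, -7.5, -10]

def mix_at_snr(speech: np.ndarray, noise: np.ndarray, snr_db: float) -> np.ndarray:
    """Add `noise` to `speech` so the mixture has the requested SNR in dB.

    Hypothetical helper: SNR = 10*log10(P_speech / P_noise), where P is the
    mean squared amplitude over the whole clip.
    """
    speech = np.asarray(speech, dtype=np.float64)
    noise = np.asarray(noise, dtype=np.float64)
    # Loop (tile) or trim the noise so it covers the speech clip,
    # e.g. 3 s at 8 kHz = 24,000 samples.
    if len(noise) < len(speech):
        noise = np.tile(noise, int(np.ceil(len(speech) / len(noise))))
    noise = noise[: len(speech)]
    p_speech = np.mean(speech ** 2)
    p_noise = np.mean(noise ** 2)
    # Gain g such that P_speech / (g**2 * P_noise) = 10**(snr_db / 10).
    gain = np.sqrt(p_speech / (p_noise * 10.0 ** (snr_db / 10.0)))
    return speech + gain * noise
```

Calling `mix_at_snr(speech, noise, snr)` for every value in `SNRS_DB` yields the nine noisy versions of one clip. Before writing 16-bit PCM WAV files, the mixture would still need to be scaled to avoid clipping.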

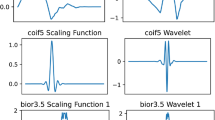

2.2 Synchrosqueezed wavelet transform

The SWT is a signal processing approach used for time-frequency analysis and for the modal decomposition of non-stationary signals (Daubechies et al., 2011; Madhavan et al., 2019). This study uses the SWT to evaluate the time-frequency matrix of speech signals with background noise. The SWT of a noisy speech signal, z(n), is computed in three steps. First, the discrete-time continuous wavelet transform (DTCWT) of the noisy speech signal is evaluated as (Daubechies et al., 2011; Madhavan et al., 2019)

where \(\overline{\psi }\) denotes the complex conjugate of the wavelet basis function (mother wavelet) \(\psi\), and s(k) is the kth scale, with \(k>0\). In (1), \(\textbf{W}=[W(\tau , s(k))]_{\tau =0, k>0}^{N, S}\) is the wavelet coefficient matrix (scalogram matrix) of the noisy speech signal z(n), where S is the total number of scales and \(0 <k \le S\). Second, the instantaneous frequency \(I(\tau , s(k))\) is estimated as (Oberlin & Meignen, 2017)

where \(R(\cdot )\) and \(\text {Ro}(\cdot )\) denote the real-part and round-off operations, respectively. In the third step, the wavelet coefficients are reassigned (synchrosqueezed) based on the instantaneous frequency computed in (2). Hence, the SWT-based time-frequency matrix of the noisy speech signal is computed as (Daubechies et al., 2011)

where \(W^{SWT}(\tau , \tilde{s}(k))\) is the SWT matrix obtained at the kth scale, \(\tilde{s}(k)\). The range of \(\tilde{s}(k)\) used for reassigning the wavelet coefficients in (3) is \([\tilde{s}(k)-\frac{1}{2}\varDelta \tilde{s}, \tilde{s}(k)+\frac{1}{2}\varDelta \tilde{s}]\), with \(\varDelta \tilde{s}=\tilde{s}(k)-\tilde{s}(k-1)\). Similarly, \(\varDelta s(k)\) is evaluated as \(\varDelta s(k)=s(k)-s(k-1)\) (Daubechies et al., 2011). Figures 2 and 3 show the oscillograms and the corresponding SWT-based time-frequency contour plots at SNRs of 5 dB and −5 dB, respectively. It is observed that the time-frequency plots of different speech signals (oscillograms) have different characteristics. Therefore, a DCNN model designed using the SWT-based time-frequency images of speech signals can be used for automatic background classification.
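The three SWT steps can be sketched in NumPy under stated assumptions. The mother wavelet is not named in the text, so this sketch assumes an analytic Morlet wavelet (\(\omega_0 = 6\)). Step 1 computes the CWT and its time derivative in the Fourier domain; step 2 takes the instantaneous frequency as the real part of \(-j\,\partial_\tau W / W\) (the standard form in Daubechies et al., 2011), converted to Hz; step 3 adds each coefficient, weighted by \(s(k)^{-3/2}\varDelta s(k)\), to the nearest of a set of linearly spaced frequency bins, which play the role of \(\tilde{s}(k)\). The function name `swt`, the frequency range, and the bin counts are hypothetical choices, and the authors' exact normalization may differ.

```python
import numpy as np

def swt(z, fs, f_min=50.0, f_max=None, n_scales=128, n_bins=128, omega0=6.0, gamma=1e-6):
    """Synchrosqueezed Morlet CWT of a real signal z sampled at fs Hz.

    Hypothetical helper; returns (T, freqs) with T of shape (n_bins, len(z))
    and freqs the bin centres in Hz.
    """
    z = np.asarray(z, dtype=float)
    N = len(z)
    if f_max is None:
        f_max = 0.4 * fs
    # Scales s(k) in samples: the Morlet centre frequency omega0 / s(k)
    # sweeps from f_max down to f_min, so s(k) increases with k.
    scales = omega0 * fs / (2 * np.pi * np.geomspace(f_max, f_min, n_scales))

    # Step 1: CWT W(tau, s(k)) and its time derivative via the FFT.
    Z = np.fft.fft(z)
    omega = 2 * np.pi * np.fft.fftfreq(N)  # angular frequency, rad/sample
    psi_hat = np.sqrt(scales)[:, None] * np.exp(-0.5 * (scales[:, None] * omega - omega0) ** 2)
    psi_hat[:, omega <= 0] = 0.0           # analytic wavelet: no negative frequencies
    W = np.fft.ifft(Z * psi_hat, axis=1)
    dW = np.fft.ifft(1j * omega * Z * psi_hat, axis=1)

    # Step 2: instantaneous frequency Re(-j dW / W) in Hz, only where |W| is not negligible.
    k, tau = np.nonzero(np.abs(W) > gamma * np.abs(W).max())
    inst_f = np.real(-1j * dW[k, tau] / W[k, tau]) * fs / (2 * np.pi)

    # Step 3: round each instantaneous frequency to the nearest bin and
    # accumulate W * s^(-3/2) * delta_s / delta_f there.
    freqs = np.linspace(f_min, f_max, n_bins)
    d_f = freqs[1] - freqs[0]
    d_s = np.diff(scales, prepend=2 * scales[0] - scales[1])  # s(k) - s(k-1)
    bins = np.rint((inst_f - f_min) / d_f).astype(int)
    keep = (bins >= 0) & (bins < n_bins)
    k, tau, bins = k[keep], tau[keep], bins[keep]
    T = np.zeros((n_bins, N), dtype=complex)
    np.add.at(T, (bins, tau), W[k, tau] * scales[k] ** -1.5 * d_s[k] / d_f)
    return T, freqs
```

The magnitude `np.abs(T)` is what would be rendered as a time-frequency image; the plotting and resizing steps that produce the \(250\times 200\times 3\) inputs are not specified in the text. For a full 3-s clip at 8 kHz, each complex array above holds 128 × 24,000 values (about 49 MB), so memory use is worth keeping in mind.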

2.3 Deep convolutional neural network architecture

In this work, we propose a very lightweight deep convolutional neural network (CNN). CNNs have been shown to learn invariant features successfully by means of local filtering and max pooling (Cao et al., 2019). The architecture of the proposed CNN model has 11 layers, as shown in Fig. 4. The first layer is a batch normalization layer, followed by convolutional layer-1 (tanh activation). The third layer is max pooling layer-1, followed by convolutional layer-2 (tanh activation), whose output feeds max pooling layer-2. Next comes a dropout layer, followed by convolutional layer-3 (tanh activation), whose output is fed to max pooling layer-3. The ninth layer is a flatten layer, followed by a dense layer (softmax activation). The final layer of the proposed CNN model is the cross-entropy output layer. The number of parameters and the output shape of each layer are listed in Table 1. Each convolutional layer uses a kernel size of 4 × 4, and each max pooling layer uses a pooling size of 3 × 3. For this model, 270 images of size \(250\times 200\times 3\) are taken from each class, giving a total of 2970 images. Of these, 80% are used for training and 20% for validation.
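To see how the \(250\times 200\times 3\) input shrinks through the layer stack, the following sketch computes each layer's output shape and parameter count. It assumes Keras-style defaults that the text does not state: stride-1 "valid" convolutions, pooling strides equal to the pool size, and batch normalization counted with four values per channel (scale, offset, moving mean, moving variance). The filter counts are hypothetical placeholders, so the totals need not match Table 1.

```python
def layer_table(input_shape=(250, 200, 3), filters=(16, 32, 32), kernel=4, pool=3, n_classes=11):
    """Return (layer name, output shape, parameter count) for the stack described above.

    Assumptions (not from the paper): 'valid' padding, stride-1 convolutions,
    pooling stride equal to the pool size, and hypothetical filter counts.
    """
    h, w, c = input_shape
    rows = [("BatchNormalization", (h, w, c), 4 * c)]  # gamma, beta, moving mean, moving variance
    for i, f in enumerate(filters, start=1):
        h, w = h - kernel + 1, w - kernel + 1            # 'valid' 4 x 4 convolution
        rows.append((f"Conv2D-{i} (tanh)", (h, w, f), (kernel * kernel * c + 1) * f))
        c = f
        h, w = h // pool, w // pool                      # non-overlapping 3 x 3 pooling
        rows.append((f"MaxPooling2D-{i}", (h, w, c), 0))
        if i == 2:
            rows.append(("Dropout", (h, w, c), 0))       # dropout sits after max pooling layer-2
    flat = h * w * c
    rows.append(("Flatten", (flat,), 0))
    rows.append(("Dense (softmax)", (flat + 1) and (n_classes,), (flat + 1) * n_classes))
    return rows
```

For example, `for name, shape, params in layer_table(): print(name, shape, params)` prints one row per layer. The cross-entropy output layer has no weights and is therefore not listed. With these assumptions, the first convolution maps 250 × 200 to 247 × 197, and three conv/pool stages leave a 7 × 5 spatial grid before flattening.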

The batch normalization layer allows each layer of the network to learn more independently. It normalizes the output of each layer before passing it on, which reduces the network's sensitivity to initialization and speeds up training. The convolutional layers extract learnable feature maps (FMPs) from the input image, and the pooling layers reduce these features layer by layer, which helps the network train faster. The tanh layer is a nonlinear activation layer that applies the tanh function to its input. The output of the nth tanh (convolutional) layer can be calculated as (Panda et al., 2020)

where \(U^{n-1}\) denotes the output of the previous layer, \(n-1\), and T denotes the total number of FMPs in a convolution layer. Here, tanh is the nonlinear activation function used in the convolution layer. The two most commonly used pooling layers, max pooling and average pooling, are defined as (Panda et al., 2020)

and

respectively. Here, n denotes the index of the pooling layer's feature map. In classification problems, the softmax layer follows the final dense layer, and the last layer is the classification layer. The output of the fully connected layer is obtained as (Panda et al., 2020)

and the output of the Softmax layer is

respectively. Here, \(X^{n}\) and \(y^{n}\) are the weight and bias of the nth fully connected layer, respectively, and N is the number of output classes. For multi-class classification, the categorical cross-entropy loss of the final classification layer is computed as

where \(\mu\) is the number of training samples, and \(V_{i,k}\) and \(H_{i,k}\) represent the actual output and the calculated hypothesis of the network, respectively.
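As a concrete reference for these layer operations, the following NumPy sketch implements their standard forms: a tanh convolution producing one feature map (computed as a valid cross-correlation, as deep-learning libraries do), non-overlapping max and average pooling, a fully connected layer with weight \(X\) and bias \(y\) followed by softmax, and the categorical cross-entropy averaged over \(\mu\) samples. The notation follows the text, but the exact formulation in Panda et al. (2020) may differ in details such as padding and normalization.

```python
import numpy as np

def conv_tanh(U, K, b):
    """One output feature map: tanh(sum over input maps of a valid 2-D cross-correlation + b).

    U: (H, W, C_in) output of layer n-1; K: (kh, kw, C_in) kernel; b: scalar bias.
    """
    kh, kw, _ = K.shape
    H, W = U.shape[0] - kh + 1, U.shape[1] - kw + 1
    out = np.empty((H, W))
    for i in range(H):
        for j in range(W):
            out[i, j] = np.sum(U[i:i + kh, j:j + kw, :] * K)
    return np.tanh(out + b)

def _blocks(fm, p):
    """Split a 2-D map into non-overlapping p x p blocks (edge rows/cols that do not fit are dropped)."""
    h, w = fm.shape[0] // p * p, fm.shape[1] // p * p
    return fm[:h, :w].reshape(h // p, p, w // p, p)

def max_pool(fm, p):
    return _blocks(fm, p).max(axis=(1, 3))   # largest value in each block

def avg_pool(fm, p):
    return _blocks(fm, p).mean(axis=(1, 3))  # mean value in each block

def dense_softmax(u, X, y):
    """Fully connected layer X u + y followed by a numerically stable softmax over N classes."""
    a = X @ u + y
    e = np.exp(a - a.max())                  # subtracting the max avoids overflow
    return e / e.sum()

def categorical_cross_entropy(V, H, eps=1e-12):
    """-(1/mu) * sum_i sum_k V[i, k] * log H[i, k] for mu samples (V one-hot, H softmax outputs)."""
    mu = V.shape[0]
    return -np.sum(V * np.log(np.clip(H, eps, 1.0))) / mu
```

With 3 × 3 pooling, a 7 × 5 map reduces to 2 × 1, because rows and columns that do not fill a complete block are dropped, matching the behaviour of "valid" pooling.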

To update network parameters such as the weights and biases, we use the Adam solver, which obtains these parameters by minimizing the loss (cost) function (Panda et al., 2020). The Adam optimization algorithm is an extension of stochastic gradient descent that combines momentum with root mean square propagation (RMSProp). It uses individual adaptive learning rates for each parameter, derived from estimates of the first- and second-order moments of the gradients.
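The Adam update can be sketched in a few lines of plain Python. The hyperparameter defaults below (learning rate 0.001, \(\beta_1 = 0.9\), \(\beta_2 = 0.999\), \(\epsilon = 10^{-8}\)) are the values recommended in the original Adam paper; the text does not report the values used for training, so they are assumptions here, and the function name `adam` is illustrative.

```python
import math

def adam(grad, w0, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8, steps=1):
    """Minimize a function with Adam given its gradient; parameters are a list of floats."""
    w = list(w0)
    m = [0.0] * len(w)  # first-moment estimate (momentum)
    v = [0.0] * len(w)  # second-moment estimate (RMSProp-style)
    for t in range(1, steps + 1):
        g = grad(w)
        for i in range(len(w)):
            m[i] = beta1 * m[i] + (1 - beta1) * g[i]
            v[i] = beta2 * v[i] + (1 - beta2) * g[i] ** 2
            m_hat = m[i] / (1 - beta1 ** t)  # bias-corrected moments
            v_hat = v[i] / (1 - beta2 ** t)
            # Per-parameter adaptive step: lr scaled by m_hat / sqrt(v_hat).
            w[i] -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w
```

For \(f(w) = w^2\) starting at \(w = 1\), the bias correction makes the first step almost exactly equal to the learning rate, so `adam(lambda w: [2 * w[0]], [1.0])` returns a value very close to 0.999. This illustrates why Adam's step size is largely insensitive to the raw gradient scale.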

2.4 Benchmark models

We compare the proposed CNN model with the benchmark models described below.

2.4.1 DenseNet

DenseNet is a densely connected convolutional network made up of multiple convolution layers: within each dense block, the input to every convolution layer consists of the outputs of all preceding layers. DenseNet models come in different variants, including DenseNet121, DenseNet169, DenseNet201, and DenseNet264. We compare the performance of the proposed model with the DenseNet121, DenseNet169, and DenseNet201 architectures pre-trained on the ImageNet dataset.
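Because a dense block concatenates feature maps rather than summing them, the number of input channels grows linearly with depth. The sketch below makes this explicit for a block with \(k_0\) input channels and growth rate \(k\) (each layer adds \(k\) new feature maps). The function name is illustrative. For instance, the first dense block of DenseNet121 has 64 input channels, a growth rate of 32, and 6 layers.

```python
def dense_block_channels(k0, growth_rate, n_layers):
    """Input channels seen by each layer of a dense block, and the block's output channels.

    Layer l (1-based) receives the block input plus the outputs of the l - 1
    earlier layers, i.e. k0 + (l - 1) * growth_rate channels, because inputs
    are concatenated along the channel axis.
    """
    inputs = [k0 + (l - 1) * growth_rate for l in range(1, n_layers + 1)]
    return inputs, k0 + n_layers * growth_rate
```

With the DenseNet121 settings above, the block's six layers see 64, 96, 128, 160, 192, and 224 input channels, and the block outputs 256 channels.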

2.4.2 InceptionNet

In this network, a typical inception layer consists of parallel 1 × 1, 3 × 3, and 5 × 5 convolution layers whose outputs are concatenated to form the input to the next layer, and the network is built by stacking such layers. In this paper, we consider InceptionV3 and InceptionResNetV2 pre-trained on the ImageNet dataset.

2.4.3 MobileNetV3

Unlike the other models, this network is optimized for fast inference on mobile devices. It was designed using AutoML techniques, namely neural architecture search. It incorporates squeeze-and-excitation blocks and replaces the sigmoid function with a computationally cheaper hard-sigmoid approximation suited to mobile devices. It also uses a modified version of the inverted bottleneck layer first introduced in MobileNetV2. This network comes in two sizes: MobileNetV3Large and MobileNetV3Small. In this work, we used MobileNetV2, MobileNetV3Large, and MobileNetV3Small pre-trained on the ImageNet dataset.
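MobileNetV3's cheaper activations are piecewise-linear stand-ins built from ReLU6: the hard sigmoid \(\mathrm{ReLU6}(x+3)/6\) approximates the sigmoid, and the hard swish \(x \cdot \mathrm{ReLU6}(x+3)/6\) approximates swish. The short sketch below defines both alongside the exact sigmoid for comparison. It is an illustration of the formulas, not code taken from any particular library.

```python
import math

def relu6(x):
    """ReLU clipped at 6."""
    return min(max(x, 0.0), 6.0)

def hard_sigmoid(x):
    """Piecewise-linear sigmoid approximation: 0 below -3, 1 above 3, linear in between."""
    return relu6(x + 3.0) / 6.0

def hard_swish(x):
    """x * hard_sigmoid(x), the cheap counterpart of swish(x) = x * sigmoid(x)."""
    return x * hard_sigmoid(x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))
```

Over the range from −8 to 8, the hard sigmoid stays within about 0.07 of the exact sigmoid while needing only additions, a clip, and a multiplication, which is why it suits mobile hardware.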

2.4.4 NASNet

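The Neural Architecture Search Network (NASNet) is built by stacking two types of cells discovered by neural architecture search: normal cells, which preserve the spatial resolution of their input, and reduction cells, which reduce it. In this paper, we consider NASNetMobile pre-trained on the ImageNet dataset.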

2.4.5 ResNet

The ResNet network is made up of a large number of residual blocks. In the shallower variants, each block consists of two 3 × 3 convolution layers with the same number of output channels, whereas deeper variants use bottleneck blocks of 1 × 1, 3 × 3, and 1 × 1 convolutions. Skip connections in the residual network perform identity mappings that are added to the output of each block. ResNet models include ResNet18, ResNet34, ResNet50, ResNet101, ResNet110, ResNet152, ResNet162, and ResNet1202. In this work, we consider the ResNet50V2, ResNet101V2, and ResNet152V2 architectures pre-trained on the ImageNet dataset.
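The identity skip connection is easiest to see in a toy pre-activation unit of the kind used by the V2 models, where the block output is \(x + F(x)\) and \(F\) applies activation before each weight layer. For brevity, this sketch drops batch normalization and uses matrix products in place of convolutions. Both are simplifications, so it illustrates the residual idea rather than reproducing a ResNet50V2 block.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def preact_residual_unit(x, W1, W2):
    """y = x + W2 relu(W1 relu(x)): a simplified pre-activation (V2-style) residual unit.

    Batch normalization is omitted and matrix products stand in for convolutions.
    """
    return x + W2 @ relu(W1 @ relu(x))
```

If the residual branch learns zero weights, the unit passes its input through unchanged. This is why very deep residual networks remain easy to optimize: each block only has to learn a correction to the identity mapping.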

2.4.6 VGG

VGG is built from 3 × 3 convolutional layers stacked on top of each other, and max pooling is used to reduce the spatial size. In this work, we consider two VGG models, VGG16 and VGG19, pre-trained on the ImageNet dataset.
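Stacking small kernels is the core design choice of VGG. Two stacked 3 × 3 convolutions see a 5 × 5 region, and three see a 7 × 7 region, yet they use fewer weights than a single large kernel and add extra nonlinearities. The arithmetic below assumes C input and C output channels per layer and ignores biases. The function names are illustrative.

```python
def stacked_3x3(n_layers, channels):
    """Receptive field and weight count of n stacked 3 x 3 convolutions (C -> C channels, no biases)."""
    receptive_field = 2 * n_layers + 1   # each extra 3 x 3 layer widens the field by 2
    weights = n_layers * 3 * 3 * channels * channels
    return receptive_field, weights

def single_kxk(k, channels):
    """Receptive field and weight count of one k x k convolution (C -> C channels, no biases)."""
    return k, k * k * channels * channels
```

For 256 channels, two stacked 3 × 3 layers need 1,179,648 weights, compared with 1,638,400 for a single 5 × 5 layer with the same receptive field.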

2.4.7 Xception

The Xception network is an extension of InceptionNet that replaces the standard Inception blocks with depthwise separable convolutions. In this study, we used an Xception model pre-trained on the ImageNet dataset.
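The saving from depthwise separable convolutions can be quantified directly. A standard \(k \times k\) convolution needs \(k^2 C_{in} C_{out}\) weights. A depthwise separable one uses a \(k \times k\) filter per input channel followed by a 1 × 1 pointwise convolution, which gives \(k^2 C_{in} + C_{in} C_{out}\) weights (biases ignored in both cases). The example uses 728 channels, as in Xception's middle flow. The function names are illustrative.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def separable_conv_params(k, c_in, c_out):
    """Weights of a depthwise separable convolution (biases ignored).

    Depthwise: one k x k filter per input channel; pointwise: 1 x 1 convolution mixing channels.
    """
    return k * k * c_in + c_in * c_out
```

For a 3 × 3 layer with 728 input and output channels, this gives 4,769,856 weights for the standard form versus 536,536 for the separable form, roughly a ninefold reduction.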

2.4.8 EfficientNet

EfficientNet is a convolutional neural network that scales the depth, width, and resolution dimensions uniformly using a compound coefficient, instead of scaling them arbitrarily as in conventional practice. In this work, we considered the EfficientNetB0, EfficientNetB1, EfficientNetB2, EfficientNetB3, EfficientNetB4, EfficientNetB5, EfficientNetB6, and EfficientNetB7 models pre-trained on the ImageNet dataset.
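In the EfficientNet paper, compound scaling multiplies depth by \(\alpha^{\phi}\), width by \(\beta^{\phi}\), and resolution by \(\gamma^{\phi}\). The constants \(\alpha = 1.2\), \(\beta = 1.1\), and \(\gamma = 1.15\) were found by a small grid search under the constraint \(\alpha \beta^2 \gamma^2 \approx 2\), so the FLOPs grow roughly as \(2^{\phi}\). The released B1 to B7 configurations use their own rounded coefficients, so the values below are illustrative. The function name is hypothetical.

```python
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution bases from the EfficientNet paper

def compound_scale(phi):
    """Depth, width, and resolution multipliers (alpha^phi, beta^phi, gamma^phi)."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi
```

Because FLOPs scale linearly with depth and quadratically with width and resolution, the product \(\alpha \beta^2 \gamma^2\), which is about 1.92 here, is the per-step FLOP growth factor.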

3 Results and discussion

In this section, the proposed model is evaluated in terms of training and validation accuracy. Further, it is compared with the benchmark models in terms of 5-fold cross-validation accuracy, number of parameters, model size, and inference time.
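The text reports 5-fold cross-validation accuracy as mean (± standard deviation) but does not describe how the folds were formed. The sketch below assumes stratified folds, where each class is shuffled and dealt round-robin across the folds, and summarizes the per-fold accuracies with the population standard deviation (numpy's default). Whether the authors used the sample or population form is not stated. The function names are hypothetical.

```python
import random
import statistics

def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) index lists for k folds.

    The samples of each class are shuffled and dealt round-robin over the
    folds, so every fold keeps the overall class proportions.
    """
    rng = random.Random(seed)
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    folds = [[] for _ in range(k)]
    for idx in by_class.values():
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test

def mean_std(accuracies):
    """Return (mean, population standard deviation), reported as 'mean (± std)'."""
    return statistics.mean(accuracies), statistics.pstdev(accuracies)
```

With 270 images for each of the 11 classes, every test fold holds 54 images per class (594 in total), and every training set holds the remaining 2376. A fresh model would be trained on each split, and the five test accuracies passed to `mean_std`.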

The proposed convolutional neural network model is trained and validated on a dataset created from airplane, airport, babble, car, drone, exhibition, helicopter, restaurant, station, street, and train noise samples. Figure 5 shows the training and validation accuracy as functions of the number of epochs. It is observed from Fig. 5 that the accuracy rises rapidly with the number of epochs and saturates at a maximum of 98.82%. The confusion matrix of the proposed CNN model on the test dataset is shown in Fig. 6. From Fig. 6, it is observed that the proposed model classifies the airplane, exhibition, babble, drone, car, helicopter, and train data with 100% accuracy. The airport data are classified correctly with an accuracy of 97%, with 2% of the samples misclassified as restaurant and 2% as station. The street data are classified correctly with an accuracy of 96%, with 2% misclassified as helicopter and 2% as station. The restaurant data are classified correctly with an accuracy of 95%, with 2% misclassified as babble and 3% as station. Finally, the station data are classified correctly with an accuracy of 92%, with 4% misclassified as restaurant and 4% as airport, as can be observed from Fig. 6.
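The per-class percentages quoted from Fig. 6 are read along the rows of the confusion matrix: each row (true class) is normalized by its total, so the diagonal gives each class's accuracy (recall) and the off-diagonal entries give where its errors go. The sketch below does this normalization. The function names are illustrative, and the 3-class counts in the check are invented, not taken from Fig. 6.

```python
def row_normalize(cm, classes):
    """Row-normalize a confusion matrix (rows = true class, columns = predicted class).

    Entry [i][j] becomes the percentage of class-i samples predicted as class j,
    so the diagonal holds each class's accuracy.
    """
    table = {}
    for name, row in zip(classes, cm):
        total = sum(row)
        table[name] = {c: 100.0 * n / total for c, n in zip(classes, row)}
    return table

def overall_accuracy(cm):
    """Fraction of all samples on the diagonal."""
    return sum(cm[i][i] for i in range(len(cm))) / sum(sum(row) for row in cm)
```

For a hypothetical matrix `[[50, 0, 0], [1, 48, 1], [0, 2, 48]]`, the second class has 96% accuracy, with 2% of its samples assigned to each of the other two classes. Because each row is rounded separately, rounded row percentages need not sum to exactly 100%.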

Pre-trained models such as ResNet, DenseNet, Inception, MobileNet, VGG, NASNetMobile, Xception, and EfficientNet are also trained on the same dataset for a fair comparison with the proposed model. Table 2 lists the accuracy, number of parameters, and model size of the proposed model and the pre-trained models. It is observed from Table 2 that the 5-fold cross-validation accuracy of the proposed model, \(97.96 (\pm 0.53)\%\), is substantially higher than that of all the pre-trained models considered. The next best model is VGG19, with an accuracy of \(80.74 (\pm 1.50)\%\). Thus, we conclude that the proposed model outperforms the pre-trained models in terms of 5-fold cross-validation accuracy, as can also be seen in Fig. 7. Further, the proposed model achieves a test accuracy between 96.8% and 98.82%, whereas the pre-trained models achieve test accuracies of only 65.31% to 81.98%. The proposed model achieves this higher accuracy with only 32,879 parameters, far fewer than any of the benchmark models. As Table 2 shows, the benchmark model with the fewest parameters is MobileNetV3Small, with 1,541,243 parameters. Table 2 also shows that the proposed model has a size of only 439 KB, whereas the sizes of the pre-trained models range from 6 MB for MobileNetV3Small to 246 MB for EfficientNetB7. Despite its much smaller size, the proposed model achieves a much higher accuracy. Thus, from Table 2, we conclude that the proposed model outperforms the pre-trained models in terms of both accuracy and model size.
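As a rough check on the sizes in Table 2, the parameter counts can be converted to storage, assuming each parameter is stored as a 32-bit float (4 bytes). Under that assumption, MobileNetV3Small's 1,541,243 parameters need about 6.2 MB, consistent with the 6 MB reported. The proposed model's 32,879 parameters need about 132 kB, which is smaller than the reported 439 KB; the difference could come from file-format metadata or saved optimizer state, but the text does not say. The helper name is hypothetical.

```python
def float32_weight_bytes(n_params):
    """Bytes needed to store n_params parameters as 32-bit floats (4 bytes each)."""
    return 4 * n_params

proposed = float32_weight_bytes(32_879)               # 131,516 bytes, about 132 kB
mobilenet_v3_small = float32_weight_bytes(1_541_243)  # 6,164,972 bytes, about 6.2 MB
```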

Table 3 shows the inference time of the proposed model and the pre-trained models on different edge computing devices. We consider a total of nine devices for the comparison. From Table 3, the proposed model has an inference time of 0.42 ms, 0.53 ms, 0.56 ms, 0.7 ms, 0.84 ms, 1.7 ms, 2.47 ms, 3.12 ms, and 41.27 ms when run on the Tesla P100-PCIE-16GB, Tesla T4, Tesla P4, Tesla K80, NVIDIA GeForce GTX 1050, NVIDIA Jetson Xavier, Intel(R) Xeon(R) CPU E5-2630 v4, Intel Core i5 (8th gen), and Raspberry Pi, respectively. On every device, this is much lower than the inference time of the benchmark models. Further, the inference time of the proposed model is lowest on the Tesla P100-PCIE-16GB.
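Table 3 does not state how the inference times were measured. A common approach, assumed here, discards a few warm-up calls and then reports the median wall-clock latency of repeated single-sample predictions. The function name and the `predict` callable are hypothetical stand-ins for a trained model's prediction call.

```python
import statistics
import time

def time_inference(predict, sample, warmup=10, runs=100):
    """Median wall-clock latency of predict(sample) in milliseconds.

    Warm-up calls are discarded so that one-time costs (lazy initialization,
    caches, kernel compilation) do not inflate the measurement.
    """
    for _ in range(warmup):
        predict(sample)
    times = []
    for _ in range(runs):
        start = time.perf_counter()
        predict(sample)
        times.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(times)
```

The median is less sensitive than the mean to occasional scheduling hiccups, which matters on small devices such as the Raspberry Pi. On GPUs, `predict` must block until the result is actually available; otherwise, asynchronous execution makes the measured times too small.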

4 Conclusion

This paper proposed a novel deep-learning-based approach for automatically classifying the background information embedded in speech signals. The SWT, a time-frequency analysis technique, was used to convert speech signals into time-frequency plots. These plots were then used with a DCNN to classify the background sound information embedded in the speech signals. The proposed DCNN model is only 439 KB in size and can classify sounds from airplanes, airports, babble, cars, drones, exhibitions, helicopters, restaurants, stations, streets, and trains. Extensive simulations showed that the proposed SWT-based deep learning approach classifies more accurately than pre-trained models such as DenseNet, EfficientNet, InceptionNet, MobileNet, NASNet, ResNet, VGG, and Xception.

Data availability

Noisy samples from the Aurora dataset (Hirsch & Pearce, 2000), as included in the noisy speech corpus NOIZEUS (Hu & Loizou, 2006), are used for the station and exhibition noises. The NOIZEUS corpus was created by asking three female and three male speakers to utter phonetically balanced IEEE English sentences. The drone audio dataset (Al-Emadi et al., 2019), publicly available at (https://github.com/saraalemadi/DroneAudioDataset), is used for drone noise, and the helicopter and airplane noises are taken from the ESC dataset (Piczak, 2015). The speech samples are narrow-band, sampled at 8 kHz, and span 3 s. All samples are saved in WAV format (16-bit PCM, mono) for processing.

References

Al-Emadi, S., Al-Ali, A., Mohammad, A., & Al-Ali, A. (2019). Audio based drone detection and identification using deep learning. In Proceedings of international wireless communications & mobile computing conference, Tangier, Morocco, June 2019 (pp. 459–464).

Cao, X., Togneri, R., Zhang, X., & Yu, Y. (2019). Convolutional neural network with second-order pooling for underwater target classification. IEEE Sensors Journal, 19(8), 3058–3066. https://doi.org/10.1109/JSEN.2018.2886368

Chaki, J. (2021). Pattern analysis based acoustic signal processing: A survey of the state-of-art. International Journal of Speech Technology, 24(4), 913–955.

Das, N., Chakraborty, S., Chaki, J., Padhy, N., & Dey, N. (2021). Fundamentals, present and future perspectives of speech enhancement. International Journal of Speech Technology, 24(4), 883–901.

Daubechies, I., Lu, J., & Wu, H. T. (2011). Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Applied and Computational Harmonic Analysis, 30(2), 243–261.

Griffiths, I., & Langdon, F. (1968). Subjective response to road traffic noise. Journal of Sound and Vibration, 8(1), 16–32. https://doi.org/10.1016/0022-460X(68)90191-0

Hirsch, H. G., & Pearce, D. (2000). The Aurora experimental framework for the performance evaluation of speech recognition systems under noisy conditions. In Proceedings of automatic speech recognition: Challenges for the new millenium, ISCA Tutorial and Research Workshop (ITRW), Paris, France, October 2000.

Hu, Y., & Loizou, P. C. (2006). Subjective comparison of speech enhancement algorithms. In Proceedings of the IEEE international conference on acoustics, speech and signal processing (ICASSP), Toulouse, France, May 2006 (Vol. 1, pp. 153–156).

Huang, L., & Pun, C. M. (2020). Audio replay spoof attack detection by joint segment-based linear filter bank feature extraction and attention-enhanced DenseNet-BiLSTM network. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 28, 1813–1825. https://doi.org/10.1109/TASLP.2020.2998870

Jaiswal, R. K., Yeduri, S. R., & Cenkeramaddi, L. R. (2022). Single-channel speech enhancement using implicit Wiener filter for high-quality speech communication. International Journal of Speech Technology, 25(3), 745–758.

Khunarsal, P., Lursinsap, C., & Raicharoen, T. (2013). Very short time environmental sound classification based on spectrogram pattern matching. Information Sciences, 243, 57–74. https://doi.org/10.1016/j.ins.2013.04.014

Li, S., Li, F., Tang, S., & Luo, F. (2021). Heart sounds classification based on feature fusion using lightweight neural networks. IEEE Transactions on Instrumentation and Measurement, 70, 1–9. https://doi.org/10.1109/TIM.2021.3109389

Ma, L., Milner, B., & Smith, D. (2006). Acoustic environment classification. ACM Transactions on Speech and Language Processing (TSLP), 3(2), 1–22.

Madhavan, S., Tripathy, R. K., & Pachori, R. B. (2019). Time-frequency domain deep convolutional neural network for the classification of focal and non-focal EEG signals. IEEE Sensors Journal, 20(6), 3078–3086.

Martín-Morató, I., Cobos, M., & Ferri, F. J. (2018). Adaptive mid-term representations for robust audio event classification. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 26(12), 2381–2392. https://doi.org/10.1109/TASLP.2018.2865615

Minoura, K., & Hiramatsu, K. (1997). On the significance of an intensive survey in relation to community response to noise. Journal of Sound and Vibration, 205(4), 461–465. https://doi.org/10.1006/jsvi.1997.1012

Oberlin, T., & Meignen, S. (2017). The second-order wavelet synchrosqueezing transform. In 2017 IEEE international conference on acoustics, speech and signal processing (ICASSP) (pp. 3994–3998). IEEE.

Okaba, M., & Tuncer, T. (2021). An automated location detection method in multi-storey buildings using environmental sound classification based on a new center symmetric nonlinear pattern: CS-LBlock-Pat. Automation in Construction, 125, 103645. https://doi.org/10.1016/j.autcon.2021.103645

Panda, R., Jain, S., Tripathy, R., & Acharya, U. R. (2020). Detection of shockable ventricular cardiac arrhythmias from ECG signals using FFREWT filter-bank and deep convolutional neural network. Computers in Biology and Medicine, 124, 103939.

Permana, S. D. H., Saputra, G., Arifitama, B., Yaddarabullah, Caesarendra, W., & Rahim, R. (2022). Classification of bird sounds as an early warning method of forest fires using convolutional neural network (CNN) algorithm. Journal of King Saud University - Computer and Information Sciences, 34(7), 4345–4357. https://doi.org/10.1016/j.jksuci.2021.04.013

Piczak, K. J. (2015). ESC: Dataset for environmental sound classification. In Proceedings of ACM international conference on multimedia, New York, USA, October 2015 (pp. 1015–1018).

Roy, N., Misra, A., & Cook, D. (2016). Ambient and smartphone sensor assisted ADL recognition in multi-inhabitant smart environments. Journal of Ambient Intelligence and Humanized Computing, 7(1), 1–19.

Salomons, E. L., van Leeuwen, H., & Havinga, P. J. (2016). Impact of multiple sound types on environmental sound classification. In 2016 IEEE sensors (pp. 1–3). https://doi.org/10.1109/ICSENS.2016.7808723

Sameh, S., & Lachiri, Z. (2013). Multiclass support vector machines for environmental sounds classification in visual domain based on Log-Gabor filters. International Journal of Speech Technology, 16(2), 203–213.

Seker, H., & Inik, O. (2020). CNNsound: Convolutional neural networks for the classification of environmental sounds. In 2020 The 4th international conference on advances in artificial intelligence (ICAAI 2020) (pp. 79–84). Association for Computing Machinery. https://doi.org/10.1145/3441417.3441431

Yang, W., & Krishnan, S. (2017). Combining temporal features by local binary pattern for acoustic scene classification. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 25(6), 1315–1321. https://doi.org/10.1109/TASLP.2017.2690558

Zhan, Y., & Kuroda, T. (2014). Wearable sensor-based human activity recognition from environmental background sounds. Journal of Ambient Intelligence and Humanized Computing, 5(1), 77–89.

Funding

Open access funding provided by University of Agder. This study was supported by Norges Forskningsråd (Grant Nos. 287918, 280835).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yakkati, R.R., Yeduri, S.R., Tripathy, R.K. et al. Time frequency domain deep CNN for automatic background classification in speech signals. Int J Speech Technol 26, 695–706 (2023). https://doi.org/10.1007/s10772-023-10042-z
