Abstract

Speech recognition is the process by which a computer understands human (natural-language) speech. A syllable-centric speech recognition system identifies the syllable boundaries in the input speech and converts the resulting syllabic units into the corresponding written script or text units. Accurate segmentation of the acoustic speech signal into syllabic units is therefore an important step in developing a highly accurate speech recognition system. This paper presents an automatic syllable-based technique for segmenting continuous speech signals in Indian languages at syllable boundaries. To analyze the performance of the proposed technique, a set of experiments is carried out on speech samples in three Indian languages (Hindi, Bengali and Odia), and the results are compared with those of the existing group delay based segmentation technique and with manual segmentation. The results of all our experiments show the effectiveness of the proposed technique in segmenting syllable units from the original speech samples compared to the existing techniques.

References

Besacier, L., Barnard, E., Karpov, A., & Schultz, T. (2014). Automatic speech recognition for under-resourced languages: A survey. Speech Communication, 56, 85–100.

Gałka, J., Masior, M., & Salasa, M. (2014). Voice authentication embedded solution for secured access control. IEEE Transactions on Consumer Electronics, 60(4), 653–661.

He, Y., Han, J., Zheng, T., & Sun, G. (2014). A new framework for robust speech recognition in complex channel environments. Digital Signal Processing, 32, 109–123.

Kay, S. M., & Sudhaker, R. (1986). A zero crossing-based spectrum analyzer. IEEE Transactions on Acoustics, Speech, and Signal Processing, 34(1), 96–104.

Kelly, F., Drygajlo, A., & Harte, N. (2013). Speaker verification in score-ageing-quality classification space. Computer Speech & Language, 27(5), 1068–1084.

Kitaoka, N., Enami, D., & Nakagawa, S. (2014). Effect of acoustic and linguistic contexts on human and machine speech recognition. Computer Speech & Language, 28(3), 769–787.

Koolagudi, S. G., & Rao, K. S. (2012). Emotion recognition from speech using source, system, and prosodic features. International Journal of Speech Technology, 15(2), 265–289.

Lau, Y. K., & Chan, C. K. (1985). Speech recognition based on zero crossing rate and energy. IEEE Transactions on Acoustics, Speech, and Signal Processing, 33(1), 320–323.

Li, M., Han, K. J., & Narayanan, S. (2013). Automatic speaker age and gender recognition using acoustic and prosodic level information fusion. Computer Speech & Language, 27(1), 151–167.

Lin, C. H., Wu, C. H., Ting, P. Y., & Wang, H. M. (1996). Frameworks for recognition of Mandarin syllables with tones using sub-syllabic units. Speech Communication, 18(2), 175–190.

Lippmann, R. P. (1997). Speech recognition by machines and humans. Speech Communication, 22(1), 1–15.

Mao, Q., Dong, M., Huang, Z., & Zhan, Y. (2014). Learning Salient Features for Speech Emotion Recognition Using Convolutional Neural Networks. IEEE Transactions on Multimedia, 16(8), 2203–2213.

McLoughlin, I. V. (2014). Super-audible voice activity detection. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 22(9), 1424–1433.

Musfir, M., Krishnan, K. R., & Murthy, H. (2014). Analysis of fricatives, stop consonants and nasals in the automatic segmentation of speech using the group delay algorithm. In Twentieth National Conference on Communications (NCC) (pp. 1–6).

Obin, N., Lamare, F., & Roebel, A. (2013). Syll-O-Matic: an adaptive time-frequency representation for the automatic segmentation of speech into syllables. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), (pp. 6699–6703).

Origlia, A., Cutugno, F., & Galatà, V. (2014). Continuous emotion recognition with phonetic syllables. Speech Communication, 57, 155–169.

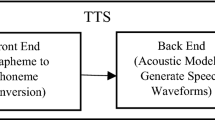

Panda, S. P., & Nayak, A. K. (2015). An efficient model for text-to-speech synthesis in Indian languages. International Journal of Speech Technology, 18(3), 305–315.

Panda, S. P., Nayak, A. K., & Patnaik, S. (2015). Text-to-speech synthesis with an Indian language perspective. International Journal of Grid and Utility Computing, 6(3–4), 170–178.

Prasad, V. K., Nagarajan, T., & Murthy, H. A. (2004). Automatic segmentation of continuous speech using minimum phase group delay functions. Speech Communication, 42(3), 429–446.

Prasanna, S., Reddy, B. V. S., & Krishnamoorthy, P. (2009). Vowel onset point detection using source, spectral peaks, and modulation spectrum energies. IEEE Transactions on Audio, Speech, and Language Processing, 17(4), 556–565.

Sakai, T., & Doshita, S. (1963). The automatic speech recognition system for conversational sound. IEEE Transactions on Electronic Computers, 6, 835–846.

Shastri, L., Chang, S., & Greenberg, S. (1999). Syllable detection and segmentation using temporal flow neural networks. In International Congress of Phonetic Sciences (pp. 1721–1724).

Sirigos, J., Fakotakis, N., & Kokkinakis, G. (2002). A hybrid syllable recognition system based on vowel spotting. Speech Communication, 38(3), 427–440.

Sreenivas, T. V., & Niederjohn, R. J. (1992). Zero-crossing based spectral analysis and SVD spectral analysis for formant frequency estimation in noise. IEEE Transactions on Signal Processing, 40(2), 282–293.

Wang, H. M. (2000). Experiments in syllable-based retrieval of broadcast news speech in Mandarin Chinese. Speech Communication, 32(1), 49–60.

Wang, G., & Sim, K. C. (2014). Regression-based context-dependent modeling of deep neural networks for speech recognition. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 22(11), 1660–1669.

Zhao, X., & Shaughnessy, D. O. (2008). A new hybrid approach for automatic speech signal segmentation using silence signal detection, energy convex hull, and spectral variation. In Canadian Conference on Electrical and Computer Engineering (pp. 145–148).

Ziolko, B., Manandhar, S., Wilson, R. C., & Ziolko, M. (2006). Wavelet method of speech segmentation. In 14th European Signal Processing Conference (pp. 1–5).

Cite this article

Panda, S.P., Nayak, A.K. Automatic speech segmentation in syllable centric speech recognition system. Int J Speech Technol 19, 9–18 (2016). https://doi.org/10.1007/s10772-015-9320-6
