Abstract

In contrast to traditional views of instructional design that are often focused on content development, researchers are increasingly exploring learning experience design (LXD) perspectives as a way to espouse a broader and more holistic view of learning. In addition to cognitive and affective perspectives, LXD includes perspectives on human–computer interaction that consist of usability and other interactions (i.e., goal-directed user behavior). However, there is very little consensus about the quantitative instruments and surveys used by individuals to assess how learners interact with technology. This systematic review explored 627 usability studies in learning technology over the last decade in terms of the instruments (RQ1), domains (RQ2), and number of users (RQ3). Findings suggest that many usability studies rely on self-created instruments, which leads to questions about reliability and validity. Moreover, additional research suggests usability studies are largely focused within the medical and STEM domains, with very little focus on educators' perspectives (pre-service, in-service teachers). Implications for theory and practice are discussed.

1 Literature Review

1.1 Emergence of Learning Experience Design (LXD)

A primary goal of educators (teachers, learning designers, etc.) is to consider strategies and resources that support learning among students. Within the field of instructional and learning design, this has often focused on the development of learning environments that support students’ construction of knowledge. This emphasis has often outlined theories and models that foster cognitive and socio-emotional learning outcomes as learners engage with course materials (Campbell et al., 2020; Ge et al., 2016). Recently, theorists have increasingly advocated for a more holistic view of learning, also known as “learning experience design” (LXD) (Chang & Kuwata, 2020). To date, there are various emergent perspectives to define elements of LXD. For example, North (2023) suggests LXD “considers the intention of the content and how it will be used in the organization to design the best experience for that use”. Others such as Floor (2023) and Schmidt and Huang (2022) extend this view to underscore the human-centered and goal-oriented nature of LXD. As an extension of conceptual discourse, Tawfik and colleagues (in press) conducted a Delphi study with LXD practitioners in which they define the phenomenon as follows: “LXD not only considers design approaches, but the broader human experience of interacting with a learning environment. In addition to learners’ knowledge construction, experiential aspects include socio-technical considerations, emotive aspects (e.g., empathy, understanding of learner), and a detailed view of learner characteristics within context. As such, LXD perspectives and methodologies draw from and are informed by fields beyond learning design & technology, educational psychology, learning sciences, and others such as human–computer interaction (HCI) and user-experience design.” Although various definitions have emerged in recent years, these collective views suggest that learning technologies should not solely focus on content design or performance goals, but also elevate the individual and their interaction with the learning environment. This includes the aforementioned learning outcomes (cognitive, socio-emotional), but also considers the human–computer interaction (e.g., user experience, usability) regarding the technical features and users’ ability to complete tasks within the interface (Gray, 2020; Lu et al., 2022; Tawfik et al., 2022).

There are various emerging frameworks that outline different approaches to understanding and defining constructs of learning experience design. For example, frameworks such as the socio-technical pedagogical (STP) framework (Jahnke et al., 2020) and activity theory (Engeström, 1999; Yamagata-Lynch, 2010) describe how learning environments must consider the broader learning context; that is, how individuals are goal directed and leverage technology tools to complete actions within a socially constructed context. Other frameworks, such as that by Tawfik et al. (2022), underscore the relationship between LXD and human-centered learning experiences; that is, the behaviors and interactions that are important as users employ technology for learning. Specifically, this conceptual framework describes LXD in two constructs: (a) interaction with the learning environment and (b) interaction with the learning space. The former includes learning interactions with the interface that are more technical in nature, such as customization, expectation of content placement, functionality of component parts, interface terms aligned with existing mental models, and navigation. Alternatively, the interaction with the learning space includes how the learners engage with specific affordances of technology designed to enhance learning: engagement with the modality of content, dynamic interaction, perceived value of technology features to support learning, and scaffolding. Collectively, these LXD frameworks highlight how the design perspectives extend beyond merely specific content; they detail the experiential and HCI aspects of learning (e.g., UX, usability).

1.2 LXD and Assessment of Usability Within Learning Environments

Usability is a key component of LXD because many learning technologies are often dependent on learners’ technology interaction and ability to navigate the learning environment. If learners experience challenges with usability as they interact with the learning interface, studies show that this can result in decreased learning outcomes (Novak et al., 2018). However, this aspect of LXD is often understudied relative to the design and development of learning environments (Lu et al., 2022). To date, there are various methods evaluators employ to assess technology, which can broadly be defined in terms of qualitative and quantitative approaches (Schmidt et al., 2020). The former largely consists of ethnography, focus groups, and think-aloud studies. Ethnography, a method employed for comprehensive contextual understanding, necessitates that researchers immerse themselves in natural settings to observe and interact with participants, thereby gaining insights into their behaviors and practices (Rizvi et al., 2017). Focus groups, characterized by small, structured discussions moderated by a skilled facilitator, assist in the exploration of participants’ diverse perspectives and group dynamics (Downey, 2007; Maramba et al., 2019). Lastly, a think-aloud qualitative approach explores participants’ cognitive processes and reactions while engaging in goal-directed user tasks (Gregg et al., 2020; Jonassen et al., 1998; Nielsen, 1994). Collectively, qualitative methods of usability generate rich user data that enables a deeper understanding of users’ experiences with the technology (Gray, 2016; Schmidt et al., 2020).

Whereas qualitative research is often less structured, usability evaluators might opt for a more quantitative approach that provides alternative measures of the learner experience. This may include a variety of approaches, such as analytics that capture user-logs or survey research. Alternatively, questionnaires allow researchers and practitioners to quantify specific constructs and gather data at scale during a usability evaluation. Indeed, recent large-scale reviews suggest that questionnaires are the most common form of usability evaluation (Estrada-Molina et al., 2022; Lu et al., 2022). Further analysis by Estrada-Molina et al. (2022) suggests a lack of learning emphasis within the survey items, while the results from Lu et al. (2022) indicate a mix of self-developed and adapted questionnaires outside of education. For example, the System Usability Scale (SUS) (Brooke, 1996) is a popular usability instrument that explores questions related to complexity, inconsistencies, and overall functionality (Vlachogianni & Tselios, 2022). The SUS is therefore widely used as it considers users’ more general perceptions about technical features of the assessed technology. In terms of scoring, the instrument proffers ten questions and then translates the score to a percentile rank that can be categorized as above or below average. Although the instrument originates outside the field of learning design, research shows it has been used extensively as researchers and evaluators assess various technologies (Vlachogianni & Tselios, 2022). Other surveys rooted in the Technology Acceptance Model (TAM) often ask questions related to the ‘perceived ease of use’ construct (Davis, 1989), which is used to measure usability interactions such as flexibility, learnability, and terminology (Perera et al., 2021). Rather than serving as a diagnostic tool, these items are often viewed from a decision-making perspective to determine how perceived usability factors into technology adoption. Moreover, other instruments that measure nuanced interactions not often outlined in older surveys are often self-created (Victoria-Castro et al., 2022), especially as novel technologies (e.g., VR, wearable) and modalities emerge (Lu et al., 2022).
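
As a minimal sketch of the SUS scoring step, the following Python function applies the scoring rule commonly attributed to Brooke (1996): odd-numbered items contribute the response minus one, even-numbered items contribute five minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. The function name and input format are illustrative assumptions. Converting the resulting score to a percentile rank requires normative data and is not shown.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for ten responses on a 1-5 agreement scale.

    `responses` is a hypothetical input format: a list of ten integers,
    ordered as the items appear on the questionnaire.
    """
    if len(responses) != 10:
        raise ValueError("SUS expects exactly ten item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1   # odd items are positively worded
        else:
            total += 5 - response   # even items are negatively worded
    return total * 2.5


# A respondent who answers "3" (neutral) to every item lands mid-scale.
print(sus_score([3] * 10))
```

Because the score is a composite, it summarizes overall perceived usability rather than pointing to a specific interface problem.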

1.3 Research Question

A recent systematic review by Lu et al. (2022) noted that questionnaires were the most widely used form of usability evaluations for learning technology. Although usability methodology is increasingly seen as an important aspect of LXD, there is very little understanding as to what instruments researchers and practitioners employ. This can lead to questions about the validity and reliability of usability in LXD, especially for novel technologies where there are few surveys and established practices for evaluating learners’ interactions using quantitative methods (Martin et al., 2020a, 2020b). Finally, the lack of agreement about usability within the LXD research community can lead to issues of replication, which make it hard to establish trends and gaps around a specific research topic (Christensen et al., 2022). There is therefore a need to provide an overview of the published literature about how researchers and practitioners assess learning technologies. Given this gap in the literature, we propose the following research questions:

1. What are the surveys used to evaluate usability for learning environments?
2. What are the types of technology and domains studied for usability evaluations of learning environments?
3. What are the user characteristics for usability evaluations of learning environments?

2 Methodology

Given the lack of clarity around quantitative methods used by LXD practitioners and researchers, this study employed a systematic review to understand trends in usability evaluations of learning technologies. Specifically, the process employed for this systematic review mirrored the steps outlined in the What Works Clearinghouse Procedures and Standards Handbook (2017).

2.1 Data Sources and Search Parameters

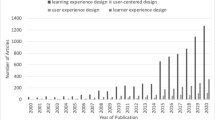

The systematic review query was adapted from a similar usability systematic review published by Lu et al. (2022). Specifically, the terms were broadened and included the following additional terms to capture the evaluation aspect of LXD: assess*, measure*, survey*, and questionnaire*. Taking into consideration the project goals and the librarian’s search recommendations, the research team searched the Scopus, ERIC, PubMed, and IEEE Xplore databases using the following parameters: usability AND (evaluate* OR assess* OR measure*) AND (learn* OR education*) AND ("technology" OR "online" OR "environment" OR "management system" OR "mobile" OR "virtual reality" OR "augmented reality") AND (survey* OR questionnaire*).
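
The Boolean structure of the search string can be made explicit with a short sketch. The Python below rebuilds the query from its concept groups; the variable and function names are illustrative assumptions, and each database may require its own field tags or syntax adjustments that are not shown here.

```python
def or_group(terms):
    """Join alternative terms for one concept into a parenthesized OR clause."""
    return "(" + " OR ".join(terms) + ")"


# Concept groups taken from the search parameters reported above.
evaluation_terms = ["evaluate*", "assess*", "measure*"]
learning_terms = ["learn*", "education*"]
technology_terms = [
    '"technology"', '"online"', '"environment"', '"management system"',
    '"mobile"', '"virtual reality"', '"augmented reality"',
]
instrument_terms = ["survey*", "questionnaire*"]

# Every concept must be present, so the groups are combined with AND.
query = " AND ".join([
    "usability",
    or_group(evaluation_terms),
    or_group(learning_terms),
    or_group(technology_terms),
    or_group(instrument_terms),
])

print(query)
```

Keeping each concept in its own list makes it straightforward to document which terms were added relative to an earlier review.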

2.2 Review Protocol and Data Coding

Given the emergence of LXD in recent years, the goal was to better understand issues of usability of learning technology within the last decade. Hence, the search results were narrowed by date range (January 1, 2013 to December 31, 2023), language (English), and limited to peer-reviewed journal articles, including early access articles. Based on the goals and research questions, the research team developed categories of codes based on criteria and common themes found during the initial article review (see Table 1). The code categories were as follows: Instrument, Setting, Type of Technology, Domain, Participant Type, and Number of Participants. An “Other” category was added as needed for items that appeared fewer than three times. Table 2 shows a breakdown of the codes identified and used for each category.

The initial number of articles returned was 1,610. Similar to prior systematic reviews (Martin et al., 2017, 2020a, 2020b), two researchers individually reviewed the titles and abstracts to determine if each article met the criteria outlined in Fig. 1. After removing articles that did not meet these inclusion criteria, 735 studies remained for review. Prior to the second round of coding, the research team met to review and discuss any disagreements in coding or coding categories, and then the primary investigator completed the final review. The final inter-rater agreement was 100%, with 627 articles meeting the criteria.
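
The article reports only the final agreement figure and does not state how it was calculated. As a sketch under that caveat, the Python below shows two common ways to quantify agreement between two screeners: simple percent agreement and Cohen's kappa, which corrects for agreement expected by chance. The function names and the include/exclude decisions are hypothetical.

```python
from collections import Counter


def percent_agreement(rater_a, rater_b):
    """Share of items on which both raters made the same decision."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    matches = sum(a == b for a, b in zip(rater_a, rater_b))
    return matches / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: (observed - expected agreement) / (1 - expected)."""
    n = len(rater_a)
    observed = percent_agreement(rater_a, rater_b)
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical screening decisions for four abstracts.
a = ["include", "exclude", "include", "include"]
b = ["include", "exclude", "exclude", "include"]
print(percent_agreement(a, b), cohens_kappa(a, b))
```

Reporting a chance-corrected statistic alongside raw agreement can help readers judge screening reliability when one decision (such as exclusion) dominates.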

3 Results

RQ1

What are the surveys used to evaluate usability for learning environments?

The first research question sought to understand what surveys were employed as evaluators assess usability in learning environments. The results found that a large number do not use standard and reliable instruments; rather, many surveys are self-created (39.58%). In terms of more popular and established survey instruments, it appears that the System Usability Scale (24.68%) and some variation of the Technology Acceptance Model (10.58%) were preferred when doing usability studies for learning technologies. Another finding for this research question is that a significant portion was categorized as ‘Others’ (14.26%) or ‘Not Reported’ (12.02%). The “Others” category implies little uniformity among usability instruments. Whereas the “Self Created” category was used when researchers assessed usability with an instrument generated for that specific study, the ‘Not Reported’ category was used when the researchers failed to disclose what instrument they employed (see Table 3).

RQ2

What are the types of technology and domains studied for usability evaluations of learning environments?

The second research question examines the different types of technologies and how frequently each was studied. The data are broken down into 11 categories, including an “Other” category for technologies that were studied only between one and three times across the sample. Results show that the studies are spread primarily among Online Module/Multimedia (22.74%), Virtual Reality/Augmented Reality (21.84%), Mobile Applications (15.81%), and Other (15.06%). It is noteworthy that although many institutions rely on learning management systems (LMS) for education and training, they were not widely studied (8.13%) (see Table 4).

To further answer the second research question, the research team sought to understand the different domains addressed by usability evaluations for learning technologies. The data show that a significant portion were focused on the sciences, with the medical field (39.26%) and STEM learning (28.53%) as the top two categories. Another sizeable category was ‘General’, which consisted of usability evaluations that were not isolated to a specific domain; for example, a usability evaluation conducted across a university for a learning management system. Additionally, few studies considered UX for assistive services despite the fact that this field relies heavily on the use of technology and is federally required for government-funded education initiatives in accordance with Section 508 of the Rehabilitation Act. These technologies include systems that support learners with hearing or vision impairments. Finally, the data also indicate that very few studies focused on education (pre-service, in-service education) despite the fact that instructors, trainers, and teachers are often the ones to implement the technology in a learning environment (see Table 5).

RQ3

What are the user characteristics for usability evaluations of learning environments?

The final research question also considers the different types of users surveyed about their user experience throughout the studies. In terms of analysis, a usability study could conceivably include multiple stakeholders within the same study, such as an app that is focused on education to enhance medical outcomes. So if the usability evaluation included both the child and parent/caregiver, they were each counted in their respective categories. The results indicate that the majority of users assessed were the learners themselves (77.19%) with a lesser percentage being the instructors (17.93%). An insignificant portion fell into different categories including Parent/Caregiver, Expert, Other, or Not Reported. Since these percentages include instances where studies surveyed more than one type of user (i.e., learners and instructors together), the low percentage of included instructors is of interest. While logically a user experience study would emphasize the learner experience, there is opportunity to broaden the understanding of usability by including greater instructor perspective (see Table 6).

The research question also focused on how many users took part in the usability evaluation for a specific learning technology. Because the number of users within a usability study is subject to longstanding debate within the field (Alroobaea & Mayhew, 2014; Hornbæk, 2006; Hwang & Salvendy, 2010), this systematic review sought to distinguish between small (0–10), medium (11–30; 31–50), and large-scale studies (51–100; 100 or more). In contrast to the other tables, there was arguably more uniformity, as represented in Table 7. Interestingly, the data ranged from large-scale usability assessments (100 + at 31.25%) to more moderate in scale (25.80%). Alternatively, very few usability studies were within the range of 0–10. This is noteworthy in light of the discussion around usability test sample size, where established findings suggest that the recommended size is between 3 and 16 users, with five users often cited as sufficient to identify 80% of issues (Nielsen & Landauer, 1993).
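
The five-user heuristic can be illustrated with the problem-discovery model associated with Nielsen and Landauer (1993), in which the expected proportion of usability problems found by n users is 1 − (1 − p)^n, where p is the probability that a single user encounters a given problem. The sketch below assumes p = 0.31, a commonly quoted average; that value and the function name are assumptions for illustration, and actual detection rates vary by product and task.

```python
def proportion_found(n_users, detection_rate=0.31):
    """Expected share of usability problems found by `n_users` test users.

    Implements 1 - (1 - p)^n. The default p = 0.31 is an assumed average
    per-user detection rate, not a value reported in this review.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    if not 0 <= detection_rate <= 1:
        raise ValueError("detection_rate must be between 0 and 1")
    return 1 - (1 - detection_rate) ** n_users


# Expected coverage for a few sample sizes, including the five-user heuristic.
for n in (1, 3, 5, 10, 16):
    print(n, round(proportion_found(n), 3))
```

Under this assumption, five users are expected to uncover a little over 80% of problems, with diminishing returns for each additional user. The model concerns problem discovery in task-based testing, which differs from the survey-driven evaluations that dominate the reviewed studies.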

4 Discussion

RQ1

What are the surveys used to evaluate usability for learning environments?

The field of learning design and technology has garnered considerable interest in the concept of learning experience design, which is broadly defined as a human-centric view of learning as individuals engage in knowledge construction (Chang & Kuwata, 2020; Gray, 2020; Jahnke et al., 2020; Tawfik et al., 2022). Beyond a content-driven approach, this includes additional experiential aspects as individuals employ technology, such as usability and other aspects of human–computer interaction. To date, some research has explored usability within learning technologies through systematic reviews that included between approximately 50 and 120 studies (Estrada-Molina et al., 2022; Lu et al., 2022), which provide insight into the methods, measures, and other key aspects that are essential to LXD. This research builds on these prior studies through analysis of over 600 articles focused on usability studies in education within the last decade.

The first research question sought to understand the specific instruments used to evaluate usability for learning environments. This is important to better understand LXD evaluation from a methodological perspective and identify what tools are used within LXD. Moreover, this would potentially allow the field to identify preferred methods among existing LXD professionals and researchers, while also identifying consistent trends as the instrument is utilized across different contexts. In terms of the first research question, a significant finding is that many usability instruments were self-generated. In some respects, one might argue that this is a logical outcome when a new technology (e.g., wearable technology; robotics) is employed and no standard instrument can accurately assess novel user interactions. However, the overreliance of self-created instruments is problematic from a validity and reliability perspective as these measures are often susceptible to biased results (Davies, 2020). Furthermore, self-created survey instruments can make it difficult to replicate findings, especially when the surveys are not published as part of the usability study (Spector et al., 2015). As researchers look to address this issue, it may highlight the need for more instrument development as advancements in technologies evolve, especially for diverse populations (Schmidt & Glaser, 2021). Rather than depend on a self-created instrument, it is important to have valid and reliable instruments that measure the complexities of LXD as learners interact with technology.

Additional data identify specific instruments that have been used to evaluate different learning technologies. This finding coincides with the discussion that many in LXD often borrow from other fields, especially for evaluation (Schmidt et al., 2020). Specifically, the systematic review finds that instruments such as SUS and variations of TAM have been used extensively as researchers assess the usability of the learning environment. While it is beneficial to employ methods rooted in HCI, other LXD researchers note that instruments must account for the unique interactions that are inherent to learning technologies (Novak et al., 2018; Tawfik et al., 2022), such as learning from failure and iterative knowledge construction. In addition, surveys like the SUS are not necessarily designed to diagnose specific issues that might plague a learning environment; rather, they evaluate the system from a more technical and general perspective (Vlachogianni & Tselios, 2022). Hence, one might question whether these instruments fully capture the complex user experience needs of learning technologies, especially for specific features that one might want to assess (e.g., an embedded artificial intelligence component; perceptions of a novel collaborative tool). In conclusion, the finding underscores the need for more instrument development within LXD that is specifically designed to evaluate the usability of learning environments.

RQ2

What are the domains and types of technology in which usability is evaluated for learning environments?

The second research question focused on the type of technologies and the domain in which usability of learning environments is assessed. In terms of the former, the data seems to be relatively distributed, with four technology types greater than 15%. That said, there are several notable gaps that were identified through this systematic review. First, collaborative technologies only constituted 3.77% of overall usability studies. In light of the first research question, it may highlight the need for instruments and protocols that measure collaborative technologies in which different types of learning interactions take place. For example, the protocols not rooted in education may overlook important collaborative actions that are often complex, such as how to engage in paired programming, sharing resources, perceptions of privacy, and others. As such, the lack of protocols that account for multiple users may be a reason for the lack of studies focused on collaborative technologies. Additional gaps relate to more emergent technologies that are of interest to many educators, such as robotics and artificial intelligence used in intelligent tutoring systems. Although educator interest and implementation rates are increasing to support learning outcomes, the data suggests that the usability evaluations using surveys lack methodological consistency.

The second aspect of this research question centered on what domains were the focus of usability studies of learning technology. Results show that approximately two-thirds (67.79%) were focused on medical education and STEM. In many respects, one might argue that the top two categories are reflective of increased emphasis, continued innovation, and consistent funding allocated for these domains. Moreover, the high number of studies identified in this systematic review suggests that usability studies are indeed an emphasis for new tools in these domains, as opposed to merely implementing without any source of evaluation.

Although there seems to be considerable research pertaining to learning environment usability in the STEM and medical domains, the data suggest that there is a notable gap between these disciplines and other domains. Fewer than roughly four percent of usability studies were focused within the teaching (pre-service, in-service) domain. This is potentially problematic because teachers are often the ones who rely upon and integrate technology in the K-12 setting, so it follows that those in LXD should consider their perspective during a usability evaluation. The finding also has implications regarding the debate about the efficacy of technology and roles of technology in K-12. Some argue that technology can address issues of inequity and access, while others argue that the resources needed for technology may not justify the learning gains (Nye, 2015; Petko et al., 2017; Tawfik et al., 2016; Vivona et al., 2023). The lack of usability evaluations is problematic for implementation and adoption, and the data presented in this systematic review suggest these educator perspectives are severely lacking, which may exacerbate the debate about effective use of technologies. Similarly, usability studies are important for assistive services technologies (e.g., learning tools for neurodivergent students) given the opportunity for technology to address specific challenges encountered by these learners beyond just memorization of information. In line with the lack of usability research focused on K-12 educators, it is problematic that there seems to be a dearth of literature for assistive technology. In conclusion, the findings from the review suggest that future studies should bridge this considerable research gap by extending usability research beyond the medical and STEM domains.

RQ3

What are the user characteristics for usability evaluations of learning environments?

The final research question focused on users' characteristics for participants in usability evaluations of learning technologies. As noted in the results section, there is a debate regarding the appropriate number of participants per research study (Alroobaea & Mayhew, 2014; Hornbæk, 2006; Hwang & Salvendy, 2010), which was addressed by providing nuanced categories in terms of the following evaluations: small (0–10), medium (11–30; 31–50), and large (51–100; 100 +). Regarding scope, a large majority of studies were focused on the learner. One might argue this is a positive outcome to understand the learner perspective, especially given the focus of LXD to be a more human-centric view of learning (Schmidt & Huang, 2022). However, this systematic review also found that very few studies are focused on other perspectives, such as the educator or parent/caregiver. To adopt a systems view of learning technologies (Šumak et al., 2011), it follows that multiple perspectives should be included. The present data from the systematic review suggest that critical perspectives are often excluded regarding the usability of learning environments.

The final research question also sought to understand the quantity of users assessed during usability evaluations. This finding is important because a prevailing assumption among practitioners is that one can find approximately 80% of usability issues with five users (Alroobaea & Mayhew, 2014). The study extends this through broad parameters of small (0–10), medium (11–30; 31–50), and large-scale studies (51–100; 100 or more). Based on this belief, one might thus expect to find that the category of 0–10 participants was the highest. However, a surprising finding is that large-scale usability studies are most common using these instruments, with many studies conducting survey-driven usability research with over 100 participants (32.09%). The next category was more moderate in scale (11–30 users at 25.39%), which suggests there is relative uniformity in terms of how many users are assessed. Rather than make assumptions based on a small number of users that are subject to wide variability, the research shows that usability is assessed in both moderate and large-scale approaches.

5 Conclusion and Future Studies

The current systematic review builds on recent discourse about how to assess a critical design aspect of learning technology, namely usability studies. Recent systematic reviews have conducted similar assessments, finding the number of studies employing surveys between approximately 50 (Estrada-Molina et al., 2022) and 120 (Lu et al., 2022). This systematic review focused on the last decade suggests there appears to be a lack of standardized instrumentation for usability evaluations, with an overreliance on self-created instruments (RQ1). The research is often focused on the medical and STEM domains, with very little usability assessment done on domains such as teacher education, language arts, and others (RQ2). Finally, the number of participants is also extremely variable (RQ3).

Although the systematic review provides clarity regarding characteristics of usability evaluations, there are multiple opportunities to build on this research. While the current study applied a systematic review, it may be helpful to conduct a meta-analysis that quantifies trends among the instruments, especially in terms of the quality, validity, and reliability. The data presented in this article describes what survey instruments were used, but it does not necessarily present usability scores provided from the learner. A meta-analysis that aggregates these scores or a specific number of users during an evaluation study can identify gaps that should be addressed by LXD researchers and practitioners. Similarly, the current systematic review provides an overall count of instruments and types of learning technology, but does not necessarily consider broader trends in adoption of these tools. For example, it may be that findings around few usability studies in robotics and MOOC usability studies are correlated with the implementation of these digital tools. Other technologies may be more restricted based on the country where the research was conducted, which would limit usability evaluations. Further studies might explore the percentage of usability evaluations relative to the published literature about that specific learning technology. This can be especially helpful to identify issues for emergent technologies that might require additional testing during a usability evaluation.

Another follow-up study could revisit the assessment strategies. The current systematic review focused on surveys; however, usability studies employ multiple other strategies to understand the user perspective (Schmidt et al., 2020). A future study could look at approaches such as expert reviews, heuristics, think-alouds, and others that are often employed in usability studies. It may also be beneficial to the literature to look at studies that utilized multiple assessment formats (e.g., both heuristic evaluations and surveys).

References

Alroobaea, R., & Mayhew, P. J. (2014). How many participants are really enough for usability studies? Science and Information Conference, 2014, 48–56. https://doi.org/10.1109/SAI.2014.6918171

Brooke, J. (1996). SUS: A “quick and dirty” usability scale. In P. W. Jordan, B. Thomas, B. A. Weerdmeester, & A. L. McClelland (Eds.), Usability evaluation in industry (pp. 189–194). Taylor & Francis.

Campbell, A., Craig, T., & Collier-Reed, B. (2020). A framework for using learning theories to inform “growth mindset” activities. International Journal of Mathematical Education in Science and Technology, 51(1), 26–43. https://doi.org/10.1080/0020739X.2018.1562118

Chang, Y. K., & Kuwata, J. (2020). Learning experience design: Challenges for novice designers. In M. Schmidt, A. A. Tawfik, I. Jahnke, & Y. Earnshaw (Eds.), Learner and user experience research: An introduction for the field of learning design & technology (pp. 145–163). EdTech Books.

Christensen, R., Hodges, C. B., & Spector, J. M. (2022). A framework for classifying replication studies in educational technologies research. Technology, Knowledge and Learning, 27(4), 1021–1038. https://doi.org/10.1007/s10758-021-09532-3

Davies, R. (2020). Assessing learning outcomes. In M. J. Bishop, E. Boling, J. Elen, & V. Svihla (Eds.), Handbook of research in educational communications and technology: learning design (pp. 521–546). Springer International Publishing. https://doi.org/10.1007/978-3-030-36119-8_25

Davis, F. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

Downey, L. L. (2007). Group usability testing: Evolution in usability techniques. Journal of Usability Studies, 2(3), 133–155.

Engeström, Y. (1999). Activity theory and individual and social transformation. In Y. Engeström, R. Miettinen, & R.-L. Punamäki (Eds.), Perspectives on activity theory (pp. 19–38). Cambridge University Press. https://books.google.ca/books?hl=en&lr=&id=GCVCZy2xHD4C&oi=fnd&pg=PA19&ots=l00JOMD5mU&sig=Tjz8OrwWINxiqKRG0ByVPbIx_WU

Estrada-Molina, O., Fuentes-Cancell, D. R., & Morales, A. A. (2022). The assessment of the usability of digital educational resources: An interdisciplinary analysis from two systematic reviews. Education and Information Technologies, 27(3), 4037–4063. https://doi.org/10.1007/s10639-021-10727-5

Floor, N. (2023). This is learning experience design: What it is, how it works, and why it matters. (Voices That Matter) (1st ed.). New Riders.

Ge, X., Law, V., & Huang, K. (2016). Detangling the interrelationships between self-regulation and ill-structured problem solving in problem-based learning. Interdisciplinary Journal of Problem-Based Learning, 10(2), 1–14. https://doi.org/10.7771/1541-5015.1622

Gray, C. (2016). “It’s More of a Mindset Than a Method” UX Practitioners’ Conception of Design Methods. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems, 4044–4055. https://doi.org/10.1145/2858036.2858410

Gray, C. (2020). Paradigms of knowledge production in human-computer interaction: Towards a framing for learner experience (lx) design. In M. Schmidt, A. A. Tawfik, I. Jahnke, & Y. Earnshaw (Eds.), Learner and user experience research: An introduction for the field of learning design & technology (pp. 51–65). EdTech Books.

Gregg, A., Reid, R., Aldemir, T., Gray, J., Frederick, M., & Garbrick, A. (2020). Think-aloud observations to improve online course design: A case example and “how-to” guide. In M. Schmidt, A. A. Tawfik, I. Jahnke, & Y. Earnshaw (Eds.), Learner and user experience research: An introduction for the field of learning design & technology. EdTech Books.

Hornbæk, K. (2006). Current practice in measuring usability: Challenges to usability studies and research. International Journal of Human-Computer Studies, 64(2), 79–102. https://doi.org/10.1016/j.ijhcs.2005.06.002

Hwang, W., & Salvendy, G. (2010). Number of people required for usability evaluation: The 10±2 rule. Communications of the ACM, 53(5), 130–133. https://doi.org/10.1145/1735223.1735255

Jahnke, I., Schmidt, M., Pham, M., & Singh, K. (2020). Sociotechnical-pedagogical usability for designing and evaluating learner experience in technology-enhanced environments. In M. Schmidt, A. A. Tawfik, I. Jahnke, & Y. Earnshaw (Eds.), Learner and user experience research: An introduction for the field of learning design & technology (pp. 127–144). EdTech Books.

Jonassen, D., Tessmer, M., & Hannum, W. H. (1998). Task analysis methods for instructional design. Routledge.

Lu, J., Schmidt, M., Lee, M., & Huang, R. (2022). Usability research in educational technology: A state-of-the-art systematic review. Educational Technology Research and Development, 70, 1951–1992. https://doi.org/10.1007/s11423-022-10152-6

Maramba, I., Chatterjee, A., & Newman, C. (2019). Methods of usability testing in the development of eHealth applications: A scoping review. International Journal of Medical Informatics, 126, 95–104. https://doi.org/10.1016/j.ijmedinf.2019.03.018

Martin, F., Ahlgrim-Delzell, L., & Budhrani, K. (2017). Systematic review of two decades (1995 to 2014) of research on synchronous online learning. The American Journal of Distance Education, 31(1), 3–19. https://doi.org/10.1080/08923647.2017.1264807

Martin, F., Dennen, V. P., & Bonk, C. J. (2020a). A synthesis of systematic review research on emerging learning environments and technologies. Educational Technology Research and Development, 68(4), 1613–1633. https://doi.org/10.1007/s11423-020-09812-2

Martin, F., Sun, T., & Westine, C. D. (2020b). A systematic review of research on online teaching and learning from 2009 to 2018. Computers & Education, 159, 104009. https://doi.org/10.1016/j.compedu.2020.104009

Nielsen, J., & Landauer, T. K. (1993). A mathematical model of the finding of usability problems. In Proceedings of the INTERACT ’93 and CHI '93 Conference on Human Factors in Computing Systems, 206–213. https://doi.org/10.1145/169059.169166

Nielsen, J. (1994). Usability Engineering. Morgan Kaufmann. https://play.google.com/store/books/details?id=95As2OF67f0C

North, C. (2023). Learning experience design essentials. Association for Talent Development.

Novak, E., Daday, J., & McDaniel, K. (2018). Assessing intrinsic and extraneous cognitive complexity of e-textbook learning. Interacting with Computers, 30(2), 150–161. https://doi.org/10.1093/iwc/iwy001

Nye, B. D. (2015). Intelligent tutoring systems by and for the developing world: A review of trends and approaches for educational technology in a global context. International Journal of Artificial Intelligence in Education, 25(2), 177–203. https://doi.org/10.1007/s40593-014-0028-6

Perera, P., Tennakoon, G., Ahangama, S., Panditharathna, R., & Chathuranga, B. (2021). A systematic mapping of introductory programming languages for novice learners. IEEE Access, 9, 88121–88136. https://doi.org/10.1109/ACCESS.2021.3089560

Petko, D., Cantieni, A., & Prasse, D. (2017). Perceived quality of educational technology matters: A secondary analysis of students’ ICT use, ICT-related attitudes, and PISA 2012 test scores. Journal of Educational Computing Research, 54(8), 1070–1091. https://doi.org/10.1177/0735633116649373

Rizvi, R. F., Marquard, J. L., Hultman, G. M., Adam, T. J., Harder, K. A., & Melton, G. B. (2017). Usability evaluation of electronic health record system around clinical notes usage–An ethnographic study. Applied Clinical Informatics, 8(4), 1095–1105. https://doi.org/10.4338/ACI-2017-04-RA-0067

Schmidt, M., & Glaser, N. (2021). Investigating the usability and learner experience of a virtual reality adaptive skills intervention for adults with autism spectrum disorder. Educational Technology Research and Development, 69(3), 1665–1699. https://doi.org/10.1007/s11423-021-10005-8

Schmidt, M., & Huang, R. (2022). Defining learning experience design: Voices from the field of learning design & technology. TechTrends, 66(2), 141–158. https://doi.org/10.1007/s11528-021-00656-y

Schmidt, M., Tawfik, A. A., Jahnke, I., & Earnshaw, Y. (2020). Methods of user centered design and evaluation for learning designers. In M. Schmidt, A. A. Tawfik, I. Jahnke, & Y. Earnshaw (Eds.), Learner and user experience research: An introduction for the field of learning design & technology. EdTech Books.

Spector, J. M., Johnson, T. E., & Young, P. A. (2015). An editorial on replication studies and scaling up efforts. Educational Technology Research and Development, 63(1), 1–4. https://doi.org/10.1007/s11423-014-9364-3

Šumak, B., Heričko, M., & Pušnik, M. (2011). A meta-analysis of e-learning technology acceptance: The role of user types and e-learning technology types. Computers in Human Behavior, 27(6), 2067–2077. https://doi.org/10.1016/j.chb.2011.08.005

Tawfik, A., Gatewood, J., Gish-Lieberman, J., & Hampton, A. (2022). Toward a definition of learning experience design. Technology, Knowledge and Learning, 27(1), 309–334. https://doi.org/10.1007/s10758-020-09482-2

Tawfik, A., Reeves, T. D., & Stich, A. (2016). Intended and unintended consequences of educational technology on social inequality. TechTrends, 60(6), 598–605. https://doi.org/10.1007/s11528-016-0109-5

Victoria-Castro, A. M., Martin, M., Yamamoto, Y., Ahmad, T., Arora, T., Calderon, F., Desai, N., Gerber, B., Lee, K. A., Jacoby, D., Melchinger, H., Nguyen, A., Shaw, M., Simonov, M., Williams, A., Weinstein, J., & Wilson, F. P. (2022). Pragmatic randomized trial assessing the impact of digital health technology on quality of life in patients with heart failure: Design, rationale and implementation. Clinical Cardiology, 45(8), 839–849. https://doi.org/10.1002/clc.23848

Vivona, R., Demircioglu, M. A., & Audretsch, D. B. (2023). The costs of collaborative innovation. The Journal of Technology Transfer, 48(3), 873–899. https://doi.org/10.1007/s10961-022-09933-1

Vlachogianni, P., & Tselios, N. (2022). Perceived usability evaluation of educational technology using the system usability scale (SUS): A systematic review. Journal of Research on Technology in Education, 54(3), 392–409. https://doi.org/10.1080/15391523.2020.1867938

Yamagata-Lynch, L. C. (2010). Activity systems analysis methods: Understanding complex learning environments. Springer Science & Business Media.

Funding

This material is based upon work in part supported by the National Science Foundation under Grant (Removed for Anonymity). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the (Removed for Anonymity).

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Consent to Participate

The research did not engage in data collection; therefore, informed consent is not relevant to this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Tawfik, A.A., Payne, L., Ketter, H. et al. What instruments do researchers use to evaluate LXD? A systematic review study. Tech Know Learn (2024). https://doi.org/10.1007/s10758-024-09763-0

DOI: https://doi.org/10.1007/s10758-024-09763-0