Abstract

In light of the educational challenges brought about by the COVID-19 pandemic, there is a growing need to bolster online science teaching and learning by incorporating evidence-based pedagogical principles of Learning Experience Design (LXD). In response, we conducted a quasi-experimental, design-based research study involving N = 183 undergraduate students enrolled across two online classes in an upper-division course on Ecology and Evolutionary Biology at a large R1 public university. The study extended over a period of 10 weeks, during which half of the students encountered low-stakes questions embedded directly within the video player, while the remaining half received the same low-stakes questions after viewing all the instructional videos within the unit. This study therefore experimentally manipulated the timing of the questions across the two class conditions. These questions functioned as opportunities for low-stakes content practice and retention, designed to encourage learners to experience the testing effect and to strengthen their conceptual understanding. Across both conditions, we assessed potential differences in total weekly quiz grades, page views, and course participation among students who encountered embedded video questions. We also assessed students’ self-reported engagement, self-regulation, and critical thinking. On average, learners exposed to immediate low-stakes questioning exhibited notably higher summative quiz scores, increased page views, and enhanced participation in the course. Additionally, those who experienced immediate questioning demonstrated heightened levels of online engagement, self-regulation, and critical thinking. We also examined the interplay between treatment conditions, learners’ self-regulation, critical thinking, and quiz grades through a multiple regression model. Notably, the interaction between the immediate questioning condition and self-regulation emerged as a significant factor, suggesting that the influence of immediate questioning on quiz grades varies based on learners’ self-regulation abilities. Collectively, these findings highlight the substantial positive effects of immediate questioning in online video lectures on both academic performance and cognitive skills within an online learning context. We discuss the potential implications for institutions to continually refine their approach in order to effectively promote successful online science teaching and learning, drawing from the foundations of pedagogical learning experience design paradigms and the testing effect model.

1 Introduction

A recurring concern in both traditional in-person and online courses is how best to maintain and sustain learners’ engagement throughout the learning process. The disruptions caused by the COVID-19 pandemic exacerbated these concerns, as competing “edtech” tools were deployed with urgency to facilitate teaching and learning during a time of crisis. That is not to say that introducing “edtech” tools did not aid in supporting students’ learning trajectories during this period, but a major current concern is the widespread deployment of “edtech solutions” without proper alignment with evidence-based pedagogical learning frameworks (Asad et al., 2020; Chick et al., 2020; Sandars et al., 2020), along with the question of whether the tools being deployed had the intended supportive effect on students’ learning. Between 2020 and 2022, the United States government distributed $58.4 billion through the Higher Education Emergency Relief Fund to public universities, which spent more than $1.2 billion on distance learning technologies (EDSCOOP, 2023; O’leary & June, 2023). Educational technology spending by universities included expenditures on software licenses, hardware (such as computers and tablets), learning management systems (LMS), online course development tools, audio-visual equipment, digital content, and various technology-related services, to name a few. In light of the considerable resources dedicated to distance learning in recent years, the need to discern how to employ these “edtech tools” in a manner that is meaningful, impactful, and grounded in evidence-based pedagogies has grown substantially.

Higher education has been grappling with a myriad of technologies to deploy in order to support the exponential increase of undergraduates enrolled in online courses. Data from the United States in the fall of 2020 indicate that approximately 11.8 million (75%) undergraduate students were enrolled in at least one distance learning course, while 7.0 million (44%) of undergraduates exclusively took distance education courses (National Center for Education Statistics [NCES], 2022). In the Fall of 2021 with the return to in-person instruction, about 75% of all postsecondary degree seekers in the U.S. took at least some online classes with around 30% studying exclusively online (NCES, 2022). In the aftermath of the pandemic, the proportion of students engaged in online courses has declined to 60%. Nevertheless, this figure remains notably higher than the levels seen in the pre-pandemic era (NCES, 2022). To meet the increasing demand, universities possess substantial opportunities to explore effective strategies for enhancing the online learning experiences of undergraduate students. However, it’s important to note that merely introducing new tools into instructors’ technological toolkit may not be enough to foster impactful teaching and learning.

To address these concerns, this study employs a quasi-experimental design, implementing embedded video questions into an asynchronous undergraduate Biology course, anchored in the Learning Experience Design (LXD) pedagogical paradigm. The objective is to assess the effectiveness of the embedded video question assessment platform, utilizing video technologies and employing design-based research (DBR) methodologies to evaluate practical methods for fostering active learning in online educational settings. While video content integration in education is recognized as valuable for capturing learners’ attention and delivering complex concepts (Wong et al., 2023, 2024), passive consumption of videos may not fully harness their potential to promote active learning and deeper engagement (Mayer, 2017, 2019). Embedded video questions provide an avenue to transform passive viewing into an interactive and participatory experience (Christiansen et al., 2017; van der Meij & Böckmann, 2021). By strategically embedding thought-provoking questions within video segments, educators can prompt students to reflect on the material, assess comprehension, and immediately evaluate conceptual understanding. Additionally, analyzing the timing and placement of these questions within a video lesson may yield valuable insights into their effectiveness in facilitating the testing effect, a process in which implementing low-stakes retrieval practice over a period of time can help learners integrate new information with prior knowledge (Carpenter, 2009; Littrell-Baez et al., 2015; Richland et al., 2009). Understanding how variations in timing influence student responses and comprehension levels can inform instructional strategies for optimizing the use of interactive elements in educational videos to foster engagement and enhance learning performance.

This study aimed to compare students who received low-stakes questions after watching a series of lecture videos with those who encountered questions immediately embedded within the video player. The objective was to identify differences in total weekly quiz scores, course engagement, as well as learning behaviors such as critical thinking and self-regulation over a span of 10 weeks. While previous studies have examined the efficacy of embedded video questions, few have considered the interrelation of these learning behaviors within the context of the Learning Experience Design (LXD) paradigm and the testing effect model for undergraduate science courses. These findings will contribute to a deeper understanding of evidence-based designs for asynchronous online learning environments and will help in evaluating the effectiveness of embedding video questions with regards to question timing within the LXD paradigm. Considering the increasing demand and substantial investment in online courses within higher education, this study aims to assess the effectiveness of a research-practice partnership in implementing embedded video questions into two courses. The ultimate aim is to determine whether this approach could serve as a scalable model for effectively meeting educational needs in the future.

2 Literature Review

2.1 Learning Experience Design

Learning Experience Design (LXD) encompasses the creation of learning scenarios that transcend the confines of traditional classroom settings, often harnessing the potential of online and educational technologies (Ahn, 2019). This pedagogical paradigm involves crafting impactful learning encounters that are centered around human needs and driven by specific objectives, aimed at achieving distinct learning results (Floor, 2018, 2023; Wong & Hughes, 2022; Wong et al., 2024). LXD differs from the conventional pedagogical process of “instructional design,” which primarily focuses on constructing curricula and instructional programming for knowledge acquisition (Correia, 2021). Instead, LXD can be described as an interdisciplinary integration that combines principles from instructional design, pedagogical teaching approaches, cognitive science, learning sciences, and user experience design (Weigel, 2015). LXD extends beyond the boundaries of traditional educational settings, leveraging online and virtual technologies (Ahn, 2019). As a result, the primary focus of LXD is on devising learning experiences that are human-centered and geared toward specific outcomes (Floor, 2018; Wong & Hughes, 2022).

Practically, LXD is characterized by five essential components: Human-Centered Approach, Objective-Driven Design, Grounded in Learning Theory, Emphasis on Experiential Learning, and Collaborative Interdisciplinary Efforts (Floor, 2018). Taking a human-centered approach considers the needs, preferences, and viewpoints of the learners, resulting in tailored learning experiences where learners take precedence (Matthews et al., 2017; Wong & Hughes, 2022). An objective-driven approach to course design curates learning experiences that are intentionally structured to align with specific objectives, making every learning activity purposeful and pertinent to support students’ learning experiences (Floor, 2018; Wong et al., 2022). LXD is also grounded in learning theories, such that the design process is informed by evidence-based practices drawn from cognitive science and learning sciences (Ahn et al., 2019). Furthermore, LXD places a large emphasis on experiential learning where active and hands-on learning techniques, along with real-world applications, facilitate deeper understanding and retention (Floor, 2018, 2023; Wong et al., 2024). Lastly, LXD is interdisciplinary, bringing together professionals from diverse backgrounds, including instructional designers, educators, cognitive scientists, and user experience designers, to forge comprehensive and well-rounded learning experiences (Weigel, 2015). Each of these facets underscores the significance of empathy, where both intended and unintended learning design outcomes are meticulously taken into account to enhance learners’ experiences (Matthews et al., 2017; Wong & Hughes, 2022). Consequently, LXD broadens the scope of learning experiences, enabling instructors and designers to resonate with learners and enrich the repertoire of learning design strategies (Ahn et al., 2019; Weigel, 2015), thus synergizing with the utilization of video as a powerful tool for teaching and learning online. 
In tandem with the evolving landscape of educational practices, LXD empowers educators to adapt and enhance their methodologies, fostering successful and enriched learning outcomes (Ahn, 2019; Floor, 2018, 2023; Wong et al., 2022), while also embracing the dynamic potential of multimedia educational technologies like video in delivering effective and engaging instructional content.

2.2 Video as a Tool for Teaching and Learning

Video and multimedia educational technologies have been broadly used as “edtech tools” for teaching and learning over the last three decades during in-person instruction and especially now with online learning modalities (Cruse, 2006; Mayer, 2019). Educational videos, also referred to as instructional or explainer videos, serve as a modality for delivering teaching and learning through audio and visuals to demonstrate or illustrate key concepts being taught. Multiple researchers have found evidence for the affordances of video-based learning, citing benefits including reinforcement of reading and lecture materials, aiding the development of common base knowledge for students, enhancing comprehension, providing greater accommodations for diverse learning preferences, increasing student motivation, and promoting teacher effectiveness (Corporation for Public Broadcasting [CPB], 1997, 2004; Cruse, 2006; Kolas, 2015; Wong et al., 2023; Wong et al., 2024; Yousef et al., 2014). Proponents in the field of video research also cite specific video design features that support students’ learning experiences, such as searching, playback, retrieval, and interactivity (Giannakos, 2013; Yousef et al., 2014; Wong et al., 2023b). A study conducted by Wong et al. (2023b) sheds light on the limitations of synchronous Zoom video lectures, based on a survey of more than 600 undergraduates during the pandemic. It underscores the advantages of the design of asynchronous videos in online courses, which may better accommodate student learning needs when compared to traditional synchronous learning (Wong et al., 2023b). Mayer’s (2001, 2019) framework for multimedia learning provides a theoretical and practical foundation for how video-based learning modalities can be used as cognitive tools to support students’ learning experiences. While some researchers have argued that videos are a passive mode of learning, Mayer (2001) explains that viewing educational videos involves the high cognitive activity required for active learning, even when learners appear to be behaviorally inactive, but that this can only occur through well-designed multimedia instruction that specifically fosters cognitive processing in learners (Mayer, 2009, 2019). Following Mayer’s (2019) principles, we designed multimedia lessons supporting students’ cognitive processing through segmenting, pre-training, temporal contiguity, modality matching, and signaling, all implemented through asynchronous embedded video questions.

2.3 Embedded Video Questions

Embedded video questions are a type of educational technology design feature that adds interactive quizzing capacities while students engage in video-based learning. They involve incorporating formative assessments directly within online videos, prompting viewers to answer questions at specific points in the content. While a video is in progress, students viewing it are prompted with questions designed to encourage increased engagement and deeper cognitive processing (Christiansen et al., 2017; Kovacs, 2016; Wong et al., 2023; van der Meij et al., 2021). This is similar to an Audience Response System (ARS) during traditional in-person lectures where an instructor utilizes a live polling system in a lecture hall such as iClickers to present questions to the audience (Pan et al., 2019). Yet, within the context of online learning, students are tasked with independently viewing videos at their convenience, and a set of on-screen questions emerges. This allows learners to pause, reflect, and answer questions at their own pace, fostering a sense of control over the learning process (Ryan & Deci, 2017). These questions serve to promptly recapitulate key concepts, identify potential misconceptions, or promote conceptual understanding of the subject matter. Studies suggest that embedded video questions can significantly improve student engagement compared to traditional video lectures (Chi & Wylie, 2014). Research on the use of embedded video questions has already shown promising empirical results in the field, such as stimulating students’ retrieval and practice, recognition of key facts, and prompting behavioral changes to rewind, review, or repeat the materials that were taught (Cummins et al., 2015; Haagsman et al., 2020; Rice et al., 2019; Wong & Hughes et al., 2022; Wong et al., 2024). 
Embedded video questions have also been shown to transition learners from passively watching a video to actively engaging with the video content (Dunlosky et al., 2013; Kestin & Miller, 2022; Schmitz, 2020), a critically important factor given the rapid shift from in-person to online instruction due to the pandemic. As a result, a myriad of affordances showcase the potential effects of embedded video questions on student learning experiences, one of which is how embedded video questions can be intentionally leveraged with regard to question timing to support active information processing facilitated through the testing effect.

3 Testing Effect

Active information processing in the context of video-based learning is the process in which learners are able to encode relevant information from a video, integrate that information with their prior knowledge, and retrieve that stored information at a later time (Johnson & Mayer, 2009; Schmitz, 2020). This active learning process of retrieval, the learning strategy of rehearsing learning materials through quizzing and testing, is grounded in the cognitive process known as the testing effect. From a cognitive learning perspective, the testing effect is a process in which implementing low-stakes retrieval practice over a period of time can help learners integrate new information with prior knowledge, increasing long-term retention and memory retrieval in order to manipulate knowledge flexibly (Carpenter, 2009; Littrell-Baez et al., 2015; Richland et al., 2009). This reframes assessments not as traditional high-stakes exams but as practice learning events that provide a measure of learners’ knowledge in the current moment, in order to more effectively encourage retention and acquisition of information not yet learned (Adesope et al., 2017; Carrier & Pashler, 1992; Richland et al., 2009). The connection between retrieval and the testing effect represents the sustained, continual, and successive rehearsal of successfully retrieving accurate information from long-term memory storage (Schmitz, 2020).

The frequency of practice and the time allotted between practice sessions also play a role in memory retention. Equally important, the timing and intentionality of when these questions occur within a video may influence learner outcomes. As such, the more instances learners are able to retrieve knowledge from their long-term memory as practice, the better learners may recall and remember that information (Richland et al., 2009). This can come in the form of practice tests, which have shown tremendous success in the cognitive testing literature (Carpenter, 2009; Roediger III & Karpicke, 2006), or, in this study, embedded video questions that facilitate the testing effect. By doing so, we can provide students with an alternative interactive online modality for learning the material in addition to rereading or re-studying (Adesope et al., 2017; Roediger et al., 2006). Learners are presented with opportunities to answer questions frequently and immediately as retrieval practice when watching a video. Active participation through answering questions keeps viewers focused and promotes deeper information processing (Azevedo et al., 2010). We can offer a focused medium for students to recall, retrieve, and recognize crucial concepts (Mayer et al., 2009; van der Meij et al., 2021). This approach aims to cultivate an active learning environment that engages learners’ cognitive processing during online education. It assists students in discerning which aspects of the learning material they have mastered and identifies areas that require further attention (Agarwal et al., 2008; Fiorella & Mayer, 2015, 2018; McDaniel et al., 2011).

4 The Testing Effect on Student Learning Behaviors

Embedded video questions present a potential learning modality that operationalizes the theoretical model of the testing effect which may have tremendous benefits on the nature of student-centered active learning opportunities within an online course, particularly with student learning behaviors such as student engagement, self-regulation, and critical thinking. As such, leveraging the testing effect and the LXD pedagogical paradigm synergistically through the medium of embedded video questions may amplify student learning behaviors in online courses. The following sections review the literature on engagement, self-regulation, and critical thinking.

Student engagement in the online learning environment has garnered significant attention due to its crucial role in influencing learning outcomes, satisfaction, and overall course success (Bolliger & Halupa, 2018; Wang et al., 2013; Wong et al., 2023b; Wong & Hughes, 2022). Broadly defined, student engagement can be characterized as the extent of student commitment or active involvement required to fulfill a learning task (Redmond et al., 2018; Ertmer et al., 2010). Additionally, engagement can extend beyond mere participation and attendance, involving active involvement in discussions, assignments, collaborative activities, and interactions with peers and instructors (Hu & Kuh, 2002; Redmond et al., 2018; Wong et al., 2022). Within an online course, engagement can be elaborated as encompassing the levels of attention, curiosity, interaction, and intrinsic interest that students display throughout an instructional module (Redmond et al., 2018). This also extends to encompass the motivational characteristics that students may exhibit during their learning journey (Pellas, 2014). Several factors influence student online engagement, and they can be broadly categorized into individual, course-related, and institutional factors. Individual factors include self-regulation skills, prior experience with online learning, and motivation (Sansone et al., 2011; Sun & Rueda, 2012). Course-related factors encompass instructional design, content quality, interactivity, and opportunities for collaboration (Pellas, 2014; Czerkawski & Lyman, 2016). Institutional factors involve support services, technological infrastructure, and instructor presence (Swan et al., 2009; Picciano, 2023). Furthermore, research has established a noteworthy and favorable correlation between engagement and various student outcomes, including advancements in learning, satisfaction with the course, and overall course grades (Bolliger & Halupa, 2018; Halverson & Graham, 2019). Instructional designers argue that to enhance engagement, instructors and educators can employ strategies like designing interactive and authentic assignments (Cummins et al., 2015; Floor, 2018), fostering active learning opportunities, and creating supportive online learning environments (Kuh et al., 2005; Wong et al., 2022). Thus, engaged students tend to demonstrate a deeper understanding of the course material, a stronger sense of self-regulation, and improved critical thinking skills (Fredricks et al., 2004; Jaggars & Xu, 2016; Pellas, 2018).

Self-regulation pertains to the inherent ability of individuals to manage and control their cognitive and behavioral functions with the intention of attaining particular objectives (Pellas, 2014; Vrugt & Oort, 2008; Zimmerman & Schunk, 2001). In the context of online courses, self-regulation takes on a more specific definition, encapsulating the degree to which students employ self-regulated metacognitive skills–the ability to reflect on one’s own thinking–during learning activities to ensure success in an online learning environment (Wang et al., 2013; Wolters et al., 2013). Unlike conventional in-person instruction, asynchronous self-paced online courses naturally lack the physical presence of an instructor who can offer immediate guidance and support in facilitating the learning journey. While instructors may maintain accessibility through published videos, course announcements, and email communication, students do not participate in face-to-face interactions within the framework of asynchronous courses. However, the implementation of asynchronous online courses offers learners autonomy, affording them the flexibility to determine when, where, and for how long they engage with course materials (McMahon & Oliver, 2001; Wang et al., 2017). Furthermore, the utilization of embedded video questions in this course taps into Bloom’s taxonomy, featuring both lower and higher-order thinking questions to test learners’ understanding. This medium enables learners to immediately engage with and comprehend conceptual materials through processes such as pausing, remembering, understanding, applying, analyzing, and evaluating, negating the need to postpone these interactions until exam dates (Betts, 2008; Churches, 2008). While this shift places a significant responsibility on the learner compared to traditional instruction, embedded video questions contribute to a student-centered active learning experience (Pulukuri & Abrams, 2021; Torres et al., 2022). 
This approach nurtures students’ self-regulation skills by offering explicit guidance in monitoring their cognitive processes, setting both short-term and long-term objectives, allocating sufficient time for assignments, promoting digital engagement, and supplying appropriate scaffolding (Al-Harthy et al., 2010; Kanuka, 2006; Shneiderman & Hochheiser, 2001). Through this, students actively deploy numerous cognitive and metacognitive strategies to manage, control, and regulate their learning behaviors to meet the demands of their tasks (Moos & Bonde, 2016; Wang et al., 2013). Due to the deliberate application of LXD principles, the course has the capability to enhance the development of students’ self-regulation abilities in the context of online learning (Pulukuri & Abrams, 2021). Consequently, this empowers students to identify their existing knowledge and engage in critical evaluation of information that may need further refinement and clarification.

Leveraging the testing effect model through the integration of embedded video questions also yields notable advantages concerning students’ critical thinking capabilities. Critical thinking involves students’ capacity to employ both new and existing conceptual knowledge to make informed decisions, having evaluated the content at hand (Pintrich et al., 1993). In the context of online courses, critical thinking becomes evident through actions such as actively seeking diverse sources of representation (Richland & Simms, 2015), encountering and learning from unsuccessful retrieval attempts (Richland et al., 2009), and effectively utilizing this information to make informed judgments and draw conclusions (Uzuntiryaki-Kondakci & Capa-Aydin, 2013). To further elaborate, according to Brookfield (1987), critical thinking in the research context involves recognizing and examining the underlying assumptions that shape learners’ thoughts and actions. As students actively practice critical thinking within the learning environment, the research highlights the significance of metacognitive monitoring, which encompasses the self-aware assessment of one’s own thoughts, reactions, perceptions, assumptions, and levels of confidence in the subject matter (Bruning, 2005; Halpern, 1998; Jain & Dowson, 2009; Wang et al., 2013). As such, infusing embedded video questions into the learning process may serve as a strategic pedagogical approach that may catalyze students’ critical thinking skills.

In the context of embedded video questions, students must critically analyze questions, concepts, scenarios, and make judgments on which answer best reflects the problem. As students engage with the videos, they’re prompted to monitor their own thinking processes, question assumptions, and consider alternate perspectives—a quintessential aspect of metacognition that complements critical thinking (Bruning, 2005; Halpern, 1998; Jain & Dowson, 2009; Wang et al., 2013). Sometimes, students might get the answers wrong, but these unsuccessful attempts also contribute to the testing effect in a positive manner (Richland et al., 2009). Unsuccessful attempts serve as learning opportunities to critically analyze and reflect during the low-stakes testing stage so that learners are better prepared later on. Furthermore, cultivating students’ aptitude for critical thinking also has the potential to enhance their transferable skills (Fries et al., 2020), a pivotal competency for STEM undergraduate students at research-intensive institutions (R1), bridging course content to real-world applications. In essence, the interplay between the testing effect model and the use of embedded video questions not only supports students’ critical thinking, but also underscores the intricate relationship between engagement, self-regulation, and course outcomes (Wang et al., 2013).

4.1 Current Study

This study builds on the work of Wong and Hughes (2023) on the implementation of LXD in STEM courses utilizing educational technologies. Utilizing the same course content, course videos, and pedagogical learning design, this Design-Based Research (DBR) approach employs learning theories to assess the effectiveness of design and instructional tools within real-world learner contexts (DBR Collective, 2003; Siek et al., 2014). In this study, we utilized the same instructional videos and course materials as Wong and Hughes (2023), but incorporated iterative design enhancements such as embedded video questions to assess their potential testing effect impacts on students’ learning experiences. This quasi-experimental research therefore contrasts two groups of students who participated in a 10-week undergraduate science online course. Half of these students encountered low-stakes questions integrated directly within the video player (immediate condition), while the other half received questions following a series of video lectures (delayed condition). The aim is to assess how the timing of low-stakes questioning might beneficially influence learners’ science content knowledge, engagement, self-regulation, and critical thinking. Additionally, we assessed students’ learning analytics within the online course, including online page views and course participation, as a proximal measure of learners’ online engagement. We then compared these findings with their self-report survey responses within the online course to corroborate the results. With the implementation of a newly iterated online course grounded in the LXD paradigm and the testing effect model, this study is guided by the following research questions:

RQ1) To what extent does the effect of “immediate vs. delayed low-stakes questioning” influence learners’ total quiz grades, online page views, and course participation rate?

RQ2) To what extent does the effect of “immediate vs. delayed low-stakes questioning” influence learners’ engagement, self-regulation, and critical thinking?

RQ3) To what extent does the relationship between “immediate vs. delayed low-stakes questioning” and learners’ total quiz grades vary depending on their levels of self-regulation and critical thinking?

5 Methodology

5.1 Ethical Considerations

This study, funded by the National Science Foundation (NSF), adheres to the ethical standards mandated by both the university and the grant funding agency. Approval to conduct human subjects research was obtained from the university’s Institutional Review Board (IRB), ensuring compliance with ethical guidelines. The research was categorized as IRB-exempt due to its online, anonymous data collection process, which posed minimal risk to participants. The research protocol received formal approval from the university’s ethics committee. All participants were provided with comprehensive information about the study, including its purpose, procedures, potential risks and benefits, confidentiality measures, and their right to withdraw without consequences, and all provided informed consent before any data collection procedures commenced. Participant data was treated with utmost confidentiality and anonymity, and the study’s questions, topics, and content were designed to avoid causing harm to students.

5.2 Quasi-experimental Design

This research employed a design-based research (DBR) approach, leveraging learning theories to evaluate the effectiveness of design, instructional tools, or products in authentic, real-world settings (DBR Collective, 2003; Siek et al., 2014). The rationale for this research methodology is to assess instructional tools in ecologically valid environments and explore whether these tools enhance students’ learning experiences (Scott et al., 2020). Our decision to adopt a DBR approach arises from the limited research on investigating the efficacy of the Learning Experience Design (LXD) pedagogical paradigm with embedded video questions in online undergraduate science courses. We are also cognizant of previous research indicating that simply inserting questions directly into videos, without evidence-based pedagogical principles, intentional design, and instructional alignment, does not significantly improve learning outcomes (Deng et al., 2023; Deng & Gao, 2023; Marshall & Marshall, 2021). Thus, this DBR study utilizes a Learning Experience Design (LXD) approach to cultivate active learner engagement through the implementation of learning theories such as the testing effect model. We then compare the impact of embedded video questions on learning outcomes within the newly designed self-paced asynchronous online course (See Fig. 1). Subsequently, we test these designs with learners and utilize the findings to iterate, adapt, and redeploy these techniques continually, aiming to enhance the efficacy and gradual evolution in our designs of embedded video questions on students’ learning experiences.

The study involved two equivalently sized classes within the School of Biological Sciences at an R1 university in Southern California, with students voluntarily enrolling in either one of these two classes. The two classes were taught by the same professor on the same topics of Ecology and Evolutionary Biology. This particular course was chosen to serve as a research-practice partnership (RPP), collaborating closely with the professor, educational designers, researchers, and online course creators to customize a course that aligns with the instructor’s and students’ needs returning from the COVID-19 remote learning environment.

The study spanned a 10-week period, allowing sufficient dosage for implementing our learning designs and effectively measuring their impact on students’ learning experiences (See Fig. 1). Selecting a quasi-experimental design allowed us to assess the impact of question timing and placement on students’ comprehension and retention of the material presented in the videos. Following quasi-experimental design principles, the study involved two classes, each assigned to a different treatment condition. The condition in which students received low-stakes questions after watching a series of videos was referred to as “Delayed Questioning,” and the condition in which students received low-stakes questions embedded within the video player was referred to as “Immediate Questioning.” In the delayed questioning condition, students encountered low-stakes questions only after watching all assigned video lectures for the week, while in the immediate questioning condition, students faced questions directly embedded in the video player, time-stamped and deliberately synchronized with the presented conceptual content. The two treatment conditions, “Delayed Questioning” and “Immediate Questioning,” were carefully designed to isolate the effect of question timing while keeping all other variables constant. As such, the low-stakes questions, the quantity of videos, and the number of questions were identical in both conditions, with the only experimental manipulation involving the timing and placement of the questions.

After viewing the videos and answering the low-stakes questions, either embedded directly in the video or presented after all of the videos in the instructional unit, all students took an end-of-week quiz, which served as a summative assessment released on Fridays. The end-of-week quiz was identical and released at the same time and day across both conditions. This approach ensured equitable testing conditions and minimized potential confounding variables. It also allowed for a controlled comparison between the two conditions, helping to determine whether embedding questions directly within the video player led to different learning outcomes compared to presenting questions after watching all of the videos. This methodological rigor facilitated a robust analysis of the impact of question placement on students’ learning experiences and outcomes.

5.3 Participants

The study encompassed a total of n = 183 undergraduate students who were actively enrolled in upper-division courses specializing in Ecology and Evolutionary Biology. Participants were selected based on their voluntary self-enrollment in these upper-division courses during the Winter 2021 enrollment period. No exclusion criteria were applied, allowing for a broad sample encompassing various backgrounds and levels of experience in Ecology and Evolutionary Biology. These courses were part of the curriculum at a prominent R1 research university located in Southern California and were specifically offered within the School of Biological Sciences. Students were able to enroll in the upper-division course as long as they were biological sciences majors and had met their lower-division prerequisites. The demographic makeup of the participants was diverse, with 1.2% identifying as African American, 72.0% as Asian/Pacific Islander, 10.1% as Hispanic, 11.3% as white, and 5.4% as multiracial. Gender distribution among the students consisted of 69.0% females and 31.0% males (See Table 1). Participants self-selected into one of two distinct course sections, each characterized by a different approach to course implementation: (1) the first section featured questions placed at the conclusion of all video scaffolds (n = 92), and (2) the second section incorporated questions that were embedded directly within the video scaffolds themselves (n = 91).

5.4 Learning Experience Design

5.4.1 Video Design

The curriculum delivery integrated innovative self-paced video materials crafted with the Learning Experience Design (LXD) paradigm in mind (Wong et al., 2024). These videos incorporated various digital learning features such as high-quality studio production, 4K multi-camera recording, green screen inserts, voice-over narrations, and animated infographics (See Fig. 2). Underpinning the pedagogical approach of the video delivery was the situated cognition theory (SCT) for e-learning experience design (Brown et al., 1989). In practice, the videos were structured to align with the key elements of SCT, which include modeling, coaching, scaffolding, articulation, reflection, and exploration (Collins et al., 1991; Wong et al., 2024). For instance, the instructor initiated each module by introducing a fundamental concept, offering in-depth explanations supported by evidence, presenting real-world instances demonstrating the application of the concept in research, and exploring the implications of the concept to align with the course’s educational objectives. This approach emphasized immersion in real-world applications, enhancing the overall learning experience.

In the video design process, we adopted an approach where content equivalent to an 80-minute in-person lecture was broken down into smaller, more manageable segments lasting between five and seven minutes. This approach was taken to alleviate the potential for student fatigue, reduce cognitive load, and minimize opportunities for students to become distracted (Humphris & Clark, 2021; Mayer, 2019). Moreover, we scripted the videos to align closely with the course textbook. This alignment served the purpose of pre-training students in fundamental concepts and terminologies using scientific visuals and simplified explanations, thereby preparing them for more in-depth and detailed textbook study. As part of our video design strategy, we integrated embedded questions at specific time points during the video playback. These questions were designed to serve multiple purposes, including assessing students’ comprehension, sustaining their attention, and pinpointing areas of strength and weakness in their understanding. In line with Mayer’s (2019) principles of multimedia design, our videos were crafted to incorporate elements like pretraining, segmenting, temporal contiguity, and signaling (See Fig. 2). These principles ensured that relevant concepts, visuals, and questions were presented concurrently, rather than sequentially (Mayer, 2003, 2019). This approach encouraged active engagement and processing by providing cues to learners within the video content.

5.4.2 Question Design

Students in both the “immediate” and “delayed” conditions experienced low-stakes multiple-choice questions. Low-stakes multiple-choice questions were knowledge check questions that served as opportunities for content practice, retention, and reconstructive exercises, aiming to engage learners in the testing effect and enhance their conceptual understanding (Richland et al., 2009). Grounded in Bloom’s Taxonomy, the low-stakes questions were designed to emphasize lower-order thinking skills, such as “remembering and understanding” concepts in context (Bloom, 2001; Betts, 2008) (See Fig. 2). In contrast, students experienced high-stakes multiple-choice questions on the weekly summative quizzes consisting of higher-order thinking questions that required students to “apply, analyze, and evaluate” scenarios in ecology and evolutionary biology, encouraging learners to break down relationships and make judgments about the information presented (Bloom, 2001; Betts, 2008) (See Fig. 2).

For instance, an example of a low-stakes multiple-choice question that students encountered, shown in Fig. 2, is: “In the hypothetical fish example, the cost of reproduction often involves:” (A) shunting of fats and gonads to provision eggs, (B) shunting of fats to gonads to make more sperm, (C) using fats as a source of fuel for general locomotion, (D) fish face no resource limitations, (E) A and B. The question prompts the learner to “remember” and “understand” what they just watched and to identify what they know or potentially do not know. Questions that prompt learners to “remember” and “understand” are considered lower-order thinking questions on the Bloom’s Taxonomy pyramid (Bloom, 2001). An example of the high-stakes questions that students encountered on their weekly summative quizzes is: “Given the tradeoff between survival and reproduction fertility (the number of offspring), how does natural selection act on species?” (A) Natural selection will minimize the number of mating cycles, (B) Natural selection will maximize fecundity, (C) Natural selection will maximize survivability, (D) Natural selection will compromise between survival and fecundity, (E) None of the above. These high-stakes questions on the weekly summative quizzes are higher-order thinking questions that require learners to “apply, analyze, and evaluate,” which make up the top three pillars of the Bloom’s taxonomy pyramid (Bloom, 2001). The notable difference between low-stakes and high-stakes questions is that the latter require learners to elaborate on their new and existing understandings, critically evaluate between concepts, and apply the concepts in a new scenario or context.
High-stakes questions, or higher-order thinking questions, have been shown to promote the transfer of learning, increase the application of concepts during retrieval practice, and prevent simply recalling facts and memorizing the right answers by heart (Chan, 2010; McDaniel et al., 2013; Mayer, 2014; Richland et al., 2009). This active process allows students to organize the key learning concepts into higher orders and structures. Moreover, the student’s working memory connects new knowledge with prior knowledge, facilitating the transfer to long-term memory and enabling the retrieval of this information at a later time (Mayer, 2014). Together, these strategic question design choices empower students to actively participate in constructive metacognitive evaluations, encouraging learners to contemplate “how and why” they reached their conclusions (See Fig. 2). Research has indicated that such an approach promotes critical thinking and the utilization of elaborative skills among learners in online learning contexts (Tullis & Benjamin, 2011; Wang et al., 2013). Furthermore, by having students answer questions and practice the concepts, our intentions were that students would be better prepared for the high-stakes questions on the weekly quizzes due to the facilitation of testing effect through low-stakes questioning prior.

Based on their respective conditions, learners would encounter low-stakes questions either after watching a series of 6 or 7 lecture videos or integrated directly within each video synchronized to the concept being taught. We opted to have the questions for the “delayed” condition after a series of videos instead of after every video because this time delay allowed us to investigate the effects of timing and spacing between the two treatment conditions. Having all the questions appear at the end of a series of lecture videos also helped to avoid the recency effect and minimize immediate recall for students in the “delayed” condition. Since having questions after every video could also be considered a form of immediate questioning, as the questions would be directly related to the video students just watched, we intentionally designed the “delayed” condition to have all the questions at the end of 6 or 7 videos for that instructional unit to maintain treatment differences. By structuring the questions in the “delayed” condition this way, we aimed to assess whether students retain and integrate knowledge over time, providing a more comprehensive understanding of the learning process and the potential treatment differences of “delayed” compared to “immediate” questioning. Furthermore, we considered that this design choice could mitigate potential fatigue effects that might arise from frequent interruptions of questioning for students in the “immediate” condition. Ultimately, the research design decision for the “delayed” condition to place the low-stakes questions after students watched 6 or 7 videos for that instructional unit provided an optimal treatment comparison between the immediate and delayed conditions.

5.4.3 Course Design and Delivery

The course was implemented within the Canvas Learning Management System (LMS), the official learning platform of the university. The videos recorded for this course were uploaded, designed, and deployed using the Yuja Enterprise Video Platform software. Yuja is a cloud-based content management system (CMS) for video storage, streaming, and e-learning content creation. For this study, we utilized Yuja to store the videos in the cloud, design the embedded video questions platform, and record student grades. After uploading the videos, the questions were entered into the Yuja system with the corresponding answer options at specific time codes. These time codes were determined based on the concepts presented within the video. Typically, lower-order thinking questions (i.e., questions that required remembering and understanding) were placed immediately after introducing a definition of a key concept. Then, higher-order thinking questions (i.e., questions that required analyzing and evaluating) were placed towards the end of the video for students to apply the concepts in context before moving on to the next video. Finally, each video was published from Yuja to Canvas using the Canvas Learning Tools Interoperability (LTI) integration so that all student responses to the embedded video questions were automatically graded and the grades directly updated in the Canvas grade book.

5.5 Data Collection and Instrumentation

Data collection for this study was conducted electronically during the Winter 2021 academic term. All survey measurement instruments were distributed online to the participating students through the Qualtrics XM platform, an online survey tool provided through the university. Students were granted direct access to the surveys through hyperlinks that were seamlessly integrated into their Canvas Learning Management System (LMS) course space, providing a user-friendly, FERPA compliant, and secure centralized data collection environment. Students filled out the surveys immediately after completing their last lesson during the last week of the course on Week 10. When responding to all of the surveys, students were asked to reflect on their learning experiences about the online course they were enrolled in specifically. Having students complete the survey right after their last lesson was an intentional research design decision in order to maintain the rigor, robustness, and quality of responses from students.

5.5.1 Survey Instruments

Three survey measures were given to the participants: the self-regulation and critical thinking subscales of the Motivated Strategies for Learning Questionnaire (MSLQ), and the Perceived Engagement Scale. We maintained the original question count and structure for reliability but made slight adjustments, such as replacing “classroom” with “online course” to align with the study’s online course context. This approach, supported by research (Hall, 2016; Savage, 2018), ensures effectiveness while preserving the survey instruments’ reliability, particularly across different learning modalities.

The MSLQ instrument utilized in this study was originally developed by a collaborative team of researchers from the National Center for Research to Improve Postsecondary Teaching and Learning and the School of Education at the University of Michigan (Pintrich et al., 1993). This well-established self-report instrument is designed to comprehensively assess undergraduate students’ motivations and their utilization of diverse learning strategies. Respondents were presented with a 7-point Likert scale to express their agreement with statements, ranging from 1 (completely disagree) to 7 (completely agree). To evaluate students in the context of the self-paced online course, we focused specifically on the self-regulation and critical thinking subscales of the MSLQ. Sample items in the self-regulation scale included statements such as “When studying for this course I try to determine which concepts I don’t understand well” and “When I become confused about something I’m watching for this class, I go back and try to figure it out.” Sample items for critical thinking include “I often find myself questioning things I hear or read in this course to decide if I find them convincing” and “I try to play around with ideas of my own related to what I am learning in this course.” According to the original authors, these subscales exhibit strong internal consistency, with Cronbach alpha coefficients reported at 0.79 and 0.80, respectively. In this study, Cronbach’s alphas for self-regulation and critical thinking were 0.86 and 0.85, respectively.
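For readers who wish to reproduce the reliability check, the following is a minimal sketch of how Cronbach’s alpha can be computed from item-level responses. It is not the authors’ analysis code: the function name `cronbach_alpha` and the response matrix are hypothetical and included only for illustration.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    responses: list of equal-length lists, one inner list per respondent.
    """
    n_items = len(responses[0])
    if n_items < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in responses])
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical 7-point Likert responses (4 respondents x 3 items), illustration only.
hypothetical_responses = [
    [5, 6, 5],
    [3, 3, 4],
    [6, 7, 7],
    [2, 3, 2],
]
alpha = cronbach_alpha(hypothetical_responses)
print(f"alpha = {alpha:.2f}")
```

Values closer to 1 indicate that the items vary together, which is the sense in which the coefficients reported above are read as evidence of internal consistency.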

To gauge students’ perceptions of their online engagement, we employed a 12-item survey adapted from Rossing et al. (2012). This survey encompassed a range of questions probing students’ views on the learning experience and their sense of engagement within the online course. Respondents conveyed their responses on a 5-point Likert scale, ranging from 1 (completely disagree) to 5 (completely agree). Sample items in the scale included statements such as “This online activity motivated me to learn more than being in the classroom” and “Online video lessons are important for me when learning at home.” Rossing et al. (2012) report that the internal consistency coefficient for this instrument was 0.90. Similarly, Wong et al. (2023b) reported a coefficient of 0.88, further supporting the scale’s reliability across online learning contexts. In the present study, the instrument demonstrated robust internal consistency, with a Cronbach alpha coefficient of 0.89, indicating its reliability in assessing students’ perceptions of online engagement.

5.5.2 Course Learning Analytics

Throughout the 10-week duration, individualized student-level learning analytics were gathered from the Canvas Learning Management System (LMS). These analytics encompassed various metrics, including total quiz grades, participation rates, and page views. The total quiz grades served as a summative assessment with 10 multiple choice questions. This aggregate metric was derived from the summation of weekly quiz scores over the 10-week period. Each student completed a total of 10 quizzes over the course of the study, with one quiz administered per week. It’s noteworthy that the quizzes presented to students in both classes were completely identical in terms of length, question count, and answer choices. By standardizing the quizzes across both classes, we ensured uniformity in assessment across both classes, thereby enabling a fair comparison of learning outcomes between students who received embedded video questions and those who did not.

Pageviews and participation rates offered detailed insights into individual user behavior within the Canvas Learning Management System (LMS). Pageviews specifically monitored the total number of pages accessed by learners within the Canvas course environment, with each page load constituting a tracked event. This tracking provided a metric of the extent of learners’ interaction with course materials (Instructure, 2024), enabling a close examination of learner engagement and navigation patterns within the online course. Consequently, page view data can serve as a proxy for student engagement rather than a definitive measure, assisting in gauging the occurrence of activity and facilitating comparisons among students within a course or when analyzing trends over time. The total number of page views for both classes was examined and compared between students with and without embedded video questions.

Participation metrics within the Canvas LMS encompassed a broad spectrum of user interactions within the course environment. These included not only traditional activities such as submitting assignments and quizzes but also more dynamic engagements such as watching and rewatching videos, redoing low-stakes questions for practice, and contributing to discussion threads by responding to questions (Instructure, 2024). Each instance of learner activity was logged as an event within the Canvas LMS. These participation measures were comprehensive and captured the diverse range of actions undertaken by students throughout their learning journey. They provided invaluable insights into the level of engagement and involvement of each student within their respective course sections. By recording these metrics individually for each student, the Canvas LMS facilitated detailed analysis and tracking of learner behavior, enabling a nuanced understanding of student participation patterns and their impact on learning outcomes.

5.6 Data Analysis Plan

We conducted checks for scale reliability to assess the alpha coefficients for all the measurement instruments. Additionally, a chi-square analysis was performed to ensure that there were no disparities between conditions in terms of gender, ethnicity, and student grade-level status prior to treatment. Next, descriptive analyses were conducted to assess the frequencies, distribution, and variability across the two conditions on learners’ total quiz grades, page views, and participation after 10 weeks of instruction (See Table 2). Then, a series of one-way analyses of variance (ANOVAs) were conducted to examine the differences between conditions on each dependent variable separately. Next, two multivariate analyses of variance (MANOVAs) were conducted to evaluate the difference between treatment conditions on multiple dependent variables. A MANOVA was chosen in order to assess multiple dependent variables simultaneously while comparing across two or more groups. The first MANOVA compared the means of learners with and without embedded video questions on three dependent variables: (D1) quiz grades, (D2) pageviews, and (D3) participation. A second MANOVA compared the means of learners with and without embedded video questions on three dependent variables: (D1) engagement, (D2) self-regulation, and (D3) critical thinking skills. Lastly, multiple regression analyses were conducted to evaluate the effect of embedded video questions on learners’ quiz grades and whether this relation was moderated by learners’ self-regulation and critical thinking skills.
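As an illustration of the pre-treatment balance check described in the plan, the sketch below computes a Pearson chi-square test of independence for a 2 × 2 table (condition by gender). It makes several assumptions: the cell counts are hypothetical, no continuity correction is applied, and the p-value shortcut via `math.erfc` holds only for one degree of freedom, so variables with more categories (such as ethnicity) would need a general chi-square distribution function.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table.

    table: [[a, b], [c, d]] of observed counts.
    Returns (chi2, p_value); valid only for 1 degree of freedom.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of rows and columns.
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # For 1 df, P(chi-square > x) equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts (rows: delayed, immediate; columns: female, male).
hypothetical_counts = [[63, 29], [63, 28]]
chi2, p_value = chi_square_2x2(hypothetical_counts)
print(f"chi2 = {chi2:.3f}, p = {p_value:.3f}")
```

A non-significant result in such a check is consistent with the two sections being comparable on that characteristic before treatment, although it does not establish equivalence.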

6 Results

Descriptive Analysis.

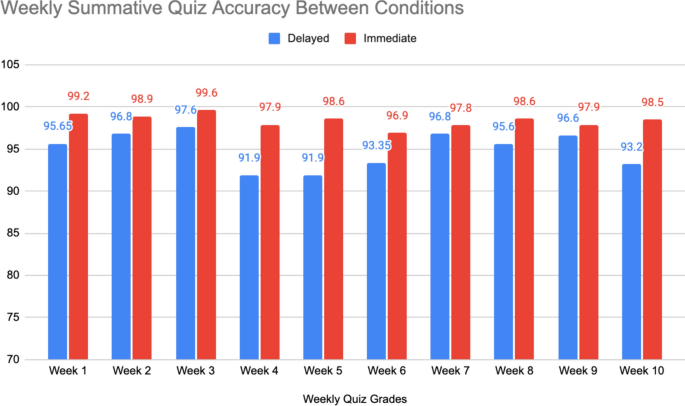

Table 3 displays the average weekly quiz grades for the two instructional conditions, “Delayed Questioning” and “Immediate Questioning,” over a ten-week period from January 4th to March 8th. Fluctuations in quiz grades are evident across the observation period for both conditions. For instance, on Week 1, the average quiz grade for “Delayed Questioning” was 95.65, while it was notably higher at 99.2 for students in the “Immediate Questioning” condition. Similarly, on Week 6, quiz grades decreased for both conditions, with “Delayed Questioning” at 93.35 and “Immediate Questioning” at 96.9 (See Fig. 3). Comparing the average quiz grades between the two instructional conditions revealed consistent differences throughout the observation period. The “Immediate Questioning” condition consistently demonstrated higher quiz grades compared to “Delayed Questioning.” This difference is also visible in other weeks, such as Week 3, where the average quiz grade for “Delayed Questioning” was 97.6, while it reached 99.6 for “Immediate Questioning.” These descriptive findings suggest that embedding questions directly within the video content may positively influence learners’ quiz performance, potentially indicating higher engagement and comprehension of the course material. However, further analysis is required to test whether the differences in weekly quiz grades between the two instructional conditions are statistically significant.

Figure 4 presents the frequency of page views throughout the 10-week course, acting as a proximal indicator of learner engagement, across different dates for the two instructional approaches: “Delayed Questioning” and “Immediate Questioning.” Higher page view counts indicate heightened interaction with course materials on specific dates. For example, on Week 1, “Delayed Questioning” registered 9,817 page views, while “Immediate Questioning” recorded 12,104 page views, indicating peaks in engagement. Conversely, lower page view counts on subsequent dates may imply reduced learner activity or engagement with the course content. Fluctuations in page view counts throughout the observation period highlight varying levels of learner engagement under each instructional condition. Notably, a comparative analysis between the two instructional methods revealed consistent patterns, with the “Immediate Questioning” condition exhibiting higher page view counts across most observation dates. This initial examination suggests that embedding questions directly within the video player may enhance learner engagement, evidenced by increased interaction with course materials.

Upon examination of the participation rates across the specified dates, it is evident that the “Immediate Questioning” condition consistently generated higher levels of engagement compared to the “Delayed Questioning” condition (See Fig. 5). For instance, on Week 4, the participation rate for “Delayed Questioning” was recorded as 459, while it notably reached 847 for “Immediate Questioning.” Similarly, on Week 7 participation rates were 491 and 903 for “Delayed Questioning” and “Immediate Questioning,” respectively, indicating a substantial difference in participation rates between the two instructional approaches. Moreover, both conditions experienced fluctuations in participation rates over time, with instances where participation rates surged or declined on specific dates. For instance, on Week 10, the participation rate for “Delayed Questioning” dropped to 287, whereas it remained relatively higher at 677 for “Immediate Questioning.” Overall, the descriptive analysis depicted in Fig. 5 highlights the differences in participation rates across the two conditions and underscores how embedding video questions influences learners’ online behaviors.

6.1 Multivariate Analysis of Variance on Dependent Variables

A MANOVA was conducted to compare the means of learners with and without embedded video questions on three dependent variables: (D1) quiz grades, (D2) pageviews, and (D3) participation (See Table 4). The multivariate test was significant, F(3, 150) = 188.8, p < 0.001; Pillai’s Trace = 0.791, partial η² = 0.791, indicating a difference between learners who experienced “Delayed” and “Immediate Questioning.” The univariate F tests showed a statistically significant difference between the two conditions for total quiz grades (F(1, 152) = 6.91; p < 0.05; partial η² = 0.043), pageviews (F(1, 152) = 26.02; p < 0.001; partial η² = 0.146), and course participation rates (F(1, 152) = 569.6; p < 0.001; partial η² = 0.789). The Bonferroni pairwise comparisons of mean differences for total quiz grades (p < 0.05), pageviews (p < 0.001), and course participation (p < 0.001) were statistically significant between the two conditions. Therefore, learners who experienced questions directly embedded within the video player had significantly higher total quiz grades, page views, and course participation across 10 weeks.

A second MANOVA compared the means of learners with and without embedded video questions on three dependent variables: (D1) engagement, (D2) self-regulation, and (D3) critical thinking skills (See Table 5). The multivariate test was significant, F(3, 179) = 5.09, p < 0.001; Pillai’s Trace = 0.079, partial η² = 0.079, indicating a difference between learners who experienced “Delayed” and “Immediate Questioning.” The univariate F tests showed a statistically significant difference between learners with and without embedded video questions for engagement (F(1, 181) = 7.43; p < 0.05; partial η² = 0.039), self-regulation (F(1, 181) = 14.34; p < 0.001; partial η² = 0.073), and critical thinking skills (F(1, 181) = 6.75; p < 0.01; partial η² = 0.036). The Bonferroni pairwise comparisons of mean differences for engagement (p < 0.05), self-regulation (p < 0.001), and critical thinking skills (p < 0.01) were statistically significant across the two conditions. Therefore, learners who experienced questions directly embedded within the video player had significantly higher engagement, self-regulation, and critical thinking skills.
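The partial η² values reported for both MANOVAs can be recovered from each F statistic and its degrees of freedom using the standard identity partial η² = (F × df_effect) / (F × df_effect + df_error). The sketch below applies it to the reported statistics; the function name and dictionary labels are illustrative choices, not part of the original analysis.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported in Sect. 6.1.
reported_tests = {
    "MANOVA 1 multivariate": (188.8, 3, 150),
    "quiz grades": (6.91, 1, 152),
    "pageviews": (26.02, 1, 152),
    "participation": (569.6, 1, 152),
    "MANOVA 2 multivariate": (5.09, 3, 179),
    "engagement": (7.43, 1, 181),
    "self-regulation": (14.34, 1, 181),
    "critical thinking": (6.75, 1, 181),
}

effect_sizes = {
    label: round(partial_eta_squared(*stats), 3)
    for label, stats in reported_tests.items()
}
for label, eta in effect_sizes.items():
    print(f"{label}: partial eta squared = {eta}")
```

Rounded to three decimals, the recomputed values agree with those reported above (for example, 0.043 for quiz grades and 0.789 for participation). With only two groups, the multivariate partial η² also coincides with Pillai’s Trace, which is why the two figures match in each multivariate test.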

6.2 Moderation Analyses

A multiple regression model investigated whether the association between questioning condition (“Delayed” or “Immediate Questioning”) and learners’ total quiz grades depends on their levels of self-regulation and critical thinking (Table 6). The moderators for this analysis were learners’ self-reported self-regulation and critical thinking skills, while the outcome variable was the learners’ total quiz grades after 10 weeks. Results show that experiencing “Immediate Questioning” (β = 1.15, SE = 4.72) was significantly predictive of learners’ total quiz grades. Additionally, the main effects of students’ self-regulation (β = 0.394, SE = 0.78) and critical thinking skills (β = 0.222, SE = 0.153) were statistically significant. Furthermore, the interaction between “Immediate Questioning” and self-regulation was also significant (β = 0.608, SE = 0.120), suggesting that the effect of condition on quiz grades depends on learners’ level of self-regulation. However, the interaction between treatment condition and critical thinking was not significant (β = 0.520, SE = 0.231). Together, the variables accounted for approximately 20% of the variance in learners’ quiz grades, R² = 0.19, F(5, 158) = 9.08, p < 0.001.
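For readers less familiar with moderation analysis, a model of this kind is conventionally written as shown below. This is a sketch under stated assumptions rather than the exact specification used: the symbols C (condition, assumed dummy-coded 0 = “Delayed,” 1 = “Immediate Questioning”), SR (self-regulation), and CT (critical thinking) are labels introduced here, and the text does not state whether the moderators were mean-centered. The five predictors are consistent with the numerator degrees of freedom in F(5, 158).

```latex
\begin{align}
\text{Quiz}_i &= \beta_0 + \beta_1 C_i + \beta_2\,\mathrm{SR}_i + \beta_3\,\mathrm{CT}_i
  + \beta_4\,(C_i \times \mathrm{SR}_i) + \beta_5\,(C_i \times \mathrm{CT}_i) + \varepsilon_i \\
\mathbb{E}[\text{Quiz}_i \mid C_i = 1] - \mathbb{E}[\text{Quiz}_i \mid C_i = 0]
  &= \beta_1 + \beta_4\,\mathrm{SR}_i + \beta_5\,\mathrm{CT}_i
\end{align}
```

The second line shows why a significant interaction coefficient for self-regulation implies that the difference between conditions is not constant: under this coding, it shifts by that coefficient for each one-unit change in self-regulation.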

7 Discussion

This study was part of a large-scale online learning research effort at the university, examining undergraduate experiences through pedagogically grounded educational technologies. Specifically, it implemented learning experience design, the testing effect model, and “edtech tools” aligned with evidence-based learning theories to enhance student knowledge, engagement, and transferable skills like self-regulation and critical thinking. A key goal was to use design-based research methodologies to evaluate student outcomes in courses where instructors were applying these evidence-based practices in real-world settings, helping determine whether investments in educational technologies supported student learning outcomes. With the increased demand for online learning post-pandemic, this study investigated the impact of embedded video questions within an asynchronous online Biology course on engagement, self-regulation, critical thinking, and quiz performance. By comparing “Immediate Questioning” versus “Delayed Questioning,” this research explored how the timing of embedded video questions affected the efficacy of online learning, contributing to our understanding of effective online education strategies. The discussion that follows interprets and contextualizes the findings within the broader landscape of online education, technology integration, and pedagogical design.

7.1 Impact on Student Course Outcomes

The first MANOVA results revealed significant positive effects of “Immediate Low-stakes Questioning” on learners’ summative quiz scores over a 10-week period compared to the “Delayed Low-stakes Questioning” condition. Notably, both groups had equal preparation time, with quizzes available at the same time and deadlines each week. This indicates that the timing and interactive nature of embedded video questions, aimed at fostering the testing effect paradigm, contributed to increased learner activity and participation (Richland et al., 2009). The “Immediate Questioning” group, characterized by notably higher weekly quiz scores, benefitted from the active learning facilitated by concurrent processing of concepts through answering questions while watching the lecture videos. Embedded questions not only fostered an active learning environment but also captured students’ attention and engaged them differently compared to passive video viewing learning modalities (Mayer, 2021; van der Meij et al., 2021). This approach allowed for immediate recall and practice, providing guided opportunities for students to reflect on their knowledge and either confirm its accuracy or correct their mistakes (Cummins et al., 2015; Haagsman et al., 2020). The strategic timing of questions synchronized with specific instructional topics provided students with opportunities to recognize, reflect on, and decipher what they know and what they don’t know. Consequently, students approached their weekly quizzes with greater readiness, as strategically positioned embedded video questions fostered enhanced cognitive engagement due to their intentional timing, placement, and deliberate use of low-stakes questioning (Christiansen et al., 2017; Deng & Gao, 2023).
Overall, the study’s results align with previous literature, indicating that interactive low-stakes quizzing capacities through intentionally timed questions within video-based learning effectively simulate the testing effect paradigm to foster retrieval practice over time (Littrell-Baez et al., 2015; Richland et al., 2009). These findings underscore the efficacy of integrating interactive elements into online learning environments to enhance student engagement and learning outcomes.

Additionally, students in the “Immediate Questioning” condition demonstrated significantly higher participation rates and page views within the course (Table 2). Page views were tracked at the individual student level, representing the total number of pages accessed, including watching and rewatching videos, accessing assignments, and downloading course materials. This indicates that students in the “Immediate Questioning” condition were more engaged with course content, preparing for weekly quizzes by actively engaging with various resources. In terms of participation rates, learners in the “Immediate Questioning” condition were more active compared to their counterparts (Table 2). Participation encompassed various actions within the Canvas LMS course, such as submitting assignments, watching videos, accessing course materials, and engaging in discussion threads. Students in this condition were more likely to ask questions, share thoughts, and respond to peers, fostering a deeper level of engagement. Moreover, there was a consistent pattern of students revisiting instructional videos, as reflected in page views. Research on embedded video questions has shown that they prompt positive learning behaviors, such as reviewing course materials (Cummins et al., 2015; Haagsman et al., 2020; Rice et al., 2019; Wong et al., 2022). These insights into student behavior highlight the impact of integrating questions within the video player, resulting in increased engagement indicated by higher page views and course participation.

7.2 Impacts on Student Learning Behaviors

In addition to learning analytics, we gathered data on students’ self-reported online engagement. Students in the “Immediate Questioning” condition reported higher engagement levels than their counterparts, possibly due to the anticipation of upcoming questions, fostering attention, participation, and interaction. This increased awareness can positively impact students’ engagement, retrieval, and understanding, as they mentally prepare for the questions presented (Dunlosky et al., 2013; Schmitz, 2020). Moreover, questions directly embedded within the video encourage thoughtful engagement with material, amplifying the benefits of repeated low-stakes testing in preparation for assessments (Kovacs, 2016; Richland et al., 2009). Our study manipulated the timing of these questions to enhance the saliency of the testing effect paradigm, aiming to transition learners from passive to active participants in the learning process. When considering both the first and second MANOVA results, students in the “Immediate Questioning” condition not only showed significant differences in participation and page views but also reported significantly higher engagement compared to those in the “Delayed Questioning” condition. These findings align with previous research on interactive learning activities and “edtech tools” in promoting engagement in online courses (Wong et al., 2022; Wong et al., 2024). We employed the same instructional videos from Wong and Hughes (2022), but our study was informed by the design constraints students identified regarding limited interactivity, practice opportunities, and student-centered active learning in asynchronous settings. By integrating embedded video questions to address these concerns, we offered students a more engaging and interactive learning experience. As a result, embedding questions directly within videos is suggested to be an effective strategy for enhancing learner engagement and participation in online courses. 
Our results also contribute to the literature by comparing self-report data with behavioral course data, shedding light on the beneficial impacts of embedded video questions.

The significant differences in self-regulation and critical thinking skills among learners in the “Immediate Questioning” condition, who experienced questions embedded directly in videos, highlight the value of this pedagogical approach. Engaging with questions intentionally timed and aligned with the instructional content requires learners to monitor and regulate their cognitive processes, fostering metacognitive awareness and self-regulated learning (Jain & Dowson, 2009; Wang et al., 2013). The cognitive effort exerted to critically analyze, reflect, and respond to these questions within the video enhances critical thinking skills, compelling learners to evaluate and apply their understanding in real-time contexts. Our intentional LXD aimed to enhance the testing effect model’s saliency, encouraging students to think about their own thinking through formative assessments and solidify their conceptual understanding before summative assessments (Richland & Simms, 2015). Repeated opportunities for metacognitive reflection and regulation empower students to gauge their comprehension, identify areas for further exploration, and manage their learning progress (Wang et al., 2017; Wong & Hughes, 2022; Wong et al., 2022). Furthermore, immediate questioning compared to delayed questioning facilitates higher-order cognitive skills, with students in the “Immediate Questioning” condition showing significantly higher critical thinking. Critical thinking, evident through actions like exploring varied sources, learning from unsuccessful retrieval attempts (Richland et al., 2009), and making inferences (Uzuntiryaki-Kondakci & Capa-Aydin, 2013), is influenced by the timing of these questions.

We employed Bloom’s Taxonomy as a foundation for constructing our questions. The lower-order questions were formulated to underscore the tasks of remembering, comprehending, and applying concepts in specific contexts (Bloom, 2001; Betts, 2008). Conversely, the higher-order questions were tailored to provoke the application and analysis of real-world scenarios in the field of ecology and evolutionary biology, requiring students to deconstruct relationships and evaluate patterns in the information presented (Bloom, 2001; Betts, 2008). In combination, these choices in question design provide students with the opportunity to engage in a critical evaluation of course concepts, prompting learners to make inferences, inquire, and judge complex problems as they formulate their solutions. Immediate questioning prompts consideration of key concepts and assessment of understanding in real-time (Jain & Dowson, 2009; Wang et al., 2013), whereas delayed questioning requires learners to retain the information for a longer duration in their working memory, simultaneously mitigating distractions from mind-wandering, as learners await a delayed opportunity to actively retrieve and practice the information gleaned from the videos (Richland et al., 2009; Richland & Simms, 2015; Wong et al., 2023b). Thus, promptly answering low-stakes questions embedded within videos while engaging with content enhances self-regulation, critical thinking, and overall engagement with instructional material. In this way, the cultivation of both self-regulation and critical thinking skills also holds the potential to bolster students’ transferable skills that can be applied across various contexts (Fries et al., 2020), which is a crucial competency for undergraduate students in STEM disciplines (Wong et al., 2023b).

7.3 Interplay between Student Learning Behaviors and Knowledge Outcomes