Abstract

The rising prevalence of online courses and ubiquitous smartphone use pose challenges to researchers and instructors. Open questions concern the effectiveness of digital interventions under unsupervised non-lab conditions, as well as potential associations between interruptions, smartphone usage, and learning. We experimentally tested a web-based training intervention based on video examples and self-explanation prompts with 53 undergraduate teacher students (training condition, n = 27 versus control condition, n = 26). Despite the unsupervised non-lab conditions with potential distractions and interruptions, we found the expected effect on learning outcomes. More interestingly, this effect was completely mediated by self-explanation quality. Furthermore, the effect of self-explanation quality on declarative knowledge was moderated by the number of interruptions during the web-based learning. Moreover, we implemented a simple yet valid method to assess the learners’ mean daily smartphone usage time. To do that, we relied on logging-functions most smartphones already have preinstalled. We detected moderate, negative correlations between the learners’ mean daily smartphone usage and their task engagement (i.e., mental effort and lack of interruptions) during our intervention. Our findings emphasize how effective it is to self-explain video examples, and how important it is to not get interrupted during web-based learning.

1 Introduction

In recent years, two prominent trends with respect to learning have become very apparent: Online courses are on the rise (e.g., Wong et al., 2019), as is ubiquitous smartphone use (e.g., Cha & Seo, 2018; Elhai et al., 2017), which naturally spills over into the classroom (e.g., Kim et al., 2019; McCoy, 2013). Both trends pose challenges to instructors and researchers: First, learning online usually allows learners more or less freedom to choose where, when, and on which device they process the course’s learning material. Under such conditions, and especially without a supervisor, the risks of off-task behavior and interruptions while learning can rise. Hence, a computer-based training intervention proven effective in the classroom under supervised lab conditions will not necessarily maintain its effectiveness when offered as a web-based training intervention under unsupervised field conditions. Second, the omnipresence of smartphone-related off-task behavior in the classroom implies similar or even more off-task behavior and interruptions during online learning. Assessing learners’ smartphone usage and analyzing its relation to web-based learning is therefore another challenge.

The present paper aims to address these challenges by pursuing two research goals: (1) to experimentally test a web-based training intervention in unsupervised non-lab conditions, and (2) to analyze potential relations between smartphone usage and learning via the web-based training intervention. In passing, we also aimed to implement a simple yet valid method to assess learners’ mean daily smartphone usage time.

2 Literature Review

2.1 Previous Example-Based Learning Environments in Controlled/Supervised Experiments

As extensive research in recent decades has shown, learning from examples is effective, especially for novices (see Renkl, 2014, 2017 for an overview). An example provides a problem’s solution, thereby sparing learners an overstraining search for a solution. The learners’ limited cognitive resources are now ideally free for them to deeply process the given solution principles (Sweller et al., 2011). An effective form of such deep processing is the act of self-explaining, that is, generating explanations of the example’s principles to oneself (Renkl, 2014; Wylie & Chi, 2014). Researchers and instructors rely on so-called self-explanation prompts as an effective method to encourage learners to generate self-explanations (e.g., Berthold & Renkl, 2010; Nokes et al., 2011). In computer-based learning environments, such prompts usually precede textboxes that offer learners the chance to type in their self-explanations (e.g., Hefter et al., 2014; Roelle & Renkl, 2020; Roelle et al., 2017). The quality of such self-explanations significantly mediated the learning environments’ effects on learning outcomes in immediate posttests (e.g., Berthold & Renkl, 2009; Berthold et al., 2009) as well as delayed posttests (e.g., Hefter et al., 2014, 2015). It is thus generally worthwhile to consider self-explanation quality an important predictor of learning outcomes and to foster high self-explanation quality via prompts in computer-based learning environments. However, the aforementioned learning environments were usually studied under quite closely controlled conditions with a set location (e.g., computer lab), time (e.g., fixed appointment), and digital device (e.g., given laptop). Moreover, a supervisor was present during the studies.

This study tested a computer-based training intervention as a web-experiment and aimed to replicate its effectiveness under a setting enabling learners to choose their location, time and digital device to experience the learning environment without any supervision. Furthermore, we shed more light on the underlying mechanisms: in particular, whether and how self-explaining may influence the learning environments’ effects on learning outcomes during an unsupervised web-experiment.

2.2 Risks of Interruptions and Smartphone-Related Off-Task Behavior during Web-Based Learning

The lack of supervision in such a web-experiment carries the risk of less task-engagement, however. We consider task-engagement as actually allocating one’s cognitive resources to the learning task at hand. First, unlike in experiments in supervised classrooms or labs, learners in a location of their own choice may be interrupted, for example by other people, animals, devices, or simply noise. The consequences of interruptions can be considered through cognitive, motivational, and affective perspectives (e.g., Federman, 2019). In this paper, however, we focus on the learner’s limited cognitive resources (e.g., Cowan, 2016). Hence, we consider interruptions as being potentially detrimental to learning, because they bind and thereby divert precious cognitive resources from the learning task at hand. This leads to another question addressed in this paper, namely, whether and how interruptions may interfere with the underlying learning mechanisms, like the aforementioned potential mediating influence of self-explanations on learning outcomes. As constructive as the cognitive endeavor of self-explaining is (e.g., Bisra et al., 2018), it is still interesting to discover how prone it is to interruptions in an unsupervised web-experiment.

Second, smartphones can be a potential source of off-task behavior and interruptions. For a start, Burak (2012) surveyed 774 university students and found that over 50% of them engaged in texting while sitting in class. Burak (2012) also asked 333 university students about their behavior during online courses, revealing an even higher percentage (~ 70%) engaged in texting. Given that smartphones have become even more ubiquitous since then, the most recent amounts of smartphone-related off-task behavior while attending (online) courses are probably even greater. For instance, Kim et al. (2019) assessed objective log data and found that first-year college students spent over 25% of their time during class operating their smartphones: every 3–4 min, they were distracted by their smartphone for over a minute.

Engaging in off-task behavior on digital devices (such as texting) during learning environments has demonstrated detrimental effects on learning in various studies (Gingerich & Lineweaver, 2014; Mendoza et al., 2018; Waite et al., 2018; Wood et al., 2012). These findings are eye-opening in light of learners’ limited cognitive resources (e.g., Cowan, 2016): Cognitive resources bound by off-task behavior are unavailable for deep processing the given learning material.

2.3 Previous Research Assessing Smartphone Usage

Because smartphone-related off-task behavior is a potential risk during an unsupervised web-based training intervention, it makes sense to assess learners’ smartphone usage. Despite the obvious validity problems, many researchers have assessed smartphone usage via self-reports (Andrews et al., 2015; Boase & Ling, 2013; Ellis et al., 2019). The validity of self-reports is compromised by the simple fact that trying to remember or estimate how long we used our smartphone the other day is difficult if not impossible. After all, we humans are rather poor at estimating durations (e.g., Grondin, 2010). Furthermore, many smartphone users check their device countless times, a behavior that is also probably overlooked when estimating smartphone usage (Andrews et al., 2015). Finally, the simultaneous use of multiple devices can further confound self-estimates (Ellis et al., 2019). Ellis et al. (2019) compared various self-report scales on smartphone usage with actual objective log data from the pre-installed iOS app ‘screen time.’ Their 238 participants (Mage = 31.88 years, SD = 11.19) spent a daily average of 232.66 min (SD = 119.44) using their smartphones. They concluded that self-report assessments are rather poor and insufficient, as a single time estimate was still a better predictor for objective smartphone time than self-report scales.

Consequently, the method of choice should be to assess objective data on smartphone usage—in other words: log data. To acquire such data, some authors have relied on Android apps (Andrews et al., 2015; Kim et al., 2019) while others relied on iOS apps (Ellis et al., 2019; Rozgonjuk et al., 2018). Several researchers developed their own app (Andrews et al., 2015) and others employed third-party apps (Deng et al., 2019). Briefly, most of the studies making use of objective log data reveal at least one of three disadvantages: (a) limiting their sample to either iOS or Android users, (b) having to program or obtain a usage-logging app, (c) having their sample download this app on their personal devices. This paper aimed to circumvent all such disadvantages to assess learners’ objective mean daily smartphone usage time: Therefore, learners were instructed on how to simply read out usage-logging functions/apps already preinstalled on most recent iOS- and Android-based smartphones.

3 Hypotheses

First, this study aimed to replicate the effectiveness of a digital example-based training intervention in a web-experiment, which means that the learners run through the learning environment completely online at a location, at a time, and on a device of their choice without any supervision.

H1

The web-based training intervention fosters declarative knowledge.

Second, this study aimed to demonstrate the characteristic and crucial influence of self-explanation quality on learning outcome.

H2

The intervention’s effect on declarative knowledge is mediated by self-explanation quality (Fig. 1a).

Due to the unsupervised conditions and risks of interruptions, we propose that interruptions can actually harm the learning process (i.e., the self-explaining) during the intervention.

H3

The mediating effect on declarative knowledge is moderated by interruptions during the intervention (Fig. 1b).

Finally, we aimed to assess objective smartphone usage data. In light of earlier considerations of smartphones as a source of off-task behavior and interruptions, we assume that…

H4

Smartphone usage correlates negatively with task engagement (i.e., mental effort and lack of interruptions) during web-based learning.

4 Method

4.1 Sample and Design

Fifty-four undergraduate teacher students at a German university participated in our online experiment. One participant whose log file revealed that she had skipped over half of the intervention had to be excluded. The final sample was N = 53 (42 female, 11 male; Mage = 21.81 years, SD = 1.86). Participants gave informed consent via the web-based learning environment, which randomly assigned them to one of two experimental conditions: (a) training intervention on argumentation knowledge (training condition, n = 27), (b) no-training intervention (control condition, n = 26).

4.2 Web-Based Learning Environment

Participants in both experimental conditions experienced a web-based learning environment of identical structure. Designed to last about 45 min, it consisted of two components: (a) an introduction phase that presented learning goals and a theoretical introduction; (b) a video phase that showed two videos, each cut into four segments, with every segment followed by a prompt and a text box. In both conditions, participants could navigate through the 14-page introduction phase via button click. They also had play/pause and volume controls for the videos. The two conditions differed as follows:

4.2.1 Training Condition

The training condition in the web-based learning environment was based upon the training intervention on argumentation knowledge by Hefter and Berthold (2020). Its introduction phase began with a written presentation of learning goals and theoretical introduction to argumentation elements such as theses, arguments, and counterarguments. In the following video phase, two video examples modeled these argumentation elements. The first video (see Fig. 2 for a screenshot) showed a discussion about the dinosaurs’ extinction and the second video about resettling the lynx. Each video was cut into four segments followed by a self-explanation prompt and text box. These prompts asked the participants to type in the name and function of the argumentation elements just shown in the video segment (“Which elements of argumentation do you recognize in the sequence from Julia? What are their functions?”, see Fig. 3 for a screenshot).

4.2.2 Control Condition

The control condition in the web-based learning environment did not focus on argumentation elements. Its introduction phase began with a presentation of learning goals and theoretical introduction focusing on e-learning, similar to Hefter et al.’s (2014) control condition. The video phase did not focus on argumentation knowledge either. Instead of the training condition’s video examples, two mainstream media videos addressed two contemporary topics: the airlines during the Corona crisis, and climate activism. These videos were about the same length as those shown in the training condition. Each video was also cut into four segments followed by a prompt and a text box. Instead of asking for a self-explanation, the prompts simply noted, “Here you can make notes as you like.”

4.3 Instruments

4.3.1 Learning Time

The web-based learning environment logged time stamps and calculated how much time the participants spent in the web-based learning environment.

4.3.2 Declarative Knowledge

As the learning environment’s training condition was based on Hefter and Berthold’s (2020) material on argumentation knowledge, we also applied their method to assess it. This method consists simply of the open-format question “What are the elements of good argumentation, and what function does each element serve?” that Hefter et al. (2014) employed in previous studies. The participants received this question as a pretest before the intervention and as a posttest afterwards. To rate the participants’ answers, we applied a 6-point rating scale from 1 (very low quality) to 6 (very high quality).

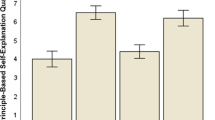

4.3.3 Self-Explanation Quality

The learning environment’s training condition featured Hefter and Berthold’s (2020) self-explanation prompts. We therefore also applied their 6-point rating scale from 1 (very low quality) to 6 (very high quality) to rate the participants’ answers to the prompts. As the control condition featured no video examples on argumentation, let alone self-explanation prompts, control-group participants obviously had no means of acquiring a rating above 1. Because the internal consistency for the ratings of all eight answers was high (Cronbach’s α = .97), we used the mean of all eight ratings to measure self-explanation quality.
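The internal-consistency check described above can be illustrated with a minimal sketch of Cronbach's α, assuming the standard variance-based formula. The function name `cronbach_alpha` and the example ratings are hypothetical and are not the study's data or software.

```python
from statistics import mean, variance

def cronbach_alpha(ratings):
    """Cronbach's alpha for a list of rows (one row per participant,
    one column per rated answer), using sample variances."""
    k = len(ratings[0])  # number of items (eight answers in the study)
    item_vars = [variance([row[i] for row in ratings]) for i in range(k)]
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical ratings on the 1-6 scale (made up for illustration only).
example = [
    [2, 3, 3, 2],
    [4, 4, 5, 4],
    [5, 6, 6, 5],
    [1, 2, 1, 1],
]
alpha = cronbach_alpha(example)
# If alpha is high, the per-participant mean serves as the quality score.
quality_scores = [mean(row) for row in example]
```

A value of α near 1 indicates that the ratings vary together across participants, which is the usual justification for averaging them into a single score.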

4.3.4 Mental Effort

We assessed participants’ mean subjective mental effort in the video phase. After the last text box following each of the two videos, we asked “How much mental effort did you invest in following the video?” and “How much mental effort did you invest in filling out the text boxes after the video segments?” Participants answered each of these four items on a 9-point rating scale based on Paas (1992). This scale has since been widely used (and debated) as a subjective measure of cognitive load (see e.g., Castro-Alonso & de Koning, 2020). Internal consistency for these four items was high (Cronbach’s α = .82), so we used the mean of all four items to assess mental effort.

4.3.5 Interruptions

The participants received the question “Were you interrupted by other people or events/incidents during this web-based training intervention?” They noted the number of interruptions on a 5-point scale from 0 (no interruption) to 4 (more than three interruptions).

4.3.6 Smartphone Usage

To assess the participants’ daily smartphone usage, the web-based learning environment informed and politely asked them: “Now we would like to briefly assess your familiarity and experience with your smartphone. Please grasp your smartphone to see what your mean usage time was. If yours is an Apple smartphone with iOS, use the function ‘screen time.’ For most smartphones with Android (such as Samsung, Motorola, etc.), you can use the app ‘digital wellbeing.’ Some smartphones with Android (such as Huawei/Honor) have the app ‘digital balance.’ If you do not have a smartphone brand like those mentioned above, or you do not have a similar function, please choose ‘none of the above/do not know.’”

Participants could then choose between the four options “iOS (screen time),” “Android (digital wellbeing),” “Android (digital balance),” and “none of the above/do not know.” Each option led to a slightly different routine to support participants in the most convenient way to gather their smartphone usage information from their devices.

Option “iOS (screen time).” Participants who chose “iOS (screen time)” received a simple instruction including marked and labeled screenshots. These showed them how to access the ‘screen time’ function on their smartphone and where to find the last seven days’ daily average. We asked them “Please type in the daily average that was indicated: h: ___; m: ___.” and relied on numbers of minutes + hours × 60 for our analyses. Furthermore, we asked, “Just in case that did not work, or this function is not activated or not installed on your smartphone: Please estimate how many hours you use your smartphone every day: h: ___; m: ___.” As that data was just a subjective estimation with all its previous mentioned downsides, we did not use this in our analyses.

Option “Android (digital wellbeing).” Participants who chose “Android (digital wellbeing)” received a similar instruction with screenshots to show them how to access and operate the ‘digital wellbeing’ app, which enables users to learn how long they have used their smartphone each day. To obtain an average daily figure, it makes no sense to consider today’s usage time. Hence, we showed them how to navigate through the app and asked “Please type in the screen time for yesterday: h: ___; m: ___. For the day before yesterday: h: ___; m: ___. For three days ago: h: ___; m: ___.” We used the mean of all three quantities of minutes + hours × 60 for our analyses. As was the case in the previous option, we also asked the identical “Just in case this did not work”-question to enable a subjective estimation, but those we did not use in our analyses.

Option “Android (digital balance).” Participants who chose “Android (digital balance)” also received the respective instruction with screenshots for their ‘digital balance’ app, which presents last week’s usage time. We asked them “Please type in the screen time you found there: h: ___; m: ___.” We used (the quantity of minutes + hours × 60) divided by seven for our analyses. Identical to the previous options, we also asked for a subjective estimation as a backup that we did not use in our analyses.

Option “none of the above/do not know.” Participants who chose “none of the above/do not know” got the question “Please estimate how many hours you use your smartphone every day: h: ___; m: ___.” However, we used no such estimation in our analyses.
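The four routines above reduce to a small conversion rule. The following sketch shows it under stated assumptions: the option keys (`"ios_screen_time"` etc.) and function names are hypothetical labels, not identifiers from the actual learning environment.

```python
from statistics import mean

def to_minutes(hours, minutes):
    """Convert an 'h: ___; m: ___' entry to minutes (minutes + hours x 60)."""
    return hours * 60 + minutes

def daily_usage_minutes(option, entries):
    """Mean daily usage in minutes, or None if only an estimate exists.

    entries: list of (hours, minutes) tuples typed in by the participant.
    """
    if option == "ios_screen_time":
        # One entry: the last seven days' daily average.
        (h, m), = entries
        return float(to_minutes(h, m))
    if option == "android_digital_wellbeing":
        # Three entries: yesterday and the two days before.
        return mean(to_minutes(h, m) for h, m in entries)
    if option == "android_digital_balance":
        # One entry: last week's total, divided by seven.
        (h, m), = entries
        return to_minutes(h, m) / 7
    # "none of the above/do not know": subjective estimates are not analyzed.
    return None

print(daily_usage_minutes("android_digital_wellbeing", [(4, 0), (3, 30), (4, 30)]))  # 240
```

Returning `None` for the last option mirrors the decision to exclude subjective estimates from the analyses.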

4.4 Procedure

This study took place completely online during an 8-week period in June and July 2020. During this period, participants could run the web-based learning environment once. All they needed was a computer or similar device with internet access, web browser, and sound. They needed to set aside about an hour of their time to work in the web-based learning environment alone and without interruptions. The web-based learning environment started with thanks and greetings, followed by mandatory information about the study and data protection. After providing informed consent and receiving the pretest on declarative knowledge, the participants ran through the web-based learning environment according to their randomized experimental condition. After having finished the learning environment’s video phase, they filled out the posttest on declarative knowledge. Finally, they received the items on interruptions, smartphone usage, and a demographic questionnaire about age, sex, and grades.

5 Results

For all tests, the alpha-level was .05. For our one-sided t tests, Cohen’s d served as the effect size measure with its classic interpretation: ~ 0.20 small, ~ 0.50 medium, ~ 0.80 large effect (Cohen, 1988). For correlations, the correlation coefficient was Pearson’s r with this interpretation: ~ .10 small, ~ .30 medium, ~ .50 large correlation (Cohen, 1988). Table 1 presents all measures.

5.1 Learning Prerequisites

We detected no statistically significant differences between the experimental groups with respect to school grades, prior declarative knowledge, smartphone usage, or time spent in the web-based learning environment. The web-based learning environment also logged the kind of device the learners ran it on. We could thus rule out that our analyses of the learners’ smartphone usage would be somehow biased because of them running the learning environment on their smartphones: In our sample, only one person ran the web-based learning environment on a smartphone; another person did it on a tablet. Every other learner used a desktop or laptop computer.

5.2 Learning Outcomes

We assumed that our training intervention would exert a significant positive effect on declarative knowledge (H1). The training condition’s participants did in fact outperform those in the control condition in the posttest, t(51) = 7.24, p < .001, d = 1.99 (large effect).
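As a plausibility check, the reported effect size can be recovered from the t statistic and the group sizes. The sketch below assumes the standard conversion for two independent groups with a pooled standard deviation; the function name is hypothetical.

```python
import math

def cohens_d_from_t(t, n1, n2):
    """Cohen's d from an independent-samples t statistic (pooled SD)."""
    return t * math.sqrt(1 / n1 + 1 / n2)

# Reported values: t(51) = 7.24 with n = 27 (training) and n = 26 (control).
d = cohens_d_from_t(7.24, 27, 26)
print(round(d, 2))  # 1.99
```

The result matches the reported d = 1.99, well above the ~ 0.80 benchmark for a large effect.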

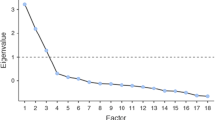

More interestingly, we analyzed the mechanisms behind this highly expected intervention effect. To address the assumption that self-explanation quality mediated the intervention’s effect on declarative knowledge (H2), we used the SPSS macro PROCESS (Hayes, 2013). Condition (training vs. control) was the independent, declarative knowledge the dependent, and self-explanation quality the potential mediating variable. Figure 1a shows this model’s conceptual diagram. We conducted a simple mediation and let PROCESS calculate 95% bootstrap percentile CIs with 10,000 samples and heteroscedasticity consistent standard errors (Davidson & MacKinnon, 1993), yielding unstandardized path coefficients. First, condition had a significant positive direct effect (a path) on self-explanation quality, B = 2.82, p < .001. Second, self-explanation quality had a significant positive direct effect (b path) on declarative knowledge, B = 1.36, p = .002. Finally, after including the potential mediator self-explanation quality in the regression model, there was no longer a significant direct effect of condition on declarative knowledge. The effect of condition on declarative knowledge was entirely mediated by self-explanation quality (ab path), B = 3.84 [1.55, 6.04]. Because the confidence interval did not include zero, we deem this indirect effect significant.
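The logic of the simple mediation analysis (indirect effect ab with a percentile bootstrap CI) can be sketched as follows. This is not the PROCESS macro: it uses plain least squares without heteroscedasticity-consistent standard errors, fewer bootstrap samples than the 10,000 reported, and synthetic data generated only for illustration. All function names are hypothetical. For the reported coefficients, the product 2.82 × 1.36 ≈ 3.84 agrees with the reported indirect effect.

```python
import random

def paths(x, m, y):
    """Least-squares estimates (a, b, c_prime) for M ~ X and Y ~ X + M."""
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    det = sxx * smm - sxm ** 2
    if sxx == 0 or det == 0:
        raise ValueError("degenerate sample")
    a = sxm / sxx                              # effect of X on M
    b = (sxx * smy - sxm * sxy) / det          # effect of M on Y, given X
    c_prime = (smm * sxy - sxm * smy) / det    # direct effect of X on Y
    return a, b, c_prime

def bootstrap_indirect(x, m, y, n_boot=2000, seed=1):
    """95% percentile bootstrap CI for the indirect effect a * b."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    while len(estimates) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases
        try:
            a, b, _ = paths([x[i] for i in idx],
                            [m[i] for i in idx],
                            [y[i] for i in idx])
        except ValueError:
            continue  # skip resamples without variation
        estimates.append(a * b)
    estimates.sort()
    return estimates[int(0.025 * n_boot)], estimates[int(0.975 * n_boot) - 1]

# Synthetic data (NOT the study's data): 26 control, 27 training cases.
rng = random.Random(0)
x = [0] * 26 + [1] * 27
m = [1 + 3 * xi + rng.gauss(0, 0.5) for xi in x]
y = [1 + 1.2 * mi + rng.gauss(0, 0.5) for mi in m]

a, b, c_prime = paths(x, m, y)
ab = a * b
lo, hi = bootstrap_indirect(x, m, y)
print(f"a={a:.2f}, b={b:.2f}, ab={ab:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")
```

As in the analysis above, the indirect effect counts as significant when the bootstrap interval excludes zero.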

Furthermore, we analyzed whether interruptions during the intervention affected this indirect effect. More precisely, we built on the previous simple mediation model and additionally checked whether the effect of self-explanation quality on declarative knowledge was moderated by interruptions (H3). We thus tested a moderated mediation model (with so-called second-stage moderation) as shown in the conceptual diagram in Fig. 1b. Again, we used the SPSS macro PROCESS (Hayes, 2013) to calculate 95% bootstrap percentile CIs with 10,000 samples and heteroscedasticity consistent standard errors. There was a significant interaction effect between self-explanation quality and interruptions, indicating a conditional effect on path b, B = −0.33, p = .011. The PROCESS macro (Hayes, 2013) also let us use the Johnson-Neyman technique to uncover the significance regions: The conditional effect was only significant for learners who reported an interruption number below 2.29. The overall model had an index of moderated mediation of −0.92 [−1.71, −0.16], which we deem significant because the confidence interval did not include zero.
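For orientation, a second-stage moderated mediation model is commonly written as below. The symbols $b_1$, $b_2$, $b_3$, $\omega$, and the intercepts are our notation, assumed here for illustration: $X$ is condition, $M$ self-explanation quality, $W$ the number of interruptions, and $Y$ declarative knowledge.

```latex
\begin{aligned}
M &= i_M + aX + e_M, \\
Y &= i_Y + c'X + b_1 M + b_2 W + b_3 MW + e_Y, \\
\omega(W) &= a\,(b_1 + b_3 W) \quad \text{(conditional indirect effect)}, \\
\text{index of moderated mediation} &= a\,b_3 .
\end{aligned}
```

With the reported a = 2.82 and interaction coefficient of −0.33, the product is about −0.93, close to the reported index of −0.92. The small gap presumably stems from rounding of the printed coefficients.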

5.3 Smartphone Usage and Task Engagement

We assumed an inverse relationship between the daily average smartphone usage and task engagement (i.e., mental effort and lack of interruptions) during our web-based learning environment. As mentioned before, to ensure validity, we relied solely on the smartphone usage information that participants had gathered from their devices’ logging functions. Of our 53 participants, 27 used the iOS function ‘screen time,’ 11 used the Android app ‘digital wellbeing,’ 3 used the Android app ‘digital balance,’ and 12 had to resort to subjective estimations. Hence, we used the daily mean smartphone usage time of 41 participants for our analyses.

Indeed, we identified a negative medium correlation between daily average smartphone usage and mental effort during our web-based learning environment, r(39) = −.38, p = .014. Furthermore, we found a positive medium correlation between daily average smartphone usage and the number of interruptions during our web-based learning environment, r(39) = .35, p = .024. For validity reasons, we also checked the correlation between number of interruptions and learning time—more precisely, the time that passed between the learning environment’s time log at its beginning and end—which was very plausibly positive, r(45) = .40, p = .006. Please see Table 2 for the intercorrelations.
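The significance test behind a Pearson correlation can be sketched by converting r to a t statistic with df = n − 2 (here 41 − 2 = 39). This is the standard conversion, shown as a minimal illustration; the function name is hypothetical, and the p value would then be read from the t distribution with df degrees of freedom.

```python
import math

def t_from_r(r, df):
    """t statistic for testing a Pearson correlation against zero."""
    return r * math.sqrt(df / (1 - r ** 2))

print(round(t_from_r(-0.38, 39), 2))  # -2.57 (usage and mental effort)
print(round(t_from_r(0.35, 39), 2))   # 2.33 (usage and interruptions)
```

Both statistics are of a size consistent with the reported two-sided p values of .014 and .024.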

6 Discussion

6.1 Theoretical Contributions and Practical Implications

6.1.1 Effectiveness in Non-lab Conditions

First, we successfully tested our web-based training intervention against a control condition. This web-based training intervention consisted of learning goals, theoretical introduction, video examples and self-explanation prompts. The control condition featured a learning environment resembling the structure and length of the training condition. There were no differences in the time or mental effort the participants spent in either condition. As expected, our intervention had a large effect on declarative knowledge about argumentation—exactly what the intervention was designed to do.

However, unlike previous studies that tested other versions and forerunners of this intervention (e.g., Hefter & Berthold, 2020; Hefter et al., 2014), this study took place completely online. The participants were not in a classroom or lab to work under supervision with the learning environment. Instead, they could run the learning environment anytime and anywhere they wished. Hence, our results do not just replicate findings showing that a training intervention built on video examples and self-explanation prompts is effective. Our findings also emphasize this effectiveness under unsupervised non-lab conditions with potential distractions and unascertainable task engagement.

6.1.2 Role of Self-Explanations and Interruptions

Given that we compared the training group to a control group that had no access to the relevant to-be-learned principles, the intervention’s effect on declarative knowledge was highly expected. However, under unsupervised non-lab conditions, it was interesting to analyze the mechanism behind the training intervention’s large effect on declarative knowledge. As the mediation analysis revealed, said effect was completely mediated by self-explanation quality. This result not only indicates that the better the participants explained the argumentation elements modelled in the video examples, the more they knew about them in the posttest. It also indicates that the intervention’s effectiveness can be largely explained by self-explanations. In other words, what made the main difference between the experimental groups’ learning outcomes was not mere access to the relevant principles, but the actual learning process, that is, the act of self-explaining.

Moreover, this result extends the previous literature on more controlled studies. In those studies, self-explanation quality contributed less to declarative knowledge immediately after the training intervention, but more to declarative knowledge in a delayed posttest (Hefter et al., 2014). Hefter and Berthold’s (2020) results further indicate that for immediate results, simply reading the theoretical introduction sufficed, and self-explaining video examples was not even necessary. In other words, previous research suggests that self-explaining, and thereby deeply processing the given examples, is more important for acquiring knowledge that persists for some time. In the present study, however, the less controlled setting might have changed that: In an unsupervised online setting, engaging in self-explaining appears to be the essential process for knowledge acquisition, even for just immediate results.

Finally, we proposed that this essential learning process is in fact potentially harmed by interruptions. Indeed, our further analyses revealed that said effect of self-explanation quality on declarative knowledge was moderated by the number of interruptions. For a start, this result underscores the intuitively appealing rule of thumb that interruptions harm learning: the more interruptions, the worse the learning outcomes. Furthermore, too many interruptions may actually disrupt learning, as our conditional indirect effect revealed: With more than about two reported interruptions (scale values above 2.29), the effect on declarative knowledge failed to reach significance.

Overall, and assuming a more practical point of view, these findings also offer recommendations for instructors, such as making sure participants actually engage in self-explaining without interruptions.

6.1.3 Assessment of Mean Daily Smartphone Usage

Considering the learners’ smartphone usage, this paper provides a practical implication for a simple yet valid assessment method: There is no need for learners’ subjective estimations, which are imprecise at best and inaccurate at worst. No need to program or obtain a usage-logging app and install it on learners’ personal devices. No need to restrict one’s sample to either iOS or Android users. The participants received easy instructions for reading out three typical usage-logging functions/apps that most recent iOS- and Android-based smartphones already have, ready to use (i.e., ‘screen time,’ ‘digital wellbeing,’ and ‘digital balance’). This allowed us to access the mean daily smartphone usage time of ~ 77% of our sample (41 of 53 participants). Our sample’s mean daily usage time of almost 4 h (~ 235 min) seems valid, as it is in line with other recent findings that likewise relied on actual logs and not subjective estimations (e.g., Ellis et al., 2019).

6.1.4 Mean Daily Smartphone Usage and Task Engagement

We assumed that the more time learners spent on their smartphones each day, the less task engagement (i.e., mental effort and lack of interruptions) they would show in our web-based learning environment. Indeed, we found a medium negative correlation between daily smartphone usage and subjective mental effort in the learning environment: The more time participants spent on the smartphone a day, the less mental effort they expended in our web-based training intervention. This finding might show that learners who are prone to heavy smartphone usage are less willing to invest mental effort during a web-based training intervention. Alternatively or additionally, this correlation might indicate a modern form of cognitive miserliness, as discussed by Barr et al. (2015). Learners who are less willing to invest mental effort during a web-based training intervention might also be more prone to outsourcing information and cognitive processes to their smartphone.

We also detected a moderate correlation between smartphone usage and interruptions: The more time spent on the smartphone a day, the more interruptions there were during the web-based training. Mental effort and interruptions did not in fact correlate, but smartphone usage correlated with both. It thus seems likely that smartphone usage affected both mental effort and interruptions, assuming that heavy smartphone users would probably also have used their smartphone during the web-based training intervention. This would have naturally resulted in distractions and reduced attention. We also identified a further correlation: the more interruptions, the more time spent in the learning environment. In light of these highly plausible results, we assume that assessing the number of interruptions via a simple 5-point scale self-report item was valid and without a social-desirability bias.

6.2 Limitations and Implications for Future Research

Starting with the aforementioned findings on the relation between smartphone usage and task engagement, the non-lab conditions gave us no information about our learners’ actual off-task engagement during the web-based training. We can only assume smartphone use during the web-based training as a plausible reason for these correlations. Several considerations support this assumption, however. After all, in the aforementioned study by Kim et al. (2019), students were distracted by their smartphones every 3–4 min and spent over 25% of their learning time operating them, and that was in the classroom. If learners’ smartphone usage is this persistent even in class, it is probably similar if not more pronounced at home. Furthermore, we found that our learners’ mean smartphone usage time was almost 4 h a day. It therefore seems highly unlikely that they would consistently abstain from operating their smartphones during the web-based training intervention. Overall, future studies should ideally acquire and assess detailed information about actual off-task engagement, although this is admittedly technically hard to achieve.

A rather easy next step would be to assess not just learners’ screen time, but also the number of notifications they received and the number of times they checked/activated their device. Such information can be obtained by relying on the same usage-logging functions/apps that come preinstalled on most recent smartphones, and that we relied on in this study. Besides these logging functions, further self-report items might be implemented to gain information about the type of interruptions (see e.g., Federman, 2019).

The sample size of 53 was rather small. Therefore, future studies might employ larger samples to allow more complex analyses, such as multivariate models of the relationships between learning outcomes and learners’ habits such as smartphone usage, as well as further motivational/volitional predictors.

As a final limitation, our web-based training intervention focused on argumentation knowledge, more precisely on declarative knowledge about six argumentation elements. Hence, the generalizability of our findings to other domains is limited.

Summing up, our findings emphasize how effective it is to self-explain video examples even in less-controlled web-based learning scenarios. Furthermore, our findings show how important it is to avoid being interrupted during web-based learning and to mentally engage in the task. Smartphone habits apparently have an influence on these factors.

References

Andrews, S., Ellis, D. A., Shaw, H., & Piwek, L. (2015). Beyond self-report tools to compare estimated and real-world smartphone use. PLOS ONE. https://doi.org/10.1371/journal.pone.0139004

Barr, N., Pennycook, G., Stolz, J. A., & Fugelsang, J. A. (2015). The brain in your pocket: Evidence that smartphones are used to supplant thinking. Computers in Human Behavior, 48, 473–480. https://doi.org/10.1016/j.chb.2015.02.029

Berthold, K., Eysink, T. H. S., & Renkl, A. (2009). Assisting self-explanation prompts are more effective than open prompts when learning with multiple representations. Instructional Science, 37(4), 345–363. https://doi.org/10.1007/s11251-008-9051-z

Berthold, K., & Renkl, A. (2009). Instructional aids to support a conceptual understanding of multiple representations. Journal of Educational Psychology, 101(1), 70–87. https://doi.org/10.1037/a0013247

Berthold, K., & Renkl, A. (2010). How to foster active processing of explanations in instructional communication. Educational Psychology Review, 22, 25–40. https://doi.org/10.1007/s10648-010-9124-9

Bisra, K., Liu, Q., Nesbit, J. C., Salimi, F., & Winne, P. H. (2018). Inducing self-explanation: A meta-analysis. Educational Psychology Review, 30(3), 703–725. https://doi.org/10.1007/s10648-018-9434-x

Boase, J., & Ling, R. (2013). Measuring mobile phone use: Self-report versus log data. Journal of Computer-Mediated Communication, 18(4), 508–519. https://doi.org/10.1111/jcc4.12021

Burak, L. (2012). Multitasking in the university classroom. International Journal for the Scholarship of Teaching & Learning. https://doi.org/10.20429/ijsotl.2012.060208

Castro-Alonso, J. C., & de Koning, B. B. (2020). Latest trends to optimize computer-based learning: Guidelines from cognitive load theory. Computers in Human Behavior. https://doi.org/10.1016/j.chb.2020.106458

Cha, S. S., & Seo, B. K. (2018). Smartphone use and smartphone addiction in middle school students in Korea: Prevalence, social networking service, and game use. Health Psychology Open. https://doi.org/10.1177/2055102918755046

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Erlbaum.

Cowan, N. (2016). Working memory capacity: Classic edition (1st ed.). Routledge.

Davidson, R., & MacKinnon, J. G. (1993). Estimation and inference in econometrics. Oxford University Press.

Deng, T., Kanthawala, S., Meng, J. B., Peng, W., Kononova, A., Hao, Q., Zhang, Q., & David, P. (2019). Measuring smartphone usage and task switching with log tracking and self-reports. Mobile Media & Communication, 7(1), 3–23. https://doi.org/10.1177/2050157918761491

Elhai, J. D., Levine, J. C., Dvorak, R. D., & Hall, B. J. (2017). Non-social features of smartphone use are most related to depression, anxiety and problematic smartphone use. Computers in Human Behavior, 69, 75–82. https://doi.org/10.1016/j.chb.2016.12.023

Ellis, D. A., Davidson, B. I., Shaw, H., & Geyer, K. (2019). Do smartphone usage scales predict behavior? International Journal of Human-Computer Studies, 130, 86–92. https://doi.org/10.1016/j.ijhcs.2019.05.004

Federman, J. E. (2019). Interruptions in online training and their effects on learning. European Journal of Training and Development, 43(5–6), 490–504. https://doi.org/10.1108/Ejtd-10-2018-0100

Gingerich, A. C., & Lineweaver, T. T. (2014). OMG! texting in class = u fail :( Empirical evidence that text messaging during class disrupts comprehension. Teaching of Psychology, 41(1), 44–51. https://doi.org/10.1177/0098628313514177

Grondin, S. (2010). Timing and time perception: A review of recent behavioral and neuroscience findings and theoretical directions. Attention, Perception, & Psychophysics, 72(3), 561–582. https://doi.org/10.3758/App.72.3.561

Hayes, A. F. (2013). Introduction to mediation, moderation, and conditional process analysis: A regression-based approach. Guilford Press.

Hefter, M. H., & Berthold, K. (2020). Preparing learners to self-explain video examples: Text or video introduction? Computers in Human Behavior, 110, 1–9. https://doi.org/10.1016/j.chb.2020.106404

Hefter, M. H., Berthold, K., Renkl, A., Riess, W., Schmid, S., & Fries, S. (2014). Effects of a training intervention to foster argumentation skills while processing conflicting scientific positions. Instructional Science, 42(6), 929–947. https://doi.org/10.1007/s11251-014-9320-y

Hefter, M. H., Renkl, A., Riess, W., Schmid, S., Fries, S., & Berthold, K. (2015). Effects of a training intervention to foster precursors of evaluativist epistemological understanding and intellectual values. Learning and Instruction, 39, 11–22. https://doi.org/10.1016/j.learninstruc.2015.05.002

Kim, I., Kim, R., Kim, H., Kim, D., Han, K., Lee, P. H., Mark, G., & Lee, U. (2019). Understanding smartphone usage in college classrooms: A long-term measurement study. Computers & Education. https://doi.org/10.1016/j.compedu.2019.103611

McCoy, B. (2013). Digital distractions in the classroom: Student classroom use of digital devices for non-class related purposes. Journal of Media Education, 4, 5.

Mendoza, J. S., Pody, B. C., Lee, S., Kim, M., & McDonough, I. M. (2018). The effect of cellphones on attention and learning: The influences of time, distraction, and nomophobia. Computers in Human Behavior, 86, 52–60. https://doi.org/10.1016/j.chb.2018.04.027

Nokes, T. J., Hausmann, R. G. M., VanLehn, K., & Gershman, S. (2011). Testing the instructional fit hypothesis: The case of self-explanation prompts. Instructional Science, 39(5), 645–666. https://doi.org/10.1007/s11251-010-9151-4

Paas, F. G. W. C. (1992). Training strategies for attaining transfer of problem-solving skill in statistics—a cognitive-load approach. Journal of Educational Psychology, 84(4), 429–434. https://doi.org/10.1037/0022-0663.84.4.429

Renkl, A. (2014). Toward an instructionally oriented theory of example-based learning. Cognitive Science, 38(1), 1–37. https://doi.org/10.1111/cogs.12086

Renkl, A. (2017). Instruction based on examples. In R. E. Mayer & P. A. Alexander (Eds.), Handbook of research on learning and instruction (pp. 325–348). Routledge.

Roelle, J., & Renkl, A. (2020). Does an option to review instructional explanations enhance example-based learning? It depends on learners’ academic self-concept. Journal of Educational Psychology, 112(1), 131. https://doi.org/10.1037/edu0000365

Roelle, J., Hiller, S., Berthold, K., & Rumann, S. (2017). Example-based learning: The benefits of prompting organization before providing examples. Learning and Instruction, 49, 1–12. https://doi.org/10.1016/j.learninstruc.2016.11.012

Rozgonjuk, D., Levine, J. C., Hall, B. J., & Elhai, J. D. (2018). The association between problematic smartphone use, depression and anxiety symptom severity, and objectively measured smartphone use over one week. Computers in Human Behavior, 87, 10–17. https://doi.org/10.1016/j.chb.2018.05.019

Sweller, J., Ayres, P., & Kalyuga, S. (2011). Cognitive load theory. Springer.

Waite, B. M., Lindberg, R., Ernst, B., Bowman, L. L., & Levine, L. E. (2018). Off-task multitasking, note-taking and lower- and higher-order classroom learning. Computers & Education, 120, 98–111. https://doi.org/10.1016/j.compedu.2018.01.007

Wong, J., Baars, M., Davis, D., Van der Zee, T., Houben, G. J., & Paas, F. (2019). Supporting self-regulated learning in online learning environments and MOOCs: A systematic review. International Journal of Human-Computer Interaction, 35(4–5), 356–373. https://doi.org/10.1080/10447318.2018.1543084

Wood, E., Zivcakova, L., Gentile, P., Archer, K., De Pasquale, D., & Nosko, A. (2012). Examining the impact of off-task multi-tasking with technology on real-time classroom learning. Computers & Education, 58(1), 365–374. https://doi.org/10.1016/j.compedu.2011.08.029

Wylie, R., & Chi, M. T. (2014). The self-explanation principle in multimedia learning. In R. E. Mayer (Ed.), The Cambridge handbook of multimedia learning (pp. 413–432). Cambridge University Press.

Acknowledgements

Thank you very much to all students who took part in the experiment and to Carole Cürten for proofreading.

Funding

Open Access funding enabled and organized by Projekt DEAL. No funding was received.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Consent to participate

All participants gave informed consent.

Ethical approval

APA ethical standards were followed in the conduct of the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hefter, M.H. Web-Based Training and the Roles of Self-Explaining, Mental Effort, and Smartphone Usage. Tech Know Learn 28, 1079–1094 (2023). https://doi.org/10.1007/s10758-021-09563-w

DOI: https://doi.org/10.1007/s10758-021-09563-w