Abstract

This paper reports a multiple case study of a training center's collaboration with three offshore companies and a coastline authority. Through a qualitative inquiry, we used actor-network theory to analyze how these organizations commonly understand simulator use. The paper argues that the simulator itself is an actor that can integrate shared interests with other actors to establish an actor-network, and that such an actor-network expands simulator use beyond purely training purposes. It advocates treating the simulator as a medium that aligns maritime academia and industry within the same actor-network, thereby facilitating a process of “meaning construction.” This meaning construction process offers simulator-based training a valuable definition of its learning outcomes. It helps clarify who will benefit from simulator use in the future, as well as when and on what basis. The paper also reflects on the benefits and limitations of using a multiple case study in the maritime domain.

1 Introduction

Simulator-based maritime training serves as an illustrative and paradigmatic example of a domain in which the introduction of high-end technologies, together with new legislative demands, has created new possibilities and challenges for the maritime industry. This is partly due to changes in the work activities themselves: in recent decades, maritime operations have been transformed as ship equipment and technologies have changed rapidly (Lützhöft et al. 2017). Historically, becoming an operator meant working one's way up the hierarchy as a junior member of a team at sea. However, with the growth of simulator-based studies, an experiential gap has opened between juniors and officers (Hanzu-Pazara et al. 2008). An operator may not have the chance to spend time in each position on a vessel, but could instead gain a high level of technical skill, professional knowledge of the bridge team, and communication skills through simulators (Hanzu-Pazara et al. 2010). Thus, the goal of simulator-based studies is to use digital technologies to train operators in ways that are less time-consuming and less expensive (Schramm et al. 2017).

In terms of maritime education, current simulator-based studies can be divided into two areas: marine engineering and nautical science. In both fields, human factors specialists address issues concerning the relationship between humans and technology, such as simulators (Lützhöft et al. 2017). Within these two areas, maritime studies span a variety of subjects, such as organizational studies of marine operations (Weick 1987) and assessments of technology (Lützhöft et al. 2010a, b; Nilsson et al. 2008, 2009; Orlandi et al. 2015). Organizational studies aim to determine ways to train humans to be reliable assets for an organization, whereas technology assessments aim to evaluate a given technology to see how operators can and will perform with it. In the case of simulators, a training method may be developed to help operators adopt technologies, particularly with regard to safety.

Unfortunately, these studies largely offer motherhood statements dressed up as “findings,” for example, that a simulator provides students with vital critical incident management and stress coping skills (Øvergård et al. 2017), or that in simulator-based team training the challenge lies less in integrating simulators than in assessing simulator-based training, and more specifically in assessing non-technical skills (Øvergård et al. 2017). These statements are generally simple and rarely based on a sufficiently detailed analysis of the failings of the practice as it relates to the discipline. They provide no insight into the shortfalls of practitioners' knowledge and methods, nor do they analyze the context of those failings or give an in-depth account of what to do in specific situations. As Kawalek (2008) noted, such studies do not provide new methods or changes to existing procedures of scientific inquiry.

Moreover, we know too little about how to transform simulator-based studies to support the maritime industry. In an academic context, training is commonly understood as teaching a person or an animal a particular skill or type of behavior. However, assessing the effectiveness of training presupposes that the simulator is good enough to support training. Current research indicates that we often overlook how operators actually conduct their work. As a result, simulator use surprises trainers and complicates assessment; for example, if the training scenarios are superficial and isolated, then the simulator is inaccurate (Lützhöft et al. 2017). In that case, we lack methods to assess human behavior with technology, because it is difficult to determine what counts as right or wrong in operators' actions.

Another example is that, although maritime resource management theorists state that training should have two separate objectives, technical and non-technical skills (Schager 2008), non-technical skills cannot exist alone and outside of simulator use. Non-technical skills are also an essential factor in grounding the whole organization of simulator studies in the establishment of safety in all actions. If our knowledge of how to assess human behavior with technology is limited, then assessing training would be futile in both academia and industry. It would thus be hard to convince the industry merely by claiming that our experiments prove operators can benefit from simulator-based studies. Moreover, we would fail to show how simulators can bridge academic outcomes and industry needs, offering only an assumed result instead.

Why does this matter? Because of the lack of knowledge about simulator use, we face two failings. The first occurs in the curricula. In academia, access to training varies. When a human organization meets technology, simulator studies risk becoming a mere “collection of facts” about simulator-based experiments and about methods and techniques for developing simulations, rather than a field of practice underpinned by a methodology that aims to change and “improve” organizations (Kawalek 2008). The simulator is assumed to be a given technology that operators should be trained to use (Weick 1987); this includes, for example, recruiting maritime staff who have been tested on simulators in specific scenarios for military tasks (Pew and Mavor 1998). However, testing staff with simulators can only produce the results of designed experiments. Unfortunately, those results are misunderstood in the maritime domain, where people believe that expertise and competence can be gained through a simulated environment (Pan and Hildre 2018). Thus, converting experimental results into standard training does not necessarily reveal a shared interest among the training center, offshore companies, and the coastline authority, let alone translate it into practice. Simulators are also used for business activities, which leads back to the question of how to assess training.

Second, a failing occurs in practice. Evaluation studies bypass analysis of the failures of the practice as they relate to the discipline. For example, studies on human performance with simulators follow a positivist approach rooted in long-held traditions of the natural sciences (e.g., engineering, computation, mechanics, and mathematics). When simulators are used to recruit operators, it is misleading to claim that verifying and validating a designed experiment can identify operators' detailed competencies, since the results relate only to the simulated scenarios. Moreover, although the International Maritime Organization's (IMO) Standards of Training, Certification and Watchkeeping for Seafarers (STCW) are intended to ensure that future marine operators act properly and safely (International Maritime Organization 2010), it is unclear how the STCW Convention can be adopted in simulator-based studies and how different actors could use it for various purposes.

Another essential question is this: How can simulators be used to deliver learning outcomes, what would those outcomes be, and who can benefit from them? In the present study, we used actor-network theory (ANT) as a theoretical lens to analyze a multiple case study in Norway that involved three maritime organizations. We investigated how to make sense of simulator use so as to bridge the gap between maritime research and industry needs. We applied a qualitative inquiry process to identify problems and possible solutions for both researchers and practitioners. We hope that, through this multiple case study, researchers and practitioners in the maritime domain will reconsider simulator-based studies in pursuit of a holistic understanding of simulator use. This paper is organized as follows: Sect. 2 presents related work. Section 3 presents the theoretical lens of the ANT, which helped in analyzing and outlining the relationships among cases using the collected data. Section 4 describes the methodological position, including the chosen methods and the cases. In Sect. 5, we discuss the case analysis and the findings. Section 6 summarizes the study and points to future directions for simulator studies. Section 7 reflects on the benefits of using a multiple case study in the maritime domain. The paper concludes in Sect. 8.

2 Related Work

In this section, we focus mainly on recent simulator-based studies in the maritime sector, although there are plenty of studies of simulator use in other sectors. The literature on simulators mainly addresses how to train operators to use new technology-based systems, with the goal of standardizing operations. In this sense, maritime simulators are a means for instructors in the training center to put students in “realistic” situations. The aim of using a simulator is to train operators to use the standard display whenever possible, although there is no “standard operation” for safe maritime operations (personal communication with instructors in a training company, 19 September 2017). This has led maritime researchers to believe that simulators could be used to test operations as extensively as possible, covering all areas of operation (Lützhöft et al. 2017; Perkovic et al. 2013; Schmidt 2015). In this vein, maritime academia accepts the premise that evaluating human performance in simulators makes it possible to improve operators' skills. Industry could also benefit from such a research paradigm to increase business value, including reducing the cost of onboard training and increasing the quality of recruiting (ABB 2018). Thus, simulator-based studies in the maritime domain normally fall into two fields: organizational studies and technology evaluation studies.

In organizational studies, researchers are concerned with the role the instructor plays in simulator use. For example, Sellberg (2017) and Sellberg et al. (2018) have argued that simulators are poorly implemented in maritime education, research, and training systems. Research is unclear about how instruction with simulators should be designed and how skills trained with simulators should be assessed and connected to professional practice (Sellberg 2018; Sellberg and Lundin 2017). Through fieldwork studies, researchers have stressed the importance of instructional support throughout training to bridge theory and practice in ways that develop students' competencies (Sellberg and Lundin 2018). These studies were straightforward: their main aim was to investigate the role of instructors in simulator use and to illustrate the relationship among instructor, student, and simulator. However, instructing a novice in simulator use differs from working with experienced operators. For example, experienced operators may be interested in advancing specific skills and preparing for first-time tasks before they go to sea. The main purpose of using a simulator is to train procedure-based skills, allowing people to develop rule-based competence rather than gain experience (Flyvbjerg 2001, 2006).

In evaluation research, studies have mainly been concerned with how to design simulators and vessels. For example, in systems design, Pan (2018) investigated how to use fieldwork to guide the design of simulators; in this respect, it is important to bring operators' work practices into the simulator design process carried out by system developers. Regarding the physical layout of vessels, Mallam et al. (2017) emphasized that a participatory design approach can create an environment in which naval architects, crews, and ergonomists work together to develop human-centered design solutions for ships' bridges and human–machine interaction interfaces. Costa et al. (2017) used activity theory as a theoretical lens to investigate how human-centered design theory can be practiced in a ship design firm. However, their study did not include the work practices of users (marine navigators), as the authors focused only on how designers, human factors specialists, ergonomists, and consultants can design a suitable interface for navigators. The authors aimed to address human factors concerns for future IT design and development in the maritime domain. All of these studies, however, were concerned with design rather than with a broad view of how people will use simulators for various purposes.

Granting that simulator studies merit serious consideration, we could still argue that, although training is useful for teaching people to use a technology, it may not clarify what competencies can be gained through training. There is no pedagogical approach that connects simulator training with business values, such as the quality of recruitment. As a consequence, several drawbacks of simulator studies can be highlighted. For example, there are debates about whether we need to focus on the accuracy of a simulated workplace and incorporate specific requirements into simulators. Among these debates is the assertion that there is no practical way to make simulators fully accurate, since doing so would be expensive and the development process could be complex (Lützhöft et al. 2017). In addition, compared with operators' experiences in reality, simulator-based studies may not accurately capture the important aspects of real operations. Even though operators may be able to relax after a short, intense period of simulator use, particularly after challenging simulated scenarios, it is still difficult to claim that work efficiency is the same as in reality. Furthermore, operators may experience simulator sickness. Researchers have reported that simulator sickness results from mixed signals during simulator use, such as a mismatch between the visual and vestibular systems, which can make even experienced operators feel sick (Lützhöft et al. 2017).

Although these drawbacks of simulator use are supported by evidence, simulators could boost confidence within maritime organizations. We therefore argue that simulators are powerful tools for reducing the risk of otherwise high-risk tasks and can provide business value to the industry, as they do in other fields such as driver training (Burnett et al. 2017; Parkes and Reed 2017), flight training (Huddlestone and Harris 2017), nuclear power plant training (IAEA 2004), clinical training (Shahriari-Rad et al. 2017), and various education programs, such as dental medicine (Sabalic and Schoener 2017), environmental studies (Fokides and Chachlaki 2020), and educational robotics (Ronsivalle et al. 2019). In the aviation industry, simulator training is used to continuously improve pilots' awareness in safety-critical scenarios (Roth and Jornet 2015). However, converting a gut feeling into a scientific answer requires us to determine how to make sense of simulator use so as to narrow the gap between maritime research and industry needs. Studies on simulator use are about more than discovering knowledge about existing phenomena or verifying and validating knowledge; they are also about studying the human learning process (Bourdieu 1977; Dreyfus and Dreyfus 1988; Flyvbjerg 2006). Moreover, to identify human experts and evaluate technology, we argue that unknown phenomena and new concepts, theories, methods, techniques, and methodologies must be explored.

3 Actor-Network Theory

To explore the interplay between simulators and work practice, we based our reflective analysis on the ANT, because it aligned well with the results of our inductive case analysis. First, our analysis revealed that the simulator and the work practices of the three organizations coevolved through mutual influence and interdependency, with human actors clearly distinguishing between work practices and simulation. Second, it showed that the nonhuman aspect of this evolution was key in the design processes of simulators. Thus, the ANT proved to be an appropriate lens for investigating the relationship between maritime simulators and work practices in the three organizations. In what follows, we review the extant literature related to these findings.

The ANT arose in the 1980s in the context of new social constructionist analyses of scientific knowledge. The theory has gained purchase in a number of fields, but its roots can be traced primarily to the social studies of science. It is, however, more of a multidiscipline than a discipline, drawing on various social scientific and philosophical traditions and extending across numerous empirical areas. Developed by Callon (1986), Latour (2007), Law (2009), and others, it can more technically be described as a “material-semiotic” method (Law 2009): it maps relationships that are simultaneously material (between things) and semiotic (between concepts), on the assumption that many relationships are both. For example, ANT scholars have been particularly concerned with tracing how laboratory practices, and the materials that circulate through the space of the laboratory, serve to accredit scientific knowledge as “objective.” The laboratory is thus seen as a central venue in which texts, materials, and skills are combined, primarily to produce texts potent enough to interest and enroll other actors (i.e., scientists, funders, regulators, and publics). In this way, these actors come to play their part in extending the networks of the laboratory scientist.

From this brief sketch, we can derive a number of key characteristics of the ANT. First, scientists are not seen as having “direct” access to nature; rather, they derive or construct scientific knowledge through activities that marshal actors, including human, nonhuman, and textual elements. The ANT provides a language to describe how, where, and to what extent technology influences human behavior. This is valuable when identifying the influence of seemingly grey and anonymous technical components, such as nonhuman and textual elements. In particular, it allows the ANT to zoom in and out of a situation as suits the present purpose. Second, if actors can be successfully aligned, that is, enrolled in the scientist's project, then a network can be established and rendered durable. For the ANT, therefore, society should not be represented in the traditional social scientific format of a dualism between human actors and social structures. Rather, “society” is composed of humans and nonhumans who are aligned in networks to varying extents. Instead of a model of society organized around levels or in terms of depth, society emerges as a flat network of associations. Third, the ANT emphasizes the importance of nonhuman actors and does not treat the social as a means of explanation. Rather, the social is viewed as the outcome of heterogeneous processes. Human and nonhuman actors together contribute to the production of society. It is not possible to say a priori whether human or nonhuman actors came first and played out in a society. It is only through close empirical study that the practically prominent actors can be identified, though it is not always easy to determine whether these are social. To describe these processes, the ANT draws on a battery of seemingly abstract terms, such as actor, translation, association, enrolment, and actor-network.

The ANT treats everything as actors and the relationships among them (Latour 1999). In information systems research (Aanestad 2003; Cordella and Shaikh 2003; Hanseth et al. 2004; Latour 2004; Moser and Law 1999; Storni 2012), researchers have primarily focused on the work routines and norms of labor in the workplace. In our study, however, we aimed to address four concepts: actor, translation, actor-network, and alignment. An actor, which can be human or nonhuman, is seen to stand by itself as an ontologically valid entity (Alexander and Silvis 2014). Any actor can ally with any other actor, but for two actors to have a relationship, they must understand each other. Translation refers to one actor making itself understandable by framing its own meaning or interest in terms of another actor's frame of reference (Alexander and Silvis 2014). Actors that share an interest at a given moment thereby establish a network, called an actor-network, and the process of establishing this network through shared interests is called alignment. Applying the ANT to simulator studies therefore means tracing how actors translate and dynamically align their purposes within actor-networks until their tasks are achieved within some social order. Moreover, ANT theorists are interested in defining and redefining the ANT to avoid a simplistic conceptualization of a network, and they are keen to translate the relationships of the alliances that actors establish. For example, researchers have used the ANT to model online communities so that system designers can understand the medium through which their users approach these systems (Potts 2008). A typical example is Tsohou et al. (2012), who used the due process model with an ANT rationale to enhance the practice of aligning actors and translating actions, creating diagrams to demonstrate information security awareness.
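To make the four concepts more concrete, the toy model below represents actors, translation, and alignment as a small data structure. It is an illustrative sketch under our own simplifying assumptions, not a formalization from the ANT literature and not an analysis tool used in this study: the `Actor` class, the `translate` and `align` functions, and all actor names and interests are hypothetical. Translation is modeled as re-expressing one actor's interests in the focal actor's vocabulary, and alignment as enrolling every actor whose translated interests overlap with the focal actor's.

```python
from dataclasses import dataclass

# Toy model of four ANT concepts (actor, translation, alignment, actor-network).
# All names and interests below are hypothetical illustrations, not study data.


@dataclass(frozen=True)
class Actor:
    """An actor in the ANT sense; human and nonhuman actors are treated alike."""
    name: str
    kind: str  # "human" or "nonhuman"; the model gives neither kind priority
    interests: frozenset


def translate(actor, frame):
    """Re-express an actor's interests in another actor's frame of reference.

    `frame` maps terms in this actor's vocabulary to the other actor's terms;
    interests with no mapping are kept unchanged (a simplifying assumption).
    """
    return frozenset(frame.get(interest, interest) for interest in actor.interests)


def align(focal, others, frames):
    """Enroll every actor whose translated interests overlap the focal actor's.

    Returns the resulting actor-network as a list, with the focal actor first.
    """
    network = [focal]
    for other in others:
        translated = translate(other, frames.get(other.name, {}))
        if translated & focal.interests:  # at least one shared interest
            network.append(other)
    return network


# Hypothetical actors loosely inspired by the organizations in this study.
simulator = Actor("simulator", "nonhuman",
                  frozenset({"assess competence", "practice scenarios"}))
training_center = Actor("training center", "human",
                        frozenset({"practice scenarios"}))
shipping_company = Actor("shipping company", "human",
                         frozenset({"select for promotion"}))
authority = Actor("coastline authority", "human",
                  frozenset({"recruit pilots"}))

# Hypothetical frames: how each actor's interest reads in the simulator's terms.
frames = {
    "shipping company": {"select for promotion": "assess competence"},
    "coastline authority": {"recruit pilots": "assess competence"},
}

network = align(simulator, [training_center, shipping_company, authority], frames)
print([actor.name for actor in network])
# ['simulator', 'training center', 'shipping company', 'coastline authority']

# Without translation, the shipping company shares no interest and is not enrolled.
print([actor.name for actor in align(simulator, [shipping_company], {})])
# ['simulator']
```

The last call shows the role translation plays in this toy model: without a frame that maps the shipping company's interest onto the simulator's, no shared interest is found and the company stays outside the actor-network. Placing the nonhuman simulator at the focal position mirrors the paper's argument that the simulator itself can act as the actor around which others align.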

We also assume that there is no difference between the social and the physical world in marine operations with regard to simulator use. The ANT was therefore a useful theoretical lens for analyzing simulator use among the offshore companies, the training center, and the authority, and for evaluating the relationships they engaged in within their respective workplaces. The workplace, which is integrated into simulators, also consists of actors in social, subjective, or objective alliances with other actors, which may be fictitious or real and physical. This is relevant for making sense of simulator use, in order to develop a comprehensive understanding of how different organizations can shape and reshape maritime research, to the benefit of all, through the use of simulators.

4 Methodology

We used a multiple case study design. A multiple case study includes more than a single case and is often likened to conducting several experiments, which helps researchers understand the differences and similarities among cases (Stake 2005). In this way, researchers can clarify whether their findings are valuable (Eisenhardt 1991) and strengthen their contribution to the literature by drawing on the contrasts and similarities among the cases (Vannoni 2015). Interpretations drawn from a multiple case study can yield a more convincing theory, because the propositions are more deeply grounded in several empirical cases (Stake 2005). The multiple case study thus allows for a wider exploration of research questions and for theory development (Eisenhardt 1991). Accordingly, we were able to analyze data within each situation of maritime simulator use and across multiple situations, identifying similarities and differences among cases and contributing these comparisons to the literature. Filling the gap between maritime research and industry requires an overview of how different organizations make sense of simulator-based training in their working contexts; a multiple case study helps to investigate similarities and differences within and across these organizations.

The most important element in multiple case study research is the “quintain.” A quintain is defined as:

In multiple case research, the single case is of interest because it belongs to a particular collection of cases. The individual cases share a common characteristic or condition. The cases in the collection are somehow categorically bound together. They may be members of a group or examples of a phenomenon. Let us call this group, category, or phenomenon a “quintain.” (Stake 2005, p. 4)

As previously mentioned, the use of simulators for various purposes is the phenomenon we wish to investigate. That is our quintain: the target collection through which we seek to understand how people make sense of simulator use. This does not mean, however, that we selected cases for one specific purpose. Rather, this study was part of a broader program of simulator studies in line with the first author's (hereafter, the researcher's) research plan. The researcher studied nine cases situationally related to the quintain (Stake 2005). However, those cases were not necessarily organized around the researcher's long-standing research question, namely how to incorporate the daily use of maritime technology at sea into the simulator design process with systems developers on land. To some extent, each case was organized and studied separately around its own research question. As noted, the gap between organizational studies of marine operations and assessments of technology bypasses a fundamental issue: although simulators are now a dominant technology, it remains unclear exactly how the technology can best be used and how people make sense of it. Three individual cases were therefore of interest, since they could reveal important information about how machinists test simulators and about the different perspectives on simulator use, such as motivations and activities, that exist in maritime organizations. Moreover, in contrast to Yin's (2009) positivistic paradigm, the present study did not presuppose how different organizations use simulators in order to test a hypothesis. Instead, the researcher aimed to interpret (Stake 1995) how different organizations make sense of simulator use. By placing the simulator at the center, he combined these understandings to suggest research directions for simulator studies. He spent two and a half years on the cases while working vigorously to understand each individual case.

For multiple case research, the cases need to be similar in some ways (Stake 2005). Consequently, the present study set out to collect and analyze empirical data about simulators in the work practices of three organizations, focusing on the use of simulators to evaluate operators' competency. Following Stake, the study made conscious use of existing knowledge and experience in conducting the case research, while giving preference to the collected data. The study was thus concerned with understanding reality and making sense of the world in order to share meanings in the form of intersubjectivity (Stake 2005).

4.1 Data Collection

The researcher collected the data from autumn 2016 to spring 2018, in several stages. In autumn 2016, the researcher conducted semi-structured interviews with operators who had participated in the six experiments, interviewing one operator at a time. Six operators participated in total; the interviews lasted 8 h altogether, with an average of 1 h per interview. The purpose was to understand how the operators made sense of simulators during their operations and how simulators could help their work. The researcher then conducted open interviews with the instructor and the mechanist to understand what role simulators played in their work practices when they set up experiments in simulators. The researcher interviewed the instructor twice and the mechanist once; these interviews lasted 3 h in total, with an average of 1 h per interview.

In autumn 2017, the researcher visited the crew departments of the largest shipping companies in Norway. Crew departments are responsible for recruitment and job promotion and are located in different cities in western Norway. The researcher conducted semi-structured interviews with members of the crew departments to learn how they handled promotions and how simulators were involved in the promotion process. Five human resource managers participated, one per interview; each interview lasted 2 h on average, and the interviews with the crew departments amounted to 27 h in total. In addition, the researcher conducted telephone interviews with three captains affiliated with the three departments, using semi-structured questions that corresponded to those used with the crew departments. The aim was to understand how captains made sense of simulators in relation to job promotion and how promotion occurred. These interviews lasted 7 h in total, with an average of 2 h each. Next, the researcher interviewed four operators who were due to be promoted at the time about their perceptions of promotion, in order to investigate whether captains, crew departments, and operators differed in how they addressed promotion and the use of simulators in the process. The same type of semi-structured interview was used, with one operator per interview; the average interview lasted 1.5 h, and these interviews lasted 9 h in total. Furthermore, the researcher conducted informal interviews with instructors in the training company to outline the relationship between simulator use in the university and in industry, which could help show how simulators have similar or different meanings in the two contexts. These interviews were conducted intermittently, mainly during lunchtime, for a total of 4 h.

In spring 2018, when the coastline authority decided to use a simulator-based test in recruitment to fill a marine pilot position, the researcher observed the coastline officer using simulators to select candidates. The researcher observed one session, lasting approximately 30 min, to discover how the coastline officer made decisions when candidates used simulators to complete a scenario-based task. The researcher also conducted a 1-h semi-structured interview with the coastline officer about how he made these decisions. In addition, the researcher conducted open interviews with the captain and the pilot, lasting approximately 1 h, to investigate their perceptions of simulator use in the recruiting process. After the recruitment, the researcher also talked with an instructor about how scenario-based simulator use could support recruiting.

4.2 Data Analysis

The interviews and observations conducted from 2016 to 2018 yielded 73 pages of transcripts. In addition, the researcher kept a field diary documenting thoughts and reflections based on the observations, which proved valuable for subsequent analysis. The transcripts were then analyzed through an iterative open coding process following the guidelines of Corbin and Strauss (1990). Data analysis was conducted jointly by three authors. To reduce the inherent creative leap (Langley 1999), we broke the analysis into distinct stages. First, open coding began by describing, at a conceptual level, the meaning expressed in different parts of the data. For example, when informants mentioned experiences with simulators and work practices while reflecting on their everyday work, we grouped these under the categories “simulator experience” and “work practice experience.” Second, categories were developed for each of the three cases, and we then compared similarities and differences across the cases to ground our findings. Finally, our inductive analysis was informed by our long-standing research interests in maritime work practices, maritime technology, maritime training, and technology design, which had drawn us to the case of the “reality” of simulator use. Because the interviews were audio recorded, the researcher and the interviewees made an oral agreement that transcripts would be rephrased for ethical reasons. Field notes were also taken during the interviews to capture body movements and particular emotions. Importantly, these notes helped the researcher identify which parts of the transcripts needed particular attention because they contained personal expressions or perceptions that should not be identifiable outside this research. Thus, the quotes in this paper are rephrased aggregations of different material.

Moreover, working inductively, we drew on multiple data sources, such as interviews, observations, and informal discussions, when interpreting the findings. This gave us a moving picture of how simulator use was evaluated in various situations across the three organizations. Ribes (2014) describes how such methods can be conceptualized and used as scaling devices, which help researchers understand large-scale, complex situations and better investigate diversity and growth within sociotechnical systems. This also helped the authors control the quality of the eight-year research project.

4.3 The Empirical Setting: Three Organizations

Maritime academia has gradually grown in size and has become more specialized since the late 1960s. With this growth and specialization, it is becoming increasingly important for the university to provide students with knowledge and skills in simulation design. In turn, simulators can help train and educate nautical students, giving them supplemental experience before they work in the industry. The value and innovative potential of simulators in the maritime sector were investigated in a collaboration between the university and the training center, through the development and use of a simulator in maritime education. The simulator featured solutions to support training nautical students in safety-critical working environments, effective working, and teambuilding. Simulator use varies across organizations, as illustrated below.

-

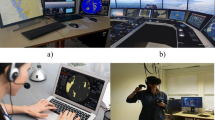

Training Center The typical use of simulators at a training center is for the purpose of education. The simulator is used as a tool for teaching skills. The training center views training as a fast and economical way to educate enough marine operators to have solid skills for industries, such as offshore and merchant shipping, fishery, and tourism. The simulator is also a research platform for multidisciplinary researchers. Since the training center belongs to the university, it is a platform for mechanists, social anthropologists, computer scientists, social scientists, human factor specialists, interaction designers, and philosophers to study marine operations and navigations for future generations of simulator building.

- Offshore Companies: The offshore companies mainly use simulators to update operators' knowledge of marine operations. They are also interested in using simulators for job promotion. Although they hold different opinions on simulator use, these companies accept that training can serve some business purposes. In practice, however, they apply their own criteria when promoting marine operators.

- The Coastline Authority: The coastline authority is a new user of simulators. To choose the best candidate, with both technical and non-technical skills, for the position of marine pilot, the authority has chosen to use simulators because they are less time-consuming and offer better observation and interview methods for assessing candidates in the work context. For example, candidates can be presented with scenarios simulating different situations.

4.4 The Norway Case

Against the background of the three organizations, we now present how simulators were used in the three individual cases that made up our multiple case study. The participants are presented to help readers understand their interest in simulator-based studies. Although each case has its own specific story, recording the participants' interests helped us connect these stories into a whole and make sense of simulator use.

4.5 Assessment Case: Integrated Marine Operation Simulator Facilities for Risk Assessment

The university has set out to develop a new integrated architecture for planning and executing demanding marine operations, with corresponding risk evaluation tools that take human factors, particularly awareness, into consideration. The aim is to serve the industry by improving operational effectiveness and safety with simulators. In this case, the training center provided simulators and recruited operators to assist with research activities.

In 2016, the mechanics lab at the university wanted to recruit operators for an experiment to determine whether an eye tracker could be integrated into simulators to reduce risky behavior in marine operations. The purpose of using the eye tracker was to investigate whether such a tool could tell the mechanist how operators use simulators and where they look during an operation. The instructor helped create a scenario for the simulators. The mechanists then used the collected eye tracking data to map the areas of interest (AOIs) of the experienced operator for instructing non-experienced operators, on the assumption that non-experienced operators could copy the experienced operator's AOIs to improve their skills. Several individuals participated in this case, including instructors at the university, experienced and non-experienced operators, the researcher, and a mechanist. Six experiments were conducted, and the researcher took part in them to observe and interview the operators.

4.6 Promotion Case: Promoting Mariners from a Lower Position to Officer on Vessels

In 2017, the training company conducted a series of studies on how to use a simulator to help offshore companies promote operators from a lower position to first officer. The studies were concerned with the extent to which technical and non-technical skills affected this promotion. However, this question proved difficult to study in the companies because, in most cases, the decision was based on the captain's recommendation. Crew departments at the companies, captains, operators, and the researcher therefore participated in this case. Yet neither the crew departments, the captains, nor the training company could define the most important requirement for promotion. In addition, there was no shared sense of what non-technical skills were and how they affected the promotion process, so it was unclear how to use simulators in promotion. This study therefore explored these unfamiliar problems with captains, crew departments, operators, and instructors at the training center, in order to understand how simulators could help with this aspect of the industry in practice.

4.7 Recruiting Case: Selecting Captains to be Pilots for the Norwegian Coastline Authority

In the spring of 2018, the coastline authority decided to use a simulator to help recruit pilots because it believed simulators could reduce selection time and make it possible to check more of the candidates' competencies. However, the coastline authority had limited knowledge of how to set up scenarios to assess the required competencies. Nevertheless, the authority and the training center set up scenarios to see under which conditions the candidates failed when using the simulator. They believed that the selection process could draw on such activities and even help people advance from their current level of ship maneuvering. Although the training center could help set up scenarios in simulators, instructors had no guidance on which competencies related to technical or non-technical skills. Instead, instructors could only map out whether a candidate had a good theoretical understanding of ship maneuvering and could apply that knowledge in simulator-based studies.

Thus, if a candidate performed well in both theory and practice, the authority assumed the candidate could be chosen as a pilot. In addition, before the candidates took part in the simulator studies, a psychologist administered a survey to help the authority identify how well they could work in a team. After the simulator studies, the authority reviewed all of these results to decide who would become the pilot and how the other candidates could improve their operations using the simulators.

5 Case Analysis and Findings

5.1 Assessment Case

On the day of the experiments, six mariners with varying levels of experience participated. Only one operator had several years of experience with crane operations; the rest had limited experience and had just begun their careers in the maritime industry. In this experiment, the mechanist wished to investigate the hypothesis that “If the eye tracker can record areas of interest during operations of an experienced operator, we could outline such areas for novice operators to learn safe operation.” The experienced operator was asked to operate the crane first, and an eye tracker recorded his eye movements. During the operation, the researcher conducted an informal interview. In the excerpts below, R refers to the researcher, EO to the experienced operator, M to the mechanist, I to the instructor, and NO to the novice operators.

R: Do you see any challenges to this scenario you are working on?

EO: No, it is a simple case. My task is to move the cargo from this place to that place. [He pointed within the simulator environment.]

R: How do you think this simulator helps to practice your skills of crane operation?

EO: I think it helps to refresh my work if I have not worked at sea for a long time. However, I also think the simulator may not fully simulate the true feeling of being at sea. For example, do you see that cargo? I try to lift it up. It should have weight, and if I put it into the water, it should sink before I release the hook. But it does not. So, it misleads me into thinking that I do not need to release the hook quickly. However, if you do the same thing on the vessel, it will be dangerous. But in the simulator, you can notice this issue and adjust your behavior quickly.

R: How do you think your behavior changes when working with the simulator?

EO: Well, you should never expect simulators to be accurate enough. However, if you think of the “flaw of simulator” as a checkpoint of operators' capabilities, then it is a good tool to help operators practice many skills. There is no risk in simulators; you can try any errors, technical or non-technical ones.

R: But how do you think the eye tracker could help with these errors?

EO: Ah, the glass [eye tracker]. It is a good tool. However, as I said, if you could point out each checkpoint in a scenario, it may be useful to record information by the glass. Otherwise, I do not believe the glass can help with this because I noticed some important factors the glass cannot capture, for example, my glance. I have to look for some information elsewhere, and your glass cannot capture that.

It seems that inadvertent mistakes in scenario-based simulator use, such as the missing cargo weight, help identify checkpoints. In some respects, a checkpoint can be interpreted as an indicator of operators' competence. However, this finding was overlooked because the experiments were designed to check whether an eye tracker could help avoid human errors, rather than to map the relationship between checkpoints and competence. Nonetheless, the researcher also talked with the mechanist, who was recording eye tracking data on video beside the operator.

R: Have you recorded eye movements?

M: Yes. I think everything is recorded.

R: But, have you noticed that he claims the simulator does not simulate the weight of the equipment? Can you record that in your video for later analysis?

M: I do not know. Maybe.

R: Can you just observe? Maybe he uses his glance to obtain information?

M: Eh, I think we cannot capture it. Because… the simulator room is dark. I assume that information may be less interesting because we set up the experiment such that the interesting areas are located in front of the simulators, such as screens and the simulated environment outside the crane room.

It is clear that the mechanist was interested in how a simulator should be used. This interest overlapped to some extent with that of the experienced operator: the use of the simulator as a tool. However, there was also a difference between them. The experienced operator assumed that the simulator could also be used to determine whether he could handle various errors that he could not practice in reality. This prompted the researcher to investigate further, so he questioned the mechanist and the instructor.

R: Did you notice the weight of the cargo? Do you think it is important?

M: It might be a mistake of the simulator. However, it does not matter since, if we have the AOI, it will help the non-experienced operators to avoid mistakes. The important thing here is how non-experienced operators can learn from the experienced one.

I: Yes, I agree. It should not be the case. Maybe next time the simulator should be improved regarding this problem.

The simulated environment may have been part of the problem; however, the eye tracker indeed did not capture glances, and this limitation was not due to the environment: the tracker had not been designed for this function. The experienced operator doubted that his glances could be captured and used to train non-experienced operators. For both the mechanist and the instructor, however, glance information was not among the most significant areas. According to the instructor:

I: The glance information may be important. However, that may also be part of experience. Experience is difficult to train in simulators. It takes time when operators work at sea.

The instructors and the mechanist designed the experiments according to the principle of bridging the distance between theoretical knowledge in nautical textbooks and practical skills in simulators. Thus, after the experiment, the researcher asked the novice operators the same questions to understand how they made sense of simulators in their work activities.

R: Have you always copied the experienced operator's operations?

NO: Not 100%. Some information we are unable to follow. The video shows only what he is looking for, but some information we need is out of the scope.

R: Did you notice that there is some information on the screen in front of you, which is placed on the right armrest of your chair?

NO: Yes, but that is not in the video for copying, is it? I mean the one the instructor shows us.

R: No, it isn’t in the video. However, beside this, what do you find interesting and important for you?

NO: We think it is not just what he [the experienced operator] looks at; we should also know how to process that information. This is important. This is outside of the ability of simulators since we do not know how to process information. The simulators look like big games without a help guide. However, did you see there is a bug in the simulator? There is no weight of the cargo. That made me think, should I check the crane if the load is too heavy? Too light? What will happen? Did you make it on purpose?

From this discussion, the researcher realized that the checkpoint may be an important resource because it helps operators reflect on what they should be aware of and requires them to practice their skills in handling an error so that they do not fail. Continuing this line of inquiry, the researcher again asked the instructor about the purpose of using different scenarios with simulators, in order to investigate how the instructor made sense of simulator use and what goal the instructor had in mind. The instructor replied:

I: I want to help check if the operators are able to handle some difficult tasks in comparison with the knowledge they learn from theories. In this case, the simulator is a good platform for training operators to avoid risks.

It seemed there were different interests concerning how to use a simulator for a specific purpose. If we consider the mechanist, simulators, operators, and instructors as being in the same actor-network, then a common interest in that network is missing. For the mechanists, simulators are experimental platforms on which they can test different devices. For operators, simulators are places to exercise; they can try out systems and equipment and take risks to perform operations that they could not perform in reality. For instructors, simulators are training tools. No common interest emerged in the actor-network, perhaps because the experiment oversimplified simulators as mere tools. Integrating eye trackers is important for avoiding risks, but we should recognize that simulators also have an interest in training different scenarios, which can be understood as the experience of “other actors.” Simulators indicate checkpoints that may help other actors redirect their interests in the actor-network towards a systematic simulator study. However, the experiments allowed us to focus only on how individual actors create interest in their own fields. Through such experiments, we might find several devices that are helpful for training activities; however, we will miss the sensemaking of simulator use, such as why theoretical knowledge can be translated into practical skills. This gives rise to further questions: What role do checkpoints play in this process, and how can checkpoints help us understand which competencies operators have?

5.2 Promotion Case

If the previous case drew our attention to the interests in simulators, then the promotion case highlighted what should be considered when using simulators to link checkpoints with competence. In the following excerpts, CD refers to the crew departments, C to a captain, and MO to a marine operator. The interviews began by establishing the current promotion process in the three companies. The crew departments said they did not choose candidates directly, regardless of whether the candidates had trained with simulators. They promoted only people recommended by the captain when a position opened. The reason was that captains work closely with the candidates and know their work better than anyone else. Our analysis therefore began with this information and expanded to the role the simulator played in this process.

The researcher then investigated whether there were other requirements for promotion, such as certificates indicating that candidates had an educational background in marine operations. The crew departments stated that certificates were a prerequisite, but usually only a basic one that candidates had to meet before they could apply for promotion. The CD replied:

CD: We do have requirements for all our employees to obtain certificates from the universities and finish their education before they can apply for a position in our company. After that, we do not check any certificate but believe they are skilled.

This was an interesting answer because operators holding certificates would, of course, have had a background in simulator-based training. The researcher then asked, “Besides the certificates, do you know how captains make a recommendation?” The purpose of the question was to investigate whether promotion was independent of simulator-based training and which elements were taken into account in the process. The crew departments gave various answers to this question. Some had a form they asked captains to fill out; others had no form and waited for the captain to call back and report on the operators' performance. To investigate further, the researcher put the same question to the captains. One captain replied:

C: We have annual evaluation for our staff. If there is a form, I will fill it out. If the company does not give forms, then I will use my rules to evaluate. The form is straightforward. As you might know, that includes questions about technical skills, social skills, and safety and security issues. However, people vary in thoughts; thus, I only promote a candidate who I am familiar with. The reason is simple: it is easy to check whether technical skills, safety, and security matters meet the requirements. However, it is difficult to make a conclusion to say who has good social skills. Meanwhile, social skills are extremely important. Thus, I have to use my rules to make a decision. I believe there is no standard to do the same task. [The authors rephrased the response.]

Captains also said that, to avoid bias, first officers were involved in the evaluation process. However, when the researcher asked the first officers the same question, they replied that they rarely took part in such a process. Thus, where companies lack a standard evaluation approach such as a form, varying understandings of social skills enter into the evaluation. In such cases, anyone could claim that an operator with an open personality is better than an introverted one, or that an introverted operator is poor at context-based interaction with other operators. Social skills in such cases go beyond our normal understanding of what “social” means.

However, operators reported that social skills concern context-based inquiries in operations. During the interviews, operators stated that social skills should be defined in a measurable form. Thus, simulators could support such contexts during the inquiry process concerning social skills, such as communication, awareness-based activities, and cooperation. With this in mind, the researcher returned to interview the instructors.

The instructors likewise had no specific understanding of social skills. Although the training center had used simulators to train operators as a team for some specific tasks, it had not defined which activities in simulator use should be considered as requiring social skills. It is therefore not difficult to understand why the industry thought simulators were just tools for training and, to some extent, why it did not care whether a candidate had trained with simulators before promotion.

From the ANT perspective, this indicates that, although simulators, crew departments, captains, operators, and instructors belong to the same actor-network, their interests vary. Because of these varying interests, it is unlikely that they can be aligned within that actor-network. Although using simulators is a common interest among these actors, how to map the relationships between skills and competencies remains vague. The core challenge is how to define social skills and link them to the work context. For the crew departments, the interest in simulator use was that it could help them identify who was qualified to be promoted, rather than serving only as simple training. This interest could readily be translated into a request for the training center to investigate. Integrating checkpoints with an operator's technical skills in different scenarios is simple; however, associating relevant social skills with simulator use requires instructors, crew departments, operators, and captains to share a common understanding. For captains, the interest was to investigate to what degree operators could meet the requirements for promotion. If social skills are to be included in simulator-based training, they should be related to the technical skills being tested, since the two are connected. For example, there is a strong link between communication and visual focus in marine operations when operators must collaborate as a team in simulators (Pan et al. 2018); it is therefore improper to treat “social” as covering every social interaction among people. Instead, we may need to narrow it down to social skills that are bound up with technical skills in simulator use. In this way, the essential factors of simulators (checkpoints, technical skills, and social skills) and training can be aligned as a whole for understanding operators' performance. Therefore, we should avoid treating the promotion process as an experiment-based evaluation of human performance; rather, it is about identifying specific areas in training that help operators practice their skills.

5.3 Recruiting Case

If the previous two cases help us understand how a simulator should be used, then the third case helps identify a requirement for maximizing the value of simulators. We know that simulators can help with choosing candidates, but we must investigate in detail how to do so. In this case, several participants were involved in the selection process, including the coastline authority's officers, a psychologist, a professional pilot, a human resource (HR) manager, captains, the university's simulators and instructors, and the researcher. Officers usually sat in front of monitors to observe how a candidate completed the work activities. Captains and professional pilots worked with the candidate to simulate scenarios that the candidate might face in real work. Simulators were provided by the training center, and instructors helped create scenarios and identify which theories of marine operations related to them. The psychologist assessed whether the candidate could pass general job tests of the kind most companies use. Thus, the decision was mainly based on how captains and professional pilots reflected on their collaboration with the candidate and on the candidate's performance as observed by the authority's officers. The problem, however, was that the decision should also reflect which competencies were encountered in each simulator-based selection session, so as to help people improve their overall competence. This problem became an obstacle for both the instructors and the authority. As the authority's officer and HR manager stated [the authors rephrased]:

We identify what kind of skills the candidates have and try to rank them by our judgement. We think the evaluation of the candidate in simulators should be subjective, rather than objective. Thus, there is no standard way to choose a candidate regarding his or her skills. However, a simulator should help to show his or her behavior in front of us; in that case, we could reflect on their ability. This helps shorten the recruitment process, which normally takes a few years, to a few months and reduces its cost.

Although we agree that the evaluation should be subjective, it is a relativistic inquiry process (Bernstein 1983) conducted through simulation. Indeed, the instructors only provided scenarios and instructions, and observation alone is insufficient for the authority to decide who is better than others. Moreover, a general job test could do little to help the psychologist conclude that a candidate would perform excellently in the working context. This may result in misjudging how well candidates could work as team players with captains and the professional pilot. In the past, selection methods that could take months or years to choose a candidate were successful because everyone could reflect on their collaboration with the candidates (interview with a captain). Although it was a long process, it built trust between the candidates and the authority, so that years later a candidate could become a pilot (interview with captains and authority). Now, if simulators are used in the selection process, we must clarify which competencies can be evaluated and through which simulator-based selection scenarios. We must also understand which competencies exist within the process. This interest in simulators goes beyond traditional simulator-based training and adds an element of inquiry that requires instructors to fully understand the importance of clarifying how simulators could serve several modules in each simulator-based training session. This again raises essential questions: What competencies can be investigated? What skills are involved in those competencies? How do we rank the competencies, and according to which rules and regulations? How do we combine different modules for different candidates? One captain stated:

We have several rounds of simulator-based training in this process. But, we do not have a clear picture of how a simulator can help identify specific competencies. We need to categorize, group, and filter different packages for some specific purposes.

If we see simulators, instructors, and the authority as an actor-network, then the simulator must be the core actor for the network to be established. According to the instructor, we see many small actor-networks: for example, the network of simulators and instructors, the network of simulators and the authority, and the network of candidates and simulators. These networks have their own interests, and these interests do not necessarily add up to a shared interest. As Nagel (1979, pp. 4–5) has argued:

Not all the existing sciences present the highly integrated form of systematic explanation which the science of mechanics exhibits, though for many of the sciences—in domains of social inquiry as well as in the various divisions of natural science—the idea of such a rigorous logical systematization continues to function as an ideal. But even in those branches of departmentalized inquiry in which this ideal is not generally pursued, as in much historical research, the goal of finding explanations for facts is usually always present. Men seek to know why the thirteen American colonies rebelled from England while Canada did not, why the ancient Greeks were able to repel the Persians but succumbed to the Roman armies, or why urban and commercial activity developed in medieval Europe in the tenth century and not before. To explain, to establish some relation of dependence between propositions superficially unrelated, to exhibit systematically connections between apparently miscellaneous items of information are distinctive marks of scientific inquiry.

In line with Nagel (1979), we argue that the lack of common interest among the different actors in current simulator studies diminishes the value of simulators in filling the gap between academic outcomes and industry needs. If we take a broader perspective on how simulators could be used differently, we can determine a strategy for integrating a common interest in simulator use for all potential actors. This would enable a systematic inquiry into human values in technology activities and allow suggestions to be made to each participating actor in the network. Moreover, it would then be possible to better link the checkpoint, technical skills, and social skills to the competencies the industry requires.

6 Rethinking Simulator and Work Practices in Maritime Organizations

Comparing the experiences across the cases, we found similarities in how candidates were assessed, promoted, and recruited. Although technical skills offered convenient and standard criteria for assessing the quality of work with systems, devices, and equipment, they revealed little about the social aspects of work practices (non-technical skills). In practice, although the technical skills were documented in certificates showing that the candidates were capable of conducting the work, these skills were intertwined with the social skills the candidates practiced every day. For example, when operators were asked to perform crane operations, they also displayed their social skills by identifying the risks and flaws of the simulators and by showing how they solved the resulting problems. In the promotion case, for instance, crew departments always brought captains’ reports to the table; these reports were the main resource they could use. They did not rely solely on certificates, because certificates could not fully reflect the candidates’ skill levels, nor did they document the candidates’ social skills in the work context. From an ANT perspective, although several small actor-networks surround or involve the simulators, the simulator as an actor does not become the spokesperson (Law and Hassard 1999) that enables other actors to engage in the network. Because simulators, as nonhumans, are not seen as actors, the meaning-making process of simulator use is overlooked. As a result, we may run experiments repeatedly without offering those outside academia an understanding of how a simulator should be used.

In addition, it is a challenge to use simulators to make decisions in organizations. Candidates may have an excellent theoretical understanding of marine operations yet fail in their simulator-based studies. Likewise, candidates may be successful with simulators but have a limited understanding of the theories of marine operations. When comparing the three cases, we found that using simulators for training purposes is very different from using simulators for business purposes. As shown in the assessment case, it was impossible to tell whether the candidates could follow the experienced operators, since not all information, such as glances and the experience of lifting weights, was available to them. This means that it is difficult to tell a rich and full story about whether or not a candidate is good at a specific operation. Moreover, a promotion that is not based at all on the opinions of qualified observers would be unfair. It is a good idea to ask captains what they think about the candidates’ qualifications, since they work closely with them. However, identifying for promotion a candidate who is a team worker and can demonstrate the various skills required for all specific tasks is a challenge for captains as well. The captain cannot always follow the candidates and is not trained to evaluate others (interview with captains). Unfortunately, although simulators are considered when selecting candidates, we cannot guarantee that the selection process is either reliable or supportive. Thus, we must acknowledge that we have failed to determine in what way simulators are useful in both technical and social skill practices.

Similarities in terms of goals for future simulator use are evident in the actors’ interests in being able to use simulators to select relevant competencies. The industry agrees that it is a challenge to determine what a company needs from simulator-based studies. However, companies can state which competencies they want in their workplace. Instructors could then set up the simulators to incorporate these different interests into different scenarios. Such a process involves indexing and developing, via simulators, specific competencies that match the needs of the training company. In turn, simulator use, competencies, and skills are connected in a triangular relationship that supports an inquiry process of making sense of simulator use. It may be argued that making sense of simulator use requires rethinking simulators and work practices in maritime research and studies. Simulator-based studies and research are sociotechnical entities that must be designed as a whole. As discussed previously, different interests are involved in the use of simulators in maritime organizations. From an ANT perspective, the training center’s current orientation is mainly toward training operators who have less experience at sea and introducing them to new and advanced marine operations. Moreover, current research on simulators mostly focuses on technical functionalities, which may help advance marine operations. However, most research in the maritime domain does not involve the different perspectives of instructors, crew departments, the authority, or operators. One may argue that human factors specialists working in engineering and science already address the human elements. Although human factors specialists use evaluations of human performance in simulators to suggest improvements in ship and simulator design, there is still a lack of the organizational problem-solving thinking needed to fully comprehend the real needs of current maritime sectors.

However, there is nothing wrong with evaluation-based maritime training, since it focuses on helping operators gain skills as rapidly as possible and teaching them to work as a group (Øvergård et al. 2017). The problem is that purely training-oriented instruction basically assumes that operators can achieve higher competence even if the simulators are out of date; thus, theory and practice may not match the specific demands of industry, and non-technical skills may be studied inappropriately.

Considering these consequences, Lützhöft et al. (2017) have argued that maritime science may be about reliability, validity, and objectivity, and that, in going beyond engineering research, it is less concerned with the verification, validation, and fidelity of the models (software and hardware). They stated that, if we want useful technology (here, simulators), the simulator operator should be involved in the experimental system. In that case, changes may need to be made to the simulation to accommodate unexpected actions and events. However, we argue that a systematic approach to inquiring about these unexpected actions and events may be needed. In this case, the inquiry process in simulator use becomes extremely important. Simulators are not just training platforms; they should, to some extent, bridge academic outcomes and the industry’s real-world needs. For many years, the de facto, dominant set of activities in maritime research was based on the “waterfall” stages of application development, such as feasibility study, technology analysis, systems design, implementation, and post-implementation study. Today, maritime research is practiced in a very different context. The “waterfall” is not irrelevant, but as the discipline increasingly moves from being primarily concerned with application development towards organization development, the “waterfall” cannot remain the centerpiece of maritime research. Instead, researchers may need to apply a variety of technologies and pre-constructed shells to improve maritime organizational performance. This appears to be a general trend, and it now seems reasonable to argue that simulator development and use will be embedded and integrated increasingly seamlessly in organization development; that is to say, simulators may be considered a component of the meaning construction process that increasingly characterizes simulator use.

Thus, if current maritime research is the constellation of facts, theories, and methods collected in current texts, then researchers are the people who, successfully or not, have striven to contribute one or another element to that particular constellation. However, when different inquiries from different parties, such as industry, authorities, and universities, are enrolled in the same network, it may become difficult to determine a common interest among them. Kuhn (2012, pp. 5–6) has argued:

Normal science, the activity in which most scientists inevitably spend almost all their time, is predicated on the assumption that the scientific community knows what the world is like. Much of the success of the enterprise derives from the community’s willingness to defend that assumption, if necessary, at considerable cost. Normal science, for example, often suppresses fundamental novelties because they are necessarily subversive of its basic commitments. Nevertheless, so long as those commitments retain an element of the arbitrary, the very nature of normal research ensures that novelty shall not be suppressed for very long. Sometimes a normal problem, one that ought to be solvable by known rules and procedures, resists the reiterated onslaught of the ablest members of the group within whose competence it falls. On other occasions a piece of equipment designed and constructed for the purpose of normal research fails to perform in the anticipated manner, revealing an anomaly that cannot, despite repeated effort, be aligned with professional expectation.

In this vein, to make sense of simulator use beyond the current debate on linking science and engineering (Lützhöft et al. 2017), we need an approach that allows researchers to inquire into the process of simulator use. This would challenge current researchers to think differently about their “as given” assumptions about the nature of a given human organization, about technical simulators, and about how they are operationalized to produce outcomes. Inquiry informs the “thinking about” and must involve investigating current organizational situations and their dynamics, and clarifying how a given organizational process, or set of organizational processes, is served by simulators and their use. Perhaps only in this way can researchers move beyond the analysis of cause and effect towards inquiry into the transformations that occur through the interactions among elements in a “whole process.” Simulators, different actors, and maritime research communities, for example, would each be considered an element of the “whole,” rather than an isolated subject of study with relatively weak conceptual integration with the whole. To make such an idea useful, as discussed in this paper, a particular orientation of the mind is needed, one that rethinks work practices and the use of simulators. Otherwise, as Kuhn argued (2012, pp. 5–6):

In these and other ways besides, normal science repeatedly goes astray. And when it does—when, that is, the profession can no longer evade anomalies that subvert the existing tradition of scientific practice—then begin the extraordinary investigations that lead the profession at last to a new set of commitments, a new basis for the practice of science. The extraordinary episodes in which that shift of professional commitments occurs are the ones known in this essay as scientific revolutions. They are the tradition-shattering complements to the tradition-bound activity of normal science.

7 Reflections on Utilizing Multiple Case Study in Maritime Domain

The process of analyzing a multiple case study as described in this paper is important in shaping maritime organizations. The everyday actions that a case study researcher takes have important consequences for organizations and for the society that relies on them. Those who shoulder this responsibility must therefore prepare their minds for the undertaking. A researcher who works in the maritime domain will be required to develop methodological thinking skills analogous to those of a master chef; as discussed in this text, working in this domain is a creative, human intellectual activity. It requires dynamic, innovative thinking, as well as the ability to handle and comment on a range of abstractions and apply them to “real world” situations. It requires conceptual skills, expression, and flamboyance. It needs creativity, critique, communication, innovation, precision, and discipline, and it involves rigor, ethics, and experience. It requires wrapping knowledge of technology into a creative, innovative, and exciting process of organizational problem-solving. It requires a new breed of researcher who is guided by a discipline of “making sense of simulator use.”

A qualitative case study in maritime research is a flexible approach. It may not yield a generalizable, precise solution to the concrete problems on which both maritime academia and industry are focused; however, its open-ended nature contributes to an early understanding of these problems in their specific contexts. When the analysis is presented in concrete, context-specific terms, a multiple case study is easy for the reader to understand. Moreover, the reader may understand it so well that he or she can envision how to apply the study to his or her own situation. Although the present study does not develop a testable generalization, it is credible scientific work that indicates a new direction for simulator studies. That is, we need to discover the checkpoint and link possible technical and non-technical skills to it. Then, we may map out the relationships between them and the competencies that the industry needs, by developing scenarios that make sense of simulator-based studies.