Abstract

Universities are striving to become data-driven organisations, benefitting from data collection, analysis, and various data products, such as business intelligence, learning analytics, personalised recommendations, behavioural nudging, and automation. However, datafication of universities is not an easy process. We empirically explore the struggles and challenges of UK universities in making digital and personal data useful and valuable. We structure our analysis along seven dimensions: the aspirational dimension explores university datafication aims and the challenges of achieving them; the technological dimension explores struggles with digital infrastructure supporting datafication and data quality; the legal dimension includes data privacy, security, vendor management, and new legal complexities that datafication brings; the commercial dimension tackles proprietary data products developed using university data and relations between universities and EdTech companies; the organisational dimension discusses data governance and institutional management relevant to datafication; the ideological dimension explores ideas about data value and the paradoxes that emerge between these ideas and university practices; and the existential dimension considers how datafication changes the core functioning of universities as social institutions.

Introduction

Universities recognise the potential value of their digital data and strive to become data-driven organisations that collect, analyse, structure, manage, and use data and data products in their strategic and operational activities. As one of the participants in the focus groups we held during our research on the digitalisation of higher education (HE) in the UK noted:

I think every university knows that the data they hold is the wealth of the institution, whether that’s data about how people are behaving or what they’ve actually produced. But that is, at the end of the day, that is the most valuable thing you have. (G6P3).

This imaginary of the value of digital data is supported and encouraged by policymakers and sectoral agencies (Gulson et al., 2022). Jisc, a digital technology and data agency supporting HE in the UK, has recently launched the Data Maturity Framework, which universities can use to assess their ‘data capability’ and guide strategic change. The Higher Education Statistics Agency (HESA) led the Data Futures Project, which aimed to modernise sector-level data collection and analysis in HE and make it more efficient. These initiatives further drive the marketisation of HE in the UK (Williamson, 2018) and help commercial actors benefit economically from university data (Komljenovic, 2020), a trend that includes the recent emergence of educational data brokers (Arantes, 2023).

Datafication refers to the ‘quantification of human life through digital information, very often for economic value’ (Mejias & Couldry, 2019, p.1), which involves representing social and natural worlds in machine-readable digital formats (Williamson et al., 2020) with significant social consequences. In education, datafication consists of collecting and processing data at all levels, from individual to institutional, national and beyond, impacting education stakeholders’ discursive and material practices (Jarke & Breiter, 2019).

We specifically focus on digital data collected by or registered in digital platforms and digital infrastructure. In many industries, data are valuable when aggregated into big data, allowing more sophisticated analyses, such as group analysis and comparison of individuals for targeted advertising (Birch et al., 2021; Pistor, 2020). In HE, policymakers and educational leaders are attempting to improve quality, efficiency, and impact via datafication at the sectoral and institutional levels (Eynon, 2013). Imaginaries of precision education promise to deliver personalisation akin to other sectors, such as medicine and agriculture (Kuch et al., 2020).

This omnipresent, techno-deterministic belief in the value of data operates like a belief in magic: it evokes seamless functionality and an impressive end experience without attention to how it works or how it was achieved, including the struggles, efforts, risks, and costs involved (Elish & boyd, 2018). However, a paradox emerges: this belief in the value of data is not realised in HE, at least not to the extent that stakeholders would wish, yet it continues to drive investment, business models, actions, and strategies (Komljenovic et al., 2024a, 2024b). Currently, data are both valuable and not valuable. Various actors, including EdTech companies and universities, experiment and look for ways to realise economic and social value from data.

Universities are diverse along many dimensions, including size and resources, which are particularly important for datafication. These differences mean they organise data processes differently. With thousands of students and staff, universities have to manage petabytes of data, which is a complex task technologically, financially, and legally. The costs of data storage alone have increased substantially, on top of the other new costs of establishing and maintaining the digital ecosystems that datafication requires. Universities must also manage legacy software, integrate disparate systems and data flows, ensure data security, and respond to cyberattacks, among other tasks. Moreover, diverse actors formally and informally scrutinise universities’ data and digital practices (Komljenovic et al., 2024a, 2024b).

In this article, we focus on the UK as an illustrative case due to the high level of digitalisation and datafication of its HE sector (Williamson, 2019). We aim to identify UK universities’ needs and aims in becoming data-driven organisations and to analyse the challenges they face on their datafication journey. We first examine datafication in HE and then elaborate on our methodological approach. We then turn to our analysis, structured around seven interrelated dimensions of change, followed by a brief conclusion calling for democratic and relational datafication in HE.

Datafication in higher education

Embedded in the broader context of an increasingly datafied and digitalised economy and society (Sadowski, 2020) and its pervasive ordinal logic (Fourcade & Healy, 2024), universities are expanding their data gaze and extending their data frontiers (Beer, 2019). Universities have always collected data about their students and staff. However, digital technology has allowed them to increase the variety and granularity of the data they collect, at greater velocity and often in real time, with material and discursive effects (Prinsloo, 2020b; Williamson, 2018). Universities have also long used technology in teaching and learning, but digital technology enables new forms of surveillance (Birch & Cochrane, 2022; Watters, 2014).

The socio-technical imaginary of data often entails unquestioned and techno-deterministic narratives about futures inevitably dominated by technology, in which data collection and analysis will improve education (Williamson, 2017). Universities are increasingly governed by black-boxed algorithms based on collected data (Pasquale, 2015; Prinsloo, 2020b). However, significant debate exists about the promises and perils of datafication in HE (Selwyn & Gašević, 2020).

Some scholars have discussed the benefits of data and datafication for HE. For example, learning analytics is envisaged as personalising learning and supporting academics in providing student feedback to large cohorts more efficiently (Pardo et al., 2019), or as enabling the detection of students at risk of dropping out of university (Sclater, 2017). Big data and cloud computing are imagined to disrupt HE and bring benefits such as individualised solutions for students (Moreira et al., 2017), while business intelligence is predicted to support improved university operations (Drake & Walz, 2018).

At the same time, many researchers warn about the problems, threats, risks, and harms associated with data operations in HE (Pangrazio, 2024; Selwyn, 2015). These include incomplete and contingent data, which result in partial or invalid representations and deny students agency in these processes (Broughan & Prinsloo, 2020). For example, digital systems collect multimodal data from various platforms and settings, both on and off campus (Jones, 2019), resulting in dataveillance, i.e. routine collection and analysis of data without student and staff awareness or influence (Lupton & Williamson, 2017; Marachi & Quill, 2020). Many scholars point to privacy concerns (Reidenberg & Schaub, 2018), while others argue for data activism that enables students to return the data gaze of learning analytics rather than being passive subjects of datafication (Thompson & Prinsloo, 2023). Such activism is especially relevant because universities do not appear to actively inform students and staff of their rights and responsibilities regarding data use (Brown & Klein, 2020).

Specific data products, such as learning analytics, recommendations, and behavioural nudges, are developed by analysing data collected from staff and students. The underlying logic of data products targeted at teaching is informed by behavioural economics (Doyuran, 2023). This may lead to ‘machine behaviourism’, which combines machine learning with behaviourist theories in ways that erode student autonomy and agency and orient these processes towards predefined aims rather than enabling more open social futures (Knox et al., 2020). Smart universities use Internet of Things devices and thus collect very detailed and granular data on students and staff. They are seen to risk introducing or enhancing discrimination, surveillance, and technocratic control, as well as wasting resources and enabling the corporate consolidation of education (Kwet & Prinsloo, 2020). Universities are also reported to be ill-equipped to protect their constituents from data harvesting and exploitation by private actors, such as the providers of learning management systems (LMS) like Canvas (Marachi & Quill, 2020).

The collection and use of university data have expanded beyond public agencies to private actors, such as businesses, think tanks, and consultancies (Williamson, 2019). Moreover, universities routinely use proprietary digital infrastructure (Williamson, 2018) and software to collect and analyse data (Komljenovic et al., 2024a, 2024b). This creates a potential conflict between the public mission of the university and the for-profit motives of companies and private capital (Castañeda & Selwyn, 2018; Marachi & Quill, 2020). Data collected by digital platforms have become a valuable asset for universities, Big Tech, EdTech companies, and their investors (Birch et al., 2021; Komljenovic et al., 2024a, 2024b). Longstanding education companies such as Pearson have reinvented themselves as data companies with increasing power to influence and govern the sector through data (Williamson, 2020). Commercial interests benefit from geopolitical power asymmetries, using data as a technology to advance neoliberal approaches to HE in the Global South (Prinsloo, 2020a).

Datafication in HE is thus an ongoing and complex set of processes. We aim to contribute to this literature by focusing on the struggles UK universities face as they attempt to make digital and personal data useful and valuable.

Our approach and methodology

We discuss findings from a three-year research project examining EdTech in the UK HE sector. Our overall research aim was to investigate the digitalisation and datafication of universities and the new forms of value that these processes can bring. We studied three groups of social actors: universities, EdTech start-up companies, and investors in EdTech. Our mixed-method research design included quantitative database analyses, critical discourse analysis of documents, thematic analysis of interviews, and knowledge co-production with the project’s Stakeholder Forum, which consisted of HE stakeholders and EdTech actors (Komljenovic et al., 2024a, 2024b).

In this article, we analyse the tensions and challenges in UK universities’ attempts to become datafied organisations. We specifically focus on the challenges of making digital data valuable at the micro-level of practice in juxtaposition to the discursive and policy aims of datafication mentioned above. In other words, while the discursive and policy framing of data in HE ambitiously elaborates the positive aspects and the potential benefits of data operations, we analyse the micro-level practices on the ground to understand the struggles university actors face in realising this framing and the imagined benefits of using digital data.

Our approach combined thematic analysis (Braun & Clarke, 2006) and thick description (Ponterotto, 2006) to analyse the strategies and challenges of making digital user data valuable and useful for universities. We draw on parts of our data corpus, including 14 in-depth interviews conducted at four universities and six focus groups with 19 participants from 17 universities. We first prepared a thick description of each case university and then analysed the datafication dynamics in these descriptions. We also conducted a thematic analysis of interview and focus group transcripts. We brought together the themes on datafication and structured our discussion along seven dimensions. These dimensions are interrelated and serve as an analytical tool for structuring our findings. We were interested in datafication in HE overall and did not focus specifically on AI or tools such as ChatGPT.

Interviewees and focus group participants included directors and managers of IT and learning technology units, chief information officers, academic leaders of digital strategy, and people in similar roles. Conducting in-depth interviews at a limited number of universities provided insights into how universities are trying to integrate data-driven technologies into their operations, but it also makes the specific organisations and their staff easier to identify. Because of the anonymity commitments in our ethical protocol, we therefore present the interview findings only as an aggregate thematic analysis and draw direct quotes exclusively from focus group transcripts.

Seven dimensions of change

Digital technologies and platforms collect user data from university students and staff during teaching, learning, and other activities on platforms. User data come in different forms. They include content produced by staff and students (e.g. discussion forum posts in an LMS, student assignments submitted to plagiarism detection software, and lecture recordings submitted to university digital asset repositories), data provided by staff and students or their universities (e.g. information on grades, modules taken, and socio-economic background), user behaviour registered by platforms (e.g. information on clicks, time spent on particular tasks, and the sequence of user movement on the platform), and metadata (e.g. IP address, machine number, and platform access time).

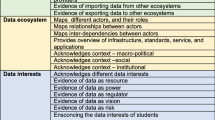

UK universities are striving to become data-powered organisations by integrating these different sources of data and making their data useful and valuable for their operations. Universities are at different levels of data maturity, with some having developed functional data warehouses and data governance regimes, while others are still managing disparate databases and low data integration and quality. Regardless, UK universities share the aspiration to realise the value of their data for teaching, learning, research, and administration. They also share many challenges in making digital and personal data valuable and useful. In what follows, we specify seven dimensions of change across which we analyse university strategies and practices and the problems universities confront as they pursue greater data maturity.

Aspirational

Key university aspirations for using digital data include improving the student experience, personalising services, automating processes, increasing institutional efficiency, and delivering evidence to the regulator. The universities we analysed were building digital ecosystems to achieve these aims, including procuring digital infrastructure and software to collect data, run data operations, and benefit from data products. Universities develop many data products themselves and also procure proprietary data products. Universities are open to learning from their data and experimenting with various analyses, as described by this participant:

[W]e did a research project a few years ago to look at our teaching programmes and do lots of analysis on the data we’ve got to see what we can find out. And we did find out some great stuff. We found out where we’re sort of letting down our widening access students and BAME students aren’t necessarily accessing our services; and some reasons why that might be. (G2P2).

However, such experimentation requires appropriate resourcing to be sustainable. Participants felt the initiative was beneficial because it yielded relevant insights, but they also noted that it was run by enthusiasts on top of their usual workloads. Existing staff reportedly lack the skills and time for more substantial data analyses, and institutions have not integrated such analyses into their operations and data infrastructures.

University aspirations for data operations often lack clarity, and key concepts have different meanings. For example, focus group participants spoke about student experience, but their accounts were heterogeneous, referring to student perceptions of available technology (e.g. fun apps), physical infrastructure (e.g. modern gyms), extracurricular activities (e.g. a variety of things to do), teaching quality, and more. Such a broad scope points to a lack of shared understanding across the HE sector, or even within a single institution, about how to address student experience, which could cause confusion in achieving institutional aims. The following statement about how EdTech addresses student experience illustrates this lack of clarity and points to the presence of techno-determinism and solutionism (van Dijck, 2014):

I think when pushed, it’s very difficult to actually find what they mean by student experience. Where does it start, where does it end, how does Ed Tech, however you want to define that, how does that shape the student experience and improve the student experience? But this word, this mantra, that gets sort of batted around the organisation; and technology is seen as the, you know the medium through which a better student experience can be delivered. (G2P1).

A few participants argued that their universities benefit from learning analytics and related metrics. However, most were sceptical and reported that their institutions were not using analytics systematically or regularly. Rather, analytics are used ‘as a system to check attendance’ (G4 collective discussion) or ‘when it came to some kind of crisis point for where you needed to find some hard evidence’ (G3P2).

Participants found that some university aspirations for data use are not shared by those affected. For example, automated communication with students is a common aspiration, but a few participants reported that students did not appreciate the practice:

So what we find now is that disengaged students tend only to engage when their academic, the academic that they know, who knows them, is the one that’s doing the intervention. But then that’s incredibly expensive. But they don’t engage if we get someone in a call centre calling them up saying, I’m just checking you’re okay, is there anything that we need to help you or whatever. (G6P4).

This quote illustrates how the intention to reduce costs through automated communication, combined with a reorganised model of student support, does not play out as imagined. Saving on academic labour does not necessarily bring about the desired change.

The aspiration to increase institutional efficiency and reduce costs is an important one. We found examples where universities can make savings, such as on software subscription fees by monitoring software usage, or on publisher fees by tracking e-text access and reading trends. However, participants reported that when datafication is used to enact more substantial institutional change, it often results in higher costs and a need for more staff.

Finally, our participants spoke about the lack of evaluation of EdTech services and data products. There are many claims and promises from the EdTech industry, but universities are often left to evaluate the impact alone. For example, one participant talked about predictive analytics that their university procures as a data product:

[T]he first thing I asked was, show me the value, show me how accurate the data is, how accurate it was at predicting at low risk students versus high-risk students. Nobody’s done the analysis. And when you then talk to the business about, you know, their belief system and their effort. It’s completely out of balance. (G6P5).

Here, we can see the current disjuncture between aspirations for data use and evidence that these aspirations can be translated into reality.

Technological

Universities are undergoing substantive changes in their digital ecosystems. Many are migrating to the public cloud as a new core infrastructure to facilitate data flows and analysis. Fiebig et al. (2021) show that in 2015, 75% of British universities used Amazon, Microsoft, and Google cloud infrastructure, while in 2021, all of them did. That does not mean university digital ecosystems have been moved to the cloud entirely, but that universities are increasingly transitioning from in-house digital capacities to greater reliance on Big Tech (Williamson, 2022). Some university-based participants reported that they were currently undergoing a full migration to cloud infrastructure, which is a lengthy and costly process that can last approximately five years and cost more than £20 million. In addition, the annual costs of public cloud infrastructure are high.

All participants agreed that integration is vital for universities to use digital technology productively. Universities aim to integrate different infrastructures, platforms, applications, databases, and data sources. One participant explained that integration allows universities to add value to software because they can then manage the aggregated data using bespoke approaches. Tools such as Single Sign-On are used to trace user data across platforms and applications. Integration is also needed to create an overall architecture for the user experience of staff and students. However, universities face challenges with integration, including bringing ‘patchy’ legacy software together within a cohesive ecosystem. One focus group participant explained the problems with legacy software:

[S]tudent record systems are a classic example. It’s our core core thing. We hate it, we love it, we can’t do anything without it, we’re stuck with three or four vendors who dominated the market for years. […] You know, in terms of these monolithic beasts that you just can’t get rid of that cost you nine million quid and six years to swap out a system. (G6P5).

Another challenge universities face with integration is that some platforms and systems, especially larger ones, are not designed to be integrated. Participants gave the examples of a Big Tech company whose products are used by most UK universities and of a large LMS provider. They said that these companies did not allow easy integration of their software, which in turn creates more work for universities, which must develop bespoke integration solutions.

Participants also described challenges with data quality, noting that ‘perhaps one of the reasons why we have worries and concerns is because the quality of data is not where it needs to be’ (G4P1). Moreover, challenges arise when databases are not integrated:

I think we’ve got about 36 separate databases that hold information about students […] And so we’ve kind of got this massive hotchpotch of systems instead of, well, we’re trying to have one big system but that’s not really working very well at the moment. (G5P2).

At the time of the research, participants described prioritising data cleaning and establishing good data management around a high-quality data warehouse. They reported that universities are getting better at this every year:

And yes, the data is often dirty and that’s really annoying, but do you know what, even banks have dirty data so it’s not like this is a unique problem. And you need to put that effort in in trying as far as possible not to allow dirty data to enter your pool or your lake. And I think that that just gets better year after year. It used to be something that we put up with and now we don’t. (G6P3).

UK universities are increasingly using Microsoft for data warehousing (40% of universities in 2021) and for business intelligence (65% of universities in 2021) (UCISA, 2022), which indicates the growing power of Big Tech through its provision of core infrastructure and support for data operations (Birch & Cochrane, 2022). Some participants reported that they planned to introduce artificial intelligence to support data processing once good data storage and management were in place.

Legal

The legal dimension of university datafication is complex and includes data privacy and security matters, regulatory compliance, and contractual obligations. Legal requirements impact how universities procure software, manage digital technology and data flows after procurement, and use data products. Regarding procurement, our participants told us that in the past, university departments or individuals were free to procure the software they needed. But now, universities have centralised procurement requests and decisions to ensure all software purchases undergo a high level of scrutiny, as explained by this participant:

One thing that we’ve come to realise in recent years is we can’t be in a position where we just have lecturers or departments across our institution just happily buying software because they think it sounds good. So one thing that we have in place now is something called an [anonymised committee], which is where if somebody likes the look of a particular piece of software, even if it’s free software, they have to come to a committee to basically justify why they’re looking at it, what benefits can it bring and at that particular point we can evaluate cost. Our GDPR expert can get the relevant person to fill in a GDPR compliance form. (G2P3).

At the same time, participants felt that those responsible for data within universities might interpret legislation too strictly. One of the focus group statements was to ‘STOP using GDPR as an excuse for everything! More work on this’ (G5 collective discussion). Focus group participants discussed the value of particular data insights, such as dashboards provided by various platforms, but noted that internal university rules do not allow these insights to be accessed freely by everyone. This points to an ongoing tension in universities between the technological and legal dimensions of data availability and use.

The legal dimension also includes reporting data to the regulator. One aspect is statutory reporting, which requires UK universities to send specific data to the relevant authorities. Another aspect arises when universities use data to argue that they are achieving the aims set in different frameworks. For example, one of the requirements of the UK’s Teaching Excellence Framework is to explain educational gains outside the curriculum. Participants stated that various analytics are useful in providing these explanations. For example, one participant noted how analytics from LinkedIn Learning can be useful in this context: ‘So what else is the university giving you? A course on mindfulness, that could be something’ (G5P1).

However, many participants felt that using data for policy and regulatory requirements is not always productive. One reason is that large volumes of data need to be collected without necessarily addressing the issue at hand:

It doesn’t necessarily fix the underlying problem, it might not even flag things that are new. But it does drive the need to collect that data in an as accurate a way as you can. It’s not a magic wand, it’s just data. (G4P3).

Other participants also reported that collecting and dealing with data for reporting can be time-consuming without generating added value:

Yes, but the problem is, it’s hard for us to know, isn’t it, to what extent do we need to subvert those neoliberal discourses that are all about accountability and measuring, you know, as I said before, measuring the pig. […] what I’d quite like to do is be able to play the game quicker and easier by just going and saying, right here you are, here’s your data, I think it’s meaningless, but if you want it, fine off you go. At the same time, you know, what I’m doing in real life is this. And the problem is that the game playing seems to take 90% of my time as an academic rather than anything else. (G5P2).

This points to a situation where external policy demands pressure university staff to deliver more data and provide evidence of impact in specific ways, leading to a vicious cycle of responding to demands and generating reports without being afforded the time to reflect on these data and implement constructive changes to practice.

Commercial

Universities collaborate with EdTech and other technology companies to develop data-based digital products and/or test new software features. Our participants reported undertaking pilot projects with EdTech companies, such as testing new analytics features in an LMS or testing use cases of a new platform with an EdTech start-up. Moreover, universities might allow companies to collect and process user data for product development, especially if the university expects to benefit from the product in future. We heard of one university allowing a technology company to collect and aggregate de-identified user data to develop AI algorithms for student engagement. In return for such collaboration, companies may offer reduced fees for these products for a certain period. However, some focus group participants were uneasy that these company strategies might not always benefit universities, for example because companies may later charge high fees even though universities contributed their data, feedback, and labour to developing the product. Some participants also said that these products might not work well or might generate flawed metrics but are still rolled out because of decisions taken by senior university leadership. Moreover, some EdTech companies train and encourage universities to use the data products available on their platforms, which expands datafication within universities and drives up subscription costs.

Participants observed that until recently, universities used the digital products and services they needed but paid little attention to the user data generated by platforms beyond complying with privacy legislation. It was accepted that companies would process and use data to drive innovation. However, since the COVID-19 pandemic, universities have become more conscious of the value of user data and analytics and want to process and analyse data internally. While vendors offer dashboards for displaying user data analytics, universities now want their data back via an application programming interface (API) and do not necessarily find dashboards useful. Thus, universities increasingly demand that their user data be returned to their data lakes, making this a condition of new contracts, as one participant stated:

We hate people taking our data and then trying to sell it back to us. So on the data, that is a really important sort of criteria for us when we’re purchasing, in having access to our data. (G2P2).

Our participants spoke about companies’ different approaches to sharing student and staff data they collect. At one extreme, some companies extract and enclose the data without providing any access to universities. They also do not send user data back to the university. The most common example was that of a well-known plagiarism detection platform. At the other extreme are companies that automatically return user data back to the university, enabling data to be easily integrated into university systems and providing detailed analytics dashboards. In between are platforms that allow user data to be downloaded by universities, but in time-limited batches (e.g. 6 months of data at a time) and in a format that may be hard to manage and analyse. An example we were given was a popular LMS company. Therefore, (1) how companies collect and process user data and integrate analytics into their product, and (2) whether they provide data access to universities, crucially shaped participants’ perceptions of the usefulness of products and the fairness and legitimacy of business models.

Organisational

University roles and practices are changing due to datafication, leading to increased costs and new labour needs. Learning technologists have become more central, vendor management has become a critical task, IT professionals need specific skill sets, and new profiles are needed, such as data scientists and project managers. Vendor managers need to maintain relationships with 100–200 technology companies for core digital products. Whereas universities once required on-premises IT architects, they now increasingly need cloud engineers and developers.

Our participants reported changes to the organisational aspects of universities driven by IT and datafication requirements. IT support is being restructured, and many participants described the continuous reorganisation of their university’s administration as an attempt to create agile organisations. Technological changes are often also business changes.

Most universities have an explicit digital or data strategy. However, participants felt that there is still often no shared understanding of institutional data landscapes. People hold different values and aims concerning data and have different ideas about what data should be generated and used. Day-to-day practices also lack coherence. For example, many participants noted that learning analytics and business intelligence capabilities enable the use and display of large volumes of data, but in many instances, users cannot interpret and act on this information sensibly.

Our study suggests a sector-wide lack of skills in data analysis and interpretation, and a lack of people who can use data appropriately to make decisions. For example, one focus group participant shared the following experience of participating in university committees:

I don’t think anyone really has the experience to understand the data or to analyse it properly. So, we’re looking at graphs, we’re looking at spreadsheets, we’re comparing like NSS spreadsheets against attainment graphs, going up and down. And no one there is a statistician. No one really understands how to understand stuff, and it’s all very much anecdotal, and you know, well let’s try this, well let’s try that, you know, maybe that will make a difference. And so even at the highest level of the senior management team, we have all this data, but no one really knows what to do with it. (G3P2).

Participants pointed to a disconnect between (1) collecting and analysing data and (2) acting on the data; in their view, these activities are frequently separated within universities. Moreover, acting on data is expensive, for example, when it involves implementing interventions and evaluating their success. One participant explained that their university was able to generate predictive analytics on the likelihood of student success but faced challenges with the next step: finding and implementing interventions to support students identified as struggling. Even when a university’s data maturity enables it to generate actionable data, the university does not always act on it.

Ideological

Participants described a pervasive belief in the value of data in the university sector. Some spoke about instances where data is believed to be able to solve existing and future problems simply by being collected and analysed: ‘perhaps a perception in some quarters is that data in itself will drive efficiency, solve problems’ (G4, collective discussion). We can understand this trust and belief in data as an ideology of data solutionism (van Dijck, 2014) that is not supported by realistic assessments of what data can do, as elaborated by this participant:

But I think the tendency with some-, I’m speaking from our institution’s the learning analytics platform route is just present the data and it will solve all the problems. That won’t do anything on its own. The data itself won’t cure anything. It can create workload, it can create you know, change of workflow to mean anything. (G4P3).

We identified a fundamental paradox where the belief in the value of data is not realised, at least not to the extent that participants would wish. Participants spoke about data products possibly displaying incorrect metrics or not being useful or reliable, as this participant notes:

And annoyingly, I think some of the, we’ve had, kind of looked at students who had zero engagement with the materials and looked at their marks and they still performed really well. So, they either just relied on books or just downloaded everything as PDF and didn’t engage in conversation and anything like that. (G3P1).

The lack of reliability may be due to flawed premises concerning what particular data products represent. There does not seem to be comprehensive agreement across, or even within, institutions regarding the valid and reliable interpretation and use of data products. Some participants spoke about learning analytics not representing learning, or student engagement scores not representing engagement. Quantification involves omissions in collection or interpretation (Pasquale, 2015).

A few participants felt that university administrators are mesmerised by the promise of quantitative certainty, a desire promoted within society more generally (Fourcade & Healy, 2024). However, many of our participants felt that centring most or all university processes on technology and data works against the university’s core values and social relations. As the following quote indicates, university staff already have well-established practices for supporting students and appropriately monitoring their progress without analytics and scores; contrary to their promise, such tools would at best duplicate these practices:

[O]ne reason why I find all this stuff very superfluous is that built into the whole process of teaching and learning, we have engagement metrics already. It’s called formative assessment and it’s called assessment. So, at these different points, especially when you have formative assessments throughout the year or even a kind of like a, you know, modular assessment or bit by bit assessment, anything apart from an end of year exam, you’re getting that feedback constantly about how students are doing, how they’re engaged, how, you know, how much they’re learning. And that just comes, that’s part of the job, and the lecturers are all intimately aware of that. So, it’s actually pointless having a little number or having some kind of graph showing you, oh watch out for this student, because you can see it in their work already. It’s kind of just doubling up something which is already there. (G3P2).

Similarly, participants reported that many university activities happen outside of digital platforms. Consequently, they escape datafication in that they are not recorded and included in metrics and analytics. Another critical set of views on data products is around perceptions of surveillance, which threaten trusted academic relations within universities:

And then you have people who just say, well, you know, why do you want to know? Will you be wanting to know the inside leg measurements of our students next? How Orwellian do you want this to be? Are we just now not trusting them, so we have to quantify and watch everything they do? (G5P2).

Participants indicated that it is mainly academics who raise such concerns and suggested that they have ulterior motives for these critiques: that they are overworked and do not want to incorporate data into their workflows because of work pressure, that they are scared of what data would show about their practices, or that they do not want change. However, the dismissal of critique and the lack of time to address concerns about data point to ideological pressures around datafication.

Some participants felt that the datafication of universities is an extension of performative, neoliberal modes of governance (Prinsloo, 2020a). Participants stated that the mere possibility of collecting data should not be a sufficient reason to collect it. Moreover, even if data is collected, participants felt that this does not necessarily mean it should be used for analytics and governance decisions. The divide between university constituents who turn to data products and those who remain sceptical, together with the broader complexity of university datafication, suggests that institutional change will be hard to achieve without a democratic discussion of data use cases, as this participant explained:

I think there’s a lot about interpretation of the data that’s lacking, and if we don’t have that very clear, then the Big Brother thing comes in, and you just think, well you’re just collecting and using data for no real benefit. It’s just collecting data. (G4P3).

Unless ontological and epistemological perspectives on data and data products are constructively addressed within and across universities, it is hard to see how data can substantially help and support universities. Datafication should not be reduced to whether certain university staff are for or against progress but should be accepted as a political and existential question for universities.

Existential

Students and staff are often excluded from decision-making about datafication, data products, data use, and the impact of data practices. Instead, universities focus on setting up digital infrastructure and ecosystems, rolling out data products, and complying with privacy legislation. Consequently, universities provide basic information to staff and students about which data is collected and for what purposes, and obtain consent as required by law. However, our participants felt this is often not done meaningfully and transparently, so individuals do not know or understand what is happening with the data they produce. Moreover, they cannot opt out of contributing to the development of data products, because their user data is collected even when personal data is de-identified; nor are there democratic ways of discussing what data products are being developed and procured, for what reasons, under which conditions, with what impact, and so on. Thus, students and staff have limited agency over data collection and processing, which affects their study and work lives (Marachi & Quill, 2020; Thompson & Prinsloo, 2023). Our participants reported that feelings of surveillance and concerns about the quality of data products are not addressed either, which seems to threaten the university’s core traditional values of collegiality, trust, democratic governance, and transparency.

Our participants welcomed the increased use of digital technology, and all valued datafication when it supports administrative and technical processes, such as managing subscription licences for software or access to academic texts. However, there is significant scepticism when datafication interferes with human decisions and agency, including through recommendations, behavioural nudging, automated decision-making, and automated communication. When data intervenes in teaching and learning, and in human cognition, sentiment, and action, universities need to take time to discuss use cases of data products thoroughly and democratically and to determine the rules collectively.

Another key question for universities is who aggregates user data for innovation. As mentioned above, participants reported that EdTech companies collect and aggregate user data and use them for product development. We have not found evidence of user data being sold or used for advertising. Instead, we found that companies develop data products with university data and then charge universities for access to these products. This is common practice in the digital economy (Sadowski, 2019), but some participants felt such practices were unfair and predatory. Some participants went further, speaking about how this will affect the very core of the university as an institution:

[W]e’re not keeping track of where that may be going and how it will be used as an aggregate back at us. I think it’s important for us to know more about how our students are behaving and all those kind of things. How data will build platforms in competitive solutions, and then tie us into things, is something that I’m not sure we have captured. I’ve been talking to a number of companies recently around adaptive learning platforms and all those types of things; and it’s-, are we beginning to outsource what Higher Education is? (G1P2).

One clear narrative among some participants, particularly those from more entrepreneurial universities, concerned scaling their online provision and using AI and data products instead of human teachers and support staff. Consequently, the university’s key role may evolve into (i) content curation and (ii) management of the technology used for automated learning and for coordinating peer discussions. Such imaginaries raise the question of whether this is the desired future of British HE. Some participants also felt that the EdTech industry is driving ideas and imaginaries of the future of HE, and reported that universities are not good at communicating their own imagined futures and the role of technology and data in realising them.

Finally, we must explore the impact of particular business models and the EdTech market structure on datafication in HE. We found a trend toward EdTech companies making their digital products more valuable by integrating data products, such as analytics or personalised recommendations, into their platforms. We call this practice ‘datafying products’, and it supports EdTech companies’ aim of convincing universities to pay higher fees. The model seems to be working, and it motivates companies to keep developing more data products, increasingly with AI, even though the impact of these products remains unclear and evidence of positive outcomes is lacking (Williamson et al., 2024). Large subscription fees for datafied EdTech products are perceived as legitimate only if universities are convinced the products are valuable enough to pay for. For example, universities are unlikely to pay high fees for an LMS that functions only as a document repository. Thus, LMSs have transformed into Software-as-a-Service platforms with integrated data products (e.g. analytics or recommendations) displayed through dashboards. As discussed above, these must be perceived as valuable, which is why companies train universities in how to use data products and work with them on pilot projects. However, criticism of these practices does not seem to be taken seriously at universities. Moreover, differences between on-campus and online courses seem to be overlooked. Various analytics are promoted as working similarly for both modes of delivery, even though in on-campus courses not everything is done via platforms and recorded, and students prefer in-person social relations. These omissions are necessary to nourish the belief in the value of data and, consequently, in the value of proprietary datafied platforms. This disjuncture between perceived and actual value is a key issue that needs further research.

Conclusion

In this article, we have discussed current work undertaken by UK universities to datafy their operations and have explored seven dimensions of this work. These seven dimensions are closely interrelated and are different perspectives on cross-cutting issues rather than distinct domains of activity. Overall, the perceptions of our participants and their understanding of practices on the ground indicate that university datafication aspirations are often not achieved for various reasons, including aims not being clear or shared, technological challenges, lack of resourcing, lack of action upon data, lack of evidence of impact, and concerns about negative impacts of data collection and use. While our participants reported on the extensive volume of work universities have already invested in the datafication of their operations and improving data collection, storage, and analysis, they also felt universities need more resources, time, staff, and support to fully realise their ambitions in relation to datafication.

Datafication creates several dilemmas for universities that we have explored. First, increasing collection and analysis of data could improve learning outcomes and help sharpen teaching objectives; however, there is a risk that the expansion of data operations will shift universities towards financial and ordinal logics (Fourcade & Healy, 2024), leading to the pursuit of rankings, for example, over their core teaching and research mission. Second, the increasing reliance on external technology providers could reduce demands for internal technology development, enabling universities to redirect increasingly scarce resources towards their teaching and research missions; however, the promise of EdTech products and services is often dependent upon increasing the workloads of university teaching and support staff and increasing costs (Komljenovic et al., 2024, 2024b). The promise of being able to redirect resources is a chimera. Third, and cutting across all seven dimensions of change, there is a significant disjuncture between the perceived value of datafication and evidence of this value in practice. This disjuncture sustains scepticism about the potential benefits of datafication and serves as an obstacle to winning institutional and individual support for change. Universities need more resources to make available data useful, including meeting the rising costs of technology, employing new staff profiles, and facilitating internal discussions on datafication.

Our analysis shows that datafication is uncontroversial and widely valued when it supports administrative and technical processes. However, there is less agreement and more significant controversy about the use of data to (1) augment or replace human decision-making and agency and (2) intervene in teaching, learning, and human cognition, sentiment, and action. In order to build greater support for datafication and using data, universities must invest time in thoroughly and openly consulting on use cases for data products and collectively determining what data will be collected, for what purpose, how, and to what ends. As Calacci and Stein (2023) argue, ‘data regulation in the workplace requires a framework that acknowledges the core interest workers have in accessing their data: to collectively exert greater agency and control at work. … workplace data regulation should largely be a matter of workplace governance and worker co-determination, an approach rooted in workers’ rights, to negotiate the terms of their employment agreements and specific working environments’. Similarly, students should be active agents in co-determining strategies and processes for university datafication. We propose that this shift in university approaches to datafication is a critical next step to enable aspirations to become data-driven organisations.

Data availability

Data used in this article is available at: Komljenovic, Janja and Sellar, Sam and Hansen, Morten (2023). EdTech in Higher Education: Focus Groups, Database, and Documents on EdTech Companies, Investors and Universities, 2021–2023. [Data Collection]. Colchester, Essex: UK Data Service. https://doi.org/10.5255/UKDA-SN-856729.

References

Arantes, J. (2023). Educational data brokers: Using the walkthrough method to identify data brokering by EdTech platforms. Learning, Media and Technology, 49(2), 320–333. https://doi.org/10.1080/17439884.2022.2160986

Beer, D. (2019). The data gaze: Capitalism, power and perception. Sage Publications, Inc.

Birch, K., & Cochrane, D. T. (2022). Big Tech: Four emerging forms of digital rentiership. Science as Culture, 31(1), 44–58. https://doi.org/10.1080/09505431.2021.1932794

Birch, K., Cochrane, D., & Ward, C. (2021). Data as asset? The measurement, governance, and valuation of digital personal data by Big Tech. Big Data & Society. https://doi.org/10.1177/20539517211017308

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3(2), 77–101.

Broughan, C., & Prinsloo, P. (2020). (Re)centring students in learning analytics: In conversation with Paulo Freire. Assessment & Evaluation in Higher Education, 45(4), 617–628. https://doi.org/10.1080/02602938.2019.1679716

Brown, M., & Klein, C. (2020). Whose data? Which rights? Whose power? A policy discourse analysis of student privacy policy documents. The Journal of Higher Education, 91(7), 1149–1178. https://doi.org/10.1080/00221546.2020.1770045

Calacci, D., & Stein, J. (2023). From access to understanding: Collective data governance for workers. European Labour Law Journal, 14(2), 253–282. https://doi.org/10.1177/20319525231167981

Castañeda, L., & Selwyn, N. (2018). More than tools? Making sense of the ongoing digitizations of higher education. International Journal of Educational Technology in Higher Education, 15(22). https://doi.org/10.1186/s41239-018-0109-y

Doyuran, E. B. (2023). Nudge goes to Silicon Valley: Designing for the disengaged and the irrational. Journal of Cultural Economy, 0(0), 1–19. https://doi.org/10.1080/17530350.2023.2261485

Drake, B. M., & Walz, A. (2018). Evolving business intelligence and data analytics in higher education. New Directions for Institutional Research, 2018(178), 39–52. https://doi.org/10.1002/ir.20266

Elish, M. C., & boyd, danah. (2018). Situating methods in the magic of Big Data and AI. Communication Monographs, 85(1), 57–80. https://doi.org/10.1080/03637751.2017.1375130

Eynon, R. (2013). The rise of Big Data: What does it mean for education, technology, and media research? Learning, Media and Technology, 38(3), 237–240. https://doi.org/10.1080/17439884.2013.771783

Fiebig, T., Gürses, S., Gañán, C. H., Kotkamp, E., Kuipers, F., Lindorfer, M., Prisse, M., & Sari, T. (2021). Heads in the clouds: Measuring the implications of universities migrating to public clouds. http://arxiv.org/abs/2104.09462. Accessed 8 Apr 2022.

Fourcade, M., & Healy, K. (2024). The ordinal society. Harvard University Press.

Gulson, K. N., Sellar, S., & Webb, P. T. (2022). Algorithms of education: How datafication and artificial intelligence shape policy. University of Minnesota Press.

Jarke, J., & Breiter, A. (2019). Editorial: The datafication of education. Learning, Media and Technology, 44(1), 1–6.

Jones, K. M. L. (2019). Learning analytics and higher education: A proposed model for establishing informed consent mechanisms to promote student privacy and autonomy. International Journal of Educational Technology in Higher Education, 16(1), 24. https://doi.org/10.1186/s41239-019-0155-0

Knox, J., Williamson, B., & Bayne, S. (2020). Machine behaviourism: Future visions of ‘learnification’ and ‘datafication’ across humans and digital technologies. Learning, Media and Technology, 45(1), 31–45.

Komljenovic, J. (2020). The future of value in digitalised higher education: Why data privacy should not be our biggest concern. Higher Education. https://doi.org/10.1007/s10734-020-00639-7

Komljenovic, J., Hansen, M., Sellar, S., & Birch, K. (2024). EdTech in higher education: Empirical findings from the project ‘Universities and Unicorns: Building Digital Assets in the Higher Education Industry’ [Special Report]. Centre for Global Higher Education. https://www.researchcghe.org/publications/special-report/edtech-in-higher-education-empirical-findings-from-the-project-universities-and-unicorns-building-digital-assets-in-the-higher-education-industry/. Accessed 16 Apr 2024.

Komljenovic, J., Sellar, S., Birch, K., & Hansen, M. (2024b). Assetisation of higher education’s digital disruption. In B. Williamson, J. Komljenovic, & K. N. Gulson (Eds.), World Yearbook of Education 2024: Digitalisation of Education in the Era of Algorithms, Automation and Artificial Intelligence (pp. 122–139). Routledge Taylor&Francis Group.

Kuch, D., Kearnes, M., & Gulson, K. (2020). The promise of precision: Datafication in medicine, agriculture and education. Policy Studies, 41(5), 527–546.

Kwet, M., & Prinsloo, P. (2020). The ‘smart’ classroom: A new frontier in the age of the smart university. Teaching in Higher Education, 25(4), 510–526.

Lupton, D., & Williamson, B. (2017). The datafied child: The dataveillance of children and implications for their rights. New Media and Society, 19(5), 780–794.

Marachi, R., & Quill, L. (2020). The case of Canvas: Longitudinal datafication through learning management systems. Teaching in Higher Education, 25(4), 418–434.

Mejias, U. A., & Couldry, N. (2019). Datafication. Internet Policy Review, 8(4). https://doi.org/10.14763/2019.4.1428

Moreira, F., Ferreira, M. J., & Cardoso, A. (2017). Higher education disruption through IoT and Big Data: A conceptual approach. In P. Zaphiris & A. Ioannou (Eds.), Learning and Collaboration Technologies. Novel Learning Ecosystems (pp. 389–405). Springer International Publishing. https://doi.org/10.1007/978-3-319-58509-3_31

Pangrazio, L. (2024). Data harms: The evidence against education data. Postdigital Science and Education. https://doi.org/10.1007/s42438-024-00468-2

Pardo, A., Jovanovic, J., Dawson, S., Gašević, D., & Mirriahi, N. (2019). Using learning analytics to scale the provision of personalised feedback. British Journal of Educational Technology, 50(1), 128–138. https://doi.org/10.1111/bjet.12592

Pasquale, F. (2015). The black box society. Harvard University Press.

Pistor, K. (2020). Rule by data: The end of markets? Law and Contemporary Problems, 83(2), 101–124.

Ponterotto, J. G. (2006). Brief note on the origins, evolution, and meaning of the qualitative research concept “thick description.” The Qualitative Report, 11(3), 538–549.

Prinsloo, P. (2020a). Data frontiers and frontiers of power in (higher) education: A view of/from the Global South. Teaching in Higher Education, 25(4), 366–383. https://doi.org/10.1080/13562517.2020.1723537

Prinsloo, P. (2020b). Of ‘black boxes’ and algorithmic decision-making in (higher) education – A commentary. Big Data and Society. https://doi.org/10.1177/2053951720933994

Reidenberg, J. R., & Schaub, F. (2018). Achieving big data privacy in education. Theory and Research in Education, 16(3), 263–279. https://doi.org/10.1177/1477878518805308

Sadowski, J. (2019). When data is capital: Datafication, accumulation, and extraction. Big Data & Society, 6(1), 1–12.

Sadowski, J. (2020). Too smart: How digital capitalism is extracting data, controlling our lives, and taking over the world. The MIT Press.

Sclater, N. (2017). Learning analytics explained. Routledge.

Selwyn, N. (2015). Data entry: Towards the critical study of digital data and education. Learning, Media and Technology, 40(1), 64–82.

Selwyn, N., & Gašević, D. (2020). The datafication of higher education: Discussing the promises and problems. Teaching in Higher Education: Critical Perspectives, 25(4), 527–540.

Thompson, T. L., & Prinsloo, P. (2023). Returning the data gaze in higher education. Learning, Media and Technology, 48(1), 153–165. https://doi.org/10.1080/17439884.2022.2092130

UCISA. (2022). Trends in corporate information systems 2011–2021. Retrieved April 17, 2024, from https://www.ucisa.ac.uk/Groups/Corporate-Information-Systems-Group/CIS-survey-2021-results

van Dijck, J. (2014). Datafication, dataism and dataveillance: Big Data between scientific paradigm and ideology. Surveillance & Society, 12(2), 197–208. https://doi.org/10.24908/ss.v12i2.4776

Watters, A. (2014). The monsters of educational technology. Audrey Watters. http://monsters.hackeducation.com/. Accessed 18 Apr 2014.

Williamson, B. (2017). Big Data in education: The digital future of learning, policy and practice. SAGE.

Williamson, B. (2018). The hidden architecture of higher education: Building a big data infrastructure for the ‘smarter university’. International Journal of Educational Technology in Higher Education, 15(12). https://doi.org/10.1186/s41239-018-0094-1

Williamson, B. (2019). Policy networks, performance metrics and platform markets: Charting the expanding data infrastructure of higher education. British Journal of Educational Technology, 50(6), 2794–2809. https://doi.org/10.1111/bjet.12849

Williamson, B. (2020). Making markets through digital platforms: Pearson, edu-business, and the (e)valuation of higher education. Critical Studies in Education. https://doi.org/10.1080/17508487.2020.1737556

Williamson, B. (2022). Big EdTech. Learning, Media and Technology, 47(2), 157–162. https://doi.org/10.1080/17439884.2022.2063888

Williamson, B., Bayne, S., & Shay, S. (2020). The datafication of teaching in Higher Education: Critical issues and perspectives. Teaching in Higher Education: Critical Perspectives, 25(4), 351–365.

Williamson, B., Molnar, A., & Boninger, F. (2024). Time for a pause: Without effective public oversight, AI in schools will do more harm than good. National Education Policy Center. Retrieved April 19, 2024, from http://nepc.colorado.edu/publication/ai

Funding

This work was supported by the Economic and Social Research Council, UK, under Grant [ES/T016299/1].

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Komljenovic, J., Sellar, S. & Birch, K. Turning universities into data-driven organisations: seven dimensions of change. High Educ (2024). https://doi.org/10.1007/s10734-024-01277-z

DOI: https://doi.org/10.1007/s10734-024-01277-z