Abstract

Earthquakes pose a significant hazard, and due to the growth of vulnerable, exposed populations, global levels of seismic risk are increasing. In the past three decades, a dramatic improvement in the volume, quality and consistency of satellite observations of solid earth processes has occurred. I review the current Earth Observing (EO) systems commonly used for measuring earthquake and crustal deformation that can help constrain the potential sources of seismic hazard. I examine the various current contributions and future potential for EO data to feed into aspects of the earthquake disaster management cycle. I discuss the implications that systematic assimilation of Earth Observation data has for the future assessment of seismic hazard and secondary hazards, and the contributions it will make to earthquake disaster risk reduction. I focus on the recent applications of Global Navigation Satellite System (GNSS) and increasingly the use of Interferometric Synthetic Aperture Radar (InSAR) for the derivation of crustal deformation and these data’s contribution to estimates of hazard. I finish by examining the outlook for EO in geohazards in both science and decision-making, as well as offering some recommendations for an enhanced acquisition strategy for SAR data.

1 Introduction

Earthquakes are a consequence of the sudden release of strain that has accumulated within the Earth’s crust due to the stresses from the tectonic driving forces of plate motion and mountain building. Earthquakes produce two major sets of measurable physical phenomena. Firstly, seismic waves propagate from the earthquake source, radiating outwards, and can be measured globally by seismometers. It is these waves that are the source of the ground shaking (accelerations) that comprises the bulk of the hazard to buildings in major earthquakes. Secondly, a permanent displacement of the Earth’s surface occurs due to the change in the accumulated elastic energy that results from the sudden slip across the fault surface (which in itself can be a direct hazard if buildings straddle the fault rupture). This static displacement leads in the long term (after many earthquake cycles) to the permanent deformation of the crust, accommodating the translation of plates and crustal blocks, as well as resulting in the growth of geological structures and mountains. It is the second of these earthquake ground phenomena (permanent static displacements) that we are largely concerned with in this review because these are typically visible to Earth Observing systems that acquire images of the land surface. We seek to contribute towards better recognising the hazard associated with seismic shaking by expanding the analysis of both earthquake ground displacements and the longer-term relative interseismic displacement of the crust leading up to earthquakes. Identifying the location and rates of crustal deformation is important for constraining the potential fault sources of the seismic shaking that constitutes the hazard. In addition to the primary hazard of shaking, secondary hazards include triggered tsunamis, landsliding, liquefaction and volcanic unrest, as well as cascading events such as fires and flooding that can result following the initial earthquake (Tilloy et al. 2019), but these are not a focus of this review.

Whilst globally the death rate from natural disasters such as flooding and drought has declined dramatically in the past century, the fatality rates from earthquakes (Fig. 1) have remained persistent. It is a target of the Sendai Framework for Disaster Risk Reduction to reduce the global disaster mortality rate (along with those affected and economic losses) for this coming decade compared to the past decade. However, as populations continue to grow and cluster, an increasing number of people are exposed to the hazards from earthquakes, having gathered to live in urban centres and thus created megacities over recent decades (Fig. 2). These population centres are often clustered along fault lines (Fig. 3) resulting from historical trade routes, water supplies, fertile basins and security that exist due to the mountains created by the same tectonic processes that cause the underlying hazard (Jackson 2001, 2006). Rapid population growth in economically developing countries, many with limited economic resources or facing other pressing near-term priorities, has led to housing structures of often limited seismic resilience, thus contributing to a heightened vulnerability of those exposed. Furthermore, entrenched and deep-seated corruption tends to by-pass any attempts made to improve this situation with seismic design codes in some countries where earthquake hazard is recognised but ignored. This duplicity results in much worse outcomes in the face of an earthquake disaster than expected, given the prevailing income level (Ambraseys and Bilham 2011).

Fig. 1 Annualised death rates from disasters resulting from natural hazards, grouped by decade from the beginning of the 20th Century (e.g., 1900 is the average annual rate of deaths for the complete years 1900–1909, coloured by hazard type). A decline is observed in the number of deaths from climatological and hydrological disasters attributed to drought and flood. However, deaths due to earthquake disasters have persisted. Source: International Disaster Database, EM-DAT, CRED, UCLouvain, Brussels, Belgium. http://www.emdat.be

Fig. 2 Population exposure of capitals and major cities of the Development Assisted Countries (DAC) as a function of the strain rate determined from the Global Strain Rate Map (Kreemer et al. 2014), used as a proxy of the seismic hazard. Each city is coloured by the Gross Domestic Product (GDP) per capita for the country as a proxy for the first order control on the physical vulnerability

Fig. 3 Selection of global capital cities (from Fig. 2) in economically developing nations situated in regions of active tectonic strain accumulation, with major faults marked by red lines (from the Global Earthquake Model Active Fault database, Styron et al. 2010). Satellite imagery is from Sentinel 2 data and is true colour RGB composites (10 m visible bands 4, 3, 2) from cloud free images acquired in 2019

Broadly, we understand how and where earthquakes happen. However, many recent events continue to surprise us regarding their exact location, size and sometimes style of faulting. Our knowledge is therefore incomplete, and there is an advantage to be had in forensically studying each major seismic event to understand more about the overall earthquake process. This is best done globally, because the recurrence interval for a major earthquake in any given area is typically long (hundreds of years). By making an ergodic assumption and substituting space for time, we can use global datasets to better understand the size, exact locations and rupture processes of earthquakes, as well as how to interpret the fault geomorphology at the surface as a clue to the underlying hazard (Fig. 3). Furthermore, we can use not only the past locations of earthquakes but also examine where the strain that eventually leads to an earthquake is accumulating. This has been done at a global scale using the Global Strain Rate Map (Kreemer et al. 2014), which makes use of the relatively coarse distribution of Global Navigation Satellite System (GNSS) stations to estimate the velocity fields on continental and subduction zone areas, from which estimates of rates of strain can be made. It is this strain accumulation that is typically released in earthquakes. This measure of strain build-up acts as a first order proxy for the potential level of earthquake hazard that a region is exposed to. By comparing the location of capital cities for the world's economically poorer nations with this strain map, we can see that over 50 major population centres lie in regions that are accumulating significant crustal strain, a phenomenon typically synonymous with seismic hazard (Fig. 2) as this strain is usually released violently in earthquakes. This intersection provides a targeted list of exposed populations for focusing efforts on better locating active fault traces (Fig. 3), constraining the seismic hazard, increasing the preparedness and examining the earthquake risk for major cities (Hussain et al. 2020).
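To make the step from GNSS velocities to strain rate concrete, the following is a minimal sketch of how a uniform horizontal strain-rate tensor could be estimated from three stations. It is not the method used to build the Global Strain Rate Map; the function names, the local Cartesian coordinates in kilometres and the example station values are assumptions made purely for illustration.

```python
import math


def strain_rate_from_triangle(stations):
    """Estimate a uniform horizontal strain-rate tensor from three GNSS sites.

    stations: three tuples (x_km, y_km, ve_mm_yr, vn_mm_yr) in a local
    east/north Cartesian frame (an assumption; real data need a projection).
    Returns (exx, eyy, exy) in nanostrain per year.
    """
    (x0, y0, u0, v0), (x1, y1, u1, v1), (x2, y2, u2, v2) = stations
    # Position differences relative to the first station.
    dx1, dy1, dx2, dy2 = x1 - x0, y1 - y0, x2 - x0, y2 - y0
    det = dx1 * dy2 - dx2 * dy1
    if abs(det) < 1e-12:
        raise ValueError("stations are collinear; the gradient is not resolvable")

    def gradient(d1, d2):
        # Solve [dx1 dy1; dx2 dy2] [gx, gy]^T = [d1, d2]^T by Cramer's rule.
        gx = (d1 * dy2 - d2 * dy1) / det
        gy = (dx1 * d2 - dx2 * d1) / det
        return gx, gy

    du_dx, du_dy = gradient(u1 - u0, u2 - u0)   # gradients of east velocity
    dv_dx, dv_dy = gradient(v1 - v0, v2 - v0)   # gradients of north velocity

    scale = 1000.0  # 1 mm/yr per km = 1e-6 per yr = 1000 nanostrain per yr
    exx = du_dx * scale
    eyy = dv_dy * scale
    exy = 0.5 * (du_dy + dv_dx) * scale
    return exx, eyy, exy


def second_invariant(exx, eyy, exy):
    """Scalar magnitude of the 2-D strain-rate tensor."""
    return math.sqrt(exx ** 2 + eyy ** 2 + 2.0 * exy ** 2)


# Hypothetical stations: 10 mm/yr of east-directed shear across 100 km.
example = [(0.0, 0.0, 0.0, 0.0), (100.0, 0.0, 0.0, 0.0), (0.0, 100.0, 10.0, 0.0)]
exx, eyy, exy = strain_rate_from_triangle(example)
print(exx, eyy, exy, second_invariant(exx, eyy, exy))
```

In this hypothetical case the shear strain rate is 50 nanostrain per year. A real strain map would combine many stations, account for measurement uncertainty and work on the sphere rather than in a flat local frame.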

For the stresses to build up on a fault (whose eventual sudden failure will lead to an earthquake), the fault surface itself is locked in the period between earthquakes, in that no displacement occurs immediately across it. However, an increasing number of observations point to the propensity for faults (in particular subduction zone interfaces) to accommodate transient slow slip within these locked zones (Jolivet and Frank 2020). This slip releases the accumulated elastic energy slowly enough that seismic shaking is not generated, but the motion can be detected geodetically. Measuring such signals is important for determining the long-term slip budget of faults and for assessing their mechanical and rheological behaviour, and is particularly important for major subduction zones (Wallace et al. 2016).

Finally, being able to forewarn of an impending earthquake through the use of earthquake precursors (Cicerone et al. 2009) has been the preoccupation of parts of the earthquake community for decades. These precursors could take the form of foreshocks in a region that indicate a build-up to the mainshock, gas emissions, electromagnetic field disturbances, ground deformation or changes to groundwater levels. However, a reliable system for demonstrating the use of such potential precursors continues to be elusive for short-term prediction. For longer-term assessment of the location of earthquake hazard, measurements of where strain in the crust is accumulating and at what rate using GNSS (Bird and Kreemer 2015) and increasingly InSAR data offer greater potential, but do not provide short-term predictions that enable the timely evacuation of populations. Instead, efforts to focus on preparedness in the risk management cycle based upon knowledge of where hazardous faults are accumulating strain offer a tractable approach to increase resilience.

Due to its global coverage, increasing revisit frequency and wide-area imaging of swaths hundreds of kilometres wide at a time, Earth Observation (EO) offers a number of contributions both to the improved understanding of the earthquake cycle as a physical process and to the better management of disaster risk at various stages. In this article, I start with a review of the fleet of Earth Observing systems that currently and historically have regularly imaged the Earth's land surfaces. I then highlight the diverse contributions to disaster risk reduction that EO presently provides, and could potentially provide, at different points of response, recovery, mitigation and preparedness. In particular, I focus on the emerging capacity of EO data to contribute towards strain mapping and seismic hazard assessment. The recent launches of constellations of earth imaging systems have opened up new opportunities for investigating earthquake hazard. The current growth of satellite systems shows no sign of abating, and future missions are continually being planned. However, there are still improvements that could be made, in particular in terms of the imagery acquisition strategies. I therefore finish by providing some recommendations for the further optimisation of satellite observations for earthquake hazard in future mission planning and development.

2 Earth Observing Systems for Solid Earth Processes

Over the past few decades an ever-expanding fleet of Earth Observation systems has been launched into low-earth near-polar orbit, enhancing our capability to monitor solid earth hazards. This continued expansion of satellite capacity (Fig. 4) has occurred both for active radar (synthetic aperture radar, SAR) and passive optical imaging systems (multi-spectral, MS). The European Space Agency’s (ESA) Copernicus programme and regular Sentinel-1/2 acquisitions have been central to making such analysis of data more systematic. The free and open data policy has greatly expanded the user base (aided by the development and provision of open software processing toolboxes). For instance, there was a two-decade period from 1992 to 2014 when exploitation of SAR imagery was largely the preserve of academic specialists and small niche technical companies. The deployment of the Copernicus space segment (“the Sentinels”) from late 2014 will most likely be viewed as the defining watershed moment when the wide-scale exploitation of long time series of big EO data took off, building upon USGS/NASA’s open data policy for imagery such as that from the Landsat program from 2008 (Zhu et al. 2019).

Fig. 4 Timeline showing the typical major Low Earth Orbit EO satellites used for earthquakes comprising (a) optical and (b) RADAR systems (updated from Elliott et al. (2016a) to end of 2019). Optical satellites are ordered by their resolution and RADAR ones grouped by their microwave wavelength, and both sets are colour coded by their operators/agencies (an acronym list of space agency names is provided at the beginning of the paper). Anticipated launch dates for upcoming missions are shown approximately but are often subject to delay. Arrows continuing beyond present day are only indicative, and for many existing systems this is beyond their design lifespan specification (nominally 5–7 years though often exceeded). There are many other past and present satellite systems, but the ones shown here have near global or consistent systematic coverage, or they have a greater level of availability and suitability for earthquake displacement and crustal strain deformation studies. There has been a very recent increase in the number of constellations of optical microsatellites (such as PlanetScope indicated here) and also of systems from China such as Superview-1, as well as ones in commercial SAR (ICEYE) and video (Vivid-i) that will become increasingly important to deformation studies as exploitation of these newer datasets develops

Furthermore, the type of operators of satellites is undergoing a shift. In the past, the expensive development and long-term commitment required by early satellite platforms meant that they were the preserve of the national space agencies (and military industrial complexes). However, there has been an increasing shift (starting in the late 1990s with IKONOS at 1 m resolution) towards commercial operators offering the highest resolutions (increasingly sub-metre) of optical imagery both from large platforms (e.g., Satellite Pour l’Observation de la Terre SPOT6/7 at 1.5 m and WorldView at ~ 0.3 m) and also from lower-cost microsatellites (e.g., PlanetScope at 3 m). For SAR systems, this shift has lagged behind optical platforms by about two decades and has only occurred with the recent deployment from 2018 onwards of ICEYE's micro-SAR satellites.

Earth Observation satellites are typically launched into a near-polar orbit in which they pass almost directly over the poles, as this enables them to achieve near-global coverage after a certain number of orbits (175 orbits of 98.6 min period in the case of Sentinel-1A/B, with each satellite taking a total of 12 days to complete full coverage). Typically, this is a low-earth orbit (LEO) at around 700 km altitude for many SAR systems, and the tracks that can be imaged are usually a couple of hundred kilometres wide (depending upon the SAR imaging mode used). The improvement in the revisit period (down to 12 days with Sentinel-1 from 35 days with Envisat, for example) is due to the increase in the width of the track imaged in the range direction (from 70 km with Envisat to 250 km with Sentinel-1, when considering their main interferometric imaging modes). This reduced revisit time increases both the volume of data (through the number of independent observations made within a given time period) and the level of interferometric coherence (as the interval between passes is shorter, the land surface has changed less), which subsequently leads to improved measurements of time series of surface deformation when using interferometry. However, due to power limitations for radar satellites, it is possible only to actively image for about a quarter of an orbit, so not all the Earth’s surface is imaged with SAR on every pass.
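The orbital figures quoted above can be checked with a short calculation. The sketch below assumes a circular orbit and uses standard values for the Earth's gravitational parameter and equatorial radius; the function names are illustrative, and the 700 km altitude is the round number used in the text rather than a precise mission parameter.

```python
import math

MU_EARTH = 3.986004418e14  # m^3/s^2, Earth's gravitational parameter
R_EARTH = 6378.137e3       # m, Earth's equatorial radius


def circular_orbit_period_min(altitude_km):
    """Kepler's third law for a circular orbit, returning minutes."""
    semi_major_axis = R_EARTH + altitude_km * 1e3
    return 2.0 * math.pi * math.sqrt(semi_major_axis ** 3 / MU_EARTH) / 60.0


def repeat_cycle_days(orbits_per_cycle, period_min):
    """Time to complete one full repeat cycle, in days."""
    return orbits_per_cycle * period_min / (60.0 * 24.0)


# A ~700 km altitude gives a period a little under 99 minutes.
print(circular_orbit_period_min(700.0))

# 175 orbits at 98.6 minutes each is about 12 days, as quoted for Sentinel-1.
print(repeat_cycle_days(175, 98.6))
```

The small difference between the computed period at 700 km and the quoted 98.6 min reflects the rounded altitude used here.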

Earth imaging satellites are typically in a Sun-synchronous orbit so that they image the ground at the same time of day (for radar satellites this normally occurs in a dawn/dusk orbit). They consequently cross the equator twice on each revolution, once in an ascending direction (approximately south-to-north with a retrograde orbital inclination angle of about 98 degrees to the equator, passing at around dusk) and once in the opposite descending direction (nearly north-to-south around dawn). The local time for the ascending node crossing is 18:00 for Sentinel-1. Whilst the radar instrument predominantly points down towards the Earth, it is inclined off to the side and for Sentinel-1 is configured as a right-looking imaging system, so it looks eastwards on an ascending pass and westwards on a descending pass. Having images on both the ascending and descending passes increases the frequency of observing a given point and also helps to constrain the direction of ground displacement in terms of vertical versus horizontal deformation (although near polar-orbiting SAR satellites suffer from being far less sensitive to north–south motion, i.e. in the near azimuthal direction).
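The way in which ascending and descending passes together constrain vertical versus east–west motion can be illustrated with a small calculation. This is a sketch only: the satellite headings (about −10° and −170° from north) and the 39° incidence angle are assumed round values, the sign convention (positive towards the satellite) is a choice made here, and the north component is neglected when solving, which is the usual consequence of the weak north–south sensitivity noted above.

```python
import math


def los_vector(heading_deg, incidence_deg):
    """Line-of-sight sensitivity (east, north, up) for a right-looking SAR.

    Positive values mean motion towards the satellite. Heading is the
    satellite flight direction, measured clockwise from north.
    """
    h = math.radians(heading_deg)
    i = math.radians(incidence_deg)
    return (-math.sin(i) * math.cos(h), math.sin(i) * math.sin(h), math.cos(i))


def east_up_from_two_los(d_asc, d_desc, asc, desc):
    """Solve the 2x2 system for east and up motion, neglecting north motion."""
    (ae, _, au), (de, _, du) = asc, desc
    det = ae * du - au * de
    east = (d_asc * du - au * d_desc) / det
    up = (ae * d_desc - de * d_asc) / det
    return east, up


# Assumed geometry (illustrative values, not taken from a specific scene).
asc = los_vector(-10.0, 39.0)
desc = los_vector(-170.0, 39.0)

# Forward-model a hypothetical displacement: 20 mm east, 5 mm of subsidence.
true_east, true_up = 20.0, -5.0
d_asc = asc[0] * true_east + asc[2] * true_up
d_desc = desc[0] * true_east + desc[2] * true_up

print(east_up_from_two_los(d_asc, d_desc, asc, desc))
print(abs(asc[1]), abs(asc[0]))  # north sensitivity is much smaller than east
```

With these assumed angles the north coefficient is several times smaller in magnitude than the east coefficient, which is why two viewing geometries recover east and vertical motion well but leave north–south motion poorly constrained.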

SAR imagery is typically acquired in one of three main wavelength bands (L 23 cm, C 6 cm, X 3 cm) with the higher-resolution imagery (1–5 m) in the past being at X-band. However, in terms of making interferometric measurements (InSAR) that are particularly useful for ground deformation, an issue of coherence arises across the time periods needed (weeks to months) to measure relative displacements from strain over wide areas. Phase coherence is a crucial parameter controlling the spatial extent and distribution of possible interferometric measurements with multiple SAR images. The time difference between acquisitions is one important constraint on the level of coherence achievable. In the past when revisit periods were longer (such as 35 days for Envisat) and also imagery was not acquired on every pass (with many months to year-long intervals between images), the low coherence limited the areas that were suitable for InSAR observations, depending upon land cover type and wavelength used (and this therefore created systematic variations with latitude in the level of interferometric coverage). The technique suffers from decorrelation caused by vegetation, which can make the data unusable, and this is particularly a problem at shorter wavelengths (Ebmeier et al. 2013) in non-urban environments. Currently, L-band measurements (such as from the Advanced Land Observing Satellite (ALOS-2) Phased Array type L-band Synthetic Aperture Radar PALSAR-2) suffer much less coherence loss at vegetated low latitudes, but the acquisition plan is not systematic enough globally to make continental-wide measurements of slow deformation easily (where regular, consistent and multiple acquisitions are required to measure the small displacement signals above the noise from differential atmospheric delay and other sources). It is anticipated that there will be an improvement in this capacity with the launch of further L-band missions such as the NASA-ISRO Synthetic Aperture Radar (NISAR, a joint USA-India mission). Whilst most satellite SAR systems have focussed on X, C and L-bands, the UK's NovaSAR-1, launched in late 2018, operates in S-band (~ 9 cm) to test the applications and capabilities for SAR imaging at this wavelength.
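The link between radar wavelength and measurable displacement can be made explicit. Because the radar signal travels to the ground and back, one full cycle of interferometric phase corresponds to half a wavelength of motion along the line of sight. The sketch below uses the approximate band wavelengths quoted above; the function names are illustrative, and only magnitudes are computed since sign conventions differ between processing packages.

```python
import math

# Approximate wavelengths in centimetres, as quoted in the text.
WAVELENGTH_CM = {"L": 23.0, "C": 6.0, "X": 3.0}


def los_displacement_cm(unwrapped_phase_rad, wavelength_cm):
    """Line-of-sight displacement magnitude for a given unwrapped phase.

    The factor of 4*pi (rather than 2*pi) accounts for the two-way path.
    """
    return wavelength_cm * unwrapped_phase_rad / (4.0 * math.pi)


def fringes_for_displacement(displacement_cm, wavelength_cm):
    """Number of full phase cycles (fringes) produced by a displacement."""
    return displacement_cm / (wavelength_cm / 2.0)


for band, wavelength in WAVELENGTH_CM.items():
    per_fringe = los_displacement_cm(2.0 * math.pi, wavelength)
    print(band, per_fringe, fringes_for_displacement(30.0, wavelength))
```

The same line-of-sight displacement therefore produces far fewer fringes at L-band than at X-band, which is one reason longer wavelengths are less prone to decorrelation where the ground surface changes between acquisitions.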

There have also been additions to the Global Navigation Satellite System (GNSS) such as the nascent Galileo system. This will offer enhanced capability and improved accuracy of site velocities in the coming years, which will be of use both for high-rate measurements of earthquake ruptures and for long-term interseismic rates of continental deformation with continuously occupied sites (and more rapid occupations of survey sites). A still burgeoning field is that of video satellites, which are capable of capturing tens of seconds of high-definition imagery from steerable platforms that pivot during orbit to capture steady images of cities. Constellations, such as that based on the Carbonite-2 demonstrator (Vivid-i), are currently planned. Planet's SkySats have been able to capture high-rate full motion video for 2-min durations for a number of years. These types of platforms and capabilities will play an increasingly important monitoring role in disaster response but will also be of use to scientists in a similar way to optical imagery. Multiple image frames can be used to reconstruct topography through the structure-from-motion approach (Johnson et al. 2014). This could potentially play an important part in land surface change detection before and after a disaster.

Understanding the shape of the Earth and how this evolves through time underpins much of the Earth Sciences, and an important dataset for many aspects of this is topography. Globally available open datasets of digital elevation models (DEMs) are still at relatively coarse spatial resolutions compared to available optical imagery (30 m versus 10 m). Remotely sensed topography is largely derived either from radar or from optical stereo photogrammetry (and typically in the past at a national level this was often captured from aerial photography). An important milestone in 2000 was the Shuttle Radar Topography Mission (SRTM) (Farr and Kobrick 2000) that offered 90 m (and now 30 m) resolution topography for low and mid-latitudes. Open, global, optically derived topography from the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) Global DEM (GDEM) and from the Panchromatic Remote-sensing Instrument for Stereo Mapping (PRISM) Advanced World-3D (AW3D) is also available at 30 m, with higher-resolution PRISM datasets available commercially. However, optically derived topographic datasets can exhibit greater high-frequency noise when compared to equivalent resolution radar-derived datasets. Bespoke DEMs at very high resolutions (a few metres) can be generated from in-track stereo imagery from satellite systems such as SPOT, Pleiades and WorldView. Topographic datasets often act as base layers and background information for analysis, but they can also tell us about the physical processes of faulting, especially when looking at changes in topography (Diederichs et al. 2019).

3 International Initiatives and Organisations for the Enhanced Exploitation of EO in Disasters

The International Charter for space and major disasters provides satellite data for response and monitoring activities following major natural (e.g., flooding, earthquakes and volcanoes) or man-made disasters (such as oil spills). The International Charter was initiated in 1999 and became operational on 1 November 2000, with over 600 activations since then (http://disastercharter.org/).

The charter is activated by authorised users (representatives of a national civil protection organisation) which mobilises space agencies to obtain and quickly relay Earth Observation data from over 60 satellites on the unfolding disaster (there have been 67 earthquake activations to date in the past two decades). This can be particularly important for providing commercial data at high resolution and in a timely fashion that would otherwise be very costly to task for rapid acquisition. The main focus is on the initial response phase of a disaster with a limited timespan of activation, rather than any later phase of the cycle that may be useful for other aspects of disaster risk management (DRM).

An important organisation that seeks to coordinate the exploitation of satellite data for the benefit of society is the Committee on Earth Observation Satellites (CEOS), originally established in 1984 (https://ceos.org). This international forum brings together most space agencies with a significant EO programme in order to optimise the exchange and use of remote sensing data for the sustainable prosperity of humanity (Percivall et al. 2013). One particularly relevant part of CEOS is the working group on disasters, which has coordinated pilot and demonstrator phases of multiple hazards projects (e.g., Pritchard et al. 2018), one of which is focused on seismic hazards. Their aim is to broaden the user base of EO and engage with non-expert users, promoting the adoption of satellite data and products in the decision-making process for disaster risk reduction.

Another international partnership is the Group on Earth Observations (GEO), which has a similarly aligned goal of harnessing EO to better inform decisions for the benefit of humankind (www.earthobservations.org). The global network, initiated in 2003, joins governments, research organisations and businesses with data providers to target sustainable development and sound environmental management. A particularly relevant initiative was the organisation of “supersites” (Geohazard Supersites and Natural Laboratories, GSNL) that seeks to increase the openness of satellite observations and in situ data over particular target sites, such as the San Andreas Fault Natural Laboratory and the Sea of Marmara (Istanbul), where major earthquakes are anticipated in the near future (Parsons et al. 2004).

4 Earth Observation and the Disaster Risk Management Cycle

A priority for action identified within the Sendai Framework for Disaster Risk Reduction is to understand disaster risk. Earth Observation can play an important role in achieving this in terms of characterising the sources of seismic hazard as well as the exposure of persons and assets, and the physical environment of the potential disaster. Therefore, EO can contribute to both understanding the hazard element of disaster and some of the contributors to the risk calculation, namely where populations and buildings are located relative to the sources of such hazards. Furthermore, EO can drive the enhancement of disaster preparedness for earthquakes so that a more effective response and recovery can occur by highlighting the regions exposed to earthquake hazards, thus addressing a further priority of the Sendai Framework to increase resilience. Earth Observation currently feeds into all aspects of the disaster risk reduction cycle and, with upcoming advances, has the potential to contribute even further, particularly for identifying hazard in continental straining areas (Fig. 5). I describe each stage of the cycle in turn, highlighting some examples of the exploitation and utility of such EO datasets.

Fig. 5 Earth Observation data from SAR, optical and GNSS satellites currently contribute information to various aspects of the disaster management cycle. Emerging technologies and understanding of the physical processes of earthquakes and deformation that leads to seismic hazards mean that there are emerging and future potential avenues for EO to contribute further to the constituent elements of the cycle

4.1 During the Earthquake

A large earthquake can take many tens of seconds to minutes to rupture from its initiation point on the fault (the hypocentre) to its eventual end, which may be many hundreds of kilometres away from the start. The propagation speed of seismic surface waves is of the order of a few kilometres per second, so early warnings relayed over speed-of-light networks can precede the onset of the greatest shaking at far-field distances, enabling mitigation to take place (Allen and Melgar 2019). Seismometers play a crucial role in providing this early shaking information. However, an increasing number of high-rate (20 Hz) Global Navigation Satellite System (GNSS) networks exist that can measure the strong ground motion without saturating in amplitude from the passing seismic waves, and these could prove important in real-time monitoring of a developing earthquake (Fig. 5). It has recently been recognised that, once a large earthquake initiates, it may be possible to determine what its eventual size will be. This can be done using only the first 20 s of shaking since nucleation, rather than having to wait for the whole rupture to finish propagating to the eventual end of the fault (Melgar and Hayes 2019). If this recognition that earthquake sizes have a weak determinism holds true, it offers the chance to greatly improve early warning systems, as the final magnitude (of big ruptures) can be determined before the earthquake finishes. This means it may be possible to answer the long-standing question of when a small earthquake goes on to grow into a big one. For example, the southern Alpine Fault on the South Island of New Zealand is one of the largest and fastest strain-accumulating strike-slip faults in the world (about 30 mm/yr). This fault is anticipated to experience large ruptures, and the regular recurrence of previous major earthquakes in the palaeoseismological record, at an average interval of 330 years (Berryman et al. 2012), means we can reasonably expect a major earthquake in the coming decades. This assessment can be made with a higher degree of certainty (Biasi et al. 2015) than exists for most faults around the world, where the individual fault characteristics are often poorly known. If the rupture were to initiate at the south-western end of the Alpine Fault (just along strike from earlier large earthquakes at the Puysegur Trench in 2003, 2006 and 2009 that have increased the stress in this area, Hamling & Hreinsdóttir 2016), then the rupture is likely to propagate in a north-eastwards direction, towards the capital Wellington over 600 km away. If it takes 20 s to establish that this earthquake will ultimately be a magnitude 8, then there will be about 80 s to provide a warning for the arrival of the first seismic waves at Wellington, and over twice this time before the strongest surface waves reach the capital. However, this relies on the installation and real-time monitoring of a number of high-rate GNSS stations along or near the Alpine Fault, instruments which are currently relatively sparse.
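The warning times quoted for Wellington follow from simple travel-time arithmetic. The sketch below reproduces them under stated assumptions: a first-arriving wave speed of about 6 km/s and a surface-wave speed of about 3 km/s are round values assumed here (the text gives only "a few kilometres per second"), the function name is illustrative, and delays from telemetry, processing and alert dissemination are ignored.

```python
def warning_time_s(distance_km, wave_speed_km_s, detection_delay_s):
    """Seconds of warning before a wave arrives, after the detection delay.

    Returns zero if the wave arrives before the magnitude estimate is ready.
    """
    travel_time_s = distance_km / wave_speed_km_s
    return max(0.0, travel_time_s - detection_delay_s)


DISTANCE_KM = 600.0      # rupture initiation to Wellington, from the text
DETECTION_DELAY_S = 20.0  # time to establish the final magnitude, from the text

# Assumed wave speeds (illustrative round numbers).
first_arrival = warning_time_s(DISTANCE_KM, 6.0, DETECTION_DELAY_S)
surface_waves = warning_time_s(DISTANCE_KM, 3.0, DETECTION_DELAY_S)

print(first_arrival)   # about 80 s before the first seismic waves
print(surface_waves)   # more than twice that before the surface waves
```

Sites closer to the rupture initiation point would receive correspondingly less warning, and within a few tens of kilometres the 20 s needed for the magnitude estimate would exceed the travel time entirely.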

In contrast, the latency of satellite-based Earth Observations means that a seismic event lasting minutes is long over before imagery from space is acquired. Polar orbiting satellites have relatively long repeat intervals, typically of many days (the repeat interval for a single Sentinel-2 satellite is 10 days and for Sentinel-1 is 12 days, halved when considering the pair constellation). Whilst the polar satellite orbits have a period of about 90 min, there is a trade-off between imaging area and resolution (as well as a limited line-of-sight footprint area from 700 km orbital altitude). The current repeat intervals represent an improvement relative to those of the 1990s–2000s of around a month (e.g., Envisat Advanced Synthetic Aperture Radar (ASAR) had a minimum of 35 days between potential acquisitions for interferometry, and typically much longer because an image was not programmed to be acquired on each pass). This reduction in latency has been achieved largely through two approaches: (1) the implementation of wider swath widths of many hundreds of kilometres (whilst often sacrificing potential technological improvements in ground spatial resolution) and (2) by having pairs of satellites, as is the case with Sentinel-1 and Sentinel-2 that have (near) identical twins A and B (and combined repeat times of 6 and 5 days, respectively). Further improvements have been and are being made with slightly larger constellations (e.g., COnstellation of small Satellites for the Mediterranean basin Observation (COSMO-SkyMed) and the RADARSAT Constellation Mission (RCM)). Under the ESA Copernicus programme, there are further copies of the Sentinels to be launched as replacements in the future as A and/or B fails or depletes its hydrazine propellant. However, pre-emptive launching of these would have the advantage of increasing constellation sizes and thus reducing latency further. Shorter observation intervals also come from repeated coverage by overlapping tracks at high latitudes and furthermore by acquisitions often occurring in both the ascending and descending directions for SAR, a feature made possible by the day and night capability of radar.

The re-visit latency is also greatly reduced by agile imaging platforms (such as Pleiades and Worldview for high resolution optical) that do not always look at a fixed ground track (as in the case of Sentinel-2 or Landsat-8 that image at nadir) but instead have steerable sensor systems or agile platforms permitting coverage over off-track areas at higher incidence angles. The waiting time is also being reduced by large constellations of cubesats such as Planet’s hundred-plus individual platforms (“doves”), a constellation capable of imaging each point on the Earth at least every day (subject to cloud cover). In the future, continuously staring video satellites and SAR satellite systems in geostationary orbits may offer the chance to monitor an event in real time (Fig. 5).

4.2 Earthquake Response

The contribution of EO systems to the immediate response to earthquakes can come relatively late in this part of the DRM cycle owing to the latency of the systems mentioned above, limitations with cloud/night-time for optical systems and subsequent analysis time, although this is continually improving. Whilst global seismological networks are able to estimate earthquake magnitudes and locations well and quickly (within minutes to hours), there is still room for EO to enhance the initial estimates of the sources of the earthquake hazards on the time scale of a couple of days. Actions by agencies such as the International Charter for space and major disasters over the past two decades have consistently improved the speed with which EO data and information products are made available to assess the earthquake impact on infrastructure. Measurements of ground deformation with either optical offsets or InSAR (Fig. 5) are particularly useful for: (1) identifying more precisely the geographic location of the earthquake, which is especially important for establishing the closest approach to cities and the extent of aftershocks (e.g., 2010 Haiti earthquake, Calais et al. (2010)); (2) investigating the rupture of a large number of complex fault segments (e.g., 2008 Sichuan earthquake, de Michele et al. (2009), or the 2016 Kaikoura earthquake, Hamling and Hreinsdottir (2016)) not easy to ascertain with global seismology; (3) determining whether surface rupturing has occurred and constraining the depth of fault slip (important for estimating the degree of ground shaking, e.g., 2015 Gorkha earthquake, Avouac et al. (2015)); and (4) resolving the seismological focal plane ambiguity for earthquakes (which can be important in particular for strike slip earthquakes where the ambiguity is orthogonal in the surface strike direction) and the rupture direction, which could be uni-lateral or bi-lateral and makes a larger difference for long ruptures (e.g., the 2018 earthquake in Palu, Sulawesi, Socquet et al. (2019)). Barnhart et al. (2019a) provide a review of the implementation of imaging geodesy for the earthquake response for a number of recent events where improvements in the initial estimates of fatalities and economic losses were made. They provide a workflow and framework for the integration of such EO data into their operational earthquake response efforts at the National Earthquake Information Centre (NEIC).

Whilst very high-resolution optical imagery may be of use in identifying the location of collapsed buildings (and therefore potentially trapped persons), buildings that “pancake” are not as obviously critically damaged when viewed in satellite imagery taken at nadir. Highly oblique images may be of greater utility (although may suffer from building shadowing in dense urban environments). A more recent advance using the behaviour of the coherence in SAR images (Yun et al. 2015) is a useful indicator of building collapse as it provides a good measure of ground disturbance. DEM differencing of urban environments generated before and after an earthquake from high-resolution stereo optical imagery (Zhou et al. 2015) also offers the future potential to quickly determine building height reductions, indicative of collapse.
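The idea of using a loss of SAR coherence as an indicator of ground disturbance can be illustrated with a minimal sketch. This is not the processing chain of Yun et al. (2015); it is a simplified example on synthetic complex images, in which the image size, noise level, window size, threshold and the location of the "collapsed" block are all hypothetical choices. Coherence is estimated over non-overlapping windows for a pre-event pair and for a pair spanning the event, and the drop between the two is thresholded.

```python
import numpy as np

rng = np.random.default_rng(0)


def speckle(shape):
    """Synthetic complex SAR-like signal with random amplitude and phase."""
    return rng.normal(size=shape) + 1j * rng.normal(size=shape)


def block_coherence(img_a, img_b, win=8):
    """Coherence magnitude estimated over non-overlapping win x win blocks."""
    n_r, n_c = img_a.shape[0] // win, img_a.shape[1] // win
    a = img_a[:n_r * win, :n_c * win].reshape(n_r, win, n_c, win)
    b = img_b[:n_r * win, :n_c * win].reshape(n_r, win, n_c, win)
    cross = np.abs((a * np.conj(b)).sum(axis=(1, 3)))
    power = np.sqrt((np.abs(a) ** 2).sum(axis=(1, 3)) *
                    (np.abs(b) ** 2).sum(axis=(1, 3)))
    return cross / power


shape = (64, 64)
scene = speckle(shape)                    # stable urban backscatter (synthetic)
pre1 = scene + 0.2 * speckle(shape)       # two acquisitions before the event
pre2 = scene + 0.2 * speckle(shape)
post = scene + 0.2 * speckle(shape)       # one acquisition after the event
post[16:32, 16:32] = speckle((16, 16))    # hypothetical collapsed city block

coh_pre = block_coherence(pre1, pre2)     # pre-event pair: high everywhere
coh_co = block_coherence(pre2, post)      # pair spanning the event
coherence_drop = coh_pre - coh_co
damaged = coherence_drop > 0.5            # assumed threshold

print(np.argwhere(damaged))               # block indices flagged as disturbed
```

Comparing against a pre-event pair, rather than looking at low coherence alone, helps separate earthquake damage from areas that are always incoherent, such as vegetation or water.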

In addition to the primary earthquake hazard of shaking, there are secondary hazards of landsliding and liquefaction (Fig. 5) that are triggered during the event (Tilloy et al. 2019). Optical imagery is used to map landslide scars (Kargel et al. 2016) and also displacements (Socquet et al. 2019), whilst SAR imagery offers the advantage of not requiring cloud-free/daytime conditions (Burrows et al. 2019). Increased use of videos (such as from SkySat and Vivid-i) and night-time imagery (such as Earth Remote Observation System EROS-B or Jilin-1) for disaster response and rapid scientific analysis of an earthquake can be expected in this coming decade. In 2016, the German Aerospace Center (DLR) launched their Bi-Spectral Infrared Optical System (BIROS) to detect high-temperature events, often forest fires, as part of a Firebird constellation that also includes an earlier instrument (Halle et al. 2018). Its ~200 m ground sampling is an enhancement over previous lower-resolution systems such as the Moderate Resolution Imaging Spectroradiometer (MODIS) at 1 km, offering the potential for identifying smaller fires, which could be useful for detecting post-earthquake triggered fires within cities. Other missions carrying a thermal infrared imaging payload at sub-100 m resolution are currently under development, e.g., Trishna (CNES-ISRO) and Land Surface Temperature Monitoring (LSTM, Copernicus-ESA) that will add to this capability.

Tsunamis triggered by large earthquakes beneath the oceans (subduction zone faulting) are a major contributor to fatalities in such events, and tsunami warnings can provide some level of response. Ocean pressure sensors can provide observations of a tsunami wave passing across an ocean, whereas EO data have had limited input into such processes to date. However, satellite altimeters such as that on Jason-1 that measure the sea surface topography can capture these waves (Gower 2005) and may provide future potential for constraining submarine earthquakes and tsunamis (Sladen and Herbert 2008) more rapidly. With polar orbiting satellites, this would rely on the serendipity of the satellite footprint passing over the transitory tsunami, but with increased constellations, or geostationary orbits, or use of higher-resolution optical tracking, more operational direct tsunami wave tracking may be possible.

A major benefit of EO data in the immediate aftermath of a disaster is that its acquisition is not intrusive for the local population and government. The rapid deployment of research and data gathering personnel into an affected area is not always welcome or helpful, even if done with good intentions of trying to provide greater understanding of the event to improve outcomes in future disasters (Gaillard and Peek 2019). Using this advantage of EO, in cooperation and engagement with local partners, will maximise the effectiveness of its uptake. An increasingly important aim of organisations such as CEOS is to ensure that local capacity or international connections exist prior to disasters so that EO data are used effectively.

4.3 Recovery

In this period, further geohazards can perturb and interrupt the recovery process. Aftershocks typically decay in magnitude and frequency with time, but also seismic sequences can be protracted. Furthermore, the largest earthquake is not necessarily the first in a destructive sequence (Walters et al. 2018). Earth Observation data for large shallow aftershock events can provide further information on locations as for the main event. Operational Earthquake Forecasting can examine the time-dependent probabilities of the seismic hazard (Jordan et al. 2014) for communication to stakeholders. A time-dependent hazard model for seismicity following a major event can be estimated (Parsons 2005). One aspect of this is the transfer of stress from the earthquake fault onto surrounding fault structures which can be calculated from the distribution of slip on the fault found from inverting the surface InSAR and optical displacement data. In the recent Mw 6.4 and Mw 7.1 Ridgecrest earthquakes in California, using satellite InSAR and optical imagery, Barnhart et al. (2019b) were able to model the slip and stress evolution of an earthquake sequence (that occurred on faults not previously recognised as major active ones). They also observed the triggering of surface slip (creep) on the major Garlock strike-slip fault to the south of the earthquake zone. Understanding how faults such as these interact will be necessary to provide probabilistic calculations of time-dependent hazard. Additionally, major earthquakes often highlight the existence of hitherto unknown active faults or ones that were previously considered less important compared to known major faults (Hamling et al. 2017).

Postseismic processes such as afterslip, poroelasticity and viscous relaxation change the nature of stress in the earthquake area (Freed 2005). InSAR observations can provide useful constraints on the degree of postseismic behaviour and the magnitude of signals through time, which could prove useful in updating hazard assessments. Important insights regarding these postseismic processes, the role of fluids, lithology and fault friction can be gained from measuring surface displacements immediately after the earthquake. Following the 2014 Napa (California) earthquake, rapid deployment of on the ground measurements (DeLong et al. 2015) and GNSS (Floyd et al. 2016) enabled the capture of the very early rapid postseismic processes of slip and were further augmented by satellite radar in the weeks after the earthquake. The stresses involved in the earthquake can promote and impede postseismic slip and also cause triggered slip on other faults. Being able to track the motion on faults following a major event will enable a recalculation of the stress budget and potential for stressing other surrounding faults to incorporate this additional time varying postseismic phase (as well as highlight other previously unknown active fault traces from triggered slip). Reduced latency in Earth Observing systems is helping to contribute to this for remote areas where field teams are unlikely to get there quickly. Knowing the ratio of coseismic slip and postseismic slip better for entire fault lengths of major surface rupturing earthquakes will enable us to improve the interpretation of the magnitudes of past events (and therefore the likely amount of shaking), as this may be overestimated if a significant amount of surface offset is due to aseismic afterslip following major earthquakes, or inaccurate if the slip measured at the surface is not representative of the average slip at depth (Xu et al. 2016), or if there is significant off-fault deformation (Milliner et al. 2015). 
Much of this feeds into the longer-term aspects of DRM rather than immediate recovery.

Earthquakes often trigger landslides, in particular in regions of high relief with steep slopes, and identifying these is important in the response phase of an earthquake. However, the earthquake, subsequent shaking and triggered landslides also result in changes to the behaviour of the landscape in the recovery period, and landslides persist in being more frequent in the subsequent years post-earthquake (Marc et al. 2015). Measuring slopes that have experienced a change in their behaviour (rates or extents) using InSAR would be important to identify an acceleration in their sliding or initiation of new slope failures that would inform the recovery attempts for resettlement location plans, as well as for monitoring landslide dam stabilities for risk of catastrophic flooding.

When augmented with on-the-ground surveys, satellite imagery can contribute to the recovery process in mapping impacts to support various aspects of the reconstruction strategy. This can be the location of buildings that have been destroyed (and require subsequent debris removal), the location of populations in temporary, informal or emergency housing that may need to be rehoused and relocated (Ghafory-Ashtiany and Hosseini 2008), as well as some indicators of building condition (structure and roofs). This can be done through a range of visual, change detection and classification techniques in a manual to automated process (Contreras et al. 2016). Identification of new areas to build on around the city (green areas) would ideally take into account the change in hazard on surrounding areas from the earthquake, as faults along-strike or up-dip of rupture areas may become more prone to future rupture (Elliott et al. 2016b).

4.4 Mitigation

Strategies for reducing the risk from seismic hazards have long been known (Hu et al. 1996). The engineering requirements for buildings to survive ground accelerations from earthquakes are established and already codified into building regulations for many countries. However, in many areas these are not implemented due to increased costs relative to the status quo and/or an underappreciation of their necessity (such as in the case of hidden faults and their unrecognised proximity to cities), as well as due to corruption (and past legacies). This makes the identification of priority areas for seismic risk critical, especially where there are competing needs for the use of limited economic resources.

Earth Observation data support an array of geospatial mapping, which can provide a useful method of visualising complex spatial information, making such datasets and analyses more accessible to potential end users (Shkabatur and Kumagai 2014). When trying to communicate seismic hazard and risk, EO imagery can foster community engagement by placing the community's location in a geospatial context relative to the hazard, home, work and community buildings such as churches, as well as a family's relatives, emergency services and shelters. This could lead to the empowerment of communities to act on known solutions to earthquake hazard and support the enforcement of building codes to reduce the risk.

Ideally, the aim would be to move off the disaster risk management cycle so that a hazard no longer presents a risk and there would be no need for preparedness as the chance of disaster would have been negated (assuming the hazard was well enough characterised to know where it could occur and to what severity). Given that earthquakes are an ever-present hazard across many parts of the deforming world and will not decrease through time, risk can only be reduced by decreasing vulnerability or moving those exposed relative to the location of hazard. Risk prevention, in terms of moving the exposed population and assets relative to the active faulting and expected shaking, can be done at two spatial scales. At a local level, one approach is to establish setback distances from the surface traces of faults (Zhou et al. 2010) where peak ground accelerations are expected to be largest (as well as issues of differential offsets if buildings straddle the fault rupture itself). EO data can help by constraining the size of a suite of similar earthquake ruptures, their maximum surface offsets, and the width of the fault rupture zone (whether the zone is very localised or distributed over hundreds of metres, Milliner et al. 2015). Both visual inspection and subpixel image correlation of higher-resolution imagery are important for being able to detect the degree of on and off fault deformation due to an earthquake rupture (Zinke et al. 2014). The availability of open datasets of high-resolution imagery lags behind that of commercial operators (10 m open versus 0.3 m closed), but the higher resolution is important for pixel correlation as the measurable offsets are a fraction of the pixel size. Higher-resolution imagery would enable the detection of smaller movements and better characterisation of the width of active fault zones.
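A minimal sketch of subpixel image correlation, and of why pixel size matters, is given below. It is a toy example rather than an operational correlator: the images are synthetic Gaussian blobs, the offset is found from the peak of an FFT-based cross-correlation refined with a three-point parabolic fit, and the function names and the imposed shift are hypothetical. The two pixel sizes are those quoted above for open and commercial imagery.

```python
import numpy as np


def gaussian_blob(size, centre_row, centre_col, sigma=3.0):
    """Synthetic image patch: a smooth bright feature on a dark background."""
    rows, cols = np.mgrid[0:size, 0:size]
    return np.exp(-((rows - centre_row) ** 2 + (cols - centre_col) ** 2)
                  / (2.0 * sigma ** 2))


def subpixel_offset(reference, shifted):
    """Estimate the (row, col) shift of `shifted` relative to `reference` in pixels."""
    cross_power = np.fft.fft2(shifted) * np.conj(np.fft.fft2(reference))
    corr = np.fft.ifft2(cross_power).real          # circular cross-correlation
    peak = np.unravel_index(np.argmax(corr), corr.shape)
    centre = corr[peak]
    offset = []
    for axis, p in enumerate(peak):
        n = corr.shape[axis]
        idx = list(peak)
        idx[axis] = (p - 1) % n
        left = corr[tuple(idx)]
        idx[axis] = (p + 1) % n
        right = corr[tuple(idx)]
        # Vertex of the parabola through the three samples around the peak.
        frac = 0.5 * (left - right) / (left - 2.0 * centre + right)
        shift = p + frac
        if shift > n / 2:                          # unwrap negative shifts
            shift -= n
        offset.append(float(shift))
    return tuple(offset)


reference = gaussian_blob(64, 32.0, 32.0)
shifted = gaussian_blob(64, 32.3, 31.6)            # imposed shift: (+0.3, -0.4) pixels

measured_shift_px = subpixel_offset(reference, shifted)
magnitude_px = float(np.hypot(*measured_shift_px))

for pixel_size_m in (10.0, 0.3):                   # open versus commercial imagery
    print(f"{pixel_size_m:5.1f} m pixels: offset of {magnitude_px:.2f} px "
          f"= {magnitude_px * pixel_size_m:.2f} m on the ground")
```

The same fractional-pixel precision therefore corresponds to metres of ground motion at 10 m resolution but only decimetres at 0.3 m resolution.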

At a broader spatial scale, shifting the exposure relative to the hazard can be done to a much greater degree, such as occurred when the capital of Kazakhstan was relocated away from Almaty, in part because of seismic hazard considerations (Arslan 2014). This decision was taken because Almaty is exposed to a large seismic hazard and suffered a series of devastating earthquakes in the late nineteenth and early twentieth centuries (Grützner et al. 2017). The capital is instead based in Nur-Sultan (formerly Astana) which is both away from past earthquakes and is not in a zone rapidly straining, and is therefore considered to be of lower hazard. Such a relocation of the exposed population requires a good knowledge of where active faults are that could rupture in the future, and the potential sizes or magnitudes of future earthquakes, including what the largest credible size would be within the timeframe of human interest. Otherwise, relocating a population away from an area that has just experienced a devastating earthquake could place them in a zone that still has a significant seismic hazard or in fact a heightened hazard (such as occurs during along-strike faulting from stress changes, Stein et al. 1997). Using GNSS and InSAR data over deforming belts will enable the identification of regions within a country that are building up strain relatively slowly. For example, in some countries such as New Zealand, whilst there are regions of relatively much higher and much lower strain rate, even the slowly deforming regions are still capable of producing damaging earthquakes (Elliott et al. 2012).

At a city scale, it is important to identify the location of the faults and their segmentation. This is necessary for determining the location of faults relative to the exposure. This also allows the estimation of hazard and losses for potential earthquake scenarios, for example, by comparing rupture of a fault right beneath a city versus that expected for distant fault ruptures (Hussain et al. 2020). Such analysis is best done in conjunction with field studies and dating of fault activity from paleoseismological trenching (e.g., Vargas et al. 2014) to help ascertain the recurrence intervals and the time since the most recent event as well as potential sizes of events. Once the most exposed elements in a city are identified, mitigation by seismic reinforcement from retrofitting buildings (Bhattacharya et al. 2014) could be applied to reduce the vulnerability of certain districts. Optical imagery that captures the expansion of cities could help identify the growth of suburbs and districts onto fault scarps that leave the population more exposed. Such urban growth also hides the potential geomorphological signals of past activity from assessment today. The use of DEMs at a city scale can also help identify relative uplift and folds beneath a city that mark out the location of active faulting (Talebian et al. 2016).

Over wider areas, previous work using EO data such as InSAR and GNSS has sought to measure the rate at which major faults are accumulating strain (Tong et al. 2013). By measuring the surface velocities across major fault zones, it is possible to determine which faults are active and how fast they accrue a slip deficit. The fastest faults are generally considered to be more hazardous, and these faster rates are more easily measured with EO techniques. Such information can then be used alongside rates of seismicity and longer-term geologic estimates of fault slip rates to make earthquake rupture forecasts (Field et al. 2014) so that regions to target for mitigation can be identified. An important component of measuring fault strain accumulation is whether there are significant creeping segments (Harris 2017) that are slipping continuously (Jin and Funning 2017) and by how much this lowers the hazard. High resolution EO imagery over wide areas provides the possibility of picking out active faults by identifying such creeping segments. Identifying the size, depth extent and persistence through time of creeping areas on major faults (Jolivet et al. 2013) can provide constraints on whether these slowly slipping portions can act as barriers to major ruptures.

4.5 Preparedness

In order to know where to prepare for earthquakes, we need to know where active faults are located and where strain is accumulating within the crust. It is necessary, but not sufficient, to also know where past earthquakes have occurred. This is because past earthquakes represent areas where strain has accumulated sufficiently to lead to rupture. A region that has previously had earthquakes is likely to be able to host them in the future. It also gives us the time since the last earthquake to determine the slip deficit that may have accumulated in that interval. But due to long recurrence times relative to the length of past seismicity records, a region that is currently devoid of past major earthquakes may still be capable of producing one if it is accumulating strain. If earthquakes were to follow a regular pattern in which they had a constant (periodic) time interval between successive ruptures (Fig. 6), then the task of forecasting them would be trivial. Some faults have enough past observations or inferences of earthquakes that it is possible to assess the degree to which they host regular earthquakes (Berryman et al. 2012), and the degree to which they deviate from periodic. A fault with a low coefficient of variation (CoV) of time intervals between successive ruptures has a pattern of earthquakes that is said to be quasi-periodic, and from which estimates of the probability of a future rupture can be updated based upon the time since the last earthquake, i.e. it is time dependent (with uncertainty ranges based in part upon the degree of variation thus far observed). This is because the mean recurrence interval is more meaningful as the relative standard deviation becomes lower. In contrast, a time-independent process follows a Poisson distribution. The time to the next earthquake does not depend on how long ago the last earthquake occurred and the coefficient of variation is 1 (Fig. 6), with the probability of rupture constant through time. 
This acts as a good reference case against which to compare other patterns of faulting events. Although we may attribute this to the actual behaviour of a fault, such attributions are more likely due to the fact that we do not understand the underlying physical processes that drive, govern and control the rupture characteristics of the fault. In contrast to this behaviour, if a fault zone ruptures episodically, the seismicity is said to be clustered through time, with many events close together (relative to a mean recurrence) separated by a long hiatus with no earthquakes (Marco et al. 1996). The picture becomes much more complex when considering interacting faults, triggered seismicity and event clustering (Scholz 2010). For most faults in the world, the number of previous events and their timings are very poorly known, and the recurrence times may be very long compared to the 100 years used as an example here. Considering Fig. 6, a more realistic time horizon for the knowledge of even major faults may only be the past 1000 years of historical records from which patterns of behaviour cannot be determined given typical recurrence intervals. Interpreting palaeoseismic records of past events and trying to calculate slip rates from previous fault offsets leads to large epistemic uncertainties when the observation window is a small multiple of only a few mean recurrence intervals (Styron 2019). Further to this, if one considers the typical lifetime of an individual (and more so that of a government), the relatively long recurrence interval and variability of earthquakes may go some way to explaining the difficulties in communicating hazard and stoking an impetus for action. We usually do not know the past characteristics of a fault, nor do we know whether it is really time independent or the form of the time dependence, leading to a deep level of uncertainty (Stein and Stein 2013). 
One option under this situation is to take a robust risk management approach to deal with the breadth of reasonable scenario outcomes.
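The recurrence patterns described above can be explored with a short simulation. The sketch below draws recurrence intervals with a mean of 100 years for periodic, quasi-periodic, time-independent and clustered behaviour, and computes the CoV for each. The specific distributions are assumptions made for illustration (a gamma distribution with a 30-year standard deviation for the quasi-periodic case, and a two-component exponential mixture for the clustered case), as are the function names. It also estimates the chance of a rupture in the next 30 years given different quiet periods, to show the difference between time-dependent and time-independent behaviour.

```python
import numpy as np

rng = np.random.default_rng(42)
MEAN_YEARS = 100.0      # mean recurrence interval used in the example
N_INTERVALS = 200_000   # far more than any real record, to stabilise the statistics


def coefficient_of_variation(intervals):
    """Standard deviation of the intervals divided by their mean."""
    return float(np.std(intervals) / np.mean(intervals))


def conditional_probability(intervals, elapsed, horizon):
    """Empirical P(rupture within `horizon` years | `elapsed` years without one)."""
    survived = intervals > elapsed
    ruptured = survived & (intervals <= elapsed + horizon)
    return float(ruptured.sum() / survived.sum())


# Periodic: every interval is identical.
periodic = np.full(N_INTERVALS, MEAN_YEARS)

# Quasi-periodic: gamma distribution (assumed form) with a 30-year standard deviation.
shape = (MEAN_YEARS / 30.0) ** 2
quasi_periodic = rng.gamma(shape, MEAN_YEARS / shape, N_INTERVALS)

# Time independent: exponential intervals of a Poisson process.
poisson = rng.exponential(MEAN_YEARS, N_INTERVALS)

# Clustered: mostly short intervals plus a long tail (assumed mixture, mean 100 years).
is_long = rng.random(N_INTERVALS) < 0.1
clustered = np.where(is_long,
                     rng.exponential(820.0, N_INTERVALS),
                     rng.exponential(20.0, N_INTERVALS))

models = {"periodic": periodic, "quasi-periodic": quasi_periodic,
          "poisson": poisson, "clustered": clustered}
cov = {name: coefficient_of_variation(x) for name, x in models.items()}
for name, value in cov.items():
    print(f"{name:15s} CoV = {value:.2f}")

# Chance of a rupture in the next 30 years after 50 and 100 quiet years.
prob = {}
for name in ("quasi-periodic", "poisson"):
    prob[name] = (conditional_probability(models[name], 50.0, 30.0),
                  conditional_probability(models[name], 100.0, 30.0))
    print(f"{name:15s} P(30 yr | 50 yr quiet) = {prob[name][0]:.2f}, "
          f"P(30 yr | 100 yr quiet) = {prob[name][1]:.2f}")
```

For the Poisson case the conditional probability stays the same however long the fault has been quiet, whereas for the quasi-periodic case it rises with elapsed time. Repeating the CoV calculation on only a handful of intervals, as is typical of real palaeoseismic records, gives far less stable estimates.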

Examples of realisations of earthquake occurrence patterns through time (right column) due to varying distributions of recurrence intervals (left histograms) but all with a mean interval of about 100 years (vertical black line). A uniform earthquake interval leads to a regular pattern of earthquakes (dark grey) and therefore zero coefficient of variation (CoV)—that is the standard deviation divided by the mean interval. A time-dependent model with a small standard deviation (30 years) on the recurrence interval is shown in blue (the maximum and minimum intervals in the distribution are marked by arrows). A model where the time since the last earthquake does not affect the chance of an earthquake (green) is time independent (a Poisson probability) and has a CoV = 1. A distribution that has a short interval between most events, but a long tail of intervals has a clustered pattern and subsequently a large CoV (red). Whilst 50 events in 5000 years are shown here as an example (average recurrence interval 100 years), for most faults the recurrence intervals tend to be much longer. Given that reliable records of past earthquakes generally cover a much shorter period than 5000 years, the task of determining even an average recurrence interval, let alone an accurate assessment of any variation, becomes nearly intractable

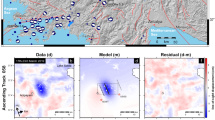

Therefore, in terms of preparedness, current techniques harnessing EO are more focussed on identifying the regions accumulating strain, as opposed to the response phase where EO is aimed at understanding the earthquake deformation process itself. Areas that pose particular difficulty in assessing the sources of hazard are those accumulating strain relatively slowly (Landgraf et al. 2017), where such approaches using decadal strain rates may not be appropriate (Calais et al. 2016). However, given that we do not always have enough knowledge about past earthquake behaviour and seismicity for a region, this alternative of measuring the accumulation of strain can provide a forecast of the expected seismicity (Bird and Kreemer 2015), and in the past this has been based upon GNSS measurements of strain. Using GNSS observations to derive crustal velocities from over 22 thousand locations, Kreemer et al. (2014) produced a Global Strain Rate Model (GSRM). From this, Bird and Kreemer (2015) forecast shallow seismicity globally based upon the accumulation of strain observed from these GNSS velocities. This can be combined with past seismological catalogues to enhance the capability for forecasting (Bird et al. 2015) such as in the Global Earthquake Activity Rate Model (GEAR). One current aim is to combine EO datasets of interferometric SAR time series with GNSS results to calculate an improved measure of continental velocities and strain rates (Fig. 7) at a higher resolution and with more complete global coverage of straining parts of the world that may lack dense GNSS observations. From these rates of strain, it is possible to estimate earthquake rates and forecasts of seismicity (Bird and Liu 2007) to calculate hazard. When combined with the exposure and vulnerability, this information can then be used to calculate seismic risk (Silva et al. 2014). 
A challenge of using surface velocities and strain derived from geodetic data is being able to differentiate long-term (secular) interseismic signals from those that are transient (Bird and Carafa 2016).
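The step from surface velocities to strain rates can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used to build the GSRM: the velocity field is synthetic, generated from the commonly used arctangent model of interseismic motion across a locked strike-slip fault, and the slip rate, locking depth and grid spacing are hypothetical. Strain rates are obtained from finite-difference velocity gradients on a regular grid.

```python
import numpy as np

SLIP_RATE_M_YR = 0.020       # hypothetical 20 mm/yr fault slip rate
LOCKING_DEPTH_M = 15_000.0   # hypothetical 15 km locking depth
SPACING_M = 1_000.0          # 1 km grid of velocities

x = np.arange(-50, 51) * SPACING_M     # along-strike coordinate
y = np.arange(-100, 101) * SPACING_M   # fault-perpendicular coordinate
X, Y = np.meshgrid(x, y)               # arrays of shape (len(y), len(x))

# Assumed interseismic velocity field: fault-parallel motion only.
vx = (SLIP_RATE_M_YR / np.pi) * np.arctan(Y / LOCKING_DEPTH_M)
vy = np.zeros_like(vx)

# Velocity gradients along axis 0 (y) and axis 1 (x).
dvx_dy, dvx_dx = np.gradient(vx, SPACING_M, SPACING_M)
dvy_dy, dvy_dx = np.gradient(vy, SPACING_M, SPACING_M)

# Components of the horizontal strain-rate tensor (per year).
exx = dvx_dx
eyy = dvy_dy
exy = 0.5 * (dvx_dy + dvy_dx)
second_invariant = np.sqrt(exx ** 2 + eyy ** 2 + 2.0 * exy ** 2)

peak_shear = float(exy.max())
analytic_peak = SLIP_RATE_M_YR / (2.0 * np.pi * LOCKING_DEPTH_M)
peak_location_m = float(y[np.argmax(exy[:, 0])])

print(f"Peak shear strain rate: {peak_shear * 1e9:.0f} nanostrain/yr "
      f"(analytic {analytic_peak * 1e9:.0f})")
print(f"Located {peak_location_m / 1000:.0f} km from the fault trace")
```

With real data the velocities are noisy and irregularly sampled, so the gradients would need smoothing or interpolation before differencing; the sketch only shows that the highest strain rate picks out the fault.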

Workflow for calculations of seismic hazard and strain based upon EO input datasets of SAR images and GNSS. InSAR measurements are used to calculate surface displacements and velocities through a time series approach. Rates of geodetic strain can be derived from these velocities to calculate earthquake rates (along with other seismological and geological data and constraints) and forecasts that can be used in subsequent seismic hazard and risk assessments

An important contribution of EO data of strain accumulation is in determining which portions of a fault are locked and building up a slip deficit, and which are stably sliding. This fraction of locking is termed the degree of coupling. By determining which portions are currently locked (i.e. the spatial variability of coupling), it may be possible to build up a range of forecasts of earthquake patterns on a fault (Kaneko et al. 2010). InSAR measurements of creeping fault segments highlight which portions of a partially coupled fault are stably sliding, and which are more fully locked, and when bursts of transient slip have occurred (Rousset et al. 2016). By determining the extent of these areas, it is possible to develop a time-dependent model of creep and establish the rate of slip deficit that builds up over time, and the potential size of earthquakes possible on a given fault section (Shirzaei and Bürgmann 2013). Determining the spatio-temporal relationship between locked regions of faults and transient slow slip portions is important to understand the potential for these stably sliding regions to both release accumulated elastic energy and also drive subsequent failure on other portions of the fault (Jolivet and Frank 2020).
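The link between coupling, slip deficit and potential earthquake size can be made concrete with a small worked example. All of the numbers below are hypothetical (fault dimensions, slip rate, elapsed time, coupling values and a shear modulus of 30 GPa), and the calculation assumes that the whole accumulated deficit is released in a single event, which gives an upper bound rather than a forecast. The slip deficit on each patch is the coupling multiplied by the long-term slip rate and the elapsed time, and the moment is converted to magnitude with the standard relation Mw = (2/3)(log10 M0 - 9.1) for M0 in N m.

```python
import numpy as np

SHEAR_MODULUS_PA = 30e9                 # assumed crustal rigidity
LONG_TERM_SLIP_RATE_M_YR = 0.025        # hypothetical 25 mm/yr
YEARS_SINCE_LAST_RUPTURE = 150.0        # hypothetical
PATCH_AREA_M2 = 10_000.0 * 15_000.0     # 10 km along strike by 15 km down dip

# Hypothetical coupling per patch: 1 = fully locked, 0 = freely creeping.
coupling = np.array([1.0, 1.0, 1.0, 1.0, 0.2, 0.2, 1.0, 1.0, 1.0, 1.0])


def moment_magnitude(moment_nm):
    """Moment magnitude from seismic moment in N m."""
    return (2.0 / 3.0) * (np.log10(moment_nm) - 9.1)


def potential_magnitude(coupling, slip_rate, years, patch_area, rigidity):
    """Magnitude if the slip deficit on every patch were released at once."""
    slip_deficit_m = coupling * slip_rate * years
    moment_nm = rigidity * patch_area * slip_deficit_m.sum()
    return float(moment_magnitude(moment_nm))


mw_partial = potential_magnitude(coupling, LONG_TERM_SLIP_RATE_M_YR,
                                 YEARS_SINCE_LAST_RUPTURE, PATCH_AREA_M2,
                                 SHEAR_MODULUS_PA)
mw_locked = potential_magnitude(np.ones_like(coupling), LONG_TERM_SLIP_RATE_M_YR,
                                YEARS_SINCE_LAST_RUPTURE, PATCH_AREA_M2,
                                SHEAR_MODULUS_PA)

print(f"Partially coupled fault: Mw {mw_partial:.2f}")
print(f"Fully locked fault:      Mw {mw_locked:.2f}")
```

Because magnitude depends on the logarithm of moment, a creeping section lowers the potential magnitude only modestly; its greater significance may lie in whether it stops a rupture from propagating through.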

Rollins and Avouac (2019) calculate long-term time-independent earthquake probabilities for the Los Angeles area based upon GNSS-derived interseismic strain accumulation and the instrumental seismic catalogue. By examining the frequency–magnitude distribution of smaller earthquakes that have already occurred, they are able to estimate the maximum magnitude of earthquakes and the likelihood of certain sized events occurring in the future. Estimates of the rate of strain accumulation from EO datasets are needed to determine the rate of build-up of the moment deficit for such analysis. For fast faults that are plate bounding and relatively simple, with few surrounding faults to interact with, a time-dependent model may be appropriate. This time dependence since the last earthquake comes from the concept of the accumulation of strain within the crust. Using InSAR measurements across most of the length of the North Anatolian Fault, Hussain et al. (2018) showed that it is reasonable to use short-term geodetic measurements to assess long-term seismic hazard, as the measured velocities from EO are constant through time apart from during the earliest parts of the postseismic phase following major earthquakes. However, the use of a time-dependent model has been argued to have failed for the Parkfield area of the San Andreas Fault as it did not perform as expected in forecasting the repeating events on this fault (Murray and Segall 2002). The accumulation of strain through time leads to an interseismic moment deficit rate which is considered to be a guide to the earthquake potential of a fault. Being able to place bounds on this deficit is important for accurate assessment of the hazard (Maurer et al. 2017). By comparing the interseismic slip rates derived from EO with known past earthquakes and postseismic deformation, Michel et al. (2018) find that the series of past earthquakes on the Parkfield segment of the San Andreas Fault is not sufficient to explain the moment deficit. They conclude that larger earthquakes with a longer return period than the window of historical records are possible on this fault to explain the deficit. For the state of California, where there is information on active fault locations, past major ruptures, fault slip rates, seismicity and geodetic rates of strain, it has been possible to develop sophisticated time-independent (Field et al. 2014) and time-dependent forecast models (Field et al. 2015) termed the third Uniform California Earthquake Rupture Forecast (UCERF3).

An even harder problem is to characterise the accumulation of strain and slip release for offshore portions of subduction zones, which host the largest earthquakes and also generate tsunamis. The expanding field of seafloor geodesy opens up a huge area of the Earth’s surface to future measurement of earthquake cycle processes (Bürgmann and Chadwell 2014) that can be used in seismic and tsunami hazard assessment. One of the methods for seafloor geodesy links GNSS systems with seafloor transponders to detect movement of the seafloor relative to an onshore reference station. The large costs of instrumentation and seafloor expeditions have limited the deployment of such technologies so far. Understanding fault zone processes such as slow slip at subduction zones is important, as slow slip both releases some of this strain aseismically and may drive other areas closer to failure, and is itself promoted by nearby earthquakes (Wallace et al. 2017). Such slow slip behaviour can be captured onshore with dense GNSS and InSAR.

The Global Earthquake Model (GEM) Foundation is an initiative that aims to create a world resilient to earthquakes through enhanced preparedness and improved risk management, drawing on its collection of risk resources. Their global mosaic of seismic hazard models (Pagani et al. 2018) includes constraints using geodetic strain rates where available, such as for UCERF3 in California. This hazard mosaic will continue to be updated as hazard maps are enhanced regionally around the globe, and EO constraints on strain rates will help improve characterisations of the input hazard. In particular, GEM have compiled an Active Faults database (Fig. 3) from regional databases (e.g., Styron et al. 2010), which is continually being added to and can be guided by constraints from EO data using optical imagery, DEMs and geodetic measurements of faults. By combining the Global Hazard map with exposure and vulnerability models (Fig. 7), a Global Seismic Risk map (Silva et al. 2018) is produced, showing the geographic distribution of average annual losses of buildings and human lives due to damage from ground shaking. By sharing these in an open and transparent manner, GEM’s aim is to advocate for reliable earthquake risk information to support disaster risk reduction planning.

Right at the end of the disaster management cycle is the Holy Grail of preparedness: earthquake precursors (Cicerone et al. 2009), which would offer the ability to forecast an impending seismic rupture and prevent a potential disaster. Finding signals that might foretell an upcoming earthquake has been an ever-present endeavour of a section of the community. However, despite decades of research (Wyss 1991), no reliable technique accepted by the wider community has arisen that can provide an earthquake prediction, in the sense of a temporally and spatially constrained warning of an imminent earthquake rupture that could be safely used to evacuate, or take measures to ensure the safety of, an exposed population.

A current debate surrounding processes prior to earthquake nucleation leaves open the question of whether major events may be triggered by a cascade of foreshocks or whether propagating slow slip triggers earthquake nucleation (Gomberg 2018). Measuring foreshocks is largely the preserve of sensitive seismological equipment, and given that slow slip at depth may be small, its measurement by EO imaging systems may remain unfeasible. Detecting small changes in the creep rates of faults has typically been done in the past with surface creepmeters at specific locations (Bilham et al. 2016), but InSAR observations can be used to measure creep rates over wider areas and to determine longer-term background rates against which deviations can be measured (Rousset et al. 2016). Perhaps identification of subtle accelerations of creep (or creep events) may be possible using EO observations of strain, but both the time resolution and sensitivity would need to be greatly enhanced beyond current capabilities.

5 Outlook and Recommendations

The uptake and exploitation of Earth Observation is expanding both in terms of understanding the science of earthquakes and in operational disaster risk management. Measuring the underlying driver of earthquake hazard, in terms of the accumulation of strain in the crust, has been an important development. The use of EO has also increased in more direct application to earthquake events, both in terms of disaster response and preparedness. This has involved the increased uptake of SAR and its derived products, which had previously lagged behind the more intuitively interpretable optical imagery datasets. Advancements have been made by going beyond using an individual image in isolation to instead looking at change through a time series approach, be it ground deformation with InSAR, image matching and offsets with optical data, or landscape classification for landsliding. The power of the observational data is best harnessed when coupled with modelling of physical processes, to better interpret the underlying mechanisms generating earthquake hazard and the changes that occur through time.

The future lies in being able to continuously image the Earth. Much of the current use of EO data for this application comes from near-polar orbiting satellites. Because such satellites spend only a short time over any given area and have a long revisit interval, continuous operational monitoring and high-density time series cannot be achieved. This leads to the compromise of having datasets with high spatial resolution but limited footprint and coarse time resolution. In the medium term, constellations of steerable video imaging satellites in Low Earth Orbit could provide a much-enhanced disaster response tool and improve the science of earthquakes. An unfolding disaster could be continuously monitored remotely to facilitate the response to collapsed buildings and determine the impact of subsequent aftershocks, as well as earthquake-triggered landslides, both through analysis of the video imagery and by constructing digital topography from the video feed to capture landscape elevation changes. Such potential for harnessing space-based video feeds for the International Disaster Charter activations has been identified (Stefanov and Evans 2015) with the High Definition Earth Viewing System (HDEVS) onboard the International Space Station (ISS). Ultimately, continuously staring satellites in geostationary orbits (both optical/video and SAR based) would in the long term provide this uninterrupted monitoring mode, which would greatly enhance Earth Observation in disaster risk management. Such systems have been suggested to capture the propagation of seismic waves using a geostationary optical seismometer (Michel et al. 2012) and for geosynchronous radar to image geohazards with SAR interferometry near-continuously (Wadge et al. 2014).

Since 2014, we have seen an important shift in the availability of SAR and optical imagery from space. Reduced latency has allowed EO data to be used increasingly early in disaster response. This huge repository of information offers great potential for the analysis of the underlying science of earthquakes. This will hopefully further contribute to the improved assessment of hazard and has the potential to help mitigate some of the risk, or at least allow for better preparation for it. A challenge for the community is to be able to usefully extract information from such large volumes of data to meet the aspirations of the Sendai Framework for the substantial reduction of disaster risk and losses in lives, livelihoods and health.

The open availability of Sentinel-1 data through ESA's Copernicus programme has greatly enhanced the capacity for incorporating EO into disaster risk management for earthquakes, as well as our understanding of the sources of seismic hazard through improved science. The availability of processing toolboxes has widened access to such datasets to a much broader user base of varying skill levels. However, to further advance Copernicus' aim of providing accurate, timely and accessible information on the environment globally with Earth Observation, and to build on the huge recent increase in the uptake of EO data (in terms of its application to earthquakes and tectonics for the assessment of both hazard and risk from geohazards), I make a number of recommendations. Whilst there have been dramatic enhancements in the latest EO systems when compared to earlier ones, there are some remaining limitations. Improvements could be made both to current systems, in terms of acquisition strategy and data provision, and in the planning for future missions. In particular, the following recommendations pertaining to the Sentinel-1/2 satellites from ESA within the Copernicus programme may help achieve some of the goals described here. Whilst the use of Sentinel data for earthquake science is only one of many mission objectives, the suggestions outlined below would enhance the interferometric capability of Sentinel-1 SAR in particular, a tool useful for determining the rates of seismogenic strain accumulation on the continents.

The Sentinel-1 constellation offers two satellites in orbit and a long-term mission with spare copies currently in storage available for launch to maintain mission continuity upon failure/deorbiting of the initial pair of satellites. However, I recommend launching the two spare Sentinel-1 C/D satellites to grow the constellation, thus reducing latency for imaging an affected area following major earthquakes. If the satellites were operated in quadrature, revisit times would reduce from the current best case 6 days with Sentinel-1 A and B to 3 days with all four, and considering both ascending and descending passes, this would be down to 1.5 days. This assumes that data can be acquired all the time, which is not possible given the limited duty cycle of about 25% of an orbit due to the power constraints from the SAR and periods when in eclipse (Attema et al. 2010). Launching Sentinel-1 C & D could therefore alleviate some of the limitations of the current duty cycle by permitting more regular acquisitions globally whilst improving the acquisition repeat interval. Were acquisitions to be done on every pass (as is done over Europe) this would help both in the use of SAR for the response phase following an earthquake and also for better constraining the postseismic deformation processes that follow immediately after earthquakes. There are a number of satellites in orbit from other agencies that contribute towards disaster response (Pritchard et al. 2018), reducing times for the first pass, but Sentinel data with a large background acquisition strategy provide some of the most consistent data for interferometry and offsets. 
Additionally, for long-term tectonic studies measuring strain accumulation over years, there are two advantages to increasing the number of satellites: (1) a shorter interval between acquisitions improves the interferometric coherence, so InSAR data are retrievable over more vegetated areas, increasing the latitudinal coverage for such assessments with C-band SAR; (2) a greater number of observations improves the time series analysis, both for constraining the evolution of rapidly changing rates (such as postseismic deformation) and for determining smaller long-term rates above the noise (i.e. regions of slower continental deformation that can still host large earthquakes). The improvement in the uncertainty in the velocity from a time series analysis of InSAR data scales as the square root of the number of observations made (Morishita et al. 2020), so doubling the number of observations using all four satellites will reduce the uncertainty by about 30%.
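The arithmetic behind these two estimates can be sketched as follows. This is an idealised illustration only: it assumes a 12-day repeat cycle for a single satellite (consistent with the 6-day best case quoted for two), evenly phased satellites, acquisitions on every pass, and the square-root scaling with the number of observations stated above. The function names are hypothetical.

```python
import math


def revisit_interval_days(repeat_cycle_days, n_satellites, both_pass_directions=False):
    """Idealised revisit interval for evenly phased satellites on the same orbit.

    Assumes every pass is acquired, which the limited duty cycle prevents in practice.
    """
    interval = repeat_cycle_days / n_satellites
    if both_pass_directions:
        # Counting ascending and descending passes together halves the interval.
        interval /= 2.0
    return interval


def uncertainty_reduction(observation_factor):
    """Fractional reduction in velocity uncertainty when the number of
    observations is multiplied by observation_factor, assuming the
    uncertainty scales as 1 / sqrt(number of observations)."""
    return 1.0 - 1.0 / math.sqrt(observation_factor)


REPEAT_CYCLE_DAYS = 12.0  # assumed single-satellite repeat cycle

for n_sats in (2, 4):
    same_geometry = revisit_interval_days(REPEAT_CYCLE_DAYS, n_sats)
    any_geometry = revisit_interval_days(REPEAT_CYCLE_DAYS, n_sats, both_pass_directions=True)
    print(f"{n_sats} satellites: {same_geometry} days (one geometry), "
          f"{any_geometry} days (ascending and descending)")

print(f"Uncertainty reduction from doubling observations: {uncertainty_reduction(2.0):.1%}")
```

Note that interferometry requires repeat acquisitions in the same viewing geometry, so the shorter interval obtained by combining ascending and descending passes applies to the first look at an affected area rather than to the interferometric repeat interval.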

For both optical cross-correlation techniques and SAR interferometry, a pre-event image is required in addition to a post-earthquake image in order to measure, by comparison of the two, the surface displacement that has occurred in an earthquake. The longer the gap between the prior image and the newly acquired one, the greater the degradation in the quality of the displacement map, due to changes in the nature of the Earth’s surface. Therefore, I recommend ensuring that all areas of the globe likely to be straining and exposed to earthquakes are imaged regularly enough that no gap between acquisitions exceeds a number of months. In 2017–2018, some tracks of Sentinel-1 SAR data were not acquired at all for a whole year over tectonically important areas such as Iran and New Zealand. Such large gaps can break the time series approach of detecting deformation, and warning systems should be created to highlight the emergence of such data gaps. The uncertainty on the estimated constant velocity in a time series of InSAR data scales inversely with the length of the connected time series (Morishita et al. 2020). Any gaps in the time series result in a shorter connected network of data. Therefore, missing data acquisitions for long periods of time has a major deleterious impact on the level of uncertainty, and breaking the connected time series in half will about double the uncertainty in estimated velocities.
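A data gap warning of the kind suggested here could, in its simplest form, be a check on the list of acquisition dates for a track. The sketch below is a hypothetical illustration, not an existing tool: the function names, the 90-day threshold and the synthetic acquisition dates are all assumptions, and the uncertainty penalty simply applies the inverse scaling with connected time series length described above.

```python
from datetime import date, timedelta


def find_gaps(acquisition_dates, max_gap_days):
    """Return (start, end, length_in_days) for every gap longer than max_gap_days."""
    dates = sorted(acquisition_dates)
    return [
        (earlier, later, (later - earlier).days)
        for earlier, later in zip(dates, dates[1:])
        if (later - earlier).days > max_gap_days
    ]


def longest_connected_span_days(acquisition_dates, max_gap_days):
    """Length in days of the longest run of acquisitions with no gap over the threshold."""
    dates = sorted(acquisition_dates)
    if not dates:
        return 0
    longest = 0
    segment_start = dates[0]
    for earlier, later in zip(dates, dates[1:]):
        if (later - earlier).days > max_gap_days:
            longest = max(longest, (earlier - segment_start).days)
            segment_start = later
    return max(longest, (dates[-1] - segment_start).days)


def uncertainty_penalty(total_span_days, connected_span_days):
    """Factor by which velocity uncertainty grows relative to a gap-free series,
    assuming it scales inversely with the connected time series length."""
    return total_span_days / connected_span_days


# Synthetic example: 12-day acquisitions with a 120-day outage in the middle.
first_acquisition = date(2017, 1, 1)
offsets_days = list(range(0, 361, 12)) + list(range(480, 841, 12))
track_dates = [first_acquisition + timedelta(days=d) for d in offsets_days]

MAX_GAP_DAYS = 90  # assumed threshold for "a number of months"
gaps = find_gaps(track_dates, MAX_GAP_DAYS)
connected = longest_connected_span_days(track_dates, MAX_GAP_DAYS)
total = (max(track_dates) - min(track_dates)).days

for start, end, length in gaps:
    print(f"WARNING: {length}-day gap between {start} and {end}")
print(f"Uncertainty penalty relative to a gap-free series: "
      f"{uncertainty_penalty(total, connected):.2f}x")
```

Whether a given gap actually disconnects the interferometric network depends on the coherence of the terrain, so the threshold would need to be tuned by region rather than fixed globally.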

Regular acquisitions with consistent imaging modes are an important design consideration for optical and SAR acquisitions (such as with Landsat and Sentinel-1) for improving the potential for long-term and systematic exploitation of the archives. One such example is Sentinel-1's Interferometric Wide Swath (IWS) mode, which allows for the analysis of large stacks of time series data because the imagery is collected predominantly in this single mode over land in tectonically deforming areas. This is not possible for satellite systems that have the flexibility of acquiring in a whole host of imaging modes (stripmap, wideswath, spotlight, etc.), as they do not create a consistent archive for long-term interferometric measurements. There were also some issues arising from the shifting of acquisition frames by ESA (the start and end time of an acquisition), which made processing of the data initially more difficult. This resulted in a greater amount of book-keeping, slowing the initial uptake of the imagery, and now requires more data management. It is easier if the acquisitions are systematic and consistent, such as with the ERS frame approach. This could usefully be implemented both in the current acquisition strategy and in future mission designs.