Abstract

Statistical assessment of candidate gene effects can be viewed as a problem of variable selection and model comparison. Given a certain number of genes to be considered, many possible models may fit to the data well, each including a specific set of gene effects and possibly their interactions. The question arises as to which of these models is most plausible. Inference about candidate gene effects based on a specific model ignores uncertainty about model choice. Here, a Bayesian model averaging approach is proposed for evaluation of candidate gene effects. The method is implemented through simultaneous sampling of multiple models. By averaging over a set of competing models, the Bayesian model averaging approach incorporates model uncertainty into inferences about candidate gene effects. Features of the method are demonstrated using a simulated data set with ten candidate genes under consideration.

Similar content being viewed by others

References

Bishop CM (2006) Pattern recognition and machine learning. Springer, New York

Carlin B, Chib S (1995) Bayesian model choice via Markov Chain Monte Carlo methods. J Roy Stat Soc Ser B 57:473–484

Carlin BP, Louis TA (1995) Bayes and empirical Bayes methods for data analysis, 2nd edn. Chapman & Hall/CRC Press, Boca Raton

Congdon P (2006) Bayesian model choice based on Monte Carlo estimates of posterior model probabilities. Comput Stat Data Anal 50:346–357

Congdon P (2007) Model weights for model choice and averaging. Stat Methodol 4:143–157

Dellaportas P, Forster J, Ntzoufras I (2002) On Bayesian model and variable selection using MCMC. Stat Comput 12:27–36

Draper D (1995) Assessment and propagation of model uncertainty. J Roy Stat Soc Ser B 57:45–97

Fridley B (2009) Bayesian variable and model selection methods for genetic association studies. Genet Epidemiol 33:27–37

Gelman A, Rubin DB (1992) Inference from iterative simulation using multiple sequences. Stat Sci 7:457–511

Green P (1995) Reversible jump Markov chain Monte Carlo computation and Bayesian model determination. Biometrika 82:711–732

Heath SC (1997) Markov chain Monte Carlo segregation and linkage analysis for oligogenic models. Am J Hum Genet 61:748–760

Hoeting JA, Madigan D, Raftery AE, Volinsky T (1999) Bayesian model averaging: a tutorial. Stat Sci 14:382–417

Jannink JL, Wu XL (2003) Estimating allelic number and identity in state of QTLs in interconnected families. Genet Res 81:133–144

Madigan D, Raftery AE (1994) Model selection and accounting for model uncertainty in graphical models using Occam’s window. J Am Stat Assoc 89:1535–1546

Miller AJ (1984) Selection of subsets of regression (with discussion). J Roy Stat Soc Ser A 147:387–425

Munafò MR (2006) Candidate gene studies in the 21st century: meta-analysis, mediation, moderation. Genes Brain Behav 5(Suppl 1):3–8

Pflieger S, Lefebvre V, Causse M (1996) The candidate gene approach in plant genetics: a review. Mol Breed 7:275–291

Raftery AE (1993) Bayesian model selection in structural equation models. In: Bollen K, Long J (eds) Testing structural equation models. Sage, Newbury Park, pp 163–180

Raftery AE, Madigan D, Volinsky CT (1996) Accounting for model uncertainty in survival analysis improves predictive performance (with discussion). In: Bernardo J, Berger J, Dawid A, Smith A (eds) Bayesian statistics 5. Oxford University Press, Oxford, pp 323–349

Regal RR, Hook EB (1991) The effect of model selection on confidential intervals for size of a closed population. Stat Med 10:717–721

Rothschild MF (2003) Advances in pig genomics and functional gene discovery. Comp Funct Genomics 4:266–270

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Sillanpää MJ, Arjas E (1998) Bayesian mapping of multiple quantitative trait loci from incomplete inbred line cross data. Genetics 148:1373–1388

Sillanpää MJ, Arjas E (1999) Bayesian mapping of multiple quantitative trait loci from incomplete outbred offspring data. Genetics 151:1605–1619

Sinharay S, Stein HS (2005) An empirical comparison of methods for computing Bayes factors in generalized linear mixed models. J Comput Graph Stat 14:415–435

Sorensen D, Gianola D (2002) Likelihood, Bayesian, and MCMC methods in quantitative genetics. Springer, New York

Tierney L, Kadane JB (1986) Accurate approximations for posterior moments and marginal densities. J Am Stat Assoc 81:82–86

Uimari P, Hoeschele I (1997) Mapping-linked quantitative trait loci using Bayesian analysis and Markov chain Monte Carlo algorithms. Genetics 146:735–743

Wu XL, Jannink JL (2004) Optimal sampling of a population to determine QTL location, variance, and allelic number. Theor Appl Genet 108:1434–1442

Wu XL, Macneil MD, De S, Xiao QJ, Michal JJ, Gaskins CT, Reeves JJ, Busboom JR, Wright RW Jr, Jiang Z (2005) Evaluation of candidate gene effects for beef backfat via Bayesian model selection. Genetica 125:103–113

Yi N, Xu S (2000a) Bayesian mapping of quantitative trait loci for complex binary traits. Genetics 155:1391–1403

Yi N, Xu S (2000b) Bayesian mapping of quantitative trait loci under the identity-by-descent-based variance component model. Genetics 156:411–422

Acknowledgments

This research was supported by the Wisconsin Agriculture Experiment Station, and was partially supported by National Research Initiative Grant no. 2009-35205-05099 from the USDA Cooperative State Research, Education, and Extension Service, NSF DEB-0089742, and NDF DMS-044371. KAW acknowledges financial support from the National Association of Animal Breeders (Columbia, MO). Comments from the anonymous reviewers and the editor are acknowledged.

Author information

Authors and Affiliations

Corresponding author

Appendix: Preliminary model selection using the Occam’s Window method

Appendix: Preliminary model selection using the Occam’s Window method

The procedure described here is adapted from the Occam’s Window (OW) method of Madigan and Raftery (1994), which averages over a set of data-supported models. There are two principles underlying OW. First, a model that predicts the data far worse than the model producing the best predictions should no longer be considered. That is, models not belonging to set

should be excluded, where c is a chosen value (e.g., c = 20, by analogy with the popular 0.05 cutoff for P-values). The second principle (optional) appeals to the Occam’s razor, which excludes complex models (M k ) receiving less support from the data than their simpler counterparts (M l ), i.e., by excluding models in set:

Then, Bayesian model averaging (2) is replaced by

where A contains models in set A′ but not in set B, and all probabilities are implicitly conditional on the set of models in A. So, the BMA problem is reduced to finding set A.

Rejection based on OW is based on the interpretation of the ratio of posterior probabilities of two competing models. Let \( r = {\frac{{p\left( {M_{0} |{\mathbf{y}}} \right)}}{{P\left( {M_{1} |{\mathbf{y}}} \right)}}} \), where M 0 and M 1 denote the “smaller” and “larger” models, respectively, and \( p\left( {M_{k} |y} \right) \) is the marginal (with respect to the parameters) posterior probability that k is the true model (k = 0, 1). Let O L and O R define the left and right boundaries of OW, respectively. If evidence supports M 0 (i.e., r > O R ), then M 1 is rejected. Rejecting M 0 occurs if r < O L (Optionally, rejecting “smaller” model M 0 may require stronger evidence if choosing O L < O −1 R ). Once M 0 is rejected, all its “submodels” are rejected as well. If O L ≤ r ≤ O R , then both models stay.

Computing model posterior probability (3) requires evaluating integral (4), which may be analytically difficult or even impossible in many practical situations. Laplace’s approximation (Tierney and Kadane 1986) can be used to compute the marginal likelihood, say, of model k:

where p k is the dimension of θ k , \( \tilde{{\varvec{\theta}}}_{k} \) is the posterior mode of θ k and \( {\mathbf{H}}_{{\tilde{{\varvec{\theta}}}_{k} }}^{ - 1} \) is minus the inverse Hessian of \( h\left( {{\varvec{\theta}}_{k} } \right) = \log \left\{ {p\left( {{\mathbf{y}}|{\varvec{\theta}}_{k} ,M = k} \right)p\left( {{\varvec{\theta}}_{k} |M = k} \right)} \right\} \), evaluated at \( {\varvec{\theta}}_{k} = \tilde{{\varvec{\theta}}}_{k} \). A computationally convenient variant to approximation (20) uses the maximum likelihood estimator \( \hat{{\varvec{\theta}}}_{k} \), instead of the posterior mode \( \tilde{{\varvec{\theta}}}_{k} \) (Sorensen and Gianola 2002). In particular, if observations are i.i.d, then,

where \( {\mathbf{H}}_{{1,\hat{{\varvec{\theta}}}_{k} }} \) is the observed information matrix calculated from a single observation, evaluated at the maximum likelihood estimates of θ k . Suppose that the prior conveys some sort of “minimal” information represented by \( {\varvec{\theta}}_{k} |M = k\sim N\left( {\hat{{\varvec{\theta}}}_{k} ,H_{{1,\hat{{\varvec{\theta}}}}}^{ - 1} } \right) \). This is a unit information prior centered at the maximum likelihood estimator and having a precision equivalent to that brought up by a sample of size n = 1. Following Sorensen and Gianola (2002), it can be shown that

This is one half of the Bayesian Information Criterion (BIC, Schwarz 1978). Note that evaluating (20) or (21) requires specification of the number of parameters, which is not always obvious with correlated random effects (e.g., animal or sire effects) in the model. However, as shown in (22), what matters is the difference in number of parameters between the two models. In a CG study, all models share the same set of infinitesimal additive effects, and computing (22) is straightforward. Nevertheless, (22) is not readily applicable to situations when the number of random effects varies among models. A review of approaches for computing marginal likelihoods for selection of models involving random effects is in Sinharay and Stein (2005).

Model selection search can proceed in two directions, e.g., moving either from larger to smaller models (“down” algorithm) or from smaller to larger models (“up” algorithm). Let A and C be dynamically changing subsets of model space M, which contain “acceptable” models and “candidate” models (i.e., those currently under consideration), respectively. Both algorithms start with A = Ø and C = {set of starting models} and proceed until set C is empty. Upon completion, set A contains a set of potentially acceptable models. Finally, all models meeting either of the following two criteria are removed:

This reduces considerably the number of models, and retains a set of data-supported models for the BMA analysis.

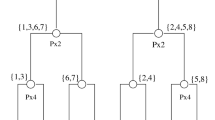

To illustrate the process, consider model selection involving the choice of three regression variables x 1, x 2, and x 3, which may represent three candidate gene effects. Let sample size be n = 1,200, and x 2 be the only regression variable that truly affects observation y. Without considering their interactions, there are eight possible models, including the null model, as follows:

Let log (O L ) = log (1/20) ≈ −1.30 and log (O R ) = log (20) ≈ 1.30 define the left and right boundaries of the OW, respectively.

Suppose that the “down” algorithm initializes with A = Ø and for simplicity C = {M1}. To start, pick a model, i.e., M1, from set C and add it to set A. Model selection search proceeds by comparing M1 with one of its submodels formed by removing one regression variable each time (leading to M2, M3, and M4, respectively). If M1 is rejected, the submodel replaces M1; otherwise, it “survives” as an acceptable model. First, select a submodel of M1, say M2 (which is M1 without x 1). Because the influence of x 1 on y is immaterial, models M1 and M2 would be expected to give similar likelihoods but the latter model has one less parameter. Using (22), this leads to \( \log \left( {{\frac{{p\left( {M2|y} \right)}}{{p\left( {M1|y} \right)}}}} \right) \approx 0 - {\frac{{\left( { - 1} \right)}}{2}}\log \left( {120} \right) \approx 1.54 > \log \left( {O_{R} } \right). \) Thus, model selection rejects M1, and M2 replaces M1 in set C. Continue the same process with the other two submodels M3 and M4. Assume that M3 is rejected and M4 is accepted, because the latter contains the influential variable x 2. Then, set C now consists of two models, M2 and M4.

Next, M2 is compared with all its submodels with one less regression variable (i.e., M6 and M7). First, select M7, which is M2 without x 2. Assume that \( \log \left[ {{\frac{{p\left( {{\mathbf{y}}|\hat{{\varvec{\theta}}}_{7} ,M7} \right)}}{{p\left( {{\mathbf{y}}|\hat{{\varvec{\theta}}}_{2} ,M2} \right)}}}} \right] = - 3.2 \). Then, \( \log \left[ {{\frac{{p\left( {M7|{\mathbf{y}}} \right)}}{{P\left( {M2|{\mathbf{y}}} \right)}}}} \right] \approx - 3.20 + 1.54 = - 1.66 < \log (O_{L} ) \). Thus, M7 is rejected, and so is its submodel M8. Model selection continues to compare it with the other submodel M6 (i.e., formed by removing x 3 from M2). Because x 3 has no significant effect on y, we would expect that the likelihoods of the two models are approximately equal, such that \( \log \left( {{\frac{{p\left( {M6|y} \right)}}{{p\left( {M2|y} \right)}}}} \right) \approx 0 - {\frac{{\left( { - 1} \right)}}{2}}\log \left( {120} \right) \approx 1.54 > \log \left( {O_{R} } \right) \). Thus, model selection rejects M2, and M6 replaces M2 in set C (now, set C consists of M4 and M6).

Like with M2, repeat the same calculation for comparing M4 and all its submodels (i.e., M5 and M6). For simplicity, we assume that M5 is rejected and M6 is accepted, because the latter contains the influential variable x 3. Because M6 is already in set C, there is no need to add it there.

By the same reasoning, the model selection retains M6 when compared to the null model M8, so M6 enters set A. Now, there is no more “candidate” model in set C, and the “down” algorithm ends. The outcome is set A which contains only one acceptable model. In real situations, however, more models are expected in set A.

The “up” algorithm starts, e.g., with A = Ø and, for example, C = {M6}, where set C contains model(s) output from the “down” algorithm. To proceed, the model selection compares M6 with one of its super-models each with one more regression variable. If there is decisive evidence for the super-model, it replaces the smaller model, otherwise the smaller model remains. Adding x 1 and x 3 to M6 leads to super-models M4 and M2, respectively. In this setting, model selection rejects both super-models and retains the smaller model (M6), because both variables do not influence y. Upon completion, set A contains the acceptable model, i.e., M6. Because there is only one model in set A, evaluations based on (23) and (24) are no longer necessary, and set A is final.

Rights and permissions

About this article

Cite this article

Wu, XL., Gianola, D., Rosa, G.J.M. et al. Bayesian model averaging for evaluation of candidate gene effects. Genetica 138, 395–407 (2010). https://doi.org/10.1007/s10709-009-9433-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10709-009-9433-4