Abstract

Nitrate (NO3−) leaching into groundwater is a growing global concern for health, environmental, and economic reasons, yet little is known about the effects of agricultural management practices on the magnitude of leaching, especially in dryland semiarid regions. Groundwater nitrate–nitrogen (nitrate–N) concentrations above the drinking water standard of 10 mg L−1 are common in the Judith River Watershed (JRW) of semiarid central Montana. A 2-year study conducted on commercial farms in the JRW compared nitrate leaching rates across three alternative management practices (AMP: pea, controlled-release urea, split application of N) and three grower standard practices (GSP: summer fallow, conventional urea, single application of urea). Crop biomass and soil were collected at ten sampling locations on each side of a management interface separating each AMP from its corresponding GSP. A nitrogen (N) mass balance approach was used to estimate the amount of nitrate leached annually. In 2013, less nitrate leached the year after the pea AMP (18 ± 2.5 kg N ha−1) than the year after the fallow GSP (54 ± 3.6 kg N ha−1), whereas the two AMP fertilizer treatments had no effect on nitrate leaching compared to GSPs. In 2014, leaching rates did not differ between each AMP and its corresponding GSP. The results suggest that replacing fallow with pea has the greatest potential to reduce nitrate leaching. Future leaching research should likely focus on practices that decrease deep percolation, such as fallow replacement with annual or perennial crops, more than on N fertilizer practices.


Introduction

Groundwater nitrate contamination due to leaching is an extensive and growing concern, especially in agricultural regions with shallow groundwater (Power and Schepers 1989; Dubrovsky et al. 2010; Puckett et al. 2011). Puckett et al. (2011) showed that average nitrate–N concentrations in 424 shallow unconfined groundwater wells across the United States increased from <2 mg L−1 in the early 1940s to 15 mg L−1 by 2003. Nitrate–N concentrations above the Environmental Protection Agency’s (EPA’s) primary drinking water standard of 10 mg L−1 have been linked to adverse health effects such as methemoglobinemia (Knobeloch et al. 2000; Fewtrell 2004) and cancer (De Roos et al. 2003; Ward et al. 2005). Beyond health concerns, nitrate leaching can result in economic losses for agricultural producers (Janzen et al. 2003; Miao et al. 2014), making greater N use efficiency (NUE)—conceived here as the ratio of crop N uptake to plant available N—a promising shared goal for sound environmental stewardship and economic sustainability. In the 720,000-ha semiarid Judith River Watershed (JRW) of central Montana, a large agricultural production region in the northern Great Plains (NGP), nitrate–N concentrations in shallow wells are commonly above the EPA drinking water standard. Notably, concentrations in the only long-term monitoring well in the watershed approximately doubled since 1994, increasing from ca. 10 to ca. 20 mg L−1 in two decades (Schmidt and Mulder 2010; Miller 2013).

Much of the small-grain agriculture in the JRW is on soils with shallow water tables and gravel contacts 30–100 cm below the ground surface. These soils are not only especially vulnerable to leaching loss of solutes but also make agricultural production particularly sensitive to management changes and drought. As a result, producers are often hesitant to adopt new strategies, limiting their flexibility to develop environmentally and economically successful management solutions. Soils with shallow gravel contacts are not confined to the JRW but exist on alluvial and fluvial landforms throughout Montana, including the 200,000-ha Flaxville Gravels located in northeast Montana (Nimick and Thamke 1998).

Reducing N application rate is one option for decreasing leaching (Goulding 2000) and increasing NUE, yet decreased N rates can reduce net revenue especially when grain protein discounts are steep (Miller et al. 2015). Controlled-release urea (CRU: Mikkelsen et al. 1994; Shoji et al. 2001) and split N applications (Mascagni and Sabbe 1991; Mohammed et al. 2013) can also decrease leaching and increase NUE by lowering soil nitrate concentrations during vulnerable periods for leaching. Nitrate leaching effects of CRU and split applications have not been evaluated in the semiarid NGP, although Grant et al. (2012) found that they produced inconsistent effects on NUE in a wetter portion of the NGP.

Cropping systems can also affect efficiency of water and nitrogen use. Summer fallow, a production method in which crops are not grown for an entire season to conserve water, has been linked to high groundwater nitrate concentrations in the NGP (Custer 1976; Bauder et al. 1993; Nimick and Thamke 1998). Consistent with this finding, continuous cropping can decrease the downward movement of water and increase NUE (Campbell et al. 1984; Westfall et al. 1996). In western Canada, a continuous wheat rotation leached 180 kg N ha−1 less nitrate than fallow-wheat over the 37-year study (Campbell et al. 2006). Consequently, intensifying cropping systems and increasing crop diversity, such as replacing fallow with annual legumes in cereal rotations, could reduce leaching, with potential to also increase net revenue in the NGP (Zentner et al. 2001; Miller et al. 2015), yet concerns persist about increased risk from increased water use of intensified cropping systems.

Published nitrate leaching research for dryland semiarid regions in general is scant. Limited research on this topic may reflect an underestimation of the importance of leaching in low precipitation regions, but elevated groundwater nitrate concentrations in other parts of Montana and the Great Plains demonstrate its significance (Bauder et al. 1993; Scanlon et al. 2008; Schmidt 2009). In addition, rates of deep percolation from the root zone in semiarid regions are relatively small and difficult to quantify, especially in areas with highly variable gravel contact depths. Here we employ a mass balance leaching quantification approach (Meisinger and Randall 1991) that relies on spatially extensive sampling, and complements a companion lysimeter study (Sigler et al. in review).

This study seeks to address the research gap in measuring nitrate leaching rates in regions of the NGP with shallow gravel contacts and shallow water tables by identifying alternative management practices (AMPs) that are most likely to be adopted by agricultural producers and then empirically testing and comparing how well each AMP reduces nitrate leaching rates. Although soils with shallow gravel contacts are less common outside the JRW, these soils are more sensitive to management changes than deeper soils, and hence can provide insight into practices that can affect leaching from deeper soils during wetter periods. All studies were conducted on producer fields using their equipment, with management practices, including N rates, developed in close collaboration with producers.

Materials and methods

Study area

The 720,000-ha JRW (HUC 10040103) is located in the Upper Missouri River watershed in central Montana, within the North American NGP agroecoregion (Fig. 1; Padbury et al. 2002). Agricultural land encompasses 41% of the agroecoregion’s approximately 125 million ha, with the vast majority of this agricultural land farmed using dryland practices. The JRW ranges in elevation between 760 and 1290 m, and the town of Moccasin, located near the JRW’s center, receives approximately 40% of its average 390 mm annual precipitation in May and June (WRCC Gage # 245761). Annual crops in the watershed are primarily small grains, which are especially prevalent on gravel-mantled strath terraces (cut by a meandering river into relatively soft bedrock) with shallow aquifers, where elevated nitrate concentrations are most common (Schmidt and Mulder 2010). Only 1% of the annual crops grown in the two counties within JRW are irrigated (USDA 2016). Summer fallow represents approximately 27% of the cropped land (USDA 2014) in any given year, reflecting that on average, fallow is used once in a 3- or 4-year rotation.

Fig. 1 Judith River Watershed location within Montana and the northern Great Plains (NGP). Aerial imagery illustrates the large extent of cropland over gravel deposits. Fields A, B, and C were located within the Stanford Terrace, Moccasin Terrace, and Moore Bench landforms, respectively. Data sources: Montana Natural Resources Information Systems, USGS National Elevation Dataset, Commission for Environmental Cooperation, LandSat 8 imagery, MBMG Belt, Big Snowy and Lewistown Geologic Maps, USGS White Sulphur Springs Geologic Map

The soils capping the terrace gravels in the JRW are derived primarily from alluvium, loess, and shale, with mainly clay loam and loam textures. They are primarily mapped as Typic Calciustolls of the Judith series, Vertic Argiustolls of the Danvers series, and Typic Argiustolls of the Tamaneen and Doughty series, which collectively are characterized by shallow gravel contacts that vary from 30 to 100 cm in depth, with about 20 g kg-fines−1 of soil organic carbon (SOC) in the upper 15 cm, and 100–600 g CaCO3 kg-fines−1 (12–72 g C kg-fines−1) in calcic subsurface horizons that predominate below ca. 30 cm (NRCS 2016).

Study design

The study was conducted from 2012 to 2014 on three dryland commercial farms located in the JRW (Fig. 1; Table 1). Farms were chosen to ensure representation of major landform types and geographic distribution within the JRW. A representative sample of locations is particularly important for helping attenuate potential selection bias of producers who were willing to participate. Within each landform, field selection criteria included (1) presence of shallow gravel contacts within soils (<100 cm), (2) only one soil series or two-component complex per field, and (3) use of summer fallow-winter wheat-spring grain rotation (a typical JRW rotation). Fields A, B, and C were located a few km from Stanford, Moccasin, and Moore, respectively (Fig. 1) and were on farms that had been under no-till management for 10–15 years.

We surveyed randomly selected producers (Jackson-Smith and Armstrong 2012) and obtained input from both a stakeholder advisory committee and a producer research advisory group to identify three AMPs with the highest adoption potential for the JRW: pea grown for grain (followed by winter wheat), and wheat fertilized with either a controlled-release urea (CRU) or a split N application (see Supplementary Material for details on selection process). These AMPs were empirically compared with grower standard practices (GSP): summer fallow (followed by winter wheat), and wheat fertilized with conventional urea (CU) applied in a single broadcast (SB). All three AMPs were tested at Field A (Fig. 2). The sampling design at Fields B and C mimicked that at Field A, yet the only AMP tested at Field B was pea (2012 and 2013), and only fertilizer treatments were tested at Field C (2013 and 2014).

Fig. 2 Study design at each field, illustrating sampling locations (+), non-sampled buffers at field ends (gray shading), treatment interfaces (dashed lines), crop, and treatment. WW winter wheat, SW spring wheat, Fal fallow, SB single broadcast urea, CU conventional urea, CRU controlled-release urea, Split App split application. In 2013, subfield A4 simultaneously served as a Post Fal treatment (east sample sites) and SB (west sample sites)

At each farm, full scale operational fields were divided into 10–20 ha subfields (Fig. 2). Each north–south management boundary (“interface”) separated a GSP subfield from its respective AMP subfield (selected randomly). In total, there were eight GSP-AMP interfaces (four at Field A, two at Field B, and two at Field C) so that each treatment was studied in duplicate in both space (i.e., pea studied at Field A and B) and time (Table 2). Fertilizer treatments used in 2013 on winter wheat were repeated on the same fields in 2014 on spring wheat, and pea and fallow treatments were on different subfields in 2012 than in 2013 so that winter wheat could follow pea or fallow in rotation.

Sampling was designed to capture variation with treatment in full-size working fields. Each subfield excluded areas located approximately 100 m from the north and south field edges to minimize potential agricultural machinery disturbance from turning and/or multiple seeding/chemical passes. Ten sample locations were established per subfield in pairs equidistant from the interface (20 sample locations per interface). Sample locations were approximately 8.5 m from the interface line to avoid treatment edge effects (i.e., 17 m between paired sampling locations). The area surrounding each sampling location was near-level, with slopes <2%, and neither runoff nor evidence of runoff was observed. Soil pits excavated in summer 2012 at 4–5 locations on each side of each interface revealed the presence of groundwater within 1.2 m of the land surface in 8 of 33 pits at Field A, likely due in part to near-record precipitation amounts received from Sept 2010 to Aug 2011 (483 mm; Moccasin Agrimet). A network of 12 monitoring wells installed within Field A in 2012 indicated dropping water levels for the rest of that year, and in 2013, none of the measured water levels in the field were within 1.2 m of ground surface, making crop N uptake from groundwater less of a concern as the study progressed. There was no evidence of groundwater within the ca. 1 m root zone at either Field B or C, where groundwater levels were ca. 10 and 3–15 m below the surface, respectively, in monitoring wells and nearby domestic wells.

Rates of fertilizer N were within 3 kg N ha−1 for each AMP and its respective GSP (Table 3). In the 2013 treatment year (i.e., late summer 2012 to late summer 2013), CRU (ESN®; Agrium Inc., Calgary, AB, USA) was applied with winter wheat seed. In the 2014 treatment year, growers A and C applied about 1/3 of the total N rate as CRU with spring wheat seed and the remaining N was broadcast as urea later in the spring. While this is technically a ‘split application’, for purposes of this paper, we have designated the split application as broadcast urea followed by a liquid N application. Specifically, urea ammonium nitrate (UAN) was applied in late May/early June 2013 using flat fan nozzles. For the second application at Field C in 2014, UAN was applied with streamer bars, whereas on Field A, the second application was not performed due to very dry conditions. Additional details of fertilizer application are presented in John (2015).

Instrumentation and sampling

Instrumentation for air temperature, soil temperature, precipitation, and volumetric water content (VWC) is described in the Supplementary Material. Sampling to characterize subfield soils was conducted prior to initiation of treatments, between Apr and Aug 2012. Two soil core sub-samples were taken at each sample location with either a hand core or custom-made truck-mounted hydraulic probe to a depth of 15 cm. Sub-samples were mixed, placed into plastic-lined paper bags, and transported in coolers on ice to Montana State University prior to further processing. Each year, within 10 days prior to commercial harvest, a biomass sample was collected from a ca. 0.6-m2 area at each location using a rice sickle to cut the stems at soil contact.

Post-harvest soil samples were collected as close to harvest as possible (ca. July 15–Sept) during each study year (2012–2014) to minimize N mineralization between biomass harvest and soil sampling that would bias the N balance. Two 3-cm diameter soil cores were collected at each sample location with a truck-mounted hydraulic probe to the deepest possible depth; that is, either to gravel contact, anomalous rock, or the 110-cm probe depth limit (only 6 of 1130 cores). The two core samples were then sectioned into 15-cm depth intervals and combined into one sample per depth. In 2013 and 2014, both biomass and soil samples were collected ca. 1 m north of the previous year’s soil sampling location based on coordinates logged with a GPS unit (Trimble Navigation Ltd., Sunnyvale, CA, USA).

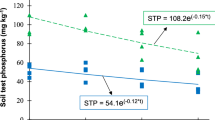

Laboratory procedures

Soil samples were weighed moist, oven-dried at 50 °C for 7–14 days, and re-weighed to determine gravimetric water content (GWC) and dry bulk density based on core dimensions. Volumetric water content (VWC) was determined for each sample by multiplying GWC by dry bulk density. Each soil sample was broken up with a mortar and pestle and sieved (<2 mm). Nitrate was extracted from the fines in a 1:10 soil:solution ratio with 1 M KCl (Bundy and Meisinger 1994) and extracts analyzed with cadmium reduction and colorimetry using Lachat Flow Injection Analysis (QuikChem Method 12-107-04-1-B, Lachat Instruments Inc., Milwaukee, WI, USA). Each surface core (0–15 cm) collected in 2012 was analyzed for pH (1:1 soil:water), electrical conductivity (EC; 1:1 soil:water), Olsen phosphorus (Frank et al. 2012), exchangeable potassium (ammonium acetate pH 7), total-N (TN; Elementar Vario Max by combustion analyzer), soil organic carbon (SOC) by Walkley–Black (Combs and Nathan 2012), and soil texture (Hydrometer method ASTM 422).
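The water-content arithmetic described above can be sketched as follows. This is a minimal illustration, not the authors' processing script: the function names, the example masses, and the water density of 1 g cm−3 are assumptions, and the sketch does not address how the coarse (>2 mm) fraction was handled.

```python
import math

WATER_DENSITY_G_CM3 = 1.0  # assumed density of water


def core_volume_cm3(diameter_cm, length_cm, n_cores=1):
    """Volume of one or more cylindrical core sections of equal size."""
    return n_cores * math.pi * (diameter_cm / 2.0) ** 2 * length_cm


def water_contents(moist_g, dry_g, volume_cm3):
    """Return (GWC, dry bulk density, VWC) for one depth-interval sample.

    GWC is g water per g dry soil, bulk density is g dry soil per cm3,
    and VWC is cm3 water per cm3 soil (GWC multiplied by bulk density).
    """
    gwc = (moist_g - dry_g) / dry_g
    bulk_density = dry_g / volume_cm3
    vwc = gwc * bulk_density / WATER_DENSITY_G_CM3
    return gwc, bulk_density, vwc


if __name__ == "__main__":
    # Hypothetical masses for two combined 3-cm cores over a 15-cm interval.
    volume = core_volume_cm3(3.0, 15.0, n_cores=2)
    gwc, bd, vwc = water_contents(moist_g=330.0, dry_g=275.0, volume_cm3=volume)
    print(f"GWC={gwc:.3f} g/g, bulk density={bd:.2f} g/cm3, VWC={vwc:.3f} cm3/cm3")
```

Because VWC is the product of GWC and bulk density, it reduces to the mass of water lost on drying divided by the core volume, which gives a quick consistency check on the inputs.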

Wheat biomass samples were dried at 50 °C for 7–14 days, weighed, and threshed with a Vogel Stationary Grain Thresher (Almaco, Nevada, IA, USA). All grain and stubble were finely ground (<0.5 mm) in a Udy mill (Cyclone Lab sample mill, Udy Corporation, Fort Collins, CO, USA), and 0.1-g subsamples analyzed for TN using an automatic combustion analyzer (TruSpec CN, LECO Corporation, St. Joseph, MI, USA).

N Balance method to calculate nitrate leaching

To estimate the amount of nitrate–N that leached from each of the treatment subfield locations, we used a simple inorganic N mass balance (Meisinger and Randall 1991; Ju et al. 2006) of annual inputs, outputs (losses) and soil storage change:

In this conceptualization, an inorganic-N mass balance is approximated by the combination of inputs including N fertilizer, net N mineralization (total mineralization–total immobilization), and N deposition (wet and dry); and inorganic N outputs including N fertilizer volatilization, denitrification, and crop N uptake (grain, stubble and root) from the soil inorganic N pool. Although inorganic N includes both ammonium–N and nitrate–N, nitrate–N concentrations generally exceeded ammonium–N concentrations, and ammonium–N pools in late summer were generally small compared to the overall N balance. Nitrogen runoff was not included based on nearly flat fields with no evidence of runoff.
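The balance described above can be written out term by term. The form below is a reconstruction from the inputs, outputs, and storage change named in the text, with symbol names chosen here for illustration; it is not necessarily the authors' exact notation for Eq. 1.

```latex
\begin{equation}
N_{\mathrm{leached}} =
\left( N_{\mathrm{fert}} + N_{\mathrm{net\,min}} + N_{\mathrm{dep}} \right)
- \left( N_{\mathrm{vol}} + N_{\mathrm{denit}} + N_{\mathrm{uptake}} \right)
- \left( \mathrm{soil\ nitrate}_{\mathrm{final}} - \mathrm{soil\ nitrate}_{\mathrm{initial}} \right)
\end{equation}
```

All terms are in kg N ha−1 over one treatment year. Net mineralization and denitrification enter only through their difference, so a single estimate of that difference is enough to close the balance.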

Fertilizer N amounts were determined through grower management interviews. In both study years, TN deposition was estimated to be 1.6 kg N ha−1 year−1 using data from the EPA’s nearest atmospheric deposition station in Glacier National Park (Elevation 976 m; U. S. Environmental Protection Agency 2014). The TN amount in the root biomass was set equal to 20% of the aboveground N, based on wheat root N:shoot N ratios (Andersson et al. 2005).

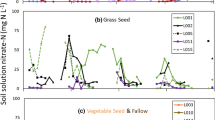

Broadcast urea volatilization amounts were estimated at 14% of applied N based on measured average ammonia volatilization losses from early spring (Mar 24–Apr 20) applications for eight central and north central Montana trials, including three within the JRW (Engel et al. 2011; Engel and Jones 2014). For both years, it was assumed that seed-placed starter and CRU fertilizer did not volatilize, and that 7% of liquid UAN volatilized based on a Manitoba, Canada study conducted in late May (Grant et al. 1996). The change in soil nitrate storage (final soil nitrate minus initial soil nitrate) was defined as the difference in total soil core sample nitrate–N pools between consecutive post-harvest sampling periods. The total soil nitrate–N pool (kg N ha−1) was the sum of nitrate–N at all depth intervals (0–15 cm, 15–30 cm, etc.) that could be collected before encountering rocks (15–105 cm depth), averaged across each subfield.
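The bookkeeping in this section can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the class and function names and every input value are hypothetical, and only the 14% and 7% volatilization fractions, the 20% root-to-shoot N ratio, and the 1.6 kg N ha−1 year−1 deposition come from the text. Net mineralization and denitrification are folded into a single net nitrate production term.

```python
from dataclasses import dataclass

UREA_VOLATILIZATION = 0.14  # fraction of broadcast urea N lost (from the text)
UAN_VOLATILIZATION = 0.07   # fraction of liquid UAN N lost (from the text)
ROOT_TO_SHOOT_N = 0.20      # root N as a fraction of aboveground N (from the text)
DEPOSITION = 1.6            # kg N/ha/yr, wet plus dry (from the text)


@dataclass
class SubfieldYear:
    """Annual N terms for one subfield, all in kg N/ha (hypothetical layout)."""
    broadcast_urea_n: float
    uan_n: float
    seed_placed_n: float           # starter and CRU, assumed not to volatilize
    net_nitrate_production: float  # net mineralization minus denitrification
    aboveground_n_uptake: float    # grain plus stubble
    nitrate_pool_initial: float
    nitrate_pool_final: float


def nitrate_pool(interval_pools):
    """Sum nitrate-N over the sampled depth intervals (0-15 cm, 15-30 cm, ...)."""
    return sum(interval_pools)


def nitrate_leached(s):
    """Leaching as inputs minus outputs minus the change in soil nitrate storage."""
    fertilizer = s.broadcast_urea_n + s.uan_n + s.seed_placed_n
    volatilized = (UREA_VOLATILIZATION * s.broadcast_urea_n
                   + UAN_VOLATILIZATION * s.uan_n)
    crop_uptake = s.aboveground_n_uptake * (1.0 + ROOT_TO_SHOOT_N)
    storage_change = s.nitrate_pool_final - s.nitrate_pool_initial
    inputs = fertilizer + s.net_nitrate_production + DEPOSITION
    outputs = volatilized + crop_uptake
    return inputs - outputs - storage_change


if __name__ == "__main__":
    # Hypothetical subfield, not data from the study.
    example = SubfieldYear(
        broadcast_urea_n=80.0, uan_n=0.0, seed_placed_n=10.0,
        net_nitrate_production=70.0, aboveground_n_uptake=100.0,
        nitrate_pool_initial=nitrate_pool([30.0, 20.0, 9.0]),
        nitrate_pool_final=nitrate_pool([10.0, 6.0, 4.0]),
    )
    print(f"Estimated leaching: {nitrate_leached(example):.1f} kg N/ha")
```

A negative result from such a balance would indicate an unaccounted N source, for example crop uptake of groundwater N.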

N Mineralization and denitrification

Although neither net N mineralization nor denitrification was measured in this study, only the difference between the two was necessary to complete the N balance (see Eq. 1). This difference was predicted for each treatment subfield based on changes in soil nitrate measurements taken during seven fallow “subfield-periods” (e.g., Aug 13–Nov 6 2012 on subfield A4) of ca. 40–200 days during 2012–2013. Because these were fallow periods, fertilizer input and N-uptake were known to be zero, and there were likely negligible amounts of volatilization (Grant et al. 1996) and deposition. Based on water budgets, nitrate leaching out of the depth interval sampled was also assumed to be zero; specifically, evapotranspiration estimated from flux tower measurements on and near Field C (Vick et al. 2016) was greater (within uncertainty) than the precipitation amount minus the change in soil water storage (final–initial) during the seven subfield-periods. Therefore, the final assessment provides a measure of net nitrate production (NNP, kg N ha−1) that includes mainly nitrate derived from net N mineralization and lost to denitrification (Eq. 2):
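A form of Eq. 2 consistent with the fallow-period assumptions above (no fertilizer input, no crop uptake, and negligible volatilization, deposition, and leaching) is sketched below. The symbol names are chosen here for illustration and the notation is a reconstruction, not necessarily the authors' own.

```latex
\begin{equation}
\mathrm{NNP} = \mathrm{soil\ nitrate}_{\mathrm{final}} - \mathrm{soil\ nitrate}_{\mathrm{initial}}
\approx N_{\mathrm{net\,min}} - N_{\mathrm{denit}}
\end{equation}
```
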

The assumption that change in soil nitrate equals net mineralization–denitrification also assumes that nitrification rates are at least as great as net mineralization rates in this system. Using NNP data from only fallow periods does create some uncertainty in estimating NNP rates when a crop is growing; however, VWC is a key factor affected by a crop, and calibration VWCs were as low as 0.16 cm3 cm−3. This value is not much different from the lowest recorded under a crop and suggests the model could adequately predict NNP under both fallow and crop. Soil nitrate–N concentrations were analyzed from soil core sampling that occurred during fallow sampling time periods, at ten locations within five subfields in the 0–15 cm depth and four locations in two subfields in the 0–15 and 15–30 cm depths to calculate 66 distinct NNP observations.

Net nitrate production model

R statistical software (The R Foundation for Statistical Computing, Vienna, Austria, 2013) was used to estimate a linear regression specification of NNP at the subfield level, which characterizes a more practical model for assessing and making insights about farm-level management decisions than NNP at specific 0.6 m2 sampling locations. Existing literature describes a number of variables that influence N mineralization and denitrification rates; those cited most often include soil temperature (Stanford et al. 1973, 1975; Curtin et al. 2012), soil water content (Paul et al. 2003; Heumann et al. 2011) and soil TN (Vigil et al. 2002). Other variables discussed in the literature include crop residue C:N ratio (Booth et al. 2005; Patron et al. 2007), soil organic matter (Booth et al. 2005; Heumann et al. 2014) and previous crop (Soon and Arshad 2002). We modeled NNP as a function of previous crop, soil C:N ratio, depth to gravel contact, soil test K, clay content, pH, EC, soil temperature (T), VWC, and soil TN pool (kg N ha−1). Post-estimation statistics determined that T, VWC, and TN provided the best fit to the NNP data (see Supplementary Material for details).

The selected model was then used to predict NNP in both the 0–15 and 15–30 cm depths for four distinct time periods each year (Aug/early Sept–Nov 14; Nov 15–Apr 30; May 1–June 30; July 1–Aug/early Sept). Net nitrate production in the upper 30 cm for each time period was summed to calculate an annual input for inclusion in the nitrate leaching mass balance equation. Although some mineralization likely occurs at greater depths, soil nitrate profiles from 1.4-m pits excavated after fallow indicated that more than 55% of N mineralization occurred in the upper 30 cm (unpub. data), likely in part due to much lower TN concentrations in lower depths and gravel contacts near 65 cm.
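The summation over periods and depths can be sketched as below. This is a minimal illustration that assumes the model yields one daily NNP rate (kg N ha−1 day−1) per period and depth; the period boundaries, the 1 September start date, and the rate values are hypothetical.

```python
from datetime import date


def period_days(start, end):
    """Number of days in a period, counting both the start and end dates."""
    return (end - start).days + 1


def annual_nnp(daily_rates, periods):
    """Sum rate * days over every period and depth to get kg N/ha per year.

    daily_rates maps period name -> {depth label: kg N/ha/day};
    periods maps period name -> (start date, end date).
    """
    total = 0.0
    for name, (start, end) in periods.items():
        days = period_days(start, end)
        for rate in daily_rates[name].values():
            total += rate * days
    return total


# Hypothetical period boundaries for one treatment year (start date assumed).
EXAMPLE_PERIODS = {
    "fall": (date(2012, 9, 1), date(2012, 11, 14)),
    "winter": (date(2012, 11, 15), date(2013, 4, 30)),
    "early_season": (date(2013, 5, 1), date(2013, 6, 30)),
    "late_season": (date(2013, 7, 1), date(2013, 8, 31)),
}

if __name__ == "__main__":
    # Placeholder rates, not model output from the study.
    rates = {name: {"0-15 cm": 0.13, "15-30 cm": 0.05} for name in EXAMPLE_PERIODS}
    print(f"Annual NNP: {annual_nnp(rates, EXAMPLE_PERIODS):.1f} kg N/ha")
```

In practice each period and depth would carry its own predicted rate, since soil temperature and water content differ between them.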

Nitrate leaching model

The nitrate leaching rate (Eq. 1) was estimated using a hierarchical mixed effects model with the lmer function in the lme4 package (Gelman and Hill 2007; Bates et al. 2015) in R statistical software. This estimation approach was chosen for its ability to account for unobserved or unquantifiable variability across fields within a particular production location (e.g., topographical heterogeneity at a farm) and across farm locations in the sample (e.g., differences in producers’ farming decisions). We specified differences across fields and locations as random effects because we assumed that the size of the effect of unobserved factors could differ from one farm and one field to another. This specification provides for greater flexibility in generalizing the results, especially in light of the relatively modest sample size (Borenstein et al. 2010). Lastly, the hierarchical model design allows nesting each of the ten sample location pairs within each subfield pair, which further improves the ability to control for unobserved variability along the management interface. Because the second N application was not applied to the Split App subfield (A3) in 2014, we treated that subfield as a GSP with a lower N rate, rather than eliminating it, to maintain estimation power.

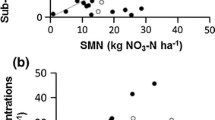

A separate model was constructed for each study year. Twelve of the 60 sampling locations from the 2013 winter wheat at Field A (Fig. 2) were excluded from further analysis based on negative leaching values, suggesting that winter wheat took up groundwater N from those locations. There was no evidence of groundwater N uptake by wheat in 2014 at Field A, and groundwater was too deep at Fields B and C to be an issue. Explanatory variables of interest were treatment, grower, growing season precipitation, N fertilizer amount, TN, SOC, soil pH, depth to gravel contact, coarse fraction, Olsen P, EC, and crop type (only in 2014). We also created a categorical interaction variable that accounts for unobservable differences in grower management and climate (Mgmt&Clim) across farms. After adjusting for available degrees of freedom based on paired location-fields, variables estimated to statistically explain variation in leaching were Mgmt&Clim, depth to gravel contact, clay, Olsen P, coarse fraction, EC, and crop (only in 2014). For a more detailed description of the modeling, see John (2015).

Economic assessment

We recognized that practices that reduce leaching will have a higher likelihood for adoption if they maintain or increase revenue. To assess net revenue for each tested management practice, an enterprise budget analysis was conducted (Supplementary Material; John 2015).

Results and discussion

Precipitation context

Winter wheat seeding for the first year of the study (2013) followed a very dry 2012 water year (Oct 2011–Sept 2012) in the JRW (Table 4). In the 2013 treatment year, Field A received similar growing season (May–July) precipitation (187 mm) to the long-term average (LTA) of 181 mm, whereas Fields B and C received 241 and 343 mm, respectively (Table 4). Much of the growing season precipitation occurred from late May to early June, making for ideal leaching conditions on soils with shallow gravel contacts. Above-average precipitation occurred at the beginning of the 2014 treatment year (namely in September 2013), yet all fields received well below average precipitation during the growing season and Field A received ca. 40% less than the other fields.

Net nitrate production model

The selected NNP model included four explanatory variables (Eq. 3).
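The fitted coefficients of Eq. 3 are not reproduced here. Based on the four explanatory variables discussed in this section (T, T², VWC, and TN), a general form consistent with the text is sketched below. The coefficient symbols are placeholders, and the purely additive structure is an assumption.

```latex
\begin{equation}
\mathrm{NNP} = \beta_0 + \beta_1 T + \beta_2 T^{2} + \beta_3\,\mathrm{VWC} + \beta_4\,\mathrm{TN} + \varepsilon,
\qquad \beta_1 > 0,\ \beta_2 < 0,\ \beta_4 > 0
\end{equation}
```
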

where T is soil temperature in °C, VWC is cm3 water cm−3 soil and TN is total N in upper 15 cm in Mg N ha−1. Both T and TN were positively correlated with NNP (p < 0.05), consistent with other research (Cassman and Munns 1980; Vigil et al. 2002). The negative relationship between T² and NNP possibly reflects increased denitrification rates at higher temperatures (Stanford et al. 1975). Using the study’s average VWC (0.25 cm3 cm−3) and TN (2.8 Mg N ha−1), NNP becomes negative above 23 °C, suggesting denitrification exceeds net mineralization at higher temperature, consistent with other findings of net N loss under hot, dry conditions (Amundson et al. 2003). While VWC was only weakly related (p = 0.12) to NNP, this could simply be a result of a relatively small number of independent VWCs (n = 5) to provide sufficient power to adequately capture the effect.

Although the four independent variables only explained 18% of the variability in the 66 NNP measurements, overall, the NNP model explained most of the variability in NNP when averaged across each subfield-period (R² = 0.81). This result is more relevant, because it was more important and practical to be able to accurately estimate NNP, and hence leaching, for a particular subfield and treatment than at a particular location within a subfield. Moreover, any changes in producers’ management strategies would not be made at the scale of a sampling location (ca. 1 m2), but would be more likely made at a substantially larger scale. The average in-sample predicted NNP among the seven subfield-periods was 0.13 kg N ha−1 day−1 and average absolute difference from measured NNP was 0.03 kg N ha−1 day−1. For the seven subfield-periods, the average high squared forecast error (n = 2) was 0.020 which was 60% higher than the average low squared forecast error (n = 5). When the two worst (i.e., highest squared forecast error) models were used to estimate nitrate leaching, none of the three AMP versus GSP treatment effects changed for either year, providing confidence that any errors in NNP prediction did not affect our final conclusions on treatment effects (discussed below).

N Budget inputs and outputs

Fertilizer was the largest N input, averaging approximately 90 kg N ha−1 in both years (Tables 5, 6). The average predicted NNPs under wheat were ca. 70 and ca. 62 kg N ha−1 in 2013 and 2014, respectively, or about 75% of fertilizer N inputs. A study in Saskatchewan Canada, also in the NGP, found 54–70 kg N ha−1 of net N mineralization occurred from harvest through the following wheat growing season (Campbell et al. 2008), reasonably coinciding with our mean NNP estimates. Winter wheat N uptake was very high in 2013, averaging 136 kg N ha−1, due to nearly ideal growing conditions that resulted in grain yields of 3.4–4.6 Mg ha−1. Winter wheat N uptake in 2014 was nearly as high (110–133 kg N ha−1) as in 2013, yet spring wheat, grown on the fertilizer treatment fields following winter wheat, was drought-stressed resulting in low yields (1.9–2.6 Mg ha−1) and N uptake (ca. 80 kg N ha−1). Soil nitrate–N changes (final–initial) during each wheat year ranged from −17 kg N ha−1 (in pea treatment) to −48 kg N ha−1 (in fallow treatment) in 2013, and were close to 0 for all fertilizer treatments in 2014. Volatilization and deposition estimates were consistently much smaller than the other inputs and outputs. The dominance of plant N uptake and fertilizer N in the N balance, both of which likely had relatively low error, minimizes uncertainty around the N leaching estimate (Meisinger and Randall 1991).

Nitrate leaching results

Among the six nitrate leaching comparisons, only one treatment difference was observed (Table 7); namely, in the 2013 treatment year, less nitrate leached during the winter wheat year post-pea (18 ± 2.5 kg N ha−1) than from winter wheat post-fallow (54 ± 3.6 kg N ha−1). Reduced leaching after pea was likely a result of both lower soil nitrate and lower VWC after pea than after fallow. Specifically, 2012 post-harvest soil nitrate pools were on average 16 and 59 kg N ha−1 for pea and fallow, respectively. Also, soil VWC was close to wilting point at pea harvest (ca. 0.16 cm3 cm−3) and field capacity on adjacent fallow subfields (ca. 0.28 cm3 cm−3); this would have reduced deep percolation and N mineralization rates more post-pea than post-fallow. Although there is a recognized degree of uncertainty in the results given the number of assumptions needed when using a N mass balance approach, these results are broadly consistent with our companion study (Sigler et al. in review), which found that mean lysimeter nitrate concentrations at the gravel contact were significantly lower (p < 0.05) the year after a crop (8.6 ± 7.3 mg L−1) than the year after fallow (25.4 ± 14.0 mg L−1) during the 2-year study, helping confirm that fallow increases leaching potential. Our findings are also consistent with results from a much longer term study in Saskatchewan Canada (Campbell et al. 2006), demonstrating that fallow replacement could have both short- and long-term benefits on water quality.

In contrast to 2013, no leaching difference was observed between wheat post-pea and wheat post-fallow during the 2014 treatment year (Table 7). This may reflect that soil nitrate–N concentrations at the start of the 2014 treatment year only differed by 17 kg N ha−1 between pea and fallow (compared to 43 kg N ha−1 at the start of the 2013 treatment year). This was in turn likely due to precipitation in the first month of the 2014 treatment year (Sept 2013) averaging about two times the long-term average, compared to <6% of average for Sept 2012 (Table 4). Abnormally high soil moisture going into the 2014 treatment year would have initiated mineralization of pea residue and soil organic matter, bringing the amount of nitrate available to leach from pea fields much closer to that from fallow than in the previous year.

In both treatment years, neither fertilizer AMP had an effect (p = 0.05) on nitrate leaching. While CRUs have been found to decrease leaching in other regions (Mikkelsen et al. 1994; Nakamura et al. 2004) and under irrigated conditions (Wilson et al. 2010), the CRU benefit in our study may have been negated due to N application timing differences between treatments. Notably, CRU was applied with the seed about 6 months prior to the GSP spring broadcast urea application in 2013. The placement and timing of the CRU AMP and urea GSP were not the same because fall-applied urea was not a standard practice (due to shallow gravel contact) and CRU can release too slowly to benefit wheat if spring broadcast in the NGP (McKenzie et al. 2007). The split application may not have been effective at reducing leaching in 2013 because on Field C the second application was completed on 23 May, immediately prior to 180 mm of precipitation in the next 12 days, enough to move substantial UAN below the root zone before it could be used by the crop. In the 2014 treatment year, the majority of N had leached by 30 April on Field C (John 2015), which was prior to urea application in both the GSP and AMP treatments, minimizing the potential for the split application to reduce leaching. In the semiarid NGP, effects of CRU and split application practices on leaching rates have not been published to our knowledge. Our results suggest that alternative N fertilizer practices can only be effective at reducing leaching if substantial leaching occurs after a standard single fertilizer N application, yet precipitation rates are not so high that they negate the AMP benefit on soils with shallow gravel contacts. 
Given that the primary precipitation period in most of Montana and some adjacent regions is from late April to early June, fertilizer N is generally applied by mid-April, and 2013 had spring precipitation well above the long-term average, it is possible that the fertilizer AMPs could reduce leaching in other weather years.

Estimated annual rates of nitrate leached during wheat years over the 2-year study of 18 ± 2.5–69 ± 6.1 kg N ha−1 (Tables 5, 6) were substantial considering that the average annual fertilizer input was 87 kg N ha−1, but less startling when both NNP and fertilizer were summed (ca. 150 kg N ha−1). For comparison with our leaching rates, a companion study (Sigler et al. in review) found that the average N flux from soil to groundwater for the cropland-dominated Moccasin Terrace (Fig. 1) from 2012 to 2014 was approximately 10–20 kg N ha−1 year−1. That work quantifies leaching rates with observations in groundwater, so values are integrated over time and space (26,000-ha landform), and are likely closer to an annual average N leaching rate across years and management. Leaching rates were likely elevated in our current study compared to landform leaching rates because of above average May–June precipitation in 2013 (121–225% of LTA) after fallow, and hence high soil nitrate levels, and above average precipitation from Sept 2013–Mar 2014 before spring wheat N uptake started (Table 4). Thus the rates determined here provide a reasonable measure of leaching loss for these wheat fields in these precipitation years, within these cropping systems. Most importantly, they indicate the vulnerability of these soils to low NUE when both fertilizer and mineralization are considered. Although nitrate leaching rates reported here were likely above average, the estimated rates likely were not overly rare, because precipitation amounts during the study were within normal ranges. Although amounts during the study were above average for key periods, from 1983 to 2012, there were six May–June periods wetter than 2013 (WRCC gage, Moccasin).
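
The comparison of leaching rates against N supply in the paragraph above can be made concrete with a short calculation. The sketch below uses only the figures quoted there (18 and 69 kg N ha−1 leached, 87 kg N ha−1 of fertilizer, ca. 150 kg N ha−1 of fertilizer plus NNP); the function and constant names are hypothetical, and the shares it prints are simple ratios rather than values reported by the study.

```python
def leaching_share(leached_kg_ha, n_supply_kg_ha):
    """Return leached N as a fraction of a given N supply (both in kg N per ha)."""
    return leached_kg_ha / n_supply_kg_ha


FERTILIZER_N = 87.0        # average annual fertilizer input quoted in the text
TOTAL_N_SUPPLY = 150.0     # approximate fertilizer + NNP quoted in the text
LEACHED_RANGE = (18.0, 69.0)  # lowest and highest estimated annual leaching

for leached in LEACHED_RANGE:
    share_of_fertilizer = leaching_share(leached, FERTILIZER_N)
    share_of_total = leaching_share(leached, TOTAL_N_SUPPLY)
    print(
        f"{leached:.0f} kg N/ha leached: "
        f"{share_of_fertilizer:.0%} of fertilizer N, "
        f"{share_of_total:.0%} of fertilizer + NNP"
    )
```

Expressed against fertilizer alone, the upper end of the range looks very large; expressed against fertilizer plus mineralized N, the same loss is a smaller share, which is the point the paragraph makes.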

In the 2014 treatment year, depth to gravel contact was inversely related to nitrate leaching (Table 8). The effect of depth to gravel contact on leaching was considerable; notably, just a 60 cm difference in depth to gravel contact was associated with a predicted 17.4 ± 8.7 kg N ha−1 difference in leaching. This finding not only points out the threshold response of gravel depth, it also suggests that there was still substantial leaching (ca. 20 kg N ha−1) after pea on the deepest gravel contacts (ca. 125 cm), indicating that fallow replacement alone will not eliminate leaching. Conversely, on the shallowest gravel contacts (ca. 30 cm), leaching from GSP management could exceed 70 kg N ha−1. This result demonstrates the critical importance of identifying fields and areas within fields that have the shallowest gravel contacts to better inform appropriate management decisions, such as adoption of multiple, integrated AMPs, or planting perennials. Aerial imagery of crop greenness, calibrated with push probing, has accurately predicted locations within Field B that have gravel contact depths <45 cm (Sigler et al. unpub data).
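
To give a feel for the size of the gravel depth effect, the sketch below converts the reported 60 cm and 17.4 kg N ha−1 figures into a per-centimetre slope and applies it to other depth differences. Treating the relationship as linear across the whole depth range is an assumption made here for illustration only; the text notes a threshold response, so the fitted model in Table 8 may behave differently. All names are hypothetical.

```python
REPORTED_DEPTH_DIFF_CM = 60.0   # depth difference quoted in the text
REPORTED_LEACHING_DIFF = 17.4   # associated leaching difference, kg N per ha


def leaching_difference(depth_diff_cm):
    """Scale the reported leaching difference linearly to another depth difference.

    Assumes a constant slope (kg N per ha per cm), which is a simplification
    and not confirmed by the source.
    """
    slope = REPORTED_LEACHING_DIFF / REPORTED_DEPTH_DIFF_CM
    return slope * depth_diff_cm


# Difference between the deepest (ca. 125 cm) and shallowest (ca. 30 cm)
# gravel contacts mentioned in the text.
depth_span_cm = 125.0 - 30.0
print(
    f"Assumed-linear leaching difference over {depth_span_cm:.0f} cm: "
    f"{leaching_difference(depth_span_cm):.1f} kg N/ha"
)
```

The reported uncertainty (± 8.7 kg N ha−1 for 60 cm) is large relative to the effect, so any scaled value of this kind should be read as an order-of-magnitude guide.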

In the 2013 treatment year, depth to gravel contact did not influence nitrate leaching, likely because the bulk of the May–June rain came in a very short period from late May to early June, minimizing the ability of winter wheat roots to take up nitrate before it reached gravel, even in soils with greater depths to gravel. Model error was small (1.7 kg N ha−1) relative to predicted leaching rates (18–69 kg N ha−1), indicating the model was quite accurate for the purpose of assessing treatment effects. Although there is uncertainty with each of the terms in the leaching equation, for the most part, uncertainty should be similar across a management interface (e.g., volatilization should be similar on post-pea and post-fallow subfields since the same N amount was applied). Therefore, we have more confidence in treatment differences than in absolute leaching amounts.

Although we only tested one treatment that was found likely to reduce deep percolation, replacement of fallow with any crop or converting from annual cropping to perennial cropping is expected to decrease deep percolation and nitrate leaching based on previous work. For example, a meta-analysis by Tonitto et al. (2006) found legume and non-legume cover crops both reduced nitrate leaching amounts compared to fallow, by 40 and 70%, respectively. In semiarid regions, perennial grass ecosystems have been found to retain >90% of added N in the long term (15 years) compared to only 30% in annually cropped ecosystems (Mobley et al. 2014).

Our results strongly indicate that fallowing can result in substantial nitrate leaching because mineralization is out of phase with N uptake, and deep percolation rates increase during and immediately after fallow. Our results further indicate that although continuously cropped systems can leach less than fallow-wheat systems, there was still substantial leaching loss from pea-wheat and continuous wheat. This suggests that conversion to perennials, especially in the most vulnerable locations, will likely be necessary to reduce nitrate loss and eventually meet the EPA drinking water standard in JRW groundwater. While somewhat uncertain, current climate models (Walsh et al. 2014) predict warmer, wetter winters and springs, and hotter, drier summers. These conditions are conducive to enhanced mineralization of organic matter, increased leaching in spring, and increased motivation toward fallowing as perceived drought protection. As a consequence, we see potential for further nitrate leaching to groundwater and potential soil degradation if management changes toward reduced fallow, cropping system diversification, and seeding perennials in the most vulnerable locations are not adopted.

Net revenue

The enterprise budget determined that pea-winter wheat net revenue ($243 ± 39 ha−1) was higher than fallow-winter wheat net revenue ($160 ± 33 ha−1) in 2013–2014 (John 2015). Conversely, the fertilizer AMPs had no effect on net revenue in either study year and replacing fallow with pea did not affect net revenue in 2012–2013 (John 2015). The differences in net revenue results between years for the fallow–pea comparison were likely a direct result of precipitation differences between years. Notably, 2012 winter wheat seeding conditions were much worse after pea than fallow due to a very dry September (<2 mm), whereas seeding conditions after pea and fallow were similar in 2013 due to a wetter than average September (ca. 75 mm). Lack of economic benefit from the fertilizer AMPs was possibly caused by the intensity of late May/early June rains in 2013 and drought in 2014 (making water, not N, limiting to yield), as discussed above, combined with additional costs of these AMPs.

Conclusion

This study provides empirical evidence that management practices that decrease both deep percolation and soil nitrate levels, such as fallow replacement, can increase the likelihood of reducing nitrate leaching, whereas fertilizer timing and source management practices may be less effective because they do not substantially decrease deep percolation. Nonetheless, fallow is still common in parts of the NGP, in part because replacing fallow is perceived to reduce economic returns, despite evidence that pea-wheat often produces higher net revenue than fallow-wheat (Zentner et al. 2001; Miller et al. 2015), consistent with our findings. The combined leaching and revenue results demonstrate that fallow replacement with pea can reduce leaching without decreasing profit when the cropping system is considered as a whole. These results are highly relevant for local farmers who list maximization of yields as a top management priority when fertilizing with N, but for whom consideration of leaching has gained increased weight in management decisions during the period of this project (Jackson-Smith et al. 2016).

Our study results also demonstrated that leaching was highly dependent on the depth of gravel contact, indicating that it would be most beneficial to target fields and areas within fields that have the shallowest gravel contacts for management changes. Future nitrate leaching research in agricultural areas with shallow gravel contacts and shallow groundwater should focus on practices that reduce potential for deep percolation (e.g., fallow replacement, perennials) while maintaining or increasing economic returns to increase the adoption potential of these practices.

References

Amundson R, Austin AT, Schuur EAG, Yoo K, Matzek V, Kendall C, Uebersax A, Brenner D, Baisden WT (2003) Global patterns of the isotopic composition of soil and plant nitrogen. Glob Biogeochem Cycles 17:1031. doi:10.1029/2002GB001903

Andersson A, Johansson E, Oscarson P (2005) Nitrogen redistribution from the roots in post-anthesis plants of spring wheat. Plant Soil 269:321–332

Bates D, Maechler M, Bolker BM, Walker S (2015) Fitting linear mixed-effects models using lme4. ArXiv e-print; J Stat Softw. http://arxiv.org/abs/1406.5823. Accessed 8 Sept 2016

Bauder JW, Sinclair KN, Lund RE (1993) Physiographic and land use characteristics associated with nitrate–nitrogen in Montana groundwater. J Environ Qual 22:255–262

Booth MS, Stark JM, Rastetter E (2005) Controls on nitrogen cycling in terrestrial ecosystems: a synthetic analysis of literature data. Ecol Monogr 75(2):139–157

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR (2010) A basic introduction to fixed-effect and random-effects models for meta-analysis. Res Synth Methods 1:97–111

Bundy LG, Meisinger JJ (1994) Nitrogen availability indices. In: Weaver RW (ed) Methods of soil analysis. Part 2, microbiological and biochemical properties. Soil Science Society of America book series, vol 5. Soil Science Society of America, Madison, WI, USA, pp 951–984

Campbell CA, De Jong R, Zentner RP (1984) Effect of cropping, summer fallow and fertilizer nitrogen on nitrate–nitrogen lost by leaching from a Brown Chernozemic loam. Can J Soil Sci 64:61–74

Campbell CA, Selles F, Zentner RP, De Jong R, Lemke R, Hamel C (2006) Nitrate leaching in the semiarid prairie: effect of cropping frequency, crop type, and fertilizer after 37 years. Can J Soil Sci 86:701–710

Campbell CA, Zentner RP, Basnyat P, DeJong R, Lemke R, Desjardins R, Reiter M (2008) Nitrogen mineralization under summer fallow and continuous wheat in the semiarid Canadian Prairie. Can J Soil Sci 88:681–696

Cassman KG, Munns DN (1980) Nitrogen mineralization as affected by soil moisture, temperature, and depth. Soil Sci Soc Am J 44:1233–1237

Combs SM, Nathan MV (2012) Chapter 12: Soil organic matter. In: Nathan MV, Gelderman R (eds) Recommended chemical soil test procedures for the North Central Region. Missouri Agricultural Experiment Station SB 1001, pp 12.1–12.6. http://extension.missouri.edu/explorepdf/specialb/sb1001.pdf

Curtin D, Beare MH, Hernandez-Ramirez G (2012) Temperature and moisture effects on microbial biomass and soil organic matter mineralization. Soil Sci Soc Am J 76:2055–2067

Custer SG (1976) Nitrate in ground water; a search for sources near Rapelje, Montana. Northwest Geol 5:25–33

De Roos AJ, Ward MH, Lynch CF, Cantor KP (2003) Nitrate in public water supplies and the risk of colon and rectum cancers. Epidemiology 14(6):640–649

Dubrovsky NM, Burow KR, Clark GM, Gronberg JM, Hamilton PA, Hitt KJ, Mueller DK, Munn MD, Nolan BT, Puckett LJ, Rupert MG, Short TM, Spahr NE, Sprague LA, Wilber WG (2010) The quality of our Nation’s waters—nutrients in the Nation’s streams and groundwater, 1992–2004: U.S. Geological Survey Circular 1350

Engel R, Jones C (2014) Minimizing ammonia–N loss from no-till cropping systems. WSARE Final Report. http://landresources.montana.edu/soilfertility/ammonvolat.html. Accessed 20 Jan 2015

Engel R, Jones C, Wallander R (2011) Ammonia volatilization from urea and mitigation by NBPT following surface application to cold soils. Soil Sci Soc Am J 75(6):2348–2357

Fewtrell L (2004) Drinking-water nitrate, methemoglobinemia, and global burden of disease: a discussion. Environ Health Perspect 112(14):1371–1374

Frank K, Beegle D, Denning J (2012) Chapter 6: Phosphorus. In: Nathan MV, Gelderman R (eds) Recommended chemical soil test procedures for the North Central Region. Missouri Agricultural Experiment Station SB 1001, pp 6.1–6.9. http://extension.missouri.edu/explorepdf/specialb/sb1001.pdf

Gelman A, Hill J (2007) Chapter 12: Multilevel linear models: the basics. In: Alvarez RM, Beck NL, Wu LL (eds) Data analysis using regression and multilevel/hierarchical models. Cambridge University Press, New York, pp 251–278

Goulding K (2000) Nitrate leaching from arable and horticultural land. Soil Use Manag 16:145–151

Grant CA, Jia S, Brown KR, Bailey LD (1996) Volatile losses of NH3 from surface-applied urea and urea ammonium nitrate with and without the urease inhibitors NBPT or ammonium thiosulphate. Can J Soil Sci 76:417–419

Grant CA, Wu R, Selles F, Harker KN, Clayton GW, Bittman S, Zebarth BJ, Lupwayi NZ (2012) Crop yield and nitrogen concentration with controlled release urea and split applications of nitrogen as compared to non-coated urea applied at seeding. Field Crop Res 127:170–180

Heumann S, Ringe H, Bottcher J (2011) Field-specific simulations of net N mineralization based on digitally available soil and weather data. I. Temperature and soil water dependency of the rate coefficients. Nutr Cycl Agroecosyst 91:219–234

Heumann S, Ratjen A, Kage H, Bottcher J (2014) Estimating net N mineralization under unfertilized winter wheat using simulations with NET N and a balance approach. Nutr Cycl Agroecosyst 99:31–44

Jackson-Smith D, Armstrong A (2012) Judith River Watershed Farmer survey summary report. http://waterquality.montana.edu/judith/images-files/R_SurveySummaryReport2012.pdf

Jackson-Smith D, Ewing S, Jones C, Sigler WA (2016) The road less travelled: assessing the impacts of in-depth farmer and stakeholder participation in nitrate pollution research. Annual meetings of the rural sociological society. Toronto, Canada, 7–10 Aug 2016

Janzen HH, Beauchemin KA, Bruinsma Y, Campbell CA, Desjardins RL, Ellert BH, Smith EG (2003) The fate of nitrogen in agroecosystems: an illustration using Canadian estimates. Nutr Cycl Agroecosyst 67:85–102

John AA (2015) Fallow replacement and alternative fertilizer practices: effects on nitrate leaching, grain yield and protein, and net revenue in a semiarid region. MS Thesis, Montana State University

Ju XT, Kou CL, Zhang FS, Christie P (2006) Nitrogen balance and groundwater nitrate contamination: comparison among three intensive cropping systems on the North China Plain. Environ Pollut 143:117–125

Knobeloch L, Salna B, Hogan A, Postle J, Anderson H (2000) Blue babies and nitrate-contaminated well water. Environ Health Perspect 108(7):675–678

Mascagni HJ Jr, Sabbe WE (1991) Late spring nitrogen applications on wheat on poorly drained soil. J Plant Nutr 14(10):1091–1103

McKenzie RH, Bremer E, Middleton AB, Pfiffner PG, Dowbenko RE (2007) Controlled-release urea for winter wheat in southern Alberta. Can J Soil Sci 87:85–91

Meisinger JJ, Randall GW (1991) Estimating nitrogen budgets for soil-crop systems. In: Follett RF, Keeney DR, Cruse RM (eds) Managing nitrogen for groundwater quality and farm profitability. Soil Science Society of America, Madison, pp 85–124

Miao YF, Wang ZH, Li SY (2014) Relation of nitrate N accumulation in dryland soil with wheat response to N fertilizer. Field Crop Res 170:119–130

Mikkelsen RL, Williams HM, Behel AD Jr (1994) Nitrogen leaching and plant uptake from controlled-release fertilizers. Fertil Res 37:43–50

Miller CR (2013) Groundwater nitrate transport and residence time in a vulnerable aquifer under dryland cereal production. Thesis, Montana State University, Bozeman, MT

Miller PR, Bekkerman A, Jones CA, Burgess MH, Holmes JA, Engel RE (2015) Pea in rotation with wheat reduced uncertainty of economic returns in southwest Montana. Agron J 107:541–550. doi:10.2134/agronj14.018

Mobley ML, McCulley RL, Burke IC, Peterson G, Schimel DS, Cole CV, Elliott ET, Westfall DG (2014) Grazing and no-till cropping impacts on nitrogen retention in dryland agroecosystems. J Environ Qual 43(6):1963–1971. doi:10.2134/jeq2013.12.0530

Mohammed YA, Kelly J, Chim BK, Rutto E, Waldschmidt K, Mullock J, Torres G, Desta KG, Raun W (2013) Nitrogen fertilizer management for improved grain quality and yield in winter wheat in Oklahoma. J Plant Nutr 36:749–761

Nakamura K, Harter T, Hirono Y, Horino H, Mitsuno T (2004) Assessment of root zone nitrogen leaching as affected by irrigation and nutrient management practices. Vadose Zone J 3:1353–1366

Nimick DA, Thamke JN (1998) Extent, magnitude, and sources of nitrate in the Flaxville and underlying aquifers, Fort Peck Indian Reservation, northeastern Montana. U.S. Geological Survey Water-Resources Investigations Report 98-4079. http://pubs.er.usgs.gov/publication/wri984079. Accessed 8 Sept 2016

NRCS (2016) Web soil survey. Soil survey staff, Natural Resources Conservation Service, United States Department of Agriculture. http://websoilsurvey.nrcs.usda.gov/. Accessed 8 Sept 2016

Padbury G, Waltman S, Caprio J, Coen G, McGinn S, Nielsen G, Sinclair R (2002) Agroecosystems and land resources of the northern Great Plains. Agron J 94(2):251–261

Parton W, Silver WL, Burke IC, Grassens L, Harmon ME, Currie WS, King JY, Adair EC, Brandt LA, Hart SC, Fasth B (2007) Global-scale similarities in nitrogen release patterns during long-term decomposition. Science 315:361–364

Paul KI, Polglase PJ, O’Connell AM, Carlyle JC, Smethurst PJ, Khanna PK (2003) Defining the relationship between soil water content and net nitrogen mineralization. Eur J Soil Sci 54:39–47

Power JF, Schepers JS (1989) Nitrate contamination of groundwater in North America. Agr Ecosyst Environ 26:165–187

Puckett LJ, Tesoriero AJ, Dubrovsky NM (2011) N Contamination of surficial aquifers—a growing legacy. Environ Sci Technol 45:839–844

Scanlon BR, Reedy RC, Bronson KF (2008) Impacts of land use change on nitrogen cycling archived in semiarid unsaturated zone nitrate profiles, Southern High Plains, Texas. Environ Sci Technol 42(20):7566–7572

Schmidt C (2009) Permanent monitoring well network nitrate–N summary report. Montana Department of Agriculture. http://agr.mt.gov/Topics/Groundwater. Accessed 9 Feb 2017

Schmidt C, Mulder R (2010) Groundwater and surface water monitoring for pesticides and nitrate in the Judith River Basin, central Montana. Montana Department of Agriculture. http://agr.mt.gov/agr/Programs/NaturalResources/Groundwater/Reports/. Accessed 8 Sept 2016

Shoji S, Delgado J, Mosier A, Miura Y (2001) Use of controlled release fertilizers and nitrification inhibitors to increase nitrogen use efficiency and to conserve air and water quality. Commun Soil Sci Plant Anal 32(7–8):1051–1070

Sigler WA, Ewing SA, Jones CA, Payn RA, Brookshire ENJ, Klassen JK, Weissmann GS (in review) Connections among soil, ground, and surface water chemistries characterize nitrogen loss from an agricultural landscape in the upper Missouri River basin. J Hydrol

Soon YK, Arshad MA (2002) Comparison of the decomposition and N and P mineralization of canola, pea, and wheat residues. Biol Fertil Soils 36:10–17

Stanford G, Frere MH, Schwaninger DH (1973) Temperature coefficient of soil nitrogen mineralization. Soil Sci 115(4):321–323

Stanford G, Dzienia S, Vander Pol RA (1975) Effect of temperature on denitrification rate in soils. Soil Sci Soc Am J 39:867–870

Tonitto C, David MB, Drinkwater LE (2006) Replacing bare fallows with cover crops in fertilizer-intensive cropping systems: a meta-analysis of crop yield and N dynamics. Agric Ecosyst Environ 112:58–72

USDA (2014) National Agricultural Statistics Service Cropland Data Layer (Online). http://nassgeodata.gmu.edu/CropScape/. Accessed 8 Sept 2016

USDA (2016) Montana’s 2016 reported crops. Farm Service Agency. https://www.fsa.usda.gov/state-offices/Montana/resources/index. Accessed 26 Jan 2017

Vick ESK, Stoy PC, Tang ACI, Gerken T (2016) The surface–atmosphere exchange of carbon dioxide, water, and sensible heat across a dryland wheat-fallow rotation. Agric Ecosyst Environ 232:129–140

Vigil MF, Eghball B, Cabrera ML, Jakubowski BR, Davis JG (2002) Accounting for seasonal nitrogen mineralization: an overview. J Soil Water Conserv 57(6):464–469

Walsh J, Wuebbles D, Hayhoe K, Kossin J, Kunkel K, Stephens G, Thorne P, Vose R, Wehner M, Willis J (2014) Ch. 2: Our changing climate. Climate change impacts in the United States. In: Melillo JM, Richmond TC, Yohe GW (eds) The third national climate assessment. U.S. Global Change Research Program, pp 19–67. doi:10.7930/J0KW5CXT

Ward MH, deKok TM, Levallois P, Brender J, Gulis G, Nolan BT, VanDerslice J (2005) Workgroup Report: drinking-water nitrate and health-recent findings and research needs. Environ Health Perspect 113(11):1607–1614

Westfall DG, Havlin JL, Hergert GW, Raun WR (1996) Nitrogen management in dryland cropping systems. J Prod Agric 9(2):192–199

Wilson ML, Rosen CJ, Moncrief JF (2010) Effects of polymer-coated urea on nitrate leaching and nitrogen uptake by potato. J Environ Qual 39:492–499

Zentner RP, Campbell CA, Biederbeck VO, Miller PR, Selles F, Fernandez MR (2001) In search of a sustainable cropping system for the semiarid Canadian prairies. J Sustain Agric 18(2/3):117–136

Acknowledgements

This project was funded by the United States Department of Agriculture National Integrated Water Quality Program (USDA-NIFA Grant #2011-51130-31121), Montana Fertilizer Advisory Committee, Montana Agricultural Experiment Station and MSU Extension. This project would not have been possible without Dr. Douglas Jackson-Smith (formerly Utah State University, now Ohio State University), Producer Research Advisory Committee members, Advisory Committee members, and Terry Rick (Montana State University).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

John, A.A., Jones, C.A., Ewing, S.A. et al. Fallow replacement and alternative nitrogen management for reducing nitrate leaching in a semiarid region. Nutr Cycl Agroecosyst 108, 279–296 (2017). https://doi.org/10.1007/s10705-017-9855-9

DOI: https://doi.org/10.1007/s10705-017-9855-9