Abstract

Additive manufacturing of metals offers the possibility of net-shape production of topologies that are infeasible by conventional subtractive methods, e.g. milling. However, additively manufactured metals possess a high defect density, which necessitates accounting for a larger statistical material variation than in conventional metals. To avoid time-consuming experimental component testing, computational methods are required that account for the topology and the metal and that precisely predict component failure even for metals with statistically varying properties. Predictions require an automatic and modular evaluation of the possibly large set of material properties and their scatter by employing calibration experiments. In addition, a module for failure and deformation prediction as well as a module for the aggregation of experimental data are required. In this contribution, such a modular framework is presented and the encapsulated and non-structured storage of statistically varying material properties is highlighted. In addition, the framework includes multiple FEM solvers and a multitude of optimizers, which are compared, and the choice of the objective function is addressed. The approach is employed for the third Sandia Fracture Challenge, for which two configurations (homogeneous and heterogeneous material properties) are studied and discussed. The blind predictions for the verification geometry are used to identify the benefits and drawbacks of the framework.


1 Introduction

One of the main purposes of structural engineering is the prediction of the material stress state and its deformation even for complex components. By optimizing the geometry with regard to failure, costs and other criteria, the structural engineer improves the component benefit and reduces the need for expensive and time-consuming component testing. However, the benefit of these structural simulations depends on the reliability of the material property identification and the reliability of the stress and deformation predictions.

Additive manufacturing (AM) of metals (also called Additive Layer Manufacturing, Frazier 2014) offers significant advantages and drawbacks compared to traditional metals. AM ideally produces net-shape geometries and grants complete geometric flexibility, which allows shape (i.e. changing the dimensions) and topology (e.g. including or excluding holes) optimization by numerical algorithms (Kharmanda et al. 2004). In addition, AM allows the design, simulation and production to be fully integrated because these steps rely on similar computational frameworks (Jared et al. 2017). Such tool integration also allows for complete adaptation / customization of each product towards the customer's needs. However, component testing is infeasible when using individual product adaptation. Hence, mature simulation tools are required to ensure product safety even for individual products. Moreover, AM metals have a multitude of defects that arise from source materials (Herzog et al. 2016) and from production (voids, Gong et al. 2014; anisotropy, Carroll et al. 2015; heterogeneity, Carlton et al. 2016). The defect heterogeneity requires statistical approaches for the material property calibration and the prediction of the deformation behavior. Calibrating the large set of material properties and their different statistical distributions simultaneously is computationally too expensive. Hence, sequential calibration of the individual properties and sequential calibration of their statistical distribution is required. The third Sandia Fracture Challenge (SFC3) (Kramer et al. 2019) is a case study of the structural engineering task: blind computational predictions for a complex component produced by AM.

The first and second Sandia Fracture Challenges (SFC1 and SFC2) (Boyce et al. 2014, 2016) addressed the reliability of numerical predictions. Initially, material tests (e.g. tension testing) are executed for a limited number of geometries to calibrate the material models. The results of these calibrations identify the material properties and possibly their distribution. The crack paths and failure loads are predicted blindly for the verification geometry and compared to the results of the experiments, which are executed after the numerical predictions. SFC1 investigated a high strength steel and the calibration experiments focused on mode-I failure. Damage mechanics and cohesive zone based simulations predicted the load-displacement curve but failed to predict the crack path (Brinckmann and Quinkert 2014) because the mixed-mode crack propagation was not calibrated. Mode-II failure and the rate dependence were the focus of SFC2 (Boyce et al. 2016), which used a commercial Ti-Al-V alloy.

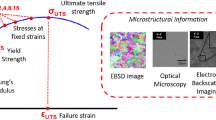

SFC3 (Kramer et al. 2019) uses the same three steps as the previous challenges: calibration experiments guide material model identification, blind numerical predictions of the global and local behavior, and experimental verification using a custom 3D-geometry. This challenge used a 316L stainless steel, which was produced by Direct Metal Laser Sintering (DMLS) (also referred to as Laser Powder Bed Fusion (LPBF) King et al. 2015). The calibration geometries included tensile tests in the print and perpendicular direction to calibrate the potential anisotropy.

Here, a general computational framework for these kinds of structural engineering projects is described and the SFC3 is used as a case study. The framework is modular so that it accommodates all calibration and verification geometries, the statistical distribution of the material properties, a large number of optimizers, as well as different FEM solvers (Hibbitt et al. 2011; Marc 1997). Moreover, all intermediate and final data are stored encapsulated in the open h5-file format. After introducing the framework and reporting on the predictions for the verification geometry, the advantages and disadvantages of the framework are given and possible future developments of the framework are addressed.

2 Framework for material calibration and evaluation

The calibration of the material model and the verification simulations require a number of steps, which are combined into a modular framework. Some modules are constant for all projects and calibration geometries, some are specific to the calibration / verification geometry (geometry specific), and others are specific to the project / challenge (material model specific). The following list describes each module.

- (a):

Calibration main [project specific] This central module contains two parts. The first part is the discrete calibration step: create an input file given the geometry and current estimate of material properties; start the FEM simulation; request the inspection of the FEM output. The second part is the optimization initiation and the start of the calibration. As such, this module calls all other modules, which execute the sub-tasks.

In this project, six material property sets exist, since the material properties are anisotropic in the longitudinal and transverse direction and since the 20%, 50% and 80% percentiles of the material properties are calculated. While the anisotropic data sets depend on each other, the data sets for the property percentiles are calculated independently.

- (b):

Verification main [project specific] This module mirrors the calibration main. The first part is the description of the individual evaluation given a set of material properties. The second part executes the individual predictions for the statistically varying material properties. Additionally, the results of the individual predictions are assembled in the second part of this module.

- (c):

Material property storage [material model specific] The calibration of the material properties and their statistical variation depends on the specific material model, e.g. specific plastic hardening. In addition, the assembly of the material properties into a FEM software specific string is also included in this module.

The h5-format encapsulates the material properties into branches and ensures that inevitable computer crashes do not result in the loss of previously calibrated data. The material data was stored after each calibration step (e.g. plasticity in the transverse direction for the 20% percentile). Moreover, the number of iterations, the minimum of the objective function and the material property units are added as attributes. As such, the material data of each percentile and of each direction creates a separate branch in the h5-format.

- (d):

Experimental data assembly [constant] This module reduces the large number of experimental curves and calculates the 20%, 50% (i.e. median) and 80% percentiles of the load-displacement curves in the longitudinal and transverse direction, separately. To aggregate the load-displacement curves with different displacement increments, the data is interpolated to common displacement vectors and the percentiles are evaluated at each increment. Since the calibration curves contained unloading-reloading segments, the module produces monotonic load-displacement curves by using the reloading segment as initial loading segment and pasting the entire plastic segment onto the reloading segment.

- (e):

Optimization [constant] The optimization routine initiates the optimization algorithm and reports the calibration progress and the individual material properties. The calibration of material properties includes two particulars that differ from conventional optimization problems. First, the choice of the objective function (i.e. absolute or relative error) has a profound influence on the optimization success. Second, the discrete nature of the experimental curves leads to scatter on the objective function. In this study, the Simplex (Nelder and Mead 1965), the BFGS (Broyden 1970; Fletcher 1970; Goldfarb 1970; Shanno 1970) and the Differential Evolution (Storn and Price 1997) algorithms were used. The optimal optimization algorithm is discussed in Sect. 5.

- (f):

Statistics [constant] The statistics module assembles the properties from the individual calibration simulations into an exponential Weibull distribution that is used for material properties that vary depending on the local microstructure. In contrast to the Gauss distribution, the exponential Weibull distribution can be restricted to only allow physical (i.e. non-negative valued random) properties. In addition to the Weibull distribution, the uniform distribution is employed for material properties that are homogeneous in the material.

- (g):

FEM software [constant] The default input and output to the FEM software, the execution of the particular FEM command, the identification of premature failure and the bookkeeping of the software licenses are combined in this module. The module is written for the Abaqus and Marc software, which both use text files for input and status reports. The free format of Marc is used since it is less error-prone than its fixed format.

- (h):

Input and output of individual geometry [geometry specific] Each calibration and verification geometry requires a specific FEM input file that includes the mesh and boundary conditions. For each geometry, a corresponding post-processing file is required, which uses the nodes on specific planes to determine global forces by integration and displacements by averaging. The input and output files might use symmetry boundary conditions to reduce the numerical effort.

- (i):

Assemble individual predictions [constant] After the individual predictions for the varying heterogeneous material properties are executed, the data is aggregated as required by the stakeholders in engineering situations.
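The aggregation step of module (d) can be illustrated with a minimal sketch, assuming NumPy is available. The function name `aggregate_percentiles`, the number of grid points and the three linear test curves are hypothetical and only serve to show the interpolation to a common displacement vector followed by a percentile evaluation at each increment; the handling of unloading-reloading segments is not covered.

```python
import numpy as np

def aggregate_percentiles(curves, n_points=50, percentiles=(20, 50, 80)):
    """Interpolate load-displacement curves to a common displacement vector
    and evaluate the load percentiles at each displacement increment.

    curves: list of (displacement, load) array pairs; the displacement of
            each curve must be increasing (required by np.interp).
    """
    # Common grid restricted to the displacement range covered by all curves
    u_min = max(u[0] for u, _ in curves)
    u_max = min(u[-1] for u, _ in curves)
    u_common = np.linspace(u_min, u_max, n_points)

    # One row per experiment, one column per common displacement
    loads = np.array([np.interp(u_common, u, f) for u, f in curves])
    result = {p: np.percentile(loads, p, axis=0) for p in percentiles}
    return u_common, result

# Hypothetical data: three linear curves sampled with different increments
u1 = np.linspace(0.0, 1.0, 11)
u2 = np.linspace(0.0, 1.2, 25)
u3 = np.linspace(0.0, 1.1, 7)
curves = [(u1, 1.0 * u1), (u2, 2.0 * u2), (u3, 3.0 * u3)]

u_common, pct = aggregate_percentiles(curves)
```

Restricting the common grid to the overlapping displacement range avoids extrapolating shorter experiments; whether the original module truncates or extrapolates is not stated in the text.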

The framework was implemented in the Python language since it is installed by default on servers that run FEM software. In addition, Python has a rich library of optimization algorithms that include gradient-based, gradient-free and evolution-based algorithms. Moreover, the statistical tools are extensive, and text file parsing, string handling and string assembly are built in.

3 Framework application to SFC3

For each property percentile, the properties were calibrated in sequential steps to reduce the number of unknown variables in each step and to increase the convergence.

- C1

Non-linear anisotropic elasticity fitted to the elastic segment of the longitudinal tensile test,

- C2

Non-linear anisotropic elasticity fitted to the elastic segment of the transverse tensile test,

- C3

Plastic flow curve fitted to the entire longitudinal tensile test,

- C4

Hill parameter fitted to the entire transverse tensile test,

- C5

Damage accumulation fitted to the notched longitudinal test.

The project ensured that the individual material properties are monotonic, e.g. the Young’s modulus is monotonically increasing with the percentile. If the material properties were non-monotonic after the individual calibration step, uniform material properties were calibrated during an intermediate step.

Although the FEM module was also implemented for Marc (1997), the Abaqus Standard package (Hibbitt et al. 2011) and its non-linear geometry option were employed. The gauge section was created using Solid Edge (Siemens PLM) by cropping the provided CAD drawings. For the notched fracture geometry, the active gauge section (i.e. section within the optical strain gauges) was simulated. In addition, the symmetry planes were used as crop planes. As such, the fracture geometry had the least number of elements. The processed geometry was converted into a FEM mesh by Abaqus CAE.

AM results in layered metals that potentially have planes of higher pore density and planes of higher material density. The latter planes are similar to close-packed planes in FCC crystals, which have a strong elastic anisotropy. Hence, it is plausible that the AM metal is also elastically anisotropic: stiff in the transverse direction and compliant in the longitudinal direction with its perpendicular build layers. The elastic anisotropy was taken into account by supplying the engineering constants: Young’s moduli \(E_i\), Poisson’s ratios \(\nu _{ij}\) and shear moduli \(G_{ij}\). The Poisson’s ratio was assumed to be constant \(\nu _{ij}=0.28\) for all directions and the shear modulus was evaluated from the calibrated Young’s modulus by \(G=E/(2+2\nu )\). Both transverse directions were assumed to have the same material properties.

Non-linear elasticity was taken into account by a linear variation of the Young’s moduli \(E_i\) and resulting variation of the shear modulus. In the user subroutine, the Mises stress \(\sigma _M\) was supplied as field variable which interpolated the directional Young’s modulus for \(\sigma _M=0\ldots 1\, {\mathrm{GPa}}\). The non-linear elastic anisotropy was calibrated to the initial segment of the load-displacement curve, i.e. reloading curve, and required 88 iteration steps and 4 h on a single processor for the 50% percentile in the longitudinal direction. The calibration runs for the transverse direction and for the 20% and 80% percentiles required similar iteration steps and durations. The elastic data was calibrated in steps C1 and C2, and stored twice in the file by rotating it appropriately for easy reading of the material data from the h5-file.
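As an illustration of the stress-dependent stiffness described above, the following sketch interpolates a directional Young's modulus linearly over \(\sigma _M=0\ldots 1\, {\mathrm{GPa}}\) and derives the shear modulus with \(\nu =0.28\). The function names and the two end-point moduli are hypothetical, and holding the modulus constant outside the interval is an assumption that the text does not confirm.

```python
def youngs_modulus(sigma_mises, e_at_zero, e_at_one_gpa):
    """Linear interpolation of E over sigma_M = 0 ... 1 GPa (all values in GPa)."""
    # Assumption: the modulus is held constant outside the interval
    s = min(max(sigma_mises, 0.0), 1.0)
    return e_at_zero + (e_at_one_gpa - e_at_zero) * s

def shear_modulus(e, nu=0.28):
    """Shear modulus from G = E / (2 + 2 nu)."""
    return e / (2.0 + 2.0 * nu)

# Hypothetical end-point moduli in GPa, not the calibrated values of the study
e_mid = youngs_modulus(0.5, 180.0, 160.0)
g_mid = shear_modulus(e_mid)
```

With the constant Poisson's ratio, the shear modulus follows the same linear variation as the Young's modulus, scaled by \(1/2.56\).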

A non-linear flow curve was employed for plasticity in the longitudinal direction:

where \({{\bar{\varepsilon }}}_p\) is the equivalent plastic strain in the integration point. The yield stress, linear contribution and non-linear contribution are denoted by \(\sigma _{y}\), \(\sigma _{l}\), \( \sigma _{nl}\), respectively. \(k_\varepsilon \) is the proportionality factor for the equivalent plastic strain and n the exponent. While a negative slope in the flow curve was permitted in this project, non-positive flow stresses resulted in an objective function penalty and the abortion of the corresponding simulation.

The calibration of the five material properties \(\sigma _{y}\), \(\sigma _{l}\), \( \sigma _{nl}\), \(k_\varepsilon \), n was time-consuming: a single iteration step required 1.5 h and hundreds of iteration steps were necessary. The optimization was obstructed by the strong interaction of the exponent and the yield stress: a lower exponent significantly reduced the yield stress. A constant exponent of \(n=0.16\) was assumed based on the best non-converged result of the full optimization problem. With the constant exponent, the calibration was restarted and the plastic material calibration to the longitudinal tension experiment required 4 days (57 iteration steps) on a single processor for each percentile (20%, 50%, 80%).

In this project, the Hill potential surface (Hill 1948) accounted for plastic anisotropy by referencing the flow curve in the longitudinal direction. The full set of six Hill parameters is reduced to a single unknown: the ratio of flow curves between the longitudinal and transverse direction. This ratio entered the set of six parameters twice. The other four parameters are one. The 18 iteration steps were executed in 12 h for each percentile.

Fig. 1 Experimental load-displacement curve (red) and calibrated load-displacement curve (blue) for the notched calibration geometry. The experimental curve is defined as a linearly interpolated function, which is evaluated at the same displacements as the numerical curve. Since the damage mechanics is the last step in the calibration, these curves show the summary of all calibration steps

A large number of damage models for continuum mechanics exist (Lemaitre 2012; Kachanov 2013). Here, a model was used that is based on the damage accumulation in cohesive zones (Roe and Siegmund 2003) and that was similarly used in the first Sandia Fracture Challenge (Brinckmann and Quinkert 2014). Damage mechanics in particular exhibits an element size effect (Javani et al. 2009; Junker et al. 2017): smaller elements lead to more localized failure and less damage energy. The same seeding size (0.21 mm) for element creation was used for the calibration and verification geometries to prevent the mesh size from unduly influencing the predictions. Material failure was modeled at integration points by linear damage accumulation from \(D=0\rightarrow 1\) in a user subroutine. The hydrostatic pressure p was used to calculate the damage increment:

where \(p_{min}\) and \(p_\Delta \) are the damage accumulation threshold and the damage accumulation resistance. \(D_{i-1}\) is the damage at the integration point in the previous time increment and dt is the time step, which is normalized by a reference time step \(t_0\). The implementation ensures that the damage increment is non-negative and the damage is bound by zero and one. The calibration for the damage parameters \(p_{min}\) and \(p_\Delta \) did not result in a smooth force reduction during necking contrary to the experiments (see Fig. 1). The calibrated load-displacement curve shows segments of load decrease which are followed by segments of constant or increasing load. The last segments of the simulated load-displacement curves vary considerably from the experimental curves.

Recall that the calibrated material properties are stored in a hierarchical h5-tree since this structure offers more flexibility than a table and encapsulates the calibrated data of the individual percentile and individual direction in case of inevitable software failure. For improved readability, however, the material properties are given here in Table 1.

For the verification geometry, two configurations were studied: homogeneous and heterogeneous material properties. The latter configuration is deemed more realistic as the material properties in the physical samples differ locally (Carlton et al. 2016) and this heterogeneity leads to a spread of load-displacement curves for the verification geometry. In the homogeneous configuration, the given percentile is used to evaluate each material property and this property set defines the behavior of all FEM elements. Hence, this configuration yields directly the 20%, 50% and 80% percentile results for the verification geometry. In the heterogeneous configuration, the FEM elements are randomly divided into groups and a material property set for each group is taken from the material property distribution. Please note that a completely heterogeneous material is simulated, if the number of material property groups is equal to the number of FEM elements and if the element size is representing the grain size. As the simulations in this project are restricted to a single core computer, 10 material property groups and large elements are used. The heterogeneous configuration simulations are executed multiple times and the 20%, 50% and 80% percentile results are determined from the individual results.
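The heterogeneous configuration can be sketched as follows: elements are randomly divided into groups and each group receives a property value drawn from a distribution. The sketch uses the plain two-parameter Weibull distribution from the Python standard library as a stand-in for the exponential Weibull distribution of the framework; the function name, the scale of 500 (e.g. MPa), the shape of 20 and the element count are hypothetical.

```python
import random

def assign_property_groups(n_elements, n_groups, scale, shape, seed=0):
    """Randomly divide elements into groups and draw one property per group."""
    rng = random.Random(seed)  # fixed seed for reproducible predictions
    # One property value per group; weibullvariate(alpha, beta) takes the
    # scale (alpha) first and the shape (beta) second
    group_values = [rng.weibullvariate(scale, shape) for _ in range(n_groups)]
    # Group index for each FEM element
    element_group = [rng.randrange(n_groups) for _ in range(n_elements)]
    return group_values, element_group

# Hypothetical numbers: 10 groups as in the text, 1000 elements
group_values, element_group = assign_property_groups(
    n_elements=1000, n_groups=10, scale=500.0, shape=20.0
)
```

Repeating the call with different seeds would correspond to the multiple executions of the heterogeneous configuration from which the percentile results are determined.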

4 Verification of the results

The verification of the force-displacement curves is shown using the homogeneous material properties in Fig. 2. In the elastic and initial plastic segments of the curve, the three percentiles lead to similar curves with little variation between them. For intermediate plastic strains, the flow curves significantly differ: the 20% percentile is initially the weakest. However, the predicted flow curves invert, with the 20% percentile resulting in the highest flow stress at 0.8 mm deformation. Once damage sets in, the 20% percentile material results in the lowest force, rectifying the order of the percentiles. After 1.2 mm deformation, i.e. at the end of deformation, the difference between the weakest and strongest material configuration is 0.5 kN.

Using the heterogeneous material configuration, 21 simulations were executed, which are shown as gray lines in Fig. 3. From these data points, the 20%, 50% and 80% percentiles are evaluated by specifying a displacement window. Depending on the number of data points in the individual window, certain window positions result in higher or lower apparent flow stresses. For instance, if the window size is small, few data points are present within each window and the obtained percentile curves scatter. On the other hand, a large window results in smoother data and a reduced maximum force.
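The window-based percentile evaluation can be sketched as below, assuming NumPy. The function name `windowed_percentiles`, the window size and the synthetic data (21 linear curves with slightly different stiffness) are hypothetical; the actual windowing rule of the framework is not given in the text.

```python
import numpy as np

def windowed_percentiles(u, f, window, percentiles=(20, 50, 80)):
    """Evaluate force percentiles of scattered (u, f) points per displacement window."""
    u = np.asarray(u, dtype=float)
    f = np.asarray(f, dtype=float)
    # Window index of every data point, counted from the smallest displacement
    idx = np.floor((u - u.min()) / window).astype(int)
    centers, rows = [], []
    for i in np.unique(idx):
        mask = idx == i
        centers.append(u.min() + (i + 0.5) * window)
        rows.append(np.percentile(f[mask], percentiles))
    return np.array(centers), np.array(rows)

# Hypothetical data: 21 curves f = k * u with stiffness k between 0.9 and 1.1
u_grid = np.linspace(0.0, 1.2, 25)
stiffness = np.linspace(0.9, 1.1, 21)
u_all = np.tile(u_grid, len(stiffness))
f_all = np.concatenate([k * u_grid for k in stiffness])

centers, pct = windowed_percentiles(u_all, f_all, window=0.3)
```

Because each window mixes forces from different displacements, a wide window smears a force peak over its neighbors, which is consistent with the reduced maximum force mentioned above.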

There are only minor differences between the individual simulations for the heterogeneous configuration. The percentile curves are more similar to each other than in the homogeneous configuration and than in the experimental results.

Figure 4 shows the strain localization for the homogeneous material distribution of the 50% percentile at the maximum force and at the end of the simulation. Most of the deformation localizes in the shear band that stretches from the central hole along the \(45^\circ \) angle which follows the subsurface channel. On the left side of the sample, minor strain localization occurs above the subsurface hole.

5 Discussion

5.1 Results of the framework application to SFC3

The current study used the longitudinal and transverse fast data of the tensile calibration specimen as well as the fracture calibration data in the longitudinal direction. For each calibration test, all experiments were aggregated into 20%, 50% and 80% percentiles and the gauge sections (excluding the grips) were simulated. For the fracture geometry, only that subset was simulated that was measured by the optical strain gauges. Due to the symmetry in the fracture sample, few elements are present in the effective gauge section. The failure of a single integration point significantly influences the overall stiffness and displacement solution and leads to force drops as evident from the force-displacement curves (see Fig. 1). This behavior could be rectified by using a finer mesh, which would reduce these load drops (Gao et al. 1998).

When inspecting the fitted material properties in Table 1, one observes that the material is elastically anisotropic because the Young’s moduli in the longitudinal and transverse direction differ significantly, with the transverse direction being stiffer than the longitudinal one. The ratio of Young’s moduli is larger than the ratio of the Hill plasticity; an observation that suggests that the material is elastically more anisotropic than plastically.

The predictions using the homogeneous configuration revealed that the weakest material (20% percentile) results in the strongest force-displacement curve at 0.8 mm displacement. This unreasonable behavior is due to the non-unique material property identification. Moreover, the material properties were identified separately: the material properties were identified for each percentile without taking into account the properties of the other percentiles. Afterwards, a manual consistency check was executed: the material properties should be monotonically increasing or decreasing in the order of the percentiles. This consistency check failed, as evident from the non-monotonic behavior of the \(p_{\mathrm{min}}\) damage parameter (see Table 1). A better approach is to automatically verify the calibrated material properties and the consistency of the flow curves.
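An automatic version of the consistency check could look like the following sketch. The function names and all numerical values are hypothetical placeholders, not the entries of Table 1; the check only flags properties that neither increase nor decrease monotonically with the percentile.

```python
def is_monotonic(values):
    """True if the values are non-decreasing or non-increasing."""
    pairs = list(zip(values, values[1:]))
    return all(a <= b for a, b in pairs) or all(a >= b for a, b in pairs)

def check_percentile_order(properties):
    """Return the names of properties that are non-monotonic across percentiles.

    properties: dict mapping a property name to its values ordered by
                percentile (20%, 50%, 80%).
    """
    return [name for name, vals in properties.items() if not is_monotonic(vals)]

# Hypothetical calibrated values ordered as 20%, 50%, 80% percentile
calibrated = {
    "E_longitudinal": [150.0, 160.0, 172.0],
    "sigma_y": [410.0, 430.0, 455.0],
    "p_min": [0.9, 1.3, 1.1],
}
violations = check_percentile_order(calibrated)
```

Running such a check after every calibration step, rather than once at the end, would allow the intermediate recalibration with uniform properties to be triggered without manual inspection.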

A strain distribution measurement by DIC on the longitudinal specimen could be helpful for improving the calibration. Such experiments allow evaluating the local variation of properties and allow capturing the ’true’ material by complementing the global force-displacement calibration data and local calibration data. This project did not use the data-intensive tomography measurements of the void distribution. Instead, the void distribution was included intrinsically in the material property distributions.

Compared to the homogeneous material configuration, the predictions using the heterogeneous distribution of material properties resulted in much smaller differences between the individual simulations. The small differences are due to the averaging of material behavior in the heterogeneous material description: while some material points have weak properties, other material points have strong properties, and together they result in a behavior that is similar to the mean behavior. Since the experiments have a larger difference between the percentiles, an improved method for the heterogeneous material calibration is required. A possible solution is the brute-force material calibration using element groups, a calibration that directly determines the distribution of material properties. Such an approach is computationally more expensive since the material properties and their distributions are calibrated simultaneously. On the other hand, the current approach of material property calibration could be used. In that case, the distribution should be widened by the exponent of the number of material sets. However, a more complete study of the statistical distribution and its effect on the global force-displacement curve would be required (Beremin et al. 1983).

5.2 General discussion of the framework

Three options exist for the evaluation of the objective function: residuals \(F_{\mathrm{sim}}-F_{\mathrm{exp}}\), relative residuals \(\frac{F_{\mathrm{sim}}-F_{\mathrm{exp}}}{F_{\mathrm{exp}}}\) or (\(1-R^2\)), where \(F_{\mathrm{sim}}\) and \(F_{\mathrm{exp}}\) are the global forces determined in the simulations and experiments, respectively, and \(R^2\) is the coefficient of determination. Residuals distribute the weight uniformly across all values; however, a human judgment of the objective value, i.e. the residual, is impossible as the measure depends on the force unit and the range of forces. The relative residuals (or the relative mean square RMS) allow a human to quantify the quality; however, small forces have a large weight, which significantly de-emphasizes the upper forces during elastic loading. Using \(R^2\) allows a human to quantify the calibration quality and does not emphasize values that are close to zero. On the other hand, a given \(R^2=0.99\) suggests a high quality of the calibration although \(R^2=0.99\) cannot differentiate between the 20% and 80% percentiles for the given tensile calibration experiments. This project used residuals for minimization. Future projects might use \(\log (1-R^2)\) for optimization, which allows quantification by humans while still differentiating between the percentiles.
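The three measures can be compared with a short sketch, assuming NumPy. Aggregating the residuals by a sum of squares is an assumption, since the text does not state how the individual residuals are combined; the function name and the force values are hypothetical.

```python
import numpy as np

def objective_values(f_sim, f_exp):
    """Sum of squared residuals, sum of squared relative residuals and 1 - R^2."""
    f_sim = np.asarray(f_sim, dtype=float)
    f_exp = np.asarray(f_exp, dtype=float)
    res = f_sim - f_exp
    sum_sq = np.sum(res**2)                      # depends on the force unit
    rel_sum_sq = np.sum((res / f_exp) ** 2)      # requires non-zero f_exp
    ss_tot = np.sum((f_exp - f_exp.mean()) ** 2)
    one_minus_r2 = sum_sq / ss_tot               # unit independent
    return {"residual": sum_sq, "relative": rel_sum_sq, "1-R2": one_minus_r2}

# Hypothetical forces in kN and the same forces in N
f_exp = np.array([1.0, 2.0, 4.0])
f_sim = np.array([1.0, 2.0, 5.0])
in_kn = objective_values(f_sim, f_exp)
in_n = objective_values(1000.0 * f_sim, 1000.0 * f_exp)
```

Changing the unit from kN to N multiplies the residual measure by \(10^6\), whereas the relative residuals and \(1-R^2\) are unchanged, which illustrates why only the latter two can be judged without knowing the force range. Note that \(\log (1-R^2)\) diverges for a perfect fit, so an implementation would need a lower bound.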

The calibration of material properties, i.e. inverse fitting by FEM simulations, is an optimization problem with, ideally, a single minimum. Since the objective function in the calibration is the difference between experimental and numerical data at discrete displacements and due to the Newton-Raphson iteration of plasticity (Bathe 2002), higher order errors occur that are superposed onto the objective function, as observed in Fig. 5. Moreover, the strong interaction of material properties, e.g. yield stress and plastic hardening, results in an extended minimum. As such, the Rosenbrock function (which has a single extended minimum) with a sinusoidal scatter mimics the typical objective function in FEM based material calibration projects (see Fig. 6). The Global Multiobjective Optimizer (GMO) (Ruciński et al. 2010) is superior for these problems. However, its algorithms use parallel execution, which would require multiple FEM licenses. They were excluded since the project used a single license.

The BFGS method is most efficient for functions without scatter. However, in the present study the BFGS method converged into a scatter site, i.e. a local minimum caused by the scatter, around the global minimum. The simplex algorithm was the most efficient if a large 10% initial simplex was used, which prevented the algorithm from converging into a local minimum around the starting point. The differential evolution was not obstructed by local scatter, but this algorithm spent a large number of function evaluations on off-minimum sites with the current parameter set.
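The test problem described above can be reproduced in a minimal form with SciPy's Nelder-Mead implementation and a 10% initial simplex. The scatter amplitude and frequency, the starting point and the helper names are hypothetical and do not correspond to the benchmark settings of the study.

```python
import numpy as np
from scipy.optimize import minimize, rosen

def noisy_rosen(x, amplitude=0.01, frequency=50.0):
    """Rosenbrock function with superposed sinusoidal scatter (assumed form)."""
    x = np.asarray(x, dtype=float)
    return rosen(x) + amplitude * np.sum(np.sin(frequency * x))

def run_simplex(fun, x0, rel_size=0.10):
    """Nelder-Mead with an initial simplex of relative size rel_size."""
    x0 = np.asarray(x0, dtype=float)
    # Vertices: x0 and one copy per parameter with that parameter enlarged;
    # this construction requires non-zero starting values
    simplex = np.vstack(
        [x0] + [x0 * (1.0 + rel_size * e) for e in np.eye(len(x0))]
    )
    options = {"initial_simplex": simplex, "xatol": 1e-8, "fatol": 1e-8,
               "maxiter": 2000, "maxfev": 4000}
    return minimize(fun, x0, method="Nelder-Mead", options=options)

x0 = [1.3, 0.7]  # hypothetical starting point
smooth = run_simplex(rosen, x0)
noisy = run_simplex(noisy_rosen, x0)
print(smooth.x, smooth.nfev)
print(noisy.x, noisy.nfev)
```

Comparing `nfev` and the distance of `noisy.x` from the smooth optimum for different values of `rel_size` gives a rough impression of how the initial simplex size affects the sensitivity to scatter.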

Fig. 5 Simplified 2-parameter simulation of a tensile sample in which plastic yielding is followed by linear hardening. The objective function (RMS) depends on the yield stress and hardening, which are normalized by the optimal yield stress and hardening, respectively. High gradients are present outside the minimum and minor scatter occurs close to the minimum, as evident from the oscillations of the contours

Fig. 6 Benchmark of multiple Python algorithms using the Rosenbrock function with and without additional sinusoidal scatter. The latter function mirrors typical material property calibration by FEM. The Global Multiobjective Optimizer is denoted with GMO. The simplex algorithm is denoted with “fmin”. The color intensity of the results corresponds to the accuracy: high accuracy optimization results in dark colors. A low number of function calls is desirable

The calibration of the ’true’ material properties is the central challenge of this study. The SFC3 uses global force-displacement measures to identify the local material behavior, which in turn is employed to predict local strain measures in the verification geometry. Uniqueness is required: local and global measures are predicted successfully only if a single set of material properties exists that fulfills the global calibration. It should be noted that elastic problems are unique because they are elliptic boundary value problems (Brebbia et al. 2012). For the plastic material, the uniqueness is not given for different loading conditions (Pelletier et al. 2000; Shim et al. 2008). Consequently, researchers used local measures, i.e. atomic force microscopy (AFM), when using nanoindentation experiments (Zambaldi et al. 2012; Schmaling and Hartmaier 2012). Other researchers have used local texture measures to verify crystal plasticity simulations (Pinna et al. 2015). For large-scale deformation, DIC or the necking shape would provide valuable calibration data (Cortese et al. 2017), which could be used in future implementations of the framework.

Administrative mistakes occurred in this study, and these mistakes highlight the need for improved quality control, especially in the presence of a computational framework that promises to solve the entire chain (experimental data aggregation \(\rightarrow\) calibration of material properties \(\rightarrow\) predictions). When the homogeneous material configuration is used and the strains are extracted along a path in Abaqus, the values are returned consecutively with the given step size. However, when the heterogeneous material description is used, the values are nonconsecutive and multiple path points occur twice, since strains are defined at integration points and extrapolated to the element boundaries. These artifacts require post-analysis ordering and local averaging. In this study, the administrative error was the use of alternating path directions and the subsequent mirroring of the strains to account for the symmetric boundary condition. This procedure resulted in high strains in the hole while no strains occurred in the sample, as shown in Fig. 7. The shortcomings of the framework (high strains inside the hole; non-consecutive flow curves) could be prevented by more visual inspection and graphical user interaction. This finding is a reminder that all final and intermediate results should be visually inspected instead of relying blindly on algorithms.
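
The post-analysis ordering and local averaging mentioned above could look like the following minimal sketch. It assumes that the path data are available as two plain lists (distance along the path and strain); the function name clean_path, the rounding tolerance and the sample values are hypothetical and do not reproduce the Abaqus output format.

```python
from collections import defaultdict


def clean_path(distances, strains, decimals=6):
    """Sort path points by distance and average strains at repeated points.

    Points whose distances agree after rounding to 'decimals' digits are
    treated as the same location (assumed tolerance).
    """
    grouped = defaultdict(list)
    for distance, strain in zip(distances, strains):
        grouped[round(distance, decimals)].append(strain)
    ordered = sorted(grouped)
    averaged = [sum(grouped[d]) / len(grouped[d]) for d in ordered]
    return ordered, averaged


# Nonconsecutive path with two locations that occur twice (made-up values).
distances = [0.0, 1.0, 1.0, 0.5, 2.0, 1.5, 2.0]
strains = [0.10, 0.20, 0.30, 0.15, 0.40, 0.35, 0.50]

path, averaged = clean_path(distances, strains)
for distance, strain in zip(path, averaged):
    print(f"{distance:.1f}  {strain:.3f}")
```

Such a routine only removes the ordering and duplication artifacts. It would not have detected the mirrored path direction, which is why plotting the cleaned result against the geometry remains advisable.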

Administrative misreporting: initially, the dashed blue strain distribution was reported, which suggested finite strains in the hole (represented by a grey rectangle) and undefined strains in the sample. The revised strain distribution mirrors the strains measured in the experiments, which are shown in green for the 20%, 50% and 80% percentiles

6 Conclusions

The following conclusions are based on the introduction of a framework for material property calibration and the verification of the global and local deformation during the third Sandia Fracture Challenge:

A modular approach has been presented that is applicable to experimental material property calibration using multiple geometries and custom anisotropic non-linear material properties. The approach carries the statistical distribution of material properties throughout the framework, and the optimization used the simplex algorithm. Ensuring the uniqueness of the material parameters is important for the material property calibration.

The prediction module of the framework used homogeneous and heterogeneous material configurations. The former directly yields the results for the 20%, 50% and 80% percentiles. The latter has the potential to account for the local variation of material properties in AM metals.

While agreement between blind predictions and experiments was achieved for the global load-displacement curves, the local measures differed significantly. The difference in the local measures is partly caused by the non-uniqueness of the material properties.

A graphical interface for the framework would allow users to verify final and intermediate results and to identify errors.

Notes

The plasticity description and the number of properties differ in the longitudinal and transverse direction.

References

Bathe K-J (2002) Finite-Elemente-Methoden, 2nd edn. Springer, Berlin

Beremin F, Pineau A, Mudry F, Devaux J-C, D’Escatha Y, Ledermann P (1983) A local criterion for cleavage fracture of a nuclear pressure vessel steel. Metall Trans A 14(11):2277–2287

Boyce BL, Kramer SL, Fang HE, Cordova TE, Neilsen MK, Dion K, Kaczmarowski AK, Karasz E, Xue L, Gross AJ et al (2014) The Sandia fracture challenge: blind round robin predictions of ductile tearing. Int J Fract 186(1–2):5–68

Boyce B, Kramer S, Bosiljevac T, Corona E, Moore J, Elkhodary K, Simha C, Williams B, Cerrone A, Nonn A et al (2016) The second Sandia fracture challenge: predictions of ductile failure under quasi-static and moderate-rate dynamic loading. Int J Fract 198(1–2):5–100

Brebbia CA, Telles JCF, Wrobel LC (2012) Boundary element techniques: theory and applications in engineering. Springer, Berlin

Brinckmann S, Quinkert L (2014) Ductile tearing: applicability of a modular approach using cohesive zones and damage mechanics. Int J Fract 186(1–2):141–154

Broyden CG (1970) The convergence of a class of double-rank minimization algorithms: 2. The new algorithm. IMA J Appl Math 6(3):222–231

Carlton HD, Haboub A, Gallegos GF, Parkinson DY, MacDowell AA (2016) Damage evolution and failure mechanisms in additively manufactured stainless steel. Mater Sci Eng A 651:406–414

Carroll BE, Palmer TA, Beese AM (2015) Anisotropic tensile behavior of Ti-6Al-4V components fabricated with directed energy deposition additive manufacturing. Acta Mater 87:309–320

Cortese L, Genovese K, Nalli F, Rossi M (2017) Inverse material characterization from 360-deg DIC measurements on steel samples. In: Yoshida S, Lamberti L, Sciammarella C (eds) Advancement of optical methods in experimental mechanics, vol 3. Conference proceedings of the society for experimental mechanics series. Springer, Cham. https://doi.org/10.1007/978-3-319-41600-7_30

Fletcher R (1970) A new approach to variable metric algorithms. Comput J 13(3):317–322

Frazier WE (2014) Metal additive manufacturing: a review. J Mater Eng Perform 23(6):1917–1928

Gao X, Ruggieri C, Dodds R (1998) Calibration of Weibull stress parameters using fracture toughness data. Int J Fract 92(2):175–200

Goldfarb D (1970) A family of variable-metric methods derived by variational means. Math Comput 24(109):23–26

Gong H, Rafi K, Gu H, Starr T, Stucker B (2014) Analysis of defect generation in Ti-6Al-4V parts made using powder bed fusion additive manufacturing processes. Addit Manuf 1:87–98

Herzog D, Seyda V, Wycisk E, Emmelmann C (2016) Additive manufacturing of metals. Acta Mater 117:371–392

Hibbitt D, Karlsson B, Sorensen P (2011) Abaqus 6.11 Documentation. SIMULIA, Dassault Systemes

Hill R (1948) A theory of the yielding and plastic flow of anisotropic metals. Proc R Soc Lond Ser A Math Phys Sci 193(1033):281–297

Jared BH, Aguilo MA, Beghini LL, Boyce BL, Clark BW, Cook A, Kaehr BJ, Robbins J (2017) Additive manufacturing: toward holistic design. Scr Mater 135:141–147

Javani HR, Peerlings R, Geers M (2009) Three dimensional modelling of non-local ductile damage: element technology. Int J Mater Form 2:923–926

Junker P, Schwarz S, Makowski J, Hackl K (2017) A relaxation-based approach to damage modeling. Contin Mech Thermodyn 29(1):291–310

Kachanov L (2013) Introduction to continuum damage mechanics, vol 10. Springer, Berlin

Kharmanda G, Olhoff N, Mohamed A, Lemaire M (2004) Reliability-based topology optimization. Struct Multidiscip Optim 26(5):295–307

King W, Anderson A, Ferencz R, Hodge N, Kamath C, Khairallah S, Rubenchik A (2015) Laser powder bed fusion additive manufacturing of metals; physics, computational, and materials challenges. Appl Phys Rev 2(4):041304

Kramer SLB et al (2019) The third Sandia fracture challenge: predictions of ductile fracture in additively manufactured metal. Int J Fract. https://doi.org/10.1007/s10704-019-00361-1

Lemaitre J (2012) A course on damage mechanics. Springer, Berlin

M. A. R. Corporation (1997) MARC Users Manual

Nelder JA, Mead R (1965) A simplex method for function minimization. Comput J 7(4):308–313

Pelletier H, Krier J, Cornet A, Mille P (2000) Limits of using bilinear stress-strain curve for finite element modeling of nanoindentation response on bulk materials. Thin Solid Films 379(1–2):147–155

Pinna C, Lan Y, Kiu M, Efthymiadis P, Lopez-Pedrosa M, Farrugia D (2015) Assessment of crystal plasticity finite element simulations of the hot deformation of metals from local strain and orientation measurements. Int J Plast 73:24–38

Roe K, Siegmund T (2003) An irreversible cohesive zone model for interface fatigue crack growth simulation. Eng Fract Mech 70(2):209–232

Ruciński M, Izzo D, Biscani F (2010) On the impact of the migration topology on the island model. Parallel Comput 36(10–11):555–571

Schmaling B, Hartmaier A (2012) Determination of plastic material properties by analysis of residual imprint geometry of indentation. J Mater Res 27:2167–2177

Shanno DF (1970) Conditioning of quasi-Newton methods for function minimization. Math Comput 24(111):647–656

Shim S, Jang J-I, Pharr GM (2008) Extraction of flow properties of single-crystal silicon carbide by nanoindentation and finite-element simulation. Acta Mater 56(15):3824–3832

Storn R, Price K (1997) Differential evolution: a simple and efficient heuristic for global optimization over continuous spaces. J Glob Optim 11(4):341–359

Zambaldi C, Yang Y, Bieler TR, Raabe D (2012) Orientation informed nanoindentation of \(\alpha \)-titanium: indentation pileup in hexagonal metals deforming by prismatic slip. J Mater Res 27(1):356–367

Acknowledgements

Open access funding provided by Max Planck Society.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Brinckmann, S. A framework for material calibration and deformation predictions applied to additive manufacturing of metals. Int J Fract 218, 85–95 (2019). https://doi.org/10.1007/s10704-019-00375-9

DOI: https://doi.org/10.1007/s10704-019-00375-9