Abstract

We model a piece of text of human language telling a story by means of the quantum structure describing a Bose gas in a state close to a Bose–Einstein condensate near absolute zero temperature. For this we introduce energy levels for the words (concepts) used in the story, and we also introduce the new notion of ‘cogniton’ as the quantum of human thought. Words (concepts) are then cognitons in different energy states, as is the case for photons in different energy states, or states of different radiative frequency, when the considered boson gas is that of the quanta of the electromagnetic field. We show that Bose–Einstein statistics delivers a very good model for these pieces of text telling stories, both for short stories and for long stories of the size of novels. We analyze an unexpected connection with Zipf’s law in human language, the Zipf ranking relating to the energy levels of the words, and the Bose–Einstein graph coinciding with the Zipf graph. We investigate the issue of ‘identity and indistinguishability’ from this new perspective and conjecture that the way one can easily understand how two of ‘the same concepts’ are ‘absolutely identical and indistinguishable’ in human language is also the way in which quantum particles are absolutely identical and indistinguishable in physical reality, thereby providing new evidence for our conceptuality interpretation of quantum theory.

1 Introduction

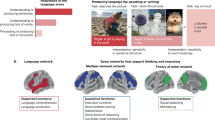

Human language is a substance consisting of combinations of concepts giving rise to meaning. We will show that a good model for this substance is that of a gas of entangled bosonic quantum particles as they appear in physics in a situation close to a Bose–Einstein condensate. In this respect we also introduce the new notion of ‘cogniton’ as the entity that plays the same role within human language as the ‘bosonic quantum particle’ plays for the ‘quantum gas’. There is a gas of bosonic quantum particles that we all know very well, namely the electromagnetic field, which we will also briefly call ‘light’, a substance of photons. We will often use ‘light’ as an example and inspiration for how we talk and reason about human language, where ‘concepts’ (words), as ‘states of the cogniton’, are then like ‘photons of different energies (frequencies, wavelengths)’. With the new findings we present here, we also take an essential new step in the elaboration of our ‘conceptuality interpretation of quantum theory’, where quantum particles are the concepts of a proto-language, in a similar way as human concepts (words) are the quantum particles (cognitons) of human language (Aerts 2009a, 2010a, b, 2013, 2014; Aerts et al. 2018d, 2019c).

There are several new results and insights that we will put forward in the coming sections. We summarize them here, referring also to earlier work on which they are built, while guaranteeing that the article is self-contained, so that it is not necessary to have studied these earlier works to understand its content. We can present a self-contained theory of human language here because most of our earlier results take a simple and transparent form in the model of a boson gas that we elaborate for human language. Since we also introduce the basics of the physics of a boson gas, our presentation will remain self-contained from a physics perspective as well. In the article, we use the terms ‘words’ and ‘concepts’ interchangeably because their difference does not play a role in the aspects of language we study.

We will see that the state of the gas of bosonic quantum particles that we identify with the state of a piece of text, such as a story, is one of very low temperature, i.e. a temperature in the neighborhood of where the fifth state of matter, the Bose–Einstein condensate, appears. This means that the interactions between ‘words’, which are the boson particles of language in our description, are mainly ones of ‘quantum superposition’ and ‘quantum entanglement’, or more precisely of ‘overlapping de Broglie wave functions’. This corresponds well with some of our earlier findings when studying the combinations of concepts in human language, namely that superposition and entanglement are abundant, and that the entanglement is of a deep type, violating not only Bell’s inequality but also the marginal laws (Aerts 2009b; Aerts et al. 2011; Aerts and Sozzo 2011, 2014; Aerts et al. 2012, 2015a, 2016, 2018a, b, c, 2019a, b; Aerts Arguëlles 2018; Beltran and Geriente 2019).

When we present our model in the next sections, we will see that it contains several new explanations of aspects of human language that we brought up in earlier work. For example, we elaborated an axiomatic quantum model for human concepts, which we called SCoP (state context property system), in which different exemplars of a specific concept are considered as different states of this concept (Gabora and Aerts 2002; Aerts and Gabora 2005a, b; Aerts 2009b; Aerts et al. 2013a, b). In the theory of the boson gas for human language that we develop here, we not only introduce these states explicitly, but also introduce them as eigenstates for specific values of the energy, with a detailed energy scale for all the words appearing in a considered piece of text. If we compare this with the quantum description of light, it means that the cognitons of our piece of text will radiate their meaning with different frequencies to the human mind engaging with the meaning of this piece of text.

Let us consider an example of a text, namely the Winnie the Pooh story entitled ‘In Which Piglet Meets a Heffalump’ (Milne 1926), to make this introduction of ‘energy’ in our theory of language more concrete. We define the ‘energy level’ of a word (concept, cogniton) in the story by looking at the number of times this word appears in that story. The most often appearing word, appearing 133 times, is the concept And (when words or concepts are looked upon as states of a cogniton, we denote them in italics and with a capital letter, as we have denoted concepts in our earlier works), and we attribute to it (for reasons that will become clearer later) the lowest energy level \(E_0\). The second most often appearing word, appearing 111 times, is the concept He, and we attribute to it the second lowest energy level \(E_1\), and so on, until we reach words such as Able, which appears only once. In other words, if we think of a story as a ‘gas of bosonic particles’ in ‘thermal equilibrium with its environment’, these ‘numbers of times of appearance in the story’ indicate different energy levels of the particles of the gas, following the ‘energy distribution law governing the gas’, and this is our inspiration for the introduction of ‘energy’ in human language. Remember indeed that each of these words (concepts) is a ‘state of the cogniton’, exactly as different energy levels of photons (different wavelengths of light) are each ‘states of the photon’. Proceeding in this way we arrive at 542 energy levels for the story ‘In Which Piglet Meets a Heffalump’, the values of which are taken to be

\[
E_i = i, \qquad i = 0, 1, 2, \ldots, n \tag{1}
\]

We denote by \(N(E_i)\) the ‘number of appearances’ of the word (concept, cogniton) with energy level \(E_i\), and if we denote by \(n\) the index of the highest energy level, then

\[
N = \sum_{i=0}^{n} N(E_i) \tag{2}
\]

is the total number of words (concepts, cognitons) of the considered piece of text, which is 2655 for the story ‘In Which Piglet Meets a Heffalump’.

For each of the energy levels \(E_i\), \(N(E_i)E_i\) is the amount of energy ‘radiated’ by the story ‘In Which Piglet Meets a Heffalump’ with the ‘frequency or wavelength’ connected to this energy level. For example, the energy level \(E_{54} = 54\) is populated by the concept Thought, and the word Thought appears \(N(E_{54})=10\) times in the story ‘In Which Piglet Meets a Heffalump’. Each of the 10 appearances of Thought radiates with energy value 54, which means that the total radiation of the story with the wavelength connected to Thought equals \(N(E_{54})E_{54} = 10 \cdot 54 = 540\).

The total energy \(E\) radiated by the considered piece of text is therefore

\[
E = \sum_{i=0}^{n} N(E_i)\,E_i \tag{3}
\]

For the story ‘In Which Piglet Meets a Heffalump’ we have \(E = 242{,}891\). Let us now present some of the other findings that we describe in more detail in the following sections.
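The bookkeeping described above (energy levels \(E_i = i\) assigned by decreasing number of appearances, the total number of words \(N\) and the total energy \(E\)) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used to build Table 1: the tokenizer (lowercased runs of letters with at most one internal apostrophe) is a hypothetical choice, words with equal counts are ordered alphabetically as is done for the highest levels in Sect. 2, and the name `energy_spectrum` is ours.

```python
import re
from collections import Counter

# Hypothetical tokenizer: lowercase letters with at most one internal
# apostrophe (straight or typographic), so that "You've" stays one word.
WORD = re.compile(r"[a-z]+(?:['\u2019][a-z]+)?")

def energy_spectrum(text):
    """Assign energy levels E_i = i to the words of `text`.

    The most frequent word gets E_0 = 0, the next one E_1 = 1, and so on;
    words with equal counts are ordered alphabetically.
    Returns (levels, N, E) where levels is a list of (word, E_i, N(E_i)).
    """
    counts = Counter(WORD.findall(text.lower()))
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    levels = [(word, i, n) for i, (word, n) in enumerate(ranked)]
    total_words = sum(n for _, _, n in levels)        # N = sum of N(E_i)
    total_energy = sum(n * e for _, e, n in levels)   # E = sum of N(E_i) * E_i
    return levels, total_words, total_energy

levels, N, E = energy_spectrum("And he said, and the heffalump and he ran.")
```

On this toy sentence, "and" occupies \(E_0\) with three appearances, "he" occupies \(E_1\) with two, and the four single words fill \(E_2\) to \(E_5\) alphabetically, giving \(N = 9\) and \(E = 16\).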

When we applied the Bose–Einstein distribution

\[
N(E_i) = \frac{1}{A\,e^{E_i/B} - 1} \tag{4}
\]

to model the data we collected on the story ‘In Which Piglet Meets a Heffalump’, determining the parameters \(A\) and \(B\) by the two requirements

\[
\sum_{i=0}^{n} N(E_i) = N, \qquad \sum_{i=0}^{n} N(E_i)\,E_i = E \tag{5}
\]

we found an almost complete fit with the data (see Sect. 2, Table 1, Figs. 1a, b and 2). We tested numerous other texts, short stories (see Sect. 3, Table 4, Figs. 3, 4) and long stories of the size of novels (see Sect. 4, Fig. 7b), and in each case a Bose–Einstein statistical energy distribution, determined as explained above, gave an almost complete fit with the data.

We started this investigation with the idea that ‘concepts within human language behave like bosonic entities’, an idea we expressed earlier as one of the basic pieces of evidence for the ‘conceptuality interpretation’ (Aerts 2009a). The origin of the idea is the simple, direct understanding that if one considers, for example, the concept combination Eleven Animals, then, on the level of the ‘conceptual realm’, each of the eleven animals is completely ‘identical with’ and ‘indistinguishable from’ each of the others. It is equally simple and direct to understand that in the case of ‘eleven physical animals’ there will always be differences between the eleven animals, because as ‘objects’ present in the physical world they have an individuality, and as individuals with spatially localized physical bodies none of them will be truly identical with the others, which means that each of them can always be distinguished from the others. Even if all the animals are horses, simply because they are ‘objects’ and not ‘concepts’, they will not be completely identical and hence they will be distinguishable. The idea is that it is ‘this not being completely identical and hence being distinguishable’ that makes Maxwell–Boltzmann statistics applicable to them. However, when we consider ‘eleven animals’ as concepts, such that their ontological nature is conceptual, they are all ‘completely identical and hence intrinsically indistinguishable’. Within the conceptuality interpretation of quantum theory, where we put forward the hypothesis that quantum entities are ‘conceptual’ and hence are not ‘objects’, their ‘being completely identical and hence intrinsically indistinguishable’ would also be due to their being conceptual rather than objectual entities.

In earlier work we already investigated this idea by looking at simple combinations of concepts with numerals, such as indeed Eleven Animals, and then considering two states of Animal, namely Cat and Dog. We then checked whether the twelve different exemplars of Eleven Animals that can be formed with these two states, namely Eleven Dogs, One Cat And Ten Dogs, Two Cats And Nine Dogs, ..., Ten Cats And One Dog, Eleven Cats, follow a Maxwell–Boltzmann or rather a Bose–Einstein statistical pattern in their appearance in texts. It was shown, first less convincingly because only limited data were collected (Aerts 2009a; Aerts et al. 2015b), and later very convincingly with an abundance of data (Beltran 2019), that the Bose–Einstein statistics indeed delivers a better model for the data than the Maxwell–Boltzmann statistics.
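The difference between the two statistical patterns can be made concrete for Eleven Animals with the two states Cat and Dog. The Python sketch below is a simplified illustration, assuming that each animal is a priori equally likely to be in either state (an assumption for illustration only; the cited studies compare against frequencies found in texts). Counting the \(2^{11}\) labelled assignments gives the Maxwell–Boltzmann (binomial) weights, while counting only the number of cats gives twelve equally weighted Bose–Einstein configurations. The function name is hypothetical.

```python
from math import comb

def configuration_probabilities(n_animals=11):
    """Probabilities of k cats and n - k dogs, k = 0..n, under two counting rules.

    Illustrative assumption: each animal is a priori equally likely to be a
    cat or a dog.
    """
    # Maxwell–Boltzmann: the animals are distinguishable individuals, so all
    # 2**n labelled assignments are equally likely and "k cats" arises
    # comb(n, k) times.
    mb = [comb(n_animals, k) / 2 ** n_animals for k in range(n_animals + 1)]
    # Bose–Einstein: only the number of cats matters, so each of the n + 1
    # configurations (Eleven Dogs, One Cat And Ten Dogs, ..., Eleven Cats)
    # counts once.
    be = [1 / (n_animals + 1)] * (n_animals + 1)
    return mb, be

mb, be = configuration_probabilities()
print("k cats   Maxwell–Boltzmann   Bose–Einstein")
for k, (p_mb, p_be) in enumerate(zip(mb, be)):
    print(f"{k:6d}   {p_mb:17.4f}   {p_be:13.4f}")
```

Under Maxwell–Boltzmann counting the mixed configurations dominate (five or six cats each have probability 462/2048, about 0.23), whereas Bose–Einstein counting gives every configuration probability 1/12, so that Eleven Dogs and Eleven Cats become far more probable than in the binomial case.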

The result that we put forward in the present article, namely that the Bose–Einstein statistics as explained above models entire texts of any size, is a much stronger one, although it expresses the same idea. Consider any text, and consider two instances of the word Cat appearing in it: if one of the concepts Cat is exchanged with the other concept Cat, absolutely nothing changes in the text. Hence, a text contains a perfect symmetry for the exchange of cognitons (concepts, words) in the same state. This is not true for physical reality and its physical objects. Suppose one considers a physical landscape containing two cats; exchanging the two cats will always change the landscape, because as physical objects the cats are not identical and are distinguishable. If we introduce a quantum description of the text, the wave function must be invariant under the exchange of the two concepts Cat, which would not be the case if the wave function described the physical landscape containing two cats as objects. This is the result we present in Sect. 2.

Section 3 is devoted to a self-contained presentation of the phenomenon of Bose–Einstein condensation in physics. We illustrate the aspects of Bose–Einstein condensation that are relevant for our discussion by means of two examples of Bose gases, the rubidium 87 atom gas and the sodium atom gas, which were also the first ones used to realize a Bose–Einstein condensate (Anderson et al. 1995; Davis et al. 1995). We compare the Bose–Einstein condensates of these gases, and the way their energy level distribution is modeled by the Bose–Einstein distribution function, with our Bose–Einstein modeling of pieces of text of stories, and point out where they correspond.

Another finding that we put forward, in Sect. 4, was completely unexpected. The method of attributing an energy level to a word depending on the number of appearances of the word in a text introduces the typical ranking considered in the well-known Zipf’s law analysis of this text (Zipf 1935, 1949). When we look at the \(\log /\log\) graph of ranking versus number of appearances, we indeed see the linear function, or a slight deviation from it, which represents the most common version of Zipf’s law. Zipf’s law is an empirical law for which no generally accepted theoretical foundation has yet been given; hence our finding of its unexpected connection with Bose–Einstein statistics might provide such a foundation. We also show, in Sect. 4, how the connection with Zipf’s law allows us to develop in more depth the Bose–Einstein model of texts of different sizes, short stories and long stories of the size of novels.

In Sect. 5, we reflect on the issue of ‘identity and indistinguishability’ from the perspective developed in the foregoing sections, taking into account the conundrum this issue still is in quantum theory with respect to quantum particles (Dieks and Lubberdink 2019). In contrast to the theoretical view, where bosons and fermions are considered to be identical and indistinguishable even if they are in different states, we note that experimentalists take another stance in this respect, considering, for example, photons of different frequencies as distinguishable. A recent experiment shows that if this experimentally accepted possibility to distinguish them is erased by means of a quantum eraser, these photons of different frequencies behave as indistinguishable (Zhao et al. 2014). This leads us to propose that ‘the way in which we clearly see and understand the identity and indistinguishability of concepts (words, cognitons) in human language’ is also ‘the way in which identity and indistinguishability for quantum particles can be understood’. More specifically, this view implies that ‘identity and indistinguishability’ are contextual notions for a quantum particle, depending on the way a measuring apparatus or a heat bath interacts with the quantum particle, similarly to how ‘identity and indistinguishability’ are contextual notions for a human concept, depending on how a mind interacts with the concept. We elaborate this new way of interpreting ‘identity and indistinguishability’ with examples and show how it is a strong confirmation of our conceptuality interpretation of quantum theory.

2 Human Language as a Bose Gas

Let us consider again the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’ as published in Milne (1926). In Table 1, we present the words that appear in the story (column ‘Words concepts cognitions’) with their ‘numbers of appearances’ (column ‘Appearance numbers \(N(E_i)\)’), ordered from lowest to highest energy level (column ‘Energy levels \(E_i\)’), where the energy levels are attributed according to these numbers of appearances, lower energy levels corresponding to higher numbers of appearances, and their values are given as proposed in (1).

The word And is the most often appearing word, appearing 133 times; hence the cognitons in this state populate the ground state energy level \(E_0\), which as per (1) we put equal to zero. The word He is the second most often appearing word, appearing 111 times; hence the cognitons in this state populate the first energy level \(E_1\), which following (1) we put equal to 1. The ‘words’, their ‘energy levels’ and their ‘numbers of appearances’ are thus in the first three columns of Table 1.

One may ask ‘what is the unit of energy in the model that we put forward?’: is the number ‘1’ that we choose for energy level \(E_1\) a quantity expressed in joules, in electronvolts, or in yet another unit? This question gives us the opportunity to reveal already one of the very new aspects of our approach. Energy will not be expressed in ‘\({\mathrm{kg\,m}}^2/{\mathrm{s}}^2\)’ as it is in physics. Why not? Because a human language is not situated somewhere in space, as we believe to be the case for a physical boson gas of atoms or a photon gas of light. Hence, ‘energy’ is a basic quantity in our approach, and if we manage to introduce the ‘human language equivalent’ of ‘physical space’, which is one of our aims in further work, the relation will be reversed: this ‘equivalent of space’ will be expressed in units where ‘energy appears as a fundamental unit’. Hence, the ‘1’ indicating that ‘He radiates with energy 1’, or ‘the cogniton in state He carries energy 1’, stands for a basic measure of energy, just as ‘distance (length)’ is a basic measure in ‘the physics of space and objects inside space’, not to be expressed as a combination of other physical quantities. We used the expressions ‘He radiates with energy 1’ and ‘the cogniton in state He carries energy 1’, and we will keep using this way of speaking about ‘human language within the view of a boson gas of entangled cognitons that we develop here’, in analogy with how we speak in physics about light and photons.

The words The, It, A and To are the next four most often appearing words of the Winnie the Pooh story, and hence the energy levels \(E_2\), \(E_3\), \(E_4\) and \(E_5\) are populated by cognitons in the states The, It, A and To, respectively, carrying 2, 3, 4 and 5 basic energy units. Hence, the first three columns in Table 1 describe the experimental data that we extracted from the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’. As we said, the story contains in total 2655 words, which give rise to 542 energy levels, where energy levels are connected with words; hence different words radiate with different energies, and the size of the energies is determined by ‘the number of appearances of the words in the story’, the most often appearing words being the states of lowest energy of the cogniton and the least often appearing words being the states of highest energy. In Table 1, we have not presented all 542 energy levels, because that would lead to too long a table, but we have presented the part of the energy spectrum that is most important for the further aspects we will point out.

More concretely, we have represented the range from energy level \(E_0\), the ground state of the cogniton, which is the cogniton in state And, to energy level \(E_{78}\), which is the cogniton in state Put. Then we have represented the energy levels from \(E_{538}\), which is the cogniton in state Whishing, to the highest energy level \(E_{542}\) of the Winnie the Pooh story, which is the cogniton in state You’ve.

These last five highest energy levels, from \(E_{538}\) to \(E_{542}\), corresponding respectively to the cogniton in states Whishing, Word, Worse, Year and You’ve, all appear only once in the story. They do however radiate with different energies, but the story does not give us enough information to determine whether Whishing radiates with lower energy than Year or vice versa. Since this does not play a role in our present analysis, we have ordered them alphabetically. So, different words that appear an equal number of times in this specific Winnie the Pooh story, and hence radiate with different but undetermined energies, are classified from lower to higher energy level alphabetically.

In the column ‘Energies from data \(E(E_i)\)’, we represent \(E(E_i)\), the ‘amount of energy radiated by the Winnie the Pooh story by the cognitons of a specific word, hence of a specific energy level \(E_i\)’. As we mentioned already in the previous section, the formula for this amount is given by

\[
E(E_i) = N(E_i)\,E_i \tag{6}
\]

that is, the number \(N(E_i)\) of cognitons in the state of the word with energy level \(E_i\) multiplied by the amount of energy \(E_i\) radiated by such a cogniton in that state. In the last row of Table 1, we give the Totalities, namely in the column ‘Appearance numbers \(N(E_i)\)’ of this last row the total number of words

\[
N = \sum_{i=0}^{n} N(E_i) = 2655 \tag{7}
\]

and in the column ‘Energies from data \(E(E_i)\)’ of the last row the total amount of energy

\[
E = \sum_{i=0}^{n} E(E_i) = \sum_{i=0}^{n} N(E_i)\,E_i = 242{,}891 \tag{8}
\]

radiated by the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’. Hence, columns ‘Words concepts cognitions’, ‘Energy levels \(E_i\)’, ‘Appearance numbers \(N(E_i)\)’ and ‘Energies from data \(E(E_i)\)’ contain all the experimental data of the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’.

In columns ‘Bose–Einstein modeling’ and ‘Maxwell–Boltzmann modeling’ of Table 1, we give the values of the populations of the different energy states for, respectively, a Bose–Einstein and a Maxwell–Boltzmann model of the data of the considered story. Let us explain what these two models are. As we recalled in the introduction, the Bose–Einstein distribution function is given by

\[
N(E_i) = \frac{1}{A\,e^{E_i/B} - 1} \tag{9}
\]

where \(N(E_i)\) is the number of bosons obeying the Bose–Einstein statistics in energy level \(E_i\), and \(A\) and \(B\) are two constants that are determined by expressing that the total number of bosons equals the total number of words, and that the total energy radiated equals the total energy of the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’, hence by the two conditions

\[
\sum_{i=0}^{n} N(E_i) = N = 2655 \tag{10}
\]

\[
\sum_{i=0}^{n} N(E_i)\,E_i = E = 242{,}891 \tag{11}
\]
We remark that the Bose–Einstein distribution function is derived in quantum statistical mechanics for a gas of bosonic quantum particles where the notions of ‘identity and indistinguishability’ play the specific role attributed to them in quantum theory (Huang 1987). We come back to this in Sect. 5, where we analyze, given our conceptuality interpretation of quantum theory, how our understanding of ‘identity and indistinguishability’ in human language can help explain these notions for a physical Bose gas.

Fig. 1 In a, we represent the ‘number of appearances’ of words in the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’ (Milne 1926), ranked from lowest energy level, corresponding to the most often appearing word, to highest energy level, corresponding to the least often appearing word, as listed in Table 1. The blue graph (Series 1) represents the data, i.e. the collected numbers of appearances from the story (column ‘Appearance numbers \(N(E_i)\)’ of Table 1), the red graph (Series 2) is a Bose–Einstein distribution model for these numbers of appearances (column ‘Bose–Einstein modeling’ of Table 1), and the green graph (Series 3) is a Maxwell–Boltzmann distribution model (column ‘Maxwell–Boltzmann modeling’ of Table 1). In b, we represent the \(\log / \log\) graphs of the ‘numbers of appearances’ and of their Bose–Einstein and Maxwell–Boltzmann models. The red and blue graphs coincide almost completely in both a and b, while the green graph does not coincide at all with the blue graph of the data. This shows that the Bose–Einstein distribution is a good model for the numbers of appearances, while the Maxwell–Boltzmann distribution is not.

Since we want to show the validity of the Bose–Einstein statistics for concepts in human language, we compared our Bose–Einstein distribution model with a Maxwell–Boltzmann distribution model, hence we also introduce the Maxwell–Boltzmann distribution explicitly. It is the distribution described by the following function

\[
N(E_i) = \frac{1}{C\,e^{E_i/D}} \tag{12}
\]

where \(N(E_i)\) is the number of classical identical particles obeying the Maxwell–Boltzmann statistics in energy level \(E_i\), and \(C\) and \(D\) are two constants that will be determined, as in the case of the Bose–Einstein statistics, by the two conditions

\[
\sum_{i=0}^{n} N(E_i) = N = 2655 \tag{13}
\]

\[
\sum_{i=0}^{n} N(E_i)\,E_i = E = 242{,}891 \tag{14}
\]
The Maxwell–Boltzmann distribution function is derived for ‘classical identical and distinguishable’ particles, and it can also be shown in quantum statistical mechanics to be a good approximation if the de Broglie waves of the quantum particles do not overlap (Huang 1987). In the last two columns ‘Energies Bose–Einstein’ and ‘Energies Maxwell–Boltzmann’ of Table 1, we show the ‘energies’ related to the Bose–Einstein modeling and to the Maxwell–Boltzmann modeling, respectively.
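The relation between the two distribution functions can be seen directly, assuming they take the forms \(N(E_i) = 1/(A e^{E_i/B} - 1)\) and \(N(E_i) = 1/(C e^{E_i/D})\) used for the fits in this section. Factoring the denominator of the Bose–Einstein form gives

\[
\frac{1}{A e^{E_i/B} - 1}
  = \frac{1}{A e^{E_i/B}} \cdot \frac{1}{1 - \left(A e^{E_i/B}\right)^{-1}}
  \approx \frac{1}{A e^{E_i/B}}
  \qquad \text{when } A e^{E_i/B} \gg 1 ,
\]

so in this regime the Bose–Einstein populations reduce to the Maxwell–Boltzmann form with \(C = A\) and \(D = B\). The condition \(A e^{E_i/B} \gg 1\) for every level means that all occupation numbers are much smaller than one, which in the physical case corresponds to non-overlapping de Broglie waves. The data of Table 1, with 133 words in the ground state alone, are far from this regime.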

We have now introduced everything that is necessary to state the principal result of our investigation.

Fig. 2 A representation of the ‘energy distribution’ of the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’ (Milne 1926) as listed in Table 1. The blue graph (Series 1) represents the energy radiated by the story per energy level (column ‘Energies from data \(E(E_i)\)’ of Table 1), the red graph (Series 2) represents the energy radiated by the Bose–Einstein model of the story per energy level (column ‘Energies Bose–Einstein’ of Table 1), and the green graph (Series 3) represents the energy radiated by the Maxwell–Boltzmann model of the story per energy level (column ‘Energies Maxwell–Boltzmann’ of Table 1).

When we determine the two constants \(A\) and \(B\), respectively \(C\) and \(D\), in the Bose–Einstein distribution function (9) and the Maxwell–Boltzmann distribution function (12), by setting the total number of particles of the model equal to the total number of words of the considered piece of text, (10) and (13), and the total energy of the model equal to the total energy of the considered piece of text, (11) and (14), we find a remarkably good fit of the Bose–Einstein modeling function with the data of the piece of text, and a big deviation of the Maxwell–Boltzmann modeling function from these data.

The result is expressed in the graphs of Fig. 1a, where the blue graph represents the data, hence the numbers in column ‘Appearance numbers \(N(E_i)\)’ of Table 1, the red graph represents the quantities obtained by the Bose–Einstein model, hence the quantities in column ‘Bose–Einstein modeling’ of Table 1, and the green graph represents the quantities obtained by the Maxwell–Boltzmann model, hence the quantities in column ‘Maxwell–Boltzmann modeling’ of Table 1. We can easily see in Fig. 1a how the blue and red graphs almost coincide, while the green graph deviates strongly from the two other graphs, which shows that Bose–Einstein statistics is a very good model for the data we collected from the Winnie the Pooh story, while Maxwell–Boltzmann statistics completely fails to model these data.

To construct the two models, we also considered the energies, and expressed as a second constraint the conditions (11) and (14), namely that the total energy of the Bose–Einstein model and the total energy of the Maxwell–Boltzmann model are both equal to the total energy of the data of the Winnie the Pooh story. The result of both constraints, (10), (13) and (11), (14), on the energy functions that express the amount of energy per energy level (or, in the language customarily used for light, the frequency spectrum) can be seen in Fig. 2. We see again that the red graph, which represents the Bose–Einstein radiation spectrum, is a much better model for the blue graph, which represents the experimental radiation spectrum, than the green graph, which represents the Maxwell–Boltzmann radiation spectrum.
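Solving the conditions (10), (11) for \(A\) and \(B\), and (13), (14) for \(C\) and \(D\), requires a numerical root finder. The following Python sketch shows one way to do this with the standard library only; it is an illustration under stated assumptions, not the program used for Table 1. It assumes the forms \(N(E_i) = 1/(A e^{E_i/B} - 1)\) and \(N(E_i) = e^{-E_i/D}/C\), and that at fixed \(N\) the total energy grows monotonically with \(B\) (respectively \(D\)), so that nested bisection suffices. All function names are hypothetical.

```python
import math

def log_bisect(f, target, lo, hi, increasing, iters=80):
    """Solve f(x) = target on [lo, hi] by bisection on log x (f monotone)."""
    for _ in range(iters):
        mid = math.sqrt(lo * hi)
        if (f(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)

def be_populations(d, b, energies):
    """N(E) = 1 / (A e^{E/B} - 1) with A = 1 + d, in an overflow-safe form."""
    # 1 / ((1 + d) e^x - 1) = e^{-x} / (d - expm1(-x)) with x = E / B.
    return [math.exp(-e / b) / (d - math.expm1(-e / b)) for e in energies]

def fit_bose_einstein(energies, n_total, e_total):
    """Return (A, B) such that sum N(E_i) = n_total and sum N(E_i) E_i = e_total."""
    def d_for(b):
        # The total count decreases as d = A - 1 grows, so bisect on d at fixed b.
        return log_bisect(lambda d: sum(be_populations(d, b, energies)),
                          n_total, 1e-15, 1e15, increasing=False)

    def energy_for(b):
        pops = be_populations(d_for(b), b, energies)
        return sum(e * n for e, n in zip(energies, pops))

    b = log_bisect(energy_for, e_total, 1e-3, 1e6, increasing=True)
    return 1.0 + d_for(b), b

def fit_maxwell_boltzmann(energies, n_total, e_total):
    """Return (C, D) for N(E) = e^{-E/D} / C satisfying the same two constraints."""
    def energy_for(dd):
        weights = [math.exp(-e / dd) for e in energies]
        return n_total * sum(e * w for e, w in zip(energies, weights)) / sum(weights)

    dd = log_bisect(energy_for, e_total, 1e-3, 1e6, increasing=True)
    c = sum(math.exp(-e / dd) for e in energies) / n_total  # fixes the total count
    return c, dd
```

Called with `energies = range(n + 1)` and the totals \(N = 2655\) and \(E = 242{,}891\) of the story, these functions would return candidate values of \(A\), \(B\), \(C\) and \(D\); we do not reproduce any numbers here. Bisecting on a logarithmic scale keeps the search well behaved over the many orders of magnitude that \(A - 1\) can span.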

Both solutions, the Bose–Einstein shown in the red graph, and the Maxwell–Boltzmann shown in the green graph, have been found by making use of a computer program calculating the values of A, B, C and D such that (10), (11), (13) and (14) are satisfied, which gives the approximate values

In the graphs of Fig. 2, we can see that a maximum is reached for the energy level \(E_{71}\), corresponding to the word First, which appears seven times in the Winnie the Pooh story. If we use the analogy with light, we can say that the radiation spectrum of the story ‘In Which Piglet Meets a Heffalump’ has a maximum at First, which would hence be, again in analogy with light, the dominant color of the story. We have indicated this radiation peak in Table 1, where we can see that the amount of energy the story radiates at this level, following the Bose–Einstein model, is 522.79.
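Locating such a radiation peak amounts to maximizing \(E_i/(A e^{E_i/B} - 1)\) over the energy levels. A small Python sketch, assuming that Bose–Einstein form and \(E_i = i\); the parameter values in the usage line are arbitrary and are not the fitted values for the story, and the function name is hypothetical.

```python
import math

def radiation_peak(a, b, energies):
    """Return (E_i, N(E_i) * E_i) at the maximum of the Bose–Einstein energy spectrum.

    N(E) = 1 / (A e^{E/B} - 1) is evaluated as e^{-E/B} / (A - e^{-E/B}),
    which avoids overflow for large E/B (this requires A > 1).
    """
    spectrum = [(e, e * math.exp(-e / b) / (a - math.exp(-e / b))) for e in energies]
    return max(spectrum, key=lambda level: level[1])

# Arbitrary illustrative parameters A = 2, B = 10 over 200 levels.
peak_level, peak_energy = radiation_peak(2.0, 10.0, range(200))
```

With these illustrative parameters the peak falls at level 8, where the radiated energy is \(8/(2e^{0.8} - 1) \approx 2.32\).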

Due to their shape, the graphs in Fig. 1a are not easily comparable. Although the blue and red graphs are quite obviously almost overlapping while the blue and green graphs are very different, which shows that the data are well modeled by Bose–Einstein statistics and not by Maxwell–Boltzmann statistics, it is interesting to consider a transformation where we apply the \(\log\) function both to the x-values, i.e. the domain values, and to the y-values, i.e. the image values, of the functions underlying the graphs. This is a well-known technique to make functions that give rise to this type of graph more easily comparable.

In Fig. 1b, we show the graphs obtained by taking the \(\log\) of the x-coordinates and of the y-coordinates of the graph representing the data, which is again the blue graph, of the graph representing the Bose–Einstein distribution model of these data, which is the red graph, and of the graph representing the Maxwell–Boltzmann distribution model of the data, which is the green graph. Readers acquainted with Zipf’s law as it appears in human language will recognize Zipf’s graph in the blue graph of Fig. 1b. It is indeed the \(\log /\log\) graph of ‘ranking’ versus ‘numbers of appearances’ of the text of the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’, which is the ‘definition’ of Zipf’s graph. As is to be expected, we see Zipf’s law being satisfied: the blue graph is well approximated by a straight line with negative gradient close to \(-1\). We see that the Bose–Einstein graph still models this Zipf graph very well, and what is more, it also models the (small) deviation of Zipf’s graph from a straight line. Zipf’s law, with the corresponding straight line when a \(\log /\log\) graph is drawn, is an empirical law. Intrigued by the modeling of the Zipf graph by the Bose–Einstein statistics, we have analyzed this correspondence in detail in Sect. 4.
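The gradient of the Zipf line can be estimated by an ordinary least-squares fit of \(\log N(E_i)\) against log rank. In this Python sketch ranks start at 1 (rank \(r = i + 1\)) because the logarithm of \(E_0 = 0\) is undefined; this shift and the unweighted least-squares fit are our assumptions, not necessarily the procedure behind Fig. 1b. The base of the logarithm does not affect the slope, and the function name is hypothetical.

```python
import math

def zipf_slope(counts):
    """Least-squares slope of log N against log rank, for counts sorted descending.

    Ranks are taken as r = 1, 2, ..., len(counts), i.e. energy level E_i has rank i + 1.
    """
    xs = [math.log(r) for r in range(1, len(counts) + 1)]
    ys = [math.log(n) for n in counts]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var
```

For an exact Zipf sequence \(N \propto 1/r\) the function returns \(-1\); applied to the column ‘Appearance numbers \(N(E_i)\)’ it would quantify how close the blue graph of Fig. 1b is to that ideal.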

In the next section, however, we describe what a Bose gas is in physics when it is brought close to its Bose–Einstein condensate state, with the aim of identifying the physical equivalent of the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’ and of the other pieces of text that we will also consider.

3 The Bose–Einstein Condensate in Physics

In this section we explain different aspects related to the experimental realization of a Bose gas close to being a Bose–Einstein condensate, where most of the bosons are in the lowest energy state. The awareness of the existence of this special state of a Bose gas came about as a consequence of a peculiar exchange between the Indian physicist Satyendra Nath Bose and Albert Einstein (Bose 1924; Einstein 1924, 1925). Bose devised a new way to derive Planck’s radiation law for light, which has the form of a Bose–Einstein statistics and hence, as we now know, is a consequence of the indistinguishability of the photon as a boson (something that was not known in those pre-quantum theory times), and sent the draft of his calculation to Einstein. Although what Bose did was far from fully understood at the time, the new method of calculation must have caught Einstein’s full attention right away, because he translated the article from English into German and supported its publication in one of the most important scientific journals of that time (Bose 1924). Einstein himself then, inspired by Bose’s method, worked out a new model and calculation for an atomic gas consisting of bosons, and predicted the existence of what we now call a Bose–Einstein condensate, an amazing accomplishment, taking into account that the difference between bosons and fermions and the Pauli exclusion principle were not yet known (Einstein 1924, 1925). Because of the intense study of Bose–Einstein condensates that took off after their first experimental realizations (Anderson et al. 1995; Bradley et al. 1995; Davis et al. 1995), a lot of new knowledge, both experimental and theoretical, has been obtained, material on which we build for some of the details of the present article (Ketterle and van Druten 1996; Parkins and Walls 1998; Dalfovo et al. 1999; Ketterle et al. 1999; Görlitz et al. 2001; Henn et al. 2008).

The basic idea is still the one foreseen by Einstein: take a dilute gas of bosons and lower its temperature step by step, and with it its total energy, until there is so little energy in the gas that all the bosons are forced into the lowest energy state. At that moment all bosons are in the same state, namely this lowest energy state, and the gas behaves in a way that has no classical equivalent. Given our conceptuality interpretation of quantum theory and the boson gas model we build here for human language, we will be able to put forward a new way of viewing the indistinguishability that lies at the heart of a Bose–Einstein condensate (see Sect. 5).

The Bose–Einstein condensates realized so far consist mainly of massive particles, generally atoms whose total spin is an integer, which makes them bosons. The situation for the bosons of light, i.e. photons, is more complicated, because photons interact so abundantly with matter that their number is never constant, which makes it difficult, albeit not impossible, to realize a thermal equilibrium in this case (Klaers et al. 2010a, b, 2011; Klaers and Weitz 2013). We do want to keep using our analogy between language and light. The pieces of text that we study of course contain a fixed number of words, but a dynamic use of human language also gives rise to a continuous coming into existence of new words, so for such a dynamic situation the example of light is probably even more representative than gases with a fixed number of atoms. At this stage of our analysis, however, we focus on massive bosons, i.e. atoms with integer spin, also because their Bose–Einstein condensates are easier to realize.

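The underlying idea is that the gas consists of atoms that, to a good approximation, do not interact with each other and hence carry only the kinetic energy \(K = p^2 / 2m\) of their random thermal motion at temperature T. Following the usual convention for the thermal de Broglie wavelength, one associates with such a free particle the kinetic energy scale \(K = \pi kT\), where k is Boltzmann’s constant (this is of the same order as, but not equal to, the mean kinetic energy \({3 \over 2}kT\) of a free particle in three dimensions), hence we have

\[ {p^2 \over 2m} = \pi kT \qquad (16) \]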

where m is the mass of the atoms and p the absolute value of their momentum. From (16) and de Broglie’s formula \(\lambda = h / p\) we can calculate the ‘thermal de Broglie wavelength’ \(\lambda _{th}\) of the atoms of the gas

\[ \lambda _{th} = {h \over \sqrt{2 \pi m k T}} \qquad (17) \]

Let us make things more concrete and calculate this thermal de Broglie wavelength for the atoms used in the Bose–Einstein condensates realized by Eric Cornell and Carl Wieman at the University of Colorado at Boulder in their NIST-JILA lab (Anderson et al. 1995), and by the group led by Wolfgang Ketterle at MIT, for which they were jointly awarded the Nobel Prize in Physics in 2001. At Boulder they used a vapor of rubidium 87 atoms at a number density of \(2.5 \times 10^{12}\) atoms per cubic centimeter, cooled down to a temperature of 170 nanokelvin, and saw a condensate fraction appear containing an estimated 2000 atoms, which was preserved for more than 15 seconds. At MIT, they used a dilute gas of sodium atoms at a number density higher than \(10^{14}\) atoms per cubic centimeter to form a condensate containing up to 500,000 atoms at a temperature of 2 microkelvin, with a lifetime of 2 seconds.

Let us calculate \(\lambda _{th}\) for both these condensates. Besides the values of Planck’s and Boltzmann’s constants and of \(\pi\), we only need the masses of a rubidium 87 atom and of a sodium atom. Their atomic masses are, respectively, 86.909180527 and 22.989769 unified atomic mass units, and since one unified atomic mass unit is \(1.66053904 \times 10^{-27}\,{\mathrm{kg}}\) we get

\[ m_{Rb} \approx 1.4432 \times 10^{-25}\,{\mathrm{kg}}, \qquad m_{Na} \approx 3.8175 \times 10^{-26}\,{\mathrm{kg}} \]

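Substituting these values into (17), we obtain for the rubidium gas at 170 nanokelvin and the sodium gas at 2 microkelvin

\[ \lambda _{th}^{Rb} \approx 454\,{\mathrm{nm}}, \qquad \lambda _{th}^{Na} \approx 257\,{\mathrm{nm}} \]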
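The arithmetic behind (17) for the two experiments can be checked with a few lines of Python. This is a minimal sketch, assuming the exact SI values of h and k and the atomic mass unit quoted above; the helper name `thermal_de_broglie` is ours and purely illustrative.

```python
import math

H = 6.62607015e-34     # Planck constant, J s
K_B = 1.380649e-23     # Boltzmann constant, J/K
AMU = 1.66053904e-27   # unified atomic mass unit, kg (value used in the text)


def thermal_de_broglie(mass_kg: float, temperature_k: float) -> float:
    """lambda_th = h / sqrt(2 pi m k T), Eq. (17), in metres."""
    return H / math.sqrt(2 * math.pi * mass_kg * K_B * temperature_k)


m_rb87 = 86.909180527 * AMU   # rubidium 87 atom
m_na = 22.989769 * AMU        # sodium atom

lambda_rb = thermal_de_broglie(m_rb87, 170e-9)   # Boulder: 170 nK
lambda_na = thermal_de_broglie(m_na, 2e-6)       # MIT: 2 microkelvin

print(f"Rb-87: m = {m_rb87:.4e} kg, lambda_th = {lambda_rb * 1e9:.0f} nm")
print(f"Na:    m = {m_na:.4e} kg, lambda_th = {lambda_na * 1e9:.0f} nm")
```

It gives masses of about 1.443e-25 kg and 3.818e-26 kg and wavelengths of roughly 454 nm and 257 nm.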

Often one can read that in states of the Bose gas that are ‘nearing the Bose–Einstein condensate’, the ‘de Broglie waves’ of the particles start to ‘overlap’, and that this is why quantum effects become dominant. A quantitative measure of this ‘overlap of de Broglie waves’ is the ‘phase space density’ \(\rho _{ps}\) of the boson gas

\[ \rho _{ps} = n \lambda _{th}^3 \qquad (25) \]

where n is the ‘atom density’ of the gas, expressed in number of atoms per cubic centimeter. It follows from (25) that \(\rho _{ps}\) is the number of atoms in a cube whose side is the thermal de Broglie wavelength. If this number is much smaller than 1, the de Broglie wavelength is much smaller than the distance between the atoms, so there is no overlap and the gas behaves classically. The more this number exceeds 1, the more the de Broglie waves of the atoms overlap, and the more quantum behavior increases. It has been shown (Bagnato et al. 1987) that, independently of the trapping device used for the atoms, whether a box or a magnetic trap (the latter being the one used in the Bose–Einstein condensates actually realized), the condensate starts to form when \(\rho _{ps}\) satisfies

\[ \rho _{ps} \geq 2.612 \qquad (26) \]

Considering (17) and (25), the value of \(\rho _{ps}\) during the formation of a Bose–Einstein condensate is determined by the temperature T and the number density n of the atomic gas. In the last stage of the formation, the temperature is lowered by a technique called ‘evaporative cooling under the influence of a radio frequency field’. This also decreases the number density, so to reach the quantum regime of overlapping de Broglie waves the temperature must be lowered faster than the gas is diluted. The MIT group explicitly mentions the number density reached when the Bose–Einstein condensate is formed, namely between \(10^{14}\) and \(4 \times 10^{14}\) atoms per cubic centimeter (Davis et al. 1995). For the Boulder group, who identified the formation of their rubidium Bose–Einstein condensate at a temperature of \(170\, {{\mathrm{nK}}}\), we can use (26) to calculate that the number density of the rubidium gas must have been around \(2.8 \times 10^{13}\) atoms per cubic centimeter.
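The Boulder estimate can be reproduced by solving \(n \lambda_{th}^3 = 2.612\) for n. A minimal sketch, assuming the same constants as above; the function names are illustrative, not taken from any library.

```python
import math

H = 6.62607015e-34     # Planck constant, J s
K_B = 1.380649e-23     # Boltzmann constant, J/K
AMU = 1.66053904e-27   # unified atomic mass unit, kg
RHO_CRIT = 2.612       # threshold value of rho_ps in (26)


def lambda_th_cm(mass_kg: float, temperature_k: float) -> float:
    """Thermal de Broglie wavelength (17), converted to centimetres."""
    return 100 * H / math.sqrt(2 * math.pi * mass_kg * K_B * temperature_k)


def phase_space_density(n_per_cm3: float, mass_kg: float, temperature_k: float) -> float:
    """rho_ps = n * lambda_th**3, Eq. (25), with n in atoms per cubic centimetre."""
    return n_per_cm3 * lambda_th_cm(mass_kg, temperature_k) ** 3


def critical_density(mass_kg: float, temperature_k: float) -> float:
    """Number density at which rho_ps reaches the threshold (26) at temperature T."""
    return RHO_CRIT / lambda_th_cm(mass_kg, temperature_k) ** 3


m_rb87 = 86.909180527 * AMU
n_boulder = critical_density(m_rb87, 170e-9)
print(f"Rb-87 at 170 nK: n = {n_boulder:.2e} atoms/cm^3")
```

For the Boulder parameters this gives about 2.79 × 10^13 atoms per cubic centimeter, the value quoted above; at room temperature the same density would give a phase space density many orders of magnitude below 1.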

We give in Table 2 an overview of the energies and lengths that characterize the realization of the sodium condensate at MIT (Ketterle et al. 1999). Because the gas is very dilute and the temperature is very low, the size of the atoms is very small compared to the distance between them, while the thermal de Broglie wavelengths are large, so that they overlap. With each length scale l there is an associated energy scale, namely the kinetic energy \(K = \pi kT\) of a particle with de Broglie wavelength l, that is

\[ E = \pi k T = {h^2 \over 2 m l^2} \qquad (27) \]

gives a good indication of the relation between sizes and energies.

A good measure of the size of the atoms in a dilute boson gas like this one is the so-called elastic s-wave scattering length \(a = l/2\pi\). For sodium it has been measured to be about 3 nanometers, which by (27) corresponds to an energy of about 1 millikelvin in temperature units (Marte et al. 2002). Below this temperature, elastic s-wave scattering between the atoms is dominant.

The separation between the atoms in the gas can be estimated from the cube root \(n^{1 \over 3}\) of the number density, which gives the number of atoms per centimeter along a line; its inverse \(n^{-{1 \over 3}}\) is the typical spacing. For sodium, with a number density higher than \(10^{14}\) atoms per cubic centimeter, this gives a spacing between the atoms of around 200 nanometers. The length l can be estimated by making use of (26), which gives

\[ l \approx \left( {2.612 \over n} \right)^{1 \over 3} \approx 300\,{\mathrm{nm}} \qquad (28) \]

and hence, by making use of (27) we find that E is around \(2\, \upmu {\mathrm{K}}\).

A temperature of around \(1\, \upmu {\mathrm{K}}\) gives rise to a thermal de Broglie wavelength of around \(300\, {\mathrm{nm}}\).

The largest length scale is related to the confinement characterized by the size of the box potential or by the oscillator length \(a_{HO} = {1 \over 2\pi }\sqrt{h / m \nu }\), which is the typical size of the ground state wave function in a harmonic oscillator potential of frequency \(\nu\) (see “Appendix 2”). With \(\nu = 10\, {\mathrm{Hz}}\), we get a value for \(a_{HO}\) of about \(6.5\, \upmu {{\mathrm{m}}}\). The energy scale related to the confinement is characterized by the harmonic oscillator energy level spacing, given by \(h \nu\). Again, for \(\nu = 10\, {\mathrm{Hz}}\) we get an energy value for the spacing of about \(0.5\, {{\mathrm{nK}}}\).
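The length and energy scales for sodium discussed in the last few paragraphs can be recomputed approximately. This is a sketch under stated assumptions: we read (27) as \(\pi kT = h^2/(2ml^2)\), take l from \(\rho_{ps} = 2.612\) at \(n = 10^{14}\) atoms per cubic centimeter, and use \(\nu = 10\,{\mathrm{Hz}}\); all function names are illustrative.

```python
import math

H = 6.62607015e-34                   # Planck constant, J s
K_B = 1.380649e-23                   # Boltzmann constant, J/K
M_NA = 22.989769 * 1.66053904e-27    # sodium mass, kg


def temperature_of_length(l_m: float, mass_kg: float = M_NA) -> float:
    """Temperature T with pi k T = h**2 / (2 m l**2), our reading of (27)."""
    return H ** 2 / (2 * math.pi * mass_kg * K_B * l_m ** 2)


def oscillator_length(nu_hz: float, mass_kg: float = M_NA) -> float:
    """a_HO = (1 / 2 pi) sqrt(h / (m nu)), ground-state size in the trap."""
    return math.sqrt(H / (mass_kg * nu_hz)) / (2 * math.pi)


n = 1e14 * 1e6                       # 10^14 atoms per cm^3, expressed per m^3
spacing = n ** (-1 / 3)              # typical interatomic distance n^(-1/3)
l_c = (2.612 / n) ** (1 / 3)         # length at which rho_ps = 2.612, from (26)
t_c = temperature_of_length(l_c)     # associated temperature scale
a_ho = oscillator_length(10.0)       # trap frequency nu = 10 Hz
t_trap = H * 10.0 / K_B              # level spacing h nu, in kelvin

print(f"spacing {spacing * 1e9:.0f} nm, l {l_c * 1e9:.0f} nm, T {t_c * 1e6:.1f} uK")
print(f"a_HO {a_ho * 1e6:.1f} um, h nu / k {t_trap * 1e9:.2f} nK")
```

It gives a spacing of about 215 nm, l of about 297 nm with a temperature scale of about 1.5 μK, \(a_{HO}\) of about 6.6 μm and \(h\nu / k\) of about 0.48 nK, in line with the rounded values quoted in the text.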

In Table 3 we give the length and energy scales for the rubidium 87 Bose–Einstein condensate, taking into account that a density of around \(2.8 \times 10^{13}\) atoms per cubic centimeter was reached within the condensate of 2000 atoms.

We now want to show that the Winnie the Pooh story ‘In Which Piglet Meets a Heffalump’, as described by our Bose–Einstein distribution model, behaves like a Bose gas close to its Bose–Einstein condensate, taking the rubidium and sodium gases described above as inspiration. What is important to notice is the difference in order of magnitude between the energy level spacings of the harmonic trap, of the order of \(1\, {\mathrm{nK}}\), and the energies of the gas itself, of the order of \(1\, \upmu \mathrm{K}\). The Winnie the Pooh story is not in a Bose–Einstein condensate state, because then all the words of the story would be the word And, populating the zero energy level. It is, rather, in a state close to a Bose–Einstein condensate.

We have not yet explained what the parameters A and B of (9) are for a physical boson gas, for which the Bose–Einstein distribution is often written as

\[ N(E_i) = {g_i \over e^{(E_i - \upmu)/kT} - 1} \qquad (29) \]

where \(\upmu\) is called the ‘chemical potential’ and \(g_i\) the ‘multiplicity’. The multiplicity \(g_i\) of an energy level \(E_i\) is the number of different states that share this same energy \(E_i\). That different states can have the same energy is connected to the symmetries of the configuration, often spatial ones. For example, for the simplest model of the harmonic trap, the quantum harmonic oscillator, the multiplicity of the n-th energy level in s dimensions equals

\[ g_n = {(n + s - 1)! \over n!\,(s - 1)!} \qquad (30) \]

which becomes \((n+1)(n+2) / 2\) in 3 dimensions, \((n+1)\) in 2 dimensions, and 1 in one dimension. The different dimensions are relevant for the Bose–Einstein condensates realized in laboratories because, although the boson gas always exists in 3 dimensions, the harmonic traps often give rise to very elongated, cigar-like configurations, for which a description in terms of an effective one-dimensional harmonic oscillator is a better model. For the text of the Winnie the Pooh story, however, there is no doubt about its dimension: a text pronounced while reading it is certainly one-dimensional. A written text, too, although materialized on a two-dimensional page, is a one-dimensional structure. This means that in the formula for the Bose–Einstein distribution we have rightly taken \(g_i = 1\) for every energy level \(E_i\).
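The multiplicity of the n-th level of an isotropic s-dimensional harmonic oscillator counts the ways of distributing n quanta over s independent directions, which is a binomial coefficient. A small sketch checking the special cases mentioned above; the function name is ours.

```python
from math import comb


def multiplicity(n: int, s: int) -> int:
    """Degeneracy of level n of an isotropic s-dimensional harmonic oscillator:
    the number of ways to write n as an ordered sum of s non-negative integers."""
    return comb(n + s - 1, s - 1)


# Special cases quoted in the text
for n in range(5):
    assert multiplicity(n, 3) == (n + 1) * (n + 2) // 2
    assert multiplicity(n, 2) == n + 1
    assert multiplicity(n, 1) == 1

print([multiplicity(n, 3) for n in range(5)])  # [1, 3, 6, 10, 15]
```

In one dimension every level is non-degenerate, which is the case \(g_i = 1\) used for the texts.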

What about the chemical potential \(\upmu\)? A related quantity is the ‘fugacity’

\[ f = e^{\upmu / kT} \qquad (31) \]

If we look at (29), taking into account that \(g_i = 1\) and \(E_0 = 0\), we get

\[ N_0 = N(E_0) = {1 \over e^{-\upmu / kT} - 1} = {f \over 1 - f} \qquad (32) \]

which means that the chemical potential and the fugacity are determined by the number \(N_0\) of particles in the lowest energy state, i.e. the number of particles in the condensate state. Solving for f and \(\upmu\), for the Winnie the Pooh story we find

\[ f = {N_0 \over N_0 + 1} \approx 0.9923, \qquad \upmu = kT \ln {N_0 \over N_0 + 1} \approx -4.581 \qquad (33) \]

Note that it follows from (33) that the fugacity lies between 1/2 and 1 as soon as at least one particle is in the condensate state, and that the chemical potential is negative; as the number of particles in the lowest energy level grows, they approach 1 and 0, respectively. As for the second constant B, we have

\[ B = kT \qquad (34) \]

which means that the second constant B is given by the temperature of the Bose gas.
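The relations between \(N_0\), the fugacity and the chemical potential can be checked numerically. A minimal sketch, assuming \(f = e^{\upmu/kT}\) and \(N_0 = f/(1-f)\) as above, with kT in units of the energy level spacing; the function names are illustrative.

```python
import math


def fugacity(n0: float) -> float:
    """f = N0 / (N0 + 1), obtained by inverting N0 = f / (1 - f)."""
    return n0 / (n0 + 1)


def chemical_potential(kT: float, f: float) -> float:
    """mu = kT ln f, from f = exp(mu / kT); negative whenever 0 < f < 1."""
    return kT * math.log(f)


# f lies in [1/2, 1) once N0 >= 1, and approaches 1 as the condensate grows
fs = [fugacity(n0) for n0 in (1, 10, 100, 10_000)]
assert fs[0] == 0.5 and all(a < b < 1 for a, b in zip(fs, fs[1:]))

# Parameters reported in the text, kT in units of the level spacing
mu_pooh = chemical_potential(593, 0.9923)   # Winnie the Pooh
mu_shop = chemical_potential(722, 0.9951)   # The magic shop
print(round(mu_pooh, 2), round(mu_shop, 2))
```

This prints about -4.58 and -3.55; the values -4.581 and -3.576 reported for the two stories differ slightly, presumably because f is rounded to four decimals.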

The rubidium condensate is a better example for the Winnie the Pooh story, since its number of atoms, 2000, is of the same order of magnitude as the number of words, 2655, of the story. The energy levels of the trap for the rubidium condensate are of the order of \(1\, {\mathrm{nK}}\), while the temperature of the gas is \(170\, {\mathrm{nK}}\) (Table 3), which is 170 times larger. For the Winnie the Pooh story, if we take 1 unit of energy for the energy level spacings, we have \(B = kT = 593\), following (15), and hence \({1 \over 2} kT\), a good estimate for the average energy per atom of a one-dimensional gas, equals about 297, which means that in this respect too the Winnie the Pooh story and the rubidium condensate are of the same order of magnitude. Hence, the Winnie the Pooh story can be looked at as behaving similarly to a one-dimensional Bose gas of rubidium 87 atoms at a temperature of \(170\, {\mathrm{nK}}\). We will see in Sect. 4, where we consider the text of the novel ‘Gulliver’s Travels’ by Jonathan Swift (Swift 1726), that the sodium condensate is a better example for that text.

Let us introduce a second piece of text in Table 4, namely the story ‘The magic shop’ by Herbert George Wells (Wells 1903), with which we want to illustrate an aspect of our ‘Bose gas representation of human language’ that we have not yet touched upon. For the Winnie the Pooh story, if we look at Fig. 2 and Table 1, we can see that the ‘energy spectrum’ does not cover the whole range of possible energy values. Indeed, on the right-hand side of Fig. 2 the red graph still has a substantial value and is not at all close to zero. One may therefore ask what happens to this graph further on, in the higher part of the energy spectrum.

At the low end of the spectrum, the amount of radiation increases starting from zero radiation at energy level \(E_0\): with the chosen zero of the energy scale, no radiation emerges from the words captured in the zero energy level of the Bose–Einstein condensate (for the Winnie the Pooh story, the zero energy level puts the cogniton in state And). The amount of radiation then increases steeply: we already have a radiation of 111 energy units (105.84 in the Bose–Einstein model) at \(E_1\) for the cogniton in state He, 182 at \(E_2\) (179.36 in the Bose–Einstein model) for the cogniton in state The, 255 at \(E_3\) (233.36 in the Bose–Einstein model) for the cogniton in state It, 280 at \(E_4\) (274.65 in the Bose–Einstein model) for the cogniton in state A, 345 at \(E_5\) (307.23 in the Bose–Einstein model) for the cogniton in state To, and so on, until a maximum is reached at \(E_{71}\) with a radiation level of 522.79 energy units for the cogniton in state First. Then the radiation starts to decrease slowly. Note, however, that at energy level \(E_{542}\), with the cogniton in state You’ve, which is the highest energy level of Table 1, we still have a radiation of 358.55 energy units, which is more than half of the maximum radiation reached at energy level \(E_{71}\) for the cogniton in state First.
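The radiation curve of the model can be recomputed from the two parameters reported for the story. A minimal sketch, assuming the model (29) with \(g_i = 1\), unit level spacing, kT = 593 and f = 0.9923; since these parameters are rounded, the results match the tabulated model values only to within about half an energy unit.

```python
import math


def bose_einstein(E: float, kT: float, f: float) -> float:
    """Occupation of level E with g = 1: 1 / (exp(E / kT) / f - 1),
    i.e. (29) rewritten with the fugacity f = exp(mu / kT)."""
    return 1.0 / (math.exp(E / kT) / f - 1.0)


KT, F = 593, 0.9923          # Winnie the Pooh parameters reported in the text
levels = range(543)          # unit-spaced levels E_0, ..., E_542 (Table 1)

# Energy radiated per level in the model: E_i * N(E_i), which is zero at E_0
radiated = [E * bose_einstein(E, KT, F) for E in levels]

e_max = max(levels, key=lambda E: radiated[E])
print(e_max, round(radiated[e_max], 2), round(radiated[542], 2))
```

With these rounded parameters the maximum, about 522.3 energy units, lies around \(E_{70}\) to \(E_{71}\) (the curve is very flat there), and the value at \(E_{542}\) is about 358.1, still well above half the maximum.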

How can we understand this, given that Table 1 exhausts all the words of the Winnie the Pooh story and hence seemingly represents all possible energy levels? But is this true? To clarify this, we have to reflect on the difference between the numbers in the third and the fourth column of Table 1, respectively the ‘numbers of appearances’ of the specific words in the Winnie the Pooh story and the ‘values of the Bose–Einstein distribution that we used to model these numbers of appearances’. The values in the fourth column are of a probabilistic nature and express averages over stories ‘similar’ to the one of Winnie the Pooh with respect to the numbers of appearances of the specific words, while the values in the third column express actual counts for one specific story. More concretely, by ‘similar’ we mean ‘containing the same total number of words and the same total amount of energy’. Recall indeed that the Bose–Einstein distribution function contains only two parameters, which are hence determined by the total number of words and the total amount of energy. To put it even more concretely, suppose we collected a vast number of ‘meaningful’ texts, all containing the same total number of words N and the same total energy E; the Bose–Einstein distribution function (9) is then supposed to model a specific type of average over all these texts, and the more numerous these texts, the better this average will correspond to the Bose–Einstein distribution function. The reason is that this function results from the limit process in statistical mechanics of a micro-canonical ensemble of states of particles with the same N and E (Bose 1924; Einstein 1924, 1925; Huang 1987).

The above reasoning suggests introducing a ‘place for words that do not appear in the considered text but could have appeared’. Note that these new words do not add to the total number N of words, since their number of appearances is zero, so this operation of ‘adding new words’ leaves N unchanged. In the ranking of energy levels, they have to be placed at ‘additional energy levels higher than the highest one identified so far, namely that of the last word, in alphabetical order, among those appearing once in the text’. Note also that E remains unchanged by adding words that could have appeared. Indeed, although these added words carry high energies, all of them have zero appearances, so they do not add to the total energy: the product of the energy of even a very high energy level with a zero number of appearances equals zero. Since N and E are left unchanged by adding these words that could have appeared, the micro-canonical ensemble and its thermodynamic equilibrium also remain unchanged. However, adding the new words does substantially alter the Bose–Einstein and the Maxwell–Boltzmann distribution functions calculated to model the data, because neither of them assigns a zero value to these words, which means that they contribute to the total number of words and the total energy of the models. Hence, adding words so that the energy spectrum is completed over the whole range is a necessary operation in the Bose–Einstein or Maxwell–Boltzmann modeling.
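The point that zero-appearance levels leave the data totals unchanged while the model still populates them can be illustrated on toy numbers. This sketch uses hypothetical appearance numbers and illustrative, unfitted parameters (kT = 5, f = 0.97); it is not the fit of any of the stories.

```python
import math


def bose_einstein(E: float, kT: float, f: float) -> float:
    """Model occupation 1 / (exp(E / kT) / f - 1) of level E (g = 1)."""
    return 1.0 / (math.exp(E / kT) / f - 1.0)


def totals(counts):
    """Total number of words N and total energy E = sum_i E_i * N(E_i), with E_i = i."""
    return sum(counts), sum(i * n for i, n in enumerate(counts))


# Hypothetical appearance numbers ranked by energy level (toy data, not from the text)
counts = [40, 25, 16, 10, 7, 5, 3, 2, 2, 1, 1, 1]
padded = counts + [0] * 8     # eight added words that 'could have appeared'

# Adding zero-appearance levels leaves N and E of the data unchanged ...
assert totals(counts) == totals(padded)

# ... but the model still gives those levels a non-zero occupation
kT, f = 5.0, 0.97             # illustrative parameters, not fitted to any text
tail = [bose_einstein(E, kT, f) for E in range(len(counts), len(padded))]
print(totals(padded), [round(x, 3) for x in tail])
```

Because the model occupations of the added levels are positive, refitting the two parameters to the same N and E changes the model curve, which is why the spectrum has to be completed before fitting.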

Again, more concretely, consider the words that appear once in the Winnie the Pooh story and look for synonyms of these words: a word that now appears once might not have appeared, and its synonym might have appeared instead. So the synonyms can be listed as a new set of words to add with zero appearances, as words that ‘could have appeared’, and indeed the Bose–Einstein distribution function is not zero for them, which expresses exactly this ‘they could have appeared’.

To illustrate the above, we consider the H. G. Wells story ‘The magic shop’ (Wells 1903), whose words we have classified into energy levels in Table 4. As we can see, the energy level \(E_{1153}\), corresponding to the state of the cogniton characterized by the word Youngster, would have been the highest energy level had we stopped, as we did for the Winnie the Pooh story, adding energy levels at ‘one appearance’. For ‘The magic shop’, however, we have explicitly added words with ‘zero appearances’, starting with Garden, a word that does not appear in the story and is a synonym of Yard (energy level \(E_{1149}\)); we attributed energy level \(E_{1154}\) to the cogniton in the state characterized by Garden. And indeed, in the third column of the row of Garden in Table 4 there is a 0, indicating that Garden does not appear in ‘The magic shop’. In the fourth column of this row, however, we have 0.25, the value of the Bose–Einstein distribution function at energy level \(E_{1154}\), and in the fifth column we have 0.07, the value of the Maxwell–Boltzmann distribution function at energy level \(E_{1154}\). Both numbers indicate that ‘Garden could have appeared in a story similar to the H. G. Wells story’, because they are not zero. These numbers are linked to the probability that Garden appears in a story similar to ‘The magic shop’, in the way we explained above. And indeed there should not be zeros in these places, because there is a nonzero probability that Garden would appear in such a similar story. We added the word Okay at energy level \(E_{1155}\) as a synonym of Yes (energy level \(E_{1150}\)), as a new state of the cogniton that does not appear but could potentially appear in a similar story.
We continued in the same way, adding Junior as a synonym of Youngster, but there are no synonyms of You’d and You’re, which gives us the occasion to mention that the added words that could appear in a similar story do not have to be synonyms.

The only criterion is that ‘they appear in a meaningful story with the same total number of words and the same total energy’. Hence, adding synonyms is a simple way to ensure that the whole story remains meaningful, but a completely new meaningful part of the story, with words that are not synonyms, can also be added.

So we added many more energy levels, up to energy level \(E_{3500}\). In Table 4 we show only the last seven of these words, namely Continued, Adding, Mention, Similar, Criterion, Obviously and Appearing, which have zero appearances in the H. G. Wells story but nonzero values both in the Bose–Einstein model and in the Maxwell–Boltzmann model.
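As a quick check, the Bose–Einstein entry for Garden can be recomputed from the parameters of ‘The magic shop’ reported later in this section (kT = 722, f = 0.9951). A sketch under the same assumptions as before, namely model (29) with \(g_i = 1\) and unit level spacing; the Maxwell–Boltzmann value 0.07 is not reproduced because its parameters are not given here.

```python
import math


def bose_einstein(E: float, kT: float, f: float) -> float:
    """Model occupation 1 / (exp(E / kT) / f - 1) of level E (g = 1)."""
    return 1.0 / (math.exp(E / kT) / f - 1.0)


KT_SHOP, F_SHOP = 722, 0.9951      # parameters reported for 'The magic shop'

garden = bose_einstein(1154, KT_SHOP, F_SHOP)   # Garden: 0 appearances in the data
highest = bose_einstein(3500, KT_SHOP, F_SHOP)  # last added level E_3500
print(round(garden, 2), round(highest, 4))
```

The first value rounds to 0.25, the entry for Garden in Table 4; the occupation keeps shrinking towards the top of the added spectrum but never becomes exactly zero.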

In Fig. 3a, b we have represented, respectively, the numbers of appearances of the appearing and non-appearing words with respect to the energy levels, a graph going down very steeply, and the \(\log /\log\) graph of these numbers of appearances, where we take the logarithm of both x and y. We see again, as in Fig. 1, that the Bose–Einstein distribution function, the red graph, fits the data, the blue graph, almost completely, and definitely much better than the Maxwell–Boltzmann distribution function, the green graph. In Fig. 4 we have represented the amounts of radiated energy with respect to the energy levels, and we see that this time the red graph representing the Bose–Einstein model of the data, after going steeply up and reaching a maximum, goes slowly down to come close to zero radiated energy for high energy level cognitons. Also here the red graph, the Bose–Einstein distribution, fits the blue graph of the data much better than the green graph, the Maxwell–Boltzmann distribution. The maximum amount of radiation is reached at energy level \(E_{70}\), in the state of the cogniton characterized by Door, and amounts to 652.55204 energy units. So the frequency of Door would be the dominant color with which the story ‘The magic shop’ shines.

Compared with the Winnie the Pooh story, we have a higher temperature (kT equals 722 instead of 593), a higher fugacity (f equals 0.9951 instead of 0.9923) and a higher chemical potential (\(\upmu\) is \(-3.576\) instead of \(-4.581\)). This will generally be so for longer texts, as will again be illustrated by the text of ‘Gulliver’s Travels’ considered in Sect. 4. The sodium condensate realized at MIT, which we described above in detail, is a better model for ‘The magic shop’: in Table 2 we can see that the harmonic oscillator level spacing for the sodium condensate is around \(0.5\, {\mathrm{nK}}\) while the temperature of the sodium gas is \(1\, \upmu {\mathrm{K}}\), a factor 2000 in difference of size. In Table 4 we see that we have 3500 energy levels for ‘The magic shop’, which is of the same order of magnitude. The number of atoms in the MIT sodium condensate was estimated to be 500,000, far more than the number of words in ‘The magic shop’, which is 3934. When we analyze larger texts that come closer to this size, such as the text of Gulliver’s Travels in Sect. 4, we find an even better correspondence in magnitudes with the data of the sodium condensate. Before showing this, however, we have to investigate in more depth another aspect of our modeling, namely the one related to the ‘global energy level structure’.

Fig. 3 (a) The numbers of appearances of the words in the H. G. Wells story ‘The magic shop’ (Wells 1903), ranked from the lowest energy level, corresponding to the most frequently appearing word, to the highest energy level, corresponding to the least frequently appearing word, as listed in Table 4. The blue graph (Series 1) represents the data, i.e. the collected numbers of appearances from the story (column ‘Appearance numbers \(N(E_i)\)’ of Table 4), the red graph (Series 2) is a Bose–Einstein distribution model for these numbers of appearances (column ‘Bose–Einstein modeling’ of Table 4), and the green graph (Series 3) is a Maxwell–Boltzmann distribution model (column ‘Maxwell–Boltzmann modeling’ of Table 4). (b) The \(\log / \log\) graphs of the appearance number distributions. The red and blue graphs coincide almost completely in both (a) and (b), while the green graph does not coincide at all with the blue graph of the data. This shows that the Bose–Einstein distribution is a good model for the numbers of appearances while the Maxwell–Boltzmann distribution is not.

We have not yet given the values of the parameters A, B, C and D for the story ‘The magic shop’; they are the following

There are two quantum models that are also used in physics as an inspiration for the energy level structure of trapped atoms: the ‘harmonic oscillator and its variations’ (“Appendix 2”) and the ‘particle in a box and its variations’ (“Appendix 1”). In the harmonic oscillator model the energy levels are equally (linearly) spaced, which is also how we have modeled them for the two examples considered so far, the Winnie the Pooh story and the H. G. Wells story. The energy levels of the particle in a box, however, grow quadratically. We will see further on in our analysis that, in view of our experimental findings from analyzing numerous texts, the energy levels of the cognitons are in general spaced following a power law that depends on the story considered, with an exponent that is in principle between 0 and 2 but that, for all the stories we investigated, was between 0.75 and 1.25. This indicates that energy situations on both sides of the ‘harmonic oscillator’ are at play, from the ‘anharmonic oscillator’, with converging spacings between energy levels, to the ‘particle in a box’, with quadratically growing energy levels. We will show in the next section how this generalization of the energy spacings strengthens the correspondence with Zipf’s law in human language.
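To make the three regimes concrete, here is a small sketch assuming a hypothetical level scheme \(E_i = i^{\alpha}\); the text does not spell out the exact functional form or any normalization of the power law, so both the form and the function names are our illustration.

```python
def power_law_levels(count: int, alpha: float) -> list[float]:
    """Hypothetical level scheme E_i = i ** alpha for i = 0, ..., count - 1."""
    return [i ** alpha for i in range(count)]


def spacings(levels: list[float]) -> list[float]:
    """Differences E_{i+1} - E_i between consecutive levels."""
    return [b - a for a, b in zip(levels, levels[1:])]


# alpha < 1: converging spacings (anharmonic-like); alpha = 1: equal spacings
# (harmonic oscillator); alpha = 2: quadratically growing levels (particle in a box)
gaps = {alpha: spacings(power_law_levels(8, alpha)) for alpha in (0.75, 1.0, 1.25, 2.0)}
for alpha, g in gaps.items():
    print(alpha, [round(x, 3) for x in g])
```

For exponents below 1 the spacings shrink with increasing level, for exponents above 1 they grow, and exactly 1 reproduces the equally spaced levels used so far.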

Fig. 4 A representation of the ‘energy distribution’ of the H. G. Wells story ‘The magic shop’ (Wells 1903) as listed in Table 4. The blue graph represents the energy radiated by the story per energy level (column ‘Energies from data \(E(E_i)\)’ of Table 4), the red graph represents the energy radiated by the Bose–Einstein model of the story per energy level (column ‘Energies Bose–Einstein’ of Table 4), and the green graph represents the energy radiated by the Maxwell–Boltzmann model of the story per energy level (column ‘Energies Maxwell–Boltzmann’ of Table 4).

4 Zipf’s Law and the Bose Gas of Human Language

Zipf’s law is considered one of the mysterious structures encountered in language (Zipf 1935, 1949). In its simplest form it was originally noted as follows: when words are ranked according to their numbers of appearances in a piece of text, the product of the rank and the number of appearances is a constant. Hence Zipf’s law was originally stated mathematically as

\[ R \times N = c \]

where R is the rank, N the number of appearances, and c a constant. In Fig. 5 we present the products \(R_i \times N_i\) for the text of the Winnie the Pooh story investigated in Sect. 2, where \(R_i\) is the i-th rank in Zipf’s ranking and \(N_i\) the number of appearances corresponding to this rank. The x-coordinate of the graphs in Fig. 5 represents the ranks \(R_i\), and the y-coordinate represents the products \(R_i \times N_i\) for the blue graph, and the corresponding values for the Bose–Einstein distribution and the Maxwell–Boltzmann distribution for the red and green graphs, respectively.

It is not a coincidence that there is a striking resemblance between the graphs shown in Fig. 5 and the energy distribution graphs of the Winnie the Pooh story as a boson gas shown in Fig. 2. Indeed, the energy levels \(E_i\) that we introduced are very simply related to the Zipf ranks \(R_i\), the only difference being that we started with value zero for the lowest energy level, while Zipf started with value 1 for his first rank. More concretely, we have

\[ R_i = E_i + 1 \]

This means that, although none of the Zipf products in Fig. 5 equals the corresponding energy in Fig. 2, the differences are small: since \(R_i = E_i + 1\), each Zipf product \(R_i \times N_i\) exceeds the energy \(E_i \times N_i\) by exactly \(N_i\).
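The relation between Zipf products and energies can be illustrated on any word list. This is a toy sketch: the sample sentence and the helper `zipf_table` are hypothetical, and ties in the number of appearances are broken alphabetically, which is our assumption about how equal counts are ordered.

```python
from collections import Counter


def zipf_table(words):
    """Rows (word, N_i, R_i, E_i): words ranked by decreasing number of
    appearances N_i (ties broken alphabetically), Zipf rank R_i = i + 1 and
    energy level E_i = i."""
    ranked = sorted(Counter(words).items(), key=lambda item: (-item[1], item[0]))
    return [(word, n, i + 1, i) for i, (word, n) in enumerate(ranked)]


text = "the cat saw the dog and the dog saw a cat and the bird"   # toy text
rows = zipf_table(text.split())
for word, n, rank, level in rows:
    # Zipf product R_i * N_i versus energy E_i * N_i: they differ by exactly N_i
    print(f"{word:5} N={n} R*N={rank * n} E*N={level * n}")
```

The largest gap between the two quantities therefore occurs at the most frequent word, exactly as discussed for the zero point of the graphs.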

Fig. 5 The blue graph (Series 1) represents the products \(R_i \times N_i\) for the text of the Winnie the Pooh story investigated in Sect. 2, where \(R_i\) is the i-th rank in Zipf’s ranking and \(N_i\) is the number of appearances corresponding to this rank. The x-coordinate represents the ranks \(R_i\), and the y-coordinate represents the products \(R_i \times N_i\). For the red graph (Series 2) and the green graph (Series 3), the values of the Bose–Einstein distribution and the Maxwell–Boltzmann distribution developed in Sect. 2, respectively, were used, for comparison with the graph in Fig. 2.

Consulting Table 1, we can see that the biggest difference is at the zero point of the graph, where on the x-axis \(E_0 = 0\) and \(R_0 = 1\), i.e. between the product \(R_0 \times N_0 = (E_0+1) \times N_0 = 1 \times 133 = 133\) and \(E_0 \times N_0 = 0 \times 133 = 0\). This difference is hard to see when comparing the graphs of Fig. 5 and Fig. 2, since 133 is still small compared to the values the functions take at \(R_1\) and \(E_1\). Again consulting Table 1, we indeed see that \(R_1 \times N_1 = (E_1+1) \times N_1 = 2 \times 111 = 222\), while \(E_1 \times N_1 = 1 \times 111 = 111\). This means that both the ‘product graph’ of Fig. 5 and the ‘energy distribution graph’ of Fig. 2 rise quickly between \(R_0\) and \(R_1\) and between \(E_0\) and \(E_1\), the first from 133 to 222 and the second from 0 to 111, i.e. with almost the same steepness. Both graphs then keep increasing quite quickly and slowly flatten until they reach their maxima at Zipf rank \(R_{70}\) and energy level \(E_{71}\). From these maxima on, both the Zipf product and the energy distribution slowly decrease to a lower value. More specifically, the maximum value is 522.79 in both cases, and for the last considered Zipf rank \(R_{542}\) and energy level \(E_{542}\) we find the values 359.22 and 358.55, respectively. This shows that the Zipf products decrease, rather than remaining constant as Zipf’s law predicts.

In the foregoing reasoning on Zipf’s law, we have always considered the two graphs, the blue and the red one, in both Figs. 5 and 2. Of course, Zipf did not know of the Bose–Einstein distribution represented by the red graph in both figures, which we used to model the data represented by the blue graph. Hence Zipf only had the blue graph of Fig. 5 available when he came up with the hypothesis that the product of rank and number of appearances is a constant. If one considers the blue graph in Fig. 5, one could indeed imagine it to vary around a constant function, certainly in the middle part of the graph. The beginning part can then be considered a deviation, which is also what Zipf did when noting that the law did not hold up well for the first ranks. Zipf also knew that the end part of the graph, where the behavior of ranks and numbers of appearances makes the product go up and down heavily, did not agree very well with his law either, and he identified the slight downward slope at the very end as well. This slope is explicitly pictured by the red graph, representing the Bose–Einstein distribution modeling of the data.

There is, however, another aspect of the situation that Zipf overlooked. It is self-evident that ‘if Zipf’s law is a law, it has to be a probabilistic law’. Let us specify what we mean by this. Suppose we had a large number of texts available, each containing exactly the same number of different words, such that a Zipf analysis would lead to the same total number of ranks for each of the texts. Zipf’s graphs, including the ‘product graph’, i.e. the blue graph in Fig. 5, will then show a statistical pattern over the set of texts on which they are tested. Suppose we average the numbers of appearances pertaining to the same rank over the available texts. The function representing these averages will then be a distribution function with a steep upward slope in the first ranks, going towards a maximum, and then a slow downward slope in the ranks after this maximum. It will be a function similar to the Bose–Einstein distribution we have used to model texts as Bose gases, i.e. the red graph. This will be even more so when we add the two constraints that follow naturally from our modeling, namely that the different texts need to contain the same total number of words, and that the sum of the products, which in our interpretation of the Bose gas model is the total energy, needs to be the same for each of the texts. More important still is that ‘if Zipf’s law is a probabilistic law, we should also introduce rankings that represent words with a zero number of appearances’, exactly as we have done for the H. G. Wells story ‘The magic shop’, for which we have represented the data and the Bose–Einstein model in Table 4, and the graphs representing these data in Figs. 3a, 3b and 4.
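The averaging procedure described above can be sketched in Python under stated assumptions: texts are already tokenized into lists of words, ranks are assigned by sorting counts in decreasing order, ranks beyond a text’s vocabulary are padded with zero appearances, and the two extra constraints (equal total number of words and equal total energy) are not enforced. The function names and the two toy texts are hypothetical.

```python
from collections import Counter

def rank_counts(tokens, n_ranks):
    """Numbers of appearances in Zipf rank order, padded with zeros to n_ranks."""
    counts = sorted(Counter(tokens).values(), reverse=True)
    if len(counts) > n_ranks:
        raise ValueError("text has more distinct words than n_ranks")
    return counts + [0] * (n_ranks - len(counts))

def average_rank_counts(texts, n_ranks):
    """Average number of appearances per rank over a collection of tokenized texts."""
    rows = [rank_counts(t, n_ranks) for t in texts]
    return [sum(column) / len(rows) for column in zip(*rows)]

# Hypothetical toy texts, for illustration only.
texts = [
    "the cat saw the dog and the dog ran".split(),
    "a bear ate honey and the bear slept".split(),
]
print(average_rank_counts(texts, 8))
```

The seventh rank averages to 0.5 only because the second toy text has one more distinct word than the first; without the zero padding the two rank lists could not be averaged position by position.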

If we look carefully at the energy distribution graph in Fig. 4, we can understand somewhat better why Zipf came to believe that the products of the ranks and the numbers of appearances are constant. Indeed, once levels with zero appearances have been added until the energy distribution becomes close to zero in the high energy levels, as shown in Fig. 4, we can see how the blue graph first goes far up where the single-appearance words are, compensating for the long row of zero-appearance levels that take up a large part of the x-axis. So, if one leaves out the zero-appearance part, one can easily get the impression that the blue graph is constant on average, at least when neglecting the low energy levels at the start, where it rises steeply.

Representation of the \(\log /\log\) graphs of the Zipf data. The blue graph represents the data (Series 1), the red graph the Bose–Einstein model (Series 2), the green graph the Maxwell–Boltzmann model (Series 3), and the purple graph (Series 4) a straight line that approximates the other graphs as well as possible, illustrating that the gradient of this ‘straight line approximation’ is not equal to \(-1\).

Most later investigations of Zipf’s findings concentrated on the \(\log /\log\) graph representation, where the \(\log\) is taken of the rank as well as of the numbers of appearances, i.e. the Zipf equivalents of the \(\log /\log\) graphs we considered for our Bose gas modeling, represented in Figs. 1b and 3b. As for Zipf’s law expressed in (38), its \(\log /\log\) graph is a straight line with gradient equal to \(-1\). Indeed, when we take the \(\log\) of both sides of (38) we get
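\[\log R + \log N = \log C \quad \Longleftrightarrow \quad \log N = -\log R + \log C, \qquad (C = R \times N \ \text{constant})\]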
which graph, with \(\log R\) on the x-axis and \(\log N\) on the y-axis, is a straight line with gradient equal to \(-1\). It is indeed much easier to see with the naked eye that a \(\log /\log\) graph like those in Figs. 1b and 3b can be approximated well by a straight line than to see the constancy of the Zipf products in a graph like the one in Fig. 5, where a constant has to be fitted to the blue graph moving up and down. However, the focus of these investigations of Zipf’s law on the \(\log /\log\) graphs also has its downside, in the sense that the upper and lower parts of the graph are more easily considered slight deviations from the straight line, while, as we see with our Bose–Einstein distribution modeling in its energy graph version, they really represent essential and significant deviations from Zipf’s original product law (38). The slight concave bending of the graphs in both Fig. 1b and Fig. 3b expresses the fact that Zipf’s law is essentially not satisfied for low and high ranks.

The foregoing analysis is meant to provide evidence that the Bose–Einstein distribution is a better model for the Zipf data than a constant, and also better than later, more complex versions of Zipf’s law that still assume the product graph to be approximately constant and its \(\log /\log\) version approximately a straight line. There is, however, another aspect of Zipf’s finding that we want to put forward here, since it will be important for our model of a Bose gas for human language.

In Fig. 6, we represented the \(\log /\log\) graphs of the Zipf data (blue graph) and of the Bose–Einstein (red graph) and Maxwell–Boltzmann (green graph) distributions we used to model them, and we added a straight line (purple graph) that approximates the other graphs as well as possible. We can see that the gradient of this straight line is not \(-1\) but \(-0.94\). Although Zipf himself kept focusing on the straight line with gradient \(-1\), many who studied Zipf’s law noted that a generalization was needed to account for the gradient of the straight line usually being smaller than 1 in absolute value, as it is here, hence the \(\log /\log\) version of the law was generalized to
\[\log N = -p \log R + \log C, \qquad (C \ \text{a constant})\]
which made the original product of rank and frequency be generalized to
\[R^p \times N = C, \qquad (C \ \text{a constant})\]
where p is called the ‘power coefficient’ of Zipf’s law.
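The purple line of Fig. 6 is described only as an ‘as good as possible’ straight-line approximation, so the fitting criterion here is an assumption: the sketch below uses ordinary least squares on the \(\log /\log\) points to estimate the power coefficient p and the constant C of the generalized law. Ranks with zero appearances are skipped because their logarithm is undefined, and the function name is hypothetical.

```python
import math

def fit_power_coefficient(counts):
    """Least-squares fit of log N = -p log R + log C, with counts given in Zipf rank order.

    Ranks start at R = 1; ranks with zero appearances are skipped (log 0 is undefined).
    Needs at least two nonzero counts. Returns the pair (p, C).
    """
    pts = [(math.log(r), math.log(n)) for r, n in enumerate(counts, start=1) if n > 0]
    k = len(pts)
    mean_x = sum(x for x, _ in pts) / k
    mean_y = sum(y for _, y in pts) / k
    sxx = sum((x - mean_x) ** 2 for x, _ in pts)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pts)
    slope = sxy / sxx                      # gradient of the log/log straight line
    intercept = mean_y - slope * mean_x    # equals log C
    return -slope, math.exp(intercept)

# Synthetic counts following N = C / R**p exactly, with C = 1000 and p = 0.94.
synthetic = [1000 / r ** 0.94 for r in range(1, 201)]
p, c = fit_power_coefficient(synthetic)
print(round(p, 3), round(c, 1))  # 0.94 1000.0
```

With p = 1 the fitted law reduces to Zipf’s original one, for which the product \(R \times N\) is constant.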

We will also apply this ‘power coefficient’ of Zipf’s law in our modeling. Let us explain why and how. First of all, there is no a priori reason why the energy levels should be as simple as we presented them in the two examples that we considered, namely such that
\[E_i = E_0 + i\,(E_1 - E_0), \tag{43}\]
where \(E_1 - E_0\) is the unit of energy that we introduced. Of course, we have systematically taken \(E_0 = 0\), see (1), which makes the energy levels we have introduced in both stories even simpler, but \(E_0 = 0\) need not hold as a rule, which is why we now formulate the ‘linear system of energy levels’ as in (43). This simple linear system is inspired by the energy levels of the quantum harmonic oscillator (“Appendix 2”), where we have
\[E_n = \left(n + \tfrac{1}{2}\right) h\nu,\]
with \(\nu\) being the frequency of the oscillator. However, equal spacing between consecutive energy levels, as in the case of the harmonic oscillator, is a very exceptional situation of quantization. For general quantized systems the spacings between consecutive energy levels are not the same, and both cases occur: for unconfined quantized situations the spacings decrease, while for confined situations they increase. For example, for the quantized energy levels of the ‘particle in a box’ (“Appendix 1”), we have
\[E_n = \frac{n^2 h^2}{8 m L^2}, \qquad (n = 1, 2, 3, \ldots)\]
which means that the energy levels change quadratically as a function of the unit of energy
\[E_n = n^2 E_1.\]
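To make the contrast between equal and increasing spacings concrete, the short Python sketch below lists the levels of the linear system (43), of the harmonic oscillator and of the particle in a box, and computes the spacings between consecutive levels. Units are chosen, as an assumption for illustration, so that \(E_1 - E_0 = 1\), \(h\nu = 1\) and, for the box, \(E_1 = 1\); the function names are hypothetical.

```python
def linear_levels(n_levels, e0=0.0, unit=1.0):
    """Linear system of energy levels: E_i = E_0 + i * (E_1 - E_0)."""
    return [e0 + i * unit for i in range(n_levels)]

def oscillator_levels(n_levels, h_nu=1.0):
    """Harmonic oscillator: E_n = (n + 1/2) * h * nu for n = 0, 1, 2, ..."""
    return [(n + 0.5) * h_nu for n in range(n_levels)]

def box_levels(n_levels, e1=1.0):
    """Particle in a box: E_n = n**2 * E_1 for n = 1, 2, 3, ..."""
    return [n * n * e1 for n in range(1, n_levels + 1)]

def spacings(levels):
    """Differences between consecutive energy levels."""
    return [b - a for a, b in zip(levels, levels[1:])]

print(spacings(linear_levels(5)))      # [1.0, 1.0, 1.0, 1.0]
print(spacings(oscillator_levels(5)))  # [1.0, 1.0, 1.0, 1.0]
print(spacings(box_levels(5)))         # [3.0, 5.0, 7.0, 9.0]
```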
Remark that in “Appendices 1 and 2” we have used n to indicate the ‘quantum numbers’, because that is the traditional letter used for quantum numbers within standard quantum theory. In the approach we followed we have used i to indicate the ‘energy levels’, because we do not want to make a direct and exclusive reference to standard quantum theory alone, since our aim is to also make a connection with Zipf’s law in language. More generally, we want to elaborate a ‘quantum cognition theory’ for ‘human language and cognition’ from basic principles on a more foundational level than the one where standard quantum theory is situated, building on earlier work in quantum cognition and quantum computer science (Aerts and Aerts 1995; Khrennikov 1999; Atmanspacher et al. 2002; Gabora and Aerts 2002; van Rijsbergen 2004; Aerts and Czachor 2004; Widdows 2004; Bruza and Cole 2005; Busemeyer et al. 2006; Pothos and Busemeyer 2009; Lambert Mogiliansky et al. 2009; Bruza et al. 2009; Busemeyer and Bruza 2012; Dalla Chiara et al. 2012, 2015; Haven and Khrennikov 2013; Melucci 2015; Pothos et al. 2015; Blutner and beim Graben 2016; Moreira and Wichert 2016; Broekaert et al. 2017; Gabora and Kitto 2017; Busemeyer and Wang 2018).

In this we will also be inspired by the global foundational work we have done in our Brussels group (Aerts 1986, 1990, 1999, 2009b; Aerts et al. 2010, 2012, 2013a, 2018a, 2019a, 2011; Aerts and Gabora 2005a, b; Aerts et al. 2013b; Aerts and de Bianchi 2014, 2017; Aerts et al. 2016; Aerts and Sozzo 2011, 2014; Aerts et al. 2015a, 2016; Sassoli de Bianchi 2011, 2013, 2014, 2019; Sozzo 2014, 2015, 2017, 2019; Veloz et al. 2014; Veloz and Desjardins 2015), and by the more specific work on the ‘conceptuality interpretation’ (Aerts 2009a, 2010a, b, 2013, 2014; Aerts et al. 2018d, 2019c). To mention a concrete aspect in need of a more foundational approach, there is as yet no well-identified spatial domain for human language, which means that we will have to build a ‘quantum cognition’ without reference to space (Aerts 1999; Sassoli de Bianchi 2019).

The ‘harmonic oscillator’ and the ‘particle in a box’ are both special cases where the one-dimensional Schrödinger equation can be solved analytically, but for boson gases power law potentials have been studied as more general models (Bagnato et al. 1987), and hence we will also introduce in our approach a more general variation of the energy levels than the linear one, namely one of a ‘power law change’

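Assuming, for illustration only, a hypothetical power-law family of levels \(E_i = E_0 + i^p (E_1 - E_0)\), which reduces to the linear system (43) for p = 1, the Python sketch below shows how a power coefficient controls the spacings: they decrease for p < 1 (as in unconfined situations), stay equal for p = 1, and increase for p > 1 (as in confined situations such as the particle in a box). Both this specific formula and the function names are assumptions of the sketch.

```python
def power_law_levels(n_levels, p, e0=0.0, unit=1.0):
    """Hypothetical power-law levels E_i = E_0 + i**p * (E_1 - E_0).

    For p = 1 this is the linear system of equally spaced levels.
    """
    return [e0 + (i ** p) * unit for i in range(n_levels)]

def spacings(levels):
    """Differences between consecutive energy levels."""
    return [b - a for a, b in zip(levels, levels[1:])]

for p in (0.5, 1.0, 2.0):
    gaps = spacings(power_law_levels(6, p))
    print(p, [round(g, 3) for g in gaps])
```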
Let us show right away how introducing a power law for the energy level spacings gives extra strength to the Bose–Einstein modeling of texts of stories expressed in human language. This time we choose a much larger text than the two we investigated before, namely the text of the satirical work Gulliver’s Travels by Jonathan Swift (Swift 1726), which contains 103184 words in total, hence of the order of 40 times more than the Winnie the Pooh story and 25 times more than the H. G. Wells story. When we analyze it as we did the Winnie the Pooh and the H. G. Wells stories, with the hypothesis of equally spaced energy levels, or, equivalently, with a power-law spacing of the energy levels with power coefficient equal to 1, we find a total of 8294 energy levels without adding levels with zero appearances, and the ten highest numbers of appearances and their corresponding words are The (5838), Of (3791), And (3633), To (3400), I (2852), A (2442), In (1976), My (1593), That (1280) and Was (1263).
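How a Bose–Einstein model can be fitted to such a list of numbers of appearances can be sketched as follows. The sketch assumes equally spaced levels \(E_i = i\) (power coefficient 1), the standard Bose–Einstein occupation \(N_i = 1/(A e^{E_i/B} - 1)\) with \(A = 1 + a > 1\) and \(B > 0\) playing the role of a temperature, and the two constraints mentioned in the discussion of Zipf’s law: the occupations must add up to the total number of words, and \(\sum_i E_i N_i\) must equal the total energy of the data. The parametrization, the search ranges, the toy counts and the function names are assumptions of this sketch and need not match the notation of Sect. 2.

```python
import math

def be_occupations(levels, a, b):
    """Bose-Einstein occupations N_i = 1 / ((1 + a) * exp(E_i / b) - 1).

    Rewritten as exp(-x) / (a - expm1(-x)) with x = E_i / b, which avoids
    overflow for large x and keeps precision when a is small.
    """
    return [math.exp(-e / b) / (a - math.expm1(-e / b)) for e in levels]

def solve_a(levels, b, total_words):
    """Find a > 0 such that the occupations sum to total_words (bisection in log a)."""
    lo = 1e-12                                # sum >= 1 / lo, far above total_words
    hi = 2.0 * len(levels) / total_words      # each term <= 1 / hi, so sum <= total_words / 2
    for _ in range(100):
        mid = math.sqrt(lo * hi)
        if sum(be_occupations(levels, mid, b)) > total_words:
            lo = mid
        else:
            hi = mid
    return math.sqrt(lo * hi)

def fit_bose_einstein(counts):
    """Fit occupations to counts in rank order, matching total words and total energy."""
    levels = list(range(len(counts)))         # assumed levels E_i = i
    total_words = sum(counts)
    total_energy = sum(e * n for e, n in zip(levels, counts))

    def energy(b):
        occ = be_occupations(levels, solve_a(levels, b, total_words), b)
        return sum(e * n for e, n in zip(levels, occ))

    lo, hi = 1e-3, 1.0                        # at b = 1e-3 nearly all words sit in E_0
    while energy(hi) < total_energy:
        hi *= 2.0
        if hi > 1e12:
            raise ValueError("total energy not reachable with these levels")
    for _ in range(100):                      # energy grows with b at fixed total_words
        mid = math.sqrt(lo * hi)
        if energy(mid) < total_energy:
            lo = mid
        else:
            hi = mid
    b = math.sqrt(lo * hi)
    a = solve_a(levels, b, total_words)
    return a, b, be_occupations(levels, a, b)

counts = [40, 25, 15, 10, 6, 4]               # hypothetical counts in rank order
a, b, occ = fit_bose_einstein(counts)
print([round(n, 2) for n in occ])
```

Nested bisection is chosen for transparency rather than speed: for a fixed number of words the total energy grows with B, so each constraint can be met by a one-dimensional search.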

In Fig. 7a, we represented the \(\log /\log\) version of the ‘numbers of appearances’ graphs for the Gulliver’s Travels story, the blue graph representing the data, the red graph the Bose–Einstein model, and the green graph the Maxwell–Boltzmann model. We can see right away that the Bose–Einstein model is again a much better representation of the data than the Maxwell–Boltzmann model, but also that it is a poorer representation of the data than it was for the Winnie the Pooh story and the H. G. Wells story. Indeed, the red graph gives noticeably too high values for the low energy levels, and too low values over a large region of the middle energy levels. In Table 5 (a) we give the values of the Bose–Einstein distribution model for the eleven lowest energy levels, corresponding to the states of the cognitons, i.e. the corresponding words, and compare them with the data, which shows that the first ones are too high, while the following ones are too low.