Abstract

In this paper, we reject commonly accepted views on fundamentality in science, whether based on bottom-up construction or on top-down reduction to isolate the alleged fundamental entities. We do not introduce any new scientific methodology, but rather describe the current scientific methodology and show how it entails an inherent search for foundations of science. This is achieved by phrasing (minimal sets of) metaphysical assumptions as falsifiable statements and defining as fundamental those that survive empirical tests. The ones that are falsified are rejected, and the corresponding philosophical concept is demolished as a prejudice. Furthermore, we show the application of this criterion in concrete examples of the search for fundamentality in quantum physics and biophysics.

1 Introduction

Scientific communities seem to agree, to some extent, on the fact that certain theories are more fundamental than others, along with the physical entities that the theories entail (such as elementary particles, strings, etc.). But what do scientists mean by fundamental? This paper aims at clarifying this question in the face of a by now common scientific practice. We propose a criterion of demarcation for fundamentality based on (i) the formulation of metaphysical assumptions in terms of falsifiable statements, (ii) the empirical implementation of crucial experiments to test these statements, and (iii) the rejection of such assumptions in case they are falsified. Fundamental are those statements, together with the physical entities they define, which are falsifiable, have been tested but not falsified, and are therefore regarded as fundamental limitations of nature. Moreover, the fundamental character of statements and entities is strengthened when the same limitations are found through different theoretical frameworks, such as the limit on the speed of propagation imposed by special relativity and independently by no-signaling in quantum physics. This criterion adopts Popper’s falsificationism to turn metaphysical concepts into falsifiable statements but goes beyond this methodology to define what is fundamental in science. Such a criterion, like Popper’s falsificationism, is not regarded here as normative, but rather as descriptive of the methodology adopted by a broad community of scientists.

The paper is structured as follows: in Sect. 2 we explore the reasons to go beyond reductionism in the search for fundamentality. In Sect. 3 we show that many physicists employ a falsificationist method, or at least are convinced that they do, which inevitably shapes their research programs. In Sect. 4, we define our criterion for fundamentality and show its application to notable examples in the modern foundations of quantum mechanics (wherein the no-go theorems, such as Bell’s inequalities, play a pivotal role) and in biophysics, in the search for fundamental properties of polymers.

2 Reductionism and Foundations

Tackling the question of “what is fundamental?” seems to boil down, in one way or another, to the long-standing problem of reductionism. This is customarily intended to mean “that if an entity x reduces to an entity y then y is in a sense prior to x, is more basic than x, is such that x fully depends upon it or is constituted by it” (van Riel and Van Gulick 2016). Accordingly, the process of reduction is sometimes thought to be equivalent to the action of digging into the foundations of science.Footnote 1 Despite this generally accepted view, we show that the reductionist approach to foundations, which seems prima facie legitimate given its historical success, can be overcome by more general approaches to search for foundations.

Reductionism commonly “entails realism about the reduced phenomena” (van Riel and Van Gulick 2016), because every effect at the observational level is reduced to objectively existing microscopic entities. This is the case of a particular form of reductionism known as microphysicalism. In this view, the entities are the “building blocks” of nature, and their interactions fully account for all the possible natural phenomena.Footnote 2 This is, however, a view that requires the higher-order philosophical pre-assumption of realism.Footnote 3

Reductionism is justified merely on historical arguments, that is, by looking at “specific alleged cases of successful reductions” (van Riel and Van Gulick 2016). However, there are many cases where reductionism has exhausted its heuristic power, and only the unwarranted approach of some physicists, who regard physics as the foremost among the sciences, still claims that every biological or mental process can eventually be reduced to mere physical interactions. Feynman, for instance, would maintain that “everything is made of atoms. [...] There is nothing that living things do that cannot be understood from the point of view that they are made of atoms acting according to the laws of physics” (Feynman 1963, Sect. 1.9). On the contrary, we believe, with David Bohm, that “the notion that everything is, in principle, reducible to physics [is] an unproved assumption, which is capable of limiting our thinking in such a way that we are blinded to the possibility of whole new classes of fact and law” (Bohm 1961).Footnote 4 Moreover, the reductionist program seems to be failing even within physics alone, not having so far been capable of unifying either the fundamental forces or its most successful theories (quantum and relativistic physics). It has been proposed that even a satisfactory theory of gravity requires a more holistic (i.e. non-reductionist) approach (Barbour 2015) and that gravity could have an emergent origin (Linnemann and Visser 2017). Furthermore, it is the belief of many contemporary scientists (especially from the promising field of complex systems studies) that emergent behaviours are inherent features of nature (Kim 2006), not to mention the problem of consciousness. So, the authoritative voice of the Stanford Encyclopedia of Philosophy concludes that “the hope that the actual progress of science can be successfully described in terms of reduction has vanished” (van Riel and Van Gulick 2016).

Another tempting path to approach the question of “what is fundamental” is the use of conventionalist arguments. “The source of conventionalist philosophy would seem to be wonder at the austerely beautiful simplicity of the world as revealed in the laws of physics” (Popper 1959, p. 80). The idea, however, that the simplicity, elegance, or economy of our descriptions constitutes a guarantee of “fundamentality” is a mere utopia. Conventionalism, despite being totally self-consistent, fails when it comes to acquiring empirical knowledge. In a sense, for the conventionalist, the theory comes first, and observed anomalies are “reabsorbed” into ad hoc ancillary hypotheses. It thus appears quite unsatisfactory to address the foundations of natural science from the perspective of something that has hardly any empirical content.

In conclusion, although we acknowledge that an approach that involves the intuitive decomposition of systems into basic building blocks of nature can still be a useful heuristic tool, it seems too restrictive to be used to define a universal criterion of fundamentality. Nor does it look promising to rely on purely conventional (e.g. aesthetic) factors, though they can be fruitful in non-empirical sciences. Indeed, while reduction-based foundations clash with the ontological problem (the assumption of realism), convention-based foundations clash with the epistemological problem (the empirical content of theories). What we are left with is to go back to the very definition of science, to its method, and try to understand what science can and cannot do.

3 Scientists Adhere to Falsificationism

As is generally known, Popper (1959) showed the untenability of a well-established criterion of demarcation between science and non-science based on inductive verification.Footnote 5 Popper proposed instead that theories are conjectures that can only be (deductively) falsified. Popper’s criterion of demarcation between science and non-science requires that scientific statements (laws, consistent collections of laws, theories) “can be singled out, by means of empirical tests, in a negative sense: it must be possible for an empirical scientific system to be refuted by experience. [...] Not for nothing do we call laws of nature ‘laws’: the more they prohibit the more they say” (Popper 1959, pp. 40–41).

We ought to stress, however, that one of the major critiques of Popper’s falsificationism is that it demarcates scientific statements from non-scientific ones on a purely logical basis, i.e. in principle independently of practical feasibility. In fact, for Popper, a statement is scientific if and only if it can be formulated in such a way that the set of its possible falsifiers (in the form of singular existential statements) is not empty. In this regard, Č. Brukner and M. Zukowski, who have significantly contributed to the foundations of quantum mechanics (FQM) in recent years, slightly revised Popper’s idea (as did other scholars). While maintaining a falsificationist criterion of demarcation, they attribute to falsifiability a momentary value:

[Non-scientific] propositions could be defined as those which are not observationally or experimentally falsifiable at the given moment of the development of human knowledge.Footnote 6

Falsificationism seems to influence the working methodology of scientists directly. Within the domain of scientific (i.e. falsifiable) statements, scientists aim at devising crucial experiments to rule out those that will be falsified, and in the following we show that many scientists employ this as their methodology. In fact, as the eminent historian of science Helge Kragh recently pointed out, “Karl Popper’s philosophy of science [...] is easily the view of science with the biggest impact on practising scientists” (Kragh 2013). For instance, the Nobel laureate for medicine, Peter Medawar, acknowledged that Popper’s falsificationism has a genuine descriptive value, stating that “it gives a pretty fair picture of what actually goes on in real-life laboratories” (Medawar 1990). Likewise, the preeminent cosmologist Hermann Bondi declared that “there is no more to science than its method, and there is no more to its method than Popper has said” (Jammer 1991). Besides these appraisals, it is a matter of fact that “many scientists subscribe to some version of simplified Popperianism” (Kragh 2013), and this applies especially to physicists, and specifically to those who are concerned with fundamental issues.

In this section, we will support this claim with several quotations from different prominent physicists who do not share a common philosophical standpoint, and show that they do actually think of their scientific praxis as based on a form of deductive hypothesis-testing-falsification process. We then show that this methodological choice has indeed profound consequences on the development of theories and that it has been extremely efficient in the modern results of foundations of different branches of physics.

Here, we are not concerned with the justification of falsificationism as the right methodology to aspire to; we avoid any normative judgment.Footnote 7 We just assume as a working hypothesis, built upon a number of instances, that this is what scientists do, or at least what they are convinced they do: this is enough to lead them to pursue certain (theoretical) directions. Methodological rules are a matter of convention. They are indeed intuitively assumed by scientists in their everyday practical endeavour, but they are indispensable meta-scientific (i.e. logically preceding scientific knowledge) assumptions: “they might be described as the rules of the game of empirical science. They differ from rules of pure logic rather as do the rules of chess”. However, a different choice of the set of rules would necessarily lead to a different development of scientific knowledge (meant as the collection of the provisionally acknowledged theories). The methodology that one (tacitly) assumes entails the type of development of scientific theories, insofar as it “imposes severe limitations to the kind of questions that can be answered” (Feynman 1998). Our fundamental theories look as they do also because they are derived under a certain underlying methodology. This is not surprising: if chess had a different set of rules (e.g. pawns could also move backwards), then a game, at a given time after its beginning, would probably look very different from any game played with the standard rules of chess.

Coming back to the physicists who, more or less aware of it, loosely adhere to falsificationism, we deem it interesting to explicitly quote some of them, belonging to different fields. It is worth mentioning a work by the Austrian physicist Herbert Pietschmann with the significant title: “The Rules of Scientific Discovery Demonstrated from Examples of the Physics of Elementary Particles”. Elementary particle physics was particularly promoted in the Post-war period to revive scientific (especially European) research. It is well known that this field developed in a very pragmatic and productivist way (see e.g. Baracca et al. 2016). Nevertheless, the author shows that falsificationist methodological rules

are applied by the working physicist. Thus these rules are shown to be actual tools rather than abstract norms in the development of physics. [...] Predictions by theories and their tests by experiments form the basis of the work of scientists. It is common knowledge among scientists that new predictions are not proven by experiments, but are ruled out if they are wrong. (Pietschmann 1978)

While Pietschmann has a vast knowledge of the philosophy of science, one of the most brilliant physicists of all time, the Nobel laureate Richard P. Feynman, was rather an ignoramus in philosophy. Feynman belonged to a generation of hyper-pragmatic American scientists, whose conduct went down in history with the expression “shut up and calculate!” (see e.g. Mermin 2004). However, in the course of some public lectures he gave in the 1960s, Feynman’s audience was granted the rare opportunity to hear the great physicist addressing the problem of the scientific method. It turns out that he also adheres to falsificationism:

[scientific] method is based on the principle that observation is the judge of whether something is so or not. [...] Observation is the ultimate and final judge of the truth of an idea. But “prove” used in this way really means “test,” [...] the idea really should be translated as, “The exception tests the rule.” Or, put another way, “The exception proves that the rule is wrong.” That is the principle of science. (Feynman 1998)

Coming to some leading contemporary figures in the field of foundations of quantum mechanics (FQM), David Deutsch has been a staunch Popperian since his student years. Deutsch maintains that

we do not read [scientific theories] in nature, nor does nature write them into us. They are guesses - bold conjectures. [...] However, that was not properly understood until [...] the work of the philosopher Karl Popper. (Deutsch 2011).

To conclude, we agree with Bohm when he states that “scientists generally apply the scientific method, more or less intuitively” (Bohm 1961). But we also maintain that since scientists are both the proposers and the referees of new theories, the form into which these theories are shaped is largely entailed by the method they (more or less consciously) apply. Methodology therefore turns into an active factor for the development of science. As we will show in the next section, the falsificationist methodology, widely adopted in modern physics, has opened new horizons for the foundations of physics.Footnote 8

4 A Criterion for Fundamentality

Provided with a working methodology, we can now propose a criterion to define what is fundamental. At a naive stage of observation, our intuitive experience leads to the conviction that concepts such as determinism, simultaneity, and realism are a priori assumptions of scientific investigation. It turns out, however, that there is in principle no reason to pre-assume anything of the kind. The process of reaching the foundations consists of: (i) turning those metaphysical concepts into scientific (i.e., falsifiable) statements, thus transferring them from the domain of philosophy to that of science (e.g., from locality and realism one deduces Bell’s inequalities); (ii) a pars destruens that aims at rejecting the metaphysical assumptions whose corresponding scientific statements have been tested and empirically falsified. Those rejected metaphysical assumptions are then to be considered “philosophical prejudices”.Footnote 9 Modern physics, with the revolutionary theories of quantum mechanics and relativity, has washed away some of them, and recent developments are ruling out more and more of these prejudices. Feyerabend’s words thus sound remarkable when he states that it

becomes clear that the discoveries of quantum theory look so surprising only because we were caught in the philosophical thesis of determinism [...]. What we often refer to as a “crisis in physics” is also a greater, and long overdue, crisis of certain philosophical prejudices. (Feyerabend 1954)

We are thus able to put to the test concepts that were classically considered not only part of the domain of philosophy (metaphysics) but even necessary a priori assumptions for science. Reaching the foundations of physics then means testing each of these concepts and removing the constraints built upon a prejudicial basis, pushing the frontier of the scientific domain up to the “actual” insurmountable constraints which demarcate the possible from the impossible (yet within the realm of science on an empirical basis, see Fig. 1). These actually fundamental constraints (FC) are thus trans-disciplinary and should be considered in every natural science. Yet, contrary to the physicalist program, the search for FC does not elevate one particular science to a leading, more fundamental position. Moreover, this criterion does not entail any pre-assumption of realism, but rather allows one to test, and possibly empirically falsify, certain forms of realism (see further).

Fig. 1 A sketch of fundamental research, here proposed as a series of successive experimental violations of the “philosophical prejudices” assumed by our “established theories”, towards the actual fundamental constraints (see main text). “Physical theories” here means “physically significant”, i.e. they carry an empirical content. “Formulable” theories are in general all the theories one can think of, and they are characterized solely by the formalism.

We would like to clearly point out that, although this criterion aims at removing metaphysical a priori assumptions (here called “philosophical prejudices”) by means of empirical refutations, it is not our aim (and it would not be possible) to remove philosophy from scientific theories. First of all, as explained above, methodological rules are necessarily non-scientific, and yet they are indispensable for science. Secondly, and this could admittedly be a problematic issue for our proposed criterion, there is always room for more than one interpretation of empirical evidence. In fact, the process of turning “philosophical prejudices” into a falsifiable statement usually introduces further independent metaphysical assumptions. The best that can be done within a theory is to minimize the number of independent “philosophical prejudices” from which the falsifiable statement can be deduced. If the falsification occurs, however, only the conjunction of such assumptions is refuted and there is, in general, no way to discriminate whether any of them is individually untenable. Nevertheless, other philosophical considerations can bridge this gap, giving compelling arguments for refuting one subset or another of the set of “philosophical prejudices” (see Sect. 4.1 for a concrete example on Bell’s inequality).

To overcome one possible further criticism of our view, we would like to clarify that not all the falsifiable statements of a scientific theory that have not yet been falsified are to be considered fundamental. Fundamental is a particular set of falsifiable statements that have been deduced from metaphysical assumptions, have been tested, and have resisted empirical falsification. Hence, the limitations imposed by no-go results are (provisionally) to be considered fundamental. On the same note, the postulates of scientific theories, to which one would intuitively like to attribute a fundamental status, deserve a separate discussion. A postulate of a scientific theory can be defined as an element of a minimal set of assumptions from which it is possible to derive the whole theory. Note that a postulate may not be directly falsifiable, but at least its conjunction with additional assumptions has to lead to falsifiable statements. Admittedly, one could object that if a postulate did not rest upon any “philosophical prejudices”, then it could not be fundamental according to our proposed criterion. However, we maintain that it is, in fact, always possible to identify one or more philosophical prejudices that underlie any statement and, in particular, any postulate. This has been discussed thoroughly in the literature; for instance, Northrop states:

Any theory of physics makes more physical and philosophical assumptions than the facts alone give or imply. [...] These assumptions, moreover, are philosophical in character. They may be ontological, i.e., referring to the subject matter of scientific knowledge which is independent of its relation to the perceiver; or they may be epistemological, i.e., referring to the relation of the scientist as experimenter and knower to the subject matter which he knows. (Northrop 1958)

Therefore, the postulates of the scientific theories that we consider fundamental (e.g., quantum physics or special relativity) are fundamental according to our criterion.Footnote 10

We can push our criterion of fundamentality even further and try to reach the most fundamental constraints, those that are not provisional because violating them would jeopardize the applicability of the scientific method. A clear example of this is the bound imposed by the finite speed of light. But why is it so special? Why should the impossibility of instantaneous signaling be considered a more fundamental (i.e. insurmountable) limitation of physics than the bound imposed by, say, determinism? Because the knowledge of the most fundamental bounds is also limited by our methodology. Since falsificationism requires some “cause-effect” relations to test theories meaningfully, instantaneous signaling would break this possibility and, along with it, any meaning of the current methodology.Footnote 11

This criterion also allows one to attempt a ranking of relative fundamentality between theories, which is an alternative to the physicalist approach in terms of building-block decomposition. We thus say that a theory \(T_1\) is more fundamental than a theory \(T_2\) when \(T_1\) includes \(T_2\) but extends beyond the boundaries of \(T_2\) (e.g. \(T_1 =\) quantum theory and \(T_2 =\) deterministic contextual theories; or local realistic theories). In this sense, quantum mechanics is more fundamental than classical mechanics. The reason for this, however, is not that we believe, as is usually assumed, that the equations of quantum mechanics can predict everything that classical mechanics can also predict (in fact, we cannot solve the Schrödinger equation for atoms with more than a few electrons). On the contrary, we call quantum physics more fundamental than classical physics because it violates certain no-go theorems (thus demolishing some “philosophical prejudices”), and yet it lies within the bounds of the empirically tested no-go theorems.

To sum up, we maintain that one of the aims of science is to approach the actual foundations (red edge in Fig. 1) through a discrete process of successive falsifications of the alleged a priori metaphysical assumptions, which have to be formulated in terms of scientific statements. If these are falsified, then the former can be dismissed as “philosophical prejudices”.

4.1 Foundations of Quantum Mechanics

A large part of the research on modern foundations of physics has developed along the directions that we have thus far described. This is the case of what are usually referred to as “no-go theorems”.Footnote 12 They require a falsifiable statement (e.g. in the form of an inequality) that is deductively inferred (i.e. formally derived) from a minimal set of assumptions, which are chosen to include the “philosophical prejudices” (in the sense expounded above) that one wants to test. A no-go theorem, therefore, allows one to formulate one or more of these “philosophical prejudices” in terms of a statement that can undergo an experimental test: what until then was believed to be a philosophical assumption suddenly enters the domain of science. If this statement is experimentally falsified, its falsity is logically transferred (modus tollens) to the conjunction of the assumptions, which thus becomes untenable. The no-go theorem is the statement of this untenability.
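The logical skeleton of a no-go theorem can be written schematically. In the sketch below, \(A_1, \dots, A_n\) stand for the assumptions and \(S\) for the falsifiable statement derived from them; these symbols are introduced here only for illustration and are not part of the original notation.

\[
(A_1 \wedge \dots \wedge A_n) \Rightarrow S, \qquad \neg S \quad \therefore \quad \neg (A_1 \wedge \dots \wedge A_n) \;\equiv\; \neg A_1 \vee \dots \vee \neg A_n .
\]

The final equivalence (De Morgan's law) makes explicit why a falsification refutes only the conjunction: at least one assumption must fail, but the experiment alone does not say which.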

But there is more to the epistemological power of “no-go theorems”: they can sometimes be formulated in such a way that they do not include any particular scientific theory in their assumptions (device-independent formulation). In this case, the no-go theorem assumes the form of a collection of measurements and relations between measurements (operational formulation), yet it holds independently of any specific experimental apparatus, its settings, or the chosen degrees of freedom to be measured. In practice, if a particular theory assumes one of the “philosophical prejudices” that have been falsified by a certain no-go theorem, then this theory needs to be revised (if not completely rejected) in the light of this evidence. Furthermore, the falsification of a no-go theorem rules out the related “philosophical prejudice” for every future scientifically significant theory.

We shall review some of the by now classical no-go theorems in quantum theory, in the spirit of the present paper. It is generally known that quantum mechanics (QM) provides only probabilistic predictions, that is, given a certain experiment with measurement choice x and a possible outcome a, quantum theory allows one to compute the probability p(a|x) of finding that outcome. Many eminent physicists (Einstein, Schrödinger, de Broglie, Bohm, Vigier, etc.) made great efforts to restore determinism and realism.Footnote 13 A way to achieve this is to assume the existence of underlying hidden variables (HV), \(\lambda\), not experimentally accessible (either in principle or provisionally), that if considered would restore determinism, i.e. \(p(a|x,\lambda )=0\) or 1.Footnote 14 In a celebrated work (Bohm 1952), Bohm proposed a fully developed model of QM in terms of HV. However, the HV program started encountering some limitations. To start with, Kochen and Specker (1967) assumed (1) a deterministic HV description of quantum mechanics and (2) that these HV are independent of the choice of the disposition of the measurement apparatus (context),Footnote 15 and showed that this leads to an inconsistency.Footnote 16 Thus if HV exist, they must depend on the context. John Bell, however, noticed that this is not so surprising since

there is no a priori reason to believe that the results [...] should be the same. The result of observation may reasonably depend not only on the state of the system (including hidden variables) but also on the complete disposition of apparatus. (Bell 1966, p. 9)

We must stress that this theorem rules out the conjunction of the assumptions only logically. It is only with an experimental violation of its falsifiable formulation, recently achieved (Lapkiewicz 2011), that non-contextuality is ruled out.

But it was with a seminal paper by Bell (1964) that one of the most momentous no-go theorems was put forward.Footnote 17 Consider two distant (even space-like separated) measurement stations A and B. Each of them receives a physical object (information carrier) and they are interested in measuring the correlations between the two information carriers that have interacted in the past. At station A (B) a measurement is performed with settings labeled by x (y), and the outcome by a (b). Since the stations are very far away and the local measurement settings are freely chosen, common sense (or a “philosophical prejudice”) would suggest that the joint probability of finding a and b given x and y is independent (i.e. factorizable). Nevertheless, in principle (i.e. without “prejudices”), the local measurement settings could somehow statistically influence outcomes of distant experiments, such that \(p(a, b|x,y) \ne p(a|x) p(b|y)\). It is important to notice that “the existence of such correlations is nothing mysterious. [...] These correlations may simply reveal some dependence relation between the two systems which was established when they interacted in the past” (Brunner 2014). This ‘common memory’ might be taken along by some hidden variables \(\lambda\) that, if considered, would restore the independence of probabilities. The joint probability then becomes:Footnote 18

\[ p(a,b|x,y) = \int \mathrm{d}\lambda \, q(\lambda ) \, p(a|x,\lambda ) \, p(b|y,\lambda ), \tag{1} \]

where \(q(\lambda )\) denotes the probability distribution of the hidden variables.

This condition is referred to as local realism (LR).Footnote 19

If we consider dichotomic measurement settings and outputs (i.e. x, \(y \in \{0,1\}\) and a, \(b \in \{-1, +1\}\)) and define the correlations as the averages of the products of outcomes given the choices of settings, i.e. \(\langle a_xb_y \rangle = \sum _{a, b } ab \ p(a,b|x,y)\), it is easy to prove that the condition (1) of LR leads to the following expression in terms of correlations:

\[ \langle a_0b_0 \rangle + \langle a_0b_1 \rangle + \langle a_1b_0 \rangle - \langle a_1b_1 \rangle \le 2. \tag{2} \]

This is an extraordinary result, known as Bell’s inequality (Bell 1964).Footnote 20 Indeed, a condition such as (1) gives a mathematical description of the profound metaphysical concepts related to locality and realism, whereas its derived form (2), transforms LR into an experimentally falsifiable statement in terms of actually measurable quantities (correlations). Indeed,
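The bound of 2 for local realistic models can be checked by brute force. The following Python sketch is not part of the original derivation: it assumes the standard CHSH form of the inequality, \(\langle a_0b_0 \rangle + \langle a_0b_1 \rangle + \langle a_1b_0 \rangle - \langle a_1b_1 \rangle \le 2\), and its function names are purely illustrative. It enumerates all 16 deterministic local strategies, in which a hidden variable fixes the outcomes \(a_x\) and \(b_y\) in advance. Since a local realistic model is a probabilistic mixture of such strategies, it cannot exceed the largest value found.

```python
from itertools import product

def chsh(a, b):
    """CHSH combination for predetermined outcomes a[x], b[y] in {-1, +1}."""
    return a[0] * b[0] + a[0] * b[1] + a[1] * b[0] - a[1] * b[1]

def local_deterministic_values():
    """CHSH value of each of the 16 deterministic local strategies."""
    values = []
    for a0, a1, b0, b1 in product((-1, 1), repeat=4):
        values.append(chsh((a0, a1), (b0, b1)))
    return values

values = local_deterministic_values()
max_local = max(abs(v) for v in values)  # largest magnitude any strategy reaches
print(sorted(set(values)), max_local)
```

Every strategy yields either -2 or +2, because \(a_0(b_0+b_1)\) and \(a_1(b_0-b_1)\) cannot both be non-zero when the outcomes are fixed in advance.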

the conjunction of all assumptions of Bell inequalities is not a philosophical statement, as it is testable both experimentally and logically [...]. Thus, Bell’s theorem removed the question of the possibility of local realistic description from the realm of philosophy. (Brukner and Zukowski 2012)

Since the 1980s, experiments of increasing ambition have tested local realism through Bell’s inequalities (Giustina 2015), and have empirically violated them. That is, LR has been falsified, which removes the possibility of scientific theories based on a local realistic description. It ought to be remarked that Bell’s no-go theorem relies on the additional tacit assumption of ‘freedom of choice’ (or ‘free will’): local settings are chosen freely and independently of each other. This is a clear example of the problematic issue previously stated, namely that the conjunction of these metaphysical assumptions (i.e., local realism and freedom of choice) is at stake, but the falsification of the statement deduced from them cannot discriminate which one should be dropped (or whether both are untenable). However, the rejection of the assumption of freedom of choice would mean that all of our experiments are in fact meaningless, because we would live within a ‘super-deterministic’ Universe, and the decision of rotating a knob or otherwise would be a mere illusion. Therefore, believing that we are granted the possibility of experimenting is a philosophical argument that makes us reject the denial of freedom of choice as a viable alternative. Hence, in our view, we are entitled to interpret the violation of Bell’s inequalities as a refutation of local realism.Footnote 21

Quantum mechanics is compatible with these experimental violations because its formalism yields a value that lies outside the bounds of local realism. Indeed, quantum mechanics allows the preparation of pairs of information carriers called entangled.Footnote 22 From elementary calculations (see e.g. Brunner 2014), it follows that using quantum entanglement, the relation between correlations as defined in (2) reaches a maximum value (Tsirelson’s bound) of

\[ S_{(Q)} = 2\sqrt{2}. \qquad (3) \]

This is the second crucial result of Bell’s theorem: quantum formalism imposes a new bound that lies outside the bounds of local realism. To date, the bound imposed by (3) has never been experimentally violated, and QM has survived experimental falsification. There is yet another condition that one might want to enforce, namely that the choice of measurement settings cannot directly influence the outcomes at the other stations (not in terms of correlations but actual information transfer). This is called the no-signaling (NS) condition and reads

\[ \sum _{b} p(a,b|x,y) = p(a|x), \qquad \sum _{a} p(a,b|x,y) = p(b|y). \qquad (4) \]

The NS constraint is where we set the FC. Indeed, a theory that violated this condition would allow instantaneous signaling and would thus mean a failure of the scientific method as we conceive it. It would be in principle not falsifiable (besides being incompatible with relativity theory).Footnote 23
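The first two bounds in this chain can be illustrated numerically. The following is a minimal Python sketch, assuming that (2) is the standard CHSH combination \(S = E(0,0)+E(0,1)+E(1,0)-E(1,1)\) of correlations (the sign convention, the function names and the measurement angles are illustrative assumptions, not taken from the text). It enumerates all deterministic local assignments of outcomes \(\pm 1\) and evaluates the same combination on the singlet state of footnote 22.

```python
import math
from itertools import product

def chsh(E):
    """CHSH combination S = E(0,0) + E(0,1) + E(1,0) - E(1,1) (assumed convention)."""
    return E(0, 0) + E(0, 1) + E(1, 0) - E(1, 1)

def local_deterministic_max():
    """Largest |S| over all deterministic local assignments of +/-1 outcomes."""
    best = 0
    for a0, a1, b0, b1 in product((-1, 1), repeat=4):
        a, b = (a0, a1), (b0, b1)
        best = max(best, abs(chsh(lambda x, y: a[x] * b[y])))
    return best

def spin_observable(theta):
    """cos(theta)*sigma_z + sin(theta)*sigma_x as a real 2x2 matrix."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]

def singlet_correlation(theta_a, theta_b):
    """Expectation value of A (x) B on the singlet state."""
    A, B = spin_observable(theta_a), spin_observable(theta_b)
    # Singlet amplitudes in the basis |00>, |01>, |10>, |11>
    psi = [0.0, 1 / math.sqrt(2), -1 / math.sqrt(2), 0.0]
    total = 0.0
    for i, j, k, l in product(range(2), repeat=4):
        # <ij| A (x) B |kl> = A[i][k] * B[j][l]
        total += psi[2 * i + j] * A[i][k] * B[j][l] * psi[2 * k + l]
    return total

# Illustrative measurement directions in the x-z plane
alice = (0.0, math.pi / 2)
bob = (math.pi / 4, -math.pi / 4)

s_lr = local_deterministic_max()
s_q = abs(chsh(lambda x, y: singlet_correlation(alice[x], bob[y])))
print(s_lr, s_q)
```

With these settings the deterministic strategies give \(|S| = 2\), while the singlet gives \(|S| = 2\sqrt{2}\). Probabilistic local strategies are convex mixtures of the deterministic ones, so they cannot exceed the deterministic maximum.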

Fig. 2 Relations between local realistic (\({\mathcal {LR}}\)), quantum (\({\mathcal {Q}}\)) and no-signaling (\({\mathcal {NS}}\)) theories in a probability space. Although here the geometry is also explicit (see footnote 24), the strict inclusion \({\mathcal {LR}} \subset {\mathcal {Q}} \subset {\mathcal {NS}} \subset\) “formulable” theories represents the series of bounds abstractly sketched in Fig. 1

It is possible to show that LR correlations are a proper subset of quantum correlations and that both are strictly included in the NS set of theories (see Fig. 2).Footnote 24 To summarise, local realistic theories have been falsified, and we have a theory, QM, which lies outside their borders. However, it is not the most fundamental theory we can think of, since there is potentially room for theories that violate the bounds imposed by QM and still lie in the domain of “physically significant” theories (i.e. within the NS bound).
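That the NS set is strictly larger than the quantum one can be made concrete with the Popescu and Rohrlich (1994) correlations. The sketch below is a hedged illustration: it assumes binary inputs and outputs, the usual form of these correlations (outcomes satisfying \(a \oplus b = x \cdot y\) with uniform marginals) and the CHSH sign convention \(S = E(0,0)+E(0,1)+E(1,0)-E(1,1)\). The function names and the toy `signaling_box` are hypothetical, introduced only for contrast.

```python
from itertools import product

def pr_box(a, b, x, y):
    """Popescu-Rohrlich correlations: a XOR b = x AND y, with uniform marginals."""
    return 0.5 if (a ^ b) == (x & y) else 0.0

def signaling_box(a, b, x, y):
    """Toy counter-example (hypothetical): Bob's outcome copies Alice's setting."""
    return 1.0 if (a == 0 and b == x) else 0.0

def is_no_signaling(p, tol=1e-12):
    """Check that each party's marginal does not depend on the other's setting."""
    for a, x in product(range(2), repeat=2):
        marginal = [sum(p(a, b, x, y) for b in range(2)) for y in range(2)]
        if abs(marginal[0] - marginal[1]) > tol:
            return False
    for b, y in product(range(2), repeat=2):
        marginal = [sum(p(a, b, x, y) for a in range(2)) for x in range(2)]
        if abs(marginal[0] - marginal[1]) > tol:
            return False
    return True

def correlator(p, x, y):
    """E(x, y) with outcomes 0/1 mapped to +1/-1."""
    return sum((-1) ** (a + b) * p(a, b, x, y)
               for a, b in product(range(2), repeat=2))

def chsh(p):
    """CHSH combination of the four correlators (assumed sign convention)."""
    return (correlator(p, 0, 0) + correlator(p, 0, 1)
            + correlator(p, 1, 0) - correlator(p, 1, 1))

print(is_no_signaling(pr_box), chsh(pr_box))
print(is_no_signaling(signaling_box))
```

The first box respects NS and reaches the logical maximum \(S = 4\), above Tsirelson's bound; the second fails the NS check because Bob's marginal depends on Alice's setting.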

In the literature of modern FQM, there are plenty of other no-go theorems that quantify the discrepancy between “classical” and quantum physics, ruling out “philosophical prejudices” other than the mentioned contextuality and local realism. For instance, Del Santo and Dakić (2017) recently proposed a new no-go theorem for information transmission. Consider a scenario in which information should be transmitted between two parties, A and B, in a time window \(\tau\) that allows a single information carrier to travel only once from one party to the other (“one-way” communication). At time \(t=0\), A and B are given inputs x and y and at \(t=\tau\) they reveal outputs a and b. The joint probability results in a classical mixture of one-way communications:

\[ p(a,b|x,y) = \lambda \, p_{A\prec B}(a,b|x,y) + (1-\lambda )\, p_{B\prec A}(a,b|x,y), \qquad 0 \le \lambda \le 1, \qquad (5) \]

where the symbol \(\prec\) denotes the direction of communication, e.g. \(A\prec B\) means that A sends the information carrier to B. This distribution leads to a Bell-like inequality that, in the case of \(x,y,a,b=0,1\), reads:

\[ p(a=y,b=x) \le \frac{1}{2}. \qquad (6) \]

Del Santo and Dakić (2017) show that an information carrier in quantum superposition between A and B surpasses this bound, and leads to \(p(a=y,b=x)= 1\). This bound, logically violated by quantum formalism, has also been experimentally falsified (Massa et al. 2019), which results in a violation of “classical” one-way communication.
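The classical side of this argument can be checked by brute force. The sketch below is an illustration under stated assumptions: binary inputs drawn uniformly at random, and a carrier that may encode everything A knows (her input x). It enumerates every deterministic strategy for the direction \(A\prec B\); the direction \(B\prec A\) follows by symmetry, and classical mixtures of the two directions cannot do better than the best deterministic strategy. The function names are hypothetical.

```python
from itertools import product

def success_probability(g, f):
    """p(a=y, b=x) for uniform binary inputs when A sends the carrier to B."""
    wins = 0
    for x, y in product(range(2), repeat=2):
        a = g[x]        # A never learns y, so her output depends on x only
        b = f[(y, x)]   # B may use his input y and whatever A encoded about x
        wins += (a == y and b == x)
    return wins / 4

def best_one_way():
    """Maximum success probability over all deterministic one-way strategies."""
    best = 0.0
    for g in product(range(2), repeat=2):          # all maps x -> a
        for f_values in product(range(2), repeat=4):
            # all maps (y, x) -> b
            f = dict(zip(product(range(2), repeat=2), f_values))
            best = max(best, success_probability(g, f))
    return best

print(best_one_way())
```

The maximum is 1/2: B can always output \(b=x\), but for each x exactly one value of y matches A's output, so A succeeds in only half of the cases.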

4.2 “Foundations” of Biophysics

The process of reaching foundations here proposed can also be pursued in branches of physics considered more “complex” (i.e. the opposite of fundamental in the reductionist view), like the physics of biological systems.

Proteins are a class of polymers involved in most of the natural processes at the basis of life. The 3D structure of each protein, whose precision is essential for its functioning, is uniquely encoded in a 1D sequence of building blocks (the 20 amino acids) along a polymer chain. This process of encoding is usually referred to as design. The huge variability of all existing natural proteins originates solely from different sequences of the same set of 20 building blocks. Proteins are very complex systems, and understanding and predicting the mechanism behind their folding, that is, the process by which they reach their target 3D structure, is still one of the biggest challenges in science. Until now, no other natural or artificial polymer is known to be designable and to fold with the same precision and variability as proteins.

A more fundamental approach to understanding proteins, one that can possibly go beyond the observed natural processes, is to ask a different question: are proteins really such unique polymers? In other words: is the specific spatial arrangement of the atoms in amino acids the only possible realization to obtain design and folding?

According to the mean field theory of protein design (Pande et al. 2000), given an alphabet of building blocks of size q, a system is designable when

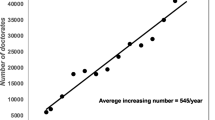

where M is the number of structures that the chain can access, divided by the number of monomers along the chain.Footnote 25 For instance, a simple beads and springs polymer will have a certain number of possible structures \({\tilde{M}}\), defined only by the excluded volume of the beads. If one adds features to the monomers (e.g. directional interactions), this can result in a smaller number of energetically/geometrically accessible structures for the polymer, \(M<{\tilde{M}}\). With fewer accessible structures, it will be easier for the sequence to select a specific target structure, rather than an ensemble of degenerate structures. Hence, to make a general polymer designable (from Eq. (7)) one can follow two strategies: (i) increasing the alphabet size q, and (ii) reducing the number of accessible structures per monomer M. In the works (Coluzza 2013) and (Cardelli 2017) the authors show, by means of computational polymer models, that one can indeed reduce the number of accessible structures by simply introducing a few directional interactions to a simple bead-spring polymer. This approach is already enough to reduce the number of accessible structures M to obtain designability.Footnote 26 The resulting polymers (bionic proteins) can have different numbers and geometries of those directional interactions and, despite not having the geometrical arrangement of amino acids at all, are designable and able to fold precisely into a specific unique target structure, with the same precision as proteins. These bionic proteins are also in principle experimentally realizable on different length scales. Introducing directional interactions is not the only approach to reduce the number of accessible configurations; therefore, there can be other examples of polymers that are designable and fold into a target structure.
This view teaches us to look beyond the prejudice that the particularism of the amino acids is the only way to achieve design and folding, and to search for the functioning of proteins in more fundamental principles that can be applied to a wider range of possible folding polymers (Fig. 3).
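The two strategies can be made concrete with a small numerical sketch. It rests on an assumption made here for illustration only: that inequality (7) takes the form \(q > M\), which is consistent with the two strategies listed above, with \(M=\exp (\omega )\) as in footnote 25. The function names and the values of \(\omega\) used below are hypothetical and are not taken from the cited works.

```python
import math

def accessible_structures_per_monomer(omega):
    """M = exp(omega), with omega the configurational entropy per monomer."""
    return math.exp(omega)

def is_designable(q, omega):
    """Assumed form of the criterion: alphabet size q must exceed M."""
    return q > accessible_structures_per_monomer(omega)

def min_alphabet_size(omega):
    """Smallest integer alphabet size satisfying q > exp(omega)."""
    return math.floor(accessible_structures_per_monomer(omega)) + 1

# Strategy (i): increase q at fixed omega (hypothetical value omega = 2.0)
print(is_designable(3, 2.0), is_designable(20, 2.0))

# Strategy (ii): reduce omega (fewer accessible structures) at fixed q = 3
print(is_designable(3, 2.0), is_designable(3, 1.0))
print(min_alphabet_size(1.0))
```

Under this assumed form, lowering \(\omega\) (for instance through directional interactions) relaxes the requirement on the alphabet size, which is the trade-off between strategies (i) and (ii).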

Fig. 3 Relations between polymers at a fixed \(q=20\): natural proteins, “bionic” proteins (i.e. all polymers that are designable to fold into a specific target structure) and all “formulable” polymers. The boundary between “bionic” proteins and non-folding polymers is the inequality (7) (see main text)

5 Conclusions

We have proposed a criterion for fundamentality as a dynamical process that aims at removing “philosophical prejudices” by means of empirical falsification. What is fundamental in our theories are their limits, and these can be discovered by performing experiments and interpreting their results. This search, however, tends to an end, given by the FC (which are the most general, physically significant constraints under a certain methodology).

Notes

A more realist-inclined thinker would rather prefer foundations of “nature”. We will discuss the problem of realism in what follows, showing that it is an unnecessary concept for our discussion on fundamentality.

Philosophy of science discerns between at least three forms of reductionism: theoretical, methodological and ontological. The distinction, although essential, turns out to be quite technical, and it goes beyond the scope of the present essay to expound it. In what follows, we will consider reductionism mainly in the classical sense of microphysicalism (i.e. a mixture of theoretical and ontological reduction). In his book, Hüttemann (2004) explores the meaning and limitations of microphysicalism, advocating a view in which “neither the parts nor the whole have ‘ontological priority’”.

Although the problem of realism is indeed one of the most important in philosophy of science and surely deserves a great deal of attention, it is desirable that this problem finds its solution within the domain of science (this will be discussed in Sect. 4.1).

The concept of the impossibility in principle to reduce all events to the underlying physics is not new in philosophy of science, and it usually goes under the name of multiple-realizability. To this extent, it is worth mentioning the influential work of the Nobel Laureate in Physics, Anderson (1972).

In particular, Popper’s criticisms were leveled against the logical positivism of the Vienna Circle. He indeed came back to Hume’s problem of induction; Hume maintained that there is, in fact, no logically consistent way to generalize a finite (though arbitrarily large) number of single empirical confirmations to a universal statement (as a scientific law is intended to be). Popper embraced this position, but he proposed a new solution to the problem of induction and demarcation (see further).

We have preferred here to change the original quotation from “philosophical” to “non-scientific” inasmuch as we do not limit the extent of philosophical to be considered antithetical to scientific statements.

It is well known that Popper’s methodology has today hardly any supporters among philosophers of science, who have severely criticized it as too strict and naive a description of scientific development. Moreover, falsificationism has for Popper a normative value, i.e. it is seen as the most rational, and therefore the best, possible methodology. Among Popper’s foremost critics and commentators, we ought to mention Imre Lakatos, who developed a weaker and more sophisticated form of falsificationism, which encompasses part of Thomas Kuhn’s critiques.

We must stress that falsificationism is not always considered as the driving scientific method, especially in scientific disciplines other than physics. For instance, Roald Hoffmann, chemist and philosopher of chemistry, claims that falsification is much less relevant to chemistry than to physics, especially when chemists synthesize molecules: “Synthesis is a creative activity, and while every synthesis implicitly and trivially tries to falsify some deep-seated fundamental law, the science and art of synthesis as a whole does not explicitly and non-trivially try to falsify any particular theory. That does not mean that falsification is absent or untrue, it just means that it is rather irrelevant in this field” (Kovac and Weisberg 2011).

Notice that the expression “philosophical prejudices” is borrowed from Feyerabend (1954) (see main text). However, we would like to stress that it would perhaps be more correct to call these “metaphysical assumptions”.

Consider the example of one of the postulates of quantum mechanics which states: “To any physically measurable quantity of physics is associated a Hermitian operator”. However technical and abstract this postulate may seem, it ensures that observable quantities take values in the domain of real numbers. Thus, if by any experimental procedure it were possible to “observe” a complex number as the outcome of a physical experiment, this would mean a violation of the philosophical prejudice of “reality of the physical observables”. Since this postulate is based on a clear philosophical assumption, and since the falsifiable statements that it implies have hitherto not been falsified, it clearly is fundamental according to our proposed criterion.

Recent lines of research show that quantum mechanics may not present a definite causal order (Oreshkov et al. 2012). However, these new possibilities do not undermine testable cause-effect relations, and thus do not clash with falsifiability.

Historically, no-go theorems are associated with the conditions of compatibility with quantum formalism. Here, however, we consider them in a more general context. They are regarded as decidable statements that discriminate between any two classes of theories, possibly beyond QM (see further).

Realism is a vague concept, but it can be summarised as the idea that measurements reveal pre-existing properties of the system. However, in the context of no-go theorems, this should be formally defined from time to time.

This is more of a historical motivation than an actual program. Hidden variables, in fact, do not necessarily have to be deterministic. What really matters for HV theories is that there exists a complete state encompassing the “real state of affairs”, i.e. the values of all the physical properties independently of any measurements. Strict determinism was also given up by Bohm himself.

In principle, and classical physics assumes this, one can use whatever practical method to measure a certain physical quantity and always find the same value (within experimental uncertainty).

It is noteworthy that the original proof by Kochen and Specker involved an at least three-dimensional Hilbert space and 117 directions of projection. This can be seen as rather unsimple and inelegant, as a practical example against conventionalist arguments.

\(\lambda\) can, in general, be governed by a probability distribution and be a continuous variable over a domain \(\Lambda\), as considered below. The final probability p(a, b|x, y) should eventually not explicitly depend on \(\lambda\), which should be averaged out.

The name comes from the fact that decomposition (1) was derived under the mere assumption of having some real quantities \(\lambda\) that factorize the joint probability distribution into local operations only. Notice, however, that LR is here a compound condition, given by the mathematical expression (1), and cannot be formally separated into two distinct conditions as some authors try to do (e.g. Brunner 2014) to justify the “non-local” nature of QM (see further).

In fact, this is the simplest non-trivial Bell’s inequality, usually referred to as the Clauser–Horne–Shimony–Holt (CHSH) inequality. Bell’s inequalities can be generalized to an arbitrary number of settings and outcomes, and to many parties.

Some scholars maintain that Bell’s theorem requires the further implicit assumption that quantum laws are valid also in those physical situations in which quantum mechanics forbids that a measurement context exists to test their validity (Garola and Persano 2014). If this were the case, a further ambiguity in the “philosophical prejudice” to be refuted would again arise. We are thankful to one of the reviewers for providing this reference.

Formally, quantum mechanics postulates that systems are described by vectors \(|\psi \rangle\) living in complex Hilbert spaces, \({\mathcal {H}}\). The joint state of two systems A and B lives in the tensor product of the spaces of the two systems, i.e. \({\mathcal {H}}_{AB}= {\mathcal {H}}_{A} \otimes {\mathcal {H}}_{B}\). A pure state \(|\psi \rangle _{AB} \in {\mathcal {H}}_{AB}\) is then defined to be separable if \(|\psi \rangle _{AB}= |\psi \rangle _{A} \otimes |\psi \rangle _{B}\). Otherwise, it is entangled. A maximally entangled state (for two two-level systems) is for instance the singlet state: \(| \psi ^- \rangle = \frac{1}{\sqrt{2}}\left( |0\rangle _A \otimes |1\rangle _B - |1\rangle _A \otimes |0\rangle _B \right)\). The states \(|0\rangle\) and \(|1\rangle\) are the eigenstates of the standard Pauli z-matrix \(\sigma _z\) associated with the eigenvalues +1 and -1, respectively.

Concerning quantum and no-signaling correlations, it is possible to show that the quantity S of the correlations defined in (2) reaches a maximal logical bound \(S_{(NS)}= 4\). Popescu and Rohrlich (1994) explicitly formulated a set of correlations that reach this logical bound, but that respect NS (i.e. they are physically significant).

In general, for every number of values that x, y, a, b can take, it is possible to prove that in the space of all the possible probabilities p(ab|xy), the LR condition (1) forms a polytope whose vertices are the deterministic correlations (\({\mathcal {D}}\)), and whose facets (the edges in the 2-d representation of Fig. 2) are the Bell’s inequalities (Minkowski’s theorem ensures that a polytope can always be represented as the intersection of finitely many half-spaces). NS correlations form a polytope too, whereas the quantum correlations form a convex, closed and bounded set that has no facets.

M is \(\exp (\omega )\), where \(\omega\) is the so-called configurational entropy per monomer.

Designability was obtained for an alphabet size of \(q= 20\), as in proteins, and for smaller alphabets down to \(q =3\).

References

Anderson, P. W. (1972). More is different. Science, 177(4047), 393–396.

Baracca, A., Bergia, S., & Del Santo, F. (2016). The origins of the research on the foundations of quantum mechanics (and other critical activities) in Italy during the 1970s. Studies in History and Philosophy of Modern Physics, 57, 66–79.

Barbour, J. (2015). Reductionist doubts. In A. Aguirre, B. Foster, & Z. Merali (Eds.), Questioning the foundations of physics. Cham: Springer.

Bell, J. S. (1964). On the Einstein–Podolsky–Rosen paradox. Physics, 1(3), 195–200.

Bell, J. S. (1966). On the problem of hidden variables in quantum mechanics. In J. S. Bell (Ed.), Speakable and unspeakable in quantum mechanics (2nd ed.). Cambridge: Cambridge University Press.

Bohm, D. (1952). A suggested interpretation of the quantum theory in terms of ‘hidden’ variables. Physical Review, 85(2), 166.

Bohm, D. (1961). On the relationship between methodology in scientific research and the content of scientific knowledge. British Journal for the Philosophy of Science, 12(46), 103–116.

Brukner, Č., & Zukowski, M. (2012). Bell’s inequalities foundations and quantum communication. In G. Rozenberg, T. Bäck, & J. N. Kok (Eds.), Handbook of natural computing (pp. 1413–1450). Berlin: Springer.

Brunner, N., et al. (2014). Bell nonlocality. Reviews of Modern Physics, 86(2), 419.

Cardelli, C., et al. (2017). The role of directional interactions in the designability of generalized heteropolymers. Scientific Reports, 7(1), 4986.

Coluzza, I., et al. (2013). Design and folding of colloidal patchy polymers. Soft Matter, 9(3), 938–944.

Del Santo, F., & Dakić, B. (2017). Two-way communication with a single quantum particle. Physical Review Letters, 120(6), 060503.

Deutsch, D. (2011). The beginning of infinity. New York: Viking Penguin.

Feyerabend, P. K. (1954). Determinismus und Quantenmechanik. Wiener Zeitschrift für Philosophie, Psychologie, Pädagogik, 5, 89–111. (English translation by J. R. Wettersten, J. L. Heilbron and C. Luna).

Feynman, R. P. (1963). The Feynman lectures on physics (Vol. 1). Reading: Addison-Wesley Pub. Co.

Feynman, R. P. (1998). The meaning of it all: Thoughts of a citizen scientist. New York: Perseus.

Garola, C., & Persano, M. (2014). Embedding quantum mechanics into a broader noncontextual theory. Foundations of Science, 19(3), 217–239.

Giustina, M., et al. (2015). Significant-loophole-free test of Bell’s theorem with entangled photons. Physical Review Letters, 115(25), 250401.

Hüttemann, A. (2004). What’s wrong with microphysicalism?. London: Routledge.

Jammer, M. (1991). Sir Karl Popper and his philosophy of physics. Foundations of Physics, 21(12), 1357–1368.

Kim, J. (2006). Being realistic about emergence. In P. Clayton & P. Davies (Eds.), The re-emergence of emergence (pp. 189–202). Oxford: Oxford University Press.

Kochen, S., & Specker, E. P. (1967). The problem of hidden variables in quantum mechanics. Journal of Mathematics and Mechanics, 17, 59–87.

Kovac, J., & Weisberg, M. (Eds.). (2011). Roald Hoffmann on the philosophy, art, and science of chemistry. Oxford: Oxford University Press.

Kragh, H. (2013). The most philosophically important of all the sciences: Karl Popper and physical cosmology. Perspectives on Science, 21(3).

Lapkiewicz, R., et al. (2011). Experimental non-classicality of an indivisible quantum system. Nature, 474(7352), 490–493.

Linnemann, N. S., & Visser, M. N. (2017). Hints towards the emergent nature of gravity. Arxiv preprint. arXiv:1711.10503.

Massa, F., Moqanaki, A., Baumeler, Ä., Del Santo, F., Kettlewell, J. A., Dakić, B., & Walther, P. (2019). Experimental two-way communication with one photon. Advanced Quantum Technologies. https://doi.org/10.1002/qute.201900050.

Medawar, P. B. (1990). The threat and the glory. Oxford: Oxford University Press.

Mermin, N. D. (2004). Could Feynman have said this? Physics Today, 57(5), 10–12.

Northrop, F. S. C. (1958). Introduction. In W. Heisenberg (Ed.), Physics and philosophy: The revolution in modern science. World perspectives. New York: Harper & Row.

Oreshkov, O., Costa, F., & Brukner, Č. (2012). Quantum correlations with no causal order. Nature Communications, 3, 1092.

Pande, V. S., Grosberg, A. Y., & Tanaka, T. (2000). Heteropolymer freezing and design: Towards physical models of protein folding. Reviews of Modern Physics, 72(1), 259.

Pietschmann, H. (1978). The rules of scientific discovery demonstrated from examples of the physics of elementary particles. Foundations of Physics, 8(11/12), 905–919.

Popescu, S., & Rohrlich, D. (1994). Quantum nonlocality as an axiom. Foundations of Physics, 24(3), 379–385.

Popper, K. R. (1959). The logic of scientific discovery. New York: Basic Books.

van Riel, R., & Van Gulick, R. (2016). Scientific reduction. In E. N. Zalta (Ed.), The Stanford encyclopedia of philosophy (Winter 2016 ed.). Stanford: Stanford University.

Acknowledgements

Open access funding provided by University of Vienna. F.D.S. acknowledges the support from a DOC Fellowship of the Austrian Academy of Sciences. The authors also thank David Deutsch for kind comments, and David Miller and Časlav Brukner for interesting discussions.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Del Santo, F., Cardelli, C. Demolishing Prejudices to Get to the Foundations: A Criterion of Demarcation for Fundamentality. Found Sci 25, 827–843 (2020). https://doi.org/10.1007/s10699-019-09629-0

DOI: https://doi.org/10.1007/s10699-019-09629-0