Abstract

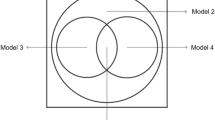

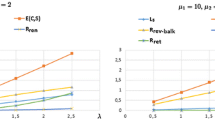

We investigate a system with two types of customers in which each customer type has a dedicated server. When the corresponding dedicated server is busy, we allow customers to overflow to the non-dedicated server, and the service time at each server is customer-dependent. The objective of this study is to assess the negative consequences of overflow. On the basis of the analytical stationary distribution of the proposed two-server model, we first identify the conditions under which overflow improves throughput. Second, we obtain customers’ overflow rates and ratios. For a symmetric system under heavy traffic, the wrong assignment ratio approaches 50%. Third, we analyze the probability that a customer is served by a non-dedicated server. The probability that both servers are serving non-dedicated customers approaches 25% in a symmetric system under heavy traffic. Finally, we determine various overflow conditions that take overflow costs into account.



Acknowledgements

Yanting Chen acknowledges support through the NSFC grant 71701066 and the USST Business School 2021 Starting Grant KYQD202101. Jingui Xie acknowledges support through the NSFC grant 72122019.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices


A Proof of Theorem 1

Proof

The balance equations for X(t) are

The normalization requirement for the probability measure is \(\sum _{i = 0}^{2} \sum _{j = 0}^{2} \pi (i,j) = 1\). It can be readily verified that Equations (1)-(9) satisfy the balance equations and the normalization requirement, which completes the proof. \(\square\)
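The balance equations are not reproduced above. As a numerical cross-check, the sketch below builds the nine-state generator and solves \(\pi Q = 0\) together with the normalization requirement. It relies on our own reading of the model, which the text here does not spell out. We assume that state \((i,j)\) records the type of customer at server 1 and at server 2, with 0 meaning idle. An arriving type-\(c\) customer takes its dedicated server if that server is idle, otherwise overflows to the other server if it is idle, and is lost otherwise. A type-\(c\) customer is served at rate \(\mu _c\) on either server, so the parameter \(\alpha\) is not modelled. All function names are hypothetical.

```python
from itertools import product


def gauss_solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                for c in range(col, n + 1):
                    M[r][c] -= f * M[col][c]
    return [M[i][n] / M[i][i] for i in range(n)]


def stationary_distribution(lam, mu):
    """Stationary distribution of the assumed two-server overflow loss model.

    State (i, j): type of the customer at server 1 and at server 2 (0 = idle).
    Assumption: a type-c customer is served at rate mu[c - 1] on either server.
    """
    states = list(product(range(3), repeat=2))
    index = {s: k for k, s in enumerate(states)}
    n = len(states)
    Q = [[0.0] * n for _ in range(n)]

    def add(s, t, rate):
        Q[index[s]][index[t]] += rate
        Q[index[s]][index[s]] -= rate

    for s in states:
        for c in (1, 2):
            own, other = c - 1, 2 - c  # dedicated and non-dedicated server (0-based)
            for k in (own, other):     # dedicated server first, then overflow
                if s[k] == 0:
                    t = list(s)
                    t[k] = c
                    add(s, tuple(t), lam[c - 1])
                    break              # if both servers are busy, the customer is lost
        for k in (0, 1):               # service completion at server k
            if s[k] != 0:
                t = list(s)
                t[k] = 0
                add(s, tuple(t), mu[s[k] - 1])

    # pi Q = 0 is Q^T pi = 0; replace the last equation by sum(pi) = 1.
    A = [[Q[j][i] for j in range(n)] for i in range(n)]
    A[-1] = [1.0] * n
    b = [0.0] * (n - 1) + [1.0]
    x = gauss_solve(A, b)
    return {s: x[index[s]] for s in states}


lam, mu = (1.0, 2.0), (1.0, 1.0)
pi = stationary_distribution(lam, mu)
rho_bar = lam[0] / mu[0] + lam[1] / mu[1]
p_loss = sum(p for (i, j), p in pi.items() if i and j)  # both servers busy (PASTA)
erlang_b = rho_bar**2 / (rho_bar**2 + 2 * (rho_bar + 1))
print(p_loss, erlang_b)
```

Under these assumptions, the probability that both servers are busy should agree with the two-server loss expression for \(P_{loss}\) used in the proof of Proposition 1.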

B Proof of Proposition 1

Proof

Note that \(P_{loss}<\min \left\{ \frac{\rho _1}{1+\rho _1}, \frac{\rho _2}{1+\rho _2}\right\}\) holds iff \(\frac{(\rho _1+\rho _2)^2}{(\rho _1+\rho _2)^2+2(\rho _1+\rho _2+1)}<\frac{\rho _1}{1+\rho _1}\) and \(\frac{(\rho _1+\rho _2)^2}{(\rho _1+\rho _2)^2+2(\rho _1+\rho _2+1)}<\frac{\rho _2}{1+\rho _2}\). Using the non-negativity of \(\rho _1\) and \(\rho _2\), these two inequalities simplify to \(\rho _2^2<\rho _1^2+2\rho _1\) and \(\rho _1^2<\rho _2^2+2\rho _2\), respectively. Let \(\rho _{\max }=\max \{\rho _1,\rho _2\}\) and \(\rho _{\min }=\min \{\rho _1,\rho _2\}\). Because \(\rho _{\min }^2\le \rho _{\max }^2\), the inequality with \(\rho _{\max }\) on its left-hand side is the binding one. It forces \(\rho _{\min }>0\), and hence \(\rho _{\max }>0\), and it then implies the other inequality. Therefore, the two inequalities together are equivalent to \(\rho _{\max }<\sqrt{\rho _{\min }^2+2\rho _{\min }}\). This completes the proof. \(\square\)
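The equivalence can also be checked numerically on a grid. The sketch below uses the expression for \(P_{loss}\) from the proof and compares the absolute loss reduction condition with the closed-form condition \(\rho _{\max }<\sqrt{\rho _{\min }^2+2\rho _{\min }}\). Points lying exactly on the boundary are skipped, because floating-point rounding decides them. The function names are our own.

```python
import math


def p_loss(rho1, rho2):
    """Loss probability with overflow, as used in the proof."""
    s = rho1 + rho2
    return s * s / (s * s + 2 * (s + 1))


def absolute_reduction(rho1, rho2):
    """Overflow loss probability below both single-server values rho_i / (1 + rho_i)."""
    return p_loss(rho1, rho2) < min(rho1 / (1 + rho1), rho2 / (1 + rho2))


def closed_form(rho1, rho2):
    """rho_max < sqrt(rho_min^2 + 2 rho_min)."""
    lo, hi = min(rho1, rho2), max(rho1, rho2)
    return hi < math.sqrt(lo * lo + 2 * lo)


def mismatches(grid):
    bad = []
    for r1 in grid:
        for r2 in grid:
            lo, hi = min(r1, r2), max(r1, r2)
            if abs(hi * hi - (lo * lo + 2 * lo)) < 1e-9:
                continue  # exactly on the boundary; rounding decides there
            if absolute_reduction(r1, r2) != closed_form(r1, r2):
                bad.append((r1, r2))
    return bad


grid = [k / 10 for k in range(1, 51)]
print(len(mismatches(grid)))
```

A count of zero means that the two conditions agree at every grid point off the boundary.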

C Proof of Corollary 1

Proof

For the first statement, substituting the expression for \(P_{loss}\) into the relative loss reduction requirement yields \((\lambda _1 + \lambda _2) \frac{(\rho _1 +\rho _2)^2}{(\rho _1 + \rho _2)^2 + 2(\rho _1 + \rho _2 + 1)} < \lambda _1 \frac{\rho _1}{1+\rho _1} +\lambda _2 \frac{\rho _2}{1+\rho _2}\). When the occupation rates are the same, i.e., \(\rho _1 = \rho _2 = \rho\), this inequality becomes \((\lambda _1 + \lambda _2) \frac{4 \rho ^2}{4 \rho ^2 + 2(2 \rho + 1)} < (\lambda _1 + \lambda _2) \frac{\rho }{1 + \rho }\). Simplifying this inequality leads to \(4 \rho ^2 (1 + \rho ) < 4 \rho ^3 + 2 \rho (2 \rho + 1)\). This reduces to \(0 < 2\rho\) and therefore holds for all \(\rho > 0\). Hence, the relative loss reduction requirement always holds when the occupation rates \(\rho _1\) and \(\rho _2\) are the same.

For the second statement, when the arrival rates are the same, i.e., \(\lambda _1 = \lambda _2\), the relative loss reduction requirement becomes \(2 P_{loss} < \frac{\rho _1}{1 + \rho _1} + \frac{\rho _2}{1 + \rho _2}\). Substituting the expression for \(P_{loss}\) into this inequality, we obtain \(2 \frac{(\rho _1 +\rho _2)^2}{(\rho _1 + \rho _2)^2 + 2(\rho _1 + \rho _2 + 1)} < \frac{\rho _1}{1 + \rho _1} + \frac{\rho _2}{1 + \rho _2}\). After clearing the positive denominators, this inequality simplifies to \(\rho _1^3 + \rho _2^3 < \rho _1^2 \rho _2 + \rho _2^2 \rho _1 + 4 \rho _1 \rho _2 + 2 \rho _1 + 2 \rho _2\). Every step of this derivation is an equivalence, so the converse also holds. Hence, when \(\rho _1^3 + \rho _2^3 < \rho _1^2 \rho _2 + \rho _2^2 \rho _1 + 4 \rho _1 \rho _2 + 2 \rho _1 + 2 \rho _2\) and \(\lambda _1 = \lambda _2\), the relative loss reduction requirement holds.
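A grid check for the second statement is sketched below, assuming \(\lambda _1=\lambda _2\). The common arrival rate cancels, so the sketch uses 1. It compares the relative loss reduction requirement with the sign of the cubic condition. This is a sanity check rather than a proof, and the function names are hypothetical.

```python
def p_loss(rho1, rho2):
    s = rho1 + rho2
    return s * s / (s * s + 2 * (s + 1))


def relative_reduction(lam1, lam2, rho1, rho2):
    """(lam1 + lam2) P_loss < lam1 rho1/(1+rho1) + lam2 rho2/(1+rho2)."""
    return (lam1 + lam2) * p_loss(rho1, rho2) < lam1 * rho1 / (1 + rho1) + lam2 * rho2 / (1 + rho2)


def cubic_margin(rho1, rho2):
    """Right-hand side minus left-hand side of the simplified cubic condition."""
    rhs = rho1**2 * rho2 + rho2**2 * rho1 + 4 * rho1 * rho2 + 2 * rho1 + 2 * rho2
    return rhs - (rho1**3 + rho2**3)


grid = [k / 10 for k in range(1, 61)]
agree = all(
    relative_reduction(1.0, 1.0, r1, r2) == (cubic_margin(r1, r2) > 0)
    for r1 in grid
    for r2 in grid
    if abs(cubic_margin(r1, r2)) > 1e-9  # skip points on the boundary
)
print(agree)
```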

For the third statement, substituting the expression for \(P_{loss}\) into the relative loss reduction requirement yields \((\lambda _1 + \lambda _2) \frac{(\rho _1 +\rho _2)^2}{(\rho _1 + \rho _2)^2 + 2(\rho _1 + \rho _2 + 1)} < \lambda _1 \frac{\rho _1}{1+\rho _1} +\lambda _2 \frac{\rho _2}{1+\rho _2}\). When the service rates are the same, i.e., \(\mu _1=\mu _2=\mu\) so that \(\rho _i=\lambda _i/\mu\), this inequality becomes \((\lambda _1 + \lambda _2) \frac{ \frac{1}{\mu ^2}(\lambda _1 +\lambda _2)^2}{\frac{1}{\mu ^2}(\lambda _1 + \lambda _2)^2 + 2 \frac{1}{\mu } (\lambda _1 + \lambda _2 + \mu )} < \lambda _1 \frac{\lambda _1}{\mu +\lambda _1} +\lambda _2 \frac{\lambda _2}{\mu +\lambda _2}\). Simplifying this inequality leads to \(\frac{(\lambda _1 + \lambda _2)^3}{(\lambda _1 + \lambda _2)^2 + 2 \mu (\lambda _1 + \lambda _2 + \mu )} < \frac{\lambda _1^2 (\mu + \lambda _2) + \lambda _2^2 (\mu + \lambda _1)}{(\mu + \lambda _1)(\mu +\lambda _2)}\). Moreover, we have \(\big (\lambda _1^2 (\mu + \lambda _2) + \lambda _2^2 (\mu + \lambda _1)\big )\big ((\lambda _1 + \lambda _2)^2 + 2 \mu (\lambda _1 + \lambda _2 + \mu )\big ) - (\lambda _1 + \lambda _2)^3(\mu + \lambda _1)(\mu +\lambda _2) = \mu ^2(\lambda _1^2 + \lambda _2^2)(\lambda _1 + \lambda _2 + 2\mu ) > 0\). Both denominators in the simplified inequality are positive, so the relative loss reduction requirement always holds when the service rates \(\mu _1\) and \(\mu _2\) are the same. \(\square\)
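The polynomial identity used in the third statement can be confirmed with exact integer arithmetic. The sketch below evaluates both sides on a small integer grid. The difference of the two sides has degree at most 4 in each of \(\lambda _1\), \(\lambda _2\) and \(\mu\). It therefore vanishes identically once it vanishes on a \(6\times 6\times 6\) grid. The function names are ours.

```python
from itertools import product


def cross_difference(l1, l2, mu):
    """Right numerator * left denominator - left numerator * right denominator."""
    L = l1 + l2
    right_num = l1**2 * (mu + l2) + l2**2 * (mu + l1)
    left_den = L**2 + 2 * mu * (L + mu)
    return right_num * left_den - L**3 * (mu + l1) * (mu + l2)


def claimed_form(l1, l2, mu):
    """mu^2 (l1^2 + l2^2)(l1 + l2 + 2 mu), as stated in the proof."""
    return mu**2 * (l1**2 + l2**2) * (l1 + l2 + 2 * mu)


# Exact integer arithmetic on a 6 x 6 x 6 grid.
failures = [p for p in product(range(6), repeat=3) if cross_difference(*p) != claimed_form(*p)]
print(failures)
```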

D Proof of Lemma 1

Proof

The absolute loss reduction \(P_{loss}<\min \left\{ \frac{\rho _1}{1+\rho _1}, \frac{\rho _2}{1+\rho _2}\right\}\) indicates \(P_{loss}<\frac{\rho _1}{1+\rho _1}\) and \(P_{loss}< \frac{\rho _2}{1+\rho _2}\). Multiplying the first inequality by \(\lambda _1\), the second inequality by \(\lambda _2\) and adding these two inequalities yields \((\lambda _1 + \lambda _2) P_{loss} < \lambda _1 \frac{\rho _1}{1 + \rho _1} + \lambda _2 \frac{\rho _2}{1 + \rho _2}\), which is exactly the relative loss reduction. \(\square\)

E Proof of Proposition 3

Proof

For the first statement, it can be readily verified that \(r_1= \frac{(\rho _1+\rho _2)^2+2\rho _1}{2(\rho _1+\rho _2+1)^2}<\frac{1}{2}\), \(r_2= \frac{(\rho _1+\rho _2)^2+2\rho _2}{2(\rho _1+\rho _2+1)^2}<\frac{1}{2}\) and \(r = \frac{(\lambda _1+\lambda _2)(\rho _1+\rho _2)^2+2(\lambda _1\rho _1+\lambda _2\rho _2)}{2(\lambda _1+\lambda _2)(\rho _1+\rho _2+1)^2}<\frac{1}{2}\). For instance, after clearing denominators, \(r_1<\frac{1}{2}\) is equivalent to \(2\rho _1<2(\rho _1+\rho _2)+1\). In addition, when \(\lambda _1=\lambda _2\) and \(\rho _1=\rho _2=\rho\), we have \(r_1=r_2=r=\frac{\rho }{2\rho +1}\). Hence \(\lim _{\rho \rightarrow \infty } r_1= \lim _{\rho \rightarrow \infty } r_2 = \lim _{\rho \rightarrow \infty } r = \frac{1}{2}\).

The second statement follows from the signs of the first-order partial derivatives \(\frac{\partial { r_1}}{\partial {\rho _1}} = \frac{2\rho _2+1}{(\rho _1+\rho _2+1)^3}\), \(\frac{\partial { r_1 }}{ \partial {\rho _2} } =\frac{\rho _2-\rho _1}{(\rho _1+\rho _2+1)^3}\), \(\frac{\partial { r_2}}{\partial {\rho _1}} = \frac{\rho _1 - \rho _2 }{(\rho _1+\rho _2+1)^3}\), and \(\frac{\partial {r_2}}{ \partial {\rho _2} } =\frac{2\rho _1+1}{(\rho _1+\rho _2+1)^3}\).

For the third statement, when \(\lambda _1 =\lambda _2\), the total wrong assignment ratio reduces to \(r = \frac{(\rho _1+\rho _2)^2+(\rho _1+\rho _2)}{2(\rho _1+\rho _2+1)^2} = \frac{(\rho _1+\rho _2+1)^2-(\rho _1+\rho _2+1)}{2(\rho _1+\rho _2+1)^2} = \frac{1}{2} - \frac{1}{2(\rho _1+\rho _2+1)}\). Therefore, we conclude that r is monotonically increasing in \(\rho _1+\rho _2\), which completes the proof. \(\square\)
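The expressions for \(r_1\), \(r_2\) and \(r\) in this proof can be evaluated directly. The sketch below computes them (the function name is hypothetical). This makes the bound \(\frac{1}{2}\), the heavy-traffic limit and the closed form for \(\lambda _1=\lambda _2\) easy to inspect numerically.

```python
def assignment_ratios(lam1, lam2, rho1, rho2):
    """Wrong assignment ratios r1, r2 and r, using the expressions in the proof."""
    s = rho1 + rho2
    denom = 2 * (s + 1) ** 2
    r1 = (s * s + 2 * rho1) / denom
    r2 = (s * s + 2 * rho2) / denom
    r = ((lam1 + lam2) * s * s + 2 * (lam1 * rho1 + lam2 * rho2)) / ((lam1 + lam2) * denom)
    return r1, r2, r


# Symmetric system: all three ratios equal rho / (2 rho + 1) and approach 1/2.
for rho in (1.0, 10.0, 100.0, 1000.0):
    print(rho, assignment_ratios(1.0, 1.0, rho, rho))
```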

F Proof of Proposition 4

Proof

For the first statement, we have

For the second statement, when \(\rho _1=\rho _2=\rho\), applying Equation (9) we have \(\pi (2,1)=\frac{\rho ^3}{2(\rho +1)(2\rho ^2+2\rho +1)}.\) For any positive \(\rho\), we have \(\frac{\mathrm {d}\pi (2,1)}{\mathrm {d}\rho }=\frac{\rho ^2(4\rho ^2+6\rho +3)}{2(\rho +1)^2(2\rho ^2+2\rho +1)^2}> 0\) and \(\lim _{\rho \rightarrow \infty }\pi (2,1)=\lim _{\rho \rightarrow \infty }{\frac{\rho ^3}{2(\rho +1)(2\rho ^2+2\rho +1)}}=\frac{1}{4}\). Therefore, we conclude that when the occupation rates are the same, the serious malpositioning probability \(\pi (2,1)\) is increasing in \(\rho\) and approaches \(\frac{1}{4}\).
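A finite-difference comparison gives a quick check of the stated derivative of \(\pi (2,1)\) and of its approach to \(\frac{1}{4}\). This is a minimal sketch. The step size and test points are arbitrary choices, and the function names are ours.

```python
def pi21_symmetric(rho):
    """pi(2,1) for rho1 = rho2 = rho, as stated in the proof."""
    return rho**3 / (2 * (rho + 1) * (2 * rho**2 + 2 * rho + 1))


def dpi21_symmetric(rho):
    """Derivative of pi(2,1) with respect to rho, as stated in the proof."""
    return rho**2 * (4 * rho**2 + 6 * rho + 3) / (2 * (rho + 1) ** 2 * (2 * rho**2 + 2 * rho + 1) ** 2)


def central_difference(f, x, h=1e-6):
    return (f(x + h) - f(x - h)) / (2 * h)


for rho in (0.5, 1.0, 2.0, 10.0):
    print(rho, dpi21_symmetric(rho), central_difference(pi21_symmetric, rho))
print(pi21_symmetric(1e6))  # close to the limit 1/4
```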

For the third statement, we first denote \({\bar{\rho }} = \rho _1+\rho _2\). When \(\alpha =1\) and \({\bar{\rho }}\) is fixed, using Equations (1) and (9) we obtain \(\pi (2,1)=\phi ({\bar{\rho }})\rho _1\rho _2\). Here the positive factor \(\phi ({\bar{\rho }})\) depends only on \({\bar{\rho }}\) and is therefore constant. Because \(\rho _1\rho _2=\frac{{\bar{\rho }}^2-(\rho _1-\rho _2)^2}{4}\), the product \(\rho _1\rho _2\) is decreasing in \(|\rho _2-\rho _1|\) when \({\bar{\rho }}\) is fixed. Hence, when \(\rho _1+\rho _2\) is fixed, the serious malpositioning probability \(\pi (2,1)\) is decreasing in \(|\rho _2-\rho _1 |\).

When \(\rho _2\) is fixed, the serious malpositioning probability \(\pi (2,1)\) is a function of \(\rho _1\). It can be readily verified that \(\frac{\partial \pi (2,1)}{\partial \rho _1} = \frac{\rho _2(\rho _1 + \rho _2)^2(\rho _2^2 - \rho _1^2 + 4 \rho _2 + 6) + 4 \rho _2(2\rho _1 +\rho _2)}{(\rho _1 + \rho _2 + 2)^2[(\rho _1 + \rho _2)^2 + 2(\rho _1 + \rho _2 +1)]^2}\). The denominator of this partial derivative is positive, so we focus on the sign of the numerator. With \(\rho _2\) fixed and \(\rho _1\) replaced by x, the numerator equals \(\rho _2 g(x)\), where \(g(x) =(x+\rho _2)^2(\rho _2^2-x^2+4\rho _2+6)+4(2x+\rho _2)\). Thus \(\frac{\partial \pi (2,1)}{\partial \rho _1}=\frac{\rho _2g(\rho _1)}{(\rho _1+\rho _2+2)^2{[(\rho _1+\rho _2)^2+2(\rho _1+\rho _2+1)]^2}}\). The derivatives of g(x) with respect to x are \(g'(x)=-2(x+\rho _2)(2x^2+x\rho _2-\rho _2^2-4\rho _2-6)+8\), \(g''(x)=-4(3x^2+3x\rho _2-2\rho _2-3)\) and \(g'''(x)=-12(2x+\rho _2)\).

For positive x we have \(g'''(x)<0\), so \(g''\) is strictly decreasing on \((0,\infty )\). Since \(g''(0)=4(2\rho _2+3)>0\) and \(\lim _{x\rightarrow \infty } g''(x)=-\infty\), there exists a unique positive number \(x_1\) such that \(g''(x_1)=0\). Moreover, \(g''(x)>0\) if \(0<x<x_1\) and \(g''(x)<0\) if \(x>x_1\), so \(g'\) increases on \((0,x_1)\) and decreases on \((x_1,\infty )\). Since \(g'(0)=2\rho _2(\rho _2^2+4\rho _2+6)+8>0\) and \(\lim _{x\rightarrow \infty } g'(x)=-\infty\), there exists a unique positive number \(x_2(>x_1)\) such that \(g'(x_2)=0\). In addition, \(g'(x)>0\) if \(0<x<x_2\) and \(g'(x)<0\) if \(x>x_2\). By the same argument, since \(g(0)=\rho _2^2(\rho _2^2+4\rho _2+6)+4\rho _2>0\) and \(\lim _{x\rightarrow \infty } g(x)=-\infty\), there exists a unique positive number \(x_3(>x_2)\) such that \(g(x_3)=0\). Furthermore, \(g(x)>0\) if \(0<x<x_3\) and \(g(x)<0\) if \(x>x_3\). Therefore, the serious malpositioning probability \(\pi (2,1)\) is increasing in \(\rho _1\) if \(\rho _1<x_3\) and decreasing in \(\rho _1\) if \(\rho _1>x_3\). Finally, \(g(\rho _2)=4\rho _2(4\rho _2^2+6\rho _2+3)>0\), which implies \(\rho _2<x_3\). This completes the proof. \(\square\)
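The turning point \(x_3\) has no closed form. However, \(g(0)>0\), \(g(x)\to -\infty\), and g changes sign exactly once on \((0,\infty )\), so \(x_3\) can be located by bisection. The sketch below does this for a few values of \(\rho _2\) and checks that \(x_3>\rho _2\). The function names are hypothetical.

```python
def g(x, rho2):
    """g(x) from the proof; the sign of d pi(2,1) / d rho1 is the sign of g(rho1)."""
    return (x + rho2) ** 2 * (rho2**2 - x**2 + 4 * rho2 + 6) + 4 * (2 * x + rho2)


def turning_point(rho2, rel_tol=1e-12):
    """Bisection for the unique positive root x3 of g."""
    lo, hi = 0.0, 1.0
    while g(hi, rho2) > 0:  # expand until the sign change is bracketed
        hi *= 2.0
    while hi - lo > rel_tol * hi:
        mid = 0.5 * (lo + hi)
        if g(mid, rho2) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


for rho2 in (0.1, 1.0, 3.0, 10.0):
    x3 = turning_point(rho2)
    print(rho2, x3, x3 > rho2)
```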

G Proof of Proposition 5

Proof

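The first statement follows by noting that \(\rho _1\rho _2= \frac{(\rho _1+\rho _2)^2-(\rho _1-\rho _2)^2}{4}\). For the second statement, when \(\rho _1=\rho _2=\rho\), we have \(\pi (0,1)+\pi (2,0) = \frac{\rho ^2}{(\rho +1)(2\rho ^2+2\rho +1)}\). Its derivative with respect to \(\rho\) is \(\frac{\rho (2+3\rho -2\rho ^3)}{(\rho +1)^2(2\rho ^2+2\rho +1)^2}\). The factor \(2+3\rho -2\rho ^3\) equals 2 at \(\rho =0\), increases on \((0,1/\sqrt{2})\), decreases afterwards and tends to \(-\infty\), so it has exactly one positive root. Therefore, \(\pi (0,1)+\pi (2,0)\) is unimodal in \(\rho\). It is maximized at the unique positive root of \(2\rho ^3-3\rho -2=0\). \(\square\)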
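The maximizing \(\rho\) in the second statement can be computed numerically. By our own calculation from the expression in the proof, the derivative of \(\pi (0,1)+\pi (2,0)\) in the symmetric case has numerator \(\rho (2+3\rho -2\rho ^3)\). The maximizer is therefore the unique positive root of \(2\rho ^3-3\rho -2=0\), which the sketch below finds by bisection. The function names are hypothetical.

```python
def p01_plus_p20(rho):
    """pi(0,1) + pi(2,0) for rho1 = rho2 = rho, as stated in the proof."""
    return rho**2 / ((rho + 1) * (2 * rho**2 + 2 * rho + 1))


def maximizing_rho(tol=1e-12):
    """Bisection for the unique positive root of 2 rho^3 - 3 rho - 2 = 0."""
    def p(r):
        return 2 * r**3 - 3 * r - 2

    lo, hi = 1.0, 2.0  # p(1) = -3 < 0 < 8 = p(2)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if p(mid) < 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


rho_star = maximizing_rho()
print(rho_star, p01_plus_p20(rho_star))
```

As a sanity check, a brute-force search over a fine grid of \(\rho\) values should peak next to the bisection result.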

H Proof of Proposition 6

Proof

The first statement follows by noting that \(\pi (1,1)+\pi (2,2)<1\) and \(\lim _{\rho _1 \rightarrow \infty } [\pi (1,1)+\pi (2,2)]=1\) for \(\rho _2\in (0,\infty )\). The second statement follows from the limit and the sign of the first-order derivative of \(\pi (1,1)+\pi (2,2)\). When \(\rho _1=\rho _2=\rho\), we have \(\lim _{\rho \rightarrow \infty } [\pi (1,1)+\pi (2,2)]= \lim _{\rho \rightarrow \infty } \frac{\rho ^2}{2\rho ^2+2\rho +1 }=\frac{1}{2}\) and \(\frac{\mathrm {d}(\pi (1,1)+\pi (2,2))}{\mathrm {d} \rho }= \frac{2\rho (\rho +1)}{(2\rho ^2+2\rho +1)^2}>0\). The third statement follows by noting that \(\rho _1^2+\rho _2^2 = \frac{1}{2}\left[ (\rho _1+\rho _2)^2 + (\rho _1 - \rho _2)^2 \right]\). For the last statement, we have

It can be verified that \(\frac{\partial (\pi (1,1)+\pi (2,2))}{\partial \rho _1}\le 0\) for \(0<\rho _1\le \sqrt{\rho _2^2+1}-1\) and \(\frac{\partial (\pi (1,1)+\pi (2,2))}{\partial \rho _1}\ge 0\) for \(\rho _1\ge \sqrt{\rho _2^2+1}-1\), which completes the proof. \(\square\)
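The sign change at \(\sqrt{\rho _2^2+1}-1\) can be reproduced under two assumptions of our own: that \(\alpha =1\), and that each customer is served at its own type's rate on either server. Under these assumptions, \(\pi (1,1)+\pi (2,2)=\frac{\rho _1^2+\rho _2^2}{(\rho _1+\rho _2)^2+2(\rho _1+\rho _2+1)}\). This closed form is our derivation, not taken from the text, although it reduces to the symmetric expression \(\frac{\rho ^2}{2\rho ^2+2\rho +1}\) used in this proof when \(\rho _1=\rho _2=\rho\). Differentiating with respect to \(\rho _1\) gives a sketch of the sign argument:

```latex
\frac{\partial}{\partial \rho_1}\,
\frac{\rho_1^2+\rho_2^2}{(\rho_1+\rho_2)^2+2(\rho_1+\rho_2+1)}
  = \frac{2(1+\rho_2)\left(\rho_1^2+2\rho_1-\rho_2^2\right)}
         {\left[(\rho_1+\rho_2)^2+2(\rho_1+\rho_2+1)\right]^2},
\qquad
\rho_1^2+2\rho_1-\rho_2^2 \le 0
  \iff (\rho_1+1)^2 \le \rho_2^2+1
  \iff \rho_1 \le \sqrt{\rho_2^2+1}-1 .
```

The denominator and \(1+\rho _2\) are positive, so the sign of the derivative is that of \(\rho _1^2+2\rho _1-\rho _2^2\). This agrees with the threshold stated in the proof.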

I Proof of Proposition 7

Proof

Since the light malpositioning probability is a part of the total malpositioning probability, the first statement follows from the first statement of Proposition 6. We now verify the second statement. When \(\rho _1=\rho _2=\rho\), we have

For the last statement, when \(\alpha =1\), it can be readily verified that

Because \(\rho _1^2 + \rho _2^2 = \frac{1}{2}[(\rho _1 - \rho _2)^2 + (\rho _1 + \rho _2)^2]\), we conclude that the total malpositioning probability \(P_{mal}^{total}\) is increasing in \(|\rho _1-\rho _2 |\) when \(\rho _1+\rho _2\) is fixed and \(\alpha =1\), which completes the proof. \(\square\)


About this article

Cite this article

Chen, Y., Xie, J. & Zhu, T. Overflow in systems with two servers: the negative consequences. Flex Serv Manuf J 35, 838–863 (2023). https://doi.org/10.1007/s10696-022-09455-w


DOI: https://doi.org/10.1007/s10696-022-09455-w