Abstract

Fire testing enables an individual or an organisation to make a claim about how a material, product, or system will perform in operational use. This paper describes and analyses the various reaction-to-fire tests that have been used over the last 100 years in the UK. By analysing the commonalities and differences between these tests we propose a ‘taxonomy of testing’. We suggest that tests may be classified by the degree to which users may unthinkingly apply the results without leading to negative fire safety outcomes. We propose three categories: unrepresentative tests; model tests; and technological proof tests. Unrepresentative tests are those which do not mimic building fire scenarios, but have thresholds so conservative that users need not consider whether the test was applicable to their intended application. Model tests are those based on ‘models’ of expected fire scenarios; users must therefore be confident that the model is sufficiently similar to their application. Technological proof tests are those which provide a more realistic test of a real building system; users must carefully analyse the similarities between their test and the real building before applying the results. From this we conclude that where user competence is low, policymakers should cite only unrepresentative (and conservative) tests within their guidance. Conversely, where user competence is high, policymakers may more safely cite model or technological proof tests. The kinds of tests that may be safely cited in guidance are therefore inextricably linked to the expertise of the user.

1 Introduction

As motorists drive away from London on England’s M1 highway, most would give little thought to a sloping bridge under which they pass. Nor would they likely peek through the thick trees and glimpse the cluster of buildings sitting in the grounds of an old manor house. In the summer of 2017, one of these buildings, the ‘burn hall’, became the focus of national attention. Inside the burn hall was an eight-metre-high wall, and onto this wall was mounted the facade of a building. But this was no ordinary facade. This facade was to be the subject of a standardised test. The test would enable a government-appointed panel to make claims regarding the safety of hundreds of tower blocks across the country following the Grenfell Tower fire that had resulted in 72 deaths.

This test (known as BS 8414) was the culmination of decades of development of testing methods aimed at enabling fire safety regulation of building materials and products. However, despite relying on this large-scale system test to judge the safety of existing buildings in 2017, the same government would decide in 2018 that many new buildings (or major renovations) would instead be regulated using a bench-scale test focused on assessing the potential combustibility of materials and products used within those cladding systems.

Both of these tests provide a means of assessing whether the external cladding of a building is ‘safe’, but they do so in markedly different ways that epitomise different testing philosophies. While the large-scale BS 8414 test seeks to represent the behaviour of a complete cladding system, the bench-scale combustibility test assesses only the behaviour of a material or product in a test rig that is unrelated to a building context. The former seeks to be representative of the fire hazard whereas the latter focusses on reproducibility.

To understand why the BS 8414 test was developed, but then rejected for regulation of certain classes of buildings in the aftermath of Grenfell, we unpick the logic behind the development of the UK reaction-to-fire testing standards that have been used to regulate the use of construction materials and products. This reveals an on-going struggle to assert the credibility of test results as a means of gauging fire hazards. Just as Shapin [1] argues that credibility is the very essence of scientific knowledge, so too it is central to the role of testing. The evolution of these tests hinged on judgements about how best to use tests to make inferences about real-world performance.

We argue that the history of reaction-to-fire tests can be understood through a taxonomy of tests that highlights the degree to which each test was considered to be representative of the relevant fire scenario. In order to enable innovation in construction technologies, tests were developed that were seen as more realistic, and thus more credible as representations of fire hazards. However, the development of new tests did not lead to scrapping old tests (because that would have required companies to retest many existing products), thereby resulting in a proliferation of tests. Although we do not attempt to explain the root causes of the Grenfell Tower fire here, it is clear that this range of standardised tests contributed to a regulatory framework that enabled the construction industry, whether knowingly or otherwise, to use ‘the ambiguity of regulations and guidance to game the system’ ([2], p. 5).

Our argument is that this was not simply due to the accumulation of the multiple testing routes to compliance that resulted from a desire to enable innovation whilst retaining legacy tests. The additional problem was that the testing standards ‘black-boxed’ knowledge, so that the users of the tests were able to remain ignorant of how a particular test result was considered to be relevant to fire hazards. Passing tests (in a ‘compliance culture’) rather than understanding their specific utility also then made it hard, politically, to defend the use of tests that provided a more complex understanding of fire hazards—hence the post-Grenfell reversion to a simple test focussed on banning combustible cladding [3].

Space limitations mean that this paper has a narrow focus on analysing the significance of our taxonomy of fire tests. We acknowledge the value of understanding the processes by which these tests were developed, including the role of industry, but do not seek to document them in detail (for a description of the processes involved in the development of the related Euroclass test standards, see [4]). We also touch on, but do not expand on, the importance of competency in using test results (for a recent discussion of competency in fire safety engineering, see [5]). Finally, we do not discuss here the limitations of standard tests with regard to the development of fundamental knowledge, and the challenge thus posed to the first principles fire safety engineering claims made in performance-based design (this is discussed in [6]).

Our claim to originality stems from the insights that flow from our taxonomy of fire tests, framed by analysis that draws on concepts from the Sociology of Testing (set out in the next section). In what follows, we describe each of the UK reaction-to-fire tests in roughly chronological order whilst also organising them into a taxonomy that reflects the key properties of the tests. We use an observational rather than experimental approach, based on classification and comparison to discern patterns, thus enabling inductive inference to make claims about their significance ([7], p. 18).

Methodologically, we draw on a wide-ranging literature review carried out for the Grenfell Tower Public Inquiry [8]. Where information is based on documents in the UK National Archives, this is indicated in the bibliography. Otherwise, all other evidence comes from published documents, selected by ‘purposive sampling’ [9], focussed on the testing standards themselves, associated regulation and guidance, and related technical literature.

2 Testing and Fire Safety

Testing enables an individual or an organisation to make a claim about how a material, product, or system will perform when used in an operational situation. A key issue with any test is therefore ‘the problem of inference from test performance to actual use’, and the tension that exists between tests that are more realistic and those that are carefully controlled and highly instrumented ([10], p. 414). Tests can never exactly mimic operational use of a technology because test designers seek to minimise variables, and introduce instrumentation that would not normally be present. As Downer ([11], p. 9) argues: ‘Since even the most “realistic” tests will always differ in some respects from the “real thing”, engineers must determine which differences are “significant” and which are trivial if they are to know that a test is relevant or representative.’

Nevertheless, one approach to claiming that a test is sufficiently representative is to seek to make it as realistic as practical. These are what Sims ([12], p. 492) refers to as ‘proof tests’, designed ‘to test a complete technological system under conditions as close as possible to actual field conditions to make a projection about whether it will work as it is supposed to.’

Although there have been some full-scale fire tests carried out for scientific purposes in actual buildings (e.g. [13, 14]), the destructive nature of such testing means it would be impractical to use these tests as a means of regulation. Instead, regulatory fire testing has focussed on the component parts of buildings. The first such standardised test originated in the early twentieth century as a way of rating ‘fire resistance’—a metric that measures how long elements of structure such as columns, beams and doors will maintain structural properties in a furnace test [15]. The fire resistance test first appeared as a standard in the USA in 1919, followed by a UK version in 1932.

The most important aspect of this development was standardisation—around a metric (fire resistance) and a test method (specimens subjected to a prescribed time–temperature curve in a furnace). When he first proposed the test in 1904, Sachs [16] argued that: ‘It is desirable that these standards become the universal standards in this country, on the continent and in the United States, so that the same standardization may in future be common to all countries.’

This standardisation meant that regulation based around local practices (e.g. two inches of tiles or plaster around a steel column) could be replaced with the use of a universal metric (e.g. 90 min ‘fire resistance’). Construction industry practitioners could then operate using the same ‘language’, with manufacturers only needing to gain certification through one test in order to meet regulatory approval across the whole jurisdiction.

Standardisation works by defining how a test should be carried out so that those using the test obtain consistent results, but users do not need to know how test results are claimed to be relevant to building fire hazards. Standards typically say what should be done, but not why. Three aspects need to be decided by the test designers: what to measure, how to measure it, and how to interpret the result with regard to building safety. A standardised test thus encompasses a large amount of knowledge and many judgements about how test metrics relate to fire hazards, and what level of risk is considered acceptable. However, the users of a standard test are not required to know any of this (and the extent to which they can know will depend on how explicitly the rationale for a test is documented).

A standard test is thus a ‘black-box’ in the sense defined by MacKenzie ([17], p. 26): ‘a technical artefact—or, more loosely, any process or program—that is regarded as just performing its function, without any need for, or perhaps any possibility of, awareness of its internal workings on the part of users.’ The benefit of this black-boxing is that practitioners do not need to think about how the test ‘works’; the downside, of course, is that they do not need to think about how the test ‘works’! The designers of the standard fire resistance test black-boxed a particular view of what structural properties mattered in a fire, and how these should be defined and measured. Thereafter, practitioners simply needed to know that an element of structure achieved the rating required by regulation.

This view of standardisation is also reflected within the somewhat recursively named ‘British Standard 0—A standard for standards—Principles of standardisation’ [18]. This document recognises that ‘for a British Standard to be a useful and attractive tool for conducting business or supporting public policy, it has to command the trust and respect of all those who are likely to be affected by it’ [18]. Earlier drafts of the same standard set out the degree to which standards may be regarded as a source of authority. The 1981 edition highlighted that ‘a special duty of care is owed by BSI as the publisher of authoritative national documents offering definitive information or expert advice’. It notes that ‘the care exercised in the production of standards is relied upon by users of the standard who themselves owe a similar duty to the public’. BS 0 (1981) notes that ‘it remains the responsibility of users to ensure that a particular standard is appropriate to their needs’, but then qualifies this with the statement that ‘within their scope, national standards provide evidence of an agreed “state of the art” and may be taken into account by the Courts in determining whether or not someone was negligent’.

In the case of fire resistance, the belief that the test does work is widely held because of the perceived correlation between its use and satisfactory fire safety outcomes over a long period of time [6]. Although critiqued as being unrealistic, the fire resistance test has endured essentially unchanged for 100 years [15]. As Downer [11] argues, the utility of testing can be assessed from the operational performance of technologies developed based on that testing, so long as the technology in question and the test procedure have not been subject to radical change. The results of such tests can be seen as ‘yardsticks, significant because of their uniformity rather than because they reflect any inherent or “natural” properties … What is important is not that the test represents a “natural” measure but that it is constant, allowing us to measure one thing against another’ ([11], p. 21).

Whereas fire resistance provides a metric for how building components retain their structural properties in a fire, reaction-to-fire tests seek to assess how materials and products contribute to a fire. How easily does something ignite, how much heat does it release, and how readily does fire spread across surfaces and develop to the point of ‘flashover’?

These reaction-to-fire tests have not seen the same ‘design stability’ [11] as the fire resistance test because of innovation in construction materials and products, with some tests being improved and new ones being developed since the first UK combustibility test standard was promulgated in 1932. By the time of the Grenfell Tower fire, English fire safety regulations offered routes to compliance that could be based on the use of eight different reaction-to-fire tests.

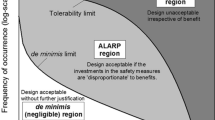

To understand the significance of these reaction-to-fire tests we set out a taxonomy that illustrates how the tests embodied three different approaches to establishing their credibility for classifying the extent to which a material or product might contribute to a fire. Four of these tests were used to define whether a material was considered combustible; these are laboratory bench style tests that make little or no attempt to mimic building fire scenarios, and thus we label these as unrepresentative tests. Three provided measures of the extent to which a fire on a material or product might spread and develop; these are based on models of fire scenarios, and thus we label these as model tests. Finally, the BS 8414 test was developed to provide a more realistic test of a cladding system, and thus comprises a technological proof test.

Two issues are central to understanding this diversity of testing methods. First, the evolution of reaction-to-fire tests stems in large part from the challenges presented by innovation in construction materials and products. Second, the logic of why and how a test was considered useful was black-boxed (to varying degrees), making some aspects of the test opaque to most users.

Innovation can offer societal benefits but may also introduce new risks. Brannigan ([19], p. 1) argues that attempts to apply a standard test to new materials, products, or design methods may result in ‘innovation risk’ because ‘the ability to create a new product is not always connected with the ability to understand its risks and therefore to develop an appropriate test’, resulting in a failure ‘to capture the risk inherent in materials or processes which did not exist when a regulatory standard was adopted.’ Such innovation risk constitutes ‘a risk in any type of performance testing’, where there is ‘the ability to create a product that meets the technical requirement of a regulation but represents a novel hazard’ ([19], p. 25).

UK test developers sought to address this ‘innovation risk’ by developing new tests that enabled the use of novel materials and products that would not have passed the existing tests. Because these new tests were considered to more closely represent fire hazards, they were seen to be credible ways of gauging adequate safety. They thus enabled a balancing of societal objectives—seeking to maintain adequate safety whilst enabling the use of new materials and products. Critics (e.g. [20]) may argue that this amounted to ‘deregulation’ that went too far, but choices had to be made about what regulation and regulatory tests were intended to achieve.

Policy-makers need to balance competing outcomes while the regulatory authorities and test designers need to make expert judgements not just about whether a test is a useful representation of a fire hazard, but also the extent to which that hazard poses a tolerable risk. The design of a standard fire test is thus based on both ‘technical’ and ‘societal’ judgements, but the rationale for the test will rarely be fully documented or publicised.

Our argument is that the categorisation of these reaction-to-fire tests into a taxonomy shows differing degrees of black-boxing, with different expectations of user expertise. While the early unrepresentative tests made no attempt to mimic building fire scenarios, the thresholds of what was considered safe were so conservative that test users did not need to consider whether the test was applicable to their intended application (so long as they followed the regulatory requirements). However, both the model tests, and even more so, the BS 8414 proof test, are designed to mimic real fire scenarios. The model and proof tests thus rely on users to make appropriate use of test results.

Although the test designers were almost certainly aware of this distinction, most fire safety practitioners treated all eight tests as having equal epistemic status. A minimum compliance culture prioritised passing a test (any test!) to gain product certification or building design approval. Whether unconsciously or deliberately, most practitioners acted as though only ‘passing’ the test mattered, with no need to understand or interpret the test result in the overall context of how the test related to real-world fire scenarios.

In what follows, we describe the development of UK reaction-to-fire testing, organised into a taxonomy that highlights the extent to which knowledge was black-boxed. We argue that standardisation necessarily involves some degree of black-boxing, but this may vary in the extent to which users can (or should) understand how the test is claimed to provide a credible means of regulating a fire hazard. Moreover, two kinds of judgement can be hidden inside the black box: those that can be seen as ‘technical’—whether the test rig measures something relevant in an accurate way; and those that can be seen as ‘societal’—what balance should be struck between safety and other objectives such as economic growth or environmental protection. What was it that standardisation black-boxed into these tests, and how did it differ between the different categories of test? What role was each test intended to play?

3 Category 1: Unrepresentative Tests

3.1 The Combustibility Test

Early attempts to regulate the fire hazards of construction materials and products were based around discriminating between those that were ‘combustible’ and those that were ‘incombustible’. While it was relatively straightforward to ban the use of structural timber, as happened following the 1666 Great Fire of London, the twentieth century brought a desire for a more scientific way of rating combustibility.

The first such test evolved from a test apparatus created for the US Forest Products Laboratory to compare the responses of materials (it was developed to investigate the effectiveness of fire retardants) [21]. In the test, a sample of the test product was lowered into a small furnace that had been pre-heated to a temperature between 200 and 450°C, with a pilot flame located above the furnace. If flammable vapours were generated in sufficient quantity, these would be ignited by the pilot.

In 1932, the British Standards Institution (BSI) adapted this setup, changing some procedures and creating a definition of ‘incombustibility’. Included in the first UK fire testing standards, British Standard Definitions 476 (BS 476), this test began when the furnace was cold, with the sample lowered into the furnace and then gradually heated to 750°C. BSI’s definition of ‘incombustibility’ was not met ‘if at any time during the test period the specimen (i) flames, or (ii) glows brighter than the walls of the heating-tube’ ([22], p. 12).

In a 1953 revision, the furnace was preheated to 750°C before the start of the test, the test was renamed a test of ‘combustibility’ rather than of ‘incombustibility’, and a sample was defined as combustible if it ‘flames, or produces vapours which are ignited by the pilot, or causes the temperature of the furnace to be raised by 50°C or more’ [23]. By inference, this definition acknowledged that it would be possible for a specimen to produce vapours which might not be ignited by a pilot. It thus implicitly acknowledged that, as with all such tests, this was a test of whether a product did burn in a particular test set-up and conditions, rather than of whether it could burn under some other set of conditions.

In the 1970 version of the standard (now named BS 476 Part 4), the testing equipment was adjusted slightly, and the language was (again) reversed so that it was now a test for ‘non-combustible’ materials (or products). The assessment criteria were also adjusted; whereas, previously, any flaming resulted in a material being classed as ‘combustible’, the 1970 standard allowed a material (or product) to be classified as ‘non-combustible’ even if it flamed for up to ten seconds [24]. The 50°C furnace temperature criterion was retained.

This 1970 test appears to have been influenced by the development of an equivalent testing standard by the International Organization for Standardization (ISO): ISO 1182, also introduced in 1970 [25]. Herpol (one of the developers of the ISO standard) highlighted the degree to which some of the parameters were, of necessity, chosen semi-arbitrarily, with, for example, the permissible flaming time constituting a compromise between absolute and acceptable levels of ‘non-combustibility’. Herpol pointed out that: ‘as a matter of fact, all materials producing flaming should be declared combustible, but to be sure that there is real flaming inside the furnace the flame has to last for a certain time. Thus the 10 s limit is also arbitrary and no member of [the committee] would probably object to acceptance of another period, for example, 15 s’ [26].

In 1982 another similar standard was introduced as BS 476 Part 11 [27]. The key difference with BS 476 Part 4 was that the sample was cylindrical rather than cuboid. This aligned with the new version of ISO 1182 introduced in 1979 [28]. Figure 1 provides an illustrative timeline of the development of these tests. The BS 476 Part 11 test standard did not define ‘non-combustibility’. Instead, the new guidance to support the building regulations—Approved Document B introduced in 1985—provided the definition and the criteria: a material or product was considered non-combustible if it ‘does not flame and there is no rise in temperature’ [29].

Thus, by 1985 there were two alternative routes to defining ‘non-combustibility’ via a regulatory test: a route which included a definition and pass criteria within the testing standard (Part 4), or a route which provided only a method of test (Part 11). The government’s 1985 guidance also stated that ‘non-combustible’ materials could be defined as ‘totally inorganic materials … containing not more than 1 per cent by weight or volume of organic material’ [29]. An allowance for concrete blocks was also introduced.

In 2002, government introduced another definition for non-combustibility ([30], p. 112). This was based on the results from two different tests as defined by a new harmonised European standard [31]. The first was ISO 1182 (for which the equipment and test method were fundamentally the same as those in BS 476 Part 11). A slight distinction was that to achieve the classification of ‘non-combustible’ via the ISO 1182 test standard, the sample should not sustain flaming for more than 5 s. The European testing and classification system also required a second test, the ‘bomb calorimeter test’, to be performed on samples of any product (see below).

What emerges is that definitions of ‘non-combustibility’, and measures thereof, are matters of judgement. Whether or not a material or product can burn is different from whether it does burn under a particular set of circumstances. Any material that contains organic content can ‘burn’, and each test method would allow some organic content to be present within a test sample whilst nonetheless classifying a material or product as ‘non-combustible’. Those that did not exhibit flaming could still pyrolyse to produce flammable vapours, even if these did not ignite. Indeed, several of the test definitions for non-combustibility allowed limited flaming to occur – thereby explicitly acknowledging that materials or products that were classified as ‘non-combustible’ could, and in fact sometimes did, burn under the conditions of the test(s).

Although based on expert judgement, the thresholds for defining whether or not a material or product was classified as combustible were not based on any direct linkage with specific fire hazards. Regulators simply appear to have sought a definition of ‘non-combustible’ that would admit materials or products that did not burn very much. This was explicitly acknowledged in the government’s 1985 guidance, which allowed materials to be classified as ‘non-combustible’ so long as no more than 1% of their mass comprised organic (i.e. combustible) content.

A further complication with the concept of non-combustibility based on any of these tests lies in whether such a test should apply only to a material, or whether it can be used for testing products made up from one or more materials. Babrauskas [32] notes that the concept of non-combustibility ‘arose initially in connection with “elementary” materials, i.e., materials which are homogeneous on a macroscopic scale’. The original 1932 version of BS 476 explicitly states that ‘the term incombustible and its derivatives shall be applied to materials only’ [22]. According to Babrauskas, ‘this was satisfactory until about 1950, when paper-faced gypsum wallboard started becoming a popular interior finish material’ [32]. Paper-faced gypsum wallboard is a product that consists of a core made from gypsum, a material which passes tests for non-combustibility, and a surface made from paper, a material which does not. Strictly speaking, therefore, testing the product (i.e. paper-faced gypsum board) in any of the non-combustibility tests described above would not be appropriate. Such an approach to testing products might not have raised concern when applied to these types of wallboard (mostly composed of incombustible gypsum with a thin layer of paper), but the precedent set would be more significant for cladding products that contained highly combustible cores covered with a thin non-combustible skin.

The concept of non-combustibility and the tests used to define it exemplify the first category of test. They are tests that in our classification are seen as both unrepresentative and at least partly arbitrary. There is no particular reason why the criteria for flaming should be set at zero seconds, five seconds, or ten seconds—these are judgements made on the assumption that the precise value selected does not particularly matter with regard to the expected fire safety outcomes.
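
To make the comparison concrete, the flaming and temperature criteria quoted in this section can be laid side by side. The following is a minimal Python sketch, not a reproduction of any standard: the function and dictionary names are hypothetical, only the criteria mentioned in this section are encoded, and the treatment of boundary values is an assumption.

```python
from typing import Optional

# Criteria as summarised in this section: (maximum flaming time in seconds,
# furnace temperature rise in degrees C that counts as a fail).
# None means this section states no such limit, not that the standard has none.
CRITERIA = {
    "BS 476 (1953)": (0.0, 50.0),
    "BS 476 Part 4 (1970)": (10.0, 50.0),
    "ISO 1182 route (2002)": (5.0, None),
}


def passes(flaming_s: float, rise_c: float,
           max_flaming_s: float, fail_rise_c: Optional[float]) -> bool:
    """Return True if a test observation meets the encoded criteria."""
    if flaming_s > max_flaming_s:
        return False
    if fail_rise_c is not None and rise_c >= fail_rise_c:
        return False
    return True


def compare_editions(flaming_s: float, rise_c: float) -> dict:
    """Apply every encoded edition to the same hypothetical observation."""
    return {
        name: passes(flaming_s, rise_c, max_flaming_s, fail_rise_c)
        for name, (max_flaming_s, fail_rise_c) in CRITERIA.items()
    }


# Hypothetical sample: flames for 7 s and raises the furnace by 20 degrees C.
print(compare_editions(flaming_s=7.0, rise_c=20.0))
```

Under these assumptions, the same hypothetical sample would be classed as non-combustible under the 1970 criteria but not under the 1953 or 2002 criteria, which illustrates how the classification depends on the chosen allowance rather than on the material alone.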

3.2 The Bomb Calorimeter Test

The European testing approach adopted into regulation (in England and Wales) in 2002 included a measure of the heat of combustion of a material determined by using an apparatus called a bomb calorimeter (hereafter ‘the bomb’). If a tested product has the potential to burn, then the bomb calorimeter will detect this potential and return a quantitative measurement (subject to the precision of the instrument used). In the European reaction-to-fire classification system, whether a material is ‘non-combustible’ or of ‘limited’ combustibility is determined from the results of the bomb test (EN ISO 1716), together with those of the non-combustibility test (EN ISO 1182).

The bomb’s test method does not define the threshold measurement below which a sample would be deemed non-combustible. This value is instead provided within another European standard (EN 13501-1). The threshold value below which a material is (currently) considered to be non-combustible is 2 MJ/kg (compared to about 46 MJ/kg for polyethylene or about 18 MJ/kg for softwood timber). This means that products that are classed as ‘non-combustible’ may burn, but only barely. The value of 2 MJ/kg represents such a low threshold that the code writers presumably felt that such materials or products presented no meaningful fire hazard. A slightly higher threshold of 3 MJ/kg is (currently) used to define ‘limited combustibility’. To the extent that it is possible to document the process by which these thresholds were agreed, it is clear that they reflect a compromise between negotiators from different nations, strongly influenced by the interests of producers of existing products that were already approved for certain building functions ([4], pp. 11, 12).
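
The threshold logic described above is simple enough to sketch in a few lines. The Python below is illustrative only: the function name and the returned labels are hypothetical, the inclusive treatment of the boundary values is an assumption, and the sketch covers only the heat of combustion criterion, whereas the European classification also draws on the non-combustibility test.

```python
# Thresholds quoted in the text, in MJ/kg.
NON_COMBUSTIBLE_MAX = 2.0
LIMITED_COMBUSTIBILITY_MAX = 3.0


def classify_heat_of_combustion(q_mj_per_kg: float) -> str:
    """Label a gross heat of combustion against the two quoted thresholds."""
    if q_mj_per_kg < 0:
        raise ValueError("heat of combustion cannot be negative")
    if q_mj_per_kg <= NON_COMBUSTIBLE_MAX:
        return "non-combustible"
    if q_mj_per_kg <= LIMITED_COMBUSTIBILITY_MAX:
        return "limited combustibility"
    return "above both thresholds"


# Approximate values quoted in the text for comparison.
for name, q in [("polyethylene", 46.0), ("softwood timber", 18.0)]:
    print(name, classify_heat_of_combustion(q))
```

Both comparison materials quoted in the text sit far above either threshold, which is consistent with the observation that the thresholds only distinguish between materials that barely burn.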

The particulars of EN ISO 1716 are specific to that European standard; other bomb calorimeter standards exist that use slightly different equipment and procedures. However, although the equipment and procedures may vary between standards, the resulting measurement is largely independent of the equipment or methods used. This non-dependence on test standard or specific equipment creates a noteworthy distinction between the non-combustibility tests and the bomb test. With the non-combustibility tests, the result is a function of the particular test equipment and procedure; with the bomb, the result should be the same, or at least very similar, irrespective of the equipment used.

Although the bomb test is highly reproducible, the small (often only about 0.5 g) sample used in a bomb test cannot be said to be representative of the construction of a real building. In fact, the test is reproducible precisely because the procedure systematically eliminates any variable that might account for how a product is used, or the geometry in which it is placed. The sensitivity of the equipment, however, along with its reproducibility, makes it ideal for differentiating between materials that are not expected to release very much energy (heat) when burnt.

Bomb calorimetry suffers from the same challenge as the non-combustibility tests as regards testing of ‘products’ versus ‘materials’. Bomb calorimeter test standards may get around this problem by determining the gross heat of combustion of non-homogeneous products using a mass-weighted average of the heats of combustion of the respective component materials, or by dealing only with ‘substantial components’ of composite products [33].
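The mass-weighted averaging described above can be illustrated with a minimal sketch. It is not the procedure of any particular test standard: the function names, the example composite, and the inclusive treatment of the threshold boundary are all assumptions made for illustration. Only the 2 MJ/kg and 3 MJ/kg thresholds and the approximate value for polyethylene are taken from the text.

```python
# Illustrative sketch only; not the calculation procedure of any specific standard.
NON_COMBUSTIBLE_LIMIT = 2.0          # MJ/kg, threshold quoted in the text
LIMITED_COMBUSTIBILITY_LIMIT = 3.0   # MJ/kg, threshold quoted in the text


def mass_weighted_heat_of_combustion(components):
    """Return the mass-weighted average gross heat of combustion in MJ/kg.

    `components` is a list of (mass_kg, heat_mj_per_kg) pairs, one per
    component material of a non-homogeneous product.
    """
    total_mass = sum(mass for mass, _ in components)
    if total_mass <= 0:
        raise ValueError("total mass must be positive")
    total_energy = sum(mass * heat for mass, heat in components)
    return total_energy / total_mass


def classify(heat_mj_per_kg):
    """Compare a heat of combustion against the two quoted thresholds.

    Treating the boundary values as passing is an assumption of this sketch.
    """
    if heat_mj_per_kg <= NON_COMBUSTIBLE_LIMIT:
        return "non-combustible"
    if heat_mj_per_kg <= LIMITED_COMBUSTIBILITY_LIMIT:
        return "limited combustibility"
    return "above both thresholds"


# Hypothetical composite: 9 kg of a mineral core (assumed 0.5 MJ/kg) faced
# with 1 kg of polyethylene (about 46 MJ/kg, as quoted in the text).
composite = [(9.0, 0.5), (1.0, 46.0)]
average = mass_weighted_heat_of_combustion(composite)
print(f"{average:.2f} MJ/kg -> {classify(average)}")
```

In this hypothetical case a combustible facing that makes up only a tenth of the product's mass is enough to lift the averaged value well above both thresholds. This suggests why the treatment of composite products matters for classification.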

3.3 Commonalities

The bomb test and the non-combustibility tests are fundamentally different. The latter have been developed solely for the purposes of regulating materials and products on the basis of their ‘combustibility’ rating. The former uses a scientific instrument that has been appropriated for regulatory purposes. However, the ways in which the results of these tests are used are very similar; it is assumed amongst practitioners that the exact (albeit ‘low’) threshold for pass/fail does not materially affect the fire safety outcome across a huge range of fire scenarios.

In the non-combustibility tests, the criteria for flaming could be zero seconds, five seconds, ten seconds, or indeed longer—the exact value is assumed not to particularly matter with regards to the fire safety outcomes. Similarly, the threshold for the bomb calorimeter’s heat of combustion value could just as easily be set at 1.7 or 2.4 MJ/kg—again the exact value is assumed not to be significant with regards to the fire safety outcomes.

However, small changes in the threshold values set by regulators may have significant impacts on the kinds of products that can be classified as ‘non-combustible’. The selection of a particular threshold value is therefore asymmetric in terms of its impact on fire safety outcomes, as compared to its impacts on the products that may be deemed to pass or fail. In the case of the non-combustibility tests, small changes to the test setup may have a significant impact on the products that could be classed as non-combustible. Likewise, for both types of test, small changes in the acceptable threshold values could have a significant impact on whether a product can (or cannot) be deemed to be non-combustible.
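The asymmetry described above can be made concrete with a short sketch. The product names and heat of combustion values are entirely hypothetical and are not drawn from any test data. The candidate thresholds of 1.7, 2.0, and 2.4 MJ/kg echo the values mentioned in the text, and the inclusive comparison is an assumption.

```python
# Illustrative sketch only; all product values below are hypothetical.
def passing_products(heats_mj_per_kg, threshold_mj_per_kg):
    """Return the sorted names of products at or below the threshold."""
    return sorted(
        name
        for name, heat in heats_mj_per_kg.items()
        if heat <= threshold_mj_per_kg
    )


# Hypothetical gross heats of combustion (MJ/kg), all of them 'low'.
hypothetical_products = {
    "product_a": 1.5,
    "product_b": 1.9,
    "product_c": 2.2,
    "product_d": 2.6,
}

for threshold in (1.7, 2.0, 2.4):
    passed = passing_products(hypothetical_products, threshold)
    print(f"threshold {threshold} MJ/kg: {passed}")
```

All four hypothetical products release very little energy compared with, say, softwood timber, so the choice among these thresholds would be expected to make little difference to fire safety outcomes. The set of products that may be marketed as non-combustible nevertheless changes with each small shift in the threshold.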

Thus, with each change in the definition of the non-combustibility test since 1932, and with each change in the acceptability criteria, there will have been the potential for significant commercial impacts on the products that were (or were not) classified as non-combustible. A small change might cause a product that had previously been classified as non-combustible to be reclassified as combustible. This could have potentially serious consequences for product manufacturers. Small tweaks to a test made by regulators for no meaningful fire safety gain could potentially end a company’s business. Compromises over thresholds were thus a key aspect of the harmonisation of national fire test standards that led to the Euroclasses, with industry seeking to ensure the commercial viability of existing materials and products [4].

The approach historically taken by the UK government was different as there was no original need for international harmonisation. The needs of industry could most easily be met by allowing new standards to be created, whilst retaining legacy standards within the regulatory framework. The result was that by 2017 there were four methods (the oldest of which dated from 1970) which could be used by a manufacturer to demonstrate that their product was non-combustible.

4 Category 2: Model tests

4.1 The Surface Spread of Flame Test

The non-combustibility and bomb tests both involve judgements about how to define which products could be considered combustible versus non-combustible. Neither the test methodology nor the thresholds are claimed to be representative of real building fire scenarios. However, beginning in the 1940s a new kind of fire test method emerged, based around the idea that the fire hazard presented by a product in a real building could be represented by a small-scale test. The first such tests were focussed (in the UK) on the threats posed by incendiary bombs during World War II as it was recognised that materials and products did not have to be non-combustible in order to provide an ‘adequate’ level of protection from an incendiary bomb. Attention then moved towards broader regulatory application ([34], p. 47).

Prior to World War II there had been an increase in the use of building boards for construction. Many of these involved wood fibres, and were therefore combustible. Research at the UK’s Fire Research Station sought to discriminate between the hazards presented by different boarding products. Corridors were lined with different types of board and the behaviour of fires within the corridors observed. It was concluded that ‘the rate of growth of a fire was primarily influenced by the assistance given by exposed wall and ceiling surfaces to flame spread’ [35]. A laboratory test was devised to mimic this large-scale test, and to provide a measure of flame spread rate.

This ‘surface spread of flame’ test used a gas-fuelled radiant panel to heat the test specimens. The specimen and radiant panel were arranged in an L-shaped configuration, and the rate and extent of flame spread across the surface of the specimen was measured. This provided a more nuanced and complex classification system than the incombustibility test. Where fire spread was observed to be rapid and/or extensive the product was deemed of higher hazard and was ‘Class 4’; where fire spread was slow and/or confined the product was deemed of lower hazard and was ‘Class 1’, with two intermediate categories of ‘Class 2’ and ‘Class 3’. In the same manner as had been the case for the incombustibility test, the definitions of Class 1, 2, 3, and 4 were directly linked to observed behaviours in this particular testing configuration, and also in the same manner, the thresholds between the four ‘classes’ were essentially arbitrary.

However, unlike the incombustibility test, the classifications were tied (albeit loosely) to the physical phenomena that were considered of relevance in the more representative large-scale tests. This allowed the proposers of the new test method to suggest that the classification obtained during a test could be used to determine the situation in which a given product could be used within a building. They noted that: ‘The risk which can be permitted will depend largely on the situation. In passages and on staircases less risk can be tolerated than in a room, and accordingly the kind of material used in the former situations should be less susceptible to fire’ [36].

Incorporated in the BS 476 test standards in 1945, the surface spread of flame test marked an important change in regulatory fire testing. For the incombustibility test, the link between test results and building performance was based purely on judgement, with no attempt to quantitatively correlate the test results with building fire behaviours. With the surface spread of flame test, the link to reality was stronger. The test was inspired by an attempt to represent physical phenomena that were relevant in a real fire condition, and the results were used to create different classifications that corresponded to perceived acceptable flame spread rates based on the respective hazards that were considered tolerable in different end use conditions.

The surface spread of flame test, later labelled BS 476 Part 7, underwent various modifications over the years, but in 2017 it was fundamentally the same as the test that had been created during World War II. The surface spread of flame test sits within a category of tests that can be termed ‘model tests’. This is because the small-scale and inexpensive compliance test was modelled on a larger ‘scenario test’ that was the subject of underpinning research involving validation testing at larger scale; this validation testing was in turn modelled on a real-life fire scenario of relevance. Such tests, to borrow from Brannigan [19], are ‘models of models’—or what Fischer [37] termed ‘practical’ tests. For the first British surface spread of flame test, the link between the corridor and the resulting test was relatively weak. However, a precedent had been established; the subsequent decades would see the development of two other tests using the same logic.

4.2 The Fire Propagation Test

During the 1950s a series of 1/5th scale fire tests were carried out at the Fire Research Station wherein different products were used to line the interiors of compartments. It was found that products that achieved the same classification in the surface spread of flame test sometimes resulted in quite different fire behaviour in the scaled compartments ([38], p. 8). Thus, it was decided to develop a new test method which could better represent, and be used to distinguish between, the fire hazards presented by products when used within a real compartment. The idea of the new test was that it should be possible to correlate its results with the observations from the scaled compartment fire tests.

The resulting test comprised a small gas burner and two electric heaters situated inside a box with an exhaust flue. The test would commence with the ignition of the gas burners, with the reasoning that this was representative of a localised ignition source. Then, over the course of several minutes, the electrical elements were heated in order to subject the whole surface of the sample to thermal radiation—this was seen to be representative of a large source of heat coming from compartment contents burning in a real fire ([38], pp. 2, 3). The rate of temperature increase in the exhaust flue, as well as the duration of this increase, were measured. The results were then compared against the results from the 1/5th scale compartment tests.

Although the test method was developed during the mid 1950s, it was not formalised as a British Standard test until 1968 [39]. It was called the ‘Fire Propagation Test for Materials’ (BS 476 Part 6), and the results were used to generate an index. This index was used to link the results of the test to the time taken for the scaled rooms lined with the test material to become fully engulfed in fire (to ‘flashover’).

The research that linked the results of the BS 476 Part 6 tests with fire growth behaviour in a real compartment was better developed and more rigorous than for the surface spread of flame test. The new test represented a ‘model’ of the 1/5th scale compartment, which in turn was a model of a real fire scenario.

4.3 The Single Burning Item Test

A desire to remove barriers to trade inspired the creation of a harmonised pan-European fire test standard (as detailed in [4]). This involved the use of a test where a small gas burner was placed in the corner of a room. The room was lined with the construction product of interest, and the growth of the fire and time to flashover were noted. Construction products were then rated based on the heat released during the fire and how quickly the fire grew to flashover. Classifications included Class A2 (very limited burning, no flashover), Class B (the room approaching, but not achieving flashover), through to Class E (where flashover occurred after only 2 min). The logic of the classification system was based on the relevant hazard being a small, growing fire within a room. However, the ‘Room Corner Test’—as it was known—was large (3.6 × 2.4 × 2.4 m) and therefore expensive in both time and money. Thus, during the 1990s, a test was developed that was smaller and less costly, but that would give essentially the same information as regards fire hazards [40].

This was the ‘Single Burning Item’ (SBI) test (EN 13823). The SBI test was not an entire room, but rather only the corner of a room with a gas burner situated on the floor in the corner. The gas burner was ignited, and the growth rate of the fire resulting from involvement of the lining products, as well as the total heat released, was measured; the results were then compared against results from tests on the same lining products in the Room Corner Test. The researchers demonstrated a reasonable correlation between the fire growth rates in the SBI versus those in the Room Corner Test for many, but not all, products (see below). Thus, they concluded that it was possible to use the results from the SBI test to infer conclusions about the results that would be expected from an equivalent Room Corner Test [41].

A new classification system was proposed, with classes A2 to E coupled to behaviours in the SBI test. The intent was that Class A2 or B products would not induce a flashover whereas Classes C to E would provide a ‘grading’ of products that could induce a flashover within a compartment. The threshold for Class A was set to align with products that were perceived to not represent a fire hazard (i.e. totally non-combustible products, mineral wool, and plasterboard), but a desire to further distinguish between the best performing products led to splitting the A Class into two categories, A1 and A2. The SBI test was, however, insufficiently sensitive to distinguish between these classes, and therefore the bomb calorimeter and non-combustibility tests (as described above) were instead cited as the means to demonstrate whether a product was Class A1 or A2. This European reaction-to-fire classification system (called EN 13501-1, or the Euroclasses [4]) is thus a composite classification system that relies on various test methods to construct the full range of classifications. Each classification threshold is, in its own particular way, a criterion which products may pass or fail in order to obtain a classification result.

Two key differences exist between the UK national classification system and the Euroclass system. First, the room on which the Euroclasses were based (and calibrated) was a full-scale room, as opposed to the British 1/5th scale model of a room. Second, both the full-scale Room Corner Test and the SBI testing apparatus were equipped with oxygen consumption calorimetry—a novel measurement technology that could be used to measure the energy released by a fire which had been unavailable to the British researchers of the 1950s (because it had not yet been invented). The new test methods thus included more direct measurements (i.e. heat release rate) of the fire hazards that might be presented by different products when used within a room. In many respects, the Euroclass approach can be seen as following the same fundamental logic as the pre-existing UK national classification system, albeit with a greater quantification of the fire hazards thanks to more advanced diagnostics. Figure 2 shows an illustrative timeline of the development of model tests.

4.4 Commonalities

The surface spread of flame test, the fire propagation test, and the SBI test are, in their setup and arrangements, very different tests. Conceptually, however, they are strongly aligned. The surface spread of flame test established the principle that a regulatory fire test could be a model of a more realistic model scenario, and that the results of the test could therefore (in principle) be used to make inferences about the performance of a product in a real fire. The fire propagation test, and then the SBI test, reinforced this idea and drew upon larger (albeit always incomplete) datasets in order to support the inferences that were being made. Because these tests are seen as more representative of real building fire scenarios, the performance limits do not need to be set so conservatively as for the ‘unrepresentative’ tests.

As with the ‘unrepresentative tests’, the thresholds used to categorise the outcomes of each of the above tests are a matter of judgement with regards to their impact on the resulting fire safety outcomes. Small changes in the thresholds for the various classifications would likely result in small changes to outcomes in building performance. However, following the same logic as for the ‘unrepresentative tests’, small changes to the precise thresholds may have significant impacts in terms of which products may achieve a particular regulatory classification. As with the ‘unrepresentative tests’ there is considerable potential for commercial interest in the threshold values that are used to define each product classification. Moreover, all of these tests were developed on the basis that the relevant hazard was a fire growing inside a room; they were not designed to address external fire spread hazards.

5 Category 3: Technological Proof Tests

Although based on fire scenarios, the model tests described above still prioritised reproducibility over representativeness. They did not, and did not attempt to, closely mimic real building situations, and to the extent that they were based on fire scenarios, these were ones that represented internal fire spread scenarios. Concern that bench scale tests provided inadequate representation of real-world hazards arose following a cladding fire at Knowsley Heights in Merseyside in 1991 [42]. After a programme of full-scale fire tests on cladding systems at the Building Research Establishment (BRE), Connolly [43] concluded in 1994 that ‘it is clear that [the UK national tests] do not accurately reflect the fire hazards that may be associated with cladding systems’.

The response to this was to prioritise representativeness over reproducibility—and to create a more ‘realistic’ test of cladding systems that included cavity protection, insulation, and external rainscreens. This external fire spread ‘system test’ eventually became the BS 8414 test.

This test was created in an attempt to balance the demand for the use of complex cladding systems and combustible external wall materials and products, with the technical challenges resulting from an absence of underpinning scientific knowledge about how to extrapolate from smaller-scale material/product behaviour to large-scale system performance. The BS 8414 fire test thus constituted a form of ‘technological proof test’ whereby the test rig and procedures were designed to mimic, as closely as practicable, a real-world scenario.

BS 8414 was claimed to provide ‘a method of assessing the behaviour’ of cladding systems with test conditions that are ‘representative of an external fire source or a fully developed (post-flashover) fire in a room, venting through an aperture such as a window opening that exposes the cladding to the effects of external flames’ [44]. Those executing a BS 8414 test must design a mock-up of a sufficiently representative cladding system. The performance criteria by which it could be judged whether a cladding system tested in accordance with BS 8414 had passed or failed are given in a separate document, BR 135 [45]. There were a series of criteria—including the exceedance of temperature thresholds at different heights above the burning crib. A system that achieved the criteria was said to have been ‘classified’.

BS 8414 constitutes a distinctive shift from the earlier tests not only in being intended to be more realistic, but also in the extent to which it places responsibility on the test user. The supporting documentation for BR 135 notes that the classification ‘applies only to the system as tested and detailed in the classification report’ and that ‘when specifying or checking a system it is important to check that the classification documents cover the end-use application’ ([45], p. 19).

BS 8414 thus presents contradictory challenges. It requires users to claim that a test is representative of a cladding system for which they seek regulatory approval, while imposing requirements that mean that the test must differ from the actual system and application. Because BS 8414 is a standard test there are inevitable limitations on how realistic it can be. Testing a mock-up of a cladding system is clearly more realistic than the model tests described above, but BS 8414 necessarily standardises some parameters and instrumentation techniques. For example, the BS 8414 testing set-up does not include any windows, and the standard’s specifications mean that it ‘is not possible to “map” real façade geometry onto the BS 8414 rig’ [46]. In particular, the placement of thermocouples with which to measure temperature change (an important performance criterion) can be chosen in ways that ‘significantly increase the chance of test failure’, or not [46]. This means that while the requirements of the standard impose some unrealistic aspects on the test, they also provide sufficient ambiguity to enable users to game the system. Thus, Schulz et al. [46] note that ‘it is possible to change the severity of the test conditions by careful adjustment of particular parameters, while still being compliant with the test protocol’.

Those who carried out BS 8414 tests were expected to understand that the credibility of a technological proof test rests above all on a ‘similarity judgement’ [47] as to whether a tested specimen is sufficiently similar to ones used in buildings. In other words, this was far from the black-boxed logic inherent in earlier standard fire tests, where most of the expert judgement was carried out by those who designed the test standard and set the regulatory requirements. However, while the documentation supporting BS 8414 and BR 135 partially opens the black box, much of the underlying rationale remains opaque, even to users with a reasonable level of competence.

This matters because BS 8414 and BR 135 specifically rely on test users to make judgements as to whether their cladding system is sufficiently similar to their test specimen, yet those users must do so without knowing what achieving a ‘pass’ means relative to real fire hazards. The extent to which the ‘pass’ criteria represent a ‘conservative’ assessment of the performance of an external wall when the system is applied on real buildings is difficult to assess quantitatively. This question was not explicitly addressed in any Building Research Establishment or British Standards Institution publications on the matter, and the real-world rationale for the chosen performance criteria in BR 135 has never been clearly articulated. Indeed, evidence to the Grenfell Tower Inquiry from the authors of BR 135 suggests that even they do not recall the rationale behind some aspects of the test’s design [48].

BS 8414 places the onus on test users to judge whether a test is representative, but the degree to which something is sufficiently representative is open to dispute by individuals with various—potentially very different—claims to fire safety expertise [49]. For example, for rainscreen panels directly fixed to aluminium rails with a gap of 10 mm between the panels, is the gap width a parameter that has an impact on the system behaviour? Could the gap width alter the outcome of the tests? If so, what tolerance is allowable during the test: ± 0.5 mm, ± 2 mm? And what tolerance is therefore allowable on a building? To answer these questions, one must have a deep understanding of the relevant processes, and of how the gap between panels may affect the overall system performance and the resulting outcomes of the test. However, this type of test exists precisely because this knowledge is not yet (and may never be) available.

Thus, in essence, application of a cladding system classified to BR 135 always requires a similarity judgement by whoever is deemed to be the designer of the cladding system—whether they realise that they are making it or not.

Despite being intended to be a ‘proof test’, BS 8414 has been criticised for not providing a sufficiently realistic representation of the fire hazards presented by cladding systems [46]. Such a critique misses the key point. It should be obvious that BS 8414 tests can never be faithful reproductions of anything other than BS 8414 tests. In other words, the test is the test—it is what people do with the test’s results that is most deserving of scrutiny.

6 Discussion

To understand the significance of the large and varied number of tests that were used to regulate cladding systems such as that implicated in the Grenfell Tower fire (and subsequently deemed unsafe on many other UK buildings), we have developed a taxonomy that classifies these tests into three categories.

Unrepresentative tests were used to define the least hazardous materials (and, indirectly, products). They did not closely represent the real behaviours that might manifest during a fire, but the thresholds for acceptance were so conservative that there was consensus that there was no need for the tests to be representative. It could be assumed that the test results (and their pass/fail criteria) were applicable across a huge range of potential fire scenarios.

Model tests were used to differentiate between more hazardous products, thus enabling flexibility for innovation in the construction industry. To have confidence that the result of a model test was relevant to real world applications, these tests were underpinned by significant bodies of research. This linked the results of relatively unrealistic but reasonably reproducible tests to more representative fire scenarios.

A technological proof test was used when there was little understanding of how materials or products would behave in a real building situation. This technological proof test was intended to resemble a product’s application in a real building situation—to allow designers to make judgements about how a particular system would behave in a real fire.

As is typical of disasters, retrospective analysis reveals many failings, and space does not allow a full account of the Grenfell Tower disaster or the role of these tests therein. Instead, we focus on the general issues raised by an analysis of the development of UK reaction-to-fire tests. The chronology of their development shows the tests being made more representative of real building fires, but this resulted in a complex system where the value of tests as ‘yardsticks’ [11], for consideration by competent professionals, was obscured.

Two main arguments flow from this analysis. First, the history of UK reaction-to-fire testing shows a struggle to maintain credibility whilst enabling construction industry innovation. Second, this credibility hinged on inferences made as to whether a test represented a fire hazard and the degree to which such judgements were made by the designers or users of the tests.

The first point is that regulation involves balancing societal outcomes. Reaction-to-fire testing proved difficult to stabilise because of the desire to enable building industry innovation. Attempts to characterise new materials and products led to a proliferation of test methods. However, as regulators sought to more finely parse the properties measured in the tests and correlate them with real fire hazards, concern emerged about whether the tests were sufficiently representative.

The development of UK reaction-to-fire tests thus embodied judgements (albeit perhaps not explicitly made) that balanced the differing societal objectives of building safety and innovation (including better environmental performance). Tests and regulatory thresholds needed to be credible not just as regards safety, but also as regards the commercial interests of industry and the nation, in a context where politics, in the UK and elsewhere, was becoming increasingly enchanted with deregulation.

This deregulatory impulse (‘justified’ in fire safety by a steady decline in UK fire deaths from the 1970s onwards) affected not only the broader political context; it also had a direct consequence for the application of the UK fire safety expertise that had been built up in the Fire Research Station in the post-war period. In the early 1990s, needing to show its value to government and with the threat of privatisation hanging over it, BRE (which had absorbed the FRS) had an organisational incentive to enable overcladding solutions whilst being seen to mitigate their fire risks—rather than to prohibit their use. This led eventually to the BS 8414 test and BR 135 classification document, along with the associated revenue streams.

Whereas Brannigan ([19], p. 26) argues that the normal way to address ‘innovation risk’ is ‘to have a regulator with adequate discretion and expertise examine each innovative product or situation to determine whether the regulatory test is adequate to describe the risk arising from the new product’, it is naïve to think that such expertise can operate independent of the societal context. BRE’s position may have made it uniquely vulnerable to pressure, but all regulators must operate in a context of balancing differing societal goals. In this case, in an effort to maintain the ‘status quo’ for industry, innovations in standardised testing resulted in an accumulation of test methods—rather than in rationalisation—and in many potential routes to regulatory compliance.

The second issue that flows from this analysis is that different levels of black-boxing of expertise resulted in a regulatory system that was not used in the way it was intended. As our taxonomy demonstrates, not all tests were created equal.

The three categories of test methods, and the way in which they can and should be used within a regulatory system, are fundamentally different. Unrepresentative tests are the ‘bluntest tool’ in the regulatory toolbox, and their results are likely to be valid across the widest range of scenarios. In selecting both the test and the thresholds for pass/fail the test’s designers recognised that, while these may have a significant commercial impact on product manufacturers, there would be minimal impact on fire safety outcomes. Although there is a degree of arbitrariness about test design and thresholds, the resulting ratings are assumed to have almost universal validity. The credibility of these tests stems from the use of conservative thresholds, and in the case of the bomb calorimeter test, from a high degree of reproducibility.

This means that much of the responsibility for the unrepresentative test and its application falls upstream of a building designer or product manufacturer, who need rarely think about whether the test is appropriate for their particular situation. In such cases the onus to ensure that the test is fit for the purpose for which it is used falls on the test’s designers—and on those citing the test in regulations or guidance.

Model tests seek to be more representative, but the link between the test result and the real world is valid only in so far as the tested product matches the assumptions of the underlying research and reference scenario. Here, the credibility of the test stems from the claim that it provides a model that is reasonably representative of key aspects of actual building hazards (at two removes, being typically a model of a model). The model test’s designers must therefore be careful to define and articulate the test’s limitations; otherwise there is a risk that the test may be used for products or situations for which it was not intended. In addition, the model test’s users must also ensure that the test is not used for products or situations for which it was not intended. The implicit onus of responsibility is therefore split. The test’s developers, upstream of a building design process, have a responsibility to clearly define and articulate the limitations of the test method. But the building designer or product manufacturer also has a responsibility to check these limitations and to ensure that the test is not pushed beyond its credible bounds of application. In the years leading up to the Grenfell Tower fire, model tests became widely used to enable compliance of external cladding systems. This indicates that neither those who wrote the regulatory guidance nor those who used it were sufficiently concerned about the limitations of tests that had been developed on the basis of internal fire spread scenarios.

Technological proof tests are different. They rely almost entirely on similarity judgements made by the end users about each specific system. The implicit (and, in the case of BR 135, explicit) onus of responsibility falls entirely on the end user—to make a judgement about whether any particular testing outcome is applicable to any particular real-world situation.

However, the use of these different kinds of tests within England’s regulatory system in the years leading up to the Grenfell Tower fire appears not to have been in accordance with the logic set out above, as detailed elsewhere [8]. In practice, construction industry practitioners treated all three types of tests as black boxes, concerned only with the test result, and not the relevance of its application to their case. These practitioners thus did not adhere to the principle set out in British Standard 0, that: ‘it remains the responsibility of users to ensure that a particular standard is appropriate to their needs’.

The unrepresentative tests were used largely as set out above. The thresholds were set at a low level, and the tests were (relatively) sensitive in detecting the degree to which a material or product may or may not burn. The model tests, by contrast, were clearly not being deployed in accordance with the logic set out above [8]. Rather than the classification frameworks being set out with their assumptions and limitations, in practice they were implemented within the UK’s regulatory system as more of a ‘one size fits all’ classification system. Thus, so long as the literal rules of the test standards and government guidance were ‘followed’, in practice the regulatory system appears to have placed no onus whatsoever on the user to ensure that the particular testing framework applied to any specific situation was suitable for that product, or for its application on a building.

The technological proof test (BS 8414) was invoked within government guidance and BR 135 in accordance with the logic outlined above; it was made explicit that the onus was on the user to ensure that a test result was applicable to any particular situation. However, in practice it was used more as an unrepresentative or model test, with little thought apparently given to the extent to which the tested system was genuinely relevant to the end-use situation.

Each of these tests involved complex judgements about the extent to which inferences could be made from the test to building applications, as well as about what balance should be struck between safety and commerce, but these were black-boxed in the form of standard tests that were opaque to most users. Users could select from a buffet of tests based on which test was easiest to pass for their product. Manufacturers were able to gain approval for new materials and products, and designers could use insulated cladding systems on new buildings, and for renovations of existing ones, including many social housing tower blocks whose poor environmental performance made them uncomfortable and expensive to live in. And then, on 14 June 2017, a sufficiently horrific disaster destroyed this testing regime’s credibility.

Justified public outrage following the Grenfell Tower fire undercut the central rationale for both the model tests and BS 8414—that more realistic tests could so accurately gauge fire hazards as to allow innovative products, even if they contained some materials that would be rated as combustible in other tests. The credibility of these tests had rested on users making appropriate judgements as to whether a test was relevant to a particular application. However, because at least some of the logic of these tests was black-boxed, it was difficult for users to make such judgements, and easy (and convenient/attractive) for them to focus on simply achieving a ‘pass’.

Industry had demonstrated that they could not be trusted to make such similarity judgements, and the government removed their freedom to do so. A 2018 revision to the building regulations meant that only the results of unrepresentative combustibility tests could be used to gain compliance for cladding on certain classes of building above 18 m [50]. No doubt well-intentioned, this ‘ban’ provided a further example of how the black-boxing of fire tests impeded understanding of their full role in regulation.

The unintended consequences compounded the tragedy of Grenfell, ensuring that the ‘cladding crisis’ was not just a crisis of safety. Building owners, through no fault of their own, found their properties to be deemed ‘unsafe’ because of sometimes very small amounts of functionally negligible material deemed combustible in the unrepresentative tests. Affected building owners were unable to sell their properties or obtain building insurance.

The unravelling of the credibility of the testing regime thus had broad societal consequences, causing great distress in addition to that directly suffered in the Grenfell Tower fire. It provides a salutary reminder that not all tests are created equal, that realism is not the only important factor in test design, and that black-boxing of expert judgements can come at the expense of broader practitioner and societal understanding.

The wider implication of this case study is that for all regulation where governments (or standard-setting bodies) cite tests as a means for practitioners to make claims about a product or system, there is a responsibility among those making the citation to ensure that the practitioners have sufficient expertise to use the test method as intended. This requires that the underpinning testing framework is clearly and transparently communicated and that users of the system have the necessary expertise to engage with it. In the absence of such expertise, the only tests that should be cited are unrepresentative tests; these tests require no expertise from the practitioner and force conservatism on the regulated industry.

Other tests require more expertise but may deliver more freedom. Expertise is necessary for both the regulators and the practitioners, to know how tests should be used. In the absence of this expertise, there is the potential for tests to be inadvertently (or purposefully) misused.

It is our suggestion that the taxonomy of tests presented herein has the potential to allow regulators and standard-setting bodies to match the test methods they specify with the competence of the industry that will use them. In an industry characterised by ‘ignorance’, ‘indifference’, and ‘lack of clarity on roles and responsibilities’ ([2], p. 5) it would be foolhardy to specify anything other than unrepresentative tests. If an industry were characterised by ‘knowledge, care, and responsibility’, then model and technological proof tests could be more safely deployed.

Notes

Note the use of the word ‘materials’ in the title of the testing standard.

References

Shapin S (1995) Cordelia’s love: credibility and the social studies of science. Perspect Sci 3:255–275

Hackitt J (2018) Building a safer future: independent review of building regulations and fire safety: final report. London

Law A, Butterworth N (2019) Prescription in fire regulation: treatment, cure or placebo? Proc Instit Civil Eng Forensic Eng 172:61–68. https://doi.org/10.1680/jfoen.19.00004

Law A, Spinardi G, Bisby L (2023) The rise of the euroclass: inside the black box of fire test standardisation. Fire Saf J 135:103712. https://doi.org/10.1016/j.firesaf.2022.103712

Lange D, Torero JL, Spinardi G et al (2022) A competency framework for fire safety engineering. Fire Saf J 127:103511. https://doi.org/10.1016/j.firesaf.2021.103511

Spinardi G, Law A, Bisby L (2023) Vive la résistance? Standard fire testing, regulation, and the performance of safety. Sci Cult. https://doi.org/10.1080/09505431.2023.2227186

Blaikie N (2010) Designing social research: the logic of anticipation, 2nd edn. Polity Press

Bisby L (2022) Grenfell tower inquiry phase 2—regulatory testing and the path to Grenfell. Grenfell Tower Inquiry

Arber S (1993) Designing samples. In: Gilbert N (ed) Researching social life. Sage, Los Angeles, pp 58–82

MacKenzie D (1989) From Kwajalein to Armageddon? Testing and the social construction of missile accuracy. In: The uses of experiment

Downer J (2007) When the chick hits the fan: representativeness and reproducibility in technological tests. Soc Stud Sci 37:7–26. https://doi.org/10.1177/0306312706064235

Sims B (1999) Concrete practices: testing in an earthquake-engineering laboratory. Soc Stud Sci 29:483–518

Ingberg SH (1928) Tests of the severity of building fires. Natl Fire Protect Assoc Quart 22:43–46

Rein G, Torero JL, Jahn W et al (2009) Round-robin study of a priori modelling predictions of the Dalmarnock fire test one. Fire Saf J 44:590–602. https://doi.org/10.1016/j.firesaf.2008.12.008

Law A, Bisby L (2020) The rise and rise of fire resistance. Fire Saf J. https://doi.org/10.1016/j.firesaf.2020.103188

Sachs EO (1903) Suggested standards of fire resistance. In: International fire prevention congress. pp 243–248

MacKenzie D (1990) Inventing accuracy: a historical sociology of nuclear missile guidance. MIT Press, Cambridge

(2016) BS 0:2016 A standard for standards—principles of standardisation. British Standards Institution

Brannigan VM (2008) The regulation of technological innovation: the special problem of fire safety standards. In: Fire and building safety in the single European market. Edinburgh, pp 20–33

(2019) The Grenfell tower fire: a crime caused by profit and deregulation. Fire Brigades Union

Prince RE (1915) Tests on the inflammability of untreated wood and of wood treated with fire-retarding compounds. In: National Fire Protection Association proceedings of nineteenth annual meeting. pp 108–114

(1932) BS 476–1932 Fire-resistance, incombustibility and non-inflammability of building materials and structures (including methods of test). London

(1953) British standard specification, fire tests on building materials and structures, B.S. 476:1953

BSI (1970) Fire tests on building materials and structures, Part 4. Non-combustibility test for materials, BS 476: Part 4: 1970. British Standards Institution, London

ISO (1970) Noncombustibility test for building materials, ISO Recommendation R 1182. International Standards Organisation

Herpol G (1972) Noncombustibility—its definition, measurement, and applications. Ignition, heat release, and noncombustibility of materials (ASTM STP 502). American Society for Testing and Materials, Philadelphia, pp 99–111

BSI (1982) Fire tests on building materials and structures, Part 11. Method for assessing the heat emission from building materials, BS 476: Part 11: 1982. British Standards Institution, London

ISO (1979) Fire tests—building materials—non-combustibility test, ISO 1182. International Standards Organisation

(1985) Approved Document B, B2/3/4. London

(2002) Approved document B. Her Majesty’s Stationery Office

(2002) Fire classification of construction products and building elements, Part 1: Classification using test data from reaction to fire tests, EN 13501-1:2002

Babrauskas V (2017) Engineering variables to replace the concept of ‘noncombustibility.’ Fire Technol 53:353–373. https://doi.org/10.1007/s10694-016-0570-x

(2009) Fire classification of construction products and building elements—Classification using data from reaction to fire tests. BS EN 13501-1:2007+A1:2009. British Standards Institution, London

Hamilton SB (1958) A short history of the structural fire protection of buildings particularly in England, National Buildings Studies, Special Report No. 27. London

Malhotra HL (1974) Determination of flame spread and fire resistance, lecture given at the national fire protection association’s first European fire conference, Geneva, October 15–17. BRE Current Paper. UK National Archives file AY 21/24. Fire Research Station

(1944) Department of scientific and industrial research joint committee of the building research board and fire offices’ committee on fire grading of buildings (working group), one and two storey houses, 24 February. UK National Archives file DSIR 4/272. DSIR

Fischer K (1979) Developing flame retarded plastics: new guidance wanted. Fire Mater 3:167–170. https://doi.org/10.1002/fam.810030309

Hird D, Fischl CF (1954) National building studies special report 22, Fire Hazard of Internal Linings

(1968) Fire tests on building materials and structures, Part 6. Fire Propagation Test for Materials, BS 476: Part 6: 1968. BSI

Mierlo R, Sette B (2005) The single burning item (SBI) Test method—a decade of development and plans for the near future. Heron 50:

Messerschmidt B (2008) The capabilities and limitations of the single burning item (SBI) test. In: Fire and building safety in the single European market. Edinburgh, pp 70–81

Shennan P (1991) Towering Inferno. Liverpool Echo, p 1

Connolly R (1994) Investigation of the behaviour of external cladding systems in fire—Report on 10 full-scale fire tests CR143/94—Fire Research Station, 42

(2002) Fire performance of external cladding systems—part 1: test method for non-loadbearing external cladding systems applied to the face of a building, BS-8414-1. British Standards Institution, London

Colwell S, Baker T (2013) BR 135 fire performance of external thermal insulation for walls of multistorey buildings. Watford

Schulz J, Kent D, Crimi T et al (2021) A critical appraisal of the UK’s regulatory regime for combustible façades. Fire Technol 57:261–290. https://doi.org/10.1007/s10694-020-00993-z

MacKenzie D (1996) How do we know the properties of artefacts? Applying the sociology of knowledge to technology. Technological change: methods and themes in the history of technology. Harwood, London, pp 247–263

(2022) Grenfell Tower Inquiry, Day 231, February 14th, Page 176, Line 23

Law A, Spinardi G (2021) Performing expertise in building regulation: ‘codespeak’ and fire safety experts. Minerva. https://doi.org/10.1007/s11024-021-09446-5

Law A, Butterworth N (2019) Prescription in English fire regulation: treatment, cure or placebo? Proc Instit Civil Eng Forensic Eng. https://doi.org/10.1680/jfoen.19.00004

Acknowledgements

The work leading to this article was performed by the authors in their roles supporting the Grenfell Tower Inquiry. Some of the text in this article forms part of the third author’s expert witness report to the Grenfell Tower Inquiry.

Ethics declarations

Conflict of interest

The authors have no financial or non-financial interests that are directly or indirectly related to the work submitted for publication.

Appendix

See Table 1.
