Abstract

The X-ray Integral Field Unit (X-IFU) instrument on the future ESA mission Athena X-ray Observatory is a cryogenic micro-calorimeter array of Transition Edge Sensor (TES) detectors designed to provide spatially-resolved high-resolution spectroscopy. The onboard reconstruction software provides energy, spatial location and arrival time of incoming X-ray photons hitting the detector. A new processing algorithm based on a truncation of the classical optimal filter and called 0-padding, has been recently proposed aiming to reduce the computational cost without compromising energy resolution. Initial tests with simple synthetic data displayed promising results. This study explores the slightly better performance of the 0-padding filter and assess its final application to real data. The goal is to examine the larger sensitivity to instrumental conditions that was previously observed during the analysis of the simulations. This 0-padding technique is thoroughly tested using more realistic simulations and real data acquired from NASA and NIST laboratories employing X-IFU-like TES detectors. Different fitting methods are applied to the data, and a comparative analysis is performed to assess the energy resolution values obtained from these fittings. The 0-padding filter achieves energy resolutions as good as those obtained with standard filters, even with those of larger lengths, across different line complexes and instrumental conditions. This method proves to be useful for energy reconstruction of X-ray photons detected by the TES detectors provided proper corrections for baseline drift and jitter effects are applied. The finding is highly promising especially for onboard processing, offering efficiency in computational resources and facilitating the analysis of sources with higher count rates at high resolution.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The X-ray Integral Field Unit (X-IFU; [6]) is a high-resolution cryogenic imaging spectrometer that will be one of the two instruments on-board the ESA’s Athena mission [31]. It will operate in the 0.2–12 keV band and provide unprecedented spectral resolution with a Full Width at Half Maximum (FWHM) of 2.5 eV at 7 keV. The X-IFU Focal Plane will contain a large array of Transition Edge Sensors (TES; [36]) with several tens of TES per readout channel using a Time Division Multiplexing (TDM) scheme [18]. The on-board Event Processor [34, 35] hardware will reconstruct the detected events caused by the impact of X-ray photons in the detector to estimate their energy, arrival time and spatial location (based on impact pixel).

Event processing poses a significant challenge, demanding a delicate balance between achieving high energy resolution from photons and minimizing computational costs. The effectiveness of selected algorithms for working in event processing must be optimized to prevent degradation of the energy resolution caused by detector non-linearity.

Numerous studies have investigated different algorithms to characterize the energy of detected photons by X-IFU [8, 9, 12, 14]. Building upon our previous findings in Ceballos et al. [9], this paper offers a more comprehensive understanding of the ongoing efforts to identify the most suitable strategy for maximizing energy resolution with this instrument.

At the core of reconstructing X-IFU events lies the classical optimal filtering technique [38]. This method involves digitizing time stream data into fixed-length records, which are then utilized to construct the signal and noise components of the filter.

In order to construct the filter, two steps are followed (see e.g. [7, 17]). Firstly, the Discrete Fourier Transform (DFT) of the average of multiple pulse records is calculated to create the signal portion. Meanwhile, the noise portion is generated by averaging the spectra of several pulse-free records. The \(f\!=\!0\) Hz bin of the DFT, typically containing a slowly varying and arbitrary offset, is usually discarded to achieve a final filter that is zero-summed. This zero-summed filter is crucial as it effectively rejects the signal baseline during processing.

When working in the time domain, the most accurate estimate of photon energy is obtained by computing the scalar product of the data pulse and an optimal filter. This straightforward approach provides a proportional estimate of the photon energy

where d(t) is the pulse data, \(o\!f(t)\) is the time domain expression of the optimal filter and k is the normalization factor to give \(\hat{E}\) in units of energy

The matched filter (a normalized model pulse shape, S(f)) and the noise spectrum (N(f)) are used to initially build the optimal filter in frequency domain as

The optimal filtering technique relies on the assumption that all pulses are scaled versions of a single template, which is not valid for non-linear detectors like Athena/X-IFU. Therefore, \(\hat{E}\) serves strictly as an energy estimator that requires correction to obtain the final energy. This correction involves applying a gain scale obtained from filtering pulse templates measured at different calibrated energies (see Section 5). To underscore the distinction between real energies and estimated (or reconstructed) "pseudo-energies," we will employ (k)eV units for the former and (k)\(\widehat{\textrm{eV}}\) for the later.

The energy resolution of the instrument, determined after event reconstruction, is measured by the FWHM of the Gaussian broadening resulting from the instrumental setup and reconstruction algorithm, in addition to the Lorentzian natural profiles of the lines in a typical X-ray complex.

As the average value of the filtered pulse is set to 0 (specifically the \(f\!=\!0\) Hz bin), the number of samples used in the discrete expression of the data pulse and filter can influence the final energy resolution achieved through the optimal filter [17]. Increasing the record length can improve resolution, but it comes with the trade-off of higher computational demands as well as more sensitivity to low frequency fluctuations. Furthermore long filters cannot be built at high count rates due to the temporal proximity of the photon arrival.

In a previous study [14], we aimed to reduce the on board computing operations by exploring optimal filters of varying lengths and comparing their performance in terms of energy resolution.

The filters under investigation were:

-

FULL: This filter uses a pulse template obtained by maximizing the length of the data records (\(N_\textrm{FULL}\) samples).

-

SHORT: The pulse template in this filter is constructed using shorter pulses (\(N_\textrm{SHORT}\) samples), specifically half the length of the record, to save computational resources.

-

0-padding: A modified version of the FULL filter truncated to half its length in the time domain. This approach is equivalent to 0-padding the data pulses in the scalar product of filter and pulse (Section 2.1).

The analysis was conducted using synthetic monochromatic data at 6 keV simulated with the X-IFU official simulator xifusim [28].

The primary finding indicated that the 0-padding technique outperformed both the SHORT and FULL filters in terms of energy resolution. Remarkably, it outperformed the FULL filter (which is currently the baseline filter for high resolution events) despite being only half its length. This result suggests that 0-padding offers a viable alternative for reducing the computational burden associated with optimal filtering.

However, to apply these findings in real-life scenarios it was crucial to extend the analysis to more representative simulations, including photons from a typical X-ray line complex with controlled simulated energy resolution.

Moreover, the initial analysis revealed that the 0-padding filter is more sensitive to variations in instrumental conditions, especially changes in bath temperature leading to baseline drifts. Therefore, it was essential to test this approach using real laboratory data before considering 0-padding as an optimization or even a feasible alternative to the current baselined reconstruction algorithm.

This paper presents the energy resolution results obtained by applying the 0-padding filter to a realistic X-IFU simulation of the Mn K\(\alpha \) line complex and to TES (X-IFU-like) real data from the Goddard Space Flight Center (GSFC) and the National Institute of Standards and Technology (NIST) laboratories. We compare these results with those obtained using the FULL optimal filter, which serves as the baseline method at these laboratories, as well as with those obtained with the SHORT filter, equal in length to the 0-padding filter. Additionally, we assess the performance and potential systematic effects of different analysis algorithms, along with some external factors that could influence the results.

It is important to highlight that although the initial motivation of this work stems from the effort to find the optimal algorithm for reconstructing energy for the X-IFU instrument, the results presented are applicable not only to data on X-IFU-type detectors but can also be useful for other present or future TES detectors.

This work benefited significantly from the use of the software SIRENA [10, 11] (Software IFCA for Reconstruction of EveNts for Athena X-IFU)Footnote 1, a package developed to reconstruct the energy of the incoming X-ray photons after their detection in the X-IFU TES detector.

Section 2 explores mathematically the possible reasons behind the better performance of the 0-padding filter. Section 3 describes the simulations of the Mn K\({\alpha }\) line complex with xifusim and the performance of the filters on these simulated data. Section 4 provides a description of the laboratory data utilized in the analysis. In Section 5, the real-data reconstruction process is presented, and Section 6 describes and compares the two techniques utilized to fit the energy distribution and retrieve the energy resolution. Section 7 presents the results of the filter comparison in terms of the measured energy resolution. The analysis to other line complexes at energies different from the standard Mn K\(\alpha \) complex from which the optimal filters are built is described in Section 8. Finally, Section 9 summarizes the main conclusions of this work.

2 Insights into the effectiveness of 0-padding

2.1 Energy reconstruction in detail

In practical terms, the application of Eq. (1) to compute the reconstructed energy of a pulse is evaluated through a discrete sum expressed as follows

In this equation \(d(t_i)\) represents the discretized pulse, sampled at \(N_\textrm{final}\) time values denoted as \(t_i\). Likewise \(\widetilde{o\!f}(t_i)\) corresponds to the optimal filter in the time domain. For the sake of simplicity in the notation, we incorporate the normalization factor from Eq. (2) into \(\widetilde{o\!f}(t_i)\). This makes it clear that Eq. (4) is essentially a dot product calculation between the discretized pulse and the optimal filter, considering them as vectors.

Panel (a): Example of a noise-free pulse generated with xifusim, corresponding to a photon of 6 keV. The entire pulse comprises \(N_\textrm{FULL}=8192\) samples, with a pre-trigger (i.e., the data signal before the rising of the pulse) of 1000 samples and a baseline of \(\sim \! 7085\) (arbitrary) units. Panel (b): Optimal filter constructed from the same pulse displayed in the previous plot. The inset plot is a zoom-in on the vertical axis revealing that the flat part of the optimal filter contains negative numbers (below the horizontal dotted line marking the zero level). Panel (c): Cumulative sum of the dot product of the pulse and the optimal filter represented above. The filled colored circles in this panel indicate the reconstructed energy obtained when the upper limit of the summation in Eq. (4) is calculated only up to the sample indicated by the vertical dashed lines: \(N_\textrm{cut}=1100\) (orange), \(N_\textrm{cut}=4096\) (green), and \(N_\textrm{final} = N_\textrm{FULL}=8192\) (blue) for a sampling rate of 156.25 kHz

As an example, Fig. 1(a) illustrates a noise-free 6 keV pulse, which was simulated using the xifusim simulator (v.0.8.3) with an LPA2.5a instrument configuration file (1 pixel) and a sampling rate of 156.25 kHz. This configuration served as the baseline for the X-IFU instrument at the time of writing and it was consistently used throughout this paper.

In Fig. 1(b), we can observe the optimal filter computed from that pulse, making use of a noise spectrum derived from 100 000 noise streams. The cumulative sum of the dot product of the pulse and optimal filter is represented by the purple line in Fig. 1(c). As expected, when extending the sum in Eq. (4) up to \(N_\textrm{final}=N_\textrm{FULL}=8192\) (the full length of the simulated pulse) the reconstructed energy is measured as \(6.00\;\textrm{k}\widehat{\textrm{eV}}\), as indicated next to the blue filled circle.

It is important to note that the cumulative sum initiates as negative, reaches a peak around sample 1800, and then decreases monotonically. This pattern is predictable, as the most significant part of the pulse has been included in the scalar product by the time the cumulative sum reaches the peak. Beyond that sample, the optimal filter remains relatively constant and negative, while the pulse mainly consists of the baseline value due to the completed exponential decay of the pulse.

In an effort to grasp the impact of filter truncation on the estimation of the pulse energy using the 0-padding filter, we can decompose the dot product of Eq. (4) into two components,

where \(N_\textrm{cut}\) is an intermediate time sample that represents the point selected to truncate a FULL filter to construct a 0-padding filter. \(\hat{E}_{\textit{0-pad}}\) corresponds to the reconstructed energy obtained by performing the dot product using the first \(N_\textrm{cut}\) samples. Specifically, we emulate the 0-padding optimal filter as examined by Cobo et al. [14] by selecting \(N_\textrm{cut}=4096\). This is illustrated in Fig. 1(c) with the vertical green dashed line. In this particular case, the calculated energy is \(\hat{E}_{\textit{0-pad}}=16.60\;\textrm{k}\widehat{\textrm{eV}}\) (green filled circle), which is significantly higher than the expected 6.0 keV value. This discrepancy is in line with the fact that the second term in Eq. (5) is negative. An even more extreme 0-padding scenario can be achieved by computing the summation only up to \(N_\textrm{cut}=1100\). This is shown by the vertical orange dashed line in the same figure, resulting in \(\hat{E}_{\textit{0-pad}}=12.62\;\textrm{k}\widehat{\textrm{eV}}\).

As previously mentioned in the introduction, the optimal filter is computed from a single-energy template, making each reconstructed energy an energy estimation that needs to be converted into a real energy using a gain scale conversion. To achieve this, we simulated noise-free pulses with energy values ranging from 0.5 to 12.0 keV in increments of 0.1 keV using xifusim. By applying the optimal filter computed using the 6.0 keV pulse as the template, we determined the corresponding reconstructed energies. Subsequently, we fitted an eleventh degree polynomial to the relationship between the real (simulated) energies and the reconstructed energies. This relationship is presented in Fig. 2 for the three filters depicted in Fig. 1(c): FULL (\(N_\textrm{FULL}=8192\); blue line), 0-padding (\(N_\textrm{cut}=4096\); green line), and extreme 0-padding (\(N_\textrm{cut}=1100\); orange line). This figure also represents the corresponding gain scale for the SHORT filter (\(N_\textrm{SHORT}=4096\)), although it is visually indistinguishable from the FULL filter curve.

These gain scales, particularly those corresponding to 0-padding and extreme 0-padding, are responsible for transforming the reconstructed energies \(\hat{E}_{\textit{0-pad}}\) of \(16.60\;\textrm{k}\widehat{\textrm{eV}}\) and \(12.62\;\textrm{k}\widehat{\textrm{eV}}\), respectively, into calibrated energies of 6.00 keV in both cases.

The crucial aspect to comprehend here is how the uncertainties in the measurement of the energy are affected by the 0-padding truncation and how these uncertainties change when the corresponding gain scale correction is applied.

Gain scales computed for different filters: FULL (\(N_\textrm{FULL}=8192\); blue line), 0-padding (\(N_\textrm{cut}=4096\), using the FULL filter data; green line), and extreme 0-padding (\(N_\textrm{cut}=1100\), using the FULL filter data; orange line). The gain scale for the SHORT filter (\(N_\textrm{SHORT}=4096\)) is indistinguishable from the FULL filter curve in this representation. The three horizontal grey dashed lines correspond to real energies of 1, 6 and 11 keV, from bottom to top. The tangent lines to the gain scale curves at the intersection with those three horizontal lines are shown with black line segments, and the corresponding slope values are displayed next to the intersection points (the values associated with the SHORT filter are given in red color between parentheses)

2.2 Propagation of random uncertainties

To get an initial understanding of how the truncation introduced in the 0-padding approach affects uncertainties (and consequently impacts the measured energy resolution), we begin by examining the random uncertainty associated to the computation of the energy estimator given by Eq. (4). It is worth noting that systematic effects arising from our incomplete knowledge of the system will be addressed during the application of the gain scale correction.

In a simplified scenario, we disregard the random uncertainties in the optimal filter in comparison with those in the pulse. This approximation is justified given that the optimal filter, in real-world situations, is derived from an average pulse (achieved by averaging pulses corresponding to photons of a certain energy). The random uncertainty in each sample of this averaged pulse is anticipated to be significantly less than the random uncertainty associated with each sample of individual pulses, the energy of which we aim to determine.

Even though there are specific frequencies with more noise in the system, we will operate under the additional approximation that the noise within different time samples of a specific pulse is uncorrelated, which facilitates the following computation. Applying the law of propagation of uncertainties to Eq. (4), we obtain

In this equation, we have approximated the uncertainty at each sample of the pulse \(\Delta d(t_i)\) by its average value \(\Delta d\). We have also split the final expression into two terms, similarly to what we have done in Eq. (5), to clarify the computation in the 0-padding case. The resulting expression suggests that the expected uncertainty in the reconstructed energy scales with the sum of the squares of the optimal filter values.

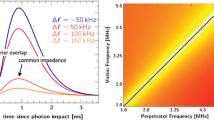

Panel (a): Comparison of the FULL (\(N_\textrm{FULL}\!=\!8192\); blue line) and SHORT (\(N_\textrm{SHORT}\!=\!4096\); red line) optimal filters constructed from a noise-free pulse corresponding to a photon of 6 keV. The inset plots are zooms that highlight the differences between both filters for values close to zero, subplot (a1), and for values around the maximum and minimum filter peaks, subplots (a2) and (a3), respectively. Panel (b): Cumulative sum of the squared FULL (blue line) and SHORT (red line) optimal filters displayed above. The inset is a zoom of the grey shaded region of the diagram, highlighting the smooth increase of the curves beyond the time samples where most of the information of the pulse is concentrated. The filled symbols indicate the summation factor in \((\Delta \hat{E})^2\) from Eq. (6) for the filters SHORT (\(N_\textrm{final}\!=\!4096\); red circle), and FULL (\(N_\textrm{final}\!=\!8192\); blue circle). The open symbols depict the corresponding summation factor in \((\Delta \hat{E})^2_{\textit{0-pad}}\) resulting from the truncation of the FULL filter for 0-padding (\(N_\textrm{cut}\!=\!4096\); green open square), and 0-padding extreme (\(N_\textrm{cut}\!=\!1100\); orange open triangle)

Considering the typical shape of the optimal filters, it is clear that the most significant contributions to the uncertainty described by the sum in Eq. (6) occur at the time samples where the pulse exhibits its abrupt increase and subsequent decline. This behavior is evident in Fig. 3. In particular, panel 3(a) compares the FULL (\(N_\textrm{FULL}\!=\!8192\); blue line) and SHORT (\(N_\textrm{SHORT}\!=\!4096\); red line) optimal filters, computed from the same 6 keV pulse depicted in Fig. 1(a). The insets highlight the differences between them. In addition, panel 3(b) represents the cumulative sum of the squared values for both filter data. These curves demonstrate a drastic change starting around sample 1000 (where the pulse is triggered). They stabilize beyond the sample where most of the pulse’s exponential decline has occurred, after which they flatten.

Interestingly, the inset figure in panel 3(b) (a zoomed-in view of the graph region demarcated by the shaded grey rectangle) reveals that the displayed cumulative sums indeed exhibit a modest, but not negligible, positive increase after sample \(\sim \! 1800\). The filled colored circles indicate the summation factor in \((\Delta \hat{E})^2\) from Eq. (6) for the FULL and SHORT filters. Analogously, the open symbols represent the summation factor in \((\Delta \hat{E})^2_{\textit{0-pad}}\), resulting from the truncation of the FULL filter for 0-padding (\(N_\textrm{cut}\!=\!4096\); green open square), and 0-padding extreme (\(N_\textrm{cut}\!=\!1100\); orange open triangle). The value for the 0-padding case is slightly below the one corresponding to the FULL filter, as expected considering the second term in the last expression of Eq. (6), which is always positive but only incorporates optimal filter values very close to zero. In addition, the 0-padding value is substantially below the one associated with the SHORT filter. This result is also easy to understand looking at the insets of Fig. 3(a), where the SHORT filter exhibits larger absolute values than the FULL filter in most samples.

Given the earlier approximation, where the uncertainty \(\Delta d(t_i)\) at each pulse sample can be represented by its mean value \(\Delta d\), the previous results imply that the uncertainty in the reconstructed energy obtained with 0-padding should be marginally smaller than the uncertainty associated with the FULL filter estimate. Moreover, it should be noticeably smaller than the uncertainty linked to the SHORT filter.

It is crucial to note that while the aforementioned results highlight the relative significance of uncertainties in reconstructed energies, these energies still require conversion to a real scale using the appropriate gain scale transformations.

When focusing on pulses produced by photons within a narrow energy interval (like our 6 keV simulated pulses), the application of the gain scale can be approximated by a linear transformation. Under these circumstances, the propagation of uncertainties depends solely on the slope of this transformation. Interestingly, the derivatives illustrated in Fig. 2 for the FULL and 0-padding gain scales at a fixed real energy are identical within four significant figures. This suggests that the gain scale of the 0-padding filter is the same as the one corresponding to the FULL filter except for a horizontal shift in this diagram.

As a result, the uncertainties associated with the reconstructed energies are modified by the same factor when converted into real energies upon applying the gain scale correction. This accounts for why the uncertainties in the final energies obtained with the 0-padding filter remain slightly smaller than those associated with the FULL filter. The same comparison holds true when evaluating the 0-padding and SHORT filters.

We have quantified this effect using 1 000 000 monochromatic noisy 6.0 keV pulses simulated with xifusim, whose reconstructed energies were computed using the four filters FULL, SHORT, 0-padding and 0-padding extreme, and later transformed into a real energy scale using their corresponding gain scale corrections. The mean energies obtained in each case, together with the associated dispersion expressed as FWHM, are summarised in Table 1.

For a given filter, the FWHM corresponding to the reconstructed energies is stretched by the slope values indicated at the locations of the filled circles in Fig. 2: 1.133 (FULL), 1.136 (SHORT), 1.133 (0-padding) and 1.526 (0-padding extreme). When we move from reconstructed energies to gain-scale corrected energies we observe an increase of \(\sim 13\)% in FWHM for the FULL, 0-padding and SHORT filters. Interestingly, the FWHM of the mean energy reconstructed with the 0-padding extreme filter was the smallest (1.419 eV); however, when applying its gain scale transformation, this value is stretched by \(\sim 53\)% (2.166 eV), making it the worst option.

Panel (a): histogram of FWHM values corresponding to the energy resolutions obtained with different optimal filters (as indicated in the legend) for 100 simulated TES pixels observing 10 000 monochromatic 6 keV pulses each. The mean values and standard deviations correspond to the values listed in column (6) of Table 1. Panel (b): comparison of the individual 100 FWHM values whose histograms are displayed in panel (a), using for the horizontal axis the ones retrieved using the 0-padding filter. The dashed line indicates the 1:1 relation. Note that all the points appear above this line

It is essential to emphasize that the uncertainty quoted in each FWHM value, as presented in Table 1 columns (4) and (6), has been calculated by dividing the simulated dataset into 100 sub-samples, each containing 10 000 pulses. The standard deviation of the resulting 100 FWHM estimates was then computed and divided by the square root of 100 to obtain the uncertainty in the mean. This process is analogous to having 100 identical TES, each collecting 10 000 monochromatic 6 keV pulses. Although there may appear to be small differences in FWHM among different filters, a statistical analysis must be conducted considering these expected uncertainties. This analysis must account for the fact that the FWHM values obtained with different optimal filters are paired for a specific simulated TES, meaning each subset of 10 000 pulses in the simulated dataset. In this regard, although Fig. 4(a) shows some overlap in the histogram distributions of the 100 FWHM estimates corresponding to the different optimal filters listed in Table 1, the mean FWHM values are statistically different. Figure 4(b) visually represents this difference, indicating that the 0-padding estimate is consistently lower. A Wilcoxon signed-rank test for paired data [42] (non-parametric) rejects the null hypothesis that the FWHM obtained using FULL, SHORT, and 0-padding extreme are lower than the FWHM obtained with 0-padding, with a p-value of zero in all three comparisons.

The outcome is not unexpected when we employ \(N_\textrm{cut}\!=\!1100\). At this cut-off, we are disregarding vital information present in the pulse data, as the exponential decay is still evident at that time sample. Consequently, the signal-to-noise ratio of the reconstructed energy would be significantly lower than when considering all the informative pulse samples. Furthermore, the larger slope in the corresponding gain scale transformation would further degrade the energy resolution.

As a final validation of all the approximations leading to Eq. (6), we have verified the proportionality between the energy uncertainty (\(\Delta \hat{E}\)) and the noise in the pulse (\(\Delta d\)) with the help of additional numerical simulations. In particular, we have simulated monochromatic noisy pulses using as starting point the prediction of xifusim for a 6.0 keV noiseless pulse, and adding Gaussian noise with varying standard deviation. After computing the reconstructed energy using the optimal filters FULL, SHORT, 0-padding, and 0-padding extreme, we have applied their gain scale transformations to obtain the corresponding corrected energies and associated FWHM. In Fig. 5 we represent the difference between the final FWHM values obtained with FULL, SHORT, and 0-padding extreme, compared to the FWHM corresponding to 0-padding method, as a function of the noise (standard deviation) in the pulse. Each filled circle represents 100 000 simulated noisy pulses, whereas the thin lines correspond to the prediction \(\Delta \hat{E} \propto \Delta d\), where \(\Delta d\) is the assumed standard deviation in the pulse, as shown on the horizontal axis of this figure. We find that the 0-padding technique consistently performs slightly better than FULL and is significantly superior to both SHORT and 0-padding extreme. This advantage is particularly pronounced as the noise level in the pulse increases.

Comparison of energy dispersion values (FWHM) derived from various filters. The differences between the FWHM values derived from FULL, SHORT, and 0-padding extreme with respect to the FWHM of the 0-padding filter are plotted against the standard deviation \(\Delta d\) in the pulse. Each filled circle represents 100 000 simulated noisy pulses. Thin lines indicate the theoretical prediction \(\Delta \hat{E} \propto \Delta d\), where \(\Delta d\) is the assumed standard deviation in the pulse

In conclusion, the aforementioned discussion has demonstrated that a 0-padding filter constructed from a truncated FULL filter tends to slightly outperform the latter, as long as the truncation occurs at a time sample where the essential pulse information has already been captured by the first term in Eq. (5). In such cases, the gain scale slope at the reconstructed energy in the FULL and the 0-padding filters is notably similar. The small increase in noise experienced by the FULL filter due to the inclusion of unnecessary samples in the dot product computation is consequently translated into the gain-scale corrected energies.

3 X-ray line complex simulation

Before delving into the analysis of real data, the next step after the analysis of the performance of the optimal filters on 6 keV monochromatic simulated pulses, involves running more realistic simulations, generating pulses following a theoretical profile of standard X-ray line complexes used in laboratories: specifically the Mn K\(\alpha \) complex. To achieve this, we also utilized the xifusim simulator.

A laboratory-measured Mn K\(\alpha \) complex is the convolution of the natural Lorentzian profile of the X-ray lines and the Gaussian broadening caused by the instrumental setup. The resulting profile of this Lorentzian-Gaussian convolution is referred to as a Voigt profile [40].

The process of generating lists of photon energies within the Mn K\(\alpha \) complex for the simulations, involved following their Lorentzian line profiles with the line parameters described in Table 2. To broaden the lines in a manner similar to the instrument’s behaviour, we included an intrinsic controlled Gaussian profile with varying widths. We randomly selected 300 uniform values of FWHM between 0.7 and 2.3 eV for this purpose. This interval was chosen to get final broadened FWHM values in the range from 2.2 to 3.0 eV, similar to the one measured with the laboratory pixels (see Section 6). For each intrinsic width value, we constructed a Mn K\(\alpha \) complex randomly drawing 10 000 photon energies with the appropriate distribution (again, this number was selected to reproduce the typical number of photons/pixel in the laboratory data of Section 6).

In practice, to calculate the energy of each photon we inverted the cumulative distribution function (CDF) of the line complex. This was achieved by using a uniformly distributed random number between 0 and 1 as input to the CDF. The CDF of the line complex was computed by adding the expected CDF of each lineFootnote 2.

These lists of photon energies served as inputs for the xifusim simulator which generated a current pulse for each photon.

To gain further insights into the factors influencing the performance of the filters, we devised an additional analysis to differentiate how noise in the filter and noise in the pulse affected the reconstruction process and the determination of the energy resolution. In this case, the way to determine the energy resolution is by measuring the Gaussian FWHM broadening that affects the Lorentzian profiles of the lines in the complex. The instrument magnifies the simulated Gaussian width, and measuring the final FWHM for each set of simulations allowed us to analyze and compare the impact of the instrument on the performance of the different filters under conditions similar to those of the laboratory data in Section 6.

During the simulations, we generated two sets of pulses: one with the nominal xifusim noise and the other with the nominal noise enhanced by a factor of 5. For constructing the optimal filters we used a noiseless pulse template at 6 keV, which is close to the mean energy of the Mn K\(\alpha \) complex. Additionally, we derived a noise spectrum from the average of 100 000 instrument-expected noise streams or white-noise streams. We included the case of white noise, even though it is not realistic for a real instrument, as it simplifies calculations, and in this scenario, the optimal filter reduces to just the pulse template [38].

The reconstruction of the pulse energies was performed using the three filters introduced in Section 1 which were also used in the analysis of monochromatic pulses as discussed in Section 2. The 0-padding extreme filter is no longer relevant in the following discussion as it does not provide reasonable values for the energy resolution. The lengths of these filters were as specified in Table 1. For the energy calibration of the Mn K\(\alpha \) photons, the gain scale derived from the monochromatic simulations was utilized.

Comparison of Gaussian FWHM values obtained after optimal filter reconstruction of xifusim simulated pulses under different noise conditions. Panels (a) and (c) depict pulses simulated with the expected instrumental noise while panels (b) and (d) show pulses with noise enhanced by a factor of 5. The optimal filter is constructed from a noiseless template in all cases. The noise spectrum part of the filter was generated using expected-noise streams (for panels (a) and (b)) and white-noise streams (for panels (c) and (d)). In each panel, the left figure displays the quadratic difference between the FWHM value obtained with each filter (blue, red and green symbols for FULL, SHORT and 0-padding filters respectively) and the simulated FWHM. In panel (a) this accounts for the squared instrumental resolution in xifusim. The figures on the right of each panel display the difference between the FULL and SHORT filter FWHM values (blue and red symbols respectively) and the 0-padding FWHM value. In all plots, differences are plotted against the Gaussian FWHM values used in the simulations

The results of applying the different filters are presented in Fig. 6. This figure displays the recovered Gaussian FWHM values of the four different noise combinations in both the pulses and the filters. As anticipated, the FWHM values obtained are greater than the intrinsic simulated values, indicating the effect of the detector broadening the complex lines.

From these plots, several conclusions can be drawn. When simulating pulses with the nominal noise (panels (a) and (c)), the analysis of the simulations revealed that the 0-padding filter performed slightly better than the FULL filter and clearly outperformed the SHORT filter. This is true at least under ideal instrumental operational conditions, i.e. in the absence of baseline drifts or jitter effects (see Section 5). The right figures of the panels clearly show the relative difference of the FWHM values they produce, with respect to the 0-padding value.

The slight difference in the FWHM value range between panels (a) and (c) may be attributed to the fact that the noise conditions for the pulses and the optimal filter in panel (a) are the same, representing realistic instrumental noise. In contrast, in panel (c), the filter was constructed with white noise, which did not fully replicate the conditions of the pulse simulations. As a result, this led to slightly larger resolution values.

Interestingly, when we artificially increased the noise of the pulses (panels (b) and (d)) we observed a similar behavior in the filters, albeit with a more pronounced difference in the resolution values. This reaffirms our previous observation from the analysis of monochromatic pulses in Section 2 that the better performance of 0-padding scales with the level of instrumental noise.

Additionally, the similarity of the resolution values regardless of the filter noise conditions (nominal or white-noise) indicates that the dominant factor influencing the filter performance is the noise present in the pulses as already discussed in Section 2.

Given that the ideal non-varying conditions defined during the simulations may not reflect the realistic conditions encountered during the detector operations (on-board or in the laboratories), it becomes crucial to verify whether the corrections implemented during the reconstruction process on real data are adequate to ensure the differential performance of the filters observed in the simulations.

For this purpose, the next step involves the analysis of realistic TES laboratory data.

4 The laboratory data

The measurements used in this analysis were taken on a 1-k pixel prototype X-IFU array developed by NASA/GSFC. Up to 250-pixels in the array can be readout using 8-column \(\times \) 32-row TDM developed by NIST/Boulder. X-rays are generated by fluorescing different metallic and crystal materials, which enables measurements over the energy range 3.3 keV (K K\(\alpha \)) to 12 keV (Br K\(\alpha \)). Full details on the design and performance of the detector and readout can be found in Smith et al. [36] and Durkin et al. [18].

Specifically, the data analyzed in this work belong to three datasets with different X-ray line complexes, count rates and bath temperature drifts:

-

10Jan2020 (GSFC): initial dataset with several line complexes and \(8\times 32\) channels in the detector.

-

30Sep2020 (GSFC): lower count rate dataset of line complex Mn K\(\alpha \) (\(8\times 32\) channels), to avoid an additional effect on the energy resolution caused by a possible imperfect removal of cross-talk events which could contaminate the Mn complex.

-

LargeTdrift (NIST): two column measurement (\(2\times 32\) channels) taken with the NASA Large Pixel Array (LPA) array at NIST in a cryostat that exhibits much larger drift. This dataset was used to test 0-padding reconstruction under conditions of worse temperature stability.

Laboratory data are stored in triggered records of data streams containing the current pulses induced by the X-ray photons. A typical record with a single pulse is displayed in Fig. 7 for the three different datasets. As shown, the pulses differ in both the total length of the data record and the pre-trigger length.

To construct the optimal filter used in the data reconstruction, the pulse template used for creating the signal part was obtained by averaging a large number of isolated Mn K\(\alpha \) pulses at 5.9 keV, as monochromatic as possible. To achieve this, records containing multiple pulses and pulses with heights outside the range of the Mn K\(\alpha \) complex were excluded from consideration. Furthermore, records contaminated by cross-talk events (events produced by a close-in-time impact of another X-ray photon in a different pixel of the same TDM readout column) were also removed from the analysis.

For the noise spectrum, we selected the cleanest set of noise records, ensuring they were free from instrumental artifacts or undesirable effects. Records that produced the largest residuals from the mean noise spectrum were excluded from the selection.

Using the above mentioned pulse template and noise spectrum, we constructed the three types of optimal filters introduced in Section 1 and utilized in Section 2 and Section 3.

The specific lengths of the filters in the analysis are detailed in Table 3. It is worth noting that not all the samples in the records were used because the final samples were discarded to avoid alignment problems during template creation. Additionally, for the case of LargeTdrift, the pre-trigger length was reduced due to the shorter pulse length.

5 Data reconstruction

The energy of the pulses generated by laboratory X-ray photons is estimated using SIRENA through optimal filtering, as described in Section 1.

Initially only photons from the Mn K\(\alpha \) complex, which were used to construct the filter template, were utilized to study the detector’s energy resolution. This approach was chosen to minimize any imperfection in the TES non-linearity correction performed by the gain scale transformation.

5.1 Energy calibration

Similar to the simulations presented in Sections 2 and 3, a gain scale correction is applied to obtain the real energies from the initial energy estimations.

The adopted procedure for energy calibration has been developed to ensure its automatic application and it is illustrated in several steps as depicted in Fig. 8. To begin, we determine a global offset between an already calibrated pixel (orange curve) reconstructed with the FULL filter, and a pixel of interest (blue curve) reconstructed with 0-padding. The energies of this reference pixel were refined with a gain scale correction obtained from a manual identification of the line complexes whose energies are listed in [21] and tables in Section 8. This first step is illustrated in Fig. 8(a). The offset represents the energy difference between the corresponding Mn K\(\alpha \) complex peaks. By applying this global offset we can clearly observe the discrepancy in the energy scale between the reference and the pixel of interest, as shown in Fig. 8(b).

To address the distortion in the energy scale, we initially perform a linear fit by cross-correlating the reference and the pixel of interest within the energy interval containing the Cr K\(\alpha \) and Mn K\(\beta \) complexes, using a varying stretching/shrinking coefficient, as shown in Fig. 8(c). The maximum value in this figure indicates the scale deformation, where a negative coefficient corresponds to energy scale shrinking, and a positive coefficient corresponds to stretching. The linear correction is then applied, as shown in Fig. 8(d), with the green line representing the energy of the pixel of interest after aligning it with the reference data using the required stretching coefficient.

However, it becomes evident that a linear correction alone is inadequate to achieve precise energy calibration across the entire available energy range, as illustrated in Fig. 8(e). To obtain a more refined energy calibration, we identify the line complexes above a predefined relative threshold, as shown in Fig. 8(f). In this process, we use the initial linear correction derived from the Cr K\(\alpha \)–Mn K\(\beta \) region to predict the expected location of subsequent line complexes at both lower energies (complexes V K\(\alpha \), Ti K\(\alpha \), and Sc K\(\alpha \)) and higher energies (Co K\(\alpha \), Ni K\(\alpha \), Cu K\(\alpha \), Zn K\(\alpha \), Ge K\(\alpha \), and Br K\(\alpha \)). This allows us to compute a gain scale correction, as depicted in Fig. 8(g), which is fitted using a fifth-degree polynomial. The choice of a fifth-degree polynomial is due to the smaller number of reference energy points compared to the simulations. The application of this gain scale correction results in the corrected energy scale, as seen in Fig. 8(h).

Illustration of the energy calibration procedure. Panel (a): Determination of a global offset between the pixel of interest (blue, reconstructed with 0-padding filter) and an already calibrated reference pixel (orange, reconstructed with FULL filter) using the Mn K\(\alpha \) complex peaks. Panel (b): Application of the global offset, highlighting the energy scale discrepancy between the reference and pixels of interest. Panel (c): Initial linear fit to the energy distortion achieved by cross-correlating the reference and pixels of interest in the Cr K\(\alpha \)–Mn K\(\beta \) region. Panel (d) Application of the linear correction to align the problem pixel with the reference data. Panel (e): Inadequacy of the linear correction for a precise energy calibration across the full energy range. Panel (f): Identification of line complexes above a predefined relative threshold for a refined calibration. Panel (g): Computation of the gain scale correction using the initial linear correction for a progressive identification of neighbouring line complexes. Panel (h): The resulting corrected energy scale after applying the gain scale correction

5.2 Baseline drift and jitter corrections

Once the energies of the photons are brought to the correct energy scale, they need to be corrected for instrumental variations that occur during data acquisition. The most notable effects are attributed to baseline time drift caused by instabilities in the TES setup’s bath temperature, and the offset between the physical/real arrival time of the photon and its measured/digitized arrival time (jitter) [24].

These two corrections are applied sequentially using a cross-correlation technique, as illustrated in Fig. 9. In this example, gain scale calibrated data in the Mn K\(\alpha \) energy range (shown as the small blue points in panel 9(b)) are displayed as a function of the time index indicating the arrival time order of the pulses.

Illustration of cross-correlation method for baseline drift and jitter corrections. Panel (a): A comparison between the expected energy histogram of the Mn K\(\alpha \) complex (thick blue line) and a sample histogram (thin green line) obtained by combining photons within a relatively narrow time window. Panel (b): Gain scale calibrated data (small blue points) displayed as a function of the time index indicating the arrival time of the pulses. The shaded green rectangle exemplifies the width of the moving window used to compute the sample histogram at different times. The green filled circles indicate the relative offsets measured by cross-correlating the two histograms displayed in the top panel

The first step involves computing an expected energy histogram, represented by the thick blue line in panel 9(a), based on an assumed initial Gaussian FWHM for the theoretical line complex Voigt profile. Next, a sample histogram (thin green line) is computed by considering the data values enclosed within a moving window of a fixed width (hereafter referred to as the xwidth parameter), represented by the green shaded rectangle in panel 9(b).

The cross-correlation of both the expected and the sample histograms provides the average energy offset for the data points within the moving window, depicted as green filled circles in panel 9(b). As each time window contains xwidth photons and yields only one offset estimate, a final correction for each individual photon is determined by fitting a low-order polynomial to the derived offsets. A Savitzky-Golay interpolation with second degree polynomials and a predefined number of points (referred to as the smooth parameter) can be used for this purpose. In cases where an abrupt energy offset is detected, as observed after the first three computed offsets presented in panel 9(b), the data to be interpolated are split into subsets separated by these energy jumps. This approach is adopted to prevent interpolation attempts across the energy jumps (when present). If the number of data points within a subset falls below the specified smooth parameter, a straightforward linear interpolation is utilized.

After correcting the energy of each photon, the procedure is repeated for a few iterations. Before each new iteration, the average energy-corrected data histogram is recomputed and its Gaussian FWHM is fitted (for a detailed description of the fitting procedure, refer to Section 6). This fitted FWHM value is then used to create a new expected histogram. Typically, the use of 3 iterations is sufficient to achieve convergence.

Additionally, we tested the cross-correlation method by using the global averaged data histogram as the expected histogram, instead of a theoretical profile. This alternative approach yields the same correction, although its convergence is slower.

The choice of the cross-correlation window size (xwidth parameter) is crucial as using too small a value leads to noisy offset estimates, while too large a value only provides a coarse-grained determination of the energy variation we aim to correct. If we were to unrealistically make xwidth too small, it would result in the offset correction overcompensating for the actual energy displacement. To investigate the potential bias introduced by a too small xwidth parameter, we conducted numerical simulations using 30 series of \(1.6\times 10^6\) photons following the Mn K\(\alpha \) complex distribution, assuming a Gaussian FWHM of 2.2 eV and without any distortion in the energy scale. The cross-correlation method was applied with xwidth values ranging from 51 to 601 and smooth values of 11, 31 and 51 points.

The results for the Gaussian FWHM and global energy offset of the corrected line complexes are displayed in Fig. 10, revealing that the cross-correlation method tends to slightly over-correct the FWHM, especially for small xwidth and smooth parameters (see panel 10(a)). At the same time, it introduces a minor energy offset in the mean energy of the Mn K\(\alpha \) complex (see panel 10(b)). A horizontal dashed line at the ratio \(\mathrm{FWHM(fit)}/\mathrm{FWHM(sim)}=0.99\) is drawn in panel 10(a) to indicate a 1% threshold for the resolution over-correction introduced by the cross-correlation method. This value is well below the 6% resolution calibration requirement of the X-IFU. Based on the analysis of these simulations, we have decided to adopt \(\texttt {xwidth}=201\) and \(\texttt {smooth}=11\) as the default choices for the baseline drift and jitter corrections.

Impact of cross-correlation window size (xwidth parameter) and polynomial smoothing factor (smooth parameter) on fitted Gaussian FWHM. Panel (a): Ratio of fitted Gaussian FWHM to simulated Gaussian FWHM as a function of cross-correlation window size. Panel (b): Energy offset in the reconstructed data with varying window sizes for cross-correlation corrections. Both plots consider three smoothing factors: 11 (blue dots), 31 (orange dots), and 51 (green dots) points

The graphical illustration of the correction procedure, applied to pixel 1 in the 10Jan2020 dataset, is shown in Fig. 11, clearly depicting the baseline variation that occurred during data acquisition and the slight curvature of the energy dependence with the phase (jitter).

Corrections of Mn K\(\alpha \) complex pulses for TIME (linked to baseline) and TIME+JITTER effects, using pixel 1 in the 10Jan2020 dataset. The left column represents the baseline drift correction, while the right column shows the jitter correction. Panel (a): Gain scale corrected data (small blue dots) and baseline of the corresponding pulses (gray points), plotted as a function of a time index indicating the arrival time of the pulses. Panel (b): Energy offsets derived by the cross-correlation method as illustrated in Fig. 9. Panel (c): TIME-corrected data (orange) plotted on top of the original data (blue). Panel (d): TIME-corrected data (SIGNAL_cTIME) from panel (c), plotted as a function of the phase in the range of \(\pm 0.5\) samples (PHI05). Panel (e): Energy offsets computed by applying the cross-correlation method again. Panel (f): TIME+JITTER-corrected data (green) shown on top of the only TIME-corrected data (orange). The magenta lines in panels (c) and (f) represent the correction curves already displayed in the middle panels (b) and (e), but at the same reconstructed energy scale. This illustration is used to demonstrate the actual magnitude of the applied corrections

6 Energy resolution determination

To determine the energy resolution (FWHM of the Gaussian component), for both the simulated Mn K\(\alpha \) complex and the laboratory data, we employed two independent approaches: standard fitting of the energy distribution histogram and a new procedure based on the cumulative distribution function (CDF; [8]).

6.1 Histogram fitting

The histograms of the corrected-calibrated energies obtained by applying the different filters were fitted using the Fitting module of AstroPy [3, 5] employing the Levenberg-Marquardt algorithm and least squares statistic (LevMarLSQFitter).

For the Mn K\(\alpha \) complex, we utilized a model that simultaneously fits the eight Lorentzian profiles described in Table 2 along with an additional Gaussian broadening. The relative intensities of the Lorentzian lines are tied, and the distance between the line centers is also tied relative to the location of a single line. The FWHMs of the Lorentzian profiles are fixed. The Gaussian broadening is a free parameter and is common for all the lines. The FWHM of this Gaussian broadening is used as the figure of merit to quantify the energy resolution of the detector.

During the AstroPy fitting procedure, several weight options for the LevmarLSQFitter call have been tested. These weights are defined as the inverse variance (\(\sigma ^{2}\)) of each data bin:

-

*

iSig: histogram bins are weighted by the number of counts N within each bin (\(\sigma ^2=N\))

-

*

SN: histogram bins are weighted by the Signal-to-Noise ratio in the bin (\(\sigma ^2 = \frac{1}{\sqrt{N}}\))

-

*

None: no weight is applied (\(\sigma ^2=1\))

The iSig option adopts the iterative approach proposed by Fowler [25]. It represents one of the alternatives for conducting a Poisson Maximum Likelihood fit (Cash C-statistics) identified in that study as the least biased method.

Another crucial parameter in histogram fitting is the number of bins. A study was conducted on the Gaussian FWHM values obtained for different numbers of bins and it was found that using 3000 bins (for a total number of \(\sim 8000\) data points spread in the fitted energy range) yields stable results. This is illustrated in Fig. 12 for the case of 10Jan2020 pixel 11. The dispersion shown in the FWHM values provided by the different histogram fittings is consistent with the expected dispersion observed in the simulations (as seen in Section 6.3).

6.2 Cumulative Distribution Function Fitting

In order to avoid the need for a priori binning of the data, we explored an alternative approach based on fitting the Cumulative Distribution Function of the photon energies. To test the consistency of this method, we conducted simulations using 8300 photons of the Mn K\(\alpha \) complex energy distribution following the procedure described in Section 3, aiming to have a similar number of pulses as in the laboratory pixels typically analyzed.

Schematic of simultaneous fitting of 8 Voigt profiles to the Mn K\(\alpha \) line complex using CDF. Panel (a): Initial histogram for the data set, consisting of 8300 simulated photons. Panel (b): Initial guess for the 8 Voigt profiles (thin colored lines) and the expected total probability distribution (thick black line). Panel (c): Comparison of the empirical CDF of the data to be fitted (orange line) and the temporary fit (black line). Panel (d): Same as panel (c) after the iterative numerical fit has converged. Panel (e): Final fit for the individual 8 Voigt profiles (colored lines) and the global fit (thick black line)

The resulting energy histogram from the simulations displayed the expected double-peak distribution for the Mn K\(\alpha \) complex at our spectral resolution, as shown in panel (a) of Fig. 13. Interestingly, a small fraction of the simulated photons fell outside of the displayed energy range, 96 photons below the lower 5860 eV limit and 121 photons above the upper 5920 eV limit.

We demonstrated that the CDF fitting procedure could successfully recover the original parameters used to generate the simulated data set. However, we faced the challenge of having 4 free parameters (i.e., the energy of one reference line, the Gaussian FWHM, and the number of photons below 5860 eV and above 5920 eV) due to the constraints imposed by fixing the Lorentzian FWHM and relative intensity of the eight individual lines, as well as their center-to-center distances.

To address this challenge we used the following approach: first, we provided an initial guess for the solution, as shown in panel 13(b). Next, we compared the empirical CDF of the simulated data (orange line) with the CDF of the temporary solution (black line) in panel 13(c). Finally, we used the Levenberg-Marquardt minimization procedure, with the help of the Python package lmfitFootnote 3 [32], to determine a better fit to the empirical CDF, as illustrated in panel 13(d). The objective function to be minimized was defined as the weighted difference between the empirical and simulated CDF, using the product \(F(x)\,\left( 1-F(x)\right) \) as the weight, where F(x) is the empirical CDF. Note that this weighting scheme favours data points nearer the center of the line complex, diminishing the influence of the Mn K\(\alpha \) complex tails, which are prone to systematically missing photons when correcting energies from pulses severely affected by baseline drift effects. The result of this fitting process was the simultaneous fitting of the eight sought Voigt profiles, as shown in panel 13(e).

Distribution of Gaussian FWHM values obtained from simulated data using histogram fitting with three different weights: None (light blue), SN (dark blue), and iSig (cyan), as well as using CDF fitting (orange). The simulations were performed with varying numbers of photons (ranging from 4000 to 16 000, as specified in the numeric labels). The dashed vertical lines separate sets of 1000 simulations performed with a fixed number of photons. A histogram representation of these data is shown in Fig. 15

6.3 Fitting procedures performance on simulated data

The simulations of the Mn K\(\alpha \) complex were extended to include various numbers of photons, ranging from 4000 to 16 000, to ensure the accuracy and reliability of the fitting methods when analyzing real data. As previously mentioned, these simulations utilized the eight Voigt profiles defined by the Lorentzian profiles with laboratory parameters from Table 2, and a FWHM Gaussian broadening of 2.2 eV.

We analyzed these synthetic data using histogram fitting with the three different weights described in Section 6.1, as well as the CDF fitting method explained in Section 6.2. The obtained Gaussian FWHM values from the two fitting procedures were compared with the simulated value to evaluate their performance in terms of energy resolution. The distributions of fitted Gaussian FWHMs obtained from the simulated data with varying numbers of photons are presented in Figs. 14 and 15.

The results indicate that the distributions are well centered around the simulated resolution value \(\textrm{FWHM}=2.2~\textrm{eV}\), with no systematic deviations at the peak values. Therefore, we can conclude that the fitting methods do not introduce any intrinsic systematic errors to the determination of the Gaussian FWHM of simulated data.

As expected, the dispersion of the measured FWHM decreases as the number of fitted photons in the Mn K\(\alpha \) complex increases, regardless of the adopted fitting procedure. The histogram fitting method with weight iSig provides a slightly lower dispersion, as visually evident in Fig. 15. In this figure, the histogram fitting method with weight iSig (shaded cyan) appears narrower than histogram fitting with the other weights, and the CDF method yields the widest distribution. This behavior is quantitatively presented in Table 4, which lists the centroid offsets and standard deviations of the histograms displayed in Fig. 15.

Histogram representation of data shown in Fig. 14, corresponding to the Gaussian FWHM values obtained from simulated data using histogram fitting with different weights (None in light blue, SN in dark blue, and iSig in shaded cyan) and using the CDF fitting method (in orange). Each panel shows the FWHM distribution for a different number of simulated photons (as indicated at the top of each histogram). The centroid offsets and standard deviations of these histograms are listed in Table 4

6.4 Fitting procedures performance on real data

Once we ensured the comparability of the fitting methods on simulated data, we proceeded to analyze real data starting with the individual analysis of each pixel. To do this, we reconstructed the photons from each pixel using the FULL optimal filter, performed gain calibration, and corrected for baseline drifts and jitter. Afterward, we applied both the histogram and the CDF methods to fit the data.

FWHM values for pixels in datasets 10Jan2020 (top), 30Sep2020 (middle), and LargeTdrift (bottom), using histogram fitting with weights None (light blue circles), SN (dark blue squares), and iSig (cyan \(\times \)) as well as CDF fitting (orange symbols), all reconstructed using the FULL filter. The dashed lines represent the FWHM obtained by each fitting procedure using the combined information from all pixels

Note that in this comparative analysis, we are using the FULL filter for the fitting methods, as it serves as the reference method in the literature, allowing us to isolate and evaluate the performance of the fitting methods independently of the filters’ performances.

In contrast to the observations made with the earlier described simulations, we noticed a consistent pattern across all datasets. Specifically, the CDF method consistently yielded slightly lower Gaussian FWHM values (median=2.38 eV) compared to those obtained from the histogram fittings (median=2.43 eV). A Wilcoxon signed-rank test for paired data (non-parametric) was conducted under the null hypothesis that the CDF method yields larger FWHM values than those provided by the histogram fittings. The resulting p-values were \(2.2\times 10^{-10}, 9.5\times 10^{-5}\) and \(2.8\times 10^{-7}\) for the iSig, SN and None cases, effectively rejecting the null hypothesis and thus confirming the statistical significance of the comparison. This trend is illustrated in Fig. 16.

To further investigate this discrepancy in the fitting procedures on real data and to assess their performance with improved statistical significance, we conducted a global fit of all the pixels in dataset 10Jan2020. Initially, we processed each pixel individually and subsequently, we combined all the pixels for the global fit using the selected fitting procedure. Figure 17 displays the resulting fits and residuals (\(\textrm{model} - \textrm{data}\)) obtained for each method. As expected, the FWHM value obtained from the CDF fitting is lower than the values corresponding to the other histogram fitting methods. However, this outcome does not corroborate the results obtained from the analysis of the simulations.

Global fits to the combined data from all pixels in the 10Jan2020 dataset using different fitting methods. The histogram fitting methods with iSig, None, and SN weights, as well as the fit with the CDF fitting method, are shown. The associated residuals (model - data) are displayed below each particular fit. The plots are arranged from left to right and top to bottom in the order of histogram with iSig weights, histogram with None weights, histogram with SN weights, and CDF fitting. The line complex is fitted using 8 individual Voigt profiles (colored lines), with the black curve representing the co-added result. The fitted Gaussian FWHM value is displayed in the inset text

Upon conducting a detailed examination of the residuals in Fig. 17, it becomes evident that the data on the left wing of the line complex consistently falls below the global fit. As mentioned earlier, the baseline drift correction introduces energy shifts that may cause a slight under-representation of photons at the edges of the energy interval initially chosen for selecting the Mn K\(\alpha \) photons. Consequently, an imperfect photon distribution in the wings of the complex is anticipated to result in variations in the fitted resolution across different fitting methods. Indeed, we have confirmed that eliminating the \(F(x)\,\left( 1 - F(x)\right) \) weight in the CDF method (refer to Section 6.2), thus giving more prominence to the complex wings in the CDF fit, increases the discrepancy in resolution when comparing histogram fitting with CDF fitting.

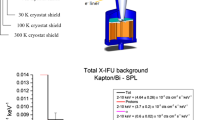

After analyzing the results obtained from histogram fitting using three different weights, all of which yielded similar resolution values, we observed that the CDF method consistently produced slightly lower, yet still close results. Appendix A explores the impact of two known systematic effects, the extended line spread function and the instrumental background, on the different performance of the methods. However none of these factors account for the differences found when analysing real data.

Additionally, we considered the dispersion of the FWHM estimates provided by the iSig weight, and found it to be the lowest among the options.

Based on these considerations, we have chosen the histogram fitting method with the iSig weight as our baseline approach for analyzing the various datasets.

7 Energy resolution analysis: the filters’ role

In Appendix B, we present a mathematical expression that quantifies the expected uncertainty in the measured Gaussian FWHM. This expression depends on both the number of photons in the Mn K\(\alpha \) line complex and the FWHM value itself. We will use this derived uncertainty in the upcoming plots of the Gaussian FWHM values measured for real data.

The comparison of the energy resolution values obtained for all the pixels in the 10Jan2020, LargeTdrift and 30Sep2020 datasets is presented in Fig. 18.

Comparison of energy resolution values for the FULL filter (blue) and SHORT filter (red) plotted on the Y-axis versus those from the 0-padding filter reconstruction on the X-axis for datasets 10Jan2020 (left), 30Sep2020 (center), and LargeTdrift (right). The fitting technique applied in the minimization process is the histogram fitting with iSig weight. The dashed lines represent the mean energy resolution values, with red for SHORT, blue for FULL, and green for 0-padding filters

Based on the obtained results, the 0-padding filter demonstrates comparable performance to the FULL length filter and outperforms the SHORT filter in terms of energy resolution values for all datasets, even under varying instrumental stability conditions and cross-talk levels. Notably, the most significant advantage is observed in the LargeTdrift dataset, which can be attributed to the shorter length of the LargeTdrift records and filters (see Table 3). As a result, the \(f=0\) Hz bin which is discarded in the construction of the optimal filters contains more information, making its impact more relevant.

Furthermore, the 0-padding filter not only excels in terms of energy resolution but also provides the added advantage of reduced computational cost.

However, during simulated data tests [14], it was observed that the 0-padding filter showed heightened sensitivity to baseline fluctuations during data acquisition. This sensitivity can be attributed to the fact that the 0-padding filter, as explained in Section 1, is essentially a truncation of the FULL filter in the time domain. Consequently, it lacks perfect zero-summing when compared to the FULL filter, which leads to increased sensitivity to baseline fluctuations.

To address this issue, we tested a modified 0-padding technique (called 00-padding). In this modified approach, we enforced the filter to have a sum of zero in the time domain using different expressions referred to as zsum1, zsum2 and zsum3 as described in Eqs. (7)–(10). We assume \(N_\textrm{final}=8192\), \(N_\textrm{cut}=N_\textrm{final}/2=4096\), and define

The three 00-padding optimal filters are built using the following prescriptions:

-

00-padding with zsum1:

$$\begin{aligned} \widetilde{o\!f}_{\text {00PAD}}[t_i] = \widetilde{o\!f}_{\text {FULL}}[t_i]+\frac{S\!1}{N_\textrm{cut}}, \quad \forall i=1,\ldots ,N_\textrm{cut} \end{aligned}$$(8) -

00-padding with zsum2:

$$\begin{aligned} \widetilde{o\!f}_{\text {00PAD}}[t_i] = \widetilde{o\!f}_{\text {FULL}}[t_i]+\widetilde{o\!f}_{\text {FULL}}[t_{i\!+\!N_\textrm{cut}}], \quad \forall i=1,\ldots ,N_\textrm{cut} \end{aligned}$$(9) -

00-padding with zsum3:

$$\begin{aligned} \widetilde{o\!f}_{\text {00PAD}}[t_i]{} & {} = \widetilde{o\!f}_{\text {FULL}}[t_i], \quad \forall i=1,\ldots ,N_\textrm{cut}/2 \nonumber \\ \widetilde{o\!f}_{\text {00PAD}}[t_{i\!+\!N_\textrm{cut}/2}]{} & {} = \widetilde{o\!f}_{\text {FULL}}[t_{i\!+\!N_\textrm{cut}/2}] + \widetilde{o\!f}_{\text {FULL}}[t_{i\!+\!N_\textrm{cut}}] \,+ \nonumber \\{} & {} + \widetilde{o\!f}_{\text {FULL}}[t_{i\!+\!3 N_\textrm{cut}/2}] \nonumber \\{} & {} \quad \quad \quad \quad \quad \quad \quad \forall i=1,\ldots ,N_\textrm{cut}/2 \end{aligned}$$(10)

These modified 0-padding filters were then applied to the xifusim simulated pulses in the Mn K\(\alpha \) complex (as described in Section 3). Subsequently, the reconstructed energies were gain scale calibrated and fitted using the histogram iSig technique. Figure 19 illustrates the comparison of the resolution values measured with these zero-summed filters and the FULL, SHORT and 0-padding filters previously analyzed.

The results indicate that these modified filters lead to a degraded energy resolution in all the zero-sum designed scenarios making their performance comparable to that of the SHORT filter. As a result, any of these zero-summed 0-padding filters are unsuitable as a viable option. Consequently, fully harnessing the advantages of the 0-padding filter will depend on successfully correcting the baseline drift within the limits of the instrument resolution budget.

In the cases where the baseline drift cannot be properly accounted for, 0-padding will cause a degradation of the energy resolution. For a simplistic approximation where the variation of the baseline level from pulse to pulse follows a normal distribution with a dispersion \(\sigma _\textrm{baseline}\), the additional degradation in energy resolution, that should be quadratically added to the expected FWHM of the calibrated energy, can be quantified (following the same reasoning as in Section 2.2) as

where \(g'\) is the first derivative of the gain scale correction evaluated at the energy of the considered photons.

Differential resolution values obtained with each filter in the analysis (FULL, blue symbols; SHORT, red symbols and 0-padding, green symbols), and the zero-summed modified versions of 0-padding, labeled as 00PAD on the X-axis (zsum1: left, zsum2: center and zsum3: right). Please refer to Eqs. (7)–(10) for details on the modifications

8 Other line complexes reconstruction

To address any potential bias in favor of the 0-padding filter resulting from using the same photons for constructing the optimal filter and resolution analysis, we conducted an additional test. In this test, we reconstructed pulses with energies significantly different from the optimal filter’s energy of 5.9 keV. By doing so, we aimed to evaluate whether the non-linearity of the detector response influenced the results obtained by the 0-padding filter.

Specifically, we reconstructed the Ti K\(\alpha \) (4.9 keV), Cr K\(\alpha \) (5.4 keV), Cu K\(\alpha \) (8.0 keV), and Br K\(\alpha \) (11.9 keV) complexes found in the 10Jan2020 dataset using the optimal filters constructed from the Mn K\(\alpha \) photons.

The Lorentzian profiles for each complex are described in the following tables: Table 5 (Ti K\(\alpha \)), [13], Table 6 (Cr K\(\alpha \)), [21], Table 7 (Cu K\(\alpha \)), [21], and Table 8 (Br K\(\alpha \)).

The reconstruction process was performed using the FULL, SHORT and 0-padding filters and the energies were gain calibrated as explained in Section 5. Baseline and jitter corrections were conducted with \(\texttt {xwidth}\!=\!101\) (due to the poorer statistics compared to with the Mn K\(\alpha \) case) and \(\texttt {smooth}\!=\!11\), respectively. The histograms were fitted using the iSig weight and are displayed in Fig. 20. Similar to the case of the Mn K\(\alpha \) complex, the 0-padding reconstruction appears to offer resolution values comparable to the FULL filter and better than the SHORT filter. However, it is important to note that the larger resolution values and dispersion obtained for Cu K\(\alpha \) and Br K\(\alpha \) lines may be attributed to the non-linearity of the detector, which results in degraded energy resolution at energies far from the optimal filter template.

In the case of these line complexes, where fewer photons are detected, the distribution of pulses along a varying baseline can have a larger effect on the reconstruction. For a few pixels with large variations in baseline during data acquisition, the initial automatic (no baseline-aware) gain scale calibration was not possible for the 0-padding reconstruction, as line peaks were double-peak shaped due to these different baseline values. Consequently, we removed these pixels from the analysis since they would require a more sophisticated, baseline-accounting gain calibration of the photon energy distribution. For the latest progress on demonstrating the gain scale correction over time, please refer to Smith et al. [37].

Figure 21 illustrates the relationship between the gain in resolution and the energy of the complex. The improvement in energy resolution offered by 0-padding versus FULL and SHORT is statistically significant for all the line complexes, as revealed by the Wilcoxon signed-rank test for paired data, whose p-values are provided in Table 9. The only exception is the Ti K\(\alpha \) complex when comparing the 0-padding and FULL filters. The most substantial improvement occurs for the largest energy complex, Br K\(\alpha \).

Comparison of resolution values for Ti K\(\alpha \) (top-left), Cr-K\(\alpha \) (top-right), Cu K\(\alpha \) (bottom-left) and Br K\(\alpha \) (bottom-right) complexes obtained using FULL (blue) and SHORT filters (red) on the Y-axis plotted against those from the 0-padding reconstruction (X-axis) for dataset 10Jan2020. The resolution values were obtained using histogram fitting with iSig weight. Dashed lines represent the mean energy resolution values (red for SHORT, blue for FULL and green for 0-padding filters)

Boxplot diagrams showing the improvement in resolution achieved by the 0-padding filter reconstruction for the analyzed line complexes of the 10Jan2020 data. Dashed central line in the boxes correspond to the median values, the coloured boxes cover the IQR (inter quantile range \(\textrm{Q1}-\textrm{Q3} \equiv 25\%-75\%\)), error bars go from \(\textrm{Q1}-1.5\times \textrm{IQR}\) to \(\textrm{Q3}+1.5\times \textrm{IQR}\) and grey circles are the outliers. Left: Energy resolution values obtained with the 0-padding (green), FULL (blue) and SHORT (red) filters. Right: Differential energy resolution values of FULL and SHORT filter with respect to 0-padding

However, it is important to mention that the scatter also increases as we move to higher energies. Therefore, a more extensive investigation with higher statistics would be required to validate this trend.

9 Conclusions

In this study, we take an in-depth look at a variation of the classical optimal filter algorithm to estimate the energy of photons detected by a Transition Edge Sensor device, such as the one to be onboard the Athena mission. This approach, initially proposed by Cobo et al. [14] and called 0-padding, involves truncating the classical optimal filter in the time domain.

The results of our analysis, based on both simulated and laboratory data, show that truncating a long optimal filter (0-padding) yields better performance when compared to using a filter constructed from a shorter template but with the same final length as the truncated filter (SHORT). As the information loss resulting from setting the \(f\!=\!0\) Hz bin to zero during the construction of the optimal filter diminishes as the filter length increases (as indicated by [17]), the 0-padding technique experiences less signal degradation as it begins its construction with a filter longer than the final intended size. As a result this approach limits the loss of resolution from shortened filters for high count rate cases.

What is even more relevant is that the resolution values obtained through our 0-padding approach are comparable, and in some cases slightly better than those achieved with a double-length optimal filter. Additionally our approach offers the advantage of reduced computational cost. As FULL filter and 0-padding only differ by the length the filter (the latter being half length) in terms of on-board computation, we can say that the energy estimation part of the event reconstruction would require half of the operations to be made. It would also require half of the on-board non-volatile memory as only half-length filters would be saved on-board. One would expect that computational time should scale at first order with the number of operations required, although this can very much be implementation dependent.

The enhanced performance of the 0-padding filter scales with the noise level in the detector’s signal, which directly impacts the uncertainty in energy determination. For filters constructed from the same template pulse, where the removal of the \(f\!=\!0\) Hz bin has a similar effect (such as a long filter and its corresponding truncated 0-padding filter obtained by omitting the second half), longer data pulses result in greater uncertainties.

Making use of the second half of the long filter does introduce a small, albeit non negligible, increase in the uncertainty of energy estimation. This is due to the inclusion of time samples that convey not useful information. This holds true if the cutoff for producing the 0-padding filter does not disregard relevant time samples where pulses register a signal statistically greater than the baseline.