They [economists] are accused of all thinking the same thing. But what use would economists be if they could not reach a consensus about anything?

Jean Tirole, “Economics for the Common Good”, p. 65, Princeton University Press, 2017.

Abstract

The highly popular belief that rent-control leads to an increase in the amount of affordable housing is in contradiction with ample empirical evidence and congruent theoretical explanations. It can therefore be qualified as a misconception. We present the results of a preregistered on-line experiment in which we study how to dispel this misconception using a refutational approach in two different formats, a video and a text. We find that the refutational video has a significantly higher positive impact on revising the misconception than a refutational text. This effect is driven by individuals who initially agreed with it and depart from it after the treatment. The refutational text, in turn, does not have a significant impact relative to a non-refutational text. Higher cognitive reflective ability is positively associated with revising beliefs in all interventions. Our research shows that visual communication effectively reduces the gap between scientific economic knowledge and the views of citizens.

1 Introduction

Research on how to communicate economic research findings to the general public effectively is still limited. However, policymakers and researchers increasingly acknowledge the need to improve the communication of economic research results about how economic policies work (Stankova, 2019). Recently, some studies have started to approach this issue. For instance, Haldane and McMahon (2018) and Coibion et al. (2019, 2020) analyze how communication of fiscal and monetary policies affects agents’ expectations, where communication becomes a policy tool in itself. Other studies focus on the spread of socio-economic misinformation in the media (“alt-facts”) and on the effect of fact-checking to neutralize it (Barrera et al., 2020; Ecker et al., 2020; Henry et al., 2021). Finally, some other studies analyze how people perceive and form their attitudes towards tax policy (Stantcheva, 2021), towards racial gaps (Alesina et al., 2021) and towards unregulated price increases (Elias et al., 2022).

Little is known, however, about how to communicate economic knowledge when large segments of the public hold entrenched misconceptions, that is, beliefs that contradict well-established scientific knowledge about the functioning of the economy. The problem is that widespread unfounded beliefs, some of them derived from not accounting for equilibrium effects, may result in the public endorsing damaging economic policies, or rejecting the implementation of evidence-based, welfare-enhancing reforms (Nyhan, 2020; Bó et al., 2018). Hence, it is of general interest to explore how to convincingly communicate economic research findings that contradict prevalent misconceptions.

We focus here on the popular belief that rent control allows more families to find affordable housing. The support for this policy is substantial among the public, above 60% in polls conducted in several countries.Footnote 1 This belief, however, is at odds with scientific consensus arising from economic research. To illustrate, about 95% of economists in the IGM Economic Experts Panel strongly disagree that rent capping will increase the quantity of affordable housing.Footnote 2

This high consensus stems from the abundant and cumulative empirical evidence on this subject (see, for instance, Diamond et al. (2019a) and Kholodilin and Kohl (1839)). Although rent control may have positive effects for a subset of tenants because their rents will be lower, or grow less, relative to market rents (Sims, 2007), both the total quantity of rental housing available and the quality of controlled housing end up falling (Sims, 2007; Mora-Sanguinetti, 2011; Asquith, 2019; Diamond et al., 2019a; Hahn et al., 2021; Monràs & García-Montalvo, 2021; Ahern & Giacoletti, 2022). Some owners decide not to rent their property but sell it instead, either to owner-occupants or to developers (Diamond et al., 2019a). Supply reduction is more pronounced among corporate landlords than among individual landlords (Diamond et al., 2019b). As the number of tenants willing to pay the controlled rent is higher than the supply of dwellings, queues and a black market for rental contracts appear (Malpezzi, 1998; Andersson & Söderberg, 2012).Footnote 3 In the end, low-income tenants (young people and newcomers) are most likely to be harmed by this policy (Hahn et al., 2021; Kattenberg & Hassink, 2017). Alternative policies, however, such as increasing supply, or tax subsidies for low-income households, are welfare increasing (Diamond et al., 2019b).

Research in communication and in social, educational and political psychology shows that a refutational correction can reduce the prevalence of misconceptions in a variety of domains, such as climate change (Nussbaum et al., 2017; Lewandowsky, 2021), education (Aguilar et al., 2019), psychology (Kowalski & Taylor, 2009, 2017) and vaccine resistance (Lewandowsky et al., 2012). The surge of misinformation associated with the recent Covid-19 pandemic and its consequences has prompted further research on debunking, showing that refutational corrections are effective at it (MacFarlane et al., 2021). The main features that characterize a refutational correction are that the misconception addressed is explicitly stated and declared as incorrect; the existing scientific arguments and evidence are explained as simply as possible, and the relevance of the issue for the individual and her values is explicitly acknowledged (Tippett, 2010; Druckman, 2015; Weil et al., 2020). In contrast, a non-refutational approach does not include all these elements, as it simply lays out in a neutral way the arguments that explain a fact or a relation. The refutational correction is an appealing approach because it aims at inducing careful, reflective thinking about the evidence and arguments provided by scientific research about the several dimensions of a problem. It is a thoughtful way to communicate counter-intuitive information that takes into account the affective elements behind some beliefs. This approach, however, has barely been studied in relation to popular misconceptions about economic policies.

In this paper we report a new study where we use this communication method to design an information treatment experiment aimed at reducing the prevalence of the misconception about the effects of rent control. We conduct a set of on-line survey experiments with three interventions, one visual and two text-only, and test the effectiveness of each format in dispelling the misconception.Footnote 4 Given that ultimately we are interested in engaging with ordinary citizens, whose beliefs affect their support for policies, we here use a new subject pool extracted from the general population that includes participants of all ages and conditions living in Spain. We recruit a sample of 1,064 participants who are randomly allocated to one of the three interventions and participate through their own digital devices.

We design two short text-only messages about the effects of rent controls. One of the messages, the refutational text (RT hereafter), uses the refutational approach and includes the refutational features described above. The other message, the non-refutational text (NRT hereafter), uses a standard expository approach. This text explains, in a neutral way, the effects of a rent control policy, much as a standard textbook of introductory economics would do. The most distinctive feature of the NRT is that it does not include the refutational elements. These texts build on previous work by Brandts et al. (2022), where we test the effectiveness of two written messages in dispelling the rent control misconception. Two important differences are that in the previous study both texts were twice as long as the ones used here, and that participants were college students. In reducing the number of words of both texts, we aim at achieving a balance between providing an accurate explanation and minimizing the time and cognitive effort required to read them. This may increase the effectiveness of the text-only messages, in particular of the RT. Readers may not pay sufficient attention when a text is long; length may also keep the refutational elements of the RT from being salient enough. Indeed, previous research in psychology finds that short textual information is effective in reducing the prevalence of factual misconceptions (Ecker et al., 2020).

To design the visual message we build on research that suggests that using visual explanations, such as videos and infographics, may be more effective than text-only information to correct misconceptions or misinformation (Mason et al., 2017; Mayer & Moreno, 2003; Goldberg et al., 2019; Young et al., 2018; Reynolds et al., 2018). Adding visual elements reduces the cognitive and attention effort that participants need to process the new and counter-intuitive information. More broadly, visual presentations match more closely how citizens nowadays encounter information of different kinds. Our visual message includes the elements from the refutational approach. We produce a refutational video (RV, hereafter), which is a dynamic slideshow where frames combine text and images using the refutational framework. Specifically, the RV adds images and symbols to selected textual sentences from the RT, including all refutational elements.

We elicit participants’ beliefs before and after the corresponding treatment by asking them, on a 5-point Likert scale, about their degree of agreement with a statement that says that the establishment of rent controls increases the number of people who have access to housing. We transform the degrees of agreement into a numerical scale, and define our outcome variable as the difference between post-treatment and pre-treatment beliefs. With the term belief, we refer to the degree of agreement with the statement. The outcome of interest is, thus, the change in beliefs of each participant. We compare the effectiveness of the RV relative to the new short RT and NRT messages in reducing the prevalence of the rent-control misconception. We also compare the effectiveness of these two text messages to each other. We further explore whether the effect of information treatments on updating beliefs varies for different subgroups of participants according to relevant socio-demographic characteristics such as housing status (tenants vs owners), gender and education.

Aside from the informational treatment, a personality trait that may affect the change in beliefs, according to previous evidence, is an individual’s predisposition to reflective or to intuitive thinking as measured by the cognitive reflection test (CRT) (Pennycook & Rand, 2019; Pennycook et al., 2020; Tappin et al., 2020). We thus analyze the role of this trait on the disposition to update beliefs, and whether it varies with the treatment.Footnote 5

Our work contributes to the still sparse literature on communicating counter-intuitive expert consensus about the effects of an economic policy to the general public. As explained above, an emerging literature addresses issues such as how to communicate inflation expectations by central banks, or how attitudes and preferences towards redistribution, taxation or immigration are determined. Very little is known, however, about how to credibly communicate the evidence about policy effects and whether communication formats make a difference. The use of the refutational approach to correct economic misconceptions, either using visual or text-only formats, has been underexplored, despite its application in psychology for correcting misperceptions, misconceptions and misinformation.

Our contribution is threefold. We provide first evidence in economics on the effectiveness of using a visual communication format combined with a refutational approach to dispel a widespread and highly persistent misconception. Although some authors have used visual formats—instructional videos—to increase financial education (Lusardi et al., 2017) and awareness of the consequences of taxation for income distribution (Stantcheva, 2021), they have neither investigated their effectiveness in relation to causal misconceptions about policy effects, nor adopted a refutational approach. Concerning the beliefs about the rent-control policy, previous works study the effectiveness of informational treatments that rely on text-only messages (Brandts et al., 2022; Dolls et al., 2022; Müller & Gsottbauer, 2022).

Second, we provide evidence on the effectiveness of short texts, a step forward with respect to the evidence reported in Brandts et al. (2022), where texts are twice as long. In our new study described here, both the short RT and the short NRT lead to a higher reduction in the prevalence of the misconception. Furthermore, this improvement is obtained with a sample of ordinary citizens rather than college students; ordinary citizens are the natural target for communicating economic policies. Third, we show evidence on whether the role of personality traits in revising the misconception varies across visual and written formats. Our study, thus, adds to an emerging research in economics on the effectiveness of different modes of communicating research findings that confront popular beliefs, as well as to the growing literature on debunking misconceptions in political and economic psychology, and, more broadly, to the literature on science communication.

Our main findings are as follows. First, the RV has a significantly higher impact on dispelling the misconception than the RT for participants who initially hold the misconception, that is, the main target group. The effect of the video is even higher relative to the non-refutational, expository text. Second, we do not find that the RT is more effective than the NRT, as both lead to a similar decline in the prevalence of the misconception. Third, a higher propensity to reflective thinking induces a change away from the misconception, for participants who initially hold it, in all treatments.

The paper is organized as follows. Section 2 explains the hypotheses, conditions and procedure of our experimental framework. Section 3 describes the specifications used to test the hypotheses. Section 4 reports the estimated treatment effects and the results of a heterogeneity analysis across some socio-demographic characteristics. Section 5 concludes.

2 Experimental framework

2.1 Research hypotheses

We test three hypotheses, as preregistered. Hypotheses one and two are our central hypotheses, since they pertain to the treatment effects of the RV, RT and NRT, while the third hypothesis refers to the role of cognitive traits. The first hypothesis is about the effect of using the video format on the change in beliefs:

Hypothesis 1 (H1): Communicating scientific evidence about rent control policy through a refutational video is more effective to dispel the misconception about this policy than a refutational or a non-refutational text.

H1 relies on literature from social and political psychology and from communication that has investigated the effects of different communication formats on recipients’ beliefs or attitudes regarding climate change, political or health issues. Some examples of this work, most of it experimental, are the following. Goldberg et al. (2019) report that a video is significantly more effective than its transcript in increasing people’s perception of scientific agreement on climate change. Bolsen et al. (2019) show that climate science politicization undermines credible textual frames, but that adding compelling imagery to the textual frames counteracts science politicization and restores the impact of a scientific consensus message. In a similar vein, Young et al. (2018) show that providing audiovisual information is more effective than text-based messages in correcting misperceptions in the context of political fact-checking. Reynolds et al. (2018) find that using infographics enhanced with images to communicate evidence on the effectiveness of a hypothetical tax to tackle childhood obesity increases perceived effectiveness and support for this policy. In the educational literature, Mayer and Moreno (2003) document that meaningful learning involves cognitive processing that includes building connections between pictorial and verbal representations. Mason et al. (2017) find that accompanying a refutational text with a graph is more effective to reduce a misconception about the earth’s seasonal changes than the text alone. All this work suggests that explanations that include visual components may also be more effective than text-only messages in communicating the effects of economic policies, such as rent-control. Note that the cleaner part of H1 is to compare the RV with the RT, since both have the refutational elements, and only the format changes. For completeness, H1 includes the comparison with the NRT.Footnote 6

Our second hypothesis refers to the effect of a written refutational correction relative to a non-refutational written message:

Hypothesis 2 (H2): Communicating scientific evidence about rent control policy through a refutational text is more effective to dispel the misconception about this policy than a non-refutational text.

Evidence from psychological, political and educational research shows that a refutational correction is often more effective than a simple retraction to correct misinformation in domains such as climate change, educational policies and psychology (Kowalski & Taylor, 2009; Tippett, 2010; Lewandowsky et al., 2012; Lewandowsky, 2021; Chan et al., 2017; Aguilar et al., 2019; Ecker et al., 2020; Weil et al., 2020). In economics, and in the case of the particular misconception about the effects of rent controls, Brandts et al. (2022) report mixed findings in this respect. In the laboratory experiment with college students, both their RT and NRT reduce the misconception on rent controls by a similar magnitude. Hence, the RT does not significantly improve on the NRT. However, in the field environment of a college course, the RT is more effective than standard, non-refutational instruction, and it also slightly improves on the NRT. Here we test the effectiveness of the written refutational approach using shorter texts based on Brandts et al. (2022). Given the length of the texts in our previous paper, participants may not have been able to pay attention to the refutational elements of the RT. In a shorter RT the refutational elements may be more salient. Some previous studies show that short messages are effective. For instance, Ecker et al. (2020) find that refutational fact-checks (like Tweets) reduce misinformation regarding factual claims. Ferrero et al. (2020) show that 200-word-long texts reduce the number of misconceptions about education among teacher education students. Hypothesis 2 is aimed at assessing the sensitivity of our previous results to the shorter, less attention-demanding version of both texts.

Our third hypothesis refers to the association between the propensity to cognitive reflection and changes in beliefs:

Hypothesis 3 (H3): Higher cognitive reflection values—higher propensity to analytical thinking—will induce a higher positive change in the misconception.

We study the role of a particular personality trait, the inclination to reflective relative to intuitive thinking, on the change of beliefs after being exposed to each treatment. Understanding scientific reasoning and evidence requires cognitive effort, especially when people have misconceptions on an issue. The individual inclination to analytical thinking may thus affect the extent to which people revise their beliefs, as previous evidence shows. Measuring this trait through modified versions of the Cognitive Reflection Test, Pennycook and Rand (2019), Pennycook et al. (2020) and Tappin et al. (2020) find that an individual’s ability to discern fake news from real news, and to update beliefs about political issues, are positively correlated with higher propensity to use analytical thinking (higher CRT scores). Furthermore, McPhetres et al. (2020) find that cognitive sophistication is often positively correlated with pro-science beliefs, although political affiliation may affect this correlation for some divisive issues. Brandts et al. (2022) also report that higher CRT scores are positively related to revising the rent-control misconception in some, but not all, conditions. Given the length of the texts used in our previous paper, cognitive reflection perhaps could not make more of a difference there. Given this evidence, here we will account for this trait.

2.2 Conditions

The experiment has three conditions: the RV condition, the RT condition and the NRT condition. A first pre-analysis plan (PAP) was preregistered at AsPredicted Registry, Wharton Credibility Lab (University of Pennsylvania) on July 4, 2020. This PAP included the RT and NRT conditions. The PAP corresponding to the RV condition was preregistered at AsPredicted Registry on July 3, 2021. All three conditions were preregistered before running the corresponding experiments. Budgetary constraints prevented us from conducting the three conditions simultaneously. To avoid potential seasonal effects we conducted both experiments on the same day of the week and in the same month, one year apart. Pandemic, socio-economic and political conditions were very similar over this period.Footnote 7

Conditions differ only in the format in which the message is delivered to participants. In the RV condition, participants watch a 2:42 minute long refutational video about the effects of the rent control policy. In the RT and NRT conditions, participants read a text about the same issue that uses, respectively, a refutational and a non-refutational approach. The estimated reading time of the NRT and the RT is about 2 and 3 minutes, respectively (see Table 11 in Appendix 2). Thus, all formats are designed to take the reader/viewer a similar amount of time.

We follow the guidelines from research in psychology in incorporating the refutational elements into the design of the RV and the RT (Tippett, 2010; Druckman, 2015; Lewandowsky, 2021). The features that characterize the refutational approach are the following. First, the text must activate the misconception. Second, it must explicitly state that the misconception is incorrect. Third, it must explain, as simply and clearly as possible, the scientific arguments and evidence on the topic at hand to show why the misconception is incorrect and what the negative consequences of the belief are. Fourth, it must aim at capturing the recipient’s attention by making clear the personal relevance of the problem and the connection with the person’s values.Footnote 8 As in Brandts et al. (2022) we add another feature, which is that we explain that alternative and effective policies to reach the same goal exist. Our intention is to show participants that there are effective policies aligned with fairness concerns, but without the negative consequences of a rent control policy.

2.2.1 The RT

The refutational text is, in Spanish, 671 words long. Based on the longer RT in Brandts et al. (2022), it is about half its length. Appendix 2.1 shows the English translation of the text that participants read in the RT condition. As explained in the introduction, we design a shorter RT to achieve a balance between providing a detailed explanation and reducing the time and cognitive load involved in reading the text. The resulting RT includes all the refutational elements listed above to correct the misconception. The first three paragraphs contain a brief introduction to how markets work and to price controls. The first refutational element (activating the misconception) appears in the fourth sentence of paragraph four (see Appendix 2.1). In the last sentence of paragraph four and in paragraph five we state that the belief is incorrect (second refutational element). In paragraphs six to eight and in the first sentence of paragraph nine, we explain the negative, unintended effects of rent controls as shown by the scientific evidence (third element). These are the appearance of waiting lists, a black market, poor housing maintenance and supply reduction, as shown in many empirical studies (Malpezzi, 1998; Sims, 2007; Mora-Sanguinetti, 2011; Andersson & Söderberg, 2012; Kattenberg & Hassink, 2017; Asquith, 2019; Diamond et al., 2019a, b; Kholodilin & Kohl, 1839; Hahn et al., 2021). Regarding the fourth refutational element (connecting with the person’s values), we (i) refer to the participant’s potential fairness concerns in the first three sentences in paragraph four and the last sentence in paragraph nine; (ii) highlight the example of the city of Stockholm in Sweden, a society with a strong reputation for fairness concerns; and (iii) cite the study of Swedish researchers Andersson and Söderberg (2012) about the negative effects of rent control there. Finally, we explain alternative effective policies in paragraphs ten and eleven to show that there are better alternatives to reach the desired goal.

We include two links in paragraph six of the RT (marked by the sentences “you can find it HERE”): the first leads to Andersson and Söderberg (2012) and the second to the Stockholm Housing Agency.Footnote 9 Our purpose is to show readers that the claims about the negative effects of rent controls are exclusively based on empirical evidence. Finally, we use non-technical language and include some sentences to induce critical thinking about one’s own beliefs, such as “[...] contrary to what it seems [...]”, “How can this be?”, “Let’s think slowly and ask [...]”.

2.2.2 The RV

We design the RV as a dynamic slideshow composed of twenty-one frames that combine images with selected text extracted from the RT. We intentionally exclude a voice in the video to avoid confounding effects that may arise from the voice’s gender, intonation and other features. For a rigorous comparison with the other two conditions, the sentences included in the video are taken from the RT, with minor changes in some cases to adapt the sentence to the narrative. We design this RV to closely reflect the RT because our purpose is to rigorously test whether just adding images and presenting the information in a dynamic format is a more effective debunking strategy than an equivalent, plain written text. Appendix 2 includes the link to the video and the frames of the video, with a reference to the RT paragraphs where the corresponding exact (or closest) sentence can be found. As shown in Appendix 2, six out of twenty-one frames are animated with the purpose of increasing the dynamism of the video.

The structure of the RV, thus, reproduces the content and the structure of the RT. Both the RV and the RT include the refutational elements that characterize the refutational approach. Frames one to three correspond to the brief introduction to markets and price controls contained in the first three paragraphs of the RT. The video activates the misconception (first refutational element) in frame seven and states it is incorrect (second refutational element) in frames eight and nine. Frames ten to fifteen explain the negative effects of rent controls as shown by the scientific evidence (third refutational element). As in the RT, the fourth element is included by mentioning Stockholm and the study by Andersson and Söderberg (2012) in frame eleven and by contemplating fairness issues in frames four to six and in frame sixteen. Finally, frames seventeen to twenty-one explain the policies alternative to rent controls.Footnote 10 The video includes images, objects, or symbols that intend to capture the viewer’s attention and to emphasize the message of the written words.Footnote 11 Appendix 2 shows the frames of the RV.

2.2.3 The NRT

To write the non-refutational text we exclude the refutational elements from the RT. The original NRT is in Spanish and is 392 words long (Table 11). Appendix 2.3 shows the English translation of the NRT. The first three paragraphs are the same as in the RT. Paragraph four is partly new (except for one sentence equal to the first sentence in paragraph six of the RT) because the NRT does not explicitly activate the misconception, does not state that the belief is incorrect and does not consider the person’s values. Regarding the latter, paragraph eight of the NRT is equal to paragraph nine of the RT except for the last sentence, because it appeals to fairness values. Paragraphs ten and eleven of the RT, about alternative policies, are excluded from the NRT. The only element that we maintain is the explanation of the scientific evidence about the negative effects of rent controls because this is usually the case in standard, expository textbooks (see, for instance, Krugman and Wells (2020)). Therefore, paragraphs five to seven of the NRT are equal to paragraphs six to eight of the RT, including the two links (except for the first sentence in paragraph six of the RT, which is included in paragraph four of the NRT as explained above). The NRT thus provides a research-based explanation of the negative effects of rent controls using a neutral, expository tone.Footnote 12

In our experiment, the NRT condition acts as an active control group. In contrast to a pure or passive control, where irrelevant or no information is provided, an active control group receives differential information. Having an active control group is one of the methods used in the literature to disentangle priming effects from true belief updating. It also allows for a cleaner estimation of treatment effects, especially when treatments may trigger side effects on attention or emotions (Haaland et al., 2023).

Tables 10 and 11 in Appendix 2.4 show readability measures and text statistics of the RT and NRT in Spanish. The NRT is somewhat easier to read than the RT. However, the differences in the readability statistics are small. Overall, both texts have a fairly standard readability, corresponding to a reading level of seventh graders, around 13–14 years old.

2.3 Experimental procedure

The experiments were run on-line by the LINEEX Laboratory for Research in Behavioural Experimental Economics of the University of Valencia (Spain). An advantage of an on-line experiment is that it is less intrusive than delivering the message in a physical laboratory environment; it also allows us to access a sample from the general adult population. The experiment with the RT and NRT conditions was run on July 7, 2020, and the experiment with the video condition on July 6, 2021. LINEEX recruited 1,064 adult participants, 14 more than initially planned because of slight over-recruitment. The recruitment rules were that the pool be gender balanced and have at least 20% of participants older than thirty years of age. Sample size is determined by the goal of reaching a statistical power close to 80% for an effect size equal to 0.29, which is the estimated effect of the RT relative to the NRT in the laboratory, as shown in Table 3 of Brandts et al. (2022). It is close to the comparable estimated effect of the RT relative to the same benchmark in the field (see Table 8 in Brandts et al. (2022)).
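As a rough illustration of how such a power target relates to sample size, the following sketch uses a normal approximation for a two-sided comparison of two group means. It is not the authors' calculation. The text does not state whether 0.29 is a raw difference on the belief scale or a standardized effect, so the standard deviation `sd` is a hypothetical input, as are the function name and the 5% significance level.

```python
from math import sqrt
from statistics import NormalDist


def two_sample_power(raw_effect, sd, n_per_group, alpha=0.05):
    """Approximate power of a two-sided z-test for a difference in means
    between two equally sized groups with a common standard deviation.

    All arguments are illustrative assumptions, not values from the paper,
    except where the caller passes numbers quoted in the text.
    """
    z = NormalDist()
    d = raw_effect / sd                    # standardized effect size
    ncp = d * sqrt(n_per_group / 2)        # mean of the test statistic under H1
    z_crit = z.inv_cdf(1 - alpha / 2)      # two-sided critical value
    # Probability of rejecting in either tail.
    return z.cdf(ncp - z_crit) + z.cdf(-ncp - z_crit)


if __name__ == "__main__":
    # Hypothetical standard deviations of the belief change; 351 is the
    # size of each text condition reported in the paper.
    for sd in (1.0, 1.2, 1.4):
        print(f"sd={sd}: power={two_sample_power(0.29, sd, 351):.3f}")
```

The loop only shows how sensitive the implied power is to the assumed dispersion of belief changes; the preregistered calculation may rest on a different test or variance estimate.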

The procedure and questionnaires to elicit beliefs are the same across conditions. Before starting the experiment, participants’ profiles were checked to make sure they fulfilled the required characteristics. In addition, filters for previous participation were applied so the final pool was composed solely of inexperienced participants. Security measures such as IP geolocation were applied before and after the start of the experiment in order to avoid fraud and profile duplication. Subjects could participate from their digital devices.

The 1,064 participants are randomly allocated to the three conditions: the RT and NRT conditions have 351 participants each and the RV condition has 362 participants. The composition of the final pool is as follows: 50% women; around 35–45% older than thirty, with average age between 30 and 33 years; about 45–50% with college education; and about 20% tenants. Table 12 in Appendix 3 shows the distribution of socio-demographic characteristics across conditions and the results from a test of differences in means from pairwise comparisons between the NRT, RT and RV. Participants’ characteristics are rather balanced across conditions, with some small significant differences.Footnote 13

We design two questionnaires to elicit participants’ beliefs about the effect of rent capping. One is to be completed before the intervention, and the other after it. Both questionnaires include six statements: three related to housing (including the key statement about the misconception), two on attitudes towards science, and one about fairness. Appendix 1 shows all the statements included in the questionnaires. Questionnaires are identical across the three conditions, but vary somewhat before and after the treatment in order to blur the focus on the statement on rent controls and to avoid memorization of answers. As shown in Table 9 in Appendix 1, three out of six statements are the same before and after the treatment.

The statement that refers to the misconception reads as follows: “Establishing rent controls, such that rents do not exceed a certain amount of money, would increase the number of people who have access to housing facilities.” Participants are asked to indicate their agreement with this and the remaining statements on a five-point scale, from totally agree to totally disagree. With the term beliefs we refer to the degree of agreement with this statement. Our outcome variable is the opinion change, measured as the difference between final and initial beliefs.Footnote 14

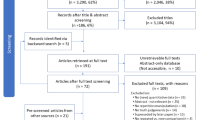

Figure 1 depicts the steps of the experiment. First, participants see on their screens a consent form, where they are informed that the experiment is part of a research project in social sciences, that their personal data will be confidential, that their decisions will be anonymous, and that they will be paid if they agree to participate. If they do so, they are asked to sign the consent form. The next screen explains that they will be asked to complete several tasks, that if they complete all of them, they will receive a payment of six euros, and that one of the tasks will allow them to obtain two additional euros if they perform it correctly. They are also informed that the tasks will take about 20 minutes but they can use more time if they wish. Instructions emphasize that in the opinion questionnaires there are no correct or incorrect answers, and remind participants that payment does not depend on these answers, but on task completion (see the initial instructions in Appendix 5).

After a set of socio-demographic questions, participants fill out the questionnaire eliciting initial beliefs. On the next screen, participants see either the RT, the NRT, or the RV according to the condition they have been assigned to. They can take their time to read and re-read the texts, as they are not given a time limit. Participants in the video condition can also take their time and view the video several times if they wish, and they can also pause it.

After participants have read the texts or watched the video, we assess their attention and understanding of the content by showing a screen with two comprehension questions. These questions are shown in Appendix 2.5 and are the same across the three conditions. If participants answer both questions correctly, their payment increases by 2 euros. They cannot go back to previous screens with the text or the video to answer the questions. They are informed about this before being presented with the text or the video. Note that, through these two questions, we incentivize that participants pay attention to the texts or the video, as this is an essential aspect of the experiment. These are the only two questions that have a correct or an incorrect answer. We do not incentivize answers to all other questions and statements, since for them there are no correct or incorrect answers. We expect participants to answer in good faith by informing them, from the outset, that the study is part of a social research project carried out by professors from several universities, who will not be able to see or verify their personal identity, and that the purpose of the study is to contribute to a better understanding of our society by investigating people’s views. Moreover, since polls and studies are often used by policy-makers, participants in our study may have an intrinsic interest in revealing their true beliefs.

On the next screen participants answer an 8-item Cognitive Reflection Test. We adapt the original questions in Frederick (2005), Toplak et al. (2014), Thomson and Oppenheimer (2016) to have economic content, so that they do not appear too disconnected from all other statements that have a socio-economic character. The eight items are shown in Appendix 4. We present them after the respective intervention to prevent the CRT from affecting participants’ reaction to the intervention.

Following the CRT questions, participants answer the final opinion questionnaire. In the closing screen participants are informed about the total payment and thanked for their collaboration. Appendix 5.2 shows all the instructions given to participants on each screen, which are the same across conditions.Footnote 15

3 Analysis

To test the hypotheses, we specify the following general regression:

where \(y_{i}\) is the change in beliefs, computed as the difference between a participant’s response to the statement on rent controls after the intervention and her response before the intervention. We transform the original responses in the five-point scale into numerical values as follows: 5 (totally disagree), 4 (disagree), 3 (do not know), 2 (agree), and 1 (totally agree). Hence \(y_{i}\) takes values between \(-4\) (a change from fully disagree pre-intervention to fully agree post-intervention) and 4 (a change from fully agree pre-intervention to fully disagree post-intervention). That is, a positive value obtains when the response varies from agreement towards disagreement with the misconception. If the participant provides the same response in both questionnaires, the change is zero. \(D_{i}\) is a dummy variable equal to one if the participant is exposed to a given treatment and zero otherwise.
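As a sketch of the specification just described, Eq. (1) can be written in the following form. The coefficient symbols \(\alpha\), \(\beta\) and \(\gamma\), the vector of socio-demographic controls \(X_{i}\) and the error term \(\varepsilon_{i}\) are notation assumed here for illustration; only \(y_{i}\) and \(D_{i}\) are defined in the text.

\[
y_{i} = \alpha + \beta D_{i} + X_{i}'\gamma + \varepsilon_{i}
\]

Under this form, \(\beta\) is the average difference in belief change between the treated and the comparison condition, and the specifications without controls simply omit \(X_{i}'\gamma\).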

To test the first hypothesis we estimate Eq. (1) by comparing the change in beliefs of participants exposed to the RV relative to the change in beliefs of participants exposed to the RT, both unconditional and conditional on the initial belief. Hence \(D_{i}\) is a dummy variable equal to one if the participant is exposed to the video and zero if she/he is exposed to the RT. Testing H1 also involves estimating Eq. (1) by comparing the change in beliefs of participants exposed to the RV relative to the change in beliefs of participants exposed to the NRT, both unconditional and conditional on the initial belief. Hence \(D_{i}\) in this case is a dummy variable equal to one if the participant is exposed to the video and zero if she/he is exposed to the NRT.

To test the second hypothesis we estimate Eq. (1) by comparing the change in beliefs of participants exposed to the RT relative to the change in beliefs of participants exposed to the NRT, both unconditional and conditional on the initial belief. In this specification, \(D_{i}\) is a dummy variable equal to one if the participant is exposed to the RT and zero if she/he is exposed to the NRT.

To test for the third hypothesis we build on the models above adding participants’ CRT scores to the regressions. To additionally assess whether the effect of the treatment varies with the propensity to think analytically, we add an interaction term between CRT score and the corresponding dummy variable.
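The pairwise regressions with the CRT score and its interaction with the treatment dummy can be illustrated with a minimal sketch. This is not the estimation code used in the paper: the data below are synthetic, the helper names `belief_change` and `ols` are hypothetical, socio-demographic controls are omitted, and only point estimates are computed (no standard errors).

```python
import numpy as np

# Numerical coding of the five-point scale, as described in the text.
SCALE = {
    "totally disagree": 5,
    "disagree": 4,
    "do not know": 3,
    "agree": 2,
    "totally agree": 1,
}


def belief_change(before: str, after: str) -> int:
    """Post-intervention minus pre-intervention response.

    A positive value is a move away from the misconception.
    """
    return SCALE[after] - SCALE[before]


def ols(y, columns):
    """Least-squares fit with an intercept.

    Returns the coefficients ordered as [intercept, *columns].
    """
    X = np.column_stack(
        [np.ones(len(y))] + [np.asarray(c, dtype=float) for c in columns]
    )
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef


# Synthetic illustration only: these numbers are NOT the experimental data.
rng = np.random.default_rng(0)
n = 400
d = rng.integers(0, 2, n)          # treatment dummy D_i
crt = rng.integers(0, 9, n) / 8    # share of correct answers on eight items
y = 1.0 + 0.3 * d + 0.5 * crt + rng.normal(0.0, 1.0, n)

# Columns: treatment, CRT score, treatment x CRT interaction.
coef = ols(y, [d, crt, d * crt])
print(dict(zip(["const", "D", "CRT", "D_x_CRT"], np.round(coef, 3))))
```

In this sketch the coefficient on `D_x_CRT` plays the role of the interaction term: a value close to zero would indicate that the treatment effect does not vary with the CRT score.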

Furthermore, we explore whether the change in beliefs is correlated with other personality traits, such as attentiveness, as captured by the time spent reading the texts or watching the video. We build on the models specified above with the CRT scores and add this attentiveness measure. We also study whether the effect of the interventions varies across gender, education level or housing ownership status by separately estimating the treatment effects in these subsamples.

4 Results

4.1 Descriptives

Table 1 reports the distribution of the degree of agreement with the statement about rent controls before and after each intervention. Figures 2 and 3 show the graphical description of these data. The distribution of initial beliefs is very similar in the three conditions: about 77 to 79% of participants agree or totally agree with the statement—that is, hold the misconception—while only 12 to 15% disagree (see Panel A in Table 1 and Fig. 2). These numbers are in line with findings from Brandts et al. (2022), where the misconception is initially shared by 75% to 84% of participants in the laboratory experiment, and by 70 to 78% in the field, depending on the condition. Note that these percentages are also similar to those found in polls conducted in Spain, Germany, in the UK or in the USA (see footnote 1).

After the treatment, the share of participants who disagree or totally disagree with the statement increases substantially in all three conditions, up to 42 to 53% depending on the treatment (see Panel B in Table 1 and Fig. 3). Note that the percentage in the case of the RV is over 10 percentage points (pp) higher than in the case of the NRT and over 8 pp higher than in the case of the RT. These percentages are substantially higher than those found in Brandts et al. (2022), where the share of the participants who disagree or totally disagree after the treatment ranges from 25% to 32% in the laboratory and from 10% to 29% in the field, depending on the condition. Nevertheless, the comparison of the distribution of final beliefs here with those in our previous work should be used with caution since the information treatments are different as well as the subject pool, as explained above.

Panel C in Table 1 shows the change in beliefs and the significance level from a test of difference in means. In both text conditions the percentage agreeing falls substantially, by about 37 pp, a drop of almost 50%. In the RV condition, the drop in the percentage of those who agree is more dramatic, being equal to 45 pp, almost 60%. These are the participants who abandon the misconception. Some of them move towards “don’t know” but most move towards disagreeing. In the NRT condition the share of those disagreeing increases by 29 pp, which is more than twofold the initial share. In the RT condition, the share disagreeing increases slightly more, by 32 pp. The percentage of those disagreeing in the RV condition increases by 38 pp, even more. All these changes are statistically significant.
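The relation between the drops in percentage points and the relative drops quoted above can be checked with a short calculation. The initial shares used here are assumptions taken from the rounded figures in the text (about 78% agreeing in the text conditions and 77% in the RV condition); the exact values are in Table 1.

```python
def relative_drop(initial_share_pct: float, drop_pp: float) -> float:
    """Drop expressed as a percentage of the initial share."""
    return 100.0 * drop_pp / initial_share_pct


# Text conditions: about 78% agree initially (assumed), drop of about 37 pp.
print(round(relative_drop(78, 37), 1))  # 47.4, i.e. "almost 50%"

# RV condition: about 77% agree initially (assumed), drop of 45 pp.
print(round(relative_drop(77, 45), 1))  # 58.4, i.e. "almost 60%"
```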

According to these findings, the on-line intervention with shorter texts appears to be more successful in reducing the prevalence of the misconception than the interventions with the longer texts in Brandts et al. (2022), although one needs to take into account the change of subject pool and the fact that the current experiment was conducted online. More importantly, a visual presentation of the refutation arguments is, on a descriptive level, more effective than a text-only correction, be it the NRT or the RT.

Table 2 shows a number of performance indicators: CRT scores and average time spent on each screen of the experiment. We do not observe significant differences for most of them across the three conditions. The CRT score is measured as the share of correct answers to the eight items included in the test. The mean score is around 0.45, in line with the average CRT score in Brandts et al. (2022) and in Mosleh et al. (2021). To explore whether the CRT is correlated with initial beliefs, we regress the latter on the CRT and socio-demographic variables. Results are shown in Table 15 in Appendix 3. The CRT score is not significantly correlated with the initial belief in any of the conditions, which indicates that being more analytical is not associated with a lower prevalence of the misconception.

On average, participants spend around 13 minutes to complete all screens. This is lower than the expected duration of about 20 minutes, calculated on the basis of the length of the questionnaires, the video duration and the texts’ estimated read time (see Table 11). Time spent on the treatment screen exhibits significant differences across conditions, as expected, since the estimated duration of the treatments is somewhat different. Participants spend more time on the RT than on the NRT. Average time spent on the RT screen is 2.8 minutes, slightly below the estimated read time (3.4 minutes). Average time on the NRT screen is about equal to the estimated time of 2 minutes. Looking at time per word, however, we find that the RT is read faster than the NRT. Participants spend more time on the video than on the two texts, as average time on the RV screen is 3.26 minutes, which is higher than the video duration (2.42 minutes). We also observe that the percentage of participants who spend less than the estimated time on the treatment screen is substantially lower in the RV than in the text conditions. All this suggests that the RV increases the attention paid to the message, while texts are read more lightly.

Time spent on the comprehension question screen is significantly lower in the RV than in the two text conditions, with no significant difference between the last two. A possible interpretation is that participants focus more on the visual presentation, which allows them to respond faster to the comprehension questions. However, we do not observe large differences across conditions in the percentage of participants who answer both questions correctly. Finally, very few participants (about 5%) show an interest in checking the links to the Andersson-Söderberg paper or to the Stockholm housing agency website in the text-only corrections. Table 13 in Appendix 3 shows more statistics on the total time spent in the experiment and on each screen in the three conditions.

4.2 Estimation results

The results shown in Tables 3 and 4 address the three research hypotheses formulated in Sect. 2.1.

Table 3 presents the estimation results of the differential impact of the treatments on participants’ beliefs, comparing pairwise and pooling all three treatments. We first estimate Eq. (1) with and without the set of socio-demographic variables in each case to assess the sensitivity of the results to the observed sample unbalances across conditions discussed above. Since there are no large differences in the estimated treatment effects once we have accounted for socio-demographics, we focus on commenting on the results with controls (Table 14 in Appendix 3 shows the estimated coefficients of the control variables).

Column (2) shows that the RV reduces the misconception relative to the RT—as the positive coefficient indicates a change away from the misconception—at the 10% significance level. The effect size, measured as the ratio of the estimated coefficient to the standard deviation of the dependent variable, indicates that the video induces a revision in beliefs towards disagreeing with the statement by 0.13 standard deviations. Column (5) shows that the RT has a positive but not significant impact on reducing the prevalence of the misconception relative to the NRT. Since both RT and NRT decrease the prevalence of the misconception by a similar magnitude (Table 1), the additional effect of the RT is small and not precisely identified. Column (8) shows that the effect of the RV with respect to the NRT is larger and significant at the 1% level. The effect size is 0.20 standard deviations, larger than the effect with respect to the RT. When pooling all three conditions in column (11), the estimated effects of the RV and the NRT with respect to the RT—the base category—are very close to those in columns (2) and (5). Since results are robust to conducting the pooled and pairwise estimations, we use the latter in the rest of the paper, as sample sizes are sufficiently large. This approach is more flexible than pooling because it allows the coefficients of control variables to vary across pairwise comparisons. Pooling all conditions constrains the coefficients of control variables to be equal and, therefore, any difference in participants’ distribution of characteristics across conditions would be captured by the treatment coefficients.

Columns (3), (6), (9) and (12) show that adding the CRT score to each specification with controls barely changes the treatment estimates. In all pairwise comparisons a higher CRT score is significant and associated with a stronger change away from the misconception, with very little variation across conditions. The estimates range between 0.45 in the RV vs NRT comparison and 0.51 in the RT vs NRT comparison, implying an effect size of 0.34 to 0.40 standard deviations. Recall that the CRT score is not significantly correlated with the initial belief in any of the conditions, as shown above. However, being more analytical does predict the ability to revise the misconception.

Results in Table 3 capture the overall effects of treatments on changing beliefs. These overall effects, however, may conceal differences across the distribution of initial beliefs. We thus estimate Eq. (1) separately for the groups of participants who initially agree, don’t know and disagree with the statement.Footnote 16 Table 4 contains the results for the specifications with all the controls. This table also shows the results from the specifications that add the CRT score and its interaction with the treatment.

In Panel A we compare the RV to the RT. The RV has a positive and significant effect at 5% level on abandoning the misconception of those participants who initially agree with it, with a coefficient of 0.26 and an effect size of 0.21 standard deviations. The effect is positive although not significant for the other two initial belief categories. Adding the CRT does not alter these patterns. The CRT score is positively correlated to abandoning the misconception (equivalent to 0.34 standard deviations) but the association is only significant for those who initially agree. Adding an interaction term between the RV and the CRT score does not change previous results, as shown by the estimated average marginal effect (AME), which is 0.26 in both regressions. That is, the effect of the RV does not vary with CRT scores.
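The effect sizes reported in this section are ratios of an estimated coefficient to the standard deviation of the dependent variable. The sketch below applies that definition to the rounded Panel A figures (coefficient 0.26, effect size 0.21). The implied standard deviation it returns is a back-of-the-envelope value derived from those rounded numbers, not a statistic reported in the paper, and the function names are hypothetical.

```python
def effect_size(coefficient: float, sd_outcome: float) -> float:
    """Coefficient expressed in standard deviations of the outcome."""
    return coefficient / sd_outcome


def implied_sd(coefficient: float, reported_effect_size: float) -> float:
    """Outcome standard deviation implied by a coefficient and its effect size."""
    return coefficient / reported_effect_size


# Rounded values quoted in the text for participants who initially agree.
sd = implied_sd(0.26, 0.21)
print(round(sd, 2))                     # 1.24
print(round(effect_size(0.26, sd), 2))  # 0.21
```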

In sum, the RV improves on the RT basically through its effect on those who initially agree with the misconception. Note that participants who initially disagree or are uncertain do not significantly react to the RV. Therefore, the RV reduces the misconception by moving those who initially hold it towards disagreement, rather than by shifting the whole distribution of beliefs.

In Panel B we compare the RT to the NRT. We do not find significant differential effects of the RT along the distribution of initial beliefs. As in Panel A, higher CRT scores are associated with a higher abandonment of the misconception, with the correlation being slightly stronger (0.40 standard deviations). The interaction term between the RT and the CRT score is not significant, as above. These results are qualitatively in line with those obtained in the laboratory in Brandts et al. (2022).

Panel C in Table 4 shows the comparison between the RV and the NRT. Here, as in Panel A, the RV has a positive effect on revising the misconception of those who initially hold it. The effect is significant at 1% level and its size is equivalent to 0.28 standard deviations, stronger than the effect with respect to the RT in Panel A. The CRT score also shows a positive correlation with moving away from the misconception. The interaction term between the CRT score and the treatment is not significant.

Hence, our results indicate that the ranking in terms of effectiveness in dispelling the misconception is the RV, followed by the RT and, last, the NRT.Footnote 17 They also show that the importance of the association between the disposition to reflective thinking and the revision of the belief slightly decreases when information is presented in video instead of in text.

To sum up, our empirical findings provide the following evidence regarding the three research hypotheses:

Result 1. “The RV has a significantly higher impact on abandoning the misconception than the RT, and even higher impact relative to the NRT. In both cases the effect of the RV is driven by the impact on participants who initially hold the misconception.”

Our results support H1. Visual communication is more effective in dispelling the misconception than refuting it through a written text, even if the text is refutational.

Result 2. “The RT is not significantly more effective than the NRT in dispelling the misconception.”

Our estimates do not support H2. Although the effect of a written RT is positive, it is small, and its differential impact with respect to the NRT is not precisely estimated. The refutational elements of the RT do not seem to stand out among the other sentences. This result concurs with Brandts et al. (2022). Note, however, that in that study the proportion of participants who hold the misconception after the intervention is about 54 to 63%, while here this percentage is smaller, 41%. The drop is now 37 pp, almost double that in the former study. This suggests that short texts are more effective than long texts.

Result 3. “A higher propensity to reflective thinking significantly induces a change away from the misconception in all treatments for participants who initially hold it.”

Our findings show a positive association between the CRT score and the change away from the misconception, especially for the key group of interest, i.e., participants who initially have the misconception. This evidence is in line with H3. However, we should be cautious when drawing conclusions about H3. Since CRT scores are collected after the treatment in each condition for the reason discussed above, the refutational correction or the visual elements may affect CRT scores. Results suggest that this potential influence is not serious since the average CRT score is not significantly different across the three conditions, as shown in Table 2. Nevertheless, we cannot fully rule out that the treatments affect the answers to the CRT questionnaire and, thus, we have to be cautious about the causal effect of the propensity to reflective thinking on the change of beliefs. Finally, we do not find evidence that the treatment effect varies with the CRT score.

We explore whether the mechanism that makes the RV more effective than written information is related to a higher ability of the RV to capture and keep the viewer’s attention. As described above, in the NRT condition 53% of participants spend less time than expected on the text screen; in the RT condition, 62% are below the expected time. In the RV condition, in contrast, only 24% spend less time than the whole video duration. This suggests that the video attracts and keeps the attention of more participants than the text interventions. To analyze the role of attention, we define two alternative measures. One is the ratio between the time spent on the treatment screen by an individual and the expected time to be spent on that screen. The expected time is 2:42 minutes for the RV, 3:40 minutes for the RT, and 2:00 minutes for the NRT (see Sect. 2.2). The second measure is a dummy variable equal to one if time spent on the treatment screen is below the expected time and zero otherwise.
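The two attention measures defined above can be sketched as follows. The expected times are the ones quoted in the text, converted to seconds; the function and variable names are hypothetical, and the handling of ties (time exactly equal to the expected time counts as not below it) is an assumption.

```python
# Expected time on the treatment screen, in seconds, from the text:
# 2:42 for the RV, 3:40 for the RT and 2:00 for the NRT.
EXPECTED_SECONDS = {
    "RV": 2 * 60 + 42,
    "RT": 3 * 60 + 40,
    "NRT": 2 * 60,
}


def attention_measures(condition: str, seconds_on_screen: float):
    """Return (relative_time, below_expected) for one participant.

    relative_time  : time on the treatment screen divided by the expected time.
    below_expected : 1 if the time spent is below the expected time, else 0.
    """
    expected = EXPECTED_SECONDS[condition]
    relative_time = seconds_on_screen / expected
    below_expected = int(seconds_on_screen < expected)
    return relative_time, below_expected


# A participant who spends one minute on the NRT screen.
print(attention_measures("NRT", 60))   # (0.5, 1)
```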

We separately add these two measures to the specification with the CRT and the controls, both for the full sample and conditional on initial beliefs. Table 5 shows that the estimated CRT and treatment effects are robust to the attention measure used. Relative time always has a positive effect on revising beliefs, and the indicator for time below the expected time shows a congruent negative sign. Estimated treatment effects fall somewhat compared to those in Tables 3 and 4, as does the effect of the CRT. This suggests that part of the positive effect of the video found above can be attributed to the video per se prompting higher attention compared to plain text. In other words, if participants paid as much attention to the text as they do to the video, the impact of the two formats would likely be similar. When comparing the RV with the NRT, the impact of attention is significant and positive, and so is the treatment effect. Overall, these results suggest that integrating visual elements increases attention and thus comprehension of the arguments and evidence presented in the correction, boosting the refutational elements.

4.3 Additional results

We explore whether treatment effects vary across several socio-demographic characteristics and according to several measures of trust. We focus on the subsample of participants who initially hold the misconception (agree with the statement) because this is where the relevant changes take place as discussed above.

We first split the sample of participants according to whether they are tenants or owners. Tenants and owners might differ in their reaction to a treatment, since they would be potentially affected in opposite directions by rent regulation. Perhaps current tenants, whose interest is to pay low rents, stick to their initial belief more than owners, who may be more receptive to the intervention, given that they may wish to rent out at some point. Panel A in Table 6 shows, however, that the treatments do not significantly differ for tenants and owners. Note that sample size is small in the case of tenants, which reduces the precision of the estimates. The only exception is for the RV vs NRT comparison, where the positive impact of the RV on owners’ revision is significant. This is in line with owners being more receptive to the intervention than tenants, who are not significantly affected by it.

Men and women often differ in their attitudes or behavior. In our data gender is significantly correlated with the initial opinion (see Table 15 in Appendix 3). Results in Panel B, Table 6 show some differences in the effect of treatment on beliefs across gender but there is no clear pattern. For the RV vs RT comparison, RV is significant for men but not for women and for the RT vs NRT comparison, RT is significant for women but not for men. For the RV vs NRT comparison, both men and women significantly revise their beliefs but the video has a stronger effect on women.

Education is not significantly correlated with the initial belief (see Table 15 in Appendix 3) but more educated individuals may react differently to the treatments than less educated ones. To study this we split the sample into low and highly educated participants. Highly educated includes those who report tertiary education as their maximum schooling level as well as participants who report being currently enrolled in tertiary education. Results in Panel C of Table 6 show that the video is more effective for the highly than for the low educated participants, relative to both the RT and NRT. When comparing the two text conditions the effects are not significant for any education level. Therefore, results suggest that participants with high education are more likely to abandon the misconception after watching the video than participants with low education.

We finally explore whether participants react differently to the treatments depending on their degree of trust towards economic statistical information, and towards several information sources. We use the questions on trust that participants answer before the treatments: what they consider to be the most trustworthy source for economic and social information, and the extent to which they mistrust economic statistics. Table 7 shows the distribution of responses for these two questions. The distributions are similar across conditions and show, maybe surprisingly, that around 70% of participants select politicians as the most trustworthy source for economic and social information. More than half of the participants show mistrust towards economic statistics: they agree or totally agree that economic statistics do not reflect, in general, the true economic situation.

Panel A in Table 8 shows the results from separate regressions run after splitting the sample according to whether participants consider politicians or other sources to be the most trustworthy source for economic and social information. In the comparison between visual and text-only messages (RT or NRT), participants who trust other sources significantly abandon the misconception, while those who trust politicians significantly update their beliefs only if they receive the video rather than the NRT. In the comparison between RT and NRT, the effects are not significant. Although the evidence is not very strong, these results suggest that the visual message is somewhat more effective for those who declare that politicians are not their most trustworthy source.

Panel B in Table 8 presents the regression results for the treatment comparisons after splitting the sample according to whether participants mistrust economic statistics, i.e., whether they respond “totally agree” or “agree” to the statement. Those who mistrust statistics (columns Yes in the table) are more convinced by the RV both when compared to the RT and to the NRT. In the latter comparison (RV vs NRT), the video is also effective for those who do not mistrust statistics. The comparison between the text-only messages (RT vs NRT) shows that the RT has a significant positive effect only on the group who trust economic statistics. These results suggest that the visual message is an especially effective format for those who do not trust economic data.

5 Conclusions

The accumulation of research about the causes and effects of socio-economic phenomena has contributed to building a strong consensus among researchers on some issues. A case in point is the effect of rent controls on rental housing availability. Yet, this consensus has not reached a majority of citizens, who endorse and demand this policy in the hope that it will have positive effects on access to housing. Effective communication between researchers and the public faces several barriers in all fields of knowledge. Some stem from the inherent difficulty involved in explaining concepts, methods and results in a simple way without losing accuracy; others may arise from the different biases and values that come into play when lay people process information. Barriers may be stronger in the case of social sciences, where even providing hard factual information encounters skepticism (Nyhan, 2020). The concern about the serious consequences that ignoring solid knowledge may have has led to the emergence of a body of research on how to communicate science to policymakers and the public in several fields. This concern is further justified by the rise of misinformation in the media and social networks.

In this paper we have investigated the effectiveness of specific communication formats in confronting the widespread belief that rent control increases affordable housing, using a sample of ordinary citizens. We design a short video that combines a refutational approach with images and we test its effectiveness relative to short text-only messages. The video contributes substantially to closing the gap between scientific consensus and public opinion. Indeed, while 77% of participants initially hold the misconception, after the refutational video intervention this percentage drops to 32%, that is, by 45 pp. This is an additional 8 pp compared to the 37 pp effect of texts that convey the same information but with words only. The estimated effect of the visual message is significant and induces an abandonment of the misconception for participants who initially hold it. Our results suggest that part of this positive effect may be driven by the higher attention that the video attracts. Our interpretation is that visual communication, by reducing cognitive effort, makes economic arguments easier to grasp. Our results are in line with previous literature that finds that visual tools are more effective than text-only messages to dispel misconceptions about other topics (Mayer & Moreno, 2003; Goldberg et al., 2019; Young et al., 2018; Reynolds et al., 2018).

Our results also show that short texts are more effective than longer ones. Both the short RT and the short NRT lead to an additional 15 percentage point reduction in the prevalence of the misconception, compared to the reduction in Brandts et al. (2022), where texts are twice as long. Nonetheless, as in our previous work, the refutational correction in a written message does not improve on a non-refutational one. We wish to emphasize that the positive effects of the video and short texts are obtained with a pool of ordinary citizens, who are the natural target for communicating economic policies.

The main takeaway from this study is that communicating to the general public evidence about the effects of rent control in a visual refutational format goes a long way in dispelling this misconception. Citizens are currently highly exposed to visual communication in the media; there is also a lot of competition for their attention. We therefore consider it important that, in our case, the format that uses images is the most successful one. A practical implication is that researchers should rely more on visual refutational elements to communicate research findings to the public. Academic associations, for instance, could design and disseminate refutational videos addressing misconceptions about socio-economic issues. Our study thus adds to the still scarce research in economics on the effectiveness of different modes of communicating research findings that confront popular beliefs. We show that our approach, refutational visual communication, may be a promising method to communicate other counter-intuitive economic findings to ordinary citizens. We also add to the literature on misconceptions in political and economic psychology, and, more broadly, to the literature on science communication.

Yet, in our experiment, 32% of participants still hold the misconception after the refutational video intervention. Although one obviously cannot expect to persuade everybody, we think that this is still a high percentage, given the high consensus among researchers. We see several possibilities that remain to be explored in future research. The first pertains to the characteristics of our refutational video. In designing it we were quite restricted, since we needed to keep it closely parallel to the refutational text in order to properly identify the effect of the pure visual element. A richer, less restricted video could be more effective, closing the gap further.

Second, our communication strategy may be improved by adding some other important aspects. The standard refutation correction is based on inducing people to approach the issue at hand analytically. One possible reason for the resistance to moving away from the misconception is that corrections involving topics sensitive to political views or worldviews are perceived as a threat to individuals’ social identity. This kind of misconception may be harder to debunk, and may require the refutation approach to account for this explicitly. Other issues that warrant further research are how long changes in beliefs persist, whether peer-to-peer communication would affect them (Hüning et al., 2021), and whether changes in beliefs are related to ideology. Moreover, a relevant question for further research is whether changes in beliefs translate into behavior such as voting decisions. Our purpose here is to put the focus on whether communication formats have an impact. We think that this is the first battle, so to speak, that we, as economists, have to fight.

A final issue is whether and how the source of information may affect the reaction to information treatments. Regarding the subject of rent-control, the reaction might be different depending on whether the information is provided by political parties, civic associations or experts. Ecker et al. (2022) review the psychological drivers of misinformation belief and its resistance to correction and also refer to the issue of which societal players have the power to affect public perceptions. This is an important subject in relation to misconceptions about rent control and other policies that should be studied in the future.

Notes

In Spain, a poll conducted in 2023 by 40dB on behalf of the media outlets Cadena Ser/El País found that 75% of respondents support rent control. In Germany, 71% of respondents to a poll conducted by Infratest dimap in 2020 were in favor of the rent cap in Berlin. In the UK, support for rent controls reached 71% in a poll conducted in December 2019 by Ipsos MORI, with only 9% of people opposing them. This was the most supported of the housing policies covered in the same survey; building new public homes, for example, was supported by 56% and opposed by 15%. In a poll conducted by the Institute of Governmental Studies (IGS) of UC Berkeley in 2017, 60% of the state’s registered voters favored rent control, while 26% opposed it.

Percentage weighted by the degree of confidence of the response. See https://www.igmchicago.org/surveys/rent-control/

See also Stockholm’s housing agency, https://bostad.stockholm.se/english/.

The on-line experiment was preregistered at AsPredicted Registry, Wharton Credibility Lab, University of Pennsylvania.

There is no consensus on the terminology that refers to attributes other than cognitive ability. Here we adopt the term personality traits. Other authors refer to them as non-cognitive abilities, character skills, temperament or personal qualities. All this terminology has flaws as discussed in Duckworth and Yeager (2015).

Given our limited budget, we could not test the effectiveness of another possible visual treatment, a non-refutational video. Our previous work in Brandts et al. (2022) shows some indication that the refutational text correction improves slightly relative to the expository text. We decided here to keep the refutational approach, now combining it with visual elements, to test whether the effectiveness of this format of communication is stronger than the RT format.

An anonymized copy, without author names, of the first preregistered plan is available at https://aspredicted.org/blind.php?x=uv2t69. The PAP corresponding to the video condition is available at https://aspredicted.org/blind.php?x=4n78sy.

A fifth element refers to facilitating interactions among individuals by asking them to explain their opinions to other participants. We do not introduce this element here because of the technical complexity involved in an online setting.

First link: https://aresjournals.org/doi/abs/10.5555/jhor.21.2.xv120w45816v3344. Second link: https://bostad.stockholm.se/Como-funciona/. Both links are also included in the NRT below.

Due to technical complexity, it was not possible to include in the RV the two links inserted in the RT.

We thank a professional in the visual industry for his advice about the suitability of the sequences of images and signs.

When the misconception on rent controls is not explicitly addressed, undergraduate students are found to stick to it after receiving standard teaching on price controls during a course in principles of economics, even if they perform well in a question on this topic included in graded tests (Busom et al., 2017).

To see how our sample compares to the adult population in Spain, we use the information from the March 2023 Barometer sample from the Spanish Centro de Investigaciones Sociologicas, CIS (Sociological Research Center). CIS is the national public entity in charge of regularly conducting opinion surveys on a range of socio-economic and political topics. Its sample includes individuals 18 years old or older, as is our case. In this sample, 52% of people are women, 20% younger than 34 years of age, 4% non-Spanish. The distribution of education levels is: primary or less 8.4%, compulsory 13%, upper secondary 34%, tertiary 44%. The employed are 54%, the unemployed 9%, not in labor force 36%. With respect to housing, 47% are owners, 29% have a mortgage, 19% are tenants, and other 5.4%. CIS does not provide data on household composition, municipality size and province of residence. We thus use information from the Spanish Statistics Institute (INE). According to the 2020 Household Survey, household composition is: single 26%, single parent 10.4%, childless couple 21%, couple with children 33%. According to the 2021 Census, the distribution of the population by town size is 20% small, 40% medium and 40% large; the share of population living in Valencia is around 6%. Therefore, our samples for each treatment are quite representative for gender, non-Spanish, education, labor status, home ownership, and less so for household composition and town size. For age distribution and province of residence the differences are large. We should clarify that our limited budget did not allow for a complete stratification of the sample to obtain a fully representative sample of the adult Spanish population. Nevertheless, the level of representativeness of our sample is quite high in many important dimensions.

Note that rents have been unregulated in Spain since the 1980s; only in May 2023 did the Spanish Parliament pass a law that allows regional governments to set rent control regulations. At the local level, however, only the municipality of Barcelona and some other municipalities in Catalonia with tight housing markets were subject to rent control during the period September 2020 to April 2022. About 3 to 6% of our participants live in the province of Barcelona, depending on the condition. Not all municipalities were regulated, and although we do not have information about the participants’ municipality of residence, we can conclude that very few participants potentially live in a regulated area. Therefore, it is very unlikely that this factor drives the results.

A potential concern in experimental work is the presence of an experimenter demand effect. Note that all three interventions would be equally subject to this effect, and our analysis compares outcomes across conditions.

We add up agree and totally agree; and disagree and totally disagree.

The pooled estimation conditional on initial beliefs yields the same results.

References

Aguilar, S. J., Polikoff, M. S., & Sinatra, G. M. (2019). Refutation texts: A new approach to changing public misconceptions about education policy. Educational Researcher, 48(5), 263–272.

Ahern, K. R., & Giacoletti, M. (2022). Robbing Peter to pay Paul? The redistribution of wealth caused by rent control. NBER Working Paper 30083.

Alesina, A., Ferroni, M. F., & Stantcheva, S. (2021). Perceptions of racial gaps, their causes, and ways to reduce them. NBER Working Paper 29245.

Andersson, R., & Söderberg, B. (2012). Elimination of rent control in the Swedish rental housing market: Why and how? Journal of Housing Research, 21(2), 159–181.

Asquith, B. J. (2019). Do rent increases reduce the housing supply under rent control? Evidence from evictions in San Francisco. Upjohn Institute Working Paper 19-296.

Barrera, O., Guriev, S., Henry, E., & Zhuravskaya, E. (2020). Facts, alternative facts, and fact checking in times of post-truth politics. Journal of Public Economics, 182, 104123.

Dal Bó, E., Dal Bó, P., & Eyster, E. (2018). The demand for bad policy when voters underappreciate equilibrium effects. The Review of Economic Studies, 85, 964–998.

Bolsen, T., Palm, R., & Kingsland, J. (2019). Counteracting climate science politicization with effective frames and imagery. Science Communication, 41(2), 147–171.

Brandts, J., Busom, I., Lopez-Mayan, C., & Panadés, J. (2022). Dispelling misconceptions about economics. Journal of Economic Psychology, 88, 102461.

Busom, I., Lopez-Mayan, C., & Panadés, J. (2017). Students’ persistent preconceptions and learning economic principles. The Journal of Economic Education, 48(2), 74–92.

Chan, M. S., Jones, C. R., Jamieson, K. H., & Albarracín, D. (2017). Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychological Science, 28(11), 1531–1546.

Coibion, O., Gorodnichenko, Y., & Weber, M. (2019). Monetary policy communications and their effects on household inflation expectations. NBER Working Paper 25482.

Coibion, O., Gorodnichenko, Y., & Weber, M. (2020). Does policy communication during covid work? NBER Working Paper 27384.

Diamond, R., McQuade, T., & Qian, F. (2019a). The effects of rent control expansion on tenants, landlords, and inequality: Evidence from San Francisco. American Economic Review, 109(9), 3365–3394.

Diamond, R., McQuade, T., & Qian, F. (2019b). Who pays for rent control? Heterogeneous landlord response to San Francisco’s rent control expansion. AEA Papers and Proceedings, 109, 377–380.

Dolls, M., Schüle, P., & Windsteiger, L. (2022). Affecting public support for economic policies: Evidence from a survey experiment about rent control in Germany. CESifo Working Paper No. 10493.

Druckman, J. (2015). Communicating policy-relevant science. PS, Political Science & Politics, 48, 58–69.

Duckworth, A. L., & Yeager, D. S. (2015). Measurement matters: Assessing personal qualities other than cognitive ability for educational purposes. Educational Researcher, 44(4), 237–251.

Ecker, U. K., Lewandowsky, S., Cook, J., Schmid, P., Fazio, L. K., Brashier, N., Kendeou, P., Vraga, E. K., & Amazeen, M. A. (2022). The psychological drivers of misinformation belief and its resistance to correction. Nature Reviews Psychology, 1, 13–26.

Ecker, U. K., O’Reilly, Z., Reid, J., & Chang, E. (2020). The effectiveness of short format refutational fact-checks. British Journal of Psychology, 111, 36–54.

Elias, J., Lacetera, N., & Macis, M. (2022). Is the price right? The role of economic tradeoffs in explaining reactions to price surges. NBER Working Paper 29963.

Ferrero, M., Konstantinidis, E., & Vadillo, M. A. (2020). An attempt to correct erroneous ideas among teacher education students: The effectiveness of refutation texts. Frontiers in Psychology, 11, 577738.

Frederick, S. (2005). Cognitive reflection and decision making. Journal of Economic Perspectives, 19(4), 25–42.