Abstract

We present an experiment where subjects sequentially receive signals about the true state of the world and need to form beliefs about which one is true, with payoffs related to reported beliefs. We attempt to control for risk aversion using the Offerman et al. (Rev Econ Stud 76(4):1461–1489, 2009) technique. Against the baseline of Bayesian updating, we test for belief adjustment underreaction and overreaction and model the decision making process of the agent as a double hurdle model where agents with inferential expectations first decide whether to adjust their beliefs and then, if so, decide by how much. We also test the effects of increased inattention and complexity on belief updating. We find evidence for periods of belief inertia interspersed with belief adjustment. This is due to a combination of random belief adjustment; state-dependent belief adjustment, with many subjects requiring considerable evidence to change their beliefs; and quasi-Bayesian belief adjustment, with aggregate insufficient belief adjustment when a belief change does occur. Inattention, like complexity, makes subjects less likely to adjust their stated beliefs, while inattention additionally discourages full adjustment.


1 Introduction

Agents form and update their beliefs when they receive new information. In the presence of rational expectations, new information leads to belief updating every period according to Bayes rule. In reality many agents do not behave according to basic statistics, and task complexity and inattention may contribute to deviations from Bayesian predictions. Such violations of rational expectations have been studied in static settings, where all the information is presented at once to subjects who discount priors (Kahneman and Tversky 1973; Tversky and Kahneman 1982; El-Gamal and Grether 1995). In this paper we study a dynamic setting in which new information arrives sequentially and consider the frequency as well as the extent of belief adjustment, referring to sticky belief adjustment when it is insufficient in either domain.

Relative to previous urn experiments where agents need to state guesses, such as Khaw et al. (2017), our study is innovative in a number of dimensions. First, it is simple, in that our experiment does not have an evolving state of nature. We are interested instead in the basic question of how beliefs are updated dynamically, and this basic question can be answered in a way that is easiest for experimental participants and most interpretable for researchers by having a simple dynamic environment with new information flowing in. Second, we attempt to control for risk aversion using the technique of Offerman et al. (2009), and are therefore able to measure beliefs more accurately than in previous research. Third, we do not use cumulative earnings, which may lead to uncontrolled factors such as income effects or portfolio diversification.

Fourth, we develop a double hurdle econometric model to combine in a single framework different types of belief adjustment we may observe in the laboratory: time-dependent (random) belief adjustment and state-dependent (Bayesian, Quasi-Bayesian) belief adjustment.Footnote 1 Each type has been utilized in macro and microeconomic modelling, though the tendency has been to focus on only one type.

Within macroeconomic research, sticky belief adjustment can be seen as a possible microfoundation of sticky price adjustment, for example as a result of inattention and observation costs (Alvarez et al. 2016), information costs (Abel et al. 2013), cognitive costs (Magnani et al. 2016) and the consultation of experts by inattentive agents (Carroll 2003), or some combination of these factors (Cohen et al. 2019). State-dependence in beliefs implies a dependence of belief adjustment on the economic state, which in turn may depend on new information flowing in. Time-dependence in beliefs is often viewed stochastically [as for example in Caballero (1989)] and therefore yields random belief adjustment, following some underlying data generating process. One useful way of conceptualizing state- and time-dependent beliefs is the inferential expectations (IE) model of Menzies and Zizzo (2009): that is, agents hold a belief until enough evidence has accumulated for a statistical test of a given test size \(\alpha\) to become significant, at which point beliefs switch. Furthermore, if agents’ stance towards evidence is modelled by a probabilistic draw on their \(\alpha\), then the probability that the test size is unity (which results in an update for any evidence, or noneFootnote 2) is the probability of a random belief adjustment.

Within microeconomic research, Quasi-Bayesian (QB) belief adjustment has been the preferred route to think about bounded-rational belief adjustment. Rabin (2013) distinguishes between warped Bayesian models which encapsulate a false model of how signals are generated, for example ignoring the law of large numbers (Benjamin et al. 2015); and information-misreading Bayesian models that misinterpret signals as supporting agents’ hypotheses, thus giving rise to confirmation bias (Rabin and Schrag 1999), and therefore lead to underweighting of information (for early evidence, see Phillips and Edwards 1966). In static problems where priors and ‘new information’ were given, Kahneman and Tversky (1973) and Tversky and Kahneman (1982) made the contrasting finding of base rate neglect, with more weight being put on the new information; reviews of the literature on base rate neglect can be found in Koehler (1996), Barbey and Sloman (2007) and Benjamin (2019). One simple way of modelling QB adjustment, which we follow, is that the agent adjusts beliefs every period in response to new information, but this adjustment is either too big or too small (Massey and George 2005; Ambuehl and Li 2014). That is, if the posterior probability is the prior multiplied by \((likelihood)^{\beta }\), Quasi-Bayesian models are marked by departures of \(\beta\) from unity.Footnote 3

In this paper we use a double hurdle model to consider different perspectives about belief adjustment emphasized within macro- and microeconomics. Full rationality requires clearing two hurdles in a very specific way: fully rational agents must adjust every period as they clear hurdle 1, and they must use Bayes rule with \(\beta =1\) as they clear hurdle 2.

The rows of Table 1 describe the first hurdle using different values of the IE test size (\(\alpha\)) and the columns describe the second hurdle using different values of the QB parameter \(\beta\). Fully rational agents are fully attentive (\(\alpha =1\)) and Bayesian (\(\beta =1\)). The double hurdle model is formalized in Sect. 4.2 and generates a distribution for \(\alpha\) and \(\beta\), parameterizing both the frequency and extent of adjustment.

Fifth, we provide the first study that looks at how increased task complexity or scope for inattention affects belief updating. Task complexity and inattention are two factors that have been independently identified as playing a potentially substantial role in bounded-rational decision making.

Among others, Simon (1979), Gigerenzer and Gaissmaier (2011) and Caplin et al. (2011) have identified complexity of decision settings as a key reason for ‘satisficing’ and heuristic-based decision making. This is neurobiologically plausible (Bossaerts and Murawski 2017) and leads to differences in how information is processed (Payne 1979) and how lotteries are selected in binary choices (Wilcox 1993). Examples of practical applications where complexity can be important include consumer exploitation by firms to achieve greater profits (Carlin 2011; Huck et al. 2011; Sitzia and Zizzo 2011; Sitzia et al. 2015); decisions to engage in vertical integration or outsourcing (Tadelis 2002); climate change inaction as linked to the complexity of the relevant task environment (Slawinski et al. 2017); and defaults becoming more attractive as an omission bias (e.g., Baron and Ritov 2004).

Inattention has been independently identified as a key source of bounded-rational decision making (e.g., Alvarez et al. 2016; Magnani et al. 2016; Carroll 2003), in ways that may but do not necessarily reflect rational inattention trade-offs. (See Caplin et al. 2020, for a discussion of this point.) It has a wide-ranging and growing set of applications. Examples of applications in macroeconomics include the New Keynesian Phillips Curve (Mankiw and Reis 2002), business cycle dynamics (Mackowiak and Wiederholt 2015) and the failure of uncovered interest rate parity (Bacchetta and van Wincoop 2010). Examples of applications in microeconomics include strategic product pricing (Martin 2017), corporate strategy (Dessein et al. 2016) and portfolio selection (Huang and Liu 2007).

Surprisingly, given the importance that both complexity and inattention have been stated to have in a wide range of settings with risk and imperfect information, we are not aware of papers that have looked at the effect of either on belief updating. A key contribution of this paper is to address this gap.

Regarding task complexity, we expect it to potentially reduce the frequency of stated belief changes in the first hurdle (\(\alpha\) in Table 1) as well as the extent of the stated belief change when this takes place in the second hurdle (\(\beta\) in Table 1). This is because complexity makes subjects less likely to wish to make an ‘active’ choice and therefore more likely to stick to the default (see Gerasimou 2018); and because, if they do change their stated beliefs, as they perceive the task as more uncertain, they are likely to be more conservative in the degree to which they do so (see Brainard 1967).

Regarding inattention, if it matters in the way that the literature has suggested, then a simple experimental manipulation increasing the likelihood of inattention will lead experimental subjects to be less likely to update their beliefs regarding the variable to which they are not paying attention. Importantly, while this would not be surprising if the alternative distracting task were incentivized, in our experiment (as in Sitzia et al. (2015)) it is not. There is therefore unlikely to be any preference-based reason why a rational agent should ignore the guessing task on the basis of which payments are wholly made, and deviations from Bayes can be more precisely identified as being due to cognitive costs in information processing. Inattention should reduce the likelihood of agents switching their belief, and therefore enter the first hurdle of the model.

In brief, our results are as follows. Subjects change their beliefs about half the time, which is consistent with random belief adjustment, but they also consider the amount of evidence available, which is consistent with state-dependent belief adjustment. When subjects do change beliefs, they do so by around 80 per cent of the full Bayesian update, which is consistent with our version of Quasi-Bayesian belief adjustment. There is substantial heterogeneity in our results and the frequency and extent of belief adjustment are negatively correlated: agents who update with low frequency do so by more than 80 per cent of the full Bayesian update. Furthermore, we find evidence that inattention reduces the propensity to update, as predicted, as well as the extent of the update. Complexity is less important, as it only affects the propensity to update and does so by less than inattention. We do not find that task confusion explains belief stickiness to an important degree, nor is there any financial incentive to explain why beliefs are stickier if we add an alternative distracting task. Rather, inattention and cognitive costs are likely to explain the infrequent belief adjustment, to different degrees, by half of our subjects. Only a small fraction of agents have rational expectations, where these are understood as full Bayesian updating each period.

Our paper is structured as follows. In Sect. 2 we construct a balls-and-urn experiment with treatments for complexity and inattention. Section 3 describes the balls-and-urn environment and predicts behavior under different expectational assumptions. Section 4 analyzes the experimental results using: nonparametric (model free) statistics for the raw data; the double hurdle econometric model for the risk-adjusted data; and a subject-specific density of test sizes derived from the double hurdle model. Section 5 draws together the main results and concludes.

2 Experimental design and treatments

Our experiment was fully computerized in JavaScript and run with undergraduate and postgraduate students in the experimental laboratory of the University of East Anglia with \(n=245\) subjects in 16 sessions conducted between July and December 2013.Footnote 4 Everyone in each session participated in the same treatment, and sessions were conducted in mixed order. ORSEE was used as experimental recruitment software for the assignment of subjects to sessions. Subjects were separated by partitions. The experiment was divided into two parts, labelled the risk attitude part (Stage 1) and the main part (Stage 2).

Experimental instructions were provided at the beginning of each part for the tasks in that part. Online appendices 3 and 4 contain a copy of the instructions; a file with more details on the software and with computer screens is also provided as supplementary material. A questionnaire was administered to ensure understanding after each batch of instructions. If a subject got an answer wrong, a brief and simple explanation was provided explaining the correct answer (see the computer screens file for the text in each case) and, if anything was still unclear, subjects were given the opportunity to obtain further clarification from an experimenter.

2.1 Main part of the experiment

After playing the risk attitude part described in more detail below, in the main part of the experiment subjects played 7 stages, each with 8 rounds, thus generating \(T=56\) observations. At the beginning of each stage the computer randomly chose one of two urns (Urn 1 or Urn 2), with Urn 1 being selected at a known probability of 0.6. Each urn represents a different state of the world. While this prior probability was known and it was known that the urn would remain the same throughout the stage, the chosen urn was not known to subjects. It was known that Urn 1 had seven white balls and three orange balls, and Urn 2 had three white balls and seven orange balls. At the beginning of each of the 8 rounds (round = t), there was a draw from the chosen urn (with replacement) and subjects were told the color of the drawn ball. These were therefore signals that could be used by subjects to update their beliefs.Footnote 5 It was made clear to the subjects that the probability an urn was chosen in each of the seven stages was entirely independent of the choices of urns in previous stages. A visual representation of the urns was provided on the computer screens to facilitate understanding (see the computer screens file).

Once they saw the draw for the round, subjects were asked to make a probability guess between 0 and 100%, on how likely it was that the chosen urn was Urn 1. The corresponding variable for analysis is their probability guess expressed as a proportion, denoted g. Once a round was completed, the following round started with a new ball draw, up to the end of the 8th round.

Payment for the main part of the experiment was based on the guess made in a randomly chosen stage and round picked at the end of the experiment. A standard quadratic scoring rule (e.g. Davis and Holt 1993) was used in relation to this round to penalize incorrect answers. The payoff for each subject was equal to 18 GBP minus 18 GBP \(\times\) \(\left( guess\ -\ correct\ probability \right) ^{2}\). Therefore, for the randomly chosen stage and round, subjects could earn between 0 and 18 GBP depending on the accuracy of their guesses. It was clarified to subjects that “if the chosen urn was Urn 1, then the correct probability of the chosen urn being Urn 1 is 100%; if the chosen urn was Urn 2, then the correct probability of the chosen Urn being Urn 1 is 0%”. While instructions were generally provided on the computer screen, a table with payoffs for each level of accuracy of the guesses was provided in print, to facilitate understanding (see online appendix 3). Table 8 in “Appendix 5: Robustness and understanding” includes a regression model with maths ability as an explanatory variable, which we measure in the C treatment. This variable is insignificant even in the treatment where potentially it should have mattered the most, which undermines its relevance. Furthermore, subjects could make use of a calculator. Specifically, a ‘calculate consequences’ button gave subjects ready information about the payoffs arising from their guess, depending on which urn was drawn.
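The quadratic scoring rule described above can be sketched in a few lines of Python. The function names and the grid search are illustrative assumptions and are not part of the experimental software; the stake of 18 GBP and the 0.6 prior are taken from the text.

```python
def qsr_payoff(guess, urn1_chosen, stake=18.0):
    """Payoff in GBP for a probability guess expressed as a proportion in [0, 1]."""
    if not 0.0 <= guess <= 1.0:
        raise ValueError("guess must be a proportion between 0 and 1")
    # The 'correct probability' is 1 if Urn 1 was chosen and 0 otherwise.
    correct = 1.0 if urn1_chosen else 0.0
    return stake - stake * (guess - correct) ** 2


def expected_payoff(guess, belief, stake=18.0):
    """Expected payoff for a subject who believes Urn 1 has probability `belief`."""
    return (belief * qsr_payoff(guess, True, stake)
            + (1.0 - belief) * qsr_payoff(guess, False, stake))


if __name__ == "__main__":
    # A risk-neutral subject holding the 0.6 prior: search a grid of guesses.
    grid = [i / 100 for i in range(101)]
    best = max(grid, key=lambda g: expected_payoff(g, 0.6))
    print(best)
```

For a risk-neutral subject the expected payoff is maximized by reporting the belief itself, which is why deviations from truthful reporting are attributed to risk attitude in the next part.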

2.2 Risk attitude part of the experiment

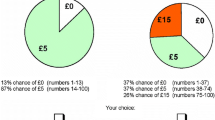

The risk attitude part was similar to the main part but simpler and therefore genuinely useful as practice. It was modelled after Offerman et al. (2009) to enable us to infer people’s risk attitude, as detailed in Sect. 3.

It consisted of 10 stages with one round each. In each stage a new urn was drawn (with probabilities 0.05, 0.1, 0.15, 0.2, 0.25, 0.75, 0.8, 0.85, 0.9, 0.95).Footnote 6 Subjects were told the prior probability of Urn 1 being chosen but did not receive any further information. In particular, no balls were drawn. The guessing task (single round) was to nominate a probability that Urn 1 was chosen, where subjects could rely on the information available to them and the payment mechanism to identify which probability guess would maximize their expected utility. For subjects who are not risk neutral, the task is non-trivial as they should take account of the payoff structure rather than repeat the announced probabilities. Payment for the risk attitude part of the experiment was based on the guess made in a randomly chosen round picked at the end of the experiment. A quadratic scoring rule was applied as in the main part, but this time the payoff was equal to 3 GBP minus 3 GBP \(\times\) \(\left( guess\ -\ correct\ probability \right) ^{2}\).Footnote 7 Again, it was clarified to subjects that the correct probability was 0 or 1 depending on the urn being chosen, and again a table with payoffs for each level of accuracy of the guesses was provided in print (see online appendix 3) and a calculator was also available.

2.3 Experimental treatments

There were three treatments. The risk attitude parts were identical across all treatments, and the main part of the Baseline treatment (B) was as described.

In the main part (only) of the Complexity treatment (C), the information on the ball drawn from the chosen urn at the beginning of each round was presented as a statement about the sum of three numbers (of three digits each), which was either true or false. If true (e.g., \(731+443+927=2101\)), this meant that a white ball was drawn. If false (e.g., \(731+443+927=2121\)), this meant that an orange ball was drawn.

In the main part (only) of the Inattention treatment (I), subjects were given a non-incentivized alternative counting task which they could do instead of working on the probability. The counting task was a standard one from the real effort experimental literature (see Abeler et al. 2011, for an example) and consisted in counting the number of 1s in matrices of 0s and 1s. Subjects were told that they could do this exercise for as little or as long as they liked within 60 s for each round, and that we were not asking them in any way to engage in this exercise at all unless they wanted to.Footnote 8

As in Caplin et al. (2020) and the key treatments in Sitzia et al. (2015), we see it as important not to incentivize the alternative task. If the alternative task were incentivized (financially or in terms of doing something fun such as browsing the internet), it would be rational for an agent to split his or her time allocation between tasks, which would trivially imply worse decision making in the guessing task. This would make it difficult to precisely identify what is due to inattention as a psychological mechanism, and could be construed to simply reflect the fact that agents are bad at multitasking (e.g., Buser and Peter 2012). In our setting, instead, agents should just focus on a single task and not be distracted by the alternative task.Footnote 9 Undoubtedly, future research could change the incentives associated with the alternative task.

3 Theoretical model

3.1 Model variables and risk attitude correction

In this section, we build a model of subject play in our experiment where the key driver is the way subjects form beliefs. Table 2 lays out the main variables from the experiment and the interrelationships between them, when the event is described in terms of the chosen urn (row 1) and when it is described in terms of the probability of a white ball being drawn (row 2). The two descriptions are equivalent since the subject’s subjective guess of the probability that Urn 1 was chosen generates an implied subjective probability that a white ball is drawn.Footnote 10 In our modelling of this experimental environment, we sometimes use the former probability—that Urn 1 was chosen—and it will be useful to transform this probability guess using the inverse cumulative Normal distribution (so that the support has the same dimensionality as a classic z-statistic). Alternatively, we sometimes describe agents’ guesses in terms of the probability that a white ball is drawn because the sample proportion from repeated Bernoulli trials has a tractable sampling distribution for hypothesis testing.

Time is measured by t, the draw (round) number for the ball draws in each stage. We define the value of t for which subjects last moved their guess (viz. updated their beliefs) to be m (for ‘last move’). Thus, for any sequence of ball draws at time t, the time that has elapsed since the last change in the guess is always \(t-m\).

Along the top row the theoretical estimator for the probability that Urn 1 was drawn is provided by Bayes rule, which we denote by \(P_{t}\) after t ball draws. Many subjects do not use Bayes rule when they are guessing the probability that Urn 1 is chosen, though some guesses are closer to it than others.

As derived in Offerman et al. (2009), the elicited guess \(g_{t}\) in the fourth column is the result of maximizing expected utility based on a Constant Relative Risk Aversion (CRRA) utility function, \(U\{\)Payoff\(\}\):

and a true guess \(g_{t}^{*}\) in \(E\left[ U\{{\text {Payoff}}\}\right] =g_{t}^{*}U\{1-\left( 1-g_{t}\right) ^{2}\}+(1-g_{t}^{*})U\{1-g_{t}^{2}\}\) where the payoffs for Urn 1 and Urn 2 are proportional to \(1-\left( 1-g_{t}\right) ^{2}\) and \(1-g_{t}^{2}\), according to the quadratic scoring rule, as explained in Sect. 2.Footnote 11 Expected utility is assumed to be maximized with respect to \(g_{t}\) and yields the following relationship between \(g_{t}^{*}\) and \(g_{t}\):
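As a hedged illustration of how the first-order condition produces a relationship of this kind, suppose the CRRA function takes the form \(U\{x\}=x^{1-\theta }/(1-\theta )\). This parameterization is an assumption made here for the sake of the example, and the way \(\theta\) enters may differ from the specification in Offerman et al. (2009). Differentiating expected utility with respect to \(g_{t}\) and setting the derivative to zero gives

\[
g_{t}^{*}\,U'\{1-\left( 1-g_{t}\right) ^{2}\}\,2\left( 1-g_{t}\right) =(1-g_{t}^{*})\,U'\{1-g_{t}^{2}\}\,2g_{t},
\]

and, since \(U'\{x\}=x^{-\theta }\) under the assumed form, taking logs yields

\[
\ln \frac{g_{t}^{*}}{1-g_{t}^{*}}=\ln \frac{g_{t}}{1-g_{t}}+\theta \ln \frac{1-\left( 1-g_{t}\right) ^{2}}{1-g_{t}^{2}}.
\]

Under this sketch a risk-neutral subject (\(\theta =0\)) reports \(g_{t}=g_{t}^{*}\), while a risk-averse subject (\(\theta >0\)) reports a guess closer to one half than the true guess.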

In the risk attitude part, the prior probabilities given to the subjects (by way of reminder, 0.05, 0.1, 0.15, 0.2, 0.25, 0.75, 0.8, 0.85, 0.9, 0.95 for 10 separate stages/rounds) are in fact the correct probabilities \(P_{t}\). We see no reason not to credit subjects with realizing this, and they possess no other information anyway, so we define their true guess to be \(g_{t}^{*}=P_{t}\). Offerman et al. (2009) then interpret the deviations of \(g_{t}\) from \(g_{t}^{*}\) as being due to the subjects’ risk preferences, and so do we. We use the ten datapoints \(\left( g_{t}^{*},g_{t}\right)\) for each subject to estimate \(\theta\) in a version of (1) appended with a regression error.Footnote 12 Armed with a subject-specific value of \(\theta\) from the risk attitude part, all the observable \(g_{t}\) values in the main experiment can be transformed to a set of inferred \(g_{t}^{*}\). This transformation is accomplished by exponentiating both sides of (1), and solving for \(g_{t}^{*}\). By taking the inverse cumulative Normal function, \(\Phi ^{-1}\), of \(g_{t}^{*}\) we move it outside the [0, 1] interval and give it the same dimensionality as a z-test statistic, namely \((-\infty ,\infty )\). The variable in the penultimate column, \(r_{t}^{*}=\Phi ^{-1}\left( g_{t}^{*}\right)\), thus becomes the basis for our econometric analysis.Footnote 13
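The two transformations described above, from the observed guess to the inferred true guess and then to the real line, can be sketched as follows. The log-odds relationship used in `true_guess` follows from an assumed CRRA form \(U\{x\}=x^{1-\theta }/(1-\theta )\); it is a stand-in for relationship (1) and may differ from it in how \(\theta\) enters. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def true_guess(g, theta):
    """Recover the inferred true guess g* from an observed guess g.

    Assumes (for illustration only) the relationship
    ln(g*/(1-g*)) = ln(g/(1-g)) + theta * ln(A/B),
    with A = 1-(1-g)^2 and B = 1-g^2 the payoff shares under Urn 1 and Urn 2.
    """
    if not 0.0 < g < 1.0:
        raise ValueError("g must lie strictly between 0 and 1")
    a = 1.0 - (1.0 - g) ** 2
    b = 1.0 - g ** 2
    log_odds = math.log(g / (1.0 - g)) + theta * math.log(a / b)
    # Exponentiate and solve for g*, as described in the text.
    return 1.0 / (1.0 + math.exp(-log_odds))


def r_star(g_true):
    """Map a probability in (0, 1) to the real line via the inverse Normal CDF."""
    return NormalDist().inv_cdf(g_true)


if __name__ == "__main__":
    g_star = true_guess(0.7, theta=0.5)
    print(g_star, r_star(g_star))
```

With \(\theta =0\) the observed and true guesses coincide, and a guess of one half maps to \(r_{t}^{*}=0\).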

We will explain the last column of Table 2 and why it is useful later.

3.2 Expectation processes

Using the notation of Table 2, we define three processes of expectation formation that will be relevant for our double hurdle model in Sect. 4.

3.2.1 Rational expectations

The rational expectations solution predicts straightforward Bayesian updating. The (conditional) probability the subject is being asked to guess is the rational expectation (RE), which is given by \(P_{t}\). Calling \(P_{initial}\) the initial prior probability and noting that the number of white balls is \(tP_{t}^{w}\) we can write down \(P_{t}\) in a number of ways:

The second line is a useful simplification (which we use in “Appendix 1: Closeness of two strength-of-evidence measures”) whereas the bracketed fraction in the first line is the probability of obtaining the \(tP_{t}^{w}\) white balls when Urn 1 is drawn versus the total probability of obtaining this number of white balls. We called this the likelihood ratio in Table 1.
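The Bayesian benchmark for this environment can be computed directly from the urn compositions and prior given in Sect. 2.1. The sketch below is illustrative; the function name and argument names are assumptions rather than the authors' notation.

```python
def bayes_posterior(n_white, n_draws, prior=0.6,
                    p_white_urn1=0.7, p_white_urn2=0.3):
    """Posterior probability of Urn 1 after n_white white balls in n_draws draws."""
    if not 0 <= n_white <= n_draws:
        raise ValueError("n_white must be between 0 and n_draws")
    n_orange = n_draws - n_white
    # Likelihood of the observed colors under each urn (draws with replacement).
    # The binomial coefficient is common to both urns and cancels in the ratio.
    like_urn1 = p_white_urn1 ** n_white * (1 - p_white_urn1) ** n_orange
    like_urn2 = p_white_urn2 ** n_white * (1 - p_white_urn2) ** n_orange
    total = prior * like_urn1 + (1 - prior) * like_urn2
    return prior * like_urn1 / total


if __name__ == "__main__":
    print(bayes_posterior(1, 1))  # one white ball
    print(bayes_posterior(0, 1))  # one orange ball
```

Because the two urns are mirror images of each other, the posterior in this sketch depends only on the difference between the number of white and orange balls drawn; for example, one white and one orange ball return the posterior to the prior of 0.6.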

3.2.2 Quasi-Bayesian updating

In our version of Quasi-Bayesian updating (QB), agents use Bayesian updating as each new draw is received, but they incorrectly weight the likelihood ratio:

The parameter \(\beta\) may be thought of as the QB parameter: if \(\beta =1\), agents are straightforward Bayesians; if \(\beta >1\) they overuse information and under-weight priors; if \(0\le \beta <1\) they underuse information and over-weight priors and if \(\beta <0\) they respond the wrong way to information—raising the conditional probability when they should be lowering it, and vice versa.
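The role of \(\beta\) can be illustrated numerically. The sketch below uses an odds-form variant of Quasi-Bayesian updating, in which the ratio of the two urns' likelihoods is raised to the power \(\beta\) before multiplying the prior odds. This variant is chosen here because it keeps the result inside [0, 1] for any \(\beta\); it is an assumption for illustration and need not coincide with the exact functional form of (3).

```python
def qb_posterior(n_white, n_draws, beta, prior=0.6,
                 p_white_urn1=0.7, p_white_urn2=0.3):
    """Quasi-Bayesian probability of Urn 1 (illustrative odds-form variant)."""
    if not 0 <= n_white <= n_draws:
        raise ValueError("n_white must be between 0 and n_draws")
    n_orange = n_draws - n_white
    # Ratio of the likelihood of the draws under Urn 1 to that under Urn 2.
    lr = ((p_white_urn1 / p_white_urn2) ** n_white
          * ((1 - p_white_urn1) / (1 - p_white_urn2)) ** n_orange)
    # beta = 1 reproduces Bayes rule; beta = 0 leaves the prior unchanged.
    odds = prior / (1 - prior) * lr ** beta
    return odds / (1 + odds)


if __name__ == "__main__":
    for beta in (-0.5, 0.0, 0.5, 1.0, 1.5):
        print(beta, qb_posterior(1, 1, beta))
```

After a single white ball, the guess in this sketch stays at the prior for \(\beta =0\), moves part of the way to the Bayesian posterior for \(0<\beta <1\), overshoots it for \(\beta >1\), and falls below the prior for \(\beta <0\).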

Agents’ attitude towards the extent of belief change in the light of evidence can be summarized by the distribution \(f(\beta )\) across subjects. If \(f(\beta )\) has most probability mass between 0 and 1, most agents only partially adjust, and subjects converge to full adjustment at \(\beta =1\) to the extent that the probability mass in \(f(\beta )\) converges towards unity.

3.2.3 Inferential expectations

Cohen et al. (2019) show that models with cost-based state-dependent sticky belief adjustment are equivalent to an inferential expectations (IE) model, where agents’ hypothesis testing generates infrequent belief adjustment (Menzies and Zizzo 2009).Footnote 14 We show this in our specific context in “Appendix 2: Relationship between inferential expectations and switching cost models”. We therefore are in a position to model the degree of state-dependent belief stickiness by the test size, where a low test size implies a higher cost of changing beliefs and therefore relatively infrequent adjustment.

We assume subjects hold a belief until enough evidence has accumulated to pass a threshold of statistical significance, at which point beliefs are updated. Agents form a belief and do not depart from that belief until the weight of evidence against the belief is sufficiently strong. Under IE, each agent is assumed to start with a belief about the probability of U (that is, \(P_{0}=0.6\)) and its implied probability of a white ball (\(P_{0}^{w}=0.54\)), and conducts a hypothesis test that the latter is true after drawing a test size from his or her own distribution of \(\alpha\), namely \(f_{i}\left( \alpha \right)\). Agents are assumed to draw this every round during the experiment.
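The implied probability of a white ball follows from the law of total probability over the two urns, using the urn compositions given in Sect. 2.1 (seven white balls out of ten in Urn 1, three out of ten in Urn 2):

\[
P_{0}^{w}=0.7\,P_{0}+0.3\left( 1-P_{0}\right) =0.7\times 0.6+0.3\times 0.4=0.54.
\]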

In the first row of the final column of Table 2, we provide a measure \(z_{t}\) of the strength of evidence against the probability guess at the time of the last change. Agents change their guesses from time to time, and \(z_{t}\) tells us if the value of P at the last change, denoted \(P_{m}\), seems mistaken in the light of subsequent evidence.

Importantly, as shown in “Appendix 1: Closeness of two strength-of-evidence measures”, the top-row \(z_{t}\) is approximately equal to the standard test statistic for a proportion, shown in the second row of the final column of Table 2, using the maximal value of the variance of the sampling distribution (namely \(( \frac{1}{2})^{2}\)):

Thus, the p value for IE can be derived from (4) as the test statistic. We assume for simplicity that \(z_{t}\) is distributed as a standard Normal.

Later in the paper we derive the full distribution of \(\alpha\) so as to let the data adjudicate each agent’s attitude towards evidence. If \(f_{i}\left( \alpha \right)\) has most probability mass near zero, agent i exhibits sticky belief adjustment. Probability mass in \(f_{i}\left( \alpha \right)\) near unity implies a willingness to update for any evidence. In the limit, as \(\alpha\) approaches unity, agents will update regardless of evidence (or, more precisely, even for zero evidence against the null). This is equivalent to (stochastic) time dependent updating. That is, if the probability mass at unity in \(f_{i}\left( \alpha \right)\) is, say, 0.3, it implies that there is a thirty per cent chance that agent i will update regardless of what the evidence says. More formally, the decision rule in a hypothesis test is to reject \(H_{0}\), the status quo, if the p value \(\le \alpha\). A value for \(\alpha\) of unity implies the status quo will be rejected, which is the same as updating in this context, for any p value whatsoever.
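The decision rule can be sketched as a one-sample test on a proportion. The sketch makes several assumptions that should be read as illustrative only: the test is two-sided, the sample proportion is computed over the draws observed since the last update, the null value is the implied probability of a white ball at that update, and the variance is fixed at its maximal value of 1/4. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def proportion_z(n_white, n_draws, p_null):
    """z-statistic for a sample proportion, using the maximal variance 1/4."""
    if n_draws <= 0:
        raise ValueError("n_draws must be positive")
    p_hat = n_white / n_draws
    return (p_hat - p_null) / math.sqrt(0.25 / n_draws)


def ie_update(n_white, n_draws, p_null, alpha):
    """Return True if H0 (the status quo belief) is rejected, i.e. beliefs update."""
    z = proportion_z(n_white, n_draws, p_null)
    # Two-sided p value under the standard Normal (an assumption of this sketch).
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return p_value <= alpha


if __name__ == "__main__":
    print(ie_update(8, 8, 0.54, alpha=0.05))  # strong evidence against the null
    print(ie_update(5, 8, 0.54, alpha=0.05))  # weak evidence against the null
    print(ie_update(5, 8, 0.54, alpha=1.0))   # test size of unity
```

With a test size of unity the rule rejects for any p value, including the case of zero evidence against the null, which corresponds to the time-dependent updating described above.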

3.2.4 Relationship between expectations benchmarks

When agent i rejects \(H_{0}\) within the IE framework we assume she updates her probability guess using Quasi-Bayesian updating.

Since each agent has a full distribution of \(\alpha\), namely \(f_{i}\left( \alpha \right)\), we need a representative \(\alpha _{i}\) to summarize the extent of sticky belief adjustment for agent i and to relate to her \(\beta _{i}\). There are a number of possibilities, but a natural choice which permits analytic solutions is the median \(\alpha _{i}\) from her \(f_{i}\left( \alpha \right)\). For the purposes of our empirical analysis a fully rational (Bayesian) agent is one who has (median) \(\alpha _{i}=\beta _{i}=1\), whereas any other sort of agent does not have RE.

We now parameterize all three expectation processes in a double hurdle model. We find evidence for all of them in our data, and importantly we find that the IE representation of \(f_{i}\left( \alpha \right)\) has non-zero measure at unity. As discussed above, this is the fraction of agents who undertake random belief adjustment.

4 Data analysis and model estimation

4.1 Nonparametric analysis

The baseline and complex treatments each had 82 subjects, and the inattention treatment had 81 subjects. In this sub-section, we motivate our model with nonparametric analysis.

First, we consider the number of times our subjects executed a no-change, meaning a guessed probability equal to that of the previous period. This is interesting because, given the nature of the information and comparatively small number of draws, incidences of no-change are not predicted by either Bayesian or Quasi-Bayesian updating, and so, if such observations are widespread in the data, this is the first piece of nonparametric evidence that these standard models are incomplete.

The maximum of the number of no-changes for each subject is 49: seven opportunities for no change out of eight draws, times the seven stages. The distributions over subjects separately by treatment are shown in Fig. 1. The baseline distribution shows a concentration at low values; for both Complex and Inattention, there appears to be a shift in the distribution towards higher values, as one might expect. The means for each treatment are represented by the vertical lines on the right hand side. The vertical lines on the left hand side show the mean number of no-changes that would result from subjects rounding the Bayesian probabilities to two decimal places (or, equivalently, rounding percentages). Clearly, rounding cannot account for the prevalence of no-changes found in the empirical distribution.
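The rounding benchmark can be illustrated for a single stage. The sketch below counts no-changes in a sequence of eight guesses and applies the count to Bayesian posteriors rounded to two decimal places. The helper names and the example draw sequences are assumptions for illustration, not data from the experiment.

```python
def bayes_posterior(n_white, n_draws, prior=0.6, p1=0.7, p2=0.3):
    """Posterior probability of Urn 1 given the colors drawn so far."""
    n_orange = n_draws - n_white
    like1 = p1 ** n_white * (1 - p1) ** n_orange
    like2 = p2 ** n_white * (1 - p2) ** n_orange
    return prior * like1 / (prior * like1 + (1 - prior) * like2)


def count_no_changes(guesses):
    """Number of rounds whose guess equals the guess of the previous round."""
    return sum(1 for prev, cur in zip(guesses, guesses[1:]) if cur == prev)


def rounded_bayes_guesses(draws):
    """Rounded Bayesian guesses for a stage; draws is a string of 'W'/'O'."""
    guesses, n_white = [], 0
    for t, color in enumerate(draws, start=1):
        n_white += color == "W"
        guesses.append(round(bayes_posterior(n_white, t), 2))
    return guesses


if __name__ == "__main__":
    print(count_no_changes(rounded_bayes_guesses("WWWWWWWW")))
    print(count_no_changes(rounded_bayes_guesses("WOWOWOWO")))
```

In this sketch rounding generates no-changes only when the posterior is already very close to 0 or 1, as in a long run of balls of the same color; a mixed sequence of draws produces none.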

The mean number of no-changes is higher under the complex treatment C (22.79) than under the baseline B (18.74) (Mann–Whitney test gives \(p=0.007\)), and higher under the inattention treatment I (26.97) than under B (\(p<0.001\)).Footnote 15 This is as anticipated: complexity and inattention should both increase the tendency to leave guesses unchanged. When C and I are compared, the p value is 0.06, indicating mild evidence of a difference between the two treatments.
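For readers who wish to see the mechanics of such a comparison, a rank-based test of this kind can be sketched in plain Python as follows. This is only an illustration under stated assumptions: it uses the large-sample normal approximation without tie or continuity corrections, so it need not match the p values reported above, and the two samples are hypothetical.

```python
import math


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney test via the normal approximation.

    Returns (U, p), where U is the smaller of the two U statistics.
    Ties receive average ranks; the variance carries no tie correction,
    so p is approximate.
    """
    pooled = sorted((v, g) for g, sample in enumerate((x, y)) for v in sample)
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average of the 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    r1 = sum(r for r, (_, g) in zip(ranks, pooled) if g == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    z = (u - n1 * n2 / 2) / math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return u, p


# Hypothetical no-change counts for two small groups (not the experimental data).
baseline_counts = [12, 15, 18, 20, 22]
complex_counts = [19, 21, 24, 26, 30]
u_stat, p_val = mann_whitney_u(baseline_counts, complex_counts)
```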

The widespread incidence of no-changes in Fig. 1 makes clear that any successful model of our data must deal with whether subjects adjust before considering how much they adjust.

Second, when agents do change, it is of interest why they do. In Fig. 2 we plot the binary indicator for updating against the strength of evidence against the maintained beliefs \(\left| z_{it}\right|\) (the absolute change in the Bayesian posterior since the last time the subject updated; see the top row of Table 2). A Lowess smoother is superimposed, and this can be interpreted as the predicted probability of an update for a given value of \(\left| z_{it}\right|\). This provides good nonparametric evidence that higher values of \(\left| z_{it}\right|\) make change more likely. However, across subjects, agents who are more reluctant to change will exhibit both a relatively low probability of update and a higher value of \(\left| z_{it}\right|\). Hence there is an econometric concern that the relationship may be affected by endogeneity bias.Footnote 16

Fig. 2 Predicted probability of updating against strength of evidence (the latter measured as the absolute value of z, defined in Table 2). The dots represent individual decisions to update (1 = update; 0 = no update). The lines are Lowess smoothers, obtained using a tricube weighting function and bandwidth 0.8 (both Stata defaults). Left panel: smoother obtained for full sample. Right panel: smoother obtained separately by treatment
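The idea behind such a smoother can be sketched in a few lines of Python. This is a simplified illustration and not a reproduction of Stata's routine: it fits a tricube-weighted local line on the nearest 80% of points, omits robustness iterations, and uses hypothetical data.

```python
def tricube(u):
    """Tricube kernel weight for a scaled distance u; zero at or beyond 1."""
    return (1 - u ** 3) ** 3 if u < 1 else 0.0


def lowess(x, y, bandwidth=0.8):
    """Locally weighted linear fit at each x[i], using the nearest share of points."""
    n = len(x)
    k = min(n, max(2, round(bandwidth * n)))  # points per local window
    fitted = []
    for x0 in x:
        dist = [abs(xj - x0) for xj in x]
        h = sorted(dist)[k - 1] or 1e-12  # distance to the k-th nearest point
        w = [tricube(d / h) for d in dist]
        sw = sum(w)
        mx = sum(wj * xj for wj, xj in zip(w, x)) / sw
        my = sum(wj * yj for wj, yj in zip(w, y)) / sw
        sxx = sum(wj * (xj - mx) ** 2 for wj, xj in zip(w, x))
        sxy = sum(wj * (xj - mx) * (yj - my) for wj, xj, yj in zip(w, x, y))
        slope = sxy / sxx if sxx > 1e-12 else 0.0  # fall back to a local mean
        fitted.append(my + slope * (x0 - mx))
    return fitted


# Hypothetical evidence strengths and update indicators (1 = update, 0 = no update).
abs_z = [0.1, 0.3, 0.5, 0.8, 1.0, 1.3, 1.6, 2.0, 2.4, 3.0]
updated = [0, 0, 1, 0, 1, 0, 1, 1, 1, 1]
smoothed = lowess(abs_z, updated)
```

Each element of `smoothed` can be read as a local estimate of the update probability at the corresponding value of the evidence measure.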

Third, Fig. 3 shows the extent of any updating on receipt of a white ball and an orange ball, both as a raw change and as a proportion of the absolute change dictated by Bayes rule. The upper panels indicate that updates are often in step sizes of 0.1 or 0.05 and the 0.6 prior for \(P_t\) was not so asymmetric as to generate artefacts.

Fig. 3 Change in guess: raw, and as a proportion of a Bayesian benchmark. The top panels show the raw size of the updates on receiving a ball of each color. The bottom panels show the proportional size of the updates on receiving a ball of each color: this is defined as the actual update on receiving a ball as a proportion of the absolute correct Bayesian update (assuming the subject starts from the Bayesian prediction). A vertical line is drawn in correspondence to 0 (no update) and, for the bottom panels, in correspondence to the Bayesian proportional change in guess (so 1 on receiving a white ball and − 1 on receiving an orange ball). Data from all treatments are used

In the lower panels, the updates relative to the Bayesian benchmark cluster between zero and one (for a white ball) and minus one and zero (for an orange ball).Footnote 17 This shows that when agents adjust, they tend to do so in a reasonable direction for risk averse agents, raising their probability for Urn 1 when a white ball is drawn and lowering it when an orange ball is drawn.Footnote 18 Furthermore, the clustering indicates that any Quasi-Bayesian representation of their adjustment will require a \(\beta\) parameter less than unity, reflecting insufficient belief adjustment.

These nonparametric statistics are consistent with more than one theoretical approach, but it is not clear that just one approach will explain all the features of the data. With that in mind, we now turn to a model which allows the different approaches to co-exist.

4.2 A double hurdle model of belief adjustment

In this section, we develop a parametric double hurdle model which simultaneously considers the decision to update beliefs and the extent to which beliefs are changed when updates occur. The purpose of the model is to act as a testing tool for state-dependent belief adjustment, namely Bayesian belief adjustment and Quasi-Bayesian belief adjustment in the simple version previously defined, as well as (stochastic) time-dependent belief adjustment.

Our econometric task is to model the transformed implied belief \(r_{t}^{*}=\Phi ^{-1}\left( g_{t}^{*}\left( \theta _{i}\right) \right)\), which in turn requires an estimate for risk aversion. We estimate this at the individual level using the technique by Offerman et al. (2009). “Appendix 3: Method for estimating CRRA risk parameter” contains the subject-level details surrounding the estimation of \(\theta _{i}\). On average, agents are risk averse with a mean \(\theta\) of 0.2.Footnote 19

We will refer to \(r_{it}^{*}\) as subject i’s belief in period t, as shorthand for ‘transformed implied belief’. We will treat \(r_{it}^{*}\) as the focus of the analysis, because \(r_{it}^{*}\) has the same dimensionality as \(z_{it}\), the test statistic defined in (4). That is, both have support \((-\infty ,\infty )\). Sometimes \(r_{it}^{*}\) changes between \(t-1\) and t; other times, it remains the same. Let \(\Delta r_{it}^{*}\) be the change in belief of subject i between \(t-1\) and t. That is, \(\Delta r_{it}^{*}=r_{it}^{*} -r_{it-1}^{*}\).

In the following estimation we exploit the near equivalence between (4) and the scaled difference since the last update \(2(\Phi ^{-1}\left( P_{t}\right) -\Phi ^{-1}\left( P_{m}\right) )/\sqrt{t}\) (from Table 2). In round 1, \(P_{m}\) equals the prior 0.6 and the movement of the guess for a given subject is \(\Delta r_{i1}^{*}=r_{i1}^{*}-\Phi ^{-1}\left( 0.6\right)\). That is, both the objective measure of the information change and the subjective guess of the agent are assumed to anchor onto the prior probability that Urn 1 is chosen, 0.6, in the first period.
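As a worked illustration of the scaled difference, consider the following Python sketch. The posterior of 0.75 and the implied belief of 0.70 are hypothetical values chosen for the example; only the prior of 0.6 and the formula come from the text.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()


def probit(p):
    """Inverse standard Normal cdf."""
    return _STD_NORMAL.inv_cdf(p)


def scaled_difference(p_t, p_m, t):
    """2 * (probit(P_t) - probit(P_m)) / sqrt(t), the evidence since the last update."""
    return 2 * (probit(p_t) - probit(p_m)) / t ** 0.5


PRIOR = 0.6
# Round 1: P_m is the prior; 0.75 is a hypothetical Bayesian posterior.
z_round1 = scaled_difference(0.75, PRIOR, t=1)
# First-round movement of a subject whose (hypothetical) implied belief is 0.70.
first_move = probit(0.70) - probit(PRIOR)
```

Under these assumed inputs the scaled difference is roughly 0.84, and it is zero whenever the posterior equals the belief held at the last update.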

4.2.1 First hurdle

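The probability that a belief is updated (in either direction) in period t is given by:

\[P\left( \Delta r_{it}^{*}\ne 0\right) =\Phi \left[ \delta _{i}+x_{i}^{\prime }\Psi _{1}+\gamma \left| z_{it}\right| \right] \qquad (5)\]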

where \(\Phi \left[ \cdot \right]\) is the standard Normal cdf and \(\delta _{i}\) represents subject i’s idiosyncratic propensity to update beliefs, and therefore models random probabilistic belief adjustment (time-dependent belief adjustment). The probability of an update is assumed to depend (positively) on the absolute value of \(z_{it}\), the test statistic. The vector \(x_{i}\) contains treatment and gender dummy variables together with an age variable and a score on two questions from the comprehension questionnaire administered after the main part of the experiment was explained,Footnote 20 all of which are time invariant and can be expected to affect the propensity to update.
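The first hurdle can be illustrated numerically with a short Python sketch. It assumes that the probit index is additive in \(\delta _{i}\), \(\gamma \left| z_{it}\right|\) and the covariate terms; the function name and the coefficient vector name `psi_1` are illustrative. The inputs 0.061 and 0.6 are the intercept and the rounded value of \(\gamma\) quoted elsewhere in this section.

```python
from statistics import NormalDist


def update_probability(delta_i, gamma, abs_z, x_i=(), psi_1=()):
    """Probit probability of an update: Phi(delta_i + gamma*|z| + x_i . psi_1)."""
    index = delta_i + gamma * abs_z + sum(x * p for x, p in zip(x_i, psi_1))
    return NormalDist().cdf(index)


# No accumulated evidence: only the idiosyncratic propensity matters.
p_no_evidence = update_probability(delta_i=0.061, gamma=0.6, abs_z=0.0)
# One unit of accumulated evidence raises the probability of an update.
p_some_evidence = update_probability(delta_i=0.061, gamma=0.6, abs_z=1.0)
```

With no evidence the sketch returns approximately 0.524, and the probability rises with \(\left| z_{it}\right|\) whenever \(\gamma\) is positive.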

One econometric issue flagged in the last sub-section is the endogeneity of the variable \(\left| z_{it}\right|\): subjects who are averse to updating tend to generate large values of \(\left| z_{it}\right|\) while subjects who update regularly do not allow it to grow beyond small values. This could create a downward bias in the estimate of the parameter \(\gamma\) in the first hurdle. To deal with this concern we use an instrumental variables (IV) estimator which uses the variable \(\widehat{\left| z_{it}\right| }\) in place of \(\left| z_{it}\right|\), where \(\widehat{\left| z_{it}\right| }\) comprises the fitted values from a regression of \(\left| z_{it}\right|\) on a set of suitable instruments.Footnote 21

4.2.2 Second hurdle

Conditional on subject i choosing to update beliefs in draw t, the next question relates to how much they do so. This is given by:

As a reminder, the Quasi-Bayesian belief adjustment parameter \(\beta _{i}\) represents subject i’s idiosyncratic responsiveness to the accumulation of new information: if \(\beta _{i}=1\), subject i responds fully; if \(\beta _{i}=0\), subject i does not respond at all. Remember that \(\beta _{i}\) is not constrained to [0, 1]. In particular, a value of \(\beta _{i}\) greater than one would indicate the plausible phenomenon of overreaction. Again, treatment variables are included: the elements of the vector \(\Psi _{2}\) tell us how responsiveness differs by treatment.

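Considering the complete model, there are two idiosyncratic parameters, \(\delta _{i}\) and \(\beta _{i}\). These are assumed to be distributed over the population of subjects as follows:

\[\left( \begin{array}{c} \delta _{i} \\ \beta _{i} \end{array}\right) \sim N\left[ \left( \begin{array}{c} \mu _{1} \\ \mu _{2} \end{array}\right) ,\left( \begin{array}{cc} \eta _{1}^{2} &amp; \rho \eta _{1}\eta _{2} \\ \rho \eta _{1}\eta _{2} &amp; \eta _{2}^{2} \end{array}\right) \right] \qquad (7)\]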

In total, there are seventeen parameters to estimate: \(\mu _{1}\), \(\eta _{1}\), \(\mu _{2}\), \(\eta _{2}\), \(\rho\), \(\gamma\), \(\sigma\), four treatment effects (two in each hurdle); two gender effects (one in each hurdle); two scores from the comprehension questionnaire (one in each hurdle); and two age effects (one in each hurdle). Estimation is performed using the method of maximum simulated likelihood (MSL), with a set of Halton draws representing each of the two idiosyncratic parameters appearing in (7). Following estimation of the model, Bayes rule is used to obtain posterior estimates (denoted \(\hat{\delta _i}\) and \(\hat{\beta _i}\)) of the idiosyncratic parameters for each subject.Footnote 22
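The role of the Halton draws can be sketched as follows. The sketch assumes that \(\delta _{i}\) and \(\beta _{i}\) are jointly Normal with means \(\mu _{1}\), \(\mu _{2}\), standard deviations \(\eta _{1}\), \(\eta _{2}\) and correlation \(\rho\); the values used for \(\eta _{1}\), \(\eta _{2}\) and \(\rho\) are hypothetical, and the function names are illustrative. It shows only how quasi-random draws of the two parameters might be generated, not the likelihood itself.

```python
from statistics import NormalDist


def halton(index, base):
    """Element number `index` (1-based) of the Halton sequence in a prime base."""
    result, f = 0.0, 1.0
    while index > 0:
        f /= base
        result += f * (index % base)
        index //= base
    return result


def draw_parameters(n_draws, mu1, eta1, mu2, eta2, rho):
    """Map Halton points to correlated Normal draws of (delta, beta)."""
    inv = NormalDist().inv_cdf
    draws = []
    for k in range(1, n_draws + 1):
        u1, u2 = inv(halton(k, 2)), inv(halton(k, 3))
        delta = mu1 + eta1 * u1
        beta = mu2 + eta2 * (rho * u1 + (1 - rho ** 2) ** 0.5 * u2)
        draws.append((delta, beta))
    return draws


# mu1 and mu2 are the intercepts quoted in the text; eta1, eta2, rho are hypothetical.
draws = draw_parameters(50, mu1=0.061, eta1=1.0, mu2=0.819, eta2=0.5, rho=-0.3)
```

In a simulated likelihood, each subject's contribution would be averaged over such draws before taking logs.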

The results are presented in Table 3 for four different models. The last column shows the preferred model. Model 1 estimates the QB benchmark, in which it is assumed that the first hurdle is crossed for every observation—that is, updates always occur. Zero updates are treated as zero realizations of the update variable in the second hurdle, and their likelihood contribution is a density instead of a probability. Because of this difference in the way the likelihood function is computed, the log-likelihoods and AICs cannot be used to compare the performance of QB to that of the other models.

Model 2 estimates the IE benchmark, in which the update parameter (\(\beta _{i}\)) is fixed at 1 for all subjects. Consequently the extra residual variation in updates is reflected in the higher estimate of \(\sigma\). The parameters in the first hurdle are free.

Model 3 combines IE and QB, but constrains the correlation (\(\rho\)) between \(\delta\) and \(\beta\) to be zero. Model 4 is the same model with \(\rho\) unconstrained.

The overall performance of a model is judged initially using the AIC; the preferred model being the one with the lowest AIC. Using this criterion, the best model is the most general model 4 (model 1 not being subject to the AIC criterion): IE-QB with \(\rho\) unrestricted, whose results are presented in the final column of Table 3.

To confirm the superiority of the general model over the restricted models, we conduct Wald tests of the restrictions implied by the three less general models. We see that, in all three cases, the implied restrictions are rejected, implying that the general model is superior. Note in particular that this establishes the superiority of the general model 4 (IE-QB with \(\rho\) unrestricted) over the QB model 1 (a comparison that was not possible on the basis of AIC).

Further confirmation is furnished by measuring the sample predictive accuracy on a subsample of data (the ‘cross validation’ approach). ROC (Receiver Operating Characteristic) is the model’s out of sample predictive accuracy for hurdle 1 (the frequency of updates) and \(R^{2}\) (out of sample) is the model’s predictive accuracy for hurdle 2 (the extent of updates).Footnote 23 Model 4 is no worse than model 3 on an ROC criterion, but predicts the extent of adjustment better. Model 1 is best of all at predicting the extent of adjustment, but it fails to predict ‘no-change behavior’, by construction. Thus, on both in and out of sample criteria, model 4 is best overall.Footnote 24
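The two out-of-sample criteria can be illustrated with a minimal Python sketch. It assumes that the ROC criterion is summarized by the area under the ROC curve and that \(R^{2}\) is one minus the ratio of residual to total sum of squares on the held-out observations; the data are hypothetical.

```python
def auc(labels, scores):
    """Area under the ROC curve: share of (update, no-update) pairs ranked correctly."""
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def r_squared(actual, predicted):
    """One minus residual over total sum of squares."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


# Hypothetical held-out data: update indicators with predicted probabilities,
# and realized updates with predicted updates.
held_out_auc = auc([1, 0, 1, 0, 1], [0.8, 0.3, 0.6, 0.7, 0.9])
held_out_r2 = r_squared([0.2, -0.1, 0.4, 0.3], [0.15, 0.0, 0.35, 0.25])
```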

We interpret the results from model 4 as follows. Consider the first hurdle (propensity to update). The intercept parameter in the first hurdle (\(\mu _{1}\)) tells us that a typical subject has a predicted probability of \(\Phi \left( 0.061\right) =0.524\) of updating in any task, in the absence of any evidence (i.e. when \(\left| z_{it}\right| =0\)). We note that this estimate of \(\mu _{1}\) is not significantly different from zero, which would imply a 50% probability of updating. The Inattention treatment effect is significant and negative, suggesting that the probability of update is lower when subjects are not paying attention. The Complexity treatment effect is also significant and negative, though smaller in magnitude. The effect of the questionnaire score is negative and significant, though it is not large. The negative coefficient is consistent with Cohen et al.’s (2019) model if subjects have cognitive costs. This gives us our first result:

Result 1

There is evidence of time-dependent (random) belief adjustment. Subjects update their beliefs idiosyncratically around half the time.

The large estimate of \(\eta _{1}\) tells us that there is considerable heterogeneity in the propensity to update (see Fig. 4), something we will explore further in Sect. 4.3. The parameter \(\gamma\) is estimated to be significantly positive, and this tells us, as expected, that the more cumulative evidence there is, in either direction, the greater the probability of an update:

Result 2

There is evidence of state-dependent belief adjustment. Subjects are more likely to adjust if there is more evidence to suggest that an update is appropriate (thus making it costlier not to update).

In the second hurdle, the intercept (\(\mu _{2}\)) is estimated to be 0.819 in our preferred model 4: when a typical (baseline) subject does update, she updates by a proportion 0.819 of the difference from the Bayes probability. The large estimate of \(\eta _{2}\) tells us that there is considerable heterogeneity in this proportion also (see Fig. 4). Interestingly, on the basis of the posterior estimates from model 4, only 13 out of 245 subjects appear to have \(\beta <0\), which indicates noise or confused subjects who adjusted in the wrong direction. Moreover, 87 out of 245 subjects (around one third) display overreaction to the evidence. We summarize this in the following result:

Result 3

There is evidence of Quasi-Bayesian partial belief adjustment. On average, subjects who adjust do so by around 80%. There is evidence of prior information under-weighting: around one third of the subjects overreact to evidence once they decide to adjust.

The estimate of \(\rho\) is negative, indicating that subjects who have a higher propensity to update tend to update by a lower proportion of the difference from the Bayes probability. Inattention is important for both hurdles:

Result 4

Inattention lowers the probability of updating from 50 to 40% and lowers the extent of update from 80 to 56% of the amount prescribed by Bayes rule. Complexity also lowers the probability of update in the first hurdle.

4.3 The empirical distribution of \(\alpha\) and \(\beta\)

To get a better sense of the population heterogeneity in belief adjustment, this subsection maps out the empirical distribution of the IE \(\alpha _{i}\) and QB \(\beta _{i}\) parameters across subjects against each other. The estimated distribution \(f\left( \beta \right)\) can be seen from the distribution of the posterior estimates \(\hat{\beta _i}\) from Model 4, and this distribution is the marginal distribution of the extent of update on the vertical axis on the bottom-right panel of Fig. 4. We next use the first hurdle information to generate \(f_{i}\left( \alpha \right)\), the empirical distribution of \(\alpha _{i}\).

As we flagged earlier, each agent has a full distribution of \(\alpha\) and so we need a representative \(\alpha _{i}\) to summarize the extent of sticky belief adjustment for agent i, to then relate to their \(\beta _{i}\). As will be clear below, the choice that permits analytic solutions is the median \(\alpha _{i}\) from \(f_{i}\left( \alpha \right)\).

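The econometric equation for the first hurdle is equivalent to the probability of rejecting the null under IE. We omit the dummy variables and begin by re-writing the first hurdle, namely (5):

\[P\left( \Delta r_{it}^{*}\ne 0\right) =\Phi \left( \delta _{i}+\gamma \left| z_{it}\right| \right) \qquad (8)\]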

where \(\delta _{i}\) and \(\gamma\) are estimated parameters and \(\left| z_{it}\right|\) is the test statistic based on the proportion of white balls:

For any \(\left| z_{it}\right|\) it is possible to work out an implied p value and we do so by assuming that (9) is approximately distributed N(0, 1). This in turn allows us to work out \(f_{i}\left( \alpha \right)\) from the econometric equation for the first hurdle. When \(\left| z_{it}\right| =0\), the p value for a hypothesis test is unity, and so the equation says that a fraction of agents will reject \(H_{0}\) if the p value is unity. Since the criterion for rejecting \(H_{0}\) in a hypothesis test is always \(\alpha \ge p\)-value, the observed behavior of rejecting \(H_0\) when \(\left| z_{it}\right| =0\) implies that there must be a non-zero probability mass on \(f_{i}\left( \alpha \right)\) at the value of \(\alpha\) exactly equal to 1. The pdf of \(\alpha _{i}\) will thus have a discrete ‘spike’ at unity and be continuous elsewhere. We know what that spike is from Eq. (8) with \(\left| z_{it}\right| =0\) substituted in, namely \(\Phi \left( \delta _{i}\right)\).

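The probability of rejecting \(H_{0}\) depends on the probability that the test size is greater than the p value, but this is also equal to the econometric equation for the first hurdle.

\[\Phi \left( \delta _{i}+\gamma \left| z_{it}\right| \right) =P\left( \alpha _{i}\ge p{\text {-value}}_{it}\right) =\int _{p{\text {-value}}_{it}}^{1}f_{i}\left( \alpha \right) d\alpha =F_{i}\left( 1\right) -F_{i}\left( p{\text {-value}}_{it}\right) \qquad (10)\]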

Upper case F in the last equality is the anti-derivative of the density. We define \(F_{i}\left( 1\right)\) to be unity since 1 is the upper end of the support of \(\alpha\) but we also note that there is a discontinuity such that F jumps from \(1-\Phi \left( \delta _{i}\right)\) to 1 at \(\alpha =1\), as a consequence of the non-zero probability mass on \(f_{i}\left( \alpha \right)\) at unity. To solve the equation we use an expression for the p value of \(\left| z_{it}\right|\) on a two-sided Normal test.

\[p{\text {-value}}_{it}=2\left[ 1-\Phi \left( \left| z_{it}\right| \right) \right] \qquad (11)\]

We use a ‘single parameter’ approximation to the cumulative Normal (see Bowling et al. 2009). For our purposes \(\sqrt{3}\) is sufficient for the single parameter.

\[\Phi \left( z\right) \approx \frac{1}{1+\exp \left( -\sqrt{3}\,z\right) } \qquad (12)\]

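We can now write down \(\left| z_{it}\right|\) as a function of the p value using (11) and (12):

\[\left| z_{it}\right| =\frac{1}{\sqrt{3}}\ln \left( \frac{2-p{\text {-value}}_{it}}{p{\text {-value}}_{it}}\right) \qquad (13)\]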
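A quick numerical check of this inversion can be sketched in Python. The sketch assumes the two-sided Normal p value \(2\left[ 1-\Phi \left( \left| z\right| \right) \right]\) and a logistic approximation to the Normal cdf with slope \(\sqrt{3}\), which together imply \(\left| z\right| =\ln \left( (2-p)/p\right) /\sqrt{3}\); the function names are illustrative.

```python
import math
from statistics import NormalDist

SLOPE = math.sqrt(3)  # single parameter of the logistic approximation


def p_value_two_sided(abs_z):
    """Exact two-sided Normal p value for a test statistic of size |z|."""
    return 2 * (1 - NormalDist().cdf(abs_z))


def abs_z_from_p(p):
    """|z| implied by a p value under the logistic approximation."""
    return math.log((2 - p) / p) / SLOPE


z_at_one = abs_z_from_p(1.0)    # a p value of unity implies no evidence
z_at_5pct = abs_z_from_p(0.05)  # compare with the exact critical value of 1.96
```

The approximate value at the 5% level is a little above 2.1, against the exact 1.96, which gives a sense of the error introduced by the single-parameter approximation.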

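Intuitively, a p value of zero implies an infinite \(\left| z_{it}\right|\) and a p value of unity implies \(|z_{it}|\) is zero, and (13) confirms this. We can now use the relationship between \(F_{i}\left( p{\text {-value}}_{it}\right)\) and our estimated first hurdle to generate \(F_{i}\left( \alpha \right)\).

\[F_{i}\left( p{\text {-value}}_{it}\right) =1-\Phi \left[ \delta _{i}+\frac{\gamma }{\sqrt{3}}\ln \left( \frac{2-p{\text {-value}}_{it}}{p{\text {-value}}_{it}}\right) \right] \qquad (14)\]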

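In the above expression the variable ‘\(p{\text {-value}}_{it}\)’ is just a place-holder and can be replaced by anything with the same support leaving the meaning of (14) unchanged. Thus, it can be replaced by \(\alpha\) giving the cumulative density of \(\alpha\).

\[F_{i}\left( \alpha \right) =1-\Phi \left[ \delta _{i}+\frac{\gamma }{\sqrt{3}}\ln \left( \frac{2-\alpha }{\alpha }\right) \right] \qquad (15)\]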

Substitution of \(\alpha =1\) does not give unity, which is what we earlier assumed for the value of \(F_{i}\left( 1\right)\). However, it does give \(1-\Phi \left( \delta _{i}\right)\), which of course concurs with the econometric equation for the first hurdle when \(\left| z_{it}\right| =0\). This discontinuity in \(F_{i}\) is consistent with a discrete probability mass in \(f_{i}\left( \alpha \right)\) at unity, as we noted earlier. It now just remains to differentiate \(F_{i}\) to obtain the continuous density \(f_{i}\left( \alpha \right)\) for \(\alpha\) strictly less than unity. The description of the function at the upper end of the support (unity) is completed with a discrete mass at unity of \(\Phi \left( \delta _{i}\right)\).

Figure 5 illustrates the distribution \(f_{i}\left( \alpha \right)\) for \(\delta _{i}=0.1\) and \(\gamma =0.6\) together with the distributions one standard deviation either side of \(\delta _{i}\). The value \(\delta _{i}=0.1\) is the mean of \(\delta\) across subjects from our estimation (last column of Table 3, rounded). At the far right of the chart is the probability mass at \(\alpha =1\). As discussed earlier, this corresponds to the proportion of agents who update on vanishingly small evidence (\(\left| z_{it}\right| =0\)). There is clearly a great deal of interesting heterogeneity. One distribution has a low probability of a random update (10%) and when the agent uses information they are very conservative, with \(\alpha\) close to zero. We might call them ‘classical statisticians’ given the large probability mass around 1%, 5% and 10%. Another distribution has a high probability of a random update (90%) and we might call these agents ‘fully attentive’. The central estimate of \(\delta\) describes an agent who updates roughly half the time, and otherwise has a more or less uniform distribution over \(\alpha\).

Since there are idiosyncratic values of \(\delta _{i}\) there will be a separate distribution for every subject varying over \(\delta _{i}\). So we must use a summary statistic for \(f_{i}\left( \alpha \right)\), and the one which comes to hand is the median \(\alpha\) value, obtained by solving \(F_{i}\left( \alpha \right) =0.5\) in Eq. (15). In Fig. 6, we plot each subject’s (median \(\alpha\), \(\beta\)) pair for model 4, our preferred model. Table 4 lists the percentage of subjects in each (median \(\alpha _{i}\), \(\beta _{i}\)) 0.2 bracket.Footnote 25
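The median calculation can be sketched in Python under the assumption, implied by the steps above with the logistic approximation, that \(F_{i}\left( \alpha \right) =1-\Phi \left[ \delta _{i}+(\gamma /\sqrt{3})\ln \left( (2-\alpha )/\alpha \right) \right]\) for \(\alpha <1\), with a mass of \(\Phi \left( \delta _{i}\right)\) at unity. Setting the probit index to zero gives the closed form used below. The value \(\delta _{i}=-1.28\) is a hypothetical choice that puts a mass of about 10% at unity; function names are illustrative.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf
ROOT3 = math.sqrt(3)


def cdf_alpha(alpha, delta_i, gamma):
    """F_i(alpha) for 0 < alpha < 1; F_i jumps to 1 at alpha = 1."""
    if alpha >= 1:
        return 1.0
    return 1 - PHI(delta_i + gamma / ROOT3 * math.log((2 - alpha) / alpha))


def mass_at_one(delta_i):
    """Discrete probability mass at alpha = 1."""
    return PHI(delta_i)


def median_alpha(delta_i, gamma):
    """Solve F_i(alpha) = 0.5; the spike at unity holds the median when delta_i >= 0."""
    if delta_i >= 0:
        return 1.0
    return 2 / (1 + math.exp(-ROOT3 * delta_i / gamma))


central = median_alpha(0.1, 0.6)         # rounded central case from Fig. 5
conservative = median_alpha(-1.28, 0.6)  # hypothetical agent with a ~10% spike
```

Under these assumptions the central agent has a median test size of one, while the conservative agent has a median close to 0.05.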

Roughly half the subjects update regardless of evidence, so the median \(\alpha\)’s cluster at unity along the bottom axis with half of them (49%) in the range at or above 0.8. Just under one quarter (22%) of agents could be described as classical statisticians with median \(\alpha\)’s around the 1–10% level and a similar figure (28%) have ‘conservative belief adjustment’, with \(\alpha\)-values no more than 0.20.

Regarding the size of updating, we already know from Result 3 that it is less than complete. In Table 4, 22% update no more than 40 per cent of what they should.

Result 5

Estimated test sizes spread over the whole support [0, 1] but are clustered at zero and unity. The extent-of-update distribution has a large probability mass around 50% but an even larger mass for values over unity.

In supplementary analysis (see online appendix 5), we find that infrequent updaters (\(\alpha _{med}\): 0–0.2) have larger mean square deviations (MSD) from Bayesian guesses than other subjects. Frequent updaters (\(\alpha _{med}\): 0.8–1) tend to have larger MSD as each stage progresses, which can be explained by comparative underadjustment or overadjustment of beliefs in the second hurdle of our model (see Table 4).Footnote 26

5 Discussion and conclusion

The double hurdle model we have developed in this paper allows us to integrate both time- and state-dependent belief adjustment in a unified econometric framework. Our experiment uses a quadratic scoring rule with monetary payoffs to incentivize subjects, and we operationalize Offerman et al. (2009) in order to attempt to control for risk aversion. Further research could use different belief elicitation methods to verify the robustness of our findings.

Our econometric model found evidence for considerable heterogeneity in both the propensity and extent of updating, with the majority of agents departing from the rational expectations benchmark of \(\alpha =\beta =1\). Yet deviations from this benchmark are systematic, predictable and can be understood within our modelling framework. We observe random belief adjustment around half the time, which is consistent with stochastic time-dependent belief adjustment. Deviations from Bayesian updating are systematically in the direction of under-adjustment, with a mean of 80% of full adjustment. The likelihood of a belief change increases as the amount of evidence against the no-change status quo increases, which is consistent with state-dependent belief adjustment.

Our aggregate findings are broadly in favor of under- as opposed to over-adjustment to information, which is consistent with prior belief conservatism findings (such as Phillips and Edwards 1966) and the overall finding of under-inference in Benjamin’s (2019) review. It is however in apparent contrast with the base rate neglect from static experimental settings such as Kahneman and Tversky (1973) and Tversky and Kahneman (1982). That said, we note based on Table 4 that, when subjects are at the second hurdle, while 52% of subjects have a \(\beta _{i}\) less than 1, 36% of them do have a \(\beta _{i}\) above 1, i.e. around a third over-weight rather than under-weight new information. One possible explanation for the greater proportion of under-weighting of new information relative to some other research is that the prior is more likely to be under-weighted where subjects do not perceive it as a genuine and meaningful anchor, or at least perceive it as a less reliable source of information than the new information [as in Goodie and Fantino (1999), or some of the settings by Massey and George (2005)]. This is likely to be more the case in static experimental settings, or (following a conjecture by Benjamin (2019)) where priors are based on extreme probabilities.Footnote 27 Clearly, more research is needed.

Another interesting finding from our double hurdle model is that around half our subjects have \(\alpha _{i}\) less than 0.8. Unlike our dynamic setup, the nature of tasks in static experimental settings as well as others where regime change is to be detected [as in Massey and George (2005)], arguably nudges people to make an active choice and to pay the required cognitive and inattention costs.

We believe that a setting where there is no such nudge is a more accurate reflection of many real world decision settings, such as investment portfolio choice over time (Cohen et al. 2019). Cost-based state-dependent sticky belief adjustment can be micro-founded on adjustment costs, for example inattention costs (Alvarez et al. 2016), information costs (Abel et al. 2013), or cognitive costs (Magnani et al. 2016); these, in turn, can be modelled in a stylized way using a hypothesis testing framework, as shown by Cohen et al. (2019) and explained in “Appendix 2: Relationship between inferential expectations and switching cost models”. We avoid incentivizing the distractor task to help with the interpretability of the findings in a first experiment studying the effect of inattention on belief updating; changing this could be an interesting direction of future research.

We have parameterized the degree of state-dependent belief stickiness by the distribution of the test size. This is informative in two respects. First, the probability mass at \(\alpha =1\) measures the extent of time-dependent adjustment. Second, where agents instead adopt state-dependent adjustment, the density over the support \(\alpha =\left[ 0,1\right)\), as shown in Fig. 5, informs us about the extent of belief conservatism. On this note, we estimate that roughly one quarter of agents are highly belief conservative with \(\alpha \le 0.2\).

Although the negative correlation between \(\alpha\) and \(\beta\) in Fig. 6 is not significant, the relevant pair of parameters in the double hurdle model do have a significantly negative correlation. Thus we have established that agents who tend to update with a low frequency may update a little more when they do update. Subjects with a good understanding of the experiment may defer adjustment because of cognitive and inattention costs, but may be more rather than less Bayesian when an adjustment does take place.

We vary task complexity and likelihood of inattention in belief updating, as these are important dimensions that, we claim, affect how effectively agents process information in the real world. We conceive of inattention costs as the costs of departing from a default choice. This is a natural way of modelling many real world settings in which choices remain as default (e.g. portfolio choices) unless actively changed, and in this respect we follow Khaw et al. (2017) although they do not manipulate the likelihood of inattention (or task complexity). We do not find that task confusion explains belief stickiness to an important degree, nor is there any financial incentive to explain why beliefs are stickier if we add an alternative distracting task. Rather, inattention and cognitive costs are likely to explain infrequent adjustment, to different degrees, by half our subjects.

“Appendix 5: Robustness and understanding” provides descriptive statistics on the optional task, and specifically on how many counting tasks were answered by each subject correctly or incorrectly. It also contains a regression for the inattention treatment where ‘Correct counting tasks’ and ‘incorrect counting tasks’ (referring to the number of each per subject) both have a significant and negative effect on the probability of updating. This supports the conclusion that the effect of the optional task was to distract subjects, who therefore paid less attention than they should have. As noted at the end of Sect. 2, since the optional task was unincentivized, we have cleanly identified this as inattention as opposed to (for example) being bad at multitasking.

Table 8 in “Appendix 5: Robustness and understanding” includes a regression model with maths ability as an explanatory variable, which we measure in the C treatment. This variable is insignificant even in the treatment where potentially it should have mattered the most, which undermines its relevance. The answer of how increased complexity affects updating is instead provided by the preferred general models (3) and (4) in Table 3, as well as the model in Table 8 in “Appendix 5: Robustness and understanding” restricting the sample to subjects who answered the understanding questions correctly. Specifically, complexity makes subjects keener to stick to the default rather than making an active choice (see Gerasimou 2018). This leads to guesses that are on average more distant from the Bayesian predictions (see online appendix 5).

We conclude by populating the cells from Table 1, which provided a taxonomy for agents’ updating, with our empirical results, to give Table 5. Such an exercise is tentative, since ours is the first study to combine the frequency and extent of adjustment in a unified econometric framework. We have also had to make minor adjustments to the table: with respect to the frequency of adjustment, agents are inattentive if the median \(\alpha\) is less than the smallest classical test size (0.01) and, with respect to the extent of adjustment, \(\beta\) is continuous in our model so we must deem it to be unity when it is within a small range (0.2) of that value.

Based on the prevalence of agents, the Quasi-Bayesian modelling strategy of assuming period-by-period updating of information, but with less than full adjustment, is supported by the behaviour of 28% of agents. The next most common behaviour is Quasi-Bayesian adjustment combined with Inferential Expectations (22%). An advantage of the latter, not shown in the table but shown in Fig. 5, is that an empirical distribution of inferential expectations \(\alpha\)’s allows for both time- and state-dependent adjustment, where the probability mass on unity implies stochastic time-dependent adjustment. Among the least common behaviours is full rational expectations (3%), defined as the fully attentive use of Bayes rule (\(\alpha =\beta =1\)).

It is not clear yet how generalizable these proportions are and future research might profitably probe them with our double hurdle model in different experimental environments. However, the non-dominance of any row or column within our taxonomy is evidence that both the extent and frequency of adjustment should be taken into account in economic modelling.

Notes

None of these points should be interpreted as criticisms of regime switching studies such as Khaw et al. (2017). The focus of these studies is on getting a better understanding of how agents handle regime switching, and so an evolving economy or a more specialised modelling approach are appropriate for what they are trying to achieve, and potentially confounding factors (such as risk aversion) are less of an issue than they are when trying to get a basic understanding of belief updating. The latter is our different and more fundamental focus.

A standard (Neyman–Pearson) hypothesis test minimizes the probability of falsely believing a null (a type II error) subject to an upper bound on the probability of falsely rejecting that same null (a type I error). If \(\alpha\) is unity, the constraint does not bind, so the best way to minimize the probability of falsely believing a null is to automatically (for any evidence against it, or none) reject the null. Under IE, rejecting a null is the same as ‘updating’ because a new value has to be chosen.

In Bayes rule, \(P\left( A \vert B \right) = \left[ P \left( B \vert A \right) / P \left( B \right) \right] P \left( A \right)\), the ratio \(\left[ P\left( B \vert A \right) /P\left( B \right) \right]\) is sometimes called the likelihood.

Dates, times and treatments of experimental sessions are listed in online appendix 2.

All signals are thus informative. It would be an interesting extension to include non-informative signals in future research.

One might wonder why we did not use 0.6 as a possible value. The purpose of the risk attitude part is to estimate \(\theta\) by the auxiliary regression in “Appendix 3: Method for estimating CRRA risk parameter”, and so it is desirable to have values close to 0 and 1, since the standard error in a regression is inversely related to the standard deviation of the independent variable. Adding extra values in the middle of the range of the independent variable may be useful for some purposes, but they would have low leverage and therefore not help much in this context. This is not to deny that more data is always better than less, but 0.6 was not a natural choice for extra data. Furthermore, at the point in time when the subjects did the risk attitude part, the number 0.6 had no special significance for them, and we wished to avoid the danger of subjects artificially anchoring in the main part to what they had done under 0.6 in the risk attitude part.

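This ensured similar marginal incentives for each round in the risk attitude part (3 GBP prize drawn from 1 out of 10 rounds) and the main part (18 GBP prize drawn from 1 out of 56 rounds): that is, 3/10 = 0.30 GBP of prize money at stake per round in the former and 18/56 ≈ 0.32 GBP in the latter.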

They were also told that, if they did not make a guess in the guessing task within 60 s, they would automatically keep the guess from the previous round and move to the next round (or to the next stage). The length of 60 s was chosen based on piloting, in such a way that this would not be a binding constraint if subjects focused on the guessing task.

In their experiment on the selection of energy tariffs, Sitzia et al. (2015) did not find different results when the alternative task was the ability to browse the internet instead of a counting task like the one in this paper.

For example, at the start of the experiment, before any ball is drawn, subjects know that the chance that Urn 1 was drawn is 0.6. It therefore follows that the chance of a white ball being drawn for the very first time is \(0.7\times 0.6+0.3\times (1-0.6) = 0.54\).
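The arithmetic in this note, and the Bayesian update that follows once the first ball has been observed, can be sketched in a few lines of Python. The prior of 0.6 and the probabilities 0.7 and 0.3 are taken from the example above; reading 0.3 as the chance of a white ball from the other urn is our inference from the formula, and the function names are hypothetical.

```python
def prob_white(prior_urn1, p_white_urn1=0.7, p_white_other=0.3):
    """Unconditional chance of a white ball, given the prior on Urn 1."""
    return p_white_urn1 * prior_urn1 + p_white_other * (1 - prior_urn1)


def update_on_white(prior_urn1, p_white_urn1=0.7, p_white_other=0.3):
    """Posterior chance of Urn 1 after observing a white ball (Bayes rule)."""
    joint = p_white_urn1 * prior_urn1
    return joint / prob_white(prior_urn1, p_white_urn1, p_white_other)


# Before any ball is drawn, the chance of Urn 1 is 0.6.
first_draw = prob_white(0.6)
posterior = update_on_white(0.6)

print(round(first_draw, 2))  # 0.54, as in the note
print(round(posterior, 3))   # 0.778, i.e. 0.42 / 0.54
```

Under these assumptions a single white ball moves the Bayesian belief in Urn 1 from 0.6 to roughly 0.78.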

It is straightforward to derive the equivalent of (1) for CARA utility, and later in “Appendix 5: Robustness and understanding” the CARA \(g^{*}\)’s are used in a robustness check.

See “Appendix 3: Method for estimating CRRA risk parameter” for details. The estimated mean \(\theta\) across subjects is 0.2. There is considerable heterogeneity across subjects, so in our econometric modelling we check for robustness by excluding subjects with extreme values (\(\left| \theta \right| >1.5\)).

Cohen et al.’s (2019) explanation relies on a cost of adjustment. This must be interpreted as a cognitive cost, since there is no financial penalty for adjustment in our experiment. A cognitive cost might also be described as ‘laziness’. However, we should note that inaction may well involve cognitive effort in paying attention, whereas the notion of ‘laziness’, with its implicit moral censure, suggests that the cognitive cost is simply a lack of effort. Either way, any explanation of inertia is provisional in our context given the difficulty of inferring mental states from inaction.

All p values in the paper are two-tailed. All bivariate tests use subject-level means, so that the observations are independent and the problem of dependence among within-subject choices is avoided.

On another econometric matter, the RHS plots by treatment provide suggestive evidence that treatment dummies shifting the probability of adjustment are warranted in the econometric modelling of Sect. 4.2.

Plots by treatment for Fig. 3 are provided in “Appendix 5: Robustness and understanding”.

The true guess \(g^{*}\) should always rise when the draw is white, and vice versa when the draw is orange. Online appendix 1 has an extensive discussion of why a minority of contrarian adjustments (falls on white and increases on orange) is observed in Fig. 3. In brief, round 1 data suggests an error rate of around 5% and this is supported by the results of the double hurdle model presented later. That said, it can be optimal for g to move in the opposite direction to \(g^{*}\). The variance of the scaled payoff linearized around \(g^{*}\), \(1-(X-g)^{2}\approx 1-(g^{*}-g)^{2}-2(g^{*}-g)(X-g^{*})\), is \(4(g^{*}-g)^{2}V(X)\), where \(X\sim Bernoulli(g^{*})\). Thus, risk averse agents will always want to move their g in line with \(g^{*}\) to minimize variance, but agents who love risk enough might possibly move g in the opposite direction to \(g^{*}\).
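The linearization and the variance quoted in this note can be spelled out step by step. The following is a sketch that uses only the symbols defined above; the final equality adds the standard Bernoulli variance \(V(X)=g^{*}(1-g^{*})\), which the note leaves implicit.

```latex
\begin{align*}
(X-g)^{2} &= \bigl[(X-g^{*})+(g^{*}-g)\bigr]^{2} \\
          &= (X-g^{*})^{2} + 2(g^{*}-g)(X-g^{*}) + (g^{*}-g)^{2}, \\
1-(X-g)^{2} &\approx 1-(g^{*}-g)^{2}-2(g^{*}-g)(X-g^{*})
          \quad \text{(dropping the second-order term } (X-g^{*})^{2}\text{)}, \\
V\bigl[1-(X-g)^{2}\bigr] &\approx 4(g^{*}-g)^{2}\,V(X)
          = 4(g^{*}-g)^{2}\,g^{*}(1-g^{*}).
\end{align*}
```

Only the term \(2(g^{*}-g)(X-g^{*})\) is random, so the approximate variance is zero at \(g=g^{*}\) and grows with the squared distance between \(g\) and \(g^{*}\).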

This is in the neighborhood of the 0.3–0.5 range elicited by Holt and Laury (2002, p. 1649) using their Multiple Price List (MPL) task. In the results to follow, the heterogeneity of \(\theta\), along with a roughly even split between risk loving and risk averse agents, corroborates the usefulness of the robustness analysis in “Appendix 5: Robustness and understanding”.

These are questions 1 and 2 in the main part questionnaire as provided in online appendix 4. A third question was put to subjects in all treatments, but a software coding error prevented its use in the analysis.

See “Appendix 4: IV Estimator”.

ROC is a methodology that is applied in binary data settings (e.g. our first hurdle). Clearly, when the outcome is binary, the prediction is in the form of a “predicted probability of a 1”, so there is no such thing as a “correct prediction”. A starting point is to define a prediction to be correct if the predicted probability of the observed outcome is greater than 0.5. However, the threshold need not be 0.5. ROC forms a test statistic by finding the number of correct predictions at all possible thresholds. The outcome in the second hurdle is continuous, so standard measures of predictive performance (e.g. predictive R-squared) are applicable. Both measures of predictive performance are out-of-sample measures. For the purpose of obtaining them, a 50% sample was used for estimation, and the remaining 50% of the observations were predicted. The number we report for ROC is the area under the ROC curve. This curve compares the true positive rate of prediction with the false positive rate of prediction for the universe of possible thresholds. The \(R^{2}\) (out of sample) is computed by comparing predictions from the second hurdle with actual decisions contingent on an update occurring.
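The two measures described in this note can be illustrated with a minimal Python sketch. It is not the estimation code used in the paper: the held-out outcomes and predictions below are invented toy numbers, the function names are hypothetical, and the out-of-sample \(R^{2}\) uses one common definition (one minus the ratio of squared prediction errors to squared deviations from the held-out mean), which we assume rather than take from the text.

```python
def roc_auc(outcomes, probs):
    """Area under the ROC curve, built by sweeping every distinct threshold."""
    n_pos = sum(outcomes)
    n_neg = len(outcomes) - n_pos
    points = [(0.0, 0.0)]  # (false positive rate, true positive rate)
    for t in sorted(set(probs), reverse=True):
        tp = sum(1 for y, p in zip(outcomes, probs) if p >= t and y == 1)
        fp = sum(1 for y, p in zip(outcomes, probs) if p >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos))
    # Trapezoid rule over consecutive points of the curve.
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


def r_squared(actual, predicted):
    """One minus squared prediction error over squared deviation from the mean."""
    mean = sum(actual) / len(actual)
    sse = sum((a - f) ** 2 for a, f in zip(actual, predicted))
    sst = sum((a - mean) ** 2 for a in actual)
    return 1 - sse / sst


# Toy held-out half of a sample (illustrative values only).
updated = [1, 0, 1, 1, 0, 0]                 # first hurdle: did the subject update?
p_update = [0.9, 0.4, 0.7, 0.3, 0.2, 0.6]    # predicted probability of an update
size_actual = [0.10, 0.05, 0.20, 0.15]       # second hurdle: size of actual updates
size_pred = [0.12, 0.06, 0.15, 0.17]         # predicted size, given an update

auc_value = roc_auc(updated, p_update)
r2_value = r_squared(size_actual, size_pred)
print(auc_value, r2_value)
```

An area of 0.5 corresponds to predictions no better than chance and an area of 1 to perfect ranking of updates above non-updates.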

In “Appendix 5: Robustness and understanding” we provide a number of robustness checks on model 4, and check for comprehension by the subjects more generally. We supplement this section by (1) running model 4 using CARA preferences to correct for risk aversion; (2) estimating model 4 on a subset of (near) risk neutral subjects; (3) separately running model 4 using the subset of data where subjects correctly answered both comprehension questions; (4) exploring the Inattention and Complexity treatments on their own to check for coherence between the comprehension questionnaires and the model results; (5) providing the marks for the comprehension questionnaire; and (6) providing data on the risk attitude part which helps explain the distribution of \(\theta\).

Rounding implies occasional discrepancies in the row and column totals shown.

Within each stage, MSD increases as the stage progresses, since the behavioral impact of the deviations from Bayesian behaviour becomes progressively more significant. By contrast, we find no evidence that MSD changes across stages, i.e. with general experience.

See Benjamin (2019) for a recent discussion of some of the other factors that may be at work.

A value close to 50%, or 50% with rounding, is compatible with strong risk aversion. This is not an unreasonable interpretation, noting for example that in finance data it is often the case that better calibration results are achieved assuming very high CRRA parameters, such as 30 (see Cecchetti and Mark 1990; Boguth and Kuehn 2013). Rounding is common in experiments and does not of course imply that one cannot use models to predict behavior while accepting the additional noise it entails. For example, it is standard to predict ultimatum game results using social preference models which often predict offering a little less than half of the pie, even though a typical modal offer is 50% of the pie.

Technically, there were three questions, but two of these had five subparts, making a total of 11 questions.

The extent of update is not significantly different from zero in the Complexity treatment. However, the positive and significant maths ability coefficient means that there is an indirect updating effect.

References

Abel, A. B., Eberly, J. C., & Panageas, S. (2013). Optimal inattention to the stock market with information costs and transaction costs. Econometrica, 81(4), 1455–1481.

Abeler, J., Falk, A., Goette, L., & Huffman, D. (2011). Reference points and effort provision. American Economic Review, 101(2), 470–492.

Alvarez, F., Lippi, F., & Passadore, J. (2016). Are state and time dependent models really different? NBER Macroeconomics Annual, 31, 379–457.

Ambuehl, S., & Li, S. (2014). Belief updating and the demand for information. SSRN Discussion Paper, June.

Bacchetta, P., & van Wincoop, E. (2010). Infrequent portfolio decisions: A solution to the forward discount puzzle. American Economic Review, 100, 870–904.

Barbey, A. K., & Sloman, S. A. (2007). Base-rate respect: From ecological rationality to dual processes. Behavioral and Brain Sciences, 30(3), 241–254.

Baron, J., & Ritov, I. (2004). Omission bias, individual differences, and normality. Organizational Behavior and Human Decision Processes, 94(2), 74–85.

Benjamin, D. J. (2019). Errors in probabilistic reasoning and judgment biases. In B. D. Bernheim, S. DellaVigna, & D. Laibson (Eds.), Handbook of behavioral economics (Vol. 2, pp. 69–185). London: Elsevier.

Benjamin, D. J., Rabin, M., & Raymond, C. (2015). A model of non-belief in the law of large numbers. Journal of the European Economic Association, 14(2), 515–544.

Boguth, O., & Kuehn, L.-A. (2013). Consumption volatility risk. Journal of Finance, 68(6), 2589–2615.

Bossaerts, P., & Murawski, C. (2017). Computational complexity and human decision-making. Trends in Cognitive Sciences, 21(12), 917–929.

Bowling, S., Khasawneh, M., Kaewkuekool, S., & Cho, B. (2009). A logistic approximation to the cumulative normal distribution. Journal of Industrial Engineering and Management, 2(1), 114–127.

Brainard, W. C. (1967). Uncertainty and the effectiveness of policy. American Economic Review Papers and Proceedings, 57(2), 411–425.

Buser, T., & Peter, N. (2012). Multitasking. Experimental Economics, 15, 641–655.

Caballero, R. J. (1989). Time dependent rules, aggregate stickiness and information externalities. Columbia University Department of Economics Discussion Paper 428.

Calvo, G. A. (1983). Staggered prices in a utility-maximizing framework. Journal of Monetary Economics, 12(3), 383–398.

Caplin, A., Csaba, D., Leahy, J., & Nov, O. (2020). Rational inattention, competitive supply, and psychometrics. Quarterly Journal of Economics, 135(3), 1681–1724.

Caplin, A., Dean, M., & Martin, D. (2011). Search and satisficing. American Economic Review, 101(7), 2899–2922.

Carlin, B. I. (2011). Strategic price complexity in retail financial markets. Journal of Financial Economics, 91, 278–287.

Carroll, C. D. (2003). Macroeconomic expectations of households and professional forecasters. Quarterly Journal of Economics, 118, 269–298.

Cecchetti, S. G., & Mark, N. C. (1990). Evaluating empirical tests of asset pricing models: Alternative interpretations. American Economic Review Papers and Proceedings, 80(2), 48–51.

Cohen, S. N., Henckel, T., Menzies, G. D., Muhle-Karbe, J., & Zizzo, D. J. (2019). Switching cost models as hypothesis tests. Economics Letters, 175, 32–35.

Davis, D. D., & Holt, C. A. (1993). Experimental economics. Princeton: Princeton University Press.

Dessein, W., Galeotti, A., & Santos, T. (2016). Rational inattention and organizational focus. American Economic Review, 106(6), 1522–1536.

El-Gamal, M. A., & Grether, D. M. (1995). Are people Bayesian? Uncovering behavioral strategies. Journal of the American Statistical Association, 90(432), 1137–1145.

Gerasimou, G. (2018). Indecisiveness, undesirability and overload revealed through rational choice deferral. Economic Journal, 128(614), 2450–2479.

Gigerenzer, G., & Gaissmaier, W. (2011). Heuristic decision making. Annual Review of Psychology, 62, 451–482.

Goodie, A. S., & Fantino, E. (1999). What does and does not alleviate base-rate neglect under direct experience. Journal of Behavioral Decision Making, 12(4), 307–335.

Holt, C. A., & Laury, S. K. (2002). Risk aversion and incentive effects. American Economic Review, 92(5), 1644–1655.

Huang, L., & Liu, H. (2007). Rational inattention and portfolio selection. Journal of Finance, 62(4), 1999–2040.

Huck, S., Zhou, J., & Duke, C. (2011). Consumer behavioural biases in competition: A survey. Office of Fair Trading.

Kahneman, D., & Tversky, A. (1973). On the psychology of prediction. Psychological Review, 80(4), 237–251.

Khaw, M. W., Stevens, L., & Woodford, M. (2017). Discrete adjustment to a changing environment: Experimental evidence. Journal of Monetary Economics, 91, 81–103.

Koehler, J. J. (1996). The base rate fallacy reconsidered: Descriptive, normative and methodological challenges. Behavioral and Brain Sciences, 19(1), 1–53.

Mackowiak, B., & Wiederholt, M. (2015). Business cycle dynamics under rational inattention. Review of Economic Studies, 82, 1502–1532.