Abstract

The literature addressing bias and fairness in AI models (fair-AI) is growing at a fast pace, making it difficult for new researchers and practitioners to gain a bird’s-eye view of the field. In particular, many policy initiatives, standards, and best practices in fair-AI have been proposed for setting principles, procedures, and knowledge bases to guide and operationalize the management of bias and fairness. The first objective of this paper is to concisely survey the state-of-the-art of fair-AI methods and resources, and the main policies on bias in AI, with the aim of providing such bird’s-eye guidance for both researchers and practitioners. The second objective of the paper is to contribute to the state-of-the-art in policy advice and best practices by leveraging the results of the NoBIAS research project. We present and discuss a few relevant topics organized around the NoBIAS architecture, which is made up of a Legal Layer, focusing on the European Union context, and a Bias Management Layer, focusing on understanding, mitigating, and accounting for bias.

Introduction

The last decade has witnessed a renaissance of Artificial Intelligence (AI), leading to an increasingly pervasive usage in many socially sensitive tasks. However, many concerns have been raised about the negative impacts, intentional or unintentional, on individuals and society due to biases embedded in AI models [Footnote 1] (Future of Privacy Forum, 2017; Shelby et al., 2023). A few AI incident databases report collections of harms or near harms realized in the real world by intelligent systems (Turri & Dzombak, 2023), the most relevant one being illegal discrimination against social groups protected by non-discrimination law (Altman, 2020). In fact, there is a deep academic and social discussion around the need to evaluate the claims, decisions, actions and policies that are being made based on AI’s alleged neutrality, as more examples confirm that algorithmic systems “are value-laden in that they (1) create moral consequences, (2) reinforce or undercut ethical principles, or (3) enable or diminish stakeholder rights and dignity” (Martin, 2019).

The objective of this paper is twofold.

First, we aim at providing the reader with an up-to-date entry-point to the state-of-the-art of the multidisciplinary research on bias and fairness in AI. We take a bird’s-eye view of the methods and resources, with links to specialized surveys, and of the issues and challenges related to policies on bias and fairness in AI. Such an overview provides guidance for both new researchers and AI practitioners that want to find their way in the blooming literature of the area.

Second, we contribute towards the objective of providing policy advice and best practices for dealing with bias and fairness in AI by leveraging the results of the NoBIAS project [Footnote 2]. We present and discuss a few topics that emerged during the execution of the project, whose focus was on legal challenges in the context of the European Union (EU) legislation, and on understanding, mitigating, and accounting for bias from a multidisciplinary perspective. The presented issues are relevant but not sufficiently developed or acknowledged in the literature. As such, the paper can contribute to advancing research and to increasing awareness of bias and fairness in AI.

Introducing fair-AI

In general, bias can be defined as “an attitude that always favors one way of feeling or acting over any other” (Bias, 2023). In human cognition and reasoning, this is the result of evolution (Haselton et al., 2005), for which some heuristics work well in most circumstances, or have a smaller cost than alternative strategies. In AI, biases can originate in the data (pre-existing bias), in the design of AI algorithms and systems (technical bias), and in the organizational processes using AI models (emerging bias). Most AI models are data-driven, hence they may inherit bias embedded in representations of reality encoded in raw data (Shahbazi et al., 2023). In fact, data are not neutral but are instead value-laden (Gitelman, 2013). Biases in AI algorithms have similar foundations as human cognitive biases, namely the reliance on heuristic algorithmic-search strategies that work well on average (Hellström et al., 2020). Quantitative loss metrics that are optimized by AI algorithms may result in an oversimplification of the complexity of reality, hence leading to a systematic difference between what AI actually models and the reality it is intended to abstract (Grimes & Schulz, 2002; Danks and London, 2017) (internal validity). Moreover, the usage of AI in complex socio-technical processes under untested or unplanned conditions may suffer from a lack of generalizability of the AI models (external validity). Several categorizations of the sources of bias and fairness in AI have been proposed in contexts such as social data (Olteanu et al., 2019), Machine Learning (ML) representations (Shahbazi et al., 2023), ML algorithms (Mehrabi et al., 2021), recommender systems (Chen et al., 2023a), algorithmic hiring (Fabris et al., 2023), large language models (Gallegos et al., 2023), and industry standards (ISO/IEC, 2021) to cite a few.

Fairness in AI (or simply, fair-AI) aims at designing methods for detecting, mitigating, and controlling biases in AI-supported decision making (Schwartz et al., 2022; Ntoutsi et al., 2020), especially when such biases lead to (in an ethical sense) unfair or (in a legal sense) discriminatory decisions. Fairness research in human decision-making was triggered by the US Civil Rights Act of 1964 (Hutchinson & Mitchell, 2019), while bias in procedural (i.e., hand-written by humans) algorithms has been considered since the mid 1990’s (Friedman & Nissenbaum, 1996)—with the first known case tracing back to 1986 (Lowry & Macpherson, 1986). Instead, fair-AI research is only 15 years old, starting with the pioneering works of Pedreschi et al. (2008) and Kamiran and Calders (2009). The area originally addressed discrimination and unfairness in ML, and it has been rapidly expanding to all sub-fields of AI and to any possible harm to individuals and collectivities. The state-of-the-art has been mainly developing on the technical side, often reducing the problem to a numeric optimization of some fairness metric (Ruggieri et al., 2023; Carey & Wu, 2023; Weinberg, 2022). Such critiques to the hegemonic (i.e., dominant) theory of fair-AI are not new to the AI community. For instance, Wagstaff (2012) questioned the hyper-focus of ML on abstract metrics “in that they explicitly ignore or remove problem-specific details, usually so that numbers can be compared across domains” but the true significance and impact of the metrics are neglected. Likewise, Mittelstadt et al. 
(2023) pointed out how “the majority of measures and methods to mitigate bias and improve fairness in algorithmic systems have been built in isolation from policy and civil societal contexts and lack serious engagement with philosophical, political, legal, and economic theories of equality and distributive justice”, and proposed to steer future discussion towards substantive equality of opportunities and away from strict egalitarianism by default. The issue of engineering fairness is, without doubt, challenging (Scantamburlo, 2021), and likely to require domain-specific approaches (Lee & Floridi, 2021; Chen et al., 2023b) and the ability to distinguish whether and when to use AI (Lin et al., 2020), or how to enhance and extend human capabilities with AI (human-centered AI) (Xu, 2019; Garibay et al., 2023). A paradigmatic case is presented in Silberzahn and Uhlmann (2015), where 29 teams of researchers approached the same research question (about football players’ skin colour and red cards) on the same dataset with a wide array of analytical techniques, and obtained highly varied results. The authors concluded that “bringing together many teams of skilled researchers can balance discussions, validate scientific findings and better inform policy-makers”.

The NoBIAS project

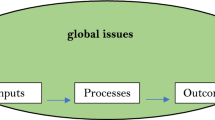

The NoBIAS project (January 2020–June 2024) was a Marie Skłodowska-Curie Innovative Training Network funded by the European Union’s Horizon 2020 research and innovation program. The core objective of NoBIAS was to research and develop novel interdisciplinary methods for AI-based decision making without bias. Fig. 1 shows the NoBIAS architecture, which is designed to integrate bias management with the AI-system pipeline layer. The Bias Management Layer is made up of the various components contributed by the research projects of fifteen Early-Stage Researchers (ESRs). Together, these components aim to achieve three main research objectives: understanding bias, mitigating bias, and accounting for bias in data and AI-systems. An orthogonal Legal Layer provides the necessary EU legal grounds supporting the research objectives. The purpose is not to produce one single bias management framework but rather to combine technologies and techniques for generating bias-aware AI-systems in different application domains and contexts.

Summary of contributions

The contributions of this paper are twofold:

- we concisely survey the state-of-the-art of fair-AI methods and resources, and the main topics about policies on bias in AI (Sect. “The landscape of policies on bias and fairness in AI”), thus providing guidance for both researchers and practitioners;
- we discuss the main policy suggestions and the best practices that, in light of the execution of the NoBIAS project, are deemed relevant and under-developed (Sect. “Lessons from the NoBIAS project”). These topics are presented w.r.t. the pillars of the NoBIAS architecture (legal challenges, bias understanding, bias mitigation, and accounting for bias).

We take a multidisciplinary approach, thus facilitating cross-fertilization.

The landscape of policies on bias and fairness in AI

In this section, we provide a concise overview of state-of-the-art fair-AI methods and policy topics. We point to the main contributions and resources in the area to provide guidance for both researchers and practitioners.

Fair-AI methods and resources

Multiple measures of the degree of (un)fairness in (automated) decision making have been introduced in ML and AI (Castelnovo et al., 2022; Mehrabi et al., 2021; Berk et al., 2021; Verma & Rubin, 2018; Zliobaite, 2017; Caton & Haas, 2024). Some of them were originally proposed and investigated in other disciplines, such as philosophy, economics, and social science (Lee et al., 2021b; Hutchinson & Mitchell, 2019; Binns, 2018a; Romei & Ruggieri, 2014). Group fairness metrics aim at measuring the statistical difference in distributions of decisions across social groups. Individual fairness metrics bind the distance in the decision space to the distance in the feature space describing people’s characteristics. Causal fairness metrics exploit knowledge beyond observational data to infer causal relations between membership to a protected group and decisions, and to estimate interventional consequences. As with other performance objectives, the choice of a fairness metric is crucial for optimizing AI models. See the previous surveys and Räz (2021); Wachter et al. (2021a); Hertweck et al. (2021); Binns (2020); Tang et al. (2023); Binns et al. (2023) for a discussion of the moral/legal bases and relative merits of the various fairness notions and metrics.
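To make the distinction between group and individual metrics concrete, the following is a minimal sketch in Python. It is not taken from any of the surveyed works: the function names, the toy data, the L1 distance, and the Lipschitz constant are all illustrative assumptions. The first function computes a group metric (the difference in positive-decision rates between two groups, often called statistical parity difference); the second flags pairs of individuals whose scores differ by more than a multiple of their feature distance, which is one common way of reading the individual fairness condition.

```python
from typing import List, Sequence, Tuple


def statistical_parity_difference(
    decisions: Sequence[int],
    groups: Sequence[str],
    protected: str,
    reference: str,
) -> float:
    """Group metric: positive-decision rate of the protected group
    minus the positive-decision rate of the reference group."""
    prot = [d for d, g in zip(decisions, groups) if g == protected]
    ref = [d for d, g in zip(decisions, groups) if g == reference]
    return sum(prot) / len(prot) - sum(ref) / len(ref)


def lipschitz_violations(
    scores: Sequence[float],
    features: Sequence[Sequence[float]],
    lipschitz: float = 1.0,
) -> List[Tuple[int, int]]:
    """Individual metric (assumed L1 distance): return index pairs whose
    score gap exceeds `lipschitz` times their feature distance."""
    violations = []
    for i in range(len(scores)):
        for j in range(i + 1, len(scores)):
            dist = sum(abs(a - b) for a, b in zip(features[i], features[j]))
            if abs(scores[i] - scores[j]) > lipschitz * dist:
                violations.append((i, j))
    return violations


# Hypothetical toy data: 1 = favourable decision.
decisions = [1, 0, 0, 1, 0, 1, 1, 0, 1, 1]
groups = ["a"] * 5 + ["b"] * 5
spd = statistical_parity_difference(decisions, groups, "a", "b")

# Hypothetical scores for three individuals described by one feature.
features = [[0.0], [0.1], [1.0]]
scores = [0.2, 0.9, 0.95]
violations = lipschitz_violations(scores, features)

print(spd, violations)
```

In this toy example group "a" receives favourable decisions at a rate of 0.4 against 0.8 for group "b", and individuals 0 and 1 are close in feature space but far apart in score. A causal metric would additionally require a causal model of how group membership influences the decision, which is beyond what observational data like this can provide.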

Fairness metrics are the building block for numerous methods and tools of fair-AI. They aim at bias detection (a.k.a. discrimination discovery or fairness testing) (Chen et al., 2022), at data de-biasing through data processing (pre-processing approaches) (Shahbazi et al., 2023; Zhang et al., 2023), at fair learning of AI models and representations (in-processing approaches) (Wan et al., 2023), at correcting existing models (post-processing approaches), and at monitoring models’ decisions (monitoring) (Kenthapadi et al., 2022; Barrainkua et al., 2022). We also refer to Pessach and Shmueli (2022); Hort et al. (2022); Mehrabi et al. (2021); Ashurst and Weller (2023) and to Fabris et al. (2022); Quy et al. (2022), respectively, for surveys of the techniques and of the experimental datasets commonly used in the field. Several off-the-shelf software libraries are available to practitioners, expanding at a fast pace. Some critical gaps to be addressed by such systems are discussed in Richardson and Gilbert (2021); Lee and Singh (2021); Balayn et al. (2023). A few papers critically discuss the inherent limitations of fair-AI (Friedler et al., 2021; Buyl & Bie, 2024; Ruggieri et al., 2023; Castelnovo et al., 2023).

Research in fair-AI originated from the supervised ML area, but it has been rapidly expanding to all sub-fields of AI, including unsupervised (Chhabra et al., 2021; Dong et al., 2023) and reinforcement learning (Gajane et al., 2022), natural language processing (NLP) (Blodgett et al., 2020; Czarnowska et al., 2021; Gallegos et al., 2023), computer vision (Fabbrizzi et al., 2022), speech processing, recommender systems (Chen et al., 2023a), and knowledge representation (Kraft & Usbeck, 2022) among others. Major AI scientific conferences regularly include papers and workshops on bias and fairness. A few global events are targeted at multidisciplinary aspects of bias, fairness and other ethical issues in AI and algorithmic decision making. These include ACM FAccT [Footnote 3], AAAI/ACM AIES [Footnote 4], ACM EAAMO [Footnote 5], and FoRC [Footnote 6]. A number of initiatives have started to standardize, audit, and certify algorithmic bias and fairness (Szczekocka et al., 2022), such as the IEEE P7003™ Standard on Algorithmic Bias Considerations [Footnote 7], the IEEE Ethics Certification Program for Autonomous and Intelligent Systems [Footnote 8], the ISO/IEC TR 24027:2021 on bias in AI systems and AI aided decision making [Footnote 9], and the NIST AI Risk Management Framework [Footnote 10]. Challenges of certification schemes are discussed in Anisetti et al. (2023). Moreover, very few works attempt to investigate the practical applicability of fairness in AI (Madaio et al., 2022; Makhlouf et al., 2021b; Beutel et al., 2019), whilst several external audits of AI-based systems have been conducted (Koshiyama et al., 2021), sometimes with extensive media coverage (Camilleri et al., 2023).
Finally, on the educational side, bias and fairness have become common topics of university courses on technology ethics (Fiesler et al., 2020), albeit they are not sufficiently included in core technical courses (Saltz et al., 2019) nor sufficiently transversal and interdisciplinary (Raji et al., 2021b; Memarian & Doleck, 2023).

Policies on bias and fairness in AI

Bias and fairness can imply different meanings to different stakeholders depending on the application context, people’s culture and moral values, and the reference discipline (Mitchell et al., 2021; Mulligan et al., 2019). Policy initiatives, standards, and best practices in fair-AI set principles, procedures, and knowledge bases to guide and operationalize the detection, mitigation, and control of bias in AI models. Paradoxically, the uncoordinated selection and usage of fair-AI techniques may leave some protected groups worse off as a side effect. Examples of such behaviors are described in the literature, including Yule’s effect (Ruggieri et al., 2023) and the long-run effects of imposing fairness constraints (Liu et al., 2018).

Policy and guideline inventories

The AI Ethics Guidelines Global Inventory [Footnote 11] by AlgorithmWatch lists 167 frameworks “that seek to set out principles of how systems for automated decision-making can be developed and implemented ethically”. There are 8 binding agreements, 44 voluntary commitments, and 115 recommendations. The EU Agency for Fundamental Rights [Footnote 12] has collected a list of 349 policy initiatives at the national level, also including examples at the EU and international level. The OECD.AI Policy Observatory [Footnote 13] provides a live repository of over 800 AI policy initiatives.

An early survey of 84 ethics guidelines (mostly from Western countries) found an apparent agreement that AI should be ethical, and it identified shared principles of transparency, justice and fairness, non-maleficence, responsibility and privacy. Authors highlight, however, a “substantive divergence in relation to how these principles are interpreted, [...] and how they should be implemented” (Jobin et al., 2019). Despite these various contributions, universal standards or blueprints of fair-AI have not yet been provided by policy-makers, regulators or scientific experts (Wachter et al., 2021b). Even if there were such standards or blueprints, computer/data scientists and practitioners still need to translate these into their academic and industrial contexts and specific situations (Hillman, 2011; Kiviat, 2019).

The option not to use AI

Some scholars argue that, while AI is biased, it is less biased than humans (Lin et al., 2020). For example, humans tend to resort to judgement heuristics when making decisions, leading to biased outcomes (Kahneman, 2011). Humans can also be inconsistent and sometimes opaque and unreliable decision-makers (Kahneman et al., 2021). Against that alternative, the option of a noise-free, consistent algorithm is understandably appealing to some. This rationale has supported the push for algorithmic decision-making systems across domains (Miller, 2018). Nevertheless, it is essential to acknowledge the false sense of objectivity attributed to AI as well as to revise the narrative that AI’s deployment and use is inevitable. Technology alone cannot solve complex real world problems (D’Ignazio & Klein, 2020; Costanza-Chock, 2020), let alone in an equitable way (Costanza-Chock, 2020; Alkhatib, 2021). In support of the non-use of AI or, by extension, of prohibiting it or supporting its dismantlement, the following arguments have been documented and researched: potential or realized health and safety harms, human rights violations, opposition to deceptive predictive tools, e.g., predictive optimization (Wang et al., 2023), and organizational factors (Alkhatib, 2021). Existing community-led efforts, such as the Stop LAPD Spying Coalition [Footnote 14], invest in awareness campaigns on the risks and implications of the hyper-surveillance of marginalized and racialized communities, thus opposing the deployment of predictive policing tools across cities. Moreover, emerging research (Pruss, 2023) has demonstrated that, despite the best efforts to automate high-stakes decision-making, humans operating these systems can still “opt-out”, or choose not to use or interact with these tools.

An underdeveloped research line consists of rejecting the low-confidence outputs of an AI system in favor of escalating the decisions to a human agent who could possibly take into account additional (qualitative) information. This is considered in the area of classification with a reject option (or selective classification) (Hendrickx et al., 2021). There is a trade-off here between the performance of an AI system on the accepted region, which should be maximized, and the probability of rejecting, which should be minimized, as human agents’ effort is limited.
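The trade-off between performance on the accepted region and the rejection rate can be illustrated with a small sketch. The code below assumes the simplest form of reject option, a fixed confidence threshold below which a case is escalated to a human; the function name `selective_metrics` and the toy confidences are hypothetical, and the methods surveyed by Hendrickx et al. (2021) are considerably more varied.

```python
from typing import Optional, Sequence, Tuple


def selective_metrics(
    confidences: Sequence[float],
    correct: Sequence[int],
    threshold: float,
) -> Tuple[float, Optional[float]]:
    """Return (coverage, accuracy on accepted cases) for a threshold rule.

    A prediction is accepted when its confidence is at least `threshold`;
    otherwise it is rejected, i.e. escalated to a human decision-maker.
    Accuracy is None when every case is rejected.
    """
    accepted = [c for conf, c in zip(confidences, correct) if conf >= threshold]
    coverage = len(accepted) / len(confidences)
    accuracy = sum(accepted) / len(accepted) if accepted else None
    return coverage, accuracy


# Hypothetical model outputs: confidence of each prediction and whether
# the prediction turned out to be correct (1) or not (0).
confidences = [0.95, 0.90, 0.80, 0.65, 0.60, 0.55]
correct = [1, 1, 1, 0, 1, 0]

for threshold in (0.5, 0.7, 0.99):
    coverage, accuracy = selective_metrics(confidences, correct, threshold)
    print(f"threshold={threshold}: coverage={coverage}, accuracy={accuracy}")
```

Raising the threshold from 0.5 to 0.7 lifts accuracy on the accepted cases but halves coverage, so half of the decisions would now require human effort. From a fairness perspective it would also be natural to check whether rejections are spread evenly across social groups, which this sketch does not do.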

Regarding legal regimes, the EU General Data Protection Regulation (GDPR) (European Parliament and Council of the European Union, 2016) establishes some restrictions on the use of automated decision-making over individuals when the legal rights or legal status of an individual are impacted. Concretely, individuals should not be subject to a decision that is based solely on automated processing when it is legally binding or has similarly significant effects on them. Whether Article 22 of GDPR provides the data subjects with a right to object or establishes a general prohibition on automated decision-making is still uncertain and is the subject of debate among academics and practitioners (Mendoza and Bygrave, 2017; Article 29 Data Protection Working Party, 2018). The position of the regulator, then, seems to be either to offer people the option to opt out or to provide them with strong safeguards to protect them from potential risks and harms. The upcoming EU AI Act (European Commission, 2021) will introduce a substantial advance in the EU legal framework in this regard by adopting a risk-based approach to assess AI systems’ legal compliance. AI systems could only be placed on the EU market if they comply with certain requirements that mainly aim to avoid bias. The proposed risk-based approach differentiates between minimal risk, low risk, high risk, and unacceptable risk, advocating, likewise, for a gradually stricter set of obligations and duties proportionate to each level of risk. The AI Act bans six practices due to their particularly harmful and abusive nature that contradicts the values of respect for human dignity, freedom, equality, and the rule of law. Specifically, the text recognises the threat to people’s rights and democratic values posed by AI practices concerning: (1) biometric categorization systems using sensitive attributes, (2) facial recognition, (3) emotion recognition, (4) social scoring, (5) human manipulation, and (6) the exploitation of people’s vulnerabilities. Notwithstanding the prohibition, the use of real-time and post-remote biometric identification systems in publicly accessible spaces for law enforcement purposes would be permitted under specific safeguards and strict conditions.

Using fair-AI with a guidance

Fairness metrics are at the core of the technical approaches for fair-AI. However, theoretical results state that it is, in general, impossible to satisfy several fairness notions at the same time (Chouldechova, 2017; Kleinberg et al., 2017). Not only are fairness notions in tension with each other (Alves et al., 2023), but they are also in tension with other quality requirements of AI systems, such as predictive accuracy (Menon & Williamson, 2018), calibration (Pleiss et al., 2017), impact (Jorgensen et al., 2023), and privacy (Cummings et al., 2019), for which Pareto optimality should be considered (Wei & Niethammer, 2022). Moreover, the choice of a fairness metric requires taking into account several contrasting objectives: stakeholders’ utility, human value alignment (Friedler et al., 2021), people’s actual perception of fairness (Saha et al., 2020; Srivastava et al., 2019), and legal and normative constraints (Xenidis, 2020; Kroll et al., 2017). Decision diagrams or rules-of-thumb for guiding practitioners in the choice of the fairness metrics are offered by Makhlouf et al. (2021a), Buijsman (2023), and Majumder et al. (2023), highlighting the complexity of the choice. The way that the various objectives and requirements are sought, expressed and formalized impacts the choice of the fairness metrics and, a fortiori, the design of an AI system (Passi & Barocas, 2019), an instance of the framing effect bias, as shown e.g., in Hsee and Li (2022). For example, in the famous case analysed by ProPublica [Footnote 15], the COMPAS algorithm for recidivism prediction fails to meet equal false positive rates among groups, but it achieves equal calibration (Corbett-Davies et al., 2017), possibly as the result of different perspectives taken by the designers of the algorithm and the ProPublica journalists. Even when restricting to a specific fairness notion, there is the problem of how to quantify the degree of unfairness. In fact, even the apparently innocuous choice among algebraic operators (e.g., difference or ratio of proportions) may have an impact. Pedreschi et al. (2012) show that the top-k protected-by-law sub-groups with the highest risk difference and the top-k with the highest selective risk ratio do not coincide. Hence, cases of possible discrimination detected with one choice may go undetected or unprevented with another choice.
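A small numeric sketch shows why the choice between a difference and a ratio matters. The subgroup names and rates below are invented for illustration and are not drawn from Pedreschi et al. (2012); the code also uses a plain ratio of proportions rather than the specific ratio measure studied there. Each subgroup is described by the rate of adverse decisions for its protected members and for the corresponding reference group.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical subgroups: (adverse-decision rate for protected members,
# adverse-decision rate for the reference group).
subgroups: Dict[str, Tuple[float, float]] = {
    "A": (0.50, 0.30),
    "B": (0.10, 0.02),
    "C": (0.25, 0.20),
}


def risk_difference(p_protected: float, p_reference: float) -> float:
    """Absolute gap between the two adverse-decision rates."""
    return p_protected - p_reference


def risk_ratio(p_protected: float, p_reference: float) -> float:
    """Relative gap: how many times more likely an adverse decision is."""
    return p_protected / p_reference


def top_k(measure: Callable[[float, float], float], k: int) -> List[str]:
    """Names of the k subgroups with the highest value of `measure`."""
    ranked = sorted(subgroups, key=lambda name: measure(*subgroups[name]), reverse=True)
    return ranked[:k]


print(top_k(risk_difference, 1))  # subgroup with the largest absolute gap
print(top_k(risk_ratio, 1))       # subgroup with the largest relative gap
```

With these numbers, subgroup A has the largest difference (0.20) while subgroup B has the largest ratio (about 5), so an analysis limited to the top-ranked subgroup would flag different groups depending on the operator chosen.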

Beyond debiasing: addressing the origins of AI harms

The report by Balayn and Gürses (2021) studies several EU policy documents, including the AI Act. The authors find that such documents rely on technocentric approaches to address AI harms, while not adequately specifying which harms are being referred to. They argue that there is an overemphasis and overreliance specifically on the approach of debiasing data and models. Here, debiasing is used as it is in the fair-AI literature, to refer to improving model performance on specific fairness metrics, as well as to improving representation of certain groups in datasets. This is described as a limited approach, as it fails to acknowledge potential harms caused by a myriad of other system design decisions, such as what is being optimized for or what attributes are being used to represent aspects of the real world. The authors also point out that the documents provide no guidance on how to address the inevitable question of which stakeholders’ view of what is acceptable or unacceptable bias in a system should prevail, nor do they acknowledge that any dataset or system is biased, in the sense that it was created by people, with and for a specific view or goal. They advocate for the EU to utilize other governance strategies beyond technical debiasing solutions, so as not to transfer the responsibility, and power, to determine complex political questions to designers and technicians building AI systems. One alternative perspective on the impact of AI systems that they address is the organizational view. Specifically, they identify the need to consider what impacts the adoption of extensive AI systems will have on public institutions: if they begin to rely on digitization and automation helmed by large private companies, in what ways will their resources and capacities be shifted, and what would this kind of interdependence mean for public–private relationships?

Bias and auditing

In algorithmic decision-making, auditing involves using experimental approaches to investigate potential discrimination by controlling factors that may influence decision outcomes (Romei & Ruggieri, 2014). Given the application scope of these systems, proposed audits span various domains, including algorithmic recruitment (Kazim et al., 2021), online housing markets (Asplund et al., 2020), resource allocation systems (Coston et al., 2021), and more general processes related to the design (Katell et al., 2019) and vision of these systems as socio-technical processes (Cobbe et al., 2021). Auditors play a crucial role in ensuring algorithmic accountability. Consequently, audits involve multiple stakeholders, from product developers, government, policy makers, and data owners to broader groups in society, such as advocacy organizations and institutional operators (Wieringa, 2020). Ultimately, audits are evaluations designed to hold stakeholders accountable. Algorithm auditing (Koshiyama et al., 2021), and specifically AI auditing (Mökander, 2023), is a concept coined to promote the development of auditing frameworks in research and in practice. Moving beyond a case-by-case basis, audits should establish formal assurance that algorithms are legal, ethical, and safe by informing on governance and compliance with regulations and standards. Notably, the Information Commissioner’s Office (ICO) of the United Kingdom has developed such a framework for auditing AI systems in the public and private sectors [Footnote 16]. These investigations assess how these entities process personal information and effectively deal with information rights issues. In this capacity, an audit will involve a thorough evaluation of an organisation’s procedures, processes, records, and activities. We see in this example how audits are crucial in addressing issues of bias and discrimination, specifically by ensuring the existence of adequate policies and procedures, verifying compliance with them, testing the adequacy of controls, detecting existing or potential violations, and recommending necessary changes to controls, policies and procedures.

Living with bias by documenting it

An emerging scholarship advocates for the development of documentation practices and accompanying artefacts that enhance AI audit pipelines (Gebru et al., 2021; Raji & Yang, 2019; Stoyanovich et al., 2022; Raji et al., 2020), thus enabling stakeholders to easily inspect all the actions performed across the many steps of the pipeline. This also contributes to increasing trust in the development processes and the systems themselves. The AI community does not have standardized methods to produce documentation on datasets and models, nor are there any specific regulatory frameworks that enforce this practice at the time of writing; however, pioneering work in this area argues that “drawing on values-sensitive practices can only bring about improvements in engineering and scientific outcomes” (Bender & Friedman, 2018). Further, Gebru et al. (2021) argue that documentation promotes communication between “dataset consumers and producers”. Existing documentation frameworks include: Datasheets for Datasets (Gebru et al., 2021), Dataset Nutrition Labels (Chmielinski et al., 2022), Data Statements (Bender & Friedman, 2018), Data Readiness Report (Afzal et al., 2021), and Model Cards for Models (Mitchell et al., 2019). Formal data models, like ontologies and controlled vocabularies, can also support AI-related documentation needs. Examples of relevant vocabularies include: the Data Catalog Vocabulary (DCAT [Footnote 17]), the provenance ontology (PROV-O [Footnote 18]), and the Machine Learning Schema ontology (MLS [Footnote 19]). Lastly, Miceli et al. (2022b) propose a shift in perspective, from documenting datasets to documenting data production processes in order to account for the intensive and precarious human labour involved in the production of datasets.
More recently, the urgent call for data stewardship (Peng et al., 2021) and responsible data management practices (Stoyanovich et al., 2022) has also seen the emergence of new professional roles (Rismani & Moon, 2023).

Lessons from the NoBIAS project

The Bias Management Layer in the NoBIAS architecture of Fig. 1 aims at achieving three main research objectives: understanding bias, mitigating bias, and accounting for bias in AI-based systems. An orthogonal Legal Layer provides the necessary legal grounds, with regard to the EU context, supporting the research objectives. In this section, we discuss policy advice and best practices resulting from the execution of the NoBIAS research. The section is organized according to the NoBIAS architecture.

Legal challenges of bias in AI

After framing the EU legal context of AI biases, we discuss how to overcome the hegemonic theory of fair-AI beyond fairness metrics by moving towards transparency and accountability of AI systems. Finally, we consider the synergies and frictions between non-discrimination and data protection law in the specific case of EU legislation. A summary of the challenges, policy advice, and best practices in the Legal Layer is reported in Fig. 2, together with a reference to the subsection(s) where they are discussed.

AI biases, discrimination and unfairness

Anti-discrimination legal cases, targeted and strategically litigated, are traditionally based on causal connections between the protected group, the questioned provisions, and the discriminatory situation or unfair treatment (Foster, 2004). However, AI systems challenge that initial, intuitive causal basis by operating through correlations that do not provide causal explanations for the connections between the input data and the target variable (see Bathaee (2018) and also later Sect. “Bias as a causal-thing”). AI systems operate in such a complex manner that they defy human understanding, leaving the potential victim unaware of the extent to which they have been discriminated against and disadvantaged. Establishing a case of AI discrimination is undoubtedly difficult, as seen in the following brief analysis. Firstly, the potential claimants may not be aware of their disadvantage, and the information required to prove such algorithmic discrimination may be difficult to discover, gather, or access (Wachter et al., 2021b). Secondly, anti-discrimination law protects on the grounds of protected attributes; however, the sources of algorithmic discrimination and the individuals and groups affected by it may not be straightforwardly correlated with those attributes (Zuiderveen Borgesius, 2020). Protected groups may be treated in a biased or unfair way, but the use of proxies can conceal such treatment, as the features of the model would not directly reveal the use of any sensitive attribute. A related challenge arises from the limited personal scope of EU non-discrimination law, restricted by an exhaustive list of protected grounds. By utilizing proxies, i.e., “neutral” variables closely correlated with the protected ones, the use of AI systems poses a significant risk of circumventing the scope of legal protection (often referred to as proxy discrimination; European Commission et al., 2021; Zuiderveen Borgesius, 2020).
The way AI systems operate reinforces an existing challenge in EU equality protection, that of intersectional discrimination, arising when discriminatory effects occur at the intersection of two or more vectors of disadvantage. While concepts of intersectionality have been advanced by legal scholarship, the Court of Justice of the EU has so far failed to explicitly recognize intersectional discrimination as a special type of discrimination (Xenidis, 2018; Roy et al., 2023; European Court of Justice, 2016), creating a potential gateway for algorithmic discrimination within the realm of EU non-discrimination law. Thirdly, the current legal procedure to establish a case of discrimination may also limit the ability to bring and present a case of algorithmic discrimination effectively (Wachter et al., 2021b). Furthermore, what makes an algorithm biased and its outcomes unfair is the subject of a contested debate (Rovatsos et al., 2019; Barocas & Selbst, 2016; Jacobs, 2021; Wachter et al., 2021a). Fairness is an essentially contested concept, as it is context-dependent and subject to conflicting ethical, political, and cultural understandings. Still, fairness needs to be mathematically defined to build fair-AI systems, leaving unsolved the question of which values need to be operationalized into variables. For this reason, the fair-AI literature mainly derives its fairness constructs from a legal context where a process or decision is considered fair if it does not discriminate against people based on their membership of a protected group (Tolan, 2019; Mehrabi et al., 2021; Romei & Ruggieri, 2014). Fairness can be understood as equality or as equity, which are different concepts (Minow, 2021), so the instruments and ways to achieve and ensure the goals of each differ considerably.
Fairness, in essence, can be understood in different manners depending on its nature, formal or substantive; the context it applies to, legal or technical; or the actor it refers to, public or private. Selecting the appropriate principles and operationalizing the preferred construct requires understanding how people assess fairness and questioning whose perceptions should be captured or discarded (Binns, 2018b).

AI fairness beyond metrics: transparency and accountability of AI systems

Carey and Wu (2023) and Weinberg (2022) survey the existing critiques of the hegemonic theory of fairness that draw from non-computing disciplines, including philosophy, law, critical race and ethnic studies, and feminist studies. The hegemonic (i.e., dominant) theory of fairness in the ML community frames the fairness problem “in terms of a domain-general procedural or statistical guideline [...] so long as the chosen fairness criteria are satisfied, the resulting procedures and outcomes of the system are necessarily fair” (Green & Hu, 2018). Beyond those critiques, the opaqueness of AI systems and their potential to impact individuals’ lives are frequently described as the main motivations to demand disclosures of information and provision of explanations about their internal processes and final outcomes, understanding these requirements as necessary to ensure effective governance of the AI context (Almada, 2021) and to allow applicants to make cases of discrimination (Xenidis and Senden, 2020). On the one hand, algorithms are considered powerful procedures that create “a growing need to evaluate the claims, decisions, actions, and policies that are being made on the bases of them. This evaluation requires gauging the reasons for an algorithmic decision, its components, and the weight assigned to them” (Vedder & Naudts, 2017), in short, requiring AI accountability. On the other hand, the “individual adversely affected by a predictive process has the right to understand why and frames this in familiar terms of autonomy and respect as a human being” (Edwards & Veale, 2017), in short, requiring AI transparency.

An extensive review of algorithmic accountability is provided by Wieringa (2020), while Percy et al. (2021) bring to life the notion of AI accountability in industry work programs, aiming to implement industry-specific technical requirements. Algorithmic impact assessments are accountability governance practices rendering visible the (possible) harms caused by algorithmic systems (Metcalf et al., 2021). Reviewability, introduced by Cobbe et al. (2021), is a way to break down the algorithmic decision-making process into technical and organisational elements which help in determining the contextually appropriate record-keeping mechanisms to facilitate meaningful review both of individual decisions and of the process as a whole. The design of interpretable AI models and the development of methods to explain black box models fall within the area of eXplainable AI (XAI) (Guidotti et al., 2019; Minh et al., 2022). Such techniques respond to a societal desire to understand the obscure systems that can greatly affect our lives when allocating services or granting and denying rights. Transparency and information obligations make it possible to publicly assess the commitment to, and compliance of AI systems with, legal principles such as fairness, lawfulness, or information privacy, improving the legitimacy and acceptance of their use by the individuals ultimately affected by them, and supporting the contestability of their outcomes (Henin & Métayer, 2022). However, in most situations where there are obligations to provide information and explanations about automated decision-making systems, the context is adversarial, and the interests of the parties involved are, if not opposite, different (Bordt et al., 2022).
The interests of the users and providers of AI systems and those of the persons affected by them are opposed to the extent that the former will want to address their transparency and information obligations in a way that ensures compliance but does not harm their private interests, whilst the person subjected to the AI systems will expect a level of compliance that is sufficiently rigorous to enable an effective exercise of her rights and protect her interests and freedoms. Consequently, the interests to be protected or respected will largely condition the method of explanation and the information and explanations expected to be received (see also later Sect. “The need for trustworthy AI, and XAI in particular”).

EU data protection law and non-discrimination law

The uptake of (fair-)AI has brought two distinct EU legal regimes to the forefront: data protection law and non-discrimination law. As data-driven technology, AI relies today on the processing of big volumes of data, which often relate to identified or identifiable individuals. This processing brings the development and deployment of many AI systems directly under the scope of the GDPR (European Parliament and Council of the European Union, 2016). On the other hand, due to the issue of bias, AI applications have the potential to infringe upon non-discrimination rights and interfere with existing non-discrimination regulations. Considering that both data protection and non-discrimination rights constitute fundamental rights that are as such equally protected in EU primary (art. 8 and 21 of the Charter of Fundamental Rights (European Union, 2000)) and secondary law (GDPR and EU non-discrimination directives (Council of the European Union, 2000a; European Parliament and Council of the European Union, 2006; Council of the European Union, 2000b, 2004)), mapping aspects of their intersection becomes highly relevant. We refer to Gellert et al. (2013) for a comparative analysis of the two. Here, we highlight a few relevant synergies and frictions.

Since the emergence of the AI bias discourse, EU legal scholars have approached the existing non-discrimination and data protection legal frameworks in an integrated way in order to deal with the challenges of AI in the digital age (Zuiderveen Borgesius, 2020; Hacker, 2018; European Parliament et al., 2022). Confronted with the novel challenges of algorithmic bias, commentators have mainly sought recourse to the GDPR, as a means to compensate for enforcement deficiencies of the EU non-discrimination legal apparatus. Tools such as individual access rights (Article 15 (1)), data protection audits (Article 58 (1) (b)), Data Protection Impact Assessments (Article 35 et seq.) and the principle of “fairness” (art. 5 (1) (a)), along with the provision of administrative fines for violation of associated obligations (art. 83), are among those highlighted for their potential to fight against AI bias and support the protection of non-discrimination rights. However, recourse to data protection law cannot forever be a panacea for the challenges of AI discrimination. Not only is the GDPR not ratione materiae primarily concerned with the right to non-discrimination, but it is also de facto considerably ineffective in achieving this goal (Zuiderveen Borgesius, 2020; European Parliament et al., 2022). It is important that EU and national legislature and judiciary engage with the limitations of existing non-discrimination frameworks and the nuances of AI application in order to consider tailored legislative amendments or interpretative approaches. Specific recommendations or guidelines by relevant independent bodies such as the European Data Protection Board (EDPB) that adapt the application of existing legislation to the specificities of AI technologies will particularly serve this effort. Striking the right balance between legal certainty and agile application across different domains, Member States and technological developments represents a key challenge in this undertaking.
See Gerards and Zuiderveen Borgesius (2022); European Parliament et al. (2022); Xenidis (2020) for suggestions on different legislative and interpretative approaches in the context of fair-AI.

The fair-AI ecosystem may bring about a clash between the objectives of data protection and non-discrimination legislation, as debiasing approaches may interfere with well-established data protection rights and principles (Veale & Binns, 2017). First of all, the lack of representative training datasets has been consistently described as one of the sources of AI bias (Barocas & Selbst, 2016; Buolamwini & Gebru, 2018; Ntoutsi et al., 2020) (see also Sect. “Understanding bias”). This line of reasoning has been adopted by the proposed AI Act (European Commission, 2021). Specifically, art. 10 para 3 mandates that providers of high-risk AI systems shall ensure representative training, validation and testing data sets, as part of the prescribed data governance practices. It is thus conceivable that such legislative calls might risk motivating an increasing collection of personal data particularly from data subjects that belong to hitherto underrepresented groups, who are often the most vulnerable in terms of data protection. Furthermore, fair-AI frameworks centered around bias detection, monitoring, and correction often imply the processing of data on characteristics protected by the EU non-discrimination law. This often corresponds to the collection and/or the processing of special categories of personal data (hereafter sensitive data), despite the fact that they are, as such, extensively protected by the GDPR (European Parliament and Council of the European Union, 2016). Moreover, special attention must be given to the way that bias mitigation approaches, and particularly the modification of training data through pre-processing (see Sect. “Fair-AI methods and resources”), may interfere, or at least may introduce a layer of complexity, with GDPR principles such as the principle of “accuracy” outlined in Article 5(1) (d) of GDPR.

Since the practice of removing or ignoring sensitive attributes has been shown to be ineffective in tackling the issue of AI bias (Barocas et al., 2019; Zliobaite and Custers, 2016; Haeri & Zweig, 2020), data scarcity due to regulatory constraints is essentially seen as a hurdle to the realisation of fair-AI. There is an effort in the European Parliament’s negotiated version of the AI Act to minimize and circumscribe the width of this obligation, by requiring “sufficiently representative” (sic) training datasets. However, this choice can also be seen critically as compromising and relativizing the obligation of AI providers to engage with representation biases. As the notion of “sufficiency” is not legally defined, and until specific standards or guidelines elaborate on the matter, it is at the discretion of AI providers to weigh up their datasets against the “sufficiency” scale, considering the application and the context at hand. A level of legal uncertainty arises in that regard.

The proposed AI Act comes to mediate this tension and opens up the possibility of processing sensitive personal data for the case of bias monitoring, detection and correction in high-risk AI systems [art. 10 (5)]. This possibility comes together with various requirements, intended to ensure a balance between the right to data protection and non-discrimination and prevent an excessive processing of sensitive data in the name of debiasing. However, once again these requirements entail indefinite legal concepts (e.g. “necessity”), with no existing guidance on the way they shall be operationalized in the context of fair-AI. Entrusting the lawful interpretation and implementation of fundamental requirements to the discretion of AI providers entails the risk of a purposeful and inconsistent legal application to the detriment of the right to data protection. In addition, infringements upon provisions of the GDPR or the AI Act might result in severe financial penalties (art. 83, 84 GDPR, art. 71 AI Act).

The tensions between different regulatory tools and the abundance of vague binding textual requirements thus generate a great level of legal uncertainty for all bodies concerned, which explains the urgent need for adequate guidance. Considering the novelty, the fast-evolving nature and the complexity of different debiasing approaches, the desired guidance requires targeted research efforts. Rather than focusing solely on non-discrimination desiderata and sustaining an adversarial conceptualisation of “fairness” vs “privacy”, it is important that interdisciplinary research and good practices on fair-AI transition to a more integrated model. This model should account for the deep intertwinement between data protection and non-discrimination legal regimes and seek to enhance privacy while engaging in debiasing.

Understanding bias

Bias in data is not as clear-cut as it is often presented. What we mean by bias, what we consider its sources, and what we view as its materialization are all, among others, complex questions with considerable implications for policies addressing unfair AI models. In this section, we present different angles to better understand and be critical about bias(es) in data. First, we argue for understanding biases, not bias, as a multifaceted issue. Then, we criticize the AI assumption of ground truth, call for source criticism and archival practices, and discuss the issue of reliable data annotation. Finally, we argue for approaches specific to data types and domain types. A summary of the challenges, policy advice, and best practices in understanding bias is reported in Fig. 3.

Understanding biases, not bias

Bias is primarily understood as a difference between what is seen as “truth” or “fact” and the respective results of an algorithmic function (a prediction or a representation). Such definitions of bias have in common that they relate neither to the harmful and discriminatory impact of statistical errors nor to the underlying social conditions leading to bias. Recent research, not least in Computer Science, has therefore elaborated on how bias is also entangled with social and historical prejudice and discrimination. For instance, the term “gender bias” refers not only to a statistical error but also to the algorithmic amplification of already existing discrimination against women and LGBTIQ* persons, as in the case of the Austrian public employment service algorithm (Lopez, 2019). Further studies grounded in Social Science and Science and Technology Studies have explored the “empirical grounded accounts of practices” (Jaton, 2020) of Computer Science, folded into algorithmic bias and fairness. These contributions have in common that they approach bias not as a statistical error in the predictive performance of an algorithm, but as socio-technical, procedural and constitutive to algorithms (Jaton, 2020; Ziewitz, 2016; Draude et al., 2019; Seaver, 2017).

We think it is crucial to acknowledge that there is not just a singular bias, but rather a multitude of biases, having different (social, technical and socio-technical) roots and exerting distinct effects when employed. In the realm of policy-oriented research, a suggested approach is to “study up” (Nader, 1972) and embrace a framework that considers power dynamics, rather than solely focusing on identifying (singular) bias(es) (Miceli et al., 2022a). By doing so, understanding biases can even inform policy-making as it acts as a synecdoche for structural inequalities that persist in society.

The ground truth is biased

AI models are trained on historical data to accomplish a certain task, e.g., to predict recidivism of defendants. The data used for training is assumed to encode the ground “truth” of the task, e.g., the actual outcome of recidivism for each defendant had the defendant been released. In most cases, collecting the ground “truth” is difficult, expensive, or even unethical, as it would require obtaining counterfactual outcomes, such as releasing potential criminals, not treating sick patients, etc. (Tal, 2023). In the analysis of the COMPAS algorithm, for example, ground truth was approximated by the actual re-arrest outcome of defendants in the two-year period after they were scored. First, due to the unobservability of crime, re-arrest does not coincide with re-offense (Bao et al., 2021), which is the recidivism outcome intended to be predicted. Second, we do not know whether or not defendants who were not released would have recidivated had they been released. Similarly, we do not know whether an applicant with denied credit would have repaid the credit if granted, a sample selection bias problem tackled by reject inference in credit scoring (Ehrhardt et al., 2021). An idea close to reject inference has been considered by Ji et al. (2020) for group fairness. Such sampling bias in collected ground truth has been called negative legacy unfairness (Kamishima et al., 2012), or the selective label problem (Lakkaraju et al., 2017), and it is an instance of data missingness (Goel et al., 2021). Recognizing that ground truth in collected data is biased helps to resolve the illusory tension between fairness and accuracy (Wick et al., 2019). In NLP, the ground truth is obtained by human annotation, typically aggregating annotators’ labels through majority voting. Here, the simplifying assumption of a single ground truth is used.
A perspectivist approach is emerging in favor of granting significance to divergent opinions, by designing methods over non-aggregated data (Cabitza et al., 2023). Uncertainty and inconsistency in expert annotations have also been pointed out in the domain of healthcare (Lebovitz et al., 2021; Sylolypavan et al., 2023). In the absence of unbiased ground truth, however, practitioners train AI classification models by setting the target feature using historical data. Any bias in the historical data risks being carried over to the AI model with a false claim of fairness. Looking at other disciplines, Zajko (2022) points out that AI students are untrained and unprepared for the reality of an unfair society. We support the author’s claim that “AI developers refer to the reality that exists outside of their models as the ‘ground truth’, and bias is often defined as deviations from this truth, or inaccurate representations and predictions. But when the truth is that society is deeply, structurally unjust and unequal, and that technologies are part of these structures, the question is whether our algorithms should accurately reproduce inequality or work to change it”.
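The selective label problem discussed in this section can be made concrete with a minimal sketch. The toy population, the group names, and the `would_repay` field are hypothetical and serve only as an illustration: in practice, the counterfactual outcome of rejected applicants is exactly what cannot be observed.

```python
# Hypothetical toy population of credit applicants (illustrative data only).
# Each record: (group, approved_by_past_policy, would_repay).
# `would_repay` is the counterfactual ground truth; in reality it is
# observable only for applicants who were actually approved.
applicants = [
    ("A", True, True), ("A", True, True), ("A", True, False), ("A", False, True),
    ("B", True, True), ("B", False, True), ("B", False, True), ("B", False, False),
]


def repay_rate(rows):
    """Share of records whose repayment outcome is positive."""
    return sum(1 for _, _, repaid in rows if repaid) / len(rows)


def of_group(rows, group):
    """Records belonging to the given group."""
    return [r for r in rows if r[0] == group]


# Labels exist only for applicants approved by the historical policy.
observed = [r for r in applicants if r[1]]

true_rates = {g: repay_rate(of_group(applicants, g)) for g in ("A", "B")}
observed_rates = {g: repay_rate(of_group(observed, g)) for g in ("A", "B")}
label_coverage = {
    g: len(of_group(observed, g)) / len(of_group(applicants, g)) for g in ("A", "B")
}

print("true repayment rates:    ", true_rates)
print("observed repayment rates:", observed_rates)
print("share of labelled cases: ", label_coverage)
```

In this toy data both groups repay at the same true rate, yet the labelled subset suggests different rates, and group B is labelled for only a quarter of its members. A model trained on the observed labels would inherit the past approval policy rather than the underlying outcome.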

Beyond documenting bias: source criticism and archival practices

Data curation is central in Computer Science approaches to data bias management (Demartini et al., 2023; Balayn et al., 2021) and information resilience (Sadiq et al., 2022). Here, we highlight instead the issue of source criticism, which is central in historical disciplines and in the humanities, but still in its infancy in Computer Science and AI. Source criticism relates to the practice of understanding the provenance, authenticity, and completeness of sources used in scholarship (Koch and Kinder-Kurlanda, 2020). In the historical disciplines and in the humanities more generally, the practice is considered as required for assessing the validity and reliability of findings based on the source, usually a document. The adoption of source critical practices, applied to datasets, in fair-AI would allow us to give a better picture of the data being used and the individual instances it contains. Questions of provenance, which is defined as “the question of who has created it with what intention, in which institutional and socio-cultural context” (Koch and Kinder-Kurlanda, 2020), have gone particularly under-examined in AI research and development work. There is now a growing body of works examining the lack of quality, offensiveness, and un-curated nature of some of the massive datasets used for common text and image AI applications (Birhane & Prabhu, 2021; Birhane et al., 2021) as well as works attempting to identify the ‘genealogy’ of commonly used datasets and benchmarks, with a focus on understanding the norms and values embedded in them (Raji et al., 2021a; Denton et al., 2021).

Many existing datasets used in fair-AI research have minimal information available about the reasons and decisions behind their creation (Fabris et al., 2022; Quy et al., 2022), which are needed for effective source criticism. There have been recent works proposing specific implementations for ensuring that newly created datasets are both well documented and designed as suitable for their intended purposes. In this way, AI practitioners will have a better understanding of the provenance, authenticity, and completeness of the datasets that they use, and of what the implications of results drawn from them are. Hutchinson et al. (2021) present a framework for dataset creation drawn from software development best practices. This framework is intended to support transparency and accountability regarding all steps of the dataset creation life cycle, with a particular focus on the often forgotten maintenance phase. Jo and Gebru (2020) propose the creation of an interdisciplinary sub-field of dataset archiving as a way to ensure capacity for the extensive and specialized work required for responsible data creation and management. The authors explain that the existing field of archiving already has established standards and practices for responsible archival processes that can be transferred to this new sub-field.

Don’t blame the data, don’t blame the annotator

The current paradigm of AI research and development is heavily dependent on data. Consequently, and despite the extensive resources that have been allocated to research pertaining to bias detection and mitigation in datasets and AI models, the common misconception that bias originates in the data persists, especially in circles outside fair-AI research. The hyper-fixation on data as the primary source of bias can wrongfully lead to treating the negative societal impacts of ML-systems’ deployment as an oversimplified problem that can be tackled by “removing” bias from data. Instead, it is essential to reinforce the need to assess algorithmic harms through a holistic assessment that contemplates the whole of the AI pipeline throughout its entire life cycle, whilst also accounting for the societal context for its intended use (Suresh & Guttag, 2021). With this in mind, we reiterate that biases can arise at any point of the pipeline, as they are derived from the series of choices and practices that go into making these systems, and that eradicating all the biases is a near-impossible task (Olteanu et al., 2019). Suresh and Guttag (2021) propose a framework that supports the understanding of sources of harm that can be mapped to different stages across the ML life cycle, accompanied by a non-comprehensive taxonomy of biases that can be attributed to each stage. We emphasize “non-comprehensive” because, in the same way that humans are plagued by innumerable types of biases, datasets and models are also subject to this problem (Olteanu et al., 2019).

Ultimately, decoupling the AI pipeline into stages can support the careful examination of harms, and help anticipate unforeseen negative implications that these technologies can go on to have upon deployment. Moreover, assessing algorithmic harms from a holistic point of view also instils a degree of accountability in all those involved in the process of deploying them, instead of doing away with it by simply tackling bias during data pre-processing.

Another localized issue associated with the need for vast amounts of annotated data to train and validate AI-powered systems, in particular those resorting to supervised ML methods, is the attribution of data bias and, consequently, bad dataset quality, to human annotators or, by extension, data labourers (Li et al., 2023). In particular, research focused on crowdsourcing dataset annotations tends to make the case for bias in human annotations as being one of the main causes of unfairness in downstream ML tasks (Demartini et al., 2023). The reason for this can be closely intertwined with the interpretative nature of tasks such as data labelling (see also Sect. “The ground truth is biased”), where data labourers are expected to fit complex and divergent world-views into rigid categories (Lin and Jackson, 2023). However, identifying “annotator bias” as the root problem of biased datasets has lately become a contentious issue in discussing ethical practices and AI development, as it overlooks the need to acknowledge opaque dataset production processes that require an intensive amount of human labour (Footnote 20). Emerging research on this topic calls instead for considering biases in datasets as the result of “instruction bias” (Parmar et al., 2023), where bias enters the data collection process at the hand of those designing the instructions for the requested tasks (requestors). Going further than that, Miceli et al. (2022b) propose shifting the discussion away from “annotator bias” altogether, and instead towards the critical assessment of existing work practices and conditions associated with dataset production. Specifically in this context, they point to the labourers’ restricted ability to ask questions about instructions and to raise concerns about tasks, their low pay, and the elevated surveillance they are subject to. To alleviate this, Miceli et al. (2022b) and Li et al. (2023) advocate for centring data labourers’ well-being, and propose frameworks that, for starters, incorporate their input and feedback into production processes, with the aim to empower them.

Consider the data type

We have already shown how bias is an umbrella term that encompasses many different characterisations and ranges across different disciplines (e.g., Statistics, Psychology, Social Science, Science and Technology Studies, Gender Studies, etc.), as further demonstrated by the extensiveness of the projects (Footnotes 21 and 22) that try to catalogue human biases. Especially for big (non-tabular) data, there is a great number of different biases that can co-occur in the same dataset and often depend on the data type itself. In visual data, for example, framing bias is defined in Fabbrizzi et al. (2022) as “any associations or disparities that can be used to convey different messages and/or that can be traced back to the way in which the visual content has been composed”. It is clear that this definition makes sense only if we rely on further knowledge of how visual communication works (also from the very practical point of view). Furthermore, a typical example of bias in hate speech detection is that African American English (AAE) tends to be labelled as offensive (Harris et al., 2022). Outside the specific example of this case study based on Twitter data, for which the bias was due to a different use of swearing by AAE speakers, it is evident that searching for such a bias in general is not straightforward and requires a certain understanding of how languages work and of the relationships between different dialects of the same language. It is to be considered a best practice, then, to analyse data in search of bias with a clear understanding of the peculiarities of each data type. Furthermore, any policies that aim at regulating AI adequately need to be either general enough to encompass the specificity of each data type or differentiate among different data types. For example, the “horizontal” (Footnote 23) data governance approach of the AI Act w.r.t. bias in training, testing and validation datasets (art. 10 of AI Act) might raise considerable challenges in that respect.
While different types of data imply different challenges in terms of fairness and data protection, horizontal legal requirements lean arguably towards the paradigm of tabular data. This might impede their consistent application to a large number of high-risk AI systems that utilize visual data. The development of corresponding regulatory guidelines and standards tailored to different data types can increase legal certainty and enhance compliance.

Mitigating bias

Bias mitigation is a crucial aspect in the development of fair-AI models, aimed at reducing or eliminating biases that can skew outcomes and perpetuate discrimination. As mentioned in Sect. “Fair-AI methods and resources”, bias mitigation can happen at multiple stages, including data processing approaches (pre-processing), specialized fair-AI algorithms (in-processing), and model sanitization (post-processing). The effectiveness of mitigations at those stages presents some challenges. Pre-processing approaches may inadvertently remove relevant or informative data, with the risk of overgeneralizing and ignoring legitimate differences that may exist among subgroups. This is a problem shared with data processing for privacy enforcement (Shahriar et al., 2023). In-processing approaches optimize some trade-off between performance and fairness metrics. Finally, post-processing approaches may not address the root causes of biases, hence having a limited impact and potentially leading to new biases or feedback loops. In this section, we present issues that deserve specific attention by practitioners when implementing bias mitigation strategies. A summary of the challenges, policy advice, and best practices in mitigating bias is reported in Fig. 4.
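To illustrate what a pre-processing mitigation can look like in practice, the following minimal sketch assigns instance weights so that, in the weighted training data, group membership and label become statistically independent. This is a reweighing-style technique chosen here purely as an example; the function names and the toy data are assumptions and not a method prescribed by this paper.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each instance by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    return [
        (count_g[g] * count_y[y]) / (n * count_gy[(g, y)])
        for g, y in zip(groups, labels)
    ]


def positive_rate(groups, labels, group, weights=None):
    """(Weighted) share of positive labels within one group."""
    if weights is None:
        weights = [1.0] * len(labels)
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


# Hypothetical training data: protected attribute and historical label.
groups = ["f", "f", "f", "f", "m", "m", "m", "m"]
labels = [1, 0, 0, 0, 1, 1, 1, 0]

weights = reweighing_weights(groups, labels)

for g in ("f", "m"):
    raw = positive_rate(groups, labels, g)
    reweighted = positive_rate(groups, labels, g, weights)
    print(g, "raw:", raw, "reweighted:", round(reweighted, 3))
```

The weights equalize the positive rate across groups in the training distribution without deleting any record. They do not, however, address the caveat raised above: if the difference between groups is partly legitimate, the reweighted data no longer reflects it.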

Multi-stakeholder participatory design

As observed in Sect. “Don’t blame the data, don’t blame the annotator”, every technical decision, however apparently neutral, at any stage of the AI pipeline can impact the biases of the final AI system. For instance, fairness is affected by imputation of missing values (Caton et al., 2022), by encodings of categorical features (Mougan et al., 2023), by feature selection strategies (Galhotra et al., 2022), and even by hyper-parameter settings (Tizpaz-Niari et al., 2022). More importantly, the composition of data transformations and AI models that are fair in isolation may not be fair in the end (Dwork & Ilvento, 2019). Observe that this also applies to AI-based complex socio-technical systems resulting from the composition of AI, algorithms, people, and procedures (Kulynych et al., 2020). The lack of compositionality requires that the bias analysis of a socio-technical system is conducted as a whole, not piece by piece. This is also because the objectives and requirements of the designers of AI, of the users of AI, and of the population subject to the AI decisions are unlikely to be the same. Fair-AI methods are currently not sufficiently robust and they can be incomplete in modelling the complexity and dynamics of the deployment scenario. Multi-stakeholder participatory design (Feffer et al., 2023) and policy actions that take into account qualitative contextual information and feedback from reality may be a valid alternative to technological solutionism. For instance, the NoBIAS project contributed in Scott et al. (2022) to a participatory approach in the design of algorithmic systems in support of public employment services.
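The lack of compositionality can be shown with a small worked example. In the sketch below, two hypothetical screening stages each select the same share of candidates from both groups, yet a pipeline that requires passing both stages selects nobody from one group. The data and the choice of equal selection rates as the fairness criterion are illustrative assumptions.

```python
def positive_rate(decisions, groups, group):
    """Share of positive decisions within one group."""
    selected = [d for d, g in zip(decisions, groups) if g == group]
    return sum(selected) / len(selected)


# Hypothetical population: four individuals in each of two groups.
groups = ["A"] * 4 + ["B"] * 4

# Each stage, taken alone, selects half of each group.
stage1 = [1, 1, 0, 0, 1, 1, 0, 0]
stage2 = [1, 1, 0, 0, 0, 0, 1, 1]

# The composed system accepts only those who pass both stages.
combined = [a & b for a, b in zip(stage1, stage2)]

for name, decisions in (("stage1", stage1), ("stage2", stage2), ("combined", combined)):
    print(
        name,
        "A:", positive_rate(decisions, groups, "A"),
        "B:", positive_rate(decisions, groups, "B"),
    )
```

Auditing each stage separately would report no disparity, which is why the analysis has to be carried out on the outcome of the whole system.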

Prioritizing human-centric AI

In addition to the issues discussed in Sect. “Multi-stakeholder participatory design”, involving the interested communities during the whole development process of a decision-making system is also a crucial aspect for prioritizing AI systems that respond to human values, an objective known as AI alignment (Ji et al., 2023) or socially responsible AI (Cheng et al., 2021). Inclusion should go beyond the provision of “low-resource” methods (Gururangan et al., 2022), i.e., framing the under-representation of social minorities as a data scarcity problem. Instead, it should account for preventive considerations that respond to diverse human needs and preferences. This concept is the basis for human-centered AI (Mosqueira-Rey et al., 2023; Xu, 2019; Garibay et al., 2023). Active participation during the whole construction process of an AI system can be a key part of addressing the representation bias that prevails in current systems. Involving a diverse group of people has been shown to be critical in stages such as selecting the preferences that the model is instructed to follow when making decisions (Organizers Of QueerinAI et al., 2023). Such practices elucidate how systems align with values from specific social groups, which frequently reflect structural and power inequalities. Uncovering, and adjusting to, the variations in how the data captures under-represented communities can help to represent them more fairly. For example, these practices can help to build socially aware language technologies that are adept at handling different dialects (Ziems et al., 2022) (see also the AAE example in Sect. “Consider the data type”). Further examples will be considered in Sect. “The need for trustworthy AI, and XAI in particular”.

Intersectionality

Many bias mitigation techniques take as input the specification of one or more protected attributes against which bias is to be mitigated. However, different dimensions of identity cannot be understood in isolation but must be considered collectively to grasp the full complexity of individuals. A special effort must hence be made to consider the interplay of the different (protected) attributes (Ovalle et al., 2023). It is further worth noting that debiasing for one group can reduce even more the representation of already under-represented subgroups (Smirnov et al., 2021). This phenomenon, known as the debiasing paradox (Smirnov et al., 2021; Hughes, 2011), refers to situations where efforts to reduce bias against a group defined by one characteristic exacerbate the under-representation of already marginalized, or even the most marginalized, subgroups. The paradox arises when additional attributes, which may be sensitive but overlooked or disregarded, are associated with the characteristic being targeted for bias reduction. Such correlations can occur naturally in real-life scenarios. An example is the gender pay gap, which can be partially attributed to the wage penalty for motherhood (Budig & England, 2001). In this case, two attributes, namely “being a woman” and “taking care of children”, are correlated, and both can have detrimental effects on salary. Attempting to address bias solely based on gender may unintentionally disadvantage certain minority groups, such as women without caregiving responsibilities or men who do have such responsibilities. Hence, when considering mitigation strategies, side effects on different subgroups should be carefully analyzed. Beyond its legal challenges (see Sect. “AI biases, discrimination and unfairness”), intersectionality is also being actively addressed by technical research (Gohar & Cheng, 2023) and by Science and Technology Studies (van Nuenen et al., 2022).
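The need to analyze subgroups rather than single attributes can be illustrated with a small audit. In the sketch below, the records, the attribute names `gender` and `caregiver`, and the helper functions are hypothetical and chosen only to echo the example in the text; the decisions are constructed so that selection rates look balanced on each attribute taken alone while differing sharply across intersections.

```python
from collections import defaultdict


def selection_rates(records, keys):
    """Share of positive decisions for each combination of the given attributes."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        subgroup = tuple(record[key] for key in keys)
        total[subgroup] += 1
        selected[subgroup] += record["decision"]
    return {subgroup: selected[subgroup] / total[subgroup] for subgroup in total}


def make_subgroup(gender, caregiver, n_selected, n_total):
    """Build n_total hypothetical records, the first n_selected with decision 1."""
    return [
        {"gender": gender, "caregiver": caregiver, "decision": int(i < n_selected)}
        for i in range(n_total)
    ]


# Hypothetical decisions for four intersectional subgroups of equal size.
records = (
    make_subgroup("woman", True, 2, 10)
    + make_subgroup("woman", False, 8, 10)
    + make_subgroup("man", True, 8, 10)
    + make_subgroup("man", False, 2, 10)
)

by_gender = selection_rates(records, ["gender"])
by_caregiver = selection_rates(records, ["caregiver"])
by_both = selection_rates(records, ["gender", "caregiver"])
```

Here `by_gender` and `by_caregiver` report equal rates for every group, whereas `by_both` exposes subgroups selected at very different rates. An audit restricted to one protected attribute at a time would miss this disparity.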

Bias as a causal-thing

As observed in Sect. “AI biases, discrimination and unfairness”, most ML models are purely observational and rely on correlation among features. Consequently, they are not able to account for spurious effects. A principled way of tackling bias is to rely on causal reasoning (Nogueira et al., 2022; Spirtes & Zhang, 2016). The preferred causal framework used within ML is that of Pearlian causality, or Structural Causal Models (SCM) (Pearl, 2009). Under SCM, causes and effects among a set of variables are denoted using a directed acyclic graph (DAG) that, in turn, represents a set of structural equations that encode direct effects (i.e., \(X \rightarrow Y\) for attributes X and Y) rather than indirect effects (i.e., \(X \rightarrow Z \rightarrow Y\) for one or more intermediate attributes Z). Further, human thinking is often framed as causal, and causal DAGs have made it possible to formalize human reasoning in an ML-readable manner (Schölkopf et al., 2021).
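To make the distinction between the edge \(X \rightarrow Y\) and the path \(X \rightarrow Z \rightarrow Y\) concrete, the following sketch evaluates a toy linear SCM under interventions. The structural equations, the coefficient values, and the function names are assumptions chosen for illustration, and the decomposition of the total effect into a sum of two parts holds here only because the toy model is linear.

```python
# Hypothetical linear SCM over X, Z, Y with exogenous noise terms U_Z and U_Y:
#   Z := A_XZ * X + U_Z
#   Y := B_XY * X + C_ZY * Z + U_Y
A_XZ = 2.0  # strength of the edge X -> Z
B_XY = 0.5  # strength of the edge X -> Y
C_ZY = 3.0  # strength of the edge Z -> Y


def structural_z(x, u_z=0.0):
    """Structural equation for the mediator Z."""
    return A_XZ * x + u_z


def structural_y(x, z, u_y=0.0):
    """Structural equation for the outcome Y."""
    return B_XY * x + C_ZY * z + u_y


def total_effect():
    """Change in Y when X is set from 0 to 1 and Z responds to X."""
    y1 = structural_y(1.0, structural_z(1.0))
    y0 = structural_y(0.0, structural_z(0.0))
    return y1 - y0


def direct_effect():
    """Change in Y when X is set from 0 to 1 while Z is held at its X=0 value."""
    z0 = structural_z(0.0)
    return structural_y(1.0, z0) - structural_y(0.0, z0)


def indirect_effect():
    """Change in Y when only Z responds to X going from 0 to 1."""
    z0 = structural_z(0.0)
    z1 = structural_z(1.0)
    return structural_y(0.0, z1) - structural_y(0.0, z0)
```

With these coefficients, the effect along the edge is `B_XY`, the effect through the mediator is `A_XZ * C_ZY`, and their sum equals the total effect. A purely correlational model of Y on X would only see the total and could not separate the two paths.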

Causal DAGs are able to graphically represent a worldview on a given fairness context, to highlight the (structural) assumptions, and to formalize the potential bias in a dataset (Pearl & Mackenzie, 2018). Causal DAGs have motivated the rise of causal fairness metrics (Makhlouf et al., 2020; Carey & Wu, 2022), including total fairness (Zhang & Bareinboim, 2018), path-specific fairness (Zhang et al., 2017), and counterfactual fairness (Kusner et al., 2017). Compared with fairness notions based on correlation, causality-based fairness notions and methods include additional knowledge of the causal structure of the problem. This knowledge often reveals the mechanism of data generation, which helps to comprehend and interpret the influence of sensitive attributes on the output of a decision process. For example, this additional causal knowledge is often the basis for moving from testing unfairness to testing discrimination (Álvarez & Ruggieri, 2023). A common limitation is the need to define a causal DAG, which requires agreement on its existence and, in turn, on its structure. This is not a straightforward task, but it forces practitioners to state otherwise implicit assumptions about the data and encourages discussions among stakeholders (Kusner et al., 2017; Álvarez & Ruggieri, 2023).
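As an illustration of how a causal model enters such metrics, the sketch below checks a simplified, deterministic version of counterfactual fairness on a toy SCM using the usual three steps of abduction, action, and prediction. The structural equation, the constant `SHIFT`, the thresholds, and both predictors are hypothetical; the check also assumes that the structural equation is known exactly, which is the strong requirement discussed in the text.

```python
# Hypothetical SCM: a latent trait U and a binary sensitive attribute A
# jointly determine the observed feature X := U + SHIFT * A.
SHIFT = 2.0  # assumed effect of A on X


def abduct_u(x, a):
    """Abduction: recover the latent U from the observed (x, a)."""
    return x - SHIFT * a


def counterfactual_x(x, a, a_cf):
    """Action and prediction: recompute X had A been a_cf, keeping U fixed."""
    return abduct_u(x, a) + SHIFT * a_cf


def predictor_on_x(x, a):
    """Decision based on the observed feature, which carries the effect of A."""
    return 1 if x >= 3.0 else 0


def predictor_on_u(x, a):
    """Decision based on the abducted latent trait, which A does not affect."""
    return 1 if abduct_u(x, a) >= 2.0 else 0


def same_decision_in_counterfactual(predictor, x, a):
    """True if the decision is unchanged when A is flipped for this individual."""
    a_cf = 1 - a
    x_cf = counterfactual_x(x, a, a_cf)
    return predictor(x, a) == predictor(x_cf, a_cf)


# One hypothetical individual with A = 0 and X = 2.5.
unchanged_for_x = same_decision_in_counterfactual(predictor_on_x, 2.5, 0)
unchanged_for_u = same_decision_in_counterfactual(predictor_on_u, 2.5, 0)
```

For this individual, the predictor that uses X changes its decision in the counterfactual world, while the predictor that uses only the abducted U does not. If stakeholders disagreed on the structural equation for X, the outcome of the check would change accordingly.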

Overall, while approaches for causal discovery from data can be adopted, specifically in the context of fairness (Binkyte-Sadauskiene et al., 2022), they definitely need to be complemented with domain expert knowledge, although with no guarantee of a unanimous agreement among experts (Rahmattalabi & Xiang, 2022). Moreover, a number of assumptions are typically made which might not be met in practice, such as sufficiency (all causes are known) and faithfulness (the graph completely characterizes the conditional independences among features) (Spirtes et al., 2000). Further, causal fairness metrics may suffer from the identifiability problem (Makhlouf et al., 2022), namely the impossibility of computing them from observational data only. Finally, the use of causal DAGs in fairness has not been free of criticism (e.g., Kasirzadeh & Smart, 2021). Arguments against the manipulability of the sensitive features, e.g., race, in counterfactual reasoning have been raised (Kohler-Hausmann, 2019; Hu & Kohler-Hausmann, 2020). These works argue that it is difficult, if not impossible, to disentangle the causal effects of the sensitive attributes on and from the other attributes in a meaningful way.

Knowledge-informed AI models

Relying exclusively on raw data for a given task is often not sufficient. Primarily, models trained on raw data fail to capture the nuances found in the less-represented segments of the data distribution (Mallen et al., 2023), which often correspond to underprivileged communities. While using external knowledge sources to compensate for these inequalities holds promise (Lobo et al., 2023), this objective is not central to current knowledge-informed approaches. Typically, external sources support the so-called knowledge-intensive tasks, which are those tasks requiring a significant amount of real-world knowledge (e.g., fact verification) (Petroni et al., 2021). External knowledge sources are then used to update the model, provide higher interpretability, and enhance the reliability of its predictions (Asai et al., 2023). Other applications where informing predictions can be useful combine several sources to enhance the generalizability of a model (Chiril et al., 2022), in particular by leveraging data to improve performance outside the training distribution for a specific AI task. On issues closely related to discrimination, the integration of additional data and knowledge sources is gaining presence in the development of socially aware ML models (Wiegand et al., 2022). Such models are tailored to fill the gaps affecting individuals or groups who have limited access to technology or who experience discriminatory representation, and to frame AI systems within the specific social contexts in which they are applied.

The non-i.i.d. case: bias in unsupervised learning and graph-mining

The majority of traditional fair-AI metrics and methods are developed based on the independent and identically distributed (i.i.d.) data assumption: every instance in a dataset is drawn independently from the same statistical distribution. However, many real-world problems include graph-structured (network) data reflecting the connections between subjects, and such connections are neither independent nor random; for instance, people connect due to similarity, local proximity, or common interests (Aiello et al., 2012). Studies centered on i.i.d. data are unable to reflect the bias exhibited by the relational information (i.e., the topology) in graph data. Fairness in graph mining can be non-trivial, and it has its own background, taxonomies, and techniques. Overview papers by Dong et al. (2023), Chhabra et al. (2021), and Choudhary et al. (2022) categorize a few of the current challenges and urgent needs in the field, with which we agree. They include: (1) formulating (individual and group) fairness notions according to different types of biases and corresponding harms; (2) balancing model utility and algorithmic fairness; (3) explaining bias in graph-based methods; and (4) enhancing the robustness of algorithms, especially in cases of biased human annotations or malicious attacks. Harms of bias in the context of graphs, and in particular social networks, may go beyond discrimination, and include segregation (Baroni & Ruggieri, 2018; Ferrara et al., 2022), polarization (Tölle & Trier, 2023), filter bubbles (Pariser, 2011), and censorship (Aceto & Pescapè, 2015). We see an urgent need for expanding fair-AI research on the non-i.i.d. cases in the future.
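A simple way to see that connections in a network are not random with respect to group membership is to compare the observed share of same-group edges with the share expected if pairs of nodes were linked regardless of group. The sketch below is only a diagnostic of this kind, not a fairness metric from the cited overviews; the toy graph, the group labels, and the function names are hypothetical.

```python
from collections import Counter


def same_group_edge_share(edges, group_of):
    """Fraction of edges whose two endpoints belong to the same group."""
    same = sum(1 for u, v in edges if group_of[u] == group_of[v])
    return same / len(edges)


def expected_share_if_random(group_of):
    """Probability that a uniformly random pair of distinct nodes shares a group."""
    n = len(group_of)
    counts = Counter(group_of.values())
    return sum(c * (c - 1) for c in counts.values()) / (n * (n - 1))


# Hypothetical undirected graph: two tightly knit groups joined by a single edge.
group_of = {0: "a", 1: "a", 2: "a", 3: "b", 4: "b", 5: "b"}
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (2, 3)]

observed = same_group_edge_share(edges, group_of)
expected = expected_share_if_random(group_of)
```

When `observed` is well above `expected`, as in this toy graph, the topology itself encodes group membership, so a model that uses the graph can pick up that information even if the protected attribute is removed from the node features.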

Accounting for bias

In this section, we consider two technical aspects of accounting for bias, which complement the legal discussion of Sect. “AI fairness beyond metrics: transparency and accountability of AI systems”: monitoring and explaining bias. We claim the need for trustworthy AI as a holistic approach beyond fairness and bias issues. We warn, however, about the limitations of the young research field of XAI. We also discuss bias issues in tasks related to monitoring, including transferring AI models from one domain to another and reproducing evaluation scenarios. A summary of the challenges, policy advice, and best practices in accounting for bias is reported in Fig. 5.

The need for trustworthy AI, and XAI in particular