Abstract

Context:

Empirical studies with human participants (e.g., controlled experiments) are established methods in Software Engineering (SE) research to understand developers’ activities or the pros and cons of a technique, tool, or practice. Various guidelines and recommendations on designing and conducting different types of empirical studies in SE exist. However, the use of financial incentives (i.e., paying participants to compensate for their effort and improve the validity of a study) is rarely mentioned

Objective:

In this article, we analyze and discuss the use of financial incentives for human-oriented SE experimentation to derive corresponding guidelines and recommendations for researchers. Specifically, we propose how to extend the current state-of-the-art and provide a better understanding of when and how to incentivize.

Method:

We captured the state-of-the-art in SE by performing a Systematic Literature Review (SLR) involving 105 publications from six conferences and five journals published in 2020 and 2021. Then, we conducted an interdisciplinary analysis based on guidelines from experimental economics and behavioral psychology, two disciplines that research and use financial incentives.

Results:

Our results show that financial incentives are sparsely used in SE experimentation, mostly as completion fees. Especially performance-based and task-related financial incentives (i.e., payoff functions) are not used, even though we identified studies for which the validity may benefit from tailored payoff functions. To tackle this issue, we contribute an overview of how experiments in SE may benefit from financial incentivisation, a guideline for deciding on their use, and 11 recommendations on how to design them.

Conclusions:

We hope that our contributions get incorporated into standards (e.g., the ACM SIGSOFT Empirical Standards), helping researchers understand whether the use of financial incentives is useful for their experiments and how to define a suitable incentivisation strategy.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Empirical studies are important in Software Engineering (SE) research (Juristo and Moreno 2001; Wohlin et al. 2012; Felderer and Travassos 2020; Shull et al. 2008), for instance, to understand the impact of a technique on developers (e.g., using novel testing tools), to investigate relations between properties (e.g., programmers’ experience and software defects), or to test theories (e.g., whether agile practices lead to faster releases). For this purpose, various empirical methods can be used, such as controlled experiments, interviews, or questionnaires. Each method comes with its own pros and cons, for example, regarding the trade-offs between internal and external validity (Siegmund et al. 2015; Petersen and Gencel 2013) or between quantitative and qualitative data elicitation (Felderer and Travassos 2020). While particularly challenging to design and conduct, experiments with human participants (Sjøberg et al. 2005; Wohlin et al. 2012; Ko et al. 2015) promise a high degree of internal validity to understand whether, how, and to what degree a property (i.e., independent variable) impacts developers (i.e., in terms of the dependent variable).

One challenge for experiments in SE is the high degree of human factors that are intertwined with software development. Most importantly, inter-individual differences between software developers need to be acknowledged, since selecting just a few participants often leads to a selection bias; meaning that the selected developers represent a specific, not representative subgroup out of all developers (Juristo and Moreno 2001; Höst et al. 2005; Wohlin et al. 2012). The findings of such studies are not generalizable. Consequently, it is crucial to involve a suitable number (i.e., in terms of the population size) of participants who, in addition, must be diverse enough to cover all aspects of inter-individual differences within the overall population. Another challenge are participants who may not complete the tasks in a realistic manner, due to a lack of motivation; even though the experimental design, data collection, and analysis have been well-designed and carefully conducted.

Financial incentives (i.e., monetary compensation) are an established means to address selection bias and motivation issues in various other disciplines, such as experimental economics or behavioral psychology. Such incentives should mimic real-world settings by reflecting developers’ situations in practice. For instance, rewarding participants a show-up fee that is derived from developers’ wages helps mitigate selection bias, because it can motivate especially higher paid developers to participate. Through payoff functions (i.e., mathematical functions relating participants’ performance to a payment, cf. Table 1) that address the motivation of developers in the experiment, real-world "motivation scenarios" can be simulated in a more controlled way. Interestingly, various guidelines for empirical SE mention the concept of incentives (Wohlin et al. 2012; Höst et al. 2005; Ralph 2021; Petersen and Wohlin 2009; Carver et al. 2010; Sjøberg et al. 2007). However, to the best of our knowledge, apart from show-up or completion fees that are paid to motivate participation, advanced task-related incentives (i.e., paying incentives based on the actual performance) are rarely employed. Even the ACM SIGSOFT Empirical StandardsFootnote 1 (Ralph 2021) mention incentives only as (as of September 26, 2022; commit 26815c6):

-

1.

desirable attribute for longitudinal studies and

-

2.

essential (recruitment) as well as desirable attribute (effect of incentives, improving response rates) for questionnaire surveys.

Note that none of these two mentions in the ACM SIGSOFT Empirical Standards refers explicitly to financial incentives. So, it seems that there is a missing awareness of how advanced financial incentives (i.e., payoff functions) can be used to increase the validity of SE experiments. Unfortunately, missing or misguided incentivisation in experiments may even hamper the validity.

1.1 Goals

With this article, we aim to provide a better understanding and recommendations for using financial incentives in human-oriented SE experimentation, including controlled experiments, quasi experiments, experimental simulations, and field experiments (Wohlin et al. 2012; Stol and Fitzgerald 2020). To this end, we built upon the experiences of other disciplines that have different perspectives on financial incentives (cf. Section 2). First, we consider research from the area of experimental economics, an area that relies on laboratory experiments to test theories on human decision-making. In experimental economics, researchers experimentally analyze human decision-making in a variety of cases, including any work-related problems across various domains (e.g., banking, human resources, health). Therefore, experimental economics research that focuses on effort and work or compares different work-related practices and the working environment are relevant for SE, too. Aiming to increase participation in their experiments and improve the validity of the obtained results, researchers in experimental economics often use financial incentives (Harrison and List 2004; Weimann and Brosig-Koch 2019; van Dijk et al. 2001; Erkal et al. 2018). Importantly, incentives in experimental economics are usually more complex than show-up or completion fees that are sometimes used in empirical SE. Besides such fees, researchers in experimental economics often define payoff functions that depend on task correctness (e.g., number of correctly identified bugs), time (e.g., decrease over time spent), or penalties (e.g., for wrongly identified bugs). Second, to reflect on the limitations of financial incentives, especially with respect to the motivation of participants, we also consider research from the area of behavioral psychology (Weber and Camerer 2006; Kirk 2013). In this area, many experiments are purposefully designed without task-related financial incentives, since such incentives interfere with intrinsic and extrinsic motivation, and thus can impact (positively as well as negatively) the external validity. By discussing the state-of-the-art on financial incentives in SE experimentation based on insights from these two disciplines, we aim to provide a detailed understanding of the concepts, benefits, and limitations of financial incentives.

1.2 Contributions

We first report the conduct and results of a Systematic Literature Review (SLR) with which we investigated the current state-of-the-art of using incentives in human-oriented SE experimentation. For this purpose, we reviewed 2,284 publications published in 2020 and 2021 at six conferences and five journals with high reputation in SE research. We analyzed 105 publications that report experimental studies with human participants, but only 48 mention some form of incentives, mostly as simple completion rewards. Then, we studied the properties of the individual studies in more detail (e.g., scopes, goals, measurements, participants) to understand whether financial incentives could have been a helpful means to improve their designs. Based on research from experimental economics, behavioral psychology, and our SLR results, we contribute a guideline to decide whether to use financial incentives in SE experiments, derive 11 recommendations to design payoff functions, and exemplify the use of both. Note that we considered the perceptions of two other disciplines (cf. Section 2) to account for the various different designs of empirical studies—and to understand potential limitations of using incentives that researchers have to keep in mind.

In more detail, we contribute the following in this article:

-

We describe how financial incentives are used in two other disciplines to introduce the core concepts, benefits, and limitations (Section 2).

-

We report an SLR with which we captured the state-of-the-art of using incentives in SE experimentation (Section 3).

-

We discuss the SLR results to understand how financial incentives can help improve the validity of SE experiments (Section 4).

-

We define a guideline (Section 5), 11 recommendations (Section 6), and exemplify their use (Section 7) to guide researchers in deciding whether to use and how to design financial incentives in an SE experiment.

-

We publish our data in an open-access repository.Footnote 2

Our contributions connect experimental methods used in two other disciplines to SE. Seeing that financial incentives are not well-understood in SE experimentation, and are sparsely used, we argue that we help to mitigate an important gap in existing guidelines for designing experiments in SE. We hope that our contributions help researchers in empirical SE understand trade-offs and design options of financial incentives, and are useful to refine and extend existing guidelines, such as the ACM SIGSOFT Empirical Standards.

1.3 Structure

The remainder of this article is structured as follows. In Section 2, we introduce concepts related to incentives based on established knowledge from experimental economics and behavioral psychology; and discuss the related work. We report the design and conduct of our SLR on incentives in SE experimentation in Section 3. In Section 4, we report and discuss the results of our SLR to provide an understanding of whether and how incentives are used in SE. Then, we build on this discussion to derive a guideline for deciding whether to use financial incentives (Section 5), concrete recommendations for designing financial incentives (Section 6), and exemplify how to use these in SE experimentation (Section 7). Finally, we discuss threats to the validity of our work in Section 8 before concluding in Section 9.

2 Incentives

Incentives can be any form of compensation for the effort participants spend during an empirical study. Typical examples are a set of vouchers that are randomly distributed among all participants of a survey or experiment (Amálio et al. 2020) and non-financial incentives, such as brain scans obtained during fMRI studies (Krueger et al. 2020). In SE research, such incentives are used to increase participation rates for surveys or experiments, and they are usually independent of the actual task performance. While designing an experiment on SE with researchers from experimental economics and behavioral psychology (Krüger et al. 2022), we experienced that particularly in the area of experimental economics financial incentives are used far more systematically. Specifically, researchers in experimental economics design payoff functions to increase the validity of experiments. A payoff function is a mapping (i.e., mathematical formula) that defines the relation between participants’ choices and their payment (e.g., rewarding correctly solved tasks, penalizing time spent to complete a task), and thus may involve any task-related payments (cf. Table 1).

In our experience, SE experimentation is not concerned with, and does not make use of, payoff functions. This personal perception motivated the research we report in this article. Note that we focus on SE experimentation (e.g., controlled experiments, quasi experiments, field experiments), since payoff functions reward task performance, which can rarely be done during other empirical SE methods that are used to elicit subjective opinions and experiences (e.g., interviews, questionnaires) or to solve a concrete practical problem (e.g., case studies, action research). Solving a concrete problem is typically connected to the participants’ own system, which represents a non-financial incentive (cf. Section 2.4). In the following, we describe financial incentives from the perspectives of experimental economics and behavioral psychology. Particularly, we build on guidelines and research in the area of experimental economics, due to its long history of using financial incentives in laboratory experiments (Harrison and List 2004; Weimann and Brosig-Koch 2019; van Dijk et al. 2001; Erkal et al. 2018). We provide an overview of the concepts related to incentivizing in Table 1. Finally, we discuss the related work.

2.1 Using Financial Incentives

The most important question when using incentives is: How do the incentives impact the behavior of participants during an experiment? Arguably, this is the most complicated aspect of financial incentives, since it is difficult to decide how to incentivize participants to perform "well" during their tasks. Note that "well" in this context refers to whether the incentives are sufficient to induce appropriate preferences, and thus how well the behavior in the laboratory mirrors the behavior in question outside the laboratory.

In his fundamental work on microeconomic systems as an experimental science, Smith (1982) establishes three major conditions for financial incentives in the laboratory that serve as a guide for designing payoff functions to improve experiments’ validity and replicability:

-

Dominance means that the incentives are strong enough to overpower other aspects that can motivate the behavior of participants. For instance, consider boredom: If participants stay in the laboratory for some time, they may start feeling bored and could start playing around. The incentives should be strong enough to avoid boredom becoming the main motivator for behavior.

-

Monotonicity implies that participants prefer to obtain more of the incentive (e.g., they prefer more money over less).

-

Salience defines that a participant’s performance in an experiment is transparently linked to the received incentive (e.g., it is clear what reward is paid for correct solutions).

Fundamentally, not much has changed since this initial description of financial incentives (Feltovich 2011). Camerer and Hogarth (1999) discuss whether and when financial incentives matter. While the impact of such incentives on individual experiments can be mixed (i.e., in some cases there are differences, in others there are none), higher incentives usually improve participants’ performance, especially for tasks that are responsive to better effort (e.g., mental arithmetic, counting certain letters in a line of text, positioning a slider at the required position). Especially in such cases, incentives should trigger a level of effort that is more similar to the level of effort a person would spend in real-world situations of interest.

In this context, it is important to distinguish different types of financial incentives and tasks, since performance-based incentives (cf. Table 1) can only influence participants’ effort within certain limits. By incentivizing outcomes in a performance-based task, participants’ effort can only be increased up to their individual maximum abilities (e.g., mental arithmetic). Obviously, participants cannot improve their abilities in a major way during a single experiment. So, Hertwig and Ortmann (2001) conclude in their review that financial incentives improve performance and, even though they do not guarantee optimal decisions, they lead to decisions that are closer to efficient outcomes (as predicted by economic models). Thus, whenever there is no limitation with respect to participants’ cognitive ability of solving a task, increasing their motivation leads to a better performance.

Analogous to such findings from economics experiments, insights on survey methods from psychology underpin the role of motivation: The psychological theory of "survey satisficing" (Krosnick 1991) describes participants’ strategy in survey situations to answer the questions with the lowest effort possible, which can result in low quality answers. Such a strategy deteriorates the validity of findings, because participants’ answers include a component that is connected to the survey only (i.e., answering questions most efficiently in the specific context of the survey). The theory discusses cognitive processes required for answering surveys and the problem of participants aiming to reduce their own cognitive effort. In a nutshell, the quality of survey responses depends on an interplay of the participants’ motivation, their ability, and the difficulty of the task at hand.

To analyze the role financial incentives could have in SE, we focus on one specific set of experiments from experimental economics: experiments involving effort. Such experiments can be designed to implement either real or chosen effort (Carpenter and Huet-Vaughn 2019). For real effort, a certain task must be exercised, which is linked to the payoff function (cf. Table 1). The pro of such a strategy is a higher external validity, or at least more mundane realism. The con of such a strategy is that some important variables (e.g., costs of effort, intrinsic motivation) are not observed. This issue can be mediated through diligent randomization of participants between treatments. Another issue with real effort is that it is difficult to calibrate the appropriate payoff function. In contrast, chosen effort means the participant chooses a level of effort that directly corresponds to certain monetary costs. Simplified, they face a specific scenario for which they have to decide how much time they would be willing to spend. For example, the chance of identifying a bug per minute is 50% and awards \({\$1}\), but the costs of searching incrementally increase from \({\$0.1}\) to \({\$1}\). Such a design offers a higher level of control at the cost of realism (Carpenter and Huet-Vaughn 2019). Note that we focus on real-effort experimental designs, since only these are concerned with participants exercising a measurable task.

2.2 Benefits of Financial Incentives

Using feasible financial incentives in experiments promises several benefits (B), for instance:

-

\(({\text {B}}_{1})\) improving the participation rate of experiments;

-

\(({\text {B}}_{2})\) improving the realism of an experiment;

-

\(({\text {B}}_{3})\) improving the motivation of participants to exercise the experimental tasks appropriately;

-

\(({\text {B}}_{4})\) reducing the variance in outcomes due to the dominance condition limiting the impact of other motivators—thus, also improving replicability (Camerer and Hogarth 1999); and, via these four,

-

\(({\text {B}}_{5})\) improving the validity (i.e., internal when tasks involve effort, external when representing the real-world).

The sizes of payoffs are typically oriented towards the real-world opportunity costs (cf. Table 1) of the participants (i.e., exhibiting similar properties in terms of fixed and variable payoffs or penalties). For example, a payoff to compensate for the time that participants have to spend to finish a task can be defined by considering the average monthly (or hourly) wages of participants in the country in which an experiment is conducted (Harrison and List 2004). Next, we discuss the benefits of improving participation \({\text {B}}_{1}\) and realism in experiments \({\text {B}}_{2}\). We do not discuss the other benefits (again), since we described how the major conditions of financial incentives, particularly dominance \({\text {B}}_{4}\), can improve the motivation of participants \({\text {B}}_{3}\) in Section 2.1, and a higher validity \({\text {B}}_{5}\) is the consequence of achieving the other four benefits.

Improving Participation The most common argument for using financial incentives is to improve participation, which can help tackle two issues. First, empirical studies require a certain minimum number of participants to ensure the validity of the obtained results (e.g., ensuring statistical power). A large body of evidence from psychology supports the assumption that financial incentives increase response rates in surveys; specifically, they seem to more than double the odds for responses (Edwards et al. 2005; David and Ware 2014), which is transferable to experiments. Second, selection biases should be mitigated. For this purpose, the laboratory conducting the experiment must have an appropriate reputation and well-defined policies to attract participants. In particular, participants who register for experiments must know a priori that they will be reimbursed for the time they spend during an experiment, independently of what the precise scope of the experiment is. The actual payment can depend on a combination of show-up fees, task-related rewards, penalties, or chance (e.g., winners-take-all tournament, lottery) to mimic different real-world scenarios (Weimann and Brosig-Koch 2019). One example in SE could be to reward or penalize the identification of correct or wrong feature locations, respectively; or to fix faulty configurations. Note that participants should not end up with a negative payment for the whole experiment.

Being aware that their participation in an experiment will be reimbursed makes the setup for participants comparable to methods for incentivizing surveys. For instance, participants are incentivized to fill out surveys to avoid selection bias. Selection bias may occur if participants mainly consist of those who are interested in the survey topic, have a positive attitude towards surveys, or score high on traits, such as openness and pro-socialness (Brüggen et al. 2011; Keusch 2015; Marcus and Schütz 2005). Also, incentives can increase response rates for participants with lower socioeconomic status or of younger age (Simmons and Wilmot 2004). While payoffs in psychology are often based on a lottery, it is established in experimental economics that all participants receive payoffs. Researchers in experimental economics further enhance the use of incentives by questioning how different types of payments (e.g., risky and task-related payments versus fixed show-up fees) can cause selection bias with respect to the risk preferences of participants (Harrison et al. 2009).

Improving Realism Another set of arguments for using financial incentives is based on a general issue of laboratory experiments. Usually, such experiments take place in an environment that differs from the targeted environment in several ways (e.g., by isolating individuals in laboratory cubicles, by using specific measurement equipment like eye-trackers, or by requiring/forbidding the use of specific tools). Consequently, while laboratory experiments excel at improving internal validity, they are usually limited in terms of external validity. Still, it is recommended to maximize the external validity of an experiment, as long as the internal validity is not harmed. Since the majority of experiments in experimental economics

-

1.

focuses on testing economic theories (e.g., game theory),

-

2.

builds on maximization assumptions (i.e., participants aim to maximize their payoff), and

-

3.

concerns problems involving money and time (or tradeable goods that can be easily converted to money),

it is reasonable to conduct experiments in a setting that is comparable to the real world (Hertwig and Ortmann 2001). So, introducing financial incentives to mirror the outside-the-lab situation (e.g., paying professionals) can increase the external validity of an experiment (Schram 2005). Similarly, SE research is heavily concerned with practical problems, and simulating the real world in experiments is an important concern. For instance, researchers may test the theory whether their new technique helps developers detect more bugs (1.) in a shorter period of time (3.), for which they can use financial incentives to motivate real-world behavior (2.).

2.3 Limitations of Financial Incentives

Even though it can be beneficial to use financial incentives in an experiment, researchers must balance these benefits against several limitations. In the following, we discuss two more pragmatic (e.g., handling costs, controlling participation) and two more fundamental (i.e., addressing habituation, scoping the payoff) limitations. We aim to help researchers understand and resolve such limitations.

Handling Costs Obviously, (financial) incentives increase the costs of an experiment, especially if they are oriented towards real hourly wages. Therefore, when designing an experiment, researchers should consider to what extent the outcome depends on the participants effort and motivation. Considering incentives in relation to the overall costs of a research project, incentives could be considered marginal. For example, a rather large laboratory experiment with about 300 participants and an average payoff per person of around \({\$20}\) will cause costs for incentives of roughly \({\$6,000}\). Nonetheless, funding incentives for an experiment can become a challenging issue, depending on the availability of project funds. In this regard, our guidelines can help researchers to plan and reason for such funding within their grant proposals.

Controlling Participation Incentives are helpful for targeting certain groups of participants and reducing sampling bias. However, besides these desired effects, incentives in online surveys can also attract participants who simply click through the survey in order to receive the payment, or even bots. Similarly, online as well as in-person experiments face the problem that participants may only be interested in receiving the payment, without concern for the actual task. Consequently, stronger control is required, especially when incentives are provided as show-up fees. Also, there are different methods (e.g., CAPTCHAs, different types of questions, plausibility checks) that can decrease the threat of bots in online settings (Aguinis et al. 2021). Lastly, a payoff function can also minimize these problems by granting lower payment for random responses—and our guidelines are intended to help researchers define such functions.

Addressing Habituation Singer et al. (1998) found that incentives can raise participants’ expectations regarding survey incentivisation in general, but it is not clear how these expectations impact participants’ behavior and the quality of their responses in future studies. At worst, habituation processes (i.e., decreases in response strength due to practice (Thompson and Spencer 1966)) could occur. For incentives, habituation means that, over time, their positive effects on the outcomes would dissipate, since participants become accustomed to receiving incentives. In the long run, this effect could increase costs without improving quality to the same extent. Fortunately, the few studies that have been conducted on habituation do not confirm such an effect (Pforr 2015). Esteves-Sorenson and Broce (2020) speculate that unmet payment expectations, which can occur if incentives are provided in one study and withdrawn in a second one, could harm output quality. Laboratories in experimental economics address unmet payment expectations and habituation by having internal quality guidelines including the size of expected payments, and by using payoff functions that put more weight on (dominating) task-related payments than show-up fees. Again, our guidelines can help researchers define such setups for their laboratories and experiments.

Scoping the Payoff Finally, there is a lack of evidence regarding what constitutes the "right" amount of incentives. For instance, findings from experimental economics indicate that the pure presence of financial incentives is rarely the motivating factor for participants, but it is rather their magnitude (Gneezy and Rustichini 2000; Parco et al. 2002; Rydval and Ortmann 2004). This issue broadly refers to the condition of dominance and the so-called crowding out of motivation. The term crowding out refers to the observation that extrinsic motivation (e.g., financial incentives) may replace intrinsic motivation, making the total effect on performance more ambiguous (Deci 1971; Frey 1997). For example, Murayama et al. (2010) illustrate on a neurological level how financial incentives can undermine intrinsic motivation when the task has intrinsic value of achieving success. This discussion also led to neurological evidence indicating that real choices activate reward regions in the brain more strongly and broadly than hypothetical choices (Kang et al. 2011; Camerer and Mobbs 2017).

In addition, ethical concerns have been raised that too large participation incentives may force participants to participate in a study, which is contrary to the principle of voluntariness (Pforr 2015). However, in most social surveys, incentives offered are not that high, and thus unlikely to inappropriately influence participants in terms of, for instance, accepting a higher risk of personal data being disclosed (Singer and Couper 2008). For surveys in behavioral psychology, studies found that financial incentives increase the response rate (and more than non-financial incentives), but they do not seem to improve the quality of responses when considering item-nonresponse (i.e., missing information/values regarding variables) as indicator (Singer and Ye 2013). So, the following question remains unanswered: What should the amount of incentives be to have a positive impact on the response quality? We intend our guidelines to help SE researchers tackle this question for their experiments.

2.4 Related Work

In the following, we provide an overview of the related work, particularly with respect to research on financial incentives in computer science and SE.

Financial versus Non-Financial Incentives The study by DellaVigna and Pope (2018) is probably the most rigorously conducted one on the impact of (non-)financial incentives on participants’ motivations. Among others, the authors conducted a large real-effort experiment with 18 treatment arms and over 9,800 participants to analyze different motivators (i.e., financial, behavioral, and psychological). DellaVigna and Pope (2018) illustrate that financial incentives work better than psychological ones. Note that the findings require caution, since they refer only to the specific context of the experiment. Still, Esteves-Sorenson and Broce (2020) obtained similar results when they reviewed over 100 studies on crowding out and conducted their own field experiment. They found that financial incentives did not lead to a crowding out of motivation for intrinsically motivated individuals. However, they state that unmet payment expectations may influence the output quality. Given the variety of tasks as well as structural differences among them (e.g., with respect to the amount of intrinsic motivation), it is necessary to provide tailored incentivisation for a specific task—especially for experiments including real effort. In this context, effort (i.e., the decision on how much effort to put in a task) refers to the way participants can earn money in an experiment.

Financial Incentives in Computer Science We have been aware of a few publications that are concerned with financial incentives in empirical SE. However, to improve our confidence that we did not miss any important studies or guidelines on financial incentives, we performed an automated search on dblpFootnote 3 and Scopus.Footnote 4 For dblp (last updated July 30, 2021), we used the search string financial incentive, which helped us identify publications in computer science that refer to those two terms in their bibliographic data (e.g., title). For Scopus (last updated September 26, 2022), we used the search string "financial incentive" AND experiment on titles, abstracts, and keywords; and excluded any subject areas that are not computer science. We obtained 50 and 26 publications, respectively, with some overlap between the two sets. These publications are concerned with topics like using (financial) incentives to motivate online reviews (Wang and Sanders 2019; Burtch et al. 2018), knowledge sharing in social networks (Kettles et al. 2017), or crowdsourcing (Ho et al. 2015; Shaw et al. 2011). Such topics are not connected to our goal (i.e., using financial incentives in experiments), or have financial incentives as an inherent property (i.e., crowdsourcing). Consequently, they are not within the scope of our study and intended guidelines, since we are interested in experiments that use financial incentives as a methodological means to simulate realism and reward cognitive effort. Still, the following seven publications are closely related to our own work.

Sjøberg et al. (2005) surveyed controlled experiments in software engineering from 1993 to 2002. While they focused on various other properties of the experiments, Sjøberg et al. (2005) also collected data on the recruitment of participants and the rewards used. Overall, the use of incentives was explicitly mentioned even fewer times compared to our SLR (23 of 113 versus 50 of 105 experiments; cf. Table 2). Similarly, only three experiments in the survey by Sjøberg et al. (2005) reported on incentivizing with money, whereas we found 30 of such experiments (i.e., money or monetary vouchers). Comparing both studies may seem to indicate that there has been a continuous raise in the use of financial incentives, but the covered venues are different and the number of experiments in SE has increased. Consequently, the picture may be skewed. Moreover, the general insights remain identical between the two reviews: financial incentives are somewhat used, but mostly to motivate participation (e.g., using completion fees) whereas payoff functions seem unused. Besides such similarities, our SLR differs considerably from the survey by Sjøberg et al. (2005) due to the focus on financial incentives and coverage of a more recent period. For this reason, the guidelines and recommendations we derive are also completely new.

Glasgow and Murphy (1992) report an experiment with a small software development team in which financial incentives have been used. They found that financial incentives can have negative impact in practice, for instance, reducing social interactions between developers or causing a feeling of injustice. This study highlights that it is challenging to implement the right financial incentives, in practice and in experiments. However, this report is rather old and the details are vague. Instead of practice, we are focusing on motivating participants during experiments, we discuss how to balance the pros and cons of financial incentives, and build on more advanced research on incentives.

Rao et al. (2020) compare different incentivisation schemes aiming to receive deep bug fixes rather than shallow ones. Their study focuses on ways of distributing financial incentives in software markets (e.g., based on what strategies, when, and to whom bug bounties are paid). However, the study is not concerned with experiments, and provides only a simulation of the defined payoff functions. So, this work is complementary to our research, in which we provide actionable contributions for SE researchers for designing experiments with human participants.

Fiore et al. (2014) report on four experiments in which they compared how regular and "surprise" financial incentives impact the participation rate in online experiments. They found that surprising participants with financial incentives yields lower participation rates compared to motivating the incentives from the beginning (i.e., in the advertisement)—but increasing the financial incentive surprisingly after following the advertisement yields even higher participation rates. The results underpin that financial incentives can help increase participation rates in SE experiments. However, we are focusing on how to improve the motivation during the experiment to improve its validity.

Grossklags (2007) discusses the use of financial incentives in experimental economics and exemplifies related studies in computer science. While we also build upon knowledge from experimental economics, there are key differences to our work: In contrast to Grossklags , we (i) systematically elicit the current state-of-the-art of using incentives in SE experimentation in Section 3; (ii) do not solely focus on experiments on economics, for which incentives are of primary concern (e.g., Grossklags (2007) exemplifies trading in electronic markets or game theory for computer networks); and (iii) derive guidelines for using financial incentives in SE experiments with human participants.

Höst et al. (2005) study non-financial incentives that are the result of the properties of the experimental object. Namely, the authors’ findings indicate that if the experimental object is an isolated artifact (e.g., a random piece of code), the validity of the experimental results relies on the participants’ will and pride to perform their task correctly. Other factors that incentivize participants may be a code of conduct that open-source developers adhere to or the possibility to improve their grading for student participants. Similarly, presenting the experimental object within an real-world setting can improve participants’ motivation. Most prominently, participants’ motivation will arguably be the highest if the experiment or field study is conducted within a software project they are working on. For example, conducting an experiment, field study, or action research on code inspection on a real system with the corresponding developers would incentivize participants, since they should be interested to remove the bugs anyway. Consequently, real-world settings are more incentivizing for participants than laboratory examples, which may feel more like a waste of time for the participants. In a related manner, we are concerned with using financial incentives to mimic real-world settings to improve participants’ motivation.

Mason and Watts (2009) discuss the relationship between incentivizing experiments conducted on Amazon Mechanical Turk and the participants’ performance. For this purpose, they discuss the results of two experiments and confirm findings from experimental economics and behavioral psychology. Namely, their results suggest that financial incentives improve the quantity of tasks performed, but not their quality; and that different forms of incentives (e.g., piece rate versus quota scheme) could significantly impact the quality. However, this work is not concerned with typical SE experimentation (i.e., the tasks were to order images and to solve a word puzzle), but how incentives may impact participants on crowd-sourcing platforms (i.e., Amazon Mechanical Turk). In contrast, we aim to provide guidelines on how to financially incentivize actual SE experiments.

3 A Review of Incentives in SE

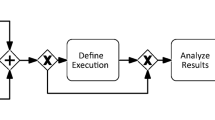

Within this section, we report our SLR on experiments and observational studies in SE, for which we followed the guidelines of Kitchenham et al. (2015). In Fig. 1, we provide an overview of our overall process. Please note that we did not review the state-of-the-art in experimental economics because financial incentives (1) are a de-facto standard in this domain; (2) included in established guidelines (Weimann and Brosig-Koch 2019); and (3) even required by many journals, like Experimental Economics.Footnote 5 Consequently, there is no need to review the state-of-the-art, since we could build on well-established references and extensive research from this domain—in contrast to SE.

3.1 Goal and Research Questions

As motivated, the main purpose of our SLR was to understand the state-of-the-art of how (financial) incentives are used in SE experiments. Besides eliciting evidence in favor of or in contrast to our subjective perception of publications and existing guidelines on SE experimentation not involving advanced financial incentives, the results should provide us with the information we needed for our interdisciplinary analysis. Particularly, the results should provide the foundation for deriving recommendations for guidelines that are feasible for SE (e.g., considering open-source developers). For this purpose, we had to understand how SE experiments are currently designed, set up, and reported. Using this information, we could identify gaps between SE and other disciplines, allowing us to construct our guidelines and recommendations for using financial incentives.

We defined two research questions to understand to what extent and in what forms financial incentives are used in SE:

-

RQ\(_{1}\) To what extent are financial incentives used in experiments? We collected the publications to understand whether and to what extent SE researchers use or discuss financial incentives. So, we can reason on the extent of awareness for financial incentives and collect typical experimental designs for our interdisciplinary discussion on whether such incentives can be useful.

-

RQ\(_{2}\) What forms of financial incentives are applied? From all experiments that employ some form of financial incentives, we elicited how exactly these are applied. So, we can understand potential benefits and limitations of financial incentives used in SE experiments.

Note that, due to our interdisciplinary analysis and terminology issues in SE (Schröter et al. 2017), experiments in our analysis include not only controlled, quasi, field, or simulation experiments; but also field and observational studies, which often resemble or actually are types of field experiments, particularly compared to other disciplines (e.g., lab-in-the-field experiments in experimental economics). So, such observational studies can exhibit similar properties in terms of motivating participants and using incentives. We involve such different types of studies to obtain a broader overview of the current state-of-the-art, and remark that typical setups for such SE studies are somewhat of a gray area considering the methods of other disciplines. For example, the differences between lab or field experiments and observational studies in SE are often blurry, since the environment and control opportunities of the lab and field are typically more alike than in other disciplines.

3.2 Search Strategy

Automated searches are problematic to conduct and replicate, due to technical issues of search engines (Kitchenham et al. 2015; Krüger et al. 2020; Shakeel et al. 2018); and our test runs resulted in many irrelevant results. For instance, the search string

"financial incentives" AND

"software engineering" AND

experiment

returned roughly 1,560 results on Google Scholar, which report on healthcare, machine learning, or motivators of software engineers—but rarely involve SE experiments. Identically, our searches on dblp and Scopus (cf. Section 2.4) returned no viable datasets. Therefore, we decided to conduct a manual search instead.

We aimed to cover a representative set of up-to-date best practices in SE experimentation. For this purpose, we decided to perform a manual search, covering the years 2020 and 2021 (to include recent publications that were available and officially published) of high-quality SE venues. Namely, we analyzed six conferences and five journals that involve empirical research:

-

Int. Conf. on Automated SE (ASE)

-

Int. Conf. on Evaluation and Assessment in SE (EASE)

-

Int. Symp. on Empirical SE and Measurement (ESEM)

-

Int. Conf. on Program Comprehension (ICPC)

-

Int. Conf. on SE (ICSE)

-

Eur. SE Conf./Symp. on the Foundations of SE (ESEC/FSE)

-

ACM Trans. on SE and Methodology

-

Empirical SE

-

IEEE Trans. on SE

-

Information and Software Technology

-

J. of Systems and Software

Note that this selection may have introduced bias, since we did consider two recent years and 11 venues only. However, if financial incentives in SE experimentation were an established concept, they should be used and reported appropriately in experiments recently published at these high-quality venues. So, we argue that this sample of publications is sufficient to obtain an overview understanding of the state-of-the-art.

Furthermore, we acknowledge that the COVID-19 pandemic could have had an impact on how financial incentives were used in experiments with human participants in 2020 and 2021. Please note that it is highly unlikely that a full experiment can be conducted, analyzed, documented, reviewed, and published within one year; and many deadlines for the venues we analyzed are in the year before the publication. As a consequence, the COVID-19 pandemic should have had almost no impact on the publications from 2020. Our results (cf. Table 3) further indicate that, in 2021, the number of experiments dropped slightly and more were conducted online (49 in 2021 versus 56 in 2020), even though we analyzed a larger number of publications (1,303 in 2021 versus 981 in 2020). This may be due to the COVID-19 pandemic preventing laboratory sessions. However, there is no apparent difference regarding the types and forms of incentives used or the ratio of publications reporting to have involved incentives, which is why we argue that the COVID-19 pandemic does not threaten our results.

3.3 Selection Criteria

We defined four inclusion criteria (ICs) for any publication:

-

\({\text {IC}}_{1}\) Reports an experiment or observational study in which the tasks have a certain solution that allows to measure a participant’s performance.

-

\({\text {IC}}_{2}\) Reports a study involving human participants.

-

\({\text {IC}}_{3}\) Has been published in the main proceedings of a conference or represents a full research article of a journal (e.g., excluding corrections, editor’s notes, retractions, and reviewer acknowledgements).

-

\({\text {IC}}_{4}\) Is written in English.

Note that we only consider experiments in which the participants’ behavior or performance is relevant (\({\text {IC}}_{1}\)), not setups in which participants simply rate the quality of an artifact to serve as a baseline for a predictive model or perform tasks only to obtain a ground truth for testing models. We added this refinement on task performance during our second data analysis, when we found several publications employing such a setup (Karras et al. 2020; Nafi et al. 2020; Paltenghi and Pradel 2021). Finally, \({\text {IC}}_{3}\) also ensures that the selected publications have been peer-reviewed.

Moreover, we defined two exclusion criteria (ECs):

-

\({\text {EC}}_{1}\) We excluded publications that report on other empirical studies, such as surveys or interviews, which involve incentives only for improving participation, not as a reward for spending cognitive effort during a task.

-

\({\text {EC}}_{2}\) For conference papers only, we excluded those that report an experiment as a sub-part of their contribution, typically to evaluate a tool.

We employed \({\text {EC}}_{2}\) to provide a better overview of current best practices. Mainly, we expected that the more restricted space that is available for a tool evaluation in a typical conference paper leads to missing details (which was confirmed when we scanned a set of these). As a consequence, we would potentially obtain a skewed perception of the SE community; not because incentives are not used, but because of missing details in technical papers. We did not employ this EC for journals, since SE journals typically do not enforce or have more relaxed page restrictions. Also, if publications that are concerned solely with an experiment do not report on (financial) incentives, we would not expect technical publications that perform an experiment only for evaluating a tool or technique to do so.

3.4 Quality Assessment

A quality assessment in an SLR aims to capture the quality of the involved publications (Kitchenham et al. 2015). For us, such an assessment would only be important if we would intend to compare the results of the experiments to understand which results are more reliable. However, we are concerned with understanding how (financial) incentives are used in SE experiments, which in itself can represent a quality criterion since financial incentives can improve an experiment’s validity. Consequently, we did not employ a quality assessment for the publications we identified during our SLR.

3.5 Data Extraction and Collection

For each selected publication, we extracted the relevant bibliographic data from dblp into a spreadsheet, which we used throughout our whole study to add, store, structure, and analyze data. To enable a sophisticated analysis regarding the use of financial incentives, we collected data on the experiments’ context and on typical criteria used in experimental economics to assess these incentives’ design, use, and quality—which connect to the three conditions dominance, monotonicity, and salience (cf. Section 2). In total, we further extracted the following data:

-

The scope and goal of the study (context).

-

The measurements used and whether statistical tests or effect sizes are reported (context).

-

The experimental design (i.e., within/between subject), number and profile (e.g., students) of participants, as well as number of treatments and consequent participants (context).

-

Whether incentives are described at all, and if so their value like a brain model or the concrete monetary amount (use of financial incentives).

-

Whether a payoff function was used (with details on differences between treatments, tasks, designs) and how it was defined, or whether the incentives represent a show-up fee, completion fee, lottery, or any other form of payoff (dominance, monotonicity, salience).

-

Whether and what fixed amount of time was allocated for a participant to perform their tasks (dominance).

-

What the hourly wage of a participant would have been (i.e., comparing earned incentives to the amount of time allocated), and whether this wage is somewhat realistic for the country in which the experiment was performed as well as the participants (dominance).

We added this data to our spreadsheet to ensure traceability and allow us to perform our interdisciplinary analysis. Note that we did not find many details on most of these entries, which is why we cannot reliably analyze and compare them (e.g., whether the payment represents the hourly wage based in the allocated time and country). Still, we required this data to provide a detailed understanding for all authors.

3.6 Conduct

Collecting Bibliographies The first two authors of this article extracted the bibliographic data of all conferences and journals from dblp into spreadsheets, resulting in a list of 2,284 publications (cf. Fig. 1). Before our analyses (divided by years), we aimed to remove all publications that do not belong to the main track of a conference or are (one page) corrections in journals by considering the information of dblp and the number of pages. Namely, we removed all conference papers with fewer than eight pages to discard, for instance, tool demonstrations, data showcases, or keynote abstracts. However, it was not always possible to clearly identify industry papers at conferences, since they can have a similar length to typical main-track papers and are sometimes insufficiently marked in the proceedings. As a consequence, the number of publications for each step in Fig. 1 may be a bit higher than the actual number of the official research publications.

Selecting Publications from 2020 For the 981 publications from 2020, the first and second author independently iterated through all publications and decided which to include based on our selection criteria (by assigning "yes," "no," or "maybe"). We compared the individual assessments to reason on the final decision of including or excluding a publication. Both authors agreed on 927 publications, while they disagreed on 54 (mostly, one author marked the publication with a "maybe," while the other stated a clear "yes" or "no"). Overall, we achieved a substantial inter-rater reliability, with a percentage agreement of 94.50 % and Cohen’s \(\kappa \) of \(\approx \)0.7 (counting every "maybe" as a "no" for \(\kappa \)). We resolved disagreements by re-iterating over the respective publications and discussing the individual reasonings. This also led to refinements regarding our selection criteria (e.g., we adopted \({\text {EC}}_{2}\) so that it covers only conferences, but not journals). In the end, we considered 60 publications to be relevant for our SLR.

Then, the first two authors split the selected publications among each other and manually extracted the data for their subsets. For this purpose, they read each publication, focusing particularly on the abstract, introduction, methodology, and threats. Furthermore, they used search functionalities to ensure that they did not miss details, for instance, by searching for the term "incentive." When in doubt about the details of a publication, the other author cross-checked the corresponding publication. In the end, the two authors performed a cross-validation of the extracted data, investigating the other author’s subset. Afterwards, the remaining authors analyzed whether the data was complete and sufficiently detailed for them to understand each experiment—leading to our refinement of \({\text {IC}}_{1}\) and the exclusion of four publications, resulting in a total of 56 publications. We remark that it is challenging to identify whether \({\text {IC}}_{1}\) applies until reading the details of a publication, which is why we also excluded some publications during the data analysis. During the validation, we added some details for individual publications, but did not find major errors. Mostly, we clarified some SE context on the experiments for the authors from experimental economics and behavioral psychology or corrected an entry (e.g., changing the number of participants).

Selecting Publications from 2021 After refining our selection criteria and obtaining a common understanding on the experiments, we continued with the 1,303 publications from 2021. For these, the second author employed the selection criteria alone. The first author validated a sample of 152 (11.67 %) publications, including all 56 marked as "yes" or "maybe" as well as 100 randomly sampled ones (overlap of four). Regarding this sample, both authors agreed on 145 publications and disagreed on seven. So, we achieved an almost perfect inter-rater reliability with a percentage agreement of 95.39 % and Cohen’s \(\kappa \) of \(\approx \)0.87 (counting every "maybe" as a "no" for \(\kappa \)). We considered 51 publications as relevant and the second author extracted the corresponding data. The first author validated the whole dataset and refined some of the entries. We excluded two more publications due to our refinement of \({\text {IC}}_{1}\) based on discussions with all authors, leading to a total of 49 publications.

Triangulating and Analyzing Finally, we analyzed the data of all 105 remaining publications (56 from 2020, 49 from 2021). We remark that several publications involved multi-method study designs, for instance, combining surveys with a later experiment. In such cases, we extracted only the data on the experimental part. We performed our interdisciplinary analysis mainly in the form of repeated discussions, which started during the design of an actual experiment (Krüger et al. 2022). After conducting our SLR, we inspected the results and compared the reporting and use of incentives to best practices and guidelines in experimental economics as well as behavioral psychology. For this purpose, we built on the expertise of the respective authors from each field as well as the established guidelines and related work that we summarized in Section 2. Specifically, the respective authors iterated through the data, took notes, and investigated different experimental designs based on the actual papers to understand how experiments are designed in SE and outcompared those to their fields. Then, we continuously discussed their impressions, the motivations of software engineers, and the context of SE experiments (e.g., considering open-source developers’ motivations) to understand differences between the fields. Primarily, we discussed the general results of our SLR, which we present in Section 4. Building on more than 30 hours of discussions, individual analyses, and synthesis, we then derived and iteratively refined our guideline and recommendations in this article. At this point, we particularly considered established guidelines from experimental economics (cf. Section 2) and adapted these to the specifics of SE.

Informing the Design of Guidelines (G) and Recommendations (R) The data (cf. Section 3.5) we collected during the SLR (e.g., prevalence and type of financial incentives, background and number of participants) is based on criteria from experimental economics and psychology. This data is used to investigate whether an experiment correctly applied financial incentives and correctly disclosed the application of these incentives. Both aspects are important to facilitate replications of experiments, and thus to increase the quality of experiments. If our SLR indicated failures in correctly applying financial incentives and disclosing them, this justifies the need for defining precise recommendations customized for SE. Additionally, besides capturing whether (\({\text {RQ}}_{1}\)) and what (\({\text {RQ}}_{2}\)) financial incentives are used in SE experimentation, our SLR was also the basis to investigate whether financial incentives should be used in SE. Doing so enables us to not merely copy guidelines from other disciplines, but to develop SE-specific guidelines and recommendations. To achieve this, we first identified the relevant types of studies (i.e., \({\text {IC}}_{1}\), \({\text {IC}}_{2}\)) that are related to those used in experimental economics and psychology. This was important, because some SE experiments did not involve measurements related to participants’ performance. However, the prevalence of financial incentives is especially important for experiments where performance plays a role. For instance, this resulted in Q\(_{1}\) in our guidelines.

We further extracted the selected data to inform our interdisciplinary analysis. Concretely, the measurements helped us distinguish again whether and what parts of an experiment were connected to performance or not (e.g., \({\text {R}}_{7}\)). The experimental design and population are important because these can impact the design of payoff functions (e.g., \({\text {R}}_{1}\), \({\text {R}}_{2}\), \({\text {R}}_{5}\)) and also reveal specialized populations that require different means. For example, open-source developers that work for free are somewhat known in experimental economics, but rarely studied. Reflecting on their motivations from the lens of psychology helped us understand how to translate these into financial incentives (e.g., Q\(_{4a}\)–Q\(_{4c}\), \({\text {R}}_{7}\)). The question what incentives are used and for which experimental designs is important for our analysis to understand how financial incentives could be employed altogether. Overall, the criteria we used to extract data for the SLR are based on studies from experimental economics and psychology, and should help us judge what properties to consider and adapt in what form. As a concrete example, we identified that some experiments relied on course credits, which are discouraged in experimental economics for their lack of comparability and replicability.

Identically, through discussing and analyzing our data, we obtained further general insights (cf. Section 4.3) that were important for our guidelines and recommendations. For instance, one findings was that SE experiments do not document the use of (financial) incentives well. Therefore, we stressed documentation as an important recommendation for SE experimentation to improve their replicability and comparability (e.g., \({\text {R}}_{10}\), \({\text {R}}_{11}\)). Also, we discussed that various specific populations are participating in SE experiments. From the point of experimental economics and psychology, the smaller sample sizes caused concerns over the statistical validity and generalizability of results. Consequently, we also incorporated such specifics in our guidelines (e.g., Q\(_{7}\), Q\(_{8}\), \({\text {R}}_{3}\), \({\text {R}}_{9}\)) and recommendations. Again, we argue that the general insights we obtained and discuss clearly support our goal of developing SE-specific guidelines and recommendations for using financial incentives during experiments.

4 Results and Discussion

In Section 2, we described financial incentives from the perspectives of experimental economics and behavioral psychology. Within this section, we describe and discuss the findings from our SLR based on these perspectives.

4.1 Results

We analyzed 105 publications. The studies reported in these publications aim to address a variety of goals in different scopes, for example, to understand the impact of being watched on developers’ performance during code reviews (Behroozi et al. 2020). In Table 3 in the Appendix, we provide an overview of core properties of each study, namely the research method, study design, participants, and incentives. Note that the studies have been conducted with participants from a variety of countries, such as the USA, Canada, Germany, UK, Chile, or China—indicating that we cover a broad sample.

Overall, 76 publications cover experiments (e.g., online, quasi, controlled), 26 cover observational studies (e.g., fMRI studies, session recordings), and three cover both (cf. Table 3). Of the 79 publications reporting an experiment, 38 involve between-subject, 31 within-subject, and 10 hybrid (i.e., both or multiple experiments) designs. We remark that this distinction is infeasible for observational studies, since developers are exposed to the same treatment only once to explore patterns in their behavior. A majority of 76 studies involved (44 solely) students, 48 developers, 10 researchers, and four non-computer science participants. Note that the publications often report only on involving developers, without specifying the developers’ concrete background. We summarize these studies as well as those that mention, for instance, industrial backgrounds, professionals, or API developers, under this term. Finally, the number of participants in the studies varies substantially (i.e., 6–907 for experiments, 4–249 for observational studies), and in 22 cases the exact number of discarded observations are explicitly described. We provide the number of valid observations in parentheses in Table 3, which are typically smaller because participants did not finish the study (e.g., in online settings (Spadini et al. 2020)). Overall, we argue that these 105 publications span a variety of experimental designs in SE and are a representative sample of the best efforts in current SE experimentation. Consequently, they were a feasible foundation for our analysis and discussion of using financial incentives in SE experimentation.

Unfortunately, the details on incentives are often vague, particularly regarding when they have been paid. Thus, we assumed that incentives were typically awarded for (valid) completions, and deviated from this strategy only if the publication hinted at, or explicitly specified, a different one. We mark forms of incentives for which we have been unsure with "(?)" in Table 3.

In Table 2, we synthesize an overview of the incentives used within the studies. We can see that a majority of 58 (55.24 %) publications does not report on using incentives. Note that one publication (Wyrich et al. 2021) states that the participating students were required to participate in a study to fulfill the university’s curriculum, which we did not consider as an incentive on its own (i.e., without further incentives for their efforts). Out of the remaining publications, most used direct money payments as incentives (25, 23.81 %) followed by course credits (22, 20.95 %). However, almost all of the money payments are connected to fixed payments, but not payoff functions: In 28 (26.67 %) publications, the use of fixed incentives awarded after completing the experiment are mentioned, including fixed amounts of money for each participant, donations to charities, and non-financial incentives (e.g., images of brain scans from fMRI studies, course credits for students). Another 12 (11.43 %) publications (seem) to refer to a show-up fee in the form of course credits or a fixed amount of money. Four (3.81 %) publications mention to have paid participants hourly wages or provided them with contracts, paying them directly with money or with gift cards. Interesting is one study that investigates how different payments (hourly wages versus fixed contracts) impact freelances (Jørgensen and Grov 2021), indicating that fixed contracts led to higher costs. One (0.95 %) publication (Bai et al. 2020) used a lottery among the participants to distribute a voucher.

Some publications indicate that more advanced incentivisations or even payoff functions have been used, but the details are still lacking. Four (3.81 %) publications (seem to) have used course credits as a piece rate to reward a participant’s performance. Three (2.86 %) publications indicate that a quality check was performed before paying out a completion fee, which makes the payoff somewhat performance-based since only the correct solution yields a payoff (cf. Table 1). Finally, one (0.95 %) publication (Shargabi et al. 2020) reports on rewarding only the best-performing participants (i.e., winners-take-all tournament), but not what that reward constitutes.

Lastly, we can see in Table 2 that our cost estimates span from \({\$30}\) to \({\$12,540}\). Unfortunately, the information on these costs is often vague or incomplete, too. For instance, one publication (Jørgensen and Grov 2021) mentions contracts, but only lists the smallest and highest paid contract. The publication with the "most expensive" incentives (Endres et al. 2021a) reports to have paid participants for contributing to multiple sessions (\({\$20}\) for each out of a maximum of 11 sessions). Unfortunately, it is unclear how many participants joined each session, so our estimate of \({\$12,540}\) is an upper bound. We remark that a majority of 18 (out of 25 specifying a monetary amount) of the financial incentives resulted in costs (way) below \({\$1,500}\), with average costs of \({\$1,715.16}\) based on our estimates.

4.2 \({\text {RQ}}_{1}\) & \({\text {RQ}}_{2}\):The Use of Financial Incentives

We can see in Table 2 that roughly 44.76 % (47 of 105) of the publications report on some form of incentivisation. Of these 47, 30 (63.83 %) used financial incentives (i.e., indicated by a concrete monetary value or mentioning payments) and 37 (78.72 %) used fixed completion or show-up fees (involving non-financial incentives), which are similar to incentives used to improve participation in surveys. Since many experiments have been conducted with students, course credits have often been used as a replacement for actual financial incentives. This aligns to our personal perception of the research conducted in empirical SE, and underpins that our research tackles an important gap in SE experimentation.

Only 12 of the 47 publications hint at more elaborate strategies for incentivizing participants, such as payoff functions. Four studies have used, or at least indicated to have done so, course credits as a piece rate (Paulweber et al. 2021a, b; Taipalus 2020; Czepa and Zdun 2020). For instance, Paulweber et al. (2021a, 2021b) rewarded students with up to six course credits for correct answers during experiments. However, the details of these studies (e.g., how the credits were assigned, how much they benefited the participants) are unclear. Also, course credits do not allow to replicate such experiments easily, a problem financial incentives can help to mitigate (cf. Section 4.4). Another four studies have paid their participants contracts and hourly wages to compensate for their time (Jørgensen et al. 2021; Jørgensen and Grov 2021; Liu et al. 2021; Aghayi et al. 2021). Two publications (Sayagh et al. 2020; Braz et al. 2021) indicate that performance-based financial incentives were used by quality checking the submitted solutions before paying a completion fee. For instance, Sayagh et al. (2020) paid five freelancers among their participants only after checking the quality of the solutions (students received course credits), so only a good enough performance allowed them to obtain the reward. Still, it is unclear to what extent the freelancers were informed in advance. Shargabi et al. (2020) used a winners-take-all-tournament, awarding the best performing students with an unspecified incentive. The incentives in all of these experiments are rather simplistic performance-based payoffs, and more elaborate payoff functions as used in experimental economics have apparently not been used. Overall, only five (4.76 %) of these studies report on using financial incentives, and thus may fulfill the properties of a payoff function (cf. Section 2).

4.3 General Insights

When analyzing the individual experiments, particularly the authors from other disciplines raised several discussion points to move towards our guidelines and recommendations. In the following, we summarize the four major points as general insights on incentives in SE experimentation.

Reporting Incentives We already noted that it was sometimes problematic to understand how incentives were used during an experiment. For instance, we re-checked various publications numerous times trying to obtain a better understanding regarding whether incentives were paid on completion or as show-up fees. In fact, many publications simply stated to have used some form of incentivisation without providing any details (e.g., students have been graded somehow). This finding highlights the need for improving our understanding on how and when to employ incentives in SE experimentation, and what details to report. For example, a majority of 58 publications simply does not mention incentives, but they also do not specify that they have not been used. Similarly, few experiments report on the reasoning for using a particular form of incentives. For instance, LaToza et al. (2020) indicate to rely on a fixed show-up fee (it may have also been a completion fee) to not bias their participants. Such details are important to allow researchers to understand, evaluate, and particularly replicate previous experiments. In experimental economics, it has become standard to rigorously describe the incentivisation. The information typically includes the average payoff of the participants (consisting of show-up fee and performance-based payments), whether all decisions were relevant for the payoff, any influence of chance, and the average duration of the experiment. Also, it has to be clear that the applied incentives were identical for all participants, unless incentives were a treatment variable.

Population Sizes One issue raised in our discussions are the relatively small population sizes of some experiments we reviewed. Even though there are statistical tests that can be used (e.g., Fisher’s exact test) and the studies are still meaningful to understand developers’ behavior, it remains an open issue to what extent the findings can be transferred to the general population. Considering also that many populations involve convenience samples (e.g., students of one particular course of one university), the population sizes of the experiments are an important concern that should be considered carefully. However, discussing the (mis-)use of statistical tests and significance is out of scope for our work and we refer to existing research (Wasserstein and Lazar 2016; Wasserstein et al. 2019; Amrhein et al. 2019; Baker 2016). Still, this issue highlights the potential for improving participation and the external validity, for instance, by using financial incentives.

Wages, Realism, and Participants Incentives in experimental economics are used to mimic realism and compensate participants’ time by resembling the incentive structure of the real world—typically paying for participants’ time/effort oriented towards real-world wages. When reflecting on and discussing these intentions with respect to the developers involved in the studies we reviewed, we also raised the issue of (the subset of) open-source developers who contribute to their projects without receiving money. For them, financial incentives may not actually mimic the real world (e.g., if they are unpaid contributors). However, financial incentives can also help to mimic factors (open-source) developers may otherwise not be aware of and for which no other incentive is viable in an experiment (cf. Section 5), such as a loss of reputation in the community.

Similarly, we found publications that employed observational studies or experiments during action-research with industry. In such cases, incentives may also not be useful or even applicable. As aforementioned, for these cases the incentivisation stems from the work itself and additional performance-based incentives should actually be avoided to prevent biases. However, one study also explicitly mentions that companies were compensated for their developers participating in a study by the researchers paying the participants’ wages for the time of the conduct (Jørgensen et al. 2021). These observations highlight that (financial) incentives are not a silver bullet for SE experimentation, but they should be considered and their non-use reported as well as explained.

Laws, Compliance, and Ethics The laws and ethics around financial incentives are of particular importance when designing an experiment, and can vary depending on the location at which a study is conducted. For instance, in our SLR, researchers mention that they were not allowed to pay students for participating in experiments, whereas bonus course credits were allowed (Baldassarre et al. 2021). As a result, financial incentives may not be usable in experiments conducted in the respective countries or universities—but please note that we could not identify any references that support the argument that students could not be paid legally. This situation as well as the different populations involved in experiments (e.g., open-source developers versus students) raise ethical issues. Namely, it is questionable to conduct an experiment with different populations and rewarding only some of the participants (with money) or to change the average payoff. For instance, some experiments reported to have compensated students with course credits or not at all, but other participants received (inherently more) money (Sayagh et al. 2020; Uddin et al. 2020; Danilova et al. 2021; Uddin et al. 2021). Moreover, research (Różyńska 2022) underpins that it is not only fair to compensate participants with financial incentives for their efforts, but it is a moral obligation to do so in a fair and ethical way without discrimination or exploitation. Therefore, when designing the incentives for an experiment, researchers have to keep legal and ethical implications in mind (cf. Section 6). One particular concern in this direction that researchers have to be aware of are compliance issues—depending on whether professionals participate during their work or free time. Some companies may forbid their employees to receive external money, which means that financial incentives cannot be used. However, in other cases, using financial incentives may even be required to reassure a company and facilitate industrial collaboration, thereby lowering the risks of failure and increasing the value of the study (Sjøberg et al. 2007).

4.4 SE and Payoff Functions

Our SLR clearly shows that advanced financial incentives are sparsely used (or reported) in SE experimentation. Precisely, if incentives are used, they rarely combine monetary, task-related, and performance-based incentivisation. In fact, only the four studies paying participants hourly wages or contracts (Jørgensen et al. 2021; Jørgensen and Grov 2021; Liu et al. 2021; Aghayi et al. 2021) and the one study assessing the freelancer’s solutions (Sayagh et al. 2020) involve all of the concepts to some extent. Still, whether and what payoff functions have been used is not reported in the publications. In the following, we discuss how payoff functions connect to SE experimentation based on the results of our SLR.