Abstract

Context:

The term technical debt (TD) describes the aggregation of sub-optimal solutions that serve to impede the evolution and maintenance of a system. Some claim that the broken windows theory (BWT), a concept borrowed from criminology, also applies to software development projects. The theory states that the presence of indications of previous crime (such as a broken window) will increase the likelihood of further criminal activity; TD could be considered the broken windows of software systems.

Objective:

To empirically investigate the causal relationship between the TD density of a system and the propensity of developers to introduce new TD during the extension of that system.

Method:

The study used a mixed-methods research strategy consisting of a controlled experiment with an accompanying survey and follow-up interviews. The experiment had a total of 29 developers of varying experience levels completing system extension tasks in already existing systems with high or low TD density.

Results:

The analysis revealed significant effects of TD level on the subjects’ tendency to re-implement (rather than reuse) functionality, choose non-descriptive variable names, and introduce other code smells identified by the software tool SonarQube, all with at least \(95\%\) credible intervals.

Coclusions:

Three separate significant results along with a validating qualitative result combine to form substantial evidence of the BWT’s existence in software engineering contexts. This study finds that existing TD can have a major impact on developers propensity to introduce new TD of various types during development.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Contemporary software systems often have complex and constantly evolving code bases that require continuous maintenance. Martini et al. (2018) found that developers waste as much as twenty-five percent of development time refactoring or otherwise managing previous implemented sub-optimal solutions. The culprit is technical debt (TD), an accumulation of “not-quite-right” implementations that now serve to impede further development and maintenance. The costs incurred by TD often claim a sizeable portion of the budget of software development projects and are referred to as interest (Ampatzoglou et al. 2015).

What is worse, unless actively managed, the costs of TD may increase nearly exponentially with TD generating additional TD as well as interest (Martini and Bosch 2015, 2017a). There are multiple possible causes of this dynamic (and they are not mutually exclusive). Hunt and Thomas (1999) suggested that the most important factor is the “psychology, or culture, at work on a project”. They explained this by analogously applying the broken windows theory (BWT) from criminology. The BWT states that an indication of previous crimes, or even just general disorder, increases the likelihood of further crimes being committed. In their view, this holds in software development projects, where acceptance of minor defects can result in a snowball effect that causes further deterioration of not only the system, but also the culture surrounding the project.

It is a compelling argument that a developer might think that if someone else got away with being careless, perhaps they could too. From a psychological perspective, this could be explained through the lens of norms, where the apparent prevalence of a behavior forms a descriptive norm, signaling that the behavior is acceptable. It could also be that it happens through unconscious mimicry of previous work, or that it is simply a result of being less enthusiastic about the system due to the TD. Though this software engineering BWT gained some traction, there appears to be no empirical evidence for it. Besker et al. (2020) found that the existence of TD impairs the morale of software developers, lending some credence to the theory, but did not examine the tangible effects of that decline.

This study aims to evaluate the causal relationship between the TD density of a system and the propensity of developers to introduce TD during the extension of the system. While there is some research indicating the developers often find themselves forced to introduce TD as a result of previous suboptimal solutions (Besker et al. 2019), our interest is in situations where no significant hurdles are preventing the developer from implementing a low TD solution. In other words, our goal is to understand if they will adopt the negligent attitude towards the software that they may infer is acceptable, given its state.

The study utilizes a mixed-methods design combining a controlled experiment and a survey with semi-structured interviews. The experiment involved software practitioners completing tasks that required the extension of small existing systems, designed specifically for the experiment, half of which we had deliberately injected with TD. Volunteering participants were subsequently interviewed, and asked about their reason for choosing their particular solution, their general thoughts around TD management, and their experiences on the BWT in software engineering. The quantitative data from the experiment and survey were interpreted using Bayesian data analysis and the qualitative data produced by the interviews through thematic analysis.

Efficient management of TD requires an understanding of the dynamics that govern its growth and spread. The results of this study could be a first step toward formulating improved strategies to keep TD in check. Additionally, the experiment could be seen as a cross-disciplinary effort that could speak to the generalizability of the original Broken Windows Theory.

1.1 Research questions

The goal of this study is to isolate and measure the causal effects of existing technical debt on a developer’s propensity to introduce new technical debt. We condense this into the following research questions, and by distinguishing between similar and dissimilar TD, we ask whether developers mostly mimic TD they come in contact with or if they also introduce TD of a different character than that which was previously in the system.

-

RQ1: How does existing technical debt affect a developer’s propensity to introduce technical debt during further development of a system?

-

RQ2: How does existing technical debt affect a developer’s propensity to introduce similar technical debt during further development of a system?

-

RQ3: How does existing technical debt affect a developer’s propensity to introduce dissimilar technical debt during further development of a system?

2 The broken windows theory

The story of the BWT starts with an experiment conducted by Philip Zimbardo in 1969. He placed identical cars, with no license plates and the hoods up, in Bronx, New York, and Palo Alto, California (on the Stanford campus).

The car in New York was destroyed and stripped of parts after just three days, while the car left on the Stanford campus went untouched for over a week (Zimbardo 1969). Zimbardo was not investigating the BWT, rather, he wanted to observe what kind of people committed acts of vandalism and under what circumstances. However, he did (successfully) employ the BWT when he initiated the vandalism by taking a sledgehammer to the car on the Stanford campus, after which others continued the vandalism within hours (Zimbardo 1969).

A sample size of one is hardly convincing. However, Zimbardo’s experiment inspired Wilson and Kelling to write an opinion piece in the monthly magazine “The Atlantic”. The text, Broken Windows: The police and neighborhood safety (Wilson and Kelling 1982), takes the concept beyond the narrow domain of vandalism and applies it to a broader context of crime and antisocial behavior. In doing so, they created what subsequently became known as the BWT.

Wilson and Kelling suggest (although they provide no evidence) that:

Window-breaking does not necessarily occur on a large scale because some areas are inhabited by determined window-breakers whereas others are populated by window-lovers; rather, one unrepaired broken window is a signal that no one cares, and so breaking more windows costs nothing. (It has always been fun.) (Wilson and Kelling 1982)

Perhaps the most prolific example of policy shaped by the BWT is that of New York City under former mayor Rudy Giuliani and police commissioner William Bratton. The strategy adopted revolved around strictly enforcing minor crimes and was followed by a 50% drop in property and violent crime (Corman and Mocan 2002). While the political leadership attributed the improvement to their broken windows policing policies, the causality of this relationship has been questioned (Thacher 2004; Sampson and Raudenbush 1999).

Several more recent studies have provided empirical evidence supporting the BWT. Keizer et al. (2008) secretly observed a number of public areas in Groningen, to some of which they had deliberately introduced disorder, e.g., graffiti, and found that it had a significant effect on further minor crime (such as graffiti or littering), but also on more serious crime such as robberies. Additionally, a 2015 meta-study found that broken window policing strategies moderately decrease crime levels (Braga et al. 2015).

The BWT has also been examined from a more psychological perspective, in the context of normativity. Several studies within the field have evaluated the effects of a littered environment on the propensity of subjects to litter themselves (Krauss et al. 1978; Cialdini et al. 1990). Cialdini et al. (1990) argue that a littered environment conveys the descriptive norm that littering is acceptable.

The first reference to the BWT in a software engineering context appears in The Pragmatic Programmer: From Journeyman to Master, where authors Hunt and Thomas assert that the BWT holds in the software development context (Hunt and Thomas 1999). They describe the psychology and culture of a project team as the (likely) most crucial driver of software entropy (sometimes referred to as software rot), and the “broken windows”, they claim, are significant factors in shaping them (Hunt and Thomas 1999):

One broken window—a badly designed piece of code, a poor management decision that the team must live with for the duration of the project—is all it takes to start the decline. If you find yourself working on a project with quite a few broken windows, it’s all too easy to slip into the mindset of “All the rest of this code is crap, I’ll just follow suit”. (Hunt and Thomas 1999, p. 8)

Hunt and Thomas provide no evidence to support this claim, other than referring to the 1969 Zimbardo experiment (Zimbardo 1969) and an (unsupported) assertion that anti-broken window measures have worked in New York City (Hunt and Thomas 1999). The only software development-specific arguments come in the form of anecdotal evidence (Hunt and Thomas 1999).

Since its inception, the BWT in software engineering has mostly been discussed on online blogs and, sometimes using different terminology, in popular books such as Clean Code (Martin 2014) with the occasional mention in a scientific paper (Sharma et al. 2015). Section 4 further explores the prevalence of the BWT in software engineering research.

3 Technical debt

Shipping first time code is like going into debt. A little debt speeds development so long as it is paid back promptly with a rewrite...The danger occurs when the debt is not repaid. Every minute spent on not-quite-right code counts as interest on that debt.

(Cunningham 1992, p. 30)

Ward Cunningham (co-author of The Agile Manifesto (Agile Manifesto 2001)) coined the metaphor in his 1992 OOPSLA experience report (Cunningham 1992). Since then, the concept of TD has gained widespread popularity and is commonly applied, not just to code but also to related concepts, resulting in terms such as documentation technical debt and build technical debt (Brown et al. 2010).

Technical debt is now a concept used and studied academically. While there certainly are multiple definitions to choose from, in this paper, we will use the following one arrived at during the 2016 “Dagstuhl Seminar 16162: Managing Technical Debt in Software Engineering”:

In software-intensive systems, technical debt is a collection of design or implementation constructs that are expedient in the short term, but set up a technical context that can make future changes more costly or impossible. Technical debt presents an actual or contingent liability whose impact is limited to internal system qualities, primarily maintainability and evolvability. (Avgeriou et al. 2016, p. 112)

Studying the propagation of TD requires a method for identifying it. There are multiple such methods, ranging from those that require more manual labor to those that rely on automated processes (Martini and Bosch 2015; Olsson et al. 2021; Besker et al. 2020). Some TD could be painfully apparent to developers; after all, it might impact their professional life daily. Simply asking project members could be a way of identifying some of the most egregious instances of TD.

Other types of TD can be a little more subtle. Manually scanning the code for these would generally be prohibitively time-consuming, hence a need for automation. Static code analysis tools see frequent use in industry and research contexts; they rely on rule sets to identify TD items, often referred to as code smells (Alves et al. 2016). Some such tools even attempt to estimate the time required to fix each item (Alves et al. 2016). This category ranges from simple linting rules that can do little beyond correcting indentation to more encompassing solutions that detect security issues and deviations from language convention.

We propose to interpret TD items as the broken windows of software engineering as introduced by Hunt and Thomas Hunt and Thomas (1999). In our view, several recent findings support this conceptualization.

Besker et al. (2020) found TD to negatively impact developer morale, establishing a link between TD and the psychological state of those working in its presence. This lowered morale is reminiscent of the dynamic described by Wilson and Kelling in their original Broken Windows article, where they assert that general disorder will trigger the local inhabitants to adopt a general stance of “not getting involved” (Wilson and Kelling 1982). This link is further supported by a study by Olsson et al. (2021) that used the SAM (self-assessment manikin) system to map changes in affective states triggered by interaction with TD. A natural continuation of that research would be to examine the effects of this psychological impact, where propagation or reproduction of TD may be one of the most exciting lines of inquiry. Moreover, Fernández-Sánchez et al. (2017) have also described that besides causing low morale in development teams, TD may also cause negative effects such as developer turnover.

4 Related literature

Tufano et al. (2015) found that most code smells were introduced during the creation of an artifact or feature and that when code smells were introduced during the evolution of the project, it was usually in close proximity to preexisting code-smells. They also observed that a significant portion was introduced during refactoring activities and that developers with high workloads or tight schedules were more likely to introduce them. On the other hand, Digkas et al. (2020a) examined the change in code technical debt (CTD) over time in a set of open-source projects and attempted to correlate the amount of CTD introduced with the workload of the development teams. They did, however, not find any correlation between the workload of the teams and the amount of new CTD introduced. Furthermore, the same study found that the introduction of CTD was mainly stable over time, with a few spikes. In contrast, Lenarduzzi et al. (2020) followed the migration of a monolithic system to a micro-services system and found that for each individual micro-service, the amount of TD was very high in the beginning but decreased as the artifact matured. The study also reported that the migration reduced the rate at which TD grew in the system but that, over time, TD still grew.

Chatzigeorgiou and Manakos (2014) as well as Digkas et al. (2020b) found that TD is mainly introduced when adding new features to a code base and that as they are not removed, they accumulate over time. Similarly, Bavota and Russo (2016) showed that instances of self-admitted TD increase over the lifetime of a project and that it usually takes a long time for them to be resolved. Digkas et al. (2020b) and de Sousa et al. (2020) did however also find that while the total amount of TD grows, the density decreases when new features with a lower TD density are added. de Sousa et al. (2020) also found, through practitioner interviews, that inexperienced developers propagate error handling anti-patterns by replicating existing practices in the code base.

Two studies by Rios et al. (2020a), (2020b) asked practitioners to identify the causes and effects of documentation debt (DD). Non-adaptation of good practices was the third most mentioned cause of DD. Similarly, low documentation quality (outdated/non-existent/inadequate) was a commonly mentioned effect of DD. Unfortunately, the studies do not make it clear how the practitioners might have interpreted the survey and interview questions (and some answers suggest that interpretations varied). Therefore, we cannot be sure whether the mentioned effects and causes refer to the specific instance of debt or the system in general. Only the latter interpretation would support the BWT.

Borowa et al. (2021) investigated how bias contributed to architectural technical debt (ATD) and found through interviews that biases such as the band-wagon effect, where one is likely to “do what others do”, influence architectural decisions. Verdecchia et al. (2020) also interviewed practitioners and identified perceived effects of ATD. They found that ATD is perceived to cause code smells.

Besker et al. (2018b) studied TD management from the perspective of startups, through interaction with practitioners. They briefly mention a possible vicious cycle involving TD and project culture, but found that some developers saw TD not to be much of an issue in a static project group where everyone is aware of and used to it. They note a tendency to reduce TD before bringing new developers onto a project. In another study, Besker et al. (2019) found that developers see themselves forced to introduce TD in as much as a quarter of the cases where they encounter existing TD; suggesting TD has a contagious nature. Ozkaya (2019) also proposed a vicious cycle in which messy code reduces the morale of the developers, causing them to write more messy code.

Moreover, research has consistently shown a strong link between technical debt and vulnerability proneness in software. Izurieta et al. (2018) and Siavvas et al. (2022) both found that technical debt indicators can serve as a potential indicator of security risks, with Izurieta and Prouty (2019) further emphasizing the importance of managing technical debt associated with cyber-security attacks.

Some studies have attempted to model the interest and growth of TD. Martini and Bosch (2015) conceptualizes a model detailing the cause and effect of ATD and find a potentially vicious cycle through which existing ATD causes more ATD to be implemented. Two other studies by Martini and Bosch (2016, 2017b) continue the work and evaluate methods in cooperation with industry for estimating the future cost of ATD. The derived methods involve several propagation factors of ATD, but none of the identified factors includes the BWT. Martini and Bosch (2017a) do however investigate how ATD propagates and one of their identified propagation patterns is “Propagation by bad example”, indicating that developers copy the practices they see in a system.

The literature search did not result in a single paper that treats the BWT as the main subject, and it is never discussed using the broken windows terminology. The most common way it appears is by being brought up by interviewees as a cause or effect of TD. One of the most interesting entries is that by Tufano et al. (2015), which found that new code smells tend to appear in areas where code smells were already common. However, there are other possible explanations than BWT effects for that finding.

The concept of TD inducing further TD is not uncommon. However, the sub-mechanisms identified are usually restricted to that building on top of TD creates further TD, or that interaction effects between TD items result in higher interest payments than would have been necessary if the items were separate. The lack of studies on the possible psychological mechanics of how TD spreads is concerning as it may be one of the primary mechanics of runaway TD. Properly understanding these underlying mechanics of TD, allows us to refine existing models for how TD and its costs behave, helping practitioners establish effective management strategies that target these specific mechanics, and providing guidance for what future research might be warranted around TD and developer behavior.

We found no studies using an experimental methodology; most of them are field studies or judgment studies, making causality difficult to ascertain. One may conclude that this focus on more exploratory methods is a symptom of the research field being relatively new; some of the included entries explicitly state that the field has, until recently, chiefly been interested in definitions and theory-building. Furthermore, a majority of related literature has been published in the last five years.

5 Method

Seaman (1999) argues that diverse evidence gathered through multiple distinct methodologies will constitute stronger proof than evidence of a single type, a concept commonly referred to as triangulation. Following her advice, this study uses a mixed-methods design. The quantitative part consisted of a controlled experiment coupled with surveys, and the qualitative component was comprised of follow-up interviews. While more effort was put towards the former, the latter could constitute an essential complement, enabling us to clarify, contextualize and corroborate the results of the quantitative analysis.

The GQM (goal, question, metric) breaks down the overarching goal (conceptual level) into questions (operational level) that needs answering in order to satisfy the goals. Answering the question requires identification and measurement of suitable metrics (quantitative level). Qualitative data from interviews were used to further validate and provide insight into our findings

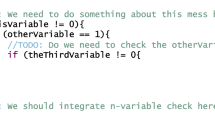

Using the software research strategy framework laid out by Stol and Fitzgerald (2018), we would classify the experiment as experimental simulation while we would argue that the interview component belongs in the judgment study category. We consider them suitable complements to each other, as the former allows inference of causality, while the latter does not. However, a judgment study is of a more flexible and exploratory nature, allowing us to discover some finer details that the experiment might miss (Stol and Fitzgerald 2018). This section describes our methods for each component separately. Figure 1 presents a Goal-Question-Metric breakdown of our design, as prescribed by Basili and Caldiera (2000).

5.1 The experiment

This section describes the design and execution of the experiment and survey that constitute the quantitative component of our study. The purpose of the experiment was to isolate the effects of the independent variable, technical debt (TD), which would allow us to investigate the causal relationship.

To run these experiments we used a custom-built web-interface which meant that our only contact with the participants was recruitment and follow-up interviews. This was facilitated by providing participants with a sign-up code and information to access our research tool called RobotResearcher. After receiving this information the participants could commence the experiment at a time suitable for them from the comfort of their browser.

The experiment was split into several sequential steps as shown in Fig. 2. After accessing the research tool, participants were presented with a short introduction about the experiment, as well as, a confidentiality agreement which they had to accept to proceed with the data collection part of the experiment. Next, participants were asked to complete a background survey to collect the data described in Section 5.1.4. After the background survey, participants were taken to the main part of the experiment where they were presented with two tasks as described in Section 5.1.5. As a last step in the experiment, participants answered some reflection questions where they were asked to grade the quality of the preexisting code and their solution to both tasks. After completing the experiment all subjects were thanked for their participation and presented with a link to sign up for follow-up interviews.

5.1.1 Technical debt level: the independent variable

Since there is a vast, arguably infinite number of issues that constitute TD, determining a suitable subset to represent high TD is not a trivial task. The approach chosen was to go with two distinctly different types of issues introduced at a very high rate, i.e. they were introduced everywhere where they could be introduced. This way, we ensured that designing suitable sets of systems and tasks, henceforth referred to as scenarios, was feasible. It also mitigated the risk associated with choosing a single issue type as a representative for TD. While this choice won’t allow us to conclude what effect every type of TD has it will allow us to draw conclusions about effects for at least some types of TD.

The first type of TD we chose was bad variable names, an issue with multiple great properties for the purposes of this study. Variable names are typically abundant (in the vast majority of programming languages); hence changing them for the worse would constitute a flaw that the subject would be likely to detect. Bad variable names are also a clear example of code technical debt. A variable whose name poorly describes its purpose will make the code less readable, which means that any time a piece of code needs to be understood, e.g., during refactoring, the process will be conceptually harder (Avidan and Feitelson 2017; Butler et al. 2009), and hence, slower. This extra time (and associated development costs) constitute the interest payment that characterizes TD. An example of how bad variable names can make understanding harder is the difference between a/b and distance/time where it’s a lot easier to deduce that the velocity was calculated in the second example.

The second type we chose was code duplication. This issue shares the property of applying to the vast majority of programming languages; however, in other regards, it contrasts nicely with bad variable names. Notably, it may not be quite as obvious a defect, and avoiding propagation of it will usually take the developer more effort. In terms of scenario design, we found that accommodating the inclusion of code duplication and constructing tasks that a subject could solve with or without introducing additional TD were both feasible. Code duplication is often, but not always, an example of TD. Insisting on shared functionality between distant and unrelated classes may lead to excessive interdependency and complexity (Kapser and Godfrey 2008). In many cases, however, there is a strong case that code duplication causes maintainability problems (Yu et al. 2012) and thus can be considered TD (Alves et al. 2016).

Classifying code duplication as a particular type of TD is not straightforward. While Li et al. (2015) firmly categorize it as code TD, using the taxonomy offered by Alves et al. (2016), it could be considered design TD. Further complicating matters, in a systematic review by Besker et al. (2018a), they found that several authors described code duplication as architectural TD. For the purpose of this study the exact classification is not important; we are content with noting that they all agree that code duplication often constitutes TD.

The above TD items could be introduced to the experiment scenarios without changing the overall structure of the code given to the participants. This made sure that most solutions to the tasks would be feasible in both the high and low-debt versions of the code base without making extensive changes to the existing code. This was essential as it allowed the participants to choose a solution, rather than being forced into one out of technical necessity.

5.1.2 Controlled variables

When conducting an experiment, it is preferable to control as many factors as possible to avoid confounding. This study managed to control the following variables.

Programming Language

For several practical reasons, JavaFootnote 1 was chosen as the sole language of all scenarios. First, it is one of the most widespread programming languages in existence, not just in industry, but it is also often the language used for teaching object-oriented programming at educational institutions. Second, we, the authors, were all comfortable with working in Java.

Development Environment

To mitigate interference from participants using different editors with different levels of support we developed an original research tool that enforced a standard, but fairly bare-bones, editor. The editor is a web-based environment that integrates the ACE text editorFootnote 2 to provide a set of text editing shortcuts and syntax highlighting. The tool has access to a remote server that compiles and executes the users’ code in a secure environment. It also oversees the experiment by allocating scenarios, treatments and providing instructions. A limited demo of the tool will remain available for evaluation purposes.Footnote 3

Scenarios

We created two tasks and their corresponding systems to be quite similar. One of them was to extend a room booking system with a new room type and the other was a commuting ticket system with a new ticket type. The scenarios were created such that they contained no or few technical debt items apart from those purposefully introduced. Both systems were of similar size, as well as structure, and are publicly available for review.Footnote 4

5.1.3 Dependent variables

The dependent variables represent what outcomes could be affected by the variation of the independent variable. We used various measures of the amount of TD added to the system by the subject. These particular measures were chosen as they were easily measurable and likely to appear in the limited context of the experiment tasks.

Logic reuse measured if the existing logic, validation logic and constructor, had been duplicated or reused while completing the task. For the purpose of this measure, any type of reuse qualified.

Variable naming recorded how many of the old, new, and copied variable names that fulfilled the following requirements, inspired by the guidelines proposed by Butler et al. (2009):

-

It should consist of one, or multiple, full words.

-

Its name should have some connection to its use and/or the concept it represents.

-

It should follow the format of some widely used naming conventions. While camel case is generally the standard for Java, we do not specify a standard and thus choose to generously also accept names that consistently follow other conventions such as snake case or pascal case.

-

If it is used for iterating (where, e.g., i,j are commonly chosen names), or the variable represents something unknown (such as in the case of equals()) anything gets a pass.

SonarQube issues measured how many issues the static analysis tool SonarQube found in the submitted solution. Beyond the base set of issue identification rules (Java Code Quality and Security), we included three additional libraries CheckstyleFootnote 5, Codehawk JavaFootnote 6, and FindbugsFootnote 7. The result is a set of 1,588 rules. Not all rules were suitable. The following criteria were grounds for rule exclusion:Footnote 8

-

The rule duplicates another rule, which was occasionally the case, as we included multiple libraries.

-

The rule represented a possible standard, i.e., one that may or may not be the standard at a particular institution but could not be considered part of general Java convention.

-

The rule measures one of the things that we had already measured manually, e.g., code duplication detection rules were excluded in favor of manual inspection as we found them to be insufficiently effective.

-

The rule concerns minor indentation and cosmetic formatting errors. Such rules would generate copious amounts of issues, drowning out all others. We also considered them invalid since most commonly used development environments will help the user maintain proper indentation, while the provided editor would not.

-

The rule did not make sense for a system as simple as the scenario code bases.

-

The rule appeared not to function as intended.

Implemented utility methods recorded if the participant had implemented the utility methods equals and hashCode for the new classes they added.

Documentation state measured whether the newly added functionality had been documented and whether the documentation was correct or not.

Task completion measured to which degree the task was completed on the scale: “Not submitted”, “Does not compile”, “Invalid solution”, and “Completed”.

Time to complete task was recorded as it could provide extra insight into the result of the study.

5.1.4 Predictor variables

What we could not control, we accounted for through the inclusion of additional predictor variables. Some otherwise possibly confounding factors could be made part of the model by gathering background information on the subjects.

The predictor variables were collected through a pre-task survey and included information about programming experience, work domain, education level and field. It also had questions regarding which of the following software practices were used at their most recent place of employment: “technical debt tracking”, “pair programming”, “established code standards”, and “peer code reviewing”.

5.1.5 Treatment and scenario allocation scheme

In anticipation of a modest sample size, we opted for a repeated measures design, i.e., a design that would entail each subject finishing multiple tasks. The drawback of such a design is that it can produce learning effects, e.g., a subject may get better at a task with repetition. We took several measures to mitigate the impact of such effects:

-

The order of the scenarios and the treatment of scenarios was randomized.

-

Each subject completed tasks in systems of high and low levels of TD; this allowed the statistical model to isolate the individual ‘baseline’ from the effect of the treatment.

-

A subject was never given the same scenario twice. While the scenarios were similar enough to justify a fair comparison they were also different enough that a solution to one of them couldn’t be easily adapted to the other one. Being able to reuse a previously created solution would likely have been irresistibly convenient.

The minimum number of tasks required to fulfill these criteria was two, each with an accompanying system that existed in a high TD and a low TD version. Hence, there would be four experimental run configurations in total, as shown in Table 1.

5.1.6 Study validation

Through a follow-up survey, we also asked the participants to rate the quality of their submissions as well as the system they were asked to work on as this data could help us validate the scenarios and how the quality of submissions was measured.

All classification and data extraction were done by two researchers independently and later compared to find and resolve any discrepancies or errors. All data from the experiment is publicly available.Footnote 9

As we used an original tool to collect data for the experiment we performed extensive user testing before the study was sent out. Both scenarios were in a similar manner subject to such tests to ensure that they were understandable and clear. These tests led to several improvements and were rerun until the testers reported a frictionless experience with the data collection tool and the scenarios themselves. No data from this testing was included in the study as the environment went through multiple changes and many of the testing participants had extensive knowledge about our hypothesis.

We also performed one pre-study run, and as we did not encounter any issues that led to revisions in our scenarios or data collection tool, we used all data from the pre-study in our analysis.

Directed acyclic graph encoding our causal assumptions concerning the experiment. “Y. Measures of introduced TD” corresponds to logic reuse, variable, naming, SonarQube issues, documentation state, and implemented utility logic. They are actually parallel (having the exact same ingoing and outgoing edges), but were condensed into a single node to keep the graph readable. The same goes for “B. Measured personal characteristics”, which combines all the measures taken during the background survey

5.1.7 Causal analysis

Pearl (2009) suggests building a directed acyclic graph (DAG) of all possible causal relationships in the analysis as it helps the authors document their assumptions and reason about, as well as make claims about causality. The DAG shown in Fig. 3 depicts the possible causal relationships between the measures we aim to safely include in our statistical models when we want to be able to infer causality. The causal relations of the DAG can easily be motivated. Both A and X were randomized and thus cannot have been affected by any other variables. The personal characteristics (B) are measured before the participant is assigned a scenario and a TD level, hence protecting it from any influence of A and X. The task submissions, from which the measures of introduced TD (Y) were derived, did not exist before the subject started the experiment, but were products of the participants, the scenarios, and the TD levels. Hence, they could not have influenced the subjects’ personal characteristics.

Once the DAG has been constructed, do-calculus can be used to check if a specific causal relationship may be inferred from the statistical model (Pearl 2009). This is done by constructing a do-statement for the question we want to ask our model and transforming it using the rules of do-calculus with the goal of eliminating all do-clauses (Pearl 2009). If all do-clauses can be removed it follows that a causal relationship can be measured by our statistical model (Pearl 2009).

The questions we want to ask our model can be described as \(P(\textrm{Y}|\mathrm {do(X)}, \textrm{A}, \textrm{B})\). The second rule of do-calculus states that \(P(\textrm{Y}|\mathrm {do(X)}, \textrm{Z})\) can be rewritten as \(P(\textrm{Y}|\textrm{X}, \textrm{Z})\) when all backdoor-paths between \(\textrm{X}\) and \(\textrm{Y}\) are blocked by \(\textrm{Z}\) (Pearl 2009). Our expression satisfies these criteria as \(\textrm{X}\) and \(\textrm{Y}\) are independent apart from the direct causal link \(\textrm{X}\rightarrow \textrm{Y}\), and when the rule is applied we receive \(P(\textrm{Y}|\textrm{X}, \textrm{A}, \textrm{B})\) which tells us that a causal relationship can be measured by our model, assuming that the stated assumptions are correct.

We will end this section by pointing out that the measured personal characteristics are not randomized and that we can not, and will not, claim causality of any effects estimated for them. They could have numerous unmeasured ancestor variables in common with the dependent variable, creating causal backdoors that we can’t block.

5.1.8 Analysis procedure

We used Bayesian data analysis (BDA) to analyze the data from our experiment. Bayesian data analysis allows the specification of a model, which is then fitted using empirical data through Bayesian updating (McElreath 2020). This model can then be queried for multiple scenarios (McElreath 2020). In contrast, a traditional frequentist analysis arrives at a result that is either significant or insignificant, which is entirely dependent on their selection of \(\alpha \) (which commonly is set to 0.05 in the natural sciences). Wasserstein et al. postulate that the fixation with statistical significance is unhealthy, and thus, they encourage a move “beyond statistical significance” (Wasserstein et al. 2019). Employing BDA is one way of following this suggestion which, according to Wasserstein et al., could prevent the software engineering community from ending up in a replication crisis like certain other disciplines.

A challenge with using BDA is that there is no widely accepted standard procedure. We largely followed the Bayesian workflow laid out by Gelman et al. (2020). However, there are many fine points to the iterative process of model building that they do not cover in detail.

We have multiple questions we would like to answer, each requiring its own specifically tailored model. To keep our analysis consistent, we used a uniform procedure to create and evaluate all models. Our complete analysis, conducted in accordance with the procedure detailed in this section, can be found in our replication package.Footnote 10

We began the model building process by plotting the data and extracting some descriptive statistics (e.g., mean, variance, and median) to get a rough understanding of its distribution. We then created an initial model by choosing an appropriate distribution type and adding our essential predictors. We based this choice on whether the distribution type accurately described the underlying data generation process that produced the data and how well it fitted the empirical data.

After adopting a distribution and basic predictors, we also had to set the prior probability distributions (priors) which encode our prior knowledge and belief into the statistical model. We did this by using the default priors (suggested by the brm function of the brms packageFootnote 11) as a starting point, and then tuned them until we had priors that allowed for physically possible outcome values. We also made sure that our \(\beta \) parameters had priors that were skeptical of extreme effects. We did not encode any prior knowledge regarding the BWT into our priors since there appears to be no previous research on the subject in a software engineering context.

When we had an initial model with appropriate priors, we had to determine which predictors to include in the final model. For the models describing the amount of introduced TD, where we wanted to infer causality, we did this by creating a set of possible predictors subject to the restrictions laid out in Section 5.1.7. For other models, we used a more exhaustive set of predictors.

Next, we created a new model for each of our possible additional predictors and compared the extended models with each other and the initial model using leave-one-out cross-validation (specifically the version implemented by the Stan package looFootnote 12). The comparison allowed us to ascertain which predictors had significant predictive capabilities. Those predictors were then combined into a new set of expanded models. We repeated the process until there were no more predictors to combine.

As a last step we compared some of our most promising models and picked the simplest set of predictors which did not significantly reduce the models’ performance. This model was slightly modified to increase the validity of our analysis by, e.g., fitting it to all the available data and adding \(\beta \) parameters that provided us with extra insight. We then chose to keep those modifications if they did not negatively impact the sampling process or significantly harm the model’s out-of-sample prediction capability.

The final model was used to answer our research questions. This was done partially by inspecting the estimates for the \(\beta \) parameters we were interested in and querying the posterior probability distribution of the model, with factors fixed at various levels, and comparing the results. This final step of querying the model is crucial as it takes the general uncertainty of the model into account and provides an easy way to access effect sizes as it moves everything to the scale of the original input data (Torkar et al. 2020).

5.2 The interviews

This section describes the procedure we used to conduct the interviews that constitute the qualitative part of this study; the purpose of which was to gain further insights relating to our research questions. We also describe the analysis approach through which we analyzed the resulting material.

All participants who agreed to a follow-up interview were contacted and scheduled within a couple of days. We scheduled each to a maximum of 30 minutes, but the length could vary significantly depending on the participants’ availability.

The interviews were semi-structured around a set of questions with the purpose of giving us further insight into why the participants acted as they did in the experiment. We aimed for the questions to be open-ended while gradually steering the interviews toward the central topic of the study to capture as many unprovoked reflections as possible from each participant before revealing our research question. Given that we had 30 allocated minutes, we were able to allow interviewees to elaborate on each question and go on semi-related tangents before we had to steer them back toward the topic of the study by asking the next question. The questions were:

-

1.

Can you describe your solutions to the tasks in the experiment?

-

2.

Can you motivate why you choose those solutions?

-

3.

What was your experience of the preexisting code?

-

4.

How did the preexisting code affect you?

-

5.

Do you have any other comments regarding the BWT in software engineering? (This question followed a brief explanation of the topic of our study)

Interviews were documented using field notes as described by Seaman (1999), i.e., we noted down any interesting reflections made by the interviewed participants relating to our research questions. We omitted any identifying details and, in many cases, translated the reflections from Swedish to English. Some participants who did not participate in the follow-up interviews provided us with their reflections in text. We also included such reflection in the interview material.

5.2.1 Interview results analysis

The resulting list of noteworthy statements was subjected to thematic analysis using a process largely following the steps laid out by Braun and Clarke (2006), with the exception that our base material did not consist of a complete transcription but rather the field notes as described in Section 5.2. This is a brief description of the stages of the process (Braun and Clarke 2006):

-

1.

Familiarize yourself with the data.

-

2.

Encode patterns in the data as codes.

-

3.

Combine codes into overarching themes.

-

4.

Check theme coherency and accuracy against the data, iterate themes until those criteria are fulfilled.

-

5.

Define the themes and their contribution to the understanding of the data.

When constructing themes, we did employ a partially deductive approach, where we assumed some of the themes from the start, i.e., software quality and system extension quality (in terms of maintainability), since these were the areas of interest to us. The resulting set of themes was discussed and agreed upon by two of the authors.

5.3 Participant recruitment

We used convenience sampling by recruiting volunteers from our personal networks and local software development companies. As our personal networks mostly consisted of developers with little professional experience, we also used purposive sampling by specifically recruiting participants with more professional experience. Snowball sampling was also utilized by encouraging participants to pass on the participation invite. The sample used for the interviews was a subset of the experiment subjects, who volunteered after completing their participation in the experiment.

5.4 Sample description

In total, 29 subjects submitted solutions to at least one of the two tasks, for a total of 51 submissions, meaning that seven subjects only submitted one of the tasks. Additionally, 14 potential participants entered the research tool but did not do any tasks. The convenience sampling procedure employed produced a sample with some noticeable skews that reflect the nature of our personal networks and our geographical location (Gothenburg, Sweden).

While the experience level of the participants varied, six of them had none or very little professional programming experience and an additional eleven participants had less than five years of experience. The full distribution of reported experience can be seen in Fig. 4.

As seen in Fig. 5 subjects reported working in a wide variety of software industry sectors ranging from embedded programming to web development. The “Automotive” section is over-represented among the participants, likely due to Volvo Cars being a large local employer.

A majority of participants reported having completed “some master-level studies”, with about a quarter claiming a finished master’s degree. Regarding their field of study all but two participants reported “Computer Science” or “Software Engineering”, with the latter being twice as common as the former. Only one participant reported having no higher education.

A more detailed sample description with complementary descriptive statistics is available in the replication package.Footnote 13

5.5 Method deviations

Two participants reported that they had experienced problems with the user interface of the data collection tool. One of them accidentally reloaded the page and lost their progress. The other one accidentally submitted an empty solution, which lost us two submissions. However, these drop-outs could be considered random and should not bias the sample.

One of the early participants discovered two minor mistakes in the given scenarios. We resolvedFootnote 14 the mistakes upon notice and presented the revised versions to subsequent participants. Given the limited impact of the changes—they were not in a location that participants frequently modified—we find it unlikely that many participants noticed, let alone were impacted by their unfortunate presence.

6 Quantitative results

This section presents the estimates and some carefully selected posterior samples produced by the final models for each outcome. Four outcome models describing how likely the developers were to implement utility methods, add documentation to their code, complete the task, and the time it took to complete the task, are not presented in detail in this section as they showed no significant effects. Furthermore, including all the intermediary models described in Section 5.1.8 would not be feasible. Instead, we have provided a replication packageFootnote 15 that allows thorough examination and reproduction of all our models and their development.

As described in Section 5.4, seven participants failed to submit both tasks and therefore only contributed one submission each. These drop-outs were analyzed and were found to have no correlation with either treatment or scenario allocation. While multiple measures from each participant would have been desirable and lead to higher certainty in our models we can safely include all the submissions in our analysis while considering both within and between subjects effects as we use partial pooling, which will ensure that single data points do not skew the result.

All models include \(\beta \) parameters for high_debt_version and programming_experience as well as a varying intercept for each participant (session). The \(\beta \) parameter for high_debt_version is compulsory as examining the effects produced by the existence of TD is the point of our study. The parameter for programming_experience was included as we want some insight into what effect the skew in our sample towards more junior developers might have on our result. We did not consider any interaction effects and did not add any other grouping factors than the participant identifier since they would entail a high risk of overfitting with our relatively small sample size.

We fitted all final models to the full data set, including participants who only completed one of the assigned tasks. Excluding the participants who did not complete both tasks made sampling easier as it assured that the data set was balanced but could also introduce bias in the analysis. Therefore, we chose to fit the final models with all available data after confirming that the models could sample well using the complete data set.

6.1 Logic reuse

Table 2 (Models 1 & 2) show that the high_debt_version predictor has a significant effect on the outcome with well over \(95\%\) of the \(\beta \) estimate probability mass on the negative side of zero. The \(\beta \) estimate distribution for the programming_experience predictor is centered close to zero with significant weight on both sides, indicating no or minimal effect. This information alone indicates that a high debt density in the preexisting code base induces developers to reuse less. In contrast, the developers’ professional programming experience scarcely affects the amount of reused code.

The outcome distribution shown in Fig. 6 offers additional insights into the effects of the predictors. It shows a clear difference in the outcome depending on the TD level of the system. The effect appears to persist for all levels of professional programming experience. The model estimates that developers with 10 years of professional programming experience are \(102\%\) more likely to duplicate logic in the high debt version of the system. The corresponding number for those with no professional programming experience is \(113\%\). Given that this model was developed in accordance with the restrictions arrived at in our causal analysis (Section 5.1.7), it is possible to make causal inferences regarding the effects of TD level (but not experience level).

6.2 Variable naming

Table 2 (Model 3) shows that the high_debt_version predictor has a significant effect on the outcome with well over \(95\%\) of the probability mass on the right side of zero. Conversely, the programming_experience predictor’s \(\beta \) estimate is centered around zero, indicating no or minimal effect. These results imply that a high debt density in the preexisting code base causes developers to use non-descriptive variable names and that the developers’ professional programming experience has little to no effect on the descriptiveness of their variable names.

Figure 7 shows the distribution of the estimated rate of good variable naming for a developer with ten years of professional programming experience introducing ten variables. According to the depicted sample, a developer is \(458\%\) more likely to use a non-descriptive variable name whenever they introduce a variable in the presence of TD. Given that this model was developed in accordance with the restrictions arrived at in our causal analysis (Section 5.1.7), it is possible to make causal inferences regarding the effects of TD level (but not experience level).

6.3 SonarQube issues

The \(\beta \) estimates presented in Table 2 (Model 4) suggest that the debt level of the system has a notable effect on the number of SonarQube issues introduced by the subject with the \(95\%\) credible interval of the estimated high_debt_version parameter outside of zero. The programming_experience \(\beta \) estimate is centered closer to zero, but the uneven distribution suggests that it could potentially have some effect.

The outcome distributions depicted in Fig. 8 show a moderate effect of the debt density of the system on the number of SonarQube issues introduced by the participant. The, somewhat unreliable, estimated effect of professional programming experience generates noticeable differences in the prediction for various experience levels, with more experience being expected to introduce fewer SonarQube issues. The effects shown by the graph correspond to developers on average introducing \(117\%\) more issues in the high debt version. Given that this model was developed in accordance with the restrictions arrived at in our causal analysis (Section 5.1.7), it is possible to make causal inferences regarding the effects of TD level (but not experience level).

6.4 System quality rating

As shown by Table 2 (Model 5) this model estimates considerable effects of TD level (high_debt_version) and professional programming experience on the way subjects rated the the system in terms of quality (maintainability). In both cases, the \(95\%\) credible intervals do not cross zero. These results show that participants tended to rate the high TD as worse and more experienced programmers also, on average, give all systems a lower rating.

The outcome distributions differ noticeably between the high and low debt cases in both our low experience (Fig. 9) and high experience (Fig. 10) cases. The average difference is about one unit on the Likert scale. The model also predicts that a developer with ten years of experience is \(197\%\) more likely to rate the high debt system as worse than the low debt system, as opposed to the other way around.

6.5 Self-reported submission quality

Table 2 (Model 6) shows a variety of small effects with high uncertainty. However, the effect of reused_logic_validation:false is significant enough that the \(95\%\) credible interval does not cross zero, indicating that the amount of duplication is strongly linked to how participant rated their own work.

The distribution of differences between the participants’ quality rating of their own work, simulated for a bad submission with a lot of introduced TD and a good submission with close to no introduced TD according to our measurements. The vertical lines represent the means. Difference as: bad submission rating − good submission rating

Further insight is offered by Fig. 11, which shows the distribution of differences between outcomes generated under the assumption of a bad submission and good submission. In the simulated case, the model estimates that participants are \(114\%\) more likely to rate the bad submission as worse than they were to rate the good submission as worse.

7 Qualitative assessment

Our results concerning the introduction of further technical debt (TD) in the form of logic duplication, bad naming of newly created variables, and other issues discovered through the use of SonarQube all showed considerable effects:

-

Developers are \(102\%\) more likely to duplicate existing logic in our systems with high levels of TDFootnote 16 (Section 6.1).

-

Developers are \(458\%\) more likely to assign a variable a non-descriptive name in systems with high levels of TD16 (Section 6.2).

-

Developers introduce \(117\%\) more SonarQube issues in our systems with high levels of TD16 (Section 6.3).

All of these were established by investigating \(95\%\) credible intervals. Taken together, it is very improbable that they are all false positives. Additionally, the results of our causal analysis (see Section 5.1.7 show that we can infer the causality of these relationships, i.e., pre-existing TD is the cause of the effects.

We found no noticeable effect of TD on a subject’s propensity to implement utility methods for the new classes they constructed. Similarly, we found no significant effect of pre-existing TD on the likelihood of participants correctly documenting their new code. This does however not mean that we can reject the possibility of such relation as the probability density, while crossing zero, is quite wide showing a large uncertainty in these estimates.

The experiment design, i.e., bad variable names and code duplication coinciding, makes it impossible for us to discern the effects of the former from the latter. However, the estimated impact on the introduction of general code smells suggests that broken windows theory effects are not limited to within specific types of TD items. That is, pre-existing bad variable names do not just induce the developer to implement additional poorly named variables, but also other examples of TD. This finding is interesting because it suggests that developers mimicking previous work is not a sufficient explanation of BWT effects on its own.

Furthermore, our analysis of the participants’ evaluation of their work (Section 6.5) reveals a tangible correlation between the way a subject rated their submitted changes in terms of quality (maintainability) and some of our various measures of TD. This correlation shows that subjects were not completely oblivious regarding the TD they had introduced.

7.1 Thematic analysis

The data set forming the basis of our thematic analysis were field notes taken during six follow-up interviews as well as additional comments received via email or other text messages from a further four participants. This section presents the results of that analysis.

We arranged the themes derived through this process into the thematic map that constitutes Fig 12. A connection between two themes represents them appearing together in the interview material. The manner of their connection may differ between interviews.

The oval themes represent the ones that we enforced as part of our deductive approach. They are the focal points of our inquiry, the TD density or quality (maintenance) of the system, and the same properties of the new code they produce.

The subjects were clearly bothered by the issues in the high TD version. Some complained that the short and non-descriptive variable names made the system difficult to understand, which affected their productivity. While expected, it is a solid indication that bad variable names are a good example of TD. We also found that some expressed a sense of discouragement or loss of enthusiasm in relation to system quality exemplified by comments such as “It was such a drag with all the bad variable names ...//...I almost didn’t finish the task”.

Refactoring was a topic frequently brought up and is very much a topic of interest in the context of TD propagation. The task descriptions explicitly stated that subjects were free to alter other parts of the system as they saw fit, and most of our interviewees had taken advantage of that opportunity. Interestingly several of them connected system quality to their decision to refactor, while some argued that refactoring a system that was in such a sorry state was not worth their time, others expressed that the obvious faults were what prompted them to start refactoring. One said that the quality issues caused him to go on a “refactoring spree” and another that the noticeable faults made them examine the system more closely, in search of more quality issues in need of attention.

Views on refactoring varied significantly, some older and more experienced developers favored an approach where refactoring efforts are mainly relegated to specifically dedicated time-slots: “You should devote specific sprints to refactoring and not mix it with feature development”. In their view, mixing refactoring work with new feature development results in productivity losses and results in individual developers exposing the project to the risks they associate with refactoring (instead, they favor team deliberations regarding significant refactoring decisions). Others favored a more flexible approach where they fix problems as they come across them, though admitting that “going down the rabbit hole” (having to follow a seemingly never-ending trail of interdependent issues) is a definite risk, especially when dealing with older systems whose architecture is reminiscent of a “ball of yarn”. Finally, high test quality and coverage were mentioned as a way of enabling more aggressive refactoring strategies by mitigating the associated risks.

Several participants noted that they had trouble deciding on how to name the variables in their new class when working on the high debt system. While they recognized that the current naming scheme was terrible, using a different one for their new variables would make the code less uniform, which could potentially be more confusing. One said that “it’s important to follow the style of the code already there to reduce bugs”. The optimal solution, they agreed, would be to fix all variable names (which some actually did), although one expressed regret over not having done it. This participant went on to admit that their discouragement, as a result of the quality issues, had made them focus on just getting the tests to pass as soon as possible to “be done with it”.

Our impression is that those who volunteered to be interviewed were more enthusiastic about the project, generally put more effort into their submissions than the average subject, and were excited to discuss the qualities of their particular solution.

8 Discussion

As summarized in Section 7 our results clearly show that developers’ propensity to introduce TD is higher while working in a system with more preexisting TD (RQ1). Moreover, we see the same effect when only looking at introduced TD of similar types (RQ2), as well as dissimilar types (RQ3) of TD compared to the preexisting TD. The thematic analysis and interview material further allow us to reason about the underlying mechanics of this behavior.

During the follow-up interviews, participants did express that the preexisting flaws made them less enthusiastic about the task at hand. One went as far as saying that they “hardly had the will to finish the task” and merely made sure the tests passed (they were then pleasantly surprised that the system they were assigned in their second task was of significantly higher quality). This is in line with previous findings of Besker et al. (2020) and Olsson et al. (2021). Interestingly, and seemingly at odds with this statement, the results indicate that debt level does not appear to have significantly affected a subject’s propensity to drop out of the experiment.

Considering that the code in a high TD system is both longer and harder to read, we might expect participants to spend more time in those systems. The results indicate no significant effect of TD on the amount of time the participants allocated towards completing their task. Perhaps this is an indication that developers are averse to compensating the productivity loss induced by TD with additional time, resulting in the loss of quality. This is further corroborated by some comments from our interviews, such as “I’ll just run my tests and be done with it” (when discussing their effort put into the high TD system).

While we found that mimicry alone is not enough to explain the additional TD introduced (Finding 3), it is likely the case that it is a significant driver of TD propagation in general. Given the comparatively large effects on code reuse and variable naming, it is also likely that mimicry is a large part of the effects observed in this study. One participant admitted that “you think that someone else thought it should be this way, and then you follow in the same tracks” and several others raised the issue of code uniformity. While it may be clear that a system contains issues, if the defects are systematic, it may not necessarily be the case that breaking that uniformity is preferable. For example, using a different (but better) variable name to describe something already existing in adjacent classes could be more confusing than a bad (but consistent) naming scheme. We did anticipate this, and it is why we discerned new variables from copied variables. However, although there is no practical advantage to mimicking the bad naming scheme for new variables, it may be that some subjects preferred the aesthetics of consistently short and non-descriptive variable names.

Another takeaway from our result is that developers were at least partially aware of the TD they introduced (Finding 4). Besker et al. (2019) previously found that developers often felt forced to introduce new TD due to existing TD, which implies an awareness of their TD creation. However, we presented participants with tasks that could feasibly be solved without introducing further TD, i.e., they were not forced to do so. Our results suggest that developers are somewhat aware of their introduction of TD even under such circumstances. From a practical perspective, this may be good news; it is likely easier to achieve change in a conscious rather than unconscious behavior.

Interestingly, the predictive values of the background factors gathered by our survey were small and uncertain enough that we could safely exclude them from the final models. This, of course, could be a symptom of our relatively low sample size (\(N=51\)). Years of professional programming experience, which we included as a predictor in the final version of all models measuring TD outcomes, did show a small effect in the expected direction in each case (i.e., more experienced developers introduce less TD on average). However, it is critical to note that we only ever investigated background factors in relation to the amount of TD introduced, but not the effect of background factors on susceptibility to BWT effects. It could be that experienced developers are less likely to be affected by existing TD, but that would require the inclusion of interaction effects and our sample size would not allow for such a reckless approach.

Finally, although we argue that our results have answered the question of if there is ever a BWT effect, the next question may be: “is there ever not a BWT effect in software engineering?” We suspect that such exceptions are possible, e.g., if a developer is not aware of the existence of a more optimal solution that would reduce their maintenance workload. In other words, could they identify a broken window, having never seen one that is unbroken? The broken window of the BWT is a message indicating general indifference; it stands to reason that for a true BWT effect to occur, the disorder must be apparent to the observer.

Given that we find convincing evidence of BWT effects, companies and software practitioners should continue striving toward keeping the TD density of their systems low. This is by no means a new insight; it has been advocated for by pioneers of the software industry for many years. However, this study is the first to empirically validate the claims of the advocates of the BWT and corroborate their anecdotal evidence. We believe our results are a significant contribution toward establishing the generalizability of the BWT across disciplines.

8.1 Suggestions for future research

To isolate and measure the causal effect described by the BWT this study has been designed as a carefully controlled experiment in an artificial setting. While necessary to achieve our goals, a natural continuation of the work and conclusions laid out in this paper would be to do field experiments as well as observational and longitudinal studies along similar lines of inquiry to better understand how this effect is influenced by other factors of software development. Such research would help us define best practices and models to handle technical debt in a software engineering context by better understanding the mechanics influencing its introduction and growth.

At the core of the BWT is that the broken window itself is not the primary cause of the undesired BWT effects; it merely communicates an atmosphere of neglect and indifference that indicates that there are no repercussions to the behavior that “broke the window”.

The state of the (proverbial) window is just one such indication, although perhaps the most important one. The possibility of repercussions can also be communicated by the context of the window. The broken windows may still be there, but certain practices and circumstances may inoculate developers against their effects. Examples of such factors could be peer reviewing, continuous integration, and acknowledgment of TD issues (such as a “fix me” comment). Examining such contextual factors would be the next major step towards understanding the BWT in software engineering. This could be challenging to accomplish in a controlled experimental setting; it might require a longitudinal field experiment.

Furthermore, Wilson and Kelling (1982), as well as Hunt and Thomas (1999), describe a long-term cultural deterioration as a result of BWT effects. While our results support the central mechanism of the BWT, we can not confirm whether or not “broken windows” have a lasting impact on the culture of a project group, e.g., if a team is assigned to work with high TD systems for some time, will that affect their performance when switching back to a system of low TD density? This is a question we would like to see answered by further research.

Several of the studies that came up during our related literature review (Section 4) presented models of TD that did not even entertain the possibility of BWT effects. Rather, they exclusively discuss the dynamic of TD forcing new TD implementation. We deem our results convincing enough that TD researchers should consider them in future models.

While the BWT was the focus of this study it is only a small part of what may influence a developer in their craft. Further exploring other lines of inquiry relating to developer behavior theory and behavioral design, in the context of software development, will be essential to form effective practices for developer experience.

Finally, we would welcome attempts to replicate the results of our experiment and variations of it using different systems and other examples of TD, perhaps with a sample size sufficient to investigate interaction effects and more than two levels of TD density. To facilitate any such efforts, we have made all our research materials publicly available.Footnote 17

8.2 Threats to validity

This section discusses threats to the validity of our study and is divided into four parts as per the recommendations of Wohlin et al. (2012)

8.2.1 Internal validity

The choice of a simulated experiment allowed us to achieve a high internal quality as we measure the effect of a specific treatment in an environment we control. We also took multiple additional measures to ensure that nothing other than the TD itself influenced the developers to take different actions in the two different tasks they were assigned.

We designed the experiment to ensure that it was always possible to measure the difference between a subject’s performance in a high debt system and a low debt system, reducing the influence of factors that we could not control. These factors include, but are not limited to, the time of day of the experiment, which hardware they used, and their physical environment.

Another threat to internal validity is learning effects. However, randomization of the order of the scenarios and debt levels should have neutralized the impacts of any such effects on our results.

In an isolated experiment such as this, it could have been that the subjects were not particularly meticulous in their work since they did not care about their performance. Conversely, in practice, the opposite is more often the case. In experiment and survey research, respondents frequently elicit social desirability bias. That is, they may answer or perform in the manner that they think is socially desirable (Phillips and Clancy 1972). We would argue that since low TD code would be desirable, the subjects are more likely to have overperformed rather than the opposite.

The use of a fully automated research tool ensured that we could not influence the subject in any way while participating in the experiment. The research tool presented participants with the same interface, information, and task description no matter whether they were coding in the high debt system or the low debt system. We also denied any questions from participants during their participation.

8.2.2 External validity

Since we had to rely on volunteering subjects, i.e., something more akin to convenience sampling rather than random sampling, there is reason to doubt that the sample was fully representative of the population (software developers). However, the fact that background factors showed little predictive power indicates that the skew in the sample is unlikely to have affected our results.

Another concern regarding external validity is how representative our system and research environments were of real systems and natural environments; there are some disparities, e.g., the lack of symbol searching in the environment and the size of the scenarios. The contrived setting of this study could impact the generalizability of our findings. But, as noted by Stol and Fitzgerald (2018), there is an inherent trade-off between the generalizability of a study conducted in a more natural environment and the precision with which measurement of behavior can be achieved in a study using a more controlled and contrived setting. The chosen research strategy allowed us to measure how high TD levels affect the behavior of developers, with, we would argue, high precision. This would not have been possible in a natural setting. The qualitative part of the study also helped improve the generalizability of the study as it included a question about BWT in a neutral setting, where multiple participants expressed their support of the theory.

8.2.3 Construct validity