Abstract

Source code comments are a cornerstone of software documentation, facilitating feature development and maintenance. Well-defined documentation formats, like Javadoc, make it easy to include structural metadata that is used, for example, to generate documentation manuals. However, the actual usage of structural elements in source code comments has not been studied yet. We investigate to what extent these structural elements are used in practice and whether the added information can be leveraged to improve tools that assist developers when writing comments. Existing research on comment generation traditionally focuses on the automatic generation of summaries. However, recent works have shown promising results when supporting comment authoring through next-word prediction. In this paper, we present an in-depth analysis of commenting practice in more than 18K open-source projects written in Python and Java, showing that many structural elements, particularly parameter and return value descriptions, are indeed widely used. We discover that while a majority of these descriptions are rather short at about 6 to 9 words, many are several hundred words in length. We further find that Python comments tend to be significantly longer than Java comments, possibly due to the dynamically typed nature of the former. Following the empirical analysis, we extend an existing language model with support for structural information, substantially improving the Top-1 accuracy of predicted words (Python 9.6%, Java 7.8%).


1 Introduction

Source code comments are an important part of any software project, as they are essential for developers to understand, use and maintain projects. However, writing comments is a tedious, manual process and, as a result, software is often poorly documented (Aghajani et al. 2020). Research has long attempted to reduce this effort through automated comment generation. The earliest works focused on generating function and class comments (Haiduc et al. 2010; Moreno et al. 2013) through custom heuristics and templates. More recent data-driven approaches are inspired by the field of machine translation and use deep learning and natural language processing to generate summaries of the source code (Hu et al. 2018; Wan et al. 2018; Le Clair et al. 2019). What all these approaches have in common is that they focus on the first sentence of the documentation comment. This sentence is treated as the summary and the rest of the comment is ignored. In practice, however, comments cover a wide range of topics, from functional descriptions to discussions of the rationale behind design decisions (Writing system software: code comments 2021; Pascarella and Bacchelli 2017). It is also well known that developers spend more than half of their time on source code comprehension activities (Xia et al. 2018). While a good summary can be helpful, it is just the tip of the iceberg and represents only a small fraction of the whole comment. Unfortunately, automatically generating complete comments is still out of reach for current machine learning techniques.

Several documentation formats exist that introduce structural elements for languages such as Python and Java. These elements allow the organization of comment contents into various sections, for example, said summary, but also a long description, a description of parameters and return values, details about possible runtime errors, or other fields that are specific to the programming language or the documentation framework. A popular example of such a format is Javadoc for documentation comments in Java code. Having structural elements does not only improve the readability of a comment, but also enables automated processing to automatically generate API documentation or to provide better tool support in development environments.

In this paper, we investigate the idea that leveraging the structural information available in comments can improve systems that assist developers in writing comments. Instead of generating full comments, though, we keep the developer in the loop. Previous work has introduced a promising comment-completion tool that works in a semi-automated way (Ciurumelea et al. 2020) and that, similar to email clients (Chen et al. 2019) and messaging apps, suggests likely next words to reduce repetitive typing and the required time and effort. We enhance this previous approach by considering the section information as additional context to improve the quality of the predicted comment completions.

In this study, we answer the following research questions to investigate the context and the feasibility of our vision:

- RQ1: How structured are function documentation comments?
- RQ2: Can structural information be used to improve the accuracy of a comment completion tool?
- RQ3: How dependent are the results on a concrete programming language?

To answer these questions, we have compiled two datasets that contain more than 14K Java and Python projects. We have comprehensively analyzed these datasets and measured various characteristics of the contained comments. Our results show that structured documentation comments are indeed widely used in practice; for example, we could identify a formatting style for over half of the Python projects. While summaries, also called short descriptions, are indeed the most widely used comment section across both languages, long descriptions, declarations of parameters and return values, and explanations of possible exceptions/raises are also frequently contained in a comment.

These results made us confident that it is possible to improve the accuracy of the completion tool, so we trained several variants of a language model that uses the section information. For the Python dataset, the evaluation results show improvements in Top-1 accuracy over the baseline that range from 4.1% for the Long description section to 9.6% for the Return section. We also found that some sections are significantly more predictable than others: the absolute Top-1 accuracy of the Python base model, represented by the Context LM, ranges from 0.194 (for the Long description) to 0.323 (for the Raises section).

A comparison across the Python and Java datasets has revealed several similarities. Comments in both languages are structured; however, while Python has a diverse set of formatting styles, Java is dominated by Javadoc. We found that Java comments contain more structural elements than Python comments and that documentation comments of Java projects are more complete and include the different sections more often. The language model also achieves similar results for Java and Python, which suggests that our initial observations can be generalized to other programming languages. However, further research is required to solidify these initial findings and replicate them in a larger array of programming languages.

Overall, this paper presents the following main contributions:

- An empirical study over two programming languages that investigates the use of function documentation formats in practice.
- An extension of an existing language model for the task of code comment completion that leverages the additional section structure to improve the accuracy of the generated completion suggestions.
- A comparative evaluation of the study and the language models for two programming languages to strengthen our findings.

We have released a replication package that contains the datasets and the corresponding source code we used for the analysis of the projects and the training and evaluation of the neural language models (Replication package 2022).

2 Overview

Motivated by an empirical study on comment sections, this paper proposes a novel comment suggestion engine that is assessed in an extensive evaluation in two different programming languages. To clarify the methodology of the paper, Fig. 1 provides an overview of the paper structure and illustrates the different high-level parts.

The first part of this paper is an empirical study on source code documentation practices in the wild. We base this initial investigation on the CodeSearchNet dataset (Husain et al. 2019b). This dataset is clean and ready to use, which provides an excellent starting point for our research. The dataset also covers different programming languages, so it is possible to compare results on a similar ground. The study that is presented in this paper is based on Java and Python to cover two popular programming languages with different paradigms. We will introduce more details about the two (sub-)datasets in Section 3.1.

We preprocess the existing data for both languages and establish a pool of source code/comment pairs. Details about this preprocessing will be provided in Section 3.2. Based on this dataset, we can answer RQ1 by investigating several aspects of documentation comments: which format guideline is most popular (Section 3.3.1), how often are docstrings used/missing (Section 3.3.2), which sections are commonly used in docstrings (Section 3.3.3), how consistent is the documentation of method parameters (Section 3.3.4), and how long are the descriptions of tag-like structures (Section 3.3.5).

The results of these analyses are used to inform our design of the completion engine that is presented in Section 4. We start with two neural language models for comment completion that have been introduced in previous work and introduce three extensions that leverage the additional information that is available when section information is taken into account: both models will be extended through an additional embedding layer to encode the enclosing section in the comment. While the base model only considers the word sequence, the context model also considers the method body as input. The third model is an ensemble technique that contains multiple instances of the context model, which are all trained/specialized for one particular comment section.

To instantiate the different models, we have established another dataset. While the CSN dataset was extensive for Java, we found the extent of the Python dataset limited and decided to complement it with additional data that we mined from GitHub. Section 5 contains detailed information about the second dataset and our approach to training the different language models. Using the resulting models allowed us to investigate the two final research questions. As a first step, we investigate in Section 6, for Python, whether the additional section information can actually improve the performance of the completion suggestions (RQ2). In the next step, we answer RQ3 by shedding light on the question of whether these results also generalize to other languages like Java (Section 7).

3 Structure Analysis of Function Documentation Comments (RQ1)

We noticed during our previous work (Ciurumelea et al. 2020) that Python documentation comments (docstrings) often have a well-defined structure as a result of following a specific formatting style, and are not only simple natural language descriptions of the accompanying code. As this observation seems to be relevant for approaches that analyse the content of comments or build tools for supporting developers in writing comments, we decided to further investigate how common formatting styles are and how they are used in practice. While past research has studied the content of comments (Pascarella and Bacchelli 2017) as well as the co-evolution of source code and comments (Fluri et al. 2007; Wen et al. 2019), it did not consider the structure of documentation comments. In addition, we also investigate the prevalence and size of documentation contained in different sections.

Our previous work has focused on documentation comments for Python functions; however, another popular programming language, Java, also uses a formatting style, called Javadoc (How to write doc comments for the javadoc tool 2021), for writing documentation comments. An accompanying tool, also named javadoc, exists for automatically extracting these comments from the source code into different kinds of output formats. While formatting styles for other programming languages do exist, to the best of our knowledge these are less common, with fewer available open-source projects. Therefore, we restrict our investigations to these two programming languages.

In the next sections, we describe the dataset and the process for parsing the documentation comments and functions, and finally present the findings of our analysis. We analyse the prevalence of the different formatting styles for the Python dataset. Next, we study whether the Python and Java documentation comments have the structure we expect and investigate where the parameter, return and raises/throws sections should be included but are missing. We also analyse the distribution of the different sections among the projects in our dataset, comparing the findings between the two programming languages. Additionally, we look at how complete and correct the parameters sections are. Finally, we collect statistics on the size of section-based comments, and investigate whether parameters for which a dedicated section is missing are mentioned elsewhere in the docstring description.

We would like to note that throughout the paper we often use the term docstring to refer to a documentation comment accompanying a method for Java and/or a function for Python. When necessary we also specify the corresponding programming language.

3.1 Dataset

To study the structure of documentation comments we use the Python and Java datasets provided during the CodeSearchNet challenge (Husain et al. 2019b), which aimed to explore semantic code search, i.e., retrieving relevant code given a natural language query (Husain et al. 2019a). This data is suited to our goal, as it provides comment-and-code pairs at the function level. It comprises 4,769 Java repositories with 136,336 distinct files and 13,590 Python repositories with 92,354 files, respectively.

To build the corpus, the authors of Husain et al. (2019b) chose publicly available open-source non-fork GitHub repositories that were identified as libraries (used by at least one other project), and sorted by “popularity” represented by the number of stars and forks.

The following filtering steps for selecting the comment-function pairs were applied:

- only include functions that have an associated documentation comment;
- filter out pairs for which the comment is shorter than 3 tokens;
- filter out pairs for which the function is shorter than 3 lines;
- remove functions with the word “test” in the name, as well as constructors and extension methods such as __str__ in Python or toString in Java;
- remove duplicates from the dataset using an algorithm described by Allamanis (2018) that is based on Jaccard similarity, keeping a single copy.
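The filtering steps above can be sketched in a few lines of Python. This is only an illustration and not the CodeSearchNet implementation: the function names, whitespace tokenization, the set of excluded method names and the 0.8 similarity threshold are all assumptions, constructors are not handled, and the pairwise Jaccard check is a simplified stand-in for the algorithm of Allamanis (2018).

```python
# Assumed examples of excluded methods; the text names only __str__ and toString.
EXCLUDED_NAMES = {"__str__", "toString"}


def keep_pair(name: str, code: str, comment: str) -> bool:
    """Apply the per-pair filters (everything except deduplication)."""
    if not comment.strip():
        return False  # no documentation comment at all
    if len(comment.split()) < 3:
        return False  # comment shorter than 3 (whitespace) tokens
    if len(code.splitlines()) < 3:
        return False  # function shorter than 3 lines
    if "test" in name.lower():
        return False  # test functions
    if name in EXCLUDED_NAMES:
        return False  # extension methods
    return True


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity of the token sets of two code snippets."""
    set_a, set_b = set(a.split()), set(b.split())
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


def deduplicate(codes, threshold=0.8):
    """Keep a single copy of near-duplicates (quadratic, for illustration only)."""
    kept = []
    for code in codes:
        if all(jaccard(code, other) < threshold for other in kept):
            kept.append(code)
    return kept
```

A production pipeline would need a scalable near-duplicate detector; the quadratic loop here is only meant to make the role of the similarity threshold visible.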

In Table 1 we include statistics for the analysed datasets on a repository level. These include the files and functions that remained after the filtering steps. The code is tokenized using TreeSitter (GitHub’s universal parser), while the documentation comments are tokenized using NLTK’s tokenizer (Nltk sentence tokenizer 2021). Although software-specific tokenizers are subject to ongoing research (e.g., S-POS (Ye et al. 2016)), these are not as readily usable, and we found NLTK sufficiently precise. A full word is considered a token, as are punctuation signs and mathematical operators. As an example, the function with the largest number of code tokens for Python includes a large number of matrix multiplications.

3.2 Parsing Documentation Comments

The current best practice is for Python and Java function documentation comments to follow a specific style and structure. Nevertheless, this is largely just a recommendation, and tools enforcing docstring formatting are not widely applied, even though they exist. To understand the structure of these comments, we first need to parse them and extract the different sections, as described next.

3.2.1 Parsing Python Docstrings

Python documentation strings or docstrings are string literals that occur as the first statement in a function or method and can be accessed through a special attribute at runtime. The goal of a docstring is to summarize the function behavior and document the arguments, return value(s), side effects, exceptions raised, and any restrictions related to when the function can be called (Pep 257 – docstring conventions 2021). In Listing 1 we include an example of a function docstring written using the Google style format. For Python, the official recommendation is to use the reStructuredText (reST) style for formatting docstrings as mentioned in Pep 287 – restructuredtext docstring format (2021). Nevertheless, in practice several other formatting styles are also used, like Epytext (The epytext markup language 2022), Google (Google docstring style 2022) and NumPy (Numpy docstring style 2022). All these formats contain the following main sections (Numpydoc docstring guide 2021). Some sections are specified by corresponding markup indicators (“tags”):

- Short description: a one-line summary;
- Long description: an extended summary clarifying the functionality;
- Parameters: one tag for each function parameter, describing the argument including its type;
- Return: a tag explaining the return type and value;
- Raises: one tag for each possible exception raised by the function and the conditions under which it is raised.
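To make the five sections concrete, the following hypothetical function carries a Google-style docstring that contains each of them. The function and its wording are invented for illustration and do not reproduce any listing from the paper; the docstring is available at runtime through the `__doc__` attribute.

```python
def scale(values, factor=1.0):
    """Multiply every value by a constant factor.

    The input list is not modified; a new list is returned so that the
    caller can keep using the original values.

    Args:
        values (list[float]): The numbers to scale.
        factor (float, optional): The multiplier. Defaults to 1.0.

    Returns:
        list[float]: The scaled numbers, in the original order.

    Raises:
        TypeError: If ``values`` contains a non-numeric element.
    """
    scaled = []
    for value in values:
        if not isinstance(value, (int, float)):
            raise TypeError(f"not a number: {value!r}")
        scaled.append(value * factor)
    return scaled
```

The first line is the Short description, the following paragraph is the Long description, and the `Args`, `Returns` and `Raises` headers introduce the tag-like sections.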

To extract the different sections, we use a modified version of an open-source parser for Python docstrings called docstring_parser (Docstring parser 2021). It supports the reST, NumPy and Google formats, and we extended it to also parse docstrings using Epytext. The recommended best practice is to always start a docstring with a one-line summary. Then, if necessary, more details can be added as a Long description after an empty line. We observed that, following this recommendation, the parser assumed that the first line of a docstring is always the Short description and that everything else that does not start one of the other sections belongs to the Long description. In our dataset, developers would sometimes write first sentences that are longer than a single line; the parser would then cut the sentence and erroneously interpret the first part, up to the newline character, as the Short description and the rest as the Long description. We modified the parser to interpret the first sentence as the Short description using the NLTK sentence tokenizer (Nltk sentence tokenizer 2021) and, if there are additional sentences, to allocate them to the Long description. This choice was a trade-off between what the standard recommends and how developers write comments. It seemed wrong to cut a sentence at a newline, so we decided to extract the first sentence even if it extends beyond the first line. Conversely, if the first line contains multiple sentences, just the first one is interpreted as the Short description. The first sentence is longer than a single line in 18% of the comments with Short descriptions, while the case in which it is shorter than the first line occurs in around 6% of the cases. We also noticed that when the first line contains multiple sentences, the extra sentences represent additional explanations and would be more appropriate for the Long description.
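The difference between the line-based and the sentence-based split can be illustrated with a small sketch. It is not the modified docstring_parser code: the function names are hypothetical, and a naive regular expression stands in for the trained NLTK sentence tokenizer, so abbreviations such as "e.g." would be split incorrectly here.

```python
import re

# Naive sentence boundary: '.', '!' or '?' followed by whitespace.
# Assumption: this replaces NLTK's trained sentence tokenizer for brevity.
_SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def split_by_first_line(description: str):
    """Line-based behaviour: the first line is the Short description."""
    first, _, rest = description.strip().partition("\n")
    return first.strip(), rest.strip()


def split_by_first_sentence(description: str):
    """Sentence-based behaviour: the first sentence is the Short description."""
    text = " ".join(description.split())  # undo line wrapping
    parts = _SENTENCE_END.split(text, maxsplit=1)
    short_desc = parts[0]
    long_desc = parts[1] if len(parts) > 1 else ""
    return short_desc, long_desc


doc = ("Compute the mean of the values, ignoring\n"
       "missing entries. Returns NaN for empty input.")
print(split_by_first_line(doc))
print(split_by_first_sentence(doc))
```

With the line-based split, the Short description ends mid-sentence at "ignoring"; with the sentence-based split, it ends at "missing entries." and the second sentence becomes the Long description.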

After these changes we perform a preliminary evaluation for the different sections to understand how well the parser works. We want to assess whether parsing errors might influence our conclusions, while we do not intend to perform a full evaluation of the parser itself, as this would be outside of the scope of this paper. We choose for each section a sample of 100 docstrings and manually analyse them. To choose this sample we first randomly select 500 projects from our dataset, then for each section we iterate through the list of projects and randomly select one docstring per project that contains the specific section until we obtain a sample of 100 docstrings per section. For each section we obtain a different sample of 100 docstrings and the corresponding extracted content. One author read each docstring and classified it as correct/incorrect and marked the incorrect examples according to the identified problems. Table 2 contains the results of our evaluation. Note that the Incorrect Cases column only includes a subset of the identified cases for reasons of brevity.
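The sampling procedure described above could look roughly as follows. The data layout (a mapping from project name to a list of parsed docstrings, each a dict from section name to extracted content), the function name and the fixed seed are assumptions made for this sketch.

```python
import random


def sample_docstrings(projects, section, n_projects=500, n_samples=100, seed=0):
    """Pick at most one docstring containing `section` from each sampled project."""
    rng = random.Random(seed)
    names = sorted(projects)
    # Step 1: randomly select the projects to draw from.
    chosen = rng.sample(names, min(n_projects, len(names)))
    sample = []
    # Step 2: iterate through the projects, taking one matching docstring each.
    for name in chosen:
        candidates = [doc for doc in projects[name] if doc.get(section)]
        if candidates:
            sample.append(rng.choice(candidates))
        if len(sample) == n_samples:
            break
    return sample
```

Because every call re-seeds the generator, the same set of projects is selected for each section, while the chosen docstrings differ per section.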

For the Short description, as mentioned, we modified the parser to split at the sentence level. We use a well-established sentence tokenizer from the NLTK package trained for the English language. Nevertheless, this relies on punctuation being used correctly and can return an incorrect sentence split if there is no punctuation or if abbreviations using a “.” character are included. From the sample of 100 docstrings, in 91 of the cases the parser correctly extracts the first sentence as the Short description. In 7 cases, the tokenizer does not split the sentences correctly, and in 2 cases the docstring is not written in English, although that does not necessarily mean that the extraction is not correct. If the docstring contains correct punctuation similar to the English language, the tokenizer is still able to split the sentence correctly.

For the Long description, we obtain correct results in 81 cases. In 7 cases the sentence split is not correct, in 9 cases the docstring content is not formatted correctly and the Long description contains descriptions of return or parameter values. In 2 cases the docstring is not written in English and in only one case the parser made an error and terminated the Long description too early, thus skipping some of its content.

For the Parameters section, the parser extracts for each parameter the name, the type, whether it is optional or not, and a potential default value. In this case it is able to correctly extract the parameter tag descriptions in 77 of the docstrings, of which two contain an empty description. The most common mistake we identified is that the parser is not able to extract the type of the parameter correctly; nevertheless, the parameter name and description are returned correctly. We encountered one example that was not written in English, and in two cases the developer included extra content after the parameters section, which the parser erroneously attributed to the last parameter description.

For the Return section, the parser extracts the type and the corresponding description. The parser correctly extracts this information in 76 of the cases; however, in 5 of these cases the developer documents returning None, and in 1 case the description is empty. The most common mistake caused by wrong formatting is that the type is not correctly detected and is included in the description. In 4 of the cases, the return value is a tuple that is not formatted correctly; therefore the parser only extracts one return value.

For the Raises section, the parser returns a tuple with a type and description for each potential error that is documented. It works correctly in 74 of the cases, although for 2 cases the description section is left empty. The most common mistake encountered is that the type is not correctly identified, either because of a formatting error or because the parser is not able to extract it; the description, however, is correctly identified. In 1 more case the docstring is not written in English.

We include in Table 3 several examples that could not be parsed correctly. For the Short description, the sentence tokenizer incorrectly detected a sentence split because of the ‘.’. For the Long description example, it is a bit difficult to understand what the developer meant to write; it seems that they used the wrong punctuation. For Parameters, the parser extracts tuples of the form: (name, description, type, is optional). In the example it is clear that the developer wanted to specify the type ‘bool’; however, this should have been separated from the rest of the description by the colon character and not the dot character. Finally, the Raises sections are extracted by the parser as tuples of the form: (type, description). However, as the exception type is not followed by a newline, as the formatting style specifies (Example numpy style python docstrings 2022), it was wrongly extracted together with the description.

After performing this analysis, we can conclude that the parser works well enough for our purposes for the Python programming language. The most commonly identified problem is that the parser is not able to correctly extract the type from the Parameters, Return or Raises tag descriptions. However, we do not consider this information when analysing the structure of Python documentation strings; therefore it does not affect our results.

3.2.2 Parsing Java Documentation Comments

Doc Comments, also called Javadoc, is the de-facto standard format for writing comments in Java, intended for use with the javadoc documentation generator. Thus, unlike in Python, where multiple commenting styles exist, documentation comments in Java usually adhere to one specific structure. Furthermore, programming environments (such as IntelliJ IDEA or Eclipse) automatically generate correctly formatted comment templates for the developer to fill in. API documentation for well-maintained Java projects, be it as HTML pages or in other formats, is commonly generated entirely from the documentation comments written in the source code. Hence, the Java documentation specifically states two primary goals for commenting code: “as API specification, or as programming guide documentation” (How to write doc comments for the javadoc tool 2021), with the former being a priority.

Java documentation comments can be written in HTML, are placed directly before class, field, constructor or method declarations, and typically consist of two parts: 1. a description, where the first sentence is a short summary, followed by 2. block tags, such as @author, @param, @see, or @deprecated, among many others.

In this study, we are interested only in the description and the @param, @throws and @return tags. Observe Listing 2 for an example of a Java method that includes a correctly formatted comment. Note that unlike in Python docstrings, the type of a parameter is not included in a block tag (although it may be optionally linked to).

Because we wish to know the types of parameters for our study, we do not only parse the docstrings in our dataset, but also the method signatures. This way, we can determine (i) the types of documented parameters, provided their names are correctly mentioned in the block tag, and (ii) whether there are undocumented parameters in the method signature.
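The comparison between block tags and the method signature can be sketched as follows. The study itself parses Java code with javalang; this illustration instead takes the signature as a ready-made mapping from parameter names to types and uses a simple regular expression for the `@param` tags, both of which are assumptions made to keep the example self-contained.

```python
import re

# Matches "@param <name>" at the start of a Javadoc line (after the optional "*").
_PARAM_TAG = re.compile(r"^\s*\*?\s*@param\s+(\w+)", re.MULTILINE)


def check_params(javadoc: str, signature_params: dict):
    """Compare @param tags with the parameters declared in the signature.

    `signature_params` maps parameter names to their declared types.
    Returns (types of documented parameters, undocumented parameters,
    documented names that do not occur in the signature).
    """
    documented = _PARAM_TAG.findall(javadoc)
    typed = {n: signature_params[n] for n in documented if n in signature_params}
    undocumented = [n for n in signature_params if n not in documented]
    unknown = [n for n in documented if n not in signature_params]
    return typed, undocumented, unknown


doc = """/**
 * Returns the value for a key.
 *
 * @param key the key to look up
 * @param fallback value used when the key is absent
 * @return the stored value
 */"""
print(check_params(doc, {"key": "String", "strict": "boolean"}))
```

In this example `key` receives its type from the signature, `strict` is reported as undocumented, and `fallback` is documented although no such parameter exists in the signature.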

To parse the comments and methods, we use javalang (Javalang 2021). For the description section of each comment, we used NLTK’s sent_tokenize to separate the first sentence (the summary or what we call the Short Description) from the rest (what we call the Long description).

All HTML tags and entities were stripped from the comments using the following regex:

<.*?>|&([a-z0-9]+|#[0-9]{1,6}|#x[0-9a-f]{1,6});

We implemented a two-fold verification to ensure that the data is extracted correctly. First, we handcrafted a code and comment pair as a sample representative of the data set and manually extracted the expected data. Then, we wrote automated tests to ensure that our approach extracts the expected data. Second, we drew a random sample of 100 from the extracted data and had one author inspect each of them for discrepancies between the expected and extracted values. The extraction of Short and Long descriptions, Parameters, Return and Throws statements worked perfectly in 97 out of 100 cases, with zero errors in identifying and extracting the different docstring sections, as well as extracting the types from the source code where applicable. Due to a limitation in the parser, the type of two parameters and one return statement were extracted incompletely: when a type is specified using a fully qualified identifier, the parser only extracts the first path component, e.g., only java instead of java.util.Map.
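The HTML-stripping pattern from this section, written with explicit `{1,6}` quantifiers, can be applied with Python's `re` module as in the sketch below. The function name is hypothetical, and the sketch assumes that matches are simply deleted rather than decoded, as the word "stripped" suggests.

```python
import re

# HTML tags, plus named, decimal and hexadecimal character entities.
HTML_DEBRIS = re.compile(r"<.*?>|&([a-z0-9]+|#[0-9]{1,6}|#x[0-9a-f]{1,6});")


def strip_html(comment: str) -> str:
    """Delete HTML tags and entities from a documentation comment."""
    return HTML_DEBRIS.sub("", comment)


print(strip_html("<p>Gets the <b>name</b> of the user.</p>"))
```

Note that entities are removed and not replaced by the characters they encode, so "a &amp; b" becomes "a  b" with two spaces.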

3.3 Comments Sections Analysis

In this part of the paper, we perform an in-depth investigation into the nature of docstring usage in Python and Java in order to answer the following questions:

- What is the prevalence of the four different styles for Python docstrings?
- How often are docstring sections missing where they should be present?
- How common are the different sections among the projects in our dataset?
- How well do parameter docstrings match up with the parameters specified in the function or method (correctness and completeness)?
- How often are parameters described in the short or long descriptions instead of having their own tag description?
- How can the length of parameter, return and exception descriptions be quantified?

3.3.1 Distribution of Python Formatting Styles

A particularity of Python documentation comments is that there is no consensus on a single formatting style: several styles are recommended, and plain text docstrings are also accepted. To analyse the distribution of the formatting styles, we take the majority style among the docstrings of a project as the style of that project. We can only determine the style of a docstring if it contains one of the Parameters, Return or Raises sections, because the Short and Long descriptions look identical in all styles, as they do not contain any tag identifiers. We plot the obtained distribution in Fig. 2. We notice that reST is the most popular style, followed by the Google, NumpyDoc and finally the Epytext styles. In 6,551 projects we were not able to identify a style; this means either that the docstrings do not describe the parameters, return and raises values or that the formatting used does not follow any of the known conventions. The projects for which a style could not be identified are included in all the analyses that follow in the next sections, as otherwise the comparison with the Java dataset would not be fair.
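A majority vote over per-docstring styles might be sketched as follows. The marker patterns are simplified heuristics based on the well-known tag syntax of each style (`:param` for reST, `@param` for Epytext, `Args:` headers for Google, underlined `Parameters` headers for NumPy); they are assumptions for illustration and are far less thorough than a real docstring parser.

```python
import re
from collections import Counter

# Heuristic markers per style; checked in this order.
STYLE_MARKERS = {
    "reST": re.compile(r"^\s*:(param|returns?|raises?)\b", re.MULTILINE),
    "Epytext": re.compile(r"^\s*@(param|return|raise)\b", re.MULTILINE),
    "Google": re.compile(r"^\s*(Args|Returns|Raises):\s*$", re.MULTILINE),
    "NumPy": re.compile(r"^\s*(Parameters|Returns|Raises)\s*\n\s*-{3,}\s*$",
                        re.MULTILINE),
}


def detect_style(docstring: str):
    """Return the first style whose marker occurs, or None for plain text."""
    for style, marker in STYLE_MARKERS.items():
        if marker.search(docstring):
            return style
    return None


def project_style(docstrings):
    """Majority style among the docstrings whose style could be determined."""
    counts = Counter(s for s in map(detect_style, docstrings) if s is not None)
    if not counts:
        return None  # no Parameters, Return or Raises section was recognized
    return counts.most_common(1)[0][0]
```

Docstrings that consist only of Short and Long descriptions contribute no vote, which mirrors the observation that their style cannot be determined. Ties are resolved in favour of the style encountered first, which is an arbitrary choice of this sketch.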

3.3.2 Analysis of Missing Docstring Sections

We start by analysing whether the Python and Java documentation comments from our dataset have the structure we expect and investigate where the parameter, return and raises/throws tag descriptions should be included but are missing. We would like to emphasise that in our analysis we only consider function-comment pairs; therefore we only take into account functions for which the developer decided to write a comment. While we do not believe that a general rule to document all code is necessary, we also do not adhere to the saying that “good code is self-documenting”. By taking into account only function-comment pairs, we select only cases for which a comment was deemed necessary. Moreover, we do not see a valid reason why a comment should not be complete once it has been included.

To find the cases for which the Parameters, Return and Raises sections are missing when they should be included, we parse the docstrings and extract the different tag descriptions. Please note that we use the terms “Raises”, “Throws” and “Exception” interchangeably to refer both to raises sections in Python docstrings and throws sections in Java documentation comments. We then conduct a static analysis on the functions from the dataset to determine whether a particular function contains parameters in the signature, or return or exception-raising statements in the body. Then, we compare this information with the parsed docstring sections and compute, per project, the fraction of docstrings that have missing Parameter, Return or Raises docstring tags. For example, for a project with 100 function-docstring pairs, of which 30 have parameters included in the function signature but lack a parameter section in the comment, we obtain a fraction of 0.3 for missing parameters. After obtaining these values for each project and section, we compute summary statistics of the fractions of missing sections and include them in Table 4. Here, we would like to note that for the Python dataset, we only consider projects for which a formatting style has been identified, that is, projects for which we were able to correctly parse at least one of the parameter, return or raises sections in the docstrings.
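For Python, such a static analysis can be approximated with the standard `ast` module. The sketch below is not the analysis code used in the study; excluding `self` and `cls`, ignoring bare `return` statements, and counting statements inside nested functions are all assumptions of this illustration.

```python
import ast


def function_facts(source: str):
    """Report whether a function has parameters, returns a value, or raises."""
    tree = ast.parse(source)
    # ast.walk is breadth-first, so this finds the outermost function first.
    func = next(node for node in ast.walk(tree)
                if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)))
    args = func.args
    names = [a.arg for a in args.posonlyargs + args.args + args.kwonlyargs]
    if args.vararg:
        names.append(args.vararg.arg)
    if args.kwarg:
        names.append(args.kwarg.arg)
    # Assumption: the implicit receiver is not expected to be documented.
    names = [n for n in names if n not in ("self", "cls")]
    body_nodes = [n for stmt in func.body for n in ast.walk(stmt)]
    return {
        "has_params": bool(names),
        # Assumption: a bare `return` does not call for a Return section.
        "has_return": any(isinstance(n, ast.Return) and n.value is not None
                          for n in body_nodes),
        "has_raise": any(isinstance(n, ast.Raise) for n in body_nodes),
    }


print(function_facts(
    "def f(self, x):\n"
    "    if x < 0:\n"
    "        raise ValueError('negative')\n"
    "    return x * 2\n"
))
```

The resulting flags indicate which docstring sections a function calls for and can then be compared with the sections extracted from its docstring.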

For this analysis we only check whether a section is present at all, not whether it is complete. For instance, if the function has parameters in the signature, we only verify that at least one parameter is documented in the docstring. Similarly, for the return and raises sections we only report a missing case when there is at least one corresponding statement in the function body but none in the docstring. If the function has no parameters in the signature, or no return or raise statements in the body, it is ignored in the corresponding analysis so as not to inflate the results.
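The presence check described above can be sketched for Python with the standard `ast` module. This is a minimal illustration, not the tooling used in the study: the function names `expected_sections` and `missing_fraction` are hypothetical, a bare `return` without a value is assumed not to require a Return section, and the denominator follows the worked example in the text (all function-docstring pairs of a project).

```python
import ast


def expected_sections(source: str) -> dict:
    """Report which docstring sections one function would be expected to have."""
    func = ast.parse(source).body[0]
    if not isinstance(func, (ast.FunctionDef, ast.AsyncFunctionDef)):
        raise ValueError("expected a single function definition")

    args = func.args
    names = [a.arg for a in args.posonlyargs + args.args + args.kwonlyargs]
    if args.vararg:
        names.append(args.vararg.arg)
    if args.kwarg:
        names.append(args.kwarg.arg)
    names = [n for n in names if n != "self"]  # self is not documented

    # Simplification: ast.walk also visits nested functions and lambdas.
    nodes = list(ast.walk(func))
    has_return = any(isinstance(n, ast.Return) and n.value is not None for n in nodes)
    has_raise = any(isinstance(n, ast.Raise) for n in nodes)
    return {"params": bool(names), "returns": has_return, "raises": has_raise}


def missing_fraction(pairs, section):
    """Fraction of a project's pairs where `section` is expected but not documented.

    `pairs` is a list of (function_source, documented_sections) tuples, where
    documented_sections is the set of sections found by a docstring parser.
    """
    missing = sum(
        1
        for source, documented in pairs
        if expected_sections(source)[section] and section not in documented
    )
    return missing / len(pairs) if pairs else 0.0
```

Because only presence is checked, a docstring that documents one of five parameters is not counted as missing here; completeness is a separate question.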

From Table 4 we can observe that, for the Python programming language, developers quite often omit these sections although they should be included. The section developers include most often is the Parameters section: the average fraction of project docstrings that should include a Parameters section but do not is 0.424. This value increases to 0.565 for the Return section, while the Raises section is the one developers include least frequently, missing on average in over 0.85 of the project docstrings. This is a convincing argument for our work, as we see no valid reason for not writing complete documentation comments, and developers would benefit from support in writing them, especially for Python. Interestingly, for the Java functions we analysed, this problem seems to be less pronounced. A possible reason is that Java projects more commonly use IDEs, which can create a documentation comment template automatically whenever a function is defined. If this is the case, we expect to see more sections with empty descriptions. Indeed, the Java dataset contains more empty parameter descriptions, at 10.03% vs. 6.59% in Python. Exception descriptions are also more frequently empty in Java, at 18.23% vs. 2.85% in Python. However, return descriptions are empty for 7.16% of Java functions, compared to 11.07% for Python. For those parameter, return and exception descriptions that are not empty, we provide a further empirical analysis of their size in Section 3.3.5.

3.3.3 Distribution of Docstrings Sections

The minimal documentation comment includes only the Short description section, the short summary of the function. For Python this should fit on a single line; however, as we observed that developers write summaries longer than a single line, we extract the first sentence as the Short description. The short summary of a function has also been the focus of previous research that automatically generates summaries of code (Hu et al. 2018; Wan et al. 2018; Le Clair et al. 2019). In this section, we would like to understand how common it is for developers in the projects of our datasets to write only a summary of the method, in which case previous approaches that focus on generating short summaries of code would be sufficient, or whether developers provide more than just the first sentence of a documentation comment, in which case additional approaches are needed to support them with this task.

To study how common it is for developers to only write this first sentence for the Python and Java projects in our datasets, we plot in Fig. 3 the number of extracted docstrings per project on the x axis and the corresponding number of Short description only docstrings on the y axis. If the documentation comments in our dataset contained only short descriptions, we should observe an almost linear relationship. Conversely, if the comments never consisted of only the short description, all points would lie on the x axis, forming a horizontal line. From the plots, we can observe a large variety in the number of Short description only docstrings per project for both programming languages. Therefore, there is no obvious correlation between the number of docstrings and how many of those are Short description only.

To better understand the distribution of these comments we compute, per project, the fraction of Short description only docstrings out of all docstrings and include the summary statistics for these values in Table 5. For Python, the average fraction of Short description only docstrings per project is around 0.38, with a similar median of 0.31. From the plots we can see that the Java projects have, in general, a higher number of documentation comments, while the average fraction of Short description only comments per project is smaller at 0.29, with a median of only 0.18. This suggests that, in the analysed datasets, Short description only comments are somewhat less frequent in Java than in Python.
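The per-project aggregation used here (and again for the other sections) can be sketched as follows. The data layout, the section label `short_description` and the function names are illustrative assumptions; each docstring is represented simply as the set of sections a parser found in it.

```python
from statistics import mean, median

SHORT = "short_description"  # hypothetical section label


def short_only_fraction(docstrings):
    """Fraction of one project's docstrings that contain only the Short description."""
    short_only = sum(1 for sections in docstrings if set(sections) == {SHORT})
    return short_only / len(docstrings)


def summarise(projects):
    """Mean and median of the per-project fractions (projects without docstrings are skipped)."""
    fractions = [short_only_fraction(d) for d in projects.values() if d]
    return {"mean": mean(fractions), "median": median(fractions)}


projects = {
    "a": [{SHORT}, {SHORT, "parameters"}, {SHORT, "long_description"}, {SHORT, "return"}],
    "b": [{SHORT}, {SHORT, "raises"}],
    "c": [{SHORT}],
}
summary = summarise(projects)
```

Averaging fractions rather than pooling all docstrings gives every project the same weight, so a few very large projects cannot dominate the statistic.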

Note that all scatter plots in the paper show only the projects whose number of docstrings is at or below the 99th percentile, as showing all points would make the figures denser and harder to read. Please also note the differences in scale on the x and y axes between the side-by-side Python and Java plots; using the same scale would make the figures more difficult to read.

Next, we analyse how common each of the studied sections (Short description, Long description, Parameters, Return and Raises) is among the docstrings on a per-project basis. We first look at how prevalent the Short description is over all the docstrings from the two datasets. We expect most comments to include a Short description, and it should be less common for a comment to include other sections (like Parameters, Return or Raises) but not this one. In Fig. 4 we plot the number of docstrings per project on the x axis and the number of extracted Short descriptions on the y axis. We can notice an almost linear relationship between the number of docstrings and the number that include a Short description. There is some variety in the Python dataset, while in the Java dataset the relationship is almost perfectly linear, with the exception of a few projects. For both datasets it is clear that there is a strong inclination to include the summary as a Short description in a comment.

To analyze how common the Long description section is, we plot in Fig. 5 the number of docstrings on the x axis and the number of extracted Long descriptions per project on the y axis for both datasets. Here, we observe a high variety in the frequency of this section, although it remains relatively common. The goal of the Long description is to elaborate on the summary. It can include any details that the developer finds important, for example dependencies on other functions, constraints that should be known when using the function, or the rationale for using a specific data structure or algorithm. While this section is less common than the Short description, developers use it frequently to describe more than just the one-sentence summary of the function. We can observe that the frequency of this section is similar between Python and Java.

Next, we look at how common the Parameters section is. In Fig. 6 we plot the number of docstrings per project on the x axis and the corresponding number of docstrings including this section on the y axis, for the Python and Java datasets. We can notice a higher prevalence of this section in the Java dataset, where the relationship between the number of Parameters sections and the total number of docstrings has a linear tendency. The Python dataset seems to contain several projects with various counts of docstrings but without any extracted Parameters section, as indicated by the large density of points on the x axis. These likely correspond to the projects for which we could not identify a style and which we classified as N/A in Fig. 2. Note that some developers may have described the parameters as free text, while other docstrings contain formatting errors that make it impossible for the parser to correctly extract the Parameters section. In our results, we can only analyze sections that have been correctly formatted.

Finally, we analyse the Return and Raises sections. The plots in Fig. 7 show the number of docstrings on the x axis and the number of extracted Return sections on the y axis. For each programming language, this section behaves similarly to the Parameters section. For the Python dataset, both sections have a wide distribution without any clear correlation and a large density of points on the x axis. For Java, there is a clear linear tendency, and projects lying on the x axis are infrequent. In Fig. 8 we plot the number of docstrings on the x axis and the number of well-formed Raises sections on the y axis. Here we can notice a drastic difference in the frequency of this section for the Python dataset compared to the other sections: very few projects contain this section in a large share of their docstrings. This difference is not as pronounced for the Java dataset, where the frequency of this section varies more widely among the documentation comments.

Even though the scatter plots allow us to visualize the prevalence of the different sections per project, we perform a further analysis to gain a better quantitative understanding of how common these sections are. We compute the fraction of docstrings including a particular section per project and then include the summary statistics for these fractions in Table 6. From this table we can observe that for the Python dataset the Long description is on average included in a third of the docstrings of a project, while the Parameters section is included in a quarter. The Return section appears on average in a fraction of 0.181 of the docstrings per project, while the Raises section is extremely rare in the projects of the Python dataset. Note that the median value for the Parameters, Return and Raises sections is 0: around half of the projects do not have a majority style, which means that such sections could not be extracted by the parser we used.

Interestingly, the Long description is less common in the Java dataset we studied: the average fraction of docstrings including this section per project is 0.25, which is 0.078 less than for the Python dataset. In contrast, the Parameters, Return and Raises sections occur considerably more often in the Java dataset. The average fraction of docstrings per project including a Parameters section is 0.56, while for the Return and Raises sections this value is 0.448 and 0.156, respectively.

Following our analysis of the structure of documentation comments for the Python and Java dataset, we can make the following observations:

Finally, we can notice differences between the structure of Python and Java documentation comments. We speculate that this might be due to the more frequent use of IDEs for writing Java code, which generate the template of documentation comments automatically. Additionally, the existence of a single recommended and widely accepted formatting style might encourage developers to use it more often. Nevertheless, we observed that the sections that are included in Python documentation comments seem to be longer than their Java counterparts. Further work is needed to understand the reasons behind these differences. However, the prevalence of these sections in both datasets indicates that both Java and Python developers can benefit from approaches that support them in writing fully formed comments and not only the summary.

3.3.4 Analysis of the Parameters Section

For the Parameters section, we extend our analysis by looking at how correct and complete this section is. We interpret precision as correctness in order to answer the question: “of the parameters that are described in the comment, how many actually appear in the function signature?”. We interpret recall as completeness to answer the second question: “of the parameters included in the function signature, how many are described in the documentation comment?”. We compute the precision (correctness) and recall (completeness) of the included parameters using the following two formulas:

$$\text{Precision} = \frac{\text{correctDocstringParams}}{\text{len(docstringParams)}} \qquad \text{Recall} = \frac{\text{correctDocstringParams}}{\text{len(functionParams)}}$$

Here, correctDocstringParams is the number of parameters described in the docstring that match parameters from the function signature, len(docstringParams) is the number of parameters included in the docstring, and len(functionParams) is the number of parameters included in the function signature. For class instance methods we ignore the parameter self, as the recommendation is to not document it. As an example, in Listing 3 the description for the parameter one_hot_labels is missing and we obtain the following values for precision and recall: Precision = 1/1 = 1.0, Recall = 1/2 = 0.5.
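The two measures can be expressed as a short sketch. The function name is hypothetical, and returning 0.0 when a denominator is empty is an assumption made here for illustration; the parameter name `logits` stands in for the documented parameter of Listing 3, which is not reproduced in this section.

```python
def parameter_precision_recall(docstring_params, function_params):
    """Precision (correctness) and recall (completeness) of documented parameters."""
    function_params = [p for p in function_params if p != "self"]  # self is ignored
    correct = len(set(docstring_params) & set(function_params))
    precision = correct / len(docstring_params) if docstring_params else 0.0
    recall = correct / len(function_params) if function_params else 0.0
    return precision, recall


# One of two signature parameters is documented, and it is documented correctly.
precision, recall = parameter_precision_recall(
    docstring_params=["logits"],
    function_params=["logits", "one_hot_labels"],
)
```

With these inputs the result matches the worked example: precision 1.0 and recall 0.5. A renamed parameter lowers both values, because the stale name in the docstring no longer matches anything in the signature.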

We then compute the average of these values per project and include the summary statistics in Table 7. From this table we can observe that the average and median precision and recall per project for Python docstrings that do include parameter descriptions are around 0.5. This can happen if a developer does not document all the parameters in the docstring: perhaps they were not included from the beginning, or the developer neglected to update the comment when adding parameters later. Another possibility is that a parameter was renamed in the function signature but not in the docstring. Incomplete comments can be particularly problematic, as a developer reading the comment might wrongly assume that it is complete and rely on incorrect information. Interestingly, the average precision per project of included parameter descriptions is much higher for the Java projects at around 0.97, while the average recall per project is lower at 0.65. This further corroborates our previous observation that the comments of the Java dataset are more complete. When parameters are included in Java documentation comments they are correct, that is, named as in the function signature; however, parameters are sometimes missing in the Java dataset as well, as indicated by the lower average recall.

References in Short or Long descriptions

In many cases, docstrings are present, but the expected parameters are not defined. We wanted to determine how often parameters may be documented simply as part of the Short or Long descriptions, rather than in their dedicated sections. For this investigation, we first filtered out all samples in which the parameters are fully documented. For the remaining samples, in which at least one parameter is undocumented, we (a) parsed the function to extract the parameter names, (b) determined whether each parameter name appears in the Short and/or Long descriptions, and (c) calculated, for each project, the mean fraction of parameter names referred to in different parts of the description. We chose to consider the mean fraction for each project, rather than all fractions, because projects tend towards uniformity and we wanted to avoid larger projects with more functions biasing the outcome. To parse the functions, we again used javalang for Java code and Python’s own ast module for Python functions. We were able to parse 496,248 Java methods. Since the CodeSearchNet dataset also contains older Python code, we used lib2to3 (Lib2to3 2022) to refactor any Python functions that could not be parsed initially. This allowed us to parse an additional 3,964 functions, for a total of 442,980 Python functions. We were unable to parse 440 Java and 333 Python functions from the CodeSearchNet dataset, as they appear to be malformed. The mean fraction of mentioned parameters for each project is calculated via the following formula:

Figure 9 visualizes these mean values. We can see that for both Python and Java, where docstring tags are missing, parameters are mentioned in about 10-40% of descriptions for most projects. Conversely, this means that parameters are undocumented in the majority of functions. The outliers indicate that in some projects, most parameters are documented, or at least mentioned, as part of the Short or Long descriptions.

3.3.5 Length of Tag Descriptions

Like parameters, return values and exceptions are documented using tags, for example @returns foo or @raises exception X. To obtain an approximate understanding of how extensively these tag-like structures are documented (if they are present), we used NLTK’s word tokenizer to count the number of words contained in their descriptions. For parameters, we also check whether the parameter name itself is mentioned as part of the description. We make sure to only identify parameter names not surrounded by other alphabetical characters (e.g., a parameter i would be identified in the sentence “Takes a number i.” but not in “Not implemented!”) by using the following regex:

rf"(?:^|[^a-zA-Z])({re.escape(param)})(?:[^a-zA-Z]|$)"
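As a small illustration, the check can be wrapped in a helper. The function name `mentions_parameter` is hypothetical, and the pattern is our reading of the expression above: the escaped parameter name, bounded on each side by the start or end of the text or by a non-alphabetical character.

```python
import re


def mentions_parameter(param: str, description: str) -> bool:
    """True if `param` occurs in `description` without adjacent letters."""
    pattern = rf"(?:^|[^a-zA-Z])({re.escape(param)})(?:[^a-zA-Z]|$)"
    return re.search(pattern, description) is not None


found = mentions_parameter("i", "Takes a number i.")    # name stands alone
not_found = mentions_parameter("i", "Not implemented!")  # "i" is part of a word
```

Using `re.escape` keeps parameter names that contain regex metacharacters from being interpreted as part of the pattern. Note that digits and underscores count as boundaries under this reading, so `i` would also be found inside `i_2`.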

Figures 10, 11 and 12 display the word count distributions (not including zero-length descriptions), revealing the insights described next.

A quarter of all non-empty parameter descriptions appearing in Python and Java programs comprise just up to 5 or 3 words, respectively. From a qualitative inspection of our data, we conclude that these parameter tags are usually in the form of @param thing the given thing; i.e., the documentation provides barely any additional information over the source code. The same holds true for exceptions, where a quarter are described in 6 words or less in both languages. Furthermore, one in four parameter descriptions mention the parameter name itself, contributing to the word count, in both Python (27%) and Java (28%).

Tag descriptions in Python tend to be more verbose than in Java; this is true for both Short and Long descriptions. We identify at least one contributing reason: most Python code lacks type hints, and Python code often leverages duck typing. For this reason, the parameter descriptions often describe default values or the ability to accept different types of values. Similarly, return values in Python are often complex values (such as dictionaries) that are not defined and documented in a separate source code location, so they are documented in the location where they are returned. It is also common for usage examples to be included as part of parameter or return descriptions. Listings 4, 5 and 6 illustrate a few such examples. By contrast, parameters and return types in Java have a specific (or at least, generic) type which is documented elsewhere. Thus, return descriptions in Java are often similar to the short parameter and exception tags, in the form of “@return the thing”. Given the relative verbosity of Java code, it is reasonable to assert that Java code can be more self-documenting than Python code in this regard.

There are numerous outliers which provide extensive documentation for single parameters, return values, or even raised/thrown exceptions. Inspecting a random sample of long parameter descriptions reveals that these parameters often accept a specific set of values (such as a choice of strategy or algorithm), in which case every value accepted by the function is described as part of the parameter documentation. Similarly, long return and exception descriptions often describe an extended set of possible values. We also observe that in some cases the text provided as a description must have been placed there accidentally, for example duplicating documentation that belongs to the type of the parameter, possibly inserted by an automated tool.

Table 8 gives an overview of all data analyzed in Sections 3.3.5 and 3.3.4.

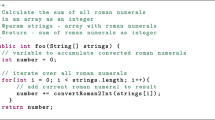

4 Structure-based Comment Completion

The empirical study has shown that structural elements are indeed widely used in documentation comments of Python (docstrings) and Java (Javadoc). Motivated by this finding, we present novel language models in this section that leverage this structure information for the task of comment completion. For simplicity, we will refer to both types of structured documentation with the name docstring. The completion task that we are tackling is “given a prefix sequence of a documentation comment, suggest the most likely next word to the developer”. We include an illustrative example in Fig. 13. Imagine that the developer is about to write a complete comment and is currently at the point of the cursor (where the darker text ends). The completion engine should assist the developer in finding the next words, i.e., function that can, and so on. We adopt and extend neural language models that were presented in previous work (Ciurumelea et al. 2020) as a baseline for generating completion suggestions.

4.1 Neural Language Models

The task of generating completion suggestions can be solved using language models (LMs), which are statistical models (Jurafsky and Martin 2000) that assign a probability to each possible next word and are also able to assign a probability to an entire sentence. For the example in Fig. 13, if the developer typed Converts the class into an actual view, such a model can generate possible next words. Some potential ones are function, method and object, but not the, refrigerator or view. These kinds of models have applications in a large variety of tasks, such as speech recognition, spelling or grammatical error correction, and machine translation, among others. Traditionally, this problem was tackled using n-gram language models, which estimate the probability of a sequence from frequency counts extracted from the training corpus. However, n-gram models suffer from data sparsity and require complex back-off and smoothing techniques. Additionally, using larger n-gram sizes is very expensive in terms of memory, and these models cannot generalize across contexts. For example, seeing sequences such as “blue car” and “red car” will not influence the estimated probability of “black car”, whereas a neural language model is able to learn that “blue”, “red” and “black” all represent the same concept of color. Neural language models are better able to handle all the problems described above and will, in general, have a much higher prediction accuracy than an n-gram model for a particular training set (Jurafsky and Martin 2000).
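The generalization problem of count-based models can be seen in a minimal bigram sketch. This is an unsmoothed maximum-likelihood estimator written only for illustration; the function name `train_bigram` and the two-sentence corpus are assumptions, not part of the study.

```python
from collections import Counter


def train_bigram(sentences):
    """Return P(word | prev) estimated from raw bigram counts (no smoothing)."""
    context_counts, bigram_counts = Counter(), Counter()
    for sentence in sentences:
        words = sentence.lower().split()
        context_counts.update(words[:-1])            # every word that has a successor
        bigram_counts.update(zip(words, words[1:]))  # adjacent word pairs

    def prob(prev, word):
        if context_counts[prev] == 0:
            return 0.0  # unseen context: nothing can be estimated
        return bigram_counts[(prev, word)] / context_counts[prev]

    return prob


prob = train_bigram(["a blue car", "a red car"])
seen = prob("blue", "car")     # estimated from one observed bigram
unseen = prob("black", "car")  # "black" never occurred in the corpus
```

Although "blue car" and "red car" were both observed, the estimate for "car" after "black" stays at zero. Smoothing and back-off redistribute probability mass, but they do not capture that the three words play the same role, which is what learned embeddings provide.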

To build neural language models we use LSTM-based models that process sequences of words one word at a time and return at each time step a fixed sized vector representing the processed sequence so far. Vanilla RNNs are notoriously difficult to train and have problems with exploding and vanishing gradients during training. For this reason they have been replaced by gated architectures such as Long Short-Term Memory (LSTM) or Gated Recurrent Unit (GRU) units.

Language models can be evaluated using an intrinsic metric called perplexity. The perplexity of a language model on a test set is the inverse probability of the test set, normalized by the number of words (Jurafsky and Martin 2000). A better language model is one that assigns a higher probability to the test set and therefore has a lower perplexity. For training and evaluation of such models it is common to use the log-transformed version of perplexity, called cross-entropy. An intrinsic improvement in perplexity does not guarantee an improvement in the performance of the task for which a language model is used; it is therefore important to also evaluate such a model using extrinsic metrics, which reflect the performance of the application. In our case, we want to know how well we can predict completion suggestions while a developer is typing a comment. For this we use the Top-k accuracy metric with k ∈ {1, 3, 5, 10}. We define the Top-k accuracy as the fraction of all predictions for which the correct word is among the k predicted words.
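Both kinds of metric can be sketched in a few lines. The function names and toy inputs are illustrative assumptions: `probabilities` holds the probability the model assigned to each actual next word of a test sequence, and `ranked_predictions` holds, for each prediction step, the suggested words ordered from most to least likely.

```python
import math


def cross_entropy(probabilities):
    """Average negative log-probability of the observed words (natural log)."""
    return -sum(math.log(p) for p in probabilities) / len(probabilities)


def perplexity(probabilities):
    """Inverse probability of the sequence, normalized by its length."""
    return math.exp(cross_entropy(probabilities))


def top_k_accuracy(ranked_predictions, targets, k):
    """Fraction of predictions whose target word is among the first k suggestions."""
    hits = sum(
        1 for ranked, target in zip(ranked_predictions, targets) if target in ranked[:k]
    )
    return hits / len(targets)


ranked = [
    ["function", "method", "object"],
    ["the", "a", "an"],
    ["value", "result", "list"],
]
targets = ["function", "an", "dict"]
top1 = top_k_accuracy(ranked, targets, k=1)
top3 = top_k_accuracy(ranked, targets, k=3)
```

A model that spreads its probability uniformly over four candidates at every step has a perplexity of 4, which matches the intuition of perplexity as an effective branching factor. Top-k accuracy can only grow with k, since a hit within the first k suggestions is also a hit within the first k + 1.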

When training language models it is necessary to define a fixed vocabulary at the beginning. This vocabulary can be composed of full words, sub-words or characters, each of which has its advantages and disadvantages. In general, it is not possible to learn all possible words, as some will not appear in the training set at all or will not be frequent enough. The solution is to assign a general <UNK> token to words that are rare or were not seen during training. This is a reasonable solution for natural language text, as it is less likely to encounter unknown tokens during training and testing. However, in source code developers introduce new identifier names all the time (Hellendoorn and Devanbu 2017), and dealing with <UNK> words requires additional measures, for example splitting identifiers that follow the snake or camel case convention into individual words.
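One possible way to split identifiers is sketched below. The regular expression and the decision to lowercase the parts are assumptions made for illustration; the text does not specify the exact splitting rules used.

```python
import re


def split_identifier(identifier):
    """Split a snake_case or camelCase identifier into lowercase words."""
    words = []
    for part in identifier.split("_"):
        # Acronym runs (HTTP), capitalized or lowercase words (Response, get), digits.
        words.extend(re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", part))
    return [word.lower() for word in words]


snake = split_identifier("one_hot_labels")
camel = split_identifier("getUserName")
acronym = split_identifier("HTTPResponse")
```

After splitting, a rare identifier such as `one_hot_labels` is represented by three common words that are far more likely to be in the vocabulary than the full name, which reduces the number of <UNK> tokens.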

4.2 Language Models for Documentation Comments

We train five different language models (LM) for the experiments in this paper. We first train a Sequential and a Context-based Language Model on the extracted sections to establish a baseline. For this, we use the same models that have been introduced in previous work (Ciurumelea et al. 2020).

Sequential LM

The sequential language model is presented in Fig. 14(a). It is an LSTM model that includes an Input layer, an Embeddings layer, and one or more LSTM layers followed by one or more Dense layers interleaved with Dropout layers. The Input layer receives a word sequence of length k representing the prefix for which a completion suggestion should be generated. It is followed by an Embeddings layer, which associates each word of the vocabulary with a dense, fixed-size, real-valued vector that incorporates semantic and syntactic information. This layer is necessary because deep learning models cannot receive words as inputs, only numeric values. In practice, words are one-hot encoded, that is, each word is represented as a sparse vector with a 1 in a single position. The Embeddings layer is added on top of the Input layer to learn a dense distributed representation for each word of the vocabulary in a predefined vector space. This representation, also called a word embedding, is learned based on the usage of the words. Words that are used in a similar way end up with similar representations that lie close together in the vector space. For example, colors or animals will have similar vector representations.
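The relation between one-hot encoding and an embedding layer can be illustrated without any deep learning library: multiplying a one-hot vector by the embedding matrix selects one row of that matrix. The vocabulary and the two-dimensional vectors below are made-up values for illustration, not learned weights.

```python
def one_hot(index, size):
    """Sparse vector with a single 1 at `index`."""
    return [1.0 if i == index else 0.0 for i in range(size)]


def vector_times_matrix(vector, matrix):
    """Multiply a vector of length V with a V x d matrix."""
    return [
        sum(v * row[j] for v, row in zip(vector, matrix))
        for j in range(len(matrix[0]))
    ]


vocabulary = {"<UNK>": 0, "blue": 1, "red": 2, "car": 3}
embeddings = [
    [0.0, 0.0],  # <UNK>
    [0.9, 0.1],  # blue
    [0.8, 0.2],  # red (close to blue: both are colors)
    [0.1, 0.7],  # car
]


def embed(word):
    """Look up the dense vector of a word; unknown words map to <UNK>."""
    return embeddings[vocabulary.get(word, vocabulary["<UNK>"])]


via_one_hot = vector_times_matrix(one_hot(vocabulary["red"], len(vocabulary)), embeddings)
via_lookup = embed("red")
```

Both routes produce the same vector, which is why embedding layers are implemented as table lookups rather than as multiplications with large sparse vectors.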

The LSTM layer will process the input sequence word-by-word and keep an internal state during this process. At the end of the sequence it will return a vector representation of the received sequence. This is passed through the Dense layers, to extract further useful features until the final Dense layer, which outputs for each word in the vocabulary the probability that this is the next word. This is a regular language model that only uses the text of the documentation comments in our dataset for training, but as mentioned in (Ciurumelea et al. 2020) documentation comments are accompanied by context information, such as the method body they are documenting.

Context LM

Next, we build a Context LM, shown in Fig. 14(b), which is a multi-input model that receives the method body as input in addition to the prefix sequence. The method body is passed through a shared Embeddings layer, and the resulting representation is passed through a GlobalAveragePooling1D layer. This layer averages the embedding vectors of the method body to obtain a single vector representing the method body context, which is then repeated and concatenated with the word embedding of each word in the prefix sequence. The rest of the model follows the structure of the Sequential LM.
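The pooling and concatenation step can be sketched on plain lists. The function names are hypothetical and the vectors are toy values; in the actual model these operations are performed by neural network layers on learned embeddings.

```python
def average_pool(vectors):
    """Element-wise mean of a list of equally sized vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def attach_context(prefix_embeddings, body_embeddings):
    """Append the pooled method-body vector to every prefix word embedding."""
    context = average_pool(body_embeddings)
    return [word + context for word in prefix_embeddings]


prefix = [[1.0, 0.0], [0.0, 1.0]]  # two prefix words, embedding size 2
body = [[2.0, 4.0], [4.0, 8.0]]    # two method-body tokens, same embedding table
lstm_inputs = attach_context(prefix, body)
```

Each time step now carries a vector of size 4: the word's own embedding followed by the same context vector. Averaging discards token order in the method body but keeps the input size independent of the body length.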

Next, we want to investigate how to leverage the structure information of the documentation comments to improve the suggestions results. For this we have two options: either build a model that receives the section type as additional input, or train section specific models, that is train a separate model for each of the sections we analysed: Short and Long description, Parameters, Return and Raises sections, respectively.

Section LM

To pursue the first option, we extend the Sequential LM and build a Section LM model represented in Fig. 15, that also receives the section information as input, which can take one of the following categorical values: Short description, Long description, Parameters, Return or Raises. The section input is passed through an Embeddings layer, with different weights and dimensions than the ones used for the prefix sequence input. This allows the model to learn a more meaningful representation for the sections, for example, it might learn for the Short description a vector representation that is more similar to the one learned for the Long description than the one for the Raises sections. The embedding representation of the section input is repeated and concatenated with each embedding vector for the prefix sequence words which are then passed through the layers described previously.
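The section input can be illustrated in the same spirit. The section labels, the embedding size of 2 and the vector values are assumptions for illustration; the point is only that sections have their own lookup table, separate from the word embeddings, and that the selected vector is appended at every time step.

```python
SECTIONS = ["short_description", "long_description", "parameters", "return", "raises"]
SECTION_INDEX = {name: index for index, name in enumerate(SECTIONS)}

# Separate table with its own dimensionality; values are made up, not learned.
SECTION_EMBEDDINGS = [
    [0.9, 0.1],  # short_description
    [0.8, 0.2],  # long_description (placed near the short description)
    [0.1, 0.9],  # parameters
    [0.2, 0.7],  # return
    [0.0, 0.5],  # raises
]


def attach_section(prefix_embeddings, section):
    """Append the section vector to every prefix word embedding."""
    section_vector = SECTION_EMBEDDINGS[SECTION_INDEX[section]]
    return [word + section_vector for word in prefix_embeddings]


prefix = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # two prefix words, embedding size 3
lstm_inputs = attach_section(prefix, "raises")
```

Since the section is a single categorical value, a small table with five rows is sufficient, and its dimensionality can be chosen independently of the word embedding size.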

Section-Context LM

Our fourth model is a Section-Context LM model that receives as input the prefix sequence, the method body context and the section type for which it should learn to generate a completion suggestion. This model is included in Fig. 16. It concatenates the embedding representation for each word in the prefix sequence with the obtained method context and the section types representations. This information is then passed through the LSTM layers for each word of the prefix sequence, and the representation at the end of this sequence is passed through the Dense layers up to the final Output layer.

Section specific Context LMs

Additionally, we train section-specific models using the Context LM architecture (Fig. 14(b)), using only the corresponding sections as training data. For example, for the Short description we train a model that only receives as input the prefix sequences generated from the extracted Short description sections together with the method body context. As a result, we obtain 5 section-specific models that are then evaluated on the corresponding section test sets. We will refer to these models as Section specific Models in the rest of the paper.

5 Dataset Creation and Training Details

We selected Python and Java as two popular representatives for two different programming paradigms to investigate the performance of our completion engine in different programming languages. Additionally, both languages are known for recommending a formatting style when writing documentation comments. In the following, we describe which datasets we use for training and evaluating the models, how we extracted the training instances and finally provide some additional details about the models themselves. For Python, we collected a larger and more diverse dataset for training the language models. However, for Java we keep the CodeSearchNet dataset as this should be sufficient for our goals, to understand if our results can be generalized to other programming languages.

5.1 Documentation Comments Datasets

Python Dataset Collection

We build a new Python dataset from the GitHub dump available on the BigQuery platform through the Google Cloud Public Datasets Program (Google cloud public datasets 2021). At the time we queried BigQuery for this particular dataset, it had last been updated on the 20th of March 2019. We collect all Python files that contain at least one instance of triple quotes, which could potentially represent a docstring. Note that some of the projects were relatively old and might not use the newer recommendations. Additionally, by only looking at triple double quotes, we might have missed comments that use triple single quotes. However, this was only common for older projects. This resulted in a very large number of files and projects of varied quality and characteristics. To ensure that the quality of our dataset is sufficient, we queried GitHub using the REST API (Github rest api 2022) for additional commit information and filtered the projects based on the following characteristics:

- no forks (not marked as a fork of another project);
- include at least 10 Python files;
- commits data: it includes at least 10 commits, and these commits include at least 2 different author emails;
- include at least 100 docstring-function pairs.
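The four criteria above can be read as a single predicate over per-project statistics. The following is a minimal sketch, not the authors' implementation: the `ProjectStats` record and its field names are hypothetical and only illustrate how the thresholds from the list combine.

```python
from dataclasses import dataclass


@dataclass
class ProjectStats:
    """Hypothetical per-project summary; all field names are illustrative."""
    name: str
    is_fork: bool
    python_files: int
    commits: int
    author_emails: set
    docstring_function_pairs: int


def passes_filters(project: ProjectStats) -> bool:
    """Return True if the project meets all four minimal criteria."""
    return (
        not project.is_fork                          # no forks
        and project.python_files >= 10               # at least 10 Python files
        and project.commits >= 10                    # at least 10 commits
        and len(project.author_emails) >= 2          # at least 2 author emails
        and project.docstring_function_pairs >= 100  # at least 100 pairs
    )
```

A project that fails any single criterion is excluded, which matches the intent of removing toy projects.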

The filtering criteria were chosen as minimal characteristics for excluding toy projects. For example, we require at least 10 commits with at least 2 different author emails to ensure a minimum amount of activity in the project without excluding too many projects. However, a single GitHub user may commit with two different emails, which results in a false positive. We also ignore projects which have been removed from GitHub or for which we were not able to obtain the commit information. After performing all these filtering steps we obtain a list of 14,507 projects. Nevertheless, we noticed some duplication among the projects: while we filter out the forks, some users copy a specific project and then create a new repository on GitHub under a different name. These cloned projects copy another, often popular, project and contain minimal changes to it. By using the git root hash we remove a substantial number of them and obtain a final list of 9,743 projects that comprise the Python dataset. However, it is still possible that we missed some clones, or that a project includes the source code of another project.
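The clone removal based on the git root hash can be sketched as follows. This is an illustration under assumptions: we take the root hash to be the hash of a repository's initial commit, and we keep the first project seen for each hash, since the text does not state which copy is retained. The function name is hypothetical.

```python
def deduplicate_by_root_hash(projects):
    """Keep one project per root hash.

    `projects` is an iterable of (project_name, root_hash) pairs.
    Assumption: the first project seen for a given hash is the one kept.
    """
    seen_hashes = set()
    kept = []
    for name, root_hash in projects:
        if root_hash in seen_hashes:
            continue  # same history root as an earlier project: treat as a clone
        seen_hashes.add(root_hash)
        kept.append(name)
    return kept
```

Repositories that merely vendor the source code of another project have a different root commit and are not detected by this check, which is the limitation noted above.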

Java

As previously mentioned, for Java we use the dataset that we analysed in Section 3 of the paper, which is the one provided as part of the CodeSearchNet challenge (Husain et al. 2019a).

5.2 Training Data Extraction

To train and evaluate machine learning models, it is necessary to prepare the data and convert it to training instances in the format expected by the models. Additionally, to ensure that our evaluation results are valid, we follow procedures such as splitting the dataset into training, validation and test sets, and using k-fold cross-validation. Employing k-fold cross-validation for training and evaluation mitigates the risk that the results are caused by spurious patterns in the data partitioning.

The dataset is split into 5 folds: 4 folds are used for training, and the fifth fold is split equally into validation and test sets. We use 5 folds instead of the more traditional 10 because of limited computational resources. We follow the guidance from Le Clair and McMillan (2019) and split the data at repository-level granularity. This means that repositories that appear in the training set do not appear in the validation/test sets and vice versa. Splitting the data at function-level granularity would inflate our results, as functions from the same project would be found in both the training and test sets, making it easier for the neural language models to generate completions for them. Please note that partitioning at the project level differs from previous work (Ciurumelea et al. 2020), which split the dataset at the function level.
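A repository-level split of this kind can be sketched as below. The function names, the fixed seed, and the choice to halve the held-out fold by repository (rather than by instance) are assumptions made for illustration; the text only states that the held-out fold is split equally.

```python
import random


def repository_folds(repo_names, n_folds=5, seed=0):
    """Shuffle repositories deterministically and deal them into n_folds."""
    repos = sorted(repo_names)
    random.Random(seed).shuffle(repos)
    return [repos[i::n_folds] for i in range(n_folds)]


def split_for_fold(folds, held_out):
    """Use one fold for validation/test and the remaining folds for training."""
    train = [repo for i, fold in enumerate(folds) if i != held_out for repo in fold]
    held = folds[held_out]
    half = len(held) // 2
    # Assumption: the held-out fold is halved at the repository level.
    return train, held[:half], held[half:]
```

Because whole repositories are assigned to folds, no function from a training repository can appear in the validation or test set.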

For the collected Python dataset, we check for and eliminate exact duplicate function-docstring pairs between the training and validation/test sets. In this way we ensure that the results are not inflated by auto-generated code and comments or by snippets of code that are copy-pasted between projects. For the Java dataset this has already been done (Husain et al. 2019a).
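As a minimal sketch of this check, exact duplicates can be detected by comparing (function source, docstring) tuples. The text does not say from which side the duplicates are removed; dropping them from the validation/test side is an assumption of this example, as is the function name.

```python
def remove_cross_split_duplicates(train_pairs, heldout_pairs):
    """Drop held-out pairs that also occur verbatim in the training set.

    Each pair is a (function_source, docstring) tuple of strings.
    Assumption: duplicates are removed from the validation/test side.
    """
    train_set = set(train_pairs)
    return [pair for pair in heldout_pairs if pair not in train_set]
```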

In Table 9 we include the number of repositories, files and extracted function-docstring pairs averaged over the 5 folds for the Python and Java datasets. We note that, as some of the projects have a disproportionate number of docstrings, we keep a maximum of 500 randomly selected docstrings per project for Python. Additionally, for each documentation comment we extract the sections and obtain the total counts included in Table 10, averaged over the 5 folds for the separate dataset partitions.
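The per-project cap of 500 docstrings could be applied as in the following sketch. The dictionary layout, the function name and the fixed seed are assumptions for illustration only.

```python
import random


def cap_docstrings(pairs_by_project, limit=500, seed=0):
    """Keep at most `limit` randomly chosen pairs per project."""
    rng = random.Random(seed)
    capped = {}
    for project, pairs in pairs_by_project.items():
        if len(pairs) > limit:
            capped[project] = rng.sample(pairs, limit)  # sample without replacement
        else:
            capped[project] = list(pairs)
    return capped
```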

By comparing the number of repositories and extracted function-docstring pairs for the Python and Java datasets in Table 9, we observe that the Java dataset is smaller. From the statistics in Table 10 on the number of extracted sections, we notice that the sections are better balanced for the Java dataset, and we expect this to have an effect on the training of the neural language models. This observation confirms the findings from Section 3 that Java comments more often include the different sections and rarely consist only of a summary of the method.

Preprocessing and Generation of Training Instances

For creating the training instances we use the sections extracted from the docstring-function pairs. For each of the Short description, Long description, Parameters, Return and Raises sections we perform the following preprocessing steps:

1. remove non-English docstrings, which were detected using the Python langdetect library (Langdetect 2021) as mentioned in Le Clair et al. (2019);
2. remove doctests (Doctest — test interactive python examples 2021) from the section content; these correspond to interactive Python sessions and contain snippets of code and the expected results. They are not very common and would likely lead to worse results. This step is only applied for the Python dataset;
3. split identifiers based on the camel and snake case conventions; this has been shown in previous work to reduce the necessary vocabulary size (Hu et al. 2020) for training neural language models and to be a reasonable solution for the problem of Out-Of-Vocabulary words in source code comments;
4. lowercase all characters;
5. replace any character that does not match the regular expression [A-Za-z0-9.,!?:; \n] with a space;
6. replace the punctuation signs with a corresponding special symbol (e.g., the dot character is replaced with <punct.>); we keep the punctuation signs as we believe they are important signals for the LMs;
7. replace multiple whitespaces by a single one;
8. add a <sos> (start of sequence) and an <eos> (end of sequence) token to each extracted section.
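Steps 3 to 8 above can be sketched with a few regular expressions. This is an illustrative approximation rather than the original pipeline: steps 1 and 2 are omitted, only the `<punct.>` token is taken from the text (the tokens for the other punctuation signs follow the same pattern by assumption), and newlines are assumed to count as whitespace in step 7.

```python
import re

# Assumption: every punctuation sign maps to a token shaped like <punct.>.
PUNCT_TOKENS = {c: f" <punct{c}> " for c in ".,!?:;"}


def split_identifiers(text):
    """Step 3: split snake_case and camelCase identifiers into words."""
    text = text.replace("_", " ")
    # Insert a space wherever a lowercase letter or digit precedes an uppercase letter.
    return re.sub(r"(?<=[a-z0-9])(?=[A-Z])", " ", text)


def preprocess_section(text):
    text = split_identifiers(text)                       # step 3
    text = text.lower()                                  # step 4
    text = re.sub(r"[^A-Za-z0-9.,!?:; \n]", " ", text)   # step 5
    text = re.sub(r"[.,!?:;]",
                  lambda m: PUNCT_TOKENS[m.group()], text)  # step 6
    text = re.sub(r"\s+", " ", text).strip()             # step 7
    return f"<sos> {text} <eos>"                         # step 8
```

For instance, `preprocess_section("Returns the userName, or None.")` yields `<sos> returns the user name <punct,> or none <punct.> <eos>`.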

The summary statistics for the obtained lengths in tokens of the preprocessed sections for the datasets are included in Table 11.

Next, we describe how the extraction, preprocessing and training instance generation works using as an example the function-docstring pair illustrated in Listing 1. We include in Table 12 the extracted sections, while in Table 13 we include the corresponding preprocessed sections. The Short and Long descriptions will be extracted as free text from the docstring if they exist, while the Parameters are represented as a list of tuples. Each tuple will contain the identified name, the description, the type if it is included, and, for Python, a boolean flag that indicates whether the parameter is optional. The Return section is a tuple containing the type and the corresponding description, while the Raises section can contain a list of tuples containing for each extracted exception the type and the description. The method body is split into tokens, which are then preprocessed using steps 3 to 7 from above and the results are then fed as context input for the Context-based LMs presented earlier.

Using the preprocessed sections, we generate the training instances. Each instance contains a sequence of length k, the preprocessed method body and the corresponding section type. The neural language models are trained to predict the kth word, given the prefix sequence of length k - 1 and additional input depending on the model type. We apply a sliding window approach to extract the sequences of length k for the Short and Long description sections. The other sections, however, are tuples or lists of tuples, so we first assemble them into strings before extracting the sequences of length k. For example, for each extracted parameter we concatenate the identifier name, the type and the optional/not optional qualifier if it exists, a special token <sod> (start of description) and finally the description. A similar process is used for the Return and Raises sections.

In Table 14 we include several of the resulting training instances corresponding to the current example. The first column includes the preprocessed method body, the second one includes the section type and the final one includes the docstring sequence. For these examples, the model would receive the sequence without the last word as input and it would learn to predict the last word. In practice, we train the model on all possible subsequences that we can generate from a comment. We follow the previous work (Ciurumelea et al. 2020) and use a value of 5 for k, as this allows us to predict words early on. The size of the preprocessed method body is limited to the first 100 tokens, which also follows the example of previous work (Hu et al. 2020) in automated summary generation, as neural networks generally have problems with learning long sequences.
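The instance generation described above can be sketched as follows, with k = 5 and the method body truncated to 100 tokens. The function names, the literal `optional` qualifier token and the tuple layout of an instance are assumptions for illustration; sections shorter than k tokens simply produce no instance in this simplified version.

```python
def assemble_parameter(name, description, type_=None, optional=False):
    """Flatten a parameter tuple into one string with a <sod> marker."""
    parts = [name]
    if type_:
        parts.append(type_)
    if optional:
        parts.append("optional")  # assumed spelling of the qualifier token
    parts.append("<sod>")
    parts.append(description)
    return " ".join(parts)


def sliding_windows(tokens, k=5):
    """Return every contiguous window of exactly k tokens."""
    return [tokens[i:i + k] for i in range(len(tokens) - k + 1)]


def training_instances(section_text, section_type, body_tokens, k=5, max_body=100):
    """Build (body, section type, prefix, target) tuples for one section."""
    tokens = section_text.split()
    body = body_tokens[:max_body]  # keep only the first 100 body tokens
    return [
        (body, section_type, window[:-1], window[-1])
        for window in sliding_windows(tokens, k)
    ]
```

Each instance pairs a prefix of k - 1 words with the word the model should predict next.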

5.3 Model Configuration and Training Details

We trained the described models for 20 epochs with early stopping, and the models were evaluated after each epoch on the validation set. The model that performed best on the validation set was then evaluated on the test set by computing the Top-k accuracy. We note that test instances for which the predicted word is a special token, like <UNK> or any of the punctuation tokens, are ignored during the evaluation on the test set. We used the TensorFlow and Keras libraries to implement our models and trained them using a GPU. For the training, we use the following hyperparameters for the neural language models:

- a size of 512 for the prefix and method body Embedding layers, while a size of 64 is used for the section type Embedding layer;
- an LSTM layer with size 256, followed by a Dense layer with size 128 and finally the Output layer with dimension 30,000, equal to the vocabulary size; we also add a Dropout layer between the Dense and Output layers with a dropout probability of 0.2;
- training is done using the Adam optimizer with a learning rate of 3e-4.

This model configuration is similar to the previous work; we have only set the dimensionality slightly higher to accommodate our larger dataset. We have not performed further hyperparameter optimization because of time and computational resource constraints. This should not affect our findings, since the goal of our work was to investigate whether the structure of documentation comments could be leveraged for the problem of generating completion suggestions. However, further hyperparameter optimization could lead to better results.

6 Can Structural Information Improve Completion Accuracy? (RQ2)

The focus of this research question is to understand the effect of using the section information on the completion performance. We will investigate two aspects: “1) does using the section information improve the generated completion suggestions?” and “2) are there significant differences between the completion predictability among the sections?”. To limit the scope of the research question, we first focus only on Python docstrings. The comparison to the Java results will then be performed in the next research questions.

Methodology

To evaluate the trained language models we compute the Top-1, Top-3, Top-5 and Top-10 accuracy of the generated suggestions for the test sets, averaged over the 5 folds. There are several options for evaluating machine learning models, and language models in particular. We choose the Top-k accuracy as it allows us to directly measure how well the models work for our task. This metric has been used in a paper (Zhou et al. 2022) that evaluates deep learning models for the task of code completion, which is similar to our task. Since we are interested in whether the results returned by the model are correct, the most important value is the Top-1 accuracy: developers are unlikely to spend time analysing a list of 10 words to choose the correct next word. However, looking at the Top-3, Top-5 and Top-10 accuracy allows us to understand the potential of the models, as additional post-processing steps could improve the prediction results. For example, we might decide to suggest a parameter or variable name in the first position if it appears in the method body and the model suggests it in the Top-5, but not as the Top-1 prediction.
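The Top-k accuracy can be computed as in the sketch below. It is a simplified illustration: the function name and input layout are hypothetical, and instances are skipped when the word to be predicted is `<UNK>` or a punctuation token, which is one reading of the exclusion rule stated in Section 5.3.

```python
def top_k_accuracy(ranked_predictions, targets, ks=(1, 3, 5, 10), ignored=("<UNK>",)):
    """Fraction of instances whose target is among the k highest-ranked words.

    `ranked_predictions[i]` is the model's word list for instance i, best first.
    Assumption: instances whose target is a special token are not counted.
    """
    hits = {k: 0 for k in ks}
    total = 0
    for ranked, target in zip(ranked_predictions, targets):
        if target in ignored or target.startswith("<punct"):
            continue
        total += 1
        for k in ks:
            if target in ranked[:k]:
                hits[k] += 1
    return {k: (hits[k] / total if total else 0.0) for k in ks}
```

By construction the accuracy is non-decreasing in k, which is why the larger values of k indicate the potential that post-processing could recover.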

We include the results of our evaluation in Table 15, which reports the results obtained for the Sequential, Section, Context and Context-Section Language Models and for the Section specific Models. We select the Context LM as the base model and include for all the other models, in parentheses, the relative change in percent compared to this model. The relative change is computed as the difference between the new value and the reference value, divided by the reference value, where the new value is the Top-k accuracy obtained for a specific model and the reference value is the Top-k accuracy obtained for the base model, that is, the Context LM. The values included in the table are rounded for reasons of space; the relative change, however, is computed on the exact values.
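The relative change defined above corresponds to the following small helper. The function name is hypothetical and the numbers in the usage note are purely illustrative; they are not values from Table 15.

```python
def relative_change(new_value, reference_value):
    """Relative change of new_value with respect to reference_value, in percent."""
    return (new_value - reference_value) / reference_value * 100.0
```

For example, a model with a Top-1 accuracy of 0.55 compared to a base model at 0.50 would show a relative change of about 10%, while a model at 0.45 would show about -10%.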