Abstract

Various mature automated test generation tools exist for statically typed programming languages such as Java. Automatically generating unit tests for dynamically typed programming languages such as Python, however, is substantially more difficult due to the dynamic nature of these languages as well as the lack of type information. Our Pynguin framework provides automated unit test generation for Python. In this paper, we extend our previous work on Pynguin to support more aspects of the Python language, and we study a larger variety of well-established state-of-the-art test-generation algorithms, namely DynaMOSA, MIO, and MOSA. Furthermore, we improved our Pynguin tool to generate regression assertions, whose quality we also evaluate. Our experiments confirm that evolutionary algorithms can outperform random test generation also in the context of Python, and, similar to the Java world, DynaMOSA yields the highest coverage results. However, our results also demonstrate that there are still fundamental remaining issues, such as inferring type information for code without this information, currently limiting the effectiveness of test generation for Python.

1 Introduction

Automated unit test generation is an established field in research and a technique well-received by researchers and practitioners to support programmers. Mature research prototypes exist, implementing test-generation approaches such as feedback-directed random generation (Pacheco et al. 2007) or evolutionary algorithms (Campos et al. 2018). These techniques enable the automated generation of unit tests for statically typed programming languages, such as Java, and remove the burden of the potentially tedious task of writing unit tests from the programmer.

In recent years, however, dynamically typed programming languages, most notably JavaScript, Python, and Ruby, have gained huge popularity amongst practitioners. Current rankings, such as the IEEE Spectrum Ranking (footnote 1), underline this popularity, now listing Python as the overall most popular language according to their ranking criteria. Dynamically typed languages lack static type information on purpose as this is supposed to enable rapid development (Gong et al. 2015). However, this dynamic nature has also been reported to cause reduced development productivity (Kleinschmager et al. 2012), code usability (Mayer et al. 2012), or code quality (Meyerovich and Rabkin 2013; Gao et al. 2017). The lack of a (visible static) type system is the main reason for type errors encountered in these dynamic languages (Xu et al. 2016).

The lack of static types is particularly problematic for automated test generation, which requires type information to provide appropriate parameter types for method calls or to assemble complex objects. In absence of type information the test generation can only guess, for example, the appropriate parameter types for new function calls. To overcome this limitation, existing test generators for dynamically typed languages often do not target test-generation for general APIs, but resort to other means such as using the document object model of a web browser to generate tests for JavaScript (Mirshokraie et al. 2015), or by targeting specific systems, such as the browser’s event handling system (Artzi et al. 2011; Li et al. 2014). A general purpose unit test generator at the API level has not been available until recently.

Pynguin (Lukasczyk et al. 2020; Lukasczyk and Fraser 2022) aims to fill this fundamental gap: Pynguin is an automated unit test generation framework for Python programs. It takes a Python module as input together with the module’s dependencies, and aims to automatically generate unit tests that maximise code coverage. The version of Pynguin we evaluated in our previous work (Lukasczyk et al. 2020) implemented two established test generation techniques: whole-suite generation (Fraser and Arcuri 2013) and feedback-directed random generation (Pacheco et al. 2007). Our empirical evaluation showed that the whole-suite approach is able to achieve higher code coverage than random testing. Furthermore, we studied the influence of available type information, which leads to higher resulting coverage if it can be utilised by the test generator.

In this paper we extend our previous evaluation (Lukasczyk et al. 2020) in several aspects and make the following contributions: We implemented further test generation algorithms: the many-objective sorting algorithm (MOSA, Panichella et al. 2015) and its extension DynaMOSA (Panichella et al. 2018a), as well as the many independent objective (MIO) algorithm (Arcuri 2017; 2018). We study and compare the performance of all algorithms in terms of resulting branch coverage. We furthermore enlarge the corpus of subjects for test generation to get a broader insight into test generation for Python. For this we take projects from the BugsInPy project (Widyasari et al. 2020) and the ManyTypes4Py dataset (Mir et al. 2021); in total, we add eleven new projects to our dataset. Additionally, we implemented the generation of regression assertions based on mutation testing (Fraser and Zeller 2012).

Our empirical evaluation confirms that, similar to prior findings on Java, DynaMOSA and MOSA perform best on Python code, with a median of 80.7% branch coverage. Furthermore, our results show that type information is an important contributor to coverage on all studied test-generation algorithms, although this effect is only significant for some of our subject systems, showing an average impact of 2.20% to 4.30% on coverage. While this confirms that type information is one of the challenges in testing Python code, these results also suggest there are other open challenges for future research, such as dealing with type hierarchies and constructing complex objects.

2 Background

The main approaches to automatically generate unit tests are either by creating random sequences, or by applying meta-heuristic search algorithms. Random testing assembles sequences of calls to constructors and methods randomly, often with the objective to find undeclared exceptions (Csallner and Smaragdakis 2004) or violations of general object contracts (Pacheco et al. 2007), but the generated tests can also be used as automated regression tests. The effectiveness of random test generators can be increased by integrating heuristics (Ma et al. 2015; Sakti et al. 2015). Search-based approaches use a similar representation, but apply evolutionary search algorithms to maximize code coverage (Tonella 2004; Baresi and Miraz 2010; Fraser and Arcuri 2013; Andrews et al. 2011).

As an example to illustrate how type information is used by existing test generators, consider the following snippets of Java (left) and Python (right) code:

Assume Foo of the Java example is the class under test. It has a dependency on class Bar: in order to generate an object of type Foo we need an instance of Bar, and the method doFoo also requires a parameter of type Bar.

Random test generation would typically generate tests in a forward way. Starting with an empty sequence t0 = 〈〉, all available calls for which all parameters can be satisfied with objects already existing in the sequence can be selected. In our example, initially only the constructor of Bar can be called, since all other methods and constructors require a parameter, resulting in t1 = 〈o1 = new Bar()〉. Since t1 contains an object of type Bar, in the second step the test generator now has a choice of either invoking doBar on that object (and use the same object also as parameter), or invoking the constructor of Foo. Assuming the chosen call is the constructor of Foo, we now have t2 = 〈o1 = new Bar();o2 =new Foo(o1);〉. Since there now is also an instance of Foo in the sequence, in the next step also the method doFoo is an option. The random test generator will continue extending the sequence in this manner, possibly integrating heuristics to select more relevant calls, or to decide when to start with a new sequence.

An alternative approach, for example applied during the mutation step of an evolutionary test generator, is to select necessary calls in a backwards fashion. That is, a search-based test generator like EvoSuite (Fraser and Arcuri 2013) would first decide that it needs to, for example, call method doFoo of class Foo. In order to achieve this, it requires an instance of Foo and an instance of Bar to satisfy the dependencies. To generate a parameter object of type Bar, the test generator would consider all calls that are declared to return an instance of Bar—which is the case for the constructor of Bar in our example, so it would prepend a call to Bar() before the invocation of doFoo. Furthermore, it would try to instantiate Foo by calling the constructor. This, in turn, requires an instance of Bar, for which the test generator might use the existing instance, or could invoke the constructor of Bar.

In both scenarios, type information is crucial: In the forward construction type information is used to inform the choice of call to append to the sequence, while in the backward construction type information is used to select generators of dependency objects. Without type information, which is the case with the Python example, a forward construction (1) has to allow all possible functions at all steps, thus may not only select the constructor of Bar, but also that of Foo with an arbitrary parameter type, and (2) has to consider all existing objects for all parameters of a selected call, and thus, for example, also str or int. Backwards construction without type information would also have to try to select generators from all possible calls, and all possible objects, which both result in a potentially large search space to select from.

Without type information in our example we might see instantiations of Foo or calls to do_foo with parameter values of unexpected types, such as:

This example violates the assumptions of the programmer, which are that the constructor of Foo and the do_foo method both expect an object of type Bar. When type information is not present, such test cases can be generated and will only fail if the execution raises an exception, for example, due to a call to a method that is defined for the Bar type but not on an int or str object. Type information can be provided in two ways in recent Python versions: either in a stub file that contains type hints or directly annotated in the source code. A stub file can be compared to a C header file: it contains, for example, method declarations with their corresponding types. Since Python 3.5, the types can also be annotated directly in the implementing source code, in a fashion similar to that known from statically typed languages (see PEP 484, footnote 2).
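The listings referred to above are not reproduced in this text. As a minimal sketch, assuming hypothetical Python counterparts of the classes Foo and Bar described earlier (the method bodies are invented for illustration only), a test with guessed argument types and an annotated variant of the class might look as follows:

```python
class Bar:
    def do_bar(self, other):
        return other


class Foo:
    # No annotations: nothing tells a test generator that `b` should be a Bar.
    def __init__(self, b):
        self._b = b

    def do_foo(self, b):
        # Fails at run time if `b` has no do_bar method.
        return b.do_bar(self._b)


def test_with_guessed_types():
    # Without type information a generator may pick int and str arguments.
    foo_0 = Foo(42)
    try:
        foo_0.do_foo("hello")
    except AttributeError:
        return "raised"
    return "passed"


class AnnotatedFoo:
    # PEP 484 style annotations make the expected parameter type explicit.
    def __init__(self, b: Bar) -> None:
        self._b = b

    def do_foo(self, b: Bar) -> Bar:
        return b.do_bar(self._b)
```

The unannotated test is only rejected because str has no do_bar method; a mistyped argument that happens to support the called operations would pass unnoticed.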

3 Unit Test Generation with Pynguin

We introduce our automated test-generation framework for Python, called Pynguin, in the following sections. We start with a general overview on Pynguin. Afterwards, we formally introduce a representation for the test-generation problem using evolutionary algorithms in Python. We also discuss different components and operators that we use.

3.1 The Pynguin Framework

Pynguin is a framework for automated unit test generation written in and for the Python programming language. The framework is available as open-source software licensed under the GNU Lesser General Public License from its GitHub repository (footnote 3). It can also be installed from the Python Package Index (PyPI, footnote 4) using the pip utility. We refer the reader to Pynguin’s web site (footnote 5) for further links and information on the tool.

Pynguin takes as input a Python module and allows the generation of unit tests using different techniques. For this, it analyses the module and extracts information about available methods from the module and types from the module and its transitive dependencies to build a test cluster (see Section 3.3). Next, it generates test cases using a variety of different test-generation algorithms (which we describe in the following sub-sections). Afterwards, it generates regression assertions for the previously generated test cases (see Section 3.7). Finally, a tool run emits the generated test cases in the style of the widely-used PyTest (footnote 6) framework. Figure 1 illustrates Pynguin’s components and their relationships. For a detailed description of the components, how to use Pynguin for one’s own purposes, as well as how to extend Pynguin, we refer the reader to our respective tool paper (Lukasczyk and Fraser 2022).

Figure 1: The different components of Pynguin (taken from Lukasczyk and Fraser (2022))

Pynguin is built to be extensible with other test generation approaches and algorithms. It comes with a variety of well-received test-generation algorithms, which we describe in the following sections.

3.1.1 Feedback-Directed Random Test Generation

Pynguin provides a feedback-directed random algorithm adopted from Randoop (Pacheco et al. 2007). The algorithm starts with two empty test suites, one for passing and one for failing tests, and randomly adds statements to an empty test case. The test case is executed after each addition. Test cases that do not raise an exception are added to the passing test suite; otherwise they are added to the failing test suite. The algorithm will then randomly choose a test case from the passing test suite or an empty test case as the basis to add further statements. Algorithm 1 shows the basic algorithm.

Algorithm 1: Feedback-directed random generation (adapted from Pacheco et al. (2007)).

The main differences of our implementation compared to Randoop are that our version of the algorithm does not check for contract violations, does not implement any of Randoop’s filtering criteria, and it does not require the user to provide a list of relevant classes, functions, and methods. Please note that our random algorithm, in contrast to the following evolutionary algorithms, does not use a fitness function to guide its random search process. It only checks the coverage of the generated test suite to stop early once the module under test is fully covered. We will refer to this algorithm as Random in the following.
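The loop described above can be sketched as follows. This is a simplified illustration and not Pynguin's implementation: statements are plain source strings, the executor `runs_cleanly` is a hypothetical stand-in for test execution, and the early stop on full coverage is omitted.

```python
import random


def feedback_directed_random(candidate_statements, executes_without_exception,
                             budget, rng=None):
    """Grow test cases statement by statement and sort them by outcome."""
    rng = rng or random.Random(0)
    passing, failing = [], []
    for _ in range(budget):
        # Start from an empty test case or extend a previously passing one.
        base = rng.choice(passing + [[]])
        test_case = base + [rng.choice(candidate_statements)]
        # Execute after each addition and classify by the outcome.
        if executes_without_exception(test_case):
            passing.append(test_case)
        else:
            failing.append(test_case)
    return passing, failing


def runs_cleanly(test_case):
    # Hypothetical executor: run the statements in a fresh namespace.
    namespace = {}
    try:
        for statement in test_case:
            exec(statement, namespace)
    except Exception:
        return False
    return True
```

Only passing test cases are extended further, so a statement that raises an exception never becomes the prefix of a longer test.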

3.1.2 Whole Suite Test Generation

The whole suite approach implements a genetic algorithm that takes a test suite, that is, a set of test cases, as an individual (Fraser and Arcuri 2013). It uses a monotonic genetic algorithm, as shown in Algorithm 2, as its basis. Mutation and crossover operators can modify the test suite as well as its contents, that is, the test cases. It uses the sum of all branch distances as a fitness function. We will refer to this algorithm as WS in the following.

Algorithm 2: Monotonic Genetic Algorithm (adopted from Campos et al. (2018)).

Algorithm 3: Many-Objective Sorting Algorithm (MOSA, adopted from Campos et al. (2018)).

3.1.3 Many-Objective Sorting Algorithm (MOSA)

The Many-Objective Sorting Algorithm (MOSA, Panichella et al. 2015) is an evolutionary algorithm specifically designed to foster branch coverage and overcome some of the limitations of whole-suite generation. MOSA considers branch coverage as a many-objective optimisation problem, whereas previous approaches, such as whole suite test generation, combined the objectives into one single value. It therefore assigns each branch its individual objective function. The basic MOSA algorithm is shown in Algorithm 3.

MOSA starts with an initial random population and evolves this population to improve its ability to cover more branches. Earlier many-objective genetic algorithms suffer from the so-called dominance resistance problem (von Lücken et al. 2014). This means that the proportion of non-dominated solutions increases exponentially with the number of goals to optimise. As a consequence the search process degrades to a random one. MOSA specifically targets this by introducing a preference criterion to choose the optimisation targets to focus the search only on the still relevant targets, that is, the yet uncovered branches. Furthermore, MOSA keeps a second population, called archive, to store already found solutions, that is, test cases that cover branches. This archive is built in a way that it automatically prefers shorter test cases over longer for the same coverage goals. We refer the reader to the literature for details on MOSA (Panichella et al. 2015). In the following we will refer to this algorithm as MOSA.
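The archive behaviour described above, keeping for every covered branch the shortest test case found so far, can be sketched as follows. The function name and the data layout (a dictionary from goal to test case, with test cases as lists of statements) are assumptions made for illustration and not taken from MOSA or Pynguin.

```python
def update_archive(archive, test_case, covered_goals):
    """Store `test_case` for each goal it covers if it is the shortest so far."""
    for goal in covered_goals:
        best = archive.get(goal)
        # Prefer the shorter test case for the same coverage goal.
        if best is None or len(test_case) < len(best):
            archive[goal] = test_case
    return archive


archive = {}
update_archive(archive, ["a = 1", "b = 2", "f(a, b)"], {"branch_1", "branch_2"})
update_archive(archive, ["f(1, 2)"], {"branch_1"})
```

After both calls, branch_1 is represented by the one-statement test, while branch_2 keeps the longer test because no shorter test covers it.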

3.1.4 Dynamic Target Selection MOSA (DynaMOSA)

DynaMOSA (Panichella et al. 2018a) is an extension to the original MOSA algorithm. The novel contribution of DynaMOSA was to dynamically select the targets for the optimisation. This selection is done on the control-dependency hierarchy of statements. Let us consider the Python code snippet from Listing 1.

The condition in line 2 is control dependent on the condition in the first line. This means that it can only be reached and covered if the condition in the first line is satisfied. Thus, searching for an assignment for the variable bar to fulfil the condition, and thus covering line 3, is not necessary unless the condition in line 1 is fulfilled. Figure 2 depicts the control-dependence graph. DynaMOSA uses the control dependencies, which are found in the control-dependence graph of the respective code, to determine which goals to select for further optimisation. To compute the control dependencies within Pynguin, we generate a control-flow graph from the module under test’s byte code using the bytecode library (footnote 7); we use standard algorithms to compute the post-dominator tree and the control-dependence graph from the control-flow graph (see, for example, the work of Ferrante et al. (1987) for such algorithms). We refer the reader to the literature for details on DynaMOSA (Panichella et al. 2018a). In the following we will refer to this algorithm as DynaMOSA.
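Listing 1 is not reproduced in this text. The following sketch assumes a snippet of the described shape (the concrete conditions are invented) and illustrates dynamic target selection with a hand-written dependency map; the target names and the function `update_targets` are hypothetical and do not mirror Pynguin's code.

```python
def foo(foo_value, bar):
    if foo_value == 42:        # outer condition ("line 1" in the description)
        if bar == 23:          # inner condition, control dependent on the outer
            return "inner"     # reachable only if both conditions hold
    return "outer"


# Each target maps to the targets that become reachable once it is covered.
DEPENDENTS = {
    "outer_true": ["inner_true", "inner_false"],
    "outer_false": [],
    "inner_true": [],
    "inner_false": [],
}
ROOT_TARGETS = ["outer_true", "outer_false"]


def update_targets(current_targets, covered):
    """Replace covered targets by their not yet covered dependents."""
    targets = set(current_targets)
    worklist = [t for t in targets if t in covered]
    while worklist:
        target = worklist.pop()
        targets.discard(target)
        for dependent in DEPENDENTS[target]:
            if dependent in covered:
                worklist.append(dependent)
            else:
                targets.add(dependent)
    return targets
```

The inner branches only enter the set of optimisation targets after the true branch of the outer condition has been covered, which keeps the search from optimising bar prematurely.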

3.1.5 Many Independent Objectives (MIO)

The Many Independent Objectives Algorithm (MIO, Arcuri 2017) targets some limitations of both the Whole Suite and the MOSA approach. To do this, it combines the simplicity of a (1 + 1)EA with feedback-directed target selection, dynamic exploration/exploitation, and a dynamic population. MIO also maintains an archive of tests, where it keeps a different population of tests for each testing target, for example, for each branch to cover. Algorithm 4 shows its main algorithm.

Algorithm 4: Many Independent Objective (MIO) Algorithm (adapted from Campos et al. (2018)).

MIO was designed with the aim of overcoming some intrinsic limitations of the Whole Suite or MOSA algorithms that arise especially in system-level test generation. Such systems can contain hundreds of thousands of objectives to cover, for which a fixed-size population will most likely be not suitable; hence, MIO uses a dynamic population. It furthermore turns out that exploration is good at the beginning of the search whereas a focused exploitation is beneficial for better results in the end; MIO addresses this insight by a dynamic exploration/exploitation control. Lastly, again addressing the large number of objectives and limited resources, MIO selects the objectives to focus its search on by using a feedback-directed sampling technique. The literature (Arcuri 2017; 2018) provides more details on MIO to the interested reader. We will refer to this algorithm as MIO in the following.

3.2 Problem Representation

As the unit for unit test generation, we consider Python modules. A module is usually identical with a file and contains definitions of, for example, functions, classes, or statements; these can be nested almost arbitrarily. When the module is loaded the definitions and statements at the top level are executed. While generating tests we do not only want all definitions to be executed, but also all structures defined by those definitions, for example, functions, closures, or list comprehensions. Thus, in order to apply a search algorithm, we first need to define a proper representation of the valid solutions for this problem.

We use a representation based on prior work from the domain of testing Java code (Fraser and Arcuri 2013). For each statement \(s_{j}\) in a test case \(t_{i}\) we assign one value \(v(s_{j})\) with type \(\tau(v(s_{j})) \in \mathcal{T}\), with the finite set of types \(\mathcal{T}\) used in the subject-under-test (SUT) and the modules transitively imported by the SUT. A set of test cases forms a test suite. We define five kinds of statements: Primitive statements represent int, float, bool, bytes and str variables, for example, var_0 = 42. Value and type of a statement are defined by the primitive variable. Note that although in Python everything is an object, we treat these values as primitives because they do not require further construction in Python’s syntax.

Constructor statements create new instances of a class, for example, var_0 = SomeType(). Value and type are defined by the constructed object; any parameters are satisfied from the set \(V = \{v(s_{k}) \mid 0 \leq k < j\}\). Method statements invoke methods on objects, for example, var_1 = var_0.foo(). Value and type are defined by the return value of the method; source object and any parameters are satisfied from the set \(V\). Function statements invoke functions, for example, var_2 = bar(). They do not require a source object but are otherwise identical to method statements.

Extending our previous work (Lukasczyk et al. 2020) we introduce the generation of collection statements. Collection statements create new collections, that is, List, Set, Dict, and Tuple. An example for such a list collection statement is var_2 = [var_0, var_1]; an example for a dictionary collection statement is var_4 = {var_0: var_1, var_2: var_3}. Value and type are defined by the constructed collection; elements of a collection are satisfied from the set V. For dictionaries, both keys and values are satisfied from V. Tuples are treated similarly to lists; their sole difference in Python is that lists are mutable while tuples are immutable.
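To make the five statement kinds concrete, the following sketch shows a small test case that uses each of them once. The class SomeType and the function bar are hypothetical stand-ins for a module under test, and the variable names merely imitate the style of the examples above.

```python
class SomeType:
    def foo(self):
        return 1


def bar():
    return "text"


def test_case_0():
    int_0 = 42                   # primitive statement
    some_type_0 = SomeType()     # constructor statement
    int_1 = some_type_0.foo()    # method statement
    str_0 = bar()                # function statement
    list_0 = [int_0, int_1]      # collection statement (list)
    dict_0 = {str_0: int_0}      # collection statement (dictionary)
    return list_0, dict_0
```

Every statement only refers to values defined by earlier statements, which corresponds to satisfying parameters and elements from the set V.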

Previously, we always filled in all parameters (except *args and **kwargs) when creating a constructor, method or function statement and passed the parameters by position. However, filling all parameters might not be necessary, as some parameters may have default values or are optional (for example *args and **kwargs, which will result in an empty tuple or dictionary, respectively). It can also be impossible to pass certain parameters by position as it is possible to restrict them to be only passed by keyword. We improved our representation of statements with parameters, by (1) passing parameters in the correct way, that is, positional or by keyword, and (2) leaving optional parameters empty with some probability.

Parameters of the form *args or **kwargs capture positional or keyword arguments which are not bound to any other parameter. Hereby, args and kwargs are just names for the formal parameters; they can be chosen arbitrarily, but args and kwargs are the most common names. Their values can be accessed as a tuple (*args) or a dictionary (**kwargs). We fill these parameters by constructing a list or dictionary of appropriate type and passing its elements as arguments by using the * or ** unpacking operator, respectively. Consider the example snippet in Listing 2 to see what a test case for functions involving those parameter types may look like.
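Listing 2 is not reproduced in this text. A minimal sketch of such a test case, assuming a hypothetical function `collect` with a positional parameter, a keyword-only parameter with a default value, and both variadic parameter forms, could look like this:

```python
def collect(first, *args, flag=False, **kwargs):
    # Hypothetical function under test; `flag` can only be passed by keyword.
    return first, args, flag, kwargs


def test_case_0():
    int_0 = 1
    int_1 = 2
    list_0 = [int_0, int_1]
    str_0 = "key"
    dict_0 = {str_0: int_1}
    # `first` is passed by position, the list and the dictionary are unpacked,
    # and the optional keyword-only `flag` is left at its default value.
    return collect(int_0, *list_0, **dict_0)
```

Inside `collect`, the unpacked list arrives as the tuple `args` and the unpacked dictionary as `kwargs`.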

This representation is of variable size; we constrain the size of test cases l ∈ [1, L] and test suites n ∈ [1, N]. In contrast to prior work on testing Java (Fraser and Arcuri 2013), we do not define attribute or assignment statements; attributes of objects are not explicitly declared in Python but assigned dynamically, hence it is non-trivial to identify the existing attributes of an object and we leave it as future work. Assignment statements in the Java representation could assign values to array indices. This was necessary, as Java arrays can only be manipulated using the []-operator. While Python also has a []-operator, the same effect can also be achieved by directly calling the __setitem__ or __getitem__ methods. Please note that we do not use the latter approach in Pynguin currently, because Pynguin considers all methods having names starting with one or more underscores to be private methods; private methods are not part of the public interface of a module and thus Pynguin does not directly call them. Given these constraints, we currently cannot generate a test case as depicted in Listing 3 because this would require some of the aforementioned features, such as reading and writing attributes or the []-operator for list manipulation. The latter is currently not implemented in Pynguin because we assume that changing the value of a list element is not often required; it is more important to append values to lists, which is supported by Pynguin. Additionally, in Java an array has a fixed size, whereas lists in Python have variable size. This would require a way to find out a valid list index that could be used for the []-operator.

3.3 Test Cluster

For a given subject under test, the test cluster (Wappler and Lammermann 2005) defines the set of available functions and classes along with their methods and constructors. The generation of the test cluster recursively includes all imports from other modules, starting from the subject under test. The test cluster also includes all primitive types as well as Python’s built-in collection types, that is, List, Set, Dict, and Tuple. To create the test cluster we load the module under test and inspect it using the inspect module from the Python standard library to retrieve all available functions and classes from this module. Additionally, we transitively inspect all dependent modules.

The resulting test cluster basically consists of two maps and one set: the set contains information about all callable or accessible elements in the module under test, which are classes, functions, and methods. This set also stores information about the fields of enums, as well as static fields at class or module level. During test generation, Pynguin selects from this set the callable or accessible elements in the module under test to generate its inputs for.

The two maps store information about which callable or accessible elements can generate a specific type, or modify it. Please note that these two maps do not only contain elements from the module under test but also from the dependent modules. Pynguin uses these two maps to generate or modify specific types, if needed.
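A strongly simplified sketch of how such a cluster could be assembled with the standard inspect and typing modules is shown below. It builds only the set of accessible elements and the generator map, skips the modifier map and the transitive inspection of dependencies, and all names (`build_test_cluster`, the in-memory module `demo`) are assumptions made for illustration rather than Pynguin's API.

```python
import inspect
import types
import typing
from collections import defaultdict


def build_test_cluster(module):
    """Collect callables of `module` and index them by the type they produce."""
    accessible = set()
    generators = defaultdict(set)  # type -> callables that can produce it
    for _, obj in inspect.getmembers(module):
        # Consider only classes and functions defined in this module.
        if getattr(obj, "__module__", None) != module.__name__:
            continue
        if inspect.isclass(obj):
            accessible.add(obj)
            generators[obj].add(obj)  # calling the class yields an instance
            for _, method in inspect.getmembers(obj, inspect.isfunction):
                # Names starting with an underscore are treated as private.
                if not method.__name__.startswith("_"):
                    accessible.add(method)
        elif inspect.isfunction(obj):
            accessible.add(obj)
            return_type = typing.get_type_hints(obj).get("return")
            if return_type is not None:
                generators[return_type].add(obj)
    return accessible, generators


# Usage with a small in-memory module (hypothetical subject under test).
demo = types.ModuleType("demo")
exec(
    "class Bar:\n"
    "    def do_bar(self):\n"
    "        return self\n"
    "\n"
    "def make_bar() -> Bar:\n"
    "    return Bar()\n",
    demo.__dict__,
)
accessible, generators = build_test_cluster(demo)
```

Looking up `generators[demo.Bar]` then yields both the class itself and the annotated factory function, which is the kind of query needed when a parameter of type Bar has to be constructed.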

3.4 Operators for the Genetic Algorithms

Except for the random algorithm presented above (see Section 3.1.1), the test-generation algorithms (see Sections 3.1.2 to 3.1.5) implemented in Pynguin are genetic algorithms. Genetic algorithms are inspired by natural evolution and have been used to address many different optimisation problems. They encode a solution to the problem as an individual, called chromosome; a set of individuals is called population. Using operations inspired by genetics, the algorithm optimises the population gradually. Operations are, for example, crossover, mutation, and selection. Crossover merges genetic material from at least two individuals into a new offspring, while mutation independently changes the elements of an individual. Selection is used to choose individuals for reproduction that are considered better with respect to some fitness criterion (Campos et al. 2018).

In the following, we introduce those operators and their implementation in Pynguin in detail.

3.4.1 The Crossover Operator

Crossover is used to merge the genetic material from at least two individuals into a new offspring. The different implemented genetic algorithms use different objects as their individuals: DynaMOSA, MIO, and MOSA consider a test case to be an individual, whereas Whole Suite considers a full test suite, consisting of potentially many test cases, to be an individual.

This makes it necessary to distinguish between test-suite crossover and test-case crossover; both work in a similar manner but have subtle differences.

Test-suite Crossover

The search operators for our representation are based on those used in EvoSuite (Fraser and Arcuri 2013): We use single-point relative crossover for crossing over both test cases and test suites.

The crossover operator for test suites, which is used for the whole suite algorithm, takes two parent test suites \(P_{1}\) and \(P_{2}\) as input, and generates two offspring test suites \(O_{1}\) and \(O_{2}\), by splitting both \(P_{1}\) and \(P_{2}\) at the same relative location, exchanging the second parts and concatenating them with the first parts. Individual test cases have no dependencies between each other, thus the application of crossover always generates valid test suites as offspring. Furthermore, the operator never increases the difference in the number of test cases between the test suites, thus \(\operatorname{abs}(|O_{1}| - |O_{2}|) \leq \operatorname{abs}(|P_{1}| - |P_{2}|)\). Therefore, no offspring will have more test cases than the larger of its parents.
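A minimal sketch of single-point relative crossover on test suites, modelled here as plain Python lists of test cases, is given below. The function name and the rounding of the split point (truncation towards zero) are assumptions; the text does not prescribe how the relative location is mapped to an index.

```python
import random


def relative_crossover(parent_1, parent_2, rng=None):
    """Single-point relative crossover on two lists of test cases."""
    rng = rng or random.Random()
    alpha = rng.random()  # the same relative split point for both parents
    cut_1 = int(alpha * len(parent_1))
    cut_2 = int(alpha * len(parent_2))
    # Exchange the second parts and concatenate them with the first parts.
    offspring_1 = parent_1[:cut_1] + parent_2[cut_2:]
    offspring_2 = parent_2[:cut_2] + parent_1[cut_1:]
    return offspring_1, offspring_2
```

Because both parents are cut at the same relative position, the size difference between the offspring cannot exceed that of the parents, and neither offspring grows beyond the larger parent.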

Test-case Crossover

For the implementation of DynaMOSA, MIO, and MOSA we also require a crossover operator for test cases. This operator works similarly to the crossover operator for test suites. We describe the differences in the following using an example.

Listing 4 depicts an example defining a class Foo containing a constructor and two methods, as well as two test cases that construct instances of Foo and execute both methods. During crossover, each test case is divided into two parts by the crossover point and the latter parts of both test cases are exchanged, which may result in the test cases depicted in Listing 5. Since statements in the exchanged parts may depend on variables defined in the original first part, the statement or test case needs to be repaired to remain valid. For example, the insertion of the call foo_0.baz(int_0) into the first crossed-over test case requires an instance of Foo as well as an int value.

In the example, the crossover operator randomly decided to create a new Foo instance instead of reusing the existing foo_0, and to create a new int constant to satisfy the int argument of the baz method. For the second crossed-over test case, the operator simply reused both the instance of Foo and the existing str constant to satisfy the requirements for the bar method.

Listing 5: Test cases from Listing 4 after performing crossover

3.4.2 The Mutation Operator

Similarly to the crossover operator, the different granularity of the individuals between the different genetic algorithms requires a different handling in the mutation operation.

Test-suite Mutation

When mutating a test suite \(T\), each of its test cases is mutated with probability \(\frac{1}{|T|}\). After mutation, we add new randomly generated test cases to \(T\). The first new test case is added with probability \(\sigma_{\text{testcase}}\). If it is added, a second new test case is added with probability \(\sigma_{\text{testcase}}^{2}\); this happens until the \(i\)-th test case is not added (probability: \(1-\sigma_{\text{testcase}}^{i}\)). Test cases are only added if the limit \(N\) has not been reached, thus \(|T| \leq N\).
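The two phases of test-suite mutation can be sketched as follows. The callbacks `mutate_test_case` and `new_random_test_case` are hypothetical placeholders for the test-case mutation and the random test-case generation, and the parameter names are chosen for this illustration only.

```python
import random


def mutate_test_suite(suite, mutate_test_case, new_random_test_case,
                      sigma_testcase, max_size, rng=None):
    """Mutate each test case with probability 1/|T|, then add new ones."""
    rng = rng or random.Random()
    suite = list(suite)
    if suite:
        probability = 1.0 / len(suite)
        suite = [mutate_test_case(t) if rng.random() < probability else t
                 for t in suite]
    # The i-th new test case is added with probability sigma_testcase ** i,
    # and only while the size limit N has not been reached.
    i = 1
    while len(suite) < max_size and rng.random() < sigma_testcase ** i:
        suite.append(new_random_test_case())
        i += 1
    return suite
```

Since the probability shrinks with every added test case, long runs of additions become increasingly unlikely, which keeps the growth of a suite per mutation step small.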

Test-case Mutation

The mutation of a test case can be one of three operations: remove, change, or insert, which we explain in the following sections. Each of these operations can happen with the same probability of \(\frac {1}{3}\). A test case that has no statements left after the application of the mutation operator is removed from the test suite T.

When mutating a test case t whose last execution raised an exception at statement si, the following two rules apply in order:

1. If t has reached the maximum length, that is, |t| ≥ L, the statements {sj ∣ i < j < l} are removed from t.

2. Only the statements {sj ∣ 0 ≤ j ≤ i} are considered for mutation, because the statements {sj ∣ i < j < l} are never reached and thus have no impact on the execution result.
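The two rules can be sketched as a small preprocessing step before mutation. The function below is an illustration under the assumption that a test case is a list of statements and that `failing_index` is the position i of the statement that raised the exception, or None if the last execution raised none; it is not Pynguin's implementation.

```python
def prepare_for_mutation(test_case, failing_index, max_length):
    """Return the statements to keep and the positions open to mutation."""
    statements = list(test_case)
    # Rule 1: at maximum length, drop everything after the failing statement.
    if failing_index is not None and len(statements) >= max_length:
        statements = statements[: failing_index + 1]
    # Rule 2: only statements up to and including position i may be mutated.
    last = len(statements) - 1 if failing_index is None else failing_index
    mutable_positions = list(range(last + 1))
    return statements, mutable_positions
```

For a test case of five statements that fails at index 2, with a length limit of 5, the last two statements are dropped and positions 0 to 2 remain available for mutation.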

For constructing the initial population, a random test case t is sampled by uniformly choosing a value r with 1 ≤ r ≤ L, and then applying the insertion operator repeatedly starting with an empty test case \(t^{\prime }\), until \(|t^{\prime }| \geq r\).

The Insertion Mutation Operation

With probability σstatement we insert a new statement at a random position p ∈ [0, l]. If it is inserted, we insert another statement with probability \(\sigma _{\text {statement}}^{2}\) and so on, until the i-th statement is not inserted. New statements are only inserted as long as the limit L has not been reached, that is, |t| < L.

For each insertion, with probability \(\frac {1}{2}\) each, we either insert a new call on the module under test or we insert a method call on a value in the set {v(sk)∣0 ≤ k < p}. Any parameters of the selected call are either reused from the set {v(sk)∣0 ≤ k < p}, set to None, possibly left empty if they are optional (see Section 3.2), or are randomly generated. The type of a randomly generated parameter is either defined by its type hint, or if not available, chosen randomly from the test cluster (see Section 3.3). If the type of the parameter is defined by a type hint, we can query the test cluster for callable elements in the subject under test or its dependencies that generate an object of the required type. Generic types currently cannot be handled properly in Pynguin; only Python’s collection types are addressed. A parameter that has no type annotation, or the annotation Any, requires us to consider all available types in the test cluster as potential candidates. For those, we can only randomly pick an element from the test cluster.

*args, **kwargs, as well as parameters with a default value are only filled with a certain probability. For *args: T and **kwargs: T we try to create or reuse a parameter of type List[T] or Dict[str, T], respectively. Primitive types are either randomly initialized within a certain range or reuse a value from static or dynamic constant seeding (Fraser and Arcuri 2012) with a certain probability. Complex types are constructed in a recursive backwards fashion, that is, by constructing their required parameters or reusing existing values.

The Change Mutation Operation

For a test case \(t = \langle s_{0}, s_{1}, \dots , s_{l-1} \rangle \) of length l, each statement si is changed with probability \(\frac {1}{l}\). For int and float primitives, we choose a random standard normally distributed value α. For int primitives we add αΔint to the existing value. For float primitives we either add αΔfloat or α to the existing value, or we change the number of decimal digits of the current value to a random value in [0,Δdigits]. Here Δint, Δfloat and Δdigits are constants.
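The numeric change mutation can be illustrated by the following sketch. The concrete values of the constants, the uniform choice between the three float variants, and the truncation of the int offset are assumptions for this example; the text above does not fix them.

```python
import random

# Placeholder constants; the actual values of the deltas are assumptions.
DELTA_INT = 20
DELTA_FLOAT = 20.0
DELTA_DIGITS = 10


def mutate_int(value, rng=random):
    """Add alpha * DELTA_INT, with alpha drawn from a standard normal distribution."""
    alpha = rng.gauss(0.0, 1.0)
    # Truncating the offset to an integer is an assumption of this sketch.
    return value + int(alpha * DELTA_INT)


def mutate_float(value, rng=random):
    """Apply one of the three float mutations, chosen uniformly (assumed)."""
    alpha = rng.gauss(0.0, 1.0)
    choice = rng.randrange(3)
    if choice == 0:
        return value + alpha * DELTA_FLOAT
    if choice == 1:
        return value + alpha
    # Change the number of decimal digits to a random value in [0, DELTA_DIGITS].
    return round(value, rng.randint(0, DELTA_DIGITS))
```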

For str primitives, with probability \(\frac {1}{3}\) each, we delete, replace, and insert characters. Each character is deleted or replaced with probability \(\frac {1}{|v(s_{i})|}\). A new character is inserted at a random location with probability σstr. If it is added, we add another character with probability \(\sigma _{\text {str}}^{2}\) and so on, until the i-th character is not added. This is similar to how we add test cases to a test suite. bytes primitives are mutated similar to str primitives. For bool primitives, we simply negate v(si).

For tuples, we replace each of its elements with probability \(\frac {1}{|v(s_{i})|}\). Lists, sets, and dictionaries are mutated similar to how string primitives are mutated. Values for insertion or replacement are taken from {v(sk)∣0 ≤ k < i}. When mutating an entry of a dictionary, with probability \(\frac {1}{2}\) we either replace the key or the value. For method, function, and constructor statements, we change each argument of a parameter with probability \(\frac {1}{p}\), where p denotes the number of formal parameters of the callable used in si. For methods, this also includes the callee. If an argument is changed and the parameter is considered optional (see Section 3.2) then with a certain probability the associated argument is removed, if it was previously set, or set with a value from {v(sk)∣0 ≤ k < i} if it was not set. Otherwise, we either replace the argument with a value from {v(sk)∣0 ≤ k < i}, whose type matches the type of the parameter or use None. If no argument was replaced, we replace the whole statement si by a call to another method, function, or constructor, which is randomly chosen from the test cluster, has the same return type as v(si), and whose parameters can be satisfied with values from {v(sk)∣0 ≤ k < i}.

The Remove Mutation Operation

For a test case \(t = \langle s_{0}, s_{1}, \dots , s_{l-1} \rangle \) of length l, each statement si is deleted with probability \(\frac {1}{l}\). As the value v(si) might be used as a parameter in any of the statements \(s_{i+1},\dots , s_{l-1}\), the test case needs to be repaired in order to remain valid. For each statement \(s_{j}\), i < j < l, if \(s_{j}\) refers to v(si), then this reference is replaced with another value out of the set {v(sk)∣0 ≤ k < j ∧ k≠i}, which has the same type as v(si). If this is not possible, then \(s_{j}\) is deleted as well recursively.
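The repair step of the remove operation can be sketched as follows. The `Statement` class is a hypothetical, heavily simplified model of a test-case statement (a variable name, a type name, and the names of the values it uses), and the sketch picks the first type-compatible replacement instead of a random one to keep the example deterministic.

```python
from dataclasses import dataclass, field


@dataclass
class Statement:
    name: str                                  # variable defined by the statement
    type_name: str                             # type of the value v(s)
    uses: list = field(default_factory=list)   # names of earlier values used as arguments


def remove_statement(test_case, i):
    """Delete statement i and repair, or recursively delete, its dependants."""
    removed = {test_case[i].name: test_case[i].type_name}
    kept = list(test_case[:i])
    for stmt in test_case[i + 1:]:
        new_uses = []
        for ref in stmt.uses:
            if ref in removed:
                # Look for a surviving earlier value of the same type.
                candidates = [s.name for s in kept if s.type_name == removed[ref]]
                if not candidates:
                    new_uses = None
                    break
                ref = candidates[0]
            new_uses.append(ref)
        if new_uses is None:
            # No replacement exists, so this statement is deleted as well.
            removed[stmt.name] = stmt.type_name
        else:
            kept.append(Statement(stmt.name, stmt.type_name, new_uses))
    return kept
```

Tracking the deleted values in `removed` lets a single forward pass handle the recursive case, since any later statement that depends on a deleted statement is checked against the same map.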

3.5 Covering and Tracing Python Code

A Python module contains various control structures, for example, if or while statements, which are guarded by logical predicates. The control structures are represented by conditional jumps at the bytecode level, based on either a unary or binary predicate. We focus on branch coverage in this work, which requires that each of those predicates evaluates to both true and false.

Let B denote the set of branches in the subject under test—two for each conditional jump in the byte code. Everything executable in Python is represented as a code object. For example, an entire module is represented as a code object, a function within that module is represented as another code object. We want to execute all code objects C of the subject under test. Therefore, we keep track of the executed code objects CT as well as the minimum branch distance \(d_{\min \limits }(b, T)\) for each branch b ∈ B, when executing a test suite T. \(B_{T} \subseteq B\) denotes the set of taken branches. Code objects which contain branches do not have to be considered as individual coverage targets, since covering one of their branches also covers the respective code object. Thus, we only consider the set of branch-less code objects \(C_{L} \subseteq C\). We then define the branch coverage cov(T) of a test suite T as \( \text {cov}(T) = \frac {|C_{T} \cap C_{L}| + |B_{T}|}{|C_{L}| + |B|} \).
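As a small worked example of the coverage definition, the following sketch computes cov(T) from sets of identifiers. The function and parameter names are hypothetical, and returning 1.0 for a subject without any coverage goals is an assumption of this sketch.

```python
def branch_coverage(executed_code_objects, branchless_code_objects,
                    taken_branches, all_branches):
    """cov(T) = (|C_T ∩ C_L| + |B_T|) / (|C_L| + |B|), computed on Python sets."""
    covered = len(executed_code_objects & branchless_code_objects) + len(taken_branches)
    total = len(branchless_code_objects) + len(all_branches)
    # A subject without any goals is treated as fully covered (assumption).
    return covered / total if total else 1.0


# One branch-less code object ("module") that was executed, and one of three
# branches taken: (1 + 1) / (1 + 3).
example = branch_coverage({"module", "func"}, {"module"}, {"b1"}, {"b1", "b2", "b3"})
```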

Branch distance is a heuristic to determine how far a predicate is away from evaluating to true or false, respectively. In contrast to previous work on Java, where most predicates at the bytecode level operate only on Boolean or numeric values, in our case the operands of a predicate can be any Python object. Thus, as noted by Arcuri (2013), we have to define our branch distance in such a way that it can handle arbitrary Python objects.

Let \(\mathbb {O}\) be the set of possible Python objects and let Θ := {≡,≢,<,≤,>,≥,∈,∉,=,≠} be the set of binary comparison operators (remark: we use ‘≡’, ‘=’, and ‘∈’ for Python’s ==, is, and in keywords, respectively). For each 𝜃 ∈Θ, we define a function \(\delta _{\theta }: \mathbb {O}\times \mathbb {O} \to \mathbb {R}_{0}^{+} \cup \{\infty \}\) that computes the branch distance of the true branch of a predicate of the form a𝜃b, with \(a,b\in \mathbb {O}\) and 𝜃 ∈Θ. By \(\delta _{\bar {\theta }}(a,b)\) we denote the distance of the false branch, where \(\bar {\theta }\) is the complementary operator of 𝜃. Let further k be a positive number, and let lev(x, y) denote the Levenshtein distance (Levenshtein 1966) between two strings x and y. The value of k is used in cases where we know that the distance is not 0, but we cannot compute an actual distance value, for example, when a predicate compares two references for identity, the branch distance of the true branch is either 0, if the references point to the same object, or k, if they do not. While the actual value of k does not matter, we use k = 1.

The predicates is_numeric(z), is_string(z) and is_bytes(z) determine whether the type of their argument z is numeric, a string or a byte array, respectively. The function decode(z) decodes a byte array into a string by decoding every byte into a unique character, for example, by using the encoding ISO-8859-1.
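To illustrate how these building blocks fit together, the following sketch computes a branch distance for the true branch of an equality comparison. It is an assumption-laden illustration, not the definition used by Pynguin: in particular, the fallback value of infinity for incomparable operands and the exact case order are choices made for this example.

```python
import math


def lev(x, y):
    """Levenshtein distance between two strings (dynamic programming)."""
    previous = list(range(len(y) + 1))
    for i, cx in enumerate(x, 1):
        current = [i]
        for j, cy in enumerate(y, 1):
            current.append(min(previous[j] + 1,                 # deletion
                               current[j - 1] + 1,              # insertion
                               previous[j - 1] + (cx != cy)))   # substitution
        previous = current
    return previous[-1]


def is_numeric(z):
    return isinstance(z, (int, float))


def is_string(z):
    return isinstance(z, str)


def is_bytes(z):
    return isinstance(z, (bytes, bytearray))


def decode(z):
    # ISO-8859-1 maps every byte to exactly one character.
    return z.decode("iso-8859-1")


def equality_distance(a, b):
    """Hypothetical distance of the true branch of `a == b`."""
    if a == b:
        return 0.0
    if is_numeric(a) and is_numeric(b):
        return float(abs(a - b))
    if is_string(a) and is_string(b):
        return float(lev(a, b))
    if is_bytes(a) and is_bytes(b):
        return float(lev(decode(a), decode(b)))
    return math.inf  # assumed fallback for operands without a useful distance
```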

Note that every object in Python represents a Boolean value and can therefore be used as a predicate. Classes can define how their instances are coerced into a Boolean value, for example, numbers representing the value zero or empty collections are interpreted as false, whereas non-zero numbers or non-empty collections are interpreted as true. We assign a distance of k to the true branch, if such a unary predicate v represents false. Otherwise, we assign a distance of δF(v) to the false branch, where is_sized(z) is a predicate that determines if its argument z has a size and len(z) is a function that computes the size of its argument z.

Future work shall refine the branch distance for different operators and operand types.

3.6 Fitness Functions

The fitness function required by genetic algorithms is an estimate of how close an individual is towards fulfilling a specific goal. As stated before we optimise our generated test suites towards maximum branch coverage. We define our fitness function with respect to this coverage criterion. Again, we need to distinguish between the fitness function for Whole Suite, which operates on the test-suite level, and the fitness function for DynaMOSA, MIO, and MOSA, which operates on the test-case level.

3.6.1 Test-suite Fitness

The fitness function required by our Whole Suite approach is constructed similar to the one used in EvoSuite (Fraser and Arcuri 2013) by incorporating the branch distance. The fitness function estimates how close a test suite is to covering all branches of the SUT. Thus, every predicate has to be executed at least twice, which we enforce in the same way as existing work (Fraser and Arcuri 2013): the actual branch distance d(b, T) is given by

with \(\nu (x) = \frac {x}{x+1}\) being a normalisation function (Fraser and Arcuri 2013). Note that the requirement of a predicate being executed at least twice is used to avoid a circular behaviour during the search, where the Whole Suite algorithm alternates between covering a branch of a predicate, but in doing so loses coverage on the opposing branch (Fraser and Arcuri 2013).
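The case distinction behind d(b, T) can be sketched as follows. The sketch reflects our reading of the formulation from the cited EvoSuite work together with the description above; the function and parameter names are hypothetical, and the handling of an infinite distance is an assumption.

```python
import math


def normalise(x):
    """The normalisation function nu(x) = x / (x + 1)."""
    if math.isinf(x):
        return 1.0  # assumed limit value for an infinite distance
    return x / (x + 1.0)


def suite_branch_distance(covered, predicate_executions, min_distance):
    """Distance d(b, T) of branch b for a test suite T (hypothetical signature)."""
    if covered:
        return 0.0
    if predicate_executions >= 2:
        # The predicate was executed at least twice, so the minimum
        # branch distance is meaningful and can guide the search.
        return normalise(min_distance)
    return 1.0
```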

Finally, we can define the resulting fitness function f of a test suite T as

3.6.2 Test-case Fitness

The aforementioned fitness function is only applicable for the Whole Suite algorithm, which considers every coverage goal at the same time. DynaMOSA, MIO, and MOSA consider individual coverage goals. Such a goal is to cover either a certain branch-less code object c ∈ CL or a branch b ∈ B. For the former, its fitness is given by

while for the latter it is defined as

where dmin(b, t) is the smallest recorded branch distance of branch b when executing test case t and al(b, t) denotes the approach level. The approach level is the number of control dependencies between the closest executed branch and b (Panichella et al. 2018a).
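A minimal sketch of the two test-case fitness functions is given below. It assumes the common formulation from the many-objective test-generation literature, in which the fitness of a branch is the sum of the approach level and the normalised minimum branch distance, and in which a branch-less code object has fitness 0 if executed and 1 otherwise; all names are hypothetical.

```python
def normalise(x):
    """The normalisation function nu(x) = x / (x + 1)."""
    return x / (x + 1.0)


def code_object_fitness(executed):
    """Fitness of a branch-less code object: 0 when executed, 1 otherwise (assumed)."""
    return 0.0 if executed else 1.0


def branch_fitness(approach_level, min_branch_distance):
    """Fitness of a branch: approach level plus normalised branch distance (assumed)."""
    return approach_level + normalise(min_branch_distance)
```

Since the normalised distance is always below one, a smaller approach level dominates any difference in branch distance, which is why the two values can be combined by simple addition.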

3.7 Assertion Generation

Pynguin aims to generate regression assertions (Xie 2006) within its generated test cases based on the values it observes during test-case execution. We consider the following types as assertable: enum values, builtin types (int, str, bytes, bool, and None), as well as builtin collection types (tuple, list, dict, and set) as long as they only consist of assertable elements. Additionally, Pynguin can generate assertions for floating-point values using an approximate comparison to mitigate the issue of imprecise number representation. For those assertable types, Pynguin can generate the assertions directly by creating an appropriate expected value for the assertion’s comparison.

We call other types non-assertable. This does, however, not mean that Pynguin is not able to generate assertions for these types. It indicates that Pynguin will not try to create a specific object of such a type as an expected value for the assertion’s comparison, but it will aim to check the object’s state by inspecting its public attributes; assertions shall then be generated on the values of these attributes, if possible.

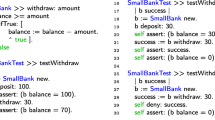

For non-assertable collections, Pynguin creates an assertion on their size by calling the len function on the collection and asserting that size. As a fallback, if none of the aforementioned strategies is successful, Pynguin asserts that an object is not None. Listing 7 shows a simplified example of what test cases for the code snippet in Listing 6 could look like. Please note that the example was not directly generated with Pynguin but manually simplified for demonstration purposes.

Some test cases for the snippet in Listing 6

Additionally, Pynguin creates assertions on raised exceptions. Python does, in contrast to Java, not know about the concept of checked and unchecked, or expected and unexpected exceptions. During Pynguin’s analysis of the module under test we analyse the abstract syntax tree of the module and aim to extract for each function which exceptions it explicitly raises but does not catch or that are specified in the function’s docstring. We consider these exceptions to be expected; all other exceptions that might appear during execution are unexpected. For an expected exception a generated test case will utilise the pytest.raises context from the PyTest framework (Footnote 8), which checks whether an exception of a given type was raised during execution and causes a failure of the test case if not. Pynguin decorates a test case whose execution causes an unexpected exception with the pytest.mark.xfail decorator from the PyTest library; this marks the test case as expected to be failing. Since Pynguin is not able to distinguish whether this failure is expected, it is up to the user of Pynguin to inspect and deal with such a test case. Listing 8 shows two test cases that check for exceptions.

Some test cases handling exceptions for the code snippet in Listing 6

To generate assertions, Pynguin observes certain properties of the module under test. The parts of interest are the set of return values of function or method invocations and constructor calls, the static fields on used modules, and the static fields on seen return types. It does so after the execution of each statement of a test case. First, Pynguin executes every test case twice in random order. We do this to remove trivially flaky assertions, for example, assertions based on memory addresses or random numbers. Only assertions on values that are the same on both executions are kept as the base assertions.
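The filtering of trivially flaky observations can be sketched as follows. The representation of observations as a dictionary from a hypothetical key (here a variable name) to the observed value is an assumption of this example.

```python
def stable_observations(first_run, second_run):
    """Keep only observations whose value is identical in both executions."""
    return {
        key: value
        for key, value in first_run.items()
        if key in second_run and second_run[key] == value
    }


# The textual representation contains a memory address and therefore differs
# between the two runs, whereas the integer value is stable.
run_1 = {"int_0": 42, "repr_0": "<Foo object at 0x7f01>"}
run_2 = {"int_0": 42, "repr_0": "<Foo object at 0x7f02>"}
base = stable_observations(run_1, run_2)
```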

To minimise the set of assertions (Fraser and Zeller 2012) to those that are likely to be relevant to ensure the quality of the current test case, Pynguin utilises a customised fork of MutPy (Footnote 9) to generate mutants of the module under test. A mutant is a variant of the original module under test that differs by few syntactic changes (Acree et al. 1978; DeMillo et al. 1978; Jia and Harman 2011). There exists a wide selection of so-called mutation operators that generate mutants from a given program.

From the previous executions, Pynguin knows the result of each test case. It then executes all test cases against all mutants. If the outcome for one test case differs on a particular mutant between the original module and that mutant, the mutant is said to be killed; otherwise it is classified as survived. Out of the base assertions, only those assertions are considered relevant that have an impact on the result of the test execution. Such an impact means that the test is able to kill the mutant based on the provided assertions. Assertions that have not become relevant throughout all runs are removed from the test case, as they do not seem to help for fault finding.
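The selection of relevant assertions can be illustrated with the following sketch. It models each assertion as a hypothetical predicate over a module-like namespace and each mutant as such a namespace; this is a simplification for illustration and does not use the MutPy API.

```python
from types import SimpleNamespace


def relevant_assertions(assertions, mutants):
    """Return the names of assertions that fail on at least one mutant."""
    relevant = set()
    for name, holds in assertions.items():
        for mutant in mutants:
            if not holds(mutant):
                relevant.add(name)
                break
    return relevant


# Hypothetical mutant of an `add` function: `+` was replaced by `-`.
mutants = [SimpleNamespace(add=lambda a, b: a - b)]
assertions = {
    "add_2_0": lambda module: module.add(2, 0) == 2,  # still holds on the mutant
    "add_2_3": lambda module: module.add(2, 3) == 5,  # fails on the mutant
}
kept = relevant_assertions(assertions, mutants)
```

In this example only the second assertion detects the mutant, so the first one would be removed from the test case.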

3.8 Limitations of Pynguin

At its current state, Pynguin is still a research prototype facing several technical limitations and challenges. First, we noticed that Pynguin does not isolate the test executions properly in all cases. We used Docker containers to prevent Pynguin from causing harm on our systems; however, a proper isolation of file-system access or calls to specific functions, such as sys.exit, needs to be handled differently. One of the reasons Pynguin fails on target modules is that they call sys.exit in their code, which stops the Python interpreter and thus also Pynguin. Future work needs to address those challenges by, for example, providing a virtualised file system or mocking calls to critical functions, such as sys.exit.
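One conceivable way of guarding against sys.exit is sketched below. It relies on the fact that sys.exit raises a SystemExit exception, which can be caught by the caller; the helper `run_guarded` is hypothetical and not part of Pynguin, and the sketch does not cover hard exits such as os._exit.

```python
import sys


def run_guarded(func, *args):
    """Run `func` and report a call to sys.exit instead of terminating."""
    try:
        return ("ok", func(*args))
    except SystemExit as exc:
        # sys.exit(code) raises SystemExit carrying the exit code.
        return ("exit", exc.code)


def exits_with_three():
    sys.exit(3)
```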

The dynamic nature of Python allows developers to change many properties of the program during runtime. One example is a non-uniform object layout; instances of the same class may have different methods or fields during runtime, of which we do not know when we analyse the class before execution. This is due to the fact that attributes can be added, removed, or changed during runtime, special methods like __getattr__ can fake non-existing attributes and many more. The work of Chen et al. (2018), for example, lists some of these dynamic features of the Python language and their impact on fixing bugs.
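The following small example illustrates such a non-uniform object layout; the class `Record` is hypothetical. Two instances of the same class end up with different attributes, and __getattr__ provides methods that no static analysis of the class body would find.

```python
class Record:
    def __getattr__(self, name):
        # Only called when the regular attribute lookup fails.
        if name.startswith("get_"):
            return lambda: name[len("get_"):]
        raise AttributeError(name)


first, second = Record(), Record()
first.extra = 1  # attribute added at runtime to one instance only
```

Here `first.get_colour()` can be called although no such method is defined, and `first` has an attribute `extra` that `second` lacks.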

Besides the dynamic features of the programming language, Pynguin suffers from not supporting all language features. Constructs such as generators, iterators, decorators, and higher-order functions pose open challenges for Pynguin and are subject to future research. Furthermore, Python allows modules to be implemented in C/C++, which are then compiled to native binaries, in order to speed up execution. The native binaries, however, lack large parts of information that the Python source files provide, for example, there is no abstract syntax tree for such a module that could be analysed. Pynguin is not able to generate tests for a binary module because its coverage measurement relies on the available Python interpreter byte code that we instrument to measure coverage. Such an on-the-fly instrumentation is obviously not possible for binaries in native code.

Lastly, we want to note that the type system of Python is challenging. Consider the two types List[Tuple[Any, ...]] and List[Tuple[int, str]]; there is no built-in support in the language to decide at runtime whether one is a subtype of the other. Huge engineering effort has been put into static type checkers, such as mypy (Footnote 10); however, they are not considered feature complete. Additionally, each new version of Python brings new features regarding type information to better support developers. This, however, also causes new challenges for tool developers to support those new features.

4 Empirical Evaluation

We use our Pynguin test-generation framework to empirically study automated unit test generation in Python. A crucial metric to measure the quality of a test suite is the coverage value it achieves; a test suite cannot reveal any faults in parts of the subject under test (SUT) that are not executed at all. Therefore, achieving high coverage is an essential property of a good test suite.

The characteristics of different test-generation approaches have been extensively studied in the context of statically typed languages, for example by Panichella et al. (2018b) or Campos et al. (2018). With this work we aim to determine whether these previous findings on the performance of test generation techniques also generalise to Python with respect to coverage:

Research Question 1 (RQ1)

How do the test-generation approaches compare on Python code?

Our previous work (Lukasczyk et al. 2020) indicated that the availability of type information can be crucial to achieve higher coverage values. Type information, however, is not available for many programs written in dynamically typed languages. To gain information on the influence of type information on the different test-generation approaches, we extend our experiments from our previous study (Lukasczyk et al. 2020). Thus, we investigate the following research question:

Research Question 2 (RQ2)

How does the availability of type information influence test generation?

Coverage is one central building block of a good test suite. Still, a test-generation tool solely focussing on coverage is not able to create a test suite that reveals many types of faults from the subject under test. The effectiveness of the generated assertions is therefore a crucial metric to reveal faults. We aim to investigate the quality of the generated assertions by asking:

Research Question 3 (RQ3)

How effective are the generated assertions at revealing faults?

4.1 Experimental Setup

The following sections describe the setup that we used for our experiments.

4.1.1 Experiment Subjects

For our experiments we created a dataset of Python projects, which allows us to answer our research questions. We only selected projects for the dataset that contain type information in the style of PEP 484. Projects without type information would not allow us to investigate the influence of the type information (see RQ2). Additionally, we focus on projects that use features of the Python programming language that are supported by Pynguin. We do this to avoid skewing the results due to missing support for such features in Pynguin.

We excluded projects with dependencies on native code, such as numpy, from our dataset. We do this for two reasons: first, we want to avoid compiling these dependencies, which would require a C/C++ compilation environment, in order to keep our experiment environment as small as possible. Second, Pynguin’s module analysis relies on the source code and Python’s internally used byte code. Both are not available for native code, which can limit Pynguin’s applicability on such projects. Unfortunately, many projects depend on native-code libraries such as numpy, either directly or transitively. Pynguin can still be used on such projects but its capabilities might be limited by not being able to analyse these native-code dependencies. Fortunately, only few Python libraries are native-code only. Dependencies that only exist in native code, for example, the widely used numpy library, can still be used, although Pynguin might not be able to retrieve all information it could retrieve from a Python source file.

We reuse nine of the ten projects from our previous work (Lukasczyk et al. 2020); the removed project is async_btree, which provides an asynchronous behaviour-tree implementation. The functions in this project are implemented as co-routines, which would require a proper handling of the induced parallelism and special function invocations. Pynguin currently only targets sequential code; it therefore cannot deal with this form of parallelism to avoid any unintended side effects. Thus, for the async_btree project, the test generation fails and only import coverage can be achieved, independent of the used configuration. None of the other used projects relies on co-routines.

We selected two projects from the BugsInPy dataset (Widyasari et al. 2020), a collection of 17 Python projects at the time of writing. The selected projects are those projects in the dataset that provide type annotations. The remaining nine projects have been randomly selected from the ManyTypes4Py dataset (Mir et al. 2021), a dataset of more than 5 200 Python repositories for evaluating machine learning-based type inference. We do not use all projects from ManyTypes4Py for various reasons: first, generating tests for 183 916 source code files from the dataset would cause a tremendous computational effort. Second, many of those projects also have dependencies on native-code libraries, which we explicitly excluded from our selection. Third, the selection criterion for projects in that dataset was that they use the MyPy type checker as a project dependency in some way. The authors state that only 27.6 % of the source-code files have type annotations. We manually inspected the projects from ManyTypes4Py we incorporated into our dataset to ensure that they have type annotations present.

Overall, this results in a set of 163 modules from 20 projects. Table 1 provides a more detailed overview of the projects. The column Project Name gives the name of the project on PyPI; the lines of code were measured using the cloc (Footnote 11) tool. The table furthermore shows the total number of code objects and predicates in a project’s modules, as well as the number of types detected per project. The former two measures give an insight on the project’s complexity: higher numbers indicate larger complexity. The latter provides an overview of how many types Pynguin was able to parse. Please note that Pynguin may not be able to resolve all types as it relies on Python’s inspect library for this task. This library is the standard way of inspecting modules in Python, for example, to extract information about existing classes and functions, or type information. If it fails to resolve a type, that type will not be known to Pynguin’s test cluster, which means that Pynguin will not try to generate an object of such a type. However, since the inspect library is part of the standard library we would consider its quality to be very good; furthermore, if such a type is reached in a transitive dependency, it might be used anyway.

4.1.2 Experiment Settings

We executed Pynguin on each of the constituent modules in sequence to generate test cases. We used Pynguin in version 0.25.2 (Lukasczyk et al. 2022) in a Docker container. The container is used for process isolation and is based on Debian 10 together with Python 3.10.5 (the python:3.10.5-slim-buster image from Docker Hub is the basis of this container).

We ran Pynguin in ten different configurations: the five generation approaches DynaMOSA, MIO, MOSA, Random, and WS (see Section 3.1 for a description of each approach), each with and without incorporated type information. We will refer to a configuration with incorporated type information by adding the suffix TypeHints to its name, for example, DynaMOSA-TypeHints for DynaMOSA with incorporated type information; the suffix NoTypes indicates a configuration that omits type information, for example, Random-NoTypes for feedback-directed random test generation without type information.

We adopt several default parameter values from EvoSuite (Fraser and Arcuri 2013). It has been empirically shown (Arcuri and Fraser 2013) that these default values give reasonably acceptable results. We leave a detailed investigation of the influence of the parameter values as future work. The following values are only set if applicable for a certain configuration. For our experiments, we set the population size to 50. For the MIO algorithm, we cut the search budget to half for its exploration phase and the other half for its exploitation phase. In the exploration phase, we set the number of tests per target to ten, the probability to pick either a random test or from the archive to 50 %, and the number of mutations to one. For the exploitation phase, we set the number of tests per target to one, the probability to pick either a random test or from the archive to zero, and the number of mutations to ten. We use a single-point crossover with the crossover probability set to 0.750. Test cases and test suites are mutated using uniform mutation with a mutation probability of \(\frac {1}{l}\), where l denotes the number of statements contained in the test case. Pynguin uses tournament selection with a default tournament size of 5. The search budget is set to 600 s. This time does not include preprocessing and pre-analysis of the subject. Neither does it include any post-processing, such as assertion generation or writing test cases to a file. The search can stop early if 100 % coverage is achieved before consuming the full search budget. Please note that the stopping condition for the search budget is only checked between algorithm iterations. Thus, it may be possible that some executions of Pynguin slightly exceed the 600 s search budget before they stop the search.

To minimise the influence of randomness we ran Pynguin 30 times for each configuration and module. All experiments were conducted on dedicated compute servers each equipped with an AMD EPYC 7443P CPU and 256 GB RAM, running Debian 10. We assigned each run one CPU core and four gigabytes of RAM.

4.1.3 Evaluation Metrics

We use code coverage to evaluate the performance of the test generation in RQ1 and RQ2. In particular, we measure branch coverage at the level of Python bytecode. Branch coverage is defined as the number of covered, that is, executed branches in the subject under test divided by the total number of branches in the subject under test. Similar to Java bytecode, complex conditions are compiled to nested branches with atomic conditions in Python code. We also keep track of coverage over time to shed light on the speed of convergence of the test-generation process; besides, we note the final overall coverage.

To evaluate the quality of the generated assertions in RQ3 we compute the mutation score. We use a customised version of the MutPy tool (Derezinska and Hałas 2014) to generate the mutants from the original subject under test. MutPy brings a large selection of standard mutation operators but also operators that are specific to Python. A mutant is a variant of the original subject under test obtained by injecting artificial modifications into it. It is referred to as killed if there exists a test that passes on the original subject under test but fails on the mutated subject under test. The mutation score is defined as the number of killed mutants divided by the total number of generated mutants (Jia and Harman 2011).
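The mutation-score computation can be illustrated by the following sketch, which assumes that test verdicts are given as lists of Booleans (True for a passing test) in the same test order for the original subject and for each mutant. The function names are hypothetical.

```python
def is_killed(original_results, mutant_results):
    """A mutant is killed if some test passes on the original but fails on it."""
    return any(
        passed_original and not passed_mutant
        for passed_original, passed_mutant in zip(original_results, mutant_results)
    )


def mutation_score(original_results, results_per_mutant):
    """Number of killed mutants divided by the total number of mutants."""
    if not results_per_mutant:
        raise ValueError("at least one mutant is required")
    killed = sum(is_killed(original_results, r) for r in results_per_mutant)
    return killed / len(results_per_mutant)


# Two tests and four mutants; the first and third mutant are killed.
score = mutation_score(
    [True, True],
    [[True, False], [True, True], [False, True], [True, True]],
)
```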

We statistically analyse the results to see whether the differences between two different algorithms or configurations are statistically significant or not. We exclude modules from the further analysis for which Pynguin failed to generate tests 30 times in all respective configurations to make the configurations comparable. We use the non-parametric Mann-Whitney U-test (Mann and Whitney 1947) with a p-value threshold of 0.0500 for this. A p-value below this threshold indicates that the null hypothesis can be rejected in favour of the alternative hypothesis. In terms of coverage, the null hypothesis states that none of the compared algorithms reaches significantly higher coverage; the alternative hypothesis states that one of the algorithms reaches significantly higher coverage values. We use the Vargha and Delaney effect size \(\hat {A}_{12}\) (Vargha and Delaney 2000) in addition to testing for the null hypothesis. The effect size states the magnitude of the difference between the coverage values achieved by two different configurations. For equivalent coverage the Vargha-Delaney statistic is \(\hat {A}_{12} = 0.500\). When comparing two configurations C1 and C2 in terms of coverage, an effect size of \(\hat {A}_{12} > 0.500\) indicates that configuration C1 yields higher coverage than C2; vice versa for \(\hat {A}_{12} < 0.500\). Furthermore, we use Pearson’s correlation coefficient r (Pearson 1895) to measure the linear correlation between two sets of data. We call a value of r = ± 1 a perfect correlation, a value of r between ± 0.500 and ± 1 a strong correlation, a value of r between ± 0.300 and ± 0.499 a medium correlation, and a value of r between ± 0.100 and ± 0.299 a small or weak correlation.
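The Vargha and Delaney effect size can be computed directly from its definition, as the following sketch shows: it is the probability that a value drawn from the first sample is larger than a value drawn from the second sample, with ties counted half. The function name `a12` is chosen for this example.

```python
def a12(xs, ys):
    """Vargha-Delaney effect size of sample xs compared with sample ys."""
    if not xs or not ys:
        raise ValueError("both samples must be non-empty")
    greater = sum(1 for x in xs for y in ys if x > y)
    equal = sum(1 for x in xs for y in ys if x == y)
    return (greater + 0.5 * equal) / (len(xs) * len(ys))
```

For two identical samples the function yields 0.5, and it yields 1.0 if every value of the first sample exceeds every value of the second one.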

Finally, please note that we report all numbers during our experiments rounded to three significant digits, except if they are countable, such as, for example, lines of code in a module.

4.1.4 Data Availability

We make all used projects and tools as well as the experiment and data-analysis infrastructure together with the raw data available on Zenodo (Lukasczyk 2022). This shall allow further analysis and replication of our results.

4.2 Threats to Validity

As usual, our experiments are subject to a number of threats to validity.

4.2.1 Internal Validity

The standard coverage tool for Python is Coverage.py, which offers the capability to measure both line and branch coverage. However, it measures branch coverage by comparing the transitions between source lines that have occurred with those that are possible. Measuring branch coverage using this technique is possibly imprecise. Not every branching statement necessarily leads to a source line transition; for example, x = 0 if y > 42 else 1337 fits on one line but contains two branches, which are not considered by Coverage.py. We thus implemented our own coverage measurement based on bytecode instrumentation. We try to mitigate possible errors in our implementation by providing sufficient unit tests for it.
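The one-line example can be inspected with the dis module of the standard library. The sketch below is an illustration only and not Pynguin's instrumentation; it merely shows that the compiled bytecode of the single source line contains a conditional jump and that both outcomes are reachable.

```python
import dis

# The conditional expression from the text, compiled as a one-line module.
SOURCE = "x = 0 if y > 42 else 1337"
code = compile(SOURCE, "<example>", "exec")

# Collect conditional jump instructions; opcode names differ between CPython
# versions (e.g. POP_JUMP_IF_FALSE), so we match on name fragments only.
conditional_jumps = [
    instruction.opname
    for instruction in dis.get_instructions(code)
    if "JUMP" in instruction.opname and "_IF_" in instruction.opname
]

# Both outcomes of the condition are reachable although the source has one line.
results = {}
for y_value in (100, 1):
    namespace = {"y": y_value}
    exec(code, namespace)
    results[y_value] = namespace["x"]
```

A measurement that only observes transitions between source lines cannot distinguish the two executions, whereas a measurement on the bytecode level sees the conditional jump.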

Similar threats are introduced by the mutation-score computation. A selection of mutation-testing tools for Python exists; however, each has individual drawbacks that make it unsuitable for our purposes. Therefore, we implemented the computation of mutation scores ourselves. However, the mutation of the subject under test itself is done using a customised version of the MutPy mutation testing tool (Derezinska and Hałas 2014) to better control this threat.

A further threat to the internal validity comes from potentially flaky tests; a test is flaky when its verdict changes non-deterministically. Flakiness is reported to be a problem not only for Python test suites (Gruber et al. 2021) but also for automatically generated tests in general (Fan 2019; Parry et al. 2022).

The Python inspection that we use to generate the test cluster (see Section 3.3) cannot handle types provided by native dependencies. We mitigate this threat by excluding projects that have dependencies with native code. This, however, does not exclude any functions from the Python standard library, which is partially also implemented in C, and which could influence our results.

4.2.2 External Validity

We used 163 modules from different Python projects for our experiments. It is conceivable that the exclusion of projects without type annotations or with native-code libraries leads to a selection of smaller projects, and the results may thus not generalise to other Python projects. Furthermore, to make the different configurations comparable, we omitted from the final evaluation all modules for which Pynguin was not able to generate test cases for each configuration and each of the 30 iterations. This leads to 134 modules for RQ1 and RQ2 and 105 modules for RQ3. The number of used modules for RQ3 is lower because we exclude modules from the analysis that did not yield 30 results. Reasons for such failures are, for example, flaky tests. However, besides the listed constraints, no others were applied during this selection.

4.2.3 Construct Validity

Methods called with wrong input types still may cover parts of the code before possibly raising exceptions due to the invalid inputs. We conservatively included all coverage in our analysis, which may improve coverage for configurations that ignore type information. A configuration that does not use type information will randomly pick types to generate argument values, although these types might be wrong. In contrast, configurations including type information will attempt to generate the correct type; they will only use a random type with small probability. Thus, this conservative inclusion might reduce the effect we observed. It does, however, not affect our general conclusions.

Additionally, we have not applied any parameter tuning to the search parameters but use default values, which have been shown to be reasonable choices in practice (Arcuri and Fraser 2013).

4.3 RQ1: Comparison of the Test-Generation Approaches

The violin plots in Fig. 3 show the coverage distributions for each algorithm. We use all algorithms here in a configuration that incorporates type information. We note coverage values over the full range of 0 % to 100 %. Notably, all violins show a higher coverage density above 20 %, and very few modules result in lower coverage; this is caused by what we call import coverage. Import coverage is achieved by importing the module; when Python imports a module it executes all statements at module level, such as imports, or module-level variables. It also executes all function definitions (the def statement but not the function’s body or any closures) as well as class definitions and their respective method definitions. Due to the structure of the Python bytecode these definitions are also (branchless) coverage targets that get executed anyway. Thus, they count towards coverage of a module. As a consequence coverage cannot drop below import coverage.
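The effect of import coverage can be reproduced with a small sketch. It assumes a hypothetical module source and executes it the way an import would; the names example_module, answer, and events are illustrative only.

```python
import types

# Hypothetical module source: one module-level statement and one function.
MODULE_SOURCE = '''
events.append("module-level statement")

def answer():
    events.append("function body")
    return 42
'''

events = []
module = types.ModuleType("example_module")  # hypothetical module name
module.events = events

# Executing the module body is what an import does under the hood.
exec(compile(MODULE_SOURCE, "<example_module>", "exec"), module.__dict__)
events_after_import = list(events)

# Only an explicit call executes the function body.
module.answer()
events_after_call = list(events)
```

After the simulated import, the def statement has been executed (the function exists) but its body has not; this corresponds to the coverage that is reached without any generated test calling the function.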

The distributions for the different configurations look very similar, indicating very similar performance characteristics of the algorithms; the notable exception is the Random algorithm, which performs worse than the evolutionary algorithms.

Although the violin plot reports the median values (indicated by a white dot), we additionally report the median and mean coverage values for each configuration in Table 2. The table shows the almost equal performance of the evolutionary algorithms DynaMOSA, MIO, and MOSA. Our random algorithm achieves the lowest coverage values in this experiment.

Since those coverage values are so close together, we computed \(\hat {A}_{12}\) statistics for each pair of DynaMOSA and one of the other algorithms on the coverage of all modules. All effects are negligible but in favour of DynaMOSA (DynaMOSA and MIO: \(\hat {A}_{12} = 0.502\); DynaMOSA and MOSA: \(\hat {A}_{12} = 0.502\); DynaMOSA and Random: \(\hat {A}_{12} = 0.541\); DynaMOSA and WS: \(\hat {A}_{12} = 0.508\)). The effects are not significant except for DynaMOSA and Random with \(p = 1.08 \times 10^{-10}\). We also compared the effects on coverage on a module level. The following numbers report the count of modules where an algorithm performed significantly better or worse than DynaMOSA. MIO performed better than DynaMOSA on 3 modules but worse on 13. MOSA performed better than DynaMOSA on 1 module but worse on 5. Random performed better than DynaMOSA on 0 modules but worse on 52. Whole Suite performed better than DynaMOSA on 1 module but worse on 25. We see that although modules exist where other algorithms outperform DynaMOSA significantly, overall DynaMOSA performs better than the other algorithms.

We now show the development of the coverage over the full generation time of 600 s. The line plot in Fig. 4 reports the mean coverage values per configuration measured in one-second intervals. We see that during the first minute, MIO yields the highest coverage values before DynaMOSA is able to overtake MIO, while MOSA can come close. However, the performance of MIO decreases over the rest of the exploration phase. From the plot we can see that MOSA comes close to MIO at around 300 s. At this point, MIO switches over to its exploitation phase, which again seems to be beneficial compared to MOSA. Over the full generation time, Whole Suite yields smaller coverage values than the previous three, as does Random.

We hypothesize that the achieved coverage is influenced by properties of the module under test. Our first hypothesis is that there exists some correlation between the achieved coverage on a module and the number of lines of code in that module. In order to study this hypothesis, we use our best-performing algorithm, DynaMOSA, and compare the mean coverage values per module with the lines of code in the module. The scatter plot in Fig. 5 shows the result; we fitted a linear regression line in red colour into this plot. The data shows a weak negative correlation (Pearson r = −0.211 with a p-value of 0.0143), which indicates that there is at least some support for this hypothesis: it is slightly easier to achieve higher coverage values on modules with fewer lines of code. However, lines of code is often not considered a good metric to estimate code complexity. Therefore, we similarly study the correlation of mean coverage values per module with the mean McCabe cyclomatic complexity (McCabe 1976) of that module. The scatter plot in Fig. 6 shows the results; again, we fitted a linear regression line in red colour into the plot. The data shows a medium negative correlation (Pearson r = −0.365 with a p-value of \(3.00 \times 10^{-5}\)), supporting this hypothesis: modules with higher mean McCabe cyclomatic complexity tend to be more complicated to cover. However, since this correlation still is not strong, other properties of a module appear to influence the achieved coverage. Possible properties might be the quality of available type information or the ability of the test generator to instantiate objects of requested types properly. Also finding appropriate input values for function parameters might influence the achievable coverage. We study the influence of type information in RQ2, and leave exploring further factors as future work.
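The correlation analysis can be sketched in a few lines. The following code is a minimal illustration under our own assumptions, not the data-analysis infrastructure of the study: the helper names pearson_r and correlation_strength are hypothetical, the thresholds follow the categories defined in Section 4.1, and the p-value computation is omitted.

```python
import math


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equally long samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length with >= 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return covariance / (spread_x * spread_y)


def correlation_strength(r):
    """Map r to the categories used in the text, after rounding to 3 decimals."""
    magnitude = round(abs(r), 3)
    if magnitude == 1.0:
        return "perfect"
    if magnitude >= 0.5:
        return "strong"
    if magnitude >= 0.3:
        return "medium"
    if magnitude >= 0.1:
        return "weak"
    return "below the named categories"  # assumption: the text gives no label
```

Applied to the reported coefficients, correlation_strength(-0.211) falls into the weak category and correlation_strength(-0.365) into the medium category, in line with the classification used above.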

Discussion:

Overall, the results we achieved from our experiments indicate that automated test generation for Python is feasible. They also show that there exists a ranking between the performance of the studied algorithms, which is in line with previous research on test generation in Java (Campos et al. 2018).

The results, however, do not show large differences between the algorithms; only DynaMOSA compared to Random yielded a significant, although negligible, effect. One reason why the algorithms perform so similarly lies in the subjects that we use for our study. We noticed subjects that are trivial to cover for all algorithms. Listing 9 shows such an example taken from the module flutes.math from the flutes project (Footnote 12).

Almost every pair of two integers is a valid input for this function (only b = 0 will cause a ZeroDivisionError). Since this function is the only function in that particular module, achieving full coverage on this module is also trivially possible for all test-generation algorithms, especially since they know from the type hints that they shall provide integer values as parameters.

Another category of modules is hard to cover, independent of the used algorithm, due to technical limitations of Pynguin (see Section 3.8). Consider the minimised code from the flutes project (Footnote 13) in Listing 10.

This function is actually both a context manager (due to its decorator) and a generator (indicated by the yield statement). Pynguin currently supports neither; the context manager would require a special syntax construct to be called, such as a with block. Calling a function with a yield statement in it does not actually execute its code. It generates an iterator object pointing to that function. The code will only be executed when iterating over the generator in a loop or by explicitly calling next on the object. A test case Pynguin can come up with is similar to the one shown in Listing 11.

This test case would only result in a coverage of 50 %, which is only import coverage resulting from executing the import and def statements during module loading. As stated above, the body of the function will not even be executed.
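The call semantics described above can be illustrated with a short sketch. It is not the code from Listing 10; the functions managed_resource and countdown are hypothetical stand-ins that only demonstrate when the body of such a function is executed.

```python
from contextlib import contextmanager

events = []


@contextmanager
def managed_resource():  # hypothetical name, not the function from Listing 10
    events.append("setup")
    yield "resource"
    events.append("teardown")


# A plain call only builds the context-manager object; the body has not run.
manager = managed_resource()
events_after_call = list(events)

# Entering a with block runs the body up to yield, leaving it runs the rest.
with managed_resource() as value:
    events_inside_with = list(events)
events_after_with = list(events)


def countdown():  # hypothetical plain generator function
    events.append("generator body")
    yield 1


generator = countdown()  # creates a generator object, executes nothing
events_before_next = list(events)
first_item = next(generator)  # now the body runs up to the first yield
```

A generated test that only performs the plain call therefore covers nothing beyond the definitions, which is why a with block or an explicit next call would be needed to reach the function body.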

Figures 5 and 6 indicate that our subject modules are not very complex. Previous research has shown that algorithms like DynaMOSA or MIO are more beneficial for a large number of goals, that is, a large number of branches (Panichella et al. 2015; Arcuri 2018). For modules with only a few branches they cannot show their full potential, which influences our results. Having smaller modules is a property of our evaluation set. On average, our modules consist of 79.2 lines of code; Mir et al. (2021) report an average module size of 120 lines of code. Future work shall repeat our evaluation using more complex subject systems in order to evaluate whether the assumed improvements can be achieved there.

4.4 RQ2: Influence of Type Information

We hypothesized in the previous section that type information might have an impact on the achieved coverage. We compare the configuration with type information and the configuration without type information for each algorithm. Our comparison is done on the module level.

Listing 11: A test case from Pynguin for the function in Listing 10

We plot the effect-size distributions per project for DynaMOSA, our best-performing algorithm from RQ1, in Fig. 7. We aggregate the data for a better overview here; we will also show the data on a module level afterwards. Each data point that is used for the plot is the effect size on one module of that project. The results show that the median effect is always greater than or equal to 0.500. This entails that available type information is beneficial in the mean for four out of our twenty projects.

We do not only report the data on a project level as we did using Fig. 7 but also per module. We provide a table that reports the effect size per module in Table 3 in the Appendix. A value larger than 0.500 for a module indicates that the configuration with type information yielded higher coverage results than the configuration without type information. A value smaller than 0.500 indicates the opposite. We use a bold font to mark a table entry where the effect size is significant with respect to a p-value < 0.05.

Discussion:

Our results show that type information can be beneficial to achieve higher coverage, although this largely depends on the module under test. From Table 3 we also note that there are cases where the presence of type information has a negative impact compared to its absence.

A reason for this is that the type information is annotated manually by the developers of the projects. Although powerful static type checking tools, such as MyPy, are available, they are often not used. Rak-amnouykit et al. (2020) conducted a study using more than 2 600 repositories consisting of more than 173 000 files that have partial type annotations available. They report that often type information is only partially available. As a consequence, large parts of the code still do not have any type information at all. They furthermore report that the type annotations are often wrong, too: they were able to correctly type check only about 11.9 % of the repositories using the MyPy tool. Such wrong or incomplete type information can mislead Pynguin as to which parameter types to use. For wrong type information there is still a small probability that Pynguin disregards the type hint and uses a random type. For missing type information, Pynguin has to use a random type in any case.