Abstract

Code reviewing is a widespread practice used by software engineers to maintain high code quality. To date, the knowledge on the effect of code review on source code is still limited. Some studies have addressed this problem by classifying the types of changes that take place during the review process (a.k.a. review changes), as this strategy can, for example, pinpoint the immediate effect of reviews on code. Nevertheless, this classification (1) is not scalable, as it was conducted manually, and (2) was not assessed in terms of how meaningful the provided information is for practitioners. This paper aims at addressing these limitations: First, we investigate to what extent a machine learning-based technique can automatically classify review changes. Then, we evaluate the relevance of information on review change types and its potential usefulness, by conducting (1) semi-structured interviews with 12 developers and (2) a qualitative study with 17 developers, who are asked to assess reports on the review changes of their project. Key results of the study show that not only it is possible to automatically classify code review changes, but this information is also perceived by practitioners as valuable to improve the code review process. Data and materials: https://doi.org/10.5281/zenodo.5592254

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Contemporary peer code review is a lightweight and informal process in which developers (a.k.a., the reviewers) judge the quality of a newly proposed set of code changes, using a dedicated software tool (Baum et al. 2016).

Despite the substantial practical adoption of code review (Rigby and Bird 2013), the knowledge on its effects—and how to measure them—is still limited. For instance, how can benefits such as knowledge transfer among developers be quantitatively assessed? Past research (e.g., Bacchelli and Bird 2013; Czerwonka et al. 2015) found a strong mismatch between what developers and managers think to obtain from code review (i.e., catching important defects early) vs. what they actually obtain (i.e., catching very few, localized, low-level defects).

This mismatch is not surprising: Assessing the effect of the code review process is a complex task and existing evaluation tools provide basic information, at most. Nevertheless, a number of empirical software engineering studies (e.g., Kemerer and Paulk 2009; Mäntylä and Lassenius 2009; Bacchelli and Bird 2013; Beller et al.2014; Thongtanunam et al. 2015a; Morales et al. 2015; Thongtanunam et al. 2016; McIntosh et al. 2016) did manage to devise approaches able to assess certain aspects of the effect of code review and have provided valuable information to researchers on the topic.

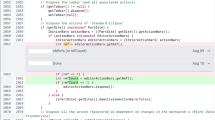

In particular, three studies (Mäntylä and Lassenius 2009; Bacchelli and Bird 2013; Beller et al. 2014) looked at the code review process from a new perspective that investigates review changes: the changes to the code that are implemented at review time (Fig. 1 shows an example of these review-induced changes).

Example of changes induced by a code review in JGit. For instance, at line 193 the reviewer asks to substitute the magic number “200” with a variable with a meaningful name. (https://git.eclipse.org/r/c/jgit/jgit/+/111364/20..21/org.eclipse.jgit.lfs/src/org/eclipse/jgit/lfs/LfsPrePushHook.java)

Under this perspective, these studies could (1) determine that most code reviews do not affect the functionalities of source code (Mäntylä and Lassenius 2009; Bacchelli and Bird 2013; Beller et al. 2014), (2) find the aforementioned mismatch between expectations and outcome in code review (Bacchelli and Bird 2013), and (3) lay out a number of other, previously undocumented, effects of code review (Bacchelli and Bird 2013). The key idea behind this perspective is an approach that evaluates the code review process by classifying the types of review changes. This approach answers questions such as: “Are most review changes fixing functional defects or improving other aspects of the code?” “What kind of defects are found most frequently during review?”

Two critical advantages of studying review changes are: (1) It pinpoints exactly the immediate effect of code review on code and (2) it is not influenced by the external confounding factors (e.g., change-proneness of artifacts or adjustments in the development process) affecting other long-term metrics, such as defect proneness (McIntosh et al. 2016).

In recent years, researchers focused on devising tools that leverage data from software development practices (Ball et al. 1997; Wolf et al. 2009; Bettenburg et al. 2008). In particular, researchers at Microsoft developed a tool, CodeFlow Analytics (CFA), to collect and display to developers information about the code review process (Bird et al. 2015). CFA was created to answer development teams’ requests to easily analyze and monitor their code review data. Bird et al. reported how CFA (1) successfully enabled development teams at Microsoft to monitor themselves and improve their processes and (2) having code review data at disposal stimulated further research to improve the code review process.

Based on the good welcome received by CFA, we believe that integrating information on review changes in a similar tool might be the next natural step to allow development teams to gain further insights in their code review process. This information could drive the team to reflect on the code review practices they have in place. For instance, if the majority of changes are documentation changes could not these be catched earlier in the development phase (e.g., using static analysis tools or improving the style guidelines of the project). This could allow developers to allocate more time in looking for functional defects, critical for the success of the project.

Nevertheless, the application of this approach to help developers to assess their review process is currently limited by two factors. First, the review-induced changes have to be manually classified: This aspect clearly impacts the scalability of the approach, as it can not easily be applied at large. Second, the target of such effort in classifying review changes has mostly been the research community and it is still unclear whether and how its output is meaningful to practitioners.

In this paper, we present a two-step empirical investigation we conducted to address these limitations. First, we investigate whether review changes can be automatically classified using a supervised machine learning approach; this with the goal of solving the scalability issue. To this end, (1) we manually classify 1,504 review changes using Beller et al.’s taxonomy (Beller et al. 2014); (2) we select 30 features based on the analysis of prior work (Buse and Weimer 2010; Fluri et al. 2007) as well as the insight acquired constructing the dataset; (3) we evaluate three machine learning algorithms to automatically classify review changes at two different levels of granularity. Our results show that the evaluated solution classifies code changes with an AUC-ROC beyond 0.91. This finding provides evidence that machine learning can be effectively employed to classify review-induced changes.

In the second part of our investigation, we focus on collecting feedback from developers. Our goal is two-fold: Analyzing developers’ perception concerning the classification of review changes as mean to support review practices as well as evaluating our approach in a real-world scenario. To this aim, (1) we interviewed 12 developers with experience in code review, and (2) we sent reports generated with our approach to 20 open-source projects, receiving feedback from 17 developers. Practitioners positively assessed the novelty of the information on review changes and gave an initial indication of the potential usefulness of this information, particularly for project managers interested in improving the code review process.

2 Background and related work

The topic of code inspection (Fagan 2002) has been largely explored in the past (Baum et al. 2017; Wiegers 2002; Porter et al. 1996) and, in the last decade, both research community and practitioners switched to the more practical concept of modern code review (Bacchelli and Bird 2013). In this respect, researchers have been investigating methodologies and techniques to provide support for developers during the code review process. For instance, researchers have devised automated solutions to identify proper reviewers for a new code change (Ouni et al. 2016; Kovalenko et al. 2020; Thongtanunam et al. 2015b; Zanjani et al. 2015), as well as investigated both the factors making the process more/less effective (Baum et al. 2019; Baysal et al. 2016; Bosu and Carver 2014; Kononenko et al. 2015; McIntosh et al. 2014; Ram et al. 2018; Thongtanunam et al. 2016; Spadini et al. 2018) and the actual influence of code review on the resulting software quality (Abelein and Paech 2015; Porter et al. 1998; Rigby and Bird 2013; Rigby et al. 2014; Sauer et al. 2000; McIntosh et al. 2016; Morales et al. 2015; Kemerer and Paulk 2009; Spadini et al. 2019; Vassallo et al. 2019a).

In the context of our work, we are mainly interested in review-induced changes (or simply review changes). These are the changes to which the code undergoes as result of the review process: e.g., as consequence of a reviewer’s comment (as shown in Fig. 1). However, changes to the code can also be triggered by other factors: e.g., a spontaneous action from the code author or informal discussions among developers. In this paper, our main goal is to overcome the current limitations to classify review changes and evaluating how this data can be used to support developers during code review. For this reason, in this section we focus on the discussion of previous papers that previously faced the problem of classifying review changes. Surveying the literature, we found three main works.

In the first place, Bacchelli and Bird (2013) analyzed the code review process in place at Microsoft, revealing a number of underestimated goals and benefits: among them, they pointed out that knowledge transfer and awareness of design solutions among team members are key aspects of modern code review. Bacchelli and Bird (2013) also highlighted that the main challenge for practitioners while performing code review is the comprehension of the newly committed code changes. This finding motivates our work, as it suggests the need for solutions able to help in classifying and showing to reviewers which changes have been performed.

Other papers aimed at characterizing which defects can be identified during code review. In this direction, Mäntylä and Lassenius (2009) were the first to empirically explore the types of defects discovered in code review sessions as well as their distribution in both industrial and academy contexts. To this aim, the authors assessed the outcome of over 100 code review sessions and defined a taxonomy of code changes. Their main finding reports that 75% of the inspected reviews identify defects that do not affect the external behavior of source code.

A further step toward this research direction has been made by Beller et al. (2014), who further explored what changes are performed in code reviews of open-source systems, with the aim of investigating what the real benefits provided by modern code review are. They considered over 1,400 code changes, manually labeling them using the taxonomy of Mäntylä and Lassenius (2009), whenever possible. As a result, they came up with an updated taxonomy of code changes done in code review, which is shown in Fig. 2.

Taxonomy (Beller et al. 2014) for review-induced changes with their categories/types

Taxonomy of review changes.

The taxonomy of Beller et al. presents two main categories, namely (1) Evolvability changes, which are related to all the modifications that do not have an effect of the functionalities implemented in the source code (e.g., those targeting documentation and refactoring) and (2) Functional changes, which are those that change the structure of the code, thus impacting on the inner-work of a program. These two main categories were then refined to report the specific types of evolvability and functional changes. A description of each type of change, together with an example from a real-world scenario, is available in Sections 2.1 and 2.2. Moreover, each type can be further divided into subtypes. Two tables offering a complete description of changes types and subtypes, respectively, can be found in our replication package.Footnote 1

Our work is clearly inspired by the paper by Beller et al. (2014) and aims at studying deeper the value of their findings: We investigate a technique to overcome the scalability limitations of their classification approach and assess with developers the value such information on review changes.

2.1 Evolvability changes

Evolvability changes can be defined as maintainability changes, which, therefore, do not have an impact on the functionalities of a system. Based on the taxonomy by Beller et al. (2014), they can be divided in five types:

- Textual: :

-

Textual changes concern the use of textual information in the code. In particular, they impact logging messages, adding or updating comments, or renaming variables to be more consistent with the environment (Figs. 3 and 4).

- Supported by language: :

-

Supported by language changes impact programming language-specific features used to convey information: e.g., the use of the final or static keywords in Java.

- Visual representation: :

-

Visual representation changes are related to the style of the code: e.g., they concern the wrong use of indentation in the code or the presence of unnecessary new lines and whitespaces.

- Organization: :

-

Organization changes involve a re-arrangement of the code. For instance, an organizational change can remove unused portions of code or move a method into a different class to improve the structure of the project.

- Solution approach: :

-

Solution approach changes modify the way in which a system’s functionality is implemented, without modifying the functionality itself: e.g., the removal of magic numbers in favor of predefined constants (Figs. 5, 6, 7, 8, 9, 10, 11, 12 and 13).

Example of Textual change, where a comment was rephrased for consistency with similar comments. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/57530/3..4/src/main/java/com/couchbase/client/java/bucket/AsyncBucketManager.java#b258

Example of Supported by language change, where the class that extends FtsServerOverloadExcpetion has been changed from CouchbaseException to TemporaryFailureException. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/99952/1..2/src/main/java/com/couchbase/client/java/error/FtsServerOverloadException.java

Example of Visual representation change where a reviewer requested to change the code indentation. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/100606/2..3/src/main/java/com/couchbase/client/java/transcoder/crypto/JsonCryptoTranscoder.java#b138online

Example of Organization change where a portion of dead code has been removed. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/19606/2/src/main/java/com/couchbase/client/ViewConnection.java#b246online

Example of Solution approach change. In this example the assert instructions has been changed for a more clear alternative without altering its functionality. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/18094/4..5/src/test/java/com/couchbase/client/ViewTest.java#b286online

Example of Resource change where the data buffer is closed in order to avoid unexpected results in the computation. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/62350/1..2/src/integration/java/com/couchbase/client/java/util/CouchbaseTestContext.java#b344

Example of Check change: A check is introduced to enforce that the object side is not null. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/102501/1..2/src/main/java/com/couchbase/client/java/query/dsl/path/HashJoinHintElement.java#b35

Example of Interface change that solve an issue with the incorrect order of parameters. The real-world code review in which this change took place is available https://git.eclipse.org/r/c/jgit/jgit/+/44126/2..3/org.eclipse.jgit/src/org/eclipse/jgit/util/TemporaryBuffer.java#543

Example of Logic change. In the given example, a check on the response status needs to be moved to allow a correct release of the content buffer. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/71319/1..2/src/main/java/com/couchbase/client/java/bucket/DefaultAsyncBucketManager.java#b189

Example of Support change, where new modules are added to the configuration settings to improve the security and stability of the system. The real-world code review in which this change took place is available https://android-review.googlesource.com/c/platform/art/+/839418/2..3/libartbase/base/common_art_test.cc#b336

Example of Larger defect change where an entire missing piece of code was added to the method. The real-world code review in which this change took place is available http://review.couchbase.org/c/couchbase-java-client/+/89902/3..4/src/main/java/com/couchbase/client/java/document/json/JsonObject.java#b262

2.2 Functional changes

Functional changes are modifications that impact the functionality of a system to ensure the correct flow of the program. Based on the taxonomy proposed by Beller et al. (2014), functional changes can be divided in six types:

- Resource::

-

Resource changes impact how the data are handled. They typically happen to solve issues in how the code manages data.

- Check::

-

Check changes are introduced to verify conditions of variables and functions left previously unchecked (possibly leading to run-time errors).

- Interface::

-

Interface changes impact the way the code interacts with other parts of the system: e.g., the use of incorrect parameters in a function call.

- Logic::

-

Logic changes solve issues in the logic of the system: for instance, when an algorithm produces incorrect results.

- Support::

-

Support changes solve issues related to support libraries (or systems) and their configuration: e.g., defects caused by the use of a wrong version of an external library.

- Larger defect::

-

Larger defects changes are modifications that address major issues in the code, often spanning across several classes: for instance, when a class is left partly implemented.

3 Research questions

The goal of this study is to understand (1) whether it is possible to devise an approach to automatically classify code review changes and (2) to what extent developers perceive information about review changes as useful (e.g., because these changes raise awareness about the outcome of the code review process in their projects). The perspective is of both researchers and practitioners. The first are interested in exploiting this new source of information to build analytics tools and devise new strategies to further support developers, while the latter aim to use this information to improve their processes and the overall quality of their software systems.

We start our investigation by focusing on whether a machine learning-based solution can automatically classify code review changes into the taxonomy proposed in prior work (Beller et al. 2014; Mäntylä and Lassenius 2009). A positive outcome would give us confidence that it is possible to overcome the scalability issues of this approach (Bacchelli and Bird 2013; Beller et al. 2014; Mäntylä and Lassenius 2009). Hence, we ask:

To the best of our knowledge, no previous attempts have been made to devise approaches to automatically classify review changes. For this reason, in RQ1 we could not compare our results with other existing approaches. In our investigation, we evaluated our approach in terms of AUC-ROC, using as baseline a random classifier. Having achieved promising results with the automated approach, we proceed to evaluate how information on review changes can be relevant for practitioners. To gather in-depth knowledge of the developers’ perception of the meaningfulness of our approach as well as the usefulness of classifying review changes for review practices, we devise a two-step investigation. First, we conduct semi-structured interviews with developers to gather their opinions on data on types of review changes as a mean to support code review practices (e.g., as a way to measure the effects of code review) and on the relevance of the taxonomy used for the classification. We ask:

Informed by the feedback gathered during the interviews, we devise reports based on review change data and investigate how experienced developers from open-source software projects perceive these reports. Therefore, our last research question is:

Our study features a mixed-method research approach (Johnson and Onwuegbuzie 2004) that includes (1) quantitative analysis on the performance of the investigated automated approach (Domingos 2012), (2) semi-structured interviews (Longhurst 2003), and (3) customized surveys with developers from open-source software systems (Fink 2003). The next sections describe methodology and results for each research question.

4 R Q 1: Classifying types of review changes

The first necessary step to use information about the types of review changes as a mean to support practitioners is to overcome the existing scalability issues (i.e., review changes have to be manually classified (Mäntylä and Lassenius 2009; Bacchelli and Bird 2013; Beller et al. 2014)). Here, we investigate an approach to automatically classify review changes and we evaluate its accuracy. In this study, we limit ourselves to the case of code changes in Java source code.

4.1 The evaluated approach

We design the proposed review changes classifier as a machine learning-based solution available in our replication package.Footnote 2 This choice is motivated by three main factors: (1) the amount of code review data available (e.g., in projects using GerritFootnote 3) that make supervised approaches practically suitable, (2) the restrictions of heuristics-based techniques, which may lead to approaches limited to certain programming languages, and (3) the ability of machine learning classifiers to identify the most suitable features to use and learn from previous changes applied by developers. To investigate the accuracy of a machine-learning based approach, we make the following steps:

- Dataset Selection. :

-

To select the projects to build our review changes dataset, we start from Crop (Paixao et al. 2018), a publicly available and curated code review dataset. Crop contains projects that use Gerrit as code review platform and those review data are publicly available. From the two communities (Eclipse and Couchbase) represented in Crop, we select JGit and JavaClient. These projects are (1) Java-based and (2) large open-source systems (ranging from 85k to 145k lines of code). Furthermore, the selected projects possess an active community of developers and reviewers, which makes them ideal targets of our investigation. We limited our selection to two projects from the CROP dataset to ensure that our classification covers a number of cases sufficient to report even the occurrences of uncommon types of changes. On the contrary, covering less cases from a more vast selection of projects might introduce bias in the types of changes contained in our dataset, under-reporting changes that occur less frequently. Moreover, we also consider Android, one of the most widely used projects in code review research. Android has a long development history with many active developers and reviewers and has been shown to be highly representative the code review practices of open-source projects (Bavota and Russo 2015; McIntosh et al. 2014, 2016; Pascarella et al. 2018).

- Dataset Labeling. :

-

To build and evaluate a supervised machine learning approach, we need reliable information on the actual labels to assign to the considered modifications (i.e., an oracle of code change types). With the labeled data, the approach learns from a subset of the labeled changes and can be tested on the remaining ones. To this aim, the first author of this paper manually classified the changes according to the taxonomy proposed by Beller et al. (2014), relying on (1) the source code of each modification and (2) the code comments left by developers on Gerrit. To each change was assigned both a category (Evolvability or functional) and a type. A description of each type is provided in Section 2. These changes were selected randomly choosing review IDs (i.e., a unique identifier assigned to a review in Gerrit) among all merged reviews in the considered project. Then, we performed a second step randomly choosing a patch set among the ones contained in the review. We excluded from this selection the first patch since we were not interested in changes done before the beginning of the review process. The labeling process took place between March 2019 and August 2019.

- Labeling Granularity. :

-

While analyzing the labeled dataset, we noticed that types of changes, such as Larger defect or Support are very uncommon. This observation was confirmed by previous studies: In their analysis Mäntylä and Lassenius found only eleven changes belonging to the larger defect type and no changes belonging to the support type (Mäntylä and Lassenius 2009). Similar results have been obtained also by Beller et al. (2014). Moreover, the overall amount of functional changes is significantly lower compared to the number of evolvability changes: Only 6.89% of all changes in our dataset belong to the functional category. This might significantly impact the performance of the devised classification approach as it would not have enough instances of each type of change to be able to make accurate prediction at type-level. For this reason, we argue that considering all eleven type labels might negatively impact the performance of our machine-learning approach.

Restricting our classification approach to work only at category-level might also not be an effective solution: We argue that the information offered by the categories might be too coarse-grained to offer valuable insight in the code review process. For these reasons, we devise the concept of group as an intermediate level of granularity for labels between categories and types. We define changes groups as follows. Referring to the taxonomy presented in Fig. 2, we aggregate types into their corresponding higher classes: ‘textual’ and ‘supported by language’ changes were considered as ‘documentation’ changes, while ‘organization’ and ‘solution approach’ changes as ‘structure’ ones. Therefore, a change group can be one of the following: ‘documentation’, ‘visual representation’, ‘structure’, or ‘functional’. Based on this consideration, we assigned to each change in our dataset an additional label reflecting the group to which it belongs. Then, we tested the performance of our model at both category and group level.

- Instance Unit. :

-

When labeling the review changes, we assigned the same ID to multiple changes when they were logically linked together: e.g., multiple changes involving the renaming of the same variable (as shown in Fig. 14). However, the granularity of our approach is the individual code change, which we call modification (an example of modification is shown in part

in Fig. 14). More precisely, a modification is composed of a pair of code chunks (i.e., a group of continuous modified code lines): an old code chunk reporting the code removed in a change, and a new one representing the added code. However, a modification might not include (1) an old code chunk in case the code was only removed (Fig. 15) or (2) a new code chunk if the code was added without removing anything (Fig. 16).

in Fig. 14). More precisely, a modification is composed of a pair of code chunks (i.e., a group of continuous modified code lines): an old code chunk reporting the code removed in a change, and a new one representing the added code. However, a modification might not include (1) an old code chunk in case the code was only removed (Fig. 15) or (2) a new code chunk if the code was added without removing anything (Fig. 16).

Fig. 14 Example of logically linked review changes, therefore, labeled with the same ID. These changes gave origin to three modifications. The real-world code review in which this change took place is available https://git.eclipse.org/r/c/jgit/jgit/+/49966/3..4/org.eclipse.jgit/src/org/eclipse/jgit/transport/BaseReceivePack.java

Fig. 15 Example of a modification containing only an old code chunk. The real-world code review in which this change took place is available https://git.eclipse.org/r/c/jgit/jgit/+/1281/5..6/org.eclipse.jgit/src/org/eclipse/jgit/storage/file/FileRepository.java

Fig. 16 Example of a modification containing only a new code chunk. The real-world code review in which this change took place is available https://git.eclipse.org/r/c/jgit/jgit/+/77733/2..3/org.eclipse.jgit.test/tst/org/eclipse/jgit/transport/RefSpecTest.java

We mined Gerrit to extract the related code chunks for each of the review changes in the labeled dataset. However, the Gerrit API does not allow to extract information on the link between the old and new code chunks. Furthermore, although Gerrit UI displays related old and new code chunks together, this link is made based on the changes line number and not their content. Old and new code chunks impacting the same lines of code are reliably linked together. However, this is not the case when the old and new code chunk impact different code lines: e.g., when a function declaration is moved in a class. For these reasons, we need to link related old and new code chunks by ourselves. Keeping the old and the new code chunks separated, the designed approach computes the Levenshtein distance (Yujian and Bo 2007) among each old chunk and all the new ones. This process leads to the construction of a weighted bipartite graph, where each code chunk is a node and the computed distance represents the weight of the link. Then, it selects the pair that has the lowest distance, links them into a modification, and removes them from the graph. Finally, our linking approach proceeds iteratively among the remaining nodes in the graph. If multiple links have the same weight, it relies on the assumption that related code chunks are likely to have similar positions in the file. We tested our linking approach against all modifications contained in our dataset, after removing changes containing only import statements. The proposed approach reached a precision of 86.07% and an accuracy of 89%. Furthermore, we removed modifications containing only import statements as they might introduce potential bias in the classification approach. Chunks containing only import statements are logically linked to the chunks where the imported entities are used, sharing a common classification (category and type). Once grouped into modifications, these import chunks might have similar characteristics but different types, potentially reducing the classifier performance. Overall, this leads to 2,641 modifications. Each modification has two corresponding labels reporting its category (evolvability or functional) and its group (documentation, visual representation, structure or functional), respectively.

From Table 1, we see that functional changes represent less than the 7% of all modifications considered, while ‘documentation’ changes constitute 48.33% of the whole dataset, ‘visual representation’ the 15.23%, and ‘structure’ the remaining 29.55%. Such a distribution of changes confirms the findings previously reported in literature (Mäntylä and Lassenius 2009; Bacchelli and Bird 2013; Beller et al. 2014).

Table 1 Modification groups in our dataset - Labeling validation. :

-

To evaluate the reliability of the manually assigned labels, a second author performed an independent labeling of a statistically significant subset of the labeled dataset (306 changes, leading to a confidence level of 95% and a margin of error of 5%). Comparing the labels given by the two authors, 90% of the categories labels matched perfectly as well as 75% of the type labels. In the other cases, the two inspectors opened a discussion to reach a consensus and the dataset was adapted accordingly. To measure the inter rater agreement between the two authors we computed Krippendorff’s alpha coefficient (Krippendorff 2011) achieving a measure of 0.447 for the categories labels and 0.673 for the type labels. Although the alpha value for categories labels reports only a moderate agreement, we argue that this reflects the intrinsic unbalance in our dataset: Evolvability changes happen significantly more often during code review compared to functional changes (Beller et al. 2014; Mäntylä and Lassenius 2009).

- Machine-learning Features. :

-

As features of the machine learning algorithm, we selected metrics with three different scopes: the code in the old chunk, the code in the new chunk, and the difference between them. Tables 2, 3, and 4 show a summary of the metrics, grouped by their scope (the single code chunk or the whole modification) and the rationale behind them. The rationale behind each of the metrics selected for our investigation based on the literature and our observations during the creation of the dataset. We combined common code analysis metrics (e.g., LOC, LOCExec, or Cyclomatic Complexity) with a selection of code readability metrics: number of commas or number of cycles (Buse and Weimer 2010). The selection of these metrics is based on an analysis of the literature on what can characterize the type of a modification performed by developers (Fluri et al. 2007) and what can capture the nature of source code under different perspectives. The metrics that work at code chunk level are computed for both the old and the new code chunk in a modification.

Table 2 Code Metrics employed in the evaluated approach at code chunk level Table 3 Code Metrics employed in the evaluated approach at modification level Table 4 Code Metrics employed in the evaluated approach at modification level To compute the selected metrics, we extracted from Gerrit the code snippet of each review change contained in our datasetusing the java implementation of the Gerrit REST API.Footnote 4 Based on the retrived code snippets, we then computed the selected code metrics. The code developed to extract and compute the metrics is available in our replication packageFootnote 5 as part of the devised machine-leaning approach.

We also consider the comments associated with a modification (if any). The first of these metrics is words in comment, a metric that counts the number of words included in a comment. In computing this metric, we considered the main comment and all its replies as a unique entity. Our hypothesis is that modifications belonging to different categories or types involve a different amount of discussion (e.g., the request to change an algorithm might require more explanations than adding a missing comment). We collected the comments related to each code change using the Java implementation of the Gerrit REST API, in a similar fashion to what done for the code of each change. Subsequently, we analyzed the content of comments. To this aim, we first follow a typical Information Retrieval normalization process (Baeza-Yates et al. 1999) involving tokenization, lower-casing, stop-word removal, and stemming (Porter 1997). After these pre-processing steps, we computed the 50 most frequent words taking into account the whole corpus of comments of our dataset. Based on this analysis, we cluster them into seven groups grouping together words indicating a similar operation on the code (e.g., remove and delete): We define groups of words that could help the classifier to distinguish between different change types. The defined group of words are reported in Table 5.

Table 5 Metrics derived from the analysis of review change comments - Machine-learning Algorithm. :

-

We experiment with three different classifiers: Random Forest, J48, and Naive Bayes. We selected these classifiers as they have been successfully applied to solve similar problems in the software engineering domain: e.g., defect prediction (Giger et al. 2012; Lessmann et al. 2008; Strüder et al. 2020) or refactoring recommendation (Pantiuchina et al. 2018; Kumar et al. 2019). These classifiers make different assumptions on the underlying data, as well as having different advantages and drawbacks in terms of execution speed and overfitting (Bishop 2006). In building our approach, we deal with two different classification scenarios: (1) a binary classification to classify a modification in one of the two categories (evolvability or functional); (2) a multi-class classification to assign each modification to one of the four groups (documentation, visual representation, structure, and functional). In the multi-class classification scenario, we estimate multi-class probabilities directly. We do not use techniques such as one-vs-one or one-vs-rest.

Our analysis revealed how the number of if statements (#Added if and #if diff ) is one of the most prominent variable in our model (at both category and group-level). The vast majority of check and logic changes among the functional changes in our dataset (respectively, 34.95% and 24.73% of the functional changes) might explain the high importance of these variables in our model. Both check and logic changes involve fixing issues with variable checks and comparisons: e.g., adding a missing check on the variable returned by a method.)

Variables related to the number of lines of comments are also evaluated as particularly important (especially the number of the LOC in the new code chunk of a modification). We argue that high number of comments LOC might be a strong indication of a documentation change. Overall, documentation changes constitute 48.33% of all the modifications in our dataset.

We test our approach using either gain ratio or correlation-based feature selection. We notice that using correlation-based feature selection leads to better performance. For this reason, we select this technique and we remove the reported non-relevant features. We test different correlation thresholds to remove non-relevant features to predict modification categories and groups. When evaluating our model at category-level, we notice that its performance slowly increase until a threshold of 0.005 of correlation is selected to exclude non-relevant features. Afterwards, the performance of the model remains stable until decreasing again for even smaller threshold. Based on these observations, we select 0.005 as our feature selection threshold at category level. This leads us to exclude the following features from our model: method word (corr. value 0.0044); #Round brackets diff (corr. value 0.0031); change word (corr. value 0.0030); #Assignment diff (corr. value 0.0009); #Methods changed (corr. value 0.0001); Brackets (corr. value 0). Concerning groups, we apply a correlation threshold of 0.01. This leads to include almost all the computed features in our model, with the exception of Brackets since our analysis shows that is uncorrelated to the class variable (corr. value 0).

- Data Preprocessing and Training Strategy. :

-

We consider three typical aspects for the use of machine learning models: (1) data normalization (Kotsiantis et al. 2006), (i.e., the reduction of the feature space to the same interval) (2) removal of non-relevant features (Chandrashekar and Sahin 2014), and (3) balancing of minority classes (Krawczyk 2016). We use the normalize function available in the Weka toolkit to scale data (Hall et al. 2009), while we employed and Synthetic Minority Oversampling (SMOTE) (Chawla et al. 2002) for balancing the data, respectively. To implement SMOTE in our machine-learning approach, we relied on the SMOTE class offered by the WEKA Java API.Footnote 6 Afterward, we run the selected machine learning classifiers using all combinations of settings (e.g., without data normalization but including feature selection), so that we can identify the most performing solution. Moreover, we test the performance of the models considering both within- and cross-project strategies. In the first case, we train models only using the data of single projects and validate their performance using the 10-fold cross validation method (Arlot et al. 2010); to mitigate the possible negative effect of random fold splittings (Tantithamthavorn et al. 2016) and have a more reliable interpretation of the results, we repeat the validation ten times. In the second case, the data of two projects are used to train the models, while the remaining project is used as test set; we run the models multiple times to allow each project to be the test set once.

- Evaluation Metrics. :

-

We assess the goodness of the experimented models by computing Precision, Recall, F-Measure, AUC-ROC, and Matthew’s Correlation Coefficient (MCC) (Reich and Barai 1999). These metrics provide different perspectives on the performance of the investigated approach.

- Feature selection: :

-

To identify relevant features for our classification problem, we apply two different techniques: (1) Gain Ratio attribute selection (Karegowda et al. 2010) and (2) Correlation-based feature selection (Hall 1999). Gain ratio attributes selection evaluates the importance of an attribute computing the gain ratio with respect to the class. Table 6 reports the ten most relevant features in terms of Gain Ratio to predict categories or groups, respectively. The complete list of metrics is available in our replication package (Fregnan et al. 2020). Correlation-based feature selection measures instead the value of Pearson’s correlation between it and the class to evaluate the importance of a feature. Table 7 shows the ten most relevant features based on their correlation value (the complete list of values is available in our replication package (Fregnan et al. 2020)).

Table 6 Gain Ratio of the ten most relevant features to predict change categories or groups, respectively. When a metric is computed at code chunk-level, we specify between brackets if it is related to the new or the old code chunk in a modification Table 7 Pearson’s correlation value of the ten most relevant features to predict change categories or groups, respectively. When a metric is computed at code chunk-level, we specify between brackets if it is related to the new or the old code chunk in a modification

4.2 Analysis of the results

The final manually labeled dataset contains 1,504 changes with an assigned label. We classified 227 additional review changes, but these are borderline cases that could not be properly assigned to a label with the available data, thus we excluded them from the dataset to not reduce potential errors.

Table 8 shows the results achieved by the best of the experimented models in terms of F-Measure. A report on the performance of the other experimented models and configurations is available in our replication package (Fregnan et al. 2020). This configuration uses Random Forest to predict both the category and group labels, normalizing the data and oversampling the minority class using SMOTE. We kept the standard parameter settings offered by the WEKA implementation of SMOTE. In particular, the default percentage parameter of SMOTE was left to 100: This parameter specifies the percentage of SMOTE instances the algorithm creates. To avoid introducing bias in the classifier performance, we apply class-rebalancing with SMOTE only to the training data and never to the test data, following the guidelines of Santos et al. (2018). The results have been computed combining the three projects in our dataset and applying 10-fold cross validation ten times. At category level, our model reached promising results, showing an AUC-ROC of 0.91 and MCC of 0.61. Furthermore, the model could classify evolvability changes with a precision and recall of 0.97 and 0.98, respectively, resulting in an F-Measure of 0.97. As for the functional class, we achieved an F-measure of 0.63, with precision and recall of 0.70 and 0.57, respectively. At group level, the AUC-ROC computed for each of the four classes was above 0.91, giving a first indication of the feasibility of the task using the proposed model.

Considering these results, the selected features seem to be good predictors for the types of review changes, thus suggesting that it is possible to overcome the scalability issues of previous approaches to classify review changes.

At category level, we selected a feature selection threshold of 0.005 (as reported in Section 4.1). This value was identified as a key point in the classifier performance: The classifier performance slowly increase until reaching this threshold, after which they begin to decrease. Despite this, the selected value might be considered too small and, thus, lead to overfitting. To mitigate this risk, we explored how the performance of our classifier changed for higher thresholds, 0.005 and 0.01, respectively. Table 9 reports the results achieved by our classifier when these feature selection threshold are selected. We used the best performing classifier (Random Forest with SMOTE) based on the results reported in Table 8. Even for higher thresholds, the performance of our approach remain stable and in-line with the previously reported results. This, combined with the other measures taken to reduce possible overfitting, contributes to strengthen our confidence in the reported findings. Nonetheless, further work might be conducted to further reduce this possible bias: e.g., by using Recursive Feature Elimination with Cross Validation (RFECV).

Despite the good overall results, we make some further observations on the performance of the model when it classifies the functional class. Table 8 shows that this category is the most problematic. To better understand the reasons behind this result, we conducted a further qualitative analysis. First, the limited amount of functional changes naturally limits the capabilities of the machine learner, as it lacks enough examples to fully learn how to classify them Pecorelli et al. (2019). Our dataset contains 6.89% of functional review changes. This is in line with previous findings that reported how functional changes constitute only a very low percentage of all the code changes that happen during code review (Beller et al. 2014; Mäntylä and Lassenius 2009).

Furthermore, the ‘functional’ class includes changes with very different characteristics: indeed, a functional change might range from a missing if statement to the modification of a whole algorithm. This large variety of changes for one category is a further challenge for machine learning solutions. Nevertheless, the performance achieved indicates that the features selected can discriminate most of the functional changes in our dataset, thus representing a promising baseline that other researchers can improve through further investigations. Finally, we performed an additional analysis aimed at improving the classification of functional changes. In particular, we assessed the suitability of cost sensitive learning (Elkan 2001): we assigned different cost weights to false positive and negatives of functional instances, thus testing the model with different cost matrix configurations. However, we did not achieve any significant improvement, confirming that further research is required to improve the classification of functional changes.

Our results showed that Random Forest achieved the best performance among the considered models. This is in line with findings of previous studies that reported how Random Forest outperforms Naive Bayes and other decision tree-based machine learning algorithms (Mahboob et al. 2016; Gata et al. 2019): For instance, in the field of software engineering, Random Forest was shown to perform better than other algorithms when used to build bug-prediction approaches (Kamei et al. 2010). Random forest is a machine learning algorithm that builds multiple decision trees and trains them with the “bagging” method. Compared to a decision tree, random forest randomly selects observations and features to create multiple trees. Then, the final performance is computed by averaging the results of each tree. We argue these characteristics of random forests might be what allowed it to outperform the other models we considered in our investigation: J-48 (an implementation of C4.5) and Naive Bayes.

To gain further insight into the differences between different groups of review modifications, we investigated which features are the most relevant to distinguish between a functional or a structure modification. To this aim, we built a binary classifier with these two groups (functional and structure) of modifications and excluding documentation or visual representation modifications. Then, we analyzed the importance of each feature in the model using correlation-based feature analysis. We report the ten most relevant features in Table 10.

The most important feature is the number of if added in a modification: Most of the functional defects involve changes in the logic of the algorithm or add checks on the variables. On the contrary, modifications that instantiate new objects or remove/replace method calls are likely to belong to the structure group: e.g., modifications belonging to the “solution approach” type (a subgroup of structure modifications) often involve removing unused methods to increase the maintainability of the code.

Finally, informed by the results of our feature importance analysis (reported in Tables 6 and 7) and by the comparison between functional and structure modifications (whose results are illustrated in Table 10), we select a subset of features that might be particularly relevant to highlight the difference among the four different groups of modifications. At the same time, we aim to verify previous hypotheses formulated based on the results of the feature importance analysis. To this aim, we selected the following three features: #if added, #new added, and #methods added. We report in Table 11 the mean and standard deviation of these features for each group of review modifications.

The mean of the number of added if statements (#Added if ) is significantly higher for the functional modifications compared to the other three groups (documentation, visual represenation, and structure). This confirms our previous hypothesis: A functional change often requires the addition of a check on a variable and, therefore, the number of added if statements can significantly help to distinguish between functional and evolvability changes. Considering the amount of added new objects declarations and method calls added, we noticed that the mean values of both these features are significantly different when comparing documentation and visual representation changes to structure and functional changes. Looking at only structure and functional changes, our results still confirm our hypothesis: Structure modifications have a higher number of added objects and methods, but the the difference with functional modifications is not so large as originally expected.

5 R Q 2.1: Evaluation of developers’ perceptions

To carry out an evaluation on using information on the types of review changes as a mean to inform practitioners about their code review process and evaluate it, we conduct semi-structured interviews involving twelve developers with code review experience (whose characteristics are summarized in Table 12).

After verifying that is possible to automatically classifying review changes (therefore overcoming the scalability issue of previous works (Bacchelli and Bird 2013; Mäntylä and Lassenius 2009; Beller et al. 2014)), our aim is to understand how this information is perceived by practitioners. For this reason, the goals of this investigation are the following: (1) collecting developers’ feedback on review changes classification and the potential use of this information to support and improve code review practices, (2) conducting a qualitative evaluation on the used taxonomy, and (3) gathering feedback on how to display the generated review changes data. In this investigation, we did not validate our machine learning approach. We focused instead on how information on review changes can be effectively used and shown to reviewers.

5.1 Design and methodology

Each interview has three parts: (1) A general discussion on code review, focusing on the interviewee’s experience; (2) a discussion on the concept of automatically classify review changes as well as the information it offers; (3) the evaluation of the taxonomy (Beller et al. 2014). All interviews have been conducted as semi-structured interviews (Newcomer et al. 2015; Longhurst 2003). Starting from the three above-mentioned general topics, the use of semi-structured interviews allowed us to dynamically adjust the structure of the interview to ask follow up questions when needed or address unforeseen points of discussion raised by the participants. We employed a set of slides to guide participants through the structure of the interview as well as to illustrate key concepts: e.g., the review changes taxonomy. The complete set of slides is available in our replication package.Footnote 7

In the first part, we open a discussion about interviewees overall experience with code reviews. This discussion allows us to gather participants background experience on code reviews and set the context for the concept testing. Afterwards, we present to participants the concept of classifying review changes. First, we explain orally the general idea of classifying review changes, then we show this information in four PowerPoint slides. Using code review data from the QT projectFootnote 8, the slides show two possible UIs as Gerrit extensions: (1) a general overview of the changes of the entire project, and (2) a detail view on a specific commit.

For the general overview, two alternative representations are shown in two different slides (Figs. 17 and 18). The UIs differ only from the charts used to represent the types of changes (pie-chart, histograms, etc.). We show more than one option to display alternative representations of the same data. Both slides show a list of the latest changes, and three charts representing the type of the changes over time totally, and for each developer. One additional slide of the general overview shows the evolution of review changes by developer, accessible by clicking on the developers’ name in the list of changes. Finally, one slide shows the categorization of changes for a specific commit as a pie-chart.

First view of a UI that leverages information on review changes. For readability reasons, this view contains only two charts (as opposed to the three in the original slides) and has a different layout compared to the one shown to the interview participants. The original slides are available in our replication package (https://doi.org/10.5281/zenodo.5592254)

Second view of a UI that leverages information on review changes, as shown to the participants during the interviews. For readability reasons, this view contains only two charts (as opposed to the three in the original slides) and has a different layout compared to the one shown to the interview participants. The original slides are available in our replication package (https://doi.org/10.5281/zenodo.5592254)

After presenting the concept of classifying review changes, we ask interviewees to express their feedback on this information and how it is displayed; they are also allowed to provide additional ideas and suggestions on what data/information might be useful to developers (e.g., to build a tool based on review changes data).

In the third step, we discuss with the participants the review changes taxonomy (Beller et al. 2014). To mitigate possible biases, we introduce the details of the taxonomy only in this last phase. We aim to validate if this taxonomy matches developers’ expectations. In particular, we focus on assessing if all the types in the taxonomy are understandable and match the interviewee’s experience. Before starting this discussion, we show the taxonomy to the participants and provide them with a brief explanation of each category and type. Because of the complexity of the taxonomy, we do not introduce the changes subtypes. To assess the taxonomy, we ask participants to place in the taxonomy each of the changes mentioned in the first interview phase. We finally discuss how difficult they perceive this task and whether they face any issues.

Interviews are conducted through video conference or in person and lasted approximately from 45 minutes to one hour. However, we do not define a strict maximum duration limit: We consider an interview concluded once all pre-determined topics are covered and the discussion naturally comes to an end. The study is held by one or two researchers and all interviews are recorded and transcribed. The second researchers participated in the first interview to ensure the goodness of the procedure. Before conducting the interviews, the authors obtained the approval of the ethics committee of their home institution. Moreover, interviewees were asked to sign a consent form to consent to take part to the interview. The form described the scope of the study.

To analyze the results of the interviews, we employ thematic analysis (Lyons and Coyle 2007). As a first step, we create codes: Brief descriptions of the content of the interview to summarize an idea presented by the interviewee. Subsequently, we identify common themes across different codes. To generate themes, we group together codes referring to the same topic. Finally, we review the identified themes to ensure their goodness: e.g., to avoid overlaps between different themes. The results are first analyzed by one of the authors, then checked by a second one.

Participants.

Twelve people participated in our semi-structured interviews on review changes. They are selected through snowballing of the professional network of the authors. Table 12 summarizes the background of the participants involved. Their age range from 18 to 44 years, with the majority (9) ranging from 25 to 35. The participants come from eight different countries with three from the US, and the remaining from the Czech Republic, France, Germany, Italy, and other European countries. Eleven participants identified themselves as males, while one identified herself as female. Currently, nine participants work in companies, among whom one also works as a Ph.D. student, two also contribute to open-source projects and one studies at the university. Two users work exclusively for open source projects, and one is a student. Overall, our participants have an average of 11 years of coding experience and 5.3 years in code reviews (std. 4.7 and std. 2.5, respectively). On average, 7.7 years of coding experience were acquired during academic studies (std. 3) and 9 in industry (std. 8.6). Participants reported to spend, on average, 17.2 hours a week doing code reviews (std. 10.2). Nine participants use code reviews tools such as GitHubFootnote 9 and CrucibleFootnote 10.

5.2 Analysis of the results

This section overviews the main findings achieved in RQ2.1.

- Information on review changes to support review. :

-

All participants found valuable and interesting the concept of using the information obtained by classifying review changes as mean to increase the understanding of code review. For instance, P1 stated that our proposed approach might help software teams to improve their code review processes and understand where and which problems are happening in the project. Similarly, P12 said that with such features developers may be able to avoid the same errors in the future. P3 also suggested that a tool that displays analytics on review changes might allow companies to understand where resources have been spent. Most developers (ten) mentioned managers as the perfect suit for the information generated by our approach; they commented that managers and CTOs could use these data for a better understanding of the project evolution. They reported how the information on the kind of review changes could be useful to project manager or tech-leads to assess the goodness of their review practices. For instance, P3 explained that this information could reveal if the team is paying attention to the right kind of defects. This is in line with the findings of Bird et al. (2015): their code review analytics tool became a valuable instrument for Microsoft developers to monitor themselves and improve their code review process. To increase the actionability of this information, interview participants suggested to compare the distribution of review changes in their project with benchmarks, created, for instance, by looking at similar projects or the history of the project under analysis. Eight participants acknowledged the potential benefits of this kind of functionality.

Moreover, our participants found that showing the type of changes for each developer may be problematic, for example creating competition [P12]. To avoid this issue, P11 suggested limiting access to this information. Seven participants stated that the most interesting information is the evolution of changes over time. P4 also stated that an additional feature might allow developers to change the level of granularity (year, month, and week). P11 and P7 suggested adding a chart, visible to the single team member, that compares the developer and to an ideal standard reviewer, obtained averaging the type of changes found by all reviewers in the project.

- Evaluation of the taxonomy. :

-

After the participants were briefed on Beller et al.’s taxonomy (Beller et al. 2014) and knew the definition of each category, we asked them to place some of the examples of review changes they mentioned during their interview within the taxonomy. All developers were able to fully or partially associate their changes into the various classes. During the task, all users could see a graphical representation of the taxonomy on the screen (Fig. 2). Four participants had no issues performing this task, while five had some initial doubts on where to put a change in the taxonomy. However, they were then able to decide before proceeding.

When asked to comment on the taxonomy, five developers stated that it could have some overlapping issues. Three participants suggested renaming some of the types (i.e., ‘Interface’, ‘Larger defects’) to lower confusion. Despite these comments, participants were able to associate most changes in the taxonomy. Although participant P9 recognized some overlapping issues, he stated that, overall, everything was fairly clear. Similarly, P7 found that classes may not be mutually exclusive, but stated that the taxonomy had enough descriptive power.

Overall, this evaluation phase brought positive feedback to motivate further research on classifying review changes to support practitioners (e.g., as an analytics tool) and improve Beller et al.’s taxonomy.

5.3 Follow-up interviews

We perform follow-up interviews with 7 developers from the original interviews. Our goal is two-fold: (1) Assessing the concept of group, introduced in Section 4.1, as intermediate layer in the review changes taxonomy; (2) Conducting an initial evaluation of the goodness of our approach with developers. Each interview was conducted by the first author in the form of semi-structured interviews and lasted approximately 30 minutes. All interview were conducted remotely. Moreover, to clarify the concepts discussed in the interviews and show examples to the participants, we use a set of slide (an example is available in our replication package (Fregnan et al. 2020)). Note that in the following section, we refer to the follow-up interviews participants with the code assigned to them in the original interviews and reported in Table 12.

- Evaluation of review changes groups: :

-

To evaluate the goodness of review changes groups, we discuss them with developers. First of all, we introduce again the concept of review changes as well as all the change types reported in Beller et al.’s taxonomy (Beller et al. 2014) (reported in Fig. 2). To support our explanation, we show to the interviewees a slide with the taxonomy.

All of the interviewees positively assessed the reduction of the original taxonomy into the defined four groups. P10 reported how the reduction into groups was based on the original taxonomy and did not arbitrarily introduced new categories. Five participants mentioned that the level of granularity of the classification depends on the goal of our approach. Participants agreed that the level of information offered by our approach is enough, for instance, to inform project managers about the kind of changes that happen in their code review process. For instance, P1 reported how groups are appropriate to offer an overview of the review process of a project, but a finer level of granularity is required to make this information fully actionable.

- Assessing our approach with developers: :

-

In the second part of the interviews, we conduct an initial evaluation of the performance of our classification approach with developers. To this aim, we show in random order to each participant 20 review changes and ask them to classify each change in one of the four group (documentation, visual representation, structure, and functional). To select the changes, we applied our classification approach to randomly selected reviews in the code review history of JGit. Among the resulting classified changes, we randomly choose five changes per group. Each participant is asked to evaluate a different set of review changes: We do not show the same change to multiple participants. Before asking participants to complete this task, we discuss with them the review changes taxonomy and introduce the concept of review change groups.

We compare the classification performed by the interview participants with the one of our approach. Overall, developers agreed with the approach classification in 79.41% of the cases. Despite this investigation constitutes only an initial assessment of the performance of our approach, the obtained results are encouraging. We notice that the majority of the disagreements between developers and our approach was caused by structure and functional changes. This might be caused by the relatively similar appearance of these two groups of changes: without having an in-depth knowledge of the project, it is not always possibile to immediately understand if a change is impacting the functionality of the code (therefore, being a functional change) or not.

6 R Q 2.2: Evaluation with open-source projects

In RQ2.2, we verify the meaningfulness of the information on review changes on 20 open source projects. The main goal is to analyze the developers’ perception of such data when related to their own codebase and, therefore, evaluate the impact of automatically classifying review changes for active software projects.

6.1 Design and structure

Design.

To analyze how information obtained by automatically classifying review changes is perceived by open-source developers, we consider 20 different projects and send the results of the classification to developers in the form of an online report, with some attached questions to gather feedback. We devised our reports following a methodology similar to the one used in previous studies (Vassallo et al. 2019a).

The target population of our investigation is represented by the main developers of each project (e.g., core developers or product owners): to give valuable feedback on the data generated by our approach is indeed necessary to have a high-level view and understanding of the whole code review process of the project. The respondents are contacted by sending the link to the report to the developers’ mailing lists or project forums, as these channels mostly attract the interaction of core contributors (Shibuya and Tamai 2009). Among the 20 projects (reported in Table 13), three are the initial systems used for the training of our tool (i.e., Android, JGit, and Java-client). The remaining projects are randomly selected among the ones belonging to the same communities as JGit (Eclipse) and Java-client (Couchbase). We focus on these communities because of the high review activity of their developers. Furthermore, all the selected projects respect the following criteria: they are mainly written in Java and use Gerrit as code review tool.

To create the reports, we extract information on the code review history of each project using the Java implementation of the Gerrit REST API.Footnote 11 We use these data as input for our review changes classification approach (presented in Section 4). The information returned by the devised classification approach is used to create the graphs shown in the online reports.

Structure of the reports.

In the online report, after a brief explanation on the meaning of review changes and their selected types (i.e., evolvability, functional, documentation, visual representation, and structure), we show developers an overview of the information collected by the designed approach, together with one real example of each type of change extracted from their project (see Fig. 19). Below the latest commits on the Gerrit platform, we show the general overview of the results. The overview shows the results in three forms. One chart (Fig. 19a) represents the total number of reviews that happened over the last year. This data is intended to give an idea of the amount of merged code reviews in the project. The second chart shows the total types of changes, computed over the last year, in a pie-chart (Fig. 19b). Finally, the histograms (Fig. 19c) represent the types of review changes that happened over the latest twelve months. Given the differences between the number of functional and evolvability changes, the two classes are shown in the same chart but with two different scales. Although this factor should be evident from the data shown on the single histogram of each change, we also inform the developers about the two different scales in the description of the figure and with a label below the chart. Following, we show to the participants both charts in detail (Fig. 19b and c) and ask developers to rate the information gained for the overview and for each detailed chart. More in detail, we ask if the figures are clear and if the information is useful for project managers and for developers. Furthermore, participants can also indicate how useful the entire report is, whether they perceive they learned something new from it and if this information might have a positive influence on their code review process.

General overview shown to participants. The images and charts were cropped for readability purposes. a Number of reviews over times. b Total review changes per group. c Review changes group over time. The original report is available in the replication package (https://doi.org/10.5281/zenodo.5592254)

At the end, participants can fill out background information to complete the collection of their data. We give them the possibility of contact us in case they were interested in knowing more details about our tool and the results obtained for their project. The answers to all the questions, excluding the ones requiring an extensive answer, are expressed using a Likert scale (Likert 1932) ranging from 1 (Strongly disagree) to 5 (Strongly agree). All reports are available in our replication package.Footnote 12

6.2 Analysis of the results

Out of the 20 reports sent, 18 developers from 10 different projects reached back to us with feedback. 17 of them replied to our questionnaire: we received 15 complete answers and 2 partial ones. Moreover, five developers additionally contacted us directly to give more extensive feedback. All respondents except one are core developers, integrators (i.e., developers having committing/merging rights), or product owners. For this reason, we consider them a valuable source of feedback as they are fully aware of what happens in the code review process. The last participant declared not to be involved in the development of the project, so we excluded their answer from our analysis.

The majority of the respondents acknowledged the novelty of the information provided by the designed approach assigning, on average, a score of 4.2 (std. ≈ 0.5) to this question on a Likert scale. One developer further commented on the value of this kind of data remarking that: “this provides a concrete way to measure one of the effects of code review”. To further confirm this aspect, some developers stated that they learned something new about their projects. For instance, one participant reported that: “The distribution of changes per group [is] different than expected”, while another was not aware of the distribution of the changes over time.

Moreover, we confirmed our initial hypothesis about the potential users of review changes data. Our participants agreed that the information provided by the overview (avg. 4.2, std. 0.5), graph B (avg. 3.9, std. 0.4), and graph C (avg. 4.3, std. 0.4) might be particularly valuable for a project manager. One of the developers reported that a closer look to the review process might help management to better understand the development process. However, they also mentioned the possibility of misuse of these data by the management. Such a possibility was also considered by some of the participants in our concept testing.

In contrast, and as found in RQ2.1, we obtained mild reactions when asking if the report could have been useful for developers (e.g., avg. 3.5, std. 0.62 for the overview). A developer further commented on this matter: “these kind of statistics are nice for a manager, but no clue how this would influence daily practice of an engineer?”.

Overall, developers positively assessed the value of the information shown in the reports. This gave an initial indication of the potential usefulness of information on review changes for practitioners. However, further studies need to be conducted to fully evaluate the usefulness of this information: e.g., integrating our classification approach into the code review pipeline of existing projects.

As a further step of the investigation, we evaluated potential actions on the review process that could be triggered by the information of our report. We asked participants to report how the data shown could influence code review policies, use of support tools, and reviewers assignment. However, we could not reach any strong conclusion on this matter since we got neutral responses (e.g., avg. 3.0, std. 1.03 for the tools use). We argue that a potential cause of these results might be the lack of further documentation and interaction with our visualization. For instance, one of our participants stated that they would need more details about each category to fully grasp the potential of the information. Similarly, another developer said that they might want to have more background on the specific changes that were classified in each category. In other words, developers recognized the value of the reported information but, at the same time, require additional understanding and possibly other data to fully benefit from it.

7 Discussion and implications

Our results highlighted aspects to be further discussed.

- Detecting functional defects in code review. :

-

As noticed in the context of RQ1, most of the changes applied in code review refer to evolvability modifications rather than functional ones. This finding confirms the results reported by Beller et al. (2014) and further supports the research aimed at improving static analysis tools to pinpoint potential issues in source code (Vassallo et al. 2018; Vassallo et al. 2019a; Di Penta et al. 2009; Johnson et al. 2013). Moreover, this result suggests that developers might benefit from the outcome of dynamic analysis methods during code review: for instance, providing developers with the output of test cases may help them in finding defects in source code.

- On the value of classifying review changes. :

-

First and foremost, the results achieved from both RQ2.1 and RQ2.2 suggest that the information coming from the analysis of the review changes is perceived as useful for project managers, and possibly software developers, to better understand the types of modifications done and achieve better insights on the code review process of the project. As such, despite our automatic approach has still some limitations when classifying functional changes, it represents the first step to put this kind of knowledge at the service of developers. On the one hand, our work has implications for practitioners: They can adopt our automated approach to improve their code review process rather than relying on time-consuming manual classification of code changes. On the other hand, our work contributes to the state of the art in code review research and opens new directions for researchers, who are called to further investigate code review practices and how to provide meaningful information to practitioners.

- Integrate automatic review changes classification into code review. :

-