Abstract

Nowadays, RESTful web services are widely used for building enterprise applications. REST is not a protocol, but rather it defines a set of guidelines on how to design APIs to access and manipulate resources using HTTP over a network. In this paper, we propose an enhanced search-based method for automated system test generation for RESTful web services, by exploiting domain knowledge on the handling of HTTP resources. The proposed techniques use domain knowledge specific to RESTful web services and a set of effective templates to structure test actions (i.e., ordered sequences of HTTP calls) within an individual in the evolutionary search. The action templates are developed based on the semantics of HTTP methods and are used to manipulate the web services’ resources. In addition, we propose five novel sampling strategies with four sampling methods (i.e., resource-based sampling) for the test cases that can use one or more of these templates. The strategies are further supported with a set of new, specialized mutation operators (i.e., resource-based mutation) in the evolutionary search that take into account the use of these resources in the generated test cases. Moreover, we propose a novel dependency handling to detect possible dependencies among the resources in the tested applications. The resource-based sampling and mutations are then enhanced by exploiting the information of these detected dependencies. To evaluate our approach, we implemented it as an extension to the EvoMaster tool, and conducted an empirical study with two selected baselines on 7 open-source and 12 synthetic RESTful web services. Results show that our novel resource-based approach with dependency handling obtains a significant improvement in performance over the baselines, e.g., up to + 130.7% relative improvement (growing from + 27.9% to + 64.3%) on line coverage.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

REST is an architectural style composed of a set of design constraints on architecture, communication, and web resources for building web services using HTTP (Fielding 2000; Allamaraju 2010). It is useful for developing web services with public APIs over a network. Currently, REST has been applied by many companies for providing their services over the Internet, e.g., Google,Footnote 1 Amazon,Footnote 2 and Twitter.Footnote 3 However, in spite of their widespread use, testing such RESTful web services is quite challenging (Bozkurt et al. 2013; Canfora and Di Penta 2009) (e.g., due to dealing with databases and calls over a network).

In this paper, we propose a novel approach to enhance the automated generation of systems tests for RESTful web services using search-based techniques (Harman et al. 2012). To generate tests using search-based techniques, we use the Many Independent Objectives evolutionary algorithm (MIO) (Arcuri 2018b). The MIO algorithm is specialized for system test case generation with the aim of maximizing code coverage and fault finding. The MIO algorithm is inspired by the (1 + 1) Evolutionary Algorithm (Droste et al. 1998), so that an individual is mainly manipulated by sampling and mutation (no crossover). However, our novel techniques could be extended and adapted in other search algorithms.

We implemented our approach as an extension of an existing white-box test case generation tool, called EvoMaster (Arcuri 2018a; 2019). The tool targets RESTful APIs, and generates test cases in the JUnit format, where sequences of HTTP calls are made to test such APIs. During the search, EvoMaster assesses the fitness of individual test cases using runtime code-coverage metrics and fault finding ability.

Our novel approach is designed according to REST constraints on the handling of HTTP resources. First, based on the semantics of HTTP methods, we design a set of effective templates to structure test actions (i.e., HTTP calls) on one resource. Then, to distinguish templates based on their possible effects on following actions in a test, we add a property (i.e., independent or possibly-independent) to the template. A template is independent if actions with the template have no effect on following actions on any resource. Furthermore, we define a resource-based individual (i.e., a test case) by organizing actions on top of such templates. To improve the performance of the MIO algorithm with such individuals (i.e., the test cases evolved in the evolutionary search), we propose a resource-based sampling operator and resource-based mutation operators in our approach.

For the smart sampling operator, we define four sampling methods. At each sampling of a new random individual in the evolutionary search, one of these methods is applied to sample a new test. These methods are designed by taking into account the intra-relationships among the resources in the system under test (SUT). To determine how to select a method for sampling, we propose five strategies: Equal-Probability enables an uniformly distributed random selection; Action-Based enables a selection based on the proportions of applicable templates; Used-Budget-Based enables an adaptive selection based on the passing of search time; Archive-Based enables an adaptive selection based on their achieved improvement on the fitness; and ConArchive-Based enables an adaptive selection based on fitness improvement after a certain amount of sampling actions on one resource. Regarding mutation, we propose five novel operators to mutate the structure of the individuals, with respect to their use of the resources.

To seek a proper combinations of resources in the tests, we develop resource dependency handling which comprises dependency identification, and is integrated with resource-based sampling and resource-based mutation. In REST, there typically exist some dependencies among resources in the SUTs, and dependency identification is used to detect such dependencies based on REST API Schema, Accessed SQL Tables and Fitness Feedback. To exploit combinations of the resources, we enhance resource-based sampling and resource-based mutation with strategies involving the detected dependencies, e.g., sample actions on dependent resources in a test, and remove actions on a resource which is not related to any other resources.

We conducted an empirical study on our novel approach by comparing it with the existing work on white-box testing of RESTful APIs, i.e., the default version of EvoMaster. Experiments were carried out on seven open-source case studies, which we used in previous work and gathered together in an open-source repositoryFootnote 4 made for experimentation in automated system testing of web/enterprise applications. To investigate the role of resource dependencies in more detail, we also created twelve synthetic case studies,Footnote 5 designed with various resource settings and relationships.

Results of our empirical study show that our novel techniques can significantly improve the performance of the test generation (e.g., relative improvement of line coverage is up to 130.7%) on SUTs that use fully independent, or closely connected, resources. Due to the randomness of the algorithm, in the worst case the improvements can be negligible.

The paper is an extension of a conference paper (Zhang et al. 2019), and the new contributions in this paper are summarized as follows:

-

To enable proper handling of multiple resources, dependency handling is newly developed that consists of dependency identification, resource-based sampling with dependency and resource-based mutation with dependency. Besides, based on our experiments, dependency handling achieves a further improvement on our resource-based solution.

-

To better assess our proposed resource-based solution, we designed the synthetic RESTful API generatorFootnote 6 for automatically generating RESTful APIs with various resource-based configurable properties, i.e., a number of resources, applied HTTP methods, a number of dependencies, a constructed resource graph, different types of dependencies, and show/hide dependency on URIs. Note that the generator is also useful to setup experiments for studying other RESTful APIs-related approaches.

-

We designed three resource graphs with two dependency-related constraints and two URI generations that generate a total of 12 synthetic RESTful APIs. Those are new case studies for our experiment in this extension.

-

With our novel techniques, we answer new research questions and more experiment settings. Compared with the 22 experiment settings in the conference version, 52 experiment settings are conducted in this extension.

-

To investigate the performance of our proposed approach on the various case studies, we characterize in detail five of the real RESTful APIs. We manually derived the resource dependency graphs for each of these APIs by checking their implementation in details. Then, the impact of resources and their dependencies on the SUTs are discussed.

-

All the experiments are newly conducted with the latest tool version of EvoMaster.

-

Regarding the main changes in the paper, Sections 4, 6, 7.2 and 8 are all new.

The rest of the paper is organized as follows. In Section 2, we provide a brief description on related background topics, needed to better understand the rest of the paper. Section 3 discusses related work. The overview of the proposed approach is presented in Section 4, followed by Resource-based MIO (Section 5) and Resource Dependency Handling (Section 6). The applied case studies are presented in Section 7, while the empirical study and its results are discussed in Section 8. We discuss threats to validity in Section 9 and conclude the paper in Section 10.

2 Background

2.1 HTTP and REST

The Hypertext Transfer Protocol (HTTP) is an application protocol used by the World Wide Web. The protocol defines a set of rules for data communication over a network. HTTP messages are composed of four main elements:

-

Resource path: indicates the target of the request, referring to a resource that will be accessed. The resource path defines Uniform Resource Identifier (URI), which can include query parameters. These latter are pairs of “key=value”, separated by & symbols, following the resource path after a “?”, e.g., /api/someResource? x=foo&y=bar.

-

Method/Verb: the type of operation that is performed on the specified resource. The types of operations include: i) GET: retrieve the specified resource that should be returned in the Body of the response; ii) HEAD: similar to GET, but without any payload; iii) POST: send data to the server. This is often used to create a new resource; iv) DELETE: delete the specified resource; v) PUT: similar to POST. But PUT is idempotent, so it is usually employed for replacing an existing resource with a new one; vi) PATCH: partially update the specified resource.

-

Headers: carries additional information with the request or the response.

-

Body: carries the payload of the message, if any.

The Representational State Transfer (REST) is designed for building web services on top of HTTP. The concept of REST was first introduced by Fielding in his PhD thesis (Fielding 2000) in 2000, and it is now widely applied in industry, e.g., Google,1 Amazon,2 and Twitter.3 REST is not a protocol, but rather it defines an architectural style composed of a set of design constraints on how to build web services using HTTP. A web service using REST should follow some specific guidelines, e.g., the architecture should be client-server by separating the user interface concerns from the data storage concerns, and communications between client and server should be stateless. To manage resources, REST suggests that: i) resources should be identified in the requests by using Uniform Resource Identifiers (URIs); ii) resources should be separated from their representation, i.e., the machine-readable data describing the current state of a resource; iii) the implemented operations should always be in accord with the protocol semantics of HTTP (for example, you should not delete a resource when handling a GET request). In this paper, our novel approach is based on the assumption that the web services are written following the REST constraints, especially following the protocol semantics of HTTP method to develop endpoints. However, our approach should not have any significant negative side-effects when dealing with non-conforming APIs.

In a RESTful API, data can be transfered in any format. However, one of the most typical format is JSON (JavaScript Object Notation). For example, all the SUTs in our empirical study use JSON. Furthermore, JSON is also typically used to specify the schemas of such APIs (e.g., with OpenAPI/SwaggerFootnote 7).

2.2 The MIO Algorithm

The Many Independent Objective (MIO) algorithm (Arcuri 2018b) is an evolutionary algorithm specialized for system test case generation in the context of white-box testing. The algorithm is inspired by the (1 + 1) Evolutionary Algorithm (Droste et al. 1998) with a dynamic population, adaptive exploration/exploitation control and feedback-directed sampling.

Algorithm 1 shows the pseudo-code representation of the MIO algorithm. The search is started with no populations. Each time a testing target is “reached” when executing a test, a new empty population is created for such target, and the test is added to it. For example, when a statement like “if(predicate)” is executed (i.e., “reached”), there will be two branch-coverage targets, representing the “then” and “else” branches. Unless the evaluation of the predicate leads to an exception, one of these two branches will be “covered”, whereas the other will be “reached” but “uncovered”. Afterwards, at each step, with a probability Pr, MIO either samples new tests at random or samples (followed by a mutation) a test from a population that includes reached but uncovered targets.

As the next step, the sampled/mutated test may be added to the populations if it achieves any improvement on covered targets. Once the size of a population exceeds the population limit n, the test with worst performance is removed. In addition, at the end of a step, if an optimization target is covered, the associated population size is shrunk to one, and no more sampling is allowed from that population. At the end, the search outputs a test suite (i.e., a set of test cases) based on the best tests in each population. In the context of testing, users may care about what targets are covered, rather than how heuristically close they are to be covered. Therefore, MIO employs a technique called feedback-directed sampling. This technique guides the search to focus the sampling on populations that exhibit recent improvements in the achieved fitness value. This enables an effective way to reduce search time spent on infeasible targets (Arcuri 2018b). Moreover, to make a trade-off between exploration and exploitation of the search landscape, MIO is integrated with adaptive parameter control. When the search reaches a certain point F (e.g., 50% of the budget has been used), the search starts to focus more on exploitation by reducing the probability of random sampling Pr.

In this paper, we introduce resource-based individual by reformulating the individual for the REST problem, and propose new sampling and mutation operators that enables handling of resource and dependency in the context of RESTful web services.

The proposed solutions could be applicable and adapted to other evolutionary algorithms addressing test generation for RESTful web services. As MIO was the best in previous studies (Arcuri 2018b; 2019) (in terms of the fitness function, which uses code coverage and fault detection), we employ MIO with the newly proposed solutions in this paper to assess improvements on the problem of testing RESTful web services.

2.3 RESTful API Test Case Generation

In Arcuri (2019), we proposed a search-based approach for automatically generating system tests for RESTful web services, using the MIO algorithm (recall Section 2.2). Testing targets for the fitness function were defined with three perspectives: 1) coverage of statements; 2) coverage of branches; and 3) returned HTTP status codes. In addition, to improve the performance of sampling in the context of REST, smart sampling techniques were developed for sampling tests (i.e., sequences of HTTP calls) with pre-defined structures by taking into account RESTful API design. The structures are described as follows:

-

GET Template: k POSTs with GET, i.e., add k POSTs before GET. This template attempts to make specified resources available before making a GET on them. k is configurable, e.g., k = 2 indicates that add 2 POSTs before a GET.

-

POST Template: just a single POST.

-

PUT Template: POSTs with PUT, i.e., add 0, 1, or more POSTs before PUT with a probability p. PUT is an idempotent method. When making a PUT on a resource that does not exist, the PUT could either create it or return an 4xx status. So the template involves a probability for sampling a test with either a single PUT, or POSTs followed by a single PUT.

-

PATCH Template: POSTs with PATCH, i.e., add 0, 1, or more POSTs before a PATCH, and possibly add a second PATCH operation at end with a probability p. The second PATCH is used to check if POSTs and the first PATCH are doing partial updates instead of a full resource replacement.

-

DELETE Template: POSTs with DELETE, i.e., add 0, 1 or more POST operations followed by a single DELETE.

The approach was implemented as an open-source tool, named EvoMaster.6 It has two components (Arcuri 2018a): Core which mainly implements a set of search algorithms for test case generation (e.g., WTS Rojas et al. 2017); and Driver that is responsible for controlling (e.g., start, stop, and reset) the SUT, and for instrumenting its source code. With it, the search algorithm assesses the fitness of individual test cases using runtime code-coverage metrics (e.g., lines and branches) and fault finding ability (e.g., based on HTTP status codes such as 500, and on discrepancies of the results with what is expected based on the API schema). For SUTs that compile into JVM byte-code, the instrumentation to collect code-coverage metrics is done fully automatically by the Driver when such SUTs are started.

EvoMaster can also analyse all interactions with SQL databases (Arcuri and Galeotti 2020), to improve the generation of test cases (e.g., by analysing which data is queried). Furthermore, to make the test independent from each other, the databases are reset at each fitness evaluation (just the data is cleaned, as there is no need to re-create the SQL schemas or re-start the databases).

3 Related Work

Recently, there has been an increase in research on black-box automated test generation based on REST API schemas defined with OpenAPI (Atlidakis et al. 2019; Karlsson et al. 2020; Viglianisi et al. 2020; Ed-douibi et al. 2018). Atlidakis et al. (2019) developed RESTler to generate test sequences based on dependencies inferred from OpenAPI specifications and analysis on dynamic feedback from responses (e.g., status code) during test execution. In their approach, there exists a mutation dictionary for configuring test inputs regarding data types. Karlsson et al. (2020) introduced an approach to produce property-based tests based on OpenAPI specifications. Viglianisi et al. (2020) employ Operation Dependency Graph to construct data dependencies among operations. The graph is initialized with an OpenAPI specification and evolved during test execution. Then, tests are generated by ordering the operations based on the graph and considering the semantics of the operations. Ed-douibi et al. (2018) proposed an approach to first generate test models based on OpenAPI specifications, then produce tests with the models.

OpenAPI specifications are also required in our approach for accessing and characterizing the APIs of the SUT (e.g., which endpoints are available, and what types of data they expect as input). As we first introduced in Arcuri (2019) and Zhang et al. (2019), the OpenAPI specifications are further utilized for identifying resource dependencies, similarly to what recently done by approaches like in Atlidakis et al. (2019) and Karlsson et al. (2020). However, the dependencies we identify are for resources, and not just operations. In our context, we consider that a REST API consists of resources with corresponding operations performed on them, and there typically exist some dependencies among the different resources. To identify such dependencies, we analyze the API specification and collect runtime feedback. We then use the derived dependencies to improve the search by enhancing how test cases are generated and evolved. A key difference here is that, in contrast to all existing work, we can further employ white-box information to exploit and derive the dependency graphs. For instance, if a REST API interacts with a database, manipulating resources often leads to further access data in such database, e.g., retrieving a resource might require to query data from some SQL table(s). This information about which tables are accessed at runtime can be obtained with EvoMaster. Such runtime information helps to identify a relationship between a resource and SQL tables. Thus, through the analysis of which tables are accessed at runtime we can further derive possible dependencies among resources. In this work, to derive the dependencies, we also employ code coverage and the other search-based code-level heuristics by checking the effects on involving different resources.

Note that OpenAPI specifications do not need to be necessarily written by hand. Depending on the libraries/frameworks used to implement the RESTful web services (e.g., with the popular Spring), such OpenAPI schemas can be automatically inferred (e.g., using libraries such as SpringFox and SpringDoc). So, the lack of an existing OpenAPI schema is not necessarily a showstopper preventing the use of tools such as EvoMaster.

Besides existing work on black-box testing based on industry-standards such as OpenAPI/Swagger7 schemas, there exist previous approaches to test REST APIs that rely on formal models and/or ad-hoc schema specifications (Chakrabarti and Kumar 2009; Chakrabarti and Rodriquez 2010; Fertig and Braun 2015; Pinheiro et al. 2013; Lamela Seijas et al. 2013). The models often describe test inputs, exposed methods of SUTs, behaviors of SUTs, specific characteristics of REST or testing requirements. An XML schema specification used for testing was introduced by Chakrabarti and Kumar (2009). This was then extended in Chakrabarti and Rodriquez (2010) to formalize connections among resources of a RESTful service, and further focus on testing such “connectedness”. Fertig and Braun (2015) developed a Domain Specific Language to describe APIs, including HTTP methods, authentication and resource model. A set of test cases can be generated from such a model. Lamela Seijas et al. (2013) proposed an approach to generate test cases based on property-based test models, and UML state machines are applied (Pinheiro et al. 2013) to construct behavior models for test case generation.

In contrast to such earlier work, to make our approach and tool as usable as possible for practitioners in industry, we rely on industry standards such as OpenAPI/Swagger specifications. Our techniques do not require practitioners to write academic formal models to be able to use our techniques in practice on their systems.

Besides improving coverage of an API, it is important to design new techniques to detect different categories of faults in such APIs. Segura et al. (2017) developed an approach for the metamorphic testing of RESTful Web APIs, for tackling the oracle problem. The approach defined six abstract relations covering possible metamorphic relations in a RESTful SUT. Those can be used to detect faults when test data is generated for which those metamorphic relations are not satisfied. In this work, we do not propose any new approach to tackle the oracle problem in API testing.

All the above are black-box testing approaches that are different from our approach, i.e., white-box system test case generation for RESTful APIs. As discussed, in Arcuri (2017) and Arcuri (2019) our team proposed a means of generating test cases for RESTful APIs by using search-based techniques to create sequences of HTTP calls that has been implemented as a prototype tool, named EvoMaster. In addition, a major novelty is that SQL operations are enabled in EvoMaster for producing tests with handling of databases (Arcuri and Galeotti 2019; 2020). This is a search-based software testing (Ali et al. 2010) approach, relying on information obtained from the API specifications and code instrumentation to generate test cases. It does not, however, identify relationships between resources and consider the relationships when generating these test cases (apart from some basic templates introduced in Arcuri (2019)). Therefore, in this paper, we propose a complete resource-based approach, built upon EvoMaster, by detecting resource dependencies, introducing resource-dependency handling methods and strategies, as well as developing tailored sampling and mutation operators.

Another key difference with existing work is that, not only EvoMaster is open-source and freely available on GitHub,6 but also it is actively supported, with extensive documentation on how to use it. This is essential to enable replicated studies, and for using EvoMaster in studies involving tool/technique comparisons. For example, in this work, we could compare our novel techniques only with the base version of EvoMaster, as no other tool was available.

4 Overview of the Proposal

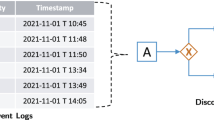

REST defines a set of guidelines for creating stateless services which can be accessed over a network using HTTP. Figure 1 shows a snippet example of a specification of API following REST guidelines. The specification is defined using an OpenAPI/Swagger7 schema. In the example, the APIs are structured with resource URIs, and relevant HTTP methods are defined for each resource.

In our context, an individual is a test case composed of a sequence of HTTP calls. Each HTTP call consists of a specific HTTP method and an associated resource, defined by its URI for performing some actions on the associated resource. Consider an API that deals with products and warehouses, as the example in Fig. 1. Some tests (in pseudo-code) for such API are shown in Fig. 2. Each line represents an action which follows the format <a method on a resource path with/without parameters><the method on the path with values of the parameters>. For instance, the HTTP call POST /products/foo?warehouse=bar&quantity= 20 is an action to add 20 new products named foo in a warehouse named bar.

Note that, to make the examples more readable, here a resource is created with POST using query parameters. But, in practice, usually the data would be in the body payload of the requests (as URLs have small size limits). Furthermore, for simplicity we are considering a POST that fails if the resource already exists. A different approach could have been to rather use PUT operations to create and/or update these resources.

Regarding the action, we can identify a resource foo of type product directly handled by this call, and a referred resource bar warehouse. When executing this action in different tests, the status of the resources (i.e., foo and bar) might be different in the SUT’s backend, and so then result in different code executions. As demonstrated in Fig. 2, four tests represent this action (at line 3) with different statuses of the resources, i.e., Example 2: the warehouse bar exists and has space to store 40 new products, and the product foo does not exist; Example 2: the warehouse bar does not exist; Example 2: the warehouse bar exists, and the product foo exists; Example 2: the warehouse bar exists but there is no enough space to store 100 products. With each of the states, the call at line 3 executes different paths in the source code of the SUT. From a testing perspective, exploring those different possible states of resources may help to improve coverage of the testing targets (e.g., lines, branches and HTTP status codes).

Typically, search-based techniques use random sampling to create new individuals. In our context, an individual is a series of HTTP calls, where the resources are identified with URIs. Those depend on variables that can be part of the search, such as path elements and query parameters. Depending on quantity and complexity of those variables, sampling them at random would lead to different URIs (especially when the variables are strings). Furthermore, different but related resources will have different URIs, where the relations will be expressed by some specific variable (e.g., an ID that is a path element in a resource, and it is referenced in another resource as a query parameter).

In this manner, it is unlikely we will be able to generate several HTTP calls at random to perform on relevant, related resources, e.g., line 2 and line 3 on foo product in Fig. 2. If there exist some relationships among resources and actions just as in the product-warehouse example, then it is very unlikely to produce tests that result in good coverage. Therefore, we propose Resource-based MIO (Section 5) to enable handling of individuals with respect to resources, i.e., resource-based individual, resource-based sampling and resource-based mutation.

There typically exist some dependencies among resources in a RESTful API. Often, the dependencies can be identified based on hierarchical structures of the URIs. For example, the resource foo is hierarchically related to the collection of all products called /products, i.e., the resource products/foo belongs to the collection resource /products. However, there might exist other kinds of relations, e.g., a product depends on a warehouse, and that information is not part of the path element in the URI. To derive such further kinds of dependencies and exploit them to generate higher coverage tests, we propose Resource Dependency Handling (Section 6).

Algorithm 2 represents how the proposed techniques are integrated in MIO (Algorithm 1). These techniques are controlled with parameters, i.e., probability for resource-based sampling Ps, probability for applying dependency handling Pd, and enabling of dependency pre-matching PM. At the beginning of the search, dependencies of the SUT are typically unknown, i.e., an empty D. But there might be some information on the dependencies stated in the RESTful API schema of the SUT (e.g., based on hierarchical relationships in the URI path elements). So we develope a pre-matching process to initialize dependencies with the schema (Section 6.1), the process can be applied when dependency handling and dependency pre-matching are enabled, i.e., Pd > 0 and PM (see lines 4-5 in Algorithm 2). During the search, based on a specified probability for resource-based sampling Ps, resource-based sampling (see lines 8-9, discussed in Section 5.2) and mutation (see lines 14-15, discussed in Section 5.3) are applied to sample and mutate an individual regarding resources. Note that the individual is a test for REST API. The resource-based sampling and mutation can be enabled with dependency-based strategies for producing tests, e.g., sample an individual with actions on dependent resources. The strategies are controlled by the probability Pd and enabled when Pd > rand() is evaluated as true at line 9 (for the sampling introduced in Section 6.2) and line 15 (for the mutator introduced in Section 6.3). After the individual is executed on the SUT and its fitness is evaluated, we make use of the information on which database tables were accessed and changes on fitness to derive the dependencies among resources (see lines 18-21 in Algorithm 2 and introduced in Section 6.1). Based on such dependency handling, the derived dependencies are updated and refined over each iteration of the search. At the end of search, the best individuals are selected to generate the output test suite based on their code coverage and fault finding.

5 Resource-Based MIO

5.1 Resource-Based Individual Representation

To enable the handling of individuals regarding resources, we defined a set of templates that list meaningful combinations of HTTP methods based on their semantics. Then, an individual is reformulated as a sequence of resource-handling s, and each of the resource-handling s is a sequence of actions (i.e., HTTP calls) on one resource based on the templates (e.g., POST-DELETE). With such an individual, the search can be applied to handle actions based on resources (e.g., sample actions on the same resource) and manipulate resources (e.g., add actions on a new resource), instead of handling each action independently. However, search is still needed, for example to evolve the right query parameters for the URIs, the content of the body payloads (e.g., JSON objects), and the HTTP headers.

Based on the different types of HTTP methods, we define templates in Table 1. Note that we intentionally make the template short (i.e., at most combine two different types of HTTP methods) to allow small modifications on the structure of the individuals. As the example shown in Fig. 2, code coverage does often depend on the status of the resources (e.g., if they exist or not). Different types of HTTP methods can help to manipulate the status of a resource before a following action is executed:

-

POST (PUT) and DELETE may be applied to handle the existence of a resource;

-

PUT and PATCH may be applied to update some properties of a resource when the resource exists;

-

GET and HEAD typically cannot change a status of a resource.

In the design of the templates, we only focused on the existence of resources. This is because the update action is restricted by the existence condition. For example, assume that an update (i.e., PATCH) performs on an existing resource and a following action DELETE improves the code coverage of the tests. This would normally be due to the existence of the resource itself rather than what update operation was previously performed on it. Even if the success of a DELETE was dependent on a specific value in a field of the resource, such a value could have been directly provided in the operation that created the resource in the first place (e.g., a POST). Therefore, an update operation on the resource would not be needed in this context.

In the templates, we only use methods (i.e., POST or PUT) to prepare the existence condition of a resource. We ignore DELETE to make the resources non-existent, i.e., remove resources. This is because, in EvoMaster, the SUT is reset at each test execution (e.g., the database state is cleaned before each test execution). Furthermore, as the search starts by usually choosing new values at random for the parameters of the actions, this means that it is unlikely that the newly sampled values have been previously applied on a creation method (e.g., POST) for creating that corresponding resource in one specific test. In this case, a DELETE is almost the same as the situation when no creation method is used. Thus, executing an extra DELETE per resource (i.e., add DELETE as the first action to templates #1-#5) would be probably a waste of the search budget (e.g., by making the test unnecessarily longer, and so more time consuming to run).

We designed 10 templates (shown in Table 1) based on all types of HTTP methods along with whether the related resource exists. Only 5 new templates are introduced, as templates #5, #7-#10 are the same as the templates from our previous work (Arcuri 2019) (Section 2.3). These templates were applied on the sampling of whole test cases. On the other hand, in this paper, we apply them on a fragment of a test with the aim of handling multiple resources, and each fragment is a sequence of actions performed on a same resource (i.e., a test case can be composed of one or more fragments). In addition, we identify properties for all the templates, i.e., independent and possibly-dependent.

There might exist some unknown internal relations among resources in the SUT, e.g., /products /{productName} depends on /warehouses/{warehouseName} in Fig. 1. So, it is not clear, based on the URIs alone, whether actions executed first have effects on the following actions in a test. But actions that never have an impact can be derived based on the semantics of HTTP methods (e.g., GET operations are not supposed to change the state of the resources in the SUT).

In the context of testing, we also capitalize on invalid sequences of actions, i.e., #2-#3, that aim to operate on a resource that does not exist. Since the actions are expected as failure operations, they probably do not change any state of the resources. Therefore, we identify independent templates (#1-#3) that, when actions with the template are executed, do not have any effect on follow-up actions on any resource.

PUT might be implemented to create or update a resource (both options are valid according to the HTTP semantic). However, the implementation may vary among endpoints or case studies and is typically not exposed to the schema. Therefore, to cover the potential creation by PUT, we consider PUT with a 20% probability of creating a resource (i.e., see CREATE applied in #6-#10 in Table 1). Regarding a single PUT (i.e., #4), we consider its semantic as update, thus, #4 is independent. We further define a possibly-dependent template as a template for which independence cannot be assumed. Note that a possibly-dependent template might or might not be dependent, because it varies from resource to resource, and dependency of resources is usually unknown before a search starts. Moreover, we further identify resources based on their applicable templates. An independent resource is a resource which can only be manipulated with the independent template, and a possibly-dependent resource is a resource that can be manipulated with at least one possibly-dependent template.

With the defined templates, we formulate the resource-based individual (shown in Fig. 3) as a sequence of resource-handling constrained with one of the templates, i.e., (R1,...,Ri,...Rn) where n is a number of the resource-handling. Thus, the resource-handling is composed of operations that perform a sequence of actions on the same resource Ri, i.e., \(R_{i}=(A_{i,1}...A_{i,j}...A_{i,m_{i}})\) where mi is a number of actions of Ri and mi > 0. Each action is composed of a sequence of genes, i.e., \(A_{i,j} = (G_{i,j,1} ... G_{i,j,k} ... G_{i,j,t_{ij}})\) where tij is a number of genes in the j th action of the i th resource-handling and tij ≥ 0. Note that tij might be 0 if there does not exist any gene in a REST Action, e.g., GET /warehouses. With the hierarchical formulation, the individual can be seen as either a sequence of actions or a sequence of genes.

Figure 4 shows an example of a test for handling /warehouses/{warehouseName} with template #1 and /products/{productName} with template #7 for products-warehouse APIs. In this example, the test covers a scenario that handles two resources, i.e., warehouse and product. Regarding the handling (lines 2-3) with template #7 for retrieving a product with a specified productName, the POST action at line 2 is added to prepare the resource for the GET action at line 3, then value on the path element productName in the POST is bounded with the same value as in the GET. In addition, the representation of the test as a resource-based individual is shown in Fig. 5.

An example of the test in Fig. 4 formulated with resource-based individual. An instance of Gi,j,k is represented with its value, its name and its [type]

In the following subsections, we explain how to sample (Section 5.2) and mutate (Section 5.3) such an individual during the search.

Note: most of our templates are based on the semantics of HTTP. But, what if an API is not following such semantics, and for examples it has GET requests with side-effects? Most likely, in such cases we would see a decrease in performance compared with the default version of EvoMaster without our novel templates. However, one of the greatest benefits and strengths of evolutionary search is its adaptiveness. Test cases with lower fitness will have lower chances of reproducing. As we apply our templates only with a given probability, we still sample “regular” test cases. And, if those test cases will have higher fitness, they will reproduce more often and take over during the search. This could lead to a “slower” start, in which some search budget would be wasted in sampling tests with our templates. However, given enough search budget, those side-effects at the beginning of the search might become negligible.

5.2 Resource-Based Sampling

In this paper, we propose four methods to sample individuals as shown in Table 2. For each of the methods, we define an applicable precondition regarding exposed HTTP methods on resources in a SUT. In other words, the method can be applied to sample a test only if the precondition is satisfied.

One rationale behind the use of these methods is to distinguish related actions from independent actions (i.e., actions based on the independent template). By isolating actions with independent templates, we can reduce unnecessary invocations (i.e., HTTP calls) during the search. As the example in Fig. 4, the first action is to GET a warehouse by warehouseName. This is fully independent from the following two actions, as GET operations are not supposed to have side-effects. When applying a mutation on the parameter warehouseName of that action, it is highly possible that there is no improvement (e.g., covering new statements) achieved by the invocations of the remaining two actions. Besides, for testing two or more independent resources, it is better to have separate test cases (and thus, separate individuals). Shorter test cases are less costly to run, and easier to maintain. Therefore, for handling independent resources, we developed the S1iR strategy to sample one resource with one independent template in a test. Regarding handling of one resource, S1dR is defined to manipulate one resource with a possibly-dependent template in a test.

Another rationale is to explore dependency among resources. So we propose the S2dR strategy to sample the minimal dependent set by combining only two resources with possibly-dependent templates (e.g., the example in Fig. 6), and the SMdR for handling the possibility of complex, multi-resource dependencies. Note that when manipulating multiple resources in a test (i.e., S2dR and SMdR strategies), only an independent template with a non-parameter GET is allowed at the last position of the resources (e.g., GET /warehouses is allowed, but not GET /warehouses/{warehouseName}). As the non-parameter GET is often used to retrieve a collection of resources from the SUT, it may cover new statements due to new resources created by previous actions.

When a new individual is sampled with probability Pr (recall Algorithm 2), the individual is sampled with our resource-based sampling with probability Ps, or fully randomly with probability 1 − Ps. In the former case, one of the four methods in Table 2 is chosen, with a probability denoted as Pm, and the probabilities of the four methods are Pm(S1iR) + Pm(S1dR) + Pm(S2dR) + Pm(SMdR) = 1.

Note that it is important to still be able to sample tests at random with no structure with probability 1 − Ps. The templates we define in Table 1 should cover the most important cases, but likely not all. Which tests will then be most useful is left to the search to decide, based on the fitness function. Recall that in the evolutionary algorithms the most fit individuals have higher chances to reproduce.

The four methods enable us to sample tests with different considerations on resources. Since normally the resources involved vary from SUT to SUT, we designed five strategies to determine which method should be applied at the beginning of each sampling. For each applicable method, we set a probability Pm, which enables the selection process to be controlled by adjusting the appropriate selection probability. The five sample strategies are described as follows:

Equal-Probability: select methods at random with uniform probability, i.e., the probability for each applicable method is equal. It is calculated as \(P_{m} = \frac {1.0}{n_{m}}\), where nm is a number of applicable methods (i.e., the ones for which their preconditions are satisfied).

Action-Based: the probability for each applicable method is derived based on a number of independent or possible-dependent templates for all resources (this depends on which endpoints are available in the SUT, recall Table 1). It is calculated as:

where nat is the sum of the number of applicable templates for all resources, ndt is a sum of a number of possibly-dependent templates for all resources, and k is a configurable weight. Note that k(≥ 0) indicates a degree to prioritize the possibly-dependent templates. We set it to 1 in this implementation. For example, there exist 5 independent templates and 15 possibly-dependent templates in a SUT, with k = 1, \(P_{m}(S1iR) = \frac {15-10}{(15 + 10\times 1) }=0.2\), thus, a sum of the methods with possibly-dependent templates is 0.8. For the probability of each of the methods,

where (si,wi) ∈ S = {(si,wi)| si is an applicable method of S1dR, S2dR and SMdR, wi is a weight of the method, i = 1...ndm, and ndm is the number of applicable methods except S1iR}. The weight wi for the method can be decided in various ways, e.g., constant 1. In this implementation, considering that a test involved more resources might take more budget to execute, the weight is defined based on the number of resources sampled with the method, i.e., w(S1dR) = 3, w(S2dR) = 2, and w(SMdR) = 1.

Used-Budget-Based: the probability for each applicable method is adaptive to the used budget (i.e., time or number of fitness evaluations) during search. The strategy samples an individual with one resource with a high probability (i.e., 0.8) at the beginning of the sampling (i.e., the used time budget for sampling is less than 50%), and then at later stages of the search it turns to sample a test with multiple resource methods. This approach allows the search to explore test cases with one resource first, and only spend effort on multiple resources if there is still enough available budget to allow for that. The reasoning is that test cases with multiple resources are harder to develop and more costly to run. They will be considered after the simpler test cases have been tried, and only if they provide a fitness improvement over those simpler test cases.

Archive-Based: the probability for each applicable method is adaptively determined by its performance during the search. The performance is evaluated based on the number of times that the method has helped to improve the fitness values (i.e., improved times) during the search. It is calculated as:

where δ = 0.1, (si,ri) ∈ S = {(si,ri)|si is an applicable method, ri is a rank for the applicable methods that is computed based on improved times, i = 1...nm, and nm is a number of the applicable methods}.

ConArchive-Based (Controlled Archived Based): Distinguished from Archive-Based by a preparation phase. At the beginning of the sampling, the strategy samples an individual with one resource with a high probability. After a certain amount of search budget is used, the strategy starts to apply the same mechanism as Archive-Based. The strategy attempts to distinguish between improvements obtained by a combination of multiple resources from improvements obtained by different values on parameters of a resource. For instance, in Fig. 6, if a referred bar resource by actions at lines 2-3 is created by an action at line 1, the test could achieve an improvement due to the combination of the two resources (i.e., when the second resource depends on the first). But, during search, it would not be known whether the improvement is due to the actions on the first resource, actions on the second resource, or the combination of the two resources. If we first used some of the budget to sample the first resource and second resource separately in different tests, then later we may improve the chances of identifying whether the improvement is due to the combination (i.e., improve the chances to get the right improved times value for the strategies on multiple resources).

5.3 Resource-Based Mutation

When we sample a new individual during the search, we use our templates with some pre-defined structures. To improve the search, we need novel mutation operators that are aware of such structures. Therefore, we propose resource-based mutation that follows the same mechanism with MIO for mutating an individual: mutate values on parameters (i.e., the content of the HTTP calls, including headers, body payloads and path elements in the URIs), and mutate the structure of a test (i.e., adding or removing HTTP calls in the test).

Regarding value mutation, we apply the mutation on the parameters of the resources. The parameters of the resources are represented by the parameters of an action with longest path among the actions for the same resource. Once the value of any of the parameters is mutated, we update the other actions on the same resource with the same value. Considering a test shown in Fig. 6, in terms of /products/{productName} resource, parameters of an action at line 2 is selected to represent the resource. When a value of the parameter productName is mutated, e.g., from foo to ack, then the same parameter productName on the action at line 3 is also required to be updated with the same value, i.e., from foo to ack as shown in Example 7 in Fig. 7.

Examples of applying resource-based mutations on a test in Fig. 6

In addition, we propose five operators to mutate the structure of the individuals, for exploiting relationships among resources and different templates on resources: DELETE: delete a resource together with all its associated actions on that resource; SWAP: swap the position of two resources together with all their associated actions; ADD: add a new set of actions with a template for a new resource in the test; REPLACE: replace a set of actions constrained with a template on a resource with another set of actions constrained with a template on a new different resource; MODIFY: modify a set of actions on a resource with another template. For instance, a test applied with the MODIFY mutator is represented as Example 7 in Fig. 7.

6 Resource Dependency Heuristic Handling

In a RESTful web service, there typically exist some dependencies among the resources (recall Section 4). Then, a proper handling of resources according to their dependencies may help the generated tests to achieve a better coverage. In some cases, such dependencies might be partially identified by the resource path, i.e., hierarchical dependencies on the URIs, by just analyzing the OpenAPI/Swagger schemas. For example, the /products/{id} resource is hierarchically related to the resource /products. However, there might still exist some dependencies that are not exposed directly in the path elements of the URIs. For example, those could be HTTP query parameters or fields in body payload objects referencing IDs of other resources.

Therefore, we developed dependency heuristic handling to identify possible dependencies (Section 6.1). In addition, we enhance sampling (Section 6.2) and mutation (Section 6.3) by utilizing such identified dependencies among multiple resources.

6.1 Resource Dependency Detection

To identify dependencies among resources, we developed several solutions to derive them based on REST API Schema, Accessed SQL Tables and Fitness Feedback at runtime. Since the dependencies are heuristically inferred, each of the derived dependencies has an estimated probability (∈[0.0, 1.0]) for representing the confidence on the correctness of this inference. If a probability of a derived dependency is 1.0, then this indicates that we strongly consider this dependency to be correct. On the other hand, if the probability is 0.0, then this indicates that we strongly assume that there is no dependency between the two considered resources.

6.1.1 REST API Schema

Based on REST guidelines, a resource path and its parameters are typically designed with names of related resources. Thus, by identifying similar names, dependencies among resources might be derived directly based on the API Schema. As the snippet example of API schema shown in Fig. 1, there exists a dependency between products and warehouses, e.g., a product should always refer to an existing warehouse. In order to create a product, its creation method (e.g., POST) should be specified with the id/name of an existing warehouse. In this example, the dependent warehouse is identified by a query parameter in the POST /products/{productName}, named warehouse.

By detecting matched names among query parameters and path elements in the URIs, it is possible to identify potential dependencies among resources. EvoMaster requires API schemas specified with OpenAPI.7 Therefore, we identified possible components of the OpenAPI specification for inferring possible dependencies. The four components in the OpenAPI specification are: path, parameter (including defined object type of the parameter and attribute of the defined object type), operation description, and operation summary.

A path defines an endpoint, and it is composed of a sequence of tokens separated by / and {} symbols. The tokens between { and } are recognized as path parameters. For example, both /products/foo and /products/42 would match the same endpoint path /products/{productName}. Since there might exist multiple resources in a path to represent their hierarchical structure separated by /, to represent this resource, we take the last non-parameter token as a representative token for the resource. For instance, /products/{productName} can be decomposed into two tokens, products and productName, and the text products can be used to represent the resource. Given a representative token x for a resource A, then to check if another resource B has a dependency on A, we analyze if B has any reference to x in its schema definition (e.g., a query parameter name, or text description).

For parameter, defined object type and its attribute, we keep their names as tokens. Besides, we consider some commonly used words (e.g., id, name and value) relevant for deriving alternative possibilities for the identifying tokens. For example, an alternative token for warehouseName query parameter is simply warehouse, as the token ends with the word name (ignoring the case). When matching dependent resources, both alternative tokens are used.

Regarding operation description and operation summary, since they are free-text sentences, we employed Stanford Natural Language ParserFootnote 8 (SNLP) to analyze them for identifying noun s as tokens. In addition, with SNLP we may get base forms (i.e., lemmas) of tokens analyzed from parameters and path elements, e.g., products has product as its lemma. Therefore each of the tokens is designed with a lemma property that is used as an alternative for token matching.

For a resource, one representative token and/or more related tokens can be parsed with such pre-processing on the four components of its resource path and its available operations. To infer possible related resources, we match tokens of the resource with the representative tokens of other resources using the Trigram Algorithm (Martin et al. 1998) to calculate a degree of similarity between any token of the resource and other representative tokens. In our current implementation, if the degree of at least one token satisfies our specified requirement (i.e., > 0.6), we create a possible undirected dependency between the two resources. The probability of the derived dependency is initialized based on that degree.

6.1.2 Accessed SQL Tables

RESTful APIs are supposed to be stateless. This helps with horizontal scalability (e.g., a service can easily be replicated in several running instances on different processes, as there is no internal state to keep in sync), and to avoid issues with the restarting of the processes. This means that the state is usually handled externally, typically in a database, such as Postgres, MySQL and MongoDB. In these cases, the resources handled by the API are an abstraction of the data in such databases.

EvoMaster does analyze all the interactions of the SUT with SQL databases (Arcuri and Galeotti 2019; 2020) (for NoSQL ones such as MongoDB, it is work-in-progess), as it computes heuristics based on the results of such operations (e.g., to create the right input data for which SELECT operations return non-empty sets by satisfying their WHERE clauses). By exploiting this current feature in EvoMaster, we can record the information about all accessed tables in the databases for each executed HTTP call made in the tests.

Theoretically, if different API endpoints operate on the same tables, then there exist some relationships among them. During the search, we keep a global track in all test cases of all SQL tables accessed by actions on resources, at each single fitness evaluation. Thus, after each evaluation, dependencies among resources can be newly derived or updated if actions on different resources accessed the same tables in the database. Note that, if a dependency is newly derived (i.e., not currently existing in the graph), the added dependency in the graph is undirected. The probability of the dependency is then initialized/updated with the maximum 1.0.

6.1.3 Fitness Feedback

Dependency handling is developed for achieving a proper combination of dependent resources in a test. Besides heuristics on name matchings and database accesses, we also consider the feedback on the test fitness (e.g., line coverage), by analyzing its variations when different resources are manipulated. With resource-based structure mutation (recall Section 5.3), the combination of resources can be manipulated. Thus, by analyzing changes of fitness after a mutation operation, the dependency of resources in the test can be identified.

Assume that a test T = (R1,...,Rn) is a sequence of Rk (1 ≤ k ≤ n), and each Rk represents a sequence of actions (i.e., HTTP calls following one of the templates in Table 1) on one resource. Table 3 represents a set of heuristics to identify dependencies based on different changes of fitness for each of resource-based structure mutation operators. For each of the operators:

ADD a resource Rx at index i: fitness of all resources after i index is required to be examined. For each resource Rm (i ≤ m ≤ n), if there exists any change, a dependency (i.e., Rm depends on Rx) can be identified, and its probability will be updated with 1.0. Note that the update of the probability with update(\(DD_{R_m \rightarrow R_x}\), p) is to take a larger value between the probability and p. For example (also represented in add row and Example column in Table 3), a test is a sequence of resource handling actions denoted as (A,B,C,D,E), e.g., A can be regarded as a sequence of actions on the A resource. With add mutation operator, a resource handling action on the resource F is added to the test at index 2, then the mutated individual becomes (A,B,F,C,D,E). For instance, if the fitness values contributed by actions in the C resource are changed in the mutated individual, i.e., with the newly involved actions on the F resource. This might imply that there exists a dependency, i.e., \(C\rightarrow F\).

DELETE/MODIFY a resource Ri at index i: fitness of all resources after i index is required to be examined. For each of the resource Rm (i < m ≤ n), if there exist any change, a dependency (i.e., Rm depends on Ri) can be identified with a probability of 1.0.

REPLACE a resource Ri with Rx at index i: fitness of all resources after i index is required to be examined. For each of the resource Rm (i < m ≤ n), if the fitness becomes better, it means that Rm depends on Rx. If the fitness becomes worse, it means that Rm depends on Ri.

SWAP a resource Ri and a resource Rj (1 ≤ i < j ≤ n): first, fitness of Rj is required to be examined, if it is changed, it means that Rj might depend on any resource between i and j (j is excluded). In addition, the fitness of all resources between i and j is required to be examined. For each of the resource Rm (i < m < n), if the fitness becomes better, it means that Rm depends on Rj. If the fitness becomes worse, it means that Rm depends on Ri. Moreover, fitness of Ri is required to be examined, if it is changed, it means that Ri might depend on any resource between i (i is excluded) and j.

6.1.4 Summarize the Resource Dependency Detection

If the pre-match processing based on REST API Schema (Section 6.1.1) is enabled, the search will start with a set of undirected derived dependencies. Over the course of the search, the graph of dependencies will be evolved by adding new derived dependencies, updating directions of undirected derived dependencies, and updating probabilities of the derived dependencies. After each fitness evaluation, by further identifying dependencies based on Accessed SQL Tables (Section 6.1.2), the graph will be expanded and the probabilities of the dependencies might be updated to 1.0. Besides, by analyzing the changes in fitness scores (Section 6.1.3), newly directed dependencies can be further detected and the direction of the undirected derived dependencies might be updated.

6.2 Smart Sampling with Dependency

Information on derived dependencies can be exploited by sampling methods involving multiple resources, i.e., S2dR and SMdR, as smart sampling with dependencies. Enabling such smart sampling with dependencies is controlled with a probability, i.e., Pd, as described in Section 4. When enabled, for S2dR, a test is sampled with two resources which are linked with a derived dependency (if any exist). If the dependency is undirected, the order of handling the two resources will be determined randomly. Otherwise, the test starts with handling the dependent resource first followed by the other. SMdR does sample a test with more than two resources, and, in the test, there exists at least one derived dependency if the set of dependencies is not empty (i.e., there exists at least one dependency whose probability is more than 0.0). In case of no derived dependencies, then SMdR simply chooses resources at random.

As discussed in Section 5.1, values of parameters of actions within a template will be bounded for handling one specific resource. For example, all the path variables in a series of actions on a resource will have the same values (to guarantee to work on the same resource), and a mutation operation that modifies any of them will propagate its changes to all the other actions on such resource. To make a dependent resource available to the following related resources, it is also required to bind values of parameters across resources according to their dependencies.

Recall the example in Fig. 6, a test is sampled by S2dR that is composed of an action (at line 1) for handling a warehouse resource and two actions (at lines 2-3) for handling a product resource, and parameters of the actions at lines 2-3 are bounded for handing same resource, i.e., a foo product. If the dependency (i.e., product depends on warehouse) is derived, when processing actions on product, we also need to consider a bar warehouse in order to make a foo product related to the barwarehouse, i.e., the warehouse parameter of POST /products/{productName} is bounded with the bar warehouse performed by the action at line 1 as shown in Fig. 8.

6.3 Smart Mutation with Dependency

As discussed in Section 5.3, one aim of resource-based structure mutation is to exploit relationships among resources. Thus, we enhance the mutation with a consideration of derived dependencies to boost its performance. Regarding each of the mutation operators:

DELETE. Delete a resource that is marked as unrelated to any other resource in the test.

SWAP. We propose three strategies to swap two resources with dependency relations: (1) adjust the order of a resource and its likely dependent resource, i.e., if there exist a derived dependency with a high probability (i.e., ≥ 0.6) in the test, but the order is incorrect according to the dependency relation, then we apply the strategy to swap their order in the test; (2) attempt to adjust the order of a resource and its possibly dependent resource, i.e., if there exist a derived dependency with a modest probability (i.e., < 0.6 but still > 0.0) in the test, but the order is incorrect according the dependency, then we applied the strategy to fix their order; (3) explore new order of two resources. Similarly to adjust and attempt but apply on two resources with no dependency information, to evaluate a possible new dependency. Based on a set of derived dependencies, the applicable strategies are selected at random. If none of the strategies can be applied, we select two positions randomly as SWAP without dependency handling.

ADD. Add a resource that is related to one of the resources in the test based on the inferred dependencies. If none of the resources is related to any other resource, add a new resource as ADD without dependency handling.

REPLACE. Replace is handled as a combination of DELETE and ADD with a fixed position, i.e., remove an unrelated resource at a specific position, and then add a new related resource at that same position.

MODIFY. No further treatment with a consideration of the dependency graph.

Note that, if a resource is mutated with the above operators based on derived dependencies, any other resource bounded to it will need to be updated (e.g., query parameters and fields in body payloads) as well to maintain the dependency.

7 Case Studies

7.1 Open Source Case Studies

In our empirical study, we employed seven RESTful APIs (available on GitHub4) which we used in our previous work (Arcuri 2018b; 2019). These APIs consist of three artificial and four real-world web services. Table 4 shows detailed descriptive statistics on these web services, including their number of Java/Kotlin class files, lines of code, number of endpoints (where with the term endpoint we mean the combination of resource paths and HTTP methods applicable on them, ignoring any query parameter), and number of accessible resources (ignoring HTTP verbs) with the number of independent resources among them.

Regarding these services, REST Numerical Case Study (rest-ncs) and REST String Case Study (rest-scs) are artificial APIs based on functions that were previously used in unit testing for experiments on solving numerical (Arcuri and Briand 2011) and string (Alshraideh and Bottaci 2006) problems. These APIs simply put each of these stateless functions behind a different GET endpoint. rest-news is also an artificial API, which was developed for educational purposes on enterprise development in a university course of one of the authors.Footnote 9 The APIs features-service, proxyprint, scout-api and catwatch are real RESTful web service projects, which were selected by analyzing projects on the popular open-source repository GitHub. More details of the selection can be found in Arcuri (2018b) and Arcuri (2019).

Note: in this article we use the term “real” (for 4 SUTs downloaded from GitHub) just as opposed to, and differentiate from, the terms “artificial” (used for 3 SUTs) and “synthetic” (used for 12 SUTs). We do not claim that these systems are widely used in industry, or that they are representative of industrial systems in general. Furthermore, in this article we use the term “artificial” to represent the SUTs that were written by hand just for experiment purposes (e.g., based on software used in the evaluation of unit test generation techniques) or didactic reasons, in contrast to the “synthetic” SUTs that were automatically generated with a tool. But other terminologies could have been used to distinguish those groups, like Group A, B and C.

7.2 Automatically Generated Synthetic RESTful APIs

To achieve sound results in an empirical study, a large and varied selection of SUTs is required (Fraser and Arcuri 2012). However, system testing is very time consuming. Furthermore, although open-source repositories such as GitHub do host plenty of software projects (especially libraries), enterprise applications are not so common among them. This poses challenges in carrying out empirical studies in this problem domain.

To address this problem, we integrated our empirical study with a set of synthetically generated APIs, with different characteristics when it comes to intra-resource dependencies. This also enables us to clearly identify how our techniques perform, i.e., pinpoint the conditions in which they perform well or struggle. However, whether real APIs would have the same characteristics remains to be seen. This is why it is important to still do empirical studies on real APIs and not just synthetic ones. In other words, the experiments on these synthetic APIs are only done to provide more insight, and possibly explain differences in performance among the real APIs.

To experiment the proposed techniques with various RESTful web applications in terms of resource and their dependency, we implemented a synthetic REST API generator (Fig. 9) for automatically producing such applications. In Fig. 9, we propose a model (i.e., Synthetic REST API Graph) that is composed of elements (denoted as white boxes) for defining the application with respect to resources and their dependencies. With such a model, a RESTful web service can be automatically generated with elements (denoted as grey boxes). Note that the elements with grey boxes are for representing a mapping between the model and an instantiation that could be carried out with any available tool/framework. In our work, we used SpringBootFootnote 10 JPAFootnote 11 and automated inference of OpenAPI schemas7 from source code.Footnote 12

In our implementation, a Synthetic RESTful API is defined with a set of resources (ResourceClass), which can be connected with dependencies (Dependency).

ResourceClass represents a type of the resource, and its instances can be considered as an actual resource. For example, GET /products/foo can be regarded as “to retrieve an instance of product, and the identifying name of the instance should be foo”. Based on the specified ResourceClass, we generate an Entity (for resource persistence and access in the database) and its corresponding Data Transfer Object (DTO) (when the resource needs to marshaled into a JSON object representation for resource transfer through a network).

Dependency is designed to describe “one or more resources depend on one or more of the other resources”. The dependencies among resources are varied. To generate RESTful APIs with various dependencies, we designed three kinds of dependency, i.e., Composition, ExistenceDependency, and PropertyDependency. These kinds are also designed with different level of complexity in dependency handling, i.e., Composition is the easiest one, and PropertyDependency is the most challenging one. Definitions of each of these kinds are presented in Table 5, together with the corresponding constraints on resources and their dependent resources.

Moreover, we introduced RestMethod for specifying Endpoint generations for a RESTful API. The RestMethod is associated with a RestMethodKind, which is composed of all the different types of HTTP methods (e.g., GET and POST), for specifying which HTTP methods should be provided to access the related resources. As dependencies among resources might be represented in the resource path, we proposed two strategies to generate resource paths for each Endpoint, i.e., showing dependency and hidden dependency. Table 6 presents our strategies to generate endpoints based on different HTTP methods following REST guidelines.

In our context, in terms of an endpoint, a successful status code (i.e., 2xx) should be given only if all related dependency constraints are satisfied and an action (according to specified HTTP method) is performed properly. If any constraint is not satisfied, execution of the endpoint will exit and return a 4xx status code indicating that there are user errors in this HTTP request. The order and type of the dependency constraint checking is based on the complexity in the dependency handling setting, i.e., from easy to challenging. Thus, a test with proper data to handle dependencies can cover more code (as all those constraint checks are if statements in sequence, checking one condition at a time). Note that, if all of the constraints are satisfied but the action is still performed improperly (e.g., an exception is thrown for some reason), then the execution will exit and a server error status code (i.e., 500) will be returned (this is handled by default in Spring).

To generate the applications with various resource-dependency settings, we designed three resource graph settings, i.e., Dense-Central, Medium-Deep and Sparse-Straight, as shown in Fig. 10 (the names of the resources are generated at random). Note that all of the three settings consist of 5 ResourceClass es with 6 methods. Thus, there are 30 endpoints for all of the settings.

Regarding Dense-Central, there are 4 dependencies connecting all 5 resource classes through UEear. Regarding Medium-Deep, 3 dependencies connect 4 out of the 5 resource classes, but there exist a deep chained dependency from VIL0S to U1rA1 through HErqD and XpOCt. Regarding Sparse-Straight, 2 out of the 5 resources classes are connected with 1 dependency. Note that in Fig. 10<is composed of > can only be specified with DR1, but <depends on> can be specified with either DR2 or DR3, which result into easy or complex dependency constraints. To control for the difficulty in solving dependency constraints, we, therefore, generated APIs with different choices (i.e., DR2 or DR3) on <depends on> in the three settings. Moreover, we configured resource paths generation with showing or hidden dependencies in paths, since this configuration might impact the handling of resources. Thus, in total, 12 (3 resource-dependency settings × 2 kinds of dependency constraints × 2 strategies of resource path generation) synthetic RESTful APIs are generated for our empirical study.

Dependencies of Dense, Medium and Sparse settings. Dense-Central setting is defined with 4 one-to-one dependencies from UEear that forms 1 two-to-two dependency relationship, i.e., OEXmz and W27dt depend on B8v25 and IUJWo. Medium-Deep setting is defined with 3 connected one-to-one dependency relationships. The deepest derived dependency is from V IL0S to U1rA1 through HErqD and XpOCt. Sparse-Straight setting is defined with 1 one-to-one dependency relationship

Snippet examples of implementations of POST on UEear (see Dense-Central in Fig. 10) with different configurations

An example is shown in Fig. 11, illustrating different endpoint generations according to the different configurations. The endpoint in Fig. 11 is for the POST action on UEear resource shown in Dense-Central setting of Fig. 10. In the setting, UEear owns OEXmz and W27dt, and has two dependent resources, i.e., B8v25 and IUJwo. Snippets 1-2 present different paths regarding showing and hidden dependency in paths, respectively. In Snippet 1, two dependent resources are shown in the path, i.e., /iUJWo s/iUJWoId/b8v25 s/b8v25Id/uEears, while not in Snippet 2, i.e., /uEears. Regarding an implementation of POST, when creating an instance of UEear, it is the first to check whether the instance exists, denoted as R check (see line 2 in Snippet 3). Once passing the R check, create owned resources (i.e., an instance of OEXmz and an instance of W27dt) if the resources do not belong to any others (DR1 checks), and the code for processing OEXmx is at lines 6-13 in Snippet 3.

As discussed,<depends on> can be applied with DR2 or DR3. If DR2 is applied, following by the creation of owned resources, we check the existence of dependent resources, as shown in Snippet 4, i.e., line 16 is for B8v25 and line 20 is for IUJwo. If DR3 is applied, there are not only the same checks as in Snippet 4, but also additional checks on property condition in Snippet 5. In this implementation, the property condition is designed based on value properties of the resource (denoted as RC) and its dependent resources (denoted as DRCi) as

not (median(SRC) < median(SDRC) andaverage(SRC) < average(SDRC))

where if RC is composed of more than one other resources (denoted as ORCk), SRC = {ORCk|k > 1}, otherwise SRC = {RC}; SDRC = {DRCi|i > 0}; median(S) is a median of values on value property of a set of resources S; average(S) is an average of values on value property of a set of resources S. For instance, the additional check of UEear is shown at lines 27-28 in Snippet 5. Last, if all checks are passed, we save the created instance of UEear in the database and return 201 status code (Snippet 6).

Note that our synthetic REST API generator is not limited for evaluation just in this work. As we released it as open-source, it could be also useful to setup experiments for studying other RESTful APIs-related approaches. With our generator, a REST API can be automatically generated by configuring a set of parameters (e.g., a number of resource nodes, a number of dependencies, applied HTTP methods), or a specific resource dependency graph. Those configurable parameters capture different basic characteristics of a REST API, and this flexible configuration would be helpful to customize different synthetic case studies (e.g., the number of resources might be used to study the scalability of an approach). In addition, as the generator is open-source, that presents a possibility to be further customized by different researchers, e.g., extend PropertyDependency with different implementations. Moreover, the generator is designed by following the REST API guidelines, and the generated REST APIs come with a schema using OpenAPI/Swagger. Thus, the generator might offer an opportunity to assess OpenAPI-based approaches with synthetic case studies. However, currently the generator only supports the creation of REST APIs with SpringBoot and JPA. This may limit the study regarding implementations with different frameworks/libraries.

8 Empirical Study

In this paper, we have carried out an empirical study aimed at answering the following research questions.

- RQ1::

-