Abstract

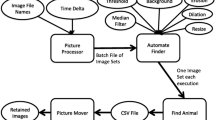

Remote cameras are an increasingly important tool for ecological research. While remote camera traps collect field data with minimal human attention, the images they collect require post-processing and characterization before they can be ecologically and statistically analyzed, which demands substantial time and money from researchers. The need for post-processing is due, in part, to a high incidence of non-target images. We developed a stand-alone, semi-automated computer program to aid in image processing, categorization, and data reduction by employing background subtraction and histogram rules. Unlike previous work that uses video as input, our program uses still camera trap images. The program was developed for an ungulate fence crossing project and tested against an image dataset that had previously been processed by a human operator. Our program placed sequences of images into categories representing the confidence that a particular sequence contains a fence crossing event. This reduced the number of images requiring further characterization by a human operator by 54.8% while retaining 72.6% of the known fence crossing events. The program can help researchers who use remote camera data reduce the time and cost of image post-processing and characterization. Further, we discuss how this procedure might be generalized to situations not specifically related to animal use of linear features.
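
To illustrate the general idea of background subtraction combined with a histogram rule on still images, the following is a minimal Python sketch, not the program described here. It assumes greyscale frames held as NumPy arrays, a per-pixel median over the burst as the background model, and illustrative threshold values (`diff_threshold`, `min_fraction`). The function names are hypothetical, and the mapping from the returned count to the program's confidence categories is not reproduced.

```python
import numpy as np


def changed_fraction(frame, background, diff_threshold=30):
    """Share of pixels whose grey level departs from the background.

    Histogram rule (assumed for illustration): build the histogram of
    absolute differences and sum the bins at or above diff_threshold.
    """
    diff = np.abs(frame.astype(np.float64) - background)
    hist, _ = np.histogram(diff, bins=256, range=(0, 256))
    return hist[diff_threshold:].sum() / diff.size


def frames_with_change(frames, diff_threshold=30, min_fraction=0.01):
    """Count the frames in one burst that differ from the median background."""
    background = np.median(np.stack(frames), axis=0)
    return int(sum(
        changed_fraction(f, background, diff_threshold) >= min_fraction
        for f in frames
    ))


# Synthetic burst of three 20x20 frames; a bright blob appears only in the second.
empty = np.full((20, 20), 100, dtype=np.uint8)
with_animal = empty.copy()
with_animal[5:10, 5:10] = 200
burst = [empty, with_animal, empty]
n_changed = frames_with_change(burst)
```

A burst in which only one frame departs from the background would be a candidate for a "seen in a single image" class, while a burst with no flagged frames could be set aside as likely non-target. Where those boundaries sit is a tuning decision that depends on the site and camera.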

Acknowledgments

We thank Alberta Parks for funding this project and for helping with field setup and data collection, and the King’s University and the King’s Centre for Visualization in Science for funding and support. This work comes, in part, from the undergraduate research projects of K. Visser and I. MacLeod. We also thank Dr. Jose Alexander and an anonymous reviewer for their comments.

Appendices

Appendix I

Software availability: http://cs.kingsu.ca/mjanzen/CameraTrapSoftware.html.

Example instructions for running the program on a Windows computer are also available from this website.

Appendix II

Additional sequences of images show a false negative event (Fig. 3) and a false positive event (Fig. 4). In Fig. 3, the camera trap takes its first picture after the moose has already entered the field of view, and the moose occupies overlapping positions across the images, so the overlapping region is treated as part of the background image. In Fig. 4, movement of the fence or camera causes an image change to be detected, which is retained in one of the three images. This causes the program to classify the image set into category two, implying a fast-moving animal detected in only one of the three images.
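
The false negative mechanism described for Fig. 3 can be reproduced on synthetic data. The sketch below assumes, purely for illustration, that the background is a per-pixel median of the three images (the source does not state how the background image is built), and it uses hypothetical helper names and an arbitrary threshold. When an animal overlaps its own earlier position across the burst, the median absorbs it into the background.

```python
import numpy as np


def median_background(frames):
    """Per-pixel median of a burst (an assumed background model)."""
    return np.median(np.stack(frames), axis=0)


def foreground_mask(frame, background, diff_threshold=30):
    """Boolean mask of pixels that differ from the background."""
    return np.abs(frame.astype(np.float64) - background) > diff_threshold


def make_frame(col_start, width=8, size=20):
    """Grey scene (value 100) with a bright 'animal' block (value 200)."""
    frame = np.full((size, size), 100, dtype=np.uint8)
    frame[5:15, col_start:col_start + width] = 200
    return frame


# The animal is already in view in the first image and moves two columns
# per image, so consecutive positions overlap heavily.
overlapping = [make_frame(4), make_frame(6), make_frame(8)]
background = median_background(overlapping)
detected = [int(foreground_mask(f, background).sum()) for f in overlapping]
animal_area = 10 * 8  # true size of the block in pixels
```

In this toy case the middle image is indistinguishable from the background, and the other two each show 40 changed pixels against a true animal area of 80, so a rule based on the amount of change can miss or underweight the event.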

Cite this article

Janzen, M., Visser, K., Visscher, D. et al. Semi-automated camera trap image processing for the detection of ungulate fence crossing events. Environ Monit Assess 189, 527 (2017). https://doi.org/10.1007/s10661-017-6206-x

DOI: https://doi.org/10.1007/s10661-017-6206-x