Abstract

This paper studies the efficient definition of product defects for AI systems with autonomous capabilities. It argues that defining defects in product liability law is central to distributing responsibility between producers and users. The paper proposes aligning the standard for defect with the relative control over and awareness of product risk possessed by the producer and the user. AI systems disrupt the traditional balance of control and risk awareness between users and producers. The paper provides suggestions for defining AI product defects in a way that promotes an efficient allocation of liability in AI-related accidents. It assesses whether the recent EU policy proposal on product liability aligns with this approach.



1 Introduction

The adoption of artificial intelligence (AI)Footnote 1 presents new challenges for determining liability rules. AI systems with autonomous capabilities raise the question of where a producer’s responsibility ends and that of an operatorFootnote 2 begins. For example, in cases involving accidents with autonomous cars, courts must decide whether the producer should be held liable for a flawed design or whether the user’s inattentiveness while driving was the root cause.Footnote 3 Similarly, in healthcare, it may be unclear whether physicians should be held accountable for following inaccurate or misleading recommendations from AI systems when diagnosing and treating patients.Footnote 4

In related work, the author has explored the issue of allocating liability for AI between producers and users (Buiten et al., 2023). This paper focuses on a specific aspect of this liability allocation, namely, the concept of defect. Product liability law in Europe and the U.S. holds producers responsible for defective products.

This paper contributes to the existing literature in two ways. First, it emphasises the role of the definition of defect in assigning responsibility for product harm between producers and users. The concept of defect has received little attention in the law and economics literature on product liability, which typically compares strict liability and negligence rules. Depending on how it is interpreted, the defect requirement places product liability on a middle ground between strict liability and negligence.Footnote 5 Consequently, the definition of defect has significant implications for the extent of product liability. If courts readily find a defect, product liability tends towards strict liability. Conversely, if proving that a product is defective is difficult, product liability resembles a negligence rule.Footnote 6 This paper suggests that distinguishing the criteria for determining a defect based on the source of the defect can help align product liability with the control and risk awareness of both producers and users.

Second, the paper illustrates how AI systems disrupt the traditional balance of control and risk awareness between users and producers. Users may have less control over autonomous AI systems than over traditional products, and they may misperceive AI risk. Courts are tasked with deciding the implications of this change in control and risk perception for product liability. Courts need to determine whether an AI system should automatically be deemed defective whenever it causes an accident or whether an acceptable failure rate should be tolerated. Their interpretation of product defects will help establish whether the standard of care should be more rigorous for autonomous AI systems than for human-operated products. This raises the question of shifting liability from users to producers despite producers’ limited control over AI systems once they are on the market.Footnote 7 Courts must also address users’ monitoring responsibilities and producers’ role in encouraging user oversight. This involves reviewing the design of human–machine interaction, testing, user-friendly interfaces, product warnings, and transparent marketing. Defining a defect is central to addressing these issues and establishing an effective liability framework for AI.

The paper provides insights for the policy debate. The European Commission has proposed a review of the EU Product Liability Directive (“PLD Proposal”).Footnote 8 This proposal follows the Commission’s proposal for the AI ActFootnote 9 and is accompanied by a proposal for an AI Liability Directive.Footnote 10 A central motivation of the PLD Proposal is to clarify product liability rules so that they can be applied to modern technologies.Footnote 11 The PLD Proposal updates the concept of defect. This paper illustrates that the interpretation of this concept is vital in allocating responsibility for AI between producers and users.

Related literature has applied the economics of tort law to robotics, especially autonomous vehicles (De Chiara et al., 2021; Fagnant & Kockelman, 2015; Guerra et al., 2022; Roe, 2019; Shavell, 2020; Talley, 2019). While these papers have highlighted the drawbacks of strict liability and negligence standards for controlling robot accidents, they have not explored the concept of defect in product liability law. The paper also relates to the legal literature on product liability for AI. Some earlier contributions have proposed basing AI defects on the average performance of the AI system rather than on the failures of the individual product (Janal, 2020, 192; Geistfeld, 2017; Wagner, 2019). Whereas these contributions highlight the possibility of defining defect as average performance, this paper assesses whether such a definition would lead to an efficient liability standard. It also explores whether this interpretation is possible under current liability rules and the PLD Proposal.

The paper proceeds as follows. Section 2 discusses the economic justification for product liability and the considerations for determining the optimal product liability standard. Section 3 highlights the significance of the notion of a defect in determining the liability standard in product liability law and argues that the optimal liability standard depends on the source of the defect. Section 4 applies this framework to AI, discussing various ways in which AI can be defective and the specific characteristics of AI that are relevant here. Section 5 examines whether the PLD Proposal addresses the difficulties highlighted in Sect. 4. Section 6 concludes.

2 Economics of product liability

This section begins by examining the overarching economic justification for implementing product liability in cases involving harm to third parties and customers. Subsequently, it explores various potential liability standards, focusing on the central role played by the concept of defect.

2.1 Externalities and information problems

The liability of manufacturers for damage caused by their products compensates harmed consumers. From an economic perspective, product liability is a means of controlling accident risks by incentivising adequate precautions: liability induces manufacturers to improve product safety. Under the least-cost-avoider principle, liability for harm caused by AI should be allocated to the party that can mitigate risk most efficiently through its actions. Product liability covers harm not only to third parties or bystanders but also to customers who bought the product. Product liability for harm to third parties is warranted because such harm constitutes a negative externality. Extending this liability to customers is justified by customers’ lack of information about product risks.

The case for imposing tort liability on producers for harm to noncontractual victims rests on a classic negative externality. Product liability encourages manufacturers to take optimal precautions, minimising the sum of expected harm and precaution costs (Shavell, 1987).Footnote 12 In short, liability causes the prices of products to reflect their risks (Polinsky & Shavell, 2010, 1440). Both strict liability and negligence induce producers to choose the optimal precautions when consumers are perfectly informed about care and perceive risks accurately (Friehe et al., 2022, 55).Footnote 13
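The precaution logic can be illustrated with a minimal numerical sketch. It assumes a hypothetical accident probability p(c) = 0.2/(1 + c) that falls with care spending c and a loss of 100 per accident; these figures are illustrative and do not come from the cited literature. A producer that is liable for third-party harm minimises care cost plus expected harm, which is exactly the social objective, whereas a producer that bears no liability spends nothing on care.

```python
# Minimal sketch: a producer's choice of precaution with and without liability.
# The accident-probability function and all numbers are hypothetical.

HARM = 100.0       # loss per accident (hypothetical)
BASE_PROB = 0.2    # accident probability at zero care (hypothetical)
CARE_GRID = [i / 100 for i in range(1001)]  # care spending from 0.00 to 10.00


def accident_prob(care: float) -> float:
    """Hypothetical accident probability that falls as care spending rises."""
    return BASE_PROB / (1.0 + care)


def social_cost(care: float) -> float:
    """Cost of precaution plus expected harm: what a social planner minimises."""
    return care + accident_prob(care) * HARM


def producer_cost(care: float, liable: bool) -> float:
    """Producer's private cost; expected harm counts only if the producer is liable."""
    expected_liability = accident_prob(care) * HARM if liable else 0.0
    return care + expected_liability


def chosen_care(liable: bool) -> float:
    """Care level on the grid that minimises the producer's private cost."""
    return min(CARE_GRID, key=lambda c: producer_cost(c, liable))


if __name__ == "__main__":
    print("socially optimal care:", min(CARE_GRID, key=social_cost))
    print("care chosen when liable:", chosen_care(liable=True))
    print("care chosen when not liable:", chosen_care(liable=False))
```

Under these assumptions, the liable producer chooses care close to sqrt(20) - 1 (about 3.47), the level at which one more unit of care reduces expected harm by exactly one unit.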

Extending tort liability to harms experienced by customers is not straightforward, as market forces may already incentivise producers to create safe products (Choi & Spier, 2014). Since product liability involves costly litigation, it is essential to consider why it should extend to customers. The justification for holding producers liable for customer harm is that customers often have imperfect information about product risk. Customers’ expectations of accident losses depend on their knowledge of risk (Shavell, 2004, 213). When product risk is perfectly observable to consumers, they factor accident costs into their purchasing decisions, considering both the product’s market price and expected accident losses (Shavell, 2004, 207). Expected harm then directly raises the full price consumers perceive, reducing demand for risky products regardless of the liability rule applied to producers, whether no liability, negligence, or strict liability (Shavell, 2004, 212).Footnote 14 As riskier products have a higher perceived price, competition compels producers to take optimal care to avoid losing sales (Polinsky & Shavell, 2010, 1441). Therefore, when consumers are well-informed, producers are encouraged to exercise optimal care even without liability (Shavell, 1980).

The rationale for extending product liability to customers is that customers frequently lack adequate information about the risks associated with products. When customers cannot accurately assess product risks, their purchasing decisions fail to reflect the actual level of risk. Absent liability, manufacturers then lack incentives to exercise optimal care. Even if producers try to enhance product safety, these efforts are not rewarded with increased sales because consumers fail to recognise the lower risk when purchasing. Instead, consumers favour lower-priced products, neglecting the potential losses from accidents. Allowing producers to exclude product liability through contractual clauses would create an adverse selection problem. Producers are typically better informed than consumers about the costs of alternative safety measures and the safety of competing products (Landes & Posner, 1985, 544). Because the costs of acquiring information are high relative to the low probability of an adverse event, consumers would not find it worthwhile to examine liability disclaimers thoroughly (Landes & Posner, 1985, 545). Consequently, there would be no competition over more favourable contractual terms, and manufacturers would find it advantageous to include disclaimers uniformly. In addition to imperfect information, imperfect competition and consumer biasesFootnote 15 undermine precautions in the absence of liability.
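The contrast between informed and uninformed consumers can be sketched in the same way, with no liability in either case. The sketch assumes that rival producers offer different care levels, that competition drives each price down to the producer’s precaution cost (production cost normalised to zero), and that uninformed consumers hold a fixed, hypothetical belief of a 10% accident probability whatever care was actually taken. Consumers buy the product with the lowest perceived full price.

```python
# Minimal sketch: which care level wins the market under no liability,
# depending on whether consumers observe product risk. Numbers are hypothetical.

HARM = 100.0
BASE_PROB = 0.2
BELIEVED_PROB = 0.1  # fixed risk belief of uninformed consumers (hypothetical)


def accident_prob(care: float) -> float:
    """Hypothetical accident probability that falls as care spending rises."""
    return BASE_PROB / (1.0 + care)


def full_price(care: float, informed: bool) -> float:
    """Market price plus the expected accident loss the consumer perceives."""
    price = care  # competitive price covers only the precaution cost
    perceived_prob = accident_prob(care) if informed else BELIEVED_PROB
    return price + perceived_prob * HARM


def winning_offer(care_levels: list[float], informed: bool) -> float:
    """Consumers buy the product with the lowest perceived full price."""
    return min(care_levels, key=lambda c: full_price(c, informed))


if __name__ == "__main__":
    offers = [0.0, 1.0, 3.47, 6.0]  # care levels chosen by rival producers
    print("informed consumers buy care level:", winning_offer(offers, informed=True))
    print("uninformed consumers buy care level:", winning_offer(offers, informed=False))
```

Informed consumers reward the producer that takes the cost-minimising level of care; uninformed consumers reward the cheapest and least careful producer, which is the incentive problem product liability is meant to address.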

Consumers’ knowledge of risk varies with the type of product and customer. Consumers may be able to judge accurately the risk of everyday items, such as a hammer or scissors.Footnote 16 However, they may have difficulty assessing the risks of complex products such as cars, drugs, or machines (Shavell, 2004, 215). AI-based products are likely to belong to the latter category. Some customers will be more sophisticated than others in their risk assessment. For instance, repeat purchasers may know the risks accurately, while first-time customers may not. With the introduction of AI-based products, many customers will be first-time customers. Thus, customers’ knowledge of the risks of AI-based products will likely be limited.Footnote 17

2.2 Comparing liability standards

Producers can be held liable under a strict liability standard or a negligence rule. A strict liability rule holds producers responsible for any harm caused by their products, regardless of fault. A negligence rule holds producers accountable only if they are at fault for the harm caused. The traditional law and economics analysis of product liability rules, assuming well-informed and risk-neutral consumers, has found that no liability, strict liability, and negligence are all equally effective in achieving the socially optimal level of care (Landes & Posner, 1985; Shavell, 1980). This equivalence may break down once we account for factors such as who suffers the harm, how the harm relates to product use, the activity levels of producers and customers, and the costs of litigation.

Most economic literature considers harm either to third parties or to consumers. Friehe et al. (2022) find that considering harm to third parties and consumers simultaneously matters for the relative performance of liability rules. The driving force in their model is that consumers take the marginal expected harm per unit of output into account in their purchasing decisions only to the extent that it affects them, not third parties. If consumers are not perfectly informed and third-party harm is sufficiently substantial relative to consumer harm, negligence becomes the preferred liability rule (Friehe et al., 2022). The reason is that the producer’s output is too small under strict liability. Daughety and Reinganum (2014) also consider a setting where harm depends on product use (cumulative harm), finding that strict liability outperforms a negligence rule if only the consumer suffers harm.

These analyses do not yield universal conclusions but highlight factors that influence the choice of the preferable liability rule.Footnote 18 These include the level of care in production and use (2.2.1), the amount sold and used (2.2.2), and courts’ information costs (2.2.3).

Building on the least-cost-avoider principle, this paper argues that the definition of defect should align with the level of control over product risk that producers and users have. It further argues that the relative influence of consumers and producers on the risk of harm varies with the origin and nature of the defect. Therefore, an efficient product liability regime should consider the source of the defect and differentiate the applicable liability standard accordingly. By categorising defects according to the degree of control held by producers and users, the liability standard can be matched to their respective levels of control.

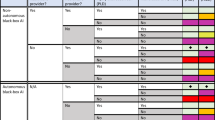

Table 1 summarises various defect sources and the corresponding level of control available to producers and users, and it recommends the appropriate liability standard for each defect type. The table classifies defects as either manufacturing- or design-related while also considering factors such as inherent product risk, the immediacy of risk recognition (information costs), product wear and tear over time, and product misuse. Where the table indicates no liability for producers, any liability would rest with the user. The table distinguishes seven ways in which a product might cause harmFootnote 19:

(1) Manufacturing flaws or construction failures that affect individual product items.
(2) Products that present inherent risks in everyday use, with risks that are difficult for consumers to assess.
(3) Defective product design that makes an entire product line more dangerous.
(4) Products that pose inherent risks in daily use and where the risk is readily identifiable by consumers.
(5) Products for which accidents can occur occasionally.
(6) Product wear and tear.
(7) Product misuse.

2.2.1 Level of care in production and use

Product liability promotes efficient safety measures when consumers cannot accurately assess risks. To identify the optimal liability standard in product liability, it is important to determine whether the producer or the consumer can prevent harm at a lower cost in such scenarios.

Producers can typically best influence defects stemming from unintended mistakes in the production process (1). Producer precautions to reduce product risk may take different forms. These preventive measures can include regular inspections throughout the manufacturing process to identify and rectify potential defects or issues, implementing quality control procedures to ensure product consistency and reliability, conducting rigorous product safety and performance testing, and choosing high-quality materials for product construction. Producers can also reduce risk by ensuring safe and user-friendly product design and providing comprehensive user manuals and safety warnings (3). Both can encourage safe product usage by consumers. Finally, producers can invest in research and development to design and engineer safer products and adhere to regulatory safety standards.Footnote 20

As Table 1 illustrates, some product-related risks are better borne by consumers. Holding manufacturers liable for every minor hazard (5) would not improve safety or encourage the development of safer products when no safer alternatives are available (Landes & Posner, 1985, 559–60). Similarly, consumers are best positioned to mitigate the risks of products that undergo wear and tear over time (6). For example, consumers can prevent accidents by renewing worn-out tires in a timely manner. This approach is more efficient than expecting manufacturers to create tires that never wear out (Landes & Posner, 1985, 560).
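The least-cost-avoider comparison behind this allocation can be sketched as follows. The avoidance costs are hypothetical and serve only to show the logic: for tire wear, making tires that never wear out is assumed to be far costlier than timely replacement by the user, whereas a hidden manufacturing flaw is assumed to be cheap to catch in factory inspection but costly for users to detect.

```python
# Minimal sketch of the least-cost-avoider comparison.
# All avoidance costs are hypothetical, chosen only to illustrate the logic.

from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    producer_avoidance_cost: float  # cost for the producer to prevent the harm
    user_avoidance_cost: float      # cost for the user to prevent the harm


def least_cost_avoider(risk: Risk) -> str:
    """Return the party that can prevent the harm more cheaply.

    Ties are assigned to the producer; this tie-breaking rule is an arbitrary choice.
    """
    if risk.user_avoidance_cost < risk.producer_avoidance_cost:
        return "user"
    return "producer"


RISKS = [
    # Tires that never wear out would be very costly; timely replacement is cheap.
    Risk("tire wear and tear", producer_avoidance_cost=500.0, user_avoidance_cost=80.0),
    # A hidden manufacturing flaw is cheap to catch in inspection, costly for users to detect.
    Risk("manufacturing flaw", producer_avoidance_cost=5.0, user_avoidance_cost=1000.0),
]

if __name__ == "__main__":
    for risk in RISKS:
        print(f"{risk.name}: liability on the {least_cost_avoider(risk)}")
```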

Additionally, in cases of product misuse (7), liability should typically be assigned to the user. If the user handles the product recklessly or employs it for purposes it was not intended for, harm can be prevented best by the user. However, there is an exception to this principle. Manufacturers should be held liable if they could have reasonably foreseen a specific type of misuse and taken measures to prevent it at a low cost. Holding manufacturers accountable for foreseeable misuse incentivises them to design products that are less susceptible to consumer misuse (Landes & Posner, 1985, 562). It is essential, however, to carefully delineate the boundary between foreseeable misuse and unreasonable abuse of the product to ensure that consumers still have the incentive to exercise caution (see Sect. 4.4).
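The foreseeable-misuse exception can be expressed as a simple decision rule. Reading “prevent it at a low cost” as “the cost of prevention is lower than the expected harm it avoids” is an assumption made for this sketch (a Hand-formula-style comparison), not a test stated in the cited sources; the figures are hypothetical.

```python
# Minimal sketch of the foreseeable-misuse exception.
# Reading "prevent it at a low cost" as "prevention costs less than the expected
# harm it avoids" is an assumption, not a rule stated in the cited sources.

def producer_liable_for_misuse(foreseeable: bool, prevention_cost: float,
                               misuse_prob: float, harm: float) -> bool:
    """Producer bears liability for misuse only if it was foreseeable and cheap to prevent."""
    expected_harm_avoided = misuse_prob * harm
    return foreseeable and prevention_cost < expected_harm_avoided


if __name__ == "__main__":
    # Hypothetical figures: a safeguard costing 2 per unit against a 5% risk of a loss of 1,000.
    print(producer_liable_for_misuse(True, 2.0, 0.05, 1000.0))    # foreseeable, cheap to prevent
    print(producer_liable_for_misuse(False, 2.0, 0.05, 1000.0))   # unforeseeable misuse
    print(producer_liable_for_misuse(True, 100.0, 0.05, 1000.0))  # foreseeable, costly to prevent
```

When the rule points away from the producer, the loss stays with the user, which preserves the user’s incentive to handle the product with care.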

2.2.2 Amount sold and used (activity level)

Some product risks remain even if the producer takes due care. If these risks are significant and not easily identifiable by consumers (2), strict liability reduces the quantity of such products sold.

It is socially optimal to produce additional units of a good only when consumers derive more utility from consuming it than the sum of production costs, costs of care and expected accident losses associated with these additional units (Shavell, 2004, 210). The consumer’s activity level refers to their choices regarding the purchase of a product and the extent to which they use the product. For instance, consumers can lower the accident risk by reducing the distance driven with an autonomous vehicle or the number of hours a robotic vacuum operates. Therefore, it is essential to consider the level of activity from the producer’s and the user’s perspectives (Shavell, 2020).
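Shavell’s condition for socially optimal output can be read as a stopping rule over successive units. The sketch assumes hypothetical marginal utilities that decline with each additional unit and constant per-unit production, care, and expected accident costs.

```python
# Minimal sketch of the output condition: produce another unit only while its
# marginal utility exceeds production cost + care cost + expected accident loss.
# All figures are hypothetical.

def socially_optimal_units(marginal_utilities: list[float], production_cost: float,
                           care_cost: float, expected_loss: float) -> int:
    """Count units worth producing; marginal utilities must be in decreasing order."""
    full_unit_cost = production_cost + care_cost + expected_loss
    units = 0
    for utility in marginal_utilities:
        if utility <= full_unit_cost:
            break  # this and all later units cost society more than they are worth
        units += 1
    return units


if __name__ == "__main__":
    utilities = [50.0, 40.0, 30.0, 20.0, 10.0]
    print("accounting for accident losses:", socially_optimal_units(utilities, 10.0, 2.0, 10.0))
    print("ignoring accident losses:", socially_optimal_units(utilities, 10.0, 2.0, 0.0))
```

With these figures, three units are worth producing once expected accident losses are counted, but four would be produced if those losses were ignored.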

Under a strict liability rule, the liability costs are incorporated into the product price, leading consumers to purchase fewer units. Armed with better knowledge about product risk than consumers, producers can choose appropriate preventive measures and account for expected accident costs in pricing the product. This increases the price, which encourages consumers to make informed purchasing decisions and buy the optimal quantity (Geistfeld, 2021, 11). Under a negligence rule, the producer avoids liability by meeting the required level of care. Consequently, the customer bears the accident costs, and the purchasing price of the product does not include expected liability costs.

Strict liability provides an additional advantage in terms of optimising insurance arrangements. The producer can distribute the anticipated losses resulting from product-related harm more effectively than the customer (Calabresi & Hirschoff, 1972). By factoring the expected liability into the pricing of their products, producers can compensate for potential losses through increased sales revenue (Li & Visscher, 2020, 6). The producer usually serves as the superior insurer, given their capacity to efficiently absorb and manage the anticipated liabilities.Footnote 21

As previously mentioned, the consumer’s risk perception plays a crucial role in shaping how the anticipated harm affects their willingness to pay for a product. When obtaining information about product risk is costly for consumers, they may underestimate the product’s risk (and buy too much of it) or overestimate it (and buy too little of it) (Landes & Posner, 1985, 549). Consequently, if producers have better information than consumers about product risk, strict liability provides better incentives for the activity level than a negligence rule (Landes & Posner, 1985, 550).
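The effect of the liability rule on the quantity purchased can be sketched with a hypothetical linear demand curve. Under strict liability, the producer (assumed to know the true risk) adds the expected loss to the price and compensates consumers; under negligence, a producer that meets the due level of care is not liable, so consumers bear the loss as they perceive it. The hypothetical parameter `perception` scales the perceived expected loss: values below 1 represent underestimation and values above 1 overestimation.

```python
# Minimal sketch: quantity purchased under strict liability vs. negligence when
# consumers misperceive risk. Linear demand and all numbers are hypothetical.

DEMAND_INTERCEPT = 100.0


def units_bought(perceived_full_price: float) -> float:
    """Hypothetical linear demand: purchases fall one-for-one with the perceived full price."""
    return max(0.0, DEMAND_INTERCEPT - perceived_full_price)


def units_under(rule: str, unit_cost: float, expected_loss: float,
                perception: float = 1.0) -> float:
    """perception scales the expected loss consumers believe they bear (1.0 = accurate)."""
    if rule == "strict":
        # Producer prices in the expected loss; compensated consumers perceive no residual loss.
        full_price = unit_cost + expected_loss
    elif rule == "negligence":
        # Producer meets due care and is not liable; consumers bear the loss as they perceive it.
        full_price = unit_cost + perception * expected_loss
    else:
        raise ValueError(f"unknown rule: {rule}")
    return units_bought(full_price)


if __name__ == "__main__":
    print("social optimum:", units_bought(20.0 + 30.0))  # full price with the true expected loss
    for perception in (0.5, 1.0, 2.0):
        print(perception,
              units_under("strict", 20.0, 30.0, perception),
              units_under("negligence", 20.0, 30.0, perception))
```

Under strict liability the quantity matches the social optimum whatever consumers believe, while under negligence consumers who underestimate the risk buy too much and those who overestimate it buy too little.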

However, the consumer is not incentivised to reduce their product use under a strict product liability regime. The producer would fully cover any losses the consumer incurs (Shavell, 2004, 215). This is different under a negligence rule, which induces the socially optimal activity level by the consumer.
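The consumer’s usage decision can be sketched with a hypothetical benefit function that grows with diminishing returns in hours of use and a constant expected loss per hour. The sketch assumes that only the consumer is harmed, so the socially optimal usage is the level a consumer chooses when bearing the full loss, as under a negligence rule where the producer took due care.

```python
# Minimal sketch: how many hours a consumer uses a product when the producer
# fully compensates losses (strict liability) vs. when the consumer bears them
# (negligence, producer took due care). Functional forms are hypothetical.

import math

HOURS = range(0, 101)   # feasible usage levels (hypothetical cap of 100 hours)
HARM_PER_HOUR = 2.0     # expected accident loss per hour of use (hypothetical)


def benefit(hours: int) -> float:
    """Hypothetical benefit of use with diminishing returns."""
    return 20.0 * math.sqrt(hours)


def chosen_hours(consumer_share_of_harm: float) -> int:
    """Usage that maximises the consumer's benefit net of the harm they bear."""
    return max(HOURS, key=lambda h: benefit(h) - consumer_share_of_harm * HARM_PER_HOUR * h)


if __name__ == "__main__":
    print("consumer bears the loss:", chosen_hours(1.0))
    print("producer compensates the loss:", chosen_hours(0.0))
```

A consumer who bears the loss stops at 25 hours, where the marginal benefit of use equals the marginal expected loss; a consumer who is fully compensated uses the product up to the cap.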

When choosing between a strict liability and a negligence standard, a critical consideration is which party, the producer or the consumer, has a greater capacity to prevent accidents through its level of activity. For inherently dangerous products, such as guns, consumers can recognise the hazard and choose not to purchase the product (Landes & Posner, 1985, 557), or they can minimise risks by reducing usage. The optimal liability rule for such high-risk products depends on information costs: where consumers are perfectly informed (4) and accurately perceive risk, a no-liability rule is optimal (Landes & Posner, 1985, 557). This conclusion presupposes that the risk is apparent to consumers and that the producer can reduce it only with difficulty, if at all. However, when the risk is not readily apparent (2), strict liability on the producer becomes efficient, encouraging manufacturers to enhance product safety and shifting demand away from the product by raising its price. Hay and Spier (2005) add the financial means of the consumer as an additional consideration. Examining third-party harm from consumer products such as guns, they find that consumers should be liable for such harm as long as they have deep pockets and can pay for the damage they cause.Footnote 22 This encourages consumers to take the optimal degree of care when using dangerous products and to demand optimal safety features from the products they buy.Footnote 23

Policymakers typically impose a form of strict liability on inherently dangerous activities to induce those undertaking them not only to observe the optimal level of care but also to reduce their activity to the socially optimal level. However, if the activity benefits society, strict liability may reduce it below the efficient level, because actors internalise the harm while not all external benefits to society flow back to them (Buiten et al., 2023, 9). This may be relevant in the context of AI, which promises to be safer than human decision-making.Footnote 24 Dawid and Muehlheusser (2022) present a formal model featuring a monopoly producer that sets prices and makes costly safety investments over time. They demonstrate that a more stringent liability regime can result in a slower overall increase in the safety of autonomous vehicles. Their findings indicate that stricter liability hampers market penetration.Footnote 25 Liability could thus hinder the widespread adoption of AI systems if higher liability costs make AI products more expensive than non-AI alternatives.Footnote 26
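The concern that strict liability may suppress socially beneficial activity can be illustrated with hypothetical figures. Each unit of activity (for instance, each AI-enabled product deployed) is assumed to yield a declining private benefit and a constant benefit to third parties that the actor does not capture, while the actor bears the full expected harm under strict liability.

```python
# Minimal sketch: activity level under strict liability when each unit of activity
# also generates benefits for others that the actor does not capture.
# All figures are hypothetical.

def units_undertaken(benefits: list[float], cost_per_unit: float) -> int:
    """Count the units whose benefit exceeds the per-unit cost."""
    return sum(1 for b in benefits if b > cost_per_unit)


PRIVATE_BENEFITS = [30.0, 20.0, 12.0, 8.0, 4.0]  # declining private benefit per unit
EXTERNAL_BENEFIT = 5.0   # benefit to third parties per unit (e.g. fewer accidents elsewhere)
EXPECTED_HARM = 10.0     # expected harm per unit, borne by the actor under strict liability

if __name__ == "__main__":
    private_choice = units_undertaken(PRIVATE_BENEFITS, EXPECTED_HARM)
    social_optimum = units_undertaken([b + EXTERNAL_BENEFIT for b in PRIVATE_BENEFITS],
                                      EXPECTED_HARM)
    print("chosen under strict liability:", private_choice)
    print("socially optimal:", social_optimum)
```

The actor undertakes three units, although a fourth would be socially worthwhile once the external benefit is counted.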

2.2.3 Courts’ information costs

Litigation costs tend to be lower under strict liability than under negligence because courts do not have to determine the optimal level of due care. Strict liability cases have more predictable outcomes, making settlements more likely and saving litigation costs (Calabresi, 1970, 28; Shavell, 2004, 282–283). However, in bilateral settings, where victims can also take precautions, strict liability needs a defence of contributory negligence to give injured parties an incentive to take care, which reintroduces information costs for courts.

A negligence standard in product liability shifts the burden to consumers to prove that producers failed to meet the required standard of care, encouraging consumers to be cautious and reduce risky behaviour (Landes & Posner, 1985). However, consumers may have difficulty obtaining the necessary information about products and production processes to establish producer negligence, particularly when dealing with complex or historical data.Footnote 27 Courts may also find it challenging to assess the actions consumers should take to mitigate risks related to products (Shavell, 2004, 217). In the AI context, the burden of proof could be a hurdle to claimants. AI systems utilising deep learning or complex algorithms can be intricate and non-transparent in their decision-making processes. The inner workings of these systems can be difficult to comprehend or explain, making it challenging to identify and attribute specific defects or errors.

When court decisions are uncertain, both producers and consumers may be inclined to adopt either excessive or insufficient levels of care. When courts cannot fully observe or verify all aspects of behaviour, like the intensity of product use, consumers may tend to underinvest in risk reduction. This occurs when it is challenging to prove in court how consumers used the product and whether they maintained it properly.

Similarly, firms may take inadequate care if courts cannot observe or verify certain aspects of their behaviour, such as the production process (Shavell, 2004, 218). Under negligence, producers can then avoid liability by taking care in the observable dimensions while doing nothing to reduce risks in the unobservable dimensions of their behaviour. Under strict liability, manufacturers are also incentivised to take care in the unobservable dimensions because they bear the accident costs (Posner, 1973; Li & Visscher, 2020, 6).
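The problem of unobservable care can be sketched with two care dimensions, one that courts can verify and one they cannot. The accident-probability function, the loss, and the grid are hypothetical, and the due-care standard is assumed to be set at the socially optimal level of observable care.

```python
# Minimal sketch: care in an observable and an unobservable dimension.
# Under negligence the court checks only the observable dimension; under strict
# liability the producer pays expected harm whatever it did. Numbers are hypothetical.

from itertools import product

HARM = 100.0
BASE_PROB = 0.2
GRID = [i / 10 for i in range(51)]  # care from 0.0 to 5.0 in each dimension


def accident_prob(observable: float, unobservable: float) -> float:
    """Hypothetical accident probability reduced by care in both dimensions."""
    return BASE_PROB / ((1.0 + observable) * (1.0 + unobservable))


def social_cost(care: tuple[float, float]) -> float:
    """Total care spending plus expected harm."""
    return care[0] + care[1] + accident_prob(*care) * HARM


def strict_liability_choice() -> tuple[float, float]:
    # The producer bears all expected harm, so its cost equals the social cost.
    return min(product(GRID, GRID), key=social_cost)


def negligence_choice(due_care: float) -> tuple[float, float]:
    """Producer is liable only if observable care falls short of the due-care standard."""
    def cost(care: tuple[float, float]) -> float:
        liable = care[0] < due_care
        return care[0] + care[1] + (accident_prob(*care) * HARM if liable else 0.0)
    return min(product(GRID, GRID), key=cost)


if __name__ == "__main__":
    optimum = min(product(GRID, GRID), key=social_cost)
    print("social optimum:", optimum)
    print("strict liability:", strict_liability_choice())
    # The court sets due care at the socially optimal observable level.
    print("negligence:", negligence_choice(due_care=optimum[0]))
```

Under strict liability the producer chooses the social optimum in both dimensions; under negligence it meets the standard in the observable dimension and takes no care in the unobservable one.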

Regulatory standards can inform courts in product liability cases, offering clarity for both producers and consumers by defining manufacturer responsibilities, user care obligations, and safety measures. Under a negligence rule, such standards can reduce courts’ information costs by specifying what product safety requires of manufacturers. In product liability cases, courts can thus use regulatory safety standards and certification requirements to assess product defects. In some circumstances, certification may shift liability from producers to certifiers.Footnote 28

Safety regulations often mandate that firms take steps to enhance product safety, reducing the relevance of product liability when both safety regulations and market forces ensure safe products (Polinsky & Shavell, 2010, 1441–1442). This essentially shifts the responsibility to the certification stage.Footnote 29 Sector-specific regulators with industry expertise may be better suited than courts to determine when, to whom, and what obligations should be imposed.

Consequently, regulation and certification are essential to complement product liability in innovative product contexts (Castellano et al., 2020; Schebesta, 2017, 132).Footnote 30 While each approach has limitations, using them in tandem can be efficient (Shavell, 1984).

3 Defect and product liability scope

After summarising the economic factors influencing the choice between strict liability and negligence in product liability law, this section delves into the definitions of defects in EU and U.S. product liability law. It aims to determine if these definitions align with economic principles for efficient product liability. The focus is on two factors identified in Sect. 2 relevant to selecting the optimal product liability rule: consumers’ risk awareness and the level of control held by producers and users over expected harm.Footnote 31 This section investigates the implications of these factors for the definition of defect, assuming that this definition should establish an efficient liability standard for producers.

Product liability was introduced in Europe and the U.S. in response to mass production processes that emerged during the Industrial Revolution. The scope and standard of product liability govern the allocation of liability between producers and those harmed. Crucial to this allocation is understanding the circumstances under which a producer is held liable and the types of harm for which they are accountable.

The Product Liability Directive (“PLD”), adopted in 1985, makes producers liable for defective products that cause harm, irrespective of whether the defect was their fault.Footnote 32 The PLD applies to tangible goods (Art. 2 PLD), assigning liability to producers for damage arising from defective products. The term “producer” covers the manufacturer of a finished product, the producer of any raw material, and the manufacturer of a component part (Art. 3(1) PLD). A product is defective when it does not provide the safety a person is entitled to expect (Art. 6 PLD). Damage covers harm resulting from death, personal injury, or damage to consumer property. The PLD is set to undergo a review as proposed by the Commission in 2022.Footnote 33 This review aims to address the division of responsibility among manufacturers, owners, and users in terms of liability for products. While the PLD has a broad scope that extends beyond AI-related concerns, the rationale for updating it is closely tied to technological advancements.Footnote 34

3.1 Defect as a negligence standard

Product liability has aspects of both strict liability and negligence. While it is generally considered a strict liability approach, from an economic point of view, product liability has aspects of negligence liability (Shavell, 2004, 222; Landes & Posner, 1985, 554; Reimann, 2003a, 777). The plaintiff must show that the product was defective, which can be seen as a negligence element (Zech, 2021, 150; Wagner, 2019, 35–36). The producer is not “absolutely liable, as an insurer of product users” (Wendel, 2019, 391).Footnote 35

The justification for restricting producer liability to defective products is that consumers are usually in the best position to prevent losses caused by non-defective products at the lowest cost (Landes & Posner, 1985). Instead of producers striving to make product designs even safer, it is more efficient for users to employ products safely to prevent harm when the product is not defective. The requirement for a defect encourages consumers to exercise caution in using the product.

The burden of proof in product liability exhibits elements of negligence and strict liability. The injured party must prove the damage, the defect and the causal relationship between the defect and damage.Footnote 36 Yet, product liability differs from a typical negligence rule because the manufacturer is liable regardless of whether they are to blame for the defect (Li & Visscher, 2020, 7). Customers do not need to prove negligence in the production process. They only need to demonstrate that their losses were due to defects in the product (Shavell, 2004, 222).

Both the definition of defect and the burden of proof have significant ramifications for the scope of product liability. If courts assume a defect quickly, product liability moves towards strict liability. Product liability is closer to a negligence rule, or even a no liability rule, if proving a product is defective is difficult.
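The link between how readily courts find a defect and the resulting liability regime can be illustrated by modelling the defect standard as a care threshold. The sketch assumes, hypothetically, that a court finds a defect whenever the producer’s care falls below the threshold, that consumers cannot observe risk, and that accident probability follows the illustrative function p(c) = 0.2/(1 + c) with a loss of 100. An infinite threshold (a defect is always found) mimics strict liability, a threshold at the socially optimal level of care mimics negligence, and a zero threshold amounts to no liability.

```python
# Minimal sketch: the defect standard as a threshold. A court finds a defect when
# the producer's care falls below `threshold`; the producer then pays the harm.
# A very high threshold mimics strict liability, a threshold at due care mimics
# negligence, and a zero threshold means no liability. Numbers are hypothetical.

import math

HARM = 100.0
BASE_PROB = 0.2
CARE_GRID = [i / 100 for i in range(1001)]  # care spending from 0.00 to 10.00


def accident_prob(care: float) -> float:
    """Hypothetical accident probability that falls as care spending rises."""
    return BASE_PROB / (1.0 + care)


def producer_care(threshold: float) -> float:
    """Care that minimises the producer's cost given how readily courts find a defect."""
    def cost(care: float) -> float:
        defective = care < threshold
        return care + (accident_prob(care) * HARM if defective else 0.0)
    return min(CARE_GRID, key=cost)


def due_care() -> float:
    """Socially optimal care: minimises precaution cost plus expected harm."""
    return min(CARE_GRID, key=lambda c: c + accident_prob(c) * HARM)


if __name__ == "__main__":
    for label, threshold in [("defect always found", math.inf),
                             ("defect if below due care", due_care()),
                             ("defect never found", 0.0)]:
        print(label, producer_care(threshold))
```

Strict liability and negligence yield the same care level in this sketch, but under strict liability the producer also bears the residual accident losses, which matters for the activity-level effects discussed in Sect. 2.2.2; when no defect is ever found, the producer takes no care.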

3.2 Defect in EU and U.S. product liability law

In the EU, the definition of a defect is determined by the consumer-expectation test, which considers whether a product fails to meet the safety expectations the public is entitled to, considering all relevant circumstances.Footnote 37 While the producer can be considered negligent for selling a product that does not meet legitimate consumer expectations (Reimann, 2003a, 776–777), the test does not assess defects by comparing a product’s social benefits and costs (Wuyts, 2014). The gravity of the possible dangers and their effect on the product’s utility are relevant in determining the public’s legitimate safety expectations (Wuyts, 2014, 18). However, courts may only evaluate the impact of the producer’s conduct on the public’s legitimate safety expectations, not the product’s inherent risk.Footnote 38

Additionally, the producer may avoid liability if a defect is deemed unavoidable given the scientific and technical knowledge available at the time (known as the “development risk defence”).Footnote 39 Therefore, the consumer-expectation test does not constitute a strict liability standard, which would solely consider the product’s dangerousness at the time of the accident (Reimann, 2003a, 776–777). Although the consumer-expectation test does not explicitly consider reasonable alternative designs or a specific standard of care, these factors become relevant in assessing the development risk defence. Courts can assess whether, based on the technical and scientific knowledge at the time of production, an alternative, safer design was feasible. In determining the legitimate safety expectations of the public, the courts are not bound by industry safety standards. This means a product can comply with industry safety standards but still be deemed defective under the PLD. Conversely, a product can deviate from the industry standard and not be defective (Wuyts, 2014, 10–11).Footnote 40

In the U.S., product liability law employs distinct defectiveness standards for manufacturing defects and design defects (Table 1). Manufacturing defects refer to unintended flaws in individual units of mass-produced items that deviate from the intended design.Footnote 41 For manufacturing defects, U.S. law employs a standard close to strict liability (Wuyts, 2014, 10). A manufacturing defect occurs “when an individual product (or batch) does not meet the standard of the general quality of its specific type even if all possible care was exercised.”Footnote 42

Products with design and information defects are produced as intended but have inherent shortcomings (Reimann, 2003b).Footnote 43 Design defects refer to foreseeable risks of harm associated with the product that could have been reduced or avoided by adopting a reasonable alternative design. Information defects are foreseeable risks that could have been reduced or avoided by providing adequate instructions or warnings.Footnote 44 U.S. courts predominantly use the risk-utility test for design and information defects.Footnote 45 According to this test, a product is considered defective if its foreseeable risks of harm could have been reduced or avoided by adopting a reasonable alternative design.Footnote 46 This essentially applies a negligence framework (Masterman & Viscusi, 2020, 185). Under the risk-utility test, courts must determine whether the costs and risks justified a safer alternative design or additional warnings. The test thus considers the probability of damage and the economic capacity of the producer to avoid damage. When applying the risk-utility test, courts consider various factors to establish a defect. Most commonly, courts refer to the following factors (developed by Wade, 1973): (i) the usefulness and desirability of the product; (ii) the safety aspects of the product; (iii) the availability of safer substitute products; (iv) the possibility of eliminating dangerous characteristics of the product without impairing its usefulness; (v) the user’s ability to avoid danger by using the product safely; (vi) the user’s anticipated awareness of the dangers inherent in the product, owing to general knowledge or the existence of warnings; and (vii) the possibility of loss-spreading by the manufacturer through price setting or insurance. The risk-utility test can be considered closer to a negligence rule than the European approach, particularly regarding the plaintiff’s evidentiary burden.
While EU law places the burden on the plaintiff to establish damage, defect, and causation, the risk-utility test requires the plaintiff to prove fault on the part of the producer as well.
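To make the cost-benefit logic of the risk-utility test concrete, the sketch below reads it, as an illustrative assumption, as a Learned Hand-style comparison: a design counts as defective if some alternative design would have avoided more expected harm than it costs. The `Design` class, the function names and all figures are hypothetical; courts weigh the qualitative Wade factors rather than computing a single number.

```python
# Stylised risk-utility check (Learned Hand-style comparison).
# All names and figures are hypothetical illustrations, not legal doctrine.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Design:
    """Hypothetical summary of one product design."""
    name: str
    accident_prob: float  # probability that a unit causes harm
    unit_cost: float      # production cost per unit


def expected_harm(design: Design, harm: float) -> float:
    """Expected loss per unit: accident probability times severity of harm."""
    return design.accident_prob * harm


def safer_alternative(actual: Design, alternatives: list[Design], harm: float) -> Optional[Design]:
    """Return the alternative with the largest net safety gain (expected harm
    avoided minus extra cost) if that gain is positive; otherwise None."""
    best: Optional[Design] = None
    best_net = 0.0
    for alt in alternatives:
        extra_cost = alt.unit_cost - actual.unit_cost
        harm_avoided = expected_harm(actual, harm) - expected_harm(alt, harm)
        net_gain = harm_avoided - extra_cost
        if net_gain > best_net:  # strictly positive: the safer design was cost-justified
            best, best_net = alt, net_gain
    return best


actual = Design("current model", accident_prob=0.010, unit_cost=1_000.0)
options = [
    Design("extra sensor", accident_prob=0.004, unit_cost=1_400.0),   # avoids ~600, costs 400
    Design("full redesign", accident_prob=0.001, unit_cost=3_000.0),  # avoids ~900, costs 2,000
]
choice = safer_alternative(actual, options, harm=100_000.0)
print(choice.name if choice else "not defective")  # extra sensor
```

The sketch deliberately leaves out factors such as the user’s ability to avoid danger (Wade factor (v)), which is where the allocation of responsibility between producer and user enters the analysis.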

3.3 An efficient definition of product defect

From an economic standpoint, product liability law should differentiate between defect types to vary the liability standard based on the relative control of the manufacturer and the user in preventing accidents (aligning with the least cost avoider principle). Risk awareness of the user also plays a role since it determines the user’s precautions in using the product.

The U.S. differentiation between manufacturing and design defects aligns with the principle of least cost avoidance. It considers the level of control held by producers and users, dividing responsibility based on the efficiency of their safety efforts. Limiting product liability to defective products in a negligence-like manner is efficient for design defects, as it encourages users to be cautious and prevent accidents. This approach is often more efficient than modifying the product’s design to enhance safety. For instance, it is more efficient for a car owner to drive responsibly and avoid speeding rather than relying solely on design improvements to reduce accident risks (Landes & Posner, 1985, 554). If producers were held liable for injuries that alternative designs could have prevented, consumers might have less incentive to exercise due care (Landes & Posner, 1985).
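The least-cost-avoider reasoning in the car example can be sketched in a few lines, assuming (for illustration only) that each party’s cost of preventing a given accident can be expressed as a single number. The function name, party labels and figures are hypothetical.

```python
# Least-cost-avoider sketch: which party can prevent the harm most cheaply?
# Party labels and cost figures are hypothetical illustrations.
from typing import Optional


def least_cost_avoider(prevention_costs: dict[str, float], expected_harm: float) -> Optional[str]:
    """Return the party that can prevent the expected harm most cheaply.

    Returns None when no party's prevention cost is below the expected harm,
    i.e. when no precaution is cost-justified.
    """
    if not prevention_costs:
        return None
    party, cost = min(prevention_costs.items(), key=lambda item: item[1])
    return party if cost < expected_harm else None


# Car example: careful driving versus a safer redesign.
costs = {"user (drive responsibly)": 50.0, "producer (redesign)": 500.0}
print(least_cost_avoider(costs, expected_harm=300.0))  # user (drive responsibly)
```

In this stylised case the user is the cheaper avoider, which is the situation in which limiting producer liability to defective products leaves the incentive to take care with the user.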

When it comes to defects originating in the production process, strict liability is efficient because consumers cannot reasonably prevent accidents at a low cost. Even if the manufacturer could not have avoided the accident through increased care (and thus is not negligent), they can still develop better preventive methods, such as improved inspection procedures. Strict liability incentivises manufacturers to do so. If such methods are not (yet) available, strict liability raises the product’s price and shifts demand towards safer alternatives (Landes & Posner, 1985, 555). For similar reasons, producers should be held strictly liable for third-party components. This encourages producers to explore long-term improvements in defect inspection for these components (Landes & Posner, 1985, 556). This is relevant for AI products, which often rely on inputs from various manufacturers (e.g., algorithms, data, testing) and require rigorous defect prevention and quality control measures.
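The price effect described by Landes and Posner can be illustrated with a toy comparison. It assumes, purely for illustration, that buyers cannot observe production-defect risk and choose on price alone, and that a producer facing strict liability passes its expected liability per unit on to buyers. Product names and figures are hypothetical.

```python
# Sketch of how strict liability for production defects feeds into prices.
# Assumes buyers choose on price alone; all figures are hypothetical.
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    name: str
    base_price: float   # price before any liability cost is passed on
    defect_rate: float  # share of units with a production defect
    harm: float         # loss caused by a defective unit


def price_under_strict_liability(p: Product) -> float:
    """Base price plus expected liability per unit (defect rate x harm)."""
    return p.base_price + p.defect_rate * p.harm


def cheapest(products: list[Product], strict_liability: bool) -> str:
    """Name of the product that price-driven buyers choose."""
    def effective_price(p: Product) -> float:
        return price_under_strict_liability(p) if strict_liability else p.base_price
    return min(products, key=effective_price).name


risky = Product("A (less inspection)", base_price=100.0, defect_rate=0.02, harm=1_000.0)
safer = Product("B (better inspection)", base_price=110.0, defect_rate=0.005, harm=1_000.0)
print(cheapest([risky, safer], strict_liability=False))  # A (less inspection)
print(cheapest([risky, safer], strict_liability=True))   # B (better inspection)
```

Without liability, the cheaper but riskier product wins; once expected liability is priced in (120 versus 115), demand shifts to the product with better inspection.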

The EU consumer expectation test has been criticised, especially as a norm for assessing design defects, since it may lead to the use of unreasonable consumer safety expectations as a benchmark (Veldt, 2023, 26). As mentioned earlier, a risk-utility analysis is not part of the test, even if national courts have applied it differently. The test only indirectly allows courts to vary the liability standard based on which party controls product risk. While the PLD does not explicitly distinguish between different defect types,Footnote 47 it leaves room for differentiating liability by requiring courts to consider “all circumstances” when assessing legitimate safety expectations. This includes factors like product presentation, reasonable use, and the timing of the product’s introduction.Footnote 48 The presentation of the product consists of how the producer markets the product and what instructions the producer provides (Wuyts, 2014, 16).Footnote 49 By considering these factors, courts can give more weight to either the producers’ or consumers’ responsibility, depending on the particular circumstances of the case.Footnote 50 Furthermore, national laws transposing the PLD in various EU Member States include elements resembling the American distinction between manufacturing and design defects,Footnote 51 and national courts have referred to these distinctions.Footnote 52

Under EU law, the element of “reasonably expected use” allows courts to draw the line between intended use and misuse of the product. As explained above, the producer should bear the unavoidable risks of a product’s intended use if this risk is significant and not apparent to users. Conversely, if the risk of intended use is low, if consumers are aware of the risk, or if consumers misuse the product, consumers should bear the product risk. Especially in high-risk contexts, it thus matters how courts interpret “misuse”.Footnote 53 Under EU product liability law, producers cannot expect consumers always to use their products as intended. They must allow for a margin of “foreseeable misuse” by consumers (Wuyts, 2014, 20). In practice, producers must adapt the safety features of their product to mitigate possible harmful consequences of foreseeable misuse or, if this is not feasible, provide detailed instructions to users (Wuyts, 2014, 20–21). Producers are, however, not responsible for unreasonable misuse of the product.Footnote 54 Whether misuse is reasonable is at the discretion of the courts. Relevant factors are whether the misuse is socially accepted and whether the user was gravely at fault (Vansweevelt & Weyts, 2009, 517).

4 AI defects

The previous sections have examined legal criteria for defining product defects in the EU and the U.S. in light of economic considerations. The next focus is on the unique challenges that arise when applying these criteria to AI systems, considering the distinct characteristics of AI.

AI encompasses a spectrum of technologies that can be employed in a wide range of sectors and contexts.Footnote 55 This means that the dimensions of AI safety, or conversely, of AI risks, depend on the type of technology and the context in which it is used. Nevertheless, with a view to revising product liability rules for new technologies, it is worth considering common, typical features of AI that may affect product risk. AI possesses several characteristics that can impact product safety, including autonomy, complexity, unpredictability, and reliance on data.Footnote 56 While some of these characteristics are shared by other emerging digital technologies, autonomy is an attribute unique to AI.Footnote 57

This section addresses four key issues. First, Sect. 4.1 explores the challenge of assigning product liability for AI systems when neither producers nor consumers have complete control over them. Section 4.2 discusses the implications for U.S. product liability of the increasing importance of design defects in AI systems and the decreasing relevance of manufacturing defects. Section 4.3 examines how inaccurate expectations regarding AI safety may affect EU product liability. Lastly, Sect. 4.4 raises questions about the boundaries between proper use and misuse of AI in human–machine interaction and whether excessive reliance on AI autonomy could be considered a design defect attributable to the manufacturer.

4.1 Control over AI risk

This paper argues that product liability should align with the respective control of producers and consumers. If neither party fully controls an AI system, the question arises of who should bear responsibility for harm.

Autonomous features in AI products can limit users’ ability to prevent harm while using the product. For example, accidents involving autonomous vehicles are less likely to result from driver errors and more likely to be caused by product defects (Kim, 2017). Consumers are in control when using conventional cars. By contrast, users of autonomous AI systems are not actively involved in their operation. While drivers of autonomous vehicles may still have some supervisory duties, users of autonomous AI systems generally expect the technology to work independently without their direct involvement.

While users may have limited control over AI products, it does not follow that manufacturers have more control. Some aspects of AI failures may be under the manufacturer’s control. AI systems can fail if there are problems with their inputs, such as the data or the sensor hardware, if the processing logic is deficient in some way, or if the repertoire of actions available to the AI system is inadequate, resulting in an inappropriate output (Chanda & Banerjee, 2022). However, manufacturers may not have full control over AI systems with autonomous features. Autonomy implies that an AI system can decide or act based on its own set of rules that are not entirely pre-programmed. For regulatory purposes, it is important to distinguish autonomy from automation: an automated system functions independently but follows pre-programmed instructions, while an autonomous system possesses its own decision-making capacity (Buiten et al., 2023).Footnote 58 Since autonomous systems may respond to input in unpredictable ways, manufacturers cannot foresee every action or reaction in every situation. The question then arises as to when an unforeseen decision or action by an AI system can be considered a defect,Footnote 59 and who should be responsible for the resulting harm.

Tort law typically imposes liability on parties who are responsible for the autonomous actions of individuals or animals, sometimes even holding them strictly liable when these actions are unpredictable.Footnote 60 In such cases, as with liability for children or animals, the person responsible for supervising the third party is held accountable because they are considered best placed to prevent or minimise risks.

If manufacturers and users lack full control over actions and decisions, both parties should share responsibility to ensure an appropriate level of care in designing, testing, and using AI-based solutions (Buiten et al., 2023). Producers are accountable for the development and testing phases, while users are responsible for monitoring and properly using AI systems. When producers cannot entirely control the product, such as when an AI system continues to learn after deployment, liability can incentivise them to enhance testing procedures for safety before deployment and to offer ongoing upgrades afterwards. Ensuring the safety of autonomous AI systems requires constant testing and high-quality simulators, possibly assisted by AI tools, to maintain and improve their performance (Chen et al., 2018).

Users should assume liability for monitoring AI systems that are not fully autonomous. This approach encourages users to take necessary precautions in maintaining and using the system correctly. In the case of highly autonomous AI systems, users may not be expected to monitor them actively. However, users still have control over the choice to employ AI systems, which justifies assigning them some liability for decisions delegated to AI (Buiten et al., 2023). Users also benefit from using AI systems. Allocating costs of harm to the beneficiaries of AI technologies can motivate them to make socially optimal decisions about when and how to use these systems.Footnote 61

4.2 AI defects as design defects

The risks or defects that may arise in AI systems depend on the system’s function and intended area of use. While AI may be considered high-risk in certain domains, the technology may reduce overall risks in others.Footnote 62 Courts in product liability lawsuits will be tasked with determining the acceptable level of safety for AI systems (Lemley & Casey, 2019). They must decide how frequently and under which circumstances AI is allowed to fail before it is deemed defective (Whittam, 2022, 5). This paper argues that, compared with traditional products, defects in AI systems will more often be design defects than manufacturing defects.

First, autonomous AI systems involve complex algorithms, the design of which encompasses a wide range of factors, such as training data, model architecture, learning algorithms, and decision-making rules. Any flaws or inadequacies in these design elements can significantly impact the system’s behaviour and performance.

Second, unlike traditional physical products, the manufacturing process of autonomous AI systems often involves replicating and deploying standardised software and hardware components. Of course, it is possible that, occasionally, such components do not conform to the intended design. Manufacturing defects could show up because of inadequate quality control measures, calibration procedures, or component assembly techniques employed during manufacturing. However, these components are typically produced under controlled conditions, ensuring minimal manufacturing variability. As a result, manufacturing errors that cause defects in the traditional sense, such as faulty assembly or component malfunction, are relatively less common for AI systems.

Third, AI systems rely heavily on software components. Design flaws or bugs in the software code can directly affect the system’s behaviour and lead to defects. These defects are more likely to arise from the design choices made during the development and implementation of the AI system than from the manufacturing process itself. Overall, defects in autonomous AI systems more often result from inadequate design choices, including biases in the training data, imperfect algorithms, or insufficient consideration of edge cases, than from manufacturing errors.

If design defects gain significance relative to manufacturing defects, producers of AI-based products will more frequently be subject to a negligence standard rather than strict liability under U.S. product liability law. Consequently, claimants face a heightened burden of proof (Vladeck, 2014, 145; Webb, 2017). In theory, under the risk-utility test, a product is considered defective if adopting a safer alternative design would enhance consumer surplus (Larsen, 1984, 2055). In practice, however, courts are constrained by what they can observe and verify.

An increased importance of design defects draws more attention to the plaintiff’s burden of proof under U.S. law. While manufacturing defects are often straightforward to establish, design defects are harder to prove, particularly when they involve research and development and product design decisions. Courts may be required to assess whether, when a producer had the chance to conduct an investigation, the likelihood of success and the expected value were high enough to justify the associated costs (Shavell, 2004, 218). For example, an AI company may have the chance to develop an alternative algorithm that is more complex but offers greater accuracy. If a company is uncertain whether a particular level of design effort will be deemed adequate by the courts and fears substantial liability, it may engage in socially excessive efforts to enhance the design. Conversely, if a company believes that courts are lenient, it may choose not to pursue opportunities to reduce risk. For the AI company, this would mean refraining from improving the system by using more extensive datasets or conducting more rigorous testing. Perverse incentives may also arise: an initial investigation by the producer could yield evidence that leads courts to conclude that an opportunity to reduce risk existed, which may discourage the producer from investigating in the first place (Shavell, 2004, 219).
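The cost-benefit question courts may ask about a producer’s investigation can be written as a simple inequality, where p is the probability that the investigation succeeds, V the value of success (for instance, the expected harm avoided by the more accurate algorithm) and C the cost of the investigation. This is a stylised reading of the criterion attributed to Shavell above, and the numbers are purely hypothetical.

```latex
\text{investigation justified} \iff p \cdot V > C,
\qquad \text{e.g. } p = 0.3,\; V = 500\,000,\; C = 100\,000:\quad
0.3 \times 500\,000 = 150\,000 > 100\,000 .
```

The difficulty for courts lies less in the inequality than in estimating p and V after the fact, which is why uncertainty about judicial leniency or strictness can push producers towards too little or too much design effort.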

4.3 AI safety expectations

Under EU product liability law, courts will have to determine the public’s legitimate safety expectations of AI systems. As discussed above, the actual relative safety of AI compared to non-AI products is irrelevant. Courts have ruled in the past that consumers’ expectations reasonably exceeded a product’s factual or statistical safety.Footnote 63

The public may not have accurate expectations about the safety of AI technologies (Frascaroli et al., 2019). Recent crashes with Tesla cars, where drivers were not attentive to the road, suggest that the public may place too much trust in AI. Another example is a recent case in which a lawyer used ChatGPT without checking the results for accuracy.Footnote 64 There are two primary reasons why consumers may misconstrue the risks associated with AI. Firstly, AI is inherently complex, which makes it difficult for consumers to comprehend its potential risks. Secondly, AI may significantly reduce errors in one respect but introduce errors in another (Veitch & Alsos, 2022, 2). These errors may differ from those typically made by humans. For instance, autonomous vehicles sometimes fail to recognise other vehicles that humans would likely have noticed easily. A notable incident occurred in 2016 involving a Tesla vehicle on autopilot that was unable to detect the white side of a tractor-trailer against a brightly lit sky.Footnote 65 Another example is medical AI systems, which tend to generate more false positives but fewer false negatives than human physicians (Makino et al., 2022).Footnote 66 Because the types of mistakes made by AI systems differ from those made by humans, AI errors can be unpredictable or unanticipated by humans.Footnote 67 Consequently, consumers may have less accurate knowledge of the risks of AI products than of the risks of the traditional products that autonomous AI systems replace.

In the U.S., the doctrine of design defects necessitates assessing whether an alternative design could have averted harm caused by an AI system. However, as engineers strive to anticipate human errors that cause recurrent accidents, their design modifications might inadvertently introduce new, unexpected, and potentially more dangerous accidents stemming from the technology itself (Wendel, 2019, 379).

Under EU product liability law, courts will have to judge the reasonableness of the public’s inaccurate expectations of AI. A plaintiff may argue that the public may (reasonably) expect AI systems to be safer than humansFootnote 68 or that a particular AI failure could not reasonably have been expected. The manufacturer may counter that their AI system is generally safer than its non-AI counterpart—and therefore, it is not dangerous beyond the ordinary consumer’s expectation (Wardell, 2022, 20). Courts will have to determine the users’ reasonable expectations, as well as the importance of the unpredictability of errors in determining product liability. This involves reviewing the testing the manufacturer did to avoid unforeseen errors. Accidents involving AI systems often occur in edge cases, so extensive testing of all possible scenarios becomes crucial for detecting and mitigating potential risks.Footnote 69

When assessing legitimate safety expectations in product liability, the relevant moment is when the product was put into circulation, not when the damage occurred. By extension, a product is not considered defective for the mere reason that a better product is subsequently put into circulation.Footnote 70 This is significant in sectors with rapid scientific and technological advancements (Wuyts, 2014, 21). For AI systems with learning capabilities that evolve over time and produce different outcomes, it may be challenging to establish legitimate consumer expectations.

These consumer expectations of AI may also evolve over time: there is a dynamic relationship between consumer expectations and AI safety. Initially, the public may distrust AI, but as AI becomes more prevalent in consumer products, trust may grow. Under the EU standard for product defect, this means that while consumer expectations of AI safety are low at the outset, AI products would be held to a less stringent standard for defects than traditional products. Conversely, if consumer expectations are (too) high, the standard would be stricter for AI products than for their non-AI counterparts. Overall, the consumer expectation test may only become effective in assessing AI defects once consumers have gradually become accustomed to what they can reasonably expect from AI systems.Footnote 71

This issue is not exclusive to AI and has been addressed in various industries, such as pharmaceuticals and aviation. Over time, as consumers become more familiar with autonomous systems, their expectations can gradually align with the reality of these technologies (Garza, 2012, 601). When determining the expected safety level, courts have recognised that inherently dangerous products are not automatically considered defective. Producers must consider that the general public may not be aware of all the specific dangers associated with a product beyond the obvious ones and, therefore, cannot be expected to adjust their expectations accordingly (Wuyts, 2014, 16). Producers can be held liable if they fail to provide adequate warnings or instructions for the proper use of a dangerous product.Footnote 72 Producers must provide information that helps consumers form realistic safety expectations based on the product’s actual safety. This aspect becomes particularly significant in the context of AI. The consumer expectation test thus connects product liability to the information available to consumers.

As mentioned earlier, the courts are not bound by industry safety standards when determining the legitimate safety expectations of the public. In the context of AI, regulatory standards can only provide guidance but no guarantee for the standard in product liability.Footnote 73 It would be useful to rely more on regulation and certification standards to guide the concept of defect in the context of AI. This would provide more legal certainty than the consumer expectation or risk-utility tests.

The risk-utility test has advantages over the consumer expectation test in evaluating AI systems. It allows courts to assess how well the AI system performs in relation to the safety efforts made by manufacturers, including comprehensive testing, evaluating component interactions, and considering alternative designs and potential harm severity. For example, the consequences of an error in a video recommendation system differ from those in a medical AI tool or an autonomous vehicle. However, the risk-utility test has limitations. In some instances, finding a reasonable alternative design, especially in the era of AI, may not be feasible. If no viable alternative exists, and the potential risk is deemed unacceptable, regulations or societal prohibitions on the AI system’s use may be necessary until a safer design is developed.

4.4 Product use and misuse

Product liability should encourage producers to invest in better safety management and better instruments to improve the safety of AI product design.Footnote 74 At the same time, it should encourage safe use by AI operators.

The concept of misuse takes on a new dimension in the context of (semi-)autonomous systems. Courts will have to determine if AI systems adequately prevent foreseeable misuse through their design of human–machine interaction.Footnote 75 The effectiveness of human–machine interaction plays a pivotal role in determining the overall safety and reliability of autonomous systems (Ramos et al., 2020). Overreliance on AI, combined with distractions, can lead to accidents. Courts need to assess whether insufficient user oversight qualifies as foreseeable misuse and whether the manufacturer should have taken steps to prevent it through product design, warnings, and marketing.

Excessive trust in the safety of AI systems can lead to misuse, as users may rely too heavily on the AI’s decisions. Users may not always know when to intervene, for instance when an AI system appears to operate within its designated parameters but in fact requires human intervention beyond its programming (Ramos & Mosleh, 2021, 3). This issue becomes more significant as AI systems operate in various environments and perform diverse tasks.Footnote 76 Human operators may struggle to identify when automation behaves incorrectly or to understand the reasons for such behaviour. Moreover, they may not be adequately prepared to regain control when necessary (Ramos & Mosleh, 2021, 3; Endsley, 2017).

Another problem is that as human operators shift from active task performers to passive supervisors, they can become complacent and inattentive when they need to take control quickly (Ramos et al., 2020; Endsley, 2017; Veitch & Alsos, 2022). Tragic incidents, such as the 2018 collision between a self-driving Uber car and a pedestrian and a fatal accident involving a Tesla car, emphasise the need for effective monitoring of driver engagement and interventions in autonomous systems.Footnote 77 These incidents highlight the critical role of human operators in ensuring the safety of such systems and the importance of their awareness of this responsibility (Ramos & Mosleh, 2021).Footnote 78

Courts face the challenge of determining whether a defectively designed AI system is responsible for misuse that could have been prevented with better design. This includes assessing the manufacturer’s responsibility to create systems that cannot be used in hazardous ways.Footnote 79 Recent lawsuits against Tesla illustrate this, with plaintiffs arguing that Tesla’s automated driving technology had a defective design because it failed to issue alerts to an inattentive driver.Footnote 80

Various methods have been developed to reduce the potential for errors resulting from misjudgements of human involvement levels (Ramos & Mosleh, 2021). These include interface design, interpretation of human instructions and signals,Footnote 81 providing clear and timely warnings to users, and using intuitive user interfaces to encourage proper system monitoring (Tadele, 2014, 135; Zacharaki et al., 2020, 2; Villani et al., 2018, 249–50). Safety assurance and risk management in robot development encompass context awareness, adaptiveness, override behaviours, collision avoidance systems, and workplace design (Bozhinoski, 2019; Scianca et al., 2021; Robla-Gomez et al., 2017; Bdiwi et al., 2017). User training and awareness programs can educate human operators about the necessary level of monitoring and AI system limitations, fostering vigilance, trust in automation through transparency, and emphasising the need for continuous attentiveness to combat issues like automation complacency and inattentiveness.

With the advancement of AI systems towards greater autonomy and reduced reliance on human operators, it is crucial for these systems to have self-monitoring and fault-detection capabilities. This entails, for instance, designing AI systems to use their own data for onboard fault detection without relying on cloud services (Khalastchi & Kalech, 2018, 7). Fully autonomous systems must effectively perceive their environment, respond to unexpected situations, and operate reliably without human intervention for extended periods (Guiochet & Waeselynck, 2017, 45; Ramos et al., 2020, 2). As autonomy increases, ensuring bug-free software becomes more challenging due to increased complexity and the higher likelihood of faults (Guiochet & Waeselynck, 2017, 45). This challenge is even more significant for cloud-connected AI systems, as they can be affected by various fault sources, including environmental uncertainties and errors across different domains such as physical, networking, sensing, computing, and actuation (Chen et al., 2018).

In the context of AI systems, marketing and warnings can significantly shape consumer expectations (Vladeck, 2014, 137). For example, Tesla faced several class action lawsuits alleging deceptive marketing of its driving assistance systems as autonomous driving technology, using names like “Autopilot” and “Full Self-Driving Capability.”Footnote 82 The plaintiffs claim that Tesla used these terms despite knowing the autonomous driving claims were misleading.Footnote 83 Studies have shown that terms like “Autopilot” can lead to driver misinterpretations.Footnote 84

Product liability and regulatory standards can ensure that producers market their (semi-)autonomous products responsibly. In 2022, the UK proposed a rule holding manufacturers liable for the actions of self-driving vehicles when they are operating in autonomous mode.Footnote 85 California has enacted a bill to prevent misleading advertising practices and restrict terms like “Full Self-Driving” to enhance transparency and ensure consumers are not deceived about technology capabilities.Footnote 86 It has also been proposed to reduce the number of autonomy levels for AI systems to better manage consumer expectations.Footnote 87 Besides incentivising producers, regulatory standards can establish accurate consumer expectations. Responsible marketing practices, including clear warnings and safety labels, play a crucial role in promoting user awareness and safe use of autonomous products, ensuring that users understand the required oversight.Footnote 88

Aligning product liability with regulatory and certification frameworks makes court decisions more predictable. At the same time, product liability encourages manufacturers to market their products responsibly. In formulating these rules, it is essential to prevent manufacturers from evading liability through narrow definitions of normal use or from shifting responsibility entirely to users merely because the systems can be overridden (Van Wees & Brookhuis, 2005, 364). Such limited definitions of normal product use could relieve manufacturers of accountability for defects or shortcomings in design, performance, or safety features. Conversely, when manufacturers continue to require user oversight that is not needed for safety reasons, they impose unnecessary monitoring costs on users. Drivers of non-autonomous cars cannot make productive use of travel time because they must remain attentive to the road. Similarly, AI diagnostic tools may not significantly free up physicians’ time if physicians must review the tool’s results.Footnote 89 Such oversight requirements could delay the benefits of AI adoption, which would be detrimental to society where AI can enhance overall decision-making.

In summary, the distinction between foreseeable misuse and unreasonable abuse of semi-autonomous AI systems is pivotal: it shapes the design requirements for producers, defines the extent of their responsibility, and clarifies where the responsibility of users begins. Manufacturers can contribute to user oversight by designing more intuitive interfaces, but the responsibility for ensuring proper use also falls on the users.Footnote 90 Courts must assess not only whether manufacturers have taken sufficient precautionary measures but also whether they have made adequate efforts to facilitate user precautions through product design and warnings. If the producer possesses superior knowledge about the system’s risks and fails to provide sufficient warnings or instructions to users, it may be held liable for the resulting defects. Conversely, if users were reasonably informed about the risks but failed to exercise caution or follow instructions, they should bear the losses.

5 Policy implications: the PLD Proposal

This paper has argued that to align product liability with control, it is useful to differentiate the liability standards for manufacturing and design defects. Section 4 identified several problems in defining defects that are particularly relevant in the context of AI: AI transfers control away from users, and manufacturers may not have complete control either; AI can increase the importance of design flaws and reduce the occurrence of manufacturing defects; determining whether AI is defective can be challenging; users might hold inaccurate safety expectations of AI; and users may misuse AI by over-relying on its autonomous features. This section reviews to what extent the PLD Proposal addresses these problems.

5.1 Consumer-expectation test

The PLD Proposal retains the consumer-expectation test despite the concerns discussed in this paper: the test does not include a risk/utility-analysis, and consumer expectations of AI may well be inaccurate or unreasonable. Others have also noted the difficulty of applying the consumer-expectation test to assess the defectiveness of AI (Borghetti, 2019; De Bruyne et al., 2023).Footnote 91 The proposal does not settle whether a risk/utility-analysis is allowed (Veldt, 2023, 26). While some note that, in practice, courts will have to weigh costs and benefits when determining consumer expectations (Wagner, 2023), it is unfortunate that the PLD Proposal did not go further and explicitly allow such an analysis. This would have been especially welcome given the existing practice of applying a risk/utility-test in national courts, as mentioned earlier.

The PLD Proposal adds circumstances that courts can consider in determining safety expectations. In addition to the presentation of the product, the reasonably foreseeable use of the product, and the moment in time when the product was placed into circulation,Footnote 92 the proposal includes the relevance of learning effects, product complementarity, product safety requirements, interventions by regulatory authorities, and the specific expectations of the end-users for whom the product is intended.Footnote 93 These circumstances appear designed to allow a more comprehensive evaluation of AI systems, accounting for their evolving nature, learning capabilities, and the need for updates. Broadening the list of circumstances that courts may take into account may increase legal certainty (De Bruyne et al., 2023; Rodríguez de las Heras Ballell, 2023). However, some of the new additions remain open to interpretation (De Bruyne et al., 2023). The effect of learning on the product, for instance, could arguably be used either to extend or to limit the producer’s liability, depending on how learning effects are considered to affect consumers’ legitimate expectations.

Overall, the proposal suggests a more objective standard for consumer expectations, considering factors like the AI’s intended purpose and objective characteristics to determine the level of safety the public can reasonably expect.Footnote 94 This approach could provide a more solid foundation for evaluating consumer expectations of AI safety.

The PLD Proposal also modifies the point in time at which defectiveness is assessed for products that require software upgrades and updates.Footnote 95 The PLD Proposal recognises that manufacturers retain control over digital products even after they have been placed on the market. Producers may be obliged to upgrade existing products when more recent AI software increases safety.Footnote 96 This acknowledges the ongoing responsibility of manufacturers for maintaining and updating their products. By adjusting the assessment timeline, the PLD Proposal ensures that manufacturers’ continuing control over AI systems is taken into account when evaluating defects.

5.2 Burden of proof

Some have criticised the PLD Proposal’s approach to defect, noting that plaintiffs are still unlikely to succeed in lawsuits about damage caused by black-box AI that arises purely from its complex, opaque, and autonomous reasoning process (Duffourc & Gerke, 2023). One way in which the PLD Proposal tries to accommodate plaintiffs is by alleviating the burden of proof.Footnote 97 As mentioned earlier, identifying and proving defects in AI systems is challenging due to their interconnected nature and the ambiguity surrounding what qualifies as a defect, especially in the context of autonomous behaviour (De Bruyne et al., 2023, 13). Even with advances in explainable AI, it can be unclear in specific instances whether an AI system has failed. Performance is typically the relevant criterion for defining a defect, but determining which outcomes should count as defects may not always be straightforward (Buiten et al., 2023).

The PLD Proposal stipulates that a product will be presumed defective if the manufacturer fails to comply with an obligation to disclose relevant evidence about how the product operates, which encourages manufacturers to disclose such information.Footnote 98 In this respect, the PLD Proposal aligns liability with the relative risk awareness of the producer and the user. The producer or developer of the AI system typically possesses in-depth knowledge about the system’s design, functioning, and potential risks. They can access technical specifications, data, and insights from the development process. This knowledge places them in a better position than the consumer to understand the system’s limitations, potential errors, and necessary safeguards. By contrast, users of AI systems may have limited access to the system’s technical details or internal workings. They must rely on the documentation, user manuals, or training materials provided to understand the system’s capabilities and potential risks. Thus, their understanding of the system’s complexities and potential sources of error may be less comprehensive than that of the producer.

A rebuttable presumption of defectiveness also applies if a product does not comply with mandatory safety requirements, thus linking product liability with regulatory standards.Footnote 99 Relying on regulatory and certification standards can assist courts in understanding the legitimate expectations of AI safety. These standards can serve as valuable guidance and inform the court about prevailing industry norms and accepted practices. By referencing these established standards, courts can gain insights into what the public deems reasonable and legitimate expectations for AI safety.

Relying on regulatory standards in product liability allows risk/utility-considerations to enter indirectly, through the decisions that set regulatory standards for AI. This essentially shifts the risk/utility-assessment to the certification stage, where determinations are made about obligations, timing, and responsibilities. Regulators may be better positioned than producers or courts to set marketing guidelines for AI systems regarding autonomy and human override capabilities. Enforcing such guidelines through certification and regulation can clarify consumer expectations and ensure accurate marketing of AI autonomy, promoting safer use of these systems. On the producer’s side, regulation can additionally specify requirements for interface design and override capabilities. Regulatory measures are thus crucial in shaping the design of AI-based products, addressing human–machine interaction challenges, and facilitating product liability cases.

6 Conclusion

This paper articulated three key points: firstly, product liability should align with the relative control over risk and awareness of risk possessed by both the producer and the user; secondly, the definition of a defect serves as a pivotal factor in distributing responsibility between the producer and the user; and thirdly, AI systems disrupt the traditional balance of control and risk awareness between users and producers.

Modernising product liability rules for AI requires bridging the gap between the abstract risks that are more prominent in AI and the concrete legal requirements and definitions that should apply. In product liability law, a clear concept of AI defects is essential for assessing the responsibility of AI manufacturers and operators for any harm or injury caused by these systems. However, determining liability for AI-related defects can be complex due to the involvement of multiple stakeholders. Assigning responsibility becomes particularly challenging when defects arise from various sources, such as flawed training data, algorithmic biases, or inadequate system design.

As this paper illustrated, AI poses challenges to defining defect in product liability. The allocation of responsibility for product defects in AI systems should consider the level of control and the information and expertise available to both producers and users. This ensures an assessment of defects that promotes the safe development, deployment, and use of AI technology. When AI systems cannot operate fully autonomously, courts need to consider who is responsible for what aspect of safe human–machine interaction.

Combining the PLD Proposal’s objective factors for evaluating reasonable safety expectations with regulatory and certification standards could address the challenges of applying the consumer-expectation test to AI. By relying on regulatory and certification standards in product liability, the definition of defect becomes more closely aligned with the cost–benefit (risk/utility) analyses of AI safety that regulatory bodies conduct beforehand. This approach allows courts and users to better grasp legitimate safety expectations and industry norms, offering clarity and predictability in defect determinations. It promotes responsible AI development while ensuring legal recourse for harm caused by defective AI systems.

It is crucial to define policy goals for regulating AI broadly, encompassing safety and societal benefits, while addressing gaps in regulation and liability more narrowly. Given the diversity of AI technologies and their applications, regulation and liability should be adaptable to specific contexts. In product liability law, courts will play a significant role in interpreting and applying the rules, but regulation can provide guidance and inform the scope of liability in certain AI contexts.

Notes

1. The AI Act defines an ‘artificial intelligence system’ (AI system) as software that is developed with specific techniques and approaches that can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments with which they interact. These techniques and approaches include machine learning, logic- and knowledge-based approaches, statistical approaches, Bayesian estimation, and search and optimization methods.

2. The term ‘operator’ refers to the human who employs an AI system to make a decision or carry out an activity. The terms ‘operator’, ‘user’ and ‘consumer’ are used interchangeably throughout the paper. The paper distinguishes only between the producer or manufacturer on the one hand and the user (who may be a consumer but could be an operator who is not a consumer) on the other. The paper does not consider further distinctions between types of users or operators.