Are judges human?

—Jerome Frank, American legal philosopher and author.

Abstract

Historically, people have often expressed negative feelings toward speculators, a sentiment that might have even been reinforced since the latest financial crisis, during which taxpayer money was warranted or spent to bail out reckless investors. In this paper, we conjecture that judges may also have anti-speculator sentiment, which might affect their professional decision making. We asked 123 professional lawyers and 247 law students in Germany this question, and they clearly predicted that judges would have an anti-speculator bias. However, in an actual behavioral study, 185 judges did not exhibit such bias. In another sample of 170 professional lawyers, we found weak support for an anti-speculator bias. This evidence suggests that an independent audience may actually perceive unbiased judgments as biased. While the literature usually suggests that a communication problem exists between lawyers and non-lawyers (i.e. between judges and the general public), we find that this problem can also exist within the legal community.

1 Introduction

Judges are supposed to decide cases solely on legal grounds (i.e. based on arguments the law deems relevant) and to disregard arguments that appeal to their personal moral, political, or philosophical standards but are irrelevant from a legal standpoint. There is broad consensus among legal scholars, however, that judges do not always live up to those standards. Judges are, after all, human beings with emotions and moral and political convictions.

The suggestion that judges are not able to completely ignore emotionally appealing but legally irrelevant decision determinants is rather unsurprising from a psychological standpoint. Judges often engage in what psychologists call “motivated reasoning” (Braman 2010; Kunda 1990; Sood 2013; Spellman and Schauer 2012). They want to convince their audience (e.g., individuals in the general public or the regulatory apparatus of the federal government, see Baum 2006; Black et al. 2016) and are focused on reaching certain conclusions. This motivation can affect judges’ information search and processing as well as other components of the decision-making process. This same point finds support in psychological research on confirmation bias (e.g., Snyder and Swann 1978).

However, judges are also professionals. They have been trained to distinguish legally relevant from legally irrelevant aspects of a case. Their job is to make decisions on purely legal grounds, and many judges have been doing that for a very long time. In psychology, it is widely accepted that professional decision makers often perform much better at decision-making tasks than their non-professional counterparts. The question, therefore, is if and to what extent legal training, practice, and experience can insulate judges from the possibility of non-legal arguments influencing their decisions (Kahan et al. 2016; Spellman and Schauer 2012).

In this article, we provide evidence that judges’ decisions are not influenced by legally irrelevant sentiment toward speculators, even though we find that two independent samples of 123 professional lawyers and 247 law students expect them to be biased against speculators. In another sample of 170 lawyers, we find weak evidence for an anti-speculator bias. This adds to a growing body of literature that posits that a biased audience may perceive unbiased judgments as biased. While this literature usually suggests that this “communication problem” exists between lawyers and non-lawyers (i.e. between judges and the public), we show that a similar problem may exist within the legal community. Law students and professional lawyers perceive judges to be biased, even though in our study the former are not biased themselves.

2 Previous literature

There is considerable empirical literature on legal decision making. However, many of the studies focus on how judges and juries find facts rather than how judges interpret and apply statutes, doctrines, or precedents (e.g., Englich et al. 2006; Guthrie et al. 2001; Rachlinski et al. 2009, 2013). Thus, these studies do not investigate the aspect that is unique to legal decision making compared with other forms of professional decision making (Kahan 2015; Spellman and Schauer 2012).

Many studies on legal reasoning use non-professional decision makers as informants such as law students or even laypeople (e.g., Braman and Nelson 2007; Furgeson et al. 2008a, b; Holyoak and Simon 1999; Simon et al. 2001). As such, they are of limited use because they do not reflect the professional part of judicial decision making (Kahan 2015; Kahan et al. 2016).

Empirical investigations of legal reasoning by professional judges can be divided into two groups (Kahan et al. 2016)—observational and experimental studies. The first group contains studies that use correlational analyses in the form of multivariate regression models. These studies usually treat legally irrelevant decision determinants such as judges’ political ideology as one independent variable whose impact on the court’s decisions is assessed after controlling for other independent variables (e.g., Epstein et al. 2013; Segal et al. 2005; Sunstein et al. 2006). Ample evidence shows that political ideology affects judges in civil law countries. For example, Amaral-Garcia et al. (2009) investigate 270 constitutional court decisions from Portugal and find that judges are sensitive to their political affiliations and their political party’s presence in government when voting. Espinosa (2017) analyzes 612 cases from the French Constitutional Council and also finds evidence of voting along political/ideological lines and that judges restrain themselves from invalidating laws. Berger and Neugart (2011) provide evidence for a nomination bias in German labor court activity—that is, courts are more active if unemployment rates are high.

The main advantage of these studies is that they investigate real decisions by sitting judges made under real conditions. A larger methodological problem is that observational studies are necessarily limited to published judicial opinions. This leads to a sampling bias known as the “Priest–Klein” hypothesis (Priest and Klein 1984). It is fair to assume that parties are less likely to litigate disputes if they fear that a favorable outcome is highly improbable. In the literature, these cases are called “easy cases” because they do not involve much legal ambiguity and uncertainty. Those cases will either be settled or not be filed. Consequently, any sample of published decisions will contain a disproportionately high number of “hard cases” (i.e. cases in which legal ambiguity and uncertainty is high). This is why any investigation limited to published opinions will understate the influence of legally relevant arguments that steer cases away from litigation and, on the flipside, is likely to overstate the impact of legally irrelevant determinants (Kahan et al. 2016). In other words, because hard cases involve more legal ambiguity than easy cases, they are more likely to be decided on the grounds of non-legal arguments disguised as legal reasoning. Any study using a sample that contains a disproportionate number of hard cases is therefore likely to overstate the influence of non-legal factors on judicial decision making (Spellman and Schauer 2012).
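The selection argument above can be made concrete with a small numerical sketch. Everything in it is an assumption chosen for illustration only: the grid of ambiguity values, the linear link between ambiguity and the chance of a published opinion, and the linear weight of non-legal factors. None of these numbers comes from the studies cited.

```python
# Illustrative sketch of selection on "hard" cases.
# All functional forms and numbers are hypothetical assumptions.


def mean(values):
    return sum(values) / len(values)


# Legal ambiguity of each dispute on a 0..1 grid (0 = easy, 1 = hard).
ambiguity = [i / 100 for i in range(101)]

# Assumption: the chance that a dispute ends in a published opinion
# rises linearly with its ambiguity (easy cases settle or are not filed).
publication_prob = ambiguity

# Assumption: the weight of non-legal factors in the outcome also
# rises linearly with ambiguity, up to 0.5 for the hardest case.
nonlegal_weight = [0.5 * a for a in ambiguity]

# Average non-legal weight across all disputes.
population_avg = mean(nonlegal_weight)

# Average non-legal weight across published opinions only, i.e. the
# average weighted by each dispute's publication probability.
published_avg = (
    sum(w * p for w, p in zip(nonlegal_weight, publication_prob))
    / sum(publication_prob)
)

print(f"all disputes:      {population_avg:.3f}")
print(f"published opinions: {published_avg:.3f}")
```

Under these assumptions the average over published opinions exceeds the average over all disputes, which is the direction of overstatement described above. The size of the gap depends entirely on the assumed functional forms.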

Experimental studies avoid this methodological problem. Moreover, they allow studying judicial decision making in a more controlled environment, though this artificiality is also a disadvantage of experimental studies, raising questions particularly about the external validity of results. Overall, however, we believe that the benefits of experimental designs outweigh their disadvantages and that such designs are therefore the more promising approach (see Kahan et al. 2016; Sood 2013).

We find only a few experimental studies on legal reasoning by real judges:

- Wistrich et al. (2015) use a between-subjects design to assess whether U.S. judges (state and federal, trial and appellate; n > 1800) are more likely to favor parties displayed as likable or sympathetic over parties displayed as dislikable or unsympathetic, even though these features have no legal relevance. They created two or more versions of various hypothetical civil and criminal law cases, encompassing a wide range of legal reasoning tasks, such as interpreting and applying the law, exercising discretion, and awarding damages. They varied the information that made the parties seem either sympathetic or unsympathetic. This information was irrelevant from a legal standpoint.Footnote 1 Nevertheless, Wistrich et al. (2015) found that judges favored sympathetic parties over unsympathetic parties in all hypothetical cases and, in some cases, to a large extent. They found no evidence that judges’ decisions at the trial level were driven by political ideology.

- Kahan et al. (2016) use a similar method to assess whether U.S. state trial and appellate judges’ (n = 253) cultural worldviews influenced their decisions. They found that, though judges were polarized on topics such as climate change and marijuana legalization, these differences had no effect on their legal judgments. These differences did, however, influence their assessments outside the legal realm, namely in a non-legal risk assessment task that Kahan et al. (2016) also assigned to the judges. Furthermore, with regard to legal reasoning, judges performed much better (i.e. were less influenced by their cultural outlooks) than a sample of law students (n = 284) but not significantly better than a sample of lawyers (n = 217). Kahan et al. (2016) therefore conclude that professional judgment imparted by legal training and experience confers resistance to identity-protective cognition, but only for decisions involving legal reasoning. This result is in line with that of Redding and Repucci (1999).

- Spamann and Klöhn (2016) created a virtual file of an international criminal law appeal case with briefs, precedents, and a trial judgment displayed on a tablet computer. They gave a group of 32 U.S. federal judges 55 min to read the file and decide the case. In addition, they asked judges to briefly state reasons for their decisions in writing. They used a 2 × 2 between-subjects design to determine what had a stronger impact on the judges’ decisions: a weak, distinguishable precedent or legally irrelevant information about the likability of the defendant. They found that the weak precedent had no measurable effect while the legally irrelevant information about the defendant shifted the probability of conviction by 45 percentage points.

In summary, the question of whether judges’ professionalism shields them from the influence of legally irrelevant decision determinants is far from answered. While Kahan et al. (2016) provide evidence that gives reason for optimism, the results of Wistrich et al.’s (2015) and Spamann and Klöhn’s (2016) studies are rather sobering. We add to this literature by providing another piece of evidence largely in line with Kahan et al. (2016).

3 The anti-speculation sentiment in the German judiciary

Drawing on our scientific experience with investor and securities litigation in Germany, we argue that judges in the German judiciary hold a sentiment against capital market speculation and speculators. To be sure, speculators have always had a rather negative image in most, if not all, countries of the world. They have been labeled gamblers or parasites who got rich without working for their money and have been blamed for impoverishing others, causing financial crises, and inflicting harm on society (Fabian 1990). We hypothesize, however, that the anti-speculation sentiment is particularly strong in Germany and that it affects the German judiciary and legal training as well. Several practicing lawyers told us that defendants (e.g. banks, brokers, investment firms) win their cases against investor-plaintiffs if they can convince the judge that the plaintiff was a reckless speculator. Even if the court does not dismiss the claim right away, it will exercise its discretion in favor of the defendant (e.g. in the context of measuring damage or finding contributory negligence). We therefore conjecture:

H1

There is a perceived bias in judicial decision making. Professional lawyers and law students predict that judges will more frequently and more severely punish speculators than other defendants in cases in which a party’s status as a speculator has no legal relevance.

These anecdotes find support in the reasoning of some published decisions by German courts. While the economic literature defines a speculator neutrally as a profit-motivated trader who tries to exploit differences in prices (see Harris 2002), some German courts have equated being a speculator with being irrational, manipulative, reckless, and even less worthy of legal protection. For example, German law makes inducing other people to speculate on stock exchanges a criminal act if the person doing the inducing is taking advantage of other people’s inexperience (§§ 26, 51 Stock Exchange Act). The Federal Court of Justice has stated that the rationale of this provision is not only to protect the economic interest of inexperienced investors but also to prevent the “distortive effect of speculative trades” on stock prices.Footnote 2 In another quite prominent example, the Higher Regional Court of Stuttgart (OLG Stuttgart) wrote in one of its judgments on the landmark Markus Geltl v. Daimler AG caseFootnote 3 that the reasonable investor (a legal standard used to determine whether a piece of information is materialFootnote 4) acted “rationally” and made decisions “contrary to the speculative investor” on reliable factual grounds. Contrast this with what the U.S. Court of Appeals for the Second Circuit wrote in 1968 about the reasonable investor in its famous judgment Securities & Exchange Com. v. Texas Gulf Sulphur Co.: “The speculators and chartists of Wall Street and Bay Street are also ‘reasonable’ investors entitled to the same legal protection afforded conservative traders”.Footnote 5 Given the contrasting picture of speculators among the German judiciary, we hypothesize:

H2

There is an anti-speculator bias in judicial decision making. Judges more frequently and more severely punish speculators than other defendants in cases in which a party’s status as a speculator has no legal relevance.

Here, we do not claim that the negative connotation of speculation and speculators in these judgments is per se “wrong” from a legal perspective (though we believe that in some cases, such as the case of OLG Stuttgart, it clearly was wrong). Furthermore, it is not our intent to get into an economic debate about the positive and negative externalities of speculation and whether speculation should be contained or encouraged from a social welfare perspective (see Kemp and Sinn 2000; Stevens 1892). We are, however, confident that the anti-speculation sentiment among German judges is likely to have an effect on decisions in cases in which it clearly should not guide judges’ decisions from a legal standpoint.

Finally, we were interested in how well lawyers would predict the frequency and extent of contributory negligence in the treatment condition. In Germany, legal education follows the concept of “uniform lawyer education” (einheitliche Juristenausbildung). This means that anyone who aspires to go into the legal profession must undergo the same legal training. This education consists of legal studies for at least 4 years at a university and 2 years of practical training in a court, administrative body, and law firm. The focal point of the education is the qualification for judgeship (Befähigung zum Richteramt). Thus, law students in Germany are trained not merely to “think like a lawyer” but to think like a judge. Therefore, we were optimistic that lawyers would accurately predict whether and to what extent judges would award contributory negligence.

H3

Lawyers and law students will accurately predict how frequently and to what extent judges award contributory negligence in the treatment condition.

4 Method

4.1 Behavioral study

4.1.1 The cases

In line with Wistrich et al. (2015) and Kahan et al. (2016), who distributed short case vignettes to their participants and asked them to solve the cases in a relatively short period, we created three hypothetical cases using a paper-and-pencil research design. All three cases were civil law cases. One case had no relation to the world of investing, while two cases centered on questions of investor protection. In each case, the protagonist had a damage claim against another person, and we asked the participants whether this damage claim should be reduced due to contributory negligence. We created two versions of each case. In one version (the “treatment condition”), the case involved a speculator (i.e. a person whose goal is to earn money in a relatively short period by betting on the development of security prices). In the other version (the “control condition”), the case involved another person such as a doctor, an inventor, or a long-term investor. In each case, the varied information had no legal relevance. Thus, from a purely legal perspective both versions of the case were essentially the same. Table 3 in the “Appendix” provides the original full text and the English translation.

The three cases also differed in terms of their legal ambiguity. In case 1, there was no “one right answer”, so we refer to this case as a “hard case” (for a distinction between “hard” and “easy” cases in legal decision making, see Spellman and Schauer 2012). In a previous article, one of the authors submitted this case (involving no speculator) to various groups of participants, ranging from first-year law students to senior law students and sitting judges (Klöhn and Stephan 2010). On average, all groups of participants found 50% contributory negligence regardless of their legal expertise and experience. Thus, we predicted that 50% of our participants would find contributory negligence in both the treatment and control conditions if participants were not biased against or in favor of speculators. Cases 2 and 3 are easier cases. Under German law, contributory negligence requires that there be a breach of “duty to act diligently in one’s own affairs” (Sorgfalt in eigenen Angelegenheiten). Cases 2 and 3 have no information that could serve as grounds for such a charge. To find contributory negligence, one would need to qualify the investment decision itself as a breach of duty. However, there is no information about why this decision might have been taken carelessly. Thus, we predicted that none of the participants would find contributory negligence in either the control condition or the treatment condition of case 2 and case 3 if participants were not biased against or in favor of speculators.

4.1.2 Participants

For legal case 1, we targeted 80 participants from each of the three cohorts. For legal cases 2 and 3, we targeted 50 participants for each case. We administered the experiment to 614 participants (185 judges, 170 lawyers, and 259 students). Two lawyers and one student did not solve the legal case that was part of the experiment and were subsequently excluded from the analysis. Moreover, response rates to the survey questions varied, so the regression results are based on a somewhat smaller sample. Table 1 provides descriptive statistics of the sample.

4.1.3 Survey questions

After solving one of the cases, all participants had to answer a survey on the back of the sheet of paper that was allocated to them. In terms of demographics, we were interested in participants’ gender, age, place of birth, living standard (“What describes your standard of living?” 1 = “very well off”, 6 = “poor”), relative earnings (“Compared to other households, would you say your household earns less or more money?” 0 = “far less money”, 5 = “far more money”), political orientation (“In political matters, people talk of ‘the left’ and ‘the right’. How would you place your views on this scale, generally speaking?” 0 = “left”, 10 = “right”), and lack of trust (“Generally speaking, would you say that most people can be trusted or that you can’t be too careful in dealing with people?” 0 = “most people can be trusted”, 1 = “you can’t be too careful in dealing with people”). We adapted the trust question from the World Values Survey, which has been used widely in studies comparing levels of generalized trust. We adapted all other questions from Ariely et al. (2019) and Mann et al. (2016). Materials were almost identical in the three settings, with the main exception being that judges were asked additional details about their position in the judiciary and cognizance, lawyers about their position in the law firm and the field of law they specialized in, and students about their major field of law study and their grades in the civil law, criminal law, and public law intermediate examination.

4.1.4 Administration and procedure

For the judge and student samples, the study was administered over a four-month period from August to November 2013. For the lawyer sample, it took us an additional 8 months to reach the targeted sample size. To ensure consistency in the administration, an experimenter traveled to the respective location where the experiment took place. Judges were recruited during training at the German Judicial Academy (Deutsche Richterakademie), Germany’s leading institution for training judges. For the experiment, we recruited judges attending eight different seminars on building law, child custody, asylum law, social justice, organized crime, civil law, criminal economic offences, and procedural law at the Academy centers in Trier and Wustrau. The judges had to solve one of three cases without any preparation or additional material. We recruited the lawyer sample from 11 mid-size and large German and international law firms. The experiment was administered in their offices in Berlin, Düsseldorf, Frankfurt, and Munich. Finally, we recruited the student sample from the advanced corporate law class (Unternehmens- und Gesellschaftsrecht) at the University of Munich; the experiment took place in a lecture hall.

In each case, the experimenter described the study and indicated that participants should complete the study by themselves without exchanging information with their peers. This policy was enforced by the experimenter administering the experiment. The participants were informed that they would have approximately 10–15 min to complete the study. We deliberately decided not to compensate participants because an insufficient financial payment might have offended the professional sense of honor of judges and lawyers.

4.2 Prediction study

4.2.1 Participants

We recruited two separate samples of participants to predict the decisions of the judges for the different cases. The participants in the first sample were, as before, recruited from large German and international law firms based in Germany. Overall, 123 lawyers participated in the prediction study. The participants in the second sample were law students from Free University of Berlin and Humboldt University of Berlin. Overall, 247 law students participated in the prediction study. All participants predicted the outcome of one of the three legal cases for the treatment and control groups. The cases were distributed to them at random. In terms of political orientation, the lawyer predictors were slightly to the right of the 10-point scale (value 0.56); 49% stated that people can generally be trusted, while 51% stated the opposite. Approximately one-third of the participants were women, and the average age was 39 years. The median law student predictor was younger, more to the left in terms of political orientation, more trusting, and female. Table 1 provides descriptive statistics of the predictor samples.

4.2.2 Survey and procedure

Participants of the prediction study were informed that they would be learning about an experiment that was conducted with German judges and that they would be making predictions about the judges’ decisions. The details of one of the cases were explained to them, with the differences in the respective treatment and control group clearly highlighted. The predictors were then asked to estimate (1) the percentage of judges who awarded contributory negligence for the treatment and control groups, respectively, and (2) the extent to which these judges awarded contributory negligence.

5 Results

5.1 Prediction study

In line with H1, we first analyze whether there is a perceived bias in judicial decision making. Our analyses reveal that lawyers and law students predicted such a bias on two dimensions. For legal case 1 (lawyers: 37 vs. 48%, p = 0.049; students: 25 vs. 41%, p < 0.001), legal case 2 (lawyers: 13 vs. 37%, p < 0.001; students: 26 vs. 44%, p < 0.001), and legal case 3 (lawyers: 19 vs. 35%, p = 0.001; students: 34 vs. 47%, p < 0.001), lawyers and law students predicted that speculators would more frequently receive a punishment (see Figs. 1 and 2). Moreover, the lawyers predicted not only that more judges would award contributory negligence if a speculator was involved but also that the contributory negligence awarded would be significantly higher in the treatment group. For legal case 1 (lawyers: 25 vs. 36%, p < 0.001; students: 27 vs. 40%, p < 0.001), legal case 2 (lawyers: 18 vs. 38%, p < 0.001; students: 24 vs. 38%, p < 0.001), and legal case 3 (lawyers: 19 vs. 34%, p < 0.001; students: 27 vs. 40%, p < 0.001), the cohorts expected speculators to be punished more severely (see Figs. 3 and 4). These findings provide robust evidence for H1 that professional lawyers and law students believe that judges might have a bias against speculators and potentially against the financial industry more generally.Footnote 6 To determine whether this is indeed the case, we now turn to the analysis of the behavioral study and the judge sample.

5.2 Behavioral study

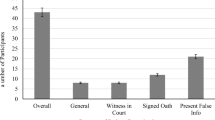

In a second step, we investigate whether judges are affected by the person who might act negligently (see Fig. 5). We find that in legal case 1, judges awarded contributory negligence slightly more often when a doctor rather than a speculator was involved. However, this difference is not statistically significant (63 vs. 50%, p = 0.239). When manipulating the purpose of the investment or the source of the funds, we find similar results. While for legal case 2 contributory negligence is awarded more frequently for the control group (24 vs. 12%, p = 0.279), in legal case 3 speculators are awarded contributory negligence more often, though this difference is not statistically significant (4 vs. 12%, p = 0.307). Regarding the extent of contributory negligence awarded, the judgments did not differ for the doctor or speculator in legal case 1 (36 vs. 38%, p = 0.718). Given that only a few judges awarded contributory negligence at all in legal cases 2 and 3, we can draw no meaningful comparison regarding the extent of contributory negligence awarded. Thus, our data provide no support for H2.
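Comparisons of this kind set the share of participants awarding contributory negligence in one condition against the share in the other. The source does not state which test produced the reported p values, and it does not report the cell counts behind the percentages. The following minimal sketch therefore assumes a two-sided Fisher exact test, one common choice for small 2 × 2 tables, and uses purely hypothetical counts. The function name `fisher_exact_two_sided` is introduced here for illustration.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact p-value for the 2x2 table [[a, b], [c, d]].

    Rows are conditions (e.g. treatment, control); columns are outcomes
    (e.g. awarded, not awarded). Assumed test, not confirmed by the source.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total_tables = comb(row1 + row2, col1)

    def prob(x):
        # Hypergeometric probability of x "awarded" outcomes in row 1,
        # holding all table margins fixed.
        return comb(row1, x) * comb(row2, col1 - x) / total_tables

    p_observed = prob(a)
    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    # Sum the probabilities of all tables that are no more likely than
    # the observed one (small tolerance guards against float ties).
    p_value = sum(
        p
        for p in (prob(x) for x in range(lowest, highest + 1))
        if p <= p_observed * (1 + 1e-9)
    )
    return min(1.0, p_value)


# Hypothetical counts: 3 of 25 award in one condition, 6 of 25 in the other.
p_value = fisher_exact_two_sided(3, 22, 6, 19)
print(f"two-sided p = {p_value:.3f}")
```

With groups of this size, even a gap of roughly ten percentage points can easily fail to reach conventional significance levels, which is one reason non-significant differences in small cells should be read cautiously.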

One explanation for why judges were not affected by the person acting negligently could be that the manipulation was too salient and the cases were easy to decide. We consider this explanation unlikely for three reasons. First, judges only saw one condition (either the treatment or the control condition), and it is highly unlikely that they inferred the manipulation by simply reviewing the case. Second, Danziger et al. (2011) show not only that judges apply legal reasoning to the facts in a rational, mechanical, and deliberative manner but also that psychological, political, and social factors affect their rulings. The latest financial crisis in particular might have triggered anti-speculator sentiments among certain social groups (e.g. judges). Third, if our manipulation was too obvious and, for that reason, the person acting negligently did not exert any effect on legal reasoning, the outcome of our study should not differ for the hard and easy cases. However, we find strong differences between the two types of cases (see Fig. 5); this gives us confidence that our results are not driven merely by the nature of the three cases we developed.

To test H3, we compare the results from the prediction study with the actual outcomes of the behavioral study (see Figs. 6, 7). For the treatment condition of legal case 1, we find that lawyers quite accurately predicted the percentage of judges awarding contributory negligence (50 vs. 48%, p = 0.852). However, for the other two cases, in which contributory negligence should not be awarded, lawyers predicted a significantly higher failure rate in judicial decision making. In legal case 2 (12 vs. 38%, p < 0.001) and legal case 3 (12 vs. 35%, p = 0.002), they predicted that judges would award contributory negligence about three times as frequently as they actually did in the behavioral study. The results for the student sample are similar, with law students deviating even more strongly from the actual outcomes of the behavioral study for all three cases. When considering the extent of contributory negligence in the treatment condition of legal case 1, the contributory negligence awarded was only marginally higher than what lawyers predicted (38 vs. 36%, p = 0.593), which is in line with H3. Again, for the other two legal cases too few judges awarded contributory negligence, so we can make no meaningful comparison regarding the extent of contributory negligence between the behavioral and the prediction study. Overall, the evidence for H3 is mixed: lawyers in particular predicted the frequency and extent to which judges award contributory negligence in the treatment condition quite accurately for the hard case 1, but both lawyers and law students performed rather poorly for the easy cases 2 and 3.

5.3 Supplementary results

In a next step, we investigate whether lawyers or law students exhibit a bias toward speculators. If so, this would count as evidence that judges learn to better control their biases. The evidence shows, however, that neither lawyers nor students exhibit a significant anti-speculator bias (see Figs. 8, 9, respectively). As this result might be due to the small sample size in the respective cases, we also combined the three cases for the different cohorts. However, we found no significant difference between conditions in either the lawyer sample (20 vs. 27%, p = 0.320) or the student sample (37 vs. 33%, p = 0.549).

In a final step, we investigate whether there is a learning effect in the judiciary. If legal training has a positive effect on judicial decision making, we would expect that in legal cases 2 and 3, judges and lawyers would award contributory negligence less often than students, who can still be considered judicial laypeople. The effect of legal training might even be more pronounced because only the smartest students finish university with a law degree and become lawyers or even judges. Thus, some of the students in our sample might be considered advanced laypeople, because they will not finish their law degree and will take a job outside the judiciary. To investigate the effect of learning and selection, we combined the treatment and control conditions and investigated how often the different cohorts awarded contributory negligence. For the two “easy cases”, we observe that judges and lawyers did not differ in the frequency of how often they awarded contributory negligence (13 vs. 12%, p = 1.000). However, students awarded contributory negligence significantly more often than judges (26 vs. 13%, p = 0.017) and significantly more often than lawyers (26 vs. 12%, p = 0.006) (see Fig. 10). Thus, legal training seems to improve participants’ ability to ignore irrelevant facts.

Fig. 10 Percentage of participants awarding contributory negligence (treatment and control group combined). p values of Bonferroni multiple-comparison test for legal cases 2 and 3: Lawyers − Judges: Diff. = −1.2%, p = 1.000; Students − Judges: Diff. = 13.5%, p = 0.017; Students − Lawyers: Diff. = 14.7%, p = 0.006
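To illustrate how a Bonferroni adjustment for three pairwise cohort comparisons works, the following is a minimal sketch, not the procedure used in the study. The source does not say which pairwise test underlies the adjustment, so the pooled two-proportion z-test here is an assumption, and the cell counts in `cohorts` are hypothetical because only percentages are reported. The function names `two_proportion_p` and `bonferroni` are likewise illustrative.

```python
import math
from itertools import combinations


def two_proportion_p(x1, n1, x2, n2):
    """Two-sided p value of a pooled two-proportion z-test."""
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (x1 / n1 - x2 / n2) / se
    # For a standard normal Z, P(|Z| > |z|) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# HYPOTHETICAL counts (awards, participants); the paper reports only percentages.
cohorts = {"Judges": (13, 100), "Lawyers": (12, 100), "Students": (26, 100)}

pairs = list(combinations(cohorts, 2))
raw = [two_proportion_p(*cohorts[a], *cohorts[b]) for a, b in pairs]
for (a, b), p_raw, p_adj in zip(pairs, raw, bonferroni(raw)):
    print(f"{a} vs {b}: raw p = {p_raw:.3f}, Bonferroni p = {p_adj:.3f}")
```

Because each raw p value is multiplied by the number of comparisons and capped at 1, an adjusted value of exactly 1.000 appears whenever the raw p value exceeds one third, which is how a near-zero difference such as the one between lawyers and judges can produce p = 1.000.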

To control for confounding variables, we run a Probit regression. Our dependent variable is the decision of a participant to award contributory negligence or not. For all the regressions reported in Table 2, we calculate robust standard errors and report average marginal effects. Table 4 in the “Appendix” provides a full description of the explanatory variables. To identify the effect of our treatment condition, we first include a dummy variable, speculator dummy, that is equal to 1 if the respective participant received the treatment condition and 0 otherwise. When pooling the different cohorts (columns 1 and 2), we again do not find any evidence of a speculator bias, either for the “hard case” or for the two “easy cases”. However, when investigating the different cohorts individually (columns 3–8), we find some evidence for the “easy cases” that lawyers, after having predicted a speculator bias for the judges, themselves suffer from an anti-speculator bias. This finding is only weakly significant, at the 10% level.
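For readers unfamiliar with average marginal effects in a Probit model, the sketch below shows one common way to compute the effect of a dummy regressor: the predicted probability is evaluated for every observation with the dummy set to 1 and to 0, and the differences are averaged. This is an illustration under stated assumptions, not the authors' estimation code. The source does not say whether the reported effect for the dummy is such a discrete change or a derivative, and the coefficients and covariate rows below are hypothetical values chosen only to make the example run.

```python
import math


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def dummy_ame(intercept, beta_dummy, other_betas, rows):
    """Average discrete change in P(y = 1) when the dummy switches from 0 to 1.

    rows holds one covariate vector per observation, excluding the dummy.
    """
    total = 0.0
    for x in rows:
        base = intercept + sum(b * v for b, v in zip(other_betas, x))
        total += norm_cdf(base + beta_dummy) - norm_cdf(base)
    return total / len(rows)


# HYPOTHETICAL Probit coefficients and covariates (for illustration only).
intercept = -1.0
beta_speculator = 0.2      # coefficient on the treatment dummy
other_betas = [0.3, -0.1]  # coefficients on two further covariates
rows = [[1.0, 2.0], [0.0, 1.0], [2.0, 0.5]]

ame = dummy_ame(intercept, beta_speculator, other_betas, rows)
print(f"Average marginal effect of the dummy: {ame:.3f}")
```

The resulting number is read on the probability scale: it is the average change in the probability of awarding contributory negligence associated with the treatment condition, holding the other covariates at their observed values.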

Moreover, we find robust evidence that participants’ distrust increases the likelihood that they award contributory negligence in the “hard case”, an effect driven mostly by the judge and student cohorts but not the lawyer cohort. Finally, we find evidence that younger lawyers more often award contributory negligence in the “easy cases”. For these two cases, both living standard and household income play a relevant role in the award of contributory negligence, particularly in the lawyer and judge cohorts.

As a robustness test, we estimated all models with ordinary least squares and found results similar to those of the Probit regressions (footnote 7).

6 Conclusion

In this study, we investigated whether sitting judges disfavor speculators relative to members of other professions in cases in which a party's status as a speculator has no legal relevance. Two samples of 123 professional lawyers and 247 law students predicted that judges would hold biases against speculators. Our behavioral study, however, reveals no such bias. This result is in line with recent literature that posits that judges’ professionalism shields them from the influence of legally irrelevant decision determinants. The discrepancy between the lawyers’ predictions and the judges’ actual behavior is in line with a view recently expressed in the literature that a biased audience may perceive unbiased judgments as biased (Kahan 2011). In our study, law students and professional lawyers wrongly predicted that judges would be biased, though we find no or only weak evidence that law students and lawyers are biased themselves.

Our study also has clear limitations. While experimental studies enable the experimenter to better control for confounding variables, they also come with some disadvantages. Unlike in observational studies, judges’ decisions did not have any impact on people’s lives in our experiment. Arguably, if they had, judges might have decided even more carefully, and observing a bias in the behavioral study might have been even less likely. Nevertheless, future research might investigate potential biases of judges using real cases in which judges’ decisions have an impact on actual people. Interviews and prediction studies among lawyers might be novel ways to guide researchers in testing these biases.

Notes

Bundesgerichtshof 7.12.1979—2 StR 315/79 Neue Juristische Wochenschrift 1980, p. 1007 sub B I. (quoting a judgment by the Imperial Court and legislative material).

Oberlandesgericht Stuttgart 22.4.2009—20 Kap 1/08 Neue Zeitschrift für Gesellschaftsrecht 2009, p. 624, 628 (DaimlerChrysler II).

Expected to have an impact on the price of the security.

Securities & Exchange Com. v. Texas Gulf Sulphur Co., 401 F.2d 833, 849 (2d Cir. 1968).

The magnitude of the predicted bias is not significantly different for the lawyer and law student predictors.

These results are available on request.

References

Amaral-Garcia, S., Garoupa, N., & Grembi, V. (2009). Judicial independence and party politics in the Kelsenian constitutional courts: The case of Portugal. Journal of Empirical Legal Studies, 6(2), 381–404.

Ariely, D., Garcia-Rada, X., Gödker, K., Hornuf, L., & Mann, H. (2019). The impact of two different economic systems on dishonesty. European Journal of Political Economy, forthcoming.

Baum, L. (2006). Judges and their audiences: A perspective on judicial behavior. Princeton, NJ: Princeton University Press.

Berger, H., & Neugart, M. (2011). Labor courts, nomination bias, and unemployment in Germany. European Journal of Political Economy, 27(4), 659–673.

Black, R. C., Owens, R. J., Wedeking, J., & Wohlfarth, P. C. (2016). U.S. Supreme Court opinions and their audiences. Cambridge: Cambridge University Press.

Braman, E. (2010). Searching for constraint in legal decision making. In D. E. Klein & G. Mitchell (Eds.), The psychology of judicial decision making (pp. 203–220). New York: Oxford University Press.

Braman, E., & Nelson, T. E. (2007). Mechanism of motivated reasoning? Analogical perception in discrimination disputes. American Journal of Political Science, 51, 940–956.

Danziger, S., Levav, J., & Avnaim-Pesso, L. (2011). Extraneous factors in judicial decisions. Proceedings of the National Academy of Sciences, 108(17), 6889–6892.

Englich, B., Mussweiler, T., & Strack, F. (2006). Playing dice with criminal sentences: The influence of irrelevant anchors on experts’ judicial decision making. Personality and Social Psychology Bulletin, 32, 188–200.

Epstein, L., Landes, W. M., & Posner, R. A. (2013). The behavior of federal judges: A theoretical and empirical study of rational choice. Cambridge, MA: Harvard University Press.

Espinosa, R. (2017). Constitutional judicial behavior: Exploring the determinants of the decisions of the French Constitutional Council. Review of Law & Economics, 13(2), 1–41.

Fabian, A. (1990). Card sharps, dream books, and bucket shops: Gambling in nineteenth-century America. Ithaca: Cornell University Press.

Furgeson, J. R., Babcock, L., & Shane, P. M. (2008a). Behind the mask of method: Political orientation and constitutional interpretive preferences. Law and Human Behavior, 32, 502–510.

Furgeson, J. R., Babcock, L., & Shane, P. M. (2008b). Do a law’s policy implications affect beliefs about its constitutionality? An experimental test. Law and Human Behavior, 32, 219–227.

Guthrie, C., Rachlinski, J. J., & Wistrich, A. J. (2001). Inside the judicial mind. Cornell Law Review, 86, 777–830.

Harris, L. (2002). Trading & exchanges. Market microstructure for practitioners. Oxford: Oxford University Press.

Holyoak, K. J., & Simon, D. (1999). Bidirectional reasoning in decision making by constraint satisfaction. Journal of Experimental Psychology: General, 128, 3–31.

Kahan, D. M. (2011). Foreword: Neutral principles, motivated cognition, and some problems for constitutional law. Harvard Law Review, 125, 1–77.

Kahan, D. M. (2015). Laws of cognition and the cognition of law. Cognition, 135, 56–60.

Kahan, D. M., Hoffman, D., Evans, D., Devins, N., Lucci, E., & Cheng, K. (2016). “Ideology” or “situation sense”? An experimental investigation of motivated reasoning and professional judgment. University of Pennsylvania Law Review, 164, 349–439.

Kemp, M. C., & Sinn, H. W. (2000). A simple model of privately profitable but socially useless speculation. Japanese Economic Review, 51, 84–95.

Klöhn, L., & Stephan, E. (2010). Psychologische Aspekte der Urteilsbildung bei juristischen Experten. In S. Holzwarth, U. Lambrecht, S. Schalk, A. Späth, & E. Zech (Eds.), Die Unabhängigkeit des Richters (pp. 65–94). Tübingen: Mohr Siebeck.

Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108, 480–498.

Mann, H., Garcia-Rada, X., Hornuf, L., Tafurt, J., & Ariely, D. (2016). Cut from the same cloth: Similarly dishonest individuals across countries. Journal of Cross-Cultural Psychology, 47, 858–874.

Priest, G. L., & Klein, B. (1984). The selection of disputes for litigation. Journal of Legal Studies, 13, 1–55.

Rachlinski, J. J., Johnson, S. L., Wistrich, A. J., & Guthrie, C. (2009). Does unconscious racial bias affect trial judges? Notre Dame Law Review, 84, 1195–1246.

Rachlinski, J. J., Wistrich, A. J., & Guthrie, C. (2013). Altering attention in adjudication. UCLA Law Review, 60, 1586–1618.

Redding, R. E., & Reppucci, N. D. (1999). Effects of lawyers’ socio-political attitudes on their judgments of social science in legal decision making. Law and Human Behavior, 23, 31–54.

Segal, J. A., Spaeth, H. J., & Benesh, S. C. (2005). The Supreme Court in the American legal system. Cambridge: Cambridge University Press.

Simon, D., Pham, L. B., Le, Q. A., & Holyoak, K. J. (2001). The emergence of coherence over the course of decision making. Journal of Experimental Psychology. Learning, Memory, and Cognition, 27, 1250–1260.

Snyder, M., & Swann, W. B. (1978). Hypothesis-testing processes in social interaction. Journal of Personality and Social Psychology, 36, 1202–1212.

Sood, A. M. (2013). Motivated cognition in legal judgment—An analytic review. Annual Review of Law and Social Science, 9, 307–325.

Spamann, H., & Klöhn, L. (2016). Justice is less blind, and less legalistic, than we thought: Evidence from an experiment with real judges. Journal of Legal Studies, 45, 255–280.

Spellman, B., & Schauer, F. (2012). Legal reasoning. In K. J. Holyoak & R. G. Morrison (Eds.), The Oxford handbook of thinking and reasoning (2nd ed., pp. 719–734). Oxford: Oxford University Press.

Stevens, A. C. (1892). The utility of speculation in modern commerce. Political Science Quarterly, 7, 419–430.

Sunstein, C. R., Schkade, D., Ellman, L. M., & Sawicki, A. (2006). Are judges political? An empirical analysis of the federal judiciary. Washington, DC: Brookings Institution Press.

Wistrich, A. J., Rachlinski, J. J., & Guthrie, C. (2015). Heart versus head: Do judges follow the law or follow their feelings? Texas Law Review, 93, 855–923.

Acknowledgements

We thank two anonymous referees, Dan Kahan, Giovanni Ramello, Eyal Zamir and the participants in the Joint Law and Economics Symposium by the German National Academy of Sciences Leopoldina and the Israel Academy of Sciences and Humanities (Berlin, Germany), the 4th Conference on Economic Analysis of Litigation (Paris, France) and the brownbag seminar at the University of Trier (Trier, Germany) for their thoughtful comments and suggestions. We also thank Gerrit Engelmann and Tobias Knappe for their excellent research assistance. Open access funding provided by Max Planck Society.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hornuf, L., Klöhn, L. Do judges hate speculators?. Eur J Law Econ 47, 147–169 (2019). https://doi.org/10.1007/s10657-018-09608-z

DOI: https://doi.org/10.1007/s10657-018-09608-z