Abstract

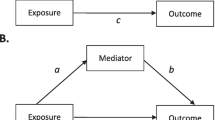

Definitions and conceptualizations of confounding and selection bias have evolved over the past several decades. An important advance occurred with development of the concept of exchangeability. For example, if exchangeability holds, risks of disease in an unexposed group can be compared with risks in an exposed group to estimate causal effects. Another advance occurred with the use of causal graphs to summarize causal relationships and facilitate identification of causal patterns that likely indicate bias, including confounding and selection bias. Although the two concepts are closely related, exchangeability is defined in the counterfactual-model framework, whereas confounding paths are defined in the causal-graph framework. Moreover, the precise relationships between these concepts have not been fully described. Here, we summarize definitions and current views of these concepts. We show how bias, exchangeability and biasing paths interrelate and provide justification for key results. For example, we show that absence of a biasing path implies exchangeability, but that the reverse implication need not hold without an additional assumption, such as faithfulness. The close links shown are expected. However, confounding, selection bias and exchangeability are basic concepts, so a comprehensive summary and a definitive demonstration of the links between them are important. This work thus facilitates and adds to our understanding of these important biases.

Notes

For example, a Google Scholar search (1/6/14) for “exchangeability” and “confounding path” identified 25 publications; none give conditions for which exchangeability implies no confounding path, or conversely.

References

Rothman KJ, Greenland S, Lash TL. Modern epidemiology. 3rd ed. Philadelphia: Lippincott Williams & Wilkins; 2008.

Rothman KJ. Modern epidemiology. Boston: Little, Brown; 1986.

Greenland S, Robins J, Pearl J. Confounding and collapsibility in causal inference. Stat Sci. 1999;14:29–46.

Miettinen OS, Cook EF. Confounding: essence and detection. Am J Epidemiol. 1981;114:593–603.

Greenland S, Robins J. Identifiability, exchangeability, and epidemiologic confounding. Int J Epidemiol. 1986;15:413–9.

Greenland S, Brumback B. An overview of relations among causal modelling methods. Int J Epidemiol. 2002;31:1030–7.

Greenland S, Robins JM. Identifiability, exchangeability and confounding revisited. Epidemiol Perspect Innov. 2009;6:4. doi:10.1186/1742-5573-6-4.

Pearl J. Causal diagrams for empirical research (with discussion). Biometrika. 1995;82:669–710.

Pearl J. Some aspects of graphical models connected with causality. In: 49th session of the International Statistical Institute, Florence, Italy; 1993.

Glymour MM, Greenland S. Causal diagrams. In: Rothman KJ, Greenland S, Lash TL, editors. Modern epidemiology. 3rd ed. Philadelphia: Lippincott Williams & Wilkins; 2008. p. 183–209.

Greenland S, Pearl J, Robins J. Causal diagrams for epidemiologic research. Epidemiology. 1999;10:37–48.

Greenland S. Quantifying biases in causal models: classical confounding vs collider-stratification bias. Epidemiology. 2003;14:300–6.

Pearl J. Causality. 2nd ed. Cambridge: Cambridge University Press; 2009.

Greenland S, Pearl J. Adjustments and their consequences—collapsibility analysis using graphical models. Int Stat Rev. 2011;79:401–26.

VanderWeele TJ, Robins JM. Directed acyclic graphs, sufficient causes, and the properties of conditioning on a common effect. Am J Epidemiol. 2007;166:1096–104.

Robins JM, Richardson T. Alternative graphical causal models and the identification of direct effects. In: Shrout P, Keyes K, Ornstein K, editors. Causality and psychopathology: finding the determinants of disorders and their cures. Oxford: Oxford University Press; 2010. p. 103–58.

Hernán MA, Robins J. Causal inference. 2012 ed; 2012. http://www.hsph.harvard.edu/miguel-hernan/causal-inference-book/. Accessed 1 Oct 2012.

Greenland S, Pearl J. Causal diagrams. In: Boslaugh S, editor. Encyclopedia of epidemiology. Thousand Oaks: Sage; 2007. p. 149–56.

Rubin DB. Estimating causal effects of treatments in randomized and nonrandomized studies. J Educ Psychol. 1974;66:688–701.

Hernán MA, Robins J. A definition of causal effect for epidemiology. J Epidemiol Community Health. 2004;58:265–71.

Maldonado G, Greenland S. Estimating causal effects. Int J Epidemiol. 2002;31:422–9.

Rubin DB. Comment: Neyman (1923) and causal inference in experiments and observational studies. Stat Sci. 1990;5:472–80.

Rubin DB. Direct and indirect causal effects via potential outcomes. Scand J Stat. 2004;31:161–70.

Hernán MA, Robins JM. Estimating causal effects from epidemiological data. J Epidemiol Community Health. 2006;60:578–86.

VanderWeele TJ. Causal mediation analysis with survival data. Epidemiology. 2011;22:582.

Pearl J, Paz A. Confounding equivalence in causal inference. J Causal Inference. 2012;2:75–93.

Richardson TS, Robins JM. Single world intervention graphs (SWIGs): a unification of the counterfactual and graphical approaches to causality. Working paper number 128. Center for Statistics and the Social Sciences, University of Washington. 2013. http://www.csss.washington.edu/Papers/wp128.pdf.

Acknowledgments

We thank Dr. Sander Greenland (University of California, Los Angeles) for his helpful comments and correspondence regarding this manuscript.

Appendix 1

In this Appendix, we define additional terms, state assumptions and provide proofs of Claims 1B, 2A, 3, 4A and 4B. Throughout, we assume that the causal model is Markovian [13], as defined below.

Causal models

Following Pearl [13, p. 27], a functional causal model, or just causal model, is a set of structural equations that determines the potential outcome \(x_i\) of \(X_i\) for each dependent variable by:

\[ x_i = f_i(pa_i, u_i), \quad i = 1, \ldots, n, \qquad (4) \]

where i indexes the variables; \(f_i(\cdot)\) is a function; \(pa_i\) is a value of the variables \(PA_i\), which are the parents of \(X_i\) (i.e., the immediate causes of \(X_i\)); \(u_i\) is a value of the error term \(U_i\); and n is the number of variables. The errors, sometimes called disturbances, are often unobserved and can be viewed as representing omitted factors. In Eq. (3) of the main text, \(X_i\) could represent D; then \(PA_D\) consists of E and C, and \(U_i\) is \(U_1\).

Equations (4) give the effect on each \(X_i\) that would result from changing \(PA_i\) or \(U_i\) from one value to another. They are assumed to represent autonomous causal mechanisms or effects. When the forms of the \(f_i(\cdot)\) are unspecified, the equations define a non-parametric structural-equations model (NPSEM), which generalizes the linear structural-equations models with Gaussian errors often found in the econometric and social-science literature [13]. The structural equations thus provide formulas for the potential outcomes that would occur under actions that set the relevant parents to specific combinations of values [13].
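The structural equations can be made concrete with a small sketch. The toy model below is hypothetical (it is not Example 1 or any equation from the main text): it assumes the DAG C → E, C → D, E → D with independent binary error terms, and it obtains the potential outcome D(e) by replacing the equation for E with the constant e. The final check confirms consistency, D = D(E), for every setting of the errors.

```python
from itertools import product
from typing import Dict, Optional


def f_C(u_c: int) -> int:
    # C has no parents: it is determined by its error term alone.
    return u_c


def f_E(c: int, u_e: int) -> int:
    # E equals C unless the error U_E flips it.
    return c ^ u_e


def f_D(e: int, c: int, u_d: int) -> int:
    # If U_D = 1, D follows E; if U_D = 0, D follows C.
    return e if u_d == 1 else c


def solve(u: Dict[str, int], do_e: Optional[int] = None) -> Dict[str, int]:
    """Evaluate the structural equations in causal order.

    If do_e is given, the equation for E is replaced by the constant do_e,
    which yields the potential outcomes under the action "set E to do_e".
    """
    c = f_C(u["C"])
    e = f_E(c, u["E"]) if do_e is None else do_e
    d = f_D(e, c, u["D"])
    return {"C": c, "E": e, "D": d}


def potential_outcome_D(u: Dict[str, int], e: int) -> int:
    return solve(u, do_e=e)["D"]


# Each "unit" is one setting of the independent error terms (U_C, U_E, U_D).
units = [dict(zip("CED", bits)) for bits in product((0, 1), repeat=3)]

# Consistency: the observed D equals D(e) evaluated at the observed e.
consistent = all(
    solve(u)["D"] == potential_outcome_D(u, solve(u)["E"]) for u in units
)
print(consistent)  # True
```

Replacing an equation, rather than conditioning on E, is what distinguishes the intervention "set E to e" from observing E = e.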

Without some restriction, \(D_i(e)\) could depend on the exposures of other individuals, as might be true, for example, of communicable diseases. Rubin describes a no-interference condition, under which the exposure of one individual does not affect the outcomes of others. To rule out such interference, we make the “stable unit treatment value assumption”: \(D_i(e) \mathop \coprod \nolimits E_{i^*}\) for all i and i* ≠ i.

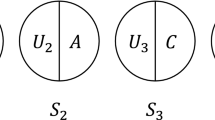

Markovian causal model

If, in a causal model, we draw an arrow from each direct cause of each variable \(X_i\) (i.e., from each member of \(PA_i\)) to \(X_i\), we obtain a causal diagram for the model. Each node (letter) in such a diagram represents a variable \(X_i\), and each arrow represents a causal effect, with the arrowhead pointing to the affected variable. If the causal diagram contains no cycles, so that it is a directed acyclic graph (DAG), and the error terms are jointly independent, we call the causal model Markovian [13, p. 30]. Each Markovian causal model induces a compatible probability distribution [13], which we use here. With the appropriate distribution for \(U_i\), we can write \(P(X_i = x_i \mid PA_i = pa_i) = P(U_i \in \{u : f_i(pa_i, u) = x_i\})\). This model is a nonparametric structural-equations model with independent errors (NPSEM-IE). It differs from the “finest fully randomized causally interpretable tree graph” (FFRCITG) of Richardson and Robins [24] because its errors are assumed independent; the NPSEM-IE is a special case of the FFRCITG.
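For a discrete error term, the formula \(P(X_i = x_i \mid PA_i = pa_i) = P(U_i \in \{u : f_i(pa_i, u) = x_i\})\) can be evaluated by summing the error probabilities over the set of u values that produce \(x_i\). The Python sketch below assumes a hypothetical structural equation and error distribution, chosen only for illustration; exact fractions avoid rounding.

```python
from fractions import Fraction
from typing import Callable, Dict


def conditional_prob(
    f: Callable[[int, int], int],
    pa: int,
    x: int,
    p_u: Dict[int, Fraction],
) -> Fraction:
    """P(X = x | PA = pa) = P(U in {u : f(pa, u) = x}) for a discrete error U."""
    return sum((p for u, p in p_u.items() if f(pa, u) == x), Fraction(0))


def f_x(pa: int, u: int) -> int:
    # Hypothetical structural equation: X = 1 exactly when pa + u >= 3.
    return int(pa + u >= 3)


# Hypothetical error distribution: U takes values 0..3.
p_u = {0: Fraction(1, 10), 1: Fraction(2, 10), 2: Fraction(3, 10), 3: Fraction(4, 10)}

print(conditional_prob(f_x, 1, 1, p_u))  # 7/10 (u in {2, 3})
print(conditional_prob(f_x, 0, 1, p_u))  # 2/5 (u = 3 only)
```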

Further note on DAGs

Other models, both causal and non-causal, can also be represented by DAGs. Here, we use only the causal interpretation, with independent errors (represented by a U), so that our DAGs represent Markovian causal models. With this link and these restrictions, we can use either the non-parametric structural equations (Appendix Eq. 4) or the graphical representation of a Markovian causal model. In a Markovian causal model, a compatible induced probability distribution always exists and factors as \(P(X_1 = x_1, \ldots, X_n = x_n) = \prod_{i} P(X_i = x_i \mid PA_i = pa_i)\) [13, p. 30].
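As a minimal sketch of the factorization, assume a hypothetical binary model with DAG C → E, C → D, E → D and made-up conditional probability tables (none of these values come from the article). The joint distribution is the product of each node's probability given its parents, and it sums to 1 as required.

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical conditional probability tables for C -> E, C -> D, E -> D.
p_c1 = F(2, 5)                              # P(C = 1)
p_e1_given_c = {0: F(1, 5), 1: F(3, 5)}     # P(E = 1 | C = c)
p_d1_given_ec = {(0, 0): F(1, 10), (0, 1): F(3, 10),
                 (1, 0): F(1, 5), (1, 1): F(1, 2)}  # P(D = 1 | E = e, C = c)


def bern(p1, x):
    """P(X = x) for a binary X with P(X = 1) = p1."""
    return p1 if x == 1 else 1 - p1


def joint(c, e, d):
    # Markov factorization: product over nodes of P(node | its parents).
    return bern(p_c1, c) * bern(p_e1_given_c[c], e) * bern(p_d1_given_ec[(e, c)], d)


table = {(c, e, d): joint(c, e, d) for c, e, d in product((0, 1), repeat=3)}
print(sum(table.values()))  # 1
```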

Confounding

As noted in the main text, many definitions of confounding (and of other concepts) are available. Our main focus is the relationship between exchangeability, bias and biasing paths, but, for completeness, we provide one precise definition of confounding [11]. Although the two notions overlap heavily, the presence of confounding under this definition does not always coincide with the presence of a confounded exposure-disease association. For example, suppose that E precedes D, and consider the three DAGs in Fig. 4. The upper DAG shows no confounding under our definition [11], since E and D would be unassociated if all effects of E were removed; yet the association is confounded, since there is a confounding path (conditioning on the collider C1, indicated by the box, opens the path). The middle DAG shows confounding, since E and D would be associated if all effects of E were removed; yet the association is not confounded, since there is no confounding path (the biasing path does not end with an arrow into D). This middle DAG nevertheless illustrates a biasing path [1]; because it involves conditioning on a common effect of exposure and disease, it is an instance of what is referred to as structural selection bias.

Other definitions of confounding and of other biases, while similar to those used here, are available and can differ. We adopted one set of definitions from the literature so that we could focus on, and summarize, the inter-relationships between conceptualizations in the different frameworks; in doing so, we avoid some of the discussion about the strengths, weaknesses and relative merits of one option over another. Nevertheless, it might be useful for the wider epidemiologic community to move towards adoption of a single set of definitions.

Structural selection bias is defined as bias that results from conditioning on a variable caused by two other variables, one of which is exposure or a cause thereof and the other disease or a cause thereof [17]. As such, it is a type of collider bias. In the DAG framework, this situation would be represented by a biasing path. However, the definition of a confounding path used here [1] includes some situations that represent both a confounding path and structural selection bias (e.g., the uppermost DAG of Fig. 4). Hence, we primarily refer to “biasing paths.” A few, perhaps unusual, biasing paths meet neither this definition of structural selection bias (although more inclusive definitions are available; see the Appendix of Ref. [17]) nor the definition of a confounding path (lowest DAG of Fig. 4).
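Whether a given path is open can be checked mechanically with the usual path-blocking rules: a conditioned-on non-collider blocks the path, and a collider blocks it unless the collider or one of its descendants is conditioned on. The helper below is a hypothetical sketch, not taken from the article. It stores a DAG as a mapping from each node to its children. It uses an M-shaped structure, which may differ from the DAGs in Fig. 4, to show that conditioning on a collider opens a path.

```python
from typing import Dict, List, Set


def descendants(dag: Dict[str, Set[str]], node: str) -> Set[str]:
    """All nodes reachable from node along directed edges (node excluded)."""
    seen: Set[str] = set()
    stack = list(dag.get(node, ()))
    while stack:
        v = stack.pop()
        if v not in seen:
            seen.add(v)
            stack.extend(dag.get(v, ()))
    return seen


def path_is_open(dag: Dict[str, Set[str]], path: List[str], z: Set[str]) -> bool:
    """Is a path (a list of adjacent nodes, arrows in any direction) open given z?"""
    for prev, mid, nxt in zip(path, path[1:], path[2:]):
        collider = mid in dag.get(prev, set()) and mid in dag.get(nxt, set())
        if collider:
            # A collider blocks unless it or one of its descendants is in z.
            if mid not in z and not (descendants(dag, mid) & z):
                return False
        elif mid in z:
            # A conditioned-on chain or fork node blocks the path.
            return False
    return True


# Hypothetical M-shaped structure: E <- A -> C1 <- B -> D.
m_dag = {"A": {"E", "C1"}, "B": {"C1", "D"}}
m_path = ["E", "A", "C1", "B", "D"]
print(path_is_open(m_dag, m_path, set()))   # False: unconditioned collider C1 blocks
print(path_is_open(m_dag, m_path, {"C1"}))  # True: conditioning on C1 opens the path
```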

We now sketch proofs of Claims 1B, 2A, 3, 4A and 4B.

Proof of Claim 1B

(Exchangeability and faithfulness imply absence of a biasing path.) We prove an equivalent form of the contrapositive: a biasing path and faithfulness imply non-exchangeability. Presence of a biasing path implies that the DAG includes an open, undirected path. An example is illustrated in Fig. 5, where m and n are the numbers of additional variables along the path that are intermediate between C and E and between C and D, respectively. Additional variables not on the path can also be present. Each factor on the path is determined by its parents along the path plus other parents not on the path, according to the structural equations:

Fig. 5. Causal graph illustrating a biasing path with m descendants of C that are ancestors of exposure E, and n descendants of C that are ancestors of outcome D (see Appendix 1).

In the equation \(E(y_m, pa_E, u_E) = f_E(y_m, pa_E, u_E)\), \(E(y_m, pa_E, u_E)\) is the potential value of E if \(Y_m\) were set to \(y_m\), \(PA_E\) to \(pa_E\), and the independent random error term \(U_E\) to \(u_E\); analogous statements hold for the other equations. Here \(PA_X\) denotes the parents of X, excluding \(U_X\) and the factors explicitly included on the path shown. The path depicted is a special type of biasing path: a backdoor path, with an arrow into E and without conditioning on any collider on the path. However, the n + m + 3 equations above can be modified to reflect other biasing paths; for each type, we still have n + m + 3 equations for the variables on the path. [For example, the path in Fig. 6 is a biasing path with one controlled collider (\(Y_m\)). To reflect this, we would need to modify two equations, setting \(E(pa_E, u_E) = f_E(pa_E, u_E)\) and \(Y_m(e, y_{m-1}, pa_{Y_m}, u_{Y_m}) = f_{Y_m}(e, y_{m-1}, pa_{Y_m}, u_{Y_m})\).]

Fig. 6. Causal graph illustrating a biasing path with m − 1 descendants of C that are parents of a descendant (\(Y_m\)) of exposure E, conditioning on the collider \(Y_m\), which opens the path between C and E, and n descendants of C that are ancestors of outcome D (see Appendix 1). A box indicates control for the variable.

We show that one can always choose parameters so that \(P(D(e) \mid E = 1) \neq P(D(e) \mid E = 0)\) for e = 0, 1. Because the functions are unspecified, we can define and parameterize each function (e.g., \(f_D\)) so that it depends on the unmeasured error term (e.g., \(U_D\)) and on the immediate parent on the path (e.g., \(Z_n\)), but only negligibly on other variables (e.g., \(PA_D\)). We first consider the simplest case (Fig. 1a), in which C affects both E and D directly and no \(Y_i\) or \(Z_i\) are present. We can define:

Then, with α1 = β1 = 1,000, α2 = β2 = γ = 1, and β0 = 0, E has no effect: D(1) = D(0). Also, to a close approximation, D(e) = 1 if and only if C = 1, so \(P(D(e) = 1 \mid E = 1) \approx P(C = 1 \mid E = 1) \approx 1\) and \(P(D(e) = 1 \mid E = 0) \approx P(C = 1 \mid E = 0) \approx 0\). This implies \(P(D(e) \mid E = 1) \neq P(D(e) \mid E = 0)\) for e = 0, 1 and, by consistency, \(P(D \mid E = 1) \neq P(D \mid E = 0)\).
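Because the functional forms with α1, α2, β0, β1, β2 and γ are not reproduced here, the sketch below substitutes hypothetical equations with the same qualitative behavior. Under these equations, E equals C and D(e) equals C, each except for a small error flip, and E does not enter the equation for D. Exact enumeration over C, \(U_E\) and \(U_D\) then shows \(P(D(e) = 1 \mid E = 1)\) close to 1 and \(P(D(e) = 1 \mid E = 0)\) close to 0, even though D(1) = D(0).

```python
from fractions import Fraction as F
from itertools import product

# Hypothetical simplest case (C -> E, C -> D, no effect of E on D):
# E = C unless U_E = 1, and D(e) = C unless U_D = 1, for both e = 0, 1.
P_C1 = F(1, 2)
EPS = F(1, 100)  # P(U_E = 1) = P(U_D = 1): small "flip" probabilities


def bern(p1, x):
    return p1 if x == 1 else 1 - p1


def risk_D_given_E(e_obs):
    """P(D(e) = 1 | E = e_obs); identical for e = 0, 1 because D(1) = D(0)."""
    num = den = F(0)
    for c, u_e, u_d in product((0, 1), repeat=3):
        p = bern(P_C1, c) * bern(EPS, u_e) * bern(EPS, u_d)
        e = c ^ u_e    # structural equation for E
        d_e = c ^ u_d  # structural equation for D(e); e does not enter
        if e == e_obs:
            den += p
            num += p * d_e
    return num / den


print(float(risk_D_given_E(1)), float(risk_D_given_E(0)))  # 0.9802 0.0198
```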

For more complicated situations (e.g., Fig. 5), one can show by induction on the number of equations that it is always possible to choose functions \((f_E, f_{Y_m}, \ldots, f_{Y_1}, f_C, f_{Z_1}, \ldots, f_{Z_n}, f_D)\) and parameterizations of those functions such that \(P(D(e) \mid E = 1) \neq P(D(e) \mid E = 0)\) for e = 0, 1.

Faithfulness now implies that exchangeability cannot hold. The induction argument implies that parameters can be chosen that would “destroy” the independence \(P(D(1) \mid E = 1) = P(D(1) \mid E = 0)\) required by exchangeability (see Note 2) and would destroy \(P(D \mid E = 1) = P(D \mid E = 0)\); an independence that such a choice of parameters can destroy is not consistent with faithfulness. (In other words, a probability distribution that implies \(P(D(e) \mid E = 1) = P(D(e) \mid E = 0)\) for e = 0, 1 under the structure of a graph containing a biasing path could not be faithful.) Thus, if a biasing path is present and the distribution is faithful in this sense, exchangeability cannot hold, establishing Claim 1B.

Proof of Claim 2A

(Absence of a biasing path implies exchangeability.) Absence of a biasing path implies that \(E \mathop \coprod \nolimits A_D\), where \(A_D\) is the set of D’s parents other than E, including the error term \(U_D\) (error terms are implicit in the DAG for each node). If \(E \mathop \coprod \nolimits A_D\) did not hold, the DAG would need to include an open path from E to some \(X \in A_D\), which would then be part of a biasing path from E to X and then to D, conditionally on controlled factors (if any). But, by G-computation or the do-calculus, the parents of D determine the counterfactual distribution of D under interventions setting e to 0 or 1. Since, by this independence, the distribution of D’s parents is the same among the exposed and the unexposed, the distribution of the counterfactuals for D must be the same in the exposed and the unexposed. In particular, \(D(e) \mathop \coprod \nolimits E\). (A possible subtlety is that absence of an open path from E to any \(X \in A_D\) immediately implies only that E and each \(X \in A_D\) are pairwise independent, whereas the argument assumed that E is jointly independent of \(A_D\). However, this joint independence is implied, for example, by Theorem 1.2.5 of Pearl [13], since E and \(A_D\) are d-separated, conditionally on controlled factors, if any.)
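A d-separation statement such as \(E \mathop \coprod \nolimits A_D\) can be checked with the moralized-ancestral-graph criterion: restrict to the ancestors of the variables involved, connect co-parents, drop arrow directions, delete the conditioning set, and test connectivity. The helper below is a hypothetical sketch of that standard criterion, not the authors' code. It stores the DAG as a mapping from each node to its parents, with the error term U_D written explicitly as a node.

```python
from itertools import combinations
from typing import Dict, Set


def ancestors(parents: Dict[str, Set[str]], nodes: Set[str]) -> Set[str]:
    """The given nodes together with all of their ancestors."""
    result, stack = set(nodes), list(nodes)
    while stack:
        for p in parents.get(stack.pop(), set()):
            if p not in result:
                result.add(p)
                stack.append(p)
    return result


def d_separated(parents: Dict[str, Set[str]], xs: Set[str], ys: Set[str], zs: Set[str]) -> bool:
    """Test whether xs and ys are d-separated given zs (moralized ancestral graph)."""
    keep = ancestors(parents, xs | ys | zs)
    adj: Dict[str, Set[str]] = {v: set() for v in keep}
    for child in keep:
        pas = parents.get(child, set())  # parents of an ancestral node are in keep
        for p in pas:
            adj[child].add(p)  # drop arrow directions
            adj[p].add(child)
        for a, b in combinations(sorted(pas), 2):
            adj[a].add(b)  # "marry" co-parents
            adj[b].add(a)
    # Search for an undirected path from xs to ys that avoids zs.
    frontier, seen = [x for x in xs if x not in zs], set(xs)
    while frontier:
        v = frontier.pop()
        if v in ys:
            return False
        for w in adj[v] - zs:
            if w not in seen:
                seen.add(w)
                frontier.append(w)
    return True


# Hypothetical DAGs: without and with a backdoor path E <- C -> D.
no_path = {"D": {"E", "C", "U_D"}}
backdoor = {"E": {"C"}, "D": {"E", "C", "U_D"}}
print(d_separated(no_path, {"E"}, {"C", "U_D"}, set()))   # True
print(d_separated(backdoor, {"E"}, {"C", "U_D"}, set()))  # False
```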

Proof of Claim 3

(In a Markovian causal model, exchangeability need not imply absence of a biasing path.) We start with the DAG in Fig. 2, which has a confounding path, and its corresponding structural equations (Example 1), and show that exchangeability can hold under some parameterization. We consider the following parameterization:

With this parameterization, D(1) = 1 if and only if \(U_D = 3\); \(U_D = 4\); \(U_D = 5\) and C = 0; or \(U_D = 6\) and C = 1. These events are mutually exclusive, so:

Similarly, \(P(D(1) = 1 \mid E = 0) = P(U_D = 3) + P(U_D = 4) + P(U_D = 6)\), so \(D(1) \mathop \coprod \nolimits E\); similar results show \(D(0) \mathop \coprod \nolimits E\), proving exchangeability. With the same parameterization, a stronger form of exchangeability, \(\vec{p} = \vec{q}\), also holds. Thus, exchangeability (even in its stronger form) does not imply absence of a biasing path, since Fig. 2 does, in fact, have a biasing (confounding) path.
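Because the parameterization itself is not reproduced above, the check below uses hypothetical values together with the events for D(1) = 1 listed above. It assumes a distribution for \(U_D\) with \(P(U_D = 5) = P(U_D = 6)\), independence of \(U_D\) from (C, E), and values of \(P(C = 1 \mid E)\) that differ by exposure. Under these assumptions, \(P(D(1) = 1 \mid E = e)\) equals \(P(U_D = 3) + P(U_D = 4) + P(U_D = 6)\) for both e, even though C and E are associated. Only D(1) is checked; D(0) would require its own events, which are not specified here.

```python
from fractions import Fraction as F

# Hypothetical distributions (assumptions, not the article's parameterization):
# U_D takes values 1..6 with P(U_D = 5) = P(U_D = 6), independent of (C, E).
P_UD = {1: F(1, 10), 2: F(2, 10), 3: F(1, 10), 4: F(2, 10), 5: F(2, 10), 6: F(2, 10)}
P_C1_GIVEN_E = {1: F(4, 5), 0: F(1, 5)}  # P(C = 1 | E = e) differs by e


def d1(u_d: int, c: int) -> int:
    """D(1) = 1 iff U_D = 3; U_D = 4; U_D = 5 and C = 0; or U_D = 6 and C = 1."""
    return int(u_d in (3, 4) or (u_d == 5 and c == 0) or (u_d == 6 and c == 1))


def risk_d1_given_E(e: int) -> F:
    """P(D(1) = 1 | E = e), using the independence of U_D from (C, E)."""
    total = F(0)
    for c in (0, 1):
        p_c = P_C1_GIVEN_E[e] if c == 1 else 1 - P_C1_GIVEN_E[e]
        for u_d, p_u in P_UD.items():
            total += p_c * p_u * d1(u_d, c)
    return total


print(risk_d1_given_E(1), risk_d1_given_E(0))  # 1/2 1/2
```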

Proof of Claims 4A and 4B

(Absence of bias does not imply absence of a biasing path, and a biasing path does not imply bias.) We prove these claims with an example that has a biasing path but no bias (and in which exchangeability holds). We again use the causal relationships in Fig. 2, which contain a biasing path. Appendix Table 4 parameterizes the causal relationships in terms of the structural equations. We also assume that C has 3 categories, with P(C = 1) = 0.4 and P(C = 2) = 0.3 (so P(C = 3) = 0.3), and that P(E = 1|C = 1) = 0.4, P(E = 1|C = 2) = 0.5 and P(E = 1|C = 3) = 0.1. These last three probabilities represent causal effects of C on E.

With this parameterization, E[D(1)] = P(D = 1|E = 1) = 0.1375 and E[D(0)] = P(D = 1|E = 0) = 0.1225, so there is no bias. Similarly, exchangeability holds. In this example, we have “fine-tuned” the parameters so that bias is absent even though C affects both E and D, a common situation for confounding. Most small changes in the parameters would introduce bias, so the absence of bias is unstable in this sense. The distribution is technically unfaithful since, for example, after most parameter changes D(e) would no longer be independent of E.
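The same kind of fine-tuning can be sketched numerically. The code below keeps P(C) and P(E = 1|C) as stated above but uses hypothetical risks P(D = 1|E = e, C = c), not the values of Appendix Table 4, so its results differ from 0.1375 and 0.1225. It assumes C is the only common cause of E and D, so that standardizing over C gives E[D(e)]. The risks are chosen so that the crude and standardized risks coincide, and so that D(e) is independent of E, even though C affects both E and D. Changing any single risk breaks the equality, mirroring the instability noted above.

```python
from fractions import Fraction as F

# P(C = c) and P(E = 1 | C = c): the values stated in the text.
P_C = {1: F(4, 10), 2: F(3, 10), 3: F(3, 10)}
P_E1_GIVEN_C = {1: F(4, 10), 2: F(5, 10), 3: F(1, 10)}

# Hypothetical risks P(D = 1 | E = e, C = c), NOT the values of Appendix Table 4.
# For these P(C) and P(E | C), crude = standardized iff r(1) + 2 r(2) = 3 r(3).
RISK = {1: {1: F(5, 100), 2: F(20, 100), 3: F(15, 100)},
        0: {1: F(4, 100), 2: F(19, 100), 3: F(14, 100)}}


def p_e_given_c(e, c):
    return P_E1_GIVEN_C[c] if e == 1 else 1 - P_E1_GIVEN_C[c]


def risk_de_given_E(e, e_obs):
    """P(D(e) = 1 | E = e_obs), assuming D(e) is independent of E given C."""
    p_obs = sum(p_e_given_c(e_obs, c) * P_C[c] for c in P_C)
    return sum(RISK[e][c] * p_e_given_c(e_obs, c) * P_C[c] for c in P_C) / p_obs


def crude_risk(e):
    """P(D = 1 | E = e) = P(D(e) = 1 | E = e) by consistency."""
    return risk_de_given_E(e, e)


def standardized_risk(e):
    """sum_c P(D = 1 | e, c) P(c), which equals E[D(e)] if C is the only confounder."""
    return sum(RISK[e][c] * P_C[c] for c in P_C)


print(crude_risk(1), standardized_risk(1))  # 1/8 1/8
print(crude_risk(0), standardized_risk(0))  # 23/200 23/200
```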

Cite this article

Flanders, W.D., Eldridge, R.C. Summary of relationships between exchangeability, biasing paths and bias. Eur J Epidemiol 30, 1089–1099 (2015). https://doi.org/10.1007/s10654-014-9915-2

DOI: https://doi.org/10.1007/s10654-014-9915-2