Abstract

We modeled biological growth using a random differential equation (RDE), where the initial condition is a random variable, and the growth rate is a suitable stochastic process. These assumptions let us obtain a model that represents well the random growth process observed in nature, where only a few individuals of the population reach the maximal size of the species, and the growth curve for every individual behaves randomly. Since we assumed that the initial condition is a random variable, we assigned a priori density, and we performed Bayesian inference to update the initial condition’s density of the RDE. The Karhunen–Loeve expansion was then used to approximate the random coefficient of the RDE. Then, using the RDE’s approximations, we estimated the density f(p, t). Finally, we fitted this model to the biological growth of the giant electric ray (or Cortez electric ray) Narcine entemedor. Simulations of the solution of the random logistic equation were performed to construct a curve that describes the solutions’ mean for each time. As a result, we estimated confidence intervals for the mean growth that described reasonably well the observed data. We fit the proposed model with a training dataset, and the model is tested with a different dataset. The model selection is performed with the square of the errors.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Motivation

1.1 Introduction

In this article, we apply a stochastic model to actual data coming from ecology. Indeed, we consider a stochastic model that takes into account the individual variability of the organisms over time. The proposed model allows individuals to grow at random levels of intensity that change along time. In addition, in the stochastic model, not all individuals reach the maximal expected growth size, which agrees with what is observed in nature.

The model and methodology studied in this article can be applied to several species of the animal kingdom. Here, to illustrate the use of the model, we will focus on marine animal literature and data. Traditionally, the individual growth models that are applied to marine organisms have been based on length-at-age data, describing the average growth of the individuals in a population. However, this approach ignores the intrinsic individual variability of the organisms, which can be influenced by genetic differences in growth rate potential (Gurney et al. 2007), food availability (Fujiwara et al. 2004), or differences in physiological stress (Benhaïm et al. 2011) and length dependence of growth rate (Gurney and Veitch 2007). This phenomenon can be observed when there is differential access to food and dominant organisms interfere with the feeding of subordinates, and consequently, dominant individuals show better growth rates (Abbott and Dill 1989). Recognizing that the length-at-age data are highly variable, two accepted hypotheses have been proposed: (a) growth compensation, which is observed when the length-at-age variability decreases with time or age (Fujiwara et al. 2004; Benhaïm et al. 2011); and (b) growth depensation, which is observed if length-at-age variability increases with age (Luquin-Covarrubias et al. 2016a, b). The parameterization of the individual growth models has used ordinary sums of squares or likelihood functions, assuming different probabilistic density functions, such as normal, log-normal, gamma, mixture distribution or log skew-t distribution (Chen and Fournier 1999; Tang et al. 2014; López Quintero et al. 2017).

The survival matrix is represented as \(S_{(l,t)}=\exp ^{-Z_{l,t}}\), where the number of individuals in the length-class l surviving to the start the time \(t^\prime\) is reduced by mortality, considering that \(Z_{l,t}= F_{l,t}+M_{l,t}\), where M is natural mortality and F is the fishing mortality. Finally, \(P_{l,l^{\prime }}\) matrix can be estimated as: \(N_{l,t}=P_{l,l^\prime }\exp ^{-Z_{l,t}}\).

The inclusion of this stochastic approach partially improves estimates of individual growth. However, the final estimate requires an estimation of the survival matrix, which is usually not easy to estimate from length-at-age data. Consequently, its use is limited in individual growth modeling. Given the limitations for modeling the individual differences in growth from length-at-age data, a new proposal must be developed following the the stochastic approach to improve the estimation of parameters and to increase the realism of the theoretical model.

Regarding stochastic models for biological growth, we just review some of the previous works. In Dorini et al. (2016), the authors study a logistic differential equation with carrying capacity and initial conditions as independent random variables; in this manner, they introduce uncertainty into the model. The authors also are able to show a closed form of the density of the solution of the Initial Value Problem (IVP). See also the references cited in that paper for further references on stochastic population models in ecological modeling.

Our main inspiration for this work is Cortés et al. (2019), where they construct an approximation for the density of the solution of a random logistic differential equation (RLDE). Its approximation is based on a truncation of the Karhunen–Loeve expansion; however, their results are valid in the case of a Gaussian process, or if a stochastic basis for the probability space has support in a common bounded interval. In Calatayud et al. (2019) the authors remove the restrictions mentioned before; indeed, they consider a general sequence \(\{A_n(t)\}_{n\ge 1}\) that approximates A(t) in the Hilbert space \(L^2([t_0, T] \times \Omega )\).

In Calatayud et al. (2022), the authors focus on a random logistic differential model with a particular sigmoid functional form of the carrying capacity. They set the probability distributions of the parameters using the maximum entropy principle. Afterward, they use the random variable transformation method for computing the density function of the solution. Bevia et al. (2023) consider a random generalized logistic differential equation (RGLDE), with three parameters: A as the growth rate, K the carrying capacity, and B a power that controls how fast the limiting number K is being approached. They find conditions to have two types of solutions: the sample-path and mean-square. In addition, they construct the density of the solutions using two approaches, the first one using the random variable transformation technique, and the second one by solving Liouville’s partial differential equation (PDE). They illustrate their results with numerical examples.

In Calatayud, Gregori et al. (2020) the authors study a random non-autonomous Bertalanffy differential equation. They showed, under certain assumptions on the random coefficients and random initial condition, the existence of a solution as a stochastic process, both in the sample-path and in the mean square senses. In addition, by using the random variable transformation technique and Karhunen–Loeve expansions, they construct a sequence of probability density functions that under certain conditions converge pointwise or uniformly to the density function of the solution.

Numerical methods for stochastic differential equations (SDEs) have been developed for the last few decades, such as the Euler–Maruyama method, stochastic Itô–Taylor expansions, Runge–Kutta approximations, Monte Carlo methods, and their multiple variations; see for instance the classical book of Kloeden and Platen (2013).

Recently, spectral methods have been applied to the probability space to solve SDEs in a numerical manner. The Wiener-Chaos expansion of the probability space is a spectral method that allows us to write any square-integrable random variable as a Fourier-Hermite series. At this regard see, for instance, Chap. 5 in Lototsky and Rozovsky (2017) and the references therein. Another option is the Karhunen–Loeve expansion. In this approach, any square-integrable random variable is decomposed as a Fourier expansion using as a basis the eigenfunctions of a suitable operator (usually the covariance operator of a nice stochastic process) via Mercer’s Theorem (see, for example, Chap. 4 in Xiu 2010 or Sect. 37.5 in Loeve 1978). The former approach converges faster than the latter; however, the Wiener-Chaos expansion is more complicated to perform numerically since it is necessary to solve a coupled system of ordinary differential equations to construct the approximation (cf. Huschto and Sager 2014; Huschto et al. 2019).

1.2 Our contribution

We modeled the biological growth with a random differential equation (RDE). RDEs have been applied in a wide range of disciplines such as biology, medicine, population dynamics, and engineering (see Han and Kloeden 2017).

A RDE is essentially an ordinary differential equation where one or more of its coefficients are stochastic processes. Since differential equations are very dynamic and also given the randomness of their coefficients, RDE’s are able to describe individual differences in growth. We also use Bayesian inference to update the probability density function of the random initial condition of the RDE with new data. With the posterior density, we were able to obtain credible intervals (CIs) for the RDE’s solution.

Let us explain the main idea of the application. We have to compute numerically the density probability function f(p,t) of the solution of the random logistic equation with a random initial condition. We used the Wiener process and the Ornstein–Uhlenbeck (OU) process as random coefficients. Therefore, we need to compute or simulate the random coefficients of the RDE. It is well-known that the OU process is the solution of a stochastic differential equations (SDE); however, it is possible to rewrite the OU as a scaled, time-transformed Brownian motion; then, in some sense, the simulation of both processes are equivalent (see, for instance, Øksendal 2003).

In this paper, we use the Karhunen–Loeve expansion to numerically estimate the Wiener process and the OU process. After that, we approximate the solution of the RDE (3) following Cortés et al. (2019).

The procedure described above can accomplish the characteristics of the Data assimilation definition. Indeed, Data Assimilation (DA) is the systematic use of data to constrain a mathematical model. It combines theory (usually in the form of a model) with observations. It is assumed that the dynamics that are responsible for a particular process or distribution are inherent in the data. By inputting data of various types into a mathematical model, it will more accurately stimulate a particular environment or situation. Through data assimilation, the hindcast, and/or forecast of the model will be improved. There may be a number of several goals sought (for instance, to determine the optimal state estimate of a system, to fix initial conditions for a numerical forecast model, to estimate numerical parameters based on training a model from observed data, etc.). Data assimilation is distinguished from other forms of machine learning, image analysis, and statistical methods in that it utilizes a dynamical model of the system being analyzed, see, e.g. Reich and Cotter (2015) and Van Leeuwen et al. (2015). In our particular case, we applied DA to RDEs and we updated the state of the system with new observations just one time.

This paper is organized as follows. In Sect. 2, we introduce the RLDE and its Karhunen–Loeve expansion. In Sect. 3, we briefly review Bayesian theory, particularly the Beta-Binomial model. A model description for biological growth is discussed in Sect. 4, we also describe the data used in this paper in this section. In Sects. 5 and 6, we apply the RDE model with Wiener and OU processes as its random coefficient and with a Beta random variable as the prior distribution of the initial condition. In Sect. 7 we apply the results of the previous section to a different dataset. Our conclusions and a discussion of the model together with its numerical applications are given in Sect. 8. Finally, in the “Appendix”, we provide a brief description of the method that we use to estimate the parameters of the OU process.

2 A random logistic differential equation

The classical logistic differential equation has been applied to model bounded growth, for instance, tumor growth in medicine, reaction models in chemistry, population dynamics and Fermi distribution in physics, and so on. These problems are formulated via the Initial Value Problems (IVPs; Cortés et al. 2019, for instance).

with solution:

where a is the intrinsic growth rate and \(p_0\) and p(t), in our case, denote the size proportion at time instant \(t_0\) and t.

We are interested in a model where both parameters a and \(p_0\) are random; more precisely, we assume that a is a random variable and p(t) is a stochastic process. Therefore, this model will take into account the intrinsic variability of the growth rate a and the value of \(p_0\).

In order to distinguish the stochastic parameter of the deterministic one, we introduce the stochastic process \(A(t,\omega )\) as the growth rate. Furthermore, we introduce a modification of the logistic model, as follows:

\(A(t,\omega )\) is a stochastic process and \(p_0(\omega )\) is a bounded absolutely continuous random variable \(p_0(\omega ):\Omega \rightarrow [x_1,x_2]\subset (0,1)\). We assume that both, \(A(\cdot ,\omega )\) and \(p_0(\omega )\), are defined on a common probability space \((\Omega ,{\mathcal {F}},{\mathbb {P}})\). \(T>0\) is fixed. We called Eq. (3) the random logistic equation, which is a RDE. We observe that the parameters in the proposed model are still interpretable; meaning that, in our case, they correspond to the random birth size and to the stochastic growth rate at each time t.

The solution to Eq. (3) is given by

Note that the \(\lim _{t\rightarrow \infty } P(t,\omega )=1\) is satisfied only when the stochastic process \(A(s,\omega )\) is strictly positive. Otherwise, if \(A(s,\omega )\) does not ensure positivity for a subset \(\Omega _0\) of \(\Omega\) with \({\textbf{P}}(\Omega _0)>0\), then with some strictly positive probability the \(\lim _{t\rightarrow \infty } P(t,\omega )=0\), thus the IVP stochastic solution (3) could not behave as its deterministic counterpart (1) if \(A(s,\omega )\) is not strictly positive. However, in nature some species could decrease their sizes under some conditions; then, even in these situations, the stochastic model considered in this manuscript could be an appropriate model. To illustrate the suitability of the model we choose as examples two stochastic processes with reflection at zero. However, other possible choices could be done accordingly to the phenomena/data to study.

The fact that P(t) reaches an asymptotic value of 1 does not imply that all individuals are expected to grow to the species’ maximum observed size. Instead, this means that, with strictly positive probability, some individuals could reach the asymptotic size, and some will not.

To approximate the coefficient \(A(s,\omega )\), we will use the Karhunen—Loeve expansion. This approximation is used for those cases where it is highly difficult to obtain a closed form of the stochastic process or when performing simulations in an efficient way is desired or when statistical properties of the referred stochastic process will be studied.

Therefore, we present a theorem on the \(L^2\) convergence of the Karhunen–Loeve expansion of a mean square continuous process. The proof of this theorem can be consulted in Lord et al. (2014, Theorem 5.28).

Theorem 1

Consider a mean square integrable continuous time stochastic process \(X \equiv \{X(t, \omega ): t \in {\mathcal {T}}, \omega \in \Omega \},\) i.e., \(X \in L^2 \big (\Omega , L^2 ({\mathcal {T}})\big )\) with \(\mu _X (t)\) and \(c_X (s, t)\) as its mean and covariance functions, respectively. Then,

where this sum converges in \(L^2 \big (\Omega , L^2 ({\mathcal {T}})\big )\) and

associated to the covariance function \(c_X (s, t)\). The random variables \(\xi _j(\omega )\) have zero mean \({\mathbb {E}}[ \xi _j (\omega )] = 0,\) unit variance \(Var[\xi _j (\omega )] = 1\) and are pairwise uncorrelated \({\mathbb {E}}[\xi _j (\omega )\xi _k (\omega )] =\delta _{jk}\). Furthermore, if \(X( t, \omega )\) is Gaussian, then \(\xi _j (\omega ) \sim N(0, 1)\) are independent and identically distributed.

This theorem guarantees that each process with a finite second moment can be written as a series of the product between random variables and a sequence of functions that are some suitable basis. By using this theorem, we will approximate \(A(s,\omega )\). In this paper, we will use \({\mathcal {T}}=[t_0,T]\) for some \(T>t_0\).

Note that it is necessary to truncate the series because it is impossible to take the sum to infinite in equation (5). Then, we work with a finite approximation to the process \(X(t,\omega )\).

In the following paragraphs, we apply a truncation of the Karhunen–Loeve expansion to \(A(s,\omega )\) and then we will get an approximation for the density f(p, t) of the RDE’s solution (3) at time t. Notice that f(p, t) is not a transition density but is a true fixed-time density for the process \(P(t,\omega )\). More precisely, given a fixed t the function \((0,1)\ni p\rightarrow f(p,t)\) is the density probability function of the random variable \(P(t,\omega )\) at every fixed time t.

Set \(\overline{\varvec{\xi }_N}(\omega ):=(\xi _1,\ldots ,\xi _N)\) and denote by

Some hypotheses on \(p_0\), \(A(t,\omega )\), \(\{\xi _j\}_{j\ge 1}\) and f(p, t) are required:

- (H1):

-

\(p_0:\Omega \rightarrow [b_1,b_2]\subset (0,1)\) and \(A(t,\omega )\in L^2 \big (\Omega , L^2 ({\mathcal {T}})\big )\).

- (H2):

-

\(p_0(\omega )\), \(\xi _j(\omega )\), \(1\le j\le N\) are absolutely continuous random variables. Moreover, \(p_0(\omega )\), \(\overline{\varvec{\xi }_N}(\omega )\) are independent with densities \(f_{p_0}(p_0)\) and \(f_{\overline{\varvec{\xi }_N}(\omega )}(\xi _1,\ldots ,\xi _N)\), respectively.

- (H3):

-

\(f_{p_0}(p_0)\) is Lipschitz-continuous in its domain; that is,

$$\begin{aligned} \exists L=L_{f_{p_0}}\quad \text{ such } \text{ that } \mid f_{p_0}(p)-f_{p_0}(q)\mid \le L \mid p-q \mid , \quad \forall p,q\in [b_1,b_2]. \end{aligned}$$ - (H4):

-

\(A(t,\omega )\) admits a Karhunen–Loeve expansion of type (5) such that there exists a constant \(C>0\) that satisfies

$$\begin{aligned} {\mathbb {E}}\big [e^{2Q_N(t,\varvec{\xi }_N(\omega ) )} \big ] \le C, \, \text{ for } \text{ all } \text{ positive } \text{ integers } N. \end{aligned}$$

Note that because \(A(t,\omega )\in L^2 \big (\Omega , L^2 ({\mathcal {T}})\big )\), we can apply Theorem 1. Then, the stochastic process (4) solution to (3) can be written as

We define the N-truncated solution of \(P(t,\omega )\) as

Following the procedure used in Cortés et al. (2019, see, Eq. (11) ), we obtain the probability density function of the truncated solution \(P^N(t,\omega )\)

Note that in the previous expression, even when it is not explicitly pointed out, the density \(f_{p_0}\) depends on time.

The following theorem ensures the convergence of (10) to the exact density f(p, t) (Cortés et al. 2019, Theorem 3).

Theorem 2

Under Hypotheses (H1)–(H4), the sequence of densities \(\{f^N(p,t)\}_{N\ge 1}\) defined by (10) converges for every \(J\times [t_0,T],\) for all \(J\subset {\mathbb {R}},\) to the exact density f(p, t) of the solution of the random IVP (3).

3 Bayesian inference

In this section, for the sake of completeness, we illustrate the use of Bayesian inference to update the density \(f_{p_0}(p_0)\). The main idea of Bayesian inference is to update the probability of the occurrence of some event as more evidence or data becomes available. We refer to Gelman et al. (2013) for further reading.

Suppose that the unknown parameter \(\theta\) of a given model is regarded as a random variable. Prior information is expressed using probability density \(f(\theta )\), which is called prior probability. The Bayesian approach connects data and parameters through the likelihood function \(L(x\mid \theta )\) and is based on the evaluation of the posterior probability, which by Bayes theorem is

Note that parameter values where the likelihood is high are those that have a high probability of producing the observed data. This agrees with the maximum likelihood approach, where the best estimate of \(\theta\) is the one that maximizes the likelihood function.

We want to update \(f_{p_0}\) by applying Bayesian inference. Suppose that, at times \(\tau _1,\tau _2,\ldots ,\tau _m\) we obtain new information to calculate the likelihood function and consequently update the posterior probability. We denote by \(L_i(x\mid \theta )\) and \(f_{P_i}(\theta \mid x)\) as the likelihood function and the posterior probability at time \(\tau _i\) (\(i=1,2,\ldots ,m\)), respectively. Then,

where \(f_{P_{0}}(\theta \mid x)=f_{p_0}(\theta )\). Note that the last expression is applied in the case when the SDE (3) has a closed solution. In those cases where there is an approximate solution,

where \(f_{P_{0}}^N(\theta \mid x)=f_{p_0}(\theta )\) and N are the number of terms that we take in the approximation of \(A(t,\omega )\) [compare Eqs. (8) and (9) taking into account (7)].

We use the well-known Beta-Binomial model to our data. For the sake of completeness, we present a brief review of this model in the Appendix.

4 Biological growth of the Narcine entemedor

We are interested in modeling the biological growth of a giant electric ray (or Cortez electric ray) N. entemedor using the RDE (3).

Narcine entemedor specimens were collected between October 2013 through December 2015 in the south of Bahia de La Paz, which is located in the southern portion of the Gulf of California (24\(^{\prime \prime }\) 25\(^{\prime }\) N, 110\(^{\prime \prime }\) 18\(^{\prime }\) W). The organisms were captured by artisanal fishers using monofilament gill nets (200–300 m long, 1.5 m high, 20–25 cm stretch mesh), traditionally called “chinchorros,” which are set in the afternoon at depths between 10 and 30 m over sandy bottoms and recovered the next morning. Individuals were measured for total length (TL, cm) and the sex was determined by the presence of copulatory organs in males. Vertebrae were collected from the abdominal region of each specimen. The radius of each vertebra was measured on the corpus calcareum along a straight line through the focus of each vertebra with SigmaScan Pro 5.0.0 Software (SPSS, Inc). Vertebral radius (VR) was plotted against TL and tested for a linear relationship to determine if these vertebrae were a suitable structure for age determination and for back-calculated estimation of length at previous ages. The number of the sample is \(N=244\).

We have split the whole sample into two disjoint datasets. The biggest dataset contains around 70% of the observations, and it was used for learning—that is, to fit the parameters of the model—which is usually called the training dataset. The fitted model was used to run simulations. We then construct confidence intervals, which we use to compare the observations of a second dataset—called the validation dataset, which is the complement of the training data. The sample size for training data was \(N_1=170\) and for the test data it was \(N_2=74\).

4.1 Bayesian inference for the initial random variable

In this subsection, we applied the Beta-Binomial model to the random variable \(p_0\). We noted two limitations of this approach. Firstly, there was no available data that help us to set up a density function for the variable \(p_0\). Secondly, the random variable \(p_0\) has as a support an interval strictly inside the \([a,b]\varsubsetneq [0,1]\). Thus, we used the Beta-Binomial model, because it allowed us to approximate a Beta distribution for \(p_0\) in a simple but efficient approach. Another possible choice could be to take into account the deviations from the predictions, which means trying to model with a continuous likelihood instead of a discrete one as in the Beta-Binomial model. However, we constrained the density to be in an interval \([a,b]\varsubsetneq [0,1]\) which implies we have to truncate the continuous likelihood, and consequently the posterior density as well.

The strategy to construct a density for \(p_0\) using the Beta-Binomial model is as follows. First, we select a noninformative or weakly informative prior density, meaning a Beta density that reflects our ignorance of the initial value \(p_0\). Afterward, we use the available data to construct a Beta-Binomial distribution that incorporates information about the initial density. This last part will be done by fitting the logistic model (2).

We focused on two variables

-

Estimated-aged, which is an age that is assigned to each individual according to its largest vertebra.

-

Proportion size of each individual, we define a new variable by dividing “individual size” by the maximum size of the specie observed in the sample.

Observe that in opposition of the definition of the already defined two random variables, when we use explicit data we get the sampled aged and the sampled proportion size, which is no longer a random variable but an actual number.

Using these variables, we fitted a logistic curve according to Eq. (2), which is the solution of Eq. (1), with the variables Estimated-aged and Proportion size. \(p_0\) and r were fitted using an optimization procedure. To estimate those values, the sum of the square error between the observed data and the curve (2) was calculated,

where \({\hat{p}}(t_j;r,p_0)\) is the value of the curve at the time of the \(p_j(t)\). \({\hat{r}}\) and \({\hat{p}}_{0}\) were the values that minimize S. By using the R-function optim,Footnote 1\({\hat{r}}=0.2266\) and \({\hat{p}}_0=0.4414\) were obtained; see figure 1. Note that this prior information is reflected on the prior distribution of \(p_0\).

A set of random variables \(\{Y_j\}_{j=1}^{N_1}\) is then defined: \(Y_j=1\) if the j observation of the variable “estimated-aged” is below or on the logistic curve, otherwise \(Y_j=0\). The sum over all the random variables \(Y_j\):

follows a \(Z \sim Bin({N_1},p_0)\), with p unknown. The random variable Z describes how many of the observed data are less than or equal to the size given by the model (2) and according to age. It is important to note that \(p_0\) is the initial condition of the model equation (3) which we are assuming is random.

From our training data, we obtain that \(z=62\) and because \({N_1}=170\), then \(n-z=108\). Moreover, data goes from 0 to 14 years in the estimated aged variable, then the interval \([0,T]=[0,14]\) in the RDE equation (3).

We consider parameter p as a random variable that follows a Beta distribution: \(Beta_{[0.1,0.9]}(\alpha ,\beta )\), which is the prior distribution. We denote by z the value of the random variable Z. We use the Beta-Binomial model to obtain the posterior distribution accordingly to Eq. (19), then the posterior distribution is \(Beta_{[0.1,0.9]}(\alpha +z,n-z+\beta )\). Prior and posterior distributions need to be standardized to be a true density.

We consider two Beta distributions with parameters \((\alpha ,\beta )\):

-

\((\alpha ,\beta )=(1,1),\)

-

\((\alpha ,\beta )=(2,2)\),

in the former case, each segment with the same length, included in the range of the random variable, has equal probability; while in the latter case, the probability is high around the middle point of the segment [0.1, 0.9]. These choices could be considered as noninformative distributions and, as we mentioned before, they reflect our ignorance of the true value of the random initial condition.

We use a mesh for the interval [0.1, 0.9] with \(\Delta _x=1/100\).

The density of the RDE’s solution with Beta as prior distribution for \(p_0\), with two different Betas, is presented in Fig. 2. This means that the use of the posterior distribution is not included. As we mentioned before, they are noninformative, and therefore they are among the best choices as a prior distribution when we do not know the value of the parameter (or any data) (see for instance Bernardo and Smith 2006).

In the following two sections, we simulate the 1-PDF of the solution of the RDE using the posterior distribution, with two examples of stochastic processes as random coefficients of the RDE (3). The stochastic processes are the Wiener and the OU processes.

We use the Karhunen–Loeve expansion with \(N=1\), as in Cortés et al. (2019) to approximate the 1-PDF of the solution of the RDE. We apply the Simpson’s rule see, e.g., Burden et al. (2000) to perform the numerical integration of the expression (10).

5 Wiener process as a random coefficient

In this section, we consider \(A(t, \omega )\) to be the Wiener process (also known as Brownian motion). We recall some basic facts of the Karhunen–Loeve expansion for the Wiener process \(W(t,\omega )\), \(t\in [0,T]\) (Lord et al. 2014). \(W(t,\omega )\) has mean function \(\mu _W(t)=0\) and covariance function given by \(C_W(s,t)=\min (s,t)\), for all \(s,t\in [0,T]\). The Karhunen–Loeve expansion, Eq. (5), is performed with a set of uncorrelated standard Gaussian random variables \(\{\xi _j(\omega )\}_{j\ge 1}\); that is, \(\xi _j(\omega )\sim N(0,1)\) and with the set of functions \(\phi _j(t)\) and eigenvalues \(\nu _j\) given by

We choose \(P_0(\omega )\) independent of the random vector \(\overline{\varvec{\xi }_N}=(\xi _1,\ldots ,\xi _N)\).

With this expansion, we simulate the solution of the RDE by using the Eq. (10). The 1-PDF of the solution of the RDE of the solution when the prior density is Beta(1, 1) and Beta(2, 2) is presented in Fig. 3. Notice that we get almost the same shape of the density for both choices of the prior distribution. This is most probably due to the fact that the sample number is greater than 50. We use a mesh for the interval [0.1, 0.9] with \(\Delta _x=1/100\) and a time step of \(\Delta _t=1/100\).

Note that the variability increases when time increases. This can be explained by the fact that during the first period of life, the availability of food is essential in the growth of each organism. If the food is very abundant, then their size will be greater than expected. This fact generates variability in the data. Meanwhile, when the organisms achieve the maximum age, the variability decreases because they approach the maximum expected size. Therefore, the model reproduces the biological growth dynamic well.

We then run 1000 simulations of the Wiener process. We use the function snssde1d from the R-library Sim.DiffProc [34] (Guidoum and Boukhetala 2018) to simulate the Wiener process.

From the posterior density at time \(t=0\), as shown in Fig. 3, we take 1000 samples. We make that for each prior distribution Beta(1, 1) and Beta(2, 2).

Subsequently, we run 1000 simulations of the solution of the Eq. (4), each of them combining the posterior density \(Beta{(\alpha ,\beta )}\) and the previous Wiener processes simulations. Figure 4 show the simulations of the solutions of Eq. (3).

In these figures, every single line is a realization of the process, which means that every line represents an estimated growth over time. Note that not all individuals reach the maximal expected growth size; this agrees with what is observed in nature. Therefore, the proposed model adequately reproduces this phenomenon.

We also observe that the dynamic of the differential equation governs the behavior of the solution because each of the solutions stays close to the solution mean. In other words, the variations of the Wiener processes are not strong.

Note that when the posterior distribution is updated with new data, we obtain a Beta(21, 32) and a Beta(22, 33), and these distributions are quite similar. Therefore, as in the case of the densities (Fig. 3), there is no substantial difference between Beta(1, 1) and Beta(2, 2). Thus, if the sample size is large enough (\(n \ge 20\)), then we could use as a prior distribution either Beta, and the result will be very similar.

One important fact about this model is that it is able to provide a credible interval at a certain level \(\alpha\) (analogous to CIs in classical statistics). However, because we are using a very low number of terms in the Karhunen–Loeve expansion, the credible intervals are wide and meaningful. Consequently, they are not included in the results. To improve this part it is necessary to include more terms in the Karhunen–Loeve expansion. Another idea could be to use the Wiener-Chaos expansion to improve the numerical simulations.

Figures 5 show the mean, confidence intervals at 95% for the simulations; black points represent observed data, and dotted lines represent CIs of the simulation. We presented two graphs, one for each prior distribution Beta(1, 1) and Beta(2, 2). Bayesian inference and the numerical approximation to the solution given by the Karhunen–Loeve expansion are well-behaved because all of the observed data are inside the CI.

6 Ornstein–Uhlenbeck process as a random coefficient.

We will now address the case in which the random coefficient \(A(t,\omega )\) is the OU process.

The OU process was introduced by Leonard Ornstein and George Eugene Uhlenbeck in 1930. This is a mean reverting process (i.e., the process tends to drift towards its long-term mean). This process has been studied deeply by several authors, see, e.g. (Øksendal 2003).

Consider the following SDE

where \(\theta ,\mu ,\sigma\) are the parameters of the SDE and \(W_t\) is a Wiener process. In particular:

-

\(\mu\) represents the equilibrium or mean value.

-

\(\sigma\) is the degree of errors.

-

\(\theta\) is the rate by which the errors dissipate and the variable reverts towards the mean.

-

\(x_0\) is the initial value.

As usual, the OU process is defined as the unique strong solution:

when \(\mu =0\) and \(x_0\sim N(0, \sigma ^2 /2)\), it is possible to prove that the covariance function is \(cov(X_t, X_s ) = 2\theta e^{-\theta |t-s|}\).

The Karhunen–Loeve expansion, Eq. (5), is performed with the set of functions \(\phi _j(t)\) and eigenvalues \(\nu _j\) given by

where \(w_j\) are the strictly positive solutions of the following equation

for \(j\ge 1\).

Moreover, \(\xi _j\) is given by

Note that \(\{\xi _j\}\) is a sequence of independent Gaussian random variables. For a detailed derivation of the Karhunen–Loeve expansion for the OU process, see for instance Sect. 2.3 of Ghanem and Spanos (1991) or Corlay and Pagés (2015).

We choose \(P_0(\omega )\) independent of the random vector \(\overline{\varvec{\xi }_N}=(\xi _1,\ldots ,\xi _N)\).

To simulate solutions of the random logistic equation with the OU process and Beta posterior, we need to fix the values of the parameters in the SDE (12). However, estimating these parameters formally and rigorously seems complicated and is beyond the scope of this work.

Therefore, we choose these values heuristically without the use of any inference tools (see “Appendix” for the procedure to fit these values). We fixed the values as \(\theta =1/2\), \(\sigma =1.5\), and \(x_0=0\).

We repeat the procedure described in the previous section: we run 1000 simulations of the OU process using the function snssde1d. We take 1000 samples from the posterior density at time \(t=0\), as shown in Fig. 6. Finally, we run 1000 simulations of the solution of the Eq. (4), each of them combining the posterior density \(Beta{(\alpha ,\beta )}\) and the previous OU processes simulations.

Figure 7 shows the simulations of the solutions of Eq. (3). As before, we observe that the dynamic of the differential equation governs the behavior of the solution. In fact, the behavior of the OU case is very similar to the Wiener process. The only difference that we observe is that the CIs are smaller than for the Wiener process. This is not a surprise, since the OU process has a tendency to the mean, while the Wiener has not; thus the OU could represent better the stochastic rate growth.

As in the previous section, we now present Fig. 8 with the mean, and CIs at 95% for the simulations and compare them with the real data. From here, we observe that almost all of the experimental data are inside the interval, meaning that the Bayesian inference and the numerical approximation to the solution given by the Karhunen–Loeve expansion are well-behaved.

7 Testing the model with new data

In this section, we test the model with a different dataset. The observations are stored as a test dataset, which is approximately \(30\%\) of the whole dataset. We use the mean of simulations and CIs and compare them with these new data. We observe in Fig. 9 that the new data is inside the CIs (See also Fig. 10).

We have fitted four models, with different choices of the initial condition and of the stochastic process \(A(t,\omega )\).

To evaluate the goodness of fit, the adjusted values of each model were compared, we calculated the square of the errors for each simulation,

where \(\hat{p}(t_j)\) is the predicted curve at the time \(t_j\) of the \(p_j(t)\) observation. Since \(\hat{p}(t_j)\) is random, then we run 1000 simulations and we take the value \(\hat{p}(t_j)\) equal to the mean of such simulations, in this way we stabilize the mean curve. We use \(\hat{p}(t_j)\) as the predicted curve. We observe that for each set of 1000 simulations, the values of Sq have very small variations. Consequently, we decided to repeat this procedure 10 times, and with these values take the average to provide a better approximation to Sq. We have also calculated the standard deviation of Sq.

The model with the OU process and prior Beta(2, 2) has the smallest sum of squares, which means that the predicted values are closest to the real data and consequently enjoy a better goodness of fit. Moreover, when comparing the goodness of fit of the OU process with the Wiener processes, when they are used as stochastic growth rates, we conclude that the OU process has a better performance, no matter the choice of Beta as the prior distribution. We also conclude that the election of the prior distributions is not as important as was expected. This means that we could start with a total absence of knowledge and, if the data is large enough, then it is possible to get a very similar result if we have little knowledge about the initial condition. We observe that the CI for the random initial condition of the solution covers the estimated \(p_0\) in Sect. 4.

We present the results of this procedure in Table 1.

8 Conclusions

In this paper, we modeled the biological growth of N. entemedor with the use of a RLDE. Because we allow the initial condition to be a random variable, we used the classical Beta-Binomial model from Bayesian inference to incorporate real data in the calculation of the distribution of the mentioned initial condition. Our second main assumption is that the growth rate r is not deterministic but is a stochastic process. We then used two examples for the stochastic growth rate: the Brownian motion and the OU process. For these examples, the density of the solution by using the Karhunen–Loeve expansion of the solution of the RLDE was constructed numerically. Observe that we have calculated a probability density function, and thus it is possible to calculate directly credible intervals.

The proposed model allows us to include the inherent randomness of the variables in the model, hence the within-subject variation is well represented. In the deterministic model, the parameters are usually estimated by minimizing the square of the differences between the value of the function and the observed data. In contrast, in the stochastic model, it is assumed that the initial condition is stochastic. Therefore, it is assumed that the value of the parameter may vary depending on the circumstances. Assuming that the parameter is a random variable, under certain conditions, its probability density function can be obtained and therefore CIs can be calculated. This is an advantage because it is not clear how to build CIs in the deterministic model.

We have also performed several simulations for the RLDE. With these simulations, we construct CIs of their mean. We simulated the initial condition accordingly to the density obtained by the Karhunen–Loeve expansion, and we simulated the stochastic process \(A(t,\omega )\) using simulations of the solution of the RLDE. We find that the mean of the simulations fits well with the real data. Moreover, the simulated trajectories of the solution reach maximum sizes at different times, which is consistent with the observation in nature where different individuals of the same species reach a maximum size at different ages; so the model reproduces these phenomena well.

The method introduced in this paper could be applied to different growth equations; for instance, to a random Von Bertalanffy equation. A natural extension of this research could be to increase the number of terms in the Karhunen–Loeve expansion. Another option is to use Wiener-Chaos expansion instead of using KL expansion to numerically construct the density of the solution of the RLDE and compare their performance. Another avenue of future research is to construct a model with the use of a SDE, instead of a RDE, and then study if it could produce better results than those presented here.

Notes

We have used as a minimizer a quasi-Newton method (also known as a variable metric algorithm), specifically that published simultaneously in 1970 by Broyden, Fletcher, Goldfarb, and Shanno. This uses function values and gradients to build up a picture of the surface to be optimized. In addition, instead of a numerical approximation, it is used a finite-difference approximation for it.

References

Abbott JC, Dill LM (1989) The relative growth of dominant and subordinate juvenile steelhead trout (Salmo gairdneri) fed equal rations. Behaviour 108(1–2):104–113

Benhaïm D, Péan S, Brisset B, Leguay D, Bégout M-L, Chatain B (2011) Effect of size grading on sea bass (Dicentrarchus labrax) juvenile self-feeding behaviour, social structure and culture performance. Aquat Living Resour 24(4):391–402

Bernardo JM, Smith AF (2006) Bayesian theory. Wiley, New York

Bevia V, Calatayud J, Cortés J-C, Jornet M (2023) On the generalized logistic random differential equation: theoretical analysis and numerical simulations with real-world data. Commun Nonlinear Sci Numer Simul 116:106832

Burden RL, Faires JD, Reynolds AC (2000) Numerical analysis, 7th edn. Brooks/Cole Pacific Grove, CA, Boston

Calatayud J, Cortés JC, Jornet M (2019) Improving the approximation of the probability density function of random nonautonomous logistic-type differential equations. Math Methods Appl Sci 42(18):7259–7267

Calatayud J, Cortés JC, Dorini FA, Jornet M (2022) Dealing with variability in ecological modelling: an analysis of a random non-autonomous logistic population model. Math Methods Appl Sci 45(6):3318–3333

Calatayud Gregori J, Caraballo Garrido T, Cortés López JC, Jornet Sanz M (2020) Mathematical methods for the randomized non-autonomous Bertalanffy model. Electron J Differ Equ 2020(50):1–19

Chen Y, Fournier D (1999) Impacts of atypical data on Bayesian inference and robust Bayesian approach in fisheries. Can J Fish Aquat Sci 56(9):1525–1533. https://doi.org/10.1139/f99-076

Corlay S, Pagés G (2015) Functional quantization-based stratified sampling methods. Monte Carlo Methods Appl 21(1):1–32

Cortés J-C, Navarro-Quiles A, Romero J-V, Roselló M-D (2019) Analysis of random non-autonomous logistic-type differential equations via the Karhunen–Loève expansion and the random variable transformation technique. Commun Nonlinear Sci Numer Simul 72:121–138

Dorini FA, Cecconello M, Dorini LB (2016) On the logistic equation subject to uncertainties in the environmental carrying capacity and initial population density. Commun Nonlinear Sci Numer Simul 33:160–173

Fujiwara M, Kendall BE, Nisbet RM (2004) Growth autocorrelation and animal size variation. Ecol Lett 7(2):106–113

Gelman A, Stern HS, Carlin JB, Dunson DB, Vehtari A, Rubin DB (2013) Bayesian data analysis. Chapman and Hall/CRC, Boca Raton

Ghanem RG, Spanos PD (1991) Stochastic finite elements: a spectral approach. Springer, Berlin

Ghosh JK, Delampady M, Samanta T (2006) An introduction to Bayesian analysis: theory and methods. Springer, New York

Gloter A (2001) Parameter estimation for a discrete sampling of an integrated Ornstein–Uhlenbeck process. Statistics 35(3):225–243

Guidoum AC, Boukhetala K (2018) Sim.DiffProc: simulation of diffusion processes. R package version 4.3. https://cran.r-project.org/package=Sim.DiffProc. Accessed 10 May 2020

Gurney WSC, Veitch AR (2007) The dynamics of size-at-age variability. Bull Math Biol 69(3):861–885. https://doi.org/10.1007/s11538-006-9167-8

Gurney WSC, Tyldesley G, Wood SN, Bacon PJ, Heath MR, Youngson A, Ibbotson A (2007) Modelling length-at-age variability under irreversible growth. Can J Fish Aquat Sci 64(4):638–653. https://doi.org/10.1139/F07-039

Han X, Kloeden PE (2017) Random ordinary differential equations and their numerical solution. Springer, Singapore

Huschto T, Sager S (2014) Solving stochastic optimal control problems by a Wiener chaos approach. Vietnam J Math 42:83–113

Huschto T, Podolskij M, Sager S (2019) The asymptotic error of chaos expansion approximations for stochastic differential equations. Mod Stoch Theory Appl 6(2):145–165

Kloeden PE, Platen E (2013) Numerical solution of stochastic differential equations, vol 23. Springer, Berlin

Loeve M (1978) Probability theory. II, vol 46. Graduate texts in mathematics. Springer, New York

López Quintero FO, Contreras-Reyes JE, Wiff R, Arellano-Valle RB (2017) Flexible Bayesian analysis of the von Bertalanffy growth function with the use of a log-skew-t distribution. Fish Bull 115(1):13–26. https://doi.org/10.7755/FB.115.1.2

Lord GJ, Powell CE, Shardlow T (2014) An introduction to computational stochastic PDEs, vol 50. Cambridge texts in applied mathematics. Cambridge University Press, New York

Lototsky SV, Rozovsky BL (2017) Stochastic partial differential equations. Springer, Cham

Luquin-Covarrubias MA, Morales-Bojórquez E, González-Peláez SS, Hidalgo-De-La-Toba J, Lluch-Cota DB (2016a) Modeling of growth depensation of geoduck clam Panopea globosa based on a multimodel inference approach. J Shellfish Res 35(2):379–387. https://doi.org/10.2983/035.035.0212

Luquin-Covarrubias MA, Morales-Bojórquez E, González-Peláez SS, Lluch-Cota DB (2016b) Joint likelihood function based on multinomial and normal distributions for analyzing the phenotypic growth variability of geoduck clam Panopea globosa. Calif Coop Ocean Fish Investig Rep 57:151–162

McLachlan GJ, Krishnan T (2007) The EM algorithm and extensions. Wiley, Hoboken

Nielsen SF (2000) The stochastic EM algorithm: estimation and asymptotic results. Bernoulli 6(3):457–489

Øksendal B (2003) Stochastic differential equations. In: Stochastic differential equations. Springer, Berlin, pp 65–84

Reich S, Cotter C (2015) Probabilistic forecasting and Bayesian data assimilation. Cambridge University Press, Cambridge

Tang M, Jiao Y, Jones JW (2014) A hierarchical Bayesian approach for estimating freshwater mussel growth based on tag-recapture data. Fish Res 149:24–32. https://doi.org/10.1016/j.fishres.2013.09.005

Van Leeuwen PJ, Cheng Y, Reich S et al (2015) Nonlinear data assimilation. Springer, Cham

Xiu D (2010) Numerical methods for stochastic computations: a spectral method approach. Princeton University Press, Princeton

Acknowledgements

We would like to thank two anonymous referees for their helpful remarks and for providing a generous amount of comments that improved our paper.

We thank Fernando Baltazar-Larios for providing a computational code to estimate the parameters for the OU process.

Author information

Authors and Affiliations

Corresponding author

Additional information

Handling Editor: Luiz Duczmal.

Appendices

Appendix 1

Here, we briefly describe how to estimate the SDE’s parameters in (12).

After applying some algebra to Eq. (4), we obtain,

\(A(s,\omega )=X_t(\omega )\) is the solution of the SDE Eq. (12) and \(x_0=0\) was fixed. Note that in (17) the stochastic process \(A(s,\omega )\) is behind a Riemann time integral, therefore it is difficult to estimate the parameters \(\theta\) and \(\sigma\) of the SDE (12). See, Gloter (2001) for a method to estimate these parameters using observations of the integral through a discretization with time step \(\Delta\), which in our case does not apply. We now describe the method to estimate the parameters.

Denote by \(Z_t\) as

We look for parameters that generate solutions whose integrals “cover” the data. We have used the EM algorithm (cf. McLachlan and Krishnan 2007 or Nielsen 2000) to estimate the parameters, and we have fixed the parameters for the OU process given by the SDE

We fixed such parameters as \(\theta _1=64.683761\), \(\theta _2=25.136916\) and \(\theta _3=2.922927\).

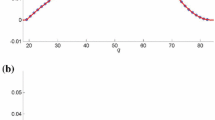

We have performed 1000 simulations of the OU process equation (12), we have integrated them in time; the confidence intervals and mean of these simulations were obtained, and presented in Fig. 11. The Function snssde1d from the R-library Sim.DiffProc [34], (Guidoum and Boukhetala 2018) was used.

Mean and confidence intervals for the process Z [see (18)]. The set of parameters was estimated with the EM algorithm

Appendix 2: Beta-binomial model

We now describe shortly the well-known Beta-Binomial model.

Suppose \(y_1,\ldots ,y_n\) follow independent binomial distributions:

where n is known and \(\theta\) is an unknown parameter that will be assumed as a random variable, thus we assign it to be a prior distribution.

The prior distribution should reflect our ignorance about the unknown parameter \(\theta\) and it should not have any special properties. Then, we suppose that \(\theta \sim Beta(\alpha , \beta )\) with \(0<\alpha<\beta <1\).

The Beta distribution is a conjugate of binomial distribution (Bernardo and Smith 2006, see example 5.4) or (Ghosh et al. 2006, see example 2.2), this implies that the posterior distribution is also a Beta. Assuming that the random variable X is Binomial(n, x), the posterior distribution \(p(\theta \mid x)\) is also Beta:

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ornelas, A., Delgado-Vences, F., Morales-Bojórquez, E. et al. Modeling the biological growth with a random logistic differential equation. Environ Ecol Stat 30, 233–260 (2023). https://doi.org/10.1007/s10651-023-00561-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10651-023-00561-y

Keywords

- Bayesian inference

- Biological growth

- Data assimilation

- Random logistic differential equation

- Simulations