Abstract

Research in cognitive load theory is increasingly recognizing the importance of motivational influences on students’ (willingness to invest) mental effort, in particular in the context of self-regulated learning. Consequently, next to addressing effects of instructional conditions and contexts on groups of learners, there is a need to start investigating individual differences in motivational variables. We propose here that the biopsychosocial model of challenge and threat may offer a useful model to study the motivational antecedents of (anticipated) mental effort. We also report four experiments as initial tests of these ideas, exploring how feedback valence affects students’ challenge/threat experiences, self-efficacy, and mental effort investment. The results showed that negative feedback leads participants to expect that they will have to invest significantly more effort in future problems than positive feedback (Experiments 1, 2, and 3) or no feedback (Experiment 3). Had we not considered the motivational variables in investigating the effect of feedback conditions on effort investment, we would not have known that this effect was fully mediated and thus explained by participants’ feelings of self-efficacy (Experiments 1/2) and threat (Experiment 1). We would also have concluded that feedback does not affect the willingness to invest effort in future problems (all four experiments), whereas actually, there were significant indirect effects of feedback on willingness to invest effort via challenge (in Experiments 1/2) and threat (in all experiments). Thus, our findings demonstrate the added value of considering challenge and threat motivational states to explain individual differences in effort investment.

Recent research in cognitive load theory (Sweller et al., 1998, 2019) is increasingly starting to recognize the importance of motivational influences on mental effort investment. In particular in the context of self-regulated learning, where it is up to learners to decide what tasks to work on, in which order, and for how long, such motivational influences on mental effort cannot be ignored (De Bruin et al., 2020). Consequently, next to continuing to address effects of instructional conditions and contexts on groups of learners, there is a need for research in cognitive load theory to start investigating individual differences in motivational variables, to be able to explain why, in a seemingly similar instructional condition or context, some students are (willingly) investing substantial amounts of effort in learning tasks while others are not.

We propose here that the biopsychosocial model of challenge and threat (Blascovich, 2008) may offer a useful model to study the antecedents of (anticipated) mental effort. It offers a theoretical lens through which to analyze different factors that co-determine whether individual students experience challenge or threat motivational states, which may help explain how students regulate their effort and why some students are and remain motivated to invest effort whereas others are not. We also report four experiments as initial tests of these ideas, exploring how feedback valence affects students’ challenge/threat experiences, self-efficacy, and mental effort investment.

Motivational Influences on Mental Effort

Early research on cognitive load theory (Sweller et al., 1998) was mostly concerned with cognitive processes, in seeking to explain how the design of instruction affected learners’ cognitive (i.e., working memory) load. This was done by comparing effects of different instructional conditions for groups of learners. Self-reported mental effort investment (Paas, 1992) was used to measure the actual cognitive load learners experienced: “Mental effort is the aspect of cognitive load that refers to the cognitive capacity that is actually allocated to accommodate the demands imposed by the task; thus, it can be considered to reflect the actual cognitive load.” (Paas et al., 2003, p. 64). The amount of cognitive load a learner experienced was considered to result from the interplay between task characteristics (i.e., complexity, defined in terms of element interactivity, i.e., the number of interacting information elements a task contains) and learner characteristics (i.e., prior knowledge, which affects the number of interacting information elements a task contains for a particular learner, because with increasing expertise, elements are chunked and can be processed as a single element; see Sweller et al., 1998).

Later research on cognitive load theory has increasingly started to recognize the importance of emotional (Plass & Kalyuga, 2019) and motivational (Feldon et al., 2019) influences on mental effort investment. For instance, Paas et al. (2005) introduced a motivational perspective on the relation between mental effort and performance to compare learners’ involvement in different instructional conditions. They showed how mental effort ratings and performance scores can not only be used to measure the efficiency of instructional conditions (i.e., high performance with low effort = high efficiency; low performance with high effort = low efficiency; Paas & Van Merriënboer, 1993), but also learner involvement in different instructional conditions (i.e., low effort with low performance = low involvement; high effort and high performance = high involvement).
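For reference, the two composite measures are commonly written as follows in that literature. The symbols are ours, not the source's: \(z_P\) and \(z_E\) denote performance and mental effort scores standardized across conditions, \(E\) denotes efficiency, and \(I\) denotes involvement.

\[
E = \frac{z_P - z_E}{\sqrt{2}}, \qquad I = \frac{z_P + z_E}{\sqrt{2}}
\]

Read this way, high performance combined with low effort yields a large positive \(E\), whereas high performance combined with high effort yields a large positive \(I\).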

While this allows for classifying motivational effects of instructional conditions, it is not sufficient to explain why students are motivated to invest effort in response to an instructional condition, and why some are more so than others. Next to the effect of the way in which instruction is designed, we need to start looking into the motivational antecedents that determine individual students’ effort investment in response to an instructional condition. One well-known motivational influence on effort investment is students’ self-efficacy.

Self-Efficacy and Mental Effort

Students’ self-efficacy, that is, their confidence in their ability to (learn to) perform a task (Bandura, 1986, 1994), is considered one of the main motivational influences on effort investment. After all, if students did not believe they could (learn to) perform a task, then why would they bother investing effort at all? Higher self-efficacy has been associated with the tendency to see difficult tasks as challenging and to persist when facing setbacks or failure, as well as with better performance and learning outcomes (Bandura, 1994; Marsh et al., 2019; Schunk & Pajares, 2002, 2009), and higher (self-reported) usage of self-regulated learning strategies (e.g., Baars & Wijnia, 2018; Pintrich & De Groot, 1990).

Self-efficacy originates from and is modified by different sources (Bandura, 1997; Schunk & Pajares, 2009): one’s own mastery experiences, vicarious experiences (i.e., seeing others [who are similar to us] succeed or fail), verbal persuasion (e.g., feedback or encouragements by teachers or parents), and interpretation of one’s physiological states (e.g., anxiety). Because mastery experiences are important contributors to feelings of self-efficacy, it is not surprising that higher self-efficacy –just like higher prior knowledge– is often found to be associated with lower effort invested/required and higher achievement (Schunk & Pajares, 2002): If the high self-efficacy is warranted (i.e., aligned with actual ability), people may need to invest less effort in a task to perform well on it. However, people’s confidence in their own ability is not necessarily aligned with their actual ability to perform a task, and their perceptions, not actual ability, drive their affective responses and (academic/self-regulatory) behavior (e.g., Pajares, 1996).

When it comes to learning new things, higher self-efficacy is often associated with higher willingness to invest effort and persist at a task (Bandura, 1997; Pajares, 1996). However, this relationship between self-efficacy and (willingness to invest) effort is not self-evident and may be affected by learners’ perceptions of the task. For instance, the seminal study by Salomon (1984) has shown that learners’ perceptions of the media in which learning materials were presented (i.e., as television film vs. as printed text) were associated with self-efficacy (higher for television), anticipated effort (lower for television), and learning outcomes (lower for television). Self-efficacy correlated positively with effort investment and learning outcomes in the group studying the printed text, but negatively in the group studying the television film. As television was perceived as an ‘easy’ medium, students had (unwarranted) high self-efficacy and had the idea that they would not need to invest much effort. Presumably as a consequence thereof, they also learned less.

It is also important to note, especially in academic contexts, that although self-efficacy prior to a task is important for the amount of effort students expect to have to invest and are willing to invest, self-efficacy is dynamic (see e.g., Yeo & Neal, 2013). Thus, students’ experiences during task performance, for instance, in response to the format of the tasks, or feedback on their performance on the tasks, will also affect their self-efficacy (i.e., it can be both predictor and outcome). For instance, Van Harsel et al. (2020) compared four instructional conditions: a practice problem only condition, a video modeling examples only condition, an example-problem condition, and a problem-example condition, with four (Experiment 1) or eight (Experiment 2) tasks. They asked participants to report their self-efficacy and invested mental effort immediately after each task. In both experiments, when students started with a practice problem to solve, their self-efficacy after the first task was significantly lower than when they studied an example, and their self-reported effort investment was significantly higher. In the practice problem only condition, self-efficacy remained at the same, low level throughout the learning phase, whereas effort remained at the same, high level. In the problem-example condition, however, after the second task (i.e., the example), self-efficacy substantially increased and effort substantially decreased, and remained, roughly speaking, stable throughout the rest of the study phase.

While self-efficacy constitutes an important motivational influence on students’ (anticipated) investment of effort and their willingness to invest effort, it is unlikely to be the only motivational antecedent of how much effort students invest, expect they have to invest, or would be willing to invest. For instance, how much pressure a student feels to perform well on that particular task in that particular situation, or to what extent they feel they have to live up to their own or other people’s expectations, may also play a role. According to the biopsychosocial model of challenge and threat (Blascovich, 2008) such intraindividual and interindividual factors together give rise to challenge or threat motivational states, and we propose here that these challenge and threat states may also be motivational antecedents of (anticipated) mental effort and willingness to invest effort.

The Biopsychosocial Model of Challenge and Threat

The Biopsychosocial Model of Challenge and Threat (Blascovich, 2008; Seery, 2013; Seery & Quinton, 2016) describes how challenge and threat motivational states arise during motivated performance situations (i.e., goal-relevant situations that require instrumental cognitive responses, thereby leading to task engagement). Challenge and threat result from a person’s (un)conscious evaluation of the demands of a situation in relation to their resources to deal with it. There are many different intraindividual and interindividual factors that can weigh into the resource/demand evaluations, and these are also interrelated. Such factors are, for instance, the level of skill or ability one has, one’s beliefs about / confidence in the level of ability one has (see Footnote 1), one’s self-esteem or self-doubt, the extent to which the task or situation is novel or familiar, the level of danger involved, the expected level of difficulty of a task, the level of time pressure or social pressure involved, the level of support available, et cetera (Blascovich, 2008; Seery, 2013).

These resource/demand evaluations result in a challenge motivational state when the evaluation of resources matches or exceeds the evaluation of demands, and in a threat motivational state when demands outweigh resources (Blascovich, 2008). These challenge and threat states can be measured by self-reports (Mendes et al., 2001; Scholl et al., 2018; Skinner & Brewer, 2002; Tomaka et al., 1993), as well as through physiological measures (Blascovich, 2008; Seery, 2013; Seery & Quinton, 2016).

Greater challenge has been associated with better performance on a wide variety of tasks, both in sports (e.g., baseball: Blascovich et al., 2004; golf putting: Moore et al., 2013) and in cognitive tasks such as number bisection problems (Scholl et al., 2017) or public speaking (Trotman et al., 2018). A review study by Hase et al. (2019) found positive associations between a challenge state and performance in 28 out of the 38 reviewed studies, in which different approaches to measuring challenge and threat (self-report or physiological) were used. Moreover, research on the biopsychosocial model has shown how the social context can impact challenge/threat evaluations and performance. For instance, being observed on learned tasks (i.e., high resources) led to (cardiovascular) challenge responses and higher performance than not being observed, whereas being observed on unlearned tasks (i.e., low resources and high demands, such as the danger of ‘failing in the eyes of others’) led to threat responses and lower performance than not being observed (Blascovich et al., 1999). Being in a role with social power (high resources) can evoke (cardiovascular) challenge responses (Scheepers et al., 2012), at least when this role is construed as an opportunity to make things happen (when it is seen as a responsibility with potentially serious consequences, i.e., higher demands, threat increases; Scholl et al., 2018). Thus, even though it is not yet widely applied in educational research (for an exception, see e.g., Donker et al., 2024), the biopsychosocial model allows for theorizing and testing hypotheses about how certain instructional conditions or contexts, like the format in which a task is presented, the valence of feedback provided on learners’ performance on a task, or aspects of the social context in which a task takes place, might affect resource/demand evaluations and thereby induce challenge or threat motivational states.

So far, there is relatively little research on the associations between challenge and threat and (anticipated) mental effort investment. Moore et al. (2014) manipulated participants’ perceptions of how much effort a motor task would require by means of instructions (i.e., that it was a difficult task that would require high physical and mental effort vs. a straightforward task that required little physical or mental effort). Participants who were led to believe the task required low effort, evaluated the task as more of a challenge, showed a cardiovascular challenge response, and performed the task better than participants who were led to believe it required high effort.

We propose here that next to self-efficacy, these challenge/threat motivational states may help explain individual differences in (anticipated) effort investment and willingness to invest effort, and took a first step towards testing this idea in four experiments. Investigating these underlying mechanisms is theoretically relevant, as it could foster our understanding of how students regulate their effort and why, in a seemingly similar instructional condition or context, some students are and remain motivated to invest effort whereas others are not. It is also practically relevant, as it could, eventually, inform the design of interventions to entice students to (continue to) invest effort.

Exploring the Relation between Challenge/Threat, Self-Efficacy and (Anticipatory) Mental Effort by Manipulating Feedback Valence

We conducted four experiments in which we experimentally manipulated feedback valence, providing participants with predominantly positive or predominantly negative feedback on their problem-solving performance, which was expected to affect their self-efficacy as well as their challenge/threat states. Prior research has shown that participants’ perceptions of how much effort they invested in solving a problem (i.e., retrospectively) are affected by feedback valence (Raaijmakers et al., 2017): When they received predominantly negative feedback on their problem-solving performance (i.e., “Your answer was incorrect.”) they reported having invested significantly more effort in the tasks than when they received predominantly positive feedback (i.e., “Your answer was correct.”). While it is unclear if participants actually increased or decreased their effort investment in response to the feedback or only reported to have done so, these findings do suggest that feedback valence altered participants’ perceptions of the task, their own ability, or both, which would affect their perceptions of the amount of effort required. The question we explore here is whether and how feedback valence would be associated with challenge/threat and self-efficacy perceptions and how this is, in turn, associated with (anticipatory) mental effort investment. The experimental procedures were reviewed and approved by the Faculty Ethics Review Board. The study data and analysis scripts are stored on an Open Science Framework (OSF) page for this project: https://osf.io/rwz3f/.

Experiments 1 and 2

In Experiments 1 and 2, participants were led to believe they would work on two rounds of problem-solving tasks. In Experiment 1, Cognitive Reflection Test problems were used (Pennycook & Rand, 2019) and in Experiment 2, ‘weekday problems’ (Van Gog et al., 2012). Participants were first shown two example problems, based upon which they were asked to rate their self-efficacy (i.e., their confidence in their ability to solve such problems). Then they completed the first (which was actually the only) round of five problems, receiving predominantly positive or predominantly negative feedback on their answers, after which they were asked to rate, with regard to the upcoming round of problems, their feelings of self-efficacy, challenge/threat and anticipatory effort (how much effort they thought the tasks would require and how much effort they would be willing to invest). They did not actually get another round of problems. While these experiments were largely exploratory, we did expect, based on the literature reviewed above, that due to the mastery experiences and verbal persuasion it induces, positive feedback would evoke higher self-efficacy after problem-solving, more challenge, and less threat than negative feedback; and that this higher self-efficacy, higher challenge, and lower threat would be associated with lower anticipation of the amount of effort a new round of tasks would require and with higher willingness to invest effort.

Method

Participants and Design

The experiments had a between-subjects design, with half of the participants receiving predominantly positive feedback and the other half receiving predominantly negative feedback. Participants were randomly assigned to one of the two conditions. In the predominantly positive feedback condition, participants were led to believe their performance was correct on all tasks except for the fourth (PPPNP), and in the predominantly negative feedback condition they were led to believe their performance was incorrect on all tasks except for the fourth (NNNPN), irrespective of their actual performance. The feedback on the fourth task differed to make the manipulation more credible to participants.

To estimate the required sample size, we calculated how many participants were needed to detect a feedback effect on mental effort ratings. Across three experiments, Raaijmakers et al. (2017) found a medium-sized effect of feedback condition on mental effort ratings (partial eta squared values were, respectively, 0.079, 0.048, and 0.106). An a priori power analysis in G*Power (Faul et al., 2007) for a one-way ANOVA with two groups, a medium effect size (Cohen’s f = 0.25), an alpha level of 0.05, and a power of 0.80, showed that 128 participants would be sufficient to detect a feedback effect on effort investment.
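As a rough illustration of this reasoning, the sketch below converts the three reported partial eta squared values to Cohen's f using the standard relation f = sqrt(η² / (1 − η²)), and then approximates the required total sample size for two equal groups. The function names are ours, and the sample-size step uses a normal approximation (treating the two-group ANOVA as a two-sided comparison with d = 2f), not the exact noncentral-F computation that G*Power performs, so it lands slightly below the 128 reported above.

```python
import math
from statistics import NormalDist


def cohens_f(partial_eta_sq: float) -> float:
    """Convert partial eta squared to Cohen's f."""
    return math.sqrt(partial_eta_sq / (1.0 - partial_eta_sq))


def approx_total_n(f: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Normal-approximation total N for two equal groups (assumes d = 2f)."""
    d = 2.0 * f
    z = NormalDist()
    z_sum = z.inv_cdf(1.0 - alpha / 2.0) + z.inv_cdf(power)
    n_per_group = 2.0 * z_sum ** 2 / d ** 2
    return 2 * math.ceil(n_per_group)


# Effect sizes reported for the three earlier experiments
for eta in (0.079, 0.048, 0.106):
    print(eta, round(cohens_f(eta), 2))  # 0.29, 0.22, 0.34

# Approximate total N for f = 0.25; the exact analysis in the text gives 128
print(approx_total_n(0.25))  # 126
```

The converted values bracket the conventional medium benchmark of f = 0.25, which is consistent with the choice of effect size in the power analysis.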

Participants in Experiment 1 were Dutch-speaking students from Dutch universities. 149 eligible participants completed the experiment and consented to use of their data again after having been debriefed. Data from 18 participants had to be excluded because of extremely long completion time of the experiment (> 3SD above average; n = 3), or because they indicated awareness of the aim of the study (n = 15, after removal of the aforementioned participants). The remaining sample consisted of 131 participants (age range = 17–51, M = 23.81, SD = 5.75, Mdn = 22; gender: 29 male, 99 female, 3 other): 72 in the predominantly positive and 59 in the predominantly negative feedback condition.
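The completion-time criterion can be sketched as follows. This is a minimal illustration, not the authors' script: the function name and the example durations are hypothetical, and we assume the sample standard deviation was used.

```python
from statistics import mean, stdev


def flag_slow(durations, n_sd=3.0):
    """Flag completion times more than n_sd sample SDs above the mean."""
    cutoff = mean(durations) + n_sd * stdev(durations)
    return [t > cutoff for t in durations]


# Hypothetical completion times in seconds: 20 typical cases and one extreme case
times = [600] * 20 + [6000]
flags = flag_slow(times)
print(flags.count(True))
```

Because the mean and SD are themselves inflated by extreme cases, a rule like this only flags very pronounced outliers.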

Participants in Experiment 2 were Dutch-speaking Dutch and Belgian students recruited via Prolific (https://www.prolific.co). They were compensated with 1.50 GBP (i.e., approximately 1.75 Euro and 1.9 USD at the time the study was conducted; the study had an approximate duration of 10 min.). Participants could only participate on a tablet or desktop (not mobile phone). 131 eligible participants completed the experiment and consented to use of their data again after having been debriefed. Data from 9 participants had to be excluded because they indicated they did not attempt to solve the problems but just guessed (n = 2), had extremely short response times on 4 out of the 5 problems, making it unlikely that they read them, let alone thought about them (i.e., even very fast readers can read no more than 350 words per minute [Trauzettel-Klosinski et al., 2012], so we excluded participants who spent less than 0.17 s per word [60 s/350 words] on 4 out of the 5 problems; n = 1), took extremely long to complete the experiment (> 3SD above average, computed after removal of the aforementioned participants; n = 2), or because they indicated awareness of the aim of the study (n = 4, after removal of the aforementioned participants). The remaining sample consisted of 122 participants (age range = 18–40, M = 22.89, SD = 3.11, Mdn = 22.5; gender: 54 male, 64 female, 4 other): 54 in the predominantly positive and 68 in the predominantly negative feedback condition.
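The reading-speed criterion can be illustrated with a small sketch. The function name, the word counts, and the response times below are hypothetical, and we read "on 4 out of the 5 problems" as "on at least 4 problems", which is an assumption.

```python
MAX_WORDS_PER_MIN = 350
MIN_SEC_PER_WORD = 60 / MAX_WORDS_PER_MIN  # about 0.17 s per word


def too_fast(response_secs, word_counts, min_fast_problems=4):
    """True if a participant was implausibly fast on enough problems."""
    n_fast = sum(
        secs / words < MIN_SEC_PER_WORD
        for secs, words in zip(response_secs, word_counts)
    )
    return n_fast >= min_fast_problems


# Hypothetical problem lengths (in words) and one participant's response times
words = [40, 40, 40, 40, 40]
print(too_fast([3, 4, 5, 6, 30], words))   # fast on four problems
print(too_fast([3, 4, 5, 20, 30], words))  # fast on three problems
```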

Materials and Measures

All materials were in Dutch and were presented in Qualtrics Survey Software (https://www.qualtrics.com).

Problem-Solving Tasks and Feedback

In Experiment 1, problem-solving tasks from the Cognitive Reflection Test (CRT) compiled by Pennycook and Rand (2019), who combined the CRTs by Frederick (2005) and Thomson and Oppenheimer (2016), were used. Participants received two example problems (which they did not have to solve) so that they would be able to make self-efficacy estimates prior to problem solving, and five problems they did have to solve (see Appendix 1). The problems were translated into Dutch and had a four-option multiple-choice answer format (cf. Guess et al., 2020; see Footnote 2). One point was awarded for each correctly solved problem (i.e., range of performance scores: 0–5).

In Experiment 2, problem-solving tasks were the ‘weekday problems’ used by Raaijmakers et al. (2017). These tasks were based on the complex tasks used in Van Gog et al. (2012), which, in turn, were developed based on an example problem that Sweller (1993) provided to illustrate the concept of high intrinsic cognitive load: “Suppose 5 days after the day before yesterday is Friday. What day of the week is tomorrow?”. Participants received two example problems (which they did not have to solve) so that they would be able to make self-efficacy estimates prior to problem solving, and five problems they did have to solve (see Appendix 1). Again, one point was awarded for each correctly solved problem (i.e., range of performance scores: 0–5).

In the predominantly negative feedback condition, participants received the feedback “Your answer was incorrect” on problems 1, 2, 3 and 5, and the feedback “Your answer was correct” on problem 4, irrespective of their actual performance. In the predominantly positive feedback condition, participants received the feedback “Your answer was correct” on problems 1, 2, 3, and 5, and the feedback “Your answer was incorrect” on problem 4, irrespective of their actual performance. Note that because both the CRT and the weekday problems we used are quite complex (and, moreover, on the CRT problems, the intuitive answer is often incorrect), it would be hard for participants to know for sure whether their answers would be correct or incorrect. This was important for the credibility of the feedback valence manipulation.
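The feedback manipulation amounts to a fixed schedule per condition that ignores the participant's actual answer. A minimal sketch, with hypothetical condition labels and function name:

```python
# Message shown for each schedule code
FEEDBACK = {
    "P": "Your answer was correct",
    "N": "Your answer was incorrect",
}

# Fixed schedules from the design: one deviating item at problem 4
SCHEDULES = {
    "positive": "PPPNP",
    "negative": "NNNPN",
}


def feedback_for(condition, problem_number):
    """Return the scripted feedback for a 1-based problem number."""
    code = SCHEDULES[condition][problem_number - 1]
    return FEEDBACK[code]


for problem in range(1, 6):
    print(problem, feedback_for("negative", problem))
```

Note that the participant's answer is not an input to the function, which mirrors the fact that feedback was given irrespective of actual performance.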

Self-Efficacy Ratings

Self-efficacy was measured prior to (see Footnote 3) and after problem solving. We used a translated version (Agricola et al., 2020) of the self-efficacy subscale from the Motivated Strategies for Learning Questionnaire (MSLQ; Pintrich & De Groot, 1990). Participants rated their agreement with eight statements (e.g., ‘I’m confident I will do very well on the upcoming problem-solving tasks’) on a 7-point Likert scale (labeled 1 = strongly disagree; 2 = disagree; 3 = somewhat disagree; 4 = neutral; 5 = somewhat agree; 6 = agree; 7 = strongly agree). In Experiment 1, confirmatory factor analyses on the self-efficacy questionnaire prior to the CRT (pre) and after completing the CRT (post) showed mixed results when following the guidelines by Hu and Bentler (1999). The incremental fit indexes indicated an acceptable model fit (pre: CFI = 0.90, TLI = 0.87; post: CFI = 0.92, TLI = 0.89), but the absolute fit indexes indicated good model fit on one index (pre: SRMR = 0.06; post: SRMR = 0.03) and poor model fit on the other two indexes (pre: χ2 = 106.26, p < 0.001; post: χ2 = 121.62, p < 0.001). All factor loadings were positive and significant (λ > 0.71) and both measures were reliable (pre: α = 0.94; post: α = 0.97). Agreement ratings were averaged for each participant, resulting in a self-efficacy score between 1 and 7.
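To make the scoring and reliability steps concrete, the sketch below averages item ratings per participant and computes Cronbach's α with the usual formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). The function names and the ratings are hypothetical; this is not the authors' analysis script.

```python
from statistics import mean, variance


def scale_scores(ratings):
    """Average each participant's item ratings into one scale score."""
    return [mean(row) for row in ratings]


def cronbach_alpha(ratings):
    """Cronbach's alpha for a participants-by-items list of ratings."""
    k = len(ratings[0])
    item_vars = [variance(col) for col in zip(*ratings)]
    total_var = variance([sum(row) for row in ratings])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings: four participants, eight items, 7-point scale
ratings = [
    [6, 6, 5, 6, 7, 6, 6, 5],
    [3, 2, 3, 3, 2, 3, 4, 3],
    [5, 5, 4, 5, 5, 6, 5, 5],
    [2, 1, 2, 2, 1, 2, 2, 3],
]
print(scale_scores(ratings))
print(round(cronbach_alpha(ratings), 2))
```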

In Experiment 2, the model fit indices showed a similar factor structure as in Experiment 1, for both self-efficacy prior to the weekday-problems, χ2 = 98.17, p < 0.001, CFI = 0.91, TLI = 0.88, RMSEA = 0.18, SRMR = 0.05, and after completing the weekday-problems, χ2 = 135.82, p < 0.001, CFI = 0.91, TLI = 0.87, RMSEA = 0.22, SRMR = 0.04, with all factor loadings being significant (λ > 0.73). Both measures were reliable (pre: α = 0.95; post: α = 0.97).
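The reported RMSEA values can be checked against the χ2 statistics under two assumptions that are not stated in the text: that the one-factor model with eight indicators has 20 degrees of freedom (36 observed variances and covariances minus 16 free parameters), and that the common formula RMSEA = sqrt(max(χ2 − df, 0) / (df · (N − 1))) applies with N = 122. The function name is ours.

```python
import math


def rmsea(chi_sq, df, n):
    """RMSEA from a model chi-square, its df, and the sample size."""
    return math.sqrt(max(chi_sq - df, 0.0) / (df * (n - 1)))


# Assumed df for a one-factor model with eight indicators
DF_ONE_FACTOR = 8 * 9 // 2 - 2 * 8  # 36 - 16 = 20

print(round(rmsea(98.17, DF_ONE_FACTOR, 122), 2))   # 0.18
print(round(rmsea(135.82, DF_ONE_FACTOR, 122), 2))  # 0.22
```

Under these assumptions the computed values agree with the RMSEA values reported for Experiment 2.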

Challenge/Threat Ratings

Challenge and threat responses were measured using six items from the questionnaire by Scholl et al. (2018), translated into Dutch, three assessing challenge and three assessing threat (see Appendix 2). Participants answered on a 7-point Likert scale (labeled 1 = strongly disagree; 2 = disagree; 3 = somewhat disagree; 4 = neutral; 5 = somewhat agree; 6 = agree; 7 = strongly agree; see Footnote 4). Scholl et al. (2018) computed a relative challenge index, with a higher value indicating more challenge. However, our factor analyses did not replicate the one-factor model found in Scholl et al. (2018). After exploring alternative factor structures (see Appendix 2), we found that a two-factor model with a total of five items (two for the challenge subscale and three for the threat subscale) fitted the data well, χ2 = 5.64, p = 0.228, CFI = 0.99, TLI = 0.99, RMSEA = 0.06, SRMR = 0.02, with all factor loadings being significant (λ > 0.51), and showed sufficient reliability (challenge: α = 0.63; threat: α = 0.84; latent correlation between challenge and threat: -0.72, p < 0.001). The one challenge item that was dropped had an insufficient factor loading (possibly this was because the Dutch translation ‘…voel ik me erg uitgedaagd’ [I feel very challenged] could be both positively and negatively interpreted). In Experiment 2, we again found that a two-factor model fitted the data best, after excluding the one challenge item that did not load significantly, χ2 = 9.65, p = 0.047, CFI = 0.98, TLI = 0.95, RMSEA = 0.11, SRMR = 0.03, with all factor loadings being significant (λ > 0.34). The two scales showed sufficient reliability, although it was relatively low for challenge (challenge: α = 0.58; threat: α = 0.84; latent correlation between challenge and threat: -0.64, p < 0.001). Agreement ratings were averaged per subscale for each participant, resulting in challenge and threat scores between 1 and 7.
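The p-values attached to the two χ2 statistics can be reproduced with a closed-form expression that holds for even degrees of freedom. The sketch assumes that the two-factor, five-item model has 4 degrees of freedom (15 observed variances and covariances minus 11 free parameters), which is not stated in the text; the function name is ours.

```python
import math


def chi_sq_p_even_df(chi_sq, df):
    """Upper-tail p-value of a chi-square statistic for even df."""
    if df <= 0 or df % 2:
        raise ValueError("closed form requires a positive, even df")
    half = chi_sq / 2.0
    terms = sum(half ** k / math.factorial(k) for k in range(df // 2))
    return math.exp(-half) * terms


# Assumed df = 4 for the two-factor model with five items
print(round(chi_sq_p_even_df(5.64, 4), 3))  # 0.228 (Experiment 1)
print(round(chi_sq_p_even_df(9.65, 4), 3))  # 0.047 (Experiment 2)
```

With df = 4, both values match the p-values reported above, which supports the assumed degrees of freedom.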

Mental Effort Ratings

After the problem-solving tasks, participants were asked to rate if they expected that an upcoming block of similar tasks would require high mental effort, and if they were willing to invest high mental effort in that upcoming block of similar tasks, on a 7-point scale, ranging from 1 (strongly disagree) to 7 (strongly agree; labels in between cf. challenge/threat rating scale).

Procedure

In Experiment 1, participants who signed up with the experimenter received a login code for Qualtrics. In Experiment 2, participants were recruited on the Prolific platform, which directed them to the Qualtrics survey. There, they first read an information letter, were asked to provide consent, and were asked for some demographic data on gender (male; female; other, namely…; prefer not to state) and age. In Experiment 2, they were also asked to confirm their student status (this served as an extra check on the Prolific settings; if students indicated here that they were not currently enrolled as a Ba/BSc or Ma/MSc student then they were informed they could not participate).

Then, participants were instructed that they would be about to start working on some problems, which would be completed in two rounds, each consisting of five problems for which they had one minute per problem, and they were shown the two example problems. They were informed that they did not have to solve the example problems and that these were only meant to give them an impression of the tasks. After reading the examples, they completed the self-efficacy ratings.

Subsequently, participants were instructed that they would start with the first round of five problems. They were informed that they would have one minute to complete each problem, and that if they already knew the answer, they could submit it sooner. They were also informed that they were not allowed to take notes. After completing each of the five problems, participants received positive or negative feedback depending on their assigned condition.

Participants were then told that before they would start with the second round of problems, they would be asked to answer some questions, and were presented with the challenge/threat, self-efficacy, and anticipatory effort ratings. They were also asked some questions to check if they guessed the aim of the study (i.e., showed awareness that the feedback was manipulated), and in Experiment 2, if they actually attempted to solve the problems or just guessed (those who indicated to have guessed were excluded from the sample; see participants section).

Finally, they were informed that there would not be a second round of problem-solving tasks, and they were debriefed about the actual aim of the study and the fact that the feedback was fake and did not reflect their actual performance. They were then asked, now knowing the actual aim, to consent again to the use of their data.

Results and Discussion

Effects of Feedback Condition

Table 1 shows the descriptive statistics of all variables in Experiments 1 and 2. Differences between conditions were analyzed with one-way ANOVAs. As one would expect, given the random assignment to conditions, self-efficacy prior to problem solving did not differ significantly between the two conditions (Experiment 1: F(1, 129) = 0.25, p = 0.621; Experiment 2: F(1, 120) = 0.70, p = 0.405), nor did problem-solving performance (Experiment 1: F(1, 129) = 0.34, p = 0.559; Experiment 2: F(1, 120) = 0.01, p = 0.932).
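For readers less familiar with the test, the following is a minimal sketch of how a one-way ANOVA F statistic is obtained from raw scores. The ratings are hypothetical and are not data from the experiments; the function name `one_way_anova` is ours, and the analyses reported here were not necessarily computed this way.

```python
from statistics import mean

def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = mean(all_scores)
    # Between-groups sum of squares: group size times squared deviation of the group mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-groups sum of squares: squared deviations from each group's own mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Hypothetical ratings on a 9-point scale (NOT data from the experiments)
negative = [3, 4, 2, 5, 3]
positive = [6, 7, 5, 6, 8]
f_stat, df1, df2 = one_way_anova([negative, positive])
print(f"F({df1}, {df2}) = {f_stat:.2f}")
```

With two conditions, the within-groups degrees of freedom equal the sample size minus two, which is how the reported F(1, 129) and F(1, 120) should be read.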

As for self-efficacy after problem solving, there was a significant difference between the predominantly positive and negative feedback conditions, both in Experiment 1, F(1, 129) = 75.29, p < 0.001, \({\eta }_{p}^{2}\) = 0.37, and in Experiment 2, F(1, 120) = 136.68, p < 0.001, \({\eta }_{p}^{2}\) = 0.53, indicating large effects. In line with our expectations, participants in the negative feedback condition showed lower levels of self-efficacy after completing the CRT tasks or the weekday problems compared to participants in the positive feedback condition (see Table 1).

Furthermore, feelings of both challenge and threat differed significantly between conditions in Experiment 1 (challenge: F(1, 129) = 28.27, p < 0.001, \({\eta }_{p}^{2}\) = 0.18; threat: F(1, 129) = 50.25, p < 0.001, \({\eta }_{p}^{2}\) = 0.28) and in Experiment 2, (challenge: F(1, 120) = 43.80, p < 0.001, \({\eta }_{p}^{2}\) = 0.27; threat: F(1, 120) = 59.55, p < 0.001, \({\eta }_{p}^{2}\) = 0.33), showing large effects. In line with our expectations, participants in the negative feedback condition reported significantly higher levels of threat and significantly lower levels of challenge compared to participants in the positive feedback condition (see Table 1).

With regard to how much effort participants anticipated a next round of problems would require, there was a large, significant difference between the conditions in Experiment 1, F(1, 129) = 35.10, p < 0.001, \({\eta }_{p}^{2}\) = 0.21, as well as in Experiment 2, F(1, 120) = 17.59, p < 0.001, \({\eta }_{p}^{2}\) = 0.13. In line with our expectations, participants who received predominantly negative feedback expected that the upcoming round of tasks would require more effort compared to participants who received predominantly positive feedback. There were, however, no significant differences in how much effort they reported they would be willing to invest in Experiment 1, F(1, 129) = 0.25, p = 0.619, or Experiment 2, F(1, 120) = 1.17, p = 0.282 (but see the results of the path analyses below).
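The reported effect sizes can be cross-checked against the F values and degrees of freedom, because partial eta squared equals F × df_effect / (F × df_effect + df_error). The sketch below applies this identity to a few of the values reported above; the function name `partial_eta_squared` is ours.

```python
def partial_eta_squared(f_stat, df_effect, df_error):
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_stat * df_effect) / (f_stat * df_effect + df_error)

# F values and degrees of freedom as reported above for Experiments 1 and 2
reported = {
    "self-efficacy after problem solving (Exp. 1)": (75.29, 1, 129),
    "self-efficacy after problem solving (Exp. 2)": (136.68, 1, 120),
    "expected effort required (Exp. 1)": (35.10, 1, 129),
    "expected effort required (Exp. 2)": (17.59, 1, 120),
}
for label, (f_stat, df1, df2) in reported.items():
    print(f"{label}: {partial_eta_squared(f_stat, df1, df2):.2f}")
```

Rounded to two decimals, these reproduce the effect sizes given in the text (0.37, 0.53, 0.21, and 0.13).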

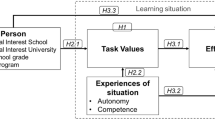

Path Analyses

Table 2 shows the Pearson correlations between the manifest variables in Experiments 1 and 2. Figure 1 shows the results of the path model analyses we conducted, to test how feedback condition affected self-efficacy, challenge, and threat, and how these variables were, in turn, related to expected effort and willingness to invest effort. The results corroborate the findings from the ANOVAs and correlation analyses. That is, in both experiments, positive feedback evoked higher levels of self-efficacy than negative feedback, and self-efficacy was, in turn, negatively associated with expected effort required, but was not significantly associated with willingness to invest effort. Positive feedback also evoked higher levels of challenge, which was not associated with expected effort required, but positively associated with willingness to invest effort. Finally, positive feedback evoked lower levels of threat, which was positively associated with both expected effort (although only significantly in Experiment 1) and, interestingly, with willingness to invest effort (significantly in both Experiments).

Fig. 1 Path analyses Experiment 1 and Experiment 2. Note. Beta values are standardized. Values above the mediators report the bootstrapped indirect effects with 5000 samples. Values in parentheses represent total effects. Black values and solid lines are significant effects with an alpha level of 0.05. Grey values and dashed lines are non-significant effects. Covariances between self-efficacy, challenge, and threat were modeled but are not shown here. Feedback coded 0 = negative, 1 = positive.

Moreover, the results of the path analyses also provide important additional insights on how self-efficacy, challenge, and threat can act as mediator in the relation between feedback and effort. First, the models on expected effort required showed that the significant effects of feedback condition were fully mediated by self-efficacy (in both Experiments) and threat (in Experiment 1). In other words, the differences between conditions in expected effort required were fully explained by the effects of the conditions on self-efficacy and threat (Experiment 1). Second, the significant indirect effects of feedback on willingness to invest effort via challenge and threat, offer an interesting explanation for the fact that feedback condition did not directly affect the willingness to invest effort, as both indirect effects pointed in different directions. Participants who received positive feedback showed relatively high levels of challenge and higher levels of challenge were related to more willingness to invest effort. Participants who received negative feedback showed relatively high levels of threat and higher levels of threat were also related to more willingness to invest effort. In other words, it seems that the feedback evoked different motivational states, but both of these states made participants more willing to invest effort, resulting in a non-significant effect of feedback on willingness to invest effort.
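The cancellation described above can be written out as a standard decomposition of the total effect in a linear path model. The notation is ours and is introduced only for illustration: \(c\) is the total effect of feedback (0 = negative, 1 = positive) on willingness to invest effort, \(c'\) the direct effect, \(a_j\) the effect of feedback on mediator \(j\), and \(b_j\) the association of mediator \(j\) with willingness to invest effort.

\[
c = c' + \sum_{j \in \{\text{self-efficacy},\ \text{challenge},\ \text{threat}\}} a_j b_j
\]

\[
a_{\text{challenge}} > 0,\; b_{\text{challenge}} > 0 \;\Rightarrow\; a_{\text{challenge}} b_{\text{challenge}} > 0,
\qquad
a_{\text{threat}} < 0,\; b_{\text{threat}} > 0 \;\Rightarrow\; a_{\text{threat}} b_{\text{threat}} < 0
\]

Because the two products have opposite signs, they can offset each other, so that \(c\) may be close to zero even though both indirect effects are reliably different from zero.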

Overall, the findings from Experiments 1 and 2 suggest that self-efficacy is important for expected effort required, challenge for willingness to invest effort, and threat for both expected effort required (in Experiment 1) and willingness to invest effort (in both Experiments). However, a limitation of these experiments is that we manipulated feelings of self-efficacy, challenge, and threat by means of the feedback conditions, so an important question is if we would find the same associations in the absence of feedback. Another potential limitation is that we encountered some issues with the factor structure, and the reliability of the challenge factor was not very high. The current challenge/threat items also had some conceptual overlap with self-efficacy, which could explain the very high correlations between those constructs. Although one could argue that self-efficacy, as one of the factors in resource/demand evaluations, should be part of challenge/threat measures, it would be interesting to see if the associations still hold when the measures show less overlap. Moreover, the self-efficacy questionnaire’s model fit was not sufficient, and the questionnaire may have been unnecessarily long in the context of the present tasks, as self-efficacy here mainly centers on participants' confidence in their ability to solve the problems. Therefore, we conducted two follow-up experiments, in which we used different measures of self-efficacy and challenge/threat and added a third, no feedback condition.

Experiment 3 and 4

Experiments 3 and 4 conceptually replicated Experiments 1 and 2, respectively, using the same problem-solving tasks (CRT in Experiment 3 and weekday problems in Experiment 4), with three important differences. First, a no-feedback control condition was added that would allow us to explore whether the relation between self-efficacy, challenge/threat experience, and effort investment would be the same in the absence of feedback. Second, different self-efficacy and challenge/threat questionnaires were used, in which the overlap between those constructs was reduced. We expected to replicate the associations among those constructs and of those constructs with effort investment that we found in Experiments 1 and 2. Third, in Experiments 3 and 4 we not only measured how much effort participants expected they would have to invest and were willing to invest in a new block of similar tasks (anticipatory), but also asked them to rate how much mental effort they had invested in the first block of tasks (retrospective). Based on the findings by Raaijmakers et al. (2017) it was hypothesized that participants who received predominantly positive feedback would report having invested less effort than participants who received predominantly negative feedback.

Method

Participants and Design

In contrast to Experiments 1 and 2, which were conducted in Dutch, Experiments 3 and 4 were conducted in English. Native English-speaking participants who were (under)graduate students and had a Prolific approval rate of 90% were recruited. Experiment 4 was not open to participants who had participated in Experiment 3. Participants could only participate on a tablet or desktop (not mobile phone). They were compensated with 1.50 GBP (i.e., approximately 1.75 Euro and 1.9 USD at the time the study was conducted; the study had an approximate duration of 10 min.).

The experiments had a between-subjects design with three conditions: (1) no feedback, (2) predominantly positive feedback (cf. Experiments 1 and 2), and (3) predominantly negative feedback (cf. Experiments 1 and 2). Participants were randomly assigned to one of the three conditions. G*Power analysis for a one-way ANOVA with three groups and the same settings as in Experiment 1 and 2 showed that 159 participants would be sufficient to detect a feedback effect.

In Experiment 3, 222 eligible participants completed the experiment and consented to use of their data again after having been debriefed. We noticed that 4 participants had participated twice (which must have been due to an error in Prolific) and we removed their second entry. Data from a further 51 participants had to be excluded because of extremely short response times on 4 out of the 5 problems (cf. Experiment 2; n = 38) or extremely long completion time of the experiment (> 3SD above average, computed after removal of the aforementioned participants; n = 3), or because they indicated awareness of the aim of the study (n = 10 after removal of the aforementioned participants). The remaining sample consisted of 167 participants (age range = 18–71, M = 27.28, SD = 9.40, Mdn = 23; gender: 81 male, 84 female, 2 other): 63 in the no feedback condition, 55 in the predominantly positive and 49 in the predominantly negative feedback condition.

In Experiment 4, 223 eligible participants completed the experiment and consented to use of their data again after having been debriefed. Data from 12 participants had to be excluded because they indicated they did not attempt to solve the problems but just guessed (n = 5), because of extremely short response times on 4 out of the 5 problems (cf. Experiment 2; n = 1) or extremely long completion time of the experiment (> 3SD above average, computed after removal of the aforementioned participants; n = 5), or because they indicated awareness of the aim of the study (n = 1 after removal of the aforementioned participants). The remaining sample consisted of 211 participants (age range = 18–57, M = 27.29, SD = 8.72, Mdn = 24; gender: 81 male, 125 female, 5 other): 71 in the no feedback condition, 70 in the predominantly positive and 70 in the predominantly negative feedback condition.
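The participant flow in both experiments can be tallied from the counts given above. The sketch below is only a bookkeeping check; the helper name `remaining_sample` is ours.

```python
def remaining_sample(completed, duplicate_entries, exclusions):
    """Completed entries minus duplicate entries and all excluded participants."""
    return completed - duplicate_entries - sum(exclusions.values())

# Counts as reported in the text
exp3_n = remaining_sample(
    completed=222,
    duplicate_entries=4,
    exclusions={"short response times": 38, "long completion time": 3, "aware of aim": 10},
)
exp4_n = remaining_sample(
    completed=223,
    duplicate_entries=0,
    exclusions={"guessed": 5, "short response times": 1, "long completion time": 5, "aware of aim": 1},
)

# Reported condition sizes (no feedback, positive, negative)
exp3_conditions = {"no feedback": 63, "positive": 55, "negative": 49}
exp4_conditions = {"no feedback": 71, "positive": 70, "negative": 70}

print(exp3_n, sum(exp3_conditions.values()))
print(exp4_n, sum(exp4_conditions.values()))
```

In both experiments, the remaining sample size matches the sum of the three condition sizes (167 and 211, respectively).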

Materials and Measures

In Experiments 3 and 4, all materials were in English. Again, they were presented in Qualtrics Survey Software (https://www.qualtrics.com).

Problem-Solving Tasks

In Experiment 3, the CRT tasks were used (cf. Experiment 1); in Experiment 4, the weekday problems (cf. Experiment 2). All tasks can be found in Appendix 1.

Self-Efficacy Ratings

Self-efficacy was assessed with a single item, prior to problem solving “After reading these examples, how much confidence do you have in your ability to solve these problems?” and after the five problems “Approaching this new round of problem-solving tasks… How much confidence do you have in your ability to solve these problems?”. They answered by clicking a radio button on a 9-point rating scale on which the uneven answer options were labeled: 1 = very, very low; 3 = low; 5 = neither low nor high; 7 = high; 9 = very, very high.

Challenge/Threat Ratings

We used the challenge state and threat state items from Skinner and Brewer (2002). The four threat state items are: “I am concerned that others will be disappointed in my performance”, “I worry that I may not be able to achieve the level of success I am aiming for” (slightly adapted: the original item said “…achieve the grade I am aiming for”), “I’m concerned about my ability to perform under pressure”, and “I am thinking about the consequences of performing poorly”. The four challenge state items are: “I am looking forward to testing my knowledge, skills, and abilities”, “I am focused on the positive benefits I will obtain from this situation”, “I am looking forward to the rewards of success”, and “I am thinking about the consequences of performing well”. Participants answered on a 7-point Likert scale (labeled 1 = strongly disagree; 2 = disagree; 3 = somewhat disagree; 4 = neutral; 5 = somewhat agree; 6 = agree; 7 = strongly agree). A one-factor model did not seem to fit the data. After exploring alternative factor structures (see Appendix 2), a two-factor model fitted the data best, both in Experiment 3, χ2 = 53.93, p < 0.001, CFI = 0.92, TLI = 0.88, RMSEA = 0.11, SRMR = 0.09, with all factor loadings being significant (λ > 0.43), and in Experiment 4, χ2 = 70.15, p < 0.001, CFI = 0.91, TLI = 0.87, RMSEA = 0.11, SRMR = 0.08, with all factor loadings being significant (λ > 0.35). The scales were reliable (Experiment 3: challenge α = 0.71, threat α = 0.85, latent correlation between challenge and threat: 0.09, p = 0.350; Experiment 4: challenge α = 0.71, threat α = 0.85, latent correlation between challenge and threat: -0.15, p = 0.064).
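As an illustration of the reliability coefficient reported above, the following sketch computes Cronbach's α from item-level responses using the standard formula α = k/(k − 1) × (1 − Σ item variances / variance of the sum score). The responses are hypothetical and do not come from the experiments; the function name `cronbach_alpha` is ours.

```python
from statistics import variance

def cronbach_alpha(items):
    """items: one list of scores per item, aligned by respondent."""
    k = len(items)
    # Sum score per respondent across the k items
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))

# Hypothetical responses of six respondents to four 7-point items (NOT study data)
threat_items = [
    [2, 5, 6, 3, 7, 4],
    [1, 5, 7, 3, 6, 4],
    [2, 4, 6, 2, 7, 5],
    [3, 5, 5, 3, 6, 4],
]
print(round(cronbach_alpha(threat_items), 2))
```

The coefficient approaches 1 when respondents answer the items of a scale consistently, and it decreases as the items share less variance.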

Mental Effort Ratings

Participants were asked to rate “How much effort did you invest in solving these problems?” (retrospective; cf. Paas, 1992), as well as “Approaching this new round of problem-solving tasks… How much effort do you expect that these problem-solving tasks will require?” and “Approaching this new round of problem-solving tasks… How much effort are you willing to invest in these problem-solving tasks?” (anticipatory). They answered by clicking a radio button on a 9-point rating scale on which the uneven answer options were labeled: 1 = very, very low; 3 = low; 5 = neither low nor high; 7 = high; 9 = very, very high.

Procedure

The procedure in Experiments 3 and 4 was the same as in Experiment 2, except that those participants assigned to the no-feedback control condition did not receive any feedback on their answers.

Results and Discussion

Effects of Feedback Condition

Table 3 shows the descriptive statistics of all variables in Experiments 3 and 4. Differences among conditions were analyzed with one-way ANOVAs and Bonferroni post-hoc analyses. Again, as one would expect, given the random assignment to conditions, self-efficacy prior to problem solving did not differ significantly among the three conditions (Experiment 3: F(2, 164) = 0.98, p = 0.378; Experiment 4: F(2, 208) = 0.29, p = 0.746), nor did problem-solving performance (Experiment 3: F(2, 164) = 1.78, p = 0.171; Experiment 4: F(2, 208) = 0.45, p = 0.637).

With regard to self-efficacy after problem solving, there was a significant difference among conditions, both in Experiment 3, F(2, 164) = 20.10, p < 0.001, \({\eta }_{p}^{2}\) = 0.20, and in Experiment 4, F(2, 208) = 21.30, p < 0.001, \({\eta }_{p}^{2}\)= 0.17. The post-hoc analyses showed that in line with our expectations (and findings from Experiments 1 and 2), participants’ self-efficacy after problem solving was significantly higher in the positive feedback condition than in the negative feedback (Experiment 3: p < 0.001; Experiment 4: p < 0.001) and no feedback (Experiment 3: p = 0.024; Experiment 4: p = 0.043) conditions, and that it was significantly lower in the negative feedback condition than in the no feedback condition (Experiment 3: p < 0.001; Experiment 4: p < 0.001).

Interestingly, in contrast to our expectations and the findings from Experiments 1 and 2, we did not find a significant effect of feedback condition on challenge, either in Experiment 3, F(2, 164) = 2.41, p = 0.093, or in Experiment 4, F(2, 208) = 1.78, p = 0.172. Possibly, the findings regarding challenge from Experiments 1 and 2 were a result of the inclusion of self-efficacy in the challenge scale in Experiments 1 and 2 (where it consisted of only 2 items, one of which conceptually overlapped with self-efficacy).

In line with our expectations, there was a significant difference in threat experienced across conditions, both in Experiment 3, F(2, 164) = 7.88, p < 0.001, \({\eta }_{p}^{2}\) = 0.09, and in Experiment 4, F(2, 208) = 8.57, p < 0.001, \({\eta }_{p}^{2}\)= 0.08. The post-hoc analyses showed that in Experiment 3, participants in the negative feedback condition reported significantly more threat than participants in the positive feedback condition, p < 0.001 (see Table 3). In Experiment 4, participants in the negative feedback condition reported significantly more threat than participants in the positive feedback condition, p < 0.001 and participants in the no feedback condition, p = 0.049 (see Table 3).

Regarding invested mental effort, we did not find a significant effect of feedback condition either in Experiment 3, F(2, 164) = 1.92, p = 0.151, or in Experiment 4, F(2, 208) = 0.32, p = 0.724, which was surprising, given the findings by Raaijmakers et al. (2017; but see the results of the path analyses below). As for how much effort participants anticipated a next round of problems would require, we did find a significant effect of condition in Experiment 3, F(2, 164) = 5.25, p = 0.006, \({\eta }_{p}^{2}\) = 0.06, but not in Experiment 4, F(2, 208) = 0.86, p = 0.425. Post-hoc analyses revealed that in line with our expectations (and the results from Experiments 1 and 2), participants in the negative feedback condition in Experiment 3 expected that a new round of problems would require significantly more effort than participants in the positive feedback (p = 0.007) and the no feedback (p = 0.039) conditions did. Again, as in Experiments 1 and 2, participants’ willingness to invest effort was not affected by feedback condition, either in Experiment 3, F(2, 164) = 1.78, p = 0.674, or Experiment 4, F(2, 164) = 2.41, p = 0.093 (but see the results of the path analyses below).

Path Analyses

Table 4 shows the Pearson correlations between the manifest variables in Experiments 3 and 4, and Figs. 2 and 3 graphically display the results of path model analyses that tested how the two feedback conditions (negative vs. positive) in Experiments 3 and 4 affected self-efficacy, challenge, and threat, and how these variables were, in turn, related to the three effort variables. Zooming in on the correlations (Table 4) in the no feedback conditions (which are not part of the path models), there are several noteworthy findings. First, in the absence of feedback, and using measures in which the overlap between the constructs was reduced, we still see strong and significant positive associations of self-efficacy after problem solving with challenge and strong and significant negative associations with threat in both experiments. Second, self-efficacy after problem solving was not associated with invested effort, expected effort required, or willingness to invest effort. Third, challenge was positively associated with invested effort, expected effort required, and willingness to invest effort in Experiment 3 but not in Experiment 4. Given that the design and the procedure of both experiments were the same except for the problem-solving tasks, this suggests that the type of task may play a role in the relation between challenge and effort. Fourth, threat was not associated with invested effort, expected effort required, or willingness to invest effort.

Fig. 2 Path analyses Experiment 3. Note. Cf. Figure 1

Fig. 3 Path analyses Experiment 4. Note. Cf. Figure 1

As for the effects of feedback, the path models (Figs. 2 and 3) show that in both experiments, positive feedback evoked higher levels of self-efficacy than negative feedback and self-efficacy was, in turn, positively associated with willingness to invest effort (in Experiment 4, not in 3), but not with invested effort and expected effort required. Feedback did not significantly affect participants’ levels of challenge. Challenge was positively related with willingness to invest effort (in Experiment 4, not in 3), but not with invested and expected effort required.

Moreover, the path models in Experiment 4 (not in 3) provided additional insights on how threat can act as mediator in the relation between feedback and mental effort. First, even though results did not show a direct effect of feedback on effort invested and expected effort required, results did show negative indirect effects of feedback via threat on both mental effort variables: Negative feedback evoked relatively high levels of threat and the participants who showed these higher threat levels reported higher effort invested and expected effort required. For willingness to invest effort, results showed two indirect effects pointing in opposite directions. Participants who received positive feedback showed relatively high levels of self-efficacy after problem solving, and higher levels of self-efficacy were related to more willingness to invest effort. Participants who received negative feedback showed relatively high levels of threat and higher levels of threat were also related to more willingness to invest effort. In other words, it seems that the feedback again evoked two different motivational states, but both of these states made participants more willing to invest effort, resulting in a non-significant effect of feedback on willingness to invest effort.

In sum, for all three effort variables in Experiment 4, we found an indirect effect of feedback via threat, whereas we did not find direct feedback effects on effort. For willingness to invest effort, this could be explained by the two indirect effects in opposing direction. However, this explanation did not apply to the amount of invested effort and the amount of effort expected to be required. Consequently, we speculated that the indirect feedback effects via threat potentially only applied to a specific subgroup of our participants, depending on how the feedback affected participants’ self-efficacy. If the indirect effects indeed only applied to a specific subgroup, this could explain why we did not find significant feedback effects on the effort variables. Therefore, we explored this possibility of moderated mediation. To do so, we added self-efficacy (post) and the interaction between feedback and self-efficacy as predictors of threat to our original path models. In order to claim moderated mediation, both the feedback × self-efficacy effect on threat and the relationship between threat and the effort variables need to be significant (Muller et al., 2005). The results (Fig. 4) showed that self-efficacy indeed moderated the effect of feedback condition on experienced threat: Positive feedback evoked lower levels of threat than negative feedback, but only for participants who experienced high levels of self-efficacy after the feedback (one standard deviation above the mean). Participants who experienced average or low levels of self-efficacy felt more threatened than the group with high self-efficacy in the positive feedback condition, but the amount of experienced threat of these participants did not differ significantly between feedback conditions (see Fig. 5). In line with our previous path models, threat was again positively related to effort invested, expected effort required, and willingness to invest effort. 
Together, these results suggest that self-efficacy indeed moderated the mediation of threat between feedback and the three effort variables. As recommended by Yzerbyt et al. (2018), we additionally computed a moderated mediation index, which directly tested the significance of the product of path a (feedback × self-efficacy on threat) and path b (threat on effort). This index confirmed the moderated mediation in each of the three models, effort invested: 0.36, CI95% [0.09, 0.45]; expected effort: 0.28, CI95% [0.06, 0.38]; willingness to invest effort: 0.32, CI95% [0.07, 0.37]. We used the JSmediation package (Batailler et al., 2023) to test the indirect effects for different values of self-efficacy (see Footnote 5). The indirect effects of feedback via threat on effort were only significant for participants with high levels of self-efficacy after feedback (one standard deviation above the mean), not for participants with average or low levels of self-efficacy after feedback.
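The logic of the moderated mediation index and of the conditional indirect effects can be sketched as follows. This is a minimal illustration with hypothetical coefficients, not the estimates from Experiment 4, and it does not reproduce the JSmediation analysis: in the assumed model, threat is regressed on feedback, self-efficacy, and their product, and effort is regressed on threat. The index is then the product of the interaction path and path b, and the indirect effect at a given self-efficacy value w is (a1 + a3 × w) × b.

```python
def conditional_indirect(a1, a3, b, w):
    """Indirect effect of feedback on effort via threat at moderator value w."""
    return (a1 + a3 * w) * b

def moderated_mediation_index(a3, b):
    """Change in the indirect effect per one-unit increase in the moderator."""
    return a3 * b

# Hypothetical coefficients (NOT the estimates reported for Experiment 4)
a1 = -0.30  # feedback -> threat at the mean of (centered) self-efficacy
a3 = -0.40  # feedback x self-efficacy -> threat
b = 0.50    # threat -> effort
sd_self_efficacy = 1.0  # moderator assumed to be standardized

for label, z in [("-1 SD", -1), ("mean", 0), ("+1 SD", 1)]:
    effect = conditional_indirect(a1, a3, b, z * sd_self_efficacy)
    print(f"{label}: {effect:.3f}")
print(f"index: {moderated_mediation_index(a3, b):.3f}")
```

The sketch yields point estimates only; whether an index or a conditional indirect effect is significant has to be established separately, for instance with bootstrapped confidence intervals.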

Fig. 4 Moderated mediation analyses Experiment 4. Note. Cf. Figure 1

One limitation of these experiments, which could also possibly explain the different pattern of results, is that in Experiment 3, we had to exclude a very large number of participants from the analyses due to extremely short response times (which would give even fast readers insufficient time to read the problems) or because they were aware of the feedback manipulation. This resulted in substantial drop-out from the two feedback conditions, and especially the negative feedback condition. A possible reason could be that the English-speaking Prolific participants may have been familiar with the CRT problems (i.e., even in the remaining sample, 54 of the 167 participants, i.e., 32.3%, indicated they were familiar with the tasks). Although the remaining number of participants was not that far below the required sample size according to the power analysis for the ANOVAs, the path models required more power than we originally anticipated because the factor analyses showed that challenge and threat had to be considered as two separate factors. Assuming 10 participants per estimated parameter (cf. Schreiber et al., 2006), we would have needed 90 participants in the scenario with one challenge/threat score, and 140 participants in the current scenario.
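The rule-of-thumb calculation can be made explicit as follows. The parameter counts of 9 and 14 are not stated in the text; they are inferred here from the reported requirements (90 / 10 and 140 / 10) and should be read as an assumption. The comparison uses the two feedback conditions of Experiment 3, which are the conditions that entered the path models.

```python
def required_n(n_parameters, participants_per_parameter=10):
    """Rule-of-thumb sample size: a fixed number of participants per estimated parameter."""
    return n_parameters * participants_per_parameter

# Parameter counts inferred from the reported totals (assumption, see lead-in)
one_score_model = required_n(9)    # single challenge/threat score
two_factor_model = required_n(14)  # separate challenge and threat factors

# Experiment 3: positive (55) and negative (49) feedback conditions
available = 55 + 49

print(one_score_model, two_factor_model, available)
print("sufficient for two-factor model:", available >= two_factor_model)
```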

General Discussion

We conducted four experiments as an initial test of the idea outlined in the theoretical introduction, that the biopsychosocial model of challenge and threat may provide a useful model to explain individual differences in (anticipated) effort investment. To do so, we manipulated the valence of performance feedback provided after problem-solving tasks and investigated its effect on participants’ challenge and threat experiences and self-efficacy perceptions, and how these, in turn, would be associated with how much effort participants expected they would have to invest and were willing to invest in a new round of the same problems.

Our findings clearly demonstrate the added value of considering challenge and threat motivational states to explain individual differences in effort investment. Had we considered only the effect of feedback conditions on effort investment, we would have concluded 1) that negative feedback leads participants to expect that they will have to invest significantly more effort in future problems than positive feedback (Experiments 1, 2, and 3) or no feedback (Experiment 3) does; and 2) that feedback does not affect the willingness to invest effort in future problems (all four experiments). In fact, however, the effect of feedback on expected effort required was fully mediated and thus explained by participants’ feelings of self-efficacy (in Experiments 1 and 2) and threat (in Experiment 1), and there were significant indirect effects of feedback on willingness to invest effort via challenge (in Experiments 1 and 2) and threat (in all experiments). The indirect effects suggest that positive feedback evoked higher levels of challenge and negative feedback higher levels of threat; because both challenge and threat were associated with higher willingness to invest effort, this may explain why there was no significant direct effect of condition. While the outcomes may be the same, it is important to know the antecedents of willingness to invest more effort, because down the line, doing so out of threat experiences may be less sustainable than doing so out of challenge experiences.

Unfortunately, the outcomes of Experiments 3 & 4 were somewhat inconsistent with each other (which could have been due to high and unequally distributed dropout and familiarity with the tasks in Experiment 3, as discussed earlier) as well as with Experiments 1 & 2. One possible explanation for the latter might lie in the difference in measures of mental effort, self-efficacy and challenge/threat we used in Experiments 1 & 2 vs. Experiments 3 & 4. For instance, in all four experiments, we found significant effects of feedback on self-efficacy after problem-solving, but we only found a significant effect of feedback on challenge in Experiments 1 & 2, where the measurement of the construct strongly overlapped with self-efficacy (i.e., after removal of an item with poor factor loading, the challenge scale consisted of only two items, one of which conceptually overlapped with self-efficacy), and not in Experiments 3 & 4, where this overlap was avoided. This could also explain why there was a high and significant negative latent correlation between challenge and threat in Experiments 1 & 2 but not in Experiments 3 & 4. Although one could argue that self-efficacy, as one of the factors in resource/demand evaluations, should be part of challenge/threat measures, our findings show that it is important to carefully consider which scales to use. The explorative analyses for Experiment 4 also suggest that self-efficacy may moderate the relation between feedback condition and threat, which is important to explore further in future research. Another possible explanation for the differences between Experiments 1 & 2 and Experiments 3 & 4 might lie in the difference in study samples: Dutch-speaking university students from the Netherlands and Belgium in Experiments 1 & 2 and native English-speaking university students from a variety of countries in Experiments 3 & 4.

The no feedback condition included in Experiments 3 & 4 allowed us to investigate relations between challenge, threat, self-efficacy, and effort in the absence of the influence of feedback (i.e., without a significant enhancement or reduction in feelings of challenge/threat and self-efficacy). Self-efficacy after problem solving showed strong positive associations with challenge and strong negative associations with threat (even with our measures in which the overlap between the concepts was reduced) in both experiments, as one would expect. Moreover, Experiment 3 showed that in the absence of feedback, challenge was an important predictor of how much effort participants invested in the problem-solving tasks just completed and how much effort they expected they would have to invest and were willing to invest in upcoming problems. The fact that this was not found in Experiment 4 may suggest that the type of task plays a role in the relation between challenge and effort, which is an interesting issue for future research to address.

Another interesting direction for future research would be the use of physiological (i.e., cardiovascular) measures of challenge and threat states. Although more labor-intensive and costly, future research might benefit from the use of such measures of challenge and threat, as they can be applied during task performance without disrupting focus on the task, and may be less prone to biases than self-reports (Blascovich, 2008; Seery, 2013; Seery & Quinton, 2016). The use of psychophysiological measures is still in its infancy in educational research, but is rapidly gaining interest (e.g., D’Mello et al., 2013; Donker et al., 2024; Järvelä et al., 2021; Hoogerheide et al., 2019; Mainhard et al., 2022; Mason et al., 2018). Thus far, however, emerging research is mostly focused on measuring changes in physiological arousal (by measuring heart rate or electrodermal activity), but arousal measures do not always correlate with performance (Blascovich, 2008; Seery, 2013; Seery & Quinton, 2016; see also Hoogerheide et al., 2019). From a biopsychosocial perspective, this makes sense, as both challenge and threat motivational states arise during motivated performance situations (i.e., goal-relevant situations that require instrumental cognitive responses, thereby leading to task engagement). So, in a threat state, people still engage (which seems to be underlined by our findings that not only higher challenge, but also higher threat was associated with higher willingness to invest effort); they are just more vigilant. Because there is task engagement in both challenge and threat states, both increase heart rate, but they affect performance differently. Thus, specifically measuring challenge/threat states with cardiovascular measures could have more explanatory power.

Finally, feedback valence, as in the present study, is just one aspect of instruction that could enhance challenge/threat responses. Other factors, such as the social context in which a task is performed, could do so as well, and it would be interesting to investigate those further. This could open up possibilities for designing interventions to reduce threat experiences and improve (sustained) effort investment or performance. For instance, research has shown that participants who are or feel observed by others tend to perform better when tasks are well-learned (and presence of others may therefore induce challenge, i.e., to show off), but not when tasks are complex (and presence of others may be seen as a threat, i.e., risk of being perceived as incompetent; Blascovich et al., 1999). Learners experiencing high threat when receiving feedback on their own performance might perhaps benefit more from vicarious learning (cf. Bandura, 1986), that is, observing feedback provided to peer students who are similar to them. However, the extent to which individual students feel challenged or threatened under such conditions will likely differ between students and could be associated with their self-efficacy, so it may be necessary to adapt such interventions to individual learners.

Conclusion

We proposed here, and the results of our experiments confirmed this, that the biopsychosocial model of challenge and threat (Blascovich, 2008; Seery, 2013; Seery & Quinton, 2016) may offer a useful model for research in cognitive load theory and self-regulated learning to study the motivational antecedents of (anticipated) mental effort. Rather than only investigating the effects of different instructional conditions or contexts on groups of learners, it is important to realize that individual learners may show different responses to such conditions or contexts, depending on their self-efficacy and on complex individual resource/demands evaluations that result in challenge or threat motivational states. These, in turn, may affect their effort investment, how much effort they expect to have to invest, and how much effort they are willing to invest, which will ultimately affect their learning outcomes. Understanding why, under seemingly similar instructional conditions and contexts, some students are and remain motivated to invest effort whereas others are not is also practically relevant, as it opens up possibilities for designing interventions to reduce threat experiences, or to adapt instructional conditions or contexts to individual learners.

Notes

Indeed, self-efficacy could also be part of the resource/demand evaluations (with high self-efficacy contributing to resources, and thus to higher challenge and lower threat experiences, although this also depends on the interplay with the other factors that weigh into the resource/demand evaluations) and is included in some self-report instruments of challenge and threat.

Note that Pennycook and Rand (2019) used an open-ended answer format. However, Sirota and Juanchich (2018) investigated whether different answer formats (open-ended, two-item, and four-item multiple choice) would result in differences in correct responses, reliability, and predictive/construct validity, and found no significant differences between the formats, except for a faster response time for multiple-choice formats.

This was done to be able to check for potential a priori self-efficacy differences (even though we did not expect any given the nature of our problem-solving tasks and the random assignment), as these could potentially have affected challenge/threat experiences as well.

In Experiment 1, we had also included items measuring to what extent a certain feeling applied (e.g., approaching these tasks, I am … feeling stimulated [challenge] / … feeling intimidated [threat]; cf. Scholl et al., 2018), but these showed an inconsistent pattern of factor loadings and hence were excluded from the analyses in Experiment 1 and not assessed in Experiment 2.

Note that this package could model only one mediator. Consequently, these specific indirect effects could not be tested in a model where challenge and self-efficacy were included as additional mediators.

References

Agricola, B. T., Prins, F. J., & Sluijsmans, D. M. (2020). Impact of feedback request forms and verbal feedback on higher education students’ feedback perception, self-efficacy, and motivation. Assessment in Education: Principles, Policy & Practice, 27(1), 6–25. https://doi.org/10.1080/0969594X.2019.1688764

Baars, M., & Wijnia, L. (2018). The relation between task-specific motivational profiles and training of self-regulated learning skills. Learning and Individual Differences, 64, 125–137. https://doi.org/10.1016/j.lindif.2018.05.007

Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall.

Bandura, A. (1994). Self-efficacy. In V. S. Ramachaudran (Ed.), Encyclopedia of Human Behavior (Vol. 4, pp. 71–81). Academic Press.

Bandura, A. (1997). Self-efficacy: The exercise of control. W. H. Freeman & Co.

Batailler, C., Muller, D., Yzerbyt, V., & Judd, C. (2023). JSmediation: Mediation analysis using joint significance. R package version 0.2.1. https://CRAN.R-project.org/package=JSmediation

Blascovich, J. (2008). Challenge and threat. In A. J. Elliot (Ed.), Handbook of Approach and Avoidance Motivation (pp. 431–445). Psychology Press.

Blascovich, J., Mendes, W. B., Hunter, S. B., & Salomon, K. (1999). Social “facilitation” as challenge and threat. Journal of Personality and Social Psychology, 77, 68–77. https://doi.org/10.1037/0022-3514.77.1.68

Blascovich, J., Seery, M. D., Mugridge, C. A., Norris, R. K., & Weisbuch, M. (2004). Predicting athletic performance from cardiovascular indexes of challenge and threat. Journal of Experimental Social Psychology, 40(5), 683–688. https://doi.org/10.1016/j.jesp.2003.10.007

D’Mello, S. K., Strain, A. C., Olney, A., & Graesser, A. (2013). Affect, meta-affect, and affect regulation during complex learning. In R. Azevedo & V. Aleven (Eds.), International Handbook of Metacognition and Learning Technologies (pp. 669–681). Springer. https://doi.org/10.1007/978-1-4419-5546-3_44

De Bruin, A. B., Roelle, J., Carpenter, S. K., Baars, M., & EFG-MRE. (2020). Synthesizing cognitive load and self-regulation theory: A theoretical framework and research agenda. Educational Psychology Review, 32, 903–915. https://doi.org/10.1007/s10648-020-09576-4

Donker, M. H., Scheepers, D. T., Van Gog, T., Van den Hove, M., McIntyre, N. A., & Mainhard, T. (2024). Handling demanding situations: Associations between teachers’ interpersonal behavior, physiological responses, and emotions. The Journal of Experimental Education. https://doi.org/10.1080/00220973.2023.2249837

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/bf03193146

Feldon, D. F., Callan, G., Juth, S., & Jeong, S. (2019). Cognitive load as motivational cost. Educational Psychology Review, 31, 319–337. https://doi.org/10.1007/s10648-019-09464-6

Frederick, S. (2005). Cognitive reflection and decision making. Journal of Economic Perspectives, 19(4), 25–42. https://doi.org/10.1257/089533005775196732

Guess, A. M., Lerner, M., Lyons, B., Montgomery, J. M., Nyhan, B., Reifler, J., & Sircar, N. (2020). A digital media literacy intervention increases discernment between mainstream and false news in the United States and India. Proceedings of the National Academy of Sciences, 117(27), 15536–15545. https://doi.org/10.1073/pnas.1920498117

Hase, A., O’Brien, J., Moore, L. J., & Freeman, P. (2019). The relationship between challenge and threat states and performance: A systematic review. Sport, Exercise, and Performance Psychology, 8(2), 123. https://doi.org/10.1037/spy0000132

Hoogerheide, V., Renkl, A., Fiorella, L., Paas, F., & Van Gog, T. (2019). Enhancing example-based learning: Teaching on video increases arousal and improves problem-solving performance. Journal of Educational Psychology, 111, 45–56. https://doi.org/10.1037/edu0000272

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6, 1–55. https://doi.org/10.1080/10705519909540118

Järvelä, S., Malmberg, J., Haataja, E., Sobocinski, M., & Kirschner, P. A. (2021). What multimodal data can tell us about the students’ regulation of their learning process? Learning and Instruction, 72, 101203. https://doi.org/10.1016/j.learninstruc.2019.04.004

Mainhard, T., Donker, M. H., & van Gog, T. (2022). When closeness is effortful: Teachers’ physiological activation undermines positive effects of their closeness on student emotions. British Journal of Educational Psychology, 92(4), 1384–1402. https://doi.org/10.1111/bjep.12506

Marsh, H. W., Pekrun, R., Parker, P. D., Murayama, K., Guo, J., Dicke, T., & Arens, A. K. (2019). The murky distinction between self-concept and self-efficacy: Beware of lurking jingle-jangle fallacies. Journal of Educational Psychology, 111(2), 331. https://doi.org/10.1037/edu0000281

Mason, L., Scrimin, S., Zaccoletti, S., Tornatora, M. C., & Goetz, T. (2018). Webpage reading: Psychophysiological correlates of emotional arousal and regulation predict multiple-text comprehension. Computers in Human Behavior, 87, 317–326. https://doi.org/10.1016/j.chb.2018.05.020

Mendes, W. B., Blascovich, J., Major, B., & Seery, M. (2001). Challenge and threat responses during downward and upward social comparisons. European Journal of Social Psychology, 31, 477–497. https://doi.org/10.1002/ejsp.80

Moore, L. J., Wilson, M. R., Vine, S. J., Coussens, A. H., & Freeman, P. (2013). Champ or chump?: Challenge and threat states during pressurized competition. Journal of Sport and Exercise Psychology, 35(6), 551–562. https://doi.org/10.1123/jsep.35.6.551

Moore, L. J., Vine, S. J., Wilson, M. R., & Freeman, P. (2014). Examining the antecedents of challenge and threat states: The influence of perceived required effort and support availability. International Journal of Psychophysiology, 93(2), 267–273. https://doi.org/10.1016/j.ijpsycho.2014.05.009

Muller, D., Judd, C. M., & Yzerbyt, V. Y. (2005). When moderation is mediated and mediation is moderated. Journal of Personality and Social Psychology, 89, 852–863. https://doi.org/10.1037/0022-3514.89.6.852

Paas, F. G. (1992). Training strategies for attaining transfer of problem-solving skill in statistics: A cognitive-load approach. Journal of Educational Psychology, 84(4), 429. https://doi.org/10.1037/0022-0663.84.4.429

Paas, F. G., & Van Merriënboer, J. J. (1993). The efficiency of instructional conditions: An approach to combine mental effort and performance measures. Human Factors, 35(4), 737–743. https://doi.org/10.1177/001872089303500412

Paas, F., Tuovinen, J. E., Tabbers, H., & Van Gerven, P. W. (2003). Cognitive load measurement as a means to advance cognitive load theory. Educational Psychologist, 38(1), 63–71. https://doi.org/10.1207/S15326985EP3801_8

Paas, F., Tuovinen, J. E., Van Merriënboer, J. J., & Aubteen Darabi, A. (2005). A motivational perspective on the relation between mental effort and performance: Optimizing learner involvement in instruction. Educational Technology Research and Development, 53, 25–34. https://doi.org/10.1007/BF02504795

Pajares, F. (1996). Self-efficacy beliefs in academic settings. Review of Educational Research, 66(4), 543–578. https://doi.org/10.2307/1170653

Pennycook, G., & Rand, D. G. (2019). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. https://doi.org/10.1016/j.cognition.2018.06.011

Pintrich, P. R., & De Groot, E. V. (1990). Motivational and self-regulated learning components of classroom academic performance. Journal of Educational Psychology, 82(1), 33–40. https://doi.org/10.1037/0022-0663.82.1.33

Plass, J. L., & Kalyuga, S. (2019). Four ways of considering emotion in cognitive load theory. Educational Psychology Review, 31, 339–359. https://doi.org/10.1007/s10648-019-09473-5

Raaijmakers, S. F., Baars, M., Schaap, L., Paas, F., & Van Gog, T. (2017). Effects of performance feedback valence on perceptions of invested mental effort. Learning and Instruction, 51, 36–46. https://doi.org/10.1016/j.learninstruc.2016.12.002

Salomon, G. (1984). Television is "easy" and print is "tough": The differential investment of mental effort in learning as a function of perceptions and attributions. Journal of Educational Psychology, 76(4), 647. https://doi.org/10.1037/0022-0663.76.4.647

Scheepers, D., de Wit, F., Ellemers, N., & Sassenberg, K. (2012). Social power makes the heart work more efficiently: Evidence from cardiovascular markers of challenge and threat. Journal of Experimental Social Psychology, 48, 371–374. https://doi.org/10.1016/j.jesp.2011.06.014

Scholl, A., Möller, K., Scheepers, D., Nürk, H. C., & Sassenberg, K. (2017). Physiological threat responses predict number processing. Psychological Research Psychologische Forschung, 81, 278–288. https://doi.org/10.1007/s00426-015-0719-0

Scholl, A., de Wit, F., Ellemers, N., Fetterman, A. K., Sassenberg, K., & Scheepers, D. (2018). The burden of power: Construing power as responsibility (rather than as opportunity) alters threat-challenge responses. Personality and Social Psychology Bulletin, 44(7), 1024–1038. https://doi.org/10.1177/0146167218757452

Schreiber, J. B., Nora, A., Stage, F. K., Barlow, E. A., & King, J. (2006). Reporting structural equation modeling and confirmatory factor analysis results: A review. The Journal of Educational Research, 99(6), 323–338. https://doi.org/10.3200/JOER.99.6.323-338