Abstract

Educational researchers have been confronted with a multitude of definitions of task complexity and a lack of consensus on how to measure it. Using a cognitive load theory-based perspective, we argue that the task complexity that learners experience is based on element interactivity. Element interactivity can be determined by simultaneously considering the structure of the information being processed and the knowledge held in long-term memory of the person processing the information. Although the structure of information in a learning task can easily be quantified by counting the number of interacting information elements, knowledge held in long-term memory can only be estimated using teacher judgment or knowledge tests. In this paper, we describe the different perspectives on task complexity and present some concrete examples from cognitive load research on how to estimate the levels of element interactivity determining intrinsic and extraneous cognitive load. The theoretical and practical implications of the cognitive load perspective of task complexity for instructional design are discussed.


Introduction

Task complexity is a major factor influencing human performance and behaviour. In cognitive load theory (Sweller et al., 1998, 2019), task complexity is measured by counting the number of interactive elements in the learning materials. An element is defined as anything that needs to be processed and learned. Because of the nature of interactivity, elements that interact cannot be processed and learned in isolation but must be processed simultaneously if they are to be understood. The number of elements that must be simultaneously processed in this manner determines cognitive load. Task complexity, based on element interactivity, is fundamental to cognitive load theory, determining all cognitive load effects (Sweller, 2010). Apart from cognitive load theory, many other models have been used to measure task complexity. In this paper, we describe those other models and compare them with the use of levels of element interactivity to determine complexity.

Defining Task Complexity

The first point to note is that task complexity and task difficulty are different concepts, although they are sometimes treated as interchangeable (e.g., Campbell & Ilgen, 1976; Earley, 1985; Huber, 1985; Taylor, 1981). As detailed in the subsequent discussion of element interactivity, we treat them as distinct (Locke et al., 1981). Some tasks may be difficult because of the sheer volume of individual elements involved, yet they are not complex if those elements do not interact with each other. Conversely, tasks involving fewer but interacting elements can be both difficult and complex.

Despite many attempts, there are no widely accepted formulae for determining complexity. Liu and Li (2012) identified 24 distinct definitions (see Table 1), drawn from a broad array of publications. However, these definitions were used to measure tasks outside an educational context. While this number suggests a field in disarray, measures of complexity can broadly be divided into objective measures, which take only the characteristics of the information into account (i.e., structuralist, resource requirement), and subjective measures, which also consider the characteristics of the person dealing with that information (i.e., interaction).

An example of an objective measure was provided by Wood (1986) who considered the number of distinct acts used to perform a task, the number of distinct information cues that must be processed in performing those acts, the relations among acts, information cues and products, and the external factors influencing the relations between acts, information cues and products. Campbell’s (1988) model included the number of potential ways of reaching a desired outcome, the number of desired outcomes, the number of conflicting outcomes, and the clarity of the connections.

Subjective measures include objective measures of complexity along with the subjective consequences for the person processing the information. Some tasks are resource intensive, where the resources may be visual (McCracken & Aldrich, 1984), knowledge-based (Gill, 1996; Kieras & Polson, 1985), or time-based (Nembhard & Osothsilp, 2002), or the tasks may require cognitive effort (Bedny et al., 2012; Chu & Spires, 2000). Other subjective measures emphasise particular interactions between the task and the person. Gonzalez et al. (2005) defined task complexity as the interaction between the task and learners’ characteristics such as experience and prior knowledge, while Funke (2010) explained complexity only in terms of the number of task components that the person sees as relevant to a solution. Gill and Murphy (2011) suggested that task complexity is a construct showing how task characteristics influence the cognitive demands imposed on learners. Halford et al. (1998) focused on the relational complexity of information. For example, processing “restaurant” is a single factor, but choosing a restaurant may depend on the “money” that you have, so the single factor becomes a binary relation (money and restaurant).

While purely objective measures are far easier to define precisely, indeed to the point where they can be defined by formulae, their very objectivity constitutes a flaw. We know that information that is highly complex for one person may be very simple for another, which leads directly to the subjective view of complexity. Unfortunately, the subjective view seems to be no better, with a multitude of definitions and no consensus. Of the 24 definitions, only two refer to learners’ prior knowledge, and it remains unclear how variations in this prior knowledge influence task complexity. At least part of the reason for this lack of consensus is that an abundance of personal characteristics could be relevant to measuring complexity.

If complexity is determined by an interaction between task characteristics, which can be objectively determined, and person characteristics, it is essential to determine the relevant person characteristics. Our knowledge of human cognitive architecture can be used for this purpose. Because of the critical importance of the person when determining task complexity, cognitive load theory has attempted to connect task complexity to the constructs and functions of human cognitive architecture.

Cognitive Load Theory and Human Cognitive Architecture

Human cognitive architecture provides a base for cognitive load theory and in turn, that base may be central to any attempt to determine complexity. The basic outline of human cognitive architecture as used by cognitive load theory is as follows.

Information may be categorised as either biologically primary or secondary (Geary, 2005, 2008, 2012; Geary & Berch, 2016). We have evolved over countless generations to acquire primary information such as learning how to listen and speak a native language. While primary information may be exceptionally complex in terms of information content, we tend not to see it as complex because we have evolved to acquire it easily, automatically, and unconsciously.

Secondary information is acquired for cultural reasons. Despite frequently being less complex than primary information, it is usually much more difficult to acquire, requiring conscious effort. Education and training institutions were developed to assist in the acquisition of biologically secondary information and cognitive load theory is similarly concerned.

Novel, biologically secondary information can be acquired either through problem solving or from other people. We have evolved to acquire information via either route, i.e., both routes are biologically primary, although it is far easier to obtain information from others than to generate it oneself via problem solving. In that sense, the same information obtained from others is likely to be seen as less complex than generating it ourselves during problem solving.

Irrespective of how it is acquired, information must be processed by a working memory that has very limited capacity (Cowan, 2001; Miller, 1956) and duration (Peterson & Peterson, 1959). Once processed in that limited capacity and duration working memory, information is transferred to long-term memory for storage and later use. On receipt of a suitable external signal, relevant stored information can be retrieved from long-term memory back to working memory to generate suitable action. The limits of working memory that apply to novel information do not apply to information that has been organised and stored in long-term memory before being transferred back to working memory to govern action (Ericsson & Kintsch, 1995).

Consideration of this cognitive architecture is essential when determining levels of complexity. The same information can be complex for novices but simple for experts. For the expert readers of this paper, the written word “interactivity” is simple. When seen, it can be almost instantly retrieved from long-term memory as a single, simple element which does not overwhelm working memory. For anyone learning the written English alphabet and language for the first time, the squiggles on the page that make up the word “interactivity” may be complex and impossible to reproduce accurately from memory. Accordingly, complexity must be an amalgam of both the nature of the material and the knowledge of the person processing the material. Both must be considered simultaneously.

Element Interactivity

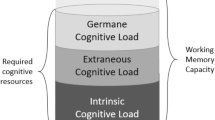

Element interactivity is a cornerstone of cognitive load theory (Sweller, 2010) and is typically applied to biologically secondary information. An element is defined as a concept or procedure that needs to be learned, and what counts as an element depends on a learner’s level of expertise. For example, a novice English learner might perceive the English word “Dog” as consisting of three distinct elements (D, O, G), while a more experienced learner might process it as a single element because of the knowledge held in long-term memory. Interactivity refers to the degree to which multiple elements are intrinsically connected, necessitating their simultaneous processing in working memory for comprehension. Conversely, non-interacting elements can be processed individually without reference to each other. The degree of interaction between elements determines element interactivity. High element interactivity, or complexity, occurs when more elements than can be handled by working memory must be processed concurrently. This concept of element interactivity, deeply rooted in human cognitive architecture, underpins both intrinsic and extraneous cognitive load, which correspond to different categories of element interactivity. Intrinsic cognitive load is related to the inherent complexity of the information, while extraneous cognitive load is associated with the manner in which information is presented or taught.
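The dependence of what counts as an element on prior knowledge can be illustrated with a small sketch. This is not a measurement procedure from cognitive load theory: it assumes, purely for illustration, that a learner’s long-term memory can be represented as a set of known chunks and that the learner segments a word greedily into the longest chunks already known. The function `count_elements` and the chunk sets are hypothetical.

```python
def count_elements(text: str, known_chunks: set[str]) -> int:
    """Count the elements a learner must process for `text`.

    Hypothetical model: the learner matches the longest chunk held in
    long-term memory at each position; anything not matched is processed
    one character at a time.
    """
    text = text.lower()
    chunks = {c.lower() for c in known_chunks}
    i, elements = 0, 0
    while i < len(text):
        # Try the longest known chunk starting at position i.
        for j in range(len(text), i, -1):
            if text[i:j] in chunks:
                i = j
                break
        else:
            # No known chunk starts here: the single character is one element.
            i += 1
        elements += 1
    return elements


novice = {"d", "o", "g"}               # knows the letters only
experienced = {"d", "o", "g", "dog"}   # also holds the word as one unit
print(count_elements("Dog", novice))       # 3
print(count_elements("Dog", experienced))  # 1
```

The same input yields three elements or one depending only on the assumed contents of long-term memory, which is the point the paragraph makes about “Dog”.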

Element Interactivity and Intrinsic Cognitive Load

Intrinsic cognitive load reflects the natural complexity of the learning materials (Sweller, 1994, 2010; Sweller & Chandler, 1994). For a given learner and given learning materials, the level of intrinsic cognitive load is constant. Intrinsic cognitive load can be altered by changing the learning materials or knowledge held in long-term memory (Sweller, 2010).

Memorising a list of names, such as the names of elements in the chemical Periodic Table or the translation of nouns from one natural language to another, is low in element interactivity, as learners can memorise those entities separately and individually without referring to each other. Therefore, when memorising that Na is the symbol for sodium, learners do not need to refer to the fact that Cl is the symbol for chlorine, which results in only one entity being processed in working memory at a given time, imposing a low level of intrinsic cognitive load.

Compared to memorising a list, learning to solve a linear equation, such as, 3x = 9 solve for x, is higher in element interactivity. Learners cannot process 3x = 9, 3x/3 = 9/3, x = 3 separately and individually with understanding. These 15 symbols (i.e., elements) must be processed simultaneously in working memory, which imposes a high level of intrinsic cognitive load.
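The count of 15 can be checked mechanically. The sketch below simply splits the three lines into numerals, variables, and operators; treating each symbol as one element is the novice-level assumption stated in the text, while the tokenising rule itself is our own illustration.

```python
import re

# The three lines a novice must relate to one another, as given in the text.
solution_lines = ["3x = 9", "3x/3 = 9/3", "x = 3"]


def symbols(line: str) -> list[str]:
    """Split a line into numerals, single-letter variables and operators."""
    return re.findall(r"\d+|[a-z]|[=/*+-]", line)


counts = [len(symbols(line)) for line in solution_lines]
print(counts, sum(counts))  # [4, 8, 3] 15
```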

Element Interactivity and Extraneous Cognitive Load

Extraneous cognitive load is imposed because of suboptimal instructional designs, which do not adequately facilitate learning (Sweller, 2010). Cognitive load theory has been used to devise many instructional techniques to reduce extraneous cognitive load. Those instructional procedures are effective because they reduce unnecessary levels of element interactivity.

The worked example effect will be used to demonstrate how different levels of element interactivity can be used to explain extraneous cognitive load. The effect suggests that asking novices to study examples improves learning more than having them solve the equivalent problems (Sweller & Cooper, 1985). Novices solve problems by generating steps using means-ends analysis (Newell & Simon, 1972), which involves considering the current problem state and the goal state, and randomly searching for problem solving operators that will reduce differences between the two states. As indicated below, many moves may need to be considered before a suitable sequence of moves is found, a process that involves a considerable number of interacting elements thus imposing a high working memory load. However, when learning with examples, learners only need to focus on a limited number of moves as the correct solution is provided. The reduction in element interactivity reduces extraneous cognitive load compared to problem solving. Similar explanations are available for other cognitive load effects (Sweller, 2010). Appropriate instructional procedures reduce element interactivity and so reduce complexity.
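The contrast between searching for moves and studying given moves can be sketched with a toy problem. The code uses greedy difference reduction, a deliberately simplified stand-in for means-ends analysis: at each state it tries every operator and keeps the result closest to the goal. The puzzle (reach 11 from 2 using “add 1”, “double”, and “subtract 3”) and all names are hypothetical and not taken from the cognitive load literature.

```python
# Hypothetical toy problem: reach a goal number from a start number.
OPERATORS = {
    "add 1": lambda n: n + 1,
    "double": lambda n: n * 2,
    "subtract 3": lambda n: n - 3,
}


def difference_reduction(start, goal, max_moves=20):
    """Greedy difference reduction, a simplified stand-in for means-ends analysis.

    Returns the states visited and the number of candidate moves that had
    to be considered along the way.
    """
    state, path, considered = start, [start], 0
    for _ in range(max_moves):  # guard: greedy search can stall or cycle
        if state == goal:
            break
        candidates = []
        for op in OPERATORS.values():
            result = op(state)
            considered += 1
            candidates.append((abs(goal - result), result))
        # Smallest remaining difference wins; ties go to the smaller result.
        state = min(candidates)[1]
        path.append(state)
    return path, considered


path, considered = difference_reduction(2, 11)
print(path)        # [2, 4, 8, 9, 10, 11]
print(considered)  # 15
```

A worked example would present only the five moves on the final path, whereas the searcher had to consider 15 candidate moves to find them. Real means-ends analysis also sets subgoals and backtracks from dead ends, which would only increase that gap.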

Task Complexity and Knowledge Complexity

The distinction between task and knowledge complexity is made by distinguishing between intrinsic and extraneous cognitive load. Intrinsic cognitive load covers what needs to be known (knowledge complexity), while extraneous cognitive load covers how it is presented (task complexity). Thus, knowledge complexity, or what needs to be known (intrinsic cognitive load), does not change when problems are presented in different ways, such as presenting them as goal-free problems, but task complexity (extraneous cognitive load) does change.

Expertise, Strategy Use, and Element Interactivity

The levels of the different types of cognitive load are influenced by element interactivity, that is, the number of interacting elements present in learning materials and instructional procedures. Importantly, element interactivity is directly linked to a learner’s level of expertise (Chen et al., 2017). As such, by varying the learners’ expertise, one can indirectly modify the levels of cognitive load, through the alteration of element interactivity levels. Consider, for instance, the case of intrinsic cognitive load. Solving an equation such as 3x = 9 (solve for x) would involve processing 15 interactive elements simultaneously for a novice learner, placing a high demand on their working memory. However, for a knowledgeable learner, relevant knowledge can be retrieved from long-term memory, thereby reducing the number of interactive elements (i.e., lowering the level of element interactivity) and, consequently, the intrinsic cognitive load associated with the task.

With respect to extraneous cognitive load, consider the worked example effect applied to the same problem. Novices learning through problem solving must simultaneously consider the initial problem state, the goal state, and the operators that convert the initial state into the goal state, generating moves by trial and error. This trial-and-error process involves a large number of interacting elements that must be processed in working memory. In contrast, a worked example provides all of these elements in a single package that demonstrates exactly how they interact, thus eliminating the element interactivity associated with trial and error. However, more knowledgeable learners who engage in problem solving can retrieve relevant knowledge from long-term memory, which reduces or eliminates the element interactivity associated with trial and error. This lowers the extraneous cognitive load of problem solving and so reduces or eliminates the advantage of worked examples. The result is the elimination or even reversal of the worked example effect (Chen et al., 2017).

The extraneous cognitive load associated with solving a problem will also be determined by the problem-solving strategies used. Different strategies used to solve the same problem can result in different levels of element interactivity. For example, Ngu et al. (2018) found that students taught to solve algebra transformation problems using the commonly taught “balance” strategy used in this paper (e.g., applying -x to both sides of an equation) had more difficulty solving the problems than students taught to use the “inverse” strategy (moving x from one side of an equation to the other and reversing the sign). The balance strategy requires the manipulation of more elements than the inverse strategy and so imposes a higher cognitive load. Another strategy that can be used to reduce cognitive load is to transfer some of the interacting elements into written form, reducing the number of elements that must be held in working memory (Cary & Carlson, 1999).
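One rough way to see why the balance strategy involves more elements is to count the written symbols each strategy produces for the same equation. The sketch below uses a hypothetical equation, x + 2 = 5, and treats each written symbol as an element; this is only a proxy and is not how Ngu et al. (2018) necessarily estimated element interactivity.

```python
import re


def symbols(line: str) -> list[str]:
    """Split a line into numerals, single-letter variables and operators."""
    return re.findall(r"\d+|[a-z]|[=/*+-]", line)


# Hypothetical full written solutions of x + 2 = 5.
balance = ["x + 2 = 5", "x + 2 - 2 = 5 - 2", "x = 3"]  # subtract 2 from both sides
inverse = ["x + 2 = 5", "x = 5 - 2", "x = 3"]          # move +2 across as -2


def total_symbols(lines: list[str]) -> int:
    return sum(len(symbols(line)) for line in lines)


print(total_symbols(balance), total_symbols(inverse))  # 17 13
```

The extra symbols in the balance solution all come from the operation applied to both sides, which the inverse strategy folds into a single sign change.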

The effect of expertise levels on element interactivity and extraneous cognitive load can be seen when considering how learners semantically code problems, an example of strategy use. As indicated by Gros et al. (2020, 2021), learners with varying expertise levels may semantically code the same problem differently. For instance, novices might encode irrelevant features embedded in the problem statement, which can obstruct learning and problem-solving by imposing an extraneous cognitive load. This occurs because novices process these extraneous elements in their working memory, thereby increasing the levels of element interactivity. In contrast, knowledgeable learners, with more domain-specific knowledge, can more efficiently code problems for learning and solving. They can concentrate solely on elements beneficial for problem-solving and their intrinsic relationships, which reduces both the levels of element interactivity and extraneous cognitive load. Therefore, when measuring element interactivity of learning materials and instructional procedures for cognitive load, it is essential to consider the learners’ expertise levels. This issue is discussed in more detail when considering the measurement of element interactivity below.

The Distinction Between Element Interactivity and Task Difficulty

Element interactivity and task difficulty are different concepts. There are two reasons information might be difficult. First, many elements may need to be learned even though they do not interact. For instance, learning the symbols of the periodic table in chemistry is a difficult, but not a complex task. Although it is difficult, the element interactivity is low because learners can study the symbols individually and separately. Consequently, this task does not impose a heavy working memory load.

Second, fewer elements may need to be learned but the elements interact imposing a heavy working memory load. Such tasks also are difficult, but they are difficult for a different reason. Their difficulty does not stem from the large number of elements with which the learner must deal, but rather the fact that the elements need to be dealt with simultaneously by our limited working memory. The total number of elements may be relatively small, but the interactivity of the elements renders the tasks difficult.
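The two sources of difficulty can be separated with a simple data structure. The sketch assumes, for illustration only, that a task can be partitioned into groups of elements where elements within a group interact and elements in different groups do not. Total elements then approximates difficulty due to volume, while the largest group approximates element interactivity. The class `Task` and the group contents are hypothetical; the periodic-table task lists only a handful of symbols.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """A task modelled as groups of elements.

    Elements in the same group interact and must be processed together;
    elements in different groups can be learned separately.
    """
    name: str
    groups: list[list[str]]

    def total_elements(self) -> int:
        # Sheer volume of material: one source of difficulty.
        return sum(len(group) for group in self.groups)

    def element_interactivity(self) -> int:
        # Largest set of elements that must be held in working memory at once.
        return max(len(group) for group in self.groups)


# Each symbol-name pair is learned on its own, one entity at a time.
periodic = Task("periodic table symbols",
                [["Na = sodium"], ["Cl = chlorine"], ["K = potassium"], ["Fe = iron"]])
# All 15 symbols of 3x = 9, 3x/3 = 9/3, x = 3 must be related together.
equation = Task("3x = 9, solve for x",
                [["3", "x", "=", "9", "3", "x", "/", "3", "=", "9", "/", "3", "x", "=", "3"]])

print(periodic.total_elements(), periodic.element_interactivity())  # 4 1
print(equation.total_elements(), equation.element_interactivity())  # 15 15
```

Adding the rest of the periodic table would raise `total_elements` without changing `element_interactivity`, which is the sense in which that task is difficult but not complex.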

Of course, some tasks include many elements that need to be learned and, in addition, those elements interact. Such tasks are exceptionally difficult and are likely to be learnable only by first learning the individual elements by rote and subsequently learning how they interact in combination. In effect, the interacting elements of the task are initially treated as though they do not interact. Understanding occurs once the interacting elements can be combined into a single entity.

When interacting elements have been combined into a single element and stored in long-term memory, information that previously was high in element interactivity is transformed into low element interactivity information. We determine element interactivity by determining what constitutes an element for our students given their prior knowledge. That element may constitute many interacting elements for less knowledgeable students. To measure the element interactivity of information being presented to students, we must determine the number of new interacting elements that our students need to process.

Measuring Element Interactivity

It follows from the above that what constitutes an element is an amalgam of the structure of the information and the knowledge of the learner. Accordingly, to measure element interactivity as defined above and applied to biologically secondary information, we need to simultaneously consider the structure of the information being processed and the knowledge held in long-term memory of the person processing the information. The only metric currently available to accomplish this aim is to count the number of assumed interacting elements. The accuracy of this count depends heavily on how precisely the knowledge stored in a learner’s long-term memory is measured. As previously discussed, variations in a learner’s level of expertise influence the levels of element interactivity. While precise measures are unavailable, there are usable estimates. Those estimates depend on instructors being aware of the knowledge levels of the students for whom the instruction is intended and knowing the characteristics of the information being processed under different instructional procedures.

It is important to note that the chosen method for measuring prior knowledge should align with the research goals and the nature of the subject matter. Various methods have been developed to measure prior knowledge, each with its own strengths and limitations. Traditional assessments often involve pre-tests or diagnostic tests, which provide a quantitative measure of what the learner already knows about a specific topic (Tobias & Everson, 2002). In addition, concept mapping, as proposed by Novak (1990), can be used to represent visually a learner’s understanding and knowledge structure of a specific topic. This method can capture more nuanced aspects of prior knowledge, such as the relationships between concepts. Furthermore, self-assessment methods, where learners rate their own understanding of a topic, can offer valuable insights, though they may be subject to biases (Boud & Falchikov, 1989). Lastly, interviews and discussions provide a qualitative approach to understanding a learner’s prior knowledge, offering depth and context that other methods might miss (Mason, 2002). However, these methods can be time-consuming and require careful interpretation.

In this section, we will provide some concrete examples from cognitive load theory–based experiments on how to estimate the levels of element interactivity determining intrinsic and extraneous cognitive load.

Intrinsic Cognitive Load

Estimating the level of element interactivity for intrinsic cognitive load has been demonstrated in many cognitive load theory experiments, and this a priori analysis technique is a relatively mature process. The first attempt to measure element interactivity contributing to intrinsic cognitive load was provided by Sweller and Chandler (1994). In four experiments, they studied learning to use a variety of computer programs. They found that the two extraneous cognitive load effects they were investigating, the split-attention and redundancy effects, could be readily obtained with large effect sizes for information that had a high level of element interactivity associated with intrinsic cognitive load, but disappeared entirely for information for which that element interactivity was low. They estimated element interactivity simply by counting the number of interacting elements faced by novice students. The procedure can be demonstrated using the example of students learning to solve problems such as a/b = c, solve for a. The numbers in the following example refer to the increasing count of interacting elements.

The denominator b (7) can be removed (8) by multiplying the left side of the equation by b (9) resulting in ab/b (10). Since the left side has been multiplied by b, in order to make the equation equal (11), the right side (12) also must be multiplied by b (13), resulting in ab/b = cb (20). On the left side (21), the b (22) in the numerator (23) can cancel out (24) the b in the denominator (25) leaving “a” (26) isolated (27) on the left side. The equation a = cb solves the problem (28).

These 28 elements all interact. To understand this solution, at some point they all must be processed simultaneously in working memory. For a novice who is learning this procedure, the working memory load can be overwhelming. For an expert who holds the entire solution in long-term memory ready to be transferred to working memory, the equation and its solution are likely to impose an element interactivity count of only 1 (i.e., retrieving the knowledge stored in long-term memory as a single entity). Without taking human cognitive architecture into account, the complexity of the task cannot be calculated.
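The running count above can be reproduced as a simple tally. The text begins its count at 7, so the sketch assumes that elements 1 to 6 belong to the problem statement, which is not itemised here; the step labels paraphrase the text, and the single expert element reflects retrieval of the whole solution as one entity.

```python
# Running count of interacting elements for a/b = c, solve for a.
# Assumption: elements 1-6 (not itemised in the text) come from the problem statement.
PROBLEM_STATEMENT = 6

steps = [
    ("the denominator b", 1),
    ("can be removed", 1),
    ("multiply the left side by b", 1),
    ("resulting in ab/b", 1),
    ("keep the equation equal", 1),
    ("the right side", 1),
    ("also multiplied by b", 1),
    ("ab/b = cb", 7),  # a, b, /, b, =, c, b
    ("on the left side", 1),
    ("the b", 1),
    ("in the numerator", 1),
    ("cancels out", 1),
    ("the b in the denominator", 1),
    ("leaving a", 1),
    ("isolated", 1),
    ("a = cb solves the problem", 1),
]

novice_count = PROBLEM_STATEMENT + sum(n for _, n in steps)
expert_count = 1  # whole solution retrieved from long-term memory as one entity
print(novice_count, expert_count)  # 28 1
```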

Over the years, there have been many demonstrations of estimating levels of element interactivity associated with intrinsic cognitive load. For example, in Chen et al.’s (2015) experiments in the domain of geometry, learners were asked either to memorise some mathematics formulae or to calculate the area of a composite shape. The materials for memorisation were estimated to be low in element interactivity. For instance, memorising the formula for the area of a parallelogram, base × height, involved 4 interacting elements for a novice: the area, the base, the multiplication relation, and the height. However, when novices needed to calculate the area of a composite shape, element interactivity was higher than when memorising formulae.

Figure 1 provides an example. First, learners needed to identify the rhombus, including its four equal-length lines, including the missing line, FC (5). They then needed to identify the trapezium, including its four lines, again including the missing line, FC (10). Another two elements were concerned with calculating the areas of both shapes (12). To calculate the two areas, the mathematics symbols involved in the different formulae had to be processed, namely, the meaning of a, b and the multiplication function in the rhombus formula, as well as the meaning of a, b, and h with addition, multiplication, and division by 2 in the trapezium formula (22). Finally, adding the two separate area values together involved another 3 elements, giving in total about 25 interacting elements.

Example of material used in Chen et al.’s (2015) study
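The running totals in the composite-shape example (5, 10, 12, 22, 25) can be reproduced with a tally. The text gives a single total of 22 after both formulas; the split between them is our assumption: 3 elements for the rhombus formula, leaving 7 for the trapezium formula if division and the number 2 are counted separately.

```python
# Running tally of interacting elements for the composite-shape problem,
# following the order in which the text introduces them.
tally = [
    ("identify the rhombus and its four sides, including FC", 1 + 4),
    ("identify the trapezium and its four sides, including FC", 1 + 4),
    ("calculate the areas of both shapes", 2),
    ("rhombus formula: a, b and multiplication", 3),
    # Assumption: the text reaches 22 after both formulas (12 + 10), so with
    # 3 rhombus symbols we count 7 here: a, b, h, +, x, division and the 2.
    ("trapezium formula symbols", 7),
    ("add the two area values", 3),
]

running_totals = []
total = 0
for step, n in tally:
    total += n
    running_totals.append(total)

print(running_totals)  # [5, 10, 12, 15, 22, 25]
```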

In contrast, Chen et al. (2016) suggested the interactive elements for memorising trigonometry formulae were low in element interactivity. For example, to memorise sin (A+B) = sin(A)cos(B) + cos(A)sin(B), there are many elements but each of the elements can be memorised independently of the others because they do not interact. Remembering or forgetting an element should not affect the memorisation of any of the other elements. Therefore, the element interactivity count is 1 despite the many elements. However, simplifying a trigonometry formula is high in element interactivity, compared to memorising the formulae.

Leahy and Sweller (2019) attempted to calculate the number of interacting elements in a non-STEM domain, teaching learners how to write puzzle poems following several rules (see Figure 2). The 1st element was reading the sentence “To write one you need to follow all these rules”; the 2nd element was “There must be 6 lines in the poem”; the 3rd and 4th elements were the arrow linking the 3rd rule with the underlined word; the 5th, 6th and 7th elements were the 4th rule (1 element) and the two arrows linking it with lines 1 and 3 (2 elements); similarly, the 8th, 9th and 10th elements were the 5th rule and the two arrows linking it with lines 4 and 6; the 11th and 12th elements were the next arrow linking the word “SELMAN” with the 6th rule; and the 13th and 14th elements were the last arrow linking the last rule and “the word is a theorist”. Based on this count, there were 14 interacting elements, suggesting that this material was high in element interactivity.

Example of material used in Leahy and Sweller’s (2019) study
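Grouping the puzzle-poem elements as the text lists them makes the total of 14 easier to follow. The labels below paraphrase the text; the grouping into rules and arrows is our reading of its description of Figure 2.

```python
# Interacting elements in the puzzle-poem material, grouped as the text lists them.
poem_elements = [
    ("sentence: 'To write one you need to follow all these rules'", 1),
    ("rule: 'There must be 6 lines in the poem'", 1),
    ("3rd rule linked by an arrow to the underlined word", 2),
    ("4th rule plus arrows to lines 1 and 3", 1 + 2),
    ("5th rule plus arrows to lines 4 and 6", 1 + 2),
    ("arrow linking 'SELMAN' with the 6th rule", 2),
    ("arrow linking the last rule and 'the word is a theorist'", 2),
]

total = sum(n for _, n in poem_elements)
print(total)  # 14
```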

Measuring the element interactivity for intrinsic cognitive load could also be applied to expository text. For example, the material used by Chen et al. (under review) was a passage about the Kleefstra syndrome:

“Kleefstra syndrome is a recently recognised rare genetic syndrome (1) caused by a deletion of the chromosomal region 9q34.3 (~50% of affected individuals) (2) or heterozygous pathogenic variant of the EHMT1 gene (~50% of affected individuals) (3). The clinical phenotype of Kleefstra syndrome (the group of clinical characteristics that more frequently occur in individuals with Kleefstra syndrome than those without Kleefstra syndrome) is well documented (3). Prominent characteristics include severe developmental delay/intellectual disability (4), lack of expressive speech (5), distinctive facial features (6) and motor difficulties (7). Regression of functioning is another hallmark trait of Kleefstra syndrome (8) causing individuals to lose previously obtained daily functioning as they age (9). Unlike the clinical phenotype, the behavioural phenotype of Kleefstra syndrome is not well established (10) although frequently reported behaviours include emotional outbursts (11), self-injurious behaviour (especially hand biting) (12) and ‘autistic-like’ behaviour (including avoidance of eye contact and stereotypies) (13). However, the presence and severity of these behaviours are inconsistently reported throughout past research (14) and contradictory behavioural observations are commonplace (15), for example, ‘autistic-like’ behaviour is reported in Kleefstra syndrome alongside partially intact social communication skills (16).” Element interactivity for this material was estimated by counting idea units and thought groups. There were at least 16 interacting elements for novices to learn, which renders the material high in element interactivity.

Extraneous Cognitive Load

While Sweller and Chandler (1994) occasionally mentioned that differences in extraneous cognitive load are also due to differences in element interactivity, they made no attempt to provide details. Sweller (2010) did provide such details but gave no examples of how to calculate levels of element interactivity for extraneous cognitive load.

The worked example effect facilitates learning by reducing the number of interacting elements compared with problem solving. For example, a worked example showing how to solve a/b = c for a requires students to study:

a/b = c
ab/b = cb
a = cb

For a novice to fully understand this worked example requires the person to process the 28 elements indicated above, a procedure that far exceeds available working memory. For this reason, many novices studying algebra struggle even when presented with worked examples. Fortunately, there is an alternative that most students are likely to use. When studying this example, at any given time, novices only need to process in working memory as few elements as they wish. For example, they do not have to decide what the first move should be, by what process they should generate that move, or how that move may relate to the goal of the problem. The worked example provides all that information so that at any given time, they can focus just on a limited number of elements and ignore all the others.

In contrast, when novices engage in problem solving, there are limits to the extent that they can concentrate on part of the problem while ignoring other parts. Not only must they consider all 28 elements but, until they have fully solved the problem, they cannot know whether the elements they are processing are needed to solve it, so they are likely to process some elements that lead to a dead end.

Novices are likely to use a means-ends strategy that requires them to consider the entire problem including where they are now, where they need to go and how to generate moves that might allow them to accomplish this end (Sweller et al., 1983). A means-ends strategy never guarantees that the shortest route to a solution is being followed.

Problem solvers will, of course, write down many of their steps to reduce their working memory load, but until they have reached the goal, they cannot know whether the steps they have written down are on the route to the goal. Whatever route they follow, including dead ends, they will need to process vastly more elements than students studying a worked example.
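The contrast between studying a worked example and searching with a means-ends strategy can be sketched with a toy number-transformation problem. Everything here is a hypothetical illustration rather than a task or measure from the studies cited: the operators (multiply by 3, subtract 29), the start and goal values, and the use of "states examined" as a rough stand-in for elements processed are all assumptions. At each state the search first tries the move that leaves the smallest difference from the goal, and it backtracks from dead ends when its move budget runs out.

```python
from typing import Callable

# Hypothetical toy problem: transform a start number into a goal number.
Operator = tuple[str, Callable[[int], int]]
OPERATORS: list[Operator] = [
    ("x3", lambda n: n * 3),
    ("-29", lambda n: n - 29),
]


def means_ends_search(state: int, goal: int, moves_left: int,
                      examined: list[int], path: list[str]) -> bool:
    """Depth-limited search that orders moves by how much they reduce the
    distance to the goal (a simple means-ends heuristic). Every state the
    solver has to consider, including dead ends, is appended to `examined`."""
    examined.append(state)
    if state == goal:
        return True
    if moves_left == 0:
        return False  # dead end: no moves left and the goal was not reached
    # Try the move that leaves the smallest remaining difference first.
    ranked = sorted(OPERATORS, key=lambda op: abs(goal - op[1](state)))
    for name, apply_op in ranked:
        path.append(name)
        if means_ends_search(apply_op(state), goal, moves_left - 1, examined, path):
            return True
        path.pop()  # backtrack: this move did not lead to the goal
    return False


def study_worked_example(start: int, steps: list[str]) -> list[int]:
    """A worked example supplies the moves, so only the states on the
    solution path need to be considered."""
    lookup = dict(OPERATORS)
    states = [start]
    for name in steps:
        states.append(lookup[name](states[-1]))
    return states


if __name__ == "__main__":
    examined: list[int] = []
    path: list[str] = []
    means_ends_search(12, 7, 2, examined, path)
    print("means-ends path:", path)       # ['x3', '-29']
    print("states examined:", examined)   # [12, -17, -46, -51, 36, 7]
    print("worked example:", study_worked_example(12, ["x3", "-29"]))  # [12, 36, 7]
```

In this toy run the heuristic's first choice (subtract 29) brings the solver numerically closer to the goal but leads to a dead end, so six states are examined to find a two-move solution, whereas the worked example involves only the three states on the solution path.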

The split-attention effect suggests that physically integrating text with diagrams should enhance learning compared with requiring learners to integrate them mentally (Chandler & Sweller, 1992). Consider teaching the solution of the geometry problem of Figure 3. The physically integrated format of Figure 3b allows learners to attend to the text and the diagram without randomly searching for and mapping each step, as the split-attention format of Figure 3a requires. For the integrated format, depending on knowledge levels, there may be only two elements, namely the two steps integrated with the diagram.

For the split-attention format, learners need to read the problem statement and search for the goal angle on the diagram (2 or more elements depending on how many angles are searched before the correct one is found); similarly, search for angles ABC, BAC, and ACB before combining them into the equation (4 elements); then reconfirm that angle DBE is the goal angle before noting it is equal to angle ABC (2 elements). There are likely to be 8 elements that need to be processed using the split-attention format, with most of the elements associated with searching for angles. These 8 elements can be compared with the 2 elements required for the integrated format.
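The tally above can be written out explicitly. This is a minimal sketch: the per-step counts come from the text, while the rule that each extra angle inspected during the initial search adds exactly one element is an assumption made here to show why 8 is the minimum for the split-attention format under this counting.

```python
def split_attention_elements(extra_angles_searched: int = 0) -> int:
    """Estimated elements for the split-attention format of Figure 3a.

    `extra_angles_searched` is a hypothetical parameter: the number of wrong
    angles inspected before the goal angle is found, assumed to add one
    element each to the "2 or more" elements of the first step."""
    if extra_angles_searched < 0:
        raise ValueError("extra_angles_searched must be non-negative")
    steps = {
        "read problem statement and locate goal angle": 2 + extra_angles_searched,
        "locate ABC, BAC, ACB and combine into the equation": 4,
        "reconfirm goal angle DBE and note DBE = ABC": 2,
    }
    return sum(steps.values())


def integrated_elements() -> int:
    # Two solution steps, each physically integrated with the diagram (Figure 3b).
    return 2


if __name__ == "__main__":
    print(split_attention_elements())   # 8
    print(split_attention_elements(3))  # 11
    print(integrated_elements())        # 2
```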

Theoretical and Practical Contributions

Theoretical Implications

This review offers several key theoretical contributions. First, a survey of task complexity frameworks shows that most rely on objective measures and neglect the influence of learner expertise, a crucial factor in determining task complexity. Cognitive load theory, by considering the interaction between element interactivity and learner expertise, may address this limitation of other frameworks. Second, while the relations among element interactivity, intrinsic cognitive load, and extraneous cognitive load have been established and thoroughly explained (Sweller, 2010), it remains unclear how to estimate element interactivity for different tasks. Moreover, previous studies have predominantly used element interactivity to quantify intrinsic cognitive load, often overlooking the quantification of extraneous cognitive load in terms of element interactivity. This review provides examples of how to estimate element interactivity for both intrinsic and extraneous cognitive load across different tasks, thereby extending prior research on element interactivity.

Element interactivity can be used to estimate the informational complexity people experience when they process information, especially when learning. Applying the concept of element interactivity to estimate informational complexity is not restricted to cognitive load theory; it also offers other frameworks a tool to quantify the complexity of information as perceived by learners. Element interactivity is not a precise measure of complexity because human cognitive architecture mandates that the complexity humans experience is a simultaneous mixture of the nature of the information and the contents of long-term memory of the person processing it. Nevertheless, provided we can estimate the knowledge held in long-term memory of the individual processing the information, we can use that estimate to gauge the working memory load imposed by both intrinsic and extraneous cognitive load. Such estimates have practical implications for both the design of experiments and the interpretation of their results. Importantly, those estimates also have implications for instructional design.

Practical Implications

With respect to experimentation, because element interactivity as a measure of complexity is always an estimate rather than an exact measure, the effects of relatively small differences in element interactivity on experimental results are not likely to be visible. In contrast, the effects of very large differences in element interactivity can be readily demonstrated. The fact that measures of element interactivity are only estimates rather than precise measures becomes irrelevant if we are dealing with very large differences. We may not know the exact level of element interactivity, but we know there are large differences. Accordingly, most experiments ensure that only very large differences in element interactivity are studied.
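The point that imprecise estimates matter little when differences are large can be illustrated with a simple non-overlap check. This is a hypothetical sketch, not a procedure used in cognitive load research: each estimate carries an assumed error margin, and two conditions are treated as clearly ordered only when their ranges do not overlap. It is a conservative heuristic, not a statistical test, and all numbers are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractivityEstimate:
    """An element interactivity estimate with a hypothetical error margin."""
    point: float
    margin: float  # assumed half-width of the uncertainty around the estimate

    @property
    def low(self) -> float:
        return self.point - self.margin

    @property
    def high(self) -> float:
        return self.point + self.margin


def clearly_different(a: InteractivityEstimate, b: InteractivityEstimate) -> bool:
    """True when the two uncertainty ranges do not overlap, i.e. the ordering
    of the two conditions holds whatever the exact values are within range."""
    return a.high < b.low or b.high < a.low


if __name__ == "__main__":
    # Hypothetical values chosen only for illustration.
    small_gap = (InteractivityEstimate(8, 3), InteractivityEstimate(10, 3))
    large_gap = (InteractivityEstimate(3, 2), InteractivityEstimate(28, 8))
    print(clearly_different(*small_gap))  # False
    print(clearly_different(*large_gap))  # True
```

Even with generous error margins, the large gap survives, while the small gap cannot be distinguished from no difference at all.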

Differences in element interactivity can be due to the use of different materials, different levels of expertise, or both. An experiment in which students learn lists of unrelated information, such as the symbols of the chemical periodic table (a low element interactivity task), may yield different results from an experiment teaching students how to balance chemical equations (a high element interactivity task). Similarly, an experiment that tests students who can easily balance chemical equations is likely to yield different results from the same experiment testing students who are just beginning to learn how to balance such equations.
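How prior knowledge changes the number of elements that must be processed together can be sketched with a simple chunking model. The model and its names are assumptions made for illustration: a task is a set of interacting elements, each schema a learner holds in long-term memory is the set of elements it covers, and a schema whose elements all appear in the task is processed as a single element. The element labels are hypothetical and do not correspond to any particular chemistry task.

```python
def effective_elements(task: set[str], schemas: list[frozenset[str]]) -> int:
    """Count the units a learner must hold in working memory at once.

    Hypothetical model: every held schema that fully covers part of the task
    collapses those elements into one chunk; uncovered elements count singly."""
    remaining = set(task)
    chunks = 0
    # Apply larger schemas first so broad knowledge is preferred over fragments.
    for schema in sorted(schemas, key=len, reverse=True):
        if schema and schema <= remaining:
            remaining -= schema
            chunks += 1
    return len(remaining) + chunks


if __name__ == "__main__":
    task = {f"e{i}" for i in range(1, 7)}  # six hypothetical interacting elements
    novice: list[frozenset[str]] = []
    intermediate = [frozenset({"e1", "e2", "e3"})]
    expert = [frozenset(task)]
    print(effective_elements(task, novice))        # 6
    print(effective_elements(task, intermediate))  # 4
    print(effective_elements(task, expert))        # 1
```

Under these assumptions the same six-element task imposes 6, 4, or 1 units depending on the learner's knowledge, which is why identical materials can behave as high element interactivity for beginners and low element interactivity for students who have mastered them.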

These different experimental effects feed directly into instructional recommendations. Because large differences in element interactivity have very large effects on learning, we cannot afford to ignore those differences when designing instruction. Most cognitive load theory effects disappear or even reverse when students study low element interactivity information, leading to the expertise reversal effect (Chen et al., 2017). As element interactivity decreases, the size of effects decreases and may reverse. Accordingly, recommended instructional procedures depend heavily on element interactivity. Furthermore, the suitability of an instructional procedure changes not only as the nature of the information changes: the same information may be suitable for students with one level of knowledge and unsuitable for students with another, because levels of element interactivity change both with the nature of the information and with the knowledge levels of learners. Either element interactivity or another measure of complexity that similarly takes into account the characteristics of human cognitive architecture is an essential ingredient of a viable instructional design science. At present, with the exception of element interactivity, the many measures of complexity ignore the intricate consequences of the flow of information between working and long-term memory.

Limitations

Despite the fact that the use of element interactivity to measure task complexity incorporates both objective (i.e., tasks) and subjective (i.e., learners’ expertise) factors, the estimation of the number of interacting elements in a task may not always be accurate due to the inherent difficulties in precisely measuring learners’ knowledge. Furthermore, the emphasis on estimating element interactivity has primarily been on well-structured tasks, such as mathematics, with fewer examples provided for expository materials.

Conclusions

In conclusion, while measuring complexity using element interactivity is not exact, it is usable. In the absence of other measures of complexity that also take human cognitive architecture into account, we believe the use of element interactivity or a very similar measure is unavoidable.

References

Bedny, G. Z., Karwowski, W., & Bedny, I. S. (2012). Complexity evaluation of computer-based tasks. International Journal of Human-Computer Interaction, 28(4), 236–257.

Boud, D., & Falchikov, N. (1989). Quantitative studies of student self-assessment in higher education: A critical analysis of findings. Higher Education, 18(5), 529–549.

Campbell, D. J. (1988). Task complexity: A review and analysis. Academy of Management Review, 13(1), 40–52.

Campbell, D. J., & Ilgen, D. R. (1976). Additive effects of task difficulty and goal setting on subsequent task performance. Journal of Applied Psychology, 61(3), 319–324.

Cary, M., & Carlson, R. A. (1999). External support and the development of problem-solving routines. Journal of Experimental Psychology: Learning, Memory, & Cognition, 25, 1053–1070.

Chandler, P., & Sweller, J. (1992). The split-attention effect as a factor in the design of instruction. British Journal of Educational Psychology, 62(2), 233–246.

Chen, O., Kalyuga, S., & Sweller, J. (2015). The worked example effect, the generation effect, and element interactivity. Journal of Educational Psychology, 107(3), 689–704.

Chen, O., Kalyuga, S., & Sweller, J. (2016). Relations between the worked example and generation effects on immediate and delayed tests. Learning and Instruction, 45, 20–30.

Chen, O., Kalyuga, S., & Sweller, J. (2017). The expertise reversal effect is a variant of the more general element interactivity effect. Educational Psychology Review, 29, 393–405.

Chu, P. C., & Spires, E. E. (2000). The joint effects of effort and quality on decision strategy choice with computerized decision aids. Decision Sciences, 31(2), 259–292.

Cowan, N. (2001). The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behavioral and Brain Sciences, 24(1), 87–114.

Earley, P. C. (1985). Influence of information, choice and task complexity upon goal acceptance, performance, and personal goals. Journal of Applied Psychology, 70(3), 481–491.

Ericsson, K. A., & Kintsch, W. (1995). Long-term working memory. Psychological Review, 102(2), 211–245.

Funke, J. (2010). Complex problem solving: A case for complex cognition. Cognitive Processing, 11, 133–142.

Geary, D. (2005). The origin of mind: Evolution of brain, cognition, and general intelligence. American Psychological Association.

Geary, D. (2008). An evolutionarily informed education science. Educational Psychologist, 43, 179–195.

Geary, D. (2012). Evolutionary educational psychology. In K. Harris, S. Graham, & T. Urdan (Eds.), APA educational psychology handbook (Vol. 1, pp. 597–621). American Psychological Association.

Geary, D., & Berch, D. (2016). Evolution and children’s cognitive and academic development. In D. Geary & D. Berch (Eds.), Evolutionary perspectives on child development and education (pp. 217–249). Springer.

Gill, T. G. (1996). Expert systems usage: Task change and intrinsic motivation. MIS Quarterly, 20(3), 301–329.

Gill, T. G., & Murphy, W. (2011). Task complexity and design science. In 9th International Conference on Education and Information Systems, Technologies and Applications (EISTA 2011).

Gonzalez, C., Vanyukov, P., & Martin, M. K. (2005). The use of microworlds to study dynamic decision making. Computers in Human Behavior, 21(2), 273–286.

Gros, H., Thibaut, J. P., & Sander, E. (2020). Semantic congruence in arithmetic: A new conceptual model for word problem solving. Educational Psychologist, 55(2), 69–87.

Gros, H., Thibaut, J. P., & Sander, E. (2021). What we count dictates how we count: A tale of two encodings. Cognition, 212, 104665.

Halford, G. S., Wilson, W. H., & Phillips, S. (1998). Processing capacity defined by relational complexity: Implications for comparative, developmental, and cognitive psychology. Behavioral and Brain Sciences, 21(6), 803–831.

Huber, V. L. (1985). Effects of task difficulty, goal setting, and strategy on performance of a heuristic task. Journal of Applied Psychology, 70(3), 492–504.

Kieras, D., & Polson, P. G. (1985). An approach to the formal analysis of user complexity. International Journal of Man-Machine Studies, 22(4), 365–394.

Leahy, W., & Sweller, J. (2019). Cognitive load theory, resource depletion and the delayed testing effect. Educational Psychology Review, 31, 457–478.

Liu, P., & Li, Z. (2012). Task complexity: A review and conceptualization framework. International Journal of Industrial Ergonomics, 42(6), 553–568.

Locke, E. A., Shaw, K. N., Saari, L. M., & Latham, G. P. (1981). Goal setting and task performance: 1969–1980. Psychological Bulletin, 90(1), 125–152.

Mason, J. (2002). Linking qualitative and quantitative data analysis. In Analyzing qualitative data (pp. 103–124). Routledge.

McCracken, J. H., & Aldrich, T. B. (1984). Analyses of selected LHX mission functions: Implications for operator workload and system automation goals (Vol. ASI479-024-84). Anacapa Sciences, Inc.

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63(2), 81–97.

Nembhard, D. A., & Osothsilp, N. (2002). Task complexity effects on between-individual learning/forgetting variability. International Journal of Industrial Ergonomics, 29(5), 297–306.

Newell, A., & Simon, H. A. (1972). Human problem solving. Prentice Hall.

Ngu, B. H., Phan, H. P., Yeung, A. S., & Chung, S. F. (2018). Managing element interactivity in equation solving. Educational Psychology Review, 30, 255–272.

Novak, J. D. (1990). Concept mapping: A useful tool for science education. Journal of Research in Science Teaching, 27, 937–949.

Peterson, L., & Peterson, M. J. (1959). Short-term retention of individual verbal items. Journal of Experimental Psychology, 58(3), 193–198.

Sweller, J. (1994). Cognitive load theory, learning difficulty, and instructional design. Learning and Instruction, 4(4), 295–312.

Sweller, J. (2010). Element interactivity and intrinsic, extraneous, and germane cognitive load. Educational Psychology Review, 22, 123–138.

Sweller, J., & Chandler, P. (1994). Why some material is difficult to learn. Cognition and Instruction, 12(3), 185–233.

Sweller, J., & Cooper, G. A. (1985). The use of worked examples as a substitute for problem solving in learning algebra. Cognition and Instruction, 2(1), 59–89.

Sweller, J., Mawer, R. F., & Ward, M. R. (1983). Development of expertise in mathematical problem solving. Journal of Experimental Psychology: General, 112(4), 639–661.

Sweller, J., van Merriënboer, J. J. G., & Paas, F. (1998). Cognitive architecture and instructional design. Educational Psychology Review, 10, 251–296.

Sweller, J., van Merriënboer, J. J. G., & Paas, F. (2019). Cognitive architecture and instructional design: 20 years later. Educational Psychology Review, 31, 261–292.

Taylor, M. S. (1981). The motivational effects of task challenge: A laboratory investigation. Organizational Behavior and Human Performance, 27(2), 255–278.

Tobias, S., & Everson, H. T. (2002). Knowing what you know and what you don’t: Further research on metacognitive knowledge monitoring (College Board Research Report No. 2002-3). College Entrance Examination Board.

Wood, R. E. (1986). Task complexity: Definition of the construct. Organizational Behavior and Human Decision Processes, 37(1), 60–82.


Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, O., Paas, F. & Sweller, J. A Cognitive Load Theory Approach to Defining and Measuring Task Complexity Through Element Interactivity. Educ Psychol Rev 35, 63 (2023). https://doi.org/10.1007/s10648-023-09782-w
